Abstract

Laparoscopic ultrasound-guided liver microwave ablation requires precise navigation and spatial accuracy. We developed an image fusion navigation system that integrates laparoscopic, ultrasound, and 3D liver model images into a unified real-time visualization. The Apple Vision Pro mixed reality device projects all essential image information into the surgeon’s field of view in real-time. This system reduces cognitive load and enhances surgical precision and efficiency. Comparative experiments showed a significant improvement in puncture accuracy under AVP guidance (success rate of 90%) compared to traditional methods (42.5%), benefiting both novice and experienced surgeons. According to the NASA Task Load Index evaluation, the system also reduced the workload of surgeons. In eight patients, ablation was successful with minimal blood loss, no major complications, and rapid recovery. Despite challenges such as cost and fatigue, these results highlight the potential of mixed reality technology to improve spatial navigation, reduce cognitive demands, and optimize complex surgical procedures.

Subject terms: Translational research, Hepatocellular carcinoma

Introduction

Laparoscopic ultrasound-guided hepatic microwave ablation surgery is an advanced minimally invasive technique used to treat liver tumors. This technique, although less invasive and quicker to recover from than traditional surgery, is technically demanding and operationally challenging1. Laparoscopic ultrasound (LUS) accurately identifies and assesses hepatic hemangiomas during surgery, avoiding unnecessary excisions and precisely delineating tumor margins to maximize the preservation of healthy liver tissue2. LUS employs a specially designed flexible-tip probe (four-directional adjustment, 10 mm diameter) with a frequency range of 5.0–10.0 MHz, providing high-definition images. Its small size and narrow scanning range require operation through a 12 mm disposable Trocar, supporting color Doppler blood flow imaging3. Depending on the need, the probes are divided into linear array for precise marking and convex array for wider scanning range, facilitating puncture operations and recognition of deep structures. Compared to open surgery ultrasound, the close contact of the LUS probe with the liver surface through an abdominal wall-fixed Trocar is challenging, increasing the difficulty of probe manipulation and target scanning. The limited scanning range of the LUS probe and the oblique planes generated from different puncture sites pose challenges for surgeons without specialized ultrasound training. Surgeons need to be familiar with laparoscopic liver anatomy and skilled in manipulating the probe and puncture needle to precisely adjust the direction of puncture4.

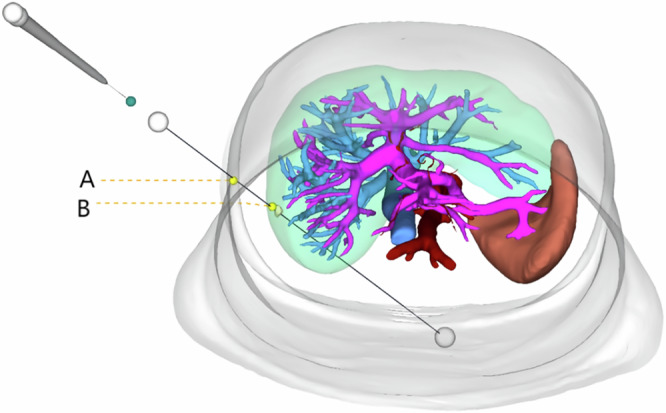

The LUS probe has a very narrow clear scanning area, focused at the center. During puncture, it is necessary to ensure that the needle shaft and the midline of the probe’s side are on the same plane for effective ultrasound monitoring of the puncture needle. The needle tip must remain visible throughout the procedure; if the needle does not move on the screen, it indicates a deviation from the intended path, and the operation should be stopped immediately. After adjusting the probe, continue the insertion under ultrasound monitoring to ensure precision and safety. LUS-guided ablation therapy involves multiple steps including localization, puncture, and observation. Due to the lack of bracket guidance and standard exploration planes, the operation is challenging5. Once the ablation needle passes through the abdominal wall under laparoscopy, its mobility is restricted. After passing through point A (puncture point on the abdominal wall) and point B (entry point on the liver surface) (Fig. 1), the principle of “two points and one line” makes it difficult to adjust the path once established6. If the needle tip does not reach the tumor or the position is unsatisfactory, it must be withdrawn and readjusted. Using computer-imaging three-dimensional reconstruction technology to precisely plan the LUS scanning planes and needle paths during microwave ablation can reduce intraoperative uncertainty and repetitive adjustments of the path, thereby saving surgical time, reducing average hospital stays, and shortening the learning curve of the technique7,8.

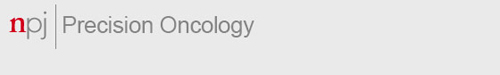

Fig. 1. Ablation needle path and the constraints of the “two points and one line” principle.

The path of the ablation needle from point A (abdominal wall puncture site) to point B (liver surface puncture site) is shown. The figure highlights the restriction on needle trajectory adjustments due to the “two points and one line” principle.

Advanced ultrasound equipment from various manufacturers now features mature fusion imaging technology, such as real-time virtual sonography (RVS). RVS co-registers real-time ultrasound (US) images with preloaded CT or MRI images via workstation software, creating CT/MRI-US fusion images for surgical reference9. Ultrasound systems are equipped with electromagnetic or optical navigation trackers that monitor external sensors on the US probe, calibrating the probe and ultrasound image positions. Co-registration aligns the ultrasound coordinate system with the CT or MRI system using identical anatomical reference points, synchronizing the two coordinate systems. In addition to CT and MRI, pre-acquired three-dimensional ultrasound (3D-US) can be used for US-US fusion imaging. Electromagnetic navigation systems depend on magnetic fields to locate surgical tools, but electromagnetic interference from other magnetic devices can impact accuracy. Electromagnetic sensors must operate within a specific range, which may limit the freedom and flexibility of surgical operations. Optical navigation systems require a direct line of sight between the sensor and the surgical tool, with any obstruction causing tracking errors. Both electromagnetic and optical tracking devices necessitate stringent sterilization and are costly10,11. It is noteworthy that surgeons face particular difficulties in spatially locating the laparoscopic ultrasound probe and the ablation needle. They constantly shift their attention between the laparoscopic and ultrasound images, expending significant effort to correlate the information between the two screens (Fig. 2). In response to these technological limitations, we have developed an advanced image fusion navigation system that combines real-time adjusted 3D views with sophisticated data processing technologies to provide more accurate and intuitive surgical navigation information. 
This system seamlessly integrates intraoperative ultrasound images, laparoscopic visuals, and liver 3D reconstruction models on a single screen, significantly enhancing the visual efficiency and precision of surgeries (Fig. 3).

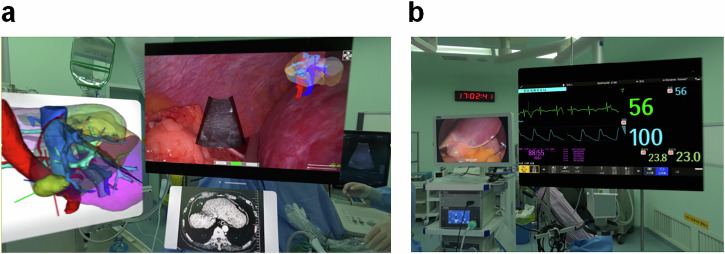

Fig. 2. Spatial localization and information synchronization of the LUS probe and ablation needle.

a Schematic illustrating the spatial localization difficulties of the laparoscopic ultrasound probe and ablation needle. b Surgeons must switch attention between Screen 1 (laparoscopy) and Screen 2 (ultrasound) to synchronize information between the two displays.

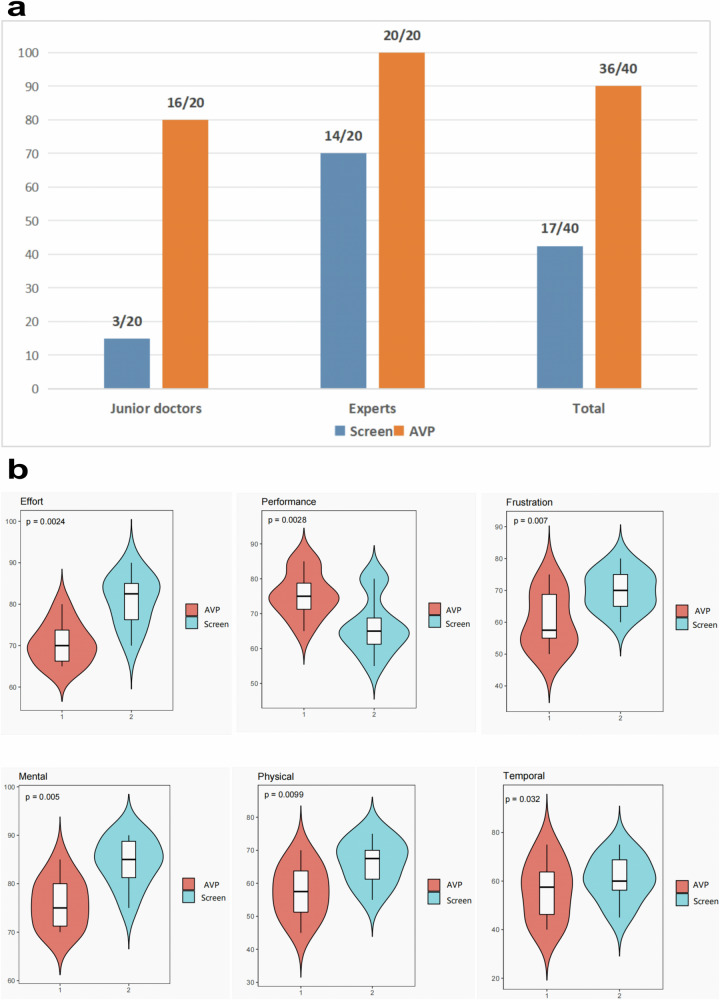

Fig. 3. Schematic of the image fusion navigation system.

Illustration of the advanced image fusion navigation system, integrating real-time adjusted intraoperative ultrasound images, laparoscopic visuals, and liver 3D reconstruction models on a single screen to improve surgical precision and visual efficiency.

The Apple Vision Pro (AVP) is an innovative mixed-reality device developed by Apple, integrating both Virtual Reality (VR) and Augmented Reality (AR) technologies12. The device features an intuitive three-dimensional interactive interface, enabling users to operate through natural interactions such as eye movements, gestures, and voice commands13. Key technological aspects of the AVP include two high-resolution micro-OLED displays with up to 23 million pixels and 12 high-performance cameras that provide precise scene parsing and eye-tracking capabilities. Additionally, the AVP is equipped with various sensors, including anti-flicker sensors, ambient light sensors, depth sensors, and inertial measurement units. Thanks to its dual R1 and M2 chip architecture, the AVP efficiently processes data from cameras and sensors, performs complex computer vision algorithms, produces clear graphical images, and ensures high energy efficiency14. These integrated technologies reduce the delay from image capture to display to just 12 milliseconds, offering users a high-precision and ultra-low latency spatial computing experience. Through these advanced technologies, the AVP seamlessly integrates digital information into the real world, pioneering new forms of user interaction15,16.

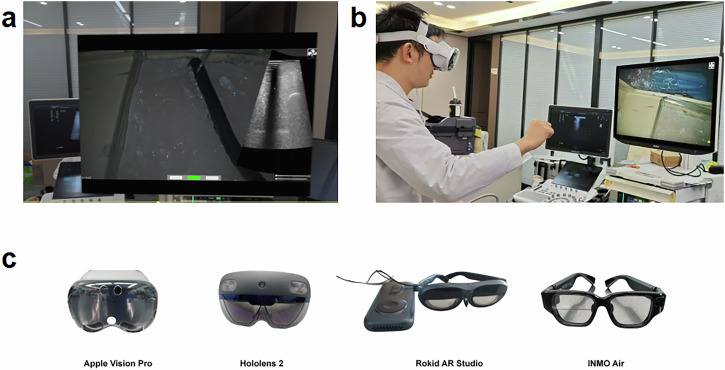

In recent years, the application of commercially available head-mounted displays in medical surgery has received widespread attention. Among these devices, AVP (released in 2024) stands out in surgical navigation with its high-resolution display (3600 × 3200px) and eye-tracking technology. Priced at $3499, AVP may be expensive for the average consumer, but compared to traditional medical devices, such as CT scanners, MRI machines, and navigation systems that can cost millions of dollars, AVP appears much more affordable. Despite AVP offering unparalleled visual quality and precision, some users have reported experiencing motion sickness and eye fatigue after prolonged use, which could limit comfort during long surgeries. Other commercially available head-mounted displays, such as Microsoft HoloLens 2 (released in 2019), Rokid AR Studio (released in 2023), and INMO Air (released in 2021), also offer AR capabilities for medical applications but have significant differences in performance and specifications. Microsoft HoloLens 2 offers a powerful interactive experience, including gesture, eye tracking, and voice control, with a resolution of 2048 × 1080px and a field of view of 43 × 29°. Although it provides decent visualization, the lower resolution and smaller field of view make it less suitable for complex surgeries. Rokid AR Studio is a more lightweight and affordable device, with a resolution of 1920 × 1200px and a field of view of 50°, suitable for simpler tasks. In contrast, INMO Air is a compact device with the lowest display resolution (640 × 400px) and the narrowest field of view (26°), limiting its application in surgeries requiring high precision and detail. Despite the differences among these devices, they all demonstrate usability in surgical environments, while AVP, with its superior resolution, eye tracking, and gesture-based interaction capabilities, emerges as the ideal tool for performing high-precision surgeries17,18 (Table 1).

Table 1.

Summary of technical specifications for commercially available head-mounted displays used

| | Apple Vision Pro | HoloLens 2 | Rokid AR Studio | INMO Air |
|---|---|---|---|---|
| Release date | 2024 | 2019 | 2023 | 2021 |
| Design | Hat-like | Hat-like | Glasses-like | Glasses-like |
| Weight | 600g | 566g | 76g | 79g |
| Interaction | Hand, eye, voice | Hand, eye, voice | Hand, controller | Touchpad |
| Field of view | 110 × 79° | 43 × 29° | 50° | 26° |
| SLAM | 6DoF | 6DoF | 6DoF | 6DoF |
| Computing | On-board | On-board | External pad | On-board |
| Resolution | 3600 × 3200px | 2048 × 1080px | 1920 × 1200px | 640 × 400px |
| Optics | Pancake | Waveguide | BirdBath | Waveguide |
| Price | $3499 | $3500 | $1200 | $410 |

SLAM Simultaneous Localization and Mapping.

Results

Guidance method comparison

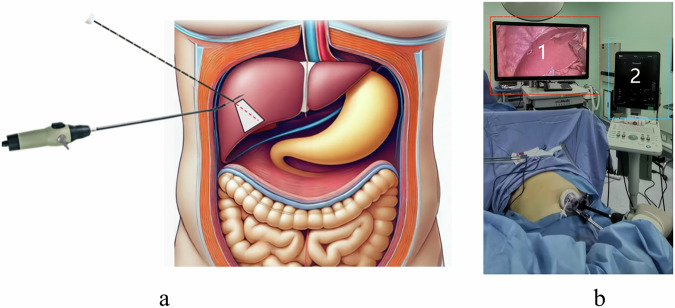

The AVP real-time image fusion guidance system significantly outperformed traditional 2D screen-based guidance. Under traditional guidance, the success rate was 42.5% (17/40), compared to 90% (36/40) with AVP. Junior surgeons achieved a success rate of 15% (3/20) with traditional methods, improving to 80% (16/20) using AVP. Experienced surgeons improved from 70% (14/20) to 100% (20/20). These differences were statistically significant (P < 0.05) (Fig. 4).

Fig. 4. Comparison between traditional screen guidance and AVP real-time image fusion guidance.

a Success Rate Comparison: The success rate of junior doctors using traditional guidance was 15% (3/20 targets), while with AVP guidance, the success rate increased to 80% (16/20 targets). Experts had a success rate of 70% (14/20 targets) with traditional guidance, which increased to 100% (20/20 targets) with AVP guidance. In the traditional screen guidance group, 17 out of 40 targets were successfully hit (42.5%), whereas in the AVP guidance group, 36 out of 40 targets were successfully hit (90%). b NASA Task Load Index (TLX) Evaluation of Workload: Compared to traditional screen guidance, the use of the AVP real-time image fusion guidance system significantly reduced the surgeons’ mental, physical, and operational workload, while also improving task performance and reducing frustration. All workload dimensions showed statistically significant differences (P < 0.05).

Workload reduction with NASA TLX

The NASA Task Load Index (TLX) demonstrated that the AVP system significantly reduced cognitive, physical, and temporal demands compared to traditional guidance. It also improved overall task performance and surgeon satisfaction and reduced frustration (P < 0.05). Junior surgeons benefited in particular from reduced spatial localization challenges and cognitive fatigue. Experienced surgeons reported smoother operations and better visualization. Most surgeons adapted to the AVP system within 15 min and gave positive feedback on its usability and comfort.
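As a rough illustration of how such a workload comparison can be tabulated, the sketch below computes an unweighted (raw) TLX score as the mean of the six standard subscales. Whether the study used the raw or the weighted TLX variant is not stated, so the raw variant is an assumption here, and the ratings are hypothetical placeholders rather than study data.

```python
from statistics import mean

# The six standard NASA TLX subscales, each rated 0-100.
# Lower is better; "performance" runs from good (0) to poor (100).
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")

def raw_tlx(ratings: dict) -> float:
    """Unweighted (raw) TLX: the plain mean of the six subscale ratings."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean(ratings[name] for name in SUBSCALES)

# Hypothetical ratings for one participant (NOT data from the study).
traditional = {"mental": 80, "physical": 60, "temporal": 70,
               "performance": 50, "effort": 75, "frustration": 65}
avp = {"mental": 40, "physical": 30, "temporal": 35,
       "performance": 20, "effort": 40, "frustration": 25}

print(f"traditional: {raw_tlx(traditional):.1f}, AVP: {raw_tlx(avp):.1f}")
```

A per-subscale comparison, as in Fig. 4b, would keep the six ratings separate instead of averaging them.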

Clinical outcomes of AVP-assisted ablation

Between June and August 2024, eight patients underwent laparoscopic ultrasound-guided microwave ablation for liver hemangiomas with the AVP system. Operating times ranged from 65 to 147 min (mean, 107 min). Blood loss was minimal (5 to 100 mL), and none of the patients required transfusions. All lesions were successfully ablated without intraoperative complications. Most patients resumed walking and eating the next day; the average hospital stay was four days. No major postoperative complications were observed, and liver function tests (AST, ALT, bilirubin) normalized within one week (Table 2). In all eight clinical cases, the surgeons unanimously agreed that AVP reduced cognitive load and improved operational fluidity. With real-time images overlaid and precise spatial guidance provided in the dynamic surgical field, surgeons were able to make decisions more quickly and switch their gaze less often, making the surgical process more fluid and efficient. This feedback is consistent with the NASA TLX scores from the training box experiment.

Table 2.

Patient characteristics for the application of real-time image fusion and AVP navigation system

| Patient | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Sex | Female | Female | Male | Male | Male | Female | Female | Male |
| Age (yr) | 40 | 42 | 53 | 58 | 42 | 48 | 36 | 47 |
| BMI (kg/m²) | 27.4 | 24.3 | 26.4 | 23.8 | 29.1 | 24.6 | 26.4 | 25.3 |
| Lesion number | Multiple | Multiple | Multiple | Multiple | Multiple | Solitary | Multiple | Multiple |
| LS (mm) | 56 | 79 | 48 | 72 | 76 | 53 | 51 | 10 |
| PLT (×10⁹/L) | 296 | 232 | 236 | 245 | 258 | 241 | 252 | 249 |
| ALB (g/L) | 48.1 | 42.7 | 41.4 | 43.5 | 46.6 | 48.4 | 42.1 | 47.6 |
| AST (U/L) | 27 | 16 | 22 | 22 | 21 | 22 | 23 | 17.7 |
| ALT (U/L) | 28 | 13 | 37 | 25 | 31 | 21 | 24 | 15.6 |
| TBIL (μmol/L) | 16.9 | 6.6 | 13.7 | 12 | 17.4 | 11.6 | 14 | 16.13 |
| SCR (μmol/L) | 54 | 53 | 60 | 85 | 90 | 60 | 55 | 90 |
| Operation time (min) | 120 | 70 | 65 | 134 | 112 | 80 | 147 | 130 |
| Blood loss (mL) | 20 | 5 | 50 | 50 | 20 | 100 | 20 | 50 |
| Abdominal drainage | NO | YES | YES | YES | YES | YES | YES | NO |
| Blood transfusion | NO | NO | NO | NO | NO | NO | NO | NO |

BMI body mass index, LS lesion size, PLT platelet, ALB albumin, AST aspartate transaminase, ALT alanine aminotransferase, TBIL total bilirubin, SCR serum creatinine.

Discussion

The AVP real-time image fusion navigation system proposed in this study demonstrated significant advantages over traditional screen-based guidance in laparoscopic ultrasound-guided liver microwave ablation. The AVP system projects fused laparoscopic, ultrasound, and 3D liver model images directly into the surgeon’s field of view, reducing the need for frequent shifts in focus and thereby lowering cognitive load and improving surgical precision. This result aligns with the studies by Mitchell Doughty et al. (2022) and Arrigo Palumbo (2022), which found that mixed reality technology optimized information presentation and reduced visual switching and cognitive load, particularly in complex surgeries, thereby enhancing the efficiency of the surgeon’s operations19,20.

Compared to existing mixed reality devices (such as Microsoft HoloLens 2 or Rokid AR Studio), the AVP offers higher resolution, a wider field of view, and more precise eye-tracking technology. Devices like HoloLens 2 are often limited in complex surgical environments by their lower display resolution and narrower field of view. AVP’s high visual quality enables a clearer display of key anatomical structures such as the liver, which is crucial for precise surgical interventions. However, some users experience visually induced motion sickness and eye fatigue or discomfort after prolonged use of the AVP, and opinions on its comfort are mixed21. In our study, all clinicians used the same AVP unit, which might not have been the best fit for some participants.

Despite the significant advantages of the AVP system, this study has certain limitations, and the generalizability of the results requires further validation through larger-scale clinical trials. Manuel Birlo et al. (2022) pointed out that although mixed reality technology is widely applied in various fields, its effectiveness and feasibility in different types of surgeries remain to be further explored22. Therefore, future research should focus on multi-center, large-scale clinical validation to further assess the generalizability and long-term effects of the AVP system. The AVP system’s ability to collect large amounts of data provides an additional advantage for future exploration of deep learning and machine learning, although this study did not develop such models. Future research could explore how to use these data to train deep learning and machine learning models, thereby improving surgical outcomes and operational efficiency.

Overall, this study demonstrates the great potential of the AVP real-time image fusion navigation system in liver microwave ablation. By projecting fused images from multiple imaging modalities into the surgeon’s field of view in real time, the AVP system enhances surgical precision, reduces cognitive load, and significantly reduces workload. Compared to traditional 2D screen-based guidance methods, the AVP system excels in reducing visual switching and enhancing spatial localization. Although certain limitations remain, such as device cost and clinical adaptability, with technological advancements the AVP system is expected to become an important tool in laparoscopic surgery, providing surgeons with a more intuitive and efficient method for surgical navigation.

Methods

System design overview

We have developed an advanced image fusion navigation system aimed at improving surgical precision and efficiency. The system integrates laparoscopic images, laparoscopic ultrasound images, and preoperative 3D liver reconstruction models into a unified and intuitive surgical view, reducing surgeons’ cognitive load while improving surgical precision and navigation efficiency. Surgeons interact with the system via AVP. During preoperative and intraoperative phases, AVP is used to view 3D liver models and patient CT images to assist in planning ablation needle paths and LUS scan planes. During surgery, AVP directly projects real-time fused laparoscopic and ultrasound images into the surgeon’s field of view. Surgeons can use natural gestures to interact with these visualization interfaces, adjusting views and selecting relevant imaging layers. Surgical assistants ensure accurate alignment of laparoscopic images, 3D models, and real-time ultrasound images using controllers, dynamically adjusting parameters like transparency and scaling to enhance visualization. In the image fusion navigation system window, the real-time ultrasound images captured during surgery need to be manually adjusted and aligned with the actual position of the ultrasound probe to ensure image accuracy. The system is built using Unity and OpenCV, with interface design based on PySide6 to enable intuitive user interaction. High-resolution images captured from laparoscopic and ultrasound devices are processed on a high-performance computing workstation. Advanced image preprocessing techniques, such as adaptive histogram equalization, Retinex-based brightness enhancement, and motion blur reduction, are applied to improve image clarity and usability. Real-time fused images are streamed to AVP with minimal latency, ensuring a seamless image flow. 
For the three-dimensional reconstruction of the liver, we employed Hisense Medical Imaging Processing Software CAS-LV, which is designed for medical image preprocessing and segmentation. The software automatically performs 3D reconstruction of liver tissues and organs using algorithms such as filtering, interpolation, morphological processing, and threshold segmentation. Users need only make minimal adjustments on DICOM images to automatically segment the target organs and tissues. One of the key challenges in using the AVP in the operating room is its inability to connect directly to the internet due to security concerns. To address this, we established a Wi-Fi network between the AVP and the computer using a router, enabling real-time image streaming from the computer to the AVP. This configuration allows the AVP to function as the display terminal for real-time fused laparoscopic and ultrasound images. Additionally, the AVP’s mixed-reality capabilities enable the surgeon to view critical CT scans and 3D reconstruction models directly in their field of vision. By integrating these various imaging modalities into one cohesive view, the AVP provides a more comprehensive and accurate representation of the surgical site.

The workstation is equipped with an Intel Core i9 14900K CPU for complex image processing tasks; an NVIDIA RTX 4090 GPU for high-quality 3D image rendering and real-time processing; 192 GB of DDR5 RAM for smooth multitasking when processing large-scale 3D image data; and a 2 TB SSD for fast read and write access to image data during surgery. The workstation receives image data from a TC-UB570 PRO signal acquisition card, which captures images from the Optomedic Stellar 4K Optical 3D Fluorescence Laparoscope and the BK1202 intraoperative ultrasound at 1080p resolution and 60 FPS in YUY2 video format and transmits them in real time to the workstation.
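For a sense of the data rate this capture format implies, the short calculation below estimates the uncompressed bandwidth of one 1080p, 60 FPS YUY2 stream. It assumes a 1920 × 1080 frame and YUY2's packed 4:2:2 layout of 2 bytes per pixel; the function name is illustrative only, and real throughput depends on the capture card and any compression applied downstream.

```python
def yuy2_stream_rate(width: int, height: int, fps: int) -> tuple:
    """Return (bytes per frame, Gbit/s) for an uncompressed YUY2 video stream."""
    bytes_per_pixel = 2  # YUY2 packs 4:2:2 chroma-subsampled video into 16 bits per pixel
    frame_bytes = width * height * bytes_per_pixel
    gbit_per_s = frame_bytes * fps * 8 / 1e9
    return frame_bytes, gbit_per_s

frame_bytes, gbps = yuy2_stream_rate(1920, 1080, 60)
print(f"{frame_bytes} bytes per frame, {gbps:.2f} Gbit/s per stream")
# prints "4147200 bytes per frame, 1.99 Gbit/s per stream"
```

Two such streams (laparoscope and ultrasound) would therefore need roughly 4 Gbit/s of uncompressed capture bandwidth under these assumptions.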

The application was developed in Unity, with the OpenCV library used for image reading, display, processing, and analysis. The interface is built on the PySide6 framework, and Unity handles loading and display of the liver’s three-dimensional model. The UI calls the model loading program and displays the loaded 3D model with adjustable transparency, helping surgeons intuitively understand and manipulate the position of surgical instruments relative to the liver and lesions. The system updates the merged images in real time during surgery and presents them in a unified 3D view. The processed images are streamed to the AVP with low latency, providing a real-time display for the surgeons.

Image processing

For ultrasound image processing, several techniques were employed, including histogram equalization, adaptive histogram equalization, Retinex-based brightness enhancement, and contrast and brightness adjustment. Because histogram equalization can distort image data, this study adopted the single-scale Retinex method, which enhances brightness via Gaussian blur, logarithmic transformation, and image subtraction. Wiener filtering was applied to reduce motion blur arising during surgery, and adaptive histogram equalization was used to highlight critical information and further refine image quality. Before ultrasound images were merged with laparoscopic imagery, they underwent brightness enhancement to preserve essential features and avoid distortions due to operational mishaps. Surgeons can adjust the brightness increment through the UI during operations. To prevent the borders of frames extracted from the ultrasound video from obstructing the laparoscopic view, the borders are rendered transparent using OpenCV; this is applied only to the boundary areas of each frame, not to the entire image.
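The single-scale Retinex step described above (Gaussian blur, logarithmic transformation, subtraction) can be sketched as follows. This is a minimal illustration, not the system's actual code: it uses NumPy only, with a hand-written separable Gaussian blur standing in for an OpenCV blur, and the function names, the `sigma` values, and the final rescaling to 0-255 are all assumptions.

```python
import numpy as np

def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge padding (stand-in for an OpenCV blur)."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Horizontal pass, then vertical pass; "valid" removes the padding again.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def single_scale_retinex(gray: np.ndarray, sigma: float = 15.0) -> np.ndarray:
    """Single-scale Retinex: log(image) - log(blurred image), rescaled to 0-255."""
    img = gray.astype(np.float64) + 1.0  # +1 avoids log(0)
    retinex = np.log(img) - np.log(gaussian_blur(img, sigma))
    span = retinex.max() - retinex.min()
    if span < 1e-12:  # flat image: nothing to enhance
        return gray.astype(np.uint8)
    scaled = (retinex - retinex.min()) / span * 255.0
    return scaled.astype(np.uint8)

# Synthetic dark frame with a brighter square, standing in for an ultrasound image.
frame = np.tile(np.linspace(10, 60, 32), (32, 1))
frame[12:20, 12:20] = 200
enhanced = single_scale_retinex(frame.astype(np.uint8), sigma=3.0)
print(enhanced.shape, enhanced.dtype, enhanced.min(), enhanced.max())
```

The Gaussian scale controls how much of the slowly varying illumination is removed: a larger `sigma` preserves more global contrast, while a smaller one emphasizes local detail.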

Additionally, the study integrated OpenCV’s video encoding and decoding capabilities to facilitate real-time video data transmission and designed recording features to meet the needs of minimally invasive surgery’s live imaging systems. The UI design, considering user experience and usability, offers a simple, clear, and easy-to-operate interface. Combined with OpenCV and PySide6, this research implemented functionalities such as real-time marking and tracking, image zoom, rotation, brightness adjustment, and transparency, enhancing the visualization and convenience of surgical operations. The use of PySide6’s event handling and signal-slot mechanisms ensures the system’s stability and reliability (Fig. 5).

Fig. 5. Schematic of the integrated surgical navigation system.

Schematic of the integrated liver 3D reconstruction and surgical navigation system supported by a high-performance workstation. It employs CAS-LV for 3D reconstruction, Unity for model display, and integrates OpenCV for image processing and PySide6 for UI design.

Surgical procedure

Surgeons authenticate their identity using the Optic ID iris recognition system while wearing AVP glasses. Optic ID rapidly unlocks by recognizing the unique patterns of the iris, a feature particularly critical in healthcare, as only authorized users can access sensitive patient data. Additionally, the system supports hands-free login in sterile environments. Preoperatively, doctors must review electronic medical records to verify surgical patient details and plan the LUS scanning planes and needle trajectory. During surgery, various windows can be dynamically added to the field of view of the AVP glasses, including the image fusion navigation system window, patient CT images, dynamic 3D reconstruction models, vital signs (such as heart rate, blood pressure, and oxygen saturation), and alert notifications. These windows operate independently from the image fusion navigation system, providing comprehensive support with real-time navigational imaging and essential vital sign information (Fig. 6). Key features of the image fusion navigation system display include:

Precise correlation between real-time ultrasound imaging and operative actions: In the image fusion navigation system window, the intraoperative ultrasound images captured in real-time are displayed directly beneath the ultrasound probe. Surgeons can precisely observe the relationship between the ultrasound images and the actual procedures through the AVP glasses, thereby enhancing operational accuracy. The real-time positions of the needle and other surgical instruments are clearly visible, ensuring their accurate placement within the target area.

Loading and displaying 3D models: The UI of the image fusion navigation system supports the loading and display of liver 3D models. Surgical assistants can scale, rotate, and adjust the transparency of the 3D models through UI options in the AVP glasses, manually aligning and overlaying them on the laparoscopic view. This flexible manipulation enhances surgeons’ spatial understanding and precision in complex surgical environments.

Support operations by surgical assistants: Surgical assistants can manually adjust the display settings of ultrasound images and 3D reconstruction models in the image fusion navigation system, such as scaling, rotation, and transparency. Based on the surgeons’ requirements, assistants optimize the image display in real-time, preventing obstructions of the real scene and enhancing the visibility and safety of the puncture site.
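The transparency adjustment and transparent image borders described in this section can be illustrated with simple alpha blending. The sketch below is an assumption-laden stand-in for the actual OpenCV/Unity implementation: it uses NumPy only, treats the ultrasound frame as grayscale, and the function names, the linear border fade, and the default `border` and `opacity` values are all hypothetical.

```python
import numpy as np

def feathered_alpha(height: int, width: int, border: int, opacity: float) -> np.ndarray:
    """Alpha mask equal to `opacity` in the interior, fading linearly to 0 at the edges."""
    ys = np.arange(height)
    xs = np.arange(width)
    dy = np.minimum(ys, height - 1 - ys)  # distance of each row from the nearest edge
    dx = np.minimum(xs, width - 1 - xs)   # distance of each column from the nearest edge
    dist = np.minimum(dy[:, None], dx[None, :])
    return opacity * np.clip(dist / float(border), 0.0, 1.0)

def overlay_ultrasound(lap_rgb: np.ndarray, us_gray: np.ndarray, top: int, left: int,
                       border: int = 8, opacity: float = 0.7) -> np.ndarray:
    """Blend a grayscale ultrasound frame onto an RGB laparoscopic frame at (top, left)."""
    h, w = us_gray.shape
    if top < 0 or left < 0 or top + h > lap_rgb.shape[0] or left + w > lap_rgb.shape[1]:
        raise ValueError("ultrasound frame does not fit inside the laparoscopic frame")
    alpha = feathered_alpha(h, w, border, opacity)[..., None]  # shape (h, w, 1)
    out = lap_rgb.astype(np.float64)
    roi = out[top:top + h, left:left + w]
    us = us_gray.astype(np.float64)[..., None]                 # broadcast gray to 3 channels
    out[top:top + h, left:left + w] = alpha * us + (1.0 - alpha) * roi
    return np.clip(out, 0, 255).astype(np.uint8)

# Synthetic frames: black laparoscopic view, uniform gray ultrasound patch.
lap = np.zeros((64, 64, 3), dtype=np.uint8)
us = np.full((32, 32), 200, dtype=np.uint8)
fused = overlay_ultrasound(lap, us, top=16, left=16, border=8, opacity=0.5)
print(fused[32, 32], fused[16, 16])
```

Fading only the border keeps the center of the ultrasound image at full contrast while avoiding a hard rectangular edge over the laparoscopic scene; lowering `opacity` corresponds to the assistant's transparency control.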

Fig. 6. Multi-window dynamic display function of AVP in surgery.

a During the procedure, AVP displays multiple windows, including the image fusion navigation system, patient CT images, and dynamic 3D reconstructed models, providing real-time visual support to assist the surgeon in planning the LUS scanning plane and needle trajectory. b AVP also displays essential vital signs information (such as heart rate, blood pressure, and oxygen saturation) along with alert notifications, ensuring the surgeon can monitor the patient’s condition in real-time and respond promptly.

While image fusion technology enhances intraoperative navigation accuracy, AVP further optimizes the way information is accessed. On traditional 2D screens, surgeons need to lift or turn their heads to view fused images, which limits gaze freedom and results in frequent visual switching, affecting the fluidity of the surgery. In contrast, AVP directly projects key images into the surgeon’s natural field of view, synchronizing information with their gaze and reducing focus shifts, thus improving both operational accuracy and surgical fluidity.

To evaluate the effectiveness of the AVP real-time image fusion guidance system in LUS-guided liver microwave ablation, we conducted a controlled experimental study comparing the AVP system with traditional screen-based guidance. Four participants were involved in the study, including two junior residents (novices lacking experience in ultrasound operation and image interpretation) and two senior hepatobiliary surgeons (with experience in over 100 laparoscopic ultrasound-guided ablation procedures). Prior to the experiment, researchers only demonstrated to the novices how to operate intraoperative ultrasound and maneuver the laparoscopic ultrasound probe through a trocar. Each participant performed 10 puncture attempts under two conditions: traditional screen-based guidance and AVP real-time image fusion guidance, for a total of 20 attempts per participant and 80 attempts across all participants. Under the traditional screen guidance condition, laparoscopic and ultrasound images are displayed on separate monitors, and the surgeon needs to switch their gaze between the two screens. This method does not use any image fusion technology, presenting significant challenges in spatial localization and needle guidance. To avoid the learning effect, the experiment was conducted using a random assignment method, with some participants using AVP first and others using traditional screen guidance first. The post-experiment data analysis showed that the effect of different test sequences on the results was not significant, further confirming the reliability of the data. The experiment was conducted in a customized laparoscopic training box. The laparoscopic camera and intraoperative ultrasound probe were introduced through trocars mounted on the top surface of the model trainer, which was covered with a 2 cm thick silicone skin layer. The ultrasound probe’s scanning depth was set to 6 cm. 
The operators were tasked with puncturing targets, represented by egg white spheres, embedded in an agar block. Each agar block measured 25 × 25 × 15 cm (approximately the size of a human liver) and contained 10 targets (egg white spheres 1 cm in diameter) at depths of 3 cm or 5 cm. The agar model was placed on a uniaxial motion platform inside the laparoscopic training box. The platform moved in the head-to-tail direction to simulate liver motion during physiological respiration, and its motor control unit could simulate various respiratory rates and patterns. Ultrasound coupling gel was applied to the top surface of the agar. A new target was selected for each attempt, and repeated targeting was not permitted. Participants selected targets within the agar block, could perform ultrasound scans at any angle of their choice, and inserted the ablation needle from any location on the silicone skin surface of the training box. Once the ablation needle had penetrated the model surface, only forward movement of the needle was allowed; withdrawal to change the puncture point was not permitted. An attempt was classified as a “hit” if the tip of the ablation needle lay within the target, and as a “miss” if the tip missed the target completely or passed through it. The order of attempts under each condition was determined by random drawing. After needle insertion, participants were required to remain stationary. The success of each puncture was independently assessed by a senior ultrasound specialist who was blinded to the conditions and participants (Fig. 7). Data were expressed as counts and percentages, and differences between groups were analyzed using chi-square tests. Statistical analysis was performed using R software (version 3.5.3), with the significance level set at P < 0.05.
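
As a minimal illustration of the chi-square comparison described above, the following Python sketch tests a 2 × 2 table of hits and misses. It is not the authors' R analysis. The counts are an assumption: they are back-calculated from the success rates reported in the abstract (90% and 42.5%), taking 40 attempts per condition as implied by the study design. The function name `chi_square_2x2` and the `yates` flag are hypothetical. The manuscript does not state whether a continuity correction was applied, so both variants are computed; note that R's `chisq.test` applies the Yates correction to 2 × 2 tables by default.

```python
import math


def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]].

    Returns (chi2, p) with 1 degree of freedom.
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Yates continuity correction; the corrected term is floored at zero.
        diff = max(0.0, diff - n / 2)
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * diff ** 2 / denom
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Assumed counts, back-calculated from the reported success rates
# (40 attempts per condition): 90% -> 36 hits, 42.5% -> 17 hits.
avp_hits, avp_misses = 36, 4
trad_hits, trad_misses = 17, 23

chi2, p = chi_square_2x2(avp_hits, avp_misses, trad_hits, trad_misses)
chi2_yates, p_yates = chi_square_2x2(
    avp_hits, avp_misses, trad_hits, trad_misses, yates=True
)

print(f"uncorrected: chi2 = {chi2:.2f}, p = {p:.2g}")
print(f"Yates:       chi2 = {chi2_yates:.2f}, p = {p_yates:.2g}")
```

Under these assumed counts, both variants give a P value well below the 0.05 threshold, so the choice of correction would not change the conclusion.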

Fig. 7. AVP real-time image fusion navigation system and head-mounted display devices.

a Customized laparoscopic training box containing a 25 × 25 × 15 cm agar model with 10 targets (1 cm diameter egg white spheres), placed at depths of 3 cm and 5 cm. A uniaxial motion platform simulates liver movement. b Participants complete 10 puncture attempts each under traditional screen guidance and AVP real-time image fusion guidance, with the puncture needle inserted through a silicone skin layer. c Comparison of medical head-mounted display devices, including AVP, Microsoft HoloLens 2, Rokid AR Studio, and INMO Air.

Additionally, to evaluate the impact of AVP real-time image fusion guidance on workload, we invited 10 surgeons who had used the system to complete the NASA Task Load Index (TLX) questionnaire. The study compared the supportive effectiveness of AVP real-time image fusion guidance with that of conventional intraoperative screen guidance. Statistical analysis included normality tests and t-tests, all conducted using R software (version 3.5.3).
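
The workload comparison can be sketched in the same spirit. The manuscript does not specify whether raw or weighted TLX scores were used, or whether the t-test was paired, so the Python sketch below makes two assumptions: an unweighted (raw) TLX score, taken as the mean of the six standard subscales, and a paired t statistic, since the same surgeons rated both guidance methods. The function names `raw_tlx` and `paired_t` are hypothetical, and all ratings shown are placeholders rather than study data. The normality test mentioned above is not reproduced here.

```python
import math
from statistics import mean, stdev

# The six standard NASA-TLX subscales.
SUBSCALES = (
    "mental", "physical", "temporal", "performance", "effort", "frustration"
)


def raw_tlx(ratings):
    """Unweighted (raw) TLX score: mean of the six subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean(ratings[s] for s in SUBSCALES)


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally sized samples with n >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all paired differences are identical")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical placeholder ratings for one respondent (NOT study data).
example = {
    "mental": 60, "physical": 40, "temporal": 50,
    "performance": 30, "effort": 70, "frustration": 50,
}
example_score = raw_tlx(example)

# Hypothetical per-surgeon scores under the two conditions (NOT study data).
screen_scores = [62.0, 58.0, 71.0, 66.0]
avp_scores = [48.0, 50.0, 55.0, 47.0]
t_stat, dof = paired_t(screen_scores, avp_scores)
```

A positive `t_stat` here indicates higher scores under screen guidance than under AVP guidance for the placeholder values; the P value would be read from a t distribution with `dof` degrees of freedom.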

Acknowledgements

This work was supported by the Famous Doctor of Yunnan “Xingdian Talent Support Program” (XDYC-MY-2022-0032); the Yunnan Fundamental Research Project (202201AS070002); the Key S&T Special Projects of Yunnan (No. 202402AA310056); and the Innovation Team Special Program of Yunnan (No. 202505AS350004).

Author contributions

Conceptualization, Tao Lan and Sichun Liu; Writing - Original Draft, Tao Lan; Writing - Review & Editing, all authors; Funding Acquisition, Yun Jin; Resources, Yun Jin and Jiang Han; Supervision, Yun Jin and Jiang Han. All authors read and approved the final manuscript.

Data availability

The datasets generated and/or analyzed during the current study are not publicly available due to subject privacy protection but are available from the corresponding author upon reasonable request.

Code availability

The underlying code for this study is not publicly available but may be made available to qualified researchers on reasonable request from the corresponding author.

Competing interests

The authors declare no competing interests.

Ethics approval

We adhered to all relevant ethical regulations, including the Declaration of Helsinki. This study was approved by the Medical Ethics Committee of the First People’s Hospital of Yunnan Province. Informed consent was obtained from the patient prior to publication of this report.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Tao Lan, Sichun Liu.

Contributor Information

Jiang Han, Email: 2864611379@qq.com.

Yun Jin, Email: colourcloud@126.com.

Supplementary information

The online version contains supplementary material available at 10.1038/s41698-025-00867-z.

References

1. Iezzi, R. et al. Emerging Indications for Interventional Oncology: Expert Discussion on New Locoregional Treatments. Cancers 15, 308 (2023).

2. Machi, J., Oishi, A. J., Furumoto, N. L. & Oishi, R. H. Intraoperative ultrasound. Surg. Clin. North Am. 84, 1085 (2004).

3. Ferrero, A., Lo, T. R. & Russolillo, N. Ultrasound Liver Map Technique for Laparoscopic Liver Resections. World J. Surg. 43, 2607 (2019).

4. Hu, Y. et al. Application of Indocyanine Green Fluorescence Imaging Combined with Laparoscopic Ultrasound in Laparoscopic Microwave Ablation of Liver Cancer. Med. Sci. Monit. 28, e937832 (2022).

5. Sindram, D. et al. Real-time three-dimensional guided ultrasound targeting system for microwave ablation of liver tumours: a human pilot study. HPB 13, 185 (2011).

6. Santambrogio, R., Bianchi, P., Pasta, A., Palmisano, A. & Montorsi, M. Ultrasound-guided interventional procedures of the liver during laparoscopy: technical considerations. Surg. Endosc. 16, 349 (2002).

7. Santambrogio, R., Vertemati, M., Barabino, M. & Zappa, M. A. Laparoscopic Microwave Ablation: Which Technologies Improve the Results. Cancers 15, 1814 (2023).

8. Machi, J. et al. Ultrasound-guided radiofrequency thermal ablation of liver tumors: percutaneous, laparoscopic, and open surgical approaches. J. Gastrointest. Surg. 5, 477 (2001).

9. Kitada, T. et al. Effectiveness of real-time virtual sonography-guided radiofrequency ablation treatment for patients with hepatocellular carcinomas. Hepatol. Res. 38, 565 (2008).

10. Takamoto, T. et al. Feasibility of Intraoperative Navigation for Liver Resection Using Real-time Virtual Sonography With Novel Automatic Registration System. World J. Surg. 42, 841 (2018).

11. Lv, A., Li, Y., Qian, H. G., Qiu, H. & Hao, C. Y. Precise Navigation of the Surgical Plane with Intraoperative Real-time Virtual Sonography and 3D Simulation in Liver Resection. J. Gastrointest. Surg. 22, 1814 (2018).

12. Dhawan, R., Bikmal, A. & Shay, D. From virtual to reality: Apple Vision Pro’s applications in plastic surgery. J. Plast. Reconstr. Aesthet. Surg. 94, 43 (2024).

13. Waisberg, E. et al. Apple Vision Pro: the future of surgery with advances in virtual and augmented reality. Ir. J. Med. Sci. 193, 345 (2024).

14. Waisberg, E. et al. Apple Vision Pro and why extended reality will revolutionize the future of medicine. Ir. J. Med. Sci. 193, 531 (2024).

15. Ruger, C. et al. Ultrasound in augmented reality: a mixed-methods evaluation of head-mounted displays in image-guided interventions. Int. J. Comput. Assist. Radiol. Surg. 15, 1895 (2020).

16. Ong, J. et al. Head-mounted digital metamorphopsia suppression as a countermeasure for macular-related visual distortions for prolonged spaceflight missions and terrestrial health. Wearable Technol. 3, e26 (2022).

17. Masalkhi, M. et al. Apple Vision Pro for Ophthalmology and Medicine. Ann. Biomed. Eng. 51, 2643 (2023).

18. Doughty, M., Ghugre, N. R. & Wright, G. A. Augmenting Performance: A Systematic Review of Optical See-Through Head-Mounted Displays in Surgery. J. Imaging 8, 203 (2022).

19. Palumbo, A. Microsoft HoloLens 2 in Medical and Healthcare Context: State of the Art and Future Prospects. Sensors 22, 7709 (2022).

20. Xia, Z., Zhang, Y., Ma, F., Cheng, C. & Hu, F. Effect of spatial distortions in head-mounted displays on visually induced motion sickness. Opt. Express 31, 1737 (2023).

21. Birlo, M., Edwards, P., Clarkson, M. & Stoyanov, D. Utility of optical see-through head mounted displays in augmented reality-assisted surgery: A systematic review. Med. Image Anal. 77, 102361 (2022).

22. Johnsson, J. et al. Artificial neural networks improve early outcome prediction and risk classification in out-of-hospital cardiac arrest patients admitted to intensive care. Crit. Care 24, 474 (2020).
