Abstract

PelviNet is a multi-agent convolutional network architecture designed to improve pelvic image registration. The agents share convolutional layers, which synchronizes their learning and supports a thorough analysis of intricate 3D pelvic structures. The architecture combines max pooling, parametric ReLU (PReLU) activations, and agent-specific layers to support both individual and collective decision-making. A communication mechanism aggregates the outputs of the shared layers, so each agent decides using its own features together with information pooled from all agents. PelviNet is evaluated with quantitative accuracy metrics and with visualizations of how well the agents locate the target landmarks. Empirical results indicate that PelviNet outperforms traditional methods, with an average image-wise error of 2.8 mm, a subject-wise error of 3.2 mm, and a mean Euclidean distance error of 3.0 mm. These results indicate precise landmark identification, which is crucial in medical contexts such as radiation therapy, where the accuracy of landmark identification strongly influences treatment outcomes. By reliably identifying critical structures, PelviNet advances pelvic image analysis and may benefit broader medical imaging applications in computational healthcare.

Keywords: Multi-agent reinforcement learning, Pelvic image registration, Medical image analysis

Introduction

Pelvic image registration is crucial in various clinical applications, from diagnosis and treatment planning to surgical guidance. It allows us to align images acquired at different times or with different modalities, enabling detailed comparisons and quantitative analysis. However, achieving robust and accurate pelvic image registration remains a significant challenge, hampered by several inherent limitations within traditional methods [1].

One major hurdle lies in the inherent anatomical variability of the pelvic region. Age, gender, and disease presence can dramatically alter individual anatomy, creating discrepancies that traditional methods, often relying on consistent pixel intensity patterns, struggle to overcome. Additionally, medical images are frequently marred by artifacts like metal implants or motion blur, further misleading intensity-based and feature-based approaches [2].

Traditional methods—such as intensity-based and feature-based approaches [3, 6], as well as atlas-based methods [7], which have been widely studied in the context of pelvic image registration—also face difficulties in capturing the intricate deformations that pelvic organs can undergo due to respiration, organ motion, or disease processes. Rigid or affine transformations, commonly employed in these methods, often fall short of accurately representing such complex movements. Furthermore, while capable of handling some deformations, feature-based and atlas-based methods usually require manual landmark selection or atlas creation. This time-consuming and subjective process can affect generalizability.

Studies have documented the variability in organ positioning and motion within the pelvis, illustrating the complexities of managing pelvic organ motion during treatments like radiotherapy. For instance, research exploring the dosimetric implications of horizontal patient rotation during prostate radiation therapy highlights the significant internal organ motion observed during such procedures, underscoring the limitations of rigid alignment strategies without adaptation or re-optimization of treatment plans to account for anatomical deformations [8].

Moreover, studies in cervical cancer radiotherapy emphasize minimizing variation in organ position and recommend bladder and rectal preparation strategies to reduce it. Their findings point to the importance of regular imaging and, potentially, of adapting treatment plans to changes in organ position and volume, reflecting the dynamic nature of pelvic anatomy during treatment [9].

Generalizability itself presents another challenge. Feature-based and atlas-based methods, relying on manual intervention, often struggle to adapt to unseen patient populations, limiting their broader applicability. Additionally, some traditional methods, particularly intensity-based ones, can be computationally expensive, especially for high-resolution images, hindering their use in real-time applications [10, 11].

Despite these limitations, the ongoing quest for better pelvic image registration techniques fuels the exploration of innovative approaches. Deep learning has emerged as a promising avenue, with convolutional neural networks (CNNs) demonstrating improved performance in both intensity-based and feature-based registration. Recurrent neural networks (RNNs) offer particular advantages in capturing temporal information and handling dynamic deformations.

Another exciting direction lies in reinforcement learning (RL). Its ability to learn directly from image data and feedback signals holds promise for addressing challenges like complex deformations and limited generalizability. However, applying RL to image registration requires careful consideration due to the high dimensionality of image data and the need for intricate reward functions [12].

Multi-agent reinforcement learning (MARL) is a particularly promising direction. By assigning each of several agents a specific sub-task of the registration process, MARL enables collaborative exploration and exploitation, which can lead to more effective solutions. Communication and cooperation between agents can help escape local optima and improve exploration efficiency, supporting more accurate and robust pelvic image registration [16, 18].

The application of RL in medical image analysis is still emerging, and researchers are exploring its potential across a range of tasks. Because RL can be difficult to understand and deploy in clinical settings, especially for readers without a computer science background, recent work has focused on making these concepts more accessible. The review in [16], for example, serves as a guide to formulating and solving medical image analysis problems with reinforcement learning: it categorizes papers by the type of image analysis task and discusses the limitations of current RL approaches and directions for improvement.

Integrating computational technologies can significantly enhance treatment strategies, particularly in gynecological cancer. PelviNet, a multi-agent convolutional network, is designed to improve pelvic image registration. Better registration enables more precise targeting in radiation therapy, which is crucial for effective treatment. By helping refine radiation dose delivery, PelviNet supports personalized medicine, in which treatments are tailored to each patient’s condition to improve therapeutic outcomes.

Related Work

Deep reinforcement learning (RL) has gained traction for image registration because it can handle complex deformations and avoid local optima. The CT2X-IRA method [17] takes a similar approach but focuses on CT/X-ray registration. Its multi-scale-stride learning mechanism resembles PelviNet’s exploration and exploitation strategies, aiming for fast and globally optimal convergence. Both methods address domain gaps: CT2X-IRA uses a dedicated module, whereas PelviNet relies on the collaborative learning of multiple agents. Both studies demonstrate the promise of deep RL for robust image registration.

The study in [18] extends reinforcement learning beyond its traditional applications, using it to optimize landmarks for detecting hip abnormalities. This illustrates the adaptability of RL for pelvic image analysis and its potential for broader image analysis tasks, which could complement our approach to image registration.

Furthermore, the research in [15] segments pelvic tumors with a multi-view CNN framework. Although its focus differs from image registration, it underlines the critical role of precise segmentation in surgical planning, which could broaden the applicability of our registration technique. Its strategies for mitigating overfitting when data are sparse may also be valuable for our project, particularly for managing limited datasets of pelvic images [15].

In conclusion, these studies showcase the growing interest in deep learning and reinforcement learning for diverse tasks in pelvic image analysis. While focusing on different aspects, they demonstrate the effectiveness of these techniques in addressing various challenges.

Methods

Our study introduces an approach to enhance pelvic image registration accuracy. Our methodology centers on applying multi-agent systems within a 3D medical imaging environment, employing a sophisticated deep Q-network (DQN) architecture. This architecture utilizes the CommNet network [13], which is pivotal for effective agent communication and shared learning.

The DQN is initialized with specific parameters, including the number of agents, the length of the frame history, and the set of actions. Central to our training process is an experience replay mechanism, which lets the network revisit and learn from earlier experiences and thereby improves learning efficiency. Actions are selected with an epsilon-greedy strategy [14]: the agent chooses a random action with probability ε and the best-known action with probability 1 − ε. This balances exploring new actions against exploiting actions already known to be rewarding, a trade-off that is central to reinforcement learning.
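The two components described above, experience replay and epsilon-greedy selection, can be sketched in a few lines of Python. This is a minimal illustration rather than the authors’ code: `ReplayBuffer` and `epsilon_greedy` are hypothetical names, and uniform sampling from the buffer is the standard DQN choice, assumed here because the text does not specify the sampling scheme.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity):
        # When full, appending drops the oldest transition (FIFO behaviour of deque)
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement decorrelates consecutive updates
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Random action with probability epsilon, otherwise the action with the highest Q-value."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

In the reported experiments the buffer capacity is 1,000,000 and ε decays from 1 to 0.1 during training; setting ε = 0 at test time makes the choice purely greedy.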

During training, agents interact with a simulated 3D pelvic imaging environment. Their decision-making process is geared towards optimizing image registration accuracy. The training loop encompasses reward observation and network updating based on agent-environment interactions. A critical element of our training methodology is the computation of loss using Bellman’s equation, which facilitates temporal difference learning. Backpropagation is utilized to optimize the network continually (see Algorithm 1).
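The Bellman-based loss can be illustrated with NumPy. The sketch assumes the standard DQN form, with targets $y = r + \gamma \max_{a'} Q_{\text{target}}(s', a')$ (no bootstrapping at terminal steps) and a mean-squared TD error; the text does not say whether a squared or a Huber loss is used, and the function names are hypothetical. The default γ = 0.9 follows the experimental settings.

```python
import numpy as np


def td_targets(rewards, next_q_target, dones, gamma=0.9):
    """Bellman targets y = r + gamma * max_a' Q_target(s', a'), without bootstrap at terminal steps.

    rewards:       (B,) immediate rewards
    next_q_target: (B, A) target-network Q-values for the next states
    dones:         (B,) 1.0 where the episode ended, else 0.0
    """
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)


def td_loss(q_online, actions, targets):
    """Mean squared TD error between Q(s, a) of the taken actions and the Bellman targets."""
    q_taken = q_online[np.arange(len(actions)), actions]
    return float(np.mean((targets - q_taken) ** 2))
```

Minimizing this loss by backpropagation moves Q(s, a) towards the one-step Bellman target, which is the temporal difference update described above.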

We also incorporate periodic validation checks and a model-saving strategy within our training regime. These steps ensure that the performance improvement is consistent and that the most effective model version can be retrieved at any stage of the training. This methodology section provides a comprehensive overview of our approach, balancing technical detail with accessibility for readers versed in machine learning and medical image analysis contexts.

3D Image Registration on Multi-agent DQN

Our PelviNet architecture employs a multi-agent system in a 3D medical imaging context, using the CommNet module for inter-agent communication and learning. Given an input image $I \in \mathbb{R}^{H \times W \times D \times C}$, where $H$, $W$, and $D$ are the height, width, and depth and $C$ is the number of channels, PelviNet extracts 3D patches $x_p$ of size $P$, with $P = 4$.

The architecture involves multiple convolutional layers, max pooling, and PReLU activations. The forward pass processes input data through shared layers, followed by aggregation and communication across agents. This facilitates cooperative learning strategies, which is crucial for accurate image registration.

$$z_p = W \cdot \mathrm{vec}(x_p) \tag{1}$$

where $W$ is the weight matrix of the linear transformation, $\mathrm{vec}(\cdot)$ denotes the vectorized form of an input patch $x_p$, and $z_p$ is the embedded patch.
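Eq. (1) can be sketched in NumPy, assuming non-overlapping cubic patches, volume dimensions divisible by $P$, and an embedding size $E$ chosen only for illustration. `extract_patches` and `embed_patches` are hypothetical helpers, not part of any published PelviNet code.

```python
import numpy as np


def extract_patches(volume, p):
    """Split an (H, W, D, C) volume into non-overlapping p x p x p patches.

    Returns an array of shape (N, p, p, p, C) with N = (H/p) * (W/p) * (D/p).
    """
    h, w, d, c = volume.shape
    assert h % p == 0 and w % p == 0 and d % p == 0, "dimensions must be divisible by p"
    x = volume.reshape(h // p, p, w // p, p, d // p, p, c)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # patch-grid axes first, within-patch axes next
    return x.reshape(-1, p, p, p, c)


def embed_patches(patches, weight):
    """Eq. (1), z_p = W vec(x_p), for every patch; weight has shape (E, p*p*p*C)."""
    vec = patches.reshape(patches.shape[0], -1)  # vec(x_p), one row per patch
    return vec @ weight.T                        # (N, E) embedded patches
```

For example, an 8 × 8 × 8 single-channel volume with $P = 4$ yields eight patches, each flattened to 64 values before projection.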

The network architecture integrates shared convolutional layers followed by fully connected layers and communication mechanisms to generate actions for each agent.
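The pooling and activation steps of one shared stage can be sketched in NumPy. This illustration rests on assumptions the text does not state: the convolution itself is omitted (in practice it would come from a deep learning framework), pooling uses non-overlapping 2 × 2 × 2 windows, and a single PReLU slope `alpha` is shared across channels, initialized here to 0.25, the default initial value of PyTorch’s `nn.PReLU`.

```python
import numpy as np


def max_pool3d(x, k=2):
    """Non-overlapping k x k x k max pooling of a (C, H, W, D) feature map.

    Voxels that do not fill a complete window at the upper edges are discarded.
    """
    c, h, w, d = x.shape
    h2, w2, d2 = h // k, w // k, d // k
    x = x[:, : h2 * k, : w2 * k, : d2 * k]
    x = x.reshape(c, h2, k, w2, k, d2, k)
    return x.max(axis=(2, 4, 6))


def prelu(x, alpha):
    """Parametric ReLU: x where x > 0, alpha * x elsewhere (alpha is learned during training)."""
    return np.where(x > 0, x, alpha * x)


def pool_activate(conv_out, alpha=0.25):
    """Post-convolution part of one shared stage: max pooling followed by PReLU."""
    return prelu(max_pool3d(conv_out), alpha)
```

Because these layers are shared, the same `pool_activate` step (with the same learned `alpha`) would be applied to every agent’s input before the agent-specific layers.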

PelviNet’s design applies deep learning and multi-agent systems to medical image analysis, with an emphasis on precision in spatial registration tasks.

Algorithm 1.

PelviNet training algorithm.

Algorithm 1 is a training procedure for a reinforcement learning model in a multi-agent environment for pelvic image registration. It begins by setting up the environment and a replay buffer for storing experiences and by defining the network parameters. The core of training consists of episodes in which agents interact with the environment, making decisions with an ε-greedy policy that balances exploration and exploitation: actions are chosen either at random or by maximizing the Q-value predicted by the network. The network parameters are updated by minimizing a loss derived from the Bellman equation, which combines immediate rewards with estimates of future value. The exploration rate is progressively reduced, and the target network parameters are periodically updated for stable learning. Through this iterative learning and adaptation, the agents’ performance on the task is optimized.
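To make the structure of Algorithm 1 concrete, the following self-contained sketch runs the same loop on a hypothetical one-dimensional toy environment, with a lookup table standing in for the deep Q-network. It keeps the elements listed above (replay buffer, ε-greedy selection, Bellman targets from a periodically synchronized target copy, and progressive ε decay); `LineEnv`, the two-action space, the learning rate, and the target-sync interval are assumptions chosen only for illustration.

```python
import random
from collections import deque


class LineEnv:
    """Hypothetical 1D stand-in for the 3D pelvic environment: reach `target` from position 0."""

    def __init__(self, n=10, target=7):
        self.n, self.target = n, target
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        prev = abs(self.pos - self.target)
        # action 1 = move right, 0 = move left; the position is clamped to the image bounds
        self.pos = min(max(self.pos + (1 if action == 1 else -1), 0), self.n - 1)
        new = abs(self.pos - self.target)
        reward = max(-1.0, min(1.0, prev - new))  # clipped distance-improvement reward
        return self.pos, reward, new == 0


def train(episodes=200, steps_per_episode=50, gamma=0.9, lr=0.5, batch_size=4,
          eps_start=1.0, eps_min=0.1, eps_delta=0.001, target_sync=100, seed=0):
    rng = random.Random(seed)
    env = LineEnv()
    q = [[0.0, 0.0] for _ in range(env.n)]  # online Q "network" (a table here)
    q_target = [row[:] for row in q]        # frozen copy used for Bellman targets
    replay = deque(maxlen=10_000)           # experience replay buffer
    eps, step_count = eps_start, 0
    for _ in range(episodes):
        s = env.reset()
        for _ in range(steps_per_episode):
            # epsilon-greedy action selection
            if rng.random() < eps:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=q[s].__getitem__)
            s2, r, done = env.step(a)
            replay.append((s, a, r, s2, done))
            s, step_count = s2, step_count + 1
            if len(replay) >= batch_size:
                for bs, ba, br, bs2, bdone in rng.sample(list(replay), batch_size):
                    y = br if bdone else br + gamma * max(q_target[bs2])  # Bellman target
                    q[bs][ba] += lr * (y - q[bs][ba])  # tabular step on the squared TD error
            eps = max(eps_min, eps - eps_delta)        # progressive exploration decay
            if step_count % target_sync == 0:
                q_target = [row[:] for row in q]       # periodic target update
            if done:
                break
    return q, eps
```

With a table in place of the network, the update `q[s][a] += lr * (y - q[s][a])` is a gradient step on ½(y − Q(s, a))²; a deep Q-network performs the corresponding step by backpropagation through its layers.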

In this work, we introduce the ‘PelviNet’ architecture within the framework of multi-agent reinforcement learning, tailored to the intricate task of pelvic image registration. The architecture shares convolutional layers across agents, which gives them a common feature representation on which inter-agent communication can build. This design supports the collaborative behavior that complex domains such as pelvic image registration require.

The salient features of the ‘PelviNet’ architecture are as follows:

Shared convolutional layers: At the heart of “PelviNet” are the convolutional layers, uniformly employed across all agents. These layers are pivotal in processing the frame history pertinent to each agent, thereby extracting critical features necessary for informed decision-making. The shared configuration of these layers fosters a harmonized feature representation, significantly enhancing inter-agent coordination.

Integration of pooling and activation functions: Each convolutional layer is followed by a max pooling layer and a parametric ReLU activation. Pooling reduces the spatial dimensions of the representation and thus the computational cost, while the activations introduce the non-linearities the network needs to learn intricate patterns in pelvic imaging data.

Agent-specific fully connected layers: The architecture incorporates agent-specific fully connected layers, which are fundamental in assimilating the processed inputs for decision-making purposes. This arrangement ensures that while each agent benefits from the shared feature extraction process, it retains the capacity for individualized decision-making.

Robust communication mechanism: A distinctive aspect of “PelviNet” is its efficacious communication mechanism. This mechanism aggregates the shared layers’ outputs to construct a communal knowledge base. When concatenated with each agent’s specific data and passed through the fully connected layers, this aggregated information empowers agents to make decisions informed by individual and collective intelligence.

Adaptation for pelvic image registration: In applying this architecture to pelvic image registration, agents adeptly navigate and interpret the complex anatomy of pelvic images. Through shared learning and communication, each agent develops proficiency in identifying and aligning critical anatomical landmarks, significantly enhancing registration accuracy.

With its approach to shared learning and inter-agent communication, the “PelviNet” architecture contributes to the field of multi-agent systems. Its application to medical image analysis, and to pelvic image registration in particular, shows that agents can interpret complex, high-dimensional data collaboratively and efficiently. The communication mechanism aggregates the outputs of the shared layers with a weighted average, so agents share insights without losing their individual learning paths. Agents therefore benefit from collective knowledge while maintaining independent learning trajectories, which improves collaborative performance (Figs. 1 and 2).
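The weighted-average communication step can be sketched in NumPy. This is a hypothetical illustration: the text does not say how the weights are obtained, so here they are supplied as non-negative numbers and normalized; `communicate` and `agent_q_values` are made-up names, and each agent-specific head is reduced to a single linear layer producing six action values.

```python
import numpy as np


def communicate(features, weights):
    """Weighted average of the agents' shared-layer features.

    features: (n_agents, F) outputs of the shared convolutional layers, one row per agent
    weights:  (n_agents,) non-negative mixing weights (normalized here to sum to 1)
    """
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return w @ features  # (F,) communal message shared by all agents


def agent_q_values(features, weights, fc_weights, fc_biases):
    """Concatenate each agent's own features with the communal message and apply
    that agent's private fully connected layer to obtain its six action values."""
    message = communicate(features, weights)
    q = []
    for i, (W, b) in enumerate(zip(fc_weights, fc_biases)):
        h = np.concatenate([features[i], message])  # individual + collective information
        q.append(W @ h + b)                         # agent-specific layer, shape (6,)
    return np.stack(q)                              # (n_agents, 6)
```

With equal weights the message reduces to the plain mean of the agents’ features, similar to the averaging used in CommNet; unequal weights let some agents contribute more to the shared message.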

Fig. 1.

Architecture of the single agent’s deep Q-network

Fig. 2.

Architecture of the PelviNet collab-DQN

Markov Decision Process Design

Reinforcement learning is a dynamic and iterative process where an agent functions as a decision-maker, persistently interacting with a specific environment to make sequential decisions. In pelvic image registration, it is crucial to establish a Markov decision process that is well suited to the task at hand. The development of this Markov decision process comprises four fundamental components: the state set, action set, reward function, and termination criteria.

State set S: Our framework places the agents within a 3D pelvic image environment. In the state set $S$, each state $s \in S$ is defined by the spatial coordinates within the pelvic image that the agent is currently analyzing, and thus encodes the agent’s current position and orientation relative to the pelvic anatomy. To give the network temporal context, its input is formed by concatenating the current state with a predefined number of previous states.
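A fixed-length frame history is one simple way to build such an input. In this sketch, `FrameHistory` is a hypothetical helper, each observation can be any array (for example the agent’s coordinates or an image crop around them), the default length of four follows the experimental settings, and padding the first state by repetition is an assumption, since the text does not describe how the history is initialized.

```python
from collections import deque

import numpy as np


class FrameHistory:
    """Keeps the last `k` observations of one agent and stacks them into the network input."""

    def __init__(self, k=4):
        self.frames = deque(maxlen=k)

    def reset(self, obs):
        # Assumed initialization: repeat the first observation to fill the history
        self.frames.clear()
        for _ in range(self.frames.maxlen):
            self.frames.append(obs)
        return self.state()

    def push(self, obs):
        self.frames.append(obs)  # the oldest frame falls out automatically
        return self.state()

    def state(self):
        return np.stack(list(self.frames), axis=0)  # (k, *obs.shape), oldest first
```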

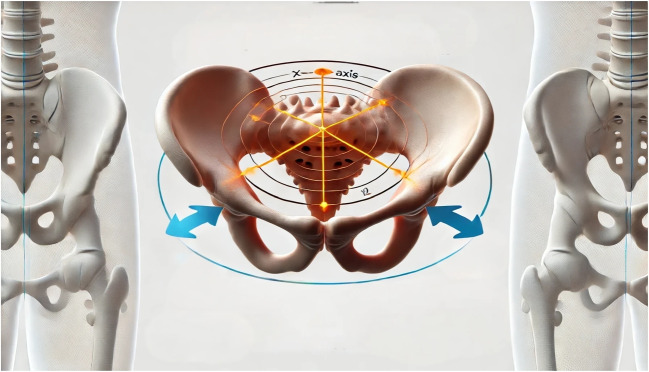

- Action set A: This component is a set $A$ of discrete actions. Each agent can move in the positive or negative direction along the X, Y, and Z axes, giving six possible actions: right, left, up, down, forward, and backward. These actions correspond to the outputs of the network’s fully connected layer, which produces a six-dimensional output, one value per movement. Fig. 3 shows the agent’s position within the pelvic image; each action moves the agent in a specific direction, allowing comprehensive exploration of the 3D pelvic environment. When navigating 3D pelvic images, the agents execute the following actions, each corresponding to a movement in three-dimensional space:

- Up Z+: The agent moves upwards along the Z-axis. This action is particularly significant in traversing the depth of pelvic images. The movement is constrained at the upper boundary of the image to prevent the agent from going out of bounds.

- Forward Y+: This action moves the agent forward along the Y-axis, which in pelvic imaging corresponds to moving towards the anterior part of the pelvis. As with the Up Z+ action, the agent’s movement is restricted at the image’s front edge.

- Right X+: Directs the agent to the right along the X-axis, allowing exploration of the lateral aspects of the pelvic region. Movement beyond the right boundary of the image is not permitted.

- Left X–: Moves the agent to the left along the X-axis, vital for examining the left lateral pelvic structures. The agent remains at its current location if it attempts to move past the leftmost boundary.

- Backward Y–: The agent moves backward along the Y-axis, navigating towards the posterior part of the pelvic region. The movement at the image’s back edge is limited to stay within the image space.

- Down Z–: Moves the agent downwards along the Z-axis. This action is crucial for delving into the lower parts of the pelvic area. The agent’s downward movement is halted at the lower boundary of the image.
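The six moves and their boundary behavior can be summarized in a small lookup table. The order of the actions in the network output and the optional `step` size (relevant if agents move at several scales) are assumptions, and the names in `ACTIONS` are hypothetical.

```python
# Hypothetical encoding of the six actions as unit steps along (X, Y, Z).
# The order in which they appear in the network output is an assumption.
ACTIONS = {
    "up_z+": (0, 0, 1),
    "forward_y+": (0, 1, 0),
    "right_x+": (1, 0, 0),
    "left_x-": (-1, 0, 0),
    "backward_y-": (0, -1, 0),
    "down_z-": (0, 0, -1),
}
ACTION_NAMES = list(ACTIONS)


def move(position, action_index, shape, step=1):
    """Apply one action to an (x, y, z) voxel position inside a volume of the given shape.

    Each coordinate is clamped to [0, size - 1], so an agent that tries to leave the
    image stays on the boundary, as described for every action above.
    """
    delta = ACTIONS[ACTION_NAMES[action_index]]
    return tuple(
        min(max(p + step * d, 0), size - 1)
        for p, d, size in zip(position, delta, shape)
    )
```

For example, `move((5, 5, 9), 0, (10, 10, 10))` leaves the agent at `(5, 5, 9)` because it is already on the upper Z boundary.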

Reward function R: We first compute the Euclidean distance $D_t = \lVert p_t - p^{*} \rVert_2$ between the agent’s current position $p_t$ and a target landmark $p^{*}$, selected by clinicians with expertise in pelvic anatomy, as well as the distance $D_{t-1} = \lVert p_{t-1} - p^{*} \rVert_2$ from the agent’s previous position to the target. The reward is derived from the difference $D_{t-1} - D_t$, which is positive when the agent moves closer to the target. This difference is clipped to $[-1, 1]$ with $\mathrm{clip}(x, a, b) = \min(\max(x, a), b)$, so that $r_t = \mathrm{clip}(D_{t-1} - D_t, -1, 1)$. Clipping keeps the reward within a manageable range and stabilizes learning, while the agent still receives positive feedback for progress towards the target, which guides it efficiently along its path.
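The reward can be written directly from this definition. In the sketch below, positions are assumed to be 3D coordinates in the same units as the landmark (voxels or millimeters), and the function names are hypothetical.

```python
import math


def clip(x, low=-1.0, high=1.0):
    """clip(x, a, b) = min(max(x, a), b)."""
    return min(max(x, low), high)


def reward(prev_pos, curr_pos, target):
    """r_t = clip(D_{t-1} - D_t, -1, 1): positive when the agent moves closer to the target."""
    d_prev = math.dist(prev_pos, target)  # D_{t-1}
    d_curr = math.dist(curr_pos, target)  # D_t
    return clip(d_prev - d_curr)
```

A one-voxel step straight towards the landmark earns the maximum reward of 1, a step straight away earns −1, and a sideways step earns a small negative value.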

Termination criteria: The termination criteria in the training process are determined by the maximum number of episodes (max_episodes) and the steps per episode (steps_per_episode). During each episode, the agent interacts with the environment until either the maximum step count per episode is reached or the environment signals termination. This iterative process continues until the maximum number of episodes is attained, ensuring the training adequately explores the environment and learns from the interactions.

Through these four elements, the multi-agent reinforcement learning framework is tailored to tackle the complexities of pelvic image registration, fostering precise alignment and enhanced analysis of medical images.

Fig. 3.

An illustrative figure depicting imaging space, the axes, the origin of the axes, and movements of agents

Experiments and Analysis

Dataset and Evaluation Criteria

A retrospective study (2014-326) approved by the Beaumont Research Institutional Review Board included 58 patients treated in the pelvic region at the Beaumont Radiation Oncology Center. Each patient underwent a planning CT on a 16-slice Philips Brilliance Big Bore CT scanner (Philips NA Corp, Andover, MA) covering the entire anatomical region, with immobilization devices in place [19]. For daily image guidance, CBCT images were acquired with the onboard imager of the Elekta Synergy® linear accelerator (Elekta Oncology Systems Ltd., Crawley, UK). CBCT dimensions ranged from 512 × 512 × 88 to 512 × 512 × 110 voxels, with in-plane pixel sizes ranging from 1 × 1 mm (3 mm slice thickness) to 0.64 × 0.64 mm (2.5 mm slice thickness). The planning CT was resampled to match the in-plane dimensions of the CBCT, and its content was shifted so that the anatomical isocenter lies at the center of the image volume (Fig. 4). The machine isocenter coincides with the center of the CBCT reconstruction volume. The top row of Fig. 4 shows the planning CT with the machine isocenter in red and the image isocenter in blue; the middle and bottom rows show the planning CT and the CBCT at the machine isocenter, respectively [19].

Fig. 4.

Content of planning CT shifted to the machine isocenter. Top left to right—axial, coronal, and sagittal planning CT image with machine isocenter in red and image isocenter in blue. Middle left to right—axial, coronal, and sagittal planning CT at machine isocenter. Bottom left to right—axial, coronal, and sagittal CBCT at machine isocenter

In our analysis, each CT/CBCT image pair had on average 154 identifiable landmarks, ranging from 91 to 212 across cases. This variability reflects the complexity of aligning and registering CT and CBCT images, which is the focus of our study. Table 1 lists 10 of the 58 cases with the number of landmark pairs for each. This subset is representative of the broader dataset; the table reports landmark counts only, without the specifics of k-combinations.

Table 1.

Summary of landmark pairs for CT and CBCT image comparisons

| Case | CBCT dim. (voxels) | CT dim. (voxels) | Voxel dim. (mm) | Landmark pairs |
|---|---|---|---|---|
| 1 | 512 × 512 × 88 | 512 × 512 × 153 | 1 × 1 × 3 | 211 |
| 2 | 512 × 512 × 88 | 512 × 512 × 163 | 1 × 1 × 3 | 176 |
| 3 | 512 × 512 × 88 | 512 × 512 × 145 | 1 × 1 × 3 | 174 |
| 4 | 512 × 512 × 88 | 512 × 512 × 165 | 1 × 1 × 3 | 130 |
| 5 | 512 × 512 × 88 | 512 × 512 × 137 | 1 × 1 × 3 | 133 |
| 6 | 512 × 512 × 88 | 512 × 512 × 130 | 1 × 1 × 3 | 127 |
| 7 | 512 × 512 × 88 | 512 × 512 × 147 | 1 × 1 × 3 | 94 |
| 8 | 512 × 512 × 88 | 512 × 512 × 122 | 1 × 1 × 3 | 151 |
| 9 | 512 × 512 × 88 | 512 × 512 × 125 | 1 × 1 × 3 | 166 |
| 10 | 512 × 512 × 88 | 512 × 512 × 160 | 1 × 1 × 3 | 176 |

Experimental Parameters

The effectiveness of PelviNet agents (three agents) in identifying anatomical landmarks was assessed on previously acquired pelvic imaging datasets. These agents were compared with a single reinforcement learning (RL) agent and with multiple agents that share only their convolutional layers (Collab-DQN). Clinical professionals manually labeled all required landmarks in three orthogonal views. The datasets were randomly divided into training (70%), validation (15%), and test (15%) sets, and the model with the highest accuracy on the validation set was selected as the best model. Accuracy was measured as the Euclidean distance error between the identified and the reference landmarks. During training, the agents used an epsilon-greedy strategy [14], choosing a random action from the action space with a probability that decreased from 1 to 0.1, rather than always choosing the action with the highest Q-value. During testing, the agents acted fully greedily, with epsilon set to 0. An episode ended when all agents either oscillated at the smallest scale or reached a maximum of 100 steps.
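A sketch of the accuracy metric follows. It assumes that predicted and reference landmarks are given as voxel indices and converted to millimeters with the voxel spacing (for example 1 × 1 × 3 mm, as in Table 1); the text does not describe this conversion, and `landmark_errors_mm` is a hypothetical name.

```python
import numpy as np


def landmark_errors_mm(predicted, ground_truth, spacing):
    """Euclidean distance (mm) between predicted and reference landmarks.

    predicted, ground_truth: (N, 3) voxel coordinates
    spacing: (3,) voxel size in mm along each axis
    """
    diff_voxels = np.asarray(predicted, float) - np.asarray(ground_truth, float)
    diff_mm = diff_voxels * np.asarray(spacing, float)  # scale each axis by its voxel size
    return np.linalg.norm(diff_mm, axis=1)
```

With 3 mm slices, a one-slice error along the third axis already counts as 3 mm, so anisotropic spacing matters when comparing errors across axes.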

In our experiments, the simulation environment was configured as follows. The network was updated every 4 steps to balance computational efficiency and learning progress. A replay buffer of 1 million experiences stored past interactions, and an initial memory of 50,000 experiences was collected to kick-start learning. Training ran for a maximum of 100 episodes of 50 steps each. The exploration rate started at 1 and decreased by 0.001 per step to a minimum of 0.1, giving a smooth transition from exploration to exploitation. Training used batches of four experiences and a discount factor (gamma) of 0.9 to balance immediate and future rewards. Each agent chose from six possible actions, and its decisions were informed by a history of four frames. Progress was monitored through frequent evaluations, and training strategies were adjusted as necessary.
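The settings above can be collected in a single configuration object. The field names are our own, and the linear ε schedule assumes that the 0.001 decrease is applied once per environment step, as stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Training hyperparameters as reported in the text (field names are our own)."""
    n_agents: int = 3
    update_frequency: int = 4       # network update every 4 environment steps
    replay_capacity: int = 1_000_000
    initial_memory: int = 50_000    # experiences collected before learning starts
    max_episodes: int = 100
    steps_per_episode: int = 50
    eps_start: float = 1.0
    eps_min: float = 0.1
    eps_delta: float = 0.001        # linear decrease per step
    batch_size: int = 4
    gamma: float = 0.9
    n_actions: int = 6
    frame_history: int = 4

    def epsilon(self, step: int) -> float:
        """Linearly decayed exploration rate after `step` environment steps."""
        return max(self.eps_min, self.eps_start - self.eps_delta * step)

    def steps_to_min_epsilon(self) -> int:
        """Number of steps until epsilon first reaches its minimum."""
        return round((self.eps_start - self.eps_min) / self.eps_delta)
```

With these values, ε reaches its minimum of 0.1 after 900 steps, and 100 episodes of 50 steps amount to at most 5,000 steps per agent.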

Result Discussions

Visualization is a key part of our analysis because it shows the spatial distribution of errors across the pelvic CT images. Fig. 5 displays three sequential axial slices, each showing the positions of the landmarks identified by the algorithm. Each landmark is accompanied by a shaded overlay marking the region of interest (ROI) in which the landmark was detected. The overlays also convey the magnitude of the detection error: the radius of each shaded area is proportional to the error in millimeters.

Fig. 5.

Illustration of collaborative search by three intelligent agents

The error annotations explicitly quantify the discrepancy between the predicted and actual landmark positions, facilitating an objective assessment of the algorithm’s performance. These visualizations not only elucidate the algorithm’s precision in localizing landmarks but also provide insight into the potential challenges and limitations inherent in automated landmark detection within pelvic CT imagery.

The quantitative results demonstrate the performance of PelviNet as shown in Table 2.

Table 2.

Quantitative results for three agents with error in millimeters (mm)

| Agent | Image-wise error (mm) | Subject-wise error (mm) | Mean Euclidean distance error (mm) |
|---|---|---|---|
| Agent 1 | 2.5 | 3.0 | 2.8 |
| Agent 2 | 2.8 | 3.2 | 3.0 |
| Agent 3 | 3.1 | 3.5 | 3.3 |

Table 2 presents the quantitative results for three agents involved in the pelvic image registration task. The errors are measured in millimeters (mm) and are categorized into three types: image-wise error, subject-wise error, and mean Euclidean distance error.

For Agent 1, the image-wise error is 2.5 mm, the subject-wise error is 3.0 mm, and the mean Euclidean distance error is 2.8 mm. Agent 2 has slightly higher errors, with an image-wise error of 2.8 mm, a subject-wise error of 3.2 mm, and a mean Euclidean distance error of 3.0 mm. Agent 3 shows the highest errors among the three agents, with an image-wise error of 3.1 mm, a subject-wise error of 3.5 mm, and a mean Euclidean distance error of 3.3 mm.
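The average errors quoted in the Abstract can be checked against Table 2 by taking the mean of each column and rounding to one decimal place. The snippet below only re-uses the published numbers; the column labels are shortened for readability.

```python
# Per-agent errors from Table 2 (mm): image-wise, subject-wise, mean Euclidean distance
table2 = {
    "Agent 1": (2.5, 3.0, 2.8),
    "Agent 2": (2.8, 3.2, 3.0),
    "Agent 3": (3.1, 3.5, 3.3),
}

columns = ("image-wise", "subject-wise", "mean Euclidean")
averages = {
    name: round(sum(row[j] for row in table2.values()) / len(table2), 1)
    for j, name in enumerate(columns)
}
print(averages)  # {'image-wise': 2.8, 'subject-wise': 3.2, 'mean Euclidean': 3.0}
```

The column means (2.8, 3.2, and 3.0 mm) match the averages reported in the Abstract.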

These results illustrate the performance and accuracy of the agents in the pelvic image registration task, highlighting the effectiveness and areas for improvement in the proposed method.

Discussion

Our study indicates that PelviNet’s multi-agent convolutional network is a useful contribution to pelvic image registration. Although the results were obtained on a relatively small dataset of 58 patients, PelviNet’s shared convolutional layers and communication mechanism show promise for improving the accuracy and efficiency of medical imaging in gynecological cancer treatment.

The precision facilitated by PelviNet is particularly crucial in the context of radiation therapy, where exact targeting is paramount. PelviNet’s ability to enhance image registration aligns closely with the needs of personalized medicine, offering a pathway to more tailored and effective radiation treatment plans. This is especially significant considering the challenges posed by the variability in tumor size, location, and the sensitive tissues involved in gynecological cancers.

The main advantages of MARL are enhanced collaboration and learning efficiency among agents; a possible disadvantage is increased computational complexity. Research on RL for registration remains limited because of the high dimensionality of image data and the need for intricate reward functions. In our experiments, training took about 10 h per model on average, and prediction took about 30 s per image.

Statistically, gynecological cancers represent a substantial burden, with cervical cancer alone accounting for over 500,000 new cases annually worldwide (WHO, 2020). The precision of radiation therapy, critical for targeting tumors while sparing healthy tissue (Fig. 6), hinges on the accuracy of image registration—a domain where PelviNet shows promising advancements.

Fig. 6.

IMRT field (blue) surrounding the cervix cancer (red) and the involved lymph nodes (green)

The preliminary yet promising results underscore the potential for expanding this research. Future studies could focus on enlarging the dataset, incorporating real-time clinical feedback to refine the model, and exploring the integration of PelviNet within broader clinical workflows. Such advancements could improve PelviNet’s robustness and applicability and potentially extend its utility to other complex imaging tasks across various medical specialties, thereby broadening its impact on computational healthcare.

Conclusion

In conclusion, PelviNet applies multi-agent systems to medical imaging with the specific aim of improving pelvic image registration. This convolutional network architecture uses shared learning and inter-agent communication to support radiation therapy planning for gynecological cancers. Despite the limited dataset, the empirical results highlight PelviNet’s potential to improve diagnostic and treatment precision. Further research on larger datasets is needed to fully establish PelviNet’s capabilities and to extend its benefits beyond pelvic imaging to other areas of diagnosis and treatment. More broadly, this study illustrates the impact of advanced computational methods in healthcare and opens avenues for future innovation in medical technology.

Author Contribution

Z. Rguibi and A. Hajami contributed to manuscript drafting and data analysis. Z. Rguibi, A. Hajami, D. Zitoni, and H. Allali contributed to the manuscript revision. Z. Rguibi contributed to the design of the work. All authors contributed to the final approval of the completed version.

Declarations

Conflict of Interest

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Nix, M., Gregory, S., Aldred, M., Aspin, L., Lilley, J., Al-Qaisieh, B., ... & Murray, L. (2022). Dose summation and image registration strategies for radiobiologically and anatomically corrected dose accumulation in pelvic re-irradiation. Acta Oncologica, 61(1), 64–72.

2. Bonilauri, A., Sangiuliano Intra, F., Baglio, F., & Baselli, G. (2023). Impact of anatomical variability on sensitivity profile in fNIRS-MRI integration. Sensors, 23(4), 2089. doi:10.3390/s23042089

3. Zitová, B., & Flusser, J. (2003). Image registration methods: A survey. Image and Vision Computing, 21(11), 977–1000.

4. Han, R., Uneri, A., Vijayan, R. C., et al. (2021). Fracture reduction planning and guidance in orthopaedic trauma surgery via multi-body image registration. Medical Image Analysis, 68, 101917.

5. Kwon, J. W., Huh, S. J., Yoon, Y. C., et al. (2008). Pelvic bone complications after radiation therapy of uterine cervical cancer: Evaluation with MRI. American Journal of Roentgenology, 191(4), 987–994.

6. Goshtasby, A. A. (2005). 2-D and 3-D image registration: For medical, remote sensing, and industrial applications. Wiley-Interscience.

7. Rohlfing, T., Brandt, R., Menzel, R., & Maurer, C. R. (2004). Evaluation of atlas selection strategies for atlas-based image segmentation with application to confocal microscopy images of bee brains. NeuroImage, 21(4), 1428–1442.

8. Buckley, J. G., Dowling, J. A., Sidhom, M., Liney, G. P., Rai, R., Metcalfe, P. E., Holloway, L. C., & Keall, P. J. (2021). Pelvic organ motion and dosimetric implications during horizontal patient rotation for prostate radiation therapy. Medical Physics, 48(1), 397–413. doi:10.1002/mp.14579

9. Eminowicz, G., Motlib, J., Khan, S., Perna, C., & McCormack, M. (2016). Pelvic organ motion during radiotherapy for cervical cancer: Understanding patterns and recommended patient preparation. Clinical Oncology, 28(9), e85–e91. doi:10.1016/j.clon.2016.04.044

10. Pei, L., Vidyaratne, L., Rahman, M. M., et al. (2020). Context aware deep learning for brain tumor segmentation, subtype classification, and survival prediction using radiology images. Scientific Reports, 10, 19726. doi:10.1038/s41598-020-74419-9

11. Zou, J., Gao, B., Song, Y., & Qin, J. (2022). A review of deep learning-based deformable medical image registration. Frontiers in Oncology, 12, 1047215. doi:10.3389/fonc.2022.1047215

12. Xu, L., Zhu, S., & Wen, N. (2022). Deep reinforcement learning and its applications in medical imaging and radiation therapy: A survey. Physics in Medicine & Biology, 67(22). doi:10.1088/1361-6560/ac9cb3

13. Sukhbaatar, S., Szlam, A., & Fergus, R. (2016). Learning multiagent communication with backpropagation. Advances in Neural Information Processing Systems, 29, 2244–2252.

14. Tokic, M. (2010). Adaptive ε-greedy exploration in reinforcement learning based on value differences. In Annual Conference on Artificial Intelligence. Berlin, Heidelberg: Springer.

15. Qu, Y., Li, X., Yan, Z., Zhao, L., Zhang, L., Liu, C., ... & Ai, S. (2021). Surgical planning of pelvic tumor using multi-view CNN with relation-context representation learning. Medical Image Analysis, 69, 101954.

16. Hu, M., Zhang, J., Matkovic, L., Liu, T., & Yang, X. (2023). Reinforcement learning in medical image analysis: Concepts, applications, challenges, and future directions. Journal of Applied Clinical Medical Physics, 24(2), e13898.

17. Geng, H., Xiao, D., Yang, S., Fan, J., Fu, T., Lin, Y., ... & Yang, J. (2023). CT2X-IRA: CT to x-ray image registration agent using domain-cross multi-scale-stride deep reinforcement learning. Physics in Medicine & Biology, 68(17), 175024.

18. Bekkouch, I. E. I., Maksudov, B., Kiselev, S., Mustafaev, T., Vrtovec, T., & Ibragimov, B. (2022). Multi-landmark environment analysis with reinforcement learning for pelvic abnormality detection and quantification. Medical Image Analysis, 78, 102417.

19. Yorke, A. A., McDonald, G. C., Solis, D., & Guerrero, T. (2019). Pelvic Reference Data (Version 1) [Data set]. The Cancer Imaging Archive. doi:10.7937/TCIA.2019.WOSKQ5OO