Abstract

Oral cancer is a major global health problem. It is commonly diagnosed at an advanced stage, although it is often preceded by clinically visible oral mucosal lesions, termed oral potentially malignant disorders, which are associated with an increased risk of oral cancer development. There is an unmet clinical need for effective screening tools to assist front-line healthcare providers in determining which patients should be referred to an oral cancer specialist for evaluation. This study reports the development and evaluation of the mobile detection of oral cancer (mDOC) imaging system and an automated algorithm that generates a referral recommendation from mDOC images. mDOC is a smartphone-based autofluorescence and white light imaging tool that captures images of the oral cavity. Data were collected using mDOC from a total of 332 oral sites in a study of 29 healthy volunteers and 120 patients seeking care for an oral mucosal lesion. A multimodal image classification algorithm was developed to generate a recommendation of “refer” or “do not refer” from mDOC images, using the expert clinical referral decision as the ground truth label. The algorithm was developed using cross-validation on 80% of the dataset and then retrained and evaluated on a separate holdout test set. Referral recommendations for the holdout test set had a sensitivity of 93.9% and a specificity of 79.3% with respect to expert clinical referral decisions. The mDOC system has the potential to be used in community physicians’ and dentists’ offices to help identify patients who need further evaluation by an oral cancer specialist.

Prevention Relevance: Our research focuses on improving the early detection of oral precancers/cancers in primary dental care settings with a novel mobile platform that can be used by front-line providers to aid in assessing whether a patient has an oral mucosal condition that requires further follow-up with an oral cancer specialist.

Introduction

Oral cancer is a major global health problem. Despite the ease of direct visual examination of the oral cavity, oral cancer is frequently diagnosed at an advanced stage, when therapy is more difficult, more morbid, and less successful than treatment for patients with earlier stages of disease (1). Oral cancer precursors, designated oral potentially malignant disorders (OPMD), are oral mucosal lesions (OML) with an increased risk of developing into oral cancer. Leukoplakia, oral lichen planus, and erythroplakia are common types of OPMDs. Patients with OPMDs therefore form an identifiable group at increased risk of oral cancer who can be referred to oral cancer specialists for surveillance. However, front-line healthcare providers such as primary care physicians, nurse practitioners, community dentists, and dental hygienists, who are usually the first to see patients with OMLs, often lack the training and expertise needed to recognize and distinguish oral cancer and OPMDs from benign OMLs (2–5). Major factors contributing to delayed detection and diagnosis of oral cancer include knowledge gaps, lack of experience, and breakdowns in communication between primary care providers and oral cancer specialists (1, 4, 6). Yet early diagnosis of oral cancer is essential for improving outcomes, as it significantly improves long-term survival and reduces treatment-related morbidity (7, 8).

The identification of high-risk OMLs is clinically challenging (9). The U.S. Preventive Services Task Force does not currently recommend oral cancer screening by primary care providers in the general population, because there is insufficient evidence that screening the general population with currently available techniques increases the identification of patients with oral cancer or worrisome precancerous conditions (10). However, the American Dental Association recommends that dental professionals screen for OPMDs in all patients, particularly those at increased risk of oral cancer (11, 12). The current standard practice for oral cancer screening is conventional oral examination (COE), which involves both visual and tactile examination of the oral cavity under white light (WL; ref. 12). Clinical management of innocuous lesions involves surveillance. If a suspicious OML is found during COE, it is typically followed up with a referral to an oral cancer specialist for further evaluation, which often includes biopsy for microscopic examination, the gold standard for diagnosing oral cancer and oral epithelial dysplasia (OED; ref. 9). OED is a microscopic diagnosis based on the architectural and cellular changes in the epithelium caused by the accumulation of genetic alterations within the oral mucosa. The presence of OED in OPMDs indicates an increased risk of progression to oral cancer.

However, COE is subjective, depends on the examiner’s expertise, and has poor diagnostic accuracy in distinguishing OPMDs with OED or oral cancer from their benign mimics (3, 13). This is particularly problematic in resource-constrained areas, where access to oral cancer specialists is limited (14). Early triage of OMLs must therefore be both sensitive, so that lesions needing specialist evaluation are not missed, and specific, to avoid inappropriate referrals to oral cancer specialists; these appointments often require time, travel, and expense on the patient’s part and clinical time from the specialist.

Adjunctive methods are often used for oral cancer screening and include the use of staining methods such as Lugol’s iodine and toluidine blue (15–17). These minimally invasive methods rely on using a topical dye to increase the contrast between abnormal and normal tissues. Optical imaging methods, such as narrow-band imaging, and biomarkers have also been explored. Autofluorescence (AF) imaging is another optical imaging technique that has been widely studied as a noninvasive tool to improve the early detection of oral cancer and its precursors (18) because of its ability to increase the contrast between abnormal lesions and surrounding normal tissue, improving sensitivity for early detection of oral cancer and OED. Many studies, using the commercially available VELscope imaging tool (19) and other imaging platforms, have shown that AF visualization is more sensitive than examination under WL for detection of abnormal OMLs, with abnormal oral lesions showing decreased AF and appearing dark using standard AF imaging (20–27). However, the specificity of AF imaging is limited because inflammatory, cancerous, and precancerous lesions can all show loss of AF (13, 28). Because COE and AF imaging rely on subjective recognition skills, it is difficult for nonexpert providers to use these techniques to accurately detect oral cancer and its precursors (28).

The use of machine learning algorithms to interpret WL and AF images has the potential to improve the identification of subjects in need of further evaluation by an oral cancer specialist by reducing subjectivity and the need for clinical expertise. This could be especially beneficial in settings with limited access to oral cancer specialists. Existing work has primarily focused on using deep learning methods to interpret WL images of the oral cavity (29–32). Although many pilot studies have demonstrated the promise of machine learning to improve point-of-care diagnostics for oral cancer (30–34), there remains a need for an easy-to-use mobile oral imaging device that implements machine learning to help mid-level healthcare providers make appropriate referral decisions in primary medical and dental care settings.

In this article, we describe a mobile detection of oral cancer (mDOC) smartphone multimodal imaging platform to assist healthcare providers with identifying subjects in need of further evaluation by an oral cancer specialist. mDOC integrates the documentation of demographic and risk information, the collection of WL and AF images, and machine learning analysis to provide clinicians with a data-driven approach to triage patients in primary medical and dental care settings. The mDOC system consists of a smartphone equipped with low-cost optics and electronics and a user-friendly application that enables collection of AF and WL images from various anatomic sites of the oral cavity. Images of oral mucosa collected using the mDOC system are automatically analyzed by a machine learning–based algorithm to generate a referral determination (“do not refer” or “refer” for specialist evaluation and possible biopsy). In this study, mDOC imaging results and automated referral recommendations are presented and compared with referral decisions made by expert clinicians in a study of 29 healthy volunteers and 120 subjects seeking care for the evaluation of an OML.

Materials and Methods

mDOC system design and characterization

The mDOC device (Fig. 1) is a smartphone platform for WL and AF imaging of the oral mucosa. The mDOC system consists of a HUAWEI P30 Pro smartphone, optics, and electronics housed in a custom 3D-printed case (Fig. 1A and B). AF and WL images are acquired with the smartphone camera at a resolution of 2,448 × 3,280 pixels. AF imaging is enabled by adding a 405-nm LED excitation source (Mouser Electronics, 897-LZ4V0UB0R00U8), which is powered through an Arduino Nano (Arduino, A000005) by an external 7.4 V rechargeable LiPo battery (URGENEX, 903048). Light from the 405-nm LED passes through a condenser lens, a bandpass filter (Semrock, FF01-400/40-25), and a collimating lens to illuminate the tissue. AF emitted from the tissue passes through a 435-nm longpass filter (Omega, XF3088) and is imaged by the smartphone camera. WL images are acquired with the auto white balance setting, using the smartphone flash as the WL illumination source. The 405-nm LED is controlled via serial communication over USB between the Arduino Nano and the smartphone. The cost of goods for the complete mDOC system is US$1,370; a breakdown of the cost by component is shown in Supplementary Table S1.

Figure 1.

mDOC imaging system. A, Prototype mDOC device. B, Diagram of mDOC system and components. C, Image of United States Air Force resolution target taken with mDOC in WL imaging mode. Group 3 element 4 is resolved (see inset), indicating transverse resolution of 44 μm. D, Screenshots from the custom mDOC Android application that guides the clinician through the data collection process.

A custom Android application, developed in Android Studio in the Kotlin language, guides the user through data collection and imaging (Fig. 1D). Patient demographics and risk factors, including age, gender, ethnicity, race, smoking habits, and alcohol consumption habits, are collected first. The user is then guided to capture a WL and AF image pair at each site of interest in the oral cavity. Images are collected sequentially, with the application controlling the WL and AF illumination sources during the capture sequence, and are captured using the smartphone camera’s autofocus and auto exposure settings. The application allows the user to vary the camera zoom setting from 1× to 10× (optical zoom from 1× to 3×, combined optical and digital zoom from 3× to 5×, and digital zoom from 5× to 10×, per the smartphone specifications).

The nominal working distance of mDOC is 10 cm. A peak irradiance measurement was taken at this working distance using a power meter (Newport, Model 1918-R). The field of view of mDOC varies depending on the zoom value. To characterize the spatial resolution of the system, a United States Air Force resolution target was imaged with the mDOC device under WL illumination. The depth of field (DOF) of the mDOC system was measured by locking the camera focus on a United States Air Force resolution target at a working distance of 10 cm and acquiring subsequent images while that target was translated axially; the DOF was determined by evaluating line plots across various elements of the resolution target at each target location.
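The DOF procedure above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ analysis code. It counts a target element as resolved when the Michelson contrast of its line plot meets a threshold, and it reports the longest contiguous axial range over which that holds. The 0.2 contrast threshold and the function names are hypothetical, because the resolvability criterion used in the study is not stated.

```python
def michelson_contrast(profile):
    """Contrast of a line plot across a bar group: (Imax - Imin) / (Imax + Imin)."""
    hi, lo = max(profile), min(profile)
    return (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0


def depth_of_field(positions_mm, profiles, threshold=0.2):
    """Longest contiguous axial range (mm) over which a target element stays resolved.

    positions_mm: axial target positions in ascending order (camera focus locked).
    profiles: one line plot (list of intensities) per target position.
    threshold: hypothetical minimum contrast for the element to count as resolved.
    """
    best, start = 0.0, None
    for z, profile in zip(positions_mm, profiles):
        if michelson_contrast(profile) >= threshold:
            if start is None:
                start = z  # first position of a resolved run
            best = max(best, z - start)
        else:
            start = None  # a blurred position ends the current run
    return best


sharp = [10, 90, 10, 90]    # bars clearly separated: contrast 0.8
blurred = [48, 52, 48, 52]  # bars washed out: contrast 0.04
print(depth_of_field([-4.0, -2.0, 0.0, 2.0, 4.0], [blurred, sharp, sharp, sharp, blurred]))  # 4.0
```

Repeating the measurement for several target elements, each with its own line plots, gives a DOF value per feature size.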

Data collection

The mDOC system was used to image subjects from two different groups representing different levels of prevalence and risk for oral cancer: (i) a low-prevalence, low-risk population of healthy volunteers at Rice University; (ii) a high-prevalence, at-risk patient population at the UTHealth School of Dentistry at Houston and the University of Texas M.D. Anderson Cancer Center. All studies were conducted in accordance with the principles set forth in the Belmont Report.

The study of healthy volunteers was reviewed and approved by the Institutional Review Board of Rice University (protocols #2020-220, #2016-172). Healthy adult volunteers were invited to participate. Written informed consent was obtained from all subjects.

The clinical study was conducted in high-prevalence, at-risk patient populations at the Oral Pathology/Oral Medicine Clinic of UTHealth School of Dentistry at Houston and at the Head and Neck Surgery Clinic of the University of Texas M.D. Anderson Cancer Center. Community dentists and physicians refer patients with OMLs suspected of being OPMDs and other chronic mucocutaneous oral inflammatory and ulcerative diseases to the Oral Pathology/Oral Medicine Clinic at the UTHealth School of Dentistry at Houston. Patients are referred to the M.D. Anderson Head and Neck Surgery Clinic for the treatment of biopsy-proven oral cancer and high-risk OED.

The study was reviewed and approved by the institutional review boards of the UTHealth School of Dentistry at Houston (#HSC-DB-20-1317), M.D. Anderson Cancer Center (#2021-0781), and Rice University (#2021-220). At each clinical site, adult patients presenting with an OML were invited to participate. Written informed consent was obtained from all subjects.

A statistical power analysis for the clinical study showed that with α = 0.05, power = 0.80, and degrees of freedom = 1 for 2 × 2 matrices of frequencies of counts, a sample size of 88 is needed to detect medium effect sizes. On this basis, a sample size of 120 was selected to detect medium effect sizes while allowing for the chance that some patient images may not be of sufficient quality for algorithm development.
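The sample-size figure above can be reproduced under two assumptions that the text implies but does not state: the test is a chi-square test of association on a 2 × 2 table (df = 1), and a “medium” effect is Cohen’s conventional w = 0.3. With df = 1, the test statistic under the alternative is distributed as (Z + √λ)², where Z is standard normal and the noncentrality is λ = N·w². The standard library’s `statistics.NormalDist` is therefore sufficient to compute power without a noncentral chi-square routine.

```python
from statistics import NormalDist


def chi2_df1_power(n, w=0.3, alpha=0.05):
    """Power of a df = 1 chi-square test for Cohen's effect size w with n subjects."""
    z = NormalDist()
    crit = z.inv_cdf(1 - alpha / 2)  # square root of the chi-square(1) critical value
    shift = (n * w ** 2) ** 0.5      # square root of the noncentrality n * w**2
    # Power = P(|Z + shift| > crit)
    return z.cdf(shift - crit) + z.cdf(-shift - crit)


def required_n(w=0.3, alpha=0.05, power=0.80):
    """Smallest sample size whose power reaches the target."""
    n = 2
    while chi2_df1_power(n, w, alpha) < power:
        n += 1
    return n


print(required_n())  # 88
```

Under these assumptions, power is just below 0.80 at N = 87 and just above it at N = 88, which matches the reported sample size.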

Prior to subject enrollment, users were trained to use the mDOC system on an oral training model. Each subject was imaged in a single session during the patient exam; no special positioning of the subject was required. Prior to imaging, demographic data were collected for each subject, and oral cancer risk factors such as smoking and drinking habits were recorded for each subject in the patient population. To standardize imaging conditions, the room lights were switched off to minimize the effects of ambient light. One or more WL and AF image pairs were captured at each anatomic site of interest. In the healthy volunteer study, images were obtained from a predetermined list of anatomic sites, including the floor of mouth, ventral tongue, lateral tongue, buccal mucosa, gingiva, and soft/hard palate. In the clinical study, sites of interest were selected at the clinician’s discretion, and the clinical impression/description of each site was recorded. Following the imaging session, deidentified data and images were uploaded to a secure REDCap database (RRID: SCR_003445).

For each patient imaged in the clinical study, the WL and AF mDOC images were reviewed at a subsequent review session by an expert oral cancer specialist from the corresponding clinical institution (A.M. Gillenwater or N. Vigneswaran). The specialist provided an expert clinical opinion for each imaged site on whether the patient should be referred for evaluation by an oral cancer specialist. The expert clinical referral decision served as the ground truth label for each site. It was therefore based on the expert clinician’s visual inspection and palpation of the site during the patient’s visit and on any additional information provided at the review session. During this review session, the expert clinician had access to the patient demographics and oral cancer risk factors recorded in the study, the clinical impression/description of the oral site, clinical features of OMLs (if any), histopathology results from any biopsies taken per standard of care at the time of the imaging session, and the mDOC images of the oral site, but was blinded to the automated mDOC referral recommendation. Each expert clinical referral decision was assigned to one of three categories: (i) do not refer; (ii) refer for reasons other than suspicion of precancer/cancer; or (iii) refer for suspicion of precancer/cancer. (The category “refer for reasons other than suspicion of precancer/cancer” included, for example, a referral to remove a benign growth.) Categories (ii) and (iii) were then merged into a single “refer” category. This yielded a binary expert clinical referral decision of “do not refer” or “refer” for each oral site, which served as the ground truth for analysis. All oral sites in healthy volunteers were assigned a ground truth referral decision of “do not refer.”
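The labeling rule above can be sketched as a small mapping from the three expert categories to the binary ground truth. The enum and function names are hypothetical and are used only for illustration.

```python
from enum import Enum


class ExpertDecision(Enum):
    """The three expert clinical referral categories (hypothetical names)."""
    DO_NOT_REFER = "do not refer"
    REFER_OTHER = "refer for reasons other than suspicion of precancer/cancer"
    REFER_SUSPICION = "refer for suspicion of precancer/cancer"


def binary_label(decision, healthy_volunteer=False):
    """Ground truth for analysis: 1 = "refer", 0 = "do not refer"."""
    if healthy_volunteer:
        return 0  # all healthy-volunteer sites are treated as "do not refer"
    # Categories (ii) and (iii) collapse into a single "refer" class.
    return 0 if decision is ExpertDecision.DO_NOT_REFER else 1
```

Keeping the three-way category alongside the binary label allows the “refer” class to be broken down later by reason, as in Table 2 and Fig. 3B.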

Dataset curation

A manual quality control (QC) assessment of all AF and WL images was performed by a single nonclinician reviewer who was blinded to all clinical information and pathologic diagnoses. Images were considered high quality if the anatomic site of interest was in focus and at least 50% of the tissue of interest was fully illuminated. Images passing QC were manually annotated to outline the oral mucosa of interest, excluding teeth, shadows, gloves, dental tools, and any areas of oral mucosa outside the region of interest. Each image was then masked using its annotation, cropped to the bounding box of the annotation, and resized to 224 × 224 pixels to match the input size of the classification model.
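The mask, crop, and resize steps above are sketched below under simplifying assumptions. Images are single-channel nested lists rather than RGB arrays, so that the example needs no third-party packages. Nearest-neighbour resampling is also an assumption, since the interpolation used in the study is not stated. Like a plain 224 × 224 resize, the sketch does not preserve aspect ratio.

```python
def preprocess(image, mask, size=224):
    """Mask an image with its annotation, crop to the annotation's bounding box,
    and resize to size x size.

    image: 2D list of pixel values; mask: 2D list of 0/1 of the same shape,
    where 1 marks the annotated oral mucosa of interest.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("empty annotation")
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    r0, r1, c0, c1 = rows[0], rows[-1] + 1, cols[0], cols[-1] + 1
    # Zero out pixels outside the annotation, then keep only its bounding box.
    crop = [[image[r][c] if mask[r][c] else 0 for c in range(c0, c1)]
            for r in range(r0, r1)]
    h, w = len(crop), len(crop[0])
    # Nearest-neighbour resampling to the square network input size.
    return [[crop[i * h // size][j * w // size] for j in range(size)]
            for i in range(size)]
```

In a PyTorch pipeline, the same steps would usually run on tensors and be followed by the ImageNet normalization expected by the pretrained network.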

Image data from sites with collected image pairs were split into three subdatasets for analysis: a WL-only dataset, an AF-only dataset, and a multimodal dataset of paired WL and AF images. The full dataset was divided into a final training set (80% of the dataset) and a holdout test set (20% of the dataset), stratified by the ground truth referral decision within each study population. A cross-validation strategy was used to assess the generalizability of models developed on the mDOC training dataset. The final training set was further randomly split at the anatomic site level, stratified by ground truth referral decision, into four folds that served as the cross-validation training and validation sets (stratified k-fold cross-validation with k = 4). This corresponds to an overall 60–20–20 training–validation–test split for each modality. The median number of WL or AF images per anatomic site was 1 (range: 1–6). In every case, all images from a single anatomic site were allocated to the same set (training, validation, or holdout test).
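The site-level, stratified split described above can be sketched as follows. This is a sketch under assumptions, not the authors’ code. Each anatomic site is labeled with a stratum, taken here as a (study population, referral label) tuple. Each stratum is shuffled with a fixed seed, 20% of its sites are held out, and the remaining sites are dealt round-robin into four folds. The seed, the round-robin assignment, and the names are hypothetical. Because sites rather than images are assigned, all images from a site automatically fall in the same set.

```python
import random
from collections import defaultdict


def stratified_site_split(site_strata, test_frac=0.2, n_folds=4, seed=0):
    """Split anatomic sites into a holdout test set and n_folds CV folds.

    site_strata: dict mapping site_id -> stratum, e.g. ("patients", 1).
    Returns (test_sites, folds), where folds is a list of n_folds lists of site ids.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for site, stratum in sorted(site_strata.items()):
        by_stratum[stratum].append(site)
    test, folds = [], [[] for _ in range(n_folds)]
    for stratum in sorted(by_stratum):
        sites = by_stratum[stratum]
        rng.shuffle(sites)
        n_test = round(len(sites) * test_frac)
        test.extend(sites[:n_test])  # holdout test sites for this stratum
        # Deal the remaining sites round-robin so each fold keeps the class balance.
        for i, site in enumerate(sites[n_test:]):
            folds[i % n_folds].append(site)
    return test, folds
```

With four folds, each validation fold is a quarter of the 80% training portion, that is, 20% of all sites. This reproduces the 60–20–20 training–validation–test proportions.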

Algorithm development

An image analysis algorithm was developed in the PyTorch framework to generate an automated referral recommendation from the mDOC WL and/or AF images. A small, mobile-friendly network, mobilenet_v3_small, was selected for the binary image classification task. The binary expert referral decision served as the ground truth for model development, and the model output was the probability of the “refer” category. The network was pretrained on the ImageNet dataset and then fine-tuned on the mDOC dataset. Training and validation were run for a maximum of 120 epochs, with a minimum of 60 epochs before stability was evaluated. The learning rate step size was set to 20 epochs. The training and validation batch sizes were set to 5. All algorithm development was performed on a computer with two GeForce RTX 2080 Ti graphics processing units, each with 11 GB of synchronous dynamic random access memory (SDRAM).

First, a unimodal approach was taken. In this study, WL images and AF images were used separately to train and validate unimodal models using cross-validation methods. Cross-validation was first performed on the training and validation datasets to determine generalizability. Then, based on cross-validation performance, the training and validation datasets were combined into a final training set to train a single model that was then evaluated using the holdout test set.

A multimodal approach was then considered. In this study, pretrained unimodal models were combined to create an ensemble model that takes the output features of the unimodal models and concatenates the features before applying a linear classifier layer in a late fusion strategy. Cross-validation was first used with the ensemble model to assess generalizability, and then the model was retrained on the final training set and evaluated using the holdout test set.
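The late fusion step can be illustrated with the minimal sketch below. It concatenates the WL and AF feature vectors, applies one linear layer with two outputs (“do not refer”, “refer”), and takes a softmax. It is written in plain Python to stay self-contained. In PyTorch the same step would be a feature concatenation (`torch.cat`) followed by `nn.Linear`. The feature sizes, weights, and function name are hypothetical. The text also does not say whether the pretrained unimodal backbones were frozen during fusion training.

```python
import math


def late_fusion_prob_refer(wl_features, af_features, weights, bias):
    """Late fusion: concatenate per-modality features, apply a linear layer, softmax.

    weights: two rows (classes "do not refer", "refer"), each of length
    len(wl_features) + len(af_features); bias: two values.
    Returns the softmax probability of "refer".
    """
    fused = list(wl_features) + list(af_features)  # feature concatenation
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("weight rows must match the fused feature length")
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, bias)]
    m = max(logits)  # subtract the max logit for a numerically stable softmax
    exps = [math.exp(z - m) for z in logits]
    return exps[1] / sum(exps)
```

Because fusion happens after each backbone has produced its features, each unimodal model can be pretrained and cross-validated on its own before the classifier layer is trained on the paired data.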

Metrics for evaluation

The performance of each model was evaluated using the AUC-ROC. The model with the best AUC-ROC value was saved for each unimodal and multimodal run, and early stopping based on loss curves was used to mitigate overfitting. AUC-ROC values for the validation and holdout test sets were calculated at the anatomic site level, using the highest-scoring image for each site. A Brier score was also calculated to assess the reliability of the model’s softmax probability output. To evaluate cross-validation performance, a two-tailed Wilcoxon signed-rank test was used to determine whether the training and validation AUC-ROC values of each modality differed significantly across folds (35); differences were considered significant at P ≤ 0.05. For each algorithm, an optimal threshold was determined using the Youden index (36, 37), and sensitivity and specificity are reported at this threshold. Briefly, the Youden index is the difference between the true-positive rate and the false-positive rate (equivalently, sensitivity + specificity − 1). Its maximum identifies the point on the ROC curve that is farthest from the line of chance. Precision and the F1 score were also used to evaluate the machine learning models. A gradient-based visualization method, Grad-CAM++ (https://github.com/jacobgil/pytorch-grad-cam), was used to generate attention maps highlighting the regions of the image that contributed to the final model prediction (38). Each attention map was masked with the oral mucosal annotation and then overlaid on the WL image passed into the network.
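The site-level metrics described above can be sketched with the standard library alone. Image scores are first collapsed to one score per anatomic site by taking the highest-scoring image. AUC-ROC is computed in its Mann–Whitney form, as the probability that a random “refer” site outscores a random “do not refer” site, with ties counted as one half. The Youden threshold maximizes J = TPR − FPR over the observed scores. The convention that a score at or above the threshold predicts “refer” is an assumption of this sketch. In practice, scikit-learn’s `roc_auc_score`, `roc_curve`, and `brier_score_loss` provide the same quantities.

```python
from collections import defaultdict


def site_level_scores(image_scores):
    """Collapse (site_id, P("refer")) pairs to one score per site: the highest-scoring image."""
    best = defaultdict(float)
    for site_id, prob in image_scores:
        best[site_id] = max(best[site_id], prob)
    return dict(best)


def auc_roc(labels, scores):
    """AUC-ROC as P(random 'refer' site outscores random 'do not refer' site); ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_threshold(labels, scores):
    """Cut-point maximizing J = TPR - FPR, predicting 'refer' when score >= threshold."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_t, best_j = None, -1.0
    for t in sorted(set(scores)):
        tpr = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t) / n_pos
        fpr = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= t) / n_neg
        if tpr - fpr > best_j:
            best_t, best_j = t, tpr - fpr
    return best_t, best_j


def brier_score(labels, probs):
    """Mean squared difference between predicted P("refer") and the 0/1 label."""
    return sum((p - y) ** 2 for y, p in zip(labels, probs)) / len(labels)
```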

Data availability

Data underlying the results presented in this article may be obtained from the corresponding author upon reasonable request.

Results

A peak irradiance value of 10 mW/cm² was measured from the AF illumination source at a working distance of 10 cm. At a working distance of 10 cm and at the most commonly used optical zoom factor of 3×, the field of view was measured to be 2.5 × 3.4 cm, and the transverse resolution in the WL imaging mode was measured to be 44 μm (Fig. 1C; inset shows group 3, element 4 resolved). The DOF of mDOC was measured to be 5 mm, 2.5 cm, and 6 cm for imaging features of size 44, 100, and 300 μm, respectively.
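The link between “group 3, element 4 resolved” and the 44 μm figure follows from the standard USAF 1951 target formula. The spatial frequency in line pairs per millimetre is 2^(group + (element − 1)/6), and the width of a single bar is half of one line pair. The short check below reproduces the reported value. The function name is illustrative.

```python
def usaf_line_width_um(group, element):
    """Width of one bar (half a line pair) of a USAF 1951 target element, in micrometres."""
    lp_per_mm = 2 ** (group + (element - 1) / 6)  # line pairs per millimetre
    return 1000 / (2 * lp_per_mm)


print(round(usaf_line_width_um(3, 4), 1))  # 44.2
```

Group 3, element 4 corresponds to about 11.3 line pairs per millimetre, so each bar is about 44 μm wide.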

mDOC images were collected from a healthy volunteer population (29 subjects) and from a high-prevalence, at-risk patient population (120 subjects). Table 1 summarizes the demographics and habits of the 149 subjects included in the analysis. The patient population was largely White (71.7%), non-Hispanic (90.0%), and female (57.5%), with a mean age of 63.3 ± 14.3 years. Based on self-reported habits, most patients did not smoke cigarettes (83.3%), did not use other smoking tobacco (90.8%), and did not use smokeless tobacco (91.7%), but did drink alcohol (58.3%). The healthy volunteer population was primarily Asian (55.2%), non-Hispanic (89.7%), and male (55.2%), with a mean age of 28.9 ± 9.3 years. Smoking and drinking habits were not recorded for this population.

Table 1.

Summary of subjects and demographic information.

| Characteristic | Category | Patient study, N (%)ᵃ | Healthy volunteer study, N (%)ᵃ |
|---|---|---|---|
| N | | 120 | 29 |
| Age | Range (years) | 19–87 | 21–56 |
| | Mean (years) | 63.3 ± 14.3 | 28.9 ± 9.3 |
| Gender | Female | 69 (57.5%) | 13 (44.8%) |
| | Male | 51 (42.5%) | 16 (55.2%) |
| Ethnicity | Hispanic | 12 (10.0%) | 3 (10.3%) |
| | Non-Hispanic | 108 (90.0%) | 26 (89.7%) |
| Race | American Indian or Alaska Native | 0 (0.0%) | 0 (0.0%) |
| | Asian | 22 (18.3%) | 16 (55.2%) |
| | Black or African American | 8 (6.7%) | 0 (0.0%) |
| | Native Hawaiian or Other Pacific Islander | 0 (0.0%) | 0 (0.0%) |
| | White | 86 (71.7%) | 12 (41.4%) |
| | Not recorded | 1 (0.8%) | 0 (0.0%) |
| | Multiple | 3 (2.5%) | 1 (3.4%) |
| Cigarette smoking habits | Smokes | 20 (16.7%) | |
| | Does not smoke | 100 (83.3%) | |
| | Not recorded | 0 (0.0%) | |
| Other smoking tobacco habits | Smokes | 10 (8.3%) | |
| | Does not smoke | 109 (90.8%) | |
| | Not recorded | 1 (0.8%) | |
| Smokeless tobacco habits | Uses smokeless tobacco | 8 (6.7%) | |
| | Does not use smokeless tobacco | 110 (91.7%) | |
| | Not recorded | 2 (1.7%) | |
| Alcohol consumption habits | Drinks | 70 (58.3%) | |
| | Does not drink | 49 (40.8%) | |
| | Not recorded | 1 (0.8%) | |

ᵃPercentages in each category for each study may not sum to exactly 100% because of rounding.

WL and AF image pairs were collected from a total of 332 anatomic sites. Nineteen sites were removed through manual QC. The remaining 313 anatomic sites were included in the analysis set. These sites included the buccal mucosa (33), lateral tongue (80), gingiva (44), ventral tongue (32), soft palate (16), floor of mouth (10), lip (7), hard palate (3), retromolar trigone (1), and other sites (30). A dataset breakdown of the training, validation, and holdout test sets by expert referral decision is shown in Table 2. The corresponding split by anatomic site into the training, validation, and holdout test sets is shown in Supplementary Table S2.

Table 2.

Sites imaged using mDOC, stratified by expert clinical referral decision. The cross-validation training and validation dataset splits are indicated as an average value (rounded to the nearest integer) across the four folds. A separate holdout test set was used.

Multimodal (WL and AF pairs) dataset

| Referral decision | Training set | Validation set | Test set | Total |
|---|---|---|---|---|
| Do not refer | 86 | 29 | 29 | 144 |
| Refer for otherᵃ | 45 | 15 | 14 | 74 |
| Refer for suspicion of precancer/cancer | 58 | 18 | 19 | 95 |
| Total | 189 | 62 | 62 | 313 |

ᵃRefer for reasons other than suspicion of precancer/cancer.

The cross-validation performance of the classification algorithm on the unimodal WL and AF datasets and on the multimodal dataset was as follows. The unimodal WL model had a mean AUC-ROC value of 0.85 ± 0.02 on the training dataset and 0.81 ± 0.03 on the validation dataset (P = 0.25). The unimodal AF model had a mean AUC-ROC value of 0.85 ± 0.03 on the training dataset and 0.84 ± 0.03 on the validation dataset (P = 1.0). The multimodal model, composed of two pretrained mobilenet_v3_small models, was trained and validated on the multimodal dataset. Its mean AUC-ROC values on the training and validation datasets were 0.91 ± 0.02 and 0.89 ± 0.02, respectively (P = 0.125). For all three datasets, there was no statistically significant difference between training and validation AUC-ROC values (P > 0.05). This suggests that each model generalized well across folds and that a new model for each modality could be trained on the combined training and validation data.
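The P values above come from paired comparisons of training and validation AUC-ROC across four folds. Such small samples are well suited to the exact form of the Wilcoxon signed-rank test, sketched below. The sketch assumes no zero or tied differences, and the per-fold values in the usage line are made up for illustration. With four folds, only 2⁴ = 16 sign patterns are possible, all equally likely under the null hypothesis. The smallest attainable two-sided P value is therefore 2/16 = 0.125, and the reported values (0.125, 0.25, 1.0) all lie on this grid. SciPy’s `scipy.stats.wilcoxon` computes the same exact P value for small samples without ties.

```python
from itertools import product


def wilcoxon_exact_p(x, y):
    """Exact two-sided Wilcoxon signed-rank P value for paired samples.

    Sketch assumption: no zero differences and no tied absolute differences,
    so the ranks are simply 1..n.
    """
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    if any(v == 0 for v in d) or len({abs(v) for v in d}) != n:
        raise ValueError("zero or tied differences are not handled in this sketch")
    # Rank the absolute differences (1 = smallest) and sum the ranks of positive ones.
    rank = {abs(v): r for r, v in enumerate(sorted(d, key=abs), start=1)}
    w_plus = sum(rank[abs(v)] for v in d if v > 0)
    total = n * (n + 1) // 2
    observed = min(w_plus, total - w_plus)
    # Under H0 every one of the 2**n sign patterns is equally likely.
    null = [sum(r * s for r, s in zip(range(1, n + 1), signs))
            for signs in product((0, 1), repeat=n)]
    extreme = sum(1 for t in null if min(t, total - t) <= observed)
    return extreme / len(null)


# Made-up per-fold AUCs where training exceeds validation in all four folds:
print(wilcoxon_exact_p([0.86, 0.84, 0.87, 0.83], [0.80, 0.83, 0.82, 0.79]))  # 0.125
```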

Following cross-validation, the training and validation datasets were combined into a final training set used to train a model, and the performance of this model was evaluated on the holdout test set (Fig. 2). The AUC-ROC value for the WL-only final training dataset was 0.82, and the AUC-ROC value for the holdout test dataset was 0.82. The AUC-ROC value for the AF-only final training dataset was 0.86, and the AUC-ROC value for the holdout test dataset was 0.84. The AUC-ROC value for the multimodal final training dataset was 0.97, and the AUC-ROC value for the holdout test dataset was 0.93. The multimodal model outperformed unimodal models in both the final training set and the holdout test set.

Figure 2.

AUC-ROC results for the WL, AF, and MM datasets using image inputs. AUC-ROC results on the final training set and performance on the holdout test set are shown. The best performing model on the holdout test set was the multimodal model. MM: multimodal.

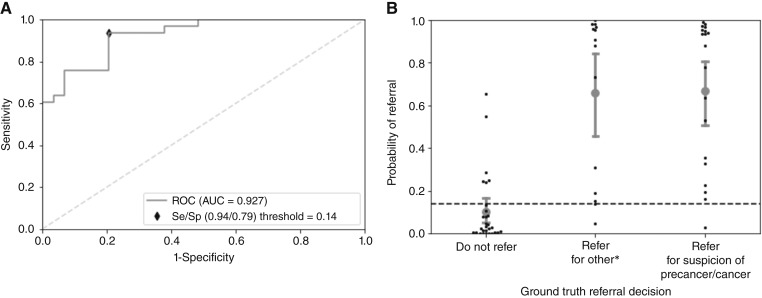

Figure 3A shows the ROC curve for the best performing multimodal model evaluated on the holdout test set. The corresponding scatterplot of the predicted values in the holdout test set categorized by referral decision is depicted in Fig. 3B. The Brier score for this model was 0.143. A cut-point threshold of 0.14 was calculated from the holdout test set; values above this threshold result in an mDOC prediction of “refer,” whereas values below this threshold result in an mDOC prediction of “do not refer.” At this threshold, there were six false positives and two false negatives, resulting in a sensitivity of 93.9% and a specificity of 79.3% for referral to an oral cancer specialist. The precision and F1 score were 0.84 and 0.89, respectively. The confusion matrix for the holdout test set is shown in Supplementary Table S3.
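The reported operating-point metrics can be cross-checked from counts implied by the text. These counts are a reconstruction, because the full confusion matrix is only given in Supplementary Table S3. Table 2 lists 29 “do not refer” sites and 14 + 19 = 33 “refer” sites in the holdout test set. Six false positives and two false negatives therefore leave 23 true negatives and 31 true positives.

```python
def referral_metrics(tp, fp, fn, tn):
    """Sensitivity, specificity, precision, and F1 for the "refer" class."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f1": f1}


# Counts reconstructed from Table 2 and the reported 6 FP / 2 FN:
m = referral_metrics(tp=31, fp=6, fn=2, tn=23)
print({k: round(v, 3) for k, v in m.items()})
```

These counts give 93.9% sensitivity, 79.3% specificity, and a precision and F1 score of 0.84 and 0.89, consistent with the reported values.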

Figure 3.

ROC curve and scatterplot distribution for the best-performing model trained on the MM dataset. A, ROC curve for the holdout test set, with the optimal Se/Sp indicated. B, Distribution of the mDOC probability of referral on the holdout test set, categorized by expert referral decision. Error bars indicate the 95% CI. CI, confidence interval; MM, multimodal; Se, sensitivity; Sp, specificity. *Refer for reasons other than suspicion of precancer/cancer.

A representative set of WL and AF images from the holdout test set and the corresponding mDOC results are shown in Fig. 4 for each expert clinical referral category. A Grad-CAM++ attention map, indicating the regions of the image that contributed most strongly to the automated classification, is overlaid on the outlined anatomic region of interest in the WL image. Figure 4A shows images of a clinically normal lateral tongue with an expert referral decision of “do not refer.” The mDOC score is 0.02, and the mDOC referral recommendation is “do not refer,” matching the expert clinical referral decision. Figure 4B shows images of a lichen planus lesion on the right buccal mucosa. The expert referral decision is “refer for reasons other than suspicion of precancer/cancer.” The mDOC score is 0.95, and the binary mDOC referral recommendation is “refer,” matching the expert clinical referral decision. Figure 4C shows images of a lesion on the left buccal mucosa with a clinical impression of proliferative verrucous leukoplakia. The expert referral decision is “refer for suspicion of precancer/cancer.” The mDOC score is 0.64, and the binary mDOC referral recommendation is “refer,” matching the expert referral decision. For the two “refer” cases in Fig. 4B and C, the attention maps highlight clinically relevant regions of the image pairs.

Figure 4.

Representative mDOC images from the holdout test dataset. Left to right: WL image; AF image; Grad-CAM++ attention map overlaid on the original WL image. A, Clinically normal lateral tongue. Expert referral decision: Do not refer. mDOC referral decision: Do not refer (score = 0.02). B, Lichen planus on the left buccal mucosa. Expert referral decision: Refer for reasons other than suspicion of precancer/cancer. mDOC referral decision: Refer (score = 0.95). C, Proliferative verrucous leukoplakia on the left buccal mucosa. Expert referral decision: Refer for suspicion of precancer/cancer. mDOC referral decision: Refer (score = 0.64). *Note: the attention map indicates the relative contribution of areas of the image to the mDOC automated prediction.

Discussion

The mDOC system addresses the need for a portable, easy-to-use device that can help healthcare providers in community medical and dental care settings who have limited expertise determine which patients with OMLs should be referred to an oral cancer specialist for follow-up, and that can improve communication between primary care providers and oral cancer specialists. The mDOC system captures images in two modalities, WL and AF; a custom application guides the user through collecting images of the oral cavity and documenting demographic information and risk factors. An automated deep learning image classification algorithm was developed to analyze mDOC images and predict whether a patient should be referred for further evaluation by an oral cancer specialist. All of the information documented in the mDOC system can be reviewed by the oral cancer specialist at the referral appointment. The mDOC models showed good generalizability when performance was evaluated using cross-validation. Results on a separate holdout test set showed that classification accuracy was higher when both WL and AF images were analyzed with a multimodal model than when either modality was used alone. Multimodal model attention maps indicated that the algorithm focused on clinically relevant portions of the images corresponding to OMLs.

A key strength of the mDOC referral algorithm is its ability to distinguish chronic nonneoplastic inflammatory and ulcerative OMLs in the “refer for reasons other than suspicion of precancer/cancer” category from normal tissue and benign OMLs that do not require referral to an oral cancer specialist. Previous studies using smartphone imaging systems have focused on differentiating normal or benign OMLs from premalignant or malignant OMLs; their referral decisions therefore do not account for nonneoplastic chronic oral ulcerative mucositis, which needs further evaluation and possible biopsy by an oral medicine or clinical oral pathology specialist for accurate diagnosis and long-term management (32, 34, 39, 40). Notably, the two “refer” cases in Fig. 4B and C are examples of clinically challenging OMLs: lichen planus and proliferative verrucous leukoplakia. Lichen planus is challenging to diagnose, especially for mid-level medical and dental care providers but even for experienced oral examiners, because it has a variety of presentations and can closely mimic oral precancers and cancers, nonneoplastic benign frictional keratosis, and oral candidiasis (41, 42). Proliferative verrucous leukoplakia is likewise clinically challenging because, especially in its earlier stages, its differential diagnosis ranges from benign frictional/alveolar ridge keratosis and oral lichen planus to oral leukoplakia; it is nevertheless important to identify and accurately diagnose it early because of its high risk of malignant transformation (42–45). In this study, mDOC correctly identified both sites as needing referral to an oral cancer specialist.
In particular, mDOC correctly recommended referral for most of the “refer for reasons other than suspicion of precancer/cancer” cases in the test dataset, in addition to correctly classifying the vast majority of the “refer for suspicion of precancer/cancer” cases. The expert clinical impressions for the “refer for reasons other than suspicion of precancer/cancer” cases span a range of risk levels, from benign oral conditions, such as atrophic candidiasis or fibroma, to low-risk OPMDs, such as lichen planus or lichenoid mucositis with postinflammatory melanosis.
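In Fig. 4B, an expert decision of “refer for reasons other than suspicion of precancer/cancer” is counted as correctly matched by a binary “refer,” so both referral categories act as the positive class. The Python sketch below, assuming that mapping, collapses the three expert categories into binary labels and computes, per category, the fraction of sites the model recommended for referral, which is the quantity described qualitatively above. The function names and example data are hypothetical; the study's own comparison of mDOC and expert decisions is reported in Supplementary Table S3.

```python
from collections import Counter

# Expert referral categories as named in the text.
NO_REFER = "do not refer"
REFER_OTHER = "refer for reasons other than suspicion of precancer/cancer"
REFER_SUSPECT = "refer for suspicion of precancer/cancer"

# Assumed mapping: both "refer" categories are the positive class.
EXPERT_TO_BINARY = {NO_REFER: 0, REFER_OTHER: 1, REFER_SUSPECT: 1}


def binary_labels(expert_categories):
    return [EXPERT_TO_BINARY[c] for c in expert_categories]


def referral_rate_by_category(expert_categories, model_refers):
    """Fraction of sites in each expert category that the model flagged "refer"."""
    totals = Counter(expert_categories)
    flagged = Counter(c for c, r in zip(expert_categories, model_refers) if r)
    return {c: flagged[c] / n for c, n in totals.items()}


# Hypothetical example (not study data).
expert = [NO_REFER] * 3 + [REFER_OTHER] * 3 + [REFER_SUSPECT] * 2
model = [False, False, True, True, True, False, True, True]

print(binary_labels(expert))  # [0, 0, 0, 1, 1, 1, 1, 1]
for category, rate in referral_rate_by_category(expert, model).items():
    print(f"{category}: {rate:.2f}")
```

For the “do not refer” category this rate equals 1 minus specificity; pooled over the two referral categories it equals sensitivity, so the per-category view shows where within the positive class a model's misses fall.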

In addition to the high sensitivity of the multimodal referral algorithm, mDOC achieved acceptable specificity (79.3%) when classifying oral conditions that can be difficult for nonexpert clinicians to identify correctly, which could help avoid unnecessary referrals to an oral cancer specialist. In comparison, one study of a referral system that also considered benign conditions reported sensitivity comparable to that of mDOC (93.88%) but lower specificity (57.55%; ref. 31). Another study, using a mobile phone application that assessed the presence of a lesion versus a normal variant, also reported comparable sensitivity (92%) but lower specificity (67.5%), and its performance improved when only OPMDs were considered for referral (32).
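Because each study reports a different sensitivity/specificity pair, a single summary such as Youden's J (sensitivity + specificity - 1) can make the comparison above concrete. The short Python calculation below uses only the values quoted in this paragraph. J is used here purely for illustration, and differences in datasets, populations, and reference standards across studies mean the numbers are not strictly comparable.

```python
# Reported (sensitivity, specificity) pairs quoted in the text above.
OPERATING_POINTS = {
    "mDOC (holdout test set)": (0.939, 0.793),
    "Ref. 31": (0.9388, 0.5755),
    "Ref. 32": (0.92, 0.675),
}


def youden_j(sensitivity, specificity):
    """Youden's J: 0 for an uninformative test, 1 for a perfect one."""
    return sensitivity + specificity - 1.0


for name, (sens, spec) in OPERATING_POINTS.items():
    print(f"{name}: J = {youden_j(sens, spec):.3f}")
# mDOC (holdout test set): J = 0.732
# Ref. 31: J = 0.514
# Ref. 32: J = 0.595
```

With sensitivities nearly equal across the three systems, the differences in J come almost entirely from specificity.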

Our study has several limitations. The first is the size and composition of the dataset. The dataset is relatively small for training a deep learning classification algorithm; cross-validation was used in our analysis to address the potential for model overfitting. In addition, the imbalance between healthy volunteers and patients and the underrepresentation of some benign oral conditions in the dataset may have biased the results; future work to mitigate this bias could include targeted data collection and weighting algorithms. Although the number of false negatives was minimized in this analysis, balancing sensitivity with specificity in clinical decision making is an important consideration to prevent both underdiagnosis and overdiagnosis. Additionally, a QC check to verify image quality at the time of capture would allow the user to retake images, potentially improving both model performance and the usability of the mDOC system. Finally, the study analysis relied on manual annotations of the oral mucosa in the WL and AF images used by the mDOC algorithm, and the model was executed in a development environment on a computer. Automating oral mucosa segmentation in real time should be investigated for deployment of the mDOC algorithm. Future work will involve assessing compatibility with other smartphone devices, exploring uncertainty quantification methods such as Bayesian approaches, and validating the algorithm in an external dataset.
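The text does not specify which weighting algorithm would be used. One common choice, shown below as a hedged Python sketch, weights each class or subgroup by the inverse of its frequency, n_samples / (n_groups × count), which is the same formula scikit-learn uses for `class_weight="balanced"`, and feeds the resulting per-sample weights into a weighted binary cross-entropy loss. The subgroup names, counts, and function names are hypothetical.

```python
import math
from collections import Counter


def balanced_weights(groups):
    """Inverse-frequency weights: n_samples / (n_groups * count_of_group)."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return {g: n / (k * c) for g, c in counts.items()}


def weighted_bce(y_true, p_pred, sample_weights, eps=1e-7):
    """Weighted mean binary cross-entropy; probabilities clipped to avoid log(0)."""
    total = 0.0
    for y, p, w in zip(y_true, p_pred, sample_weights):
        p = min(max(p, eps), 1.0 - eps)
        total += -w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / sum(sample_weights)


# Hypothetical subgroup labels: the underrepresented group gets a larger weight.
groups = ["healthy"] * 2 + ["lesion"] * 6
weights = balanced_weights(groups)
print(weights)  # {'healthy': 2.0, 'lesion': 0.6666666666666666}

# Per-sample weights to pass to a weighted loss such as weighted_bce.
sample_w = [weights[g] for g in groups]
```

With these weights each subgroup contributes the same total weight (here 4.0) to the loss, so the larger group no longer dominates training; targeted data collection remains the more direct remedy.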

With validation in a larger population and real-time implementation of the model on a smartphone platform, mDOC has the potential to improve the early detection of oral precancers and cancers in primary medical and dental care settings.

Supplementary Material

Supplementary Table S1: Cost breakdown of mobile Detection of Oral Cancer (mDOC) System prototype.

Supplementary Table S2: Anatomic site distribution across the training, validation, and holdout test sets.

Supplementary Table S3: mDOC referral decision versus expert referral decision for the holdout test set.

Acknowledgments

The authors would like to thank Nathaniel Holland of UTHealth School of Dentistry for his assistance with the statistical analyses for the patient study. The authors also thank all the healthy volunteers and patients who volunteered to participate in the study. Research reported in this publication was supported by the National Institute of Dental and Craniofacial Research of the NIH grants R21DE030532 (to R. Richards-Kortum and A.M. Gillenwater) and R01DE029590 (to R. Richards-Kortum, A.M. Gillenwater, and R.A. Schwarz).

Footnotes

Note: Supplementary data for this article are available at Cancer Prevention Research Online (http://cancerprevres.aacrjournals.org/).

Authors’ Disclosures

R. Mitbander, D. Brenes, J.B. Coole, A. Kortum, I.S. Vohra, J. Carns, R.A. Schwarz, I. Varghese, S. Durab, S. Anderson, N.E. Bass, A.D. Clayton, H. Badaoui, A.M. Gillenwater, N. Vigneswaran, and R. Richards-Kortum report grants from the National Institute of Dental and Craniofacial Research during the conduct of the study; an IP disclosure has also been filed with Rice University. No disclosures were reported by the other authors.

Authors’ Contributions

R. Mitbander: Conceptualization, data curation, software, formal analysis, validation, investigation, visualization, methodology, writing–original draft, writing–review and editing. D. Brenes: Software, writing–review and editing. J.B. Coole: Investigation, methodology, writing–review and editing. A. Kortum: Methodology, writing–review and editing. I.S. Vohra: Investigation, writing–review and editing. J. Carns: Conceptualization, data curation, formal analysis, supervision, validation, visualization, methodology, writing–original draft, project administration, writing–review and editing. R.A. Schwarz: Conceptualization, data curation, formal analysis, validation, visualization, methodology, project administration, writing–review and editing. I. Varghese: Investigation, writing–review and editing. S. Durab: Investigation, writing–review and editing. S. Anderson: Investigation, project administration, writing–review and editing. N.E. Bass: Project administration, writing–review and editing. A.D. Clayton: Data curation, writing–review and editing. H. Badaoui: Investigation, project administration, writing–review and editing. L. Anandasivam: Writing–review and editing. R.A. Giese: Writing–review and editing. A.M. Gillenwater: Conceptualization, resources, data curation, supervision, funding acquisition, validation, investigation, writing–original draft, project administration, writing–review and editing. N. Vigneswaran: Conceptualization, resources, data curation, supervision, funding acquisition, validation, investigation, writing–original draft, project administration, writing–review and editing. R. Richards-Kortum: Conceptualization, resources, formal analysis, supervision, funding acquisition, validation, visualization, methodology, writing–original draft, project administration, writing–review and editing.

References

1. Warnakulasuriya S, Kerr AR. Oral cancer screening: past, present, and future. J Dent Res 2021;100:1313–20.

2. Kerr AR, Robinson ME, Meyerowitz C, Morse DE, Aguilar ML, Tomar SL, et al. Cues used by dentists in the early detection of oral cancer and oral potentially malignant lesions: findings from the National Dental Practice-Based Research Network. Oral Surg Oral Med Oral Pathol Oral Radiol 2020;130:264–72.

3. Epstein JB, Güneri P, Boyacioglu H, Abt E. The limitations of the clinical oral examination in detecting dysplastic oral lesions and oral squamous cell carcinoma. J Am Dent Assoc 2012;143:1332–42.

4. Varela-Centelles P, Castelo-Baz P, Seoane-Romero J. Oral cancer: early/delayed diagnosis. Br Dent J 2017;222:643.

5. Gaballah K, Faden A, Fakih FJ, Alsaadi AY, Noshi NF, Kujan O. Diagnostic accuracy of oral cancer and suspicious malignant mucosal changes among future dentists. Healthcare (Basel) 2021;9:263.

6. Brodowski R, Czenczek-Lewandowska E, Migut M, Leszczak J, Lewandowski B. Evaluating the reasons for delays in treatment of oral cavity cancer patients. Curr Issues Pharm Med Sci 2022;35:53–7.

7. Ribeiro MFA, Oliveira MCM, Leite AC, Bruzinga FFB, Mendes PA, Grossmann SdMC, et al. Assessment of screening programs as a strategy for early detection of oral cancer: a systematic review. Oral Oncol 2022;130:105936.

8. Chaturvedi AK, Udaltsova N, Engels EA, Katzel JA, Yanik EL, Katki HA, et al. Oral leukoplakia and risk of progression to oral cancer: a population-based cohort study. J Natl Cancer Inst 2020;112:1047–54.

9. Gates JC, Abouyared M, Shnayder Y, Farwell DG, Day A, Alawi F, et al. Clinical management update of oral leukoplakia: a review from the American head and neck society cancer prevention service. Head Neck 2025;47:733–41.

10. US Preventive Services Task Force; Barry MJ, Nicholson WK, Silverstein M, Chelmow D, Coker TR, Davis EM, et al. Screening and preventive interventions for oral health in adults: US Preventive Services Task Force recommendation statement. JAMA 2023;330:1773–9.

11. Rethman MP, Carpenter W, Cohen EEW, Epstein J, Evans CA, Flaitz CM, et al. Evidence-based clinical recommendations regarding screening for oral squamous cell carcinomas. J Am Dent Assoc 2010;141:509–20.

12. Lingen MW, Abt E, Agrawal N, Chaturvedi AK, Cohen E, D’Souza G, et al. Evidence-based clinical practice guideline for the evaluation of potentially malignant disorders in the oral cavity: a report of the American Dental Association. J Am Dent Assoc 2017;148:712–27.e10.

13. Yang EC, Schwarz RA, Lang AK, Bass N, Badaoui H, Vohra IS, et al. In vivo multimodal optical imaging: improved detection of oral dysplasia in low-risk oral mucosal lesions. Cancer Prev Res (Phila) 2018;11:465–76.

14. Kanmodi KK, Amzat J, Nwafor JN, Nnyanzi LA. Global health trends of human papillomavirus-associated oral cancer: a review. Public Health Chall 2023;2:e126.

15. Zhang L, Williams M, Poh CF, Laronde D, Epstein JB, Durham S, et al. Toluidine blue staining identifies high-risk primary oral premalignant lesions with poor outcome. Cancer Res 2005;65:8017–21.

16. Xiao T, Kurita H, Shimane T, Nakanishi Y, Koike T. Vital staining with iodine solution in oral cancer: iodine infiltration, cell proliferation, and glucose transporter 1. Int J Clin Oncol 2013;18:792–800.

17. Epstein JB, Scully C, Spinelli J. Toluidine blue and Lugol’s iodine application in the assessment of oral malignant disease and lesions at risk of malignancy. J Oral Pathol Med 1992;21:160–3.

18. van Schaik JE, Halmos GB, Witjes MJH, Plaat BEC. An overview of the current clinical status of optical imaging in head and neck cancer with a focus on Narrow Band imaging and fluorescence optical imaging. Oral Oncol 2021;121:105504.

19. Chaurasia A, Alam SI, Singh N. Oral cancer diagnostics: an overview. Natl J Maxillofac Surg 2021;12:324–32.

20. Yang EC, Vohra IS, Badaoui H, Schwarz RA, Cherry KD, Quang T, et al. Development of an integrated multimodal optical imaging system with real-time image analysis for the evaluation of oral premalignant lesions. J Biomed Opt 2019;24:1–10.

21. Quang T, Tran EQ, Schwarz RA, Williams MD, Vigneswaran N, Gillenwater AM, et al. Prospective evaluation of multimodal optical imaging with automated image analysis to detect oral neoplasia in vivo. Cancer Prev Res (Phila) 2017;10:563–70.

22. Messadi DV, Younai FS, Liu H-H, Guo G, Wang C-Y. The clinical effectiveness of reflectance optical spectroscopy for the in vivo diagnosis of oral lesions. Int J Oral Sci 2014;6:162–7.

23. Awan KH, Morgan PR, Warnakulasuriya S. Evaluation of an autofluorescence based imaging system (VELscope™) in the detection of oral potentially malignant disorders and benign keratoses. Oral Oncol 2011;47:274–7.

24. Scheer M, Neugebauer J, Derman A, Fuss J, Drebber U, Zoeller JE. Autofluorescence imaging of potentially malignant mucosa lesions. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2011;111:568–77.

25. Paderni C, Compilato D, Carinci F, Nardi G, Rodolico V, Lo Muzio L, et al. Direct visualization of oral-cavity tissue fluorescence as novel aid for early oral cancer diagnosis and potentially malignant disorders monitoring. Int J Immunopathol Pharmacol 2011;24:121–8.

26. Rashid A, Warnakulasuriya S. The use of light-based (optical) detection systems as adjuncts in the detection of oral cancer and oral potentially malignant disorders: a systematic review. J Oral Pathol Med 2015;44:307–28.

27. Brenes D, Nipper A, Tan M, Gleber-Netto F, Schwarz R, Pickering C, et al. Mildly dysplastic oral lesions with optically-detectable abnormalities share genetic similarities with severely dysplastic lesions. Oral Oncol 2022;135:106232.

28. Yang EC, Tan MT, Schwarz RA, Richards-Kortum RR, Gillenwater AM, Vigneswaran N. Noninvasive diagnostic adjuncts for the evaluation of potentially premalignant oral epithelial lesions: current limitations and future directions. Oral Surg Oral Med Oral Pathol Oral Radiol 2018;125:670–81.

29. Fu Q, Chen Y, Li Z, Jing Q, Hu C, Liu H, et al. A deep learning algorithm for detection of oral cavity squamous cell carcinoma from photographic images: a retrospective study. EClinicalMedicine 2020;27:100558.

30. Lin H, Chen H, Weng L, Shao J, Lin J. Automatic detection of oral cancer in smartphone-based images using deep learning for early diagnosis. J Biomed Opt 2021;26:086007.

31. Welikala RA, Remagnino P, Lim JH, Chan CS, Rajendran S, Kallarakkal TG, et al. Automated detection and classification of oral lesions using deep learning for early detection of oral cancer. IEEE Access 2020;8:132677–93.

32. Haron N, Rajendran S, Kallarakkal TG, Zain RB, Ramanathan A, Abraham MT, et al. High referral accuracy for oral cancers and oral potentially malignant disorders using telemedicine. Oral Dis 2023;29:380–9.

33. Birur NP, Patrick S, Bajaj S, Raghavan S, Suresh A, Sunny SP, et al. A novel mobile health approach to early diagnosis of oral cancer. J Contemp Dent Pract 2018;19:1122–8.

34. Uthoff RD, Song B, Sunny S, Patrick S, Suresh A, Kolur T, et al. Point-of-care, smartphone-based, dual-modality, dual-view, oral cancer screening device with neural network classification for low-resource communities. PLoS One 2018;13:e0207493.

35. Rainio O, Teuho J, Klén R. Evaluation metrics and statistical tests for machine learning. Sci Rep 2024;14:6086.

36. Youden WJ. Index for rating diagnostic tests. Cancer 1950;3:32–5.

37. Fluss R, Faraggi D, Reiser B. Estimation of the Youden index and its associated cutoff point. Biom J 2005;47:458–72.

38. Chattopadhay A, Sarkar A, Howlader P, Balasubramanian VN. Grad-CAM++: generalized gradient-based visual explanations for deep convolutional networks. 2018 IEEE Winter Conf Appl Comput Vis WACV [Internet]. 2018 [cited 2025 Jan 9]. p. 839–47. Available from: https://ieeexplore.ieee.org/abstract/document/8354201

39. Birur NP, Song B, Sunny SP, Keerthi G, Mendonca P, Mukhia N, et al. Field validation of deep learning based Point-of-Care device for early detection of oral malignant and potentially malignant disorders. Sci Rep 2022;12:14283.

40. Song B, Sunny S, Li S, Gurushanth K, Mendonca P, Mukhia N, et al. Mobile-based oral cancer classification for point-of-care screening. J Biomed Opt 2021;26:065003.

41. Binnie R, Dobson ML, Chrystal A, Hijazi K. Oral lichen planus and lichenoid lesions - challenges and pitfalls for the general dental practitioner. Br Dent J 2024;236:285–92.

42. Vigneswaran N, Williams MD. Epidemiologic trends in head and neck cancer and aids in diagnosis. Oral Maxillofac Surg Clin North Am 2014;26:123–41.

43. Gupta RK, Rani N, Joshi B. Proliferative verrucous leukoplakia misdiagnosed as oral leukoplakia. J Indian Soc Periodontol 2017;21:499–502.

44. Staines K, Rogers H. Proliferative verrucous leukoplakia: a general dental practitioner-focused clinical review. Br Dent J 2024;236:297–301.

45. Thompson LDR, Fitzpatrick SG, Müller S, Eisenberg E, Upadhyaya JD, Lingen MW, et al. Proliferative verrucous leukoplakia: an expert consensus guideline for standardized assessment and reporting. Head Neck Pathol 2021;15:572–87.

Data Availability Statement

Data underlying the results presented in this article may be obtained from the corresponding author upon reasonable request.