Abstract

Online classes are now integral to higher education, particularly for students at two-year community colleges, who are profoundly underrepresented in experimental research. Here, we provided a rigorous test of using interpolated retrieval practice to enhance learning from an online lecture for both university and community college students (N = 703). We manipulated interpolated activity (participants saw review slides or answered short quiz questions) and onscreen distractions (control, memes, TikTok). Our results showed that interpolated retrieval enhanced online learning for both student groups, but this benefit was moderated by onscreen distractions. Surprisingly, the presence of TikTok videos produced an ironic effect of distraction—it enhanced learning for students in the interpolated review condition, allowing them to perform similarly to students who took the interpolated quizzes. Moreover, we showed in an exploratory analysis that the intervention-induced learning improvements were mediated by a composite measure of engaged learning, thus providing a mechanistic account of our findings. Finally, our data provided preliminary evidence that interpolated retrieval practice might reduce the achievement gap for Black students.

Subject terms: Education, Human behaviour

Interpolating a lecture with quiz questions enhanced online learning for both community college and university students. The benefit is influenced by level of distraction, is associated with engagement, and can reduce racial achievement gaps.

Introduction

Online learning has become integral to higher education, and the COVID-19 pandemic made it nearly ubiquitous1. The convenience and flexibility of online courses attract a diverse student body, particularly those who hold a job, have family obligations, or live too far from a college campus to physically attend lectures2,3. Although online education has made distance learning widely accessible, it also introduces unique pedagogical challenges. Relative to students in live classes, online learners are frequently distracted by external stimuli4 and are more prone to mind wandering5. Consequently, discovering interventions to improve learning from online lectures is crucial for enhancing educational outcomes. Recent research has shown that embedding brief quizzes into a learning episode (i.e., interpolated retrieval practice, IRP) can reduce mind wandering and enhance learning6–8. In the present high-powered study, we aimed to provide a rigorous test of this intervention. A key element of this study was the involvement of community college students, who are twice as likely (~41%) as four-year university students (~20%) to enroll exclusively online9 but are profoundly underrepresented in experimental research.

The increasing prevalence of online instruction has raised questions about its efficacy relative to traditional face-to-face instruction. Although some studies have demonstrated that online classes can be comparable to in-person classes10–12, many have not. For example, relative to students who enroll in face-to-face courses, students who enroll online are more likely to engage in off-task activities13 or drop the course14,15, and less likely to achieve a passing grade2,16 or obtain a college degree17. Although factors that underlie these poor learning outcomes are numerous and might evade causal inferences due to their correlational nature, one potential contributor is that students are prone to experiencing inattention either from mind wandering8 or from external distractions when viewing online lectures18.

Involuntary mind wandering—a spontaneous shift of attention away from the target task to some unrelated thoughts19–21—is detrimental to comprehension and learning22–26. This issue is exacerbated in longer study sessions like video lectures, during which maintaining focus becomes increasingly difficult over time—a pattern known as vigilance decrement27. Indeed, students tend to mind-wander more and learn less as a lecture progresses28,29. Inserting brief quizzes into a learning task (e.g., asking a few short-answer questions), however, has been shown to reduce mind wandering7, increase engagement30, and promote retention of the tested material31. These IRP opportunities can also promote new learning of content presented after the interpolated quiz across myriad scenarios—a finding termed test-potentiated new learning (TPNL)6. For example, TPNL has been observed when people study word lists32–35, paired associates36–39, spatial routes40, text passages41,42, and video lectures7,43–45. IRP has been touted as a potential remedy for inattentiveness and poor learning outcomes in online education6,46,47. It is especially promising given its cost-effectiveness, scalability, and simple implementation.

Several explanations have been proposed to account for the TPNL effect. Some researchers argued that IRP promotes new learning by increasing learners’ attention toward the material (i.e., the attention hypothesis)7,48. In contrast, other researchers suggested that switching from encoding to retrieval induces a mental context change, which helps students segregate the materials learned pre- and post-retrieval and reduces memory load35,49,50. Lastly, IRP has been proposed to promote new learning because it helps learners optimize their encoding and retrieval strategies36,51. Although these various accounts are not necessarily mutually exclusive (e.g., sustained attention may be necessary to support the development of optimal retrieval strategies), we focus specifically on the attention hypothesis. We address the theoretical relevance of our experiment when we introduce its methodology.

Although ample evidence suggests that IRP is a potent learning enhancer, the vast majority comes from laboratory studies. Despite a meta-analysis showing that online (g = 0.69) and lab (g = 0.76) studies produce comparable effect sizes, it is nonetheless difficult to draw firm conclusions because only three of the included studies were conducted online6. Moreover, extant online studies have either recruited participants from specialized data pools (e.g., MTurk)44,52,53 that can produce unreliable outcomes54–56, employed simplistic materials (e.g., word lists, paired associates) that differ from typical classroom content52,57,58, or have produced inconclusive results59. Therefore, it was essential to examine the impact of IRP for online learning using materials and participant populations that resemble real-world situations.

An additional concern when drawing broad conclusions from existing data is that all studies that have linked IRP to a reduction in mind wandering were conducted in the laboratory7,60–62. Critically, unlike the “messy” environments under which online learning occurs, the laboratory places participants in a “pristine” environment. For example, students are strongly discouraged, if not prohibited, from using digital devices, talking with other participants, or partaking in unrelated activities. The laboratory is constructed to foster focused attention on a single task, which creates a nearly ideal learning condition. In contrast, online learners might view a lecture in a noisy cafe, at home with pets or housemates, or in the presence of myriad other potential distractions (e.g., online shopping, instant messaging, social media, notifications from other connected devices, etc.).

The different attentional demands of laboratory and online studies present a unique challenge to using IRP as a learning intervention. As stated previously, a prominent account for TPNL is that IRP reduces inattention. This account is supported by evidence that IRP reduces mind wandering7, increases students’ expectations that they will be tested again48, and lengthens students’ voluntary study duration30,31. IRP has been proposed to reduce inattention for several reasons. First, when attempting to answer a question, participants must, at the very least, temporarily reorient their attention to the learning task. Second, experiencing retrieval failure might prompt participants to stay engaged with subsequent materials. Third, answering questions allows students to express what they know, a form of active learning that might foster engagement. Despite progress in understanding the relationship between attention and TPNL, current theoretical models do not yet permit precise predictions about whether IRP’s benefit would be enhanced or diminished in highly distracting environments. On the one hand, IRP might buffer learners against the distractions that they must ignore, leading to a more pronounced benefit. On the other hand, the opposite might occur if the “messy” conditions of online learning overwhelm the advantages of interpolated testing. Despite the lack of precision of the attention hypothesis, and unlike the context change or strategy optimization accounts mentioned earlier, the account unambiguously predicts that the magnitude of TPNL would be influenced by factors that can affect attention during encoding.

This study aimed to evaluate IRP as an intervention for online learning. All participants completed the experiment online, which should be more distracting than laboratory environments, but we also manipulated the level of onscreen distractions (none, static meme pictures, dynamic TikTok videos) to examine their effects on learning and mind wandering. Although we suspected that the benefits of IRP might be mitigated in online relative to in-person learning, we could not directly assess this possibility because we did not include an in-person group. This omission was intentional, as our objective was to study online learning. Therefore, we manipulated onscreen distraction levels to ensure that we could draw causal arguments about the effects of distractions on TPNL.

Community colleges educate approximately one-third of the undergraduate population in the United States, and about half of the students who enroll exclusively online. Further, the COVID-19 pandemic has had an especially lasting impact on the education delivery of two-year colleges. Although universities have largely returned to in-person education, for many community colleges, a majority of their courses have not returned to in-person delivery63–65. Despite the essential role that community college plays in post-secondary education, students at these institutions are profoundly underrepresented in educational research in general66,67 and experimental research in particular68. Researchers have often promoted retrieval practice as an intervention for online learning, and yet community college students – perhaps the largest stakeholders for online education – are conspicuously absent in this literature. Moreover, students at two-year colleges are far more diverse than their four-year counterparts, both in socioeconomic status and race/ethnicity69, so the involvement of community college students also provides an opportunity for us to investigate whether IRP can reduce racial achievement gaps in online learning. Studies have documented differences in performance on working memory70 and executive function tasks across racial groups in nationally representative samples71,72. Given the role of these cognitive functions in learning efficiency73, metacognition74, and retrieval practice benefits75–78, it is important to assess our intervention’s effectiveness across diverse student populations.

To permit a comparison between university and community college students, we recruited half of our participants from four-year public universities (Iowa State University in the United States—ISU, Toronto Metropolitan University in Canada—TMU) and half from two-year community colleges (American River College in California—ARC, Des Moines Area Community College in Iowa—DMACC, Mohawk College in Canada—MC, Columbus State Community College—CSCC, and Cuyahoga Community College—Tri-C in Ohio).

Methods

Design and Participants

Iowa State University’s institutional review board (IRB) provided ethics oversight for data collected in the United States under the protocol 21-037. Toronto Metropolitan University’s IRB provided ethics oversight for data collected in Canada under the protocol REB-2020-285. In addition, ethics approval was obtained from all community colleges involved.

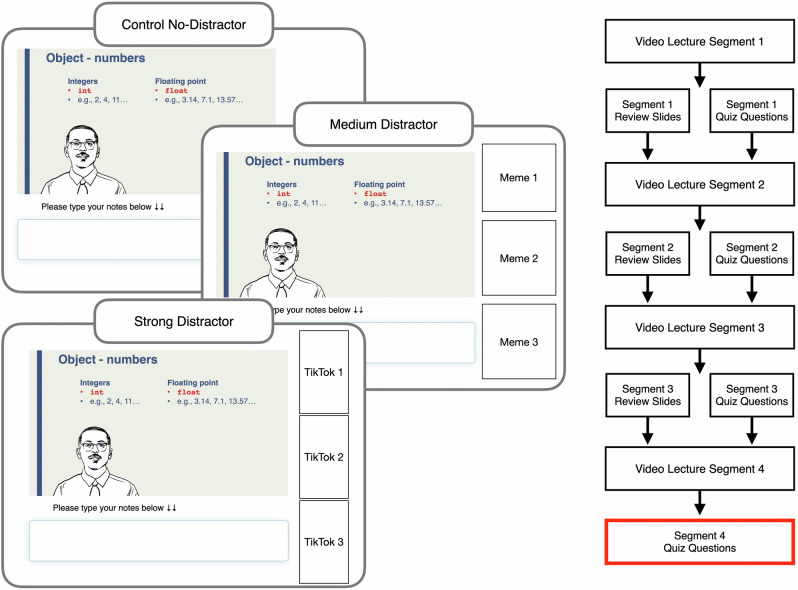

The experiment employed a 2 (interpolated activity: review, quiz) × 3 (onscreen distraction: control/no-distractor, medium/meme distractor, strong/TikTok distractor) between-subjects design, with institution type (university, community college) as a subject variable. A critical objective was to ensure that our study resembled real-world online learning while retaining the advantages of an experiment. To this end, we developed a collection of high-quality, ~20 min STEM video lectures (computer science, animal ecology, physics, and statistics), with a different pair of man and woman instructors for each topic. Two instructors were middle-aged adults, and six were young adults. Two were Black, and six were White (see Figure S1 in Supplementary Information). All lecture videos are available on the OSF page. Participants viewed one of these videos in the presence of no distractors, medium distractors (memes), or strong distractors (TikTok videos), and were required to engage in either interpolated retrieval practice or review following the first three segments of the lecture. All participants completed an interpolated quiz following the fourth and final segment of the lecture and then a final cumulative test approximately 24 hours later (Fig. 1). To assess mind wandering, all participants responded to one mind wandering probe (MWP) during each segment of the lecture. Finally, all participants indicated how likely they were to be tested before each lecture segment, their level of engagement following each lecture segment, and were free to take notes during the lecture.
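As a rough illustration of the design just described, the following Python sketch enumerates the six between-subjects cells and the eight lecture videos (four topics, each recorded by a man and a woman instructor), and tallies the number of distractors a participant would see. The variable names and the simple crossing of cells with videos are assumptions made for illustration only; they are not taken from the study's materials or assignment code.

```python
from itertools import product

# Manipulated between-subjects factors (labels are illustrative).
ACTIVITIES = ["review", "quiz"]
DISTRACTIONS = ["control", "meme", "tiktok"]

# Lecture materials: four STEM topics, each with two instructors.
TOPICS = ["computer science", "animal ecology", "physics", "statistics"]
INSTRUCTORS = ["man", "woman"]

cells = list(product(ACTIVITIES, DISTRACTIONS))   # 2 x 3 design
videos = list(product(TOPICS, INSTRUCTORS))       # counterbalanced videos

# Distractor exposure implied by the description of each condition.
SEGMENTS = 4
LECTURE_MINUTES = 20
memes_total = 3 * SEGMENTS            # three memes per lecture segment
tiktok_total = 3 * LECTURE_MINUTES    # three videos per minute of lecture

print(f"{len(cells)} cells, {len(videos)} videos")
print(f"memes seen: {memes_total}, TikTok videos seen: {tiktok_total}")
```

Crossing the eight videos within each cell is one way to see why a full counterbalance would require participants in multiples of eight per condition.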

Fig. 1. Screenshots of the lecture video and experimental flowchart.

In the control, no-distractor condition, the area to the right of the lecture video was left blank. In the medium distractor condition, a different set of three meme pictures was shown for the duration of each lecture segment. The memes included a picture with a brief caption meant to convey humor. In the strong distractor condition, a fresh set of three TikTok videos was shown per minute of the lecture segment. The videos generally depicted humorous, light-hearted encounters, adorable animals, and nature scenes. The criterial test for Segment 4 is highlighted with a thick red border. The photographic image of the instructor was replaced with a line-drawing for publication purposes. Participants watched a real human serve as the instructor.

We conducted a pilot experiment (N = 439) to determine a possible effect size for our power analysis. The pilot data showed a moderate TPNL effect, t(437) = 4.37, p < .001, d = 0.42. Based on this effect size, we needed a minimum of 91 participants per condition to achieve .80 power. Because it took multiples of eight participants to complete a full counterbalance, we rounded up each condition to 96 participants (note that we omitted this consideration in our preregistration). We opted to over-provision our data collection goal by 20% to account for attrition and our preregistered data exclusion criteria. Therefore, we aimed to collect data from 115 participants per condition (for 690 participants across the six conditions), with approximately half of the participants from community colleges.
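The sample-size reasoning above can be retraced with a short Python sketch. It is a minimal approximation, not the authors' procedure: the power calculation here uses a normal approximation for a two-group comparison, which gives a slightly smaller per-group n than the 91 reported above (an exact noncentral-t calculation is typically a little more conservative). The function names are hypothetical, and the conversion from t to d assumes equal group sizes.

```python
import math
from statistics import NormalDist


def d_from_t(t, df):
    """Cohen's d from an independent-samples t, assuming equal group sizes."""
    return 2 * t / math.sqrt(df)


def n_per_group_normal_approx(d, alpha=0.05, power=0.80):
    """Per-group n for a two-tailed, two-group comparison (normal approximation)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


def round_up_to_multiple(n, k):
    """Smallest multiple of k that is at least n."""
    return math.ceil(n / k) * k


pilot_d = d_from_t(4.37, 437)                 # pilot t-test reported above
approx_n = n_per_group_normal_approx(0.42)    # approximation only

# Remaining steps start from the reported minimum of 91 per condition.
per_condition = round_up_to_multiple(91, 8)   # full counterbalance needs multiples of 8
target = round(per_condition * 1.20)          # 20% over-provisioning
total = target * 6                            # six between-subjects conditions

print(round(pilot_d, 2), approx_n, per_condition, target, total)
```

Starting from either the approximate or the reported minimum, rounding up to a multiple of eight yields the same per-condition figure, so the recruitment target does not depend on which power routine is used.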

We recruited 898 participants in the experiment and excluded data from 195 (see Table 1 for exclusion reasons). At each institution, we used the most efficient recruitment method permitted by its institutional review board. At ISU and Tri-C, we recruited participants through mass emails. At ARC, DMACC, and MC, a staff member sent recruitment emails or embedded our study’s advertisement in electronic newsletters. At CSCC, the advertisement was posted to students’ learning management system. At TMU, we first attempted to recruit students on the TMU subreddit. Unfortunately, this approach resulted in very poor-quality data (e.g., many Reddit participants did not follow instructions). Consequently, we excluded all of the data acquired from Reddit (N = 61) and recruited students at TMU via flyers posted around campus.

Table 1.

Number of participants excluded from analysis

| Exclusion reason | N |
|---|---|
| Missed all four mind wandering probes | 143 (39) |
| Did not attempt to answer any questions on the criterial test | 27 (18) |
| Low English proficiency | 11 (2) |
| Not taking the experiment seriously at all | 10 (1) |
| Browsing other websites during the entire study | 4 (1) |

Numbers in parentheses refer to participants who were from the TMU subreddit.

Ultimately, we retained data from 703 participants for analysis. Participants’ self-reported characteristics are displayed in Table 2. Of these participants, 622 completed both Sessions 1 and 2, and 81 did not complete Session 2 (i.e., cumulative test). A programming error affected the data of 27 participants. Specifically, we had included one incorrect question on the criterial test for these participants. To ensure that all of the data were based on a common set of questions, we removed the incorrect question from analysis for these participants so that their criterial test performance was based on three instead of four questions.

Table 2.

Number of participants as a function of institution and demographic variables

| Institution | N |
|---|---|
| Iowa State University | 187 (27%) |
| Toronto Metropolitan University | 186 (27%) |
| American River College | 98 (14%) |
| Columbus State Community College | 96 (14%) |
| Des Moines Area Community College | 52 (7%) |
| Cuyahoga Community College | 52 (7%) |
| Mohawk College | 31 (4%) |

| Gender | N | Mean Age |
|---|---|---|
| Woman | 418 (61%) | 24.3 |
| Man | 239 (35%) | 22.7 |
| Non-cisgender | 30 (4%) | 22.2 |

| Race | N from Universities | N from Community Colleges |
|---|---|---|
| Asian/Asian American/Asian Canadian | 48 (14%) | 28 (9%) |
| Black/African American/African Canadian | 28 (8%) | 39 (13%) |
| Hispanic/Latinx | 13 (4%) | 36 (12%) |
| Multi-racial | 9 (3%) | 26 (8%) |
| Others | 4 (1%) | 3 (1%) |
| White | 246 (71%) | 175 (57%) |

| Parental Social Economic Status | N from Universities | N from Community Colleges |
|---|---|---|
| Upper Class | 39 (11%) | 14 (5%) |
| Middle Class | 235 (67%) | 146 (52%) |
| Working Class | 71 (20%) | 100 (35%) |
| Fully Supported by Government Assistance | 5 (1%) | 23 (8%) |

These data include all participants who completed Session 1. Percentages are displayed in parentheses. We could not determine the college for one participant due to a programming error (the participant could have attended ARC, CSCC, or Tri-C). For the demographic data, 48 participants chose not to report their race, 16 chose not to report their gender, and 70 chose not to report their SES. All demographic information was based on self-reports.

We retained all 703 participants for analysis to maximize statistical power for the Session 1 data, a decision justified by the absence of an interaction between Session 2 participation and other independent variables on criterial test performance. Specifically, participation during Session 2 did not produce a significant interaction with interpolated activity, F(1, 691) = 0.04, p = .843, ηp2 < .001, 95% CI = [0.000, 0.005], BF01 = 5.22, onscreen distractor, F(2, 691) = 1.17, p = .310, ηp2 = .003, 95% CI = [0.000, 0.015], BF01 = 8.00, or a three-way interaction with both variables, F(2, 691) = 0.17, p = .845, ηp2 < .001, 95% CI = [0.000, 0.006], BF01 = 7.13. Participants who completed both sessions received a $20 Amazon electronic gift card, whereas participants who finished only Session 1 received a $15 electronic gift card.

Materials and Procedure

The experiment included two sessions. In Session 1, participants were first presented with the informed consent form. Those who did not provide their consent were sent to a thank-you page and the experiment did not start. If participants provided their consent, they started the experiment by watching a ~20 min lecture video broken into four ~5 min segments. A head-and-shoulder view of the instructor appeared in front of the lecture slides (see Fig. 1). To ensure a consistent presentation style and to minimize potential instructor effects on engagement and learning, we instructed our actors (ISU Department of Theater instructors and students) to deliver the lecture flatly. Similar to an actual lecture, the latter segments presented content that built upon earlier segments. Participants were encouraged to take notes in the area below the video lecture and were informed that their notes would not remain visible across lecture segments. For participants in the control, no-distractor condition, the rightmost 20% of their screen was left blank. For participants in the medium distractor condition, the same area was occupied by three static memes, each with a short caption. These images conveyed humor and were meant to simulate online advertisements or clickbait. A new set of memes was shown for each segment. Therefore, each participant saw a total of 12 memes. For participants in the strong distractor condition, the rightmost area was occupied by three silent, captioned TikTok videos depicting humorous encounters, adorable animals, or calming nature scenes. To maximize distractions, each video cycled for approximately one minute and was then replaced by a new video, so each participant was presented with a total of 60 TikTok videos.

Before the experiment, participants were told that they would watch a four-segment lecture video and receive either quiz questions or review slides after each segment. Participants were also told that a cumulative test would follow at the end of the experiment. In reality, participants were randomly assigned to either an interpolated review or interpolated quiz condition, which determined the activity for Segments 1 to 3. After each lecture segment, participants in the quiz condition answered four brief factual questions (e.g., what term does Python use to represent characters?). They had 20 s to answer each question and were then presented with the correct answer (e.g., str) as feedback for 3 s. Participants in the review condition were shown four review slides following each segment, with 23 s allocated to study each slide. The slides presented the same information as the interpolated quiz questions but with the answers embedded (e.g., Python uses “str” to represent characters).

In addition to the interpolated activity, participants were informed that they would encounter mind-wandering probes (MWP) at an unpredictable point during each lecture segment. Participants were shown an MWP and instructed to familiarize themselves with the five options: (1) what the professor is saying right now, (2) something related to an earlier part of the lecture, (3) relating the lecture to my own life, (4) something unrelated to the lecture, and (5) zoning out. Consistent with prior literature, we considered the first three options task-related thinking and the last two off-task60. Each probe appeared silently for 10 s, covering the instructor’s face. The instructor continued to teach during the entire probe duration. We opted for this approach because we wanted to catch participants being completely off-task by missing the MWP entirely.
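The on-task versus off-task coding of probe responses can be sketched as follows. This is an illustrative scoring routine, not the authors' analysis code. In particular, representing a missed probe as `None` and dropping it from the denominator is an assumption; the text above does not state how partially missed probes were scored.

```python
# Response options as numbered in the probe description above.
ON_TASK = {1, 2, 3}    # lecture content, earlier lecture content, relating to own life
OFF_TASK = {4, 5}      # unrelated thoughts, zoning out


def task_related_proportion(responses):
    """Proportion of answered probes coded as task-related thinking.

    `responses` holds one entry per lecture segment; None marks a missed
    probe (assumed here to be excluded from the denominator).
    Returns None when no probe was answered.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        return None
    return sum(r in ON_TASK for r in answered) / len(answered)


print(task_related_proportion([1, 4, None, 2]))   # two of three answered probes on-task
print(task_related_proportion([5, 4, 5, 4]))      # fully off-task
print(task_related_proportion([None] * 4))        # all probes missed
```

A participant for whom the function returns `None` corresponds to the "missed all four mind wandering probes" exclusion criterion in Table 1.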

Participants were instructed to focus on the lecture and ignore any irrelevant images/videos on screen should they appear. Participants were also encouraged to type notes below the lecture, as they would for an actual class. Before watching each segment, participants were asked to rate the likelihood of being quizzed after the segment on a scale from 0 (sure that there will not be a quiz) to 100 (sure that there will be one). Consistent with prior studies48, interpolated quizzes increased test expectations across segments (from 68% to 83%), F(3, 1047) = 37.83, p < 0.001, ηp2 = 0.047, 95% CI = [0.028, 0.073], whereas interpolated review reduced them (from 65% to 53%), F(3, 1056) = 29.82, p < 0.001, ηp2 = 0.037, 95% CI = [0.021, 0.058].

Immediately after each lecture segment and before the interpolated activity, participants completed an abbreviated, six-item Math and Science Engagement Scales79 (see Table S1 of Supplementary Information). In addition to measuring subjective engagement, which we hypothesized to be related to the benefits of interpolated testing, the questionnaire also served as a brief (~1 min) retention interval. After viewing Segment 4, all participants completed the engagement questionnaire and then a quiz for Segment 4. Performance on this criterial test provided the key measure of interest. Next, participants answered demographic and data quality control questions (e.g., how seriously they took the experiment). Participants were assured that their responses would not impact compensation. Twenty-four hours later, participants were sent an automated email instructing them to participate in Session 2 within 12 h.

During Session 2, participants first answered four inference questions. They then completed a cumulative test, which contained all of the questions from the interpolated and criterial quizzes. At the end of the session, participants were debriefed and thanked. Session 1 took approximately 45 min and Session 2 about 15 min.

Data Analysis and Preregistration Statement

We first address the effects of IRP on test performance and mind wandering for university and community college students; we then examine the influence of onscreen distractions on test performance. Finally, we present two preregistered exploratory analyses: we first report a mediation analysis that tests the attention account; we then examine whether IRP can reduce racial achievement gaps.

All analyses were conducted using two-tailed tests with an alpha level of .05. We report Cohen’s d and partial eta squared (ηp2) for effect sizes. Neither the criterial test data, W = 0.92, p < 0.001, nor the cumulative test data were normally distributed, W = 0.96, p < 0.001. This outcome is not surprising given that the criterial test contained only four questions and the cumulative test contained 16 questions. For null results, we also report the Bayes factor (BF01), which quantifies support for the null hypothesis (H0) against the alternative hypothesis (H1), with a greater value indicating greater support of the null. For all Bayesian analyses, we used the default parameters in JASP80 for our priors: a point-null (at zero) for H0 and a zero-centered, two-sided Cauchy distribution with a 0.707 scaling factor for H1.
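For readers who wish to relate the reported test statistics to the effect sizes, the sketch below applies two standard conversions: partial eta squared from an F ratio and its degrees of freedom, and Cohen's d from an independent-samples t. The function names are hypothetical, the d conversion assumes roughly equal group sizes, and nothing here is claimed to be the routine the authors used.

```python
import math


def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


def cohens_d_from_t(t, df):
    """Cohen's d from an independent-samples t, assuming equal group sizes."""
    return 2 * t / math.sqrt(df)


# Values taken from the Results section of this article.
print(round(partial_eta_squared(9.56, 1, 699), 3))    # interpolated activity, criterial test
print(round(partial_eta_squared(24.45, 1, 699), 3))   # institution type, criterial test
print(round(cohens_d_from_t(2.20, 371), 2))           # IRP benefit, university students
print(round(cohens_d_from_t(2.83, 233), 2))           # IRP benefit, no-distractor control
```

Because the conversions depend only on the test statistic and its degrees of freedom, they offer a quick consistency check between each reported F or t value and its accompanying effect size.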

This study was preregistered on the Open Science Framework at 10.17605/OSF.IO/URWVM on March 25, 2022. We deviated from our preregistered analyses on two occasions. First, we noticed an error in our preregistration, in which we stated that we would conduct a 2 (interpolated activity) × 3 (onscreen distraction) × 2 (on-task or off-task) ANOVA, without specifying the dependent measure and how we would measure mind wandering. That analysis actually described a 2 (interpolated activity) × 3 (onscreen distraction) ANOVA, and mind wandering (on-task or off-task) was the dependent variable. In the results section, we report the outcomes of this exploratory analysis by combining five measures related to engaged learning, including a dependent measure tied to mind wandering. Second, we had planned to conduct an ANOVA to examine whether our manipulations of interpolated activity and distraction would affect inference question performance. At the time of preregistration, we considered using performance on the inference questions as a measure of participants’ ability to integrate pieces of information within and across lecture segments. In hindsight, these questions were very difficult to produce and we could not ascertain that they actually measured integration. Moreover, participants generally performed poorly on them. An exploratory analysis showed that university students (M = .45) outperformed community college students (M = .39) on these inference questions, t(620) = 2.65, p = .008, d = 0.21, 95% CI = [0.06, 0.37]. But none of our planned analyses revealed significant effects of any independent variables on these questions, so we will not mention them further.

Finally, for completeness, we also preregistered two planned exploratory analyses: one on whether instructor gender affected our dependent measures, and the other on temporal changes to mind wandering based on interpolated activity. We report the outcomes of these analyses in the Supplementary Information under Supplementary Notes 1 and 2.

Results

Did IRP Enhance New Learning for University and Community College Students?

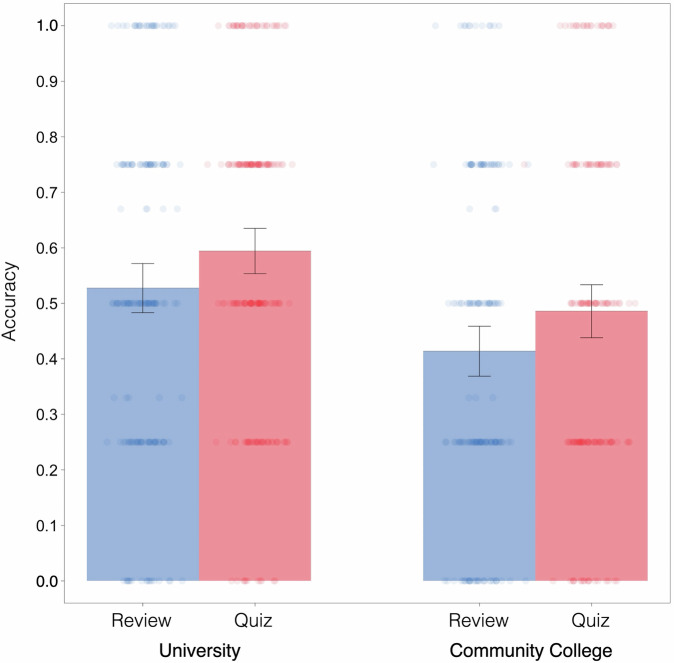

Figure 2 shows that IRP enhanced new learning for both university and community college students. A 2 (interpolated quiz, interpolated review) × 2 (university, community college) ANOVA showed that prior retrieval (M = 0.55) improved Segment 4 test performance relative to review (M = 0.47), F(1, 699) = 9.56, p = 0.002, ηp2 = 0.013, 95% CI = [0.002, 0.035]. Moreover, university students (M = 0.56) outperformed community college students (M = 0.45), F(1, 699) = 24.45, p < .001, ηp2 = 0.034, 95% CI = [0.012, 0.064]. But perhaps most importantly, the two variables did not interact, F(1, 699) = 0.01, p = 0.905, ηp2 < .001, 95% CI = [0.000, 0.004], BF01 = 8.40. Both university and community college (CC) students benefited from IRP, tuniversity(371) = 2.20, p = 0.029, d = 0.23, 95% CI = [0.02, 0.43]; tCC(328) = 2.17, p = 0.030, d = 0.24, 95% CI = [0.02, 0.46]. Therefore, inserting brief quizzes into an online lecture promoted learning of the last segment of the lecture for both student groups, even in an uncontrolled online environment.

Fig. 2. Criterial test performance as a function of interpolated activity and institution type.

Error bars are 95% confidence intervals. University: N = 373. Community College: N = 330. Each dot represents the data of an individual participant. The dots were displaced horizontally to improve visibility.

In addition to the criterial Segment 4 test, participants completed a cumulative test a day later. Similar to the criterial test, prior retrieval enhanced cumulative test performance (M = 0.62) relative to review (M = .54), F(1, 618) = 15.98, p < .001, d = 0.32, 95% CI = [0.01, 0.06], and university students (M = .62) outperformed community college students (M = .53), F(1, 618) = 16.07, p < .001, ηp2 = 0.025, 95% CI = [0.007, 0.055]. The interaction was not significant, F(1, 618) = 0.25, p = 0.617, ηp2 < 0.001, 95% CI = [0.000, 0.009], BF01 = 7.06.

For mind wandering, we examined the proportion of probes for which participants claimed to have engaged in task-related thinking, which included “what the professor is saying,” “something related to an earlier part of the lecture,” and “relating the lecture to my own life.” This dependent measure captured all thoughts associated with learning from the lecture60, and the data from all four MWPs were combined to increase reliability81. We conducted the same 2 × 2 ANOVA, but unlike the data on test performance, we found no credible evidence that interpolated activity affected engagement with lecture content (Mreview = .67, Mquiz = .69), F(1, 699) = 0.49, p = 0.485, ηp2 < 0.001, 95% CI = [0.000, 0.010], BF01 = 9.06, nor did it interact with institution type, F(1, 699) = 0.04, p = .846, ηp2 < .001, 95% CI = [0.000, 0.005], BF01 = 8.53. The main effect of institution type was also not significant, F(1, 699) = 3.56, p = .060, ηp2 = .005, 95% CI = [0.000, 0.021], BF01 = 1.99.

Before concluding that there was little credible evidence that IRP influences mind wandering, it was important to validate our measure. If the mind-wandering responses accurately reflected participants’ cognitive state, then participants with more task-related thinking responses should exhibit better test performance. Indeed, the proportion of task-related thinking across the four mind-wandering probes was correlated with both Segment 4 test performance (r = .32, 95% CI = [0.25, 0.38], p < .001) and cumulative test performance (r = .33, 95% CI = [0.26, 0.40], p < .001). These results are noteworthy because of their predictive validity, such that participants’ real-time responses to the MWPs predicted their test performance both minutes (Segment 4 criterial test) and a day (cumulative test) later. In sum, unlike previous laboratory studies, we found no credible evidence that IRP reduced mind wandering despite it having improved learning. We further investigated the role that attention plays in the TPNL effect later in an exploratory analysis.
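The confidence intervals around these correlations are consistent with the standard Fisher z transformation, as the sketch below illustrates. Treating Fisher's method as the source of the reported intervals is an assumption, as are the sample sizes plugged in (703 participants for the Segment 4 test and 622 for the cumulative test, taken from the Participants section); the function name is hypothetical.

```python
import math
from statistics import NormalDist


def fisher_ci(r, n, level=0.95):
    """Confidence interval for a Pearson correlation via the Fisher z transform."""
    z = math.atanh(r)                    # transform r to the z scale
    se = 1 / math.sqrt(n - 3)            # standard error on the z scale
    crit = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


seg4_low, seg4_high = fisher_ci(0.32, 703)   # Segment 4 criterial test
cum_low, cum_high = fisher_ci(0.33, 622)     # cumulative test (both sessions completed)

print(round(seg4_low, 2), round(seg4_high, 2))
print(round(cum_low, 2), round(cum_high, 2))
```

Under these assumptions the computed bounds round to the intervals reported above, and neither interval approaches zero.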

Effects of Onscreen Distractions

We now examined the influence of interpolated activity (quiz, review) and onscreen distraction (control, meme, video) on test performance (see Fig. 3). The key findings for this analysis are those pertaining to the distraction manipulation, because the main effect of interpolated activity is the same as that reported in the prior section. The main effect of distraction was not significant, F(2, 697) = 1.38, p = .252, ηp2 = .004, 95% CI = [0.000, 0.016], BF01 = 11.71. The interaction between distraction and interpolated activity also did not reach significance, F(2, 697) = 2.58, p = .077, ηp2 = .007, 95% CI = [0.000, 0.023], BF01 = 3.11, but it exhibited an unexpected pattern. To further scrutinize this result, we conducted an exploratory analysis for each distractor condition. These comparisons revealed a significant TPNL effect for both the no-distractor control condition, t(233) = 2.83, p = .005, d = 0.37, 95% CI = [0.11, 0.63], and the meme condition, t(233) = 2.62, p = .009, d = 0.34, 95% CI = [0.08, 0.60], but not for the TikTok condition, t(231) = 0.06, p = .955, d < 0.01, 95% CI = [-0.25, 0.26], BF01 = 6.97. In fact, participants in the TikTok condition exhibited numerically identical performance following interpolated review and quizzes (both Ms = .51). We suspect that the interaction’s lack of significance was driven by two of the three distraction conditions (i.e., control and meme) having produced the same, moderate TPNL effect, thereby increasing the power needed to detect an interaction.

Fig. 3. Criterial test performance as a function of interpolated activity and onscreen distraction.

Error bars are 95% confidence intervals. Participants in the control condition did not have any onscreen distractors (N = 235). Participants in the medium distractor condition had three memes on the right side of the screen during the lecture (N = 235). Participants in the strong distractor condition had three TikTok videos playing on the right side of the screen throughout the lecture (N = 233). Each dot represents the data of an individual participant. The dots were displaced horizontally to improve visibility.
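For the per-condition comparisons above, Cohen's d for an independent-samples t test can be recovered as d = t × sqrt(1/n1 + 1/n2). The sketch below assumes a near-equal split between review and quiz participants within each distractor condition (e.g., 117 and 118 for N = 235); those group sizes are an assumption for illustration and are not reported in this section.

```python
import math


def cohens_d_from_t(t_value, n1, n2):
    """Convert an independent-samples t statistic to Cohen's d."""
    return t_value * math.sqrt(1.0 / n1 + 1.0 / n2)


# (t statistic, assumed n for review, assumed n for quiz) per distractor condition.
comparisons = {
    "control": (2.83, 117, 118),
    "meme": (2.62, 117, 118),
    "TikTok": (0.06, 116, 117),
}

for condition, (t_value, n1, n2) in comparisons.items():
    print(f"{condition}: d = {cohens_d_from_t(t_value, n1, n2):.3f}")
```

With these assumed group sizes, the converted values round to the reported effect sizes for the control and meme conditions, and fall below 0.01 for the TikTok condition.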

At first glance, the null effect in the strong distractor condition suggests that TPNL diminished with onscreen distractions, but a closer examination of Fig. 3 suggests a more nuanced interpretation. Specifically, the TikTok videos reduced accuracy (M = 0.51) relative to no distractors (M = 0.54) for participants in the interpolated quiz condition, but this slight drop in performance was not the main contributor to the elimination of the TPNL effect. Instead, for participants in the interpolated review condition, the onscreen distractions unexpectedly enhanced, rather than reduced, their criterial test performance. Indeed, interpolated review participants who were given video distractors (M = 0.51) actually outperformed their no-distractor counterparts (M = 0.43), t(239) = 2.10, p = 0.037, d = 0.27, 95% CI = [0.02, 0.52]. Therefore, rather than IRP merely failing to benefit memory amid onscreen distractions, our data revealed an ironic effect of distraction – the TikTok videos might have kept students engaged with the lecture. We must note that this is an unexpected finding, so further research is needed to ascertain its reliability and generality. For example, our onscreen distractions were silent, so participants could listen to the lecture content while watching the TikTok videos, which might ironically help participants stay engaged with the content. We believe that the same benefit might not occur for participants who received interpolated quizzes because the quizzes already kept them engaged with the lecture.

We now present results from the cumulative test. Onscreen distraction did not have a main effect on cumulative test performance, F(2, 616) = 1.72, p = 0.180, ηp2 = 0.006, 95% CI = [0.000, 0.021], BF01 = 11.18. If anything, participants in the video distractor condition (M = .60) produced the best performance overall (MControl = 0.57, MMeme = 0.56). Further, interim activity and distraction did not interact, F(2, 616) = 0.51, p = .600, ηp2 = .002, 95% CI = [0.000, 0.011], BF01 = 18.43.

Exploratory Analysis on Engaged Learning

Contrary to several laboratory studies, our data showed that taking interpolated quizzes did not credibly reduce mind wandering. In the following exploratory analysis, we aimed to obtain a more reliable measure related to the broader concepts of attention, engagement, and effort by combining multiple variables that were individually less reliable into a composite measure82,83. The five variables were participants’ STEM engagement score for video lecture Segment 4, the number of Segment 4 idea units captured by participants’ Segment 4 notes, participants’ expectation that they will be quizzed following the Segment 4 lecture, the task-related thinking score from the four MWPs, and participants’ answer to the post-experiment question about how seriously they took the experiment (5-point scale).

We used artificial intelligence (OpenAI’s GPT-4) to code for the presence of idea units in participants’ notes. To ensure that GPT’s codings were satisfactorily accurate, we compared GPT’s coding with that of two trained undergraduate coders on a randomly selected subset of 40 participants’ data per topic. Each lecture segment had between 14 and 19 idea units. Therefore, each research assistant coded 10,200 data points. Across these data points, GPT’s coding produced agreement rates comparable to that between the human coders (GPT vs. Human A: 84%, GPT vs. Human B: 81%, Human A vs. Human B: 87%). We will detail the AI-assisted coding methodology in a separate report.
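Pairwise agreement rates of the kind reported above can be computed as the proportion of data points on which two coders gave the same code. The following is a minimal sketch using hypothetical toy codings (1 = idea unit present, 0 = absent); the data and names are invented for illustration and are not taken from the study.

```python
from itertools import combinations


def percent_agreement(codes_a, codes_b):
    """Proportion of data points on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same data points.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


# Hypothetical toy codings for ten idea-unit judgments per coder.
codings = {
    "GPT": [1, 0, 1, 1, 0, 1, 0, 0, 1, 1],
    "Human A": [1, 0, 1, 0, 0, 1, 0, 1, 1, 1],
    "Human B": [1, 1, 1, 0, 0, 1, 0, 0, 1, 1],
}

for (name_a, codes_a), (name_b, codes_b) in combinations(codings.items(), 2):
    print(f"{name_a} vs. {name_b}: {percent_agreement(codes_a, codes_b):.0%}")
```

Raw percent agreement does not correct for chance agreement; a chance-corrected index such as Cohen's kappa could be reported alongside it.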

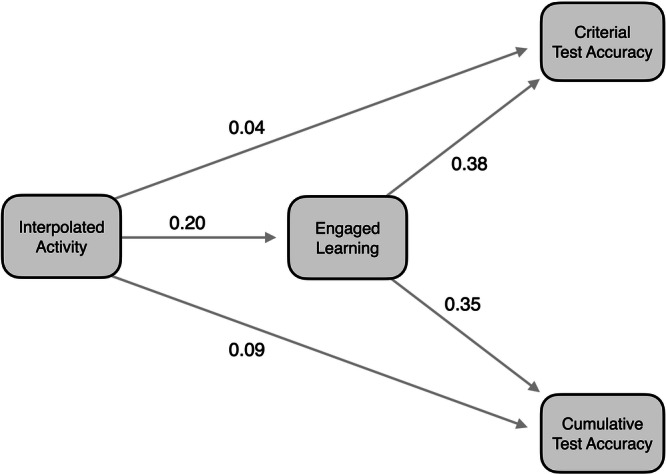

Consistent with the idea that the five measures were related to the same latent construct, they converged onto a single factor in an exploratory factor analysis with principal axis factoring, eigenvalue = 1.89, proportion of variance = 0.26 (see Table S2 of Supplementary Information). For exposition purposes, we termed this composite measure engaged learning, which was computed for each participant by averaging the Z scores of each measure because we did not have a priori notions about differential weights. As shown in Fig. 4, a 2 (interpolated activity) × 3 (distractor) ANOVA revealed that interpolated retrieval increased engaged learning, F(1, 697) = 32.27, p < 0.001, ηp2 = 0.044, 95% CI = [0.019, 0.078]. Neither the main effect of distractor, F(2, 697) = 0.41, p = 0.662, ηp2 = 0.001, 95% CI = [0.000, 0.009], BF01 = 30.77, nor the interaction was significant, F(2, 697) = 1.69, p = 0.185, ηp2 = 0.005, 95% CI = [0.000, 0.018], BF01 = 5.45.

Fig. 4. Engaged learning composite score as a function of interpolated activity and onscreen distraction.

Error bars are 95% confidence intervals. Participants in the control condition did not have any onscreen distractors (N = 235). Participants in the medium distractor condition had three memes on the right side of the screen during the lecture (N = 235). Participants in the strong distractor condition had three TikTok videos playing on the right side of the screen throughout the lecture (N = 233). Each dot represents the data of an individual participant. The dots are displaced horizontally to improve visibility. The y-axis is truncated at -1 and +1 to promote visibility of the central tendency.
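The engaged learning composite described above is an unweighted average of Z scores across the five measures. A minimal sketch of that computation follows, using hypothetical values for six participants; the variable names and data are invented for illustration, and the handling of missing data is not addressed here.

```python
import statistics


def z_scores(values):
    """Standardize a list of values using the sample mean and standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(value - mean) / sd for value in values]


def composite_scores(measures):
    """Average each participant's Z scores across all measures (equal weights)."""
    standardized = [z_scores(values) for values in measures.values()]
    return [statistics.mean(per_participant) for per_participant in zip(*standardized)]


# Hypothetical data: one list per measure, one entry per participant.
measures = {
    "stem_engagement": [3.2, 4.1, 2.5, 3.8, 4.6, 2.9],
    "note_idea_units": [5, 9, 2, 7, 11, 4],
    "quiz_expectation": [40, 80, 20, 60, 90, 30],
    "task_related_thinking": [0.50, 0.75, 0.25, 0.75, 1.00, 0.50],
    "seriousness": [3, 4, 2, 4, 5, 3],
}

engaged_learning = composite_scores(measures)
print([round(score, 2) for score in engaged_learning])
```

Equal weighting puts all five measures on a common scale before averaging, so no single measure dominates the composite because of its units.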

If IRP potentiates new learning by promoting engagement with the lecture content, engaged learning should mediate the effect of interim activity (our independent variable) on test performance. Note that we preregistered this mediation analysis conceptually, although the preregistration did not specify exactly how we would represent the concept of engaged learning. After removing participants with missing data (in either the mediator or the DVs), a mediation model84 (see Fig. 5) with 622 participants revealed a significant indirect effect for criterial test performance, β = 0.15, 95% CI = [0.09, 0.21], proportion-mediated = 0.65, p < 0.001, as well as cumulative test performance, β = 0.14, 95% CI = [0.08, 0.20], proportion-mediated = 0.45, p < 0.001. A sensitivity analysis showed that the smallest proportion-mediated our sample could detect with 0.50 power was 0.08. We chose 0.50 power for the sensitivity analysis based on a recommendation for exploratory analysis85. Therefore, unlike the mind wandering data alone, the composite engaged learning score mediated the effect of interpolated activity on criterial test performance.

Fig. 5. Mediation model (N = 622) depicting the relationship between interpolated activity, engaged learning composite score, and criterial and cumulative test performance.

Values are standardized parameter estimates.
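To illustrate the logic of the mediation analysis, the sketch below estimates a standardized indirect effect (a × b) for a single-mediator model and a percentile bootstrap confidence interval. It is not the model fitted in this study (which included two outcomes and was estimated with dedicated software); the data are simulated, and all names, effect sizes, and the bootstrap settings are assumptions for illustration.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def standardized_mediation(x, m, y):
    """Standardized paths for a single-mediator model X -> M -> Y."""
    r_xm, r_xy, r_my = pearson_r(x, m), pearson_r(x, y), pearson_r(m, y)
    a = r_xm                                          # X -> M
    b = (r_my - r_xy * r_xm) / (1 - r_xm ** 2)        # M -> Y, controlling for X
    direct = (r_xy - r_my * r_xm) / (1 - r_xm ** 2)   # X -> Y, controlling for M
    return {"a": a, "b": b, "direct": direct, "indirect": a * b, "total": r_xy}


def bootstrap_indirect_ci(x, m, y, n_boot=500, seed=1):
    """Percentile bootstrap 95% CI for the standardized indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]    # resample participants
        resampled = standardized_mediation(
            [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]
        )
        estimates.append(resampled["indirect"])
    estimates.sort()
    return estimates[int(0.025 * n_boot)], estimates[int(0.975 * n_boot) - 1]


# Simulated data (hypothetical): x = 0 for review, 1 for quiz.
sim = random.Random(0)
x = [i % 2 for i in range(300)]
m = [0.5 * xi + sim.gauss(0, 1) for xi in x]                          # mediator
y = [0.5 * mi + 0.1 * xi + sim.gauss(0, 1) for xi, mi in zip(x, m)]   # outcome

estimate = standardized_mediation(x, m, y)
low, high = bootstrap_indirect_ci(x, m, y)
proportion_mediated = estimate["indirect"] / estimate["total"]
print(f"indirect = {estimate['indirect']:.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
print(f"proportion mediated = {proportion_mediated:.2f}")
```

In this formulation the total effect equals the sum of the direct and indirect effects, so the proportion mediated is the indirect effect divided by the total effect.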

Exploratory Analysis on Achievement Gaps

The racial diversity of the community college samples provided an opportunity to explore whether IRP could reduce race-based achievement gaps (note that this analysis was also preregistered conceptually but not precisely). Because we needed to break down the data by race, we collapsed across onscreen distraction conditions to increase power. Moreover, we only included data from race groups with at least 20 participants per condition (see Table 2). We acknowledge that this threshold is arbitrary, but it was necessary to ensure reliable outcomes.

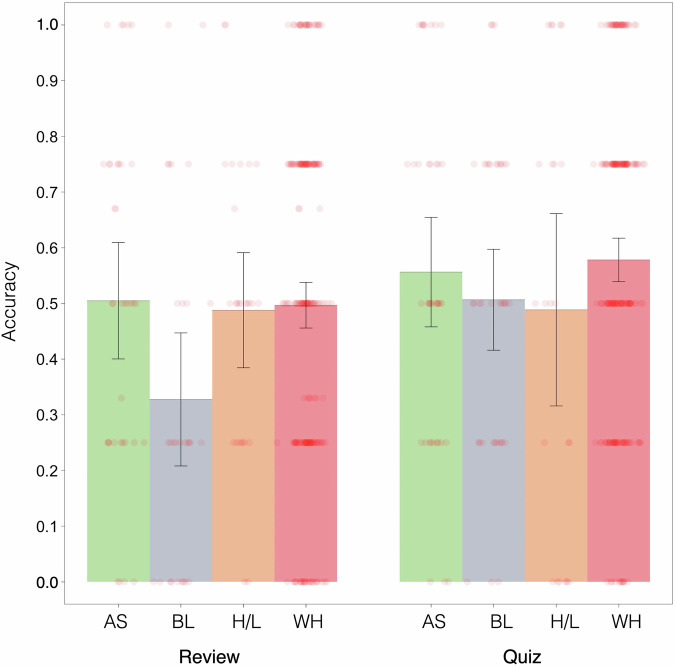

As can be seen in the left panel of Fig. 6, there was a substantial achievement decrement in criterial test performance for Black students (M = 0.33) relative to White (M = 0.50), Asian (M = 0.51), and Hispanic/Latinx (M = 0.49) students in the interpolated review condition, F(3, 301) = 2.77, p = 0.042, ηp2 = 0.027, 95% CI = [0.000, 0.065]. Strikingly, this achievement gap was greatly reduced in the interpolated retrieval condition (see right panel of Fig. 6), F(3, 304) = 1.10, p = .348, ηp2 = .011, 95% CI = [0.000, 0.036], BF01 = 9.09, such that Black students (M = 0.51) performed similarly to White (M = 0.58), Asian (M = 0.56), and Hispanic/Latinx students (M = .49). To be fully transparent, the 2 (interpolated activity) × 4 (race) interaction was not significant, F(3, 605) = 0.94, p = 0.420, ηp2 = 0.005, 95% CI = [0.000, 0.017], BF01 = 12.87, perhaps because the smaller sample sizes of the minority groups limited statistical power (see Table 2). So it is important to interpret these results with caution. A sensitivity analysis showed that the smallest effect size our sample could detect with 0.50 power was .018 (N = 304 for this analysis, and the remaining analyses in this section all had equal or slightly larger samples).

Fig. 6. Criterial test performance as a function of interpolated activity and race.

AS refers to Asians/Asian-Americans/Asian-Canadians (N = 76), BL refers to Black (N = 67), H/L refers to Hispanic/Latinx (N = 49), WH refers to White (N = 421). Error bars are 95% confidence intervals. Each dot represents the data of an individual participant. The dots were displaced horizontally to improve visibility.
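The group comparisons in this section are one-way ANOVAs computed separately within each interpolated activity condition. As a reminder of what the F statistic and effect size capture, the sketch below computes them from raw scores for three hypothetical groups; the data and group labels are invented for illustration and bear no relation to the study's data.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within, eta squared) for a one-way ANOVA."""
    scores = [score for group in groups.values() for score in group]
    grand_mean = sum(scores) / len(scores)
    group_means = {name: sum(group) / len(group) for name, group in groups.items()}
    ss_between = sum(
        len(group) * (group_means[name] - grand_mean) ** 2
        for name, group in groups.items()
    )
    ss_within = sum(
        (score - group_means[name]) ** 2
        for name, group in groups.items()
        for score in group
    )
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_value, df_between, df_within, eta_squared


# Hypothetical proportion-correct scores for three groups.
toy_groups = {
    "group_1": [0.5, 0.6, 0.4],
    "group_2": [0.3, 0.2, 0.4],
    "group_3": [0.5, 0.5, 0.5],
}

f_value, df_between, df_within, eta_squared = one_way_anova(toy_groups)
print(f"F({df_between}, {df_within}) = {f_value:.2f}, eta squared = {eta_squared:.3f}")
```

In a one-way design, eta squared and partial eta squared coincide, because no other effects are removed from the denominator.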

To ensure that the achievement gap findings were not driven by sampling differences (a distinct possibility given the small sample sizes for the minority groups in this analysis), we conducted the same analyses as above but replaced criterial test performance with participants’ self-reported GPA. If sampling differences had contributed to the achievement gap and its reduction, such that the Black students in the interpolated review condition happened to be lower-achieving and those in the interpolated quiz condition higher-achieving, we should observe lower GPA for the Black students relative to other students in the interpolated review condition but not in the interpolated quiz condition. Critically, unlike test performance, GPA exhibited racial achievement gaps regardless of whether participants were in the interpolated review, F(3, 281) = 4.51, p = 0.004, ηp2 = 0.046, 95% CI = [0.005, 0.095] (MBlack = 2.96, MWhite = 3.43, MAsian = 3.56, MHispanic = 3.32), or interpolated quiz condition, F(3, 284) = 12.17, p < 0.001, ηp2 = 0.114, 95% CI = [0.049, 0.181] (MBlack = 2.76, MWhite = 3.36, MAsian = 3.74, MHispanic = 3.19). If anything, the GPA gap between Black and White students was at least as large in the interpolated quiz condition (d = 0.85) as in the interpolated review condition (d = 0.72). Therefore, the beneficial effect of IRP on racial achievement gaps was not driven by a sampling error.

For cumulative test performance, we did not include Hispanic/Latinx students because they did not meet the sample size cutoff. Unlike the criterial test results, there was an achievement gap for students in both the review, F(2, 245) = 3.85, p = .023, ηp2 = .030, 95% CI = [0.000, 0.080], and quiz conditions, F(2, 250) = 3.71, p = 0.026, ηp2 = 0.029, 95% CI = [0.000, 0.077], with Black students (Mreview = .40, Mquiz = .55) underperforming Asian students (Mreview = .52, Mquiz = .61) and White students (Mreview = 0.55, Mquiz = 0.67). Despite this result, we emphasize that Black students exhibited at least the same gains from retrieval practice as the other racial groups; therefore, IRP either reduced achievement gaps (for criterial test performance) or did not magnify them (for cumulative test performance).

Together, these results demonstrate that interpolated retrieval practice might, under some circumstances, reduce race-based achievement gaps. Although these data are intriguing and promising, they must be interpreted with caution because of the small sample size for the racial minority groups (N ≈ 30 per condition) relative to White participants (N > 180 per condition). Nevertheless, the sample sizes of the minority groups here are comparable to many experimental intervention studies86,87. Lastly, one might wonder if our data also demonstrated achievement gaps based on socioeconomic status (SES). They did not. Specifically, neither participants in the interpolated review condition, t(321) = 0.23, p = 0.817, d = -0.03, 95% CI = [-0.25, 0.20], BF01 = 7.86, nor those in the interpolated quiz condition, t(321) = 0.98, p = 0.330, d = -0.11, 95% CI = [-0.33, 0.11], BF01 = 5.13, showed an SES-based achievement gap. The negative effect sizes here indicate that lower SES participants performed slightly better than higher SES participants. These null effects might be driven by pronounced range restrictions in the socioeconomic data, given that nearly 90% of the participants reported that they were from the middle or working class. For transparency and completeness, these data are presented in full in Supplementary Note 3 and Table S3.

Discussion

Several key findings emerged from this experiment. First, IRP improved new learning relative to interpolated review, and this result applied equally to university and community college students. However, the benefit of IRP varied with onscreen distractions, such that it was eliminated for participants in the strong-distraction condition. Second, we observed the benefits of IRP on test performance even when it did not significantly reduce the frequency of mind wandering, but an exploratory composite variable that combined multiple attention/engagement-based metrics revealed that the TPNL effect was mediated by task engagement. Third, IRP enhanced delayed, cumulative test performance regardless of onscreen distractions. Lastly, we found preliminary evidence that IRP might reduce race-based achievement gaps between Black and White students. We now discuss the implications of these findings.

Lab-based research has provided ample evidence that IRP can enhance learning, but as we discussed earlier, much of this research occurred under “pristine” laboratory conditions. Here, we showed that IRP enhanced online learning even when we imposed no “guard rails” against distractions. However, the IRP advantage over interpolated review was absent for participants who watched the lecture with onscreen TikTok videos. This finding and the mediation analysis results suggest that attention during lecture viewing was crucial for the test-potentiated new learning effect.

Online learning and attention

To gain a better understanding of the TPNL phenomenon, it is important to contextualize our effect size against the broader literature. Specifically, even in the no-distractor condition (d = 0.37), our effect size was more modest than in many laboratory studies. For example, Chan et al. reported a meta-analytic effect size of g = 0.75 (k = 84)6. We do not believe that the moderate TPNL effect observed here was specific to our lecture materials, because other studies have demonstrated powerful TPNL benefits using similar lectures in the lab (ds > 1.00)7,60,88,89. Nor do we believe that the modest effect was specific to our public university and community college samples (e.g., participants in other studies were from elite universities7,60), since both groups exhibited similar effect sizes, despite the university students having significantly outperformed community college students.

An intriguing alternative possibility is that the uncontrolled online learning environment, even for participants in the no-distractor condition, already acted as a divided attention condition relative to in-lab environments. This hypothesis is consistent with the idea that IRP potentiates new learning because it promotes attentive encoding after retrieval, so environments (online learning) or manipulations (onscreen distractions) that interfere with attentional control should reduce the TPNL effect. If one assumes that the typical online learning environment is highly distracting, then entertaining stimuli that promote engagement with the experiment itself might enhance learning, even if they are irrelevant to the lecture. Specifically, in the absence of the onscreen distractions or interpolated quizzes, participants in the interpolated review condition might be prone to disengaging entirely from the experiment (e.g., web browsing, texting, mind wandering). But the TikTok videos might ironically keep some participants engaged (Fig. 4), thereby promoting learning for participants in the interpolated review condition. This ironic effect of distraction aligns with the practice of using humor or offering breaks to raise student engagement, despite these approaches seemingly running counter to typical pedagogical objectives90–92.

Limitations

Given the unexpected nature of this ironic effect, we must interpret it with caution. Further, it is likely that the modality of the distractor (silent videos or pictures) and that of the target material (e.g., voiced lecture) would interact. For example, the beneficial effects of our video distractors might not hold if both the distractor and the target material were voiced, because stimuli that occupy the same perceptual domain often produce powerful interference93. An additional discrepancy between our study and other divided attention studies was that we aimed to implement distractors that resembled the typical passive distractors encountered by online learners (e.g., clickbait, other open applications), so participants could ignore them – as they could in the real world. In contrast, most lab-based divided attention studies required participants to dual-task, such that they must respond to multiple stimuli simultaneously94,95. Future research can address whether our results – particularly the interaction between interpolated activity and distractions – would generalize to dual-task situations when students must respond to irrelevant stimuli (e.g., chatting on instant messaging platforms).

A potential intervention to reduce achievement gaps

The large sample of the present study and the involvement of the (more diverse) community college students allowed us i) to demonstrate race-based achievement gaps in our data and ii) to show that IRP could reduce these achievement gaps for Black students. However, it is interesting that IRP reduced achievement gaps for criterial test performance but not for cumulative test performance. Based on this dissociation, one might suggest that our results are less than impressive. We argue that, to the contrary, these findings should be investigated further because they suggest the possibility that a low-cost, scalable intervention can be leveraged to reduce some race-based achievement gaps, which are notoriously prevalent and intractable96. For example, despite having spent billions of dollars on several educational reforms, the achievement gaps in the U.S. have remained stubbornly consistent for decades97.

Constraints on generality

A promising aspect of the present finding is that the intervention of IRP had an immediate impact on students’ learning, and it did not require a multi-day commitment or extensive training from students and instructors86. A shortcoming of our results is that we had a small sample size for the minority groups (e.g., 67 Black students took the criterial test and 58 took the cumulative test), so these data are by no means representative, but we must also emphasize that experimental intervention research aimed at reducing racial achievement gaps often included similar87 or even smaller samples86. Moreover, the number of intervention studies in education has declined rapidly in recent years, but researchers have made ever more practice or policy recommendations based on correlational data98,99. This problematic trend can only be reversed through more experimental research. We believe that interventions aimed at reducing the long-standing racial achievement gaps warrant further experimental investigations.

Conclusions

In the present study, we aimed to provide a rigorous evaluation of interpolated quizzes as an online learning intervention. To this end, we produced four high-quality STEM lectures taught by eight instructors, and we examined the efficacy of our intervention with students across seven institutions, including community college students, who have been profoundly underrepresented in experimental research. Using a realistic manipulation of onscreen distractions, we also examined a key theoretical account of the test-potentiated new learning effect—that IRP enhances new learning because it promotes engagement with the material. Together, our study showed that IRP can be implemented in online lectures to boost student learning. Not only did interpolated quizzes promote new learning immediately, they also enhanced delayed recall of the lecture material. Lastly, our data showed that IRP might reduce race-based achievement gaps in some situations.

Acknowledgements

This work is supported by the United States National Science Foundation (NSF) Science of Learning and Augmented Intelligence Grant 2017333 to Chan and Szpunar. The funders had no role in the study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author contributions

Chan and Szpunar conceptualized the research. Chan and Ahn produced the materials for the research. Ahn programmed the experiment. Ahn, Assadipour, and Gill collected the data. Chan and Ahn conducted the analysis. Chan wrote the original draft of the manuscript. All authors contributed comments to the manuscript. Ahn was at Iowa State University when this work was conducted.

Peer review

Peer review information

Communications Psychology thanks Andrew Butler and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editors: Troby Ka-Yan Lui. A peer review file is available.

Data availability

All analyses were conducted using JASP. The data and the lecture materials are available at https://osf.io/3fx8d/?view_only=a2228ccf7c4540bb923ed9e54f7a0441.

Code availability

The R code for the figures is available at 10.17605/OSF.IO/3FX8D or https://osf.io/3fx8d/?view_only=a2228ccf7c4540bb923ed9e54f7a0441.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s44271-025-00234-5.

References

- 1. Chan, J. C. K. & Ahn, D. Unproctored online exams provide meaningful assessment of student learning. Proc. Natl. Acad. Sci. USA 120, e2302020120 (2023).

- 2. Francis, M. K., Wormington, S. V. & Hulleman, C. The costs of online learning: Examining differences in motivation and academic outcomes in online and face-to-face community college developmental mathematics courses. Front. Psychol. 10, 2054 (2019).

- 3. Travers, S. Supporting online student retention in community colleges: What data is most relevant? The Quarterly Review of Distance Education 17, 49–61 (2016).

- 4. Aivaz, K. A. & Teodorescu, D. College students’ distractions from learning caused by multitasking in online vs. face-to-face classes: A case study at a public university in Romania. Int. J. Environ. Res. Public Health 19, 11188 (2022).

- 5. Wammes, J. D. & Smilek, D. Examining the influence of lecture format on degree of mind wandering. Journal of Applied Research in Memory and Cognition 6, 174–184 (2017).

- 6. Chan, J. C. K., Meissner, C. A. & Davis, S. D. Retrieval potentiates new learning: A theoretical and meta-analytic review. Psychol. Bull. 144, 1111–1146 (2018).

- 7. Szpunar, K. K., Khan, N. Y. & Schacter, D. L. Interpolated memory tests reduce mind wandering and improve learning of online lectures. Proc. Natl. Acad. Sci. USA 110, 6313–6317 (2013).

- 8. Szpunar, K. K. Directing the wandering mind. Curr. Dir. Psychol. Sci. 26, 40–44 (2017).

- 9. National Center for Education Statistics. Undergraduate enrollment. Condition of education. (U.S. Department of Education, 2023).

- 10. McCutcheon, K., Lohan, M., Traynor, M. & Martin, D. A systematic review evaluating the impact of online or blended learning vs. face-to-face learning of clinical skills in undergraduate nurse education. J. Adv. Nurs. 71, 255–270 (2015).

- 11. Shea, P. & Bidjerano, T. Does online learning impede degree completion? A national study of community college students. Computers & Education 75, 103–111 (2014).

- 12. Woldeab, D., Yawson, R. M. & Osafo, E. A systematic meta-analytic review of thinking beyond the comparison of online versus traditional learning. E-Journal of Business Education & Scholarship of Teaching 14, 1–24 (2020).

- 13. Ochs, C., Gahrmann, C. & Sonderegger, A. Learning in hybrid classes: The role of off-task activities. Sci. Rep. 14, 1629 (2024).

- 14. Hachey, A. C., Conway, K. M. & Wladis, C. W. Community colleges and underappreciated assets: Using institutional data to promote success in online learning. Onl. J. Dist. Learn. Admin. 16, 1–18 (2013).

- 15. Xu, D. & Jaggars, S. S. The impact of online learning on students’ course outcomes: Evidence from a large community and technical college system. Econ. Educ. Rev. 37, 46–57 (2013).

- 16. Johnson, H. P., Mejia, M. C. & Cook, K. Successful online courses in California’s community colleges. (Public Policy Institute of California, 2015).

- 17. Xu, D. & Jaggars, S. S. The effectiveness of distance education across Virginia’s community colleges: Evidence from introductory college-level math and English courses. Educ. Eval. Policy Anal. 33, 360–377 (2011).

- 18. Jaggars, S. Online learning: Does it help low-income and underprepared students? Community College Research Center Working Paper 26 (2011).

- 19. Seli, P. et al. Mind-wandering as a natural kind: A family-resemblances view. Trends Cogn. Sci. 22, 479–490 (2018).

- 20. Smallwood, J. & Schooler, J. W. The restless mind. Psychol. Bull. 132, 946–958 (2006).

- 21. Smallwood, J. & Schooler, J. W. The science of mind wandering: Empirically navigating the stream of consciousness. Annu. Rev. Psychol. 66, 487–518 (2015).

- 22. Bonifacci, P., Viroli, C., Vassura, C., Colombini, E. & Desideri, L. The relationship between mind wandering and reading comprehension: A meta-analysis. Psychon. Bull. Rev. 30, 40–59 (2023).

- 23. Conrad, C. & Newman, A. Measuring mind wandering during online lectures assessed with EEG. Front. Hum. Neurosci. 15, 697532 (2021).

- 24. Pachai, A. A., Acai, A., LoGiudice, A. B. & Kim, J. A. The mind that wanders: Challenges and potential benefits of mind wandering in education. Scholarsh. Teach. Learn. Psychol. 2, 134–146 (2016).

- 25. Smallwood, J., Fishman, D. J. & Schooler, J. W. Counting the cost of an absent mind: Mind wandering as an underrecognized influence on educational performance. Psychon. Bull. Rev. 14, 230–236 (2007).

- 26. Wong, A. Y., Smith, S. L., McGrath, C. A., Flynn, L. E. & Mills, C. Task-unrelated thought during educational activities: A meta-analysis of its occurrence and relationship with learning. Contemp. Educ. Psychol. 71, 102098 (2022).

- 27. Zanesco, A. P., Denkova, E. & Jha, A. P. Mind-wandering increases in frequency over time during task performance: An individual-participant meta-analytic review. Psychol. Bull. (2024).

- 28. Kane, M. J. et al. Individual differences in task-unrelated thought in university classrooms. Mem. Cognit. 49, 1247–1266 (2021).

- 29. Risko, E. F., Anderson, N., Sarwal, A., Engelhardt, M. & Kingstone, A. Everyday attention: Variation in mind wandering and memory in a lecture. Appl. Cogn. Psychol. 26, 234–242 (2012).

- 30. van der Meij, H. & Böckmann, L. Effects of embedded questions in recorded lectures. Journal of Computing in Higher Education 33, 235–254 (2021).

- 31. Davis, S. D. & Chan, J. C. K. Effortful tests and repeated metacognitive judgments enhance future learning. Educ. Psychol. Rev. 35, 86 (2023).

- 32. Ahn, D. & Chan, J. C. K. Does testing enhance new learning because it insulates against proactive interference? Memory & Cognition 50, 1664–1682 (2022).

- 33. Pastötter, B. & Frings, C. The forward testing effect is reliable and independent of learners’ working memory capacity. J. Cogn. 2, 37 (2019).

- 34. Szpunar, K. K., McDermott, K. B. & Roediger, H. L. Testing during study insulates against the buildup of proactive interference. J. Exp. Psychol. Learn. Mem. Cogn. 34, 1392–1399 (2008).

- 35. Yang, C. et al. Testing potential mechanisms underlying test-potentiated new learning. J. Exp. Psychol. Learn. Mem. Cogn. 48, 1127–1143 (2022).

- 36. Ahn, D. & Chan, J. C. K. Does testing potentiate new learning because it enables learners to use better strategies? J. Exp. Psychol. Learn. Mem. Cogn. 50, 435–457 (2024).

- 37. Choi, H. & Lee, H. S. Knowing is not half the battle: The role of actual test experience in the forward testing effect. Educ. Psychol. Rev. 32, 765–789 (2020).

- 38. Davis, S. D. & Chan, J. C. Studying on borrowed time: How does testing impair new learning? J. Exp. Psychol. Learn. Mem. Cogn. 41, 1741–1754 (2015).

- 39. Lee, H. S. & Ahn, D. Testing prepares students to learn better: The forward effect of testing in category learning. J. Educ. Psychol. 110, 203–217 (2018).

- 40. Li, T., Ma, X., Pan, W. & Huo, X. The impact of transcranial direct current stimulation combined with interim testing on spatial route learning in patients with schizophrenia. J. Psychiatr. Res. 177, 169–176 (2024).

- 41.Kriechbaum, V. M. & Bäuml, K.-H. T. Retrieval practice can promote new learning with both related and unrelated prose materials. Journal of Applied Research in Memory and Cognition (2024).

- 42.Wissman, K. T., Rawson, K. A. & Pyc, M. A. The interim test effect: Testing prior material can facilitate the learning of new material. Psychon. Bull. Rev.18, 1140–1147 (2011). [DOI] [PubMed] [Google Scholar]

- 43.Parong, J. & Green, C. S. The forward testing effect after a 1‐day delay across dissimilar video lessons. Appl. Cogn. Psychol.37, 1037–1044 (2023). [Google Scholar]

- 44.Risko, E. F., Liu, J. & Bianchi, L. Speeding lectures to make time for retrieval practice: Can we improve the efficiency of interpolated testing. J. Exp. Psychol. Appl.30, 268–281 (2023). [DOI] [PubMed] [Google Scholar]

- 45. Hew, K. F. & Lo, C. K. Comparing video styles and study strategies during video-recorded lectures: Effects on secondary school mathematics students’ preference and learning. Interactive Learning Environments 28, 847–864 (2020).

- 46. Yang, C., Potts, R. & Shanks, D. R. Enhancing learning and retrieval of new information: A review of the forward testing effect. NPJ Sci. Learn. 3, 1–9 (2018).

- 47. Szpunar, K. K., Moulton, S. T. & Schacter, D. L. Mind wandering and education: From the classroom to online learning. Front. Psychol. 4, Article 495 (2013).

- 48. Chan, J. C. K., Manley, K. D. & Ahn, D. Does retrieval potentiate new learning when retrieval stops but new learning continues? J. Mem. Lang. 115, 104150 (2020).

- 49. Don, H. J., Yang, C., Boustani, S. & Shanks, D. R. Do partial and distributed tests enhance new learning? J. Exp. Psychol. Appl. 29, 358–373 (2022).

- 50. Pastötter, B., Engel, M. & Frings, C. The forward effect of testing: Behavioral evidence for the reset-of-encoding hypothesis using serial position analysis. Front. Psychol. 9, 1197 (2018).

- 51. Chan, J. C. K., Manley, K. D., Davis, S. D. & Szpunar, K. K. Testing potentiates new learning across a retention interval and a lag: A strategy change perspective. J. Mem. Lang. 102, 83–96 (2018).

- 52. Weinstein, Y., Gilmore, A. W., Szpunar, K. K. & McDermott, K. B. The role of test expectancy in the build-up of proactive interference in long-term memory. J. Exp. Psychol. Learn. Mem. Cogn. 40, 1039–1048 (2014).

- 53. Yue, C. L., Soderstrom, N. C. & Bjork, E. L. Partial testing can potentiate learning of tested and untested material from multimedia lessons. J. Educ. Psychol. 107, 991–1005 (2015).

- 54. Chmielewski, M. & Kucker, S. C. An MTurk crisis? Shifts in data quality and the impact on study results. Soc. Psychol. Personal. Sci. 11, 464–473 (2020).

- 55. Goodman, J. K., Cryder, C. E. & Cheema, A. Data collection in a flat world: The strengths and weaknesses of Mechanical Turk samples. Journal of Behavioral Decision Making 26, 213–224 (2013).

- 56. Peer, E., Rothschild, D., Gordon, A., Evernden, Z. & Damer, E. Data quality of platforms and panels for online behavioral research. Behav. Res. Methods 54, 1643–1662 (2022).

- 57. Finn, B. & Roediger, H. L. Interfering effects of retrieval in learning new information. J. Exp. Psychol. Learn. Mem. Cogn. 39, 1665–1681 (2013).

- 58. Garcia, N. H. Divided attention and its effect on forward testing. (University of North Florida, 2022).

- 59. Schmitt, A. Mind wandering, mental imagery, and the combined effects of interpolated pretesting and testing on learning. (California State University Northridge, 2023).

- 60. Jing, H. G., Szpunar, K. K. & Schacter, D. L. Interpolated testing influences focused attention and improves integration of information during a video-recorded lecture. J. Exp. Psychol. Appl. 22, 305–318 (2016).

- 61. Pan, S. C., Sana, F., Schmitt, A. G. & Bjork, E. L. Pretesting reduces mind wandering and enhances learning during online lectures. Journal of Applied Research in Memory and Cognition 9, 542–554 (2020).

- 62. Welhaf, M. S., Phillips, N. E., Smeekens, B. A., Miyake, A. & Kane, M. J. Interpolated testing and content pretesting as interventions to reduce task-unrelated thoughts during a video lecture. Cogn. Res. Princ. Implic. 7, 1–22 (2022).

- 63. Kurlaender, M., Cooper, S., Rodriguez, F. & Bush, E. From the disruption of the pandemic, a path forward for community colleges. Change 56, 29–36 (2024).

- 64. Hart, C. M. D., Hill, M., Alonso, E. & Xu, D. “I don’t think the system will ever be the same”: Distance education leaders’ predictions and recommendations for the use of online learning in community colleges post-COVID. J. Higher Educ. 1–25 (2024).

- 65. Weissmann, S. Online learning still in high demand at community college. Inside Higher Ed (2023).

- 66. Macon, D. K. Student satisfaction with online courses versus traditional courses: A meta-analysis. (Northcentral University, 2011).

- 67. Schinske, J. N. et al. Broadening participation in biology education research: Engaging community college students and faculty. CBE Life Sci. Educ. 16, mr1 (2017).

- 68. Pape-Lindstrom, P., Eddy, S. & Freeman, S. Reading quizzes improve exam scores for community college students. CBE Life Sci. Educ. 17, ar21 (2018).

- 69. Reber, S. & Smith, E. College enrollment disparities: Understanding the role of academic preparation. (Center on Children and Families at Brookings, 2023).

- 70. Akhlaghipour, G. & Assari, S. Parental education, household income, race, and children’s working memory: Complexity of the effects. Brain Sci. 10, 950 (2020).

- 71. Castora-Binkley, M., Peronto, C. L., Edwards, J. D. & Small, B. J. A longitudinal analysis of the influence of race on cognitive performance. J. Gerontol. B Psychol. Sci. Soc. Sci. 70, 512–518 (2015).

- 72. Rea-Sandin, G., Korous, K. M. & Causadias, J. M. A systematic review and meta-analysis of racial/ethnic differences and similarities in executive function performance in the United States. Neuropsychology 35, 141–156 (2021).

- 73. McDermott, K. B. & Zerr, C. L. Individual differences in learning efficiency. Curr. Dir. Psychol. Sci. 28, 607–613 (2019).

- 74. Grabman, J. H. & Dodson, C. S. Unskilled, underperforming, or unaware? Testing three accounts of individual differences in metacognitive monitoring. Cognition 242, 105659 (2024).

- 75. Chan, J. C. K., Davis, S. D., Yurtsever, A. & Myers, S. J. The magnitude of the testing effect is independent of retrieval practice performance. J. Exp. Psychol. Gen. 153, 1816–1837 (2024).

- 76. Tse, C.-S. & Pu, X. The effectiveness of test-enhanced learning depends on trait test anxiety and working-memory capacity. J. Exp. Psychol. Appl. 18, 253–264 (2012).

- 77. Zheng, Y., Sun, P. & Liu, X. L. Retrieval practice is costly and is beneficial only when working memory capacity is abundant. NPJ Sci. Learn. 8, 8 (2023).

- 78. Zheng, Y., Shi, A. & Liu, X. L. A working memory dependent dual process model of the testing effect. NPJ Sci. Learn. 9, 56 (2024).

- 79. Wang, M.-T., Fredricks, J. A., Ye, F., Hofkens, T. L. & Linn, J. S. The math and science engagement scales: Scale development, validation, and psychometric properties. Learn. Instr. 43, 16–26 (2016).

- 80. Wagenmakers, E. J. et al. Bayesian inference for psychology. Part II: Example applications with JASP. Psychon. Bull. Rev. 25, 58–76 (2018).

- 81. Welhaf, M. S. et al. A “goldilocks zone” for mind-wandering reports? A secondary data analysis of how few thought probes are enough for reliable and valid measurement. Behav. Res. Methods 55, 327–347 (2023).

- 82. Balota, D. A. et al. Veridical and false memories in healthy older adults and in dementia of the Alzheimer’s type. Cogn. Neuropsychol. 16, 361–384 (1999).

- 83. Chan, J. C. K. & McDermott, K. B. The effects of frontal lobe functioning and age on veridical and false recall. Psychon. Bull. Rev. 14, 606–611 (2007).

- 84. MacKinnon, D. P., Lockwood, C. M., Hoffman, J. M., West, S. G. & Sheets, V. A comparison of methods to test mediation and other intervening variable effects. Psychol. Methods 7, 83–104 (2002).

- 85. Review, P. B. Statistical guidelines. (2020).

- 86. Brady, L. M. et al. A leadership-level culture cycle intervention changes teachers’ culturally inclusive beliefs and practices. Proc. Natl. Acad. Sci. USA 121, e2322872121 (2024).

- 87. Cohen, G. L., Garcia, J., Apfel, N. & Master, A. Reducing the racial achievement gap: A social-psychological intervention. Science 313, 1307–1310 (2006).

- 88. Szpunar, K. K., Jing, H. G. & Schacter, D. L. Overcoming overconfidence in learning from video-recorded lectures: Implications of interpolated testing for online education. J. Appl. Res. Mem. Cogn. 3, 161–164 (2014).

- 89. Assadipour, Z., Ahn, D. & Chan, J. C. K. Interpolated retrieval of relevant material, not irrelevant material, enhances new learning of a video lecture in-person and online. Manuscript under review (2025).

- 90. Bieg, S. & Dresel, M. Relevance of perceived teacher humor types for instruction and student learning. Soc. Psychol. Educ. 21, 805–825 (2018).

- 91. Bryant, J., Comisky, P. W., Crane, J. S. & Zillmann, D. Relationship between college teachers’ use of humor in the classroom and students’ evaluations of their teachers. J. Educ. Psychol. 72, 511–519 (1980).

- 92. Gorham, J. & Christophel, D. M. The relationship of teachers’ use of humor in the classroom to immediacy and student learning. Commun. Educ. 39, 46–62 (1990).

- 93. Cowan, N. & Barron, A. Cross-modal, auditory-visual Stroop interference and possible implications for speech memory. Percept. Psychophys. 41, 393–401 (1987).

- 94. Mulligan, N. W., Buchin, Z. L. & Zhang, A. L. The testing effect with free recall: Organization, attention, and order effects. J. Mem. Lang. 125, 104333 (2022).

- 95. Naveh-Benjamin, M., Guez, J., Hara, Y., Brubaker, M. S. & Lowenschuss-Erlich, I. The effects of divided attention on encoding processes under incidental and intentional learning instructions: Underlying mechanisms. Q. J. Exp. Psychol. 67, 1682–1696 (2014).

- 96. Same, M. R. et al. Evidence-Supported Interventions Associated with Black Students’ Education Outcomes: Findings from a Systematic Review of Research. (U.S. Department of Education, 2018).

- 97. Peterson, B., Bligh, R. & Robinson, D. H. Consistent federal education reform failure: A case study of Nebraska from 2010–2019. Mid-Western Educational Researcher 34, 6 (2022).

- 98. Brady, A. C., Griffin, M. M., Lewis, A. R., Fong, C. J. & Robinson, D. H. How scientific is educational psychology research? The increasing trend of squeezing causality and recommendations from non-intervention studies. Educ. Psychol. Rev. 35, 14 (2023).

- 99. Hsieh, P. P.-H. et al. Is educational intervention research on the decline? J. Educ. Psychol. 97, 523–529 (2005).

Associated Data

Data Availability Statement

All analyses were conducted using JASP. The data and the lecture materials are available at https://osf.io/3fx8d/?view_only=a2228ccf7c4540bb923ed9e54f7a0441.

The R code for the figures is available at 10.17605/OSF.IO/3FX8D or https://osf.io/3fx8d/?view_only=a2228ccf7c4540bb923ed9e54f7a0441.