Electronic health records (EHRs) have been widely adopted in medical practice, yet clinicians often struggle to find important clinical information, owing to factors such as “note bloat.”1 Outside medical records (OMRs) are often cluttered and redundant and lack document metadata, exacerbating challenges with patient data review.2 Poor EHR usability, which includes difficulty finding relevant information, correlates with clinician burnout.3

Optical character recognition (OCR) can extract text from scanned documents and create meaningful efficiencies, particularly in workflows that rely on faxed or nondigitized documents.4 Although OCR has been adopted in other industries to improve efficiency,5 health care institutions still disproportionately rely on paper documentation to exchange information.6 As a quaternary referral practice, our institution serves many patients who bring large volumes of OMRs. These documents typically contain information such as test results and clinical notes.

To address these challenges, we designed, implemented, and evaluated a clinician-centered OCR keyword search application for easier review of OMRs. The application provided a web interface that allowed clinicians to search and review portable document format and Health Level 7 International Clinical Document Architecture documents, the 2 main document formats of OMRs received by our institution.
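As an illustration only, keyword search over OCR output can be sketched in a few lines of Python. This is not the application evaluated here, whose implementation is not described in this report. The `Page` structure, the `search_pages` function, the file names, and the sample text are all hypothetical, and the sketch assumes the text has already been extracted by an OCR engine.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    """One page of OCR-extracted text (hypothetical structure)."""
    document: str  # hypothetical document name
    number: int    # 1-based page number
    text: str      # text assumed to come from an OCR engine


def search_pages(pages, keyword, context=30):
    """Return (document, page number, snippet) for each case-insensitive hit."""
    pattern = re.compile(re.escape(keyword), re.IGNORECASE)
    hits = []
    for page in pages:
        for match in pattern.finditer(page.text):
            # Keep a little surrounding text so the reader sees the hit in context.
            start = max(match.start() - context, 0)
            end = min(match.end() + context, len(page.text))
            snippet = " ".join(page.text[start:end].split())
            hits.append((page.document, page.number, snippet))
    return hits


# Illustrative, made-up page text; not real patient data.
pages = [
    Page("outside_records.pdf", 1, "Hemoglobin 9.8 g/dL. Ferritin low."),
    Page("outside_records.pdf", 2, "Follow-up visit. HEMOGLOBIN improved to 11.2 g/dL."),
    Page("referral_letter.pdf", 1, "Referred for evaluation of anemia."),
]

for document, number, snippet in search_pages(pages, "hemoglobin"):
    print(f"{document} p.{number}: {snippet}")
```

Returning the document name and page number with each snippet reflects the goal stated above: helping a reviewer jump to the relevant place in a large, unstructured set of records. A production system would also need to handle OCR misreads, for example through fuzzy matching, which this sketch omits.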

We hypothesized that using an OCR-based text search application would reduce review time and improve staff satisfaction. A survey-based evaluation of baseline workflows and a software intervention was used to measure possible time savings and changes in satisfaction with OMR review processes. A survey-based evaluation was chosen for its ease of implementation and because time log data across multiple applications (the intervention application and our EHR system) could not be reliably obtained and compared.

Methods

We conducted a prospective, survey-based evaluation of an intervention consisting of an internally developed, OCR-enabled web application that allowed staff to search OMRs. This was part of an ongoing quality improvement project and was deemed exempt by our institutional review board (#23-012069). Informed consent was waived given the low risk of participation with the intervention, the low risk of participant reidentification, and the quality improvement nature of the work. Survey recipients also had the option not to respond to the survey.

Our study population comprised clinical and allied health staff from multiple clinical specialties in Rochester, Minnesota, and Scottsdale, Arizona. The primary quality improvement initiative involved the Division of Hematology; a limited number of other participants were also included at their request to the project team. Preinterventional and postinterventional surveys were administered by email with a single follow-up reminder email. Preinterventional surveys were distributed and accepted between July 17 and July 28, 2023; postinterventional surveys were distributed and accepted between October 10 and October 17, 2023, 10 weeks later. The survey instruments included questions about respondents’ work roles, clinical department, practice location, and time spent on and satisfaction with outside records review, at both baseline and postintervention. Satisfaction was assessed using a visual customer satisfaction score, a survey instrument commonly used in business to quickly ascertain sentiment about a given product, process, or service.7,8 Response types were a combination of multiple choice and free text. The full survey instruments are provided in Supplementary Materials (available online at https://www.mcpdigitalhealth.org/). The survey was updated once during the study to include additional questions about quality and safety concerns arising from outside records review.

Results

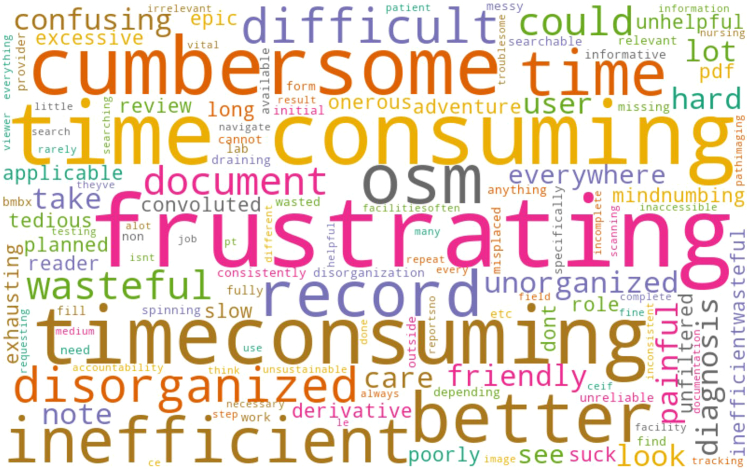

A total of 89 participants, or 17.1% of study invitees, responded to the preinterventional survey, whereas 71 participants, or 13.6% of invitees, responded to the postinterventional survey. By location, 97% of respondents were from the Rochester, Minnesota, practice site and 3% were from Scottsdale, Arizona. By department, 73% of respondents worked primarily in hematology, with the remainder working primarily in gastroenterology, rheumatology, cardiology, dermatology, endocrinology, oncology, plastic surgery, general internal medicine, and clinical genomics. Participants’ free-text descriptions of their experience with OMR review were summarized as a word cloud (Figure). The most common descriptions were “frustrating,” “time consuming,” and “cumbersome.” All feedback received was either negative or offered advice to improve efficiency. Specific issues discussed were difficulty in finding relevant information, an unintuitive EHR user interface, and lack of data organization. Across all respondents, the median review time per patient was 20 minutes. Quality and safety issues raised included the ordering of duplicate testing and unnecessary procedures due to challenges with finding previous results in OMRs.

Figure.

Word cloud based on the responses of 50 participants who were asked to describe their experience with manual review of outside medical records in 3 words.

In the postinterventional survey, 20 participants reported using the OCR search application and 13 answered follow-up questions concerning their experience. For those who did not use the application, the most common reasons were not being aware it existed, not having time to adopt a new application, or not having a need to review OMRs during the study period. Barriers to adoption included lack of integration in the EHR, unfamiliarity with the application, and slow loading times. Among participants who did use the application, user experience scores were significantly higher (P<.05) than those in the preinterventional survey (Table 1). Additionally, respondents to the follow-up questions reported a median time saved in review of 12 minutes per patient (Table 2). Respondents felt that the application better organized outside records for review and that keyword searches improved efficiency.
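The comparison of preinterventional and postinterventional scores used the Mann-Whitney U test (see the Table 1 footnote). The sketch below shows how such a test can be computed in plain Python. It is not the authors' analysis code: the function names are hypothetical, the scores are made-up values on the same 1-to-5 scale, and the P value comes from a tie-corrected normal approximation without continuity correction, which may differ from the exact method a statistics package would use for small samples.

```python
import math


def midranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, two-sided P) using a tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = list(x) + list(y)
    ranks = midranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for value in combined:
        counts[value] = counts.get(value, 0) + 1
    tie_term = sum(t**3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return min(u1, u2), 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return min(u1, u2), p_value


# Hypothetical satisfaction scores; NOT the study data.
baseline_scores = [1, 1, 2, 2, 2, 2, 3, 1, 2, 2]
post_scores = [4, 4, 5, 5, 4, 3]

u_statistic, p_value = mann_whitney_u(baseline_scores, post_scores)
print(f"U = {u_statistic}, P = {p_value:.4f}")
```

A rank-based test suits ordinal satisfaction scores because it does not assume the scores are normally distributed or that the distance between adjacent score levels is equal.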

Table 1.

User Experience With OCR Application Compared With a Preinterventional Baseline<sup>a</sup>

| Role | Baseline user experience score, median, IQR (n) | User experience score with OCR application, median, IQR (n) |
|---|---|---|
| All | 2, 1-2 (59) | 4, 4-5 (13)<sup>b</sup> |
| Providers | 2, 1-2 (24) | 5, 5-5 (5)<sup>c</sup> |
| Nursing | 2, 2-2 (14) | 4, 4-4 (5)<sup>c</sup> |
| Administration | 2, 1-3 (12) | 4, 3-4 (3)<sup>c</sup> |
| Other | 2, 2-3 (9) | No responses |

<sup>a</sup>User experience was measured using a visual customer satisfaction score and translated into a score from 1 to 5, where 1 denotes a poor experience. Median with interquartile range (IQR) is shown; sample size is in parentheses. Preinterventional and postinterventional experience scores were compared using the Mann-Whitney U test. Abbreviation: OCR, optical character recognition.

<sup>b</sup>P<.001.

<sup>c</sup>P<.05.

Table 2.

Time Saved per Patient With OCR Application Compared With a Preinterventional Baseline

| Role | Baseline review time in minutes, median, IQR (n) | Review time saved with OCR application in minutes, median, IQR (n) |
|---|---|---|
| All | 20, 15-30 (73) | 12, 8-16 (12) |
| Providers | 20, 15-28 (39) | 10, 7-11 (4) |
| Nursing | 30, 15-35 (13) | 25, 17-37 (4) |
| Administration | 16, 6-22 (12) | 10, 5-15 (4) |
| Other | 20, 15-31 (9) | No responses |

Median with interquartile range (IQR) is shown; sample size is in parentheses.

Abbreviation: OCR, optical character recognition.

Discussion

Survey respondents reported that the burden of reviewing OMRs was substantial owing to an inefficient and disorganized process. Similar challenges with OMRs have been described in the interhospital patient transfer literature.9,10 Negative sentiments were unanimous and likely reflected a generalizable issue across clinical departments. Among respondents who used it, the OCR search application was reported to save time and improve satisfaction. Results also highlighted an opportunity to prevent duplicate testing or unnecessary procedures.

The survey provided key insights for software adoption, such as the need for staff training resources, integration into the EHR, and shorter application wait times, all of which were subsequently pursued and incorporated into the application. A limitation of our study was a low response rate, which may bias results. Our decision to limit survey invitations to 1 initial email and 1 follow-up reminder was based on the initiative's overall goal to facilitate time savings, rather than create additional administrative responsibilities for staff. Changes to OMR collection processes aimed at decreasing the volume of records imported were also implemented near the end of our intervention period, which may have confounded our results, although our survey questions asked specifically about the impact of the OCR application.

By digitizing and consolidating OMRs, an OCR search application allows for future integration of large language models for tasks such as summarization, which we anticipate will yield additional improvements in efficiency. However, this requires further work to ensure summarizations are accurate and free of hallucinations11 and bias.

Conclusion

A manual review of OMRs is inefficient and adversely affects staff satisfaction. An intervention consisting of the use of an internally developed OCR search application increases both efficiency and satisfaction in the review process.

Potential Competing Interests

The authors report no competing interests.

Acknowledgments

The authors gratefully acknowledge Dr Kevin Ruff, Jamie Purdeu, and the Outside Materials Organization System program for their support, the Center for Digital Health for their technology and infrastructure support, and Dalio Philanthropies for the seed grant that enabled the initial development of the software evaluated in this study.

Footnotes

Supplemental material can be found online at https://www.mcpdigitalhealth.org/. Supplemental material attached to journal articles has not been edited, and the authors take responsibility for the accuracy of all data.


References

- 1. Rule A., Bedrick S., Chiang M.F., Hribar M.R. Length and redundancy of outpatient progress notes across a decade at an academic medical center. JAMA Netw Open. 2021;4(7) doi: 10.1001/jamanetworkopen.2021.15334.

- 2. Hartzband P., Groopman J. Off the record—avoiding the pitfalls of going electronic. N Engl J Med. 2008;358(16):1656–1658. doi: 10.1056/NEJMp0802221.

- 3. Melnick E.R., Dyrbye L.N., Sinsky C.A., et al. The association between perceived electronic health record usability and professional burnout among US physicians. Mayo Clin Proc. 2020;95(3):476–487. doi: 10.1016/j.mayocp.2019.09.024.

- 4. Hsu E., Malagaris I., Kuo Y.F., Sultana R., Roberts K. Deep learning-based NLP data pipeline for EHR-scanned document information extraction. JAMIA Open. 2022;5(2) doi: 10.1093/jamiaopen/ooac045.

- 5. Choy K.-W., Goswami S., Lewandowski D., Whiteman R. Fueling digital operations with analog data. McKinsey; 2024. https://www.mckinsey.com/capabilities/operations/our-insights/fueling-digital-operations-with-analog-data

- 6. Pylypchuk Y., Everson J. ONC Data Brief; 2023. Interoperability and Methods of Exchange among Hospitals in 2021; pp. 1–17. https://www.healthit.gov/data/data-briefs/interoperability-and-methods-exchange-among-hospitals-2021

- 7. CSAT and NPS® Score Cards (QSC): Qualtrics XM: the leading experience management software. https://www.qualtrics.com/support/social-connect/insights/csat-and-nps-score-cards-qsc/

- 8. Alismail S., Zhang H. 2018. The use of emoji in electronic user experience questionnaire: an exploratory case study. Paper presented at: Proceedings of the 51st Hawaii International Conference on System Sciences; January 6, 2018.

- 9. Herrigel D.J., Carroll M., Fanning C., Steinberg M.B., Parikh A., Usher M. Interhospital transfer handoff practices among US tertiary care centers: a descriptive survey. J Hosp Med. 2016;11(6):413–417. doi: 10.1002/jhm.2577.

- 10. Mueller S.K., Shannon E., Dalal A., Schnipper J.L., Dykes P. Patient and physician experience with interhospital transfer: a qualitative study. J Patient Saf. 2021;17(8):e752–e757. doi: 10.1097/PTS.0000000000000501.

- 11. Ji Z., Lee N., Frieske R., et al. Survey of hallucination in natural language generation. ACM Comput Surv. 2023;55(12):1–38. doi: 10.1145/357173.
