Abstract

k-mer-based methods are widely used in bioinformatics, but there are many gaps in our understanding of their statistical properties. Here, we consider the simple model where a sequence S (e.g., a genome or a read) undergoes a simple mutation process through which each nucleotide is mutated independently with some probability r, under the assumption that there are no spurious k-mer matches. How does this process affect the k-mers of S? We derive the expectation and variance of the number of mutated k-mers and of the number of islands (a maximal interval of mutated k-mers) and oceans (a maximal interval of nonmutated k-mers). We then derive hypothesis tests and confidence intervals (CIs) for r given an observed number of mutated k-mers, or, alternatively, given the Jaccard similarity (with or without MinHash). We demonstrate the usefulness of our results using a few select applications: obtaining a CI to supplement the Mash distance point estimate, filtering out reads during alignment by Minimap2, and rating long-read alignments to a de Bruijn graph by Jabba.

Keywords: confidence intervals, k-mers, MinHash, mutation process, sketching, Jaccard similarity

1. INTRODUCTION

k-mer-based methods have become widely used, for example, for genome assembly (Bankevich et al., 2012), error correction (Salmela et al., 2017), read mapping (Jain et al., 2017; Li, 2018), variant calling (Standage et al., 2019), genotyping (Sun and Medvedev, 2018; Denti et al., 2019), database search (Solomon and Kingsford, 2016; Harris and Medvedev, 2018), metagenomic sequence comparison (Wood and Salzberg, 2014), and alignment-free sequence comparison (Song et al., 2014; Ondov et al., 2016; Sarmashghi et al., 2019). A simple but influential recent example has been the Mash distance (Ondov et al., 2016), which uses the MinHash Jaccard similarity between the sets of k-mers in two sequences to estimate their average nucleotide divergence.

Mash has been applied to determine the appropriate reference genome for in silico analyses (Schwengers et al., 2019), for genome compression (Tang et al., 2019), for clustering genomes (Brown et al., 2016; Ondov et al., 2016), and for estimating evolutionary distance from low-coverage sequencing data sets (Sarmashghi et al., 2019). k-mer-based methods such as Mash are often faster and more practical than alignment-based methods. However, while the statistics behind sequence alignment are well understood (Gusfield, 1997), there are many gaps in our understanding of the statistics behind k-mer-based methods.

Consider the following simple mutation model and the questions it raises. There is a sequence of nucleotides S that undergoes a mutation process, through which every position is mutated with some constant probability r1, independently of other nucleotides. In this model, we assume that S does not have any repetitive k-mers and that a mutation always results in a unique k-mer (we say that there are no spurious matches). This mutation model captures both a simple model of sequence evolution (e.g., Jukes-Cantor) and a simple model of errors generated during sequencing, under the assumptions that k is large enough and the repeat content low enough to make the effect of spurious matches negligible. It is applied to analyze algorithms, and the predictions of the model often reflect performance on real biological sequences (Ondov et al., 2016; Sarmashghi et al., 2019).

How does this simple mutation model affect the k-mers of S? This question bears resemblance to, but is distinct from, questions studied by Lander and Waterman (1988) and in alignment-free sequence comparison (Song et al., 2014) (we elaborate on the connection in Section 1.1). Some aspects of this question have been previously explored (Miclotte et al., 2016; Salmela et al., 2017; Wang and Au, 2020), but some very basic ones have not. For example, what is the distribution of the number of mutated k-mers? The expectation of this distribution is known and trivial to derive, but we do not know its variance.

For another example, consider that the k-mers of S fall into mutated stretches (which, inspired by the Lander/Waterman statistics, we call islands) and nonmutated stretches (which we call oceans). What is the distribution of the number of these stretches? We do not even know the expected value. We answer these and other questions in this article, with most of the results captured in Table 1.

Table 1.

The Expectation, Variances, and Hypothesis Tests Derived in This Article

| Variable | Expectation | Variance | Hypothesis test / interval |
|---|---|---|---|
| Nmut | Lq | | |
| Jaccard | - | - | |
| MinHash Jaccard | - | - | See Theorem 6 |
| Coverage by exact regions | See Theorem 11 | See Theorem 11 | |

We use q as shorthand for $1-(1-r_1)^k$. Terms that are some function of r1 and k and are independent of L are represented by a placeholder; see the theorems for the full expressions.

We immediately apply our findings to derive hypothesis tests and confidence intervals (CIs) for r1 from the number of observed mutated k-mers, the Jaccard similarity, and the Jaccard similarity under MinHash. Previously, none was known, even though point estimates from these had been frequently used (e.g., Mash). To do this, we observe that our random variables are m-dependent (Hoeffding et al., 1948), which, roughly speaking, means that the only dependencies involve k-mers nearby in the sequence. We apply a technique called Stein's method (Ross, 2011) to approximate these as normal variables and thereby obtain hypothesis tests and CIs.

We demonstrate the usefulness of our results using a few select applications: obtaining a CI to supplement the Mash distance point estimate (Ondov et al., 2016), filtering out reads during alignment by Minimap2 (Li, 2018), and rating long-read alignments to a de Bruijn graph by Jabba (Miclotte et al., 2016). These examples illustrate how the use of the simple mutation model and the techniques from our article could have potentially improved several widely used tools. Our technique can also be applied to new questions as they arise. Our code for computing all the intervals in this article is freely available at https://github.com/medvedevgroup/mutation-rate-intervals.

1.1. Related work

Here we give more background on how our article relates to other previous work.

1.1.1. Lander/Waterman statistics

There is a natural analogy between the stretches of mutated k-mers and the intervals covered by random clones in the work of Lander and Waterman (1988). Each error can be viewed as a random clone with fixed length k, and thus, the islands in our study correspond to “covered islands” in theirs. However, their focus was to determine how much redundancy was necessary to cover all (or most) of a genomic sequence, which would correspond to how many nucleotide mutations are needed so that most of the k-mers in the sequence are mutated. In particular, they expect average coverage of the sequence by clones to be greater than 1, while in our study we expect the corresponding value to be much less than 1. Thus, the approximations applied in Lander and Waterman (1988) do not hold in our case.

1.1.2. Alignment-free sequence comparison

In alignment-free analysis, two sequences are compared by comparing their respective vectors of k-mer counts (Song et al., 2014). Two such vectors can be compared in numerous ways, for example, through the D2 similarity measure, which can be viewed as a generalization of the number of mutated k-mers we study in this article. However, in alignment-free analysis, both the underlying model and the questions studied are somewhat different. In particular, alignment-free analysis usually works with much smaller values of k (e.g., Wu et al., 2005). This means that most k-mers are present in a sequence, and k-mers will match between and within sequences even if they are in different locations and not evolutionarily related. Our model and questions assume that these spurious matches are background noise that can be ignored (which is justifiable for larger k), while they form a crucial component of alignment-free analysis. As a result, much of the work in measuring expectation and variance in metrics such as D2 is done with respect to the distribution of the original sequences, rather than after a mutation process (Reinert et al., 2009; Burden et al., 2014). Even when the mutation processes have been studied, they have typically been very different from the ones we consider here (e.g., the “common motif model”; Reinert et al., 2009).

Later works (Morgenstern et al., 2015; Röhling et al., 2020) did consider the simple mutation model that we study here, although still with a small k. Sequence similarity has also been estimated using the average common substring length between two sequences (Haubold et al., 2009). This is similar to the distribution of oceans that we study in our article, but the difference is that oceans are both left- and right-maximal, while the common substrings considered by Haubold et al. (2009) and others are only right-maximal.

2. PRELIMINARIES

Let L be a positive integer, and consider an interval of consecutive integers, which intuitively captures positions along a string. Let k be a positive integer. The k-span at position i is denoted as Ki and is the range of integers $[i, i+k-1]$ (inclusive of the endpoints). Intuitively, a k-span captures the interval of a k-mer. We think of the string as an interval of length $L+k-1$ that contains L k-spans. To simplify the statements of the theorems, we will in some places require a lower bound on L in terms of k, that is, that the interval is sufficiently long. We believe this covers most practical cases of interest, but, if necessary, the results can be rederived without this assumption.

We define the simple mutation model as a random process that takes as input two integers and and a real-valued nucleotide error rate

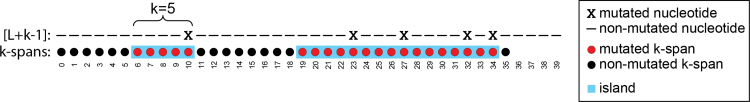

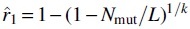

. For every position in , the process mutates it with probability r1. A mutation at position i is said to mutate the k-spans . We define Nmut as a random variable, which is the number of mutated k-spans. As shorthand notation, we use  to denote the probability that a k-span is mutated. Figure 1 shows an example.

to denote the probability that a k-span is mutated. Figure 1 shows an example.

FIG. 1.

An example of the simple mutation process. There are five nucleotides that are mutated (marked with an x). For example, the mutation at position 10 mutates the k-spans that contain it (marked in red). Note that an isolated nucleotide mutation (e.g., at position 10) can affect up to k k-spans, but nearby nucleotide mutations can affect the same k-span (e.g., the mutations of nucleotides at positions 23 and 27 both affect a common k-span). There are two islands (marked in blue) and three oceans.

The simple mutation model formalizes the notion of a string S undergoing mutations where there are no spurious matches, that is, there are no duplicate k-mers in S and a mutation always creates a unique k-mer. This is also closely related to assuming that S is random and k is large enough so that such spurious matches happen with low probability. The simple mutation model captures these scenarios by representing S as an interval of positions and each k-mer as a k-span.

We can partition the sequence of k-spans into alternating intervals called islands and oceans. The range $[i, j]$ is an island iff all of $K_i, \ldots, K_j$ are mutated, and the range is maximal, that is, $K_{i-1}$ and $K_{j+1}$ are either not mutated or out of bounds. Similarly, the range $[i, j]$ is an ocean iff none of $K_i, \ldots, K_j$ is mutated, and the interval is maximal. We define Nocean as a random variable, which is the number of oceans, and Nisl as the number of islands (Fig. 1).
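The definitions of k-spans, islands, and oceans translate directly into a few lines of code. The following is a minimal sketch rather than the authors' implementation: the function names are hypothetical, and positions are taken to be 0-based, which is an assumption of this example.

```python
import random

def mutated_kspans(mutated_positions, L, k):
    """Return a list X of length L; X[i] is True iff the k-span [i, i+k-1]
    contains at least one mutated position (0-based positions, an assumption)."""
    is_mut = [False] * (L + k - 1)
    for p in mutated_positions:
        is_mut[p] = True
    return [any(is_mut[i:i + k]) for i in range(L)]

def count_islands_oceans(X):
    """Count maximal runs of mutated k-spans (islands) and of
    nonmutated k-spans (oceans)."""
    islands = oceans = 0
    for i, x in enumerate(X):
        if i == 0 or x != X[i - 1]:  # a new maximal run starts at i
            if x:
                islands += 1
            else:
                oceans += 1
    return islands, oceans

def simulate_mutations(L, k, r1, rng):
    """Mutate each of the L + k - 1 positions independently with probability r1."""
    return [p for p in range(L + k - 1) if rng.random() < r1]

# Usage: one draw from the simple mutation model.
rng = random.Random(0)
X = mutated_kspans(simulate_mutations(1000, 21, 0.01, rng), 1000, 21)
n_mut = sum(X)
n_isl, n_ocean = count_islands_oceans(X)
```

Because islands and oceans alternate, the two counts returned by `count_islands_oceans` never differ by more than one.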

Consider two strings composed of a set of k-mers A and B, respectively, and let s be a non-negative integer. The Jaccard similarity between A and B is defined as $J = |A \cap B| / |A \cup B|$. The MinHash sketch $C^s$ of a set C is the set of the s smallest elements in C, under a uniformly random permutation hash function. The MinHash Jaccard similarity between A and B is defined as $\hat{J} = |A^s \cap B^s \cap (A \cup B)^s| / |(A \cup B)^s|$, or, equivalently, $\hat{J} = |A^s \cap B^s \cap (A^s \cup B^s)^s| / |(A^s \cup B^s)^s|$ (Broder, 1997). To transplant this to our model, we define the sketching simple mutation model as an extension of the simple mutation model, with an additional non-negative integer parameter s.

We follow the intuition of representing a string S with no spurious matches. For every position i, if Ki is nonmutated (respectively, mutated), we think of Ki as being shared (respectively, distinct) between the strings before and after the mutation process. Formally, let U be a universe that contains an element sharedi for every nonmutated Ki and, for every mutated Ki, contains two elements a-distincti and b-distincti. Let A be the set of all sharedi and a-distincti, and let B be the set of all sharedi and b-distincti. The output of the sketching simple mutation model is the MinHash Jaccard similarity between A and B. Note that the Jaccard similarity (without sketches) would, in our simple mutation model, be the ratio between the number of shared elements and the size of U, which is

$$\frac{L - N_{mut}}{L + N_{mut}}.$$
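The set construction of the sketching model can be illustrated in code. This is a minimal sketch under stated assumptions: SHA-256 stands in for the uniformly random permutation hash, the MinHash Jaccard is taken to be the Broder-style estimator $|A^s \cap B^s \cap (A \cup B)^s| / |(A \cup B)^s|$, and all function names are hypothetical.

```python
import hashlib

def h(x):
    """Deterministic stand-in for a random permutation hash (an assumption)."""
    return int.from_bytes(hashlib.sha256(str(x).encode()).digest()[:8], "big")

def sketch(C, s):
    """The s elements of C with the smallest hash values."""
    return set(sorted(C, key=h)[:s])

def jaccard(A, B):
    return len(A & B) / len(A | B)

def minhash_jaccard(A, B, s):
    """Broder-style MinHash Jaccard estimate with sketch size s > 0."""
    union_sketch = sketch(A | B, s)
    return len(sketch(A, s) & sketch(B, s) & union_sketch) / len(union_sketch)

def model_sets(X):
    """Build A and B from k-span mutation indicators X: a nonmutated k-span
    contributes one shared element, a mutated one contributes one distinct
    element to each of A and B."""
    A, B = set(), set()
    for i, mutated in enumerate(X):
        if mutated:
            A.add(("a-distinct", i))
            B.add(("b-distinct", i))
        else:
            A.add(("shared", i))
            B.add(("shared", i))
    return A, B

# Usage: L = 10 k-spans, 3 of them mutated, so J = (10 - 3) / (10 + 3).
X = [False, False, True, True, True, False, False, False, False, False]
A, B = model_sets(X)
exact = jaccard(A, B)
estimate = minhash_jaccard(A, B, 4)
```

When s is at least the size of the union, the sketches contain every element and the estimate coincides with the exact Jaccard similarity.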

Given a distribution with a parameter of interest p, an approximate $(1-\alpha)$-CI is an interval that contains p with limiting probability $1-\alpha$. Closely related, an approximate hypothesis test with significance level α is an interval that contains a random variable with limiting probability $1-\alpha$. We drop the word “approximate” in the rest of the article, for brevity. We use the notation $X \in x \pm y$ to mean $X \in [x-y, x+y]$. Given $0 < \alpha < 1$, we define $z_\alpha = \Phi^{-1}(1-\alpha/2)$, where $\Phi^{-1}$ is the inverse of the cumulative distribution function of the standard Gaussian distribution. Let $H(x, y, z)$ denote the hypergeometric distribution with population size x, y success states in population, and z trials. We define Fn in terms of this distribution. Both $z_\alpha$ and Fn can be easily evaluated in programming languages such as R or Python.
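As an illustration of how such quantities can be evaluated in Python, the sketch below uses only the standard library. It assumes the usual two-sided convention $z_\alpha = \Phi^{-1}(1-\alpha/2)$, and the function names are hypothetical; only the probability mass function of the hypergeometric distribution is shown, not Fn itself.

```python
from math import comb
from statistics import NormalDist

def z_alpha(alpha):
    """Two-sided standard normal critical value (assumed convention)."""
    return NormalDist().inv_cdf(1 - alpha / 2)

def hypergeom_pmf(x, y, z, a):
    """Probability of exactly a successes when drawing z items without
    replacement from a population of size x with y success states."""
    if a < 0 or a > z:
        return 0.0
    # math.comb returns 0 when the lower index exceeds the upper one.
    return comb(y, a) * comb(x - y, z - a) / comb(x, z)
```

For example, `z_alpha(0.05)` is approximately 1.96, the familiar critical value for a 95% interval.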

3. NUMBER OF MUTATED k-MERS: EXPECTATION AND VARIANCE

In this section, we look at the distribution of Nmut, that is, the number of mutated k-mers. The approach we take to this kind of analysis, which is standard, is to express Nmut as a sum of indicator random variables whose pairwise dependence can be derived. Let Xi be the random variable corresponding to whether or not the k-span Ki is mutated; that is, $X_i = 1$ iff at least one of its nucleotides is mutated. Hence, $\Pr[X_i = 1] = 1-(1-r_1)^k = q$. We can express $N_{mut} = \sum_i X_i$, where the sum ranges over the L k-spans. By linearity of expectation, we have

$$E[N_{mut}] = \sum_i E[X_i] = Lq. \tag{1}$$

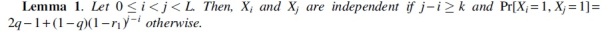

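The key to the computation of variance is the joint probability of two k-mers being mutated.

Proof. Assume $i < j$ and set $\delta = j - i$. If $\delta \ge k$, then Ki and Kj do not overlap, and therefore, the variables Xi and Xj are independent. Otherwise, consider three events. E1 is the event that at least one of the positions $j, \ldots, i+k-1$ (those shared by Ki and Kj) is mutated. E2 is the event that none of $j, \ldots, i+k-1$ is mutated and one of $i, \ldots, j-1$ is mutated. E3 is the event that none of $i, \ldots, i+k-1$ is mutated. Notice that the three events form a partition of the event space and so we can write

$$\Pr[X_i = 1, X_j = 1] = \sum_{t=1}^{3} \Pr[X_i = 1, X_j = 1 \mid E_t]\,\Pr[E_t] = \left(1-(1-r_1)^{k-\delta}\right) + \left(1-(1-r_1)^{\delta}\right)^2 (1-r_1)^{k-\delta} = 2q - 1 + (1-r_1)^{k+\delta}.$$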

We can now compute the variance using tedious but straightforward algebraic calculations. As we show in the following section, knowing the variance allows us to obtain a CI or do a hypothesis test based on Nmut.
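The variance can also be evaluated numerically without the closed form, by summing the variances of the indicators and the covariances of overlapping k-spans. The sketch below is an illustration under the simple mutation model, not the closed-form expression of the article; it uses the joint probability $2q - 1 + (1-r_1)^{k+\delta}$ for two k-spans at distance $\delta < k$, and the function names are hypothetical.

```python
def nmut_mean(L, k, r1):
    """E[N_mut] = L * q, with q = 1 - (1 - r1)^k."""
    return L * (1 - (1 - r1) ** k)

def nmut_variance(L, k, r1):
    """Var[N_mut] as the sum of Var[X_i] over the L k-spans plus twice the
    covariance of every pair of overlapping k-spans."""
    q = 1 - (1 - r1) ** k
    var = L * q * (1 - q)
    for d in range(1, min(k, L)):  # d = j - i < k: the two k-spans overlap
        joint = 2 * q - 1 + (1 - r1) ** (k + d)  # Pr[X_i = 1, X_j = 1]
        var += 2 * (L - d) * (joint - q * q)     # L - d pairs at distance d
    return var

# Usage: moments for L = 1000 k-spans, k = 21, r1 = 0.01.
mean = nmut_mean(1000, 21, 0.01)
variance = nmut_variance(1000, 21, 0.01)
```

The loop costs O(k) operations, and for k = 1 the result reduces to the binomial variance $L r_1 (1 - r_1)$, as expected when k-spans do not overlap.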

4. HYPOTHESIS TEST FOR M-DEPENDENT VARIABLES

Our derivations of hypothesis tests and CIs follow the strategy used for the binomials, which we now describe so as to provide intuition. In the case of estimating the success probability p of a binomial variable X when the number of trials L is known, a CI for p is called a binomial proportion CI (Casella and Berger, 2002). There are multiple ways to calculate such an interval, as described and compared in Brown et al. (2001), and we follow the approach of the Wilson score interval (Wilson, 1927). It works by first approximating the binomial with a normal distribution and then applying a standard score.

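The result is that $\Pr[-z_\alpha \le (X - Lp)/\sigma \le z_\alpha] = 1 - \alpha + \epsilon(L)$, where $\sigma = \sqrt{Lp(1-p)}$ and $\epsilon$ is a function such that $\lim_{L \to \infty} \epsilon(L) = 0$; recall that $z_\alpha = \Phi^{-1}(1-\alpha/2)$. This can be solved for X to obtain a hypothesis test $X \in Lp \pm z_\alpha \sigma$. This can be converted into a CI by finding all values of p for which $X \in Lp \pm z_\alpha \sigma$ holds. In the particular case of the binomial, a closed form solution is possible (Wilson, 1927), but, more generally, one can also find the solution numerically.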
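For the binomial case, the closed-form solution is the standard Wilson score interval. The sketch below implements the textbook formula with the standard library; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def wilson_interval(x, L, alpha=0.05):
    """Wilson score interval for the success probability p of a binomial
    with L trials and x observed successes."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    p_hat = x / L
    denom = 1 + z * z / L
    center = (p_hat + z * z / (2 * L)) / denom
    half = z * sqrt(p_hat * (1 - p_hat) / L + z * z / (4 * L * L)) / denom
    return center - half, center + half

# Usage: 50 successes out of 100 trials, 95% interval.
low, high = wilson_interval(50, 100)
```

Each endpoint p of the returned interval satisfies $|x - Lp| = z_\alpha \sqrt{Lp(1-p)}$, which is exactly the boundary of the hypothesis test inverted to obtain the CI.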

Although random variables such as Nmut are not binomial, they have a specific form of dependence between the trials, which allows us to apply a similar strategy. A sequence of L random variables is said to be m-dependent if there exists a bounded m (with respect to L) such that if $j - i > m$, then the set of variables with index at most i and the set of variables with index at least j are independent (Hoeffding et al., 1948). In other words, m-dependence says that the dependence between a sequence of random variables is limited to be within blocks of length m along the sequence.

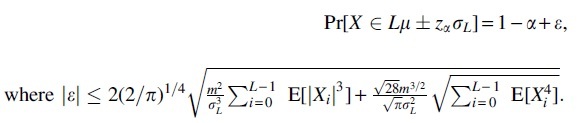

It is known that the sum of m-dependent random variables is asymptotically normal (Hoeffding et al., 1948) and this was previously used to construct heuristic hypothesis tests and CIs (Miao and Gastwirth, 2004). Even stronger, the rate of convergence of the sum of m-dependent variables to the normal distribution is known due to a technique called Stein's method [see theorem 3.5 in Ross (2011)]. (This technique applies even to the case where m is not bounded, but that will not be the case in our article.) Here, we apply Stein's method to obtain a formally correct hypothesis test together with a rate of convergence for a sum of m-dependent (not necessarily identically distributed) Bernoulli variables.

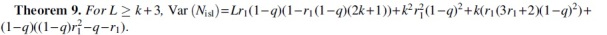

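Lemma 3. Let $X = \sum_i X_i$ be a sum of m-dependent Bernoulli random variables, where Xi has success probability pi. Let $\mu = E[X] = \sum_i p_i$, let $\sigma^2 = \mathrm{Var}(X)$, and let $0 < \alpha < 1$. Then X lies in $\mu \pm z_\alpha \sigma$ with probability $1 - \alpha$, up to an additive error term that is bounded explicitly in terms of L, m, and σ.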

Proof. Let $W = (X - \mu)/\sigma$ and let Z be a standard normal random variable. From theorem 3.6 in Ross (2011), we have an explicit upper bound on $d_W(W, Z)$, where dW(.,.) denotes the Wasserstein metric. Since Z is a standard normal random variable, we have the following standard inequality between the Kolmogorov and Wasserstein metrics [see, e.g., section 3 in Ross (2011)]:

$$d_K(W, Z) \le (2/\pi)^{1/4} \sqrt{d_W(W, Z)}.$$

As seen, m-dependence is well suited for dealing with variables in the simple mutation model. In most natural cases, the error term tends to 0 when $L \to \infty$, and Lemma 3 gives a hypothesis test at the desired significance level.

5. HYPOTHESIS TESTS FOR Nmut AND THE JACCARD SIMILARITY AND CIs FOR r1

There is a natural point estimator for r1 using Nmut, defined as $\hat{r}_1 = 1 - (1 - N_{mut}/L)^{1/k}$. This estimator is both the method of moments and the maximum likelihood estimator, meaning it has nice convergence properties as L increases (Wasserman, 2013). In this section, we extend it to a CI and a hypothesis test, both from Nmut and from the Jaccard similarity (with and without sketching). In the Nmut setting, the joint probabilities derived in Section 3 show that the Xi are m-dependent with $m = k - 1$, since k-spans that are at least k positions apart do not overlap. Hence, we can apply Lemma 3 to Nmut.

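Corollary 4. Let $0 < \alpha < 1$ and let $\sigma^2 = \mathrm{Var}(N_{mut})$. Then Nmut lies in the interval

$$Lq \pm z_\alpha \sigma$$

with probability $1 - \alpha$, up to an additive error term in which c is a constant that depends only on r1 and k. In particular, when r1 and k are independent of L, the error term vanishes as L grows.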

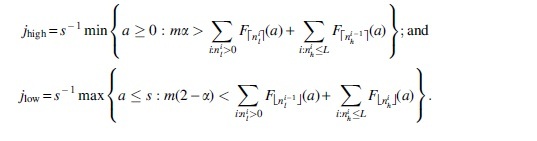

Corollary 4 gives the closed-form boundaries for a hypothesis test on Nmut. To compute a CI for q (equivalently for r1), we can numerically find the range of q for which the observed Nmut lies between $Lq - z_\alpha \sigma$ and $Lq + z_\alpha \sigma$. In other words, the upper bound on the range would be given by the value of q for which the observed Nmut is $Lq - z_\alpha \sigma$ and the lower bound by the value of q for which the observed Nmut is $Lq + z_\alpha \sigma$. These observations are made rigorous in Theorem 5, where σ is treated as a function of the parameter q.

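Theorem 5. For fixed k, r1, and α, for a given observed value of Nmut there exists an L large enough such that there exists a unique $q_{low}$ such that $N_{mut} = L q_{low} + z_\alpha \sigma(q_{low})$ and a unique $q_{high}$ such that $N_{mut} = L q_{high} - z_\alpha \sigma(q_{high})$, where $\sigma(q)$ denotes σ evaluated with parameter q, and the interval $[q_{low}, q_{high}]$ contains q with probability $1 - \alpha$, up to an additive error term in which c is a constant that depends only on r1 and k. In particular, for fixed r1 and k, the error term vanishes as L grows.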

Note that this theorem states that for sufficiently large L, there is a unique solution for the value of q for which the observed Nmut is $Lq + z_\alpha \sigma$ (and similarly a unique solution for the value of q for which the observed Nmut is $Lq - z_\alpha \sigma$). For small L, we have no such guarantee (although we believe the theorem holds more generally); to deal with this possibility, our software verifies if the solutions are indeed unique by computing the derivative inside the proof of Theorem 5 and checking if it is positive. If it is, then the proof guarantees the solutions to be unique; if it is not, our software reports this. However, during our validations, we did not find such a case to occur.
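The numerical inversion can be sketched as follows. This is an illustration and not the authors' software: the variance is computed by summing covariances of overlapping k-spans rather than from a closed form, the roots are found by plain bisection (assuming a sign change on the search interval and an observation strictly between 0 and L), and no uniqueness check is performed. All function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def nmut_variance(L, k, r1):
    """Var[N_mut]: indicator variances plus covariances of overlapping k-spans."""
    p = (1 - r1) ** k                      # Pr[a k-span is not mutated]
    var = L * p * (1 - p)
    for d in range(1, min(k, L)):
        var += 2 * (L - d) * p * ((1 - r1) ** d - p)
    return max(var, 0.0)

def hypothesis_test_bounds(L, k, r1, alpha=0.05):
    """Interval L*q +/- z_alpha * sigma for N_mut at mutation rate r1."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    q = 1 - (1 - r1) ** k
    sd = sqrt(nmut_variance(L, k, r1))
    return L * q - z * sd, L * q + z * sd

def bisect_root(f, lo, hi, iters=200):
    """Root of f on [lo, hi], assuming f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2

def r1_confidence_interval(n_obs, L, k, alpha=0.05, eps=1e-9):
    """CI for r1 given an observed number of mutated k-spans, 0 < n_obs < L."""
    # Lower end: the rate at which the observation sits on the upper test bound.
    r_low = bisect_root(
        lambda r: hypothesis_test_bounds(L, k, r, alpha)[1] - n_obs, eps, 1 - eps)
    # Upper end: the rate at which the observation sits on the lower test bound.
    r_high = bisect_root(
        lambda r: hypothesis_test_bounds(L, k, r, alpha)[0] - n_obs, eps, 1 - eps)
    return r_low, r_high

# Usage: 190 mutated k-spans observed out of L = 1000 with k = 21.
ci = r1_confidence_interval(190, 1000, 21)
```

Bisection is used here only to keep the example dependency-free; any bracketing root finder would serve the same purpose.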

We want to underscore how the difference between a CI and a hypothesis test is relevant in our case. A CI is useful when we have two sequences, one of which has evolved from the other, and we would like to estimate the mutation rate between them from the number of mutated k-spans. A hypothesis test is useful when we know the mutation rate a priori, for example, the error rate of a sequencing machine. In this case, we may want to know whether a read could have been generated from a putative genome location, given the number of observed mutated k-spans. We see both applications in Section 7.

In some cases, Nmut is not observed, but instead we observe another random variable $T = f(N_{mut})$, where f is a monotone function. For example, if $f(n) = (L - n)/(L + n)$, then T is the Jaccard similarity between the original and the mutated sequence (in our model). In this case, a hypothesis test is to check if T lies between $f(Lq + z_\alpha \sigma)$ and $f(Lq - z_\alpha \sigma)$. In addition to the Jaccard, Lu et al. (2017) describe 14 other variables that are a function of Nmut, L, and k. These are as follows: Anderberg, Antidice, Dice, Gower, Hamman, Hamming, Kulczynski, Matching, Ochiai, Phi, Russel, Sneath, Tanimoto, and Yule. We can apply our hypothesis test to any of these variables, as long as they are monotone with respect to Nmut.
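The transfer of a test from Nmut to a monotone function of it can be shown in a few lines. In this sketch, the function names are hypothetical and the bounds on Nmut are assumed to have been computed already (for instance as $Lq \pm z_\alpha \sigma$).

```python
def jaccard_from_nmut(n, L):
    """Jaccard similarity in the simple mutation model: (L - n) / (L + n)."""
    return (L - n) / (L + n)

def jaccard_test_bounds(n_low, n_high, L):
    """Map an interval [n_low, n_high] for N_mut to an interval for the
    Jaccard similarity. The map is decreasing in n, so the ends swap."""
    return jaccard_from_nmut(n_high, L), jaccard_from_nmut(n_low, L)

# Usage: an N_mut interval of [81, 299] with L = 1000.
j_low, j_high = jaccard_test_bounds(81, 299, 1000)
```

The same pattern applies to any other monotone function of Nmut, with the two ends swapped only when the function is decreasing.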

We can also use Lemma 3 as a basis for deriving a hypothesis test on the MinHash Jaccard similarity in the sketching model. The proof is more involved and interesting in its own right, but is left for the Supplementary Appendix due to space constraints.

Theorem 6. Consider the sketching simple mutation model with known parameters s, k, , r1, and output . Let and let be an integer. For , let and . Let

Then, assuming that r1 and k are independent of L, and ,

We can compute a CI for q from the MinHash Jaccard similarity in the same manner as with Corollary 4. Let the lower and upper bounds of the hypothesis test be defined as in Theorem 6, but explicitly parameterized by the value of q. Then we numerically find the smallest and the largest values of q for which the observed similarity is consistent with these bounds. The following theorem guarantees that the resulting interval is a CI for q.

Theorem 7. For fixed k, r1, , m, and a given observed value of , there exists an L large enough such that there exist unique intervals and such that , if and only if , and if and only if . Moreover, assuming that r1, k, and m are independent of L, we have

6. NUMBER OF ISLANDS AND OCEANS

In this section, we derive the expectation and variance of Nisl and Nocean and the hypothesis test based on them. For Nisl, we follow the same strategy as for Nmut, namely, to express Nisl as a sum of indicator random variables whose joint probabilities can be derived. Let us define a right border as a position i such that Ki is mutated and $K_{i+1}$ is not. We denote it by an indicator variable Bi, defined for every k-span position i except the last. Let us also say that there exists an end-of-string border iff the last k-span is mutated. We will denote this by an indicator variable Z. A right border is a position where an island ends and an ocean begins, and the end-of-string border exists if the last island is terminated not by an ocean but by the end of available nucleotides in the string to make a k-mer. The number of islands is then the number of borders, that is, $N_{isl} = Z + \sum_i B_i$.

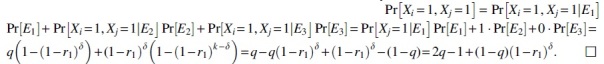

To compute the expectation, observe that Z is a Bernoulli variable with parameter q. For Bi, observe that the only way that Ki is mutated while $K_{i+1}$ is not is if position i is mutated and the positions $i+1, \ldots, i+k$ are not. Therefore, $\Pr[B_i = 1] = r_1 (1-r_1)^k$. By linearity of expectation,

$$E[N_{isl}] = E[Z] + \sum_i E[B_i] = q + (L-1)\, r_1 (1-r_1)^k. \tag{2}$$
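The expected number of islands, $q + (L-1)\, r_1 (1-r_1)^k$, can be checked against an exhaustive enumeration of all mutation patterns for tiny inputs. The sketch below is an illustration with hypothetical function names and 0-based positions (an assumption of this example).

```python
from itertools import product

def expected_islands(L, k, r1):
    """E[N_isl]: one end-of-string border with probability q, plus
    L - 1 possible right borders, each with probability r1 * (1 - r1)^k."""
    q = 1 - (1 - r1) ** k
    return q + (L - 1) * r1 * (1 - r1) ** k

def enumerated_expected_islands(L, k, r1):
    """Exact E[N_isl] by enumerating all 2^(L + k - 1) mutation patterns.
    Only feasible when L + k is very small."""
    total = 0.0
    for pattern in product([0, 1], repeat=L + k - 1):
        prob = 1.0
        for bit in pattern:
            prob *= r1 if bit else 1 - r1
        X = [any(pattern[i:i + k]) for i in range(L)]
        # An island ends at i if K_i is mutated and K_{i+1} is not,
        # or if K_i is the last k-span and is mutated.
        islands = sum(1 for i in range(L)
                      if X[i] and (i == L - 1 or not X[i + 1]))
        total += prob * islands
    return total

# Usage: compare the formula with the enumeration on a toy instance.
formula_value = expected_islands(4, 2, 0.3)
exact_value = enumerated_expected_islands(4, 2, 0.3)
```

The enumeration grows exponentially and is meant only as a sanity check of the formula on small cases.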

Next, we derive dependencies between border variables and use them to compute the variance.

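Proof. Assume without loss of generality that $i < j$. Observe that when $j - i > k$, the positions that have an effect on Bi (i.e., $i, \ldots, i+k$) and those that have an effect on Bj (i.e., $j, \ldots, j+k$) are disjoint. Hence, Bi and Bj are independent in this case. When $1 \le j - i \le k$, Bi and Bj cannot co-occur. This is because $B_i = 1$ implies that position j is not mutated, while $B_j = 1$ implies that it is. By the same logic, Z is independent of all Bi for which the start of the last k-span lies more than k positions after i. For the remaining case, $B_i = 1$ implies that positions $i+1, \ldots, i+k$ are not mutated. Therefore, there is an end-of-string border when $B_i = 1$ iff one of the positions of the last k-span beyond $i+k$ is mutated. Thus, writing d for the distance from i to the start of the last k-span,

$$\Pr[B_i = 1, Z = 1] = r_1 (1-r_1)^k \left(1 - (1-r_1)^{d-1}\right).$$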

Lemma 8 also shows that the border variables are m-dependent, with $m = k$. Therefore, a hypothesis test on Nisl can be obtained as a corollary of Lemma 3.

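Corollary 10. Fix r1 and let $0 < \alpha < 1$. Then, the probability that $N_{isl} \in E[N_{isl}] \pm z_\alpha \sqrt{\mathrm{Var}(N_{isl})}$ is $1 - \alpha$, up to an additive error term in which c is a constant that depends only on r1 and k. In particular, when r1 and k are independent of L, the error term vanishes as L grows.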

Unlike for Corollary 4, it is not as straightforward to invert this hypothesis test into a CI for r1, since the endpoints of the interval of Nisl are not monotone in r1. We therefore do not pursue this direction here. The derivation of the expectation and variance for Nocean is analogous and left for the Supplementary Appendix (Theorem 12). Observe that $|N_{isl} - N_{ocean}| \le 1$, so, as expected, the expectation and variance are identical to those of Nisl in the higher order terms. Corollary 10 also holds for the case that n is the observed number of oceans, if we just replace Nisl with Nocean.

An immediate application of Nocean is to compute a hypothesis test for the coverage by exact regions, a variable earlier applied to score read mappings in Miclotte et al. (2016). It is the fraction of positions in the sequence that lie in k-spans that are in oceans. The total number of bases in all the oceanic k-spans is the number of nonmutated k-spans plus, for each ocean, an extra $k - 1$ “starter” bases. We can then write the following:

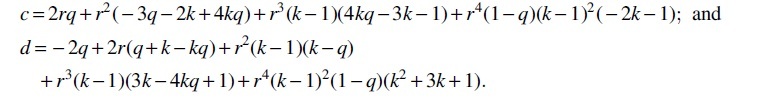
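The counting argument for the coverage by exact regions can be made concrete. The sketch below computes it in two ways: from the number of nonmutated k-spans and oceans, and directly by marking covered positions. It is an illustration with hypothetical function names; the input X must come from an actual mutation pattern, since the counting argument relies on distinct oceans covering disjoint positions.

```python
def coverage_by_exact_regions(X, k):
    """Fraction of the L + k - 1 positions lying in nonmutated k-spans,
    computed as (nonmutated k-spans + (k - 1) per ocean) / (L + k - 1).
    X[i] is True iff k-span i is mutated."""
    L = len(X)
    nonmutated = L - sum(X)
    # An ocean starts wherever a nonmutated k-span follows a mutated one
    # (or sits at the very beginning).
    oceans = sum(1 for i in range(L) if not X[i] and (i == 0 or X[i - 1]))
    return (nonmutated + (k - 1) * oceans) / (L + k - 1)

def coverage_direct(X, k):
    """Same quantity, by explicitly marking every covered position."""
    L = len(X)
    covered = set()
    for i in range(L):
        if not X[i]:
            covered.update(range(i, i + k))
    return len(covered) / (L + k - 1)

# Usage: L = 10, k = 3, a single mutation at position 4 mutates k-spans 2..4.
X = [False, False, True, True, True, False, False, False, False, False]
c = coverage_by_exact_regions(X, 3)
```

In this example only the mutated position itself is left uncovered, so 11 of the 12 positions are covered.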

We can use the expectations and variances of Nmut [Eq. (1) and Theorem 2] and Nocean (Theorem 12) to derive the expectation and variance of the coverage by exact regions:

Theorem 11. and, for , , where

Then, observing that the coverage by exact regions is a linear combination of m-dependent variables and hence itself m-dependent, we can apply Lemma 3 and obtain a corresponding hypothesis test when r1 and k are independent of L.

7. EMPIRICAL RESULTS AND APPLICATIONS

In this section, we evaluate the accuracy of our results and demonstrate several applications. A sanity check validation of the correctness of our formulas is shown in Supplementary Appendix Table SA1; however, most of the expectation and variance formulas are evaluated indirectly through the accuracy of the corresponding CIs. We focus the evaluation on accuracy rather than run time, since calculating the CI took no more than a few seconds for most cases (the only exception was for sketch sizes of 100k or more, where the evaluation took on the order of minutes). Memory use was negligible in all cases.

7.1. CIs based on Nmut

In this section, we evaluate the accuracy of the CIs produced by Corollary 4 (other CIs will be evaluated indirectly through applications). We first simulate the simple mutation model to measure the accuracy, shown in the left three groups (i.e., L = 100, 1000, and 10,000) of Table 2. We observe that the predicted CIs are very accurate when L is large, and remain accurate for some of the smaller values of k and r1 when L is smaller. Similar results hold for the settings reported in Supplementary Appendix Tables SA2 and SA3. The remainder of the cases had a CI that was too conservative; these are also the cases with some of the smallest variances (Supplementary Appendix Table SA1) and we suspect that, similar to the case of the binomial, the normal approximation of m-dependent variables deteriorates with very small variances. However, further investigation is needed.

Table 2.

The Accuracy of the Confidence Intervals for r1 Predicted by Corollary 4 for Various Values of L, r1, and k (the First Three Groups) and for the Escherichia coli Sequence (the Fourth Group)

| | L = 100 | | | | L = 1000 | | | | L = 10,000 | | | | Escherichia coli | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| r1 = | 0.001 | 0.01 | 0.1 | 0.2 | 0.001 | 0.01 | 0.1 | 0.2 | 0.001 | 0.01 | 0.1 | 0.2 | 0.001 | 0.01 | 0.1 | 0.2 |
| | 0.91 | 1.00 | NA | NA | 0.95 | 0.96 | NA | NA | 0.95 | 0.95 | NA | NA | 0.95 | 0.95 | NA | NA |
| | 0.91 | 1.00 | 1.00 | NA | 0.94 | 0.95 | 0.94 | NA | 0.95 | 0.95 | 0.96 | NA | 0.95 | 0.95 | 0.95 | NA |
| | 0.91 | 0.96 | 1.00 | 1.00 | 0.93 | 0.95 | 0.95 | 0.95 | 0.95 | 0.94 | 0.95 | 0.95 | 0.95 | 0.94 | 0.93 | 0.94 |

NA indicates that the experiment was not run; for the first three groups, we only ran on a subset of parameter combinations (the others were not of interest), while for E. coli, we ran with the same range of values of r1 and k as in the first three groups. In each cell, we report the fraction of 10,000 replicates for which the true r1 falls into the predicted CI. For the E. coli sequence, we used the strain Shigella flexneri Shi06HN159.

CI, confidence interval.

Next, we investigate how well our predictions hold up when we simulate mutations along a real genome, where we can only observe the set of k-mers without their positions in the genome (as in alignment-free sequence comparison). We start with the Escherichia coli genome sequence and, with probability r1, for every position, flip the nucleotide to one of three other nucleotides, chosen with equal probability. Let A and B be the set of distinct k-mers in E. coli before and after the mutation process, respectively. We derive L and n from A and B and then calculate the 95% CI for r1 under the simple mutation model (Corollary 4) by plugging in n for Nmut. The rightmost group in Table 2 shows the accuracy of these CIs. We see that the simple mutation model we consider in this article is a good approximation to mutations along a real genome such as E. coli.

7.2. Mash distance

The Mash distance (Ondov et al., 2016) (and its follow-up modifications; Ondov et al., 2019; Sarmashghi et al., 2019) first measures the MinHash Jaccard similarity j between two sequences and then uses a formula to give a point estimate for r1 under the assumptions of the sketching simple mutation model. While a hypothesis test was described in Ondov et al. (2016), it was only for the null model where the two sequences were unrelated. Theorem 6 allows us instead to give a CI for r1, based on the MinHash Jaccard similarity, in the sketching simple mutation model.
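The relationship between a Jaccard similarity j and a point estimate of r1 can be sketched as follows. Setting Nmut to its expectation Lq in the model gives $j = (1-q)/(1+q)$, which can be inverted for r1. The Mash distance formula used below, $-\frac{1}{k} \ln \frac{2j}{1+j}$, is quoted from Ondov et al. (2016) as recalled here and should be checked against that paper; the function names are hypothetical, and both functions assume $j > 0$.

```python
from math import log

def jaccard_to_r1(j, k):
    """Invert j = (1 - q) / (1 + q), with q = 1 - (1 - r1)^k, for r1."""
    return 1 - (2 * j / (1 + j)) ** (1 / k)

def mash_distance(j, k):
    """Mash distance -(1/k) * ln(2j / (1 + j)); requires j > 0."""
    return -log(2 * j / (1 + j)) / k

# Usage: the Jaccard similarity expected for r1 = 0.05 and k = 21.
q = 1 - 0.95 ** 21
j = (1 - q) / (1 + q)
r1_hat = jaccard_to_r1(j, 21)
d_mash = mash_distance(j, 21)
```

The two estimates are related by $\hat{r}_1 = 1 - e^{-D}$, so they nearly coincide for small mutation rates and drift apart as the rate grows.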

Table 3 reproduces a subset of Table 1 from Ondov et al. (2016), but using CIs given by Theorem 6. For most cases, the predicted CIs are highly accurate, with an error of at most two percentage points. The three exceptions happen when s is small and q is large; in such cases, the predicted CI is too conservative (i.e., too large). In Supplementary Appendix Table SA4, we also tested the accuracy with a real E. coli genome by letting A and B be the set of distinct k-mers in the genome before and after mutations, respectively, deriving the model parameters from A and B, and applying Theorem 6 with those values. The accuracy is very similar to that in the simple mutation model, demonstrating that for a genome such as E. coli, the simple mutation model is a good approximation.

Table 3.

The Confidence Intervals Predicted by Theorem 6 and Their Accuracy

| Sketch size | r1 = 0.05, q = 0.659 | | | r1 = 0.15, q = 0.967 | | | r1 = 0.25, q = 0.998 | | |
|---|---|---|---|---|---|---|---|---|---|
| | Accuracy | Low | High | Accuracy | Low | High | Accuracy | Low | High |
| 100 | 0.97 | 0.037 | 0.069 | 1.00 | 0.103 | 0.303 | 1.00 | 0.119 | 1.000 |
| 1000 | 0.96 | 0.046 | 0.055 | 0.97 | 0.133 | 0.174 | 1.00 | 0.193 | 0.375 |
| 10,000 | 0.95 | 0.049 | 0.051 | 0.96 | 0.144 | 0.156 | 0.96 | 0.232 | 0.277 |
| 100,000 | 0.95 | 0.049 | 0.051 | 0.95 | 0.148 | 0.152 | 0.96 | 0.243 | 0.257 |
| 1,000,000 | 0.94 | 0.050 | 0.050 | 0.95 | 0.149 | 0.151 | 0.96 | 0.247 | 0.253 |

For each sketch size and r1 value, we show the fraction of trials for which the true r1 falls within the predicted CI. The reported CI corresponds to applying Theorem 6 with . Here, , , , and the sketch size s and r1 are varied as shown. The number of trials for each cell is 1000, and for Theorem 6.

7.3. Filtering out reads during alignment to a reference

Minimap2 is a widely used long-read aligner (Li, 2018). The algorithm first picks certain k-mers in a read as seeds. Then, it identifies a region of the read and a region of the reference that potentially generated it [called a chain in Li (2018)]. Let n be the number of seeds in the read and let be the number of those that exist in the reference region. Minimap2 models the error rate of the k-mers as a homogeneous Poisson process and estimates the sequence divergence between the read and the reference as (which is the maximum likelihood estimator in that model). If is above a threshold, the alignment is abandoned. Li (2018) observes that, due to invalid assumptions, is only approximate and can be biased, but nevertheless maintains a good correlation with the true divergence.

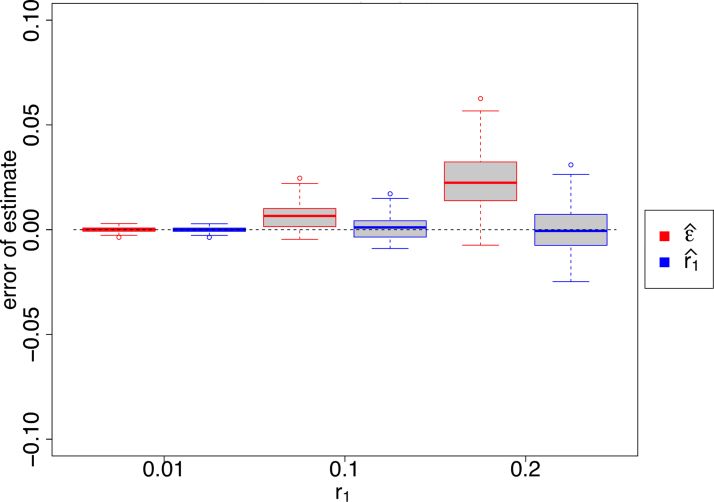

Using our results, we can obtain a more accurate estimate of r1. The situation is very similar to estimating r1 from , except that only a subset of k-spans are being “tracked.” Therefore, the maximum likelihood estimator for q is and for r1 is  . Figure 2 and Supplementary Appendix Figure SA3 show the relative performance of the two estimators ( and ) for sequences of different lengths, with our much closer to the simulated rate than in both cases.
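The contrast between the two estimators can be sketched as follows. This is a hedged illustration rather than the code behind Figure 2. It assumes that the Minimap2 estimate has the form (1/k) ln(n/m) for n seeds of which m are found in the chain, as described in Li (2018), and that the estimator under the simple mutation model inverts q = 1 - (1 - r1)^k using the observed fraction of mutated seeds. The function names are hypothetical.

```python
import math

def poisson_divergence(n_seeds, n_matched, k):
    """Poisson-model estimate (assumed form): (1/k) * ln(n / m)."""
    if n_matched == 0:
        return math.inf  # undefined when no seed matches; returned as infinity here
    return math.log(n_seeds / n_matched) / k

def simple_model_divergence(n_seeds, n_matched, k):
    """Estimate of r1 from the fraction of mutated seeds, via q = 1 - (1 - r1)**k."""
    q_hat = 1 - n_matched / n_seeds        # observed fraction of mutated seeds
    return 1 - (1 - q_hat) ** (1 / k)

# Illustrative numbers (assumption): k = 15 and true r1 = 0.1, so about
# 0.9**15 = 20.6% of the seeds are expected to remain nonmutated.
n, m, k = 1000, 206, 15
print(poisson_divergence(n, m, k), simple_model_divergence(n, m, k))
```

Because -ln(x) is at least 1 - x for x in (0, 1], the Poisson-based value is never below the simple-model value for the same counts, and the two agree closely only when the divergence is small.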

FIG. 2.

Estimates of sequence divergence as done by Minimap2 () and by us (). Reads are simulated from a random 10 kbp sequence by introducing mutations at the given r1 rate. For each r1 value, 100 reads are used. As in Li (2018), we use and, using a random hash function, identify as seeds the k-mer minimizers, one for every window of 25 k-mers. In the case when is undefined, we set .

7.4. Evaluating an alignment of a long read to a graph

Jabba (Miclotte et al., 2016) is an error-correction algorithm for long-read data. At one stage, the algorithm evaluates whether a read is likely to have originated from a given location in the reference. Because Jabba's reference is a de Bruijn graph and not a string, it uses the specialized score for the evaluation. In this scenario, the mutation process corresponds to sequencing errors at a known error rate r1 and the question is whether the read is likely to have arisen through this process from the given location of the reference. The authors assume the simple mutation model and derive the expected score as  . They then give a lower rating to reads with a score that has “significant deviation” from this expected value. It is not clear how much of a deviation is deemed to be significant or how it was calculated.

Theorem 11, which gives and , would have allowed Miclotte et al. (2016) to take a more rigorous approach. It shows that the expectation computed by Miclotte et al. (2016) is correct only in the limit as , while our formula is exact and closed-form. More substantially, we can make the determination of “significant deviation” more rigorous.

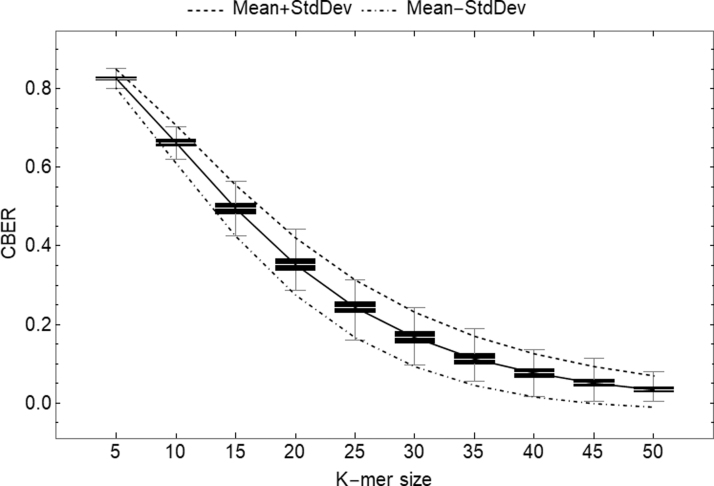

We regenerated Figure 2 from Miclotte et al. (2016), using the same range of values for k [called m in Miclotte et al. (2016)] and an error rate of as in Miclotte et al. (2016) and plotted the 95% CI as follows: . Figure 3 demonstrates that this range would have done a good job at capturing most of the generated reads. Table 4 gives the number of values that fall inside of the 95% CI when using a simple mutation process with the same for sequences of length 10,000 for 5000 replicates, with k ranging from 5 to 50 in steps of 5, depicting good agreement between simulation and Theorem 11.

FIG. 3.

Box and whisker plot of scores for 5000 replicates of random strings of length 10,000 nt, with mutations introduced at a rate of . The solid black line corresponds to the empirical median of , while the dashed top line corresponds to and the bottom dot-dashed line corresponds to , both computed from Theorem 11.

Table 4.

A Total of 5000 Sequences, Each of Length 10,000 nt, Underwent a Simple Mutation Process with Mutation Probability

| k-mer size | 5 | 10 | 15 | 20 | 25 | 30 | 35 | 40 | 45 | 50 |
|---|---|---|---|---|---|---|---|---|---|---|
| Fraction inside CI | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 | 0.94 | 0.94 | 0.95 | 0.93 |

The fraction of associated scores that fell inside the 95% CI as determined by Theorem 11 is shown.

8. CONCLUSION

The simple mutation model has been used broadly to model either biological mutations or sequencing errors. However, its use has usually been limited to deriving the expectations of random variables, for example, the expected number of mutated k-mers. In this article, we take this a step further and show that the dependencies between indicator variables in this model (e.g., whether a k-mer at a given position is mutated) are often easy to derive and are limited to nearby locations. This limited dependency allows us to show that the sum of these indicators is approximately normal. As a result, we are able to obtain hypothesis tests and CIs in this model.

The most immediate application of our results is likely the computation of a CI for average nucleotide identity from the MinHash Jaccard similarity. Previously, only a point estimate was available, using Mash. However, we hope that our technique can be applied by others to random variables that we did not consider. All that is needed is to derive the joint probability of the indicator variables and compute the variance. Computing the variance by hand is tedious and error-prone but can be done with the aid of software such as Mathematica.

We test the robustness of the simple mutation model in the presence of spurious matches by using a real E. coli sequence. However, we do not explore the robustness with respect to violations such as the presence of indels (which result in different string lengths) or the presence of more repeats than in E. coli. This type of robustness has already been explored in other articles that use the simple mutation model (Fan et al., 2015; Ondov et al., 2016; Sarmashghi et al., 2019). Nevertheless, exploring the robustness of our CIs in downstream applications remains important future work.

On a more technical note, it would be interesting to derive tighter error bounds for our CIs, both in terms of more tightly capturing the dependencies on L, r1, and k, and in terms of accurately tracking constants. The error bound stated in Lemma 3 is likely not tight in either respect, due to the inherent loss when transferring between the Wasserstein and Kolmogorov metrics and due to loose inequalities within the proof of Theorem 3.5 in Ross (2011).

Ideally, tight error bounds would give the user a way to know, without simulations, when the CIs are accurate, in the same way that we know that the Wilson score interval for a binomial will be inaccurate when is low. For example, it would be useful to better theoretically explain and predict which values in Table 2 deviate from 0.95.
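For reference, the Wilson score interval mentioned above can be computed as in the following sketch. It is the standard textbook formula for a binomial proportion (Wilson, 1927; Brown et al., 2001), not one of the CIs derived in this article, and the function name is hypothetical.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 for about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width

print(wilson_interval(50, 100))
print(wilson_interval(1, 10))
```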

Another practical issue is with the implementation of the algorithm to compute a CI for q from . Theorem 7 guarantees that the algorithm is correct as L goes to infinity. However, the user of the algorithm will not know if L is large enough for the CI to be correct. There are several heuristic ways to check this, which we have implemented in the software: a short simulation to check the true coverage of the reported CI, a check that the sets in the definitions of and are not empty, and a check that and are monotonic with respect to q in the range .
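The general idea behind the first heuristic, a short simulation that checks the empirical coverage of a reported CI, can be sketched as follows. This is not the implementation in the software. It assumes a sequence of L + k - 1 nucleotides (hence L k-mers) under the simple mutation model, and `ci_fn` is a hypothetical placeholder for whichever CI procedure is being checked, taking the observed number of mutated k-mers, L, and k.

```python
import random

def simulate_n_mut(L, k, r1, rng):
    """Count mutated k-mers among the L k-mers of a sequence of L + k - 1 positions."""
    mutated_pos = [rng.random() < r1 for _ in range(L + k - 1)]
    window = sum(mutated_pos[:k])          # mutated positions in the first k-span
    count = 1 if window > 0 else 0
    for i in range(1, L):
        # Slide the k-span one position to the right.
        window += mutated_pos[i + k - 1] - mutated_pos[i - 1]
        count += 1 if window > 0 else 0
    return count

def empirical_coverage(ci_fn, L, k, r1, trials, seed=0):
    """Fraction of simulated trials in which ci_fn's interval contains r1."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        low, high = ci_fn(simulate_n_mut(L, k, r1, rng), L, k)
        hits += 1 if low <= r1 <= high else 0
    return hits / trials

# Trivial placeholder CI (assumption): the whole unit interval always covers r1.
print(empirical_coverage(lambda n_mut, L, k: (0.0, 1.0), 1000, 21, 0.05, 50))
```

A coverage well below the nominal level for the parameters of interest would suggest that L is too small for the asymptotic guarantee to be relied on.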

ACKNOWLEDGMENTS

P.M. is grateful to Kirsten E. Eilertson and Benjamin Shaby for discussion.

AUTHOR DISCLOSURE STATEMENT

The authors declare they have no conflicting financial interests.

FUNDING INFORMATION

P.M. was supported by NSF awards 1453527 and 1439057. A.B. was supported, in part, by the NSF grant CCF-1850443. This material is based upon work supported by the National Science Foundation under Grant No. 1664803.

REFERENCES

- Bankevich, A., Nurk, S., Antipov, D., et al. 2012. SPAdes: A new genome assembly algorithm and its applications to single-cell sequencing. J. Comput. Biol. 19, 455–477.

- Broder, A.Z. 1997. On the resemblance and containment of documents, pp. 21–29. In: Proceedings. Compression and Complexity of SEQUENCES 1997 (Cat. No. 97TB100171). IEEE, Salerno, Italy.

- Brown, C.T., Olm, M.R., Thomas, B.C., and Banfield, J.F. 2016. Measurement of bacterial replication rates in microbial communities. Nat. Biotechnol. 34, 1256.

- Brown, L.D., Cai, T.T., and DasGupta, A. 2001. Interval estimation for a binomial proportion. Stat. Sci. 16, 101–117.

- Burden, C.J., Leopardi, P., and Forêt, S. 2014. The distribution of word matches between Markovian sequences with periodic boundary conditions. J. Comput. Biol. 21, 41–63.

- Casella, G., and Berger, R.L. 2002. Statistical Inference, Vol. 2. Duxbury Pacific Grove, CA.

- Denti, L., Previtali, M., Bernardini, G., et al. 2019. MALVA: Genotyping by Mapping-free ALlele detection of known VAriants. iScience 18, 20–27.

- Fan, H., Ives, A.R., Surget-Groba, Y., and Cannon, C.H. 2015. An assembly and alignment-free method of phylogeny reconstruction from next-generation sequencing data. BMC Genomics 16, 522.

- Gusfield, D. 1997. Algorithms on Strings, Trees and Sequences: Computer Science and Computational Biology. Cambridge University Press.

- Harris, R.S., and Medvedev, P. 2020. Improved representation of sequence bloom trees. Bioinformatics 36, 721–727.

- Haubold, B., Pfaffelhuber, P., Domazet-Loso, M., and Wiehe, T. 2009. Estimating mutation distances from unaligned genomes. J. Comput. Biol. 16, 1487–1500.

- Hoeffding, W., Robbins, H., et al. 1948. The central limit theorem for dependent random variables. Duke Math. J. 15, 773–780.

- Jain, C., Dilthey, A., Koren, S., et al. 2017. A fast approximate algorithm for mapping long reads to large reference databases, pp. 66–81. In: International Conference on Research in Computational Molecular Biology. Ed: S. Cenk Sahinalp. Springer, Hong Kong.

- Lander, E.S., and Waterman, M.S. 1988. Genomic mapping by fingerprinting random clones: A mathematical analysis. Genomics 2, 231–239.

- Li, H. 2018. Minimap2: Pairwise alignment for nucleotide sequences. Bioinformatics 34, 3094–3100.

- Lu, Y.Y., Tang, K., Ren, J., et al. 2017. Cafe: Accelerated alignment-free sequence analysis. Nucleic Acids Res. 45(W1), W554–W559.

- Miao, W., and Gastwirth, J.L. 2004. The effect of dependence on confidence intervals for a population proportion. Am. Stat. 58, 124–130.

- Miclotte, G., Heydari, M., Demeester, P., et al. 2016. Jabba: Hybrid error correction for long sequencing reads. Algorithms Mol. Biol. 11, 1–12.

- Morgenstern, B., Zhu, B., Horwege, S., and Leimeister, C.A. 2015. Estimating evolutionary distances between genomic sequences from spaced-word matches. Algorithms Mol. Biol. 10, 5.

- Ondov, B.D., Starrett, G.J., Sappington, A., et al. 2019. Mash Screen: High-throughput sequence containment estimation for genome discovery. Genome Biol. 20, 232.

- Ondov, B.D., Treangen, T.J., Melsted, P., et al. 2016. Mash: Fast genome and metagenome distance estimation using MinHash. Genome Biol. 17, 132.

- Reinert, G., Chew, D., Sun, F., and Waterman, M.S. 2009. Alignment-free sequence comparison (i): Statistics and power. J. Comput. Biol. 16, 1615–1634.

- Röhling, S., Linne, A., Schellhorn, J., et al. 2020. The number of k-mer matches between two DNA sequences as a function of k and applications to estimate phylogenetic distances. PLoS One 15, e0228070.

- Ross, N. 2011. Fundamentals of Stein's method. Probab. Surv. 8, 210–293.

- Salmela, L., Walve, R., Rivals, E., and Ukkonen, E. 2017. Accurate self-correction of errors in long reads using de Bruijn graphs. Bioinformatics 33, 799–806.

- Sarmashghi, S., Bohmann, K., Gilbert, M.T.P., et al. 2019. Skmer: Assembly-free and alignment-free sample identification using genome skims. Genome Biol. 20, 1–20.

- Schwengers, O., Hain, T., Chakraborty, T., and Goesmann, A. 2019. Referenceseeker: Rapid determination of appropriate reference genomes. BioRxiv. Vol. 8636. 21.

- Solomon, B., and Kingsford, C. 2016. Fast search of thousands of short-read sequencing experiments. Nat. Biotechnol. 34, 300–302.

- Song, K., Ren, J., Reinert, G., et al. 2014. New developments of alignment-free sequence comparison: Measures, statistics and next-generation sequencing. Briefings Bioinf. 15, 343–353.

- Standage, D.S., Brown, C.T., and Hormozdiari, F. 2019. Kevlar: A mapping-free framework for accurate discovery of de novo variants. bioRxiv. Vol. 5491. 54.

- Sun, C., and Medvedev, P. 2018. Toward fast and accurate SNP genotyping from whole genome sequencing data for bedside diagnostics. Bioinformatics 35, 415–420.

- Tang, T., Liu, Y., Zhang, B., et al. 2019. Sketch distance-based clustering of chromosomes for large genome database compression. BMC Genomics 20, 1–9.

- Wang, A., and Au, K.F. 2020. Performance difference of graph-based and alignment-based hybrid error correction methods for error-prone long reads. Genome Biol. 21, 14.

- Wasserman, L. 2013. All of Statistics: A Concise Course in Statistical Inference. Springer Science & Business Media, Berlin, Germany.

- Wilson, E.B. 1927. Probable inference, the law of succession, and statistical inference. J. Am. Stat. Assoc. 22, 209–212.

- Wood, D.E., and Salzberg, S.L. 2014. Kraken: Ultrafast metagenomic sequence classification using exact alignments. Genome Biol. 15, R46.

- Wu, T.-J., Huang, Y.-H., and Li, L.-A. 2005. Optimal word sizes for dissimilarity measures and estimation of the degree of dissimilarity between DNA sequences. Bioinformatics 21, 4125–4132.
