ABSTRACT

Large language models (LLMs) have emerged as powerful tools in many fields, including clinical pharmacology and translational medicine. This paper aims to provide a comprehensive primer on the applications of LLMs to these disciplines. We will explore the fundamental concepts of LLMs, their potential applications in drug discovery and development processes ranging from facilitating target identification to aiding preclinical research and clinical trial analysis, and practical use cases such as assisting with medical writing and accelerating analytical workflows in quantitative clinical pharmacology. By the end of this paper, clinical pharmacologists and translational scientists will have a clearer understanding of how to leverage LLMs to enhance their research and development efforts.

Keywords: artificial intelligence, clinical pharmacology, drug development, drug discovery, large language model, translational science

1. Introduction

The advent of artificial intelligence (AI) has ushered in a new era of innovation across various fields, including drug discovery and development [1, 2, 3]. Large language models (LLMs), such as GPT‐4, have demonstrated remarkable capabilities in processing and generating human‐like text and programming codes, offering unprecedented opportunities to enhance several aspects of the drug discovery and development processes [4].

Prior reviews have underscored the importance of Natural Language Processing (NLP) in Model Informed Drug Development (MIDD) [5] and pharmacology [6]. They highlighted NLP's role in enhancing drug discovery, clinical trials, and pharmacovigilance through functionalities like named entity recognition and relation extraction.

Since the release of ChatGPT, there has been a rapid increase in both the number of LLMs [7, 8] and their uptake in the biomedical domains [9]. In [10], Peter Lee et al. highlight the transformative impact of GPT‐4 on healthcare, including diagnostics, personalized treatment, and medical research. It discusses practical applications and ethical considerations, showcasing AI's potential to revolutionize patient care. Due to the anticipation that LLMs would permeate every area of health care, a thorough tutorial was developed to help the biomedical informatics community harness them effectively [11].

Recent studies have further illustrated applications and limitations of LLMs in pharmacometrics. Shin et al. [12] evaluated the capabilities of ChatGPT and Gemini LLMs in generating NONMEM code, finding that while these models can efficiently create initial coding templates, the output often contains errors requiring expert revision. Cloesmeijer et al. [13] further explored the capability of LLMs in supporting model simulations and R‐Shiny implementation. These efforts offer a glimpse into a promising future where LLMs could significantly assist with pharmacometrics tasks, albeit with the need for human oversight.

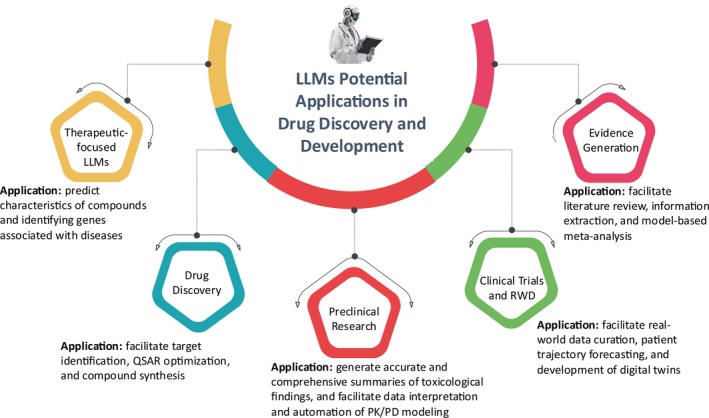

This primer offers clinical pharmacologists (CP) and translational scientists (TS) insights for leveraging LLMs to enhance their research and development workflows and lists helpful resources for implementing LLM‐based technologies effectively. CP and TS have distinct needs that include gathering and integrating information from multiple sources, often across several disciplines. Furthermore, given their use of clinical data, CP and TS professionals must be mindful of ethical and privacy considerations in the usage of LLMs. In this primer, we examine the category of general‐purpose LLMs trained on human textual language [4]; specialized LLMs that are trained on specific scientific languages (such as gene and protein sequences) are not the primary focus of this primer. By providing fundamental concepts, application areas (as shown in Figure 1), concrete use cases as well as links to useful tools (see Supporting Information), we aim to bridge the gap between AI advancements and their practical utility in drug discovery and development. Furthermore, it addresses the challenges and limitations associated with LLMs, providing a balanced perspective on their potential and areas for future improvement. Through these discussions, we hope to empower CP and TS to leverage the full potential of LLMs in their work.

FIGURE 1.

Potential applications of LLMs in drug discovery and development. LLMs, large language models; PD, pharmacodynamics; PK, pharmacokinetics; RWD, real‐world data.

2. Fundamentals of Large Language Models

2.1. What Are Large Language Models?

An LLM is a type of deep neural network that has been trained on massive amounts of text data, designed to comprehend, generate, and respond to human‐like text [14]. The “large” in LLMs refers both to the model size as reflected by the number of trainable weights as well as the immense size of the data set on which it has been trained on. LLMs are an example of a Foundation Model [15], as they are large‐scale models that have been pre‐trained and serve as a versatile base for a variety of downstream tasks. They are trained to take in a sequence of words from a text and predict the next word. It turns out that the task of next‐word prediction [16] compels the LLM to learn the relationship between words within texts and derive comprehension of the context. Through the self‐supervised learning process of next‐word prediction, LLMs have emerged with remarkable capabilities to understand and generate human‐like text in a way that was unexpected to many researchers [14].

2.2. Key Terminologies and Concepts

Tokenization is a crucial first step in preparing text data for training LLMs—which entails splitting the text into the chosen basic units, tokens—that the LLMs process. Depending on the tokenization scheme chosen, the resulting tokens can be words, subwords, or even individual characters. Next, these tokens are converted from strings to integers to produce token IDs via the use of a vocabulary, mapping each unique token to a unique integer. The token IDs are subsequently converted into embedding vectors (further explained below), which are then fed into the LLM. See Figure 2 for a schematic representation of the above‐described process.

FIGURE 2.

A schematic representation of how input text is tokenized and fed to the LLM to generate the next word. LLM, large language model.

Based on the transformer model [17], which utilizes the attention mechanism, LLMs process the input text data much more efficiently and effectively than the recurrent neural networks (RNNs) [18]. Unlike RNNs, which process text sequentially, transformers leverage the attention mechanism to consider all input tokens simultaneously, thus enabling parallel processing and capturing long‐range dependencies within the text. This attention mechanism assigns weights to different parts of the input sequence, allowing the model to focus on the most relevant words for a given task.

A key component in understanding transformers is the concept of embeddings. Embeddings are dense vector representations of tokens in a fixed‐dimensional space [16]. These vectors capture semantic relationships: for example, words with similar meanings will have similar embeddings, facilitating the model's ability to generalize and make predictions based on learned associations [16]. To handle the sequential nature of language data, transformers also incorporate positional encoding [17], which is achieved by adding a unique, position‐specific vector to each token's embedding. This allows the model to differentiate between tokens based on their position in the sequence, thus preserving the syntactic and semantic structure of the input text. Together, embeddings and positional encoding enable the transformer's ability to comprehend intricate relationships between words.

Context size, also referred to as the context window, is a commonly mentioned concept in the use of LLMs. It dictates the amount of preceding text the model can consider when generating the next token. A larger context size gives the LLM access to more extensive relevant information, such as a whole document relevant to the query, resulting in potentially better comprehension of the relevant material and enabling it to produce more accurate responses. The context size for LLMs ranges from ~8 K for Llama3, ~128 K tokens for GPT‐4, to ~200 K tokens for Claude by Anthropic, to around 1 million tokens for Gemini 1.5 Pro from Google; see [19] for a comparison across commonly used LLMs.

To summarize the inner workings of the LLMs: the input tokens are passed in, their embeddings computed, which together with their positional encoding are taken through transformer blocks, resulting in the logits that are used for deriving the next token's probabilities [14]. To convert the computed logits to the predicted token probabilities, temperature scaling is applied before passing in a softmax layer [14], which ensures that the probabilities of the next token are appropriately normalized to sum up to 1. Note that the temperature scaling is an important parameter in the generation of text by LLMs, impacting the diversity and creativity of the generated content [14]. This method introduces a probabilistic selection process in the next token generation task, allowing for control between either more varied and potentially innovative outputs when the temperature is high (close to 1), or more predictable and potentially conventional outputs when the temperature is low (close to 0).

Finally, Reinforcement Learning from Human Feedback (RLHF) is a technique designed to align LLMs with human intentions by incorporating direct human feedback into the training process [20]. This method involves three key steps: first, the model undergoes supervised fine‐tuning using a data set of human‐provided demonstrations to illustrate desired behaviors. Subsequently, a reward model is trained to predict human preferences by having human evaluators rank various model outputs based on their alignment with user instructions. Finally, the LLM is fine‐tuned further using reinforcement learning algorithms, where the reward model's scores act as the reward signal. This iterative process helps the model generate responses that are better aligned with the human intent, thereby increasing the impact of LLMs in applications.

For a glossary of commonly used terms associated with LLMs, please refer to Table 1.

TABLE 1.

Glossary of terms.

| Attention mechanism | A component of the transformer neural network architecture that allows the model to focus on specific parts of the input sequence when making predictions |

| Autoregressive model | A model that generates each token in a sequence based on the preceding tokens, often used in sequence modeling tasks such as language generation |

| BERT | Stands for “Bidirectional Encoder Representations from Transformers,” which is a type of transformer model that reads text bidirectionally to generate context‐aware representations |

| Chain‐of‐thought prompting | A prompting technique whereby the model is guided to generate intermediate reasoning steps before arriving at the answer |

| Context window | The span of text that a language model can consider at one time when generating or interpreting language |

| Data augmentation | Techniques used to increase the diversity of training data without collecting new data, often by perturbing the existing data in some manner |

| Few‐shot learning | A prompt engineering approach where the language model is given a few examples of the task it needs to perform |

| Fine‐tuning | The process of adjusting a pretrained language model on a smaller, specific data set to improve its performance on particular tasks |

| GPT | Stands for “Generative Pre‐trained Transformer,” which is a type of large language model developed by OpenAI that excels in generating text based on user input |

| Knowledge graph | A structured representation of information where entities are nodes and relationships between them are edges, used to enhance understanding and retrieval of information |

| Large language model | A type of AI model designed to understand and generate human language by processing large amounts of text data via self‐supervised learning |

| Model hallucination/confabulation | When a language model generates plausible‐sounding but inaccurate or wrong information |

| Pretraining | The initial phase of training a language model on a large corpus of text data to learn general language patterns |

| Prompt | An input text provided to a language model to guide its generation of a response or continuation |

| RAG | Stands for “Retrieval‐Augmented Generation”, which is a model that combines retrieval of relevant documents with generative capabilities to improve response accuracy |

| Self‐attention | A mechanism where each token in the input sequence pays attention to other tokens, allowing the model to capture relationships between them |

| Token | A unit of text, such as a word or subword, that a language model processes individually |

| Tokenization | The process of converting a sequence of text into tokens that can be processed by a language model |

| Transformer model | A type of neural network architecture that uses self‐attention mechanisms to process input data in parallel, making it highly effective for language tasks |

| Zero‐shot learning | A prompt engineering approach where the language model is expected to perform a task without having seen any examples of it during training |

2.3. Prompt Engineering

Since LLMs have been primarily trained for next word prediction [14], optimizing them for specific tasks relies on effective prompt engineering. By carefully designing the input prompts given to these models, users can guide the LLMs to generate more accurate and relevant outputs by providing the appropriate context.

LLMs are versatile in their functionality, and zero‐shot learning [21, 22] refers to the capability to generalize to entirely new tasks without having encountered any specific examples beforehand.

In comparison, few‐shot learning [23] refers to the ability of LLMs to learn from a few examples the user provides as part of the input prompt [14]. Chain‐of‐Thought (CoT) prompting [24] refers to the approach whereby instead of expecting the LLMs to provide the desired output from just the input, they are also requested to provide the intermediate steps in reaching the output. This is analogous to how humans typically break a larger problem into smaller subparts, each of which may involve logical deductions, and the result of each intermediate step is used in the subsequent one. As shown in [24], as the LLMs increase in size, more reasoning capabilities can be unlocked with CoT, and hence increase its benefits in applications. For examples of prompting approaches mentioned, refer to Table 2. An illustration of the key components of a prompt is shown in Figure 3.

TABLE 2.

Different ways of using LLMs for various tasks.

| Task | Input to LLM | Output of LLM |

|---|---|---|

| Next word prediction | MVCD1 is | “MVCD1 is actually a gene that encodes a protein involved in cell signaling and regulation. …” |

| Zero‐shot learning |

What is the gene? VEGFA ⇒ |

“VEGF ⇒ Vascular Endothelial Growth Factor” |

| You are a forecasting system and you will take in a list of values and predict the next number. Generate a total of five forecasts. Just output the values with no text. The input values are: 423, 491, 505, 404, 359, 310, 300, 280, 265 | “250,235,220,205,190” | |

| Few‐shot learning |

Human: TP53 → Murine: Trp53 Human: MYC → Murine: Myc Human: EGFR → |

“Murine: Egfr” |

| Chain‐of‐thought prompting |

A doctor prescribes a medication to a patient that must be taken at a dose of 5 mg/kg of body weight/day. The patient weighs 73 kg. The medication is available in 50 mg tablets. How many tablets should the patient take each day? Please provide a step‐by‐step reasoning |

“To determine the number of tablets the patient should take each day: 1. Calculate the total daily dose: 5 mg/kg/day × 73 kg = 365 mg/day 2. Determine the number of tablets: 365 mg/(50 mg/tablet) = 7.3 tablets 3. Round to the nearest whole number: The patient should take 7 tablets each day” |

FIGURE 3.

An illustration of the components of a prompt, which helps the LLM to give the user desired output.

2.4. Improving Accuracy and Relevance

In scientific applications, the accuracy and relevance of information generated by LLMs are crucial for making advancements in research and informing decision‐making. However, it is well known that LLMs can often confabulate (or hallucinate) information [11] if they are not grounded on facts and/or data relevant to the application area of interest. Thus, methods to further improve the accuracy and provide citations for the generated information are crucial to build confidence and trust in LLM applications.

Retrieval‐augmented generation (RAG) [25] is a widely used approach that combines two powerful techniques: retrieval and generation. First, information pertinent to the application of interest, such as documents (e.g., scientific papers, manuals, model codes, etc.), is collected and preprocessed by splitting it into smaller chunks, such as paragraphs, which are subsequently converted to embeddings (i.e., numeric vector representations) and stored in a vector database that the LLM can utilize. When a user query is received by the LLM, it is converted to the same embedding space, and a similarity search is performed within the vector database to identify the most relevant information. The original query is then augmented with the retrieved information as additional context, which is then passed to the LLM to generate the response. It has been shown [26] that RAG can significantly enhance the accuracy of LLMs as compared to CoT prompting.

Fine‐tuning a pretrained LLM on domain‐specific data to adapt it to a specific task is another way to improve performance [14]. In the context of chemical text mining, it has been shown that fine‐tuning can improve the model's performance substantially as compared to prompting [27].

In the context of using LLM for medical question answering, instruction fine‐tuning on the PaLM2 model gave rise to the Med‐PaLM 2 that demonstrated substantial improvement in performance on multiple benchmarks [28]. While offering performance improvements, fine‐tuning over a large set of model parameters can entail a high computational burden [11]. Hence, parameter‐efficient fine tuning (PEFT) methods such as low‐rank adaptation (LoRA) [14, 29, 30] limit model weights that are updated in the training process to lie within a smaller dimensional subspace of the complete weight parameter space, thereby reducing the computational resources required. In addition, the model weight updates obtained from the LoRA training process can be saved separately from the original model weights, thereby reducing the storage requirements and enabling the storage of multiple versions of an LLM, each customized to a particular data set [14].

3. Applications of Large Language Models in Drug Discovery and Development

In this section, we explore five key applications of LLMs schematically illustrated in Figure 1, each discussed within its subsection.

3.1. Therapeutics‐Focused Large Language Models

The availability and widespread use of LLMs have inspired many researchers to explore applications in their workflow. The prevalence of errors and misinformation from general application LLMs, such as ChatGPT, has highlighted the need for domain‐specific, next‐generation LLMs for biomedical research [31]. The ability of LLMs to process natural language and work with unstructured data to provide native language responses allows application in various processes in drug discovery and development.

Liang et al. developed a tool called DrugChat [32], which consists of a graph neural network (GNN), LLM, and an adaptor. DrugChat uses a molecular graph of a compound as input, predicts various characteristics, and allows users to prompt various questions, particularly about physical and chemical properties, but can also expand into questions related to later stages of drug development.

The development of domain‐specific LLMs for biomedical research is not only being led by biotech and pharmaceutical companies. Google recently developed Tx‐LLM [33], a generalist model trained on a wide variety of tasks. The developers envision the tool as an end‐to‐end therapeutic development solution, spanning from early‐stage (e.g., target discovery) to late‐stage development (e.g., clinical trial approval strategy). In their initial report, the developers demonstrated the tool's ability to identify genes associated with type 2 diabetes, predict binding affinities of candidate molecules for the target protein, predict potential toxicities of candidate molecules, and assess the probability of clinical trial approval.

3.2. Drug Discovery

As reviewed in [34], LLMs can facilitate target identification by mining biomedical literature to unveil connections between targets, genes, and diseases.

There are significant opportunities for implementing LLM in early discovery. AI has been widely explored for quantitative structure–activity relationship (QSAR) optimization, with many efforts using machine learning (ML) and deep learning (DL) to understand complex relationships—a good fit given the nature of the discipline. There are opportunities for LLM to streamline QSAR efforts. NLP has been successfully implemented to process QSAR data into vectors that are compatible with various ML and DL algorithms [35]. Although these NLP techniques were not LLM based, incorporating LLMs for this application may further expand the compatibility of source data. The ability of LLM to work with different data structures, including unstructured data, allows for the aggregation of a wide range of source data, enabling more complex analyses.

LLM can also be applied to compound synthesis. The advancements in robotic automation, which are already controlled by computer algorithms, offer opportunities for LLMs to be involved in the design and execution of bench experiments. Boiko et al. [36] presented a multi‐LLM‐based intelligent agent capable of automating the design, planning, and performance of complex scientific experiments, such as compound synthesis. The system is capable of performing web searches, document searches, writing and executing code, and manipulating robotic experimental platforms. The end‐to‐end automation opportunity with LLM can extend to other processes that utilize autonomous infrastructures.

Finally, LLMs are increasingly used in target safety assessments across industries like pharmaceuticals and chemicals. These models analyze vast amounts of data, including research papers and safety reports, to predict risks associated with specific targets. In drug development, LLMs help identify toxicological risks by correlating molecular structures with safety profiles. They also assist in assessing environmental hazards by interpreting regulatory guidelines and historical data.

3.3. Preclinical Research

Toxicology has seen significant advancements with the integration of AI, particularly through the use of ML and DL to predict toxicological signals. However, one of the main limitations of these approaches is the availability of compatible data. LLMs offer rapid and efficient processing of data from various sources, which is immensely valuable for the thorough and accurate curation of preclinical and clinical safety data.

Silberg et al. [37] demonstrated that LLMs could generate accurate and comprehensive summaries of toxicological findings from drug labels, leading to the development of UniTox [37], a database that spans numerous types of toxicities and is likely the largest human database covering almost all approved medications. The summaries produced by LLMs were validated against established toxicology databases, such as DICTrank and DILIrank, showing good concordance. Additional validation by clinicians showed that the toxicities annotated by LLMs were in high degree of agreement with assessments made by human experts. The ability to quickly and thoroughly assess safety profiles of compounds allows for databases like UniTox to be continuously updated with minimal human effort. Although the validation results were impressive, there are still misalignments that necessitate the need for human supervision and review of LLM outputs.

The potential opportunity of using LLM for data interpretation to circumvent human bias can also be implemented in the preclinical space. Berce et al. [38] described the use of LLM in generating clinical score sheets that are used to assess animal health and behavior during animal studies to minimize animal distress during study conduct. Different LLMs were evaluated [38] to generate clinical score sheets for a mouse model of inflammatory bowel disease. Although the LLMs evaluated performed well, there were instances of hallucinations and the generation of incorrect or irrelevant information, which emphasizes the need for human review. Such findings led the authors to advocate that LLMs should only be used for generating the initial draft, while human input is still needed to complete the final report.

The use of LLMs for complete automation is also being explored for complex analyses such as pharmacokinetics and pharmacodynamics (PKPD) modeling of preclinical data. As previously discussed, Shin et al. [12] demonstrated that ChatGPT and Gemini can generate content to support the use of NONMEM for PKPD analysis. Although NONMEM is most notably used for clinical PKPD modeling, it is frequently employed for modeling preclinical data gathered from animal studies. The authors showed that the LLMs were able to generate training materials for NONMEM, providing an overview of NONMEM code structure, and generating code for NONMEM analyses using simple lay language prompts. While the generated code was not fully reproducible and contained errors that required correction by an experienced user, LLMs offer an efficient option for creating initial coding templates for PKPD analyses. This capability has the potential to significantly reduce the time required for PKPD scientists in both the preclinical and clinical space.

3.4. Clinical Trials and Real‐World Data

A recent perspective [30] highlights the transformative potential of LLMs in leveraging real‐world data (RWD). For instance, LLMs can be used in data curation pipelines using templated prompts, thereby enabling the extraction of relevant information without the need for task‐specific fine tuning. This reduces data‐wrangling efforts and ultimately enhances the generation of real‐world evidence from RWD [30].

LLMs have also demonstrated their potential in forecasting patient trajectories. Makarov et al. [39] introduce the Digital Twin—Generative Pretrained Transformer (DT‐GPT) model, which combines an existing LLM together with rich electronic health record (EHR) data to create digital twins of patients. The DT‐GPT model effectively addresses common challenges in RWD such as missingness, noise, and limited sample sizes. It was shown to outperform a number of ML methods in forecasting clinical variables. This work exemplifies the transformative potential of LLMs in the modeling and simulation of data from both clinical trials and real‐world settings.

3.5. Literature Review

3.5.1. Evidence Generation

LLMs hold significant potential for transforming how clinical evidence is gathered and assessed. These models provide new methods for extracting, analyzing, and interpreting data from various medical and scientific fields, thereby making the process of evidence generation faster and more comprehensive [40]. One of the most valuable applications of LLMs is their ability to automate literature searches. Typically, researchers carrying out systematic reviews or meta‐analyses must manually sift through vast amounts of published studies, which is time‐consuming. However, with tools like GPT‐4, this process can be significantly expedited. These models can quickly find relevant studies, summarize key points, and highlight important trends, often completing in just a few hours [41]. For instance, Luo et al. [42] showed how LLMs can speed up the early stages of systematic reviews by automating tasks like screening and data extraction. Similarly, LLM‐like TrialMind [43] have shown they can pull key data from clinical studies with impressive accuracy, pulling from large databases like PubMed. In the domain of pharmacokinetics (PK), the extraction of PK parameters from across literature from PubMed demonstrates promising results [44]. Such technology is particularly helpful in fields where new studies are being published constantly, such as oncology and cardiology, where staying up‐to‐date is critical.

Beyond just searching the literature, LLMs hold the promise of bringing efficiency by turning unstructured data into more usable formats. Many clinical reports, especially in areas like radiology, are written in free text, making it difficult to retrieve specific pieces of information. LLMs can identify relevant details—like patient demographics, outcomes, or treatment protocols—from these reports and convert them into structured data ready for analysis [45, 46]. For example, Reichenpfader et al. [47] show how LLMs are being used to extract clinical data from radiology reports, solving the challenge of dealing with free‐text documentation. These models are also being used to pull information from clinical trial reports, significantly speeding up the data extraction phase of meta‐analyses [48]. Another major advantage of LLMs is their ability to continuously update evidence as new studies are published [42]. Instead of waiting months for updates, these models can incorporate new findings in real‐time, ensuring that reviews and meta‐analyses stay relevant. This is a significant improvement over traditional methods, where the lag time between data collection and publication can render reviews outdated almost immediately. LLMs provide a way to keep systematic reviews current and accurate as the science evolves.

3.5.2. Application in Model‐Based Meta‐Analysis (MBMA)

One of the significant challenges in model‐based meta‐analysis (MBMA) is harmonizing data across studies that may use different terminologies, outcomes, and measurement scales. LLMs can assist in this process by identifying synonyms or related terms across studies and standardizing the data for integration into a meta‐analysis. For example, if one study reports outcomes as “odds ratios” and another as “risk ratios,” LLMs can standardize these measures to enable their inclusion in a pooled analysis. Additionally, LLMs can help with more complex tasks like imputing missing data or transforming noncomparable datasets into a format that can be analyzed together. This is particularly useful in MBMA, where integrating data from different types of clinical studies (e.g., RCTs and observational studies) is critical for making robust predictions [2].

LLMs can also enhance predictive modeling efforts within MBMA. These models can work through large data sets to identify patterns and predict future endpoints and patient outcomes, such as drug efficacy or safety across different populations. For instance, LLMs can help develop dose–response models by analyzing data from multiple clinical trials and observational studies, providing insights into optimal dosing strategies or identifying populations at risk of adverse events [49]. In [50], the authors applied GPT‐4 to conduct network meta‐analysis (NMA) in four case studies. Using API calls, relevant information was extracted from publications. Subsequently, R code was generated to perform NMA, and based on the results, reports summarizing the results were produced [50]. The LLM demonstrated over 99% accuracy in data extraction across all case studies and generated R scripts that could be executed end‐to‐end without human intervention. While the LLM was not always consistent, the study highlighted the potential for LLMs to significantly reduce the time involved in NMAs, suggesting that with further refinement, such technologies demonstrate promise in health technology assessments (HTAs).

Additionally, the integration of LLMs into quantitative modeling frameworks, such as pharmacometrics or systems pharmacology, allows researchers to incorporate data from diverse sources into predictive models. This leads to more accurate predictions for clinical trial outcomes or drug development processes [2]. Furthermore, MBMA requires the development of complex statistical models, which can be time‐consuming and prone to error. LLMs can assist by automating parts of the model‐building process. For example, LLMs can suggest appropriate covariates or interaction terms based on the data, reducing the need for manual trial‐and‐error model development. LLMs can also be used to generate code for statistical or PK/PD modeling software, further streamlining the model development process.

4. Use Cases

In this section, we present three specific case studies that demonstrate the use of LLMs.

4.1. Case Study 1: LLM Tool for Biomedical Queries

Cutting‐edge text embedding models based on LLMs have the potential to significantly enhance the process of identifying relevant academic papers and experimental results in response to biomedical queries, such as safety assessments, biomarker discovery, and mechanism of action studies. The vast amount of literature in this field, including over 37 million academic articles on PubMed [51], makes it challenging to quickly locate pertinent information. Researchers in biopharma are often overwhelmed by this sheer volume of data, hindering their ability to efficiently find and utilize critical findings. Advanced search mechanisms powered by LLMs can be invaluable in this context. For example, the NV‐embed model [52] has recently achieved state‐of‐the‐art performance [53] on the MTEB benchmark [54].

Fine‐tuning LLMs for document embedding tasks can improve performance, especially when dealing with highly specialized text data, tabular data, or slide decks. Even on academic articles, which are in text form, various techniques are required for the best performance. As these articles are often lengthy (e.g., over thousands of words), it is necessary to split a document into multiple text chunks. However, while a chunk would represent detailed information, it may miss high‐level information such as paper titles or keywords, necessitating additional steps to include these elements for effective retrieval.

Despite their potential, several challenges are associated with using LLMs for biomedical literature searches. One significant challenge is the potential absence of required information. In some cases, the answer to a specific query might not exist within the available literature. Another challenge is the rapidly evolving nature of biomedical research, where information that is accurate today may become outdated tomorrow. This necessitates continuous updates and retraining of the models to ensure they remain current.

From a technical perspective, developing effective retrieval systems poses several challenges. One primary issue is the reliance on embedding similarity between the query and the retrieved text. Traditional training methods for embedding models are not ideally suited for this use case, as embeddings for questions and answers can differ significantly. While training adjustments can help improve the alignment, a fundamental issue remains: if the answer to a query does not exist, or if the model does not know what the answer should look like, effective retrieval becomes problematic. Another technical challenge is ensuring that the model can handle the vast and diverse range of biomedical topics, including specialized terminologies and concepts.

This requires extensive training on diverse and comprehensive data sets to enhance the model's robustness and accuracy.

Despite these challenges, the future of LLMs in biomedical research is promising. As these models improve in their reasoning abilities, they could potentially handle more complex tasks such as hypothesis generation by integrating multiple experimental observations. This would significantly enhance research capabilities beyond simple search functions, assisting researchers in formulating new hypotheses, designing experiments, and even predicting potential outcomes based on existing data.

In conclusion, while there are several challenges associated with using LLMs for biomedical literature searches, their potential benefits are substantial. By addressing the technical and domain‐specific challenges, LLMs can transform the way researchers access and utilize biomedical information, ultimately accelerating the pace of discovery and development in the field.

4.2. Case Study 2: LLM for Regulatory Intelligence and Medical Writing

In the pharmaceutical industry, regulatory intelligence and medical writing are two critical challenges that demand substantial time and expertise, yet often rely on labor‐intensive processes. The vast and complex regulatory landscape requires professionals to gather, interpret, and apply data from numerous sources with differing formats and large volumes, posing significant barriers to efficiency and accuracy. NLP, ML, and Generative AI (Gen‐AI) solutions, when crafted by seasoned regulatory experts, offer a transformative answer to these challenges by automating data extraction, standardizing diverse formats, and providing targeted insights for more precise, data‐driven decisions.

4.2.1. Regulatory Intelligence Challenges and RIA as a Solution

Regulatory intelligence is pivotal for maintaining compliance and optimizing drug development strategies. It traditionally involves navigating and curating information from sources such as the FDA, EMA, and other global agencies. Manual efforts to stay updated on regulatory changes and analyze competitive landscapes are time‐consuming and prone to error. The Regulatory Intelligence Assistant (RIA) [55], an AI‐powered tool designed by regulatory professionals, addresses these limitations by automating data collection and summarization, gathering information from diverse regulatory sources and streamlining it for immediate use. RIA's automation capabilities extend to repetitive tasks like regulatory submissions, document generation, and correspondence tracking, significantly enhancing operational efficiency and freeing professionals to focus on strategic decision‐making.

One of RIA's core strengths is its smart alert system, which notifies regulatory teams of relevant guideline updates. Rather than sifting through vast amounts of information, professionals receive curated alerts that streamline regulatory monitoring. Additionally, RIA delivers real‐time regulatory updates, curating information based on specific therapeutic areas, agencies, or development phases, enabling regulatory teams to receive actionable insights tailored to their immediate needs. RIA's cross‐regional consistency checks help ensure that information from multiple regions is harmonized, making global registrations smoother and reducing the risk of errors.

RIA's competitive intelligence capabilities further amplify its value by enabling smarter clinical development strategies. By tracking competitor pipelines and analyzing clinical trial data, RIA provides a clear view of market trends, opportunities for differentiation, and gaps in the competitive landscape. With predictive analytics, RIA can identify regulatory precedents, simulate possible outcomes, and even forecast review timelines, helping teams strategically allocate resources and mitigate risks proactively.

4.2.2. Automating Medical Writing With REGAIN

Medical writing is another area where AI is revolutionizing pharmaceutical processes. Generating complex, data‐intensive documents, such as clinical study reports, regulatory submission dossiers, and safety summaries, has traditionally required significant time and manual effort. AI‐driven tools, such as REGAIN, particularly those leveraging NLP and ML, streamline this process by automatically extracting essential information from clinical databases and research literature, summarizing findings, and generating structured, compliant documentation.

With REGAIN, medical writers can produce high‐quality reports and submissions more quickly and accurately. Automation reduces time spent on laborious data entry and proofreading, allowing writers to focus on strategic insights and the narrative development that contextualizes the data. This AI‐enhanced workflow not only increases output efficiency but also ensures compliance across regions by standardizing content according to regulatory guidelines.

4.3. Case Study 3: Apollo‐AI Advances Quantitative Clinical Pharmacology Through Intelligent Agent Frameworks

In the evolving landscape of clinical pharmacology, the integration of AI has the potential to accelerate the analytical workflows of quantitative clinical pharmacologists (QCP) and translational scientists (TS). Currently under development, Apollo‐AI [56] is a software system designed to augment the analytical capabilities of QCP and TS professionals by streamlining PK and PD analysis and code generation during drug development. These tasks include data cleaning and merging—such as handling missing values and integrating data from multiple sources—conducting exploratory data analysis, and performing a range of PK/PD analyses, from basic noncompartmental analysis (NCA) to advanced population pharmacokinetic and pharmacodynamic modeling.

The Apollo‐AI system aims to overcome some of the typical limitations of LLM‐based tools by leveraging its agent‐based architecture and a “fit for purpose” user interface. With built‐in human oversight, it aims to mitigate issues like hallucinations while delivering domain‐specific functionality tailored to the unique needs of clinical pharmacology.

The system's architecture is built on foundational principles designed to enhance user experience through a structured, agent‐based approach. Each agent has a clearly defined role, operating within a specific scope to focus efficiently on assigned tasks. The UI is purpose‐built for the complex workflows typical in QCP and TS analyses, going beyond generic chat‐based interfaces. Instead, Apollo‐AI provides a comprehensive, end‐to‐end analysis platform that integrates essential features like data visualization, workflow management, code editing, and report generation. This specialized interface allows users to iteratively develop, refine, and manage complex analysis projects (e.g., developing a PK model, running an NCA) with the support of dedicated agents. A key strength of Apollo‐AI's infrastructure is its secure backend, ensuring data protection and regulatory compliance. This allows clinical pharmacologists to confidently handle sensitive patient data and proprietary research findings while maintaining strict confidentiality.

Apollo‐AI integrates a team of specialized AI agents to streamline and enhance the analytical workflows for QCP and TS. Key agents—the Conversational Agent, Planning Agent, Task Agents, and Global Agent—work together to ensure both precision and efficiency in PK/PD analyses.

The Conversational Agent is the primary user interface, converting natural language inputs into actionable tasks. For instance, when a data analyst requests to model drug concentration levels, the Conversational Agent may interpret this as a PK modeling workflow, verify with the end user before assigning it to the Planning Agent. The Planning Agent then builds a comprehensive methodological plan, detailing data sources, variable selection criteria, and analytical methods to align with study objectives and user needs. Task Agents handle the execution, performing functions like code generation, running analyses on clinical trial datasets, and summarizing results. They may also manage preparatory tasks like data cleaning and outlier detection. The Global Agent oversees coordination among all agents, delivering context‐specific feedback throughout the process. This structured, agent‐based framework keeps human experts actively engaged, preserving a human‐in‐the‐loop approach that safeguards analytical integrity and scientific rigor.

Finally, a crucial component of Apollo‐AI is the Agent‐Computer Interface (ACI), which enables smooth collaboration among the AI agents by offering an environment like the IDEs used by software engineers. Through the ACI, agents can efficiently access and manage code repositories, data sets, and analytical scripts, reducing errors and boosting overall system performance.

By automating repetitive and time‐consuming tasks, Apollo‐AI has the potential to increase workflow efficiency while maintaining high accuracy through continuous human oversight. This collaboration between AI agents and human users ensures that analyses are both efficient and robust. As Apollo‐AI continues to develop, it has the potential to improve analytical workflows, enabling researchers to receive real‐time insights from their data.

5. Challenges and Limitations

One of the main limitations with LLMs is their tendency to hallucinate (also known as confabulate), producing incorrect information, particularly when addressing complex questions. This becomes a serious concern when accuracy is paramount, such as in evidence generation. For instance, Gwon et al. [57] found that while LLMs like ChatGPT can successfully identify relevant studies, they also generate a fair amount of irrelevant or even fabricated references. Hence, while LLMs can speed up the process of gathering evidence, human oversight is still needed to ensure everything is accurate.

Another limitation is that while LLMs excel at processing natural language, they often struggle with complex medical concepts, especially when it involves interpreting numerical data or making fine‐tuned clinical judgments. For instance, LLMs can readily extract qualitative data from clinical studies, but they might not do as well when dealing with quantitative results such as effect sizes or p values, which are essential in applications such as MBMA [58]. This underscores the necessity for human experts to validate the LLM‐generated outputs to ensure their accuracy and relevance.

Ethical and transparency issues also pose significant challenges when using LLMs. A major concern is the risk of bias in the data that is used to train these models. If the training data are biased, LLMs could perpetuate these biases in their outputs, leading to skewed or inaccurate conclusions. With respect to transparency, the “black box” nature of LLMs makes it hard to understand how they reach certain decisions, which complicates efforts to validate their results or obtain regulatory approval for their use in clinical settings [59]. Data privacy and security is another concern: it is essential to ensure that regulations are followed and risks are mitigated [60].

6. Conclusion and Future Directions

Applications of LLMs are rapidly emerging across every facet of drug discovery and development. The examples discussed in this primer (including links to resources provided in Table S1 of the Supporting Information) represent a broad spectrum of applications, and as LLMs become more mainstream, it is anticipated that even more innovative uses will emerge. These tools have demonstrated substantial promise and have yielded encouraging validation results. However, given the critical nature of efforts in drug development, it is imperative that LLMs are used primarily for initial drafts (such as generating analysis codes, results, and reports), while human review remains essential. Despite their limitations, effectively incorporating LLMs into the workflow offers significant potential to save time and effort [61].

We envision that human scientists can collaborate with LLMs at three different levels: as a tool, assistant, or partner [2], presenting many opportunities. One significant initial benefit is for LLMs to save time and mental effort on routine tasks, thereby allowing human scientists to focus more of their cognitive resources on scientific interpretation and other complex tasks that require higher mental capacity. While applications of LLMs face challenges and limitations, ongoing efforts to scale up model sizes and incorporate reasoning capabilities, along with future technological advancements, may help mitigate or overcome these issues.

The text understanding, reasoning, and generation capabilities of existing LLMs pave the way for future capabilities of AI. In particular, by fine‐tuning LLMs to domain knowledge followed by instruction tuning, LLM‐based AI agents can be constructed to accomplish specific tasks in biomedical research [62]. By combining single AI agents that are focused on subtasks, multi‐agent AI systems could lay the groundwork for the aspirational “AI scientists” that can be used to empower biomedical research [62]. In conclusion, the advent of LLMs unlocks unprecedented opportunities for human‐machine collaboration [2, 62], heralding a new era of innovation that is just beginning to unfold.

Conflicts of Interest

All authors were employees and additionally may be shareholders of their respective companies at the time of writing.

Disclaimer

The contents are those of the author(s) and do not necessarily represent the official views of, nor an endorsement, by FDA/HHS or the U.S. Government. As Associate Editors for Clinical and Translational Science, Qi Liu and Mohamed Shahin were not involved in the review or decision process for this paper.

Supporting information

Data S1.

Acknowledgments

J.L. would like to acknowledge Logan Brooks, Omid Bazgir, Gengbo Liu, Erick Velasquez, Joy Hsu, Jin Y. Jin, and Amita Joshi for their discussions and support. ChatGPT was used to edit language to help enhance the readability of the manuscript.

Funding: The authors received no specific funding for this work.

References

- 1. Vamathevan J., Clark D., Czodrowski P., et al., “Applications of Machine Learning in Drug Discovery and Development,” Nature Reviews. Drug Discovery 18 (2019): 463–477. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Terranova N., Renard D., Shahin M. H., et al., “Artificial Intelligence for Quantitative Modeling in Drug Discovery and Development: An Innovation and Quality Consortium Perspective on Use Cases and Best Practices,” Clinical Pharmacology and Therapeutics 115 (2024): 658–672. [DOI] [PubMed] [Google Scholar]

- 3. Naik K., Goyal R. K., Foschini L., et al., “Current Status and Future Directions: The Application of Artificial Intelligence/Machine Learning for Precision Medicine,” Clinical Pharmacology and Therapeutics 115 (2024): 673–686. [DOI] [PubMed] [Google Scholar]

- 4. Zheng Y., Koh H. Y., Yang M., et al., “Large Language Models in Drug Discovery and Development: From Disease Mechanisms to Clinical Trials,” arXiv [q‐bio.QM] (2024), http://arxiv.org/abs/2409.04481. [Google Scholar]

- 5. Bhatnagar R., Sardar S., Beheshti M., and Podichetty J. T., “How Can Natural Language Processing Help Model Informed Drug Development?: A Review,” JAMIA Open 5 (2022): ooac043. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Trajanov D., Trajkovski V., Dimitrieva M., et al., “Review of Natural Language Processing in Pharmacology,” Pharmacological Reviews 75 (2023): 714–738. [DOI] [PubMed] [Google Scholar]

- 7. Zhao W. X., Zhou K., Li J., et al., “A Survey of Large Language Models,” arXiv [cs.CL] (2023), http://arxiv.org/abs/2303.18223. [Google Scholar]

- 8. Minaee S., Mikolov T., Nikzad N., et al., “Large Language Models: A Survey,” (2024), http://arxiv.org/abs/2402.06196.

- 9. Wang J., Cheng Z., Yao Q., Liu L., Xu D., and Hu G., “Bioinformatics and Biomedical Informatics With ChatGPT: Year One Review,” Quantitative Biology 12 (2024): 345–359. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Lee P., Goldberg C., and Kohane I., The AI Revolution in Medicine: GPT‐4 and Beyond (Pearson, 2023). [Google Scholar]

- 11. Sahoo S. S., Plasek J. M., Xu H., et al., “Large Language Models for Biomedicine: Foundations, Opportunities, Challenges, and Best Practices,” Journal of the American Medical Informatics Association 31 (2024): 2114–2124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Shin E., Yu Y., Bies R. R., and Ramanathan M., “Evaluation of ChatGPT and Gemini Large Language Models for Pharmacometrics With NONMEM,” Journal of Pharmacokinetics and Pharmacodynamics 51 (2024): 187–197. [DOI] [PubMed] [Google Scholar]

- 13. Cloesmeijer M. E., Janssen A., Koopman S. F., Cnossen M. H., Mathôt R. A. A., and SYMPHONY consortium , “ChatGPT in Pharmacometrics? Potential Opportunities and Limitations,” British Journal of Clinical Pharmacology 90 (2024): 360–365. [DOI] [PubMed] [Google Scholar]

- 14. Raschka S., Build a Large Language Model (From Scratch) (Manning Publications, 2024), https://www.oreilly.com/library/view/build‐a‐large/9781633437166AU/. [Google Scholar]

- 15. Bommasani R., Hudson D. A., Adeli E., et al., “On the Opportunities and Risks of Foundation Models,” arXiv [cs.LG] (2021), http://arxiv.org/abs/2108.07258. [Google Scholar]

- 16. Wolfram S., What Is ChatGPT Doing: … And Why Does It Work? (Wolfram Media, 2023). [Google Scholar]

- 17. Vaswani A., Shazeer N., Parmar N., et al., “Attention Is all You Need,” arXiv [cs.CL] (2017), http://arxiv.org/abs/1706.03762. [Google Scholar]

- 18. Goodfellow I., Bengio Y., and Courville A., Deep Learning (MIT Press, 2016). [Google Scholar]

- 19. Jin Q., Wan N., Leaman R., et al., “Demystifying Large Language Models for Medicine: A Primer,” arXiv [cs.AI] (2024), http://arxiv.org/abs/2410.18856. [Google Scholar]

- 20. Ouyang L., Wu J., Jiang X., et al., “Training Language Models to Follow Instructions With Human Feedback,” arXiv [cs.CL] (2022), http://arxiv.org/abs/2203.02155. [Google Scholar]

- 21. Meier J., Rao R., Verkuil R., Liu J., Sercu T., and Rives A., “Language Models Enable Zero‐Shot Prediction of the Effects of Mutations on Protein Function,” bioRxiv (2021), 10.1101/2021.07.09.450648. [DOI] [Google Scholar]

- 22. Gruver N., Finzi M., Qiu S., and Wilson A. G., “Large Language Models are Zero‐Shot Time Series Forecasters,” arXiv [cs.LG] (2023), http://arxiv.org/abs/2310.07820. [Google Scholar]

- 23. Brown T. B., Mann B., Ryder N., et al., “Language Models are Few‐Shot Learners,” arXiv [cs.CL] (2020), http://arxiv.org/abs/2005.14165. [Google Scholar]

- 24. Wei J., Wang X., Schuurmans D., et al., “Chain‐of‐Thought Prompting Elicits Reasoning in Large Language Models,” arXiv [cs.CL] (2022), http://arxiv.org/abs/2201.11903. [Google Scholar]

- 25. Lewis P., Perez E., Piktus A., et al., “Retrieval‐Augmented Generation for Knowledge‐Intensive NLP Tasks,” arXiv [cs.CL] (2020), http://arxiv.org/abs/2005.11401. [Google Scholar]

- 26. Xiong G., Jin Q., Lu Z., and Zhang A., “Benchmarking Retrieval‐Augmented Generation for Medicine,” arXiv [cs.CL] (2024), http://arxiv.org/abs/2402.13178. [Google Scholar]

- 27. Zhang W., Wang Q., Kong X., et al., “Fine‐Tuning Large Language Models for Chemical Text Mining,” Chemical Science 15 (2024): 10600–10611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Singhal K., Tu T., Gottweis J., et al., “Towards Expert‐Level Medical Question Answering With Large Language Models,” arXiv [cs.CL] (2023), http://arxiv.org/abs/2305.09617. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Hu E. J., Shen Y., Wallis P., et al., “LoRA: Low‐Rank Adaptation of Large Language Models,” (2021), http://arxiv.org/abs/2106.09685.

- 30. Anderson W., Braun I., Bhatnagar R., et al., “Unlocking the Capabilities of Large Language Models for Accelerating Drug Development,” Clinical Pharmacology and Therapeutics 116 (2024): 38–41. [DOI] [PubMed] [Google Scholar]

- 31. Pal S., Bhattacharya M., Lee S.‐S., and Chakraborty C., “A Domain‐Specific Next‐Generation Large Language Model (LLM) or ChatGPT Is Required for Biomedical Engineering and Research,” Annals of Biomedical Engineering 52 (2024): 451–454. [DOI] [PubMed] [Google Scholar]

- 32. Liang Y., Zhang R., Zhang L., and Xie P., “DrugChat: Towards Enabling ChatGPT‐Like Capabilities on Drug Molecule Graphs,” arXiv [q‐bio.BM] (2023), http://arxiv.org/abs/2309.03907. [Google Scholar]

- 33. Chaves J. M. Z., Wang E., Tu T., et al., “Tx‐LLM: A Large Language Model for Therapeutics,” arXiv [cs.CL] (2024), http://arxiv.org/abs/2406.06316. [Google Scholar]

- 34. Liu Z., Roberts R. A., Lal‐Nag M., Chen X., Huang R., and Tong W., “AI‐Based Language Models Powering Drug Discovery and Development,” Drug Discovery Today 26 (2021): 2593–2607. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Bouhedjar K., Boukelia A., Khorief Nacereddine A., Boucheham A., Belaidi A., and Djerourou A., “A Natural Language Processing Approach Based on Embedding Deep Learning From Heterogeneous Compounds for Quantitative Structure‐Activity Relationship Modeling,” Chemical Biology & Drug Design 96 (2020): 961–972. [DOI] [PubMed] [Google Scholar]

- 36. Boiko D. A., MacKnight R., Kline B., and Gomes G., “Autonomous Chemical Research With Large Language Models,” Nature 624 (2023): 570–578. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Silberg J., Swanson K., Simon E., et al., “UniTox: Leveraging LLMs to Curate a Unified Dataset of Drug‐Induced Toxicity From FDA Labels,” medRxiv (2024), 10.1101/2024.06.21.24309315. [DOI] [Google Scholar]

- 38. Berce C., “Artificial Intelligence Generated Clinical Score Sheets: Looking at the Two Faces of Janus,” Laboratory Animal Research 40, no. 1 (2024): 21, 10.1186/s42826-024-00206-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39. Makarov N., Bordukova M., Rodriguez‐Esteban R., Schmich F., and Menden M. P., “Large Language Models Forecast Patient Health Trajectories Enabling Digital Twins,” medRxiv (2024): 2024.07.05.24309957, 10.1101/2024.07.05.24309957. [DOI] [Google Scholar]

- 40. Zhang G., Jin Q., McInerney J. D., et al., “Leveraging Generative AI for Clinical Evidence Synthesis Needs to Ensure Trustworthiness,” Journal of Biomedical Informatics 153 (2024): 104640, 10.1016/j.jbi.2024.104640. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Li M., Sun J., and Tan X., “Evaluating the Effectiveness of Large Language Models in Abstract Screening: A Comparative Analysis,” Systematic Reviews 13 (2024): 219. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Luo X., Chen F., Zhu D., et al., “Potential Roles of Large Language Models in the Production of Systematic Reviews and Meta‐Analyses,” Journal of Medical Internet Research 26 (2024): e56780, 10.2196/56780. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Wang Z., Cao L., Danek B., et al., “Accelerating Clinical Evidence Synthesis With Large Language Models,” arXiv [cs.CL] (2024), http://arxiv.org/abs/2406.17755. [Google Scholar]

- 44. Gonzalez Hernandez F., Nguyen Q., Smith V. C., et al., “Named Entity Recognition of Pharmacokinetic Parameters in the Scientific Literature,” Scientific Reports 14 (2024): 23485. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Tang L., Sun Z., Idnay B., et al., “Evaluating Large Language Models on Medical Evidence Summarization,” NPJ Journal of Digital Medicine 6 (2023): 158. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46. Jiang H., Xia S., Yang Y., et al., “Transforming Free‐Text Radiology Reports Into Structured Reports Using ChatGPT: A Study on Thyroid Ultrasonography,” European Journal of Radiology 175 (2024): 111458. [DOI] [PubMed] [Google Scholar]

- 47. Reichenpfader D., Müller H., and Denecke K., “A Scoping Review of Large Language Model Based Approaches for Information Extraction From Radiology Reports,” NPJ Journal of Digital Medicine 7 (2024): 222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Gartlehner G., Kahwati L., Hilscher R., et al., “Data Extraction for Evidence Synthesis Using a Large Language Model: A Proof‐Of‐Concept Study,” Research Synthesis Methods 15 (2024): 576–589. [DOI] [PubMed] [Google Scholar]

- 49. Shahin M. H., Barth A., Podichetty J. T., et al., “Artificial Intelligence: From Buzzword to Useful Tool in Clinical Pharmacology,” Clinical Pharmacology and Therapeutics 115 (2024): 698–709. [DOI] [PubMed] [Google Scholar]

- 50. Reason T., Benbow E., Langham J., Gimblett A., Klijn S. L., and Malcolm B., “Artificial Intelligence to Automate Network Meta‐Analyses: Four Case Studies to Evaluate the Potential Application of Large Language Models,” Pharmacoeconom Open 8 (2024): 205–220. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.“PubMed” [cited 1 Nov 2024], https://pubmed.ncbi.nlm.nih.gov/.

- 52. Lee C., Roy R., Xu M., et al., “NV‐Embed: Improved Techniques for Training LLMs as Generalist Embedding Models,” (2024), http://arxiv.org/abs/2405.17428.

- 53.“MTEB Leaderboard” [cited 1 Nov 2024], https://huggingface.co/spaces/mteb/leaderboard.

- 54. Muennighoff N., Tazi N., Magne L., and Reimers N., “MTEB: Massive Text Embedding Benchmark,” arXiv [cs.CL] (2022), http://arxiv.org/abs/2210.07316.

- 55. Vivpro RIA . [cited 29 Oct 2024], https://vivpro.ai/products.

- 56. InsightRX , “InsightRX,” [Internet] 2021. [cited 29 Oct 2024], https://www.insight‐rx.com/.

- 57. Gwon Y. N., Kim J. H., Chung H. S., et al., “The Use of Generative AI for Scientific Literature Searches for Systematic Reviews: ChatGPT and Microsoft Bing AI Performance Evaluation,” JMIR Medical Informatics 12 (2024): e51187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58. Oami T., Okada Y., and Nakada T.‐A., “Performance of a Large Language Model in Screening Citations,” JAMA Network Open 7 (2024): e2420496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59. Meng X., Yan X., Zhang K., et al., “The Application of Large Language Models in Medicine: A Scoping Review,” iScience 27 (2024): 109713. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60. Murdoch B., “Privacy and Artificial Intelligence: Challenges for Protecting Health Information in a New Era,” BMC Medical Ethics 22 (2021): 122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61. Lubiana T., Lopes R., Medeiros P., et al., “Ten Quick Tips for Harnessing the Power of ChatGPT in Computational Biology,” PLoS Computational Biology 19 (2023): e1011319. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62. Gao S., Fang A., Huang Y., et al., “Empowering Biomedical Discovery With AI Agents,” Cell 187 (2024): 6125–6151. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data S1.