Abstract

Accurate variant calling from whole-exome sequencing (WES) data is vital for understanding genetic diseases. Recently, commercial variant calling software that does not require bioinformatics or programming expertise has emerged, enabling smaller laboratories and clinics to analyse WES data independently and circumventing the need for dedicated, expensive computers and bioinformatics staff. This study benchmarks four non-programming variant calling software, namely Illumina BaseSpace Sequence Hub (Illumina), CLC Genomics Workbench (CLC), Partek Flow, and Varsome Clinical, for variant calling on three Genome in a Bottle (GIAB) whole-exome sequencing datasets (HG001, HG002 and HG003). Following alignment of sequence reads to the human reference genome GRCh38, variant calls were compared against GIAB high-confidence regions and assessed using the Variant Calling Assessment Tool (VCAT). Illumina’s DRAGEN Enrichment achieved the highest precision and recall scores for single nucleotide variant (SNV) and insertion/deletion (indel) calling, at over 99% for SNVs and 96% for indels, while Partek Flow using unionised variant calls from Freebayes and Samtools had the lowest indel calling performance. Illumina had the highest true positive (TP) variant counts for all samples, and all four software shared 98–99% similarity of TP variants. Run times were shortest for CLC and Illumina, ranging from 6 to 25 min and 29 to 36 min respectively, while Partek Flow took the longest (3.6 to 29.7 h). This study provides information to help clinicians and biologists without programming expertise select variant analysis software that balances accuracy, sensitivity, and run time.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-025-97047-7.

Keywords: Benchmarking, Variant calling, Whole-exome sequencing, No-programming software, GIAB.

Subject terms: Cancer genetics, Disease genetics, Genetic testing, Next-generation sequencing

Introduction

Next-generation sequencing (NGS) has emerged as a revolutionary tool in genomics, offering unprecedented capabilities for the sequencing of DNA and RNA. NGS enables the simultaneous sequencing of millions to billions of DNA fragments, transforming various areas of biological and medical research. Among the NGS methodologies, whole-exome sequencing (WES) is a powerful and cost-effective tool that captures and sequences the exonic regions of the genome, which are highly relevant to human health and disease1.

Variant calling, a pivotal step in the WES workflow, involves the identification of genetic variations or mutations within the sequenced exome. These encompass single nucleotide polymorphisms (SNPs), insertions, deletions, and structural rearrangements, each potentially contributing to phenotypic diversity and implicated in numerous diseases2. Hence, accurate variant calling is paramount for elucidating the genetic basis of diseases, understanding population genetics, and guiding personalized medicine initiatives. The field of bioinformatics has evolved rapidly to address the analytical challenges of variant calling3. Variant calling requires bioinformatics tools and computational resources to execute several key steps that aim to accurately identify genetic variants from sequencing data. First, sequenced reads are aligned to a reference genome; commonly used aligners are BWA4, Bowtie25 and Novoalign (Novocraft Technologies, Selangor, Malaysia). Then, to identify deviations from the reference sequence, the Genome Analysis Toolkit (GATK) is often used. GATK employs algorithms for base quality score recalibration, local realignment, and variant calling6. More recently, DeepVariant has introduced deep learning algorithms into variant calling pipelines7. Dynamic Read Analysis for GENomics (DRAGEN) (Illumina Inc., USA) processes and analyses WES data, with machine learning capabilities in specific modules within its pipelines.

A wide range of variant calling algorithms, tools, and pipelines are available, each employing different methodologies and assumptions. Benchmarking enables a systematic comparison of the performance and quality of these methods under standardized conditions, facilitating the identification of best practices and the development of guidelines for variant calling. The Genome in a Bottle (GIAB) Consortium and the National Institute of Standards and Technology (NIST) have compiled seven gold standard datasets (HG001–7) containing high-confidence variant calls attained from diverse sequencing technologies and library preparation methods8. A collaboration between the Global Alliance for Genomics and Health (GA4GH), GIAB, and PrecisionFDA defined the best practices and criteria for small variant benchmarking in a stratified manner, which allowed researchers to publicly test and validate their variant calling methods9,10. Hence, these gold standards have been used extensively for comparative analyses of variant caller performance11–16.

Biologists often rely on bioinformatics specialists to analyse NGS data. Recently, commercial software has emerged that does not require prior programming knowledge to perform variant calling analyses. However, there is a noticeable lack of benchmarking studies that evaluate and compare the performance, quality and limitations of these software. Hence, we aimed to evaluate the performance, quality, and limitations of four software across three GIAB individual WES gold standard datasets (HG001–3). The comparisons from this study could assist scientists in choosing software for their analysis of genomic data, without the need for programming or additional labour. Moreover, these user-friendly software improve the accessibility of NGS analysis for smaller laboratories and pathologists involved in genomic testing.

Methods

Data acquisition

For our analysis, WES sequence reads from three GIAB individuals were selected and retrieved from the NCBI Sequence Read Archive (SRA)17. The widely used and well-known HG001 sample is from a female of Caucasian ancestry, while HG002 and HG003 are Ashkenazim Jewish males. The SRA accessions for HG001, HG002, and HG003 are ERR1905890 (https://www.ncbi.nlm.nih.gov/sra/ERX1966271[accn]), SRR2962669 (https://www.ncbi.nlm.nih.gov/sra/SRX1453593[accn]), and SRR2962692 (https://www.ncbi.nlm.nih.gov/sra/SRX1453614[accn]) respectively. All three samples had exome libraries prepared with the Agilent SureSelect Human All Exon Kit V5 (Agilent Technologies, CA), and paired-end sequencing with a minimum read length of 125 bp was performed. The target region BED file was downloaded from Agilent (https://earray.chem.agilent.com/suredesign, accessed on 15 January 2024).

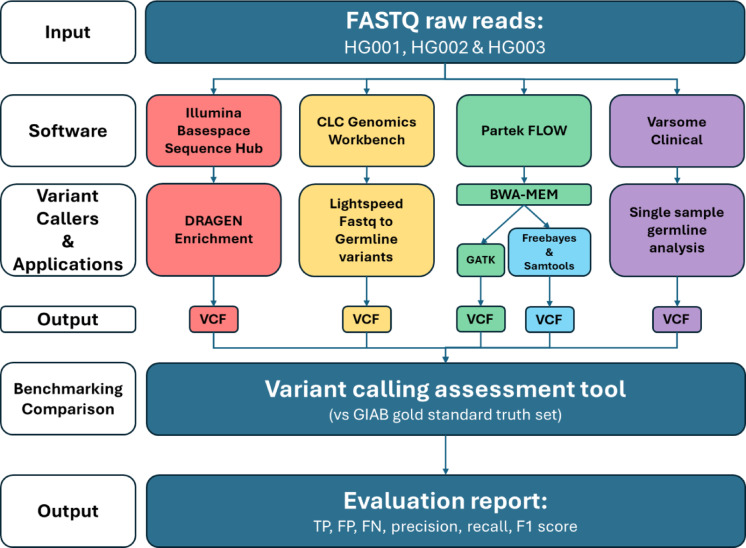

Software and variant calling

Four software and their respective variant calling tools/applications were utilised: Illumina BaseSpace Sequence Hub (Illumina Inc., USA) with DRAGEN Enrichment (Illumina); CLC Genomics Workbench (https://digitalinsights.qiagen.com) with LightSpeed Fastq to Germline Variants (CLC); Partek Flow using either GATK (Partek (GATK)) or Freebayes and Samtools (Partek (F + S)); and Varsome Clinical with single sample germline analysis (Varsome) (Fig. 1). These software were selected as they do not require programming. The tools, applications, and software used in this study, with their respective versions, are listed in Table 1. First, all raw sequencing data from the samples were imported and run on the variant calling tools/applications mentioned above. Samples were aligned to human reference genome version GRCh38, and variant calling was done in single sample mode on default settings.

Fig. 1.

Benchmarking workflow of four variant calling software. GIAB, Genome in a Bottle; TP, True Positives; FP, False Positives; FN, False Negatives.

Table 1.

List of software and tools used for analysis.

| Variant calling software | Software version | Tool/application | Version | Pipelines | Computing location | Price model | Cost (SGD) |
|---|---|---|---|---|---|---|---|
| Illumina BaseSpace Sequence Hub | N.A. | DRAGEN Enrichment | 4.2.7 | DRAGEN with machine learning | Cloud | Annual subscription + credits | $735<sup>d</sup> |
| CLC Genomics Workbench | 23.0.5 | LightSpeed Fastq to Germline Variants | 23.1 | Unknown | Cloud<sup>a</sup> or Local PC<sup>b</sup> | Annual subscription | $22,249<sup>e</sup> or $8,450<sup>f</sup> |
| Partek Flow | 11.0.24.0325 | BWA-MEM | 0.7.17 | Manual configuration | Cloud<sup>c</sup> | Annual subscription | $7,828 |
| | | GATK | 4.2 | | | | |
| | | FreeBayes | 1.3.6 | | | | |
| | | Samtools | 1.4.1 | | | | |
| Varsome Clinical | 11.14 | Single sample germline analysis | Unknown | Sentieon (bwa-mem) aligner & Sentieon DNAscope caller | Cloud | Charged by base count or variant count per sample | $2,490<sup>g</sup> |

<sup>a</sup>User must already be subscribed to Amazon Web Services to use this software for cloud analysis.

<sup>b</sup>Intel Xeon Gold 5218 @ 2.30 GHz x 32, 64 GB RAM, Linux OS. Analysis was performed on this local PC in this study.

<sup>c</sup>40 cores, 503.80 GB RAM, Linux OS.

<sup>d</sup>Cost is for an annual subscription, which comes with credits and 1 TB storage; additional credits can be purchased separately ($1,463 for 1,000 credits).

<sup>e</sup>Cost is for CLC Genomics Workbench Desktop Premium version and Cloud Module.

<sup>f</sup>Cost is for CLC Genomics Workbench Desktop Premium version.

<sup>g</sup>Estimated cost for this project. Cost can vary due to tiered pricing for different increments of base/variant counts.

A manual pipeline was used for Partek Flow: firstly, imported FASTQ files were identified as unaligned reads and alignment was performed using BWA-MEM on default settings. Next, variant calling was performed either with GATK or Freebayes and Samtools. For Freebayes and Samtools, variants from each caller were unionised to produce a single VCF file which was used for evaluation.
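The union step for the Freebayes and Samtools calls can be pictured with a minimal sketch. It assumes each call is reduced to a (chromosome, position, REF, ALT) key and that two calls are the same variant only when their keys match exactly. The function name and the toy records are hypothetical, and this is not the Partek Flow implementation, which may normalise or merge records differently.

```python
from typing import Iterable, List, Tuple

# A variant is keyed by (chromosome, 1-based position, REF allele, ALT allele).
Variant = Tuple[str, int, str, str]


def union_calls(*call_sets: Iterable[Variant]) -> List[Variant]:
    """Return the sorted union of several call sets, dropping exact duplicates."""
    merged = set()
    for calls in call_sets:
        merged.update(calls)
    # Sort by chromosome name, then position and alleles, for a deterministic output.
    return sorted(merged, key=lambda v: (v[0], v[1], v[2], v[3]))


# Hypothetical toy calls, for illustration only.
freebayes_calls = [("chr1", 1000, "A", "G"), ("chr1", 2000, "CT", "C")]
samtools_calls = [("chr1", 1000, "A", "G"), ("chr2", 500, "G", "T")]

unionised = union_calls(freebayes_calls, samtools_calls)
print(len(unionised))  # 3 distinct variants
```

A union keeps every variant reported by at least one caller, so false positives from either caller carry over into the combined set.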

All software are commercial and are allowed for academic use. Illumina, Partek, and Varsome are web-based software and typically offered as a Software-as-a-Service (SaaS). CLC is a standalone software installed locally on a desktop computer.

Support and documentation for the software are as follows: CLC, https://resources.qiagenbioinformatics.com/manuals/clcgenomicsworkbench/2106/User_Manual.pdf; Illumina, https://support.illumina.com/sequencing/sequencing_software/dragen-bio-it-platform/documentation.html; Partek, https://help.partek.illumina.com/partek-flow/user-manual; Varsome, https://docs.varsome.com/en/analysis-of-the-results.

Benchmarking of variant calling software

The VCF files generated by the four variant calling software were then evaluated using the Variant Calling Assessment Tool (VCAT) against the latest GIAB gold standard high-confidence regions (v4.2.1), filtered by the exome capture kit regions. VCAT version 4.1.0 is available within the Illumina BaseSpace Sequence Hub (Illumina Inc., USA). The gold standard truth-set VCFs prebuilt into VCAT are from NIST Genome in a Bottle version 4.2.1, and the calls for all samples (HG001–3) were compared against these truth sets. Briefly, hap.py preprocesses the query and truth VCF files, then generates a subset of variants in the specified region for each query VCF and BED file combination. Subsequently, hap.py calls BCFtools to obtain counts of SNVs and indels for each variant subset and calls vcfeval to compare each variant subset to the gold standard truth sets. VCAT used the following tools: BCFtools v1.9, BEDtools v2.26.0, Haplotype compare v0.3.15, tabix and bgzip from HTSlib v1.9, and rtg-tools v3.12.1. Comparison outputs from VCAT are total true positives (TP), total false positives (FP), total false negatives (FN), SNV TP, SNV FP, SNV FN, indel TP, indel FP and indel FN. Performance indicators such as precision, recall, F1 scores and non-assessed variants were also calculated and output by VCAT. Definitions for benchmarking terminologies are shown in Supplementary Table 1.
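The relationship between the TP, FP and FN counts and the reported performance scores can be sketched as follows, using the standard definitions of precision, recall and F1. The function name and the counts are hypothetical, and the sketch does not reproduce how hap.py or VCAT handle genotype mismatches or partially matching records.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall and F1 (as fractions) from TP, FP and FN counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts, not taken from this study.
p, r, f1 = precision_recall_f1(tp=950, fp=50, fn=25)
print(f"precision={p:.4f} recall={r:.4f} F1={f1:.4f}")
```

Because F1 is a harmonic mean, it is pulled towards the lower of the two scores, so a caller with high recall but low precision still receives a low F1.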

Data analysis and graphing

Comparison outputs were processed using Python 3.10.12 with the pandas library. UpSet plots were generated using matplotlib and upsetplot libraries.
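The counts that an UpSet plot displays are the sizes of the exclusive intersections, that is, the number of variants called by exactly one particular combination of tools. A minimal standard-library sketch of that bookkeeping is shown below. It does not use pandas or upsetplot and is not the analysis code from this study; the tool sets and variant identifiers are hypothetical.

```python
from collections import Counter
from typing import Dict, FrozenSet, Hashable, Set


def exclusive_intersections(calls: Dict[str, Set[Hashable]]) -> Dict[FrozenSet[str], int]:
    """Count variants by the exact combination of tools that called them."""
    membership: Dict[Hashable, Set[str]] = {}
    for tool, variants in calls.items():
        for variant in variants:
            membership.setdefault(variant, set()).add(tool)
    counts = Counter(frozenset(tools) for tools in membership.values())
    return dict(counts)


# Hypothetical true-positive sets keyed by tool name, for illustration only.
tp_calls = {
    "Illumina": {"v1", "v2", "v3", "v4"},
    "CLC": {"v1", "v2", "v3"},
    "Varsome": {"v1", "v2"},
}
result = exclusive_intersections(tp_calls)
for tools, n in sorted(result.items(), key=lambda kv: -kv[1]):
    print(sorted(tools), n)
```

Each variant is counted in exactly one combination, so the intersection sizes sum to the number of distinct variants across all tools.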

Results

WES data benchmarking strategy

GIAB reference standards aim to provide high-quality, well-characterized reference genomes that serve as benchmarks for evaluating the accuracy and performance of various genome sequencing technologies. Our study used 3 GIAB reference standards and their respective truth sets. Our strategy for variant caller software benchmarking is shown in Fig. 1.

HG001, HG002 and HG003 have an average coverage of 613, 240, and 203 respectively, and average quality scores of 38.81, 36.32, and 36.31 respectively (Supplementary Fig. 1). To ensure consistency in downstream analysis, we chose datasets that were generated with identical capture kits. The average coverage and average base quality score for HG001 were higher than those for HG002 and HG003 (Supplementary Fig. 1). This could be due to submissions to NCBI SRA by different authors and differences in sequencing depth.

Analysis of variants called by software

Within the targeted regions of the capture kit, the SNV calling results for all software were comparable (Table 2). Across HG001, HG002, and HG003, the number of SNVs called per software ranged from 41,554 to 43,132. For indel calling, the software showed larger variation (Table 2), with the number of indels called per software ranging from 3,503 to 5,521. Variant calling software with GATK in their pipelines (Partek Flow and Varsome Clinical) produced little to no multi-nucleotide variants (MNVs) or other variant types besides SNVs and indels. As for the transition-to-transversion (Ts/Tv) ratio, all variant calling software produced values above 2.4.
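The Ts/Tv ratio reported here can be illustrated with a short sketch: a transition is a substitution between two purines (A and G) or two pyrimidines (C and T), and every other substitution is a transversion. The function name and the example SNVs are hypothetical, and the sketch assumes inputs restricted to the bases A, C, G and T.

```python
from typing import Iterable, Tuple

PURINES = {"A", "G"}
PYRIMIDINES = {"C", "T"}


def ts_tv_ratio(snvs: Iterable[Tuple[str, str]]) -> float:
    """Return the transition/transversion ratio for (REF, ALT) SNV pairs."""
    transitions = transversions = 0
    for ref, alt in snvs:
        ref, alt = ref.upper(), alt.upper()
        if ref == alt:
            continue  # not a substitution
        # A transition stays within one base class; a transversion crosses classes.
        same_class = ({ref, alt} <= PURINES) or ({ref, alt} <= PYRIMIDINES)
        if same_class:
            transitions += 1
        else:
            transversions += 1
    if transversions == 0:
        raise ValueError("Ts/Tv is undefined when there are no transversions")
    return transitions / transversions


# Hypothetical SNVs: 5 transitions and 2 transversions.
example = [("A", "G"), ("G", "A"), ("C", "T"), ("T", "C"),
           ("A", "G"), ("A", "C"), ("G", "T")]
print(ts_tv_ratio(example))  # 2.5
```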

Table 2.

VCF statistics from variant calling software analysis.

| Sample | SRA accession | Software/platform | Variant caller | SNVs | Indels | MNVs | Other | Ts/Tv |
|---|---|---|---|---|---|---|---|---|
| HG001 | ERR1905890 | Illumina | DRAGEN | 41,633 | 3,748 | 0 | 45,114 | 2.56 |
| | | CLC | Unknown | 41,602 | 3,865 | 484 | 54 | 2.57 |
| | | Partek | GATK | 43,112 | 4,314 | 0 | 12 | 2.48 |
| | | | Freebayes + Samtools | 42,843 | 4,642 | 950 | 183 | 2.46 |
| | | Varsome | GATK | 42,950 | 3,887 | 0 | 0 | 2.51 |
| HG002 | SRR2962669 | Illumina | DRAGEN | 41,725 | 3,719 | 0 | 45,235 | 2.56 |
| | | CLC | Unknown | 41,693 | 3,656 | 449 | 51 | 2.57 |
| | | Partek | GATK | 42,979 | 4,084 | 0 | 7 | 2.51 |
| | | | Freebayes + Samtools | 42,763 | 5,509 | 925 | 160 | 2.48 |
| | | Varsome | GATK | 42,816 | 3,611 | 0 | 0 | 2.52 |
| HG003 | SRR2962692 | Illumina | DRAGEN | 41,659 | 3,683 | 0 | 45,134 | 2.54 |
| | | CLC | Unknown | 41,554 | 3,597 | 465 | 51 | 2.54 |
| | | Partek | GATK | 43,132 | 4,063 | 0 | 8 | 2.48 |
| | | | Freebayes + Samtools | 42,624 | 5,521 | 991 | 173 | 2.46 |
| | | Varsome | GATK | 42,831 | 3,503 | 0 | 0 | 2.50 |

SNV, single nucleotide variant; Indels, insertion or deletion; MNV, multi-nucleotide variant; Ts/Tv, transition-transversion ratio.

Variant calling performance

To assess the benchmarking performance of the variant calling software, the targeted regions were analysed for true positives (TP), false positives (FP), and false negatives (FN) of SNVs and indels against GIAB gold standard truth sets using VCAT (Supplementary Table 2). Precision, recall and harmonic mean (F1) scores for SNVs and indels were then calculated and are presented in Table 3.

Table 3.

Benchmarked values from variant calling software analysis.

| Sample | SRA accession | Software/platform | Variant caller | SNV precision | SNV recall | SNV F1 | SNV non-assessed | Indel precision | Indel recall | Indel F1 | Indel non-assessed | Average run time (min) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HG001 | ERR1905890 | Illumina | DRAGEN | 99.87% | 99.72% | 99.80% | 8.77% | 97.92% | 97.65% | 97.79% | 26.96% | 35.81 |
| | | CLC | Unknown | 98.74% | 99.23% | 98.99% | 9.94% | 88.19% | 91.56% | 89.84% | 27.83% | 25.63 |
| | | Partek | GATK | 97.86% | 99.23% | 98.54% | 10.97% | 85.79% | 94.70% | 90.03% | 30.37% | 1,782.05 |
| | | | Freebayes + Samtools | 96.23% | 99.22% | 97.70% | 10.88% | 69.05% | 92.70% | 79.15% | 24.95% | 769.13 |
| | | Varsome | GATK | 98.58% | 99.20% | 98.89% | 11.46% | 93.29% | 94.85% | 94.07% | 28.32% | 183.67 |
| HG002 | SRR2962669 | Illumina | DRAGEN | 99.87% | 99.33% | 99.60% | 6.97% | 97.27% | 96.15% | 96.71% | 19.89% | 29.21 |
| | | CLC | Unknown | 98.65% | 98.83% | 98.74% | 8.02% | 91.92% | 92.06% | 91.99% | 19.98% | 7.32 |
| | | Partek | GATK | 97.92% | 98.86% | 98.39% | 8.74% | 87.14% | 93.61% | 90.26% | 21.59% | 247.80 |
| | | | Freebayes + Samtools | 96.49% | 98.84% | 97.65% | 8.71% | 56.89% | 91.44% | 70.14% | 16.50% | 347.56 |
| | | Varsome | GATK | 98.10% | 98.56% | 98.33% | 8.90% | 94.65% | 91.85% | 93.23% | 19.21% | 116.67 |
| HG003 | SRR2962692 | Illumina | DRAGEN | 99.92% | 99.41% | 99.66% | 7.82% | 96.54% | 96.41% | 96.47% | 23.24% | 28.51 |
| | | CLC | Unknown | 98.54% | 98.92% | 98.73% | 8.65% | 91.82% | 93.12% | 92.46% | 22.57% | 6.25 |
| | | Partek | GATK | 97.80% | 98.84% | 98.32% | 9.99% | 84.67% | 92.86% | 88.57% | 24.43% | 218.12 |
| | | | Freebayes + Samtools | 96.34% | 98.89% | 97.60% | 9.43% | 55.45% | 91.47% | 69.05% | 19.50% | 314.43 |
| | | Varsome | GATK | 97.98% | 98.42% | 98.20% | 10.00% | 94.13% | 90.48% | 92.27% | 22.55% | 111.00 |

SNV, single nucleotide variant; Indel, insertion or deletion; F1, harmonic mean of precision and recall.

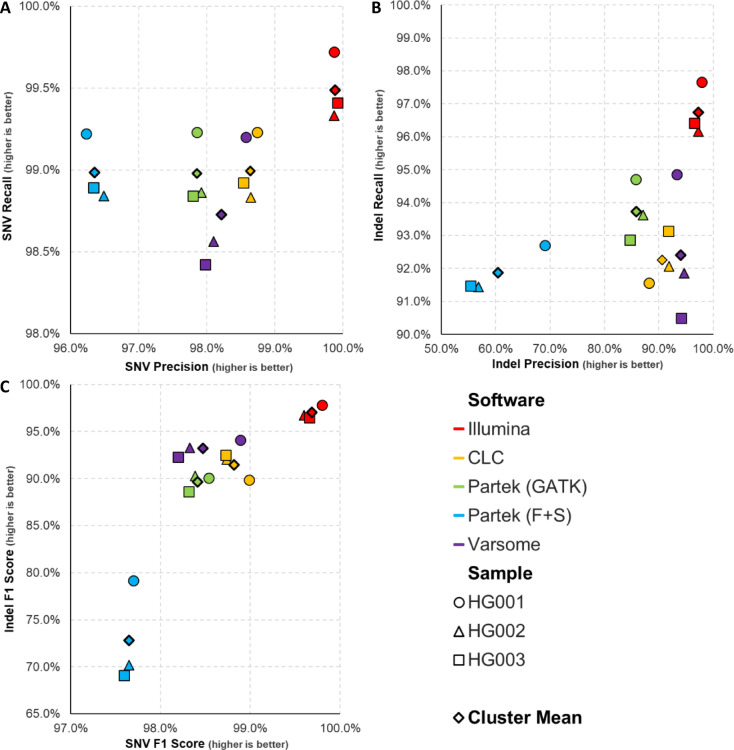

We plotted the benchmark performance for SNVs and indels separately as recall versus precision (Fig. 2A & B). For SNV calling, Illumina performed the best for all three samples, with a cluster mean of 99.89% for precision and 99.49% for recall (Fig. 2A; Table 3). This was followed by CLC, Partek (GATK), Partek (F + S), and Varsome, with SNV cluster means ranging from 96 to 98% for precision and around 98% for recall. For indel calling, Illumina also outperformed the other variant callers, with an indel precision cluster mean of 97.24% and an indel recall cluster mean of 96.74% (Fig. 2B; Table 3). Indel precision and recall were next highest for Varsome, then CLC and Partek (GATK), and lowest for Partek (F + S), which had an indel precision cluster mean of 60.5%.

Fig. 2.

Variant calling software benchmark performances. Scatter plots representing (A) SNV recall vs. precision, (B) Indel recall vs. precision, (C) Indel F1 score vs. SNV F1 score. (A,B) Cluster means were calculated by averaging precision scores for x-coordinates and recall scores for y-coordinates. (C) cluster means were calculated by averaging SNV F1 scores for x-coordinates and Indel F1 scores for y-coordinates.

Next, we analysed the harmonic mean performance of the variant callers by plotting indel F1 scores against SNV F1 scores (Fig. 2C; Table 3), with higher F1 scores indicating better performance. As expected from its SNV and indel precision and recall, Illumina outperformed the other variant calling software, with an indel F1 cluster mean of 96.99% and an SNV F1 cluster mean of 99.69%. CLC, Partek (GATK) and Varsome clustered closely, with indel F1 cluster means ranging from 89 to 93% and SNV F1 cluster means around 98%. Partek (F + S) showed the lowest indel F1 cluster mean (72.8%) but performed similarly to the rest for SNV F1 score.
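The cluster means quoted above are simple averages over the three samples, as described in the Fig. 2 legend. The sketch below recomputes them for Illumina from the values in Table 3; the dictionary layout and variable names are illustrative only and are not the authors' analysis code.

```python
from statistics import mean

# Illumina (DRAGEN) values for HG001, HG002 and HG003, copied from Table 3 (percent).
illumina = {
    "snv_precision": [99.87, 99.87, 99.92],
    "snv_recall": [99.72, 99.33, 99.41],
    "indel_precision": [97.92, 97.27, 96.54],
    "indel_recall": [97.65, 96.15, 96.41],
    "snv_f1": [99.80, 99.60, 99.66],
    "indel_f1": [97.79, 96.71, 96.47],
}

# A cluster mean is the average of one metric across the three samples.
cluster_means = {metric: round(mean(values), 2) for metric, values in illumina.items()}
for metric, value in cluster_means.items():
    print(f"{metric}: {value}%")
```

The printed values agree with the cluster means given in the text (99.89% and 99.49% for SNV precision and recall, 97.24% and 96.74% for indel precision and recall, and 99.69% and 96.99% for SNV and indel F1).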

For non-assessed SNVs and indels, lower scores indicate better performance, as they mean that fewer of the called variants fall outside the benchmark regions (Table 3). For non-assessed SNVs, Illumina had the lowest score, averaging 7.85%, while Varsome had the highest, averaging 10.12%.
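The idea behind the non-assessed score can be sketched as the percentage of called variants whose position falls outside the benchmark regions. This is an assumption made for illustration: the exact VCAT definition is given in Supplementary Table 1 and is not reproduced here, and the sketch treats every variant as a single position, whereas real tools also account for the span of indels. The function names, regions and positions are hypothetical.

```python
from bisect import bisect_right
from typing import Dict, Iterable, List, Tuple

Interval = Tuple[int, int]  # BED-style: 0-based start, end-exclusive


def in_regions(regions: Dict[str, List[Interval]], chrom: str, pos: int) -> bool:
    """True if a 1-based position lies inside any interval on that chromosome.

    Assumes intervals per chromosome are sorted by start and do not overlap.
    """
    intervals = regions.get(chrom, [])
    starts = [start for start, _ in intervals]
    i = bisect_right(starts, pos - 1) - 1  # convert to 0-based before the lookup
    return i >= 0 and intervals[i][0] <= pos - 1 < intervals[i][1]


def percent_non_assessed(regions: Dict[str, List[Interval]],
                         variants: Iterable[Tuple[str, int]]) -> float:
    """Percentage of variants that fall outside the benchmark regions."""
    variants = list(variants)
    if not variants:
        return 0.0
    outside = sum(1 for chrom, pos in variants if not in_regions(regions, chrom, pos))
    return 100.0 * outside / len(variants)


# Hypothetical regions and variant positions, for illustration only.
benchmark = {"chr1": [(100, 200), (500, 600)]}
calls = [("chr1", 150), ("chr1", 201), ("chr1", 550), ("chr2", 10)]
print(percent_non_assessed(benchmark, calls))  # 50.0
```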

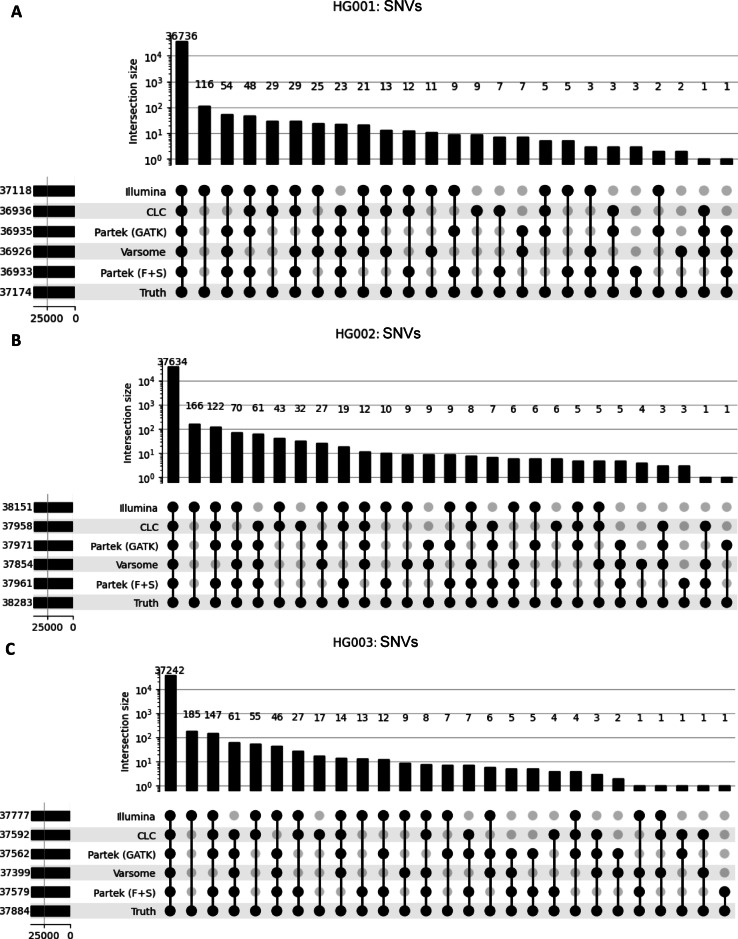

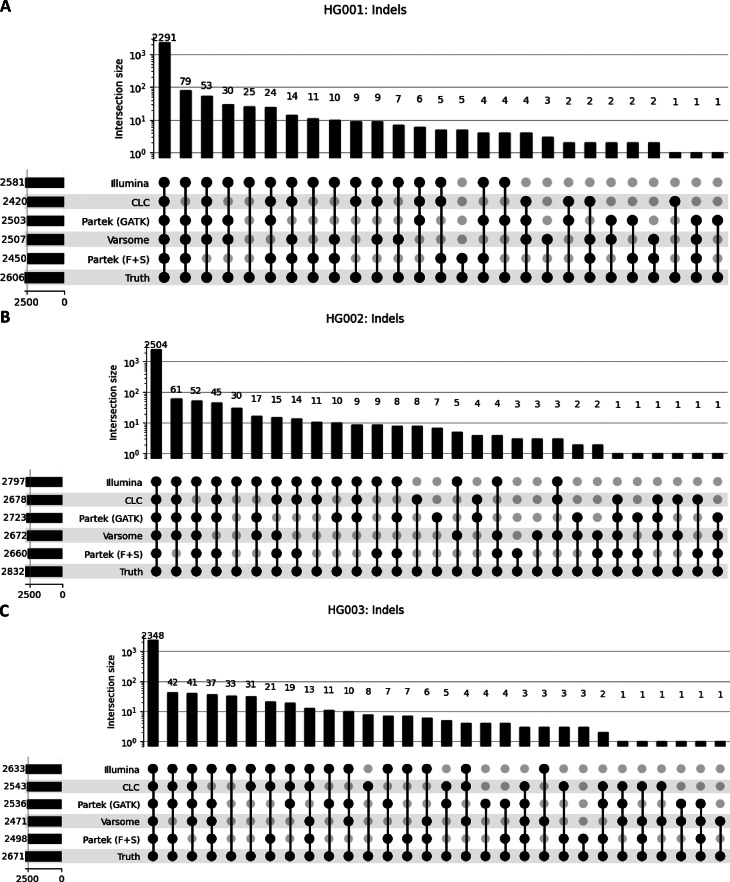

TP SNVs (Fig. 3A–C) and TP indels (Fig. 4A–C) called by each software across all three GIAB samples are illustrated in UpSet plots. Illumina consistently had the highest TP counts for SNVs and indels across all samples, while Varsome had the lowest TP counts for SNVs across all samples. For indels, CLC had the lowest TP count for HG001 (2420), Partek (F + S) had the lowest for HG002 (2660) and Varsome had the lowest for HG003 (2471). TP, FP, and FN variants for all samples called by each software were also compared (Supplementary Fig. 2). Overall, all software demonstrated 98–99% similarity in TP variants. However, Partek (F + S) exhibited a higher number of FP and FN across all three GIAB samples.

Fig. 3.

UpSet plot illustrating the intersections of true positive SNV calls across five different tools for three gold standard datasets: (A) HG001, (B) HG002, and (C) HG003. The intersections are based on true positive SNV counts, which were determined by comparing the SNVs called by each tool against the truth set. Only SNVs that were present in the truth set were included in the intersections. The vertical bars represent the number of variants in each overlapping set, as shown by the coloured dots and connecting lines in the lower panel. The total number of SNVs is denoted on the left, beside their respective software.

Fig. 4.

UpSet plot illustrating the intersections of true positive indel calls across five different tools for three gold standard datasets: (A) HG001, (B) HG002, and (C) HG003. The intersections are based on true positive indels, which were determined by comparing the indels called by each tool against the truth set. Only indels that were present in the truth set were included in the intersections. The vertical bars represent the number of variants in each overlapping set, as shown by the coloured dots and connecting lines in the lower panel. The total number of indels is denoted on the left, beside their respective software.

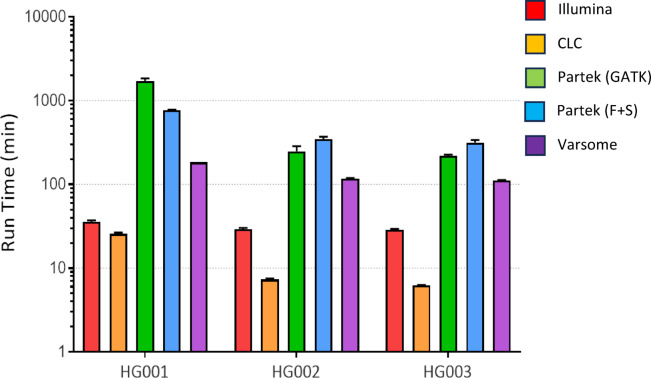

Variant calling duration

We evaluated the time taken to execute the variant calling pipelines from FASTQ to VCF (Fig. 5). Variant calling was performed in triplicate to check that run times were consistent for each analysis. CLC was the fastest software for HG001–3, with average run times ranging from 6.25 to 25.63 min (Table 3). Illumina came second, with average run times ranging from 28.51 to 35.81 min. Varsome had average run times of 111.00 to 183.67 min (about 2 to 3 h). The two Partek pipelines were the slowest: Partek (F + S) averaged 314.43 to 769.13 min (about 5 to 13 h) and Partek (GATK) averaged 218.12 to 1,782.05 min (about 3.6 to 29.7 h), with the longest runs for both on HG001.
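To compare run times on a common scale, the average run times in Table 3 can be converted from minutes to hours. The short sketch below does this for each pipeline; the dictionary and function names are illustrative and not part of the original analysis.

```python
# Average run times in minutes for HG001, HG002 and HG003, copied from Table 3.
run_times_min = {
    "Illumina": [35.81, 29.21, 28.51],
    "CLC": [25.63, 7.32, 6.25],
    "Partek (GATK)": [1782.05, 247.80, 218.12],
    "Partek (F + S)": [769.13, 347.56, 314.43],
    "Varsome": [183.67, 116.67, 111.00],
}


def range_in_hours(minutes):
    """Return (shortest, longest) run time converted to hours, rounded to 1 decimal."""
    return round(min(minutes) / 60, 1), round(max(minutes) / 60, 1)


for pipeline, minutes in run_times_min.items():
    low, high = range_in_hours(minutes)
    print(f"{pipeline}: {low} to {high} h")
```

For Partek (GATK) this gives 3.6 to 29.7 h, which matches the range quoted for Partek Flow in the Abstract.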

Fig. 5.

Histograms represent triplicate variant calling run times for the different software for each sample.

Additional software features

Tertiary analysis, which involves the annotation and interpretation of gene variants identified during secondary analysis, is essential for translating raw genomic data into meaningful biological insights like pathogenicity and clinical relevance. Here, we compare the tertiary analysis features of the four software used in this study, as well as their customisability for variant calling (Table 4).

Table 4.

List of additional features in variant calling software.

| Variant calling software | | Illumina BaseSpace Sequence Hub | CLC Genomics Workbench | Partek Flow | Varsome Clinical |
|---|---|---|---|---|---|
| Customisable pipeline<sup>a</sup> | | ✔ | ✔ | ✔ | ✖ |
| Tertiary analysis (filtering, annotation, classification) | Basic<sup>b</sup> | ✖ | ✔ | ✔ | ✔ |
| | Custom database | ✖ | ✖ | ✔ | ✖ |
| | VEP | ✖ | ✖ | ✔ | ✖ |
| | SnpEff | ✖ | ✖ | ✔ | ✖ |
| | ACMG/AMP | ✖ | ✖ | ✖ | ✔ |
| | CADD | ✖ | ✖ | ✖ | ✔ |
| | cBioPortal | ✖ | ✖ | ✖ | ✔ |
| | CIViC | ✖ | ✖ | ✖ | ✔ |
| | CKB | ✖ | ✖ | ✖ | ✔ |
| | ClinGen | ✖ | ✖ | ✖ | ✔ |
| | ClinVar | ✖ | ✖ | ✖ | ✔ |
| | COSMIC | ✖ | ✖ | ✖ | ✔ |
| | dbSNP | ✖ | ✖ | ✖ | ✔ |
| | FATHMM | ✖ | ✖ | ✖ | ✔ |
| | OMIM | ✖ | ✖ | ✖ | ✔ |
| | PharmGKB | ✖ | ✖ | ✖ | ✔ |
| | PMKB | ✖ | ✖ | ✖ | ✔ |

VEP, Variant Effect Predictor; SnpEff, SNP effect; ACMG, American College of Medical Genetics and Genomics; AMP, Association for Molecular Pathology; CADD, Combined Annotation Dependent Depletion; CIViC, Clinical Interpretation of Variants in Cancer; CKB, Clinical Knowledgebase; ClinGen, Clinical Genome Resource; Clinvar, Archive of interpretations of clinically relevant variants; COSMIC, Catalogue of Somatic Mutations in Cancer; dbSNP, Single Nucleotide Polymorphism database; FATHMM, Functional analysis through hidden Markov models; OMIM, Online Mendelian Inheritance in Man; PharmGKB, Pharmacogenomics Knowledge Base; PMKB, Precision Medicine Knowledge Base.

<sup>a</sup>Customisation of variant calling parameters for secondary analysis.

<sup>b</sup>Basic annotations to transcript information from RefSeq and Ensembl.

Illumina, CLC and Partek offer options to adjust variant calling parameters during secondary analysis, while Varsome operates as a non-customisable, click-and-run tool. Examples of customisable parameters include target BED region base padding, duplicate marking and quality filtering.

In terms of tertiary analysis, Illumina does not have their own tools/applications. CLC, Partek, and Varsome provide basic functionalities such as transcript and protein annotation from knowledge databases. Partek extends its capabilities by incorporating tools like Ensembl Variant Effect Predictor (VEP) and SnpEff, which annotate and predict the effects of mutations. Furthermore, Partek can annotate variants from custom databases such as dbSNP, 1000 Genomes, Catalogue of Somatic Mutations in Cancer (COSMIC), and ClinVar using VCF files. Varsome, on the other hand, leverages a comprehensive, cross-referenced knowledge base that includes resources from ClinVar, ClinGen, COSMIC, dbSNP, and many others.

Discussion

Benchmarking is essential for improving genomic science, developing new technologies, and ensuring their reliable use. In this study, we compared four variant calling software, assessing their performance based on precision, sensitivity and duration of analysis. Unlike previously published studies, we focused on variant calling software that do not require programming skills or a bioinformatician. This approach could assist biologists in making informed decisions when selecting software that provides the most reliable results from raw sequencing data. Our results showed that Illumina had the best performance in SNV and indel variant calling, with run times second only to CLC, while CLC, Partek (GATK), and Varsome had similar performance to one another.

Differences in run times and performance across the software are attributable to the use of different aligners and variant callers. Illumina, CLC, and Varsome use proprietary tools, namely DRAGEN Enrichment, the LightSpeed module, and Sentieon, respectively, while Partek Flow uses open-source programs such as BWA-MEM, GATK, Freebayes, and Samtools (Table 1). Although Sentieon (used by Varsome) is proprietary, it is built upon open-source programs for alignment (BWA-MEM) and variant calling (GATK) with machine learning models. Illumina, CLC and Varsome feature preset pipelines/workflows that streamline variant calling into a click-and-run process, whereas Partek Flow requires manual pipeline construction (see Methods for details). This manual setup in Partek Flow offers researchers the flexibility to experiment with different aligners and variant callers, allowing the pipeline to be customised to meet specific research needs and potentially optimised for unique experimental conditions or study objectives.

A separate study comparing Sentieon and GATK for variant calling found similar precision and recall between the two, but Sentieon was significantly faster than GATK without compromising accuracy18. In our study, Varsome (using Sentieon) and Partek (using GATK) had similar performance in SNV calling but not in indel calling. In terms of run time, our findings align with that study, showing that Varsome outperforms Partek (GATK) in speed, even when tertiary analysis is included in the Varsome pipeline.

Our findings indicate that Illumina’s DRAGEN outperforms the other software using GATK in this study, consistent with a recent benchmarking study on whole genome sequencing that compared the performance of the DRAGEN and GATK pipelines19. Additionally, co-developed GATK-DRAGEN analysis methods also outperformed standard GATK workflows in terms of speed and accuracy20. In contrast, others have found that Freebayes and Samtools performed better than GATK in calling SNPs on Illumina sequencers, while GATK outperformed them in indel calling21. We took a simpler approach to combining variant calls from Freebayes and Samtools than the method used in another study22, yet still found that GATK performs better.

When conducting NGS data analysis, it is essential to recognise that the process should not be limited to secondary analysis alone. Tertiary analysis is indispensable for variant annotation and interpretation, and it goes beyond basic analysis to include advanced analysis such as data integration across different omics layers, biological pathway and functional analysis, and clinical and translational studies. These are useful for biomarker discovery and the development of personalised treatment strategies23–25. Varsome’s additional features for tertiary analysis stood out by incorporating a broader range of databases and the ability to automatically classify variants, in contrast to CLC and Partek. While both CLC and Partek offer tertiary analysis, CLC provides only basic protein and transcript annotation. Varsome’s classification of variants follows the guidelines set by the American College of Medical Genetics and Genomics (ACMG) and the Association for Molecular Pathology (AMP), making it applicable across various gene inheritance patterns, and diseases26. Additionally, Varsome’s click-and-run single sample germline analysis integrates tertiary analysis within its workflow. The goal of tertiary analysis is to identify which sequence variants are pertinent to a patient’s clinical condition27.

Although Illumina does not offer its own tools for tertiary analysis, VCF files generated by Illumina can be used with other software that provide tertiary analysis, such as Varsome and Qiagen’s Clinical Insights (https://digitalinsights.qiagen.com). For software with limited functionality, like CLC, which only provides basic annotation, users can manually pair VCF data with online databases that offer more comprehensive tertiary analysis to complete their NGS data analysis. Databases such as ClinVar and the Precision Oncology Knowledge Base (OncoKB) can be used to analyse variants for clinical actionability and therapeutic implications, or to annotate biological and clinical information28–30.

Analysis of NGS data requires a substantial amount of computing power, and such analysis is typically performed on a reasonably powerful computer (PC) or on cloud servers such as Google or Amazon. All four software in our study can run in the cloud, and CLC additionally has the option of performing analyses locally on a PC. Cloud-based software is useful for scientists who do not have access to a suitable PC, as it eliminates the upfront cost of purchasing and maintaining one. The pricing models and costs for these software in Singapore are listed in Table 1. CLC and Partek offer annual subscriptions that cover unlimited analyses, samples, variants or bases. Illumina charges both an annual subscription and credits used for computing or analysis. Varsome’s fees are based on the number of bases per sample per analysis from FASTQ to VCF, which includes tertiary analysis. If only tertiary analysis is needed, Varsome’s fees are based on the number of variants per sample.

One limitation of our study is that it only focused on sequencing data generated from Agilent’s capture kit and sequenced on Illumina’s platform, which may not reflect the performance of variant callers on data from other sequencing platforms and capture kits. Different platforms and capture kits have different systematic sequencing errors, which can help differentiate between true and false positives31,32. Moreover, different aligners and variant callers perform differently across diverse sequencing technologies21. These discrepancies in systematic errors and performance limit the broader applicability of our findings. Furthermore, the use of only three GIAB datasets for benchmarking variant calling tools limits our ability to generalize findings to broader populations and to detect rare variants. The limited sample diversity and size may reduce the accuracy of evaluating tool effectiveness across different genomic regions and conditions. Therefore, a larger and more diverse sample set (e.g. non-GIAB samples, data from different sequencing platforms) is needed to confirm the robustness of the best-performing variant callers. The GIAB gold standard truth set used in this study incorporates data from multiple sequencing technologies and computational methods to address sequencing challenges such as repetitive DNA sequences and AT/GC-rich regions33. However, these benchmarks primarily focus on SNVs and short indels, leaving structural variants (SVs) unaddressed. SVs, which involve duplications, deletions, or inversions of at least 1 kilobase of DNA, present significant sequencing difficulties34. Long-read sequencing coupled with advanced computational tools can overcome these challenges, improving the detection of SVs and analysis accuracy35,36. Currently, there are no commercially available non-programming SV callers; however, GIAB gold standard truth sets for SV calling have been published37,38. Future benchmarking studies could explore SV calling performance as non-programming software and gold standards become available.

As new user-friendly bioinformatics software continues to emerge, it is imperative that these tools are benchmarked. Our study found that Illumina’s DRAGEN Enrichment analysis was the most sensitive and accurate for WES variant calling. However, comprehensive variant analysis often requires integration with tertiary analysis using software such as Varsome Clinical, which provides detailed annotation and classification. In conclusion, these findings provide valuable insights for researchers and clinicians selecting software for NGS secondary analysis. Moreover, no-programming variant calling software can benefit smaller laboratories, clinics and biologists in developing countries, allowing them to process NGS data effectively without the need for an expensive PC or additional bioinformatics expertise.
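For readers who wish to compare tools on their own data, the sensitivity (recall) and precision discussed here follow directly from the true positive (TP), false positive (FP) and false negative (FN) counts obtained when a call set is compared against a truth set. The following is a minimal sketch of the standard definitions, not the VCAT implementation; the `CallCounts` class, the function names and the example counts are hypothetical and are not results from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CallCounts:
    """Variant counts from comparing a call set against a truth set."""
    tp: int  # true positives: called variants present in the truth set
    fp: int  # false positives: called variants absent from the truth set
    fn: int  # false negatives: truth-set variants that were not called


def precision(c: CallCounts) -> float:
    """Fraction of called variants that are correct: TP / (TP + FP)."""
    called = c.tp + c.fp
    return c.tp / called if called else 0.0


def recall(c: CallCounts) -> float:
    """Fraction of truth-set variants recovered (sensitivity): TP / (TP + FN)."""
    truth = c.tp + c.fn
    return c.tp / truth if truth else 0.0


def f1_score(c: CallCounts) -> float:
    """Harmonic mean of precision and recall."""
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts for illustration only; not results from this study.
example = CallCounts(tp=990, fp=10, fn=30)
print(f"precision={precision(example):.4f}")
print(f"recall={recall(example):.4f}")
print(f"F1={f1_score(example):.4f}")
```

Reporting precision and recall separately, as done in this study, shows whether a tool's errors are mainly spurious calls or missed variants, which a single combined score would hide.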

Acknowledgements

This study was supported by a grant from the National Medical Research Council of Singapore (MOH-OFIRG19nov-0019) awarded to Ann Lee.

Author contributions

A.L. and M.W. conceived the study. M.W. and B.L. performed the data analysis. M.H. and N.Y.L. assisted in writing and reviewing. All authors wrote, reviewed and approved the final manuscript.

Data availability

The datasets used in the current study are publicly available from the NCBI Sequence Read Archive (https://www.ncbi.nlm.nih.gov/sra), and further information can be found in the Methods section.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Ng, S. B. et al. Targeted capture and massively parallel sequencing of 12 human exomes. Nature 461, 272–276 (2009).

- 2. Koboldt, D. C. Best practices for variant calling in clinical sequencing. Genome Med. 12, 91 (2020).

- 3. Pereira, R., Oliveira, J. & Sousa, M. Bioinformatics and computational tools for next-generation sequencing analysis in clinical genetics. J. Clin. Med. 9, 132 (2020).

- 4. Li, H. & Durbin, R. Fast and accurate short read alignment with Burrows–Wheeler transform. Bioinformatics 25, 1754–1760 (2009).

- 5. Langmead, B. & Salzberg, S. L. Fast gapped-read alignment with bowtie 2. Nat. Methods 9, 357–359 (2012).

- 6. McKenna, A. et al. The genome analysis toolkit: A mapreduce framework for analyzing next-generation DNA sequencing data. Genome Res. 20, 1297–1303 (2010).

- 7. Poplin, R. et al. Scaling accurate genetic variant discovery to tens of thousands of samples. bioRxiv 10.1101/201178 (2017).

- 8. Zook, J. M. et al. Extensive sequencing of seven human genomes to characterize benchmark reference materials. Sci. Data 3, 160025 (2016).

- 9. Zook, J. M. et al. An open resource for accurately benchmarking small variant and reference calls. Nat. Biotechnol. 37, 561–566 (2019).

- 10. Olson, N. D. et al. PrecisionFDA truth challenge V2: calling variants from short and long reads in difficult-to-map regions. Cell Genomics 2, 100129 (2022).

- 11. Kim, S. et al. Strelka2: fast and accurate calling of germline and somatic variants. Nat. Methods 15, 591–594 (2018).

- 12. Chen, J., Li, X., Zhong, H., Meng, Y. & Du, H. Systematic comparison of germline variant calling pipelines cross multiple next-generation sequencers. Sci. Rep. 9, 9345 (2019).

- 13. Supernat, A., Vidarsson, O. V., Steen, V. M. & Stokowy, T. Comparison of three variant callers for human whole genome sequencing. Sci. Rep. 8, 17851 (2018).

- 14. Zhao, S., Agafonov, O., Azab, A., Stokowy, T. & Hovig, E. Accuracy and efficiency of germline variant calling pipelines for human genome data. Sci. Rep. 10, 20222 (2020).

- 15. Krusche, P. et al. Best practices for benchmarking germline small-variant calls in human genomes. Nat. Biotechnol. 37, 555–560 (2019).

- 16. Barbitoff, Y. A., Abasov, R., Tvorogova, V. E., Glotov, A. S. & Predeus, A. V. Systematic benchmark of state-of-the-art variant calling pipelines identifies major factors affecting accuracy of coding sequence variant discovery. BMC Genom. 23, 155 (2022).

- 17. Leinonen, R., Sugawara, H. & Shumway, M. The sequence read archive. Nucleic Acids Res. 39, D19–D21 (2011).

- 18. Kendig, K. I. et al. Sentieon DNASeq variant calling workflow demonstrates strong computational performance and accuracy. Front. Genet. 10, 736 (2019).

- 19. Betschart, R. O. et al. Comparison of calling pipelines for whole genome sequencing: an empirical study demonstrating the importance of mapping and alignment. Sci. Rep. 12, 21502 (2022).

- 20. Alganmi, N. & Abusamra, H. Evaluation of an optimized germline exomes pipeline using BWA-MEM2 and Dragen-GATK tools. PLoS One 18, e0288371 (2023).

- 21. Hwang, S., Kim, E., Lee, I. & Marcotte, E. M. Systematic comparison of variant calling pipelines using gold standard personal exome variants. Sci. Rep. 5, 17875 (2015).

- 22. Cantarel, B. L. et al. BAYSIC: a bayesian method for combining sets of genome variants with improved specificity and sensitivity. BMC Bioinform. 15, 104 (2014).

- 23. Hasin, Y., Seldin, M. & Lusis, A. Multi-omics approaches to disease. Genome Biol. 18, 83 (2017).

- 24. Pal, M., Muinao, T., Boruah, H. P. D. & Mahindroo, N. Current advances in prognostic and diagnostic biomarkers for solid cancers: detection techniques and future challenges. Biomed. Pharmacother. 146, 112488 (2022).

- 25. Mosele, F. et al. Recommendations for the use of next-generation sequencing (NGS) for patients with metastatic cancers: a report from the ESMO precision medicine working group. Ann. Oncol. 31, 1491–1505 (2020).

- 26. Li, M. M. et al. Standards and guidelines for the interpretation and reporting of sequence variants in cancer. J. Mol. Diagn. 19, 4–23 (2017).

- 27. Gargis, A. S. et al. Good laboratory practice for clinical next-generation sequencing informatics pipelines. Nat. Biotechnol. 33, 689–693 (2015).

- 28. Suehnholz, S. P. et al. Quantifying the expanding landscape of clinical actionability for patients with cancer. Cancer Discov. 14, 49–65 (2024).

- 29. Chakravarty, D. et al. OncoKB: A precision oncology knowledge base. JCO Precis. Oncol. 1–16, 10.1200/PO.17.00011 (2017).

- 30. Landrum, M. J. et al. ClinVar: public archive of relationships among sequence variation and human phenotype. Nucleic Acids Res. 42, D980–D985 (2014).

- 31. Zook, J. M. et al. Integrating human sequence data sets provides a resource of benchmark SNP and indel genotype calls. Nat. Biotechnol. 32, 246–251 (2014).

- 32. Xu, Y. et al. A new massively parallel nanoball sequencing platform for whole exome research. BMC Bioinform. 20, 153 (2019).

- 33. Wagner, J. et al. Benchmarking challenging small variants with linked and long reads. Cell Genomics 2, 100128 (2022).

- 34. Freeman, J. L. et al. Copy number variation: new insights in genome diversity. Genome Res. 16, 949–961 (2006).

- 35. Logsdon, G. A., Vollger, M. R. & Eichler, E. E. Long-read human genome sequencing and its applications. Nat. Rev. Genet. 21, 597–614 (2020).

- 36. Treangen, T. J. & Salzberg, S. L. Repetitive DNA and next-generation sequencing: computational challenges and solutions. Nat. Rev. Genet. 13, 36–46 (2012).

- 37. Chapman, L. M. et al. A crowdsourced set of curated structural variants for the human genome. PLoS Comput. Biol. 16, e1007933 (2020).

- 38. Zook, J. M. et al. A robust benchmark for detection of germline large deletions and insertions. Nat. Biotechnol. 38, 1347–1355 (2020).
