Abstract

Qualitative research is important to advance health equity as it offers nuanced insights into structural determinants of health inequities, amplifies the voices of communities directly affected by health inequities, and informs community-based interventions. The scale and frequency of public health crises have accelerated in recent years (e.g., pandemic, environmental disasters, climate change). The field of public health research and practice would benefit from timely and time-sensitive qualitative inquiries for which a practical approach to qualitative data analysis (QDA) is needed. One useful QDA approach stemming from sociology is flexible coding. We discuss our practical experience with a team-based approach using flexible coding for qualitative data analysis in public health, illustrating how this process can be applied to multiple research questions simultaneously or asynchronously. We share lessons from this case study, while acknowledging that flexible coding has broader applicability across disciplines. Flexible coding provides an approachable step-by-step process that enables collaboration among coders of varying levels of experience to analyze large datasets. It also serves as a valuable training tool for novice coders, something urgently needed in public health. The structuring enabled through flexible coding allows for prioritizing urgent research questions, while preparing large datasets to be revisited many times, facilitating secondary analysis. We further discuss the benefit of flexible coding for increasing the reliability of results through active engagement with the data and the production of multiple analytical outputs.

Keywords: qualitative data analysis, flexible coding, health equity, research methodology, secondary data analysis

INTRODUCTION

Health equity research aims to understand and improve the well-being of communities that are systematically excluded from healthcare and public health resources. Leading public health scholars have championed strengthening qualitative research science (Griffith et al., 2017, 2023; Shelton et al., 2022; Stickley et al., 2022; Teti et al., 2020), recognizing it as a valuable tool for advancing public health and health equity. Qualitative approaches enable researchers to obtain deep contextual understandings of the pathways by which social determinants of health and inequities in these factors affect individual and community health (Adkins-Jackson et al., 2022). They offer nuanced perspectives on the complexities of how individuals and communities experience and overcome these inequities. By amplifying the voices of those directly impacted by structural inequities, qualitative research offers potential to ground interventions in individual and community-level lived experiences (Griffith et al., 2023; Jeffries et al., 2019; Shelton et al., 2017, 2022), a context that might be overlooked with strictly quantitative methods.

Despite the growing recognition of qualitative research’s utility for health equity, leading public health journals tend to publish more quantitative research (Stickley et al., 2022). Many researchers face barriers to applying qualitative methodologies, including the time and resources needed for data collection and analysis and limited or inadequate training experiences (Silverio et al., 2020; Vindrola-Padros et al., 2020). Further, team-based analysis of large qualitative datasets presents additional challenges, including recruiting and training team members, ensuring effective coordination and alignment on goals and methods, and maintaining coding consistency (Beresford et al., 2022).

The ability of qualitative research to illuminate structural drivers and solutions to health inequities increases the demand for methodological guidance in public health. Valuable guidance exists on building collaborative teams to analyze large datasets within time constraints (Bates et al., 2023; Giesen & Roeser, 2020; Vindrola-Padros et al., 2020), transitioning to methods that leverage virtual tools and support geographically dispersed teams (Roberts et al., 2021; Singh et al., 2022), and coding (Beresford et al., 2022; Locke et al., 2022), a common, time-consuming qualitative data analysis (QDA) technique across various disciplines. However, little step-by-step guidance exists on coding qualitative data in a way that can effectively answer urgent public health questions in a timely manner. Furthermore, while there is pragmatic guidance for novice health equity researchers and practitioners on qualitative analytic strategies (Saunders et al., 2023), there is insufficient guidance on managing large, multifaceted projects or datasets involving teams with varying levels of expertise.

We need enhanced strategies to make qualitative methodologies more accessible and applicable in health equity research. This will enable qualitative and quantitative data to be used in partnership to provide a more holistic understanding of the social determinants of health by elucidating how health experiences are shaped and providing a deeper contextual understanding of the relationship between structural factors and health outcomes (Adkins-Jackson et al., 2022). Our field could benefit from developing and disseminating methods for analyzing large qualitative datasets and establishing structures that enable the efficient use of the data for multiple analyses.

Sociology scholars have proposed flexible coding as an effective approach for multidisciplinary teams working with large datasets, leveraging the capabilities of qualitative data analysis software (QDAS) (Deterding & Waters, 2021). In theory, flexible coding accommodates larger qualitative datasets and diverse team involvement with interdisciplinary perspectives. Flexible coding structures data in a way that streamlines collaborative coding and enables reanalysis. This structuring also facilitates involving novice coders in robust research, and enhances interpretation, dissemination, and translation of research findings through the production of multiple outputs throughout the analysis process. Moreover, this structure enhances analysis efficiency, facilitating community-based participatory research (CBPR). By segmenting the data, community-academic partnerships can conduct prioritized, focused, and timely review of specific topics based on community needs, focusing on the most urgent research questions. As a result, the process becomes more efficient and responsive, accelerating the transfer of knowledge into practical application. Yet, there is a paucity of practical guidance on applying flexible coding guidelines and managing the process for practice-based fields such as public health, and research questions and approaches that involve community-academic partnerships.

This paper addresses these gaps by outlining the use of the flexible coding method for nimble community-based participatory research seeking to leverage large qualitative datasets and multidisciplinary teams. It shows how flexible coding supports timely and rigorous qualitative data analyses that are poised to inform policy, systems, and environmental transformations to promote health equity. As we demonstrate in the following sections, employing a flexible coding approach facilitates an accessible and meaningful engagement process for community-academic partnerships to analyze qualitative data and addresses challenges many qualitative teams face. To advance the application of flexible coding for time-sensitive health equity research, we provide a comprehensive overview of our step-by-step methods. This process allowed us to address multiple research questions concurrently, organizing and equipping our team with practical tools for rigorous analysis. While we present a public health research project as a case study, we recognize and encourage this guide’s application across diverse disciplines engaging in qualitative analysis.

SETTING

Project context

To provide context for our approach, we use examples from two ongoing studies from our research partnership that use a tailored flexible coding approach. Guided by the National Institute on Minority Health and Health Disparities (NIMHD) Research Framework and the Multisystemic Promotores/Community Health Worker Model (Matthew et al., 2017; Montiel et al., 2021), these studies aimed to develop a roadmap for community-centered recovery following the COVID-19 pandemic. These studies emphasized community-based participatory research approaches (Israel et al., 1998), with community partners guiding every stage: research question identification, participant recruitment, data collection, analysis, and dissemination.

First, the Community Activation to TrAnsform Local sYSTems (CATALYST) study is a multi-year study that emerged from the Orange County Health Equity COVID-19 Community-Academic Partnership (OCHEC-CAP) (Washburn et al., 2022), formed in May 2020 and including six local community-based organizations and academic partners. CATALYST was designed to examine the role of community health workers (CHWs) in addressing COVID-19 inequities in Orange County, CA, with a focus on Latina/o/é, Asian, and Pacific Islander communities (LeBrón et al., 2024). Multilingual data collection occurred from January 2023 to January 2024 through semi-structured interviews and focus group discussions. Given the project’s context and the large sample size (63 transcripts, 127 participants, totaling 70 hours of transcribed interactions), we used an abductive analysis approach. This method is recursive and iterative, allowing us to develop new theories while also refining or dismissing existing theories based on their alignment with current literature. It involves parallel and equal engagement with the data and extant theoretical understandings (Tavory & Timmermans, 2014). During this analysis, multiple investigators addressed diverse questions specific to different datasets. Table 1 outlines these datasets.

Table 1.

Project datasets

| # | Dataset | Languages | Transcripts | Participants |
|---|---|---|---|---|
| 1 | Interviews and focus groups with CHWs in Orange County (OC CHW) | English; Spanish | 24 | 60 |
| 2 | Interviews and focus groups with community residents in OC (OC Residents) | English; Spanish; Vietnamese; Korean | 7 | 35 |
| 3 | Interviews with institutional representatives and policymakers in OC (OC IRP) | English | 15 | 15 |
| 4 | Interviews with California CHWs (CA CHW) | English; Spanish | 17 | 17 |

The second study is conducted through the California Collaborative for Public Health Research (CPR3) initiative. It builds on findings from CATALYST highlighting pandemic-related mental health inequities. It involves a secondary analysis of the data from CHWs and Orange County residents (n = 48 of the 63 transcripts and 112 of the 127 participants). Using a popular education approach (a participatory, discussion-based method that actively engages communities to integrate their lived experiences to advance health equity; Wiggins, 2012), we engaged CHWs in discussions to identify visions for mental health equity, community resilience, and future mental health promotion during public health crises.
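The subset sizes reported for the secondary analysis follow from Table 1 by summing the two Orange County community datasets and the California CHW dataset. The short check below is only an illustrative sketch; the dictionary keys reuse the shorthand labels from Table 1, and the function and variable names are assumptions introduced here.

```python
# Transcript and participant counts copied from Table 1.
datasets = {
    "OC CHW": {"transcripts": 24, "participants": 60},
    "OC Residents": {"transcripts": 7, "participants": 35},
    "OC IRP": {"transcripts": 15, "participants": 15},
    "CA CHW": {"transcripts": 17, "participants": 17},
}


def totals(names):
    """Sum transcripts and participants over the named datasets."""
    return (
        sum(datasets[name]["transcripts"] for name in names),
        sum(datasets[name]["participants"] for name in names),
    )


# All four datasets (the full CATALYST sample).
full = totals(datasets)
# CHW and resident datasets only (the secondary mental health analysis).
secondary = totals(["OC CHW", "OC Residents", "CA CHW"])
print(full, secondary)
```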

Team & research structure

Our research partnership constitutes a collaborative effort between community and academic partners, bringing together researchers from diverse disciplinary backgrounds (e.g., health services, public health, psychology, sociology, anthropology, ethnic studies) focused on health equity and justice. Much of our analytical work is conducted remotely, facilitated by digital communication tools (e.g., Slack), as well as in-person and hybrid research meetings (via Zoom). The analytic team structure aligns with the various stages and steps of the analytic process, which is described in greater detail in the sections that follow. Presented in Table 2 is an overview of how the team structure maps onto roles in the flexible coding process. Given the sequential nature of the qualitative data analysis process, these roles are flexible, meaning the same people can find themselves in several roles, depending on their availability and skills.

Table 2.

Role structure

| Role | Description | Performed by |
|---|---|---|
| Primary researcher (PR) | Guides the analysis process | Often the Principal Investigator or a Co-Investigator or team member leading an analysis |
| Data preparation team | Prepares and maintains QDAS database | Often undergraduate students new to our lab and processes |
| Index coding team (ICT) | Indexes transcripts | Often novice coders, commonly undergraduate students within our lab, who are relatively new to qualitative research methodologies |
| Analytical coding team (ACT) | Applies analytical codebook | Often individuals with previous experience in indexing and coding, involving staff members, advanced undergraduates, and graduate students trained in qualitative methods |
| Community interpretation team (CIT) | Provides input throughout different project phases and guides the direction of analysis | Often a collaborative effort involving coders, primary researchers, community partners, and other academic contributors engaged in the research process |

Prior to this study, our team collaborated on a longitudinal evaluation of a community-level COVID-19 equity intervention. During that evaluation, we explored strategies to equip a wider team, beyond the primary researcher (PR), to conduct qualitative data analysis on a large dataset in a timely manner. Given the limited qualitative training in public health, we recognized a need for a structured approach to involve team members with varying levels of expertise. This experience also highlighted the importance of assessing the quality of the coding process throughout. Additionally, the qualitative dataset provided rich insights that prompted us to revisit the data, leading us to recognize the need for a structure, such as flexible coding. These experiences informed the recommendations and practices outlined in the following section.

PROCESS

In the sections that follow, our primary focus is to explain the steps involved in going from raw data to in-depth analyses, using the capabilities of flexible coding and sharing our lessons learnt. First, we describe practices at the data collection stage that maximize participation of multidisciplinary academic and community partners in developing data collection instruments, ensuring data is collected in a format amenable to flexible coding. Second, we describe considerations in selecting QDAS to support flexible coding involving a large team of researchers. Third, we detail a four-stage protocol (Table 3) to apply flexible coding that allows teams of researchers to address individual research questions.

Table 3.

Flexible coding process overview (Adapted from Deterding & Waters, 2021)

| Stage | Description | Team | Products |
|---|---|---|---|
| Stage 0: Preparing for flexible analysis | Transcribing & redacting; collecting other artifacts (e.g., memos); assigning attributes (e.g., strata) | Data preparation team | QDAS project files containing: transcripts with standardized naming/structure and attributes; interview team memos; data collection notes |
| Stage 1: Organizing the dataset through indexing | Developing indexing codebook; index coding & validation; writing reflective memos | ICT | Updated QDAS project files from Stage 0 containing: index codebook; indexed transcripts; indexing memos |
| Stage 2: Applying analytical codes based on individual research questions | Identifying RQ and applicable dataset guided by community input; identifying applicable index codes; developing the analytical codebook with community feedback; applying analytical codes to index coded data and assessing ICA; writing reflective memos | PR, CIT & ACT | QDAS project file per RQ containing: transcripts with attributes, index codes, analytical codes; indexing and analytical memos |
| Stage 3: Refining the analysis | Writing final reflective memo; running QDAS reports; consulting community guidance to sensitize analysis; using literature to sensitize analysis | PR, ACT & CIT | In-depth analysis rooted in the data |

Notes: QDAS indicates qualitative data analysis software; RQ indicates research question; PR indicates primary researcher; ACT indicates analytical coding team; ICT indicates index coding team; CIT indicates community interpretation team; ICA indicates intercoder agreement.

Establishing effective practices for data collection

Establishing effective practices during data collection is crucial to be positioned to conduct subsequent analyses. For our team, these practices involve developing, in collaboration with community partners, a robust interview and focus group guide rooted in key topics related to the overarching research questions. Prior to data collection, the team familiarizes themselves with the guides, to allow for the benefits of semi-structured interviews, while ensuring all topics are discussed. Where feasible, we audio or video record the discussions to enable us to work with transcripts instead of relying solely on our notes and to capture nuances in interactions and tones that we can reference later in the analysis. Additionally, a two-person interview team enhances the quality of data: one individual trained in qualitative data collection facilitates the conversation, while the other focuses on note taking, recording, and observations. This second role, usually filled by a student, also serves as a training opportunity in qualitative data collection. The interview team drafts individual respondent-level memos within 24 hours of data collection to capture immediate insights and nuanced details, enriching the collected data. Further, assigning unique identifiers to the different datasets and individuals simplifies the naming convention, allowing a systematic respondent ID classification.

To ensure clarity and efficiency, we provide a detailed overview of the entire process in advance, including roles, responsibilities, timelines, and specific tasks like prompt memo completion, thereby making the workflow transparent to all team members. These practices prepare the team for the subsequent data analysis phase.

Considerations in selecting qualitative data analysis software

There are several factors to consider when selecting the tools to use for qualitative data analysis, including the ability to facilitate the generation of inter-coder agreement (ICA); suitability for use by teams of different sizes (we opted for flexibility with open seats rather than individual licenses); easy version control of main files; compatibility across different operating systems (e.g., Mac, Windows); and flexibility in analysis capabilities such as generating reports, creating matrices, and visuals. Most major software packages cover these key capabilities. Throughout our process, we have used Atlas.ti as our QDAS, but recognize the capabilities are similar across other software.

A four-stage flexible coding process

As data collection progresses, we begin with a four-stage process for flexible coding, adapted from Deterding & Waters (2021; see Table 3). The process includes preparing transcripts and other artifacts for flexible analysis (Stage 0); applying index codes to the raw data to identify segments of the data that relate to specific topics of focus (Stage 1); applying analytical codes to relevant indexed subsets of the raw data, based on specific research questions (Stage 2); and refining the analysis iteratively (Stage 3). This structured framework begins after some data collection has occurred. We detail linear steps in our analysis, while acknowledging the iterative nature of the process (Locke et al., 2022).

Stage 0: Preparing for flexible analysis

We adhere to the guidance Deterding and Waters (2021) outline for transcription and database design to structure our source materials. For example, we establish a transcription protocol with detailed instructions for the transcriptionists. While a professional transcription company handled this task in our case, it can alternatively be undertaken by members of the research team. The protocol begins with instructions on formatting the transcripts. We use verbatim transcriptions and include periodic timestamps, facilitating convenient revisiting of the source recordings for evaluating tone of voice or facial expressions when deemed necessary by the analysis team in subsequent stages. We also incorporate the respondent’s unique identifier within the transcript body along with the interviewer’s initials in cases involving multiple interviewers instead of using generic ‘respondent’ and ‘interviewer’ names. This practice allows for easier data identification when reviewing excerpts and enables the consideration of potential interviewer effects during subsequent analysis stages.

We also provide guidance for redaction, where we remove identifying details while retaining crucial contextual information. For instance, we omit titles that pose identification risks (e.g., “City Mayor”) but maintain the city they represent. When positions are less identifiable (e.g., “CHW in Santa Ana”), we retain both details for context preservation. This becomes valuable when analyzing the data to recognize important details without returning to the source recordings. We also implement a standardized file naming structure for all transcripts, incorporating the participant’s or event’s identifier, the date, and the interviewer’s initials. For instance, the format might resemble “CHW01_12Feb23_MM”. In addition, we incorporate transcription validation (usually by a more novice team member) to ensure necessary data has been redacted. Finally, some of our transcripts require translation into English, and in such cases, we retain both the original language and the English transcripts. Once the transcripts are ready, we gather all the memos and notes from the interview team. These transcripts, memos, and any other source material are saved in a QDAS file.
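A standardized naming structure can be checked automatically before files are imported into the QDAS. The following is a minimal sketch, assuming names follow the shape of the example “CHW01_12Feb23_MM”; the exact pattern (letters plus digits for the identifier, a two-digit year in the 2000s, two or three initials) and the function name `parse_transcript_name` are assumptions made for illustration, not part of our protocol.

```python
import re
from datetime import date

# Assumed shape: <identifier>_<DD><Mon><YY>_<interviewer initials>
NAME_PATTERN = re.compile(
    r"^(?P<identifier>[A-Za-z]+\d+)_"
    r"(?P<day>\d{2})(?P<month>[A-Za-z]{3})(?P<year>\d{2})_"
    r"(?P<initials>[A-Z]{2,3})$"
)
MONTHS = {
    name: number
    for number, name in enumerate(
        ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
         "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"],
        start=1,
    )
}


def parse_transcript_name(name):
    """Split a transcript file name into identifier, date, and initials."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"Name does not follow the convention: {name!r}")
    month = MONTHS.get(match["month"].title())
    if month is None:
        raise ValueError(f"Unknown month abbreviation in: {name!r}")
    return {
        "identifier": match["identifier"],
        # Assumes two-digit years refer to the 2000s.
        "date": date(2000 + int(match["year"]), month, int(match["day"])),
        "initials": match["initials"],
    }


parsed = parse_transcript_name("CHW01_12Feb23_MM")
```

Names that fail the check raise a `ValueError`, which makes it easy to flag files for renaming during transcription validation.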

The team then moves to assigning respondent-level attributes to each individual transcript file. Attributes are participants’ personal characteristics relevant to the study. This is similar to applying a code to a whole transcript to describe transcript characteristics. Attributes allow us to review differences and similarities in codes across different categories or attributes (e.g., race, gender, geography). For our project, these respondent-level attributes are assigned to show person-level data from the participant demographic information collection, including participants’ gender, race, city, age group, and the interviewee set/segment/group (e.g., OC CHWs, OC residents, CA CHWs) (Table 1).
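Conceptually, attributes behave like a small table with one row per transcript, which can then be used to group or filter transcripts. The sketch below illustrates the idea outside of any QDAS; the file labels and attribute values are placeholders rather than study data, and `group_by_attribute` is a hypothetical helper.

```python
from collections import defaultdict

# Placeholder attribute records; values are illustrative, not study data.
transcripts = [
    {"file": "T01", "group": "OC CHW", "city": "City A", "age_group": "30-39"},
    {"file": "T02", "group": "OC Residents", "city": "City B", "age_group": "40-49"},
    {"file": "T03", "group": "OC CHW", "city": "City B", "age_group": "30-39"},
]


def group_by_attribute(records, attribute):
    """Map each attribute value to the transcript files that carry it."""
    groups = defaultdict(list)
    for record in records:
        groups[record[attribute]].append(record["file"])
    return dict(groups)


by_group = group_by_attribute(transcripts, "group")
by_city = group_by_attribute(transcripts, "city")
```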

Product:

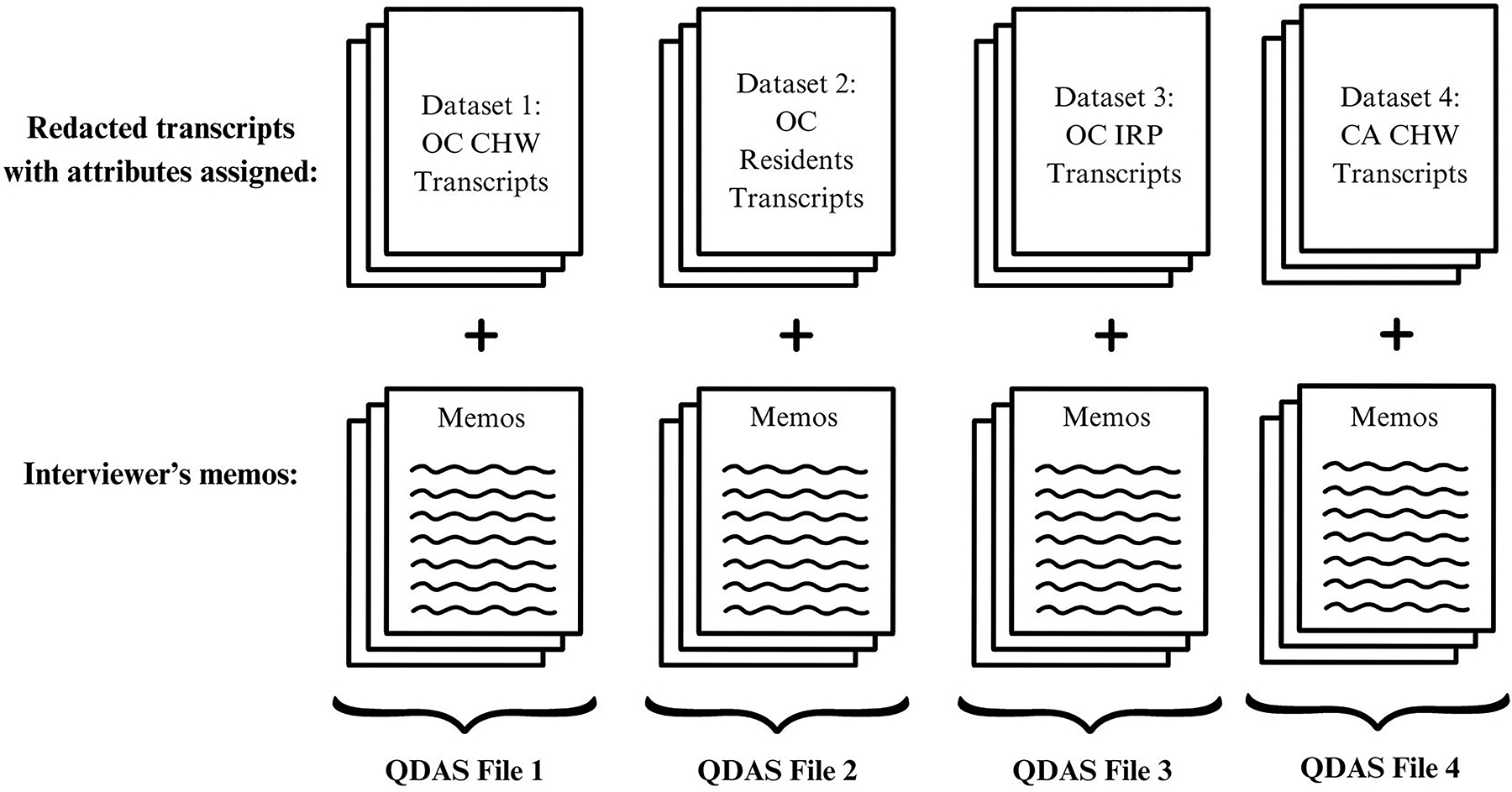

A product of this phase is a QDAS database with a project file per dataset that contains redacted transcripts with standardized naming convention and attributes and interview team respondent-level memos and notes. This is the current source file (Figure 1) ready for indexing.

Figure 1.

QDAS Design for CATALYST Study

Stage 1: Organizing the dataset through indexing

With the database set up, our focus shifts to preparing for the analysis phase.

Developing indexing codebook

Analysis begins with indexing, a step in which we categorize our data by topics of focus. Indexing is foundational for flexible coding, as future analyses draw upon indexed segments of data. Accordingly, we recommend two coders index the data and assess coding agreement.

The Index Coding Team (ICT), usually at least two coders, creates an indexing codebook based on the interview or focus group guide (Table 4). We assign a code, or brief high-level category name, to each question in the interview or focus group guide, describing the topic/theme of the question. Other indexing codes are added depending on the nature of the research project, topics that emerged during data collection, or community feedback on priority topics for analysis. For instance, our discussion guides did not have a question about the role of language during the pandemic, but this was noted in many memos, so it was assigned an indexing code. We also include index codes for “Good quotes” and “Aha moments” to capture moments in the data where participants clearly express something or moments in the data where we fully understand a concept. Later in the analysis process, we query instances where these index codes overlap with an analytical code, to identify strong passages in the data.

Table 4.

Example of an indexing codebook based on an interview guide

| # | Index Code | Category | Interview Question / Description |
|---|---|---|---|
| 1 | Interviewee information | Community Context | What led you to become a CHW? |
| 2 | Communities served | Community Context | First, how would you describe the communities with whom you worked (and/or assisted) during the COVID-19 pandemic? |
| 3 | COVID-19 effect on communities | COVID-19 Context | Now, could you share about how the COVID-19 pandemic has affected the communities with whom you work? |
| 4 | COVID-19 and chronic disease | COVID-19 Context | How, if at all, did the COVID-19 pandemic affect chronic disease risk or outcomes, such as high blood pressure, diabetes, and cancer? Can you share an example? |
| 5 | COVID-19 and mental health | COVID-19 Context | How, if at all, did the COVID-19 pandemic affect mental health risk or outcomes? Can you share an example of anything you have witnessed or experienced around mental health within the community you serve? |
| 6 | CHW role | CHW Model | As a CHW, how would you describe your work to someone who was not familiar with CHW models? |
| 7 | Language | CHW Model | Instances where the role of language is discussed |

Index Coding & Validation

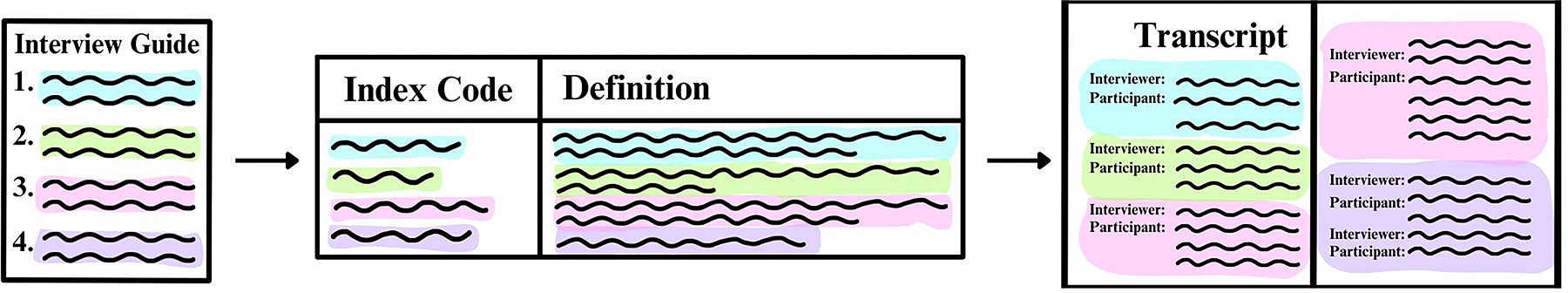

Once the indexing codebook is ready, the ICT applies these codes to the transcripts (Figure 2). The indexing codebook is added to the QDAS source file to begin indexing. Initially, we begin with the data collected thus far; if further data collection ensues, seamless integration into the project is facilitated by indexing the new data and incorporating it into the source file. Each ICT coder is assigned transcripts to index. They do a first pass to broadly capture each question asked under an index code. Given the non-linear nature of semi-structured interviews and focus groups, they then do a second pass, addressing one or two index codes at a time, identifying if that topic or theme is discussed elsewhere, until all index codes are applied. Each index coder usually dedicates about two hours to indexing a transcript from an interview or focus group that lasted 1 to 1.5 hours. Due to the nature of the topic of our project, our data is often coded under several index codes. For example, a participant may discuss how mental health issues affected their chronic diseases in the same paragraph, which would get indexed both under ‘COVID-19 and mental health’ and ‘COVID-19 and chronic disease’. This underscores the importance of recognizing that certain passages can be relevant to multiple index codes. Properly capturing this one-to-many relationship is crucial because researchers focusing on different areas, such as mental health or chronic diseases, will rely on these index codes to access pertinent data. If the passages are not indexed accurately, important information could be overlooked.

Figure 2.

Example of Indexing based on interview guide

We strongly recommend paired indexing, wherein two index coders independently index the same transcript, assess their intercoder agreement (ICA), and reconcile differences to generate a consolidated indexed transcript. This process is guided by Krippendorff’s α coefficient, a common measurement for ICA, with a score of 0.8 and above indicating high reliability (O’Connor & Joffe, 2020). Once this level of agreement is reached, coders proceed with distributing the remaining transcripts (i.e., split coding) and discuss any indexing questions that arise thereafter. Paired indexing promotes thoroughness, reduces errors, and serves as an effective training tool for novice coders, helping them become proficient in using QDAS and understanding the coding process. Under time or resource constraints, this process may be applied only until coders gain confidence in indexing and using QDAS, instead of being applied to all transcripts.
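To make the agreement statistic concrete, the sketch below computes Krippendorff’s α for nominal codes from a coincidence matrix. It is a simplified illustration that assumes the text has already been split into units and that each coder assigns exactly one code per unit; QDAS packages unitize and compute agreement in their own way, so results will not necessarily match software output. The function name and the example codes are assumptions for illustration.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal codes.

    `units` is a sequence of sequences; each inner sequence holds the codes
    that the coders assigned to one unit. Units with fewer than two codes
    carry no agreement information and are skipped.
    """
    coincidences = Counter()
    for values in units:
        m = len(values)
        if m < 2:
            continue
        # Every ordered pair of codes from different coders, weighted 1/(m-1).
        for a, b in permutations(values, 2):
            coincidences[(a, b)] += 1 / (m - 1)

    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    if n <= 1:
        raise ValueError("Need at least one unit coded by two coders.")

    observed = sum(w for (a, b), w in coincidences.items() if a != b) / n
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n * (n - 1))
    if expected == 0:
        raise ValueError("Alpha is undefined when only one code is used.")
    return 1 - observed / expected


# Illustrative codes for four units, one list per coder.
coder_1 = ["mental health", "mental health", "chronic disease", "chronic disease"]
coder_2 = ["mental health", "mental health", "chronic disease", "mental health"]
alpha = krippendorff_alpha_nominal(list(zip(coder_1, coder_2)))
ready_for_split_coding = alpha >= 0.8
```

In this toy example the coders disagree on one of four units, and α falls well below the 0.8 threshold, so the pair would reconcile differences and index another transcript together before splitting the remaining files.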

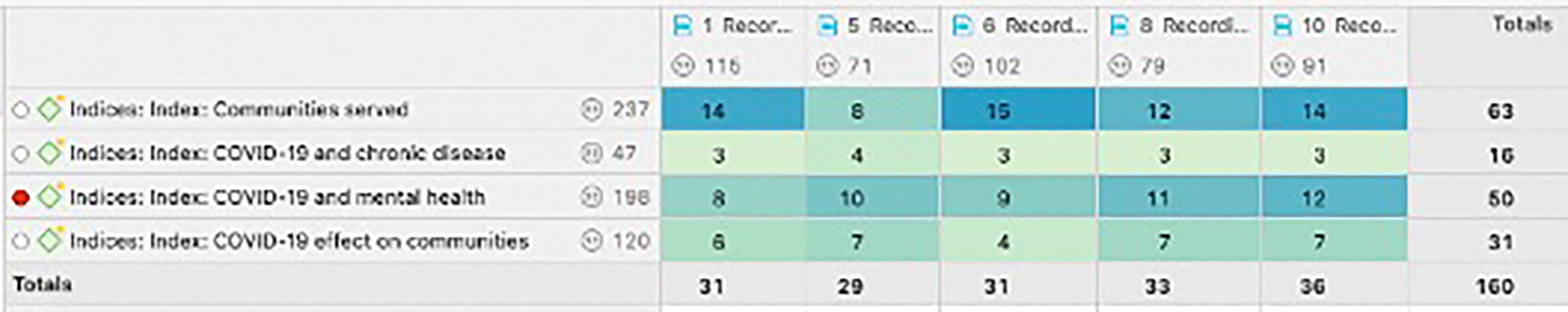

Once index coding is complete, we use the QDAS functionality of constructing a code-document analysis matrix to verify whether every index code appears in every transcript (Figure 3). Where this is not the case, the ICT returns to the transcripts to determine whether the topic was not discussed or whether it was missed during indexing.

Figure 3.

Example of Atlas.ti code-document analysis
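The logic of this completeness check is simple enough to express outside the QDAS. The sketch below is a hypothetical illustration, assuming the applied index codes have been exported as one set per transcript; the transcript labels and the helper name `missing_index_codes` are placeholders.

```python
def missing_index_codes(codebook, indexed):
    """Return, per transcript, the index codes that were never applied."""
    gaps = {}
    for transcript, applied in indexed.items():
        missing = sorted(set(codebook) - set(applied))
        if missing:
            gaps[transcript] = missing
    return gaps


# A subset of the index codes from Table 4.
codebook = [
    "CHW role",
    "COVID-19 and chronic disease",
    "COVID-19 and mental health",
    "Language",
]
# Placeholder export: index codes applied to each transcript.
indexed = {
    "T01": {"CHW role", "COVID-19 and chronic disease",
            "COVID-19 and mental health", "Language"},
    "T02": {"CHW role", "COVID-19 and mental health"},
}
gaps = missing_index_codes(codebook, indexed)
```

Each entry in `gaps` is a prompt for the ICT to revisit the transcript, not evidence of an error, since a topic may simply not have come up.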

During indexing, a crucial practice involves the concurrent creation and maintenance of reflective memos. The indexing team generates respondent-level memos (one for each transcript they code), along with cross-case memos to document patterns, emerging similarities, and differences across their transcripts. This process serves a multifaceted purpose, contributing to refining our analysis during this crucial research phase while nurturing the development of memo-writing and analytical skills within our team, especially among those new to this process. Writing memos is an important step for researchers to begin describing and analyzing what they are observing, while also being reflexive on their positionality with respect to the participants and topics. During this stage, the ICT begins a list of potential “analytic codes” and their definitions as ideas and insights emerge. Insights from these memos can be shared with the Community Interpretation Team (CIT) to inform the potential focus and directions for the first analysis.

Product:

A product of this stage is a QDAS project that contains transcript files with attributes, index codes, and memos. This version is the new source dataset to be used for analysis. It allows for easy retrieval of the sections of each transcript that apply to the researcher’s topic of interest.

Stage 2: Applying analytical codes based on each research question (RQ)

The subsequent phase involves analytical coding, a stage guided by each individual research question. During this phase, the primary researcher develops unique analytic codes to address a research question, and the analytic coding team systematically applies these codes to the relevant segments of the text. This stage allows the involvement of multiple researchers, each dedicated to different research questions within the overarching study.

Defining the research question and dataset

The first step involves the PR selecting a focused research question (RQ), based on the overarching study or inquiries emerging during data collection, indexing, and discussions with community partners and the CIT. Each RQ undergoes feedback and refinement in a community partner forum prior to analytical coding. Subsequently, the PR outlines the RQ’s aims and objectives to provide a detailed articulation to guide coders on when, why, and what to code. Furthermore, this helps narrow down the datasets to address the question. For example, is the question encompassing the voices of CHWs only, or should it also include the voices of community residents? Once the dataset is determined, the PR is ready to identify the specific index codes needed.

Identifying the index codes

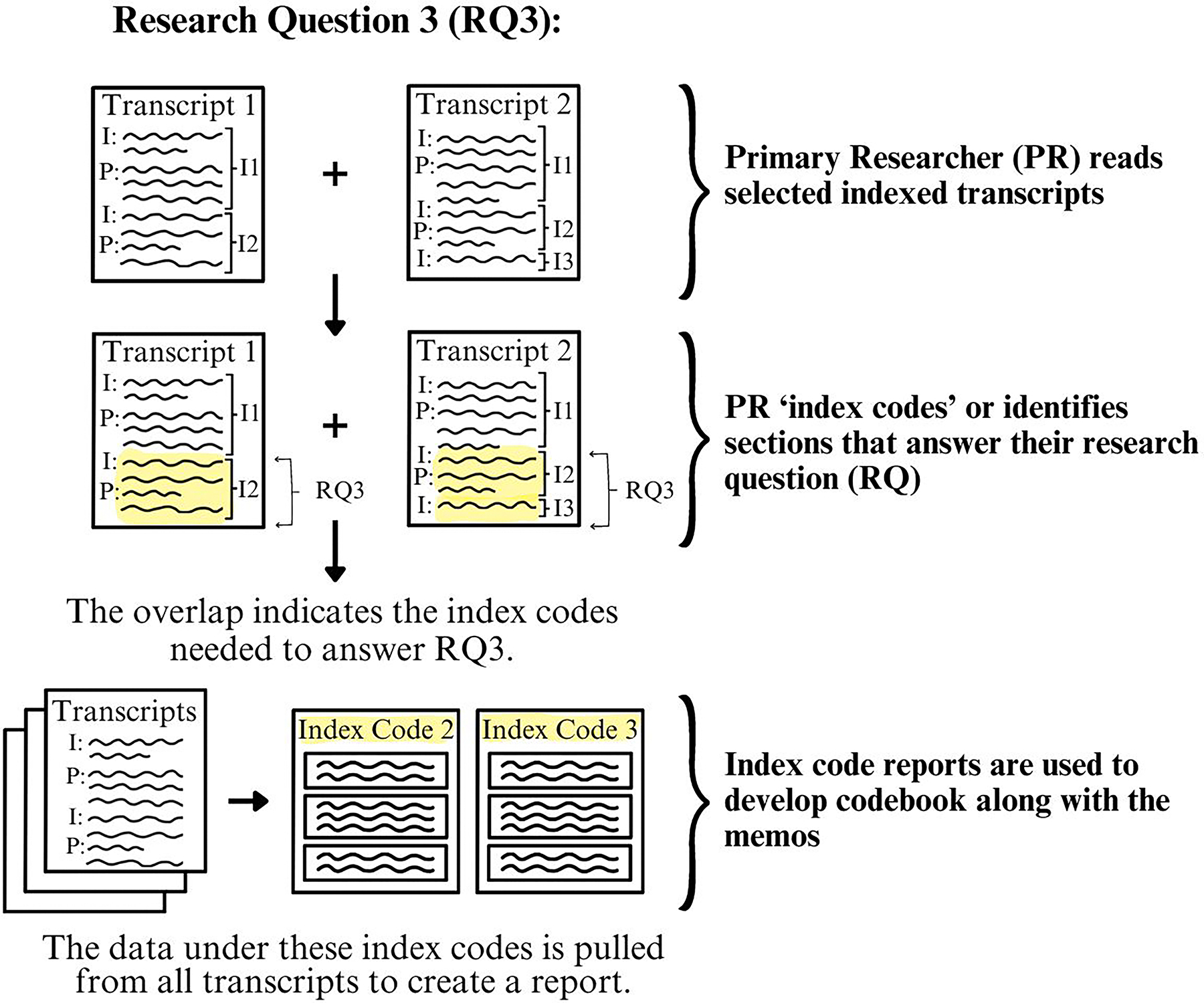

With the dataset selected, the PR is ready to identify the index codes for their RQ. In some cases, the choice is direct. For example, when working on the mental health project, the mental health index code was selected from all transcripts. Similarly, when looking into the impact of language on CHW efforts, the language index code was selected. When a research question could span multiple index codes, or the PR is new to the project and not well-acquainted with the interview guide and index codes, the PR conducts a focused review of a subset of selected transcripts to identify the relevant index codes for their research question. These transcripts are thoughtfully chosen to represent the overall population (e.g., attributes) and the dataset type, considering factors such as including both interviews and focus groups if present in the selected dataset or incorporating samples from each perspective type included in the analysis (e.g., IRPs, CHWs, and residents). For instance, two transcripts per group are reviewed. Within this subset, the PR applies a new designated code, labeled with a concise name for the research question (e.g., RQ3), a process we refer to as indexing for the RQ. This indexed subset is then reviewed to identify the original index codes it overlaps with, determining the index codes to be included in the analysis (Figure 4). This is often done through a code-co-occurrence analysis, a feature of the QDAS.

Figure 4.

Process of identifying multiple index codes that apply to a RQ
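The overlap check described above can be sketched in a few lines of Python. This is a minimal illustration rather than the QDAS feature itself: it assumes a hypothetical export in which each quotation is represented by the set of codes applied to it, and the code names (`Training`, `Resources`, and so on) are placeholders rather than the study's index codes. QDAS tools typically compute co-occurrence on overlapping text segments, which this sketch simplifies to shared quotations.

```python
from collections import Counter

# Hypothetical export: one set of applied codes per quotation.
# Code names are illustrative placeholders, not the study's index codes.
quotations = [
    {"RQ3", "Training", "Resources"},
    {"RQ3", "Training"},
    {"Mental health"},
    {"RQ3", "Resources", "Language"},
    {"Language"},
]


def cooccurring_index_codes(quotations, rq_code):
    """Count how often each other code appears on quotations tagged with rq_code."""
    counts = Counter()
    for codes in quotations:
        if rq_code in codes:
            counts.update(codes - {rq_code})
    return counts


overlap = cooccurring_index_codes(quotations, "RQ3")
for code, n in overlap.most_common():
    print(f"{code}: {n}")
```

Index codes that never co-occur with the RQ code (here, the placeholder `Mental health`) would be left out of the analysis file, while frequently co-occurring codes are candidates for inclusion.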

Developing the analytical codebook

After selecting index codes, the data corresponding to these index codes is aggregated. This data reduction process simplifies the analysis by narrowing the researcher’s focus, wherein they read these specific sections rather than entire transcripts. For instance, in the mental health analysis, mental health index codes encompass approximately 13% of the total quotations in the transcripts.
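To make the scale of this data reduction concrete, the share of quotations retained by a set of index codes can be computed as below. The sketch assumes the same hypothetical representation of quotations as sets of code names; the function name `indexed_share` and the sample data are illustrative and do not reproduce the study's figures.

```python
def indexed_share(quotations, selected_codes):
    """Fraction of quotations carrying at least one of the selected index codes."""
    if not quotations:
        return 0.0
    selected = set(selected_codes)
    hits = sum(1 for codes in quotations if selected & set(codes))
    return hits / len(quotations)


# Hypothetical data: 8 quotations, one of which carries the code of interest.
sample = [{"Mental health"}] + [{"Other"}] * 7
share = indexed_share(sample, ["Mental health"])
print(f"{share:.1%} of quotations selected")
```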

Alongside this indexed data, the PR reviews the ICT and interview team memos. They then formulate the initial analytical codebook tailored to their research question, including inductive (e.g., informed by qualitative data) and deductive codes (e.g., concepts from existing theories or frameworks) and noting the boundaries and connections across these codes. This step focuses on systematically linking concepts from Stage 1 to the data. The codebook can contain the following fields: 1) Code Name, 2) Definition, 3) Example quotes, and 4) Boundaries between the codes (e.g., distinctions between codes). Recognizing the codebook as a living document, the ACT (Analytical Coding Team) and PR meet multiple times to refine the codebook, including feedback sessions with the CIT, consultations with the research team, and revisiting the literature. Finally, the ACT, comprising two or more coders selected for their interest, training, and availability, convenes to discuss the codebook, examine sample quotes, address initial queries with the PR, and implement necessary modifications such as clarifying code definitions or boundaries.
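For teams that keep the codebook in a structured file alongside the QDAS project, the four fields listed above map naturally onto a small record type. The sketch below is one possible representation, not the format used in our projects: the class name `CodebookEntry` and the `is_complete` check are assumptions, the definition and quote are borrowed from the Grief row of Table 5, and the boundary note is invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CodebookEntry:
    """One row of an analytical codebook, mirroring the four fields named above."""
    name: str
    definition: str
    example_quotes: List[str] = field(default_factory=list)
    boundaries: str = ""  # how this code differs from neighboring codes

    def is_complete(self) -> bool:
        # A code is ready for coders once it has a name, a definition, and an example.
        return bool(self.name and self.definition and self.example_quotes)


grief = CodebookEntry(
    name="Grief",
    definition="Grief related to the loss of loved ones during the pandemic "
               "due to complications with COVID",
    example_quotes=["I think one of the things that really hit hard was the "
                    "amount of funerals that happened."],
    # Illustrative boundary note, not taken from the study's codebook.
    boundaries="Hypothetical: general sadness without a bereavement goes elsewhere.",
)
draft = CodebookEntry(name="Stigma", definition="")

# List entries that still need work before the ACT starts coding.
incomplete = [e.name for e in (grief, draft) if not e.is_complete()]
print(incomplete)
```

A check of this kind can be run before each codebook meeting to flag codes that still lack a definition or an example quote.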

The ACT then creates a QDAS file for the specific research question by duplicating the source file with the relevant datasets, differentiating the applicable index codes (usually by color-coding them or removing non-relevant index codes) and adding the analytical codebook following QDAS methods. The file name includes appropriate terminology for the research question (e.g., “RQ3_CHWs_Coding File”). The file is duplicated for each coder to begin analytically coding. Table 6 shows the concurrent research questions our team worked on and the different index codes that apply to each.

Table 6.

Example of multiple concurrent research questions (RQ)

| RQ | Topic | Index codes* | Primary Researcher Educational Discipline/Position |
|---|---|---|---|
| 1 | Essential CHW function from policymaker perspectives | IRP: 3, 6, 8, 13 | Assistant Professor, Public Health |
| 2 | Structural supports for CHWs from policymaker perspectives | IRP: 3, 8–14 | Assistant Professor, Public Health |
| 3 | How CHWs are supported to be prepared to respond to needs from pandemic | OC CHW: 1, 2–9, 15–26 | Associate Professor, Family Medicine |
| 4 | How CHWs confronted various forms of injustice and contribute to systemic change during the COVID-19 pandemic | OC CHW: 7, 8, 21 | Associate Professor, Chicano/Latino Studies; Associate Professor, Public Health |
| 5 | Ways individuals within communities encountered the effects of COVID-19 and the role CHWs played from their perspective | Residents: 4–8 | Assistant Professor, Anthropology |
| 7 | Mental health issues affecting communities and the CHW-led efforts to address the needs that emerged and/or were worsened during the COVID-19 pandemic | OC CHW: 5; Residents: 6; IRP: 5 | Associate Professor, Chicano/Latino Studies; Associate Professor, Public Health |
| 8 | The impact and role of language during the COVID-19 pandemic | CHW: 5; Residents: 6; IRP: 5 | Professor of Public Health |

Note: CHW: Community Health Worker; IRP: Institutional and policy representatives; OC: Orange County

*The numbers refer to the numbered index code within each dataset.

Applying analytical codes

To streamline communication around each research question, we utilize a dedicated channel (e.g., Slack), linking the Principal Researcher (PR) and the Analytical Coding Team (ACT).

To start the process, each ACT member independently codes the selected indices within the same transcript. Then, they meet with the PR to discuss initial coding insights, address any challenging codes, and outline the coding timeline. An effective strategy when feasible includes the PR first coding a transcript for the ACT to independently code, ensuring consistent codebook application.

Following this, the coders move to the remaining transcripts, concentrating on applying 1–2 analytic codes at a time to each selected index code, until all analytical codes have been applied in each transcript. Focusing on a small subset of the codes at a time, and applying them only to the applicable sections of a transcript, enhances the reliability and validity of the coding process. When feasible, the ACT does paired coding, independently coding the same transcripts and systematically evaluating the ICA. The ACT initially meets after coding 5–10% of the transcripts. The coders maintain a shared file where both coders independently keep notes on the transcripts they have coded up to the meeting point. During ICA meetings, the team documents any discrepancies or changes needed. The team also communicates while independently coding if they have questions on how to apply a code or doubts about a particular transcript. If changes to the codebook are required, the coders go back to previously coded transcripts and recode them for any edited or new codes. Paired coding continues until the coders reach an overall ICA of 0.8. They also assess the ICA for individual codes to ensure each meets the 0.8 standard. Once 0.8 ICA is achieved, and taking into consideration the timing constraints and the team’s coding experience, the team may implement split coding (Campbell et al., 2013; O’Connor & Joffe, 2020) for the remaining transcripts. In our projects, achieving a 0.8 ICA typically requires coding through 2–5 transcripts. Through split coding, the ACT divides the remaining transcripts for independent coding, with continuous open discussions for any new insights or questions, ensuring consistent and accurate coding across the team.
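The per-code agreement check can be illustrated with a short calculation. The text above does not name the statistic behind the 0.8 ICA threshold, and QDAS packages differ in what they report (for example, Krippendorff's alpha or percent agreement), so the sketch below assumes Cohen's kappa on binary applied/not-applied decisions per segment. The coder data are invented, and treating two identical constant ratings as perfect agreement is a convention chosen here.

```python
def cohens_kappa(a, b):
    """Cohen's kappa for two equal-length sequences of binary (0/1) decisions."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    p_a, p_b = sum(a) / n, sum(b) / n
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1.0:
        # Both coders gave the same constant rating; treated as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical decisions for ten segments: 1 = code applied, 0 = not applied.
coder_1 = {"Grief": [1, 1, 0, 0, 1, 0, 0, 0, 1, 0],
           "Stigma": [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]}
coder_2 = {"Grief": [1, 1, 0, 0, 1, 0, 0, 0, 1, 0],
           "Stigma": [1, 0, 0, 0, 0, 0, 1, 0, 1, 0]}

THRESHOLD = 0.8  # agreement target described in the text
kappas = {code: cohens_kappa(coder_1[code], coder_2[code]) for code in coder_1}
needs_discussion = [code for code, k in kappas.items() if k < THRESHOLD]

for code, k in kappas.items():
    print(f"{code}: kappa = {k:.2f}")
print("Discuss at next ICA meeting:", needs_discussion)
```

In this invented example the two coders agree on 8 of 10 Stigma segments, yet the chance-corrected value falls well below 0.8, which is why checking individual codes can surface problems that an overall figure hides.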

Simultaneously, each coder maintains a memo, encompassing respondent-level (e.g., subset of one transcript) and cross-case analyses. This could be a written memo or “analytical maps” (Singh et al., 2022). The memos highlight noteworthy aspects, identify intriguing elements, address ambiguities, examine unexpected occurrences, assess the utilization of specific codes, and propose potential analytical foci. The PR actively engages with the memo, posing clarifying questions, encouraging coders to revisit data for a more comprehensive understanding of phenomena, and evaluating the uniqueness or representativeness of certain phenomena within the dataset. This step is critical to ensuring that the team remains close to the data, and that the qualitative research process is iterative. This serves to deepen and accelerate qualitative analysis and is a critical precursor to writing the manuscript or other dissemination materials (e.g., public-facing summaries of emerging findings).

We provide an example of paired coding for a project involving 7 out of 16 total index codes, which accounts for 32% of quotations in the transcripts. Applying 22 analytical codes to these selected indexes required approximately one hour of analytical coding per transcript per coder (Table 7). This process would have been longer and less reliable had it been applied to 100% of the transcripts. Ensuring reliability becomes more feasible when consistently applying analytical codes across a portion of the transcripts, rather than attempting to maintain reliability over an exhaustive duration if the whole transcript is being coded at once. The time spent indexing, though longer, is a one-time activity that prepares transcripts for efficient analytical coding later.

Table 7.

Example of estimated timing for indexing and coding one RQ

| Step | Source | Team | Time (a) |
|---|---|---|---|
| Index all transcripts & validate indexing (b) | 16 index codes; 15 full transcripts | ICT | ~1.5–2.5 hours per person per transcript = 45–75+ hours |
| Create analytical codebook | 7 index codes; selected indices from 15 transcripts; 15 memos | PR | ~30 mins per transcript and associated memo = 8 hours |
| Apply analytical codebook (c) | 7 index codes; selected indices from 15 transcripts | ACT | ~16 hours per person = 32 hours |

Note:

(a) Time estimates vary according to level of experience with coding and prior familiarity with the data (e.g., interviewer indexing transcript vs. researcher indexing transcript that they did not collect).

(b) Indexing involves applying all the indexes, applying 1–2 indices at a time and re-reviewing transcripts to ensure that transcripts were fully indexed.

(c) The time that analytic coding takes depends on the number of codes in the analytic codebook.
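The totals in Table 7 follow from simple multiplication, shown here so that teams can substitute their own numbers when budgeting. The sketch assumes two people per team, which is our reading of how the per-person figures add up to the reported totals; variable names are illustrative.

```python
transcripts = 15

# Indexing: ~1.5-2.5 hours per person per transcript.
index_coders = 2  # assumption: two indexers, inferred from the 45-75 hour total
indexing_hours = (transcripts * index_coders * 1.5,
                  transcripts * index_coders * 2.5)

# Codebook development: ~30 minutes per transcript and associated memo.
codebook_hours = transcripts * 0.5  # 7.5, reported as roughly 8 hours

# Analytical coding: ~16 hours per person.
act_coders = 2  # assumption: paired coding by two ACT members
coding_hours = act_coders * 16

print(f"Indexing: {indexing_hours[0]:.0f}-{indexing_hours[1]:.0f} hours")
print(f"Codebook: {codebook_hours} hours")
print(f"Analytical coding: {coding_hours} hours")
```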

Products:

A QDAS project file per RQ that contains transcript files with attributes, indexing and analytical codes, and indexing and analytical memos. This file is now ready for the PR to review and continue the in-depth analysis.

Stage 3: Refining the Analysis

Once all transcripts are coded, the PR is ready to deepen the analysis, aiming to establish theoretical contributions grounded in the data. The ACT creates a final reflective memo, harnessing new ideas and patterns they observe. To create this memo, the ACT leverages several QDAS capabilities, including code-document analysis querying to produce a matrix showing code frequency within each document or querying the co-occurrence of attributes and codes to identify any patterns and negative cases. In the memo, they describe key codes, summarize their observations and the patterns present, and include quotes describing their observations.
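A code-document matrix of the kind described above is a frequency table of codes by documents. The sketch below shows the underlying computation on a hypothetical list of coded segments. The document identifiers follow the naming pattern of the quotes in Table 5, but the counts are invented, and QDAS tools produce this table directly without any scripting.

```python
from collections import Counter

# Hypothetical coded segments: (document id, analytical code). Counts are invented.
segments = [
    ("CHWIP001", "Stigma"),
    ("CHWIP001", "COVID hardships"),
    ("CHWIP002", "Grief"),
    ("CHWIP002", "Grief"),
    ("CHWFG001", "Grief"),
]


def code_document_matrix(segments):
    """Return {code: {document: frequency}}, with zeros where a code is absent."""
    counts = Counter(segments)
    docs = sorted({doc for doc, _ in segments})
    codes = sorted({code for _, code in segments})
    return {code: {doc: counts[(doc, code)] for doc in docs} for code in codes}


matrix = code_document_matrix(segments)
for code, row in matrix.items():
    print(code, row)
```

Rows with zeros in some documents point to where a pattern is absent, which is one starting point for identifying negative cases.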

The PR engages with the reflective memo to move the analysis along by identifying guiding categories and patterns/themes. The PR uses this final memo, the coded data, and the QDAS reports to fully understand the data and refine the data-based theories. They also use the literature to guide what to scrutinize in the coding/analysis and to sensitize and deepen the analysis. Throughout this process, the PR meets regularly with the ACT to discuss initial findings and gaps. The PR and ACT revisit the coded data frequently to validate concepts or recode for any new concepts. In this stage of analysis, the PR works closely with much reduced data, attentively organized into analytical codes, and can use the QDAS capability to test the theories and patterns that are forming. The PR then organizes and groups the emerging findings from the codes into categories, followed by patterns and themes, all of which begin to create a narrative and theoretical framework grounded in the data.

The PR and ACT then prepare these groupings for review and discussion with the CIT. The CIT guides the analysis and points to areas where we might need to revisit the data to deepen the analysis. The PR and the ACT continue this process iteratively, reviewing their analysis and revisiting the data until they can move from descriptive to interpretative analysis. To continue to deepen the interpretive analysis, the PR and the ACT work through the different data sources (e.g., transcripts, memos, literature). They note details such as observations across different participants/sources of data (racial/ethnic groups, participant ages, interviews vs focus groups), negative cases to earlier patterns, discrepancies between the data and expectations, surprising findings, and analogies used by participants or coders. All this helps move the narrative forward, with the themes telling the story, rather than simply describing what was observed at surface level.

Product:

A product of this stage is an in-depth analysis rooted in the data ready for use in forums applicable to the research question, project, and research team.

DISCUSSION

We have distilled our experience working with a large dataset and a diverse team in a community-academic partnership into a step-by-step summary for applying flexible coding in qualitative data analysis for health equity research. Our practical guidance, based on the original description of flexible coding by Deterding and Waters (2021), is tailored for public health and practice-based approaches, making complex methodologies more approachable for novice researchers and enabling meaningful multidisciplinary community-academic research. Nonetheless, this guide can apply across multiple disciplines and for datasets of varying sizes, as it provides a systematic method for structuring and coding the data.

We draw from our collective experiences working on these projects and implementing a standardized flexible coding approach to offer key lessons learnt to assist research teams in adopting a flexible coding strategy. These key lessons and opportunities include maximizing the qualitative dataset’s impact, responding to community priorities, standardizing data analysis with a diverse team, continuously engaging with the data, forming and supporting the analytic coding team, and training qualitative scholars.

Maximizes data impact

Our outlined flexible coding approach extends the utility of our data beyond traditional analysis methods. It helps prioritize urgent public health topics for immediate attention while also preparing the data for future and secondary analyses. This is critical, considering the time-intensive demands of qualitative methods for researchers, community partners, and participants—such as developing the facilitation guides, recruiting participants, and conducting and participating in interviews or focus groups.

Enabling secondary data analysis is a key benefit of flexible coding, and it is a practice that should be encouraged in public health (Ruggiano & Perry, 2019). As health equity researchers, we aim to minimize the burden of participation and maximize participants’ contributions, recognizing that many participants come from communities facing significant structural inequities. A flexible coding approach organizes the data (transcripts and multiple memos) to facilitate revisiting and conducting focused secondary analysis, eliminating the need to start an analysis from scratch if new priorities or research questions emerge. For future and secondary analysis, researchers can leverage the indexed data by selecting only the relevant index codes and reviewing the corresponding memos of those involved in data collection and index coding.

Responsive to community priorities

Through this practical approach to qualitative data analysis, we can engage in authentic community-based participatory qualitative research, where community partners prioritize and guide the research process. For effective health equity research, academic teams must rely on having an established community-academic partnership – true involvement of community in every step is critical for health equity. This process enables teams to identify and prioritize key pressing questions to address. For instance, our collaboration involved CHWs and community and academic partners to identify and prioritize multiple research questions, ensuring findings could be quickly incorporated into their urgent advocacy work. This required us to prioritize specific research questions and conduct rapid and focused data analysis to ensure our findings were useful and relevant.

Provides standardization and leverages technology

Standardizing the QDA process is crucial for enhancing project planning, particularly when tackling new research questions within existing datasets and outlining future projects and grants. Through this process, we have gained detailed insights into the various steps and the expected timing of tasks. This has helped us foster more integrated community-academic partnerships, as we define the processes to authentically integrate community partners in analysis and dissemination, an essential part of CBPR (Israel et al., 2017), but a common gap. A deeper understanding of processes conducive to authentic engagement can significantly aid partnerships in planning and budgeting their projects.

This standardization also allows us to effectively utilize advancements in software technology. Flexible coding encourages consistent use of various QDAS capabilities to strengthen the analysis. The integration of emerging artificial intelligence (AI) capabilities to assist with analysis is a promising avenue (Morgan, 2023). AI could assist with initial indexing and memo writing, potentially reducing early-stage coding time. Nevertheless, researchers must remain deeply engaged with the data throughout the analytic process and adopt processes to assess the quality of any aspects of qualitative data analysis supported by AI.

Encourages continuous engagement with the data

By not attempting to answer all research questions at once, flexible coding enables us to investigate different facets of the data in depth. Flexible coding addresses the challenge coders face of applying numerous codes to transcripts, where specific codes are applicable only to specific segments of transcripts (Giesen & Roeser, 2020). This process increases the reliability and validity of coding, as coders can focus on one topic at a time across a subset of a transcript, making the task more focused and less time-consuming.

The creation of multiple research artifacts (including various memos) throughout different phases enhances the quality of the data analysis process: it offers numerous opportunities to guide the study’s direction, enhances validity, and provides another integrity check of findings and interpretations (Locke et al., 2022). Moreover, this iterative, engaged process prioritizes discussions with community partners to ensure alignment with their priorities, a critical practice for promoting health equity. The flexible coding structure allowed our team to share and jointly discuss all outputs with community partners for their input (e.g., determining key attributes, reviewing index codes, identifying research questions, informing the codebook, interpreting the initial analysis to identify emerging themes).

Through this process, the lead researcher remains engaged with the coders and reviews coding reports and memos throughout the process, not simply at the end. As is common in qualitative research, the researcher plays a critical role in the data analysis, interpretation, and dissemination (Wa-Mbaleka, 2020). In contrast to quantitative research, we acknowledge the influence of the researcher and, as such, endorse researcher reflexivity (Denzin & Lincoln, 2011; Rankl et al., 2021). Reflexivity of community-academic partnership members is also critical to engaging in the qualitative data analysis process. Importantly, we note that continuous engagement of all researchers increases the strength of the analysis, ensuring different viewpoints and different artifacts are available at different phases.

Accommodates different team models

A flexible coding approach enabled us to accommodate different team models. It allowed students in our academic lab to contribute to and learn qualitative data analysis without being involved in every aspect, accommodating the varying task durations and students’ tenure in the lab. Additionally, this approach enabled different investigators to conduct focused research based on their specific interest area. Nonetheless, varying team capacity and availability can impact team formations.

A key team is the ACT, often formed based on students’ interest and current QDA experience. We strongly recommend every ACT designate a lead coder with strong qualitative experience to oversee the team. Based on team composition, there can be potential variation in the number of meetings an ACT will need to ensure alignment on codebook implementation and address discrepancies. Several factors can impact inter-coder agreement. One common challenge is an overly complex codebook with too many codes, which can lead to unclear choices and potential overuse of double and triple coding. This suggests a need for simplifying the codebook. Additionally, rarely used codes may indicate a mismatch with the data or that coders are overlooking some codes due to the abundance of options. The codebook must have clear definitions and examples (Table 5) to help coders understand the intended use of the code and to have boundaries for the codes when needed. Lastly, new coding teams should discuss and define their coding approaches (sentences, or paragraphs, etc.), considering the project’s goals. For example, coding full paragraphs may be more suitable for projects aiming to include personal anecdotes or stories, while projects requiring smaller segments or powerful one-line statements may benefit from coding smaller sections.

Table 5.

Example of an analytical codebook

| Code | Definition | Illustrative Quotes |
|---|---|---|
| Mental/behavioral health - Residents | Mental/behavioral health outcomes for residents, such as depression, anxiety, and substance use. Includes mental health outcomes due to or worsened by COVID and regardless of COVID (i.e. pre-pandemic). Refers to resident and/or community mental health issues/patterns/burdens. Additionally includes suicidality and related mental health issues. | “I’ve seen a lot of mental health issues already beforehand, but I think with COVID, all if it elevated to a completely different level.” CHWIP002 “Social isolation and depression and anxiety is very big in our community, that if we continue to do online and continue to do this, that our elders are going to be getting into more and more of a depression.” CHWIP001 |
| Grief | Grief related to the loss of loved ones during the pandemic due to complications with COVID | “I think one of the things that really hit hard was the amount of funerals that happened. And I think that was, like, a physical sign that this was affecting our communities.” CHWIP002 “And right now what we are seeing in the kids and elderly is a lot of anxiety, a lot of depression or stress. And it could be the aftermath that resulted from Covid, due to all the emotions they went through. They lost their families, mother died, father died.” CHWFG001 |
| Stigma | Stigma related to having COVID, appearance, social status, or stigma against mental health (e.g. not believing mental health exists or against seeking mental health help). | “There’s serious stigma for mental wellness, for depression, for substance abuse, for spousal abuse, for all of those things. Like, most people don’t talk about that. And so, trying to have a conversation or do education around that is tough.” CHWIP001 |
| COVID hardships | Stressor: Losses or challenges that residents experienced during COVID pandemic, including financial hardship, job loss, closing small businesses owned by residents, loss of loved ones, food insecurity, housing expenses or instability, housing density, occupational hazards (e.g., working in health care or food industry), “no safety net” (CHWIP004), barriers to cultural practices, navigating technology or internet demands/injustices, domestic violence. | “...In terms of financially, a lot of our families, young families, adults, lost jobs because of COVID. They’ve lost family members because of COVID, so these are all different challenges that they’ve had to go through during that time.” CHWIP001 “The unemployment part also came, a lot of unemployed people, coming to us, looking for a job. Where could we tell them, or how could we help them get a job? We didn’t have anything to do, a direct way to help them.” CHWIP009 |

Serves as a training tool

Tailored flexible coding is an important training tool that facilitates a team-based approach to coding large datasets and strengthens individual and collective capacity for QDA. It also serves as an effective means of training novice coders in qualitative data analysis, allowing them to grasp the methodology by initially applying index codes, an inherently simpler process. Tailored flexible coding provides an opportunity to grow the qualitative skills of our public health workforce by engaging students and those new to qualitative methodologies in concrete steps as they build upon their familiarity with qualitative data analysis (Figure 5). Nonetheless, we also call for more formal qualitative training through the curriculum for both undergraduate and graduate Public Health programs.

Figure 5.

Steps of Tailored Flexible Coding to Gain Qualitative Data Analysis Experience

Strengths & Limitations

This paper has several strengths, as well as some considerations relevant to the scope of focus and generalizability to other contexts. This paper focuses specifically on the process of qualitative data analysis with a flexible coding approach. While other phases of qualitative research are not within the scope of this paper, we acknowledge good analysis is impacted by strong practices in the design and data collection phases of the research project. It is essential to note that qualitative methods and methodologies alone may not inherently align with the goals of health equity and health justice research. Aligning research goals with a critical health equity stance should guide method selection (Bowleg, 2017). Lastly, while our process involved a large team, the concepts discussed could be applied by smaller teams or individuals analyzing qualitative data, particularly with large datasets, expanding the reach of our findings beyond our team’s scope. This process has applicability beyond what we have used it for. While we argue for its benefit in public health and with large datasets, flexible coding is a systematic approach to structuring data for reliable analysis that can benefit most qualitative analysis. Any team, researching any topic, and with any amount of data could benefit from this type of process or ideas from this process.

CONCLUSIONS

This paper sought to describe a tailored flexible coding approach for practice-based fields such as public health, particularly health equity scholarship that incorporates a community-based participatory research approach. Given the growing scale and frequency of public health crises (e.g., pandemic, environmental disasters, climate change), the field would benefit from timely and time-sensitive qualitative inquiries for which a practical approach to QDA is needed. Insights and processes delineated here have strong potential to contribute valuable information on community- and practice-based evidence and offer implications for policy, systems, environmental, and programmatic transformations, beyond what can be gleaned from quantitative research alone.

Acknowledgements:

We extend our sincere appreciation to the invaluable contributions and dedicated support provided by our partnership members: Latino Health Access, Orange County Asian and Pacific Islander Community Alliance, Radiate Consulting, GREEN-MPNA, and AltaMed. Their commitment to these research efforts has been instrumental in guiding the research questions, shaping the research approach, and interpreting and disseminating findings.

A heartfelt thank you goes out to the Community Health Workers (CHWs) and Community Scientist Workers (CSWs) whose invaluable insights and tireless efforts in engaging our communities have been pivotal in shaping our understanding of the critical work of CHWs in the COVID-19 pandemic.

We are also immensely grateful to all participants whose involvement made this research possible. We express our gratitude to advocates promoting health and racial equity and to the individuals who shared their stories, resilience, and aspirations, shaping the essence of this research.

Special recognition is extended to the University of California, Irvine (UCI) students whose commitment, enthusiasm, and assistance greatly facilitated our research efforts and significantly contributed to the success of this project. We also acknowledge the representatives of UCI Program in Public Health for their support and collaboration.

Additionally, we are thankful for the financial support of several institutions and agencies.

Funding statement:

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Research reported in this RADx® Underserved Populations (RADx-UP) publication was supported by the National Institutes of Health under Award Number (U01MD017433); NIH Loan Repayment Program; California Collaborative for Pandemic Recovery and Readiness Research (CPR3) Program, which was funded by the California Department of Public Health; and the University of California, Irvine Wen School of Population & Public Health, Department of Chicano/Latino Studies, Center for Population, Inequality, and Policy (CPIP), and Interim COVID-19 Research Recovery Program (ICRRP). The content is solely the responsibility of the authors and does not necessarily represent the official views of the funders.

Grant Number:

U01MD017433; 140673F/14447SC

Footnotes

Conflict of interest statement: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Statements and Declarations

Ethical considerations: All protocols for the CATALYST study (which we provide examples from) were reviewed and approved by the University of California, Irvine institutional review board in 2020 (UCI IRB #1272).

Consent to participate: not applicable

Data availability statement:

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

REFERENCES

- Adkins-Jackson PB, Chantarat T, Bailey ZD, & Ponce NA (2022). Measuring Structural Racism: A Guide for Epidemiologists and Other Health Researchers. American Journal of Epidemiology, 191(4), 539–547. 10.1093/aje/kwab239

- Bates G, Le Gouais A, Barnfield A, Callway R, Hasan MN, Koksal C, Kwon HR, Montel L, Peake-Jones S, White J, Bondy K, & Ayres S (2023). Balancing Autonomy and Collaboration in Large-Scale and Disciplinary Diverse Teams for Successful Qualitative Research. International Journal of Qualitative Methods, 22, 160940692211445. 10.1177/16094069221144594

- Beresford M, Wutich A, Du Bray MV, Ruth A, Stotts R, SturtzSreetharan C, & Brewis A (2022). Coding Qualitative Data at Scale: Guidance for Large Coder Teams Based on 18 Studies. International Journal of Qualitative Methods, 21, 160940692210758. 10.1177/16094069221075860

- Bowleg L (2017). Towards a Critical Health Equity Research Stance: Why Epistemology and Methodology Matter More Than Qualitative Methods. Health Education & Behavior, 44(5), 677–684. 10.1177/1090198117728760

- Campbell JL, Quincy C, Osserman J, & Pedersen OK (2013). Coding In-depth Semistructured Interviews: Problems of Unitization and Intercoder Reliability and Agreement. Sociological Methods & Research, 42(3), 294–320. 10.1177/0049124113500475

- Denzin NK, & Lincoln YS (2011). The Sage handbook of qualitative research. Sage.

- Deterding NM, & Waters MC (2021). Flexible Coding of In-depth Interviews: A Twenty-first-century Approach. Sociological Methods & Research, 50(2), 708–739. 10.1177/0049124118799377

- Giesen L, & Roeser A (2020). Structuring a Team-Based Approach to Coding Qualitative Data. International Journal of Qualitative Methods, 19, 1609406920968700. 10.1177/1609406920968700

- Griffith DM, Satterfield D, & Gilbert KL (2023). Promoting Health Equity Through the Power of Place, Perspective, and Partnership. Preventing Chronic Disease, 20, 230160. 10.5888/pcd20.230160

- Griffith DM, Shelton RC, & Kegler M (2017). Advancing the Science of Qualitative Research to Promote Health Equity. Health Education & Behavior, 44(5), 673–676. 10.1177/1090198117728549

- Israel BA, Schulz AJ, Parker EA, Becker AB, Allen AJ, Guzman JR, & Lichtenstein R (2017). Critical issues in developing and following CBPR principles. Community-Based Participatory Research for Health: Advancing Social and Health Equity, 3, 32–35.

- Israel BA, Schulz A, Parker E, & Becker A (1998). Review of community-based research: Assessing partnership approaches to improve public health. Annual Review of Public Health, 19, 173–202. 10.1146/annurev.publhealth.19.1.173

- Jeffries N, Zaslavsky AM, Diez Roux AV, Creswell JW, Palmer RC, Gregorich SE, Reschovsky JD, Graubard BI, Choi K, Pfeiffer RM, Zhang X, & Breen N (2019). Methodological Approaches to Understanding Causes of Health Disparities. American Journal of Public Health, 109(S1), S28–S33. 10.2105/AJPH.2018.304843

- LeBrón AMW, Michelen M, Morey B, Montiel Hernandez GI, Cantero P, Zarate S, Foo MA, Peralta S, Chow JJ, Mangione J, Tanjasiri S, & Billimek J (2024). Community Activation to TrAnsform Local sYSTems (CATALYST): A Qualitative Study Protocol. International Journal of Qualitative Methods (Forthcoming), Volume 23: 10. 10.1177/16094069241284217

- Locke K, Feldman M, & Golden-Biddle K (2022). Coding Practices and Iterativity: Beyond Templates for Analyzing Qualitative Data. Organizational Research Methods, 25(2), 262–284. 10.1177/1094428120948600

- Matthew RA, Willms L, Voravudhi A, Smithwick J, Jennings P, & Machado-Escudero Y (2017). Advocates for Community Health and Social Justice: A Case Example of a Multisystemic Promotores Organization in South Carolina. Journal of Community Practice, 25(3–4), 344–364. 10.1080/10705422.2017.1359720

- Montiel GI, Moon KJ, Cantero PJ, Pantoja L, Ortiz H, Arpero S, Montanez A, & Nawaz S (2021). Queremos transformar comunidades: Incorporating civic engagement as an equity strategy in promotor-led COVID-19 response efforts in Latinx communities. 33, 79–101.

- Morgan DL (2023). Exploring the Use of Artificial Intelligence for Qualitative Data Analysis: The Case of ChatGPT. International Journal of Qualitative Methods, 22, 16094069231211248. 10.1177/16094069231211248

- O’Connor C, & Joffe H (2020). Intercoder Reliability in Qualitative Research: Debates and Practical Guidelines. International Journal of Qualitative Methods, 19, 1609406919899220. 10.1177/1609406919899220

- Rankl F, Johnson GA, & Vindrola-Padros C (2021). Examining What We Know in Relation to How We Know It: A Team-Based Reflexivity Model for Rapid Qualitative Health Research. Qualitative Health Research, 31(7), 1358–1370. 10.1177/1049732321998062

- Roberts JK, Pavlakis AE, & Richards MP (2021). It’s More Complicated Than It Seems: Virtual Qualitative Research in the COVID-19 Era. International Journal of Qualitative Methods, 20, 160940692110029. 10.1177/16094069211002959

- Ruggiano N, & Perry TE (2019). Conducting secondary analysis of qualitative data: Should we, can we, and how? Qualitative Social Work, 18(1), 81–97. 10.1177/1473325017700701

- Saunders CH, Sierpe A, Von Plessen C, Kennedy AM, Leviton LC, Bernstein SL, Goldwag J, King JR, Marx CM, Pogue JA, Saunders RK, Van Citters A, Yen RW, Elwyn G, & Leyenaar JK (2023). Practical thematic analysis: A guide for multidisciplinary health services research teams engaging in qualitative analysis. BMJ, e074256. 10.1136/bmj-2022-074256

- Shelton RC, Griffith DM, & Kegler MC (2017). The Promise of Qualitative Research to Inform Theory to Address Health Equity. Health Education & Behavior, 44(5), 815–819. 10.1177/1090198117728548

- Shelton RC, Philbin MM, & Ramanadhan S (2022). Qualitative Research Methods in Chronic Disease: Introduction and Opportunities to Promote Health Equity. Annual Review of Public Health, 43(1), 37–57. 10.1146/annurev-publhealth-012420-105104

- Silverio S, Hall J, & Sandall J (2020). Time and qualitative research: Principles, pitfalls, and perils. In Annual Qualitative Research Symposium, University of Bath, Bath.

- Singh H, Tang T, Thombs R, Armas A, Nie JX, Nelson MLA, & Gray CS (2022). Methodological Insights From a Virtual, Team-Based Rapid Qualitative Method Applied to a Study of Providers’ Perspectives of the COVID-19 Pandemic Impact on Hospital-To-Home Transitions. International Journal of Qualitative Methods, 21, 160940692211071. 10.1177/16094069221107144

- Stickley T, O’Caithain A, & Homer C (2022). The value of qualitative methods to public health research, policy and practice. Perspectives in Public Health, 142(4), 237–240. 10.1177/17579139221083814

- Tavory I, & Timmermans S (2014). Abductive analysis: Theorizing qualitative research. The University of Chicago Press.

- Teti M, Schatz E, & Liebenberg L (2020). Methods in the Time of COVID-19: The Vital Role of Qualitative Inquiries. International Journal of Qualitative Methods, 19, 160940692092096. 10.1177/1609406920920962

- Vindrola-Padros C, Chisnall G, Cooper S, Dowrick A, Djellouli N, Symmons SM, Martin S, Singleton G, Vanderslott S, Vera N, & Johnson GA (2020). Carrying Out Rapid Qualitative Research During a Pandemic: Emerging Lessons From COVID-19. Qualitative Health Research, 30(14), 2192–2204. 10.1177/1049732320951526

- Wa-Mbaleka S (2020). The Researcher as an Instrument. In Costa AP, Reis LP, & Moreira A (Eds.), Computer Supported Qualitative Research (pp. 33–41). Springer International Publishing.

- Washburn KJ, LeBrón AMW, Reyes AS, Becerra I, Bracho A, Ahn E, & Boden-Albala B (2022). Orange County, California COVID-19 Vaccine Equity Best Practices Checklist: A Community-Centered Call to Action for Equitable Vaccination Practices. Health Equity, 6(1), 3–12. 10.1089/heq.2021.0048

- Wiggins N (2012). Popular education for health promotion and community empowerment: A review of the literature. Health Promotion International, 27(3), 356–371. 10.1093/heapro/dar046

Data Availability Statement

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.