Abstract

This work shares a dataset of sentence pairs that map Spanish (SPA) to Mexican Sign Language (MSL) glosses, i.e., transcribed MSL, for downstream tasks. The dataset was prepared by constructing a SPA-to-MSL corpus with a specific representation of the SPA language for MSL interpretation. The proposed corpus is a reference dataset for evaluating diverse neural machine translation (NMT) system variants. With the support of MSL grammar books and advice from MSL interpreters, this study developed a SPA-to-MSL dataset of 3000 sentence pairs. The distribution of the 3000 sentences in the corpus follows the linguistic composition of the SPA language. To test the usability of the corpus as a data source for NMT, two neural transformers were fine-tuned on it. The first NMT model uses the Helsinki-NLP SPA-to-SPA transformer developed by the Language Technology Research Group at the University of Helsinki. The second NMT model uses BARTO, a SPA-to-SPA pre-trained neural transformer. Both evaluations followed a transfer learning strategy, which has been demonstrated to be effective for modeling low-resource languages. The NMT evaluation produced BLEU scores of 91.13 and 94.23, which are comparable to state-of-the-art NMT results for arbitrary languages. Moreover, the evaluation by a professional MSL interpreter rated 94% of the SPA sentences as effectively translated into MSL structures.

Subject terms: Quality of life, Patient education

Background & Summary

According to Besacier1, a low-resource language (LRL) is a language that lacks a unique writing system, lacks or has limited web presence, lacks linguistic expertise, and lacks electronic resources such as corpora (monolingual and parallel). LRLs are categorized based on the amount of publicly available data2. Table 1 summarizes these categories: the second column describes each class and the third column lists example languages.

Table 1.

Language categories according to the study presented by Joshi et al in2.

| Class | Description | Language Examples |
|---|---|---|
| 0 | Have limited resources and have rarely been considered in language technologies | Slovene, Sinhala |
| 1 | Have some unlabelled data; however, collecting labelled data is challenging | Nepali, Telugu |
| 2 | A small set of labels has been collected, and have language support communities | Zulu, Irish |
| 3 | Has a strong web presence and cultural community. They have been benefited by unsupervised pre-training | Afrikaans, Urdu |
| 4 | Have a large amount of unlabeled data, but a significant amount of labeled data, have dedicated NLP communities researching these languages | Russian, Hindi |
| 5 | Have a strong online presence. These have been massive investments in the development of resources and technologies | English, Japanese |

Sign languages (SLs) are considered LRLs compared to spoken languages. This characteristic arises from the fact that SLs often lack standardized written forms and comprehensive dictionaries, making it difficult to identify signs when finding grammatical relationships.

SLs are complex, non-verbal languages used primarily by deaf people to communicate. In general, SLs are a system of manual movements, facial expressions, body gestures, and various symbolic uses of space3. SLs vary according to geographic region and local interpretations, among other factors. Although SLs are very useful, there is a barrier between people with hearing impairment and those without knowledge of SLs. One significant reason for this barrier is that fewer educational resources and trained experts (linguists, teachers, interpreters) are available for SL interpretation. In addition, technological tools and resources (such as speech recognition software) are primarily developed for spoken languages, with less focus on accommodating SLs. Historically, SLs have not had the same recognition as spoken languages in different technical and scientific disciplines4.

MSL in Mexico is a minority language and presents a disadvantage in a cultural context because SPA determines the social value of communication5. Hence, modern methods are needed to integrate MSL and similar signed languages in societies with more advanced translation strategies. Such strategies may apply automated machine learning and NLP approaches. However, it is necessary to develop the corresponding datasets that can be used in the mentioned ML strategies. Moreover, such databases must be efficiently synthesized given the admitted linguistic rules for the spoken and the signed languages.

Scientists working in linguistics have found a way to capture and design language datasets through written support by categories, the so-called “glosses”6. Specifically, glossed SLs and SPA are lexically similar but syntactically different. Glosses determine the grammatical order in which SL signs are executed. Glossification, as defined in7, is the transcription of a natural language into glosses. Glosses consist of fewer tokens than their original language. For example, the English sentence Do you like to watch TV? is transcribed into American Sign Language (ASL) glosses as TV watch you like?. Most SLs have a subject-object-verb (SOV) structure, such as German Sign Language (GSL) and Mexican Sign Language (MSL).

Concerning MSL glosses, the literature is scarce. Some grammatical dictionaries, such as8, explain how to gloss. For example, the sentence “Está lloviendo en la ciudad” (“It is raining in the city”) is glossed as “ciudad ahí llover” (“city there rain”).

For text-to-text (T-T) translation with glosses, some corpora pair spoken languages with glosses, including corpora with gloss-to-spoken-language annotations, as Table 2 summarizes. The widely used sign language translation (SLT) dataset RWTH-PHOENIX-Weather 2014T contains only 8,257 parallel sentences. For comparison, in translation between spoken languages, 6,000 parallel sentences are considered a “tiny” amount9. For MSL glosses, no validated database is available for NMT, especially one that considers the permissible rules that define the interpretation of SPA into MSL.

Table 2.

Some available SL corpora with gloss annotations and spoken language translations.

| Corpus | Language-Pair | Parallel Gloss - Text Pairs | Vocabulary Size (gloss/spoken) |
|---|---|---|---|
| Signum50 | DGS-German | 780 | 565 / 1051 |
| NCSLGR51 | ASL-English | 1875 | 2484 / 3104 |
| ASLG-PC1252 | ASL-English | 87710 | 12344 / 16788 |
| RWTH-PHOENIX-Weather 2014T53 | DGS-German | 7096 + 519 + 642 | 1066 / 2887 + 393 / 951 + 411 / 1001 |
| Dicta-Sign-LSF-v254 | French SL-French | 2904 | 2266 / 5028 |
| The Public DGS Corpus55 | DGS-German | 63912 | 4694 / 23404 |

We have developed a dataset of SPA-to-MSL gloss of 3000 pairs. The development of the proposed gloss follows the linguistic rules considered valid for the SPA language, having similar representativeness, word organization, and structural similarity. The development of the gloss also considered the linguistic organization of the MSL, which provides a formal data source for performing natural language processing studies to help deaf people based on machine learning approaches.

The proposed glosses can be used to perform NMT from scratch or with a transfer-learning technique. In this study, the assessment technique uses an NMT model trained on a high-resource language pair to initialize a child model. Specifically, two transformer models pre-trained on SPA corpora were fine-tuned on our SPA-to-MSL dataset, obtaining acceptable performance in NMT metrics.

The article is organized as follows. Section Methods describes the methodology used to fine-tune the chosen pre-trained models on the SPA-to-MSL dataset. Section Data Records analyzes the dataset, including the vocabulary and sentence length per language. Section Technical validation covers the validation of the dataset through the fine-tuning of the M1 and M2 models and assesses their performance through NMT metrics such as BLEU, ROUGE, and TER.

The following section describes some fundamentals of NMT models and the necessity of a complete and usable corpus in the considered languages to justify the development and testing of the proposed corpus.

Methods

Neural machine translation (NMT)

NMT develops and implements artificial neural network models that learn the relationships between linguistic structures in two different languages. In NMT, the linguistic resources of a language pair are therefore determined by the amount of parallel corpora available between the considered languages. There is no minimum size requirement for the parallel corpora to categorize a language pair as high-, low-, or extremely low-resource. Even if a language has many monolingual corpora, it is considered LR for the NMT task when it has only a small parallel corpus with the other language.

Formally speaking, an NMT model γ translates a sentence xsrc in the source src language to a sentence ytgt in the target tgt. With a parallel training corpus C, the model γ is usually trained by minimizing the negative log-likelihood loss:

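$$\mathcal{L}_{\gamma} = -\sum_{(x_{src},\, y_{tgt}) \in C} \log p(y_{tgt} \mid x_{src}; \gamma) \tag{1}$$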

Here p(ytgt∣xsrc; γ) is the conditional probability of obtaining ytgt given xsrc with the model γ. NMT models commonly generate the target sentence from left to right. Considering that y contains m words, the conditional probability can be written as:

$$p(y_{tgt} \mid x_{src}; \gamma) = \prod_{i=1}^{m} p(y_{i} \mid y_{<i}, x_{src}; \gamma) \tag{2}$$
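As a small worked example of the left-to-right factorization and the negative log-likelihood loss, the following Python sketch computes the loss of one sentence from per-token probabilities. The probability values and the function names are hypothetical and only illustrate the arithmetic; they do not come from the models evaluated in this study.

```python
import math

def sentence_nll(token_probs):
    """Negative log-likelihood of one target sentence.

    token_probs[i] is p(y_i | y_<i, x_src; gamma) for the i-th target token.
    """
    return -sum(math.log(p) for p in token_probs)

def corpus_nll(corpus_token_probs):
    """Sum of sentence-level losses over a parallel corpus C."""
    return sum(sentence_nll(probs) for probs in corpus_token_probs)

# Hypothetical per-token probabilities for a three-token gloss.
probs = [0.9, 0.8, 0.95]
sentence_prob = math.prod(probs)  # product of the per-token conditionals
nll = sentence_nll(probs)         # equals -log(sentence_prob)
```

Minimizing the summed loss over the corpus is equivalent to maximizing the product of the sentence probabilities, which is why the loss is written as a sum of logarithms.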

The encoder-decoder architecture is frequently used in NMT tasks. The encoder transforms the source into a sequence of hidden states, and the decoder generates target words conditioned on the source’s hidden representations and the previously generated target words. The encoder and decoder can be recurrent neural networks (RNN)10 or neural transformers11. NMT of low-resource languages has benefited from techniques used in neural network theory, like zero-shot and transfer learning.

Zero-shot

Zero-shot translation refers to the ability of a translation model to handle language pairs that it has never seen during training. It is often achieved using multilingual NMT and transfer learning. For example, if a model was trained on English-to-French and English-to-German pairs, it might still be able to perform French-to-English translation, even though it was not explicitly trained for that pair12. Zero-shot learning is useful because it eliminates the requirement of parallel data between every pair of languages.

Transfer-learning NMT

Transfer learning is a sub-area of ML that transfers or adapts the knowledge gained from solving one automatic learning problem, task, or model (the parent model) by applying it to a related one (the child model). The parent model is trained on a large corpus of parallel data from a high-resource language pair and is then used to initialize the parameters of a child model trained on a small dataset (LRL)13. The advantages of this technique include a smaller data requirement for training the child, better performance on the child task, and faster convergence compared to child models trained from scratch.

There are two approaches to performing transfer learning: warm start and cold start. The first one leverages pre-trained models or existing knowledge from related tasks to initialize the training of a new model. It involves training a model on a large dataset, using the weights and parameters of the pre-trained model to initialize the new model, and then training the new model on the specific task or domain with task-specific data, which adjusts the pre-trained weights14–16. The latter involves training a model from scratch without leveraging pre-trained models. In this process, the weights and parameters of the model are randomly initialized and the model is trained from scratch using specific data17–19. Frequently, the parent and child have the same target language20–22, while other studies use the same source language for both parent and child23,24. However, the parent and child may also share no languages25,26. Despite the advantages offered by transfer learning, a complementary process is necessary to refine the relationship between the child and the parent language. This process is known as fine-tuning.

Fine-tuning is a process in ML where a pre-trained model is further trained on a specific dataset to adapt it to a particular task. It leverages the knowledge acquired by the model during its initial training phase and refines it to perform better on a specialized task. In automatic translation based on NLP, fine-tuning is a form of supervised training: a language model is first trained on a massive amount of general text data, and this pre-trained model is then adapted to a specific task by training it on a smaller, task-specific dataset with labeled examples. This technique has been broadly used in MT for LRL20,27,28.

Once the NMT’s usual characteristics have been evaluated, the dataset is described in detail, along with the corresponding analysis that validates it as a useful corpus between traditional SPA and MSL.

Dataset development methodology and evaluation methods

The sentence dataset (a corpus with SPA as source and MSL as target) was built taking into account MSL dictionaries29,30, MSL grammar books31, apps like interseña32, and the assessment of an MSL interpreter. In total, there are 3000 SPA-to-MSL sentence pairs for the NMT task. The sentences include common phrases in SPA such as greetings, expressions about the weather, emotions, days of the week, and questions.

All sentences in the dataset were configured to follow the grammar rules of the SPA language. In addition, the distribution of grammatical sentence types in the dataset was chosen to match their distribution in the general SPA language.

The corpus design strategy started from a source sentence with a fundamental subject, followed by a verb conjugated in different tenses with common complements. Then, the verbs selected in these sentences were fixed while the subjects were changed to cover male-female, singular-plural, explicit-implicit, and personal-impersonal sentences. In addition, some common complements were selected and combined with alternative verbs and the proposed subjects. This strategy provided a full set of sentence variants, giving the corpus the variability and complexity expected of a corpus potentially used for automatic translation. Figure 1 shows how variations of one SPA-to-MSL pair are produced. The attributive sentence, which contains an adjective (ADJ), yo (S) estoy (V) confundido (ADJ) (SPA) (I am confused (ENG)) turns into yo (S) confundido (ADJ) (MSL). If ADJ is fixed and S varies over different pronouns, yo, tu, el, ella, nosotros, ... (SPA) (I, you, he, she, we, ... (ENG)), the verb is conjugated in correspondence with S. Hence, different versions of the same SPA-to-MSL pair are obtained.

Fig. 1.

Corpus design process.

All the sentences were proposed considering common sentences used in the SPA language. The sentences and phrases match the standard and official grammar rules. This could look artificial regarding the colloquial sentences one may hear daily. Nevertheless, the proposed database must contain sentences corresponding to the official SPA language. Given this justification, the proposed sentences fulfill such conditions making them adequate for performing natural language processing studies as the examples shown in this study.

Several formal metrics were used to validate the developed corpus. The following subsections present the general characteristics of those metrics applied to the proposed corpus.

Lexical similarity

Disclaimer

This paper does not aim to reflect the real use of the SPA language. Instead, the goal is to develop a set of sentences based on grammar rules that do not intend to reflect actual language use. This study also acknowledges that language use varies by context, which this dataset does not attempt to mirror.

This section presents the lexical similarity of the proposed SPA-to-MSL pairs. This study considers two methods for lexical similarity analysis: Jaccard similarity and cosine similarity with word embeddings.

Lexical similarity quantifies the similarity of two lexical units, typically as a real number. A lexical unit can be an individual word, a compound word, or a text segment33. In computational linguistics, lexical similarity often refers to the similarity between sets of words or texts, which can be quantified using metrics such as Jaccard similarity and cosine similarity, among others. Both metrics were computed to measure the similarity score between MSL and SPA sentences. The Jaccard similarity score measures the similarity between sets; it is simple and efficient, but it does not capture semantic relationships. In contrast, cosine similarity with word embeddings captures semantic relationships but requires pre-trained word embeddings.

Jaccard similarity

Jaccard similarity belongs to the n-gram class of algorithms and measures the similarity between two sets of data. The Jaccard score ranges from 0 to 1; the higher the score, the higher the similarity between the two sets. The Jaccard similarity J(A, B) between two sets A and B is defined as the size of their intersection divided by the size of their union. Mathematically, it can be expressed as:

$$J(A, B) = \frac{|A \cap B|}{|A \cup B|} \tag{3}$$
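The Jaccard score can be illustrated with a minimal Python sketch over token sets. The function name is hypothetical, and the sketch only lowercases and splits on whitespace; it omits the lemmatization and stop-word removal applied in this study, so its scores are not the ones reported for the corpus.

```python
def jaccard_similarity(tokens_a, tokens_b):
    """Size of the intersection divided by the size of the union of two token sets."""
    set_a, set_b = set(tokens_a), set(tokens_b)
    union = set_a | set_b
    if not union:
        # Convention chosen for this sketch: two empty sets score 0.0.
        return 0.0
    return len(set_a & set_b) / len(union)

# Attributive example pair: "El niño es sordo" (SPA) / "niño sordo" (MSL).
spa_tokens = "el niño es sordo".split()
msl_tokens = "niño sordo".split()
score = jaccard_similarity(spa_tokens, msl_tokens)  # 2 shared tokens / 4 distinct tokens
```

Because MSL glosses drop articles and copular verbs, the union grows faster than the intersection, which lowers the score even for pairs that share all content words.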

Cosine similarity with word embeddings

TF-IDF stands for term frequency-inverse document frequency; it is the product of these two quantities. The TF-IDF vectorizer is a well-known NLP tool that converts a collection of documents into numerical vectors, which are used here as word embeddings. This method is widely used in information retrieval and text mining to identify significant words within documents, with applications such as sentiment analysis34,35 and document classification36.

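- Term frequency (TF). It is the relative number of times a word appears in a document and measures how important a word $w$ is in the document $d$. It is defined by equation (4), where $f_{w,d}$ is the frequency of $w$ in $d$ and the denominator sums the frequencies of all the words $w^{*}$ in $d$.

$$\mathrm{TF}(w, d) = \frac{f_{w,d}}{\sum_{w^{*} \in d} f_{w^{*},d}} \tag{4}$$

- Inverse document frequency (IDF). A word’s IDF measures how rare the word is across the collection of documents. It is described by equation (5), where $N_D$ is the total number of documents, $D$ is the set of all the documents, and the denominator is the cardinality of the set of documents that contain the word $w$.

$$\mathrm{IDF}(w, D) = \log \frac{N_D}{\left|\{d \in D : w \in d\}\right|} \tag{5}$$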

Hence, TF − IDF is given by:

$$\mathrm{TF\text{-}IDF}(w, d, D) = \mathrm{TF}(w, d) \cdot \mathrm{IDF}(w, D) \tag{6}$$

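- Cosine similarity. It is the cosine of the angle between two vectors $\mathbf{u}$ and $\mathbf{v}$ in the $n$-dimensional space. Equation (7) expresses the cosine similarity of $\mathbf{u}$ and $\mathbf{v}$, where $\mathbf{u} \cdot \mathbf{v}$ is the dot product of the vectors and $\|\mathbf{u}\|$ and $\|\mathbf{v}\|$ are their magnitudes.

$$\cos(\theta) = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{u}\| \, \|\mathbf{v}\|} \tag{7}$$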

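The value of cosine similarity ranges from −1 to 1: a) 1 indicates that the two vectors point in the same direction, b) 0 indicates that the two vectors are orthogonal (no similarity), and c) −1 indicates that the two vectors are opposed. Because TF-IDF weights are non-negative, the scores obtained with TF-IDF vectors lie between 0 and 1.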
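The TF, IDF, and cosine definitions can be combined in a short from-scratch Python sketch. It follows the unsmoothed textbook definitions given in this section and is not the scikit-learn implementation, whose TfidfVectorizer applies IDF smoothing and L2 normalization by default, so the numerical values differ. The function names and the three toy documents are assumptions made for illustration.

```python
import math
from collections import Counter

def term_frequencies(doc_tokens):
    """TF: frequency of each word divided by the total number of words in the document."""
    counts = Counter(doc_tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

def inverse_document_frequencies(docs):
    """IDF: log of the number of documents over the number of documents containing the word."""
    n_docs = len(docs)
    vocab = {word for doc in docs for word in doc}
    return {w: math.log(n_docs / sum(1 for doc in docs if w in doc)) for w in vocab}

def tfidf_vector(doc_tokens, idf, vocab):
    """TF-IDF weights of one document, ordered by a fixed vocabulary."""
    tf = term_frequencies(doc_tokens)
    return [tf.get(word, 0.0) * idf[word] for word in vocab]

def cosine_similarity(u, v):
    """Dot product divided by the product of the vector magnitudes."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention for all-zero vectors in this sketch
    return dot / (norm_u * norm_v)

# Toy documents: one SPA sentence, its MSL gloss, and an unrelated SPA sentence.
docs = [
    "mi amigo estudia en la biblioteca".split(),
    "biblioteca ahí amigo mío estudiar".split(),
    "el niño es sordo".split(),
]
vocab = sorted({word for doc in docs for word in doc})
idf = inverse_document_frequencies(docs)
vectors = [tfidf_vector(doc, idf, vocab) for doc in docs]

sim_pair = cosine_similarity(vectors[0], vectors[1])       # shares "amigo" and "biblioteca"
sim_unrelated = cosine_similarity(vectors[0], vectors[2])  # no shared words
```

Without lemmatization, estudia and estudiar count as different words, which is one reason the pre-processing step matters for this metric.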

As in the case of Jaccard similarity, both Xtrain and Xval were tokenized, lowercased, and lemmatized, and stop words and punctuation were removed, using the spaCy® library. The TfidfVectorizer class from the scikit-learn® library was used to compute the TF-IDF vectors.

NMT validation models

This study considers two SPA-pretrained models to validate the proposed corpus: Helsinki-NLP/opus-mt-es-es and BARTO. These models were selected because SPA words and MSL glosses correspond directly in many cases. For example, the word libro (SPA) (book (ENG)) in MSL gloss is LIBRO (MSL).

Helsinki-NLP/opus-mt-es-es (Model M1)

This model is part of the OPUS-MT project37, a collection of pre-trained NMT models developed by the Language Technology Research Group at the University of Helsinki38. It is built on the Marian MT framework, and its underlying architecture is a transformer encoder-decoder. The model translates from SPA to SPA and can be retrieved from an online repository at Hugging Face®39. A same-language model may seem redundant, but it is useful in scenarios where a specific dialect or variant of a language needs specialized handling or where bilingual data in the same language is used for training. It has potential applications in paraphrasing, correction, style transfer, and domain adaptation; the last one matches the objective of this work, SPA-to-MSL adaptation. The model uses SentencePiece (SP) for tokenization. SP analyzes the frequency and occurrence of character sequences to learn an optimal set of subword units and uses a unigram language model to estimate the probability of different subwords.

BARTO (Model M2)

Bidirectional and autoregressive transformers (BART) were proposed in40. BART is a denoising autoencoder that maps a corrupted document to the original document from which it was derived. It is implemented as a seq2seq model with a bidirectional encoder over corrupted text and a left-to-right autoregressive decoder. The base model uses six layers in each of the encoder and decoder, and the large model uses 12 layers in each. BART uses several corruption methods, including token masking, token deletion, text infilling (replacing spans of text with a mask token), and sentence permutation (shuffling the order of sentences). BART model variants come in different sizes, including:

Bart-base: It comes with six encoder layers, six decoder layers, and 139 million parameters.

Bart-large: It comes with 12 encoder layers, 12 decoder layers, and 406 million parameters.

BART uses subword tokenization based on byte-pair encoding (BPE), which helps to handle out-of-vocabulary (OOV) words by breaking them into subunits.

BARTO41 is a variant of BART; it follows the BART base architecture, with an encoder and a decoder of six layers each, twelve attention heads, and 768 hidden dimensions. It was trained exclusively on the SPA language and uses the same corruption methods as BART, but masks 30% of the tokens. It uses SP to build a tokenizer of 50,264 tokens and is suitable for generative tasks such as summarization, question answering, and MT.

Fine-tuning process

Fine-tuning of pre-trained NMT models involves several stages; the overall process is depicted in Fig. 2. Models M1 and M2 were fine-tuned in Google Colab® using the transformers library of Hugging Face®:

Fig. 2.

Fine-tuning process on SPA-to-MSL corpus.

1. Preparation of the dataset: The dataset was converted to comma-separated values (CSV) and divided into training (80%) and validation (20%) sets (Xtrain, Xval). Figure 17 shows a diagram of the methodology followed for pre-processing.

2. The AutoTokenizer class, which resolves to a different tokenizer for each pre-trained model, is loaded. It outputs the sentences in the format that each model accepts. For example, the M1 format is the following: , . Through a preprocessing function, the entire dataset is tokenized and truncated to max_length.

3. The tokenizer outputs a dictionary that contains input_ids, the token IDs associated with the SPA sentences, while the IDs associated with the MSL glosses are stored in the labels field. An attention_mask is also created; it indicates which tokens in a sequence should be attended to and which should be ignored. The decoder_input_ids are the shifted versions of labels.

4. A data_collator is a component of the Hugging Face transformers library that prepares batches of data for model training or inference. It applies dynamic padding, i.e., it pads all the sequences in a batch to the same length.

5. The model is loaded for seq2seq generation, i.e., to map an input sequence to an output sequence.

6. Hyperparameters were chosen to be equal for both models:

• evaluation_strategy: It was set to “epoch”. This means that the model is evaluated at the end of each epoch

• Validation batch size = 64

• Training batch size = 32

• Number of training epochs = 20

• Weight decay = 0.01

• Optimizer = Adam with β1 = 0.9, β2 = 0.9999 and ϵ = 1 × 10−8

7. The previous hyperparameters and the loaded model were passed to the Trainer class API to start training and evaluation with the SPA-to-MSL dataset.

Fig. 17.

Data-processing workflow for using the proposed corpus from SPA to MSL.
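The tokenizer outputs and the dynamic padding described in the steps above can be sketched in plain Python. This is a simplified illustration of what a seq2seq data collator does, not the code of the transformers library. The token IDs, pad_id, and decoder_start_id are hypothetical values that in practice come from each model's tokenizer and configuration; the value −100 is the label index that the loss ignores in Hugging Face seq2seq training.

```python
LABEL_IGNORE_ID = -100  # padded label positions are excluded from the loss

def collate(features, pad_id, decoder_start_id):
    """Pad a batch to its longest sequence and build shifted decoder inputs."""
    max_in = max(len(f["input_ids"]) for f in features)
    max_out = max(len(f["labels"]) for f in features)
    batch = {"input_ids": [], "attention_mask": [], "labels": [], "decoder_input_ids": []}
    for f in features:
        ids, labels = f["input_ids"], f["labels"]
        in_pad = max_in - len(ids)
        out_pad = max_out - len(labels)
        batch["input_ids"].append(ids + [pad_id] * in_pad)
        # 1 marks real tokens, 0 marks padding that attention should skip.
        batch["attention_mask"].append([1] * len(ids) + [0] * in_pad)
        batch["labels"].append(labels + [LABEL_IGNORE_ID] * out_pad)
        # Decoder inputs are the labels shifted one position to the right.
        shifted = [decoder_start_id] + (labels + [pad_id] * out_pad)[:-1]
        batch["decoder_input_ids"].append(shifted)
    return batch

# Two hypothetical tokenized SPA-to-MSL pairs (ID 2 plays the end-of-sentence role).
features = [
    {"input_ids": [11, 12, 13, 14, 2], "labels": [21, 22, 2]},
    {"input_ids": [15, 16, 2], "labels": [23, 2]},
]
batch = collate(features, pad_id=0, decoder_start_id=0)
```

Padding per batch rather than to a global max_length keeps short gloss sequences from being dominated by padding tokens.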

This fine-tuning process was implemented for both NMT models. Such a strategy simplified the validation of the proposed corpus.
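To make the training budget implied by these hyperparameters concrete, the following Python sketch derives the split sizes and the number of optimizer steps. The class and function names are hypothetical, and the arithmetic assumes a single device, no gradient accumulation, and that a final partial batch is kept; none of these details is stated in this study.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class FineTuneConfig:
    """Hyperparameter values listed in the fine-tuning process (field names are illustrative)."""
    train_fraction: float = 0.8
    train_batch_size: int = 32
    eval_batch_size: int = 64
    num_epochs: int = 20
    weight_decay: float = 0.01
    adam_beta1: float = 0.9
    adam_beta2: float = 0.9999
    adam_epsilon: float = 1e-8

def training_schedule(num_pairs, cfg):
    """Split sizes and step counts for a corpus of num_pairs sentence pairs."""
    n_train = round(num_pairs * cfg.train_fraction)
    n_val = num_pairs - n_train
    steps_per_epoch = math.ceil(n_train / cfg.train_batch_size)
    return {
        "n_train": n_train,
        "n_val": n_val,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": steps_per_epoch * cfg.num_epochs,
        "eval_batches": math.ceil(n_val / cfg.eval_batch_size),
    }

schedule = training_schedule(3000, FineTuneConfig())
```

Under these assumptions, the 3000-pair corpus yields 2400 training pairs and 600 validation pairs, i.e., 75 optimizer steps per epoch and 1500 steps over the 20 epochs.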

Computational resources

Both M1 and M2 models were downloaded from Hugging Face42 and fine-tuned in the Google Colab® environment using the transformers library. Training arguments were defined in the TrainingArguments class, which contains all the hyperparameters for training and evaluation. Next, both pre-trained models were fine-tuned with the Trainer class API. The evaluation used a Colab L4 GPU with 22.5 GB of GPU memory. The Colab backend environment provides 53 GB of system RAM and uses Python 3.

Evaluation metrics

The metrics used to evaluate and compare the performance of the M1 and M2 models are sacreBLEU, ROUGE, and TER, which are frequently used in NMT to assess translation quality.

Bilingual language evaluation understudy (BLEU)

BLEU is a metric for assessing the quality of machine-generated text43. The BLEU score evaluates how close translations are to their reference. It does not measure intelligibility or grammatical correctness. Different implementations of BLEU can also produce varying scores due to inconsistencies in tokenization, case handling, and smoothing techniques, and parameters often need manual tuning for specific datasets. SacreBLEU44 addresses these shortcomings by providing a standardized implementation that specifies the tokenization, lowercasing, and smoothing methods, which eliminates ambiguity. SacreBLEU computes BLEU directly at the corpus level, considering the entire test set as a single unit instead of scoring sentences individually. This makes the scores reported by different studies comparable.

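This metric ranges from 0 to 1 and is often reported as a percentage (0 to 100). Few translations attain a score of 1 unless they are identical to their reference. Scores also tend to be higher when more reference translations per sentence are available. Typically, a BLEU of ≥50% is considered acceptable for NMT. The precision for n-grams, $p_n$, is calculated as the ratio of the number of n-grams in the candidate translation that also appear in the reference translation (each counted at most as many times as it occurs in the reference, $\mathrm{Count}_{clip}$) to the total number of n-grams in the candidate:

$$p_n = \frac{\sum_{\text{n-gram} \in \text{candidate}} \mathrm{Count}_{clip}(\text{n-gram})}{\sum_{\text{n-gram} \in \text{candidate}} \mathrm{Count}(\text{n-gram})} \tag{8}$$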

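A brevity penalty (BP) prevents very short translations, which can reach a high precision while omitting content, from receiving high scores. It is calculated as follows:

$$\mathrm{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{\,1 - r/c} & \text{if } c \le r \end{cases} \tag{9}$$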

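Here $c$ is the length of the candidate translation and $r$ is the length of the reference translation. The n-gram precisions are combined through their weighted geometric mean over different n-gram orders (usually $n = 1$ to $N = 4$), where $w_n$ is the weight of each n-gram order, often set to $1/N$:

$$\mathrm{GM} = \exp\left(\sum_{n=1}^{N} w_n \log p_n\right) \tag{10}$$

The final sacreBLEU score is calculated by combining the brevity penalty and the geometric mean of the calculated precisions.

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right) \tag{11}$$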
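The combination of clipped n-gram precision, brevity penalty, and geometric mean can be sketched as a minimal corpus-level BLEU in Python. This is an illustration under simplifying assumptions (whitespace tokenization, one reference per sentence, uniform weights, no smoothing) and not the sacreBLEU implementation, which standardizes tokenization and offers smoothing. The function names are hypothetical, and the example glosses are taken from the grammatical examples of this paper.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def corpus_bleu(candidates, references, max_n=4):
    """Corpus-level BLEU on a 0 to 1 scale with uniform weights and no smoothing."""
    matches = [0] * max_n
    totals = [0] * max_n
    cand_len = ref_len = 0
    for cand, ref in zip(candidates, references):
        cand_tokens, ref_tokens = cand.split(), ref.split()
        cand_len += len(cand_tokens)
        ref_len += len(ref_tokens)
        for n in range(1, max_n + 1):
            cand_counts = Counter(ngrams(cand_tokens, n))
            ref_counts = Counter(ngrams(ref_tokens, n))
            # Clipped counts: a candidate n-gram is credited at most as often as in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
            totals[n - 1] += sum(cand_counts.values())
    if cand_len == 0 or min(matches) == 0:
        return 0.0  # without smoothing, any zero precision gives a zero score
    log_mean = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if cand_len > ref_len else math.exp(1 - ref_len / cand_len)
    return brevity_penalty * math.exp(log_mean)

references = ["biblioteca ahí amigo mío estudiar", "niño sordo"]
perfect = corpus_bleu(references, references)
# Dropping the last gloss keeps every precision at 1 but triggers the brevity penalty.
truncated = corpus_bleu(["biblioteca ahí amigo mío", "niño sordo"], references)
```

Since glosses are short, many sentences contain no 4-grams at all; aggregating counts over the whole corpus before computing the precisions is what keeps the metric usable on such data.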

Translation edit rate (TER)

TER45 is a metric that evaluates the quality of machine translations by calculating the number of edits needed to transform the hypothesis (candidate sentence) into the reference sentence. The types of edit are as follows:

Insertion I: Adding a word to the hypothesis.

Deletion D: Removing a word from the hypothesis.

Substitution S: Replacing a word in the hypothesis with a different word.

Shift Sh: Moving a contiguous sequence of words to different positions.

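TER is computed as the number of edits divided by the average number of words in the reference, $N_{ref}$:

$$\mathrm{TER} = \frac{I + D + S + Sh}{N_{ref}} \tag{12}$$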

A low TER (close to 0) indicates high-quality translations with few required edits, while a high TER indicates lower-quality translations with many edits needed to match the reference.
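The insertion, deletion, and substitution part of the metric can be sketched with a word-level edit distance in Python. Note that this sketch deliberately omits the shift operation, so it computes an upper bound on TER (equivalent to a word error rate) rather than TER itself; the function names are hypothetical.

```python
def word_edit_distance(hypothesis, reference):
    """Minimum number of word insertions, deletions, and substitutions (no shifts)."""
    hyp, ref = hypothesis.split(), reference.split()
    previous = list(range(len(ref) + 1))
    for i, hyp_word in enumerate(hyp, start=1):
        current = [i]
        for j, ref_word in enumerate(ref, start=1):
            substitution_cost = 0 if hyp_word == ref_word else 1
            current.append(min(
                previous[j] + 1,                      # delete hyp_word
                current[j - 1] + 1,                   # insert ref_word
                previous[j - 1] + substitution_cost,  # match or substitute
            ))
        previous = current
    return previous[-1]

def edit_rate_without_shifts(hypothesis, reference):
    """Edits divided by the reference length; an upper bound on TER."""
    ref_len = len(reference.split())
    if ref_len == 0:
        raise ValueError("reference must contain at least one word")
    return word_edit_distance(hypothesis, reference) / ref_len

reference = "biblioteca ahí amigo mío estudiar"
hypothesis = "amigo mío biblioteca ahí estudiar"
edits = word_edit_distance(hypothesis, reference)
rate = edit_rate_without_shifts(hypothesis, reference)
```

In this example the hypothesis differs from the reference only in word order: without shifts it needs four edits, whereas moving the block amigo mío in a single shift would be enough, which is why TER includes the shift operation for languages whose glosses reorder constituents.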

Recall-oriented understudy for gisting evaluations (ROUGE)

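ROUGE46 is a set of metrics originally used to evaluate the quality of summaries by comparing them to reference summaries; it is also applied to translation tasks. It measures the overlap of n-grams between the candidate and the reference. The most commonly used n-grams are unigrams (ROUGE-1) and bigrams (ROUGE-2). ROUGE-N is computed as follows, where $\mathrm{Count}_{match}$ is the number of n-grams that co-occur in the candidate and the reference:

$$\text{ROUGE-N} = \frac{\sum_{S \in \text{References}} \sum_{gram_n \in S} \mathrm{Count}_{match}(gram_n)}{\sum_{S \in \text{References}} \sum_{gram_n \in S} \mathrm{Count}(gram_n)} \tag{13}$$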
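As an illustration, ROUGE-N recall for a single candidate and reference can be sketched in Python as follows. The sketch assumes whitespace tokenization and a single reference, and the function name is hypothetical; evaluation libraries additionally report precision and F-measure variants.

```python
from collections import Counter

def rouge_n_recall(candidate, reference, n=1):
    """Matched reference n-grams divided by the total number of reference n-grams."""
    def ngram_counts(text):
        tokens = text.split()
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    candidate_counts = ngram_counts(candidate)
    reference_counts = ngram_counts(reference)
    total = sum(reference_counts.values())
    if total == 0:
        return 0.0  # the reference is shorter than n tokens
    overlap = sum(min(count, candidate_counts[gram]) for gram, count in reference_counts.items())
    return overlap / total

reference = "biblioteca ahí amigo mío estudiar"
candidate = "biblioteca amigo mío estudiar"
rouge_1 = rouge_n_recall(candidate, reference, n=1)  # 4 of 5 reference unigrams matched
rouge_2 = rouge_n_recall(candidate, reference, n=2)  # 2 of 4 reference bigrams matched
```

Unlike BLEU, the denominator counts the n-grams of the reference, so the metric penalizes omitted glosses rather than spurious ones.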

Data Records

Data records repository

The SPA-to-MSL dataset47 is publicly available under the DOIs 10.6084/m9.figshare.28519580 and 10.57760/sciencedb.21522. It consists of multiple files in different formats to support the use and evaluation of the SPA-to-MSL corpus.

esp-lsm_glosses_corpus.xlsx: The primary dataset, which contains 3,000 pairs of sentences in Spanish and their corresponding MSL glosses in an Excel® spreadsheet with the .xlsx extension. The first column, named esp, contains the sentences in SPA, while the second column, named msl, contains their corresponding translations to MSL.

esp-lsm_glosses_corpus.csv: This is a comma separated values file (csv) version of esp-lsm_glosses_corpus.xlsx for easier processing in NLP pipelines.

esp_lsm_analysis.ipynb: A Jupyter notebook containing exploratory data analysis (EDA) on the dataset, including statistical insights.
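A minimal Python sketch of how the CSV file could be read to obtain sentence-length and vocabulary statistics is given next. It assumes that the CSV version keeps the esp and msl column headers of the spreadsheet; the function names and the two in-memory sample rows are illustrative and are not copied from the dataset.

```python
import csv
import io
import statistics

def load_pairs(csv_file):
    """Read (SPA, MSL) sentence pairs from a file object with esp and msl columns."""
    reader = csv.DictReader(csv_file)
    return [(row["esp"], row["msl"]) for row in reader]

def length_stats(sentences):
    """Sentence length (in words) and vocabulary size for one side of the corpus."""
    lengths = [len(sentence.split()) for sentence in sentences]
    vocabulary = {word.lower() for sentence in sentences for word in sentence.split()}
    return {
        "mean_length": statistics.mean(lengths),
        "max_length": max(lengths),
        "vocabulary_size": len(vocabulary),
    }

# Two illustrative rows in the assumed layout of the CSV file.
sample = io.StringIO("esp,msl\nEl niño es sordo,niño sordo\nTres patos,tres pato\n")
pairs = load_pairs(sample)
spa_stats = length_stats([spa for spa, _ in pairs])
msl_stats = length_stats([msl for _, msl in pairs])
```

For the published file, the in-memory sample would be replaced by a file object, for example one opened with open("esp-lsm_glosses_corpus.csv", encoding="utf-8", newline="").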

Grammatical structures in MSL

The SPA-to-MSL dataset was built considering the following grammatical structures:

1. Gender:

The Real Academia Española (RAE)® defines gender48 as the grammatical category inherent in nouns and pronouns, encoded by agreement in other classes of words, which in animate pronouns and nouns can express sex. It is divided into male (M) and female (F). In MSL, gender does not exist. Therefore, the definite articles (Ar) el, la, los, las (the in English) and the indefinite ones un, una, unos, unas (a/an in English) do not exist in MSL. Furthermore, for the F gender, the hand sign for mujer (woman (ENG)) goes after the subject (S). In sentence 1 (a), the word niña (SPA) (girl (ENG)) is made up of the hand signs niño + mujer (SPA) (boy + woman (ENG)); the same applies to the word esposa (SPA) (wife (ENG)), which becomes esposo + mujer (husband + woman (ENG)) in MSL.

(a) La (Ar) niña (S) (SPA) / The (Ar) girl (S) (ENG) / niño (S) + mujer (S) (MSL)

(b) La (Ar) esposa (S) (SPA) / The (Ar) wife (S) (ENG) / esposo (S) + mujer (S) (MSL)

2. Plural:

Plural and singular determine the quantity of a noun. Plural means more than one instance of the noun that is being expressed. In SPA, plurals are formed by adding the morphemes -s or -es at the end of the word. However, these morphemes do not exist in MSL, where the plural is built using different methods, as the following sentences demonstrate. In the first method, plurals take the form number + subject (num + S) or the reverse order (S + num), as in sentence (a): Tres (num) patos (S) (SPA) (three (num) ducks (S) (ENG)) becomes tres (num) + pato (S) (MSL) or pato (S) + tres (num) (MSL). The second method repeats the hand sign; it is not necessary to count the number of repetitions. This method is used when the characteristics of the hand sign or its classifier (cl) facilitate it. In sentence (b), Las (Ar) casas (S) (SPA) (the (Ar) houses (S) (ENG)), the plural casas becomes casa (S) + casa (S) + casa (S) in MSL. The third method uses quantitative adjectives (ADJ) that add indefinite information about the quantity of the subject; some examples are many, some, and all. In sentence (c), muchos (ADJ) gatos (S) (SPA) (many (ADJ) cats (S) (ENG)) becomes gatos (S) muchos (ADJ) in MSL, with the quantitative adjective placed after S. The fourth method uses plural pronouns (PRON): ellos(as), nosotros, and ustedes (they, we, and you). In this kind of sentence, S is signed first and the PRON is placed after it. Sentence (d) is a clear example: los (Ar) maestros (S) (SPA) turns into maestros (S) ellos (PRON) (MSL):

(a) Tres (num) patos (S) (SPA) / three (num) ducks (S) (ENG) / tres (num) + pato (S) (MSL)

(b) Las (Ar) casas (S) (SPA) / The (Ar) houses (S) (ENG) / casa (S) + casa (S) + casa (S) (MSL)

(c) Muchos (ADJ) gatos (S) (SPA) / many (ADJ) cats (S) (ENG) / gatos (S) muchos (ADJ) (MSL)

(d) Los (Ar) maestros (S) (SPA) / the (Ar) professors (S) (ENG) / maestros (S) ellos (PRON) (MSL).

3. Attributive sentences/copular sentences:

The structure of attributive sentences is subject (S) + verb (V) + adjective/attributive (ADJ). Some examples of copular verbs are the verb to be (is, am, are, was, were), appear, seem, look, sound, smell, taste, feel, become, and get.

The structure of an attributive sentence in MSL is S + ADJ; the verb is omitted. Sentences 3 (a) and (b) are attributive sentences. Sentence 3 (a), Mi amiga (S) es (V) distraída (ADJ) (SPA), whose order is S + V + ADJ, becomes S + ADJ in MSL: amigo mujer mía (S) distraída (ADJ) (MSL).

Mi amiga (S) es (V) distraída (ADJ)(SPA) / My friend (S) is (V) distracted (ADJ)(ENG) / Amigo mujer mía (S) distraída (ADJ)(MSL).

El niño (S) es (V) sordo (ADJ) (SPA) / The boy (S) is (V) deaf (ADJ) (ENG) / Niño (S) sordo (ADJ) (MSL).

4. Circumstantial complement of place (CCP).

The CCP gives information about the place where the action expressed by the verb takes place. It can be identified by asking the question where?. In MSL, if the CCP is a noun phrase, the place (P) goes first, followed by the adverb (ADV) ahí (SPA) (there (ENG)), then S, and finally V. The resulting structure is CCP + there (ADV) + S + V. For example, in sentence 4 (a), mi amigo (S) estudia (V) en la (ADV) biblioteca (CCP) (SPA) (My friend (S) studies (V) at the (ADV) library (CCP) (ENG)), asking Where does my friend study? yields the CCP of the sentence: at the (ADV) library. In MSL the order of the sentence changes to P + ADV + S + V: biblioteca (P) ahí (ADV) amigo mío (S) estudiar (V).

Mi amigo (S) estudia (V) en la (ADV) biblioteca (CCP) (SPA) / My friend (S) studies (V) (at the (ADV) library) (CCP) (ENG) / Biblioteca (P) ahí (ADV) amigo mío (S) estudiar (V) (MSL).

Mi amiga (S) va (V) (a la (ADV) universidad) (CCP) (SPA) / My friend (S) goes (V) (to the (ADV) university) (CCP) (ENG) / Mi amiga (S) va (V) (a la (ADV) universidad) (CCP) (MSL).

5. Transitive sentences (TS)

As the word transitive indicates, a transitive verb transfers the action to something or someone: an object (O), also called direct object (DO). Transitive verbs demand objects because they exert an action on them. In other words, a transitive verb that lacks an object to affect is incomplete or does not make sense49. The subject can be either a noun (N) or a pronoun (PRON). Examples of TS are 5 (a) and (b). Sentence 5 (a), Ella (S) cocina (V) arroz (DO) (SPA) (She (S) cooks (V) rice (DO) (ENG)), with structure S + V + DO, becomes S + DO + V in MSL: Ella (S) arroz (DO) cocinar (V). Here, Ella (She) is a PRON acting as S.

Ella (S) cocina (V) arroz (DO)(SPA) / She (S) cooks (V) rice (DO)(ENG) / Ella (S) arroz (DO) cocinar (V)(MSL).

Yo (S) aprendo (V) LSM (DO)(SPA) / I (S) learn (V) MSL (DO)(ENG) / Yo (S) LSM (DO) aprender (V)(MSL).

6. Intransitive sentences (IS)

IS do not include a DO: intransitive verbs do not require an object to act upon. Some examples of intransitive verbs are cry, sing, sleep, and laugh. Sentences 6 (a) and (b) demonstrate how SPA IS are turned into MSL structure. Sentence 6 (a), El (S) corre (V) (SPA) (He (S) runs (V) (ENG)), with grammatical structure S + V, keeps the same order in MSL: El (S) correr (V) (MSL). El (S) is a PRON, and the verb appears in its lemmatized form.

El (S) corre (V)(SPA) / He (S) runs (V)(ENG) / El (S) correr (V)(MSL).

La (Ar) niña (S) canta (V)(SPA) / The (Ar) girl (S) sings (V)(ENG) / niño (S) + mujer (S) cantar (V)(MSL).

7. Circumstantial Complement of Time (CCT)

The CCT gives information about the time when the action of the verb takes place. Expressions such as morning, at 6 P.M., early, and yesterday indicate the moment at which the action occurs. Sentences 7 (a) and (b) are examples of the usage of CCT. In both cases the order S + V + DO + CCT switches to CCT + S + DO + V in MSL:

(Nosotros (as)) Veremos (V) una película (DO) mañana (CCT) (SPA) / We (S) will watch (V) a movie (DO) tomorrow (CCT) (ENG) / mañana (CCT) nosotros (PRON) película (DO) ver (V) (MSL).

(yo) fui (V) a bailar (DO) ayer (CCT)(SPA) / I (PRON) went (V) dancing (DO) yesterday (CCT)(ENG) / ayer (CCT) yo (S) bailar (DO) ir (V)(MSL).
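The constituent orders described for CCP, TS, and CCT sentences can be illustrated with a toy function over constituents that are already tagged and lemmatized. This is only a sketch: the function name, the dictionary layout, and the relative order used when a sentence has both a CCT and a CCP are assumptions made for illustration, and it is not the procedure used to build the corpus.

```python
# Toy sketch: reorder pre-tagged, already-lemmatized constituents into the
# MSL orders described in the text. Tag names (S, V, DO, CCP, CCT) follow the
# paper; the function itself is a hypothetical illustration.

def to_msl_order(constituents):
    """constituents: dict mapping a tag to its gloss string."""
    ordered = []
    if "CCT" in constituents:      # time complement is signed first
        ordered.append(constituents["CCT"])
    if "CCP" in constituents:      # place complement, followed by 'ahí' (there)
        ordered.append(constituents["CCP"])
        ordered.append("ahí")
    for tag in ("S", "DO", "V"):   # subject, then object, verb last
        if tag in constituents:
            ordered.append(constituents[tag])
    return " ".join(ordered)

transitive = to_msl_order({"S": "ella", "V": "cocinar", "DO": "arroz"})
place = to_msl_order({"S": "amigo mío", "V": "estudiar", "CCP": "biblioteca"})
time = to_msl_order({"S": "nosotros", "V": "ver", "DO": "película", "CCT": "mañana"})

print(transitive)  # ella arroz cocinar
print(place)       # biblioteca ahí amigo mío estudiar
print(time)        # mañana nosotros película ver
```

The three calls reproduce the glosses of sentences 5 (a), 4 (a), and 7 (a). Rules such as gender marking (niño + mujer) or plural repetition would need additional handling that this sketch omits.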

Technical Validation

Composition analysis of SPA-to-MSL dataset

Figures 3 and 4 show the frequency of tagged tokens for SPA and MSL sentences, respectively, after being processed as documents with the spaCy® library. A clear difference between the two sets is that SPA sentences contain more determiners (DET) than MSL sentences. Examples of DET in SPA are un, una, la, el, su, mi (a, an, the (ENG)); in contrast, la, el, un, and una do not exist in MSL. In addition, auxiliaries (AUX) such as ser, estar, and their conjugations (the verb to be) exist in SPA but not in MSL, so there are fewer AUXs in MSL.

Fig. 3.

POS for SPA sentences.

Fig. 4.

POS for MSL sentences.

Common adjectives in the SPA dataset include oyente, sordo, alegre, triste, etc. (SPA) (hearing, deaf, happy, sad (ENG)). PRONs like yo, tú, él, ella, nosotros, ustedes, ellos are present in both SPA and MSL. PUNCT refers to punctuation such as ?, ¿, and (). Adverbs (ADV) in the dataset primarily represent place, time, and negation. There are more ADVs in the SPA dataset.

Figure 5 illustrates the distribution of sentence lengths for both languages. The median sentence length for SPA is four words per sentence, while for MSL it is three words per sentence. The interquartile range (IQR) for SPA sentences is wider than for MSL, indicating greater variation in sentence lengths. Some outliers are present in both datasets, represented by dots above the whiskers in the boxplot.

Fig. 5.

Distribution of sentence lengths of SPA-to-MSL pairs.
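The summary statistics behind a boxplot such as Fig. 5 can be computed as in the following minimal sketch. The helper name is hypothetical, whitespace tokenization is an assumption, and the sample sentences are taken from the examples in this section rather than from the full dataset, so the printed values do not reproduce the reported medians.

```python
import statistics

def length_summary(sentences):
    """Median and interquartile range of sentence lengths, in words."""
    lengths = [len(s.split()) for s in sentences]  # whitespace tokenization (assumption)
    q1, _, q3 = statistics.quantiles(lengths, n=4)
    return {"median": statistics.median(lengths), "iqr": q3 - q1, "max": max(lengths)}

# Small illustrative samples, not the real corpus
spa_sample = [
    "Ella cocina arroz",
    "El corre",
    "La niña canta",
    "Mi amiga es distraída",
    "Los niños estudian inglés todos los días",
]
msl_sample = [
    "ella arroz cocinar",
    "el correr",
    "niño mujer cantar",
    "amigo mujer mía distraída",
    "todos los días niño ellos inglés estudiar",
]

spa_stats = length_summary(spa_sample)
msl_stats = length_summary(msl_sample)
print(spa_stats)
print(msl_stats)
```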

Regarding sentence structures, SPA sentences may contain articles (el, la, los, las, un, una), auxiliary verbs (ser, estar), and other grammatical components that are not typically present in MSL glosses. Additionally, some structures in MSL involve classifiers or the fusion of multiple Spanish words into a single gloss representation. The dataset also includes sentences with different syntactic structures, such as circumstantial complement of place (CCP), circumstantial complement of time (CCT), and transitive sentences (TS).

Some outliers in SPA include A + S structures, an article (A) with a subject (S), that become a single subject (S) in MSL: “La casa / El niño” (“The house / The boy”) become “casa / niño” (house / boy). Impersonal sentences that have no specific subject, such as hace calor / está haciendo calor (“It is hot” / “It is warm”), become the single word “calor” (warm/hot) in MSL. Plural forms that use classifiers also belong to this kind of sentence: “los autos” / “las casas” become “auto+auto+auto (car+car+car) / casa+casa+casa (house+house+house)” in MSL. The samples with 6, 7, and 8 words include a variety of structures, among them circumstantial complement of place (CCP), circumstantial complement of time (CCT), and transitive sentences (TS). Some examples are as follows.

• Sentences with six words:

TS: Mi tía quiere aprender LSM (SPA) (my aunt wishes to learn MSL) → tío mujer mía lsm aprender querer (MSL) (uncle+woman mine MSL to learn to want).

CCT: mi novio irá a la universidad la próxima semana (SPA) (my boyfriend will go to university next week) → próxima semana novio mío universidad ir (MSL) (next week boyfriend my university to go)

• Sentences with seven words:

CCT: Los niños estudian inglés todos los días (SPA) (The kids study English every day) → todos los días niño ellos inglés estudiar (MSL) (every day boy them English to study)

CCP: Las niñas juegan futbol en la calle (SPA) (The girls play soccer in the street) → calle ahí niño mujer ellas futbol jugar (MSL) (street there boy woman they soccer to play)

• Sentences with eight words:

CCT: Mi hija va a la escuela de lunes a viernes (SPA) (My daughter goes to school from Monday to Friday) → Lunes a Viernes hijo+mujer mía universidad ir (MSL) (Monday to Friday son+woman my university to go)

Splitting data

Originally, the vocabulary size was 820 tokens for SPA sentences and 650 for MSL. The AutoTokenizer of the model simplified loading the appropriate tokenizer for the pre-trained language model (in this case SP), and it loaded the special tokens specific to the chosen model (<S>: start of the sentence, <UNK>: unknown words, <PAD>: padding token, </S>: end of a sentence). The data set was divided into 80% for training and the rest for validation.

We then obtained the metrics of the dataset (Table 3): unique words, computed as the set of words without considering punctuation; the total number of words (total words); and out-of-vocabulary (OOV) words, that is, words present in the validation data but not in the training data.

Table 3.

Statistics of MSL Dataset.

| | Xtrain | | Xval | |

|---|---|---|---|---|

| Sentences | 2400 | | 600 | |

| Languages | SPA | MSL | SPA | MSL |

| Unique words | 759 | 579 | 447 | 347 |

| Total words | 9887 | 8727 | 2389 | 2170 |

| OOV | — | — | 54 | 41 |
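The quantities in Table 3 can be computed along the following lines. This is a minimal sketch under stated assumptions: the function names are hypothetical, words are lowercased before counting, and OOV is counted over unique word types; the source does not state these details. The sentences are small illustrative samples, not the dataset.

```python
import string

def tokenize(sentence):
    """Lowercase, strip punctuation (including Spanish ¿ and ¡), split on whitespace."""
    table = str.maketrans("", "", string.punctuation + "¿¡")
    return sentence.lower().translate(table).split()

def corpus_stats(train_sentences, val_sentences):
    train_tokens = [t for s in train_sentences for t in tokenize(s)]
    val_tokens = [t for s in val_sentences for t in tokenize(s)]
    train_vocab, val_vocab = set(train_tokens), set(val_tokens)
    return {
        "train_unique": len(train_vocab),
        "train_total": len(train_tokens),
        "val_unique": len(val_vocab),
        "val_total": len(val_tokens),
        # OOV: word types seen in validation but never in training (assumption)
        "oov": len(val_vocab - train_vocab),
    }

train = ["Ella cocina arroz.", "Yo aprendo LSM.", "El corre."]
val = ["Ella aprende LSM.", "¿El corre?"]
stats = corpus_stats(train, val)
print(stats)
```

In this sample the only validation word missing from training is aprende, so the OOV count is 1.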

Lexical analysis

The data set was divided into 80% for training, defined as Xtrain (2400 sentences), and 20% for validation, defined as Xval (600 sentences). Given this separation, the Jaccard similarity J (A,B) was computed for each SPA-to-MSL pair in Xval. To obtain the similarity score, the data set was tokenized, lowercased, and lemmatized, and punctuation and stop words were excluded. The average J (A,B) was 0.35. The frequency distribution of the J (A,B) score is shown in Fig. 6. More than 100 sentences had scores close to 0, most sentences fell between 0.4 and 0.6, and a few (approximately 30 sentences) had a score of 1.0.

For the cosine similarity, the vectorizer was fitted on Xtrain, and Xval was then transformed into a TF-IDF sparse matrix of size 600 × 564 (embeddings). With these embeddings, the cosine similarity was calculated with equation (7), giving an average similarity score of 0.61. The distribution of the cosine similarity score is shown in Fig. 7, where most SPA-to-MSL sentences of Xval have a score of 1.0, nearly 140 sentences have a score of 0.0, and no sentence has a score of −1.

Figure 8 shows the percentage difference between the source and target sets of sentences. In the case of determiners (DET), the graph shows that SPA sentences have 68.83% more than MSL sentences; similarly, the quantity of auxiliary elements (AUX) is 84.85% higher in SPA than in MSL.
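Both similarity measures can be sketched in plain Python. The code below is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the inputs are assumed to be already tokenized and lemmatized, and the smoothed IDF form ln((1 + N) / (1 + df)) + 1 is one common choice that may differ from the vectorizer settings actually used.

```python
import math
from collections import Counter

def jaccard(a_tokens, b_tokens):
    """Jaccard similarity |A ∩ B| / |A ∪ B| over sets of tokens."""
    a, b = set(a_tokens), set(b_tokens)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def fit_idf(train_docs):
    """Smoothed inverse document frequency fitted on the training documents."""
    n = len(train_docs)
    df = Counter(tok for doc in train_docs for tok in set(doc))
    return {tok: math.log((1 + n) / (1 + c)) + 1.0 for tok, c in df.items()}

def tfidf(doc, idf):
    """Sparse TF-IDF vector; tokens unseen during fitting are ignored."""
    counts = Counter(tok for tok in doc if tok in idf)
    return {tok: c * idf[tok] for tok, c in counts.items()}

def cosine(u, v):
    dot = sum(w * v.get(tok, 0.0) for tok, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

# Illustrative lemmatized pairs (SPA tokens, MSL tokens)
reordered = (["yo", "vivir", "méxico"], ["méxico", "yo", "vivir"])
partial = (["amigo", "estudiar", "biblioteca"],
           ["biblioteca", "ahí", "amigo", "mío", "estudiar"])

idf = fit_idf([reordered[0], reordered[1], partial[0], partial[1]])

j_reordered = jaccard(*reordered)
j_partial = jaccard(*partial)
c_reordered = cosine(tfidf(reordered[0], idf), tfidf(reordered[1], idf))
c_partial = cosine(tfidf(partial[0], idf), tfidf(partial[1], idf))
print(j_reordered, j_partial, c_reordered, c_partial)
```

Both measures ignore word order, so a pair that differs only by reordering scores 1.0, while glosses that add signs such as ahí or mío score lower. Because TF-IDF weights are non-negative, the cosine score cannot fall below 0.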

Fig. 6.

Distribution of the Jaccard similarity score of SPA-to-MSL sentences.

Fig. 7.

Cosine similarity for SPA-to-MSL dataset.

Fig. 8.

Percentage difference between POS in SPA and MSL.

Quantitative validation

In the M1 and M2 models, the decoder_input_ids (a tensor of integers) are shifted versions of the labels, that is, of the IDs associated with the MSL sentences. During training, the model uses the decoder_input_ids with an attention mask that prevents it from seeing the token it is trying to predict, so training mirrors inference, in which the decoder of the transformer predicts tokens one by one. For evaluation, the predictions formed a list of sentences and the references a list of lists; here, we used one reference per prediction.
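The relation between labels, decoder inputs, and the attention mask can be illustrated with plain Python lists. This is a simplified sketch: the token IDs and the start-token ID are hypothetical, and library implementations additionally handle padding and batching.

```python
def shift_tokens_right(labels, decoder_start_token_id):
    """Decoder inputs: prepend the start token and drop the last label."""
    return [decoder_start_token_id] + labels[:-1]

def causal_mask(length):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]

# Hypothetical token IDs for one MSL gloss, ending with an end-of-sentence ID
labels = [17, 42, 8, 2]
decoder_input_ids = shift_tokens_right(labels, decoder_start_token_id=0)
mask = causal_mask(len(labels))

print(decoder_input_ids)  # [0, 17, 42, 8]
for row in mask:
    print(row)
```

At position i the decoder receives the tokens up to label i − 1 and is trained to predict label i; the lower-triangular mask hides every later position.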

The training arguments, including hyperparameters, were initially chosen on the basis of standard values rather than through systematic optimization. The selection of parameters such as batch size, learning rate, and number of epochs, without extensive fine-tuning, follows general practices commonly applied to transformer-based models. The model was trained using the Adam optimizer, which provided an efficient balance of learning adjustments during training.

Since hyperparameter optimization was not conducted, the validation dataset was used solely to monitor the model performance and prevent over-fitting during training rather than to guide hyperparameter tuning. This allowed the validation set to serve strictly for evaluation rather than influencing hyperparameter selection.

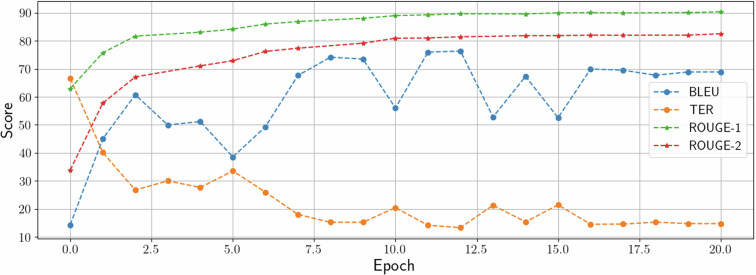

Figure 9 shows the translation quality of M1 with the NMT metrics described in Section Evaluation metrics. BLEU converges to 70 after 20 epochs. The TER score decreases from 70 to 15 and stabilizes at 7.5 epochs, ROUGE-1 reaches 92, and ROUGE-2 rises approximately from 35 to 82. Figure 10 shows the validation and training loss, which indicate a good fit because both decrease and stabilize: the validation loss goes from 2.6 to 0.45 and the training loss from 3.62 to 0.21. For the M2 model, Fig. 11 shows the performance of the translation metrics. BLEU rises from 65 to 82 in 20 epochs, ROUGE-1 starts at 85 and converges close to 92, ROUGE-2 rises from 78 to 90, and TER goes from 18 and stabilizes at 8. Regarding the loss (Fig. 12), the training loss in M2 is higher at the beginning, decreases rapidly within one epoch, and converges to 0, while the validation loss starts at 0.044 and converges to 0.001 in 20 epochs.

Fig. 9.

Translation metrics for M1.

Fig. 10.

Training and validation loss for model M1.

Fig. 11.

Translation metrics for M2.

Fig. 12.

Training and validation loss for model M2.

In addition, this analysis considers an experiment with a split ratio of 80% for training (Xtrain), 10% for validation (Xval), and 10% for testing (Xtest), that is, 2400 samples for training and 300 each for validation and testing. We obtained the training and validation loss behavior, as well as the translation metrics (TER, ROUGE1, ROUGE2 and BLEU). We chose the following hyperparameters along with Adam optimization:

learning rate: 1.5e-05

training batch size: 32

validation batch size: 16

optimizer: Adam with β1 = 0.9, β2 = 0.999 and ϵ = 1e-08

num epochs: 20
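The split sizes and the resulting number of optimizer steps follow directly from these values, as the sketch below shows. The class and function names, the shuffling seed, and the assumption of a single device without gradient accumulation are illustrative and are not stated in the source.

```python
import math
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    # Values listed above; the field names are hypothetical
    learning_rate: float = 1.5e-5
    train_batch_size: int = 32
    eval_batch_size: int = 16
    adam_beta1: float = 0.9
    adam_beta2: float = 0.999
    adam_epsilon: float = 1e-8
    num_epochs: int = 20

def split_indices(n, train_pct=80, val_pct=10, seed=0):
    """Shuffle indices and cut them into train/validation/test parts."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # the seed is an arbitrary choice
    n_train = n * train_pct // 100
    n_val = n * val_pct // 100
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

config = TrainConfig()
train_idx, val_idx, test_idx = split_indices(3000)

# Assumes one device and no gradient accumulation
steps_per_epoch = math.ceil(len(train_idx) / config.train_batch_size)
total_steps = steps_per_epoch * config.num_epochs

print(len(train_idx), len(val_idx), len(test_idx))  # 2400 300 300
print(steps_per_epoch, total_steps)                 # 75 1500
```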

In Fig. 13 it can be seen that the M1 translation metrics converged to the following validation values: BLEU: 85.22, ROUGE1: 94.59, ROUGE2: 88.35, TER: 8.95. Regarding the loss behavior, shown in Fig. 14, we obtained 0.5118 for validation and 0.0122 for training.

Fig. 13.

Translation metrics for Model M1 after splitting dataset in train/test/validation.

Fig. 14.

Training and validation loss performance for model M1 after splitting dataset in train/test/validation.

The test data set reported the following results: BLEU: 83.23, ROUGE1: 91.49, ROUGE2: 84.98, TER: 10.67 and we obtained 0.4978 in loss.

We did the same for the M2 model. In Fig. 15 it can be seen that after 20 epochs, the translation metrics converged to the following validation values: BLEU: 83.68, ROUGE1: 95.84, ROUGE2: 89.83, TER: 6.64. Regarding the loss behavior, shown in Fig. 16, we obtained 0.0069 for validation and 0.011871 for training.

Fig. 15.

Translation metrics for model M2 after splitting dataset in train/test/validation.

Fig. 16.

Training and validation loss performance for model M2 after splitting dataset in train/test/validation.

The test data set for the evaluation of M2 reported the following metrics: BLEU: 85.41, ROUGE1: 95.84, ROUGE2: 89.83, TER: 6.64. Regarding the loss, we obtained 0.0103. Although the BARTO and Helsinki models were designed for SPA paraphrasing tasks, the specific fine-tuning process applied to them on the SPA-to-MSL dataset allowed the model to adapt effectively to translation tasks. The models are available at https://huggingface.co/vania2911/esp-to-lsm-model-split for the M1 model and https://huggingface.co/vania2911/esp-to-lsm-barto-model for the M2 model. The colab codes were updated in the repository47 with the names: “Model_M1_split_version.ipynb” and “Model_M2_split_version.ipynb”. The translations generated by these models are in the same repository with the names: “translationsM1.txt” and “translationsM2.txt”

By training on a dataset that includes a variety of contexts and structures in SPA, the models aim to achieve a balanced representation appropriate for translating into MSL, mitigating potential biases toward certain linguistic patterns. Additionally, we partitioned the data to create a test dataset to evaluate the model’s ability to generalize to new data.

Qualitative validation

The translations of the trained models were analyzed after uploading the models to the Hub. Hugging Face® repositories allow inference to be run directly in the browser. The corresponding pipeline was used for the translation task. Table 4 compares the results of the fine-tuned models with the ground truth (MSL) translated by human experts.

Table 4.

Translation results with the fine-tuned models.

| Source | Model M1 | Model M2 | Ground Truth |

|---|---|---|---|

| Él estudia español (He studies SPA) | El español estudiar | El español estudiar | El español estudiar |

| Quiero aprender lsm (I want to learn msl) | Yo lsm aprender querer | Yo lsm aprender | Yo lsm aprender querer |

| Ayer estuve ocupada (Yesterday I was busy) | Ayer yo ocupada estar | Ayer yo ocupada estar | Ayer yo ocupada estar |

| A mi mamá le gusta cocinar (My mom likes cooking) | Mamá mía cocinar gustar | Mamá mía cocinar gustar | Mamá mía cocinar gustar |

| Mi amigo va a la universidad de Lunes a Viernes (My friend goes to University from Monday to Friday) | Lunes a Viernes amigo mío universidad ir | Lunes a Viernes amigo mío universidad ir | Lunes a Viernes amigo mío universidad ir |

| La niña es sorda (The girl is deaf) | Niño mujer sorda | Niño mujer sorda | Niño mujer sorda |

| Hace mucho frío (It is very cold) | Frío mucho | Frío mucho | Frío mucho |

Table 4 includes sentences like Él estudia español (SPA) (He studies SPA (ENG)), which becomes El español estudiar (MSL), and sentences that include gender, like La niña es sorda (SPA) (The girl is deaf (ENG)), which becomes niño mujer sorda (MSL). Similarly, for CCT sentences, Mi amigo va a la universidad de Lunes a Viernes (SPA) (My friend goes to university from Monday to Friday (ENG)) is translated into MSL as Lunes a Viernes amigo mío universidad ir.

Table 5 includes translation results that were not entirely correct; the incorrect translations were highlighted in red. Some sentences with an implicit PRON, like Vivo en México (SPA) (I live in Mexico (ENG)), were mistakenly translated as México vivo vivir by Model M1, while Model M2 translated the sentence correctly. Also, variations of sentences with plurals, like Hay perros en el parque (SPA) (There are dogs in the park (ENG)), were incorrectly translated by both models: M1 returned Hay perros en el parque and M2 returned Parque ahí perros haber. These errors are attributed to the lack of examples of sentences with implicit PRON in the training dataset. The issue could be addressed in future work by augmenting the dataset with backtranslation techniques.

Table 5.

Incorrect translation results with the M1 and M2 models.

| Source | M1 | M2 | Ground Truth |

|---|---|---|---|

| Yo vivo en México (I live in Mexico) | México yo vivir | México yo vivir | México yo vivir |

| Vivo en México (I live in Mexico) | México vivo vivir | México yo vivir | México yo vivir |

| Los perros juegan en la calle (Dogs play in the street) | Parque ahí perro cl + cl jugar | Parque ahí perro cl + cl jugar | Parque ahí perro cl + cl jugar |

| Hay perros en el parque (There are dogs in the park) | Hay perros en el parque | Parque ahí perros haber | Parque ahí perro cl + cl haber |
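A comparison like the one in Table 5 can be automated by flagging every output that differs from the ground truth. The sketch below uses the rows of Table 5; the helper names and the case and whitespace normalization are assumptions made for illustration.

```python
# Rows of Table 5: (source, M1 output, M2 output, ground truth)
rows = [
    ("Yo vivo en México", "México yo vivir", "México yo vivir", "México yo vivir"),
    ("Vivo en México", "México vivo vivir", "México yo vivir", "México yo vivir"),
    ("Los perros juegan en la calle", "Parque ahí perro cl + cl jugar",
     "Parque ahí perro cl + cl jugar", "Parque ahí perro cl + cl jugar"),
    ("Hay perros en el parque", "Hay perros en el parque",
     "Parque ahí perros haber", "Parque ahí perro cl + cl haber"),
]

def normalize(gloss):
    """Lowercase and collapse whitespace before comparing glosses."""
    return " ".join(gloss.lower().split())

def mismatches(table_rows, model_index):
    """Source sentences whose model output differs from the ground truth."""
    return [row[0] for row in table_rows
            if normalize(row[model_index]) != normalize(row[3])]

m1_errors = mismatches(rows, 1)
m2_errors = mismatches(rows, 2)
print(m1_errors)  # ['Vivo en México', 'Hay perros en el parque']
print(m2_errors)  # ['Hay perros en el parque']
```

Exact matching is strict: it does not distinguish a missing classifier from a fully untranslated sentence, which is why metrics such as TER complement this kind of check.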

Usage Notes

The model cards of M1 and M2 are available at https://huggingface.co/VaniLara/esp-to-lsm-model and https://huggingface.co/VaniLara/esp-to-lsm-barto-model. To use either model with the transformers library, follow the guide under the how to use this model tab of each model’s repository. As a demonstration, to load the M2 model directly on Colab, the following lines of code are copied from the how to use this model tab on the model’s repository:

# Load the fine-tuned M2 tokenizer and model from the Hugging Face Hub

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("VaniLara/esp-to-lsm-barto-model")

model = AutoModelForSeq2SeqLM.from_pretrained("VaniLara/esp-to-lsm-barto-model")

Note that the transformers library must be installed beforehand. These lines load our pre-trained model and tokenizer.

A pipeline is required to run inference with M2. It is instantiated as follows:

from transformers import pipeline

# Build a text-to-text generation pipeline around the M2 model

pipe = pipeline("text2text-generation", model="VaniLara/esp-to-lsm-barto-model")

The input text is defined before running the inference in Colab. A text in SPA is passed to the pipeline: Me gusta el suéter de color rosa (SPA) (I like the pink sweater), which outputs the MSL gloss translation yo sueter rosa gustar:

text = "me gusta el sueter rosa"

result = pipe(text)

print(result)

# [{'generated_text': ' yo sueter rosa gustar'}]

M1 works the same way. In addition, inference can be run with either PyTorch® or TensorFlow®.

The repository47 includes .ipynb files, which contain the programming methodology for preprocessing and training the translation models. These notebooks provide a structured and interactive environment for executing Python® code, visualizing results, and documenting the process.

• Model_M1.ipynb: A Google Colab® notebook containing the code for fine-tuning the M1 transformer model for Spanish-to-MSL gloss translation. The data were split into 80% training and 20% validation. It contains the code to fine-tune and evaluate the pre-trained Model M1 with the AutoTokenizer and the Trainer class. To run the program, one needs to sign up and create a profile on Hugging Face and then log in with an access token, which is created on the settings tab. It is recommended to install the libraries listed in the commented lines.

• Model_M1_split_version.ipynb: A Google Colab® notebook containing the code for fine-tuning the M1 transformer model for Spanish-to-MSL gloss translation. The data were divided into 80% training, 10% testing, and 10% validation.

• Model_M2.ipynb: A Google Colab® notebook implementing fine-tuning of the M2 model for the same translation task. The data were split into 80% training and 20% validation.

• Model_M2_split_version.ipynb: A Google Colab® notebook containing the code for fine-tuning the M2 transformer model for Spanish-to-MSL gloss translation. The data were divided into 80% training, 10% testing, and 10% validation.

• translationsM1.txt: A .txt file containing reference and predicted translations for model M1.

• translationsM2.txt: A .txt file containing reference and predicted translations for model M2.

• README.md: A documentation file that provides instructions on using the dataset and models.

The data-processing workflow of the SPA-to-MSL dataset is depicted in the diagram of Fig. 17.

Author contributions

Conceptualization, R.Q.F.A. and I.C.; methodology, V.L.O., R.Q.F.A. and I.C.; software,V.L.O. and I.C.; validation, V.L.O. and I.C.; formal analysis, V.L.O., R.Q.F.A. and I.C.; investigation,V.L.O., R.Q.F.A. and I.C.; resources, R.Q.F.A. and I.C.; data curation, R.Q.F.A. and I.C.; writing–original draft preparation, V.L.O., R.Q.F.A. and I.C.; writing–review and editing, V.L.O., R.Q.F.A. and I.C.; visualization, V.L.O. and I.C.; supervision, R.Q.F.A. and I.C.; project administration, R.Q.F.A. and I.C.; funding acquisition, R.Q.F.A. and I.C.

Code availability

The model cards of M1 and M2 are available at https://huggingface.co/VaniLara/esp-to-lsm-model and https://huggingface.co/VaniLara/esp-to-lsm-barto-model. The code files are contained in Colab notebooks in a Figshare repository47 (see Section Data records).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Vania Lara-Ortiz, Rita Q. Fuentes-Aguilar, Isaac Chairez.

Contributor Information

Rita Q. Fuentes-Aguilar, Email: rita.fuentes@tec.mx

Isaac Chairez, Email: isaac.chairez@tec.mx.

References

- 1.Besacier, L., Barnard, E., Karpov, A. & Schultz, T. Automatic speech recognition for under-resourced languages: A survey. Speech Commun. 56, 85–100, 10.1016/j.specom.2013.07.008 (2014).

- 2.Joshi, P., Santy, S., Budhiraja, A., Bali, K. & Choudhury, M. The state and fate of linguistic diversity and inclusion in the NLP world. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 6282–6293, 10.18653/v1/2020.acl-main.560 (2020).

- 3.Ahmed, M., Zaidan, B. B., Zaidan, A. A., Salih, M. & Lakulu, M. M. A review on systems-based sensory gloves for sign language recognition state of the art between 2007 and 2017. Sensors 18, 2208, 10.3390/s18072208 (2018).

- 4.Moryossef, A., Yin, K., Neubig, G. & Goldberg, Y. Data augmentation for sign language gloss translation. In Proceedings of the 1st International Workshop on Automatic Translation for Signed and Spoken Languages (AT4SSL), 1–11, https://aclanthology.org/2021.mtsummit-at4ssl.1/ (2021).

- 5.Escobar L.-Dellamary, L. La lengua de señas mexicana, ¿una lengua en riesgo? Contacto bimodal y documentación sociolingüística. Estudios de Lingüística Aplicada 62, 125–152, 10.22201/enallt.01852647p.2015.62.420 (2016).

- 6.BURAD, V. La glosa: Un sistema de notación para la lengua de señas Mendoza, https://cultura-sorda.org/la-glosa-un-sistema-de-notacion-para-la-lengua-de-senas (2011).

- 7.Li, D. et al. Transcribing natural languages for the deaf via neural editing programs. CoRR abs/2112.09600. https://arxiv.org/abs/2112.09600 (2021).

- 8.López, B. D., Escobar, C. G. & Martínez, S. M. Manual de gramática de la lengua de señas mexicana (LSM). Editorial Mariángel. https://www.calameo.com/read/0001639034c5089940816 (2016).

- 9.Gu, J., Hassan, H., Devlin, J. & Li, V. O. K. Universal Neural Machine Translation for Extremely Low Resource Languages. Proc. 2018 Conf. North Am. Chapter Assoc. Comput. Linguist.: Hum. Lang. Technol. 344–354, 10.18653/v1/N18-1032 (2018).

- 10.Dong, D., Wu, H., He, W., Yu, D. & Wang, H. Multi-task learning for multiple language translation. Proc. 53rd Annu. Meet. Assoc. Comput. Linguist. & 7th Int. Joint Conf. Nat. Lang. Process., 1723–1732. Association for Computational Linguistics, Beijing, China. 10.3115/v1/P15-1166 (2015).

- 11.Vaswani, A. et al. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 30, 5998–6008, 10.48550/arXiv.1706.03762 (2017).

- 12.Blackwood, G. W., Ballesteros, M. & Ward, T. Multilingual Neural Machine Translation with Task-Specific Attention. arXiv preprint abs/1806.03280. Preprint at https://arxiv.org/abs/1806.03280 (2018).

- 13.Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359, 10.1109/TKDE.2009.191 (2010).

- 14.Zoph, B., & Knight, K. Multi-Source Neural Translation. Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 30–34. Association for Computational Linguistics. https://aclanthology.org/N16-1004 (2016).

- 15.Nguyen, T. Q. & Chiang, D. Transfer learning across low-resource, related languages for neural machine translation. Proc. 8th Int. Joint Conf. Nat. Lang. Process. 2, 296–301. Asian Federation of Natural Language Processing. https://aclanthology.org/I17-2050 (2017).

- 16.Kocmi, T. & Bojar, O. Trivial Transfer Learning for Low-Resource Neural Machine Translation. Proc. 3rd Conf. Mach. Transl.: Res. Pap. 244–252. Association for Computational Linguistics, Brussels, Belgium. 10.18653/v1/W18-6325 (2018).

- 17.Kim, Y., Gao, Y. & Ney, H. Effective Cross-lingual Transfer of Neural Machine Translation Models without Shared Vocabularies. Proc. 57th Annu. Meet. Assoc. Comput. Linguist. 1246–1257. Association for Computational Linguistics, Florence, Italy. 10.18653/v1/P19-1120 (2019).

- 18.Kocmi, T. & Bojar, O. Transfer Learning across Languages from Someone Else’s NMT Model. arXiv preprint arXiv:1909.10955. Preprint at https://arxiv.org/abs/1909.10955 (2019).

- 19.Lakew, S. M., Erofeeva, A., Negri, M., Federico, M. & Turchi, M. Transfer Learning in Multilingual Neural Machine Translation with Dynamic Vocabulary. arXiv:1811.01137. http://arxiv.org/abs/1811.01137 (2018).

- 20.Liu, Y. et al. Multilingual Denoising Pre-training for Neural Machine Translation. 2001.08210. https://arxiv.org/abs/2001.08210 (2020).

- 21.Conneau, A. et al. Unsupervised Cross-lingual Representation Learning at Scale. Proc. 58th Annu. Meet. Assoc. Comput. Linguist. 8440–8451, 10.18653/v1/2020.acl-main.747 (2020).

- 22.Aharoni, R., Johnson, M. & Firat, O. Massively Multilingual Neural Machine Translation. Proc. 2019 Conf. North Am. Chapter Assoc. Comput. Linguist.: Hum. Lang. Technol. 1, 3874–3884, 10.18653/v1/N19-1388 (2019).

- 23.Kocmi, T. & Bojar, O. Efficiently reusing old models across languages via transfer learning. In Proceedings of the 22nd Annual Conference of the European Association for Machine Translation, 19–28, 10.18653/v1/2020.eamt-1.3 (2020).

- 24.Khemchandani, Y. et al. Exploiting language relatedness for low web-resource language model adaptation: An Indic languages study. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), 1312–1323, 10.18653/v1/2021.acl-long.105 (2021).

- 25.Kocmi, T. & Bojar, O. Trivial transfer learning for low-resource neural machine translation. In Proceedings of the Third Conference on Machine Translation: Research Papers, 244–252, 10.18653/v1/W18-6325 (2018).

- 26.Luo, G., Yang, Y., Yuan, Y., Chen, Z. & Ainiwaer, A. Hierarchical transfer learning architecture for low-resource neural machine translation. IEEE Access 7, 154157–154166, 10.1109/ACCESS.2019.2936002 (2019).

- 27.Jiang, Z. Low-resource text classification: A parameter-free classification method with compressors. In Rogers, A., Boyd-Graber, J. & Okazaki, N. (eds.) Findings of the Association for Computational Linguistics: ACL 2023, 6810–6828, 10.18653/v1/2023.findings-acl.426 (Association for Computational Linguistics, Toronto, Canada, 2023).

- 28.Wang, R., Tan, X., Luo, R., Qin, T. & Liu, T.-Y. A Survey on Low-Resource Neural Machine Translation. 2107.04239. https://arxiv.org/abs/2107.04239 (2021).

- 29.Flores, C. A. M., Westgaard, M. P., Dellamary, L. E., Aldrete, M. C. & del Rocío G. Ramírez Barba, M. Diccionario de Lengua de Señas Mexicana LSM Ciudad de México (Capital Social Por Ti, 2017).

- 30.Hernández, M. C. Diccionario Español-Lengua de Señas Mexicana (DIELSEME) (SEP, México, 2014).

- 31.Daniel López, B. E. S. & Mirna Martínez, S. Manual de Gramática de Lengua de Señas Mexicana (LSM) (2016).

- 32.Interseña Team Interseña: SPA Sign Language Translation App. Mobile application software. https://play.google.com/store/apps/details?id=intersign.aprender.lsm&hl=es_419&pli=1 (2024).

- 33.Oliva, J., Serrano, J. I., del Castillo, M. D. & Iglesias, Á. SyMSS: A syntax-based measure for short-text semantic similarity. Data Knowl. Eng. 70, 390–405 (2011).

- 34.Kumar, V. & Subba, B. A TfidfVectorizer and SVM based sentiment analysis framework for text data corpus. In Proceedings of the 2020 National Conference on Communications (NCC), 1–6, https://ieeexplore.ieee.org/document/9056085, 10.1109/NCC48643.2020.9056085 (2020).

- 35.Eshan, S. C. & Hasan, M. S. An application of machine learning to detect abusive bengali text. In 2017 20th International Conference of Computer and Information Technology (ICCIT), 1–6, 10.1109/ICCITECHN.2017.8281787 (2017).

- 36.Al-Obaydy, W. I., Hashim, H. A., Najm, Y. & Jalal, A. A. Document classification using term frequency-inverse document frequency and k-means clustering. Indonesian Journal of Electrical Engineering and Computer Science 27, 1517–1524, 10.11591/ijeecs.v27.i3.pp1517-1524 (2022).

- 37.Tiedemann, J. et al. Democratizing Neural Machine Translation with OPUS-MT. Language Resources and Evaluation. 58, 713–755. Springer Nature. 1574-0218. 10.1007/s10579-023-09704-w (2023).

- 38.University of Helsinki, Language Technology Group. Language Technology Blog. https://blogs.helsinki.fi/language-technology (2024).

- 39.Helsinki-NLP. OPUS-MT Spanish-to-Spanish: Machine translation model from Spanish to Spanish. Hugging Face. Available at https://huggingface.co/Helsinki-NLP/opus-mt-es-es (2024).

- 40.Lewis, M. et al. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. arXiv:1910.13461. http://arxiv.org/abs/1910.13461 (2019).

- 41.Araujo, V., Trusca, M. M., Tufiño, R. & Moens, M.-F. Sequence-to-Sequence Spanish Pre-trained Language Models. 2309.11259. https://arxiv.org/abs/2309.11259 (2023).

- 42.Hugging Face. Hugging Face - The AI Community Building the Future. https://huggingface.co/ (2024).

- 43.Papineni, K., Roukos, S., Ward, T. & Zhu, W. BLEU: a method for automatic evaluation of machine translation. Proc. 40th Annu. Meet. Assoc. Comput. Linguist. 311–318. Association for Computational Linguistics, Philadelphia, Pennsylvania. 10.3115/1073083.1073135 (2002).

- 44.Post, M. A call for clarity in reporting BLEU scores. Proc. 3rd Conf. Mach. Transl.: Res. Pap. 186–191. Association for Computational Linguistics, Brussels, Belgium. 10.18653/v1/W18-6319 (2018).

- 45.Snover, M., Dorr, B., Schwartz, R., Micciulla, L. & Makhoul, J. A study of translation edit rate with targeted human annotation. Proc. 7th Conf. Assoc. Mach. Transl. Am.: Tech. Pap. 223–231. Association for Machine Translation in the Americas, Cambridge, Massachusetts, USA. https://aclanthology.org/2006.amta-papers.25 (2006).

- 46.Lin, C.-Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out, 74–81. https://aclanthology.org/W04-1013/ (2004).

- 47.Lara Ortiz, D. V., Fuentes Aguilar, R. Q. & Chairez, I. Spanish to Mexican Sign Language (MSL) glosses corpus for NLP tasks. Figshare, 10.6084/m9.figshare.28519580 (2025).

- 48.Real Academia Española. género. Diccionario de la lengua española (DLE) (2024).

- 49.Ellis, M. Transitive verbs: Definition and examples. Grammarly. https://www.grammarly.com/blog/transitive-verbs/ (2022).

- 50.Agris, U. & Kraiss, K.-F. SIGnum Database: Video Corpus for Signer-Independent Continuous Sign Language Recognition. In Proceedings of the LREC2010 4th Workshop on the Representation and Processing of Sign Languages: Corpora and Sign Language Technologies, 243–246, https://www.sign-lang.uni-hamburg.de/lrec/pub/10006.pdf (2018).

- 51.Neidle, C., Sclaroff, S. & Athitsos, V. Signstream: A tool for linguistic and computer vision research on visual-gestural language data. Behavior research methods, instruments, and computers: a journal of the Psychonomic Society, Inc 33, 311–320, 10.3758/BF03195384 (2001).

- 52.Othman, A. & Jemni, M. English-ASL Gloss Parallel Corpus 2012 (ASLG-PC12). In Proceedings of the 5th Workshop on the Representation and Processing of Sign Languages: Interactions between Corpus and Lexicon LREC, 22–23, https://www.sign-lang.uni-hamburg.de/lrec/pub/10006.pdf (2012).

- 53.Camgoz, N. C., Hadfield, S., Koller, O., Ney, H. & Bowden, R. Neural Sign Language Translation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 7784–7793. IEEE, 10.1109/CVPR.2018.00812 (2018).

- 54.Belissen, V., Braffort, A. & Gouiffès, M. Dicta-Sign-LSF-v2: Remake of a Continuous French Sign Language Dialogue Corpus and a First Baseline for Automatic Sign Language Processing. In Calzolari, N. et al. (eds.) Proceedings of the Twelfth Language Resources and Evaluation Conference, 6040–6048, https://aclanthology.org/2020.lrec-1.740 (European Language Resources Association, 2020).

- 55.Hanke, T., Schulder, M., Konrad, R. & Jahn, E. Extending the Public DGS Corpus in Size and Depth. Proc. LREC2020 9th Workshop on Representation and Processing of Sign Languages 75–82. European Language Resources Association (ELRA), Marseille, France. https://aclanthology.org/2020.signlang-1.12 (2020).


Data Availability Statement

The model cards for M1 and M2 are available at https://huggingface.co/VaniLara/esp-to-lsm-model and https://huggingface.co/VaniLara/esp-to-lsm-barto-model, respectively. The code is provided as Colab notebooks in a Figshare repository47 (see the Data Records section).
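
For readers who wish to try the two shared models, the following minimal sketch shows one way they might be loaded with the Hugging Face `transformers` library. It is not taken from the Colab notebooks. Only the two repository identifiers come from the statement above; the names `MODEL_IDS`, `load_model`, and `translate` are hypothetical, and the sketch assumes that both repositories host sequence-to-sequence checkpoints compatible with `AutoModelForSeq2SeqLM` and that PyTorch is installed.

```python
# Repository identifiers copied from the Data Availability Statement.
# The dictionary and function names are hypothetical helpers for this sketch.
MODEL_IDS = {
    "M1": "VaniLara/esp-to-lsm-model",
    "M2": "VaniLara/esp-to-lsm-barto-model",
}


def load_model(name):
    """Download the tokenizer and model for "M1" or "M2" from the Hugging Face Hub."""
    # Imported inside the function so this file can be read without transformers installed.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    repo_id = MODEL_IDS[name]
    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    # Assumption: the repository contains seq2seq weights loadable by this class.
    model = AutoModelForSeq2SeqLM.from_pretrained(repo_id)
    return tokenizer, model


def translate(sentence, tokenizer, model, max_new_tokens=64):
    """Generate an MSL gloss sequence for one Spanish sentence."""
    inputs = tokenizer(sentence, return_tensors="pt")
    output_ids = model.generate(
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        max_new_tokens=max_new_tokens,
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A typical call would be `tokenizer, model = load_model("M1")` followed by `translate("...", tokenizer, model)` with a Spanish sentence of the reader's choosing. The value of `max_new_tokens` is an arbitrary choice for this sketch and is not a setting reported by the authors.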