Abstract

Background

Diagnostic pathology depends on complex, structured reasoning to interpret clinical, histologic, and molecular data. Replicating this cognitive process algorithmically remains a significant challenge. As large language models (LLMs) gain traction in medicine, it is critical to determine whether they have clinical utility by providing reasoning in highly specialized domains such as pathology.

Methods

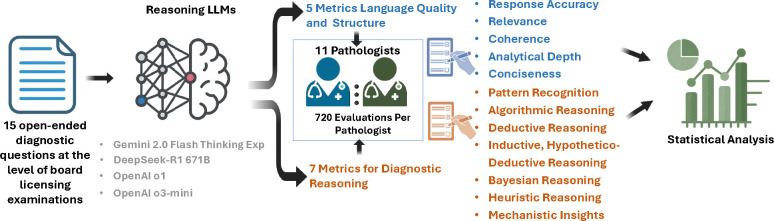

We evaluated the performance of four reasoning LLMs (OpenAI o1, OpenAI o3-mini, Gemini 2.0 Flash Thinking Experimental, and DeepSeek-R1 671B) on 15 board-style open-ended pathology questions. Responses were independently reviewed by 11 pathologists using a structured framework that assessed language quality (accuracy, relevance, coherence, depth, and conciseness) and seven diagnostic reasoning strategies. Scores were normalized and aggregated for analysis. We also evaluated inter-observer agreement to assess scoring consistency. Model comparisons were conducted using one-way ANOVA and Tukey’s Honestly Significant Difference (HSD) test.

Results

Gemini and DeepSeek significantly outperformed OpenAI o1 and OpenAI o3-mini in overall reasoning quality (p < 0.05), particularly in analytical depth and coherence. While all models achieved comparable accuracy, only Gemini and DeepSeek consistently applied expert-like reasoning strategies, including algorithmic, inductive, and Bayesian approaches. Performance varied by reasoning type: models performed best in algorithmic and deductive reasoning and poorest in heuristic and pattern recognition. Inter-observer agreement was highest for Gemini (p < 0.05), indicating greater consistency and interpretability. Models with more in-depth reasoning (Gemini and DeepSeek) were generally less concise.

Conclusion

Advanced LLMs such as Gemini and DeepSeek can approximate aspects of expert-level diagnostic reasoning in pathology, particularly in algorithmic and structured approaches. However, limitations persist in contextual reasoning, heuristic decision-making, and consistency across questions. Addressing these gaps, along with trade-offs between depth and conciseness, will be essential for the safe and effective integration of AI tools into clinical pathology workflows.

Keywords: Generative AI, Reasoning Large Language Models, Pathology, Clinical Reasoning, AI Evaluation

1. Introduction

Anatomical pathologists draw upon years of training and accumulated clinical experience to develop a range of diagnostic reasoning strategies for interpreting histopathologic findings in the context of patient presentation. This interpretive process requires not only deep visual pattern recognition but also the ability to synthesize clinical, morphologic, and molecular data using a broad array of reasoning approaches [1]. These include algorithmic workflows, deductive and inductive (hypothetico-deductive) reasoning, Bayesian and heuristic inference, mechanistic understanding of disease processes, and pattern recognition—each contributing to the integrative synthesis of visual, clinical, and contextual cues [1].

With the advent of generative artificial intelligence (AI), large language models (LLMs) such as ChatGPT, Gemini, and DeepSeek have garnered interest for their potential to support pathology workflows [2, 3]. These models generate responses by leveraging probabilistic associations across vast biomedical corpora [4, 5]. Early applications in pathology have focused on clinical summarization [6], education, and simulation; however, most evaluations have assessed only the factual accuracy or superficial plausibility of outputs, without examining the structure or soundness of the underlying reasoning processes [7, 8, 9, 10].

Although LLMs have shown strong performance on general medical benchmarks, their ability to replicate the nuanced, domain-specific reasoning required in diagnostic pathology remains unclear [11, 12, 13]. Pathology demands more than knowledge retrieval—it requires the contextual application of varied reasoning strategies across morphologic, molecular, and clinical dimensions. Given that board licensing examinations emphasize reasoning beyond factual recall [14], a critical question emerges: can LLMs generate responses that are not only accurate but also reflect expert-like diagnostic reasoning?

To address this question, we developed a novel, structured evaluation framework grounded in clinical practice. Eleven expert pathologists evaluated responses generated by four LLMs to 15 open-ended diagnostic questions. Each response was scored across five language quality metrics and seven clinically relevant reasoning strategies [1, 14]. The models, OpenAI o1, OpenAI o3-mini, Gemini 2.0 Flash Thinking Experimental (Gemini), and DeepSeek-R1 671B (DeepSeek), were compared across all evaluation criteria. Inter-observer agreement analysis was conducted to assess consistency among expert reviewers. Together, these findings offer a comprehensive assessment of clinical reasoning in LLMs and establish a scalable, domain-specific framework for evaluating AI systems in diagnostic pathology.

2. Materials and Methods

In this study, a structured evaluation framework was developed to assess the capacity of LLMs to generate clinically relevant, well-reasoned answers to open-ended pathology questions. Our focus was on both language quality and diagnostic reasoning, with expert review by pathologists. Based on the study by Wang et al. [14], a set of 15 open-ended diagnostic questions, listed in Supplementry Table 1, was selected to reflect the complexity and format of board licensing examinations.

2.1. Model Selection and Response Generation

Four state-of-the-art LLMs were evaluated: OpenAI o1, OpenAI o3-mini, Gemini, and DeepSeek. Each model was prompted independently to respond to all 15 questions in a zero-shot setting. To simulate a high-stakes clinical reasoning task, each question was preceded by the instruction: “This is a pathology-related question at the level of licensing (board) examinations”. No additional context or few-shot examples were provided. Model responses were collected between February and March 2025 using publicly accessible web interfaces or Application Programming Interface (API) endpoints. Each output included both a direct answer and explanatory reasoning. No fine-tuning or post-processing was applied to model responses.

2.2. Evaluation by Pathologists

Eleven expert pathologists (qualifications provided in Supplementary Table 2) independently evaluated the reasoning outputs generated by each LLM across 15 diagnostic questions. To mitigate potential bias, all evaluations were conducted in a blinded manner, and inter-observer agreement was analyzed to ensure consistency among evaluators. The evaluation assessed two complementary dimensions: the linguistic quality of the responses and the application of pathology-specific diagnostic reasoning strategies. The evaluation focused on the following two primary criteria:

Natural Language Quality Metrics: Assessments were conducted on the clarity, coherence, depth, accuracy, and conciseness of the responses. These metrics are commonly employed in evaluating LLM outputs to ensure that generated content is not only factually correct but also well-structured and comprehensible. Such evaluation frameworks have been discussed in studies analyzing LLM performance in medical contexts [15].

Application of Pathology-Specific Reasoning Strategies: Evaluators examined the extent to which LLMs appropriately utilized various diagnostic reasoning approaches integral to pathology practice. These approaches include pattern recognition, algorithmic reasoning, inductive hypothetico-deductive reasoning, mechanistic insights, deductive reasoning, heuristic reasoning, and probabilistic (Bayesian) reasoning (details provided in Supplemental Table 3). The framework for these reasoning strategies is grounded in the cognitive processes outlined by Pena and Andrade-Filho, who describe the diagnostic process as encompassing cognitive, communicative, normative, and medical conduct domains [1, 16].

2.3. Statistical Analysis

Scores (on a 1–5 Likert scale) were first normalized to a 0–1 range using linear scaling (score divided by 5). Following normalization, average scores were computed across raters for each combination of model, question, and evaluation criterion. These aggregated values were used to evaluate model-level and criterion-specific performance. One-way analysis of variance (ANOVA) was used to assess differences in mean scores across models for each evaluation metric, including cumulative scores. When statistically significant, pairwise comparisons were conducted using Tukey’s Honestly Significant Difference (HSD) test (α = 0.05). Analyses were conducted using Python (v3.11) with standard statistical libraries. To assess inter-observer reliability, the percent agreement was calculated for each Question–Model–Criterion (Q-M-C) combination, which was defined as the proportion of raters who selected the most common score. Ratings ranged from 7 to 11 per Q-M-C (mean = 9.9). Model-level differences in percent agreement were tested using the Kruskal–Wallis H test. When significant, post hoc pairwise comparisons were performed using Dunn’s test with Bonferroni correction for multiple testing. Additional analyses, including normalization procedures, missing data summaries, and full pairwise comparison results, are available in Supplementary Tables 4, 5, 6, 7, 8, and 9 and Figures 6 and 7.

3. Results

We evaluated the performance of four LLMs (Gemini, DeepSeek, OpenAI o1, and OpenAI o3-mini) on 15 expert-generated diagnostic pathology questions using our structured evaluation framework. Responses were assessed across two primary domains: Language Quality and Response Structure, focused on NLP-oriented metrics (e.g., relevance, coherence, accuracy); and Diagnostic Reasoning Strategies, capturing clinically grounded reasoning styles (e.g., pattern recognition, algorithmic reasoning, mechanistic insight). Each domain includes a cumulative score and multiple sub-metrics, yielding twelve evaluation criteria overall.

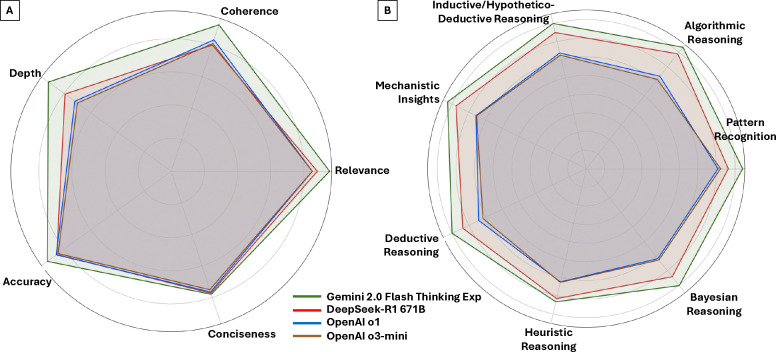

Fig. 2 summarizes model performance across these criteria using radar plots. Gemini clearly outperforms all other models on language quality metrics (Fig. 2a), with the largest margins in “depth” and “coherence”. DeepSeek ranks second overall, while the two OpenAI reasoning models show similar performance, particularly on accuracy and relevance. Fig. 2b visualizes pathology-oriented reasoning strategies. Gemini again leads across most dimensions, especially algorithmic and inductive reasoning. DeepSeek demonstrates competitive performance, whereas the OpenAI models score lower in mechanistic, heuristic, and Bayesian reasoning, suggesting less robust clinical reasoning capabilities. These overarching trends are explored in greater detail in the subsequent sections.

Figure 2: Comparative Performance of LLMs Across Language Quality and Diagnostic Reasoning Domains.

Radar plots summarize the normalized mean scores (range: 0 to 1) assigned by 11 pathologists for each model across 12 evaluation criteria. Panel A: Language quality metrics, including accuracy, relevance, analytical depth, coherence, conciseness, and cumulative scores. Panel B: Diagnostic reasoning strategies, including pattern recognition, algorithmic reasoning, deductive reasoning, inductive/hypothetico-deductive reasoning, heuristic reasoning, mechanistic insights, and Bayesian reasoning. Gemini consistently outperformed other models across both domains, particularly in analytical depth and structured reasoning. OpenAI o1 showed the greatest variability in performance across metrics.

3.1. Evaluation of Language Quality and Response Structure

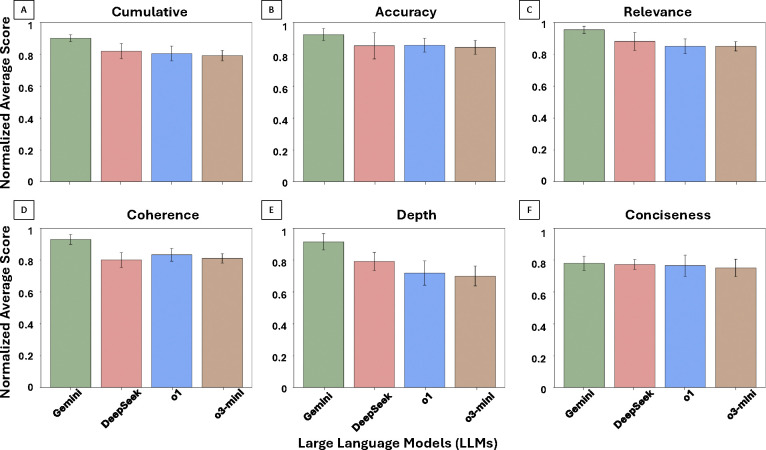

Fig. 3 presents normalized model performance across five language-focused evaluation metrics—relevance, coherence, analytical depth, accuracy, and conciseness—along with a cumulative average score. Fig. 3A shows cumulative scores across all 15 questions, while Figs. 3B–F display model-specific performance on individual metrics. Across all dimensions, Gemini consistently achieved the highest average scores. In cumulative performance (Fig. 3A), Gemini significantly outperformed OpenAI o1, OpenAI o3-mini, and DeepSeek (p < 0.05). DeepSeek also significantly outperformed both OpenAI models (p < 0.05), while there was no significant difference between OpenAI o1 and OpenAI o3-mini (p > 0.05).

Figure 3: LLM Performance on Language Quality Metrics.

Normalized average scores (range: 0 to 1) across five core language quality dimensions—accuracy, relevance, coherence, analytical depth, and conciseness—based on ratings from 11 pathologists across 15 pathology questions. Panels A–F: Overall average performance (A), followed by Accuracy (B), Relevance (C), Coherence (D), Analytical Depth (E), and Conciseness (F). Gemini consistently achieved the highest scores across all metrics, with the greatest variability observed in coherence and analytical depth.

For accuracy (Fig. 3B), Gemini significantly outperformed both DeepSeek and OpenAI o1 (p < 0.05). All other pairwise differences were not statistically significant (p > 0.05). In relevance (Fig. 3C), all models maintained relatively high scores, with Gemini significantly outperforming all others (p < 0.05). No significant differences were observed among DeepSeek, OpenAI o1, and OpenAI o3-mini (p > 0.05). For coherence (Fig. 3D), Gemini significantly outperformed all three other models (p < 0.05), while no statistically significant differences were observed between DeepSeek and either OpenAI model (p > 0.05).

Analytical depth (Fig. 3E) showed more variation; Gemini significantly outperformed all other models (p < 0.05), and DeepSeek significantly outperformed both OpenAI o1 and OpenAI o3-mini (p < 0.05). No significant difference was observed between the two OpenAI models (p > 0.05). Lastly, conciseness (Fig. 3F) showed comparable performance across all models. Although Gemini had slightly higher average scores, no statistically significant differences were observed across any pairwise comparisons (p > 0.05). Overall, these results suggest that LLMs varied most on metrics requiring deeper interpretive judgment (e.g., analytical depth and coherence), with more consistent performance observed on factual dimensions like relevance and accuracy.

3.2. Evaluation of Diagnostic Reasoning Strategies

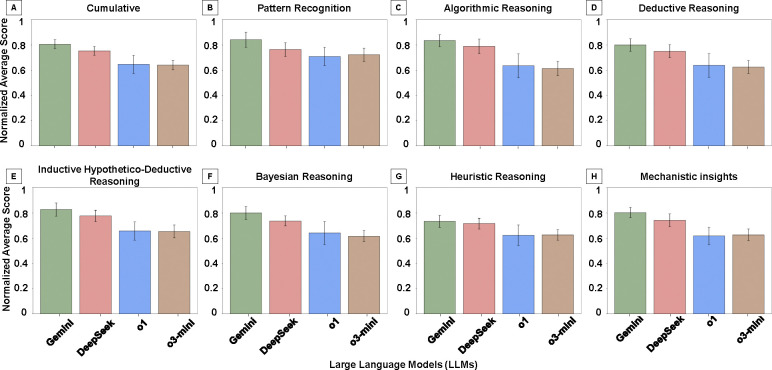

Fig. 4 shows model performance across seven clinically relevant diagnostic reasoning strategies: pattern recognition, algorithmic reasoning, deductive reasoning, inductive/hypothetico-deductive reasoning, Bayesian reasoning, heuristic reasoning, and mechanistic insights. Fig. 4A presents cumulative reasoning scores averaged across all strategies and questions, while Fig. 4B–H display model-specific performance on each individual reasoning type. In overall performance (Fig. 4A), Gemini achieved the highest cumulative score, followed by DeepSeek, with both OpenAI models scoring lower. Pairwise comparisons showed statistically significant differences between Gemini and all other models (p < 0.05), as well as between DeepSeek and both OpenAI variants (p < 0.05). No significant difference was found between OpenAI o1 and OpenAI o3-mini (p > 0.05).

Figure 4: Diagnostic Reasoning Performance by Reasoning Type.

Normalized mean scores (range: 0 to 1) for each LLM across seven diagnostic reasoning strategies based on expert evaluation of pathology-related questions. Panels A–H: Cumulative reasoning performance (A), Pattern Recognition (B), Algorithmic Reasoning (C), Deductive Reasoning (D), Inductive/Hypothetico-Deductive Reasoning (E), Bayesian Reasoning (F), Heuristic Reasoning (G), and Mechanistic Insights (H). Gemini and DeepSeek consistently outperformed the OpenAI models across most reasoning types, with particularly strong performance in algorithmic, inductive, and mechanistic reasoning. Heuristic and Bayesian reasoning yielded the lowest scores across all models, reflecting challenges with uncertainty-driven and experiential inference.

In pattern recognition (Fig. 4B), Gemini significantly outperformed all other models (p < 0.05), while differences between DeepSeek and the OpenAI models were not statistically significant (p > 0.05). For algorithmic reasoning (Fig. 4C), which yielded the highest scores among all reasoning types, Gemini and DeepSeek both significantly outperformed OpenAI o1 and OpenAI o3-mini (p < 0.05), though no difference was observed between Gemini and DeepSeek (p > 0.05). In deductive reasoning (Fig. 4D), Gemini significantly outperformed both OpenAI models (p < 0.05), and DeepSeek also significantly outperformed both OpenAI o1 and OpenAI o3-mini (p < 0.05). No significant difference was observed between Gemini and DeepSeek or between the two OpenAI models. For inductive/hypothetico-deductive reasoning (Fig. 4E), both Gemini and DeepSeek significantly outperformed the OpenAI models (p < 0.05), with no difference observed between Gemini and DeepSeek (p > 0.05).

Bayesian (probabilistic) reasoning scores (Fig. 4F) were generally lower across all models. However, all pairwise comparisons were statistically significant (p < 0.05), with Gemini outperforming all others and DeepSeek significantly outperforming both OpenAI models. In heuristic reasoning (Fig. 4G), Gemini and DeepSeek significantly outperformed both OpenAI models (p < 0.05) but did not significantly differ from each other (p > 0.05). No significant difference was observed between OpenAI o1 and o3-mini (p > 0.05). In mechanistic insights (Fig. 4H), all pairwise comparisons were statistically significant (p < 0.05). Gemini significantly outperformed DeepSeek and both OpenAI models, while DeepSeek significantly outperformed both OpenAI variants.

3.3. Inter-observer Percent Agreement Across Models

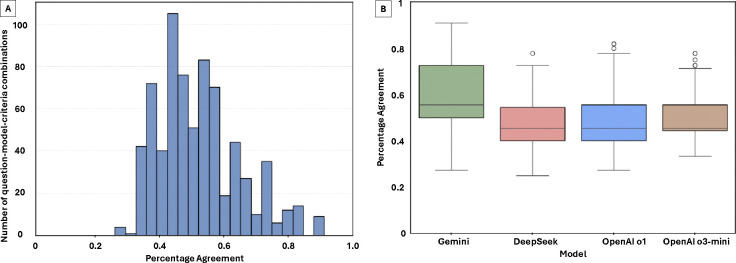

Percent agreement was analyzed for 720 unique Q-M-C combinations across four models: Gemini, DeepSeek, OpenAI o1, and OpenAI o3-mini. The overall mean percent agreement was 0.49 (range: 0.25–0.91). Fig. 5A shows a full distribution of percent agreement, with model-specific distributions shown in Fig. 5B. Percent agreement significantly differed across models (Kruskal-Wallis H = 76.798, p < 0.001). Post-hoc pairwise Dunn’s tests revealed that Gemini had significantly higher percent agreement than all other models (p < 0.001 for all comparisons). No significant differences were found among DeepSeek, OpenAI o1, and OpenAI o3-mini (p > 0.05).

Figure 5:

Percent agreement across 720 unique combinations of question, model, and evaluation criterion (Q–M–C), reflecting the proportion of raters who selected the most common score. Panel A: Distribution of percent agreement across all Q–M–C combinations. Panel B: Model-specific distributions of agreement. Gemini achieved significantly higher inter-observer agreement compared to all other models (p < 0.001), suggesting greater consistency and interpretability of its outputs. No statistically significant differences were observed in pair-wise testing between DeepSeek, OpenAI o1, and OpenAI o3-mini.

4. Discussion

This study evaluated the ability of four state-of-the-art LLMs to perform pathology-specific diagnostic reasoning using expert assessments of both language quality and clinical reasoning strategies. While all models produced generally relevant and accurate responses, only Gemini and DeepSeek demonstrated consistent strength across multiple reasoning dimensions, particularly analytical depth, coherence, and algorithmic reasoning. Moreover, Gemini achieved the highest inter-observer agreement among expert pathologists, suggesting greater clarity and interpretability in its responses. These findings highlight the need to move beyond accuracy-focused evaluation and toward models that generate clinically coherent and contextually intelligible reasoning, especially in high-stakes diagnostic domains such as pathology. This analysis also revealed substantial variation in the reasoning performance of LLMs when applied to pathology-focused diagnostic questions. Among the four evaluated models, Gemini consistently achieved the highest scores across both evaluation domains (language quality and diagnostic reasoning). DeepSeek followed closely in most categories, while the OpenAI variants (o1 and o3-mini) generally performed lower compared to the other LLMs, particularly in analytical depth, coherence, and advanced reasoning types.

In the language quality domain, all models produced broadly relevant responses. Gemini and DeepSeek distinguished themselves in analytical depth, coherence, and cumulative performance. Gemini also significantly outperformed other models in accuracy, suggesting more robust and precise responses. While the OpenAI models tended to be more concise, these differences were not statistically significant and often came at the expense of analytical depth and interpretability. In the diagnostic reasoning domain, all models performed best on algorithmic and deductive reasoning tasks. Gemini consistently outperformed all others across most reasoning types. DeepSeek also performed well in several categories, though its advantage over the OpenAI models was less pronounced in pattern recognition. Performance dropped markedly for all models in nuanced reasoning types such as heuristic, mechanistic, and Bayesian reasoning. These areas typically require integrating implicit knowledge, clinical judgment, and experience-based decision-making.

Notably, inter-observer agreement was highest for Gemini, indicating that its responses were more consistently interpreted and rated by expert pathologists. This suggests that Gemini’s outputs may be not only more complete or coherent but also more reliably understandable to clinical end-users. Such consistency is essential for downstream applications of LLMs in real-world diagnostic settings, where human-AI collaboration depends on shared reasoning clarity.

The prior evaluations of LLMs in medicine have focused on factual correctness, clinical acceptability, or performance on standardized assessments such as the USMLE and other multiple-choice examinations [11, 12, 13, 17]. While these studies demonstrate that advanced models can retrieve and generate clinically accurate information, they typically frame evaluation in binary terms, right or wrong, without assessing the structure, context, or interpretability of the underlying reasoning processes. However, recent studies have begun to explore how generative AI might support medical reasoning in pathology and related domains. Waqas et al. have outlined the promise of LLMs and FMs for digital pathology applications such as structured reporting, clinical summarization, and educational simulations [8, 9]. Brodsky et al. emphasized the importance of interpretability and reasoning traceability in anatomic pathology, noting that AI outputs must not only be accurate but also reflect logical, clinically meaningful reasoning patterns [7]. However, these studies primarily describe use cases or propose conceptual frameworks rather than empirically evaluating whether LLMs demonstrate structured reasoning aligned with human diagnostic strategies. Few studies have assessed whether model outputs reflect the types of reasoning, such as algorithmic, heuristic, or mechanistic, that are integral to expert pathology practice.

Histologic diagnosis is a cognitively demanding task that requires the integration of diverse information sources, ranging from visual features on tissue slides to clinical history and disease biology. As part of this decision-making process, pathologists rely on a broad set of reasoning strategies, including pattern recognition, algorithmic workflows, hypothetico-deductive reasoning, Bayesian inference, and heuristics, often applied fluidly based on experience and context [1, 18]. Despite their central role in diagnostic workflows, these reasoning approaches are rarely formalized in LLM evaluation studies. While prior cognitive and educational research has documented these strategies in pathology and clinical decision-making [19, 20, 21], few studies have translated them into a structured framework for evaluating AI systems in this domain. This study addresses this gap by developing and applying a structured evaluation framework that operationalizes seven clinically grounded reasoning strategies, i.e., pattern recognition, algorithmic reasoning, deductive and inductive reasoning, Bayesian reasoning, mechanistic insights, and heuristic reasoning. In contrast to prior work that has focused on factual accuracy or subjective plausibility, we used expert raters to assess whether model responses actually reflected reasoning types that pathologists would apply during their decision-making process. We also included five language quality metrics to assess the structure and interpretability of responses, features that are critical for clinical adoption but often overlooked in model evaluation. This approach builds on prior conceptual work in generative AI for pathology [7, 8, 9], but moves beyond descriptive analysis to provide a domain-specific, empirically tested benchmark for reasoning assessment.

The results of this study show that LLMs perform relatively well in algorithmic and deductive reasoning, likely reflecting their ability to retrieve structured clinical knowledge from training data and guidelines [12, 22, 23, 24]. However, model performance was markedly lower in reasoning types that rely more heavily on experience, context, and uncertainty, such as heuristic reasoning, Bayesian inference, and mechanistic understanding [25, 26]. These findings align with previous observations that current LLMs struggle with nuanced clinical reasoning, particularly in settings that require adaptive judgment [27, 28, 29, 30]. Moreover, our analysis highlights the lack of metacognitive regulation in LLMs, their inability to recognize uncertainty, self-correct, or reconcile conflicting information, challenges that have been noted in other domains as well [31]. By incorporating expert adjudication, reasoning taxonomies, and inter-observer agreement, our study offers a new model for evaluating clinical reasoning in generative AI that extends beyond correctness to assess the fidelity and structure of reasoning itself.

It is also important to interpret lower scores in certain reasoning categories in the context of clinical relevance and question content. For example, pattern recognition may be inapplicable to questions focused solely on molecular alterations or classification schemes, where visual cues are absent. Similarly, while heuristic reasoning was consistently rated low across models, some pathologists noted that this may reflect an appropriate aversion to premature conclusions based on past experience alone, an approach discouraged in modern diagnostic workflows that emphasize comprehensive differential diagnosis. These nuances underscore the importance of context when evaluating reasoning strategies.

Our findings have important implications for the safe and effective integration of LLMs into clinical workflows, particularly in diagnostic fields such as pathology, where interpretability, trust, and domain-specific reasoning are paramount. While all four models evaluated in this study produced largely relevant and accurate responses, only Gemini, and to a slightly lesser extent DeepSeek, demonstrated reasoning structures that aligned with real-world diagnostic approaches. These models also achieved higher inter-observer agreement among expert pathologists, suggesting that their outputs were not only well-structured but also consistently interpretable across users. This consistency is critical in clinical environments where diagnostic decisions are collaborative and where AI-generated insights must be readily understood, validated, and acted upon by human experts. In contrast, models with lower coherence and reasoning clarity may increase cognitive burden, introduce ambiguity, or erode trust, especially when deployed in high-stakes or time-constrained settings. By highlighting variation in reasoning quality across models and across different types of diagnostic logic, our results suggest that LLM evaluation should go beyond factual correctness and consider reasoning fidelity as a core requirement. This is particularly relevant for educational, triage, or decision support applications in pathology, where nuanced reasoning is essential, and automation must complement, rather than obscure, human expertise.

We acknowledge that this study has some limitations. Although a diverse set of reasoning strategies was evaluated, this analysis was limited to 15 open-ended questions. While these were curated by expert pathologists, they may not fully capture the range of diagnostic challenges encountered in practice. Future iterations of this benchmark should include a broader array of cases, including rare and diagnostically complex scenarios. We focused solely on text-based LLMs and did not assess multimodal models that incorporate pathology images. The use of expert raters (i.e., pathologists) adds clinical realism but also introduces subjectivity. We also did not measure downstream clinical impact, such as improvements in diagnostic accuracy or workflow efficiency. Future studies should explore these outcomes prospectively. In addition, the effects of prompting strategies, fine-tuning, and domain adaptation on reasoning performance deserve further investigation. Reasoning-aligned LLMs may hold promise for educational use, simulation, and decision support, applications that require careful validation in real-world settings.

5. Conclusion

This study presents a structured evaluation of advanced reasoning LLMs in pathology, focusing not only on factual accuracy but also on diagnostic reasoning quality. By assessing model outputs across five language metrics and seven clinically grounded reasoning strategies, we provide a nuanced benchmark that goes beyond traditional correctness-based evaluations. These findings demonstrate that while all models produce largely relevant and accurate responses, substantial differences exist in their ability to emulate expert diagnostic reasoning. Gemini and DeepSeek outperformed other models in both reasoning fidelity and inter-observer agreement, suggesting greater alignment with clinical expectations. Conversely, current limitations in heuristic, mechanistic, and probabilistic reasoning highlight areas for model improvement. To our knowledge, this is the first study to systematically evaluate the diagnostic reasoning capabilities of LLMs in pathology using expert-annotated criteria grounded in clinical practice. As generative AI tools continue to evolve, clinically meaningful evaluation frameworks, such as the one introduced here, will be essential for guiding safe and effective integration into diagnostic practice. This work lays the foundation for future studies that seek to assess not just whether models are right but whether they reason like clinicians.

Supplementary Material

Figure 1: Evaluation Framework for Assessing Diagnostic Reasoning in Large Language Models.

Fifteen open-ended diagnostic pathology questions, reflecting the complexity of board licensing examinations, were independently submitted to four LLMs: OpenAI o1, OpenAI o3-mini, Gemini 2.0 Flash-Thinking Experimental (Gemini), and DeepSeek-R1 671B (DeepSeek). Each response was evaluated by 11 expert pathologists using a structured rubric comprising 12 metrics across two domains: (1) language quality and response structure (relevance, coherence, accuracy, depth, and conciseness) and (2) diagnostic reasoning strategies (pattern recognition, algorithmic, deductive, inductive/hypothetico-deductive, heuristic, mechanistic, and Bayesian reasoning). Pathologists were blinded to model identification. Evaluation scores were aggregated and normalized to account for missing data and served as the basis for both model performance comparisons and inter-observer agreement analysis.

Acknowledgments

The research was supported in part by a Cancer Center Support Grant at the H. Lee Moffitt Cancer Center & Research Institute, awarded by the NIH/NCI (P30-CA76292), a Florida Biomedical Research Grant (21B12), an NIH/NCI grant (U01-CA200464), NSF Awards 2234836 and 2234468 and NAIRR pilot funding.

Contributor Information

Asim Waqas, Department of Cancer Epidemiology, H. Lee Moffitt Cancer Center & Research Institute, Tampa, FL.

Asma Khan, Armed Forces Institute of Pathology, Rawalpindi, Pakistan.

Zarifa Gahramanli Ozturk, Clinical Science Lab, H. Lee Moffitt Cancer Center & Research Institute.

Daryoush Saeed-Vafa, Department of Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Weishen Chen, Department of Dermatology & Cutaneous Surgery, University of South Florida, Tampa, FL.

Jasreman Dhillon, Department of Anatomic Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Andrey Bychkov, Department of Pathology, Kameda Medical Center, Kamogawa City, Chiba Prefecture, Japan.

Marilyn M. Bui, Department of Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Ehsan Ullah, Department of Surgery, Health New Zealand, Counties Manukau, Auckland, New Zealand.

Farah Khalil, Department of Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Vaibhav Chumbalkar, Department of Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Zena Jameel, Department of Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Humberto Trejo Bittar, Department of Pathology, H. Lee Moffitt Cancer Center & Research Institute.

Rajendra S. Singh, Dermatopathology and Digital Pathology, Summit Health, Berkley Heights, NJ.

Anil V. Parwani, Department of Pathology, The Ohio State University, Columbus, Ohio.

Matthew B. Schabath, Department of Cancer Epidemiology, H. Lee Moffitt Cancer Center & Research Institute.

Ghulam Rasool, Department of Machine Learning, H. Lee Moffitt Cancer Center & Research Institute.

References

- [1].Pena Gil Patrus and Andrade-Filho Josede Souza. How does a pathologist make a diagnosis? Archives of Pathology and Laboratory Medicine, 133(1):124–132, January 2009. [DOI] [PubMed] [Google Scholar]

- [2].Elemento Olivier, Khozin Sean, and Sternberg Cora N. The use of artificial intelligence for cancer therapeutic decision-making. NEJM AI, page AIra2401164, 2025. [Google Scholar]

- [3].Tripathi Aakash, Waqas Asim, Venkatesan Kavya, Ullah Ehsan, Bui Marilyn, and Rasool Ghulam. 1391 ai-driven extraction of key clinical data from pathology reports to enhance cancer registries. Laboratory Investigation, 105(3), 2025. [Google Scholar]

- [4].Rydzewski Nicholas R, Dinakaran Deepak, Zhao Shuang G, Ruppin Eytan, Turkbey Baris, Citrin Deborah E, and Patel Krishnan R. Comparative evaluation of llms in clinical oncology. NEJM AI, 1(5):AIoa2300151, 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Wu Jiageng, Liu Xiaocong, Li Minghui, Li Wanxin, Su Zichang, Lin Shixu, Garay Lucas, Zhang Zhiyun, Zhang Yujie, Zeng Qingcheng, et al. Clinical text datasets for medical artificial intelligence and large language models—a systematic review. NEJM AI, 1(6):AIra2400012, 2024. [Google Scholar]

- [6].Laohawetwanit Thiyaphat, Pinto Daniel Gomes, and Bychkov Andrey. A survey analysis of the adoption of large language models among pathologists. American Journal of Clinical Pathology, 163(1):52–59, July 2024. [DOI] [PubMed] [Google Scholar]

- [7].Brodsky Victor, Ullah Ehsan, Bychkov Andrey, Song Andrew H., Walk Eric E., Louis Peter, Rasool Ghulam, Singh Rajendra S., Mahmood Faisal, Bui Marilyn M., and Parwani Anil V.. Generative artificial intelligence in anatomic pathology. Archives of Pathology and Laboratory Medicine, 149(4):298–318, January 2025. [DOI] [PubMed] [Google Scholar]

- [8].Waqas Asim, Naveed Javeria, Shahnawaz Warda, Asghar Shoaib, Bui Marilyn M, and Rasool Ghulam. Digital pathology and multimodal learning on oncology data. BJR|Artificial Intelligence, 1(1), January 2024. [Google Scholar]

- [9].Waqas Asim, Bui Marilyn M., Glassy Eric F., Naqa Issam El, Borkowski Piotr, Borkowski Andrew A., and Rasool Ghulam. Revolutionizing digital pathology with the power of generative artificial intelligence and foundation models. Laboratory Investigation, 103(11):100255, November 2023. [DOI] [PubMed] [Google Scholar]

- [10].Jaeckle Florian, Denholm James, Schreiber Benjamin, Evans Shelley C, Wicks Mike N, Chan James YH, Bateman Adrian C, Natu Sonali, Arends Mark J, and Soilleux Elizabeth. Machine learning achieves pathologist-level celiac disease diagnosis. NEJM AI, 2(4):AIoa2400738, 2025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [11].Wang Jonathan and Redelmeier Donald A.. Cognitive biases and artificial intelligence. NEJM AI, 1(12), November 2024. [Google Scholar]

- [12].Singhal Karan, Azizi Shekoofeh, Tu Tao, Sara Mahdavi S., Wei Jason, Chung Hyung Won, Scales Nathan, Tanwani Ajay, Cole-Lewis Heather, Pfohl Stephen, Payne Perry, Seneviratne Martin, Gamble Paul, Kelly Chris, Babiker Abubakr, Schärli Nathanael, Chowdhery Aakanksha, Mansfield Philip, Demner-Fushman Dina, Arcas Blaise Agüera y, Webster Dale, Corrado Greg S., Matias Yossi, Chou Katherine, Gottweis Juraj, Tomasev Nenad, Liu Yun, Rajkomar Alvin, Barral Joelle, Semturs Christopher, Karthikesalingam Alan, and Natarajan Vivek. Large language models encode clinical knowledge. Nature, 620(7972):172–180, July 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Brin Dana, Sorin Vera, Vaid Akhil, Soroush Ali, Glicksberg Benjamin S., Charney Alexander W., Nadkarni Girish, and Klang Eyal. Comparing chatgpt and gpt-4 performance in usmle soft skill assessments. Scientific Reports, 13(1), October 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Wang Andrew Y, Lin Sherman, Tran Christopher, Homer Robert J, Wilsdon Dan, Walsh Joanna C, Goebel Emily A, Sansano Irene, Sonawane Snehal, Cockenpot Vincent, et al. Assessment of pathology domain-specific knowledge of chatgpt and comparison to human performance. Archives of pathology & laboratory medicine, 148(10):1152–1158, 2024. [DOI] [PubMed] [Google Scholar]

- [15].Lee Junbok, Park Sungkyung, Shin Jaeyong, and Cho Belong. Analyzing evaluation methods for large language models in the medical field: a scoping review. BMC Medical Informatics and Decision Making, 24(1), November 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Seo Junhyuk, Choi Dasol, Kim Taerim, Cha Won Chul, Kim Minha, Yoo Haanju, Oh Namkee, Yi YongJin, Lee Kye Hwa, and Choi Edward. Evaluation framework of large language models in medical documentation: Development and usability study. Journal of Medical Internet Research, 26:e58329, November 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].Tam Thomas Yu Chow, Sivarajkumar Sonish, Kapoor Sumit, Stolyar Alisa V., Polanska Katelyn, McCarthy Karleigh R., Osterhoudt Hunter, Wu Xizhi, Visweswaran Shyam, Fu Sunyang, Mathur Piyush, Cacciamani Giovanni E., Sun Cong, Peng Yifan, and Wang Yanshan. A framework for human evaluation of large language models in healthcare derived from literature review. npj Digital Medicine, 7(1), September 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Foucar Elliott. Diagnostic decision-making in anatomic pathology. Pathology Patterns Reviews, 116(suppl1):S21–S33, December 2001. [DOI] [PubMed] [Google Scholar]

- [19].Schlegel W. and Kayser K.. Pattern recognition in histo-pathology: Basic considerations. Methods of Information in Medicine, 21(01):15–22, January 1982. [PubMed] [Google Scholar]

- [20].Shin Dmitriy, Kovalenko Mikhail, Ersoy Ilker, Li Yu, Doll Donald, Shyu Chi-Ren, and Hammer Richard. Pathedex – uncovering high-explanatory visual diagnostics heuristics using digital pathology and multiscale gaze data. Journal of Pathology Informatics, 8(1):29, January 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [21].Crowley Rebecca S., Legowski Elizabeth, Medvedeva Olga, Reitmeyer Kayse, Tseytlin Eugene, Castine Melissa, Jukic Drazen, and Mello-Thoms Claudia. Automated detection of heuristics and biases among pathologists in a computer-based system. Advances in Health Sciences Education, 18(3):343–363, May 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Raab Stephen Spencer. The current and ideal state of anatomic pathology patient safety. Diagnosis, 1(1):95–97, January 2014. [DOI] [PubMed] [Google Scholar]

- [23].Hamilton Peter W, van Diest Paul J, Williams Richard, and Gallagher Anthony G. Do we see what we think we see? the complexities of morphological assessment. The Journal of Pathology, 218(3):285–291, March 2009. [DOI] [PubMed] [Google Scholar]

- [24].Truhn Daniel, Reis-Filho Jorge S., and Kather Jakob Nikolas. Large language models should be used as scientific reasoning engines, not knowledge databases. Nature Medicine, 29(12):2983–2984, October 2023. [DOI] [PubMed] [Google Scholar]

- [25].Rao Amogh Ananda, Awale Milind, and Davis Sissmol. Medical diagnosis reimagined as a process of bayesian reasoning and elimination. Cureus, September 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [26].Arvisais-Anhalt Simone, Gonias Steven L., and Murray Sara G.. Establishing priorities for implementation of large language models in pathology and laboratory medicine. Academic Pathology, 11(1):100101, January 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Goh Ethan, Gallo Robert, Hom Jason, Strong Eric, Weng Yingjie, Kerman Hannah, Cool Joséphine A., Kanjee Zahir, Parsons Andrew S., Ahuja Neera, Horvitz Eric, Yang Daniel, Milstein Arnold, Olson Andrew P. J., Rodman Adam, and Chen Jonathan H.. Large language model influence on diagnostic reasoning: A randomized clinical trial. JAMA Network Open, 7(10):e2440969, October 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Bélisle-Pipon Jean-Christophe. Why we need to be careful with llms in medicine. Frontiers in Medicine, 11, December 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [29].Cabral Stephanie, Restrepo Daniel, Kanjee Zahir, Wilson Philip, Crowe Byron, Abdulnour Raja-Elie, and Rodman Adam. Clinical reasoning of a generative artificial intelligence model compared with physicians. JAMA Internal Medicine, 184(5):581, May 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Kim Jonathan, Podlasek Anna, Shidara Kie, Liu Feng, Alaa Ahmed, and Bernardo Danilo. Limitations of large language models in clinical problem-solving arising from inflexible reasoning, 2025. [Google Scholar]

- [31].Tankelevitch Lev, Kewenig Viktor, Simkute Auste, Scott Ava Elizabeth, Sarkar Advait, Sellen Abigail, and Rintel Sean. The metacognitive demands and opportunities of generative ai. In Proceedings of the CHI Conference on Human Factors in Computing Systems, CHI 24, page 1–24. ACM, May 2024. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.