Abstract

How can we make sense of large-scale recordings of neural activity across learning? Theories of neural network learning with their origins in statistical physics offer a potential answer: for a given task, there are often a small set of summary statistics that are sufficient to predict performance as the network learns. Here, we review recent advances in how summary statistics can be used to build theoretical understanding of neural network learning. We then argue for how this perspective can inform the analysis of neural data, enabling better understanding of learning in biological and artificial neural networks.

I. INTRODUCTION

Experience reshapes neural population activity, molding an animal’s representations of the world as it learns to perform new tasks. Thanks to advances in experimental technologies, it is just now becoming possible to measure changes in the activity of large neural populations across the course of learning (Fink et al., 2025; Kriegeskorte and Wei, 2021; Masset et al., 2022; Steinmetz et al., 2021; Sun et al., 2025; Vaidya et al., 2025; Zhong et al., 2025). However, with this new capability comes the challenge of identifying which features of high-dimensional activity patterns are meaningful for understanding learning. While analyses of representations have begun how to elucidate how learning reshapes the structure of activity, it is not in general clear whether these measurements are sufficient to understand how representational changes relate to behavior (Krakauer et al., 2017; Kriegeskorte et al., 2008; Kriegeskorte and Wei, 2021; Sucholutsky et al., 2024).

In this Perspective, we propose that the principled identification of summary statistics of learning offers a possible path forward. This framework is grounded in theories of the statistical physics of learning in neural networks, which show that low-dimensional summary statistics are often sufficient to predict task performance over the course of learning (Engel and van den Broeck, 2001; Watkin et al., 1993; Zdeborová and Krzakala, 2016). We argue that thinking systematically about summary statistics gives new insight into what existing approaches of quantifying neural representations reveal about learning, and allows identification of what additional measurements would be required to constrain models of plasticity. We emphasize that the goal of this Perspective is not to advocate for the use of a particular set of summary statistics, but rather to explain the general philosophy of this approach to understanding learning in high dimensions.

II. WHAT IS A SUMMARY STATISTIC?

We posit that summary statistics of learning must satisfy two minimal desiderata:

They must be low-dimensional. That is, their dimension is low relative to the number of neurons in the network of interest. Indeed, most summary statistics we will encounter are determined by averages over the population of neurons.

They must be sufficient to predict behavior across learning. From a theoretical standpoint, there should exist a closed set of equations describing the evolution of the summary statistics that predict the network’s performance.

As we will illustrate with concrete examples in Section III, summary statistics satisfying these two desiderata are often highly interpretable thanks to their clear relationship to the network architecture and learning task. However, the summary statistics relevant for predicting performance may not be sufficient to predict all statistical properties of population activity. We will elaborate on this issue, and the resulting limitations of descriptions based on summary statistics alone, in Section IV.

Our use of the term “summary statistics” follows work by Ben Arous and colleagues (Ben Arous et al., 2023, 2022). In the literature on the statistical physics of learning, the quantities that we refer to as summary statistics are often termed “order parameters” (Engel and van den Broeck, 2001; Mézard et al., 1987; Watkin et al., 1993; Zdeborová and Krzakala, 2016). We prefer to use the former, more general term as it better captures the goal of these reduced descriptions in a neuroscientific context: we aim to summarize the features of neural activity relevant for learning.

III. SUMMARY STATISTICS IN THEORIES OF NEURAL NETWORK LEARNING

We now review how summary statistics emerge naturally in theoretical analyses of neural network learning. Out of many theoretical results, we focus on two example settings: online learning from high-dimensional data in shallow networks, and batch learning in wide and deep networks (Arnaboldi et al., 2023; Ben Arous et al., 2023; Bordelon et al., 2025; Bordelon and Pehlevan, 2023b; Cui et al., 2023; Engel and van den Broeck, 2001; Goldt et al., 2019; van Meegen and Sompolinsky, 2025; Saad and Solla, 1995; Saxe et al., 2013; Watkin et al., 1993; Zavatone-Veth and Pehlevan, 2021; Zavatone-Veth et al., 2022b; Zdeborová and Krzakala, 2016). These model problems illustrate how relevant summary statistics may be identified given a task, network architecture, and learning rule.

A. Online learning in shallow neural networks with high dimensional data

Classical models of online gradient descent learning in high dimensions can be often be summarized with simple summary statistics (Arnaboldi et al., 2023; Ben Arous et al., 2022; Biehl and Schwarze, 1995; Engel and van den Broeck, 2001; Goldt et al., 2019, 2020; Saad and Solla, 1995; Watkin et al., 1993). In this section, we discuss how the generalization performance of perceptrons and shallow (two-layer) neural networks trained on large quantities of high dimensional data can be summarized by simple weight alignment measures. Most simply, the perceptron model seeks to learn a weight vector which correctly classifies a finite set of randomly sampled training input-output pairs (, ). If the inputs are random, , and the targets are generated by a teacher network , then the generalization performance (performance of the model on new unseen data, is completely determined by the overlap of with itself and with the target direction

If the learning rate is scaled appropriately with the dimension , the high-dimensional (large-) limit of online stochastic gradient descent is given by a deterministic set of equations for and :

| (1) |

where the continuous training ‘time’ is the ratio of the number of samples seen to the dimension and is a nonlinear function that depends on the learning rate, the loss function, and the link function (Arnaboldi et al., 2023; Ben Arous et al., 2022; Engel and van den Broeck, 2001; Goldt et al., 2019; Saad and Solla, 1995). Integrating this update equation allows one to predict the evolution of the generalization error as more training data are provided to the algorithm. Despite the infinite dimensionality of the original optimization problem, only two dimensions are necessary to capture the dynamics of generalization error.

The analysis of online perceptron learning can be extended to two layer neural networks with a small number of hidden neurons ,

In this setting with isotropic random data, the relevant summary statistics are the readout weights , along with matrices and with entries

For this system, we can track the gradient descent dynamics for , , and through a generalization of Equation (1) (Biehl and Schwarze, 1995; Goldt et al., 2019, 2020; Saad and Solla, 1995). This reduces the dimensionality of the dynamics from the trainable parameters {}, {} to summary statistics, which is significant when . This reduction enables the application of analyses that cannot scale to high dimensions, for instance control-theoretic methods to study optimal learning hyperparameters and curricula (Mignacco and Mori, 2025; Mori et al., 2025). Recent works have also begun to study approximations to these summary statistics when the network width is also large, as further dimensionality reduction if possible when and have stereotyped structures (Arnaboldi et al., 2023; Montanari and Urbani, 2025).

Under what conditions is this reduction possible? Fundamentally, the summary statistics , , and are sufficient to determine the network’s performance so long as the preactivations and are approximately Gaussian. Thus, one can relax the assumption that the inputs are exactly Gaussian so long as a central limit theorem applies to and (Goldt et al., 2019, 2020). Moreover, one can allow for correlations between the different input dimensions so long as and remain Gaussian. If , with a modification of the definition of the overlaps to and a similar reduction applies (Arnaboldi et al., 2023). One can even consider extensions to plasticity rules other than stochastic gradient descent. For example, online node perturbation leads to a different effective dynamics for the same set of summary statistics (Hara et al., 2011, 2013).

How could the overlaps and be accessed from measurements of neural activity? And, in the absence of detailed knowledge of a teacher network, how could one identify the relevant overlaps? Under the simple structural assumptions of these models, one could estimate the overlaps from covariances of network activity across stimuli, i.e., with isotropic inputs one has and . Moreover, one can in some cases detect this underlying low-dimensional structure by examining the principal components of the learning trajectory (Ben Arous et al., 2023). However, more theoretical work is required in this vein.

B. Learning in wide and deep neural networks

Another strategy to reduce the complexity of multilayer deep neural networks is to analyze the dynamics of learning in terms of representational similarity matrices (kernels) for each hidden layer of the network. Consider, for example, a deep fully-connected network with input ,

where denotes training time. When the weights start from an uninformed initial condition (i.e., Gaussian random matrices), and the network is optimized with full-batch gradient descent in the large width limit (Figure 1), the relevant summary statistics of the model are representational similarity matrices

and gradient similarity matrices

which respectively compare the hidden states and the gradient signals at each hidden layer for each pair of data points (, ) and each pair of training times (, ). Depending on how weights and learning rates are scaled, one can obtain different types of large-width () limits. In the lazy / kernel limit where is constant, these representational similarity matrices are static over the course of learning (Jacot et al., 2018). However, an alternative scaling () can be adopted where these objects evolve in a task-dependent manner even as (Bordelon and Pehlevan, 2023b; Yang and Hu, 2021). In either case the performance of the network is determined by these objects since the outputs satisfy a differential equation

where is the loss function and denotes expectation over the training dataset. So long as the square of the number of training data points is small relative to the network width, this provides a substantial dimensionality reduction.

FIG. 1. Representational similarity kernels in wide neural network models and in the brain.

a. Diagram of the infinite-width limit of a deep feedforward neural network. For a fixed input and output dimension, one considers a sequence of networks of increasing hidden layer widths. b. Predicting the performance of width-2500 fully-connected networks with three hidden layers and tanh activations over training using the dynamical mean-field theory described in Section III. Networks are trained on a synthetic binary classification dataset of 10 examples, with 5 examples assigned each class at random. This leads to block structure in the final representations. Adapted from Bordelon and Pehlevan (2023b). c. The summary statistics in the dynamical mean field theory for the network in b are representational similarity kernels (; left) and gradient similarity kernels (; right) for each layer. The top row shows kernels estimated from gradient descent training, and the bottom row the theoretical predictions. All kernels are shown at the end of training (). Adapted from Bordelon and Pehlevan (2023b). d. Comparing representational similarity kernels across models and brains. Here, similarity is measured using the Pearson correlation , and the dissimilarity is plotted as a heatmap. Kernels resulting from fMRI measurements of human inferior temporal (IT) cortex (left) and electrophysiological measurements of macaque monkey IT cortex (right) are compared with the kernel for features from a deep convolutional neural network after optimal re-weighting to match human IT (center). Adapted from Figure 10 of Khaligh-Razavi and Kriegeskorte (2014) with permission from N. Kriegeskorte under a CC-BY License.

While this provides a description of the training dynamics of a model under gradient flow, it can be extended to other learning rules which use approximations of the backward pass variables , which we called pseudo-gradients in Bordelon and Pehlevan (2023a). Such rules include Hebbian learning, feedback alignment, and direct feedback alignment (Hebb, 2005; Lillicrap et al., 2016; Nøkland, 2016). In this case, the relevant summary statistics to characterize the prediction dynamics of the network include the gradient-pseudogradient correlation, which measures the alignment between the gradients used by the learning rule and the gradients that one would have used with gradient flow,

as governs the evolution of the function output:

A significant line of recent work in neuroscience aims to quantify neural representations and compare them across networks through analysis of representational similarity matrices (Kriegeskorte et al., 2008; Sucholutsky et al., 2024; Williams, 2024; Williams et al., 2021). Here, we see that these kernel matrices arise naturally as summary statistics of forward signal propagation in wide and deep neural networks (Figure 1). At the same time, those results show that tracking only feature kernels is not in general sufficient to predict performance over the course of learning. One needs access also to coarse-grained information about the plasticity rule in the form of gradient kernels (either or ), and to information about the network outputs (for instance ). More theoretical work is required to determine how to reliably estimate these gradient kernels from data.

IV. IMPLICATIONS FOR NEURAL MEASUREMENTS

The two example settings detailed in Section III show how the relevant summary statistics of learning depend on network architecture and learning rule. Theoretical studies are just beginning to map out the full space of possible summary statistics for different network architectures (Arnaboldi et al., 2023; Ben Arous et al., 2023; Bordelon et al., 2025; Bordelon and Pehlevan, 2023b; Cui et al., 2023; Engel and van den Broeck, 2001; Goldt et al., 2019; van Meegen and Sompolinsky, 2025; Saad and Solla, 1995; Saxe et al., 2013; Zavatone-Veth and Pehlevan, 2021; Zavatone-Veth et al., 2022b; Zdeborová and Krzakala, 2016). Though details of the relevant summary statistics vary depending on the scaling regime and task—as illustrated by the examples above, where network width, training dataset size, and learning rule change the relevant statistics and their effective dynamics—they share broad structural principles. In all cases, summary statistics are defined by (weighted) averages over sub-populations of neurons within the network of interest, e.g., correlations of activity with task-relevant variables, or autocorrelations of activity within a particular layer in a deep network. Thanks to these common structural features, these varied theories of summary statistics have common implications for the analysis and interpretation of neuroscience experiments.

A. Benign subsampling

The summary statistics encountered in Section III are robust to subsampling thanks to their basic nature as averages over the population of neurons. These statistical theories in fact post a far stronger notion of benign subsampling: they result in neurons that are statistically exchangeable. This is highly advantageous from the perspective of long-term recordings of neural activity, as reliable measurement of summary statistics does not require one to track the exact same neurons over time. Instead, it suffices to measure a sufficiently large subpopulation on any given day. This obviates many of the challenges presented by tracking neurons over multiple recording sessions (Masset et al., 2022). Moreover, the variability and bias introduced by estimating summary statistics from a limited subset of relevant neurons can be characterized systematically (Bordelon and Pehlevan, 2024; Kang et al., 2025; Zavatone-Veth et al., 2022a). Taken together, these properties mean that summary statistics are relatively easy to estimate given limited neural measurements, provided that exchangability is not too strongly violated (Gao et al., 2017). We will return to this question in the Discussion, as a detailed analysis of the effects of non-identical neurons will be an important topic for future theoretical work. There are limits, however, to how far one can subsample. For instance, representational similarity kernels are more affected by small, coordinated changes in the tuning of many neurons than large changes in single-neuron tuning (Figure 2) (Kriegeskorte and Wei, 2021). Determining the minimum number of neurons one must record in order to predict generalization dynamics across learning will be an important subject for future theoretical work (Gao et al., 2017; Kriegeskorte and Wei, 2021).

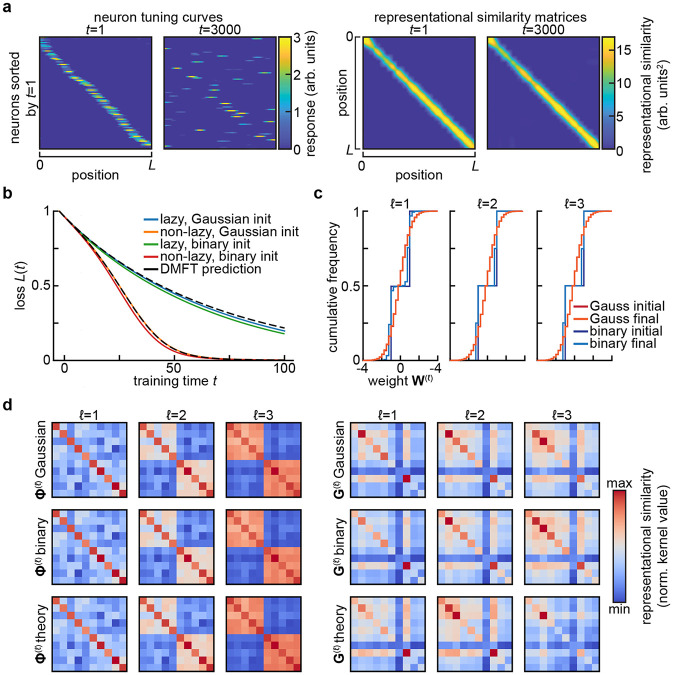

FIG. 2. Invariance and universality in summary statistics.

a. Stable summary statistics despite drifting single-neuron responses. In Qin et al. (2023)’s model of representational drift, single neurons are strongly tuned to a spatial variable, yet their tuning changes dramatically over time (left). Despite this drift, the similarity of the population representations of different spatial positions remains nearly constant (right). Adapted from Figure 5e of Qin et al. (2023), of which C.P. is the corresponding author. b. Universality of summary statistics in wide and deep networks with respect to the distribution of initial weights. Setting is as in Figure 1b-c, but also including a network for which the weights are initially drawn from {−1, +1} with equal probability. Here, , and a different realization of the random task is sampled relative to Figure 1b-c, so the loss curves are not identical. c. Cumulative distribution of weights at the start (initial) and end (final) of training for the networks shown in (b). Note that the small change in the weight distributions for the Gaussian-initialized networks is not visible at this resolution, and that one expects the size of weight changes to scale with (Bordelon and Pehlevan, 2023b). d. Feature and gradient kernels at the end of training for the networks in b. No substantial differences are visible between networks initialized with different weight distributions.

B. Invariances and representational drift

Though by our definition the summary statistics mentioned in Section III are sufficient to predict the network’s performance, they are not sufficient statistics for all properties of the neural code. In particular, in part because they arise from theories in which neurons become exchangable, they have many invariances. These invariances mean that individual tuning curves can change substantially without altering the population-level computation (Kriegeskorte and Wei, 2021). For instance, the representational similarity kernels are invariant under rotation of the neural code at each layer, enabling complete reorganization of the single-neuron code without any effect on behavior. Similarly, overlaps with task-relevant directions are invariant to changes in the null space of those low-dimensional projections. These invariances mean that focusing on summary statistics of learning sets a particular aperture on what aspects of representations one can assay.

At the same time, the invariances of summary statistics have important consequences for functional robustness. In particular, they are closely related to theories of representational drift, the seemingly puzzling phenomenon of continuing changes in neural representations of task-relevant variables despite stable behavioral performance (Masset et al., 2022; Rule et al., 2019). Many models of drift explicitly propose that representational changes are structured in such a way that certain summary statistics are preserved (Figure 2) (Masset et al., 2022; Pashakhanloo and Koulakov, 2023; Qin et al., 2023). Identifying the invariances of the summary statistics sufficient to determine task performance can allow for a more systematic characterization of what forms of drift can be accommodated by a given network. Conversely, identifying the invariances of a representation once task performance stabilizes might suggest which summary statistics are relevant for the learning problem at hand.

C. Universality

An important lesson from the theory of high-dimensional statistics is that of universality: certain coarse-grained statistics are asymptotically insensitive to the details of the distribution. The most prominent example of statistical universality is the familiar central limit theorem: the distribution of the sample mean of independent random variables tends to a Gaussian as the number of samples becomes large. A broader class of universality principles arise in random matrix theory: the distribution of eigenvalues and eigenvectors of a random matrix often become insensitive to details of the distribution of the elements as the matrix becomes large. Most famously, the Marčenko-Pastur theorem specifies that the singular values of a matrix with independent elements have a distribution that depends only on the mean and variance of the elements (Marchenko and Pastur, 1967). In the context of learning problems, universality manifests through insensitivity of the model performance to details of the distributions of parameters or of features (Hu and Lu, 2022; Misiakiewicz and Saeed, 2024).

From the perspective of summary statistics, statistical universality can allow simple theories to make informative macroscopic predictions even if they do not capture detailed properties of single neurons. For instance, the mean-field description of the learning dynamics of wide neural networks introduced in Section III are universal in that they depend on the initial distribution of hidden layer weights only through its mean and variance, even though the details of that distribution will affect the distribution of weights throughout training (Figure 2) (Golikov and Yang, 2022; Williams, 1996). Like the invariances to transformations of the neural population code mentioned before, this is nonetheless a double-edged sword: these universality properties mean that focusing on predicting performance commits one to coarse-graining away certain microscopic aspects of neural activity. Though these features are not required to predict macroscopic behavior, they may be important for understanding biological mechanisms.

V. DISCUSSION

The core insight of the statistical mechanics of learning in neural networks is the existence of low-dimensional summary statistics sufficient to predict behavioral performance. We have reviewed how different summary statistics emerge depending on network architecture and task, how summary statistics might be estimated from experimental recordings, and what this perspective reveals about existing approaches to quantifying representational changes over learning. We now conclude by discussing complementary summary statistics of neural representations that arise from alternative desiderata, and future directions for theoretical inquiry.

The summary statistics discussed here explicitly depend on the architecture and nature of plasticity in the neural network of interest, as they seek to predict its performance over learning. A distinct set of summary statistics arises if one aims to study what features of a representation are relevant for an independently-trained decoder. In this line of work, one regards the representation as fixed, rather than considering end-to-end training of the full network as we considered here. If the decoder is a simple linear regressor that predicts a continuous variable, the relevant summary statistics of the representation are just its mean and covariance across stimuli (Hu and Lu, 2022; Misiakiewicz and Saeed, 2024). Given a particular task, the covariance can be further distilled into the rate of decay of its eigenvalues and of the projections of the task direction into its eigenvectors (Atanasov et al., 2024; Bordelon et al., 2023; Bordelon and Pehlevan, 2022; Canatar et al., 2021, 2024; Harvey et al., 2024; Hastie et al., 2022; Williams, 2024). For categorically-structured stimuli, a substantial body of work has elucidated the summary statistics that emerge from assuming that one wants to divide the data according to a random dichotomy (Bernardi et al., 2020; Chung et al., 2018; Cohen et al., 2020; Engel and van den Broeck, 2001; Farrell et al., 2022; Harvey et al., 2024; Sorscher et al., 2022; Zavatone-Veth and Pehlevan, 2022).

The models reviewed here are composed of exchangable neurons, which simplifies the relevant summary statistics and renders them particularly robust to subsampling. However, the brain has rich structure that can affect which summary statistics are sufficient to track learning and how those summary statistics may be measured. Biological neural networks are embedded in space, and their connectivity and selectivity is shaped by spatial structure (Chklovskii et al., 2002; Khona et al., 2025; Stiso and Bassett, 2018). Notably, many sensory areas are topographically organized: neurons with similar response properties are spatially proximal (Kandler et al., 2009; Murthy, 2011). Moreover, neurons can be classified into genetically-identifiable cell types (Zhang et al., 2023), which may play distinct functional roles during learning (Fink et al., 2025; Hirokawa et al., 2019). Future theoretical work must contend with these biological complexities in order to determine the relevant summary statistics of learning subject to these constraints.

ACKNOWLEDGEMENTS

We are indebted to Nikolaus Kriegeskorte for sharing Figure 10 of Khaligh-Razavi and Kriegeskorte (2014), from which our Figure 1d is derived. We thank Paul Masset, Venkatesh Murthy, Farhad Pashakhanloo, and Ningjing Xia for helpful discussions and comments on previous versions of this manuscript.

J.A.Z.-V. is supported by the Office of the Director of the National Institutes of Health under Award Number DP5OD037354. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. JAZV is further supported by a Junior Fellowship from the Harvard Society of Fellows. B.B. is supported by a Google PhD Fellowship. C.P. is supported by NSF grant DMS-2134157, NSF CAREER Award IIS-2239780, DARPA grant DIAL-FP-038, a Sloan Research Fellowship, and The William F. Milton Fund from Harvard University. This work has been made possible in part by a gift from the Chan Zuckerberg Initiative Foundation to establish the Kempner Institute for the Study of Natural and Artificial Intelligence.

REFERENCES

- Arnaboldi Luca, Stephan Ludovic, Krzakala Florent, and Loureiro Bruno (2023), “From high-dimensional & mean-field dynamics to dimensionless ODEs: A unifying approach to SGD in two-layers networks,” in Proceedings of Thirty Sixth Conference on Learning Theory, Proceedings of Machine Learning Research, Vol. 195, edited by Neu Gergely and Rosasco Lorenzo (PMLR; ) pp. 1199–1227. [Google Scholar]

- Atanasov Alexander, Zavatone-Veth Jacob A, and Pehlevan Cengiz (2024), “Scaling and renormalization in high-dimensional regression,” arXiv preprint arXiv:2405.00592. [Google Scholar]

- Ben Arous Gérard, Gheissari Reza, Huang Jiaoyang, and Jagannath Aukosh (2023), “High-dimensional SGD aligns with emerging outlier eigenspaces,” arXiv arXiv:2310.03010 [cs.LG]. [Google Scholar]

- Ben Arous Gérard, Gheissari Reza, and Jagannath Aukosh (2022), “High-dimensional limit theorems for SGD: Effective dynamics and critical scaling,” in Advances in Neural Information Processing Systems, Vol. 35, edited by Koyejo S., Mohamed S., Agarwal A., Belgrave D., Cho K., and Oh A. (Curran Associates, Inc.) pp. 25349–25362. [Google Scholar]

- Bernardi Silvia, Benna Marcus K., Rigotti Mattia, Munuera Jérôme, Stefano Fusi, and Salzman C. Daniel (2020), “The geometry of abstraction in the hippocampus and prefrontal cortex,” Cell 183 (4), 954–967.e21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Biehl M, and Schwarze H (1995), “Learning by on-line gradient descent,” Journal of Physics A: Mathematical and General 28 (3), 643. [Google Scholar]

- Bordelon Blake, Cotler Jordan, Pehlevan Cengiz, and Zavatone-Veth Jacob A. (2025), “Dynamically learning to integrate in recurrent neural networks,” arXiv arXiv:2503.18754 [q-bio.NC]. [Google Scholar]

- Bordelon Blake, Masset Paul, Kuo Henry, and Pehlevan Cengiz (2023), “Loss dynamics of temporal difference reinforcement learning,” in Advances in Neural Information Processing Systems, Vol. 36, edited by Oh A., Naumann T., Globerson A., Saenko K., Hardt M., and Levine S. (Curran Associates, Inc.) pp. 14469–14496. [Google Scholar]

- Bordelon Blake, and Pehlevan Cengiz (2022), “Population codes enable learning from few examples by shaping inductive bias,” eLife 11, e78606. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bordelon Blake, and Pehlevan Cengiz (2023a), “The influence of learning rule on representation dynamics in wide neural networks,” in The Eleventh International Conference on Learning Representations. [Google Scholar]

- Bordelon Blake, and Pehlevan Cengiz (2023b), “Self-consistent dynamical field theory of kernel evolution in wide neural networks,” Journal of Statistical Mechanics: Theory and Experiment 2023 (11), 114009. [Google Scholar]

- Bordelon Blake, and Pehlevan Cengiz (2024), “Dynamics of finite width kernel and prediction fluctuations in mean field neural networks,” Journal of Statistical Mechanics: Theory and Experiment 2024 (10), 104021. [Google Scholar]

- Canatar Abdulkadir, Bordelon Blake, and Pehlevan Cengiz (2021), “Spectral bias and task-model alignment explain generalization in kernel regression and infinitely wide neural networks,” Nature communications 12 (1), 2914. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Canatar Abdulkadir, Feather Jenelle, Wakhloo Albert, and Chung SueYeon (2024), “A spectral theory of neural prediction and alignment,” Advances in Neural Information Processing Systems 36. [Google Scholar]

- Chklovskii Dmitri B, Schikorski Thomas, and Stevens Charles F. (2002), “Wiring optimization in cortical circuits,” Neuron 34 (3), 341–347. [DOI] [PubMed] [Google Scholar]

- Chung SueYeon, Lee Daniel D., and Sompolinsky Haim (2018), “Classification and geometry of general perceptual manifolds,” Phys. Rev. X 8, 031003. [Google Scholar]

- Cohen Uri, Chung SueYeon, Lee Daniel D., and Sompolinsky Haim (2020), “Separability and geometry of object manifolds in deep neural networks,” Nature Communications 11 (1), 746. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cui Hugo, Krzakala Florent, and Zdeborova Lenka (2023), “Bayes-optimal learning of deep random networks of extensive-width,” in Proceedings of the 40th International Conference on Machine Learning , Proceedings of Machine Learning Research, Vol. 202, edited by Krause Andreas, Brunskill Emma, Cho Kyunghyun, Engelhardt Barbara, Sabato Sivan, and Scarlett Jonathan (PMLR; ) pp. 6468–6521. [Google Scholar]

- Engel Andreas, and van den Broeck Christian (2001), Statistical Mechanics of Learning (Cambridge University Press; ). [Google Scholar]

- Farrell Matthew, Bordelon Blake, Trivedi Shubhendu, and Pehlevan Cengiz (2022), “Capacity of group-invariant linear readouts from equivariant representations: How many objects can be linearly classified under all possible views?” in International Conference on Learning Representations. [Google Scholar]

- Fink Andrew JP, Muscinelli Samuel P., Wang Shuqi, Hogan Marcus I., English Daniel F., Axel Richard, Litwin-Kumar Ashok, and Schoonover Carl E. (2025), “Experience-dependent reorganization of inhibitory neuron synaptic connectivity,” bioRxiv 10.1101/2025.01.16.633450, https://www.biorxiv.org/content/early/2025/01/16/2025.01.16.633450.full.pdf. [DOI] [Google Scholar]

- Gao Peiran, Trautmann Eric, Yu Byron, Santhanam Gopal, Ryu Stephen, Shenoy Krishna, and Ganguli Surya (2017), “A theory of multineuronal dimensionality, dynamics and measurement,” bioRxiv 10.1101/214262, https://www.biorxiv.org/content/early/2017/11/12/214262.full.pdf. [DOI] [Google Scholar]

- Goldt Sebastian, Advani Madhu, Saxe Andrew M, Krzakala Florent, and Zdeborová Lenka (2019), “Dynamics of stochastic gradient descent for two-layer neural networks in the teacher-student setup,” Advances in neural information processing systems 32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goldt Sebastian, Mézard Marc, Krzakala Florent, and Zdeborová Lenka (2020), “Modeling the influence of data structure on learning in neural networks: The hidden manifold model,” Physical Review X 10, 041044. [Google Scholar]

- Golikov Eugene, and Yang Greg (2022), “Non-Gaussian Tensor Programs,” in Advances in Neural Information Processing Systems, Vol. 35, edited by Koyejo S., Mohamed S., Agarwal A., Belgrave D., Cho K., and Oh A. (Curran Associates, Inc.) pp. 21521–21533. [Google Scholar]

- Hara Kazuyuki, Katahira Kentaro, Okanoya Kazuo, and Okada Masato (2011), “Statistical mechanics of on-line node-perturbation learning,” IPSJ Online Transactions 4, 23–32. [Google Scholar]

- Hara Kazuyuki, Katahira Kentaro, Okanoya Kazuo, and Okada Masato (2013), “Statistical mechanics of node-perturbation learning for nonlinear perceptron,” Journal of the Physical Society of Japan 82 (5), 054001. [Google Scholar]

- Harvey Sarah E, Lipshutz David, and Williams Alex H (2024), “What representational similarity measures imply about decodable information,” in UniReps: 2nd Edition of the Workshop on Unifying Representations in Neural Models. [Google Scholar]

- Hastie Trevor, Montanari Andrea, Rosset Saharon, and Tibshirani Ryan J (2022), “Surprises in high-dimensional ridgeless least squares interpolation,” The Annals of Statistics 50 (2), 949–986. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hebb Donald Olding (2005), The organization of behavior: A neuropsychological theory (Psychology press; ). [Google Scholar]

- Hirokawa Junya, Vaughan Alexander, Masset Paul, Ott Torben, and Kepecs Adam (2019), “Frontal cortex neuron types categorically encode single decision variables,” Nature 576 (7787), 446–451. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hu Hong, and Lu Yue M (2022), “Universality laws for high-dimensional learning with random features,” IEEE Transactions on Information Theory 69 (3), 1932–1964. [Google Scholar]

- Jacot Arthur, Gabriel Franck, and Hosngler Clément (2018), “Neural tangent kernel: Convergence and generalization in neural networks,” Advances in neural information processing systems 31. [Google Scholar]

- Kandler Karl, Clause Amanda, and Noh Jihyun (2009), “Tonotopic reorganization of developing auditory brainstem circuits,” Nature Neuroscience 12 (6), 711–717. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kang Hyunmo, Canatar Abdulkadir, and Chung SueYeon (2025), “Spectral analysis of representational similarity with limited neurons,” arXiv arXiv:2502.19648 [cond-mat.dis-nn]. [Google Scholar]

- Khaligh-Razavi Seyed-Mahdi, and Kriegeskorte Nikolaus (2014), “Deep supervised, but not unsupervised, models may explain it cortical representation,” PLOS Computational Biology 10, 1–29. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Khona Mikail, Chandra Sarthak, and Fiete Ila (2025), “Global modules robustly emerge from local interactions and smooth gradients,” Nature 10.1038/s41586-024-08541-3. [DOI] [PubMed] [Google Scholar]

- Krakauer John W, Ghazanfar Asif A., Gomez-Marin Alex, MacIver Malcolm A., and Poeppel David (2017), “Neuroscience needs behavior: Correcting a reductionist bias,” Neuron 93 (3), 480–490. [DOI] [PubMed] [Google Scholar]

- Kriegeskorte Nikolaus, Mur Marieke, and Bandettini Peter A. (2008), “Representational similarity analysis - connecting the branches of systems neuroscience,” Frontiers in Systems Neuroscience Volume 2 - 2008, 10.3389/neuro.06.004.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kriegeskorte Nikolaus, and Wei Xue-Xin (2021), “Neural tuning and representational geometry,” Nature Reviews Neuroscience 22 (11), 703–718. [DOI] [PubMed] [Google Scholar]

- Lillicrap Timothy P, Cownden Daniel, Tweed Douglas B, and Akerman Colin J (2016), “Random synaptic feedback weights support error backpropagation for deep learning,” Nature communications 7 (1), 13276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marchenko Vladimir Alexandrovich, and Pastur Leonid Andreevich (1967), “Distribution of eigenvalues for some sets of random matrices,” Matematicheskii Sbornik 114 (4), 507–536. [Google Scholar]

- Masset Paul, Qin Shanshan, and Zavatone-Veth Jacob A (2022), “Drifting neuronal representations: Bug or feature?” Biological Cybernetics , 1–14. [DOI] [PubMed] [Google Scholar]

- van Meegen Alexander, and Sompolinsky Haim (2025), “Coding schemes in neural networks learning classification tasks,” Nature Communications 16 (1), 3354. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mézard Marc, Parisi Giorgio, and Virasoro Miguel Angel (1987), Spin Glass Theory and Beyond: An Introduction to the Replica Method and Its Applications (World Scientific Publishing Company; ). [Google Scholar]

- Mignacco Francesca, and Mori Francesco (2025), “A statistical physics framework for optimal learning,” arXiv 10.48550/arXiv.2507.07907, arXiv:2507.07907 [cond-mat.dis-nn]. [DOI] [Google Scholar]

- Misiakiewicz Theodor, and Saeed Basil (2024), “A non-asymptotic theory of kernel ridge regression: deterministic equivalents, test error, and GCV estimator,” arXiv preprint arXiv:2403.08938. [Google Scholar]

- Montanari Andrea, and Urbani Pierfrancesco (2025), “Dynamical decoupling of generalization and overfitting in large two-layer networks,” arXiv preprint arXiv:2502.21269. [Google Scholar]

- Mori Francesco, Mannelli Stefano Sarao, and Mignacco Francesca (2025), “Optimal protocols for continual learning via statistical physics and control theory,” in The Thirteenth International Conference on Learning Representations. [Google Scholar]

- Murthy Venkatesh N (2011), “Olfactory maps in the brain,” Annual Review of Neuroscience 34 (1), 233–258. [DOI] [PubMed] [Google Scholar]

- Nøkland Arild (2016), “Direct feedback alignment provides learning in deep neural networks,” Advances in neural information processing systems 29. [Google Scholar]

- Pashakhanloo Farhad, and Koulakov Alexei (2023), “Stochastic gradient descent-induced drift of representation in a two-layer neural network,” in Proceedings of the 40th International Conference on Machine Learning, Proceedings of Machine Learning Research, Vol. 202, edited by Krause Andreas, Brunskill Emma, Cho Kyunghyun, Engelhardt Barbara, Sabato Sivan, and Scarlett Jonathan (PMLR; ) pp. 27401–27419. [Google Scholar]

- Qin Shanshan, Farashahi Shiva, Lipshutz David, Sengupta Anirvan M., Chklovskii Dmitri B., and Pehlevan Cengiz (2023), “Coordinated drift of receptive fields in Hebbian/anti-Hebbian network models during noisy representation learning,” Nature Neuroscience 26 (2), 339–349. [DOI] [PubMed] [Google Scholar]

- Rule Michael E, O’Leary Timothy, and Harvey Christopher D (2019), “Causes and consequences of representational drift,” Current Opinion in Neurobiology 58, 141–147, computational Neuroscience. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Saad David, and Solla Sara A (1995), “On-line learning in soft committee machines,” Physical Review E 52 (4), 4225. [DOI] [PubMed] [Google Scholar]

- Saxe Andrew M, McClelland James L, and Ganguli Surya (2013), “Exact solutions to the nonlinear dynamics of learning in deep linear neural networks,” arXiv preprint arXiv:1312.6120 arXiv:1312.6120. [Google Scholar]

- Sorscher Ben, Ganguli Surya, and Sompolinsky Haim (2022), “Neural representational geometry underlies few-shot concept learning,” Proceedings of the National Academy of Sciences 119 (43), e2200800119, https://www.pnas.org/doi/pdf/10.1073/pnas.2200800119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Steinmetz Nicholas A, Aydin Cagatay, Lebedeva Anna, Okun Michael, Pachitariu Marius, Bauza Marius, Beau Maxime, Bhagat Jai, Böhm Claudia, Broux Martijn, Chen Susu, Colonell Jennifer, Gardner Richard J., Karsh Bill, Kloosterman Fabian, Kostadinov Dimitar, Mora-Lopez Carolina, O’Callaghan John, Park Junchol, Putzeys Jan, Sauerbrei Britton, van Daal Rik J. J., Vollan Abraham Z., Wang Shiwei, Welkenhuysen Marleen, Ye Zhiwen, Dudman Joshua T., Dutta Barundeb, Hantman Adam W., Harris Kenneth D., Lee Albert K., Moser Edvard I., O’Keefe John, Renart Alfonso, Svoboda Karel, Häusser Michael, Haesler Sebastian, Carandini Matteo, and Harris Timothy D. (2021), “Neuropixels 2.0: A miniaturized high-density probe for stable, long-term brain recordings,” Science 372 (6539), eabf4588. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stiso Jennifer, and Bassett Dani S. (2018), “Spatial embedding imposes constraints on neuronal network architectures,” Trends in Cognitive Sciences 22 (12), 1127–1142. [DOI] [PubMed] [Google Scholar]

- Sucholutsky Ilia, Muttenthaler Lukas, Weller Adrian, Peng Andi, Bobu Andreea, Kim Been, Love Bradley C., Cueva Christopher J., Grant Erin, Groen Iris, Achterberg Jascha, Tenenbaum Joshua B., Collins Katherine M., Hermann Katherine L., Oktar Kerem, Greff Klaus, Hebart Martin N., Cloos Nathan, Kriegeskorte Nikolaus, Jacoby Nori, Zhang Qiuyi, Marjieh Raja, Geirhos Robert, Chen Sherol, Kornblith Simon, Rane Sunayana, Konkle Talia, O’Connell Thomas P., Unterthiner Thomas, Lampinen Andrew K., Müller Klaus-Robert, Toneva Mariya, and Griffiths Thomas L. (2024), “Getting aligned on representational alignment,” arXiv arXiv:2310.13018 [q-bio.NC]. [Google Scholar]

- Sun Weinan, Winnubst Johan, Natrajan Maanasa, Lai Chongxi, Kajikawa Koichiro, Bast Arco, Michaelos Michalis, Gattoni Rachel, Stringer Carsen, Flickinger Daniel, Fitzgerald James E., and Spruston Nelson (2025), “Learning produces an orthogonalized state machine in the hippocampus,” Nature 640 (8057), 165–175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vaidya Sachin P, Guanchun Li, Chitwood Raymond A., Li Yiding, and Magee Jeffrey C. (2025), “Formation of an expanding memory representation in the hippocampus,” Nature Neuroscience 28 (7), 1510–1518. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Watkin Timothy L H, Albrecht Rau, and Michael Biehl (1993), “The statistical mechanics of learning a rule,” Rev. Mod. Phys. 65, 499–556. [Google Scholar]

- Williams Alex H (2024), “Equivalence between representational similarity analysis, centered kernel alignment, and canonical correlations analysis,” bioRxiv 10.1101/2024.10.23.619871, https://www.biorxiv.org/content/early/2024/10/24/2024.10.23.619871.full.pdf. [DOI] [Google Scholar]

- Williams Alex H, Erin Kunz, Simon Kornblith, and Linderman Scott (2021), “Generalized shape metrics on neural representations,” in Advances in Neural Information Processing Systems, Vol. 34, edited by Ranzato M., Beygelzimer A., Dauphin Y., Liang P.S., and Vaughan J. Wortman (Curran Associates, Inc.) pp. 4738–4750. [PMC free article] [PubMed] [Google Scholar]

- Williams Christopher (1996), “Computing with infinite networks,” in Advances in Neural Information Processing Systems, Vol. 9, edited by Mozer M.C., Jordan M., and Petsche T. (MIT Press; ). [Google Scholar]

- Yang Greg, and Hu Edward J (2021), “Tensor Programs IV: Feature learning in infinite-width neural networks,” in International Conference on Machine Learning (PMLR; ) pp. 11727–11737. [Google Scholar]

- Zavatone-Veth Jacob A, Canatar Abdulkadir, Ruben Benjamin S, and Pehlevan Cengiz (2022a), “Asymptotics of representation learning in finite Bayesian neural networks,” Journal of Statistical Mechanics: Theory and Experiment 2022 (11), 114008. [Google Scholar]

- Zavatone-Veth Jacob A, and Pehlevan Cengiz (2021), “Depth induces scale-averaging in overparameterized linear Bayesian neural networks,” in Asilomar Conference on Signals, Systems, and Computers, Vol. 55, arXiv:2111.11954. [Google Scholar]

- Zavatone-Veth Jacob A, and Pehlevan Cengiz (2022), “On neural network kernels and the storage capacity problem,” Neural Computation 34 (5), 1136–1142, arXiv:2201.04669. [DOI] [PubMed] [Google Scholar]

- Zavatone-Veth Jacob A, Tong William L, and Pehlevan Cengiz (2022b), “Contrasting random and learned features in deep Bayesian linear regression,” Physical Review E 105 (6), 064118. [DOI] [PubMed] [Google Scholar]

- Zdeborová Lenka, and Krzakala Florent (2016), “Statistical physics of inference: thresholds and algorithms,” Advances in Physics 65 (5), 453–552. [Google Scholar]

- Zhang Meng, Pan Xingjie, Jung Won, Halpern Aaron R., Eichhorn Stephen W., Lei Zhiyun, Cohen Limor, Smith Kimberly A., Tasic Bosiljka, Yao Zizhen, Zeng Hongkui, and Zhuang Xiaowei (2023), “Molecularly defined and spatially resolved cell atlas of the whole mouse brain,” Nature 624 (7991), 343–354. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhong Lin, Baptista Scott, Gattoni Rachel, Arnold Jon, Flickinger Daniel, Stringer Carsen, and Pachitariu Marius (2025), “Unsupervised pretraining in biological neural networks,” Nature 10.1038/s41586-025-09180-y. [DOI] [PubMed] [Google Scholar]