Abstract

Objective: To describe medical students' attitudes toward placing orders during training, and the effect of computerized provider order entry (CPOE) on their learning experiences.

Design: Prospective, controlled study of all 143 Johns Hopkins University School of Medicine students who began the Basic Medicine clerkship between March 2003 and April 2004 at one of three teaching hospitals: one using CPOE, one using paper orders, and one that began using CPOE midway through the study.

Measurements: Surveys of students at the start of the clerkship and after its first month.

Results: Ninety-six percent of students responded. Students wanted to place all of the orders for their patients (median desired proportion, 100%). Ninety-five percent of students believed that placing orders helps students learn what tests and treatments patients need. Eighty-four percent reported that being unavailable because of conferences and teaching sessions was a significant barrier to participating in the ordering process. Students at hospitals using CPOE reported placing significantly fewer of their patients' follow-up orders than students at hospitals using paper orders (25% vs. 50%, p < 0.01) and were more likely to report that their resident or intern did not want them to enter orders (40% vs. 16%, p < 0.01). Comparisons of students at the two hospitals using CPOE showed that these differences were attributable to one of the hospitals. Thirty-two percent of students at the hospitals using CPOE reported that the extra time required for housestaff to review their orders in the computer was a significant barrier.

Conclusion: Hospitals need to ensure that the educational potential of medical students' clinical experiences is maximized when implementing CPOE.

Medical students' clinical training bridges the transition from classroom learning to the beginning of medical practice. During their clinical clerkships, medical students must learn how to construct disease management strategies, coordinate care, and communicate effectively with other health care providers.1 Their learning is enhanced when it is experiential and patient based.2,3,4 Students spend a significant amount of time during clinical clerkships observing housestaff and participating with them in indirect patient care activities,5,6,7 such as formulating and writing orders for hospitalized patients.

Physicians use orders to communicate their diagnostic and therapeutic plans to the nurses, pharmacists, and other health care providers who will implement them. Medical errors due to inappropriate, inaccurate, and incorrectly interpreted orders are a significant cause of hospitalized patient morbidity and mortality,8,9,10,11 and improving patient safety by reducing medical errors is a high national priority.12 Computerized provider order entry (CPOE) reduces transcription errors and uses decision support to promote ordering that is complete, appropriate, and safe.13,14,15,16 Fewer than 10% of U.S. hospitals reported using CPOE as of 2002; however, many more are planning CPOE implementation.17

Technology has been successfully used to support medical student education, particularly in the preclinical years,18 and some observers have proposed that CPOE and decision support can be powerful educational tools for medical students.19 Medical students are computer literate,20 are comfortable using computer-based resources to further their own education,21,22 and seem to have favorable opinions about CPOE.23 Others have expressed concern that CPOE may negatively affect students' learning experiences, because (i) housestaff may have less time to teach if it takes them longer to enter orders using CPOE,24 (ii) it may take longer to review medical student orders on the computer than in the chart,24,25 (iii) attendings may not be adept at computerized ordering and therefore unable to guide students on ordering issues,24 and (iv) predetermined order sets may undermine the educational process by reducing the need to think through each order as it is placed.25 The educational value of being exposed to the ordering process during training is unclear, and there have been few controlled trials of the impact of CPOE on medical student education.26,27 We therefore conducted a study to describe medical students' attitudes toward having opportunities to place orders during training and the effect of CPOE on their learning experiences during the Basic Medicine clerkship.

Methods

Study Population

Subjects included all 143 Johns Hopkins University School of Medicine students who began the two-month (nine-week) Basic Medicine clerkship between March 2003 and April 2004. Six cohorts of approximately 24 students each were included. Most students enrolled in the clerkship are in their third year of medical school, but a few are at the end of their second year or in their fourth year of training. Before the Basic Medicine clerkship, students in the study had been exposed either to paper-based ordering only or, in the case of second-year students, to no ordering at all.

Program Description and Study Settings

Students spend the first month of the Johns Hopkins Basic Medicine clerkship at one of three full-service hospitals.

Hospital A is a tertiary-care university teaching hospital in downtown Baltimore with 900 acute care beds. Hospital A used a mainframe CPOE system adapted from a product by Shared Medical Systems Corporation during the study period. The system had been implemented in 1996,28 and was used exclusively on the medical units. Users selected individual orders or one of 25 disease-specific order sets from on-screen menus using a light pen or mouse. Medical students were able to enter most types of orders, which then required electronic cosignature by a physician before they could be executed. Students were trained in 90-minute classroom sessions on the first day of the clerkship.

Hospital B is a university teaching hospital serving southeastern Baltimore with 350 acute care beds. Hospital B switched from paper-based ordering to a client-server CPOE system by Meditech on the medical units in November 2003, between the third and fourth cohorts of this study. CPOE was not implemented on other teaching units until after this study was completed. Individual items or one of more than 300 order sets are selected using a combination of keyboard and mouse. Medical students are able to enter most types of orders, which then queue to a physician for cosignature prior to implementation. Students are trained in a one-hour classroom session on the first day of the clerkship.

Hospital C is a community teaching hospital in west Baltimore with 400 acute care beds. Hospital C uses paper ordering on all of its units. Students can write orders in the chart, which then require cosignature by a physician prior to implementation by staff.

Half of the students spend the first month of the clerkship at Hospital A; the other half spend the first month at either Hospital B or Hospital C. Students who begin the clerkship at Hospital B or C spend the second half of the clerkship at Hospital A and vice versa. Students are given an opportunity to indicate where they would prefer to spend the first month of the clerkship. During the study period, 72 students spent the first month of the clerkship at Hospital A, 23 at Hospital B while it still used paper orders (Hospital B/Paper), 24 at Hospital B once it switched to CPOE (Hospital B/CPOE), and 24 at Hospital C.

Each student is paired with a housestaff team consisting of one attending, one resident, and two interns. Students are assigned one or two new patients to follow each time their team is on call, generally every two to four days. There is no formal curriculum in order writing for Johns Hopkins University students; however, students are explicitly encouraged at the clerkship orientation to seek out opportunities to place orders for their patients, and their supervising residents and attendings are instructed to provide such opportunities. All three hospitals have their own medicine residency programs, and housestaff have teaching roles only at their own hospital. All teaching attendings at each of the three hospitals are members of the Johns Hopkins University School of Medicine faculty.

Survey Instrument and Data Collection

Students were surveyed at the clerkship orientation (pre-clerkship survey) and again at a session held at the end of the first month of the rotation (mid-clerkship survey). Students were read a verbal consent script and instructed to return the survey without completing it if they did not wish to participate. The pre-clerkship survey consisted of 27 items and included short answer and Likert-type questions. Question domains included demographics, self-assessed patient-caregiving skills, and preferences and attitudes about having ordering opportunities. The mid-clerkship survey consisted of 37 short answer and Likert-type questions and a single qualitative question. Domains included quantification of experience with placing and reviewing orders, self-assessed patient-caregiving skills, attitudes toward having ordering opportunities, barriers to placing orders, quality of training received, and qualitative description of the impact of the ordering method on educational experience. The mid-clerkship survey was piloted with a group of Basic Medicine clerkship students prior to study initiation and changes were made based on their feedback.

Surveys were electronically scanned and results tabulated using TELEform version 8.2 (Cardiff Software, Inc., Bozeman, MT). Approval for the research was obtained from the Johns Hopkins Institutional Review Board and from the Associate Dean for Student Affairs for the School of Medicine.

Analysis

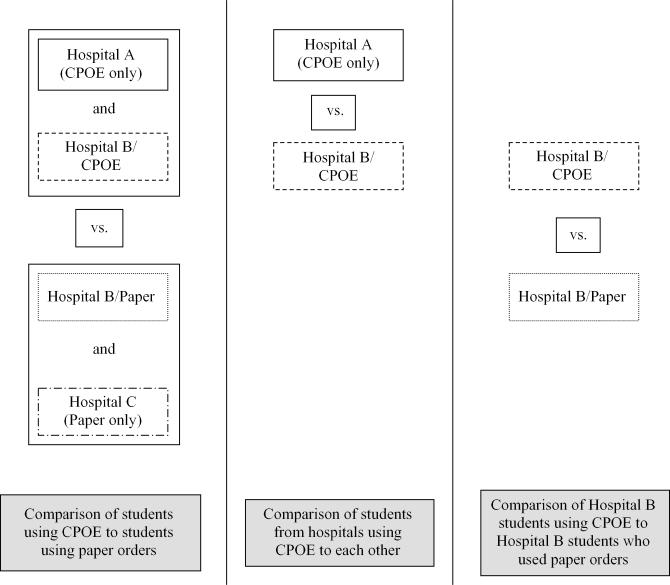

Pre-clerkship and mid-clerkship survey responses from students who trained at hospitals using CPOE (Hospital A and Hospital B/CPOE) were compared to those from students who trained at hospitals using paper orders (Hospital B/Paper and Hospital C) (Figure 1). Two subgroup analyses were performed. The first compared responses from students who trained at the two hospitals using CPOE (Hospital A and Hospital B/CPOE) to each other, to determine whether variations in their experiences could be attributed to a particular hospital or its CPOE system. The second compared responses from students at Hospital B before and after CPOE implementation, to determine whether experiences at a single hospital depended on the ordering method (Figure 1). Two-sided t-tests were used to compare continuous variables, and chi-square tests were used to compare categorical variables other than Likert-type responses. Because responses to Likert-type questions were skewed, medians rather than means are reported, and the Mann-Whitney U-test was used to compare these responses. SPSS version 10.1 was used for all analyses (SPSS Inc., Chicago, IL).
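For readers unfamiliar with the Mann-Whitney U-test used for the Likert-type items, the Python sketch below illustrates its large-sample form: responses from both groups are ranked together (tied values share the mean of their ranks), the U statistic is computed from one group's rank sum, and a tie-corrected normal approximation yields a two-sided p value. This is an illustration only, not the SPSS procedure used in the study; the function names and the example responses are hypothetical, no continuity correction is applied, and results may differ slightly from packages that use continuity corrections or exact methods.

```python
import math


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test with a tie-corrected normal approximation.

    Returns (u_x, u_y, z, p). No continuity correction is applied.
    Both groups are assumed to be non-empty.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    rank_sum_x = sum(ranks[:n1])
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    u_y = n1 * n2 - u_x

    # Tie correction: sum of (t^3 - t) over each set of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:  # every response identical: no evidence of a difference
        return u_x, u_y, 0.0, 1.0

    z = (u_x - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u_x, u_y, z, p


# Hypothetical five-point Likert responses (1 = strongly disagree ... 5 = strongly agree).
group_a = [4, 3, 3, 4, 2, 3, 4, 3]
group_b = [5, 4, 5, 4, 4, 5, 3, 5]
u_a, u_b, z, p = mann_whitney_u(group_a, group_b)
print(f"U = {min(u_a, u_b):.1f}, z = {z:.2f}, two-sided p = {p:.3f}")
```

Ranking rather than averaging the raw scores is what makes the test suitable for skewed, ordinal Likert data: it compares the relative position of responses in the two groups without assuming the scale points are equally spaced.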

Figure 1.

Main and subgroup analyses.

An “editing analysis style”29 was used to qualitatively evaluate responses from students at hospitals using CPOE to the question about the impact of the ordering method. Responses were read, interpreted, and coded, and preliminary categories were identified and iteratively revised. Decisions about coding and naming of categories were reached by consensus.

Results

Pre-clerkship surveys were received from 137 students (96% response rate). Eighty-four percent of students requested that they start the clerkship at a particular hospital (52% at Hospital A, 28% at Hospital B, and 20% at Hospital C), and 84% of these students received their first choice. Only two students indicated that the method for placing orders at the requested hospital had influenced their request (both requested hospitals using paper orders at the time of their clerkship). Ninety-two of 96 mid-clerkship surveys (96%) were received from students who had been at hospitals using CPOE (69 from Hospital A, 23 from Hospital B/CPOE), and 44 of 47 mid-clerkship surveys (94%) were received from students who had been at hospitals using paper orders (22 each from Hospital B/Paper and Hospital C). Six students who did not complete the pre-clerkship survey provided their demographic information when completing the mid-clerkship survey.

Pre-clerkship Characteristics

Students were predominantly male, white, and in their third year of medical school (Table 1). Students at hospitals using CPOE were slightly older than those at hospitals using paper orders (mean 25.4 vs. 24.7 years, p = 0.04). There were no other significant demographic differences at the time of the pre-clerkship survey.

Table 1.

Student Demographics (N = 143*)

| | N | % |
|---|---|---|
| Gender | | |
| Male | 85 | 59.4 |
| Female | 58 | 40.6 |
| Race | | |
| Non-Hispanic white | 80 | 56.7 |
| Asian | 39 | 27.7 |
| African American | 11 | 7.8 |
| Other | 11 | 7.8 |
| School year | | |
| 2nd | 16 | 11.3 |
| 3rd | 124 | 87.3 |
| 4th | 2 | 1.4 |
| Age (y) | | |
| 21–23 | 19 | 13.4 |
| 24–26 | 96 | 67.6 |
| 27–37 | 27 | 19.0 |

*Because of item nonresponse, the numbers in some categories do not add up to the sample size.

Pre-clerkship preferences, attitudes, and self-assessed patient care abilities are shown in Table 2. At the time of the pre-clerkship survey, no significant differences in these items were found between students who subsequently spent the first month of the clerkship at hospitals using CPOE and those who trained using paper orders.

Table 2.

Pre-clerkship Preferences, Attitudes, and Ability (N = 137)

| | Median | (IQR) | %* |
|---|---|---|---|
| Preferences about order placement and review | | | |
| For what percent of newly admitted patients that you pick up would you like to write or enter a complete set of admission orders? (%) | 100 | (80–100) | — |
| What percentage of your patients' total number of follow-up orders would you like to write or enter? (%) | 100 | (75–100) | — |
| What percentage of your patients' total number of admission and follow-up orders would you like to have reviewed with you: | | | |
| By your intern or resident? (%) | 100 | (100–100) | — |
| By your attending? (%) | 50 | (25–100) | — |
| Attitudes toward placing orders† | | | |
| Placing orders is an important way to increase my sense that I am a caregiver for my patients | 5 | (4–5) | 94.1 |
| Placing orders is an important way to learn what tests and treatments are needed by patients with certain problems | 5 | (4–5) | 95.6 |
| Entering orders by computer promotes “cookbook medicine” and discourages thinking | 2 | (2–3) | 11.8 |
| Writing orders by hand is cumbersome and encourages medical errors | 4 | (3–4) | 54.4 |
| It makes no difference in my learning whether I enter orders by computer or write them on paper | 3 | (3–4) | 46.7 |
| Medical students should be given as many opportunities as possible to place orders for their patients | 5 | (4–5) | 91.3 |
| The ordering method used will have an impact on my selection of the location of my future rotations | 2 | (2–3) | 8.9 |
| The ordering method used will have an impact on my selection of the location of my residency | 3 | (2–3) | 14.2 |
| Patient care ability‡ | | | |
| Overall ability to care for hospitalized medical patients | 2 | (2–3) | 33.1 |
| Ability to write or enter orders for patients | 2 | (1–3) | 25.9 |

IQR = interquartile range of responses.

*Percentage who agree or strongly agree with the statements about attitudes toward placing orders, and percentage who rated their patient-caregiving ability good, very good, or excellent.

†Students rated the strength of their agreement with the statements using a five-point Likert scale: 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree.

‡Students rated their patient-caregiving ability using a five-point Likert scale: 1 = poor, 2 = fair, 3 = good, 4 = very good, 5 = excellent.
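The three summary columns in Table 2 (median, interquartile range, and percentage at or above a response threshold) can be computed from raw Likert responses roughly as shown below. This is a hedged Python sketch, not the study's SPSS analysis: the function name and sample responses are hypothetical, skipped items are assumed to be recorded as None and excluded, and the quartiles use the default ("exclusive") method of Python's statistics.quantiles, which may not match the percentile definition SPSS uses.

```python
import statistics


def summarize_likert(responses, agree_threshold=4):
    """Return (median, (q1, q3), percent at or above agree_threshold).

    None entries (item nonresponse) are dropped before summarizing;
    at least two answered responses are assumed.
    """
    answered = [r for r in responses if r is not None]
    median = statistics.median(answered)
    q1, _, q3 = statistics.quantiles(answered, n=4)  # default method="exclusive"
    percent_agree = 100 * sum(r >= agree_threshold for r in answered) / len(answered)
    return median, (q1, q3), round(percent_agree, 1)


# Hypothetical responses to one attitude item (5 = strongly agree); None = skipped.
item = [5, 4, 5, 3, None, 5, 4, 2, 5, 4]
print(summarize_likert(item))
```

A threshold of 4 counts "agree" and "strongly agree," matching the attitude items; for the patient-care ability items, where "good" is coded 3, the threshold would be 3.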

Comparison of Students Using CPOE to Students Using Paper Orders

When students at hospitals using CPOE were compared to those at hospitals using paper orders at the clerkship midpoint, there were no significant differences in self-assessed patient-caregiving abilities or attitudes toward ordering opportunities.

Students at the hospitals using CPOE reported placing significantly fewer of their patients' follow-up orders than students at hospitals using paper orders (mean 32.1% vs. 47.5%, p < 0.01). There was no significant difference in the reported number of sets of admission orders written or entered during the month (mean 2.2 vs. 2.6, p = 0.38). Students in the two groups reported similar percentages of their patients' orders reviewed with them by their intern or resident (mean 62.2% vs. 71.4%, p = 0.15) and by their attending (mean 9.6% vs. 15.6%, p = 0.26).

Students at the hospitals using CPOE were significantly less likely to report (i) feeling like part of the medical team, (ii) being included in discussions about their patients, (iii) having interns and residents who thought it was important for them to place orders, or (iv) being adequately prepared for being an intern (Table 3). Most students in both the CPOE and paper groups reported that a substantial barrier to placing orders was that interns and residents entered orders while students were unavailable because of conferences and teaching sessions. Significantly more students at hospitals using CPOE reported that their resident or intern did not want them to write or enter orders and that it took too long for the resident or intern to review the students' orders (Table 4).

Table 3.

Training Experience*

| Statement | CPOE (n = 92) median | CPOE %‡ | Paper (n = 44) median | Paper %‡ | p, CPOE vs. paper | Hospital A (n = 69) median | Hospital A %‡ | Hospital B/CPOE (n = 23) median | Hospital B/CPOE %‡ | p, Hospital A vs. Hospital B/CPOE |
|---|---|---|---|---|---|---|---|---|---|---|
| I felt like part of the medical team in the care of my patients. | 4 | 80.3 | 4 | 95.3 | 0.01 | 4 | 75.0 | 5 | 95.7 | <0.01 |
| I was included in discussions about the management of my patients. | 4 | 79.4 | 4.5 | 95.5 | 0.03 | 4 | 72.4 | 5 | 100.0 | <0.01 |
| My intern and resident thought it was important for me to have opportunities to place orders on my patients. | 3 | 41.3 | 4 | 62.8 | 0.01 | 3 | 16.7 | 4 | 65.2 | <0.01 |
| My attending thought it was important for me to have opportunities to place orders on my patients. | 3 | 25.0 | 3 | 33.3 | 0.22 | 3 | 33.3 | 3.5 | 50.0 | <0.01 |
| I am receiving adequate training in how to write/enter orders. | 3 | 35.9 | 3 | 38.1 | 0.35 | 3 | 26.0 | 4 | 65.8 | <0.01 |
| I am receiving adequate preparation for being an intern. | 3 | 45.7 | 4 | 63.7 | 0.01 | 3 | 40.6 | 4 | 60.9 | 0.04 |

*Five-point Likert scale: 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree.

‡Percentage reporting that they agree or strongly agree with the statement.

Table 4.

Barriers to Placing Orders*

| Barrier | CPOE (n = 92) median | CPOE %‡ | Paper (n = 44) median | Paper %‡ | p, CPOE vs. paper | Hospital A (n = 69) median | Hospital A %‡ | Hospital B/CPOE (n = 23) median | Hospital B/CPOE %‡ | p, Hospital A vs. Hospital B/CPOE |
|---|---|---|---|---|---|---|---|---|---|---|
| Resident or intern did not want me to write or enter orders. | 2 | 40.0 | 1 | 16.3 | <0.01 | 2 | 47.8 | 1 | 17.3 | <0.01 |
| It took too long for the resident or intern to review orders I wrote. | 2 | 32.6 | 1 | 11.9 | <0.01 | 2 | 33.3 | 2 | 30.4 | 0.87 |
| Resident or intern entered orders while I was unavailable. | 4 | 88.9 | 3 | 74.5 | 0.11 | 3 | 85.1 | 4 | 100.0 | 0.07 |
| Difficulty finding a free computer terminal. | 1 | 6.6 | — | — | — | 1 | 8.8 | 1 | 0.0 | 0.22 |
| Resident or intern did not know how to electronically cosign my orders. | 1 | 18.9 | — | — | — | 1 | 11.9 | 2 | 39.1 | <0.01 |
| Inadequate training on the computer ordering system. | 2 | 21.4 | — | — | — | 2 | 22.8 | 1 | 17.3 | 0.49 |
| Computer ordering system difficult to use. | 2 | 35.5 | — | — | — | 2 | 40.3 | 2 | 21.7 | 0.04 |

*Four-point Likert scale: 1 = none, 2 = a little, 3 = a moderate amount, 4 = a lot.

‡Percentage reporting that the barrier affected their ability to write or enter orders “a moderate amount” or “a lot.”

Comparison of Students From Hospitals Using CPOE to Each Other

Students who had been at Hospital A were less confident about their ability to write or enter orders at the clerkship midpoint than those who trained at Hospital B/CPOE (median 3 [interquartile range, IQR, 2–3] vs. 3 [IQR 2–4], p = 0.02). There were no other significant differences in their self-assessed patient-caregiving abilities, and no significant differences in their attitudes toward ordering at the clerkship midpoint.

Students at Hospital A reported entering fewer of their patients' follow-up orders than students at Hospital B/CPOE (mean 25.9% vs. 50.8%, p < 0.01). There were no differences in the number of sets of admission orders entered or in the percentage of orders reviewed with students by their intern and resident or by their attending.

Students at Hospital A rated all studied aspects of the training experience lower than students at Hospital B/CPOE (Table 3). Students at Hospital A were significantly more likely than students at Hospital B/CPOE to report that their resident or intern did not want them to write or enter orders and that the computer ordering system was difficult to use, but less likely to report that the intern or resident did not know how to cosign their orders. The two groups rated other barriers to placing orders similarly (Table 4).

Comparison of Hospital B Students Using CPOE to Hospital B Students Using Paper Orders

Students who spent the first month of the clerkship at Hospital B/CPOE were more likely than those who spent it at Hospital B/Paper to indicate that placing orders using paper was cumbersome (median 4 vs. 3, p = 0.03). Students at Hospital B/CPOE were also more likely to report that the length of time required for interns and residents to review their orders was a significant barrier (median 2 vs. 1, p = 0.03) and that the intern or resident entered orders while they were unavailable (median 4 vs. 3, p = 0.04). There were no other significant differences between the two groups.

Qualitative Data

Forty-one percent of students at hospitals using CPOE reported that the ordering method had affected their educational experience, and each then elaborated on how it had done so (38 students total). A similar percentage of students at Hospital A and Hospital B/CPOE recorded comments. All responses fell into one of four domains.

Twenty students (17 at Hospital A and three at Hospital B/CPOE) indicated that they had felt left out of the ordering process; for example: “Computer ordering made it easier for my interns to enter orders themselves and more difficult for them to allow me to do it and cosign my orders” and “The comfort level the interns had with entering computer orders actually discouraged student entries because they could get them done quicker and did not have to leave the office to do it.”

System limitations other than those related to cosignature issues were noted by six students. A representative comment was “[The system] was a bit cumbersome to work with, even with training prior to use. My unfamiliarity with the system definitely hindered my ability to enter or add orders in a timely and efficient manner, though I did try.”

Four students felt that the system hindered their learning in some way other than that related to cosignature or other system limitations: “We used order sets for admissions so we did not need to think through each of the aspects of an admission order.”

Ten students reported that the ordering system supported the learning and caregiving experience; for example, “Placing orders by computer simplified the ordering experience, increasing my familiarity with different procedures, and really helped to make me feel like I was part of the team caring for the patient.”

Discussion

Computerized provider order entry is a cornerstone of initiatives recommended to improve the safety of health care.30 Despite the significant changes in hospital process that accompany CPOE implementation,31,32,33 few studies have addressed the effect of CPOE or participation in the ordering process on medical student education.23,25,26,27 Students at the hospitals using CPOE in this study wrote fewer follow-up orders and rated several aspects of their training experience lower than students at hospitals using paper orders, and their experience was more affected by various barriers to placing orders. Other than the longer time to review orders, these differences appear to be explained solely by the experience of students at Hospital A. It remains unclear whether the variation in the experiences of students at Hospital A and Hospital B/CPOE can be attributed primarily to the differences in the CPOE systems they used or whether some other factor is the cause, such as a difference in patient types, the process of care, or approaches to learning.

This study affirms an earlier concern24,25 that medical student education may suffer because of the longer time required for housestaff to enter and review orders in CPOE systems.34,35,36,37 Most students in this study appreciated CPOE's role in reducing ordering errors and were not concerned about its impact on their learning. The positive experience of students at Hospital B/CPOE suggests that institutions may be able to control the effect of CPOE on education. Prior controlled studies of CPOE's effect on medical student education have been small, and their results have conflicted. One study showed that students using CPOE during their emergency medicine clerkship were more likely than students using paper orders to improve their ability to write orders for a simulated patient.27 However, in the other published study, exposure to a CPOE system had no effect on students' performance on an examination at the end of a surgery clerkship.26 Many studies have shown that CPOE and decision support improve physicians' ordering practices,38,39,40 but their effect on medical students' learning and on their ability to provide patient care should be explored further.

Medical students in our study highly valued having opportunities to place and review orders during their clinical clerkships, but regardless of training site, they reported that being “unavailable” was a significant barrier to having opportunities to place orders. Structured conferences and teaching sessions are a universally accepted part of medical student education; however, teaching institutions must preserve opportunities for practical hands-on experience.2,3,4 Medical students do not want to perform ancillary activities such as drawing blood and transporting patients on a regular basis,41 but placing orders may be construed more as a learning opportunity than as “scut.” Strengthening the supervision given to students as they place orders may enhance this experience. Students may also benefit from curricula teaching them how to formulate and write orders that support collaboration and clear communication with other health care providers.

Several limitations of this study should be considered. First, students were not randomized between hospitals, and students who chose to spend the first month of the clerkship at a particular hospital may have been more or less interested in learning about how to care for internal medicine patients. Other than a small difference in age, we did not detect any pre-clerkship differences between the two groups' demographics, preferences, attitudes, or self-assessed skills. Second, the study measured self-reported rather than actual experiences, and responses were subject to recall bias. We have no reason to suspect that students at one site were more likely to exaggerate or minimize their experiences than those at another. Third, only a limited number of students trained at Hospital B, reducing our ability to detect differences in the second subgroup analysis. Fourth, Hospital B was just initiating CPOE when this study was conducted, and experiences there might improve once the ordering process for housestaff and students is more firmly established. Conversely, the students exposed to CPOE at Hospital B were at a later point in the academic year than those there when it was still using paper and may have been more adept at taking advantage of opportunities to place orders. Finally, this study compared experiences of students from a single school of medicine. Experiences for students from other medical schools and at other hospitals may be different, depending on the CPOE system implemented, the culture of the hospital, and the emphasis placed on hands-on experience during clinical clerkships.

Conclusions

Computerized provider order entry is a valuable technology, but teaching institutions should take note of the change occurring in the educational environment on hospital wards implementing CPOE. As more teaching hospitals implement CPOE, educators should ensure that the systems adequately allow students to place orders, that housestaff know how to work with students' orders and are sensitized to the value of this experience for students, and that appropriate emphasis is placed on giving students opportunities to participate in the day-to-day care of their patients. Efforts to improve patient safety must continue, but if the technology used to reduce medical errors jeopardizes medical education, its ultimate value may be reduced.

Informatics and statistics support provided by the Johns Hopkins Bayview Medical Center General Clinical Research Center, grant M01-RR-02719. We are grateful for the assistance of Ms. Debbie Hill with survey design, the late Dr. Matthew Tayback with statistical analysis, and for manuscript review by and helpful suggestions from Dr. Scott Wright, Dr. Roy Ziegelstein, and Dr. David Hellmann.

Presented in part at the Society of General Internal Medicine Annual Meeting, May 15, 2004, and at Medinfo: The 11th World Congress of Medical Informatics, September 10, 2004.

References

- 1.Learning objectives for medical student education—guidelines for medical schools: report 1 of the Medical School Objectives Project. Acad Med. 1999;74:13–8. [DOI] [PubMed] [Google Scholar]

- 2.Curry RH, Hershman WY, Saizow RB. Learner-centered strategies in clerkship education. Am J Med. 1996;100:589–95. [DOI] [PubMed] [Google Scholar]

- 3.Mellinkoff SM. The medical clerkship. N Engl J Med. 1987;317:1089–91. [DOI] [PubMed] [Google Scholar]

- 4.Osler W. The Hospital as a College. Aequanimitas: with other addresses to medical students, nurses and practitioners of medicine. Philadelphia: Blakiston, 1932.

- 5.O'Sullivan PS, Weinberg E, Boll AG, Nelson TR. Students' educational activities during clerkship. Acad Med. 1997;72:308–13. [DOI] [PubMed] [Google Scholar]

- 6.van der Hem-Stokroos RHH, Scherpbier AJJA, van der Vleuten CPM, de Vries H, Haarman HJTM. How effective is a clerkship as a learning environment? Med Teach. 2001;23:599–604. [DOI] [PubMed] [Google Scholar]

- 7.Kogan JR, Bellini LM, Shea JA. The impact of resident duty hour reform in a medicine core clerkship. Acad Med. 2004;79:S58–61. [DOI] [PubMed] [Google Scholar]

- 8.Leape LL, Bates DW, Cullen DJ, et al. Systems analysis of adverse drug events. JAMA. 1995;274:35–43. [PubMed] [Google Scholar]

- 9.Bates DW, Boyle DL, Vander Vliet MB, Schneider J, Leape L. Relationship between medication errors and adverse drug events. J Gen Intern Med. 1995;10:199–205. [DOI] [PubMed] [Google Scholar]

- 10.Lesar TS, Briceland L, Stein DS. Factors related to errors in medication prescribing. JAMA. 1997;277:312–7. [PubMed] [Google Scholar]

- 11. Lesar TS. Prescribing errors involving medication dosage forms. J Gen Intern Med. 2002;17:579–87.

- 12. Kohn L, Corrigan J, Donaldson M, editors. To err is human: building a safer health system. Washington, DC: IOM, National Academy Press, 1999.

- 13. Bates DW, Leape L, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280:1311–6.

- 14. Bates DW, Teich JM, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999;6:313–21.

- 15. Mekhjian HS, Kumar RR, Kuehn L, et al. Immediate benefits realized following implementation of physician order entry at an academic medical center. J Am Med Inform Assoc. 2002;9:529–39.

- 16. Teich JM, Merchia PR, Schmiz JL, Kuperman GJ, Spurr CD, Bates DW. Effects of computerized provider order entry on prescribing practices. Arch Intern Med. 2000;160:2741–7.

- 17. Ash JS, Gorman PN, Seshadri V, Hersh WR. Computerized physician order entry in U.S. hospitals: results of a 2002 survey. J Am Med Inform Assoc. 2004;11:95–9.

- 18. Moberg TF, Whitcomb ME. Educational technology to facilitate medical students' learning: background paper 2 of the Medical School Objectives Project. Acad Med. 1999;74:1145–50.

- 19. Whelan A, Appel J, Alper EJ, et al. The future of medical student education in internal medicine. Am J Med. 2004;116:576–9.

- 20. Seago BL, Schlesinger JB, Hampton CL. Using a decade of data on medical student computer literacy for strategic planning. J Med Libr Assoc. 2002;90:202–9.

- 21. Peterson MW, Rowat J, Kreiter C, Mandel J. Medical students' use of information resources: Is the digital age dawning? Acad Med. 2004;79:89–95.

- 22. Tannery NH, Foust JE, Gregg AL, et al. Use of Web-based library resources by medical students in community and ambulatory settings. J Med Libr Assoc. 2002;90:305–9.

- 23. Tierney WM, Overhage JM, McDonald CJ, Wolinsky FD. Medical students' and housestaff's opinions of computerized order-writing. Acad Med. 1994;69:386–9.

- 24. Massaro TA. Introducing physician order entry at a major academic medical center: impact on medical education. Acad Med. 1993;68:25–30.

- 25. Ash JS, Gorman PN, Hersh WR, Lavelle M, Poulsen SB. Perceptions of house officers who use physician order entry. Proc AMIA Symp. 1999:471–5.

- 26. Patterson R, Harasym P. Educational instruction on a hospital information system for medical students during their surgical rotations. J Am Med Inform Assoc. 2001;8:111–6.

- 27. Stair TO, Howell JM. Effect on medical education of computerized physician order entry. Acad Med. 1995;70:543.

- 28. Weiner M, Gress T, Thiemann DR, et al. Contrasting views of physicians and nurses about an inpatient computer-based provider order-entry system. J Am Med Inform Assoc. 1999;6:234–44.

- 29. Crabtree BF, Miller WL. Doing qualitative research. Newbury Park, CA: Sage, 1992.

- 30. The Leapfrog Group Fact Sheet. The Leapfrog Group. Available at: http://www.leapfroggroup.org/about_us/leapfrog-factsheet/.

- 31. Ash JS, Gorman PN, Lavelle M, et al. A cross-site qualitative study of physician order entry. J Am Med Inform Assoc. 2003;10:188–200.

- 32. Ash JS, Stavri PZ, Kuperman GJ. A consensus statement on considerations for a successful CPOE implementation. J Am Med Inform Assoc. 2003;10:229–34.

- 33. Ahmad A, Teater P, Bentley TD, et al. Key attributes of a successful physician order entry system implementation in a multi-hospital environment. J Am Med Inform Assoc. 2002;9:16–24.

- 34. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations: effects on resource utilization. JAMA. 1993;269:379–83.

- 35. Bates DW, Boyle DL, Teich JM. Impact of computerized physician order entry on physician time. Proc Annu Symp Comput Appl Med Care. 1994:996.

- 36. Overhage JM, Perkins S, Tierney WM, McDonald CJ. Controlled trial of direct physician order entry: effects on physicians' time utilization in ambulatory primary care internal medicine practices. J Am Med Inform Assoc. 2001;8:361–71.

- 37. Shu K, Boyle D, Spurr CD, et al. Comparison of time spent writing orders on paper with computerized physician order entry. Medinfo. 2001;10:1207–11.

- 38. Garg AX, Adhikari NKJ, McDonald H, et al. Effects of computerized clinician decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293:1223–38.

- 39. Johnston ME, Langton KB, Haynes B, Mathieu A. Effects of computer-based clinical decision support systems on clinician performance and patient outcome: a critical appraisal of research. Ann Intern Med. 1994;120:135–42.

- 40. Hunt DL, Haynes B, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280:1339–46.

- 41. Cook RL, Noecker RJ, Suits GW. Time allocation of students in basic clinical clerkships in a traditional curriculum. Acad Med. 1992;67:279–81.