Abstract

Human-machine voice interaction based on speech recognition offers an intuitive, efficient, and user-friendly interface, attracting wide attention in applications such as health monitoring, post-disaster rescue, and intelligent control. However, conventional microphone-based systems remain challenging for complex human-machine collaboration in noisy environments. Herein, an anti-noise triboelectric acoustic sensor (Anti-noise TEAS) based on flexible nanopillar structures is developed and integrated with a convolutional neural network-based deep learning model (Anti-noise TEAS-DLM). This highly synergistic system enables robust acoustic signal recognition for human-machine collaboration in complex, noisy scenarios. The Anti-noise TEAS directly captures acoustic fundamental frequency signals from laryngeal mixed-mode vibrations through contact sensing, while effectively suppressing environmental noise by optimizing device-structure buffering. The acoustic signals are subsequently processed and semantically decoded by the DLM, ensuring high-fidelity interpretation. Evaluated in both simulated virtual and real-life noisy environments, the Anti-noise TEAS-DLM demonstrates near-perfect noise immunity and reliably transmits various voice commands to guide robotic systems in executing complex post-disaster rescue tasks with high precision. The combined anti-noise robustness and execution accuracy endow this DLM-enhanced Anti-noise TEAS as a highly promising platform for next-generation human-machine collaborative systems operating in challenging noisy environments.

Subject terms: Electrical and electronic engineering

Human-machine collaboration in noisy environments using conventional microphones remains challenging. Here, the authors develop an anti-noise triboelectric acoustic sensor integrated with a CNN-based deep learning model to facilitate complex human-machine tasks in highly noisy conditions.

Introduction

With the accelerated development of artificial intelligence (AI) and internet of things technologies, human-machine interaction (HMI) has attracted extensive attention in applications such as health monitoring, post-disaster rescue, and intelligent control1–3. HMI systems have also evolved from the initial manipulation of external devices (keyboards, remote controls, etc.) to more direct and efficient modalities, including body-movement interaction, electrophysiological signal-based control, and voice interaction. Although body-movement HMI offers operational simplicity and ease of implementation, its lack of intuitiveness and limited command repertoire restrict its utility in complex interactive tasks4–8. Electrophysiological signal-based HMI (e.g., electromyography or electroencephalography) holds theoretical promise for multifunctional control by decoding diverse electrophysiological signals. However, the incomplete understanding of complex electrophysiological patterns9–11, coupled with the bulkiness of multi-channel sensor arrays and signal acquisition hardware12–14, currently hinders both accuracy and practical wearability. As a common and natural communication medium, speech encodes not only semantic content but also paralinguistic cues such as speaker identity and emotional state15. Human-machine voice interaction (HMVI) leverages these advantages, offering an intuitive, efficient, and information-rich interface for complex collaborative tasks16,17.

The rapid advancement of speech recognition technology and intelligent devices has broadly promoted the application of conventional HMVI, driving its widespread commercialization18. At present, regular commercial microphones mainly use rigid acoustic sensors (e.g., moving coil or electret condenser) for signal acquisition, which inherently limits the wearability of HMVI systems19. Flexible wearable acoustic sensors based on diverse sensing mechanisms, including piezoresistive20,21, piezoelectric22,23, and triboelectric sensing24–26, potentially provide more practical solutions for HMVI applications, demonstrating desirable performance in acoustic signal acquisition. Among these, triboelectric acoustic sensors possess advantages in capturing subtle periodic vibrational signals, owing to their high sensitivity, high response speed, and cost-effectiveness27–35. Triboelectric sensors are simple in structure and can be developed into wearable acoustic sensors that convert weak vibrations into acoustic signals when attached to the throat area. However, human speech production involves complex coordination of multiple physiological components (throat, tongue, and facial muscles) to generate air vibrations36–38, meaning that laryngeal vibration alone cannot capture the complete set of speech recognition features. In addition, conventional acoustic sensors are typically designed for daily life conditions and are often severely disrupted in noisy environments (e.g., crowded areas, fire emergencies, or heavy rainfall).

Deep learning model (DLM) has emerged as a powerful tool for feature extraction, data analysis, and target classification, enabling efficient processing and recognition of speech signals for semantic interpretation, biometric identification, and sentiment analysis39–42. Recently, emerging studies have integrated DLM with flexible wearable acoustic sensors to further enhance HMVI efficiency43–45. For instance, Ren et al. reported an intelligent artificial pharyngeal sensor based on laser-induced graphene, which achieved accurate recognition of daily vocabulary for people with vocal impairments with the assistance of the DLM38. Lee et al. developed a flexible resonant acoustic sensor based on the piezoelectric principle and combined it with a DLM to achieve accurate biometric authentication22. In addition, Chen et al. developed a high-fidelity waterproof acoustic sensor and realized highly accurate biometric authentication with the support of the DLM26. Notably, DLMs can compensate for feature-deficient or waveform-distorted acoustic signals by leveraging their advanced data processing and pattern recognition capabilities to reconstruct and interpret speech information with high accuracy. Further exploration of the systematic integration of DLMs and triboelectric acoustic sensors will provide a practical approach for HMVI collaboration in noisy environments.

In this work, we developed an anti-noise triboelectric acoustic sensor (Anti-noise TEAS) based on flexible nanopillar structures, which was further integrated with a convolutional neural network (CNN)-based DLM to enable robust acoustic signal recognition (ASR) for complex HMVI in noisy environments. The Anti-noise TEAS could directly acquire the acoustic fundamental frequency signals from laryngeal mixed-mode vibrations through contact sensing, enabling the capture of multiple types of acoustic signals with high sensitivity, high stability, and a broad response frequency range. By optimizing the device layer structure, the sensor could effectively buffer the interference of noisy environments, while the accurate meaning of the distorted acoustic signals was recognized with the assistance of the CNN-based DLM. As a result, the Anti-noise TEAS enhanced by DLM (Anti-noise TEAS-DLM) achieved a speech recognition accuracy exceeding 99% in high-noise conditions, demonstrating near-perfect immunity to ambient interference. In simulated virtual and real-life scenarios, the Anti-noise TEAS-DLM was applied to transmit voice commands for guiding robotic systems to perform complex post-disaster casualty rescue tasks. While conventional microphone-based systems failed to complete the tasks owing to noise interference, the Anti-noise TEAS-DLM maintained reliable noise resistance, enabling precise robotic task execution. The Anti-noise TEAS-DLM developed in this work provides a practical solution for HMVI collaborative tasks in noisy environments, including post-disaster rescue, collaborative operations, and wilderness exploration. This AI-driven system helps promote the development of diversified HMVI systems that can meet the complex scenarios of real-world noisy conditions.

Results

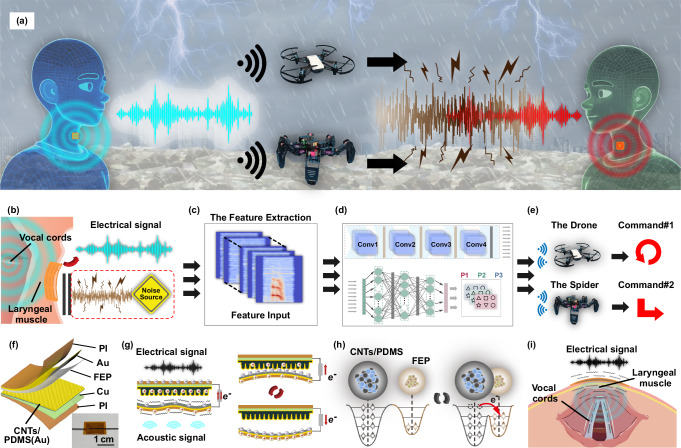

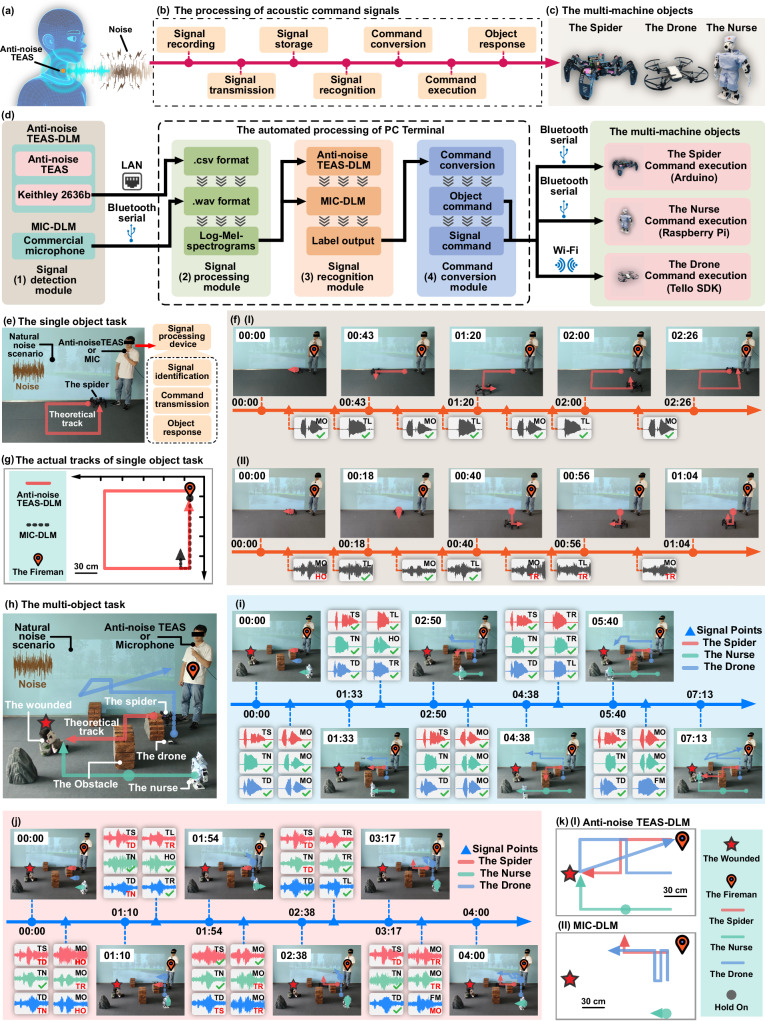

Figure 1a illustrates the working mechanism of the deep learning-enhanced Anti-noise TEAS to perform complex human-machine collaboration in noisy environments. The Anti-noise TEAS possessed desirable anti-noise interference capability, which could capture the fundamental acoustic signals of laryngeal vibrations through contact sensing in noisy scenarios, such as rainstorms, gusty winds, earthquakes, and other noisy environments. Regular acoustic sensors usually detect the speech acoustic signals after propagation through the air medium, which are easily interfered with and even drowned out by environmental noise. However, with special anti-noise structure design and optimized materials, the Anti-noise TEAS could effectively buffer the interference of environmental noise. By attaching to the cartilage of the human throat, the Anti-noise TEAS could directly capture the mixed-mode signals of acoustic (the weak vibration of vocal folds) signals and mechanical motion (the tiny movements of muscle) signals through contact sensing (Fig. 1b). These mixed-mode acoustic signals contained partial speech recognizable features, which could reflect the semantic content to some extent. While the special structure and contact sensing of the Anti-noise TEAS were beneficial to buffer the environmental noise, the acoustic signals from laryngeal vibrations could also be compromised or distorted, losing the resonance features of the tuning organs, such as the mouth, tongue, and pharynx. This resulted in difficulties in directly recognizing these signals by human hearing and traditional speech processing techniques. As a powerful tool with feature extraction and data analysis capabilities, a CNN-based DLM could potentially enhance the Anti-noise TEAS in the aspect of recognition of the distorted signals. After data processing and feature extraction (Fig. 
1c), the CNN-based DLM was utilized to parse and classify the acoustic signals, and obtained both the semantic and identity information (Fig. 1d). Finally, the command signals were wirelessly transmitted to the swarms of robots, which could perform the complex human-machine collaborative tasks in harsh scenarios (Fig. 1e).

Fig. 1. Schematic of the Anti-noise TEAS-DLM for human-machine collaboration in noisy environments.

a Diagram of the working mechanism of the deep learning-enhanced Anti-noise TEAS to perform complex human-machine collaborative tasks in noisy scenarios. b The Anti-noise TEAS detected mixed-mode signals of acoustic (weak vibrations of the vocal cords) signals and mechanical motion (tiny movements of muscle) signals via contact sensing, which could block the interference of environmental noise. c Pre-processing and feature extraction of the acoustic signals to obtain the input feature spectrogram of the convolutional neural network (CNN)-based DLM. d Schematic diagram of the CNN-based DLM for ASR. e Controlling robotic systems, including the robot, the drone, etc., through the Anti-noise TEAS-DLM. f Structural schematic and photographs of the Anti-noise TEAS, with scale bar of 1 cm. g Working principle of the Anti-noise TEAS to realize contact-separation acoustic sensing. h Schematic of the atomic-scale model of the Anti-noise TEAS, where electrons were transferred from the positive materials to the negative materials when the two friction electrodes contacted. i Diagram of the Anti-noise TEAS attached to the laryngeal cartilage position to detect mixed-mode acoustic signals via the coupling of contact electrification and electrostatic induction.

The Anti-noise TEAS was composed of a flexible carbon nanotubes/polydimethylsiloxane (CNTs/PDMS) nanopillars substrate as a positive friction electrode and a fluorinated ethylene propylene (FEP) as a negative friction electrode (Fig. 1f). As a soft lithography technique, replica-molding was able to replicate the micro/nano-structures on the surface of the sample cost-effectively and rapidly (Supplementary Fig. S1). Herein, the nanopillars structure was fabricated on a flexible CNTs/PDMS substrate (Supplementary Fig. S2) by replica-molding from the surface structure of cicada wings. This nano-scale pillar structure could effectively expand the actual contact area of the friction layer interface, inducing more charge transfer and higher electrical output. In addition, a layer of ~300 nm Au on the surface of the flexible CNTs/PDMS nanopillars substrate was sputtered, to improve the substrate conductivity. After attaching a layer of conductive copper tape on the backside of the substrate, the positive friction electrode of the Anti-noise TEAS was obtained. A thin FEP film, which was coated with an Au layer on backside, served as a negative friction electrode. The friction layer interface of the FEP film was corona-treated to increase the surface charge density at the interface46,47. The as-prepared positive electrode was assembled with the FEP negative electrode to form the triboelectric sensors in the contact-separation mode, where a 300 μm-thick polyethylene glycol terephthalate (PET) film was used as the spacer between the two electrodes. The Anti-noise TEAS was encapsulated with Kapton tape, to increase the robustness of sensors and the interface contact between the sensors and skins48. The working principle of the Anti-noise TEAS was based on contact-separation sensing through the coupling of contact electrification and electrostatic induction (Fig. 1g). As a diaphragm, the FEP negative electrode would generate vibrations in response to acoustic signals. 
Due to the different electronic affinity capability of two friction materials, electrons were transferred from the positive frictional interface to the negative frictional interface, while contact occurred between the two friction electrodes (Fig. 1h). Thus, when the FEP diaphragm was close to the positive electrode, electrons would flow from the positive electrode to negative electrode through an external circuit. In contrast, when the FEP diaphragm was away from the positive electrode, electrons would flow back from the negative electrode to the positive electrode through the external circuit. By attaching the Anti-noise TEAS to the human larynx (Supplementary Fig. S3 and Supplementary Note S1), the sensor would generate corresponding electrical signals (current and voltage signals) in response to the laryngeal vibrations, including weak vibration of the vocal folds and tiny muscle movements, which could reflect the amplitude and frequency of the acoustic fundamental frequency signals in the human voice (Fig. 1i).

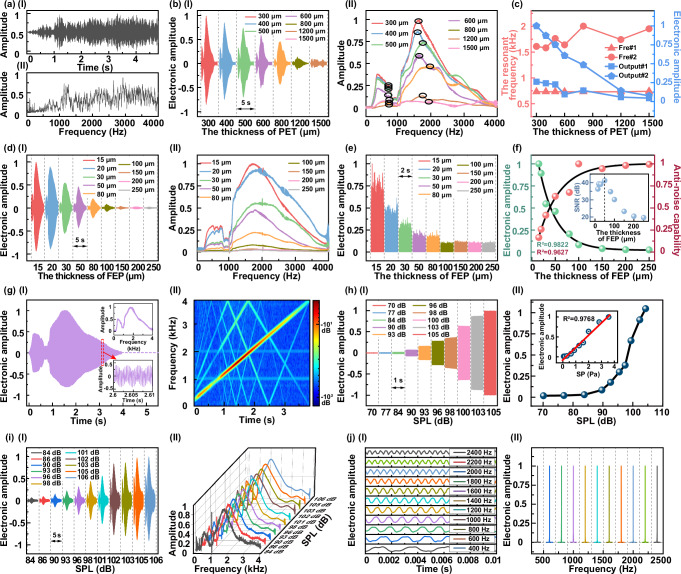

The acoustic response characteristics of acoustic sensors were important for the application of the HMVI. We employed a standard player, a sound pressure level (SPL) detector, and a precision source/measurement unit (SMU) to evaluate the acoustic response characteristics of the Anti-noise TEAS (Supplementary Fig. S4). The standard player was used as a sound source generator, which could apply acoustic signals of different frequencies and SPL to the Anti-noise TEAS. The SPL detector was used to detect acoustic signals and environmental noise, which could provide standardized SPL reference values. The SMU could detect and record the acoustic-current response signals of the Anti-noise TEAS in real time. The effect of the design parameters of the Anti-noise TEAS, such as spacer thickness and FEP thickness, on the acoustic response characteristics was systematically investigated. Firstly, the Anti-noise TEAS was prepared with various PET spacer thicknesses, ranging from 300 μm to 1500 μm. The acoustic response characteristics were evaluated by a sweep source signal with a frequency of 50–4000 Hz, an SPL of 102 dB, and a duration of 5 s (Fig. 2a), which basically covered the main fundamental frequency range during human speech communications. The acoustic-current response signals recorded by the Anti-noise TEAS with different spacing thicknesses are shown in Fig. 2b-I. When the spacing distance was <800 μm, the envelope contours of the acoustic-current response signals exhibited high consistency, while the overall amplitude of the signals decreased with increasing spacing distance. This was likely because the electrostatic induction in the sensing process decayed as the spacer distance increased, resulting in a diminishing amplitude of the acoustic-current response signals. When the spacer distance increased to >800 μm, the stability of the Anti-noise TEAS during sensing was affected, resulting in a significant deformation of the envelope contours and a further decrease in the amplitude. Fast Fourier transforms (FFT) were performed on the acoustic-current response signals at different spacing distances to obtain linear standard sweep frequency characteristic curves. The frequency characteristic curves of the Anti-noise TEAS followed the same trend as the acoustic-current response signals, i.e., the overall amplitude of the frequency characteristic curves diminished with the increase of the spacing distance (Fig. 2b-II). The 1st and the 2nd resonance frequencies were marked, and the relationships of the frequencies (red curve) and peak values (blue curve) with the spacing distance were plotted (Fig. 2c). The amplitude of the resonance frequency peaks declined with the increase of the spacing distance, and the resonance frequency rose slightly with the increase of the spacing thickness, i.e., a slight shift of the resonance frequency occurred. Since the flexible CNTs/PDMS substrates naturally featured height undulations of ~200 μm, a 300 μm-thick PET layer was selected as the spacer to ensure sufficient separation of the friction electrodes.
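The marking of the 1st and 2nd resonance peaks on a frequency characteristic curve can be illustrated with a minimal sketch. Everything numerical here is an assumption for illustration: the curve is a synthetic sum of two idealised (Lorentzian) resonances at hypothetical frequencies of 300 Hz and 900 Hz, and the helper names `lorentzian` and `find_resonance_peaks` are not from the original work. The sketch only shows how local maxima of an amplitude spectrum could be located and ranked; it is not the authors' analysis procedure.

```python
def lorentzian(f, f0, half_width, height):
    """Idealised single resonance peak centred at f0 (all frequencies in Hz)."""
    return height / (1.0 + ((f - f0) / half_width) ** 2)


def find_resonance_peaks(freqs, amps, count=2):
    """Return the `count` strongest local maxima as (frequency, amplitude)
    pairs, ordered from low to high frequency."""
    maxima = [
        (freqs[i], amps[i])
        for i in range(1, len(amps) - 1)
        if amps[i - 1] < amps[i] > amps[i + 1]
    ]
    strongest = sorted(maxima, key=lambda p: p[1], reverse=True)[:count]
    return sorted(strongest)


# Hypothetical frequency characteristic curve (illustrative values only):
# two resonances at 300 Hz and 900 Hz on a 50-4000 Hz grid with 5 Hz spacing.
freqs = [50.0 + 5.0 * k for k in range(791)]
amps = [
    lorentzian(f, 300.0, 40.0, 1.0) + lorentzian(f, 900.0, 40.0, 0.6)
    for f in freqs
]

peaks = find_resonance_peaks(freqs, amps)
for order, (f, a) in enumerate(peaks, start=1):
    print(f"resonance {order}: {f:.0f} Hz, amplitude {a:.3f}")
```

Repeating such a peak search for each spacer thickness would give one (frequency, peak value) pair per resonance and thickness, which is the kind of data summarised in Fig. 2c.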

Fig. 2. Acoustic response characteristics of the Anti-noise TEAS.

a (I) Waveform and (II) spectrogram of a linear sweep audio source recorded by a regular standard microphone. b (I) Sweep signal curves and (II) frequency characteristic curves recorded by the Anti-noise TEAS with different spacing sizes. c Frequency and peak value of the resonant frequencies vs the different spacing distances for the Anti-noise TEAS. d (I) Sweep signal curves and (II) frequency characteristic curves recorded by the Anti-noise TEAS with different FEP diaphragm thicknesses. e White noise signals recorded by the Anti-noise TEAS with different FEP diaphragm thicknesses through non-contact sensing. f Comparison of output performance and anti-noise capability of the Anti-noise TEAS vs the FEP diaphragm thicknesses. The inset presents the SNR of the Anti-noise TEAS vs the FEP diaphragm thicknesses. g (I) Typical linear sweep audio source curve obtained with the Anti-noise TEAS. The insets show the frequency characteristic curve and the detailed waveform, respectively. (II) Spectrogram of the sweep signal recorded by the Anti-noise TEAS. The red color in the spectrogram represents higher intensity, and the blue color represents lower intensity. h (I) Waveforms of acoustic signals (2 kHz) detected by the Anti-noise TEAS with different SPL from 70 dB to 105 dB. (II) Curves of different SPL vs the output amplitude of the acoustic-current response signals of the Anti-noise TEAS. The inset shows curves of different SP vs the amplitude of the current. i (I) Waveforms of sweep signals and (II) frequency characteristic curves of different SPL detected by the Anti-noise TEAS. j (I) Waveforms and (II) frequency characteristics of different single-frequency acoustic signals detected by the Anti-noise TEAS.

The effect of the FEP diaphragm thickness (ranging from 15 μm to 250 μm) on the acoustic response characteristics was also evaluated with the sweep source signal. The acoustic-current response signals recorded by the Anti-noise TEAS with different FEP diaphragm thicknesses are shown in Fig. 2d-I. The envelope contours of the response signals for different FEP diaphragm thicknesses were similar, and the output amplitude of the signals diminished as the FEP diaphragm thickness increased. The negative electrode stiffened as the thickness of the FEP diaphragm increased, resulting in less vibration of the friction layer during acoustic sensing, which in turn led to a reduction in the output signals. The FFT was performed on the response signals to obtain the frequency characteristic curves at various FEP diaphragm thicknesses (Fig. 2d-II). The amplitude of the frequency characteristic curves decreased as the FEP thickness increased. The maximum peak of the frequency characteristic curves was identified as the output amplitude of the Anti-noise TEAS (Fig. 2f). The output performance of the sensor exhibited an exponential negative correlation with the FEP diaphragm thickness (R² = 0.9822). When the FEP diaphragm thickness increased to 100 μm, the response signals of the Anti-noise TEAS decreased to ~0.11-fold of those at 15 μm thickness. While a thinner FEP diaphragm was desirable for enhancing the output amplitude of the Anti-noise TEAS, the effect of thickness on the anti-noise capability of the sensor needed to be further investigated. Since the Anti-noise TEAS was a contact sensor oriented towards the detection of fundamental frequency acoustic signals of the human larynx, the interference signals sensed through the air (non-contact) were one of the main noise sources during its operation. A white noise signal (SPL = 102 dB) was applied to the Anti-noise TEAS, and the resulting interference signals were recorded. The amplitude of the noise signals detected by the Anti-noise TEAS declined with the increase of the FEP diaphragm thickness (Fig. 2e). The inverse of the average noise amplitude was used as an indicator to evaluate the anti-noise capability, which revealed an exponential positive correlation with the thickness of the FEP diaphragm (R² = 0.9627) (Fig. 2f). By comparing the effective output signals (contact sensing) and the noise interference signals (non-contact sensing) detected with different FEP diaphragm thicknesses, a relationship between the signal-to-noise ratio (SNR) and the FEP diaphragm thickness (inset of Fig. 2f) was obtained. The curves of the effective output signal and the noise interference signal intersected at ~50 μm of the FEP diaphragm. The SNR of the Anti-noise TEAS reached its maximum value at 50 μm thickness of the FEP diaphragm. To balance good output performance and anti-noise capability, the FEP film with 50 μm thickness was used as the negative diaphragm of the Anti-noise TEAS.

Therefore, the optimal structure design of the Anti-noise TEAS used the 300 μm-thick PET film as the spacer and the 50 μm-thick FEP film as the negative diaphragm. With standardized preparation processes and the optimal structure design, the Anti-noise TEAS simultaneously possessed desirable output performance and noise immunity. The optimal Anti-noise TEAS possessed a wide frequency response range (Fig. 2g-I), which covered the main fundamental frequency range of acoustic signals in communications. In addition, the Anti-noise TEAS was capable of recording acoustic signals in different frequency ranges (Supplementary Fig. S5). The sweep response signals were converted to a spectrogram by short-time Fourier transform (STFT) with a window of 800 ms and a moving step of 200 ms. The color depth in the spectrogram reflected the magnitude of the acoustic signal, with darker colors (red) representing larger magnitudes and lighter colors (blue) indicating lower magnitudes. As presented in Fig. 2g-II, the intensity of the acoustic spectrogram was mainly concentrated on the diagonal line, indicating that the Anti-noise TEAS possessed a uniform linear response to the sweep source. In addition, the octave signal of the sweep source was clearly visible in the spectrogram, which implied that the Anti-noise TEAS had the potential to detect acoustic signals at higher frequencies.
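To illustrate why a linear sweep appears as a diagonal line in an STFT spectrogram, the following minimal sketch applies the 800 ms window and 200 ms step mentioned above to a synthetic linear chirp. The sampling rate (2 kHz), the sweep range (50–800 Hz), the Hann window, and the coarse 25 Hz frequency grid are assumptions chosen so that the pure-Python code runs quickly; they are not the acquisition settings of the sensor. The function names are hypothetical.

```python
import cmath
import math


def linear_chirp(f_start, f_end, duration, fs):
    """Cosine sweep whose instantaneous frequency rises linearly with time."""
    rate = (f_end - f_start) / duration  # sweep rate in Hz per second
    n = int(round(duration * fs))
    samples = []
    for i in range(n):
        t = i / fs
        samples.append(math.cos(2 * math.pi * (f_start * t + 0.5 * rate * t * t)))
    return samples


def stft_peak_track(signal, fs, window_s, step_s, candidate_freqs):
    """Slide a Hann window over the signal and return, for every frame,
    the candidate frequency with the largest spectral magnitude."""
    win = int(round(window_s * fs))
    hop = int(round(step_s * fs))
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * k / (win - 1)) for k in range(win)]
    track = []
    for start in range(0, len(signal) - win + 1, hop):
        frame = [signal[start + k] * hann[k] for k in range(win)]
        best_freq, best_mag = None, -1.0
        for f in candidate_freqs:
            # Single-bin DFT: project the frame onto a complex sinusoid at f.
            w = -2j * math.pi * f / fs
            mag = abs(sum(x * cmath.exp(w * k) for k, x in enumerate(frame)))
            if mag > best_mag:
                best_freq, best_mag = f, mag
        track.append(best_freq)
    return track


FS = 2000  # Hz, assumed for this demo only
sweep = linear_chirp(50.0, 800.0, duration=5.0, fs=FS)
track = stft_peak_track(sweep, FS, window_s=0.8, step_s=0.2,
                        candidate_freqs=range(50, 801, 25))
print(len(track), "frames; dominant frequency per frame (Hz):", track)
```

The dominant frequency climbs from frame to frame, which is the diagonal ridge seen in a sweep spectrogram. Independently of the sampling rate, an 800 ms window corresponds to a frequency bin spacing of 1/0.8 s = 1.25 Hz.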

As an important metric, the acoustic sensitivity of the Anti-noise TEAS was also evaluated with acoustic signals (at 2 kHz) at various SPL from 70 dB to 105 dB. The acoustic-current response signals of the sensor at different SPL are shown in Fig. 2h-I, demonstrating that the output amplitude of the Anti-noise TEAS rose continuously with increasing SPL. The formula for converting SPL to sound pressure (SP) is as follows:

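$$\mathrm{SPL} = 20\log_{10}\left(\frac{\mathrm{SP}}{\mathrm{SP_{ref}}}\right) \tag{1}$$

$$\mathrm{Sensitivity} = \frac{A}{\mathrm{SP}} \tag{2}$$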

Where SPL was the sound pressure level, SP was the sound pressure, SPref was the standard reference sound pressure with a value of 2 × 10⁻⁵ Pa, and A was the amplitude of the output current. The SPL was converted to SP according to Eq. (1) and linearly fitted to the amplitude of the response signals (Fig. 2h-II). The response signals exhibited a strong positive linear relationship with the SP (R² = 0.9768), with a sensitivity of 0.05 nA Pa⁻¹, corresponding to the output amplitude at an SPL of 94 dB (SP = 1 Pa). In addition, the Anti-noise TEAS was also employed to detect sweep sources with different SPL ranging from 84 dB to 106 dB. The response signals of the Anti-noise TEAS increased with increasing SPL (Fig. 2i-I), while the envelopes of the signals remained highly consistent. Subsequently, the acoustic-current signals were converted into frequency characteristic curves with FFT, which exhibited a high degree of uniformity across various SPLs. However, due to differences in SNR at various SPL, the frequency characteristic curves exhibited fluctuations and distortion at lower SPL, while the overall signal envelopes remained relatively stable (Supplementary Fig. S6). To further validate the effectiveness of the Anti-noise TEAS in discerning acoustic signals of different single frequencies, the sensor was employed to detect various single-frequency sinusoidal acoustic signals ranging from 400 Hz to 2400 Hz. As shown in Fig. 2j-I, the Anti-noise TEAS was able to record the waveforms at different frequencies effectively (Supplementary Fig. S7). The frequency characteristic curves at different frequencies were obtained by FFT, demonstrating that the Anti-noise TEAS possessed good singularity and consistency in acquiring acoustic signals (Fig. 2j-II and Supplementary Note S2).
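The SPL-to-SP conversion and the linear fit used to extract a sensitivity can be sketched as follows. The conversion uses the standard definition SPL = 20 log10(SP/SPref) with SPref = 2 × 10⁻⁵ Pa. The current amplitudes below are not measured data: they are synthesised from the reported sensitivity of 0.05 nA Pa⁻¹ purely to show the workflow, and the names `spl_to_sp` and `fit_line` are hypothetical.

```python
SP_REF = 2e-5  # reference sound pressure in Pa


def spl_to_sp(spl_db):
    """Convert a sound pressure level in dB to sound pressure in Pa."""
    return SP_REF * 10 ** (spl_db / 20.0)


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept; also returns R^2."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot


# SPL levels spanning the tested range (70 dB to 105 dB, 5 dB steps assumed).
spl_levels = list(range(70, 106, 5))
pressures = [spl_to_sp(s) for s in spl_levels]

# Synthetic, noise-free amplitudes in nA built from the reported sensitivity.
ASSUMED_SENSITIVITY = 0.05  # nA per Pa
currents = [ASSUMED_SENSITIVITY * p for p in pressures]

slope, intercept, r2 = fit_line(pressures, currents)
print(f"94 dB corresponds to {spl_to_sp(94):.3f} Pa")
print(f"fitted sensitivity: {slope:.3f} nA/Pa, R^2 = {r2:.4f}")
```

Because 94 dB corresponds to almost exactly 1 Pa, the output amplitude at 94 dB can be read directly as the sensitivity in nA Pa⁻¹. With real measurements the fit would return R² below 1, as in the reported value of 0.9768.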

As an essential indicator, the durability of the Anti-noise TEAS was evaluated with a periodic acoustic signal at 200 Hz for a total of ~7 × 10⁵ cycles. During the long-term acoustic signal detection, the amplitude of the acoustic-current response signal did not change or decline significantly (Fig. 3a). The initial and final signal waveforms exhibited good consistency, with the overall fluctuation range of the output current being less than 1.5%. This indicated that the Anti-noise TEAS possessed excellent durability and stability under long-term stimulation. Furthermore, to assess the feasibility of the Anti-noise TEAS in HMVI, the sensor was employed to detect various types of acoustic signals, including music, speech, and animal calls. A piece of classical music was recorded by the Anti-noise TEAS and compared to the original waveform acquired with a commercial microphone. As exhibited in Fig. 3b, the waveform profile of the acoustic signal detected by the Anti-noise TEAS was consistent with that of the microphone. While conventional microphones typically utilize a sampling rate of 44.1 kHz or higher, the Anti-noise TEAS was designed to focus on detecting the fundamental acoustic signals of human voices and employed a sampling rate of only 8 kHz. This difference would result in slight distortions and variations in the details of the waveforms. With a window of 1024 ms and a moving step of 256 ms, the music waveforms were converted to spectrograms by STFT. As shown in Fig. 3c-I, II, the spectrograms of the music recorded by the Anti-noise TEAS closely matched those of the original music, particularly in the frequency range below 1 kHz. The FFT power spectra below 1 kHz also confirmed the similarity between the original music and the recorded one (Fig. 3c-III). Although the music signal recorded by the Anti-noise TEAS exhibited slight distortions and discrepancies in spectral details, it reproduced the original music signal accurately overall. Additionally, the Anti-noise TEAS was used to detect acoustic signals corresponding to the English letters A, B, C, and D in two separate tests. Figure 3d-I shows the waveforms of the original speech signals for A, B, C, and D, alongside the waveforms of the two speech signals recorded by the Anti-noise TEAS. The waveforms of the two speech signals recorded with the Anti-noise TEAS exhibited good repeatability and consistency with the original signal waveforms. After transforming the acoustic signals into spectrograms by STFT (Fig. 3d-II), the distribution of the Anti-noise TEAS spectrograms demonstrated clear similarity to the original spectrograms, and the spectrograms obtained from the two tests showed good reproducibility (Supplementary Fig. S8). Thus, the Anti-noise TEAS proved effective in recording and differentiating acoustic signals with semantic content. Furthermore, the Anti-noise TEAS was also employed to record the calls of 3 distinct animals, including a dog, a horse, and a rooster. The waveform contours of the animal call signals detected with the Anti-noise TEAS closely matched those of the original signals (Fig. 3e), preserving the characteristic peaks and overall contours of the original animal calls effectively. Additionally, the waveforms obtained from the Anti-noise TEAS exhibited high consistency, which demonstrated the sensor’s excellent repeatability in acoustic signal acquisition.

Fig. 3. Acoustic sensing performance of the Anti-noise TEAS.

a Durability test of the Anti-noise TEAS, utilizing an acoustic signal at 200 Hz for ~7 × 10⁵ cycles. The insets present the output signals during the initial and final periods, along with a statistical analysis of the output current at various stages. Graph bars represent standard deviation (SD), and the center line represents the average value. The sample size was 35. b Comparison of signal waveforms from the same piece of classical music recorded using the Anti-noise TEAS and a standard microphone. c Spectrogram comparison of the music recorded using (I) the Anti-noise TEAS and (II) the microphone, along with the (III) power spectra under the 1 kHz range. d (I) Waveform comparisons of A, B, C, and D detected using the Anti-noise TEAS. (II) Spectrogram comparisons between the detected and original ones. e Waveform comparisons of a (I) dog, (II) horse, and (III) rooster detected by the Anti-noise TEAS with their original waveforms. f Waveforms of motion artifact interference caused by different physiological activities such as hum, swallow, etc. g Comparison of 6 acoustic signals detected by the Anti-noise TEAS with standard speech signals, such as thank you, help me, etc. h Comparison of 6 acoustic signals detected by the microphone with standard speech signals. i Correlation statistics of acoustic signals detected by the Anti-noise TEAS and the microphone compared to standard speech signals. Graph bars represent SD. The sample size was 6. j Representative waveforms of 6 acoustic signals detected by three different participants using the Anti-noise TEAS. k Representative spectrograms of 6 acoustic signals detected by three different participants using the Anti-noise TEAS.

During the acquisition of fundamental acoustic signals, the Anti-noise TEAS was affixed onto the human larynx, maintaining close contact with the skin. With a skeletal conduction-like approach, the Anti-noise TEAS obtained fundamental acoustic signals directly from the wearer’s larynx during communication, enabling contact-based acoustic signal detection. Nevertheless, the Anti-noise TEAS was susceptible to motion artifact interference by various physiological activities, such as breathing, nodding, and swallowing, during contact sensing. To evaluate motion artifact interference, the Anti-noise TEAS was affixed onto the wearer’s larynx and recorded the motion artifacts induced by the movements, including swallowing, nodding, coughing, and so on. The motion artifact caused by different physiological activities exhibited distinctive recognizable characteristics (Fig. 3f), which demonstrated good repeatability and recognizability among interference signals. These interference signals could be discerned and removed from the fundamental acoustic signals by employing suitable filters and de-baselining algorithms. The effects of vigorous human movements on the output signals of the Anti-noise TEAS were further evaluated (Supplementary Note S3).

Human speech communication was a highly intricate process, in which the respiratory organs (lungs, trachea, etc.), vocal organs (vocal cords, larynx, etc.), and articulatory organs (tongue, lip, etc.) collaborated to generate air vibrations, thereby producing corresponding acoustic signals. Within this process, the vibration signals presented on the surface of the throat area typically comprised mixed-mode signals originating from the vibration of the vocal folds and subtle muscular movements. These mixed-mode signals contained certain recognizable speech features, which could reflect the semantic content of the acoustic signals to some extent. With the optimal structure and contact sensing, the Anti-noise TEAS could mitigate the environmental noise interference effectively, yet the acquired acoustic signals were plagued by feature deficiency and waveform distortion. The Anti-noise TEAS could directly record the tiny vibration signals of the vocal folds and the subtle muscular movement signals on the human larynx through contact sensing. Nevertheless, these mixed-mode signals lacked the resonance characteristics contributed by the oral cavity, tongue, pharynx, and other articulatory organs. Consequently, it might be difficult to effectively recognize the complete information encoded within these signals using only human hearing or traditional speech processing techniques. The Anti-noise TEAS was affixed to the larynx of a participant to record six mixed-mode fundamental acoustic signals (10 times each), including don’t worry (DW), help me (HM), hello world (HW), it’s OK (IO), and thank you (TY). For comparison, a commercial microphone was also employed under identical conditions to record the 6 acoustic signals, serving as a control group. Figure 3g exhibited the 6 acoustic signals recorded by the Anti-noise TEAS compared with the standard waveforms, while Fig. 3h showed a comparison between the 6 acoustic signals recorded by the microphone and the standard waveforms.
The acoustic signals recorded by the Anti-noise TEAS generally resembled the standard waveforms in contour, albeit with some differences in detail. In contrast, the acoustic signals recorded by the microphone closely resembled the standard waveforms in both general contour and detail. The average correlation between the recorded and the standard signals was analyzed based on the Pearson correlation coefficient. As exhibited in Fig. 3i, the correlation between the acoustic signals recorded by the Anti-noise TEAS and the standard signals was notably low, with an average coefficient of 0.2193, whereas that of the signals recorded by the microphone was as high as 0.8097. Thus, although the anti-noise structure and contact sensing mode of the Anti-noise TEAS enabled inhibition of environmental noise, the acquired mixed-mode signals suffered from serious feature deficiency and waveform distortion. Furthermore, the correlation varied considerably among the 6 acoustic signals recorded by the Anti-noise TEAS, with the highest around 0.3362 (for IO) and the lowest merely 0.0406 (for TY). Those differences might stem from the various proportions of semantic features embedded within the mixed-mode components (vocal fold vibration and muscle movement) and the resonance components (resonance generated by the articulatory organs). Specifically, when the speech signals comprised a higher proportion of mixed-mode components, the Anti-noise TEAS was better equipped to capture effective, acoustically recognizable features, thereby resulting in a higher correlation. However, these mixed-mode acoustic signals lacked sufficient recognizable features. 
Traditional speech processing techniques or human hearing perception were insufficient for effectively identifying the complete information embedded within these modality-deficient acoustic signals. To address this limitation, more advanced signal processing and analysis tools, such as DLMs, were required to achieve effective recognition of acoustic signals.
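
As a minimal sketch of the similarity analysis described above, the Pearson correlation coefficient between a recorded waveform and its reference can be computed as follows. The function name and the toy waveforms are illustrative assumptions rather than the authors' code, and real recordings would first need to be time-aligned and brought to equal length.

```python
import math

def pearson_correlation(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Covariance and variances (unnormalized; the 1/N factors cancel out).
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant signal")
    return cov / math.sqrt(var_x * var_y)

# Toy data (hypothetical): a reference tone and a distorted copy of it.
reference = [math.sin(2 * math.pi * 5 * t / 200) for t in range(200)]
distorted = [0.5 * s + 0.3 * math.sin(2 * math.pi * 13 * t / 200)
             for t, s in enumerate(reference)]
similarity = pearson_correlation(reference, distorted)
```

A coefficient near 1 indicates that the recorded contour tracks the reference closely, while values near 0 indicate that little of the reference waveform shape is preserved.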

Additionally, the mixed-mode acoustic signals of three participants were detected. Specifically, participant #1 (P#1, male), participant #2 (P#2, female), and participant #3 (P#3, male) were asked to utter identical phrases, including DW, HM, HW, IO, and TY, while their acoustic signals were recorded by the Anti-noise TEAS. Figure 3j exhibited representative waveforms of 6 acoustic signals recorded from the 3 participants using the Anti-noise TEAS. Although the recorded acoustic signals showed similarity in the overall waveforms, significant variability occurred in the waveform details and contour features. These differences contained a wealth of speech information, including individual specificities and unique voiceprints among different participants. Such information held significant potential for applications in semantic recognition, voiceprint identification, and biometric authentication. These acoustic signals were converted to frequency-domain spectra (Supplementary Fig. S9) by FFT. While the spectra of all 3 participants were concentrated between 50 Hz and 1000 Hz, within the fundamental frequency range of human vocal signals, the contours of the spectra exhibited notable distinctions among them. For instance, the first resonance frequencies of the 6 acoustic signals for P#2 were distributed within the range of 250–300 Hz, with a mean first resonance frequency of ~273 Hz. Meanwhile, the first resonance frequencies of P#1 and P#3 were distributed within the range of 100–200 Hz, with mean first resonance frequencies of ~163 Hz and ~166 Hz, respectively (Supplementary Fig. S10). The acoustic signals of these 3 participants were converted into spectrograms by STFT. While the spectrograms of all participants were mainly concentrated below 1 kHz, there was significant variability in the distribution of formants and features (Fig. 3k), where the frequency-domain distributions for P#2 were generally higher than those for P#1 and P#3. 
Hence, the Anti-noise TEAS could effectively capture the fundamental acoustic signals of various participants, while the waveforms, spectrums, and spectrograms exhibited distinct individual variabilities. This indicated that the Anti-noise TEAS was capable of recording the content of the fundamental acoustic signals, which maintained the rich speech recognizable features, such as individual specificity and voiceprint. Integrating these diverse recognizable features with AI models would facilitate the potential applications and scalability of the Anti-noise TEAS in semantic recognition, voiceprint identification, and biometric authentication.

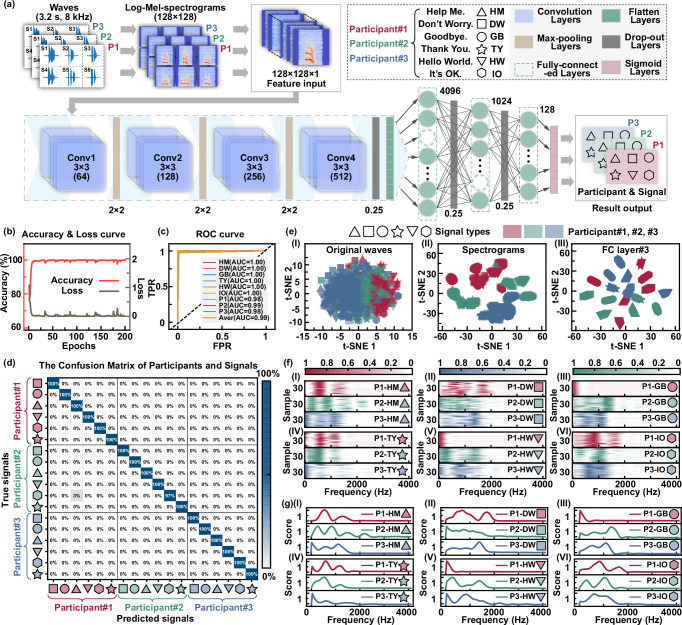

DLM was a powerful tool for feature extraction, data analysis, and target classification, which could efficiently handle speech tasks such as semantic recognition, biometric authentication, and emotion analysis. Different from human hearing and traditional speech processing techniques, DLM exhibited a robust analytical capability for the recognizable features within speech signals, even in cases of speech signals with feature deficiency and waveform distortion. This capability was highly compatible with the Anti-noise TEAS, which focused on the detection of laryngeal mixed-mode acoustic signals. Thus, a CNN-based DLM for multi-label ASR was constructed to identify the semantics and individuals of various acoustic signals recorded by the Anti-noise TEAS. In addition, the recognition performance of the DLM for multi-label ASR was evaluated by various indicators, such as the confusion matrix and t-SNE clustering. The gradient-weighted class activation mapping (Grad-CAM) was also employed to analyze the activated regions of different spectrograms across 3 participants.

A total of 1244 samples (704 for the training dataset and 540 for the test dataset) of 6 different types of acoustic signals from 3 participants were collected using the Anti-noise TEAS, followed by preprocessing of the raw data. Specifically, to ensure consistency among the different acoustic signals in the time dimension, the acoustic signals of various lengths were unified to 3.2 s through truncation or padding. Additionally, all signals were normalized to mitigate undesirable effects stemming from anomalous samples during the model training process. A feature extraction approach similar to Mel-frequency cepstral coefficients (MFCCs) was employed to transform the acoustic signals into spectrogram features, which were more suitable for DLM training. As a time-varying feature of acoustic signals, MFCCs have been widely used in speech recognition, speech synthesis, etc. However, the MFCC transformation of acoustic signals required a discrete cosine transform (DCT), which projected spectral energy onto a new orthogonal basis that might not fully maintain the relative localization of the original signals25. In this work, to avoid this limitation, the DCT was omitted from the MFCC-style transformation. Instead, the logarithm of the Mel-spectrogram was used directly as the spectrogram feature of the acoustic signals. Consequently, by employing a window of 800 ms and a step of 200 ms, a 128 × 128-dimensional Log-Mel-spectrogram was obtained by segmenting the speech waveform signals into frames, applying windowing, and performing STFT, Mel-filtering, and logarithmic operations (Supplementary Fig. S11).
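
The first preprocessing steps described above (length unification, normalization, and the Mel-scale band layout that precedes the logarithm) can be sketched as follows. This is a partial illustration that omits the STFT itself. The 8 kHz sampling rate, the peak-normalization scheme, the 0–4000 Hz band range, and the HTK-style Mel formula are all assumptions, since the source does not specify them.

```python
import math

def fix_length(signal, sample_rate, duration_s=3.2):
    """Truncate or zero-pad a waveform to a fixed duration (3.2 s in the text)."""
    target = int(round(sample_rate * duration_s))
    if len(signal) >= target:
        return list(signal[:target])
    return list(signal) + [0.0] * (target - len(signal))

def peak_normalize(signal):
    """Scale so the largest absolute value is 1 (assumed normalization scheme)."""
    peak = max((abs(s) for s in signal), default=0.0)
    if peak == 0.0:
        return list(signal)
    return [s / peak for s in signal]

def hz_to_mel(f_hz):
    """HTK-style Hz-to-Mel conversion (one common convention)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_band_centers(n_mels, f_min, f_max):
    """Center frequencies (Hz) of n_mels bands equally spaced on the Mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_mels)]

def log_compress(power, eps=1e-10):
    """Log step applied to each Mel-band energy; no DCT follows it."""
    return 10.0 * math.log10(max(power, eps))

SAMPLE_RATE = 8000  # hypothetical value, not taken from the paper
clip = peak_normalize(fix_length([0.2, -0.4, 0.1], SAMPLE_RATE))
centers = mel_band_centers(128, 0.0, 4000.0)
```

Because the DCT is skipped, each of the 128 rows of the resulting feature map still corresponds to a contiguous frequency band, which is what later allows attention maps to be read directly in terms of frequency.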

The DLM for multi-label ASR consisted of convolution layers, max-pooling layers, drop-out layers, flatten layers, fully-connected layers, and sigmoid layers (Fig. 4a). By feeding the Log-Mel-spectrograms into the DLM for multi-label ASR, it could concurrently recognize semantic and individual information within the acoustic signals. The DLM contained four convolution layers with 64, 128, 256, and 512 channels, respectively. The 3 × 3 convolution kernels, batch normalization, and activation functions of each convolution layer were employed to process the feature spectrograms of the Anti-noise TEAS, allowing for the extraction and retention of deep nonlinear acoustic features from shallow to deep layers. The max-pooling layers, which were situated between the convolution layers, employed a 2 × 2 filter to execute a down-sampling operation on the feature spectrograms generated by the preceding convolution layers, thereby diminishing the model parameters. The dropout layer with a coefficient of 0.25 was employed to randomly discard some neuron outputs of the model, which could further prevent overfitting. Then, the multi-dimensional feature spectrograms from the convolution layers were flattened into a one-dimensional feature sequence by the flatten layer and fed into the fully-connected layers. The fully-connected layers consisted of a three-layer structure with node numbers of 4096, 1024, and 128, respectively. This architecture formed a fully-connected neural network capable of extracting and reducing the dimensionality of the feature sequence, while retaining the most significant feature components within the acoustic signals. Similarly, between adjacent fully-connected layers, some neuron nodes were randomly dropped out by a dropout layer (0.25) to enhance the final generalization ability of the DLM. 
The sigmoid activation function was employed to facilitate the multi-label classification of different signals, enabling the identification of various semantic contents, individual objects, and other diversified label classifications simultaneously (Supplementary Fig. S12). The detailed parameters of the DLM for multi-label ASR were exhibited in Supplementary Table S1.
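
To illustrate how a sigmoid output layer supports simultaneous semantic and individual labels, the following sketch decodes a nine-value output vector into one semantic class and one participant. The output layout (six semantic scores followed by three participant scores) and the per-group argmax decoding rule are assumptions made for illustration; the source does not state how the outputs were ordered or thresholded.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def decode_multilabel(logits, n_semantic=6, n_participants=3):
    """Split independent sigmoid scores into two label groups and pick the
    highest-scoring entry in each group (assumed decoding rule)."""
    if len(logits) != n_semantic + n_participants:
        raise ValueError("unexpected number of output units")
    probs = [sigmoid(z) for z in logits]
    semantic_probs = probs[:n_semantic]
    participant_probs = probs[n_semantic:]
    semantic_idx = max(range(n_semantic), key=semantic_probs.__getitem__)
    participant_idx = max(range(n_participants), key=participant_probs.__getitem__)
    return semantic_idx, participant_idx, probs

# Hypothetical raw outputs for one sample.
example_logits = [-3.0, 2.5, -1.0, -4.0, 0.2, -2.0, -2.0, 3.0, -1.0]
semantic_idx, participant_idx, probs = decode_multilabel(example_logits)
```

Unlike a softmax over 18 combined classes, each sigmoid unit is scored independently, so further label groups (for example emotion) could be appended without redefining the existing ones.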

Fig. 4. The CNN-based DLM for multi-label ASR.

a Schematic of the structure of the CNN-based DLM for multi-label ASR. The original acoustic signals of the Anti-noise TEAS were truncated, padded, normalized, and converted into 128 × 128-dimensional Log-Mel-spectrograms. The DLM for multi-label ASR consisted of convolution layers, max-pooling layers, drop-out layers, flatten layers, fully-connected layers, and sigmoid layers. By inputting the Log-Mel-spectrograms of 6 different acoustic signals from three different participants into the model, it was possible to recognize the semantic content of the speech signals and individual objects simultaneously. b Curves of accuracy and loss function value vs the number of epochs of the DLM for multi-label ASR during training. c Receiver operating characteristic (ROC) curves and area under the ROC curve (AUC) for classification of six different acoustic signals and three participants using the DLM for multi-label ASR. d Confusion matrix results using the DLM for multi-label ASR for semantic and individual recognition. Six different symbols represented different acoustic content categories, and three different colors represented different participants. e t-SNE of (I) original speech waveforms, (II) Log-Mel-spectrogram feature maps, and (III) output feature values of the third fully-connected layer. Among them, different symbols represented different semantic content categories, and different colors represented different participants. The sample size was 1244. f Heatmaps of gradient-weighted class activation mapping (Grad-CAM) attention score distribution vs frequency for the DLM across (I–IV) six different semantic content categories, while three different colors represented three different participants. g Curves of Grad-CAM attention score distribution vs frequency for the DLM across (I–IV) six different semantic content categories, while three different colors represented three different participants.

To mitigate the impact of different sample partitioning schemes, the 10-fold cross-validation method was initially employed to perform a pre-validation and hyperparameter selection of the model structure (Supplementary Fig. S13). Subsequently, the training of the DLM for multi-label ASR was performed using all samples in the training dataset. As the training epochs increased, both the accuracy and loss function values converged rapidly (Fig. 4b). Specifically, the accuracy of the model presented a rapid boost as the epochs increased, stabilizing only after ~10 epochs, and consistently remaining above 99% overall. Conversely, the loss function value decreased rapidly, stabilizing after ~10 epochs and remaining below 0.05 overall. These observations indicated that the DLM for multi-label ASR exhibited a desirable convergence speed during the training process, which might be attributed to its structure design and hyperparameter selection. In addition, this also demonstrated that the acoustic signals of the human larynx detected with the Anti-noise TEAS contained a large number of semantic and individual features. Those features could be effectively extracted and retained throughout the training process of the DLM, ultimately enhancing the efficiency and speed of feature extraction.

To validate the feasibility of the DLM for multi-label ASR in acoustic recognition tasks, the samples of the test dataset were fed into the model to predict the multi-label classification. Subsequently, receiver operating characteristic (ROC) curves (Fig. 4c) and confusion matrices (Fig. 4d) were generated. The confusion matrix showed that the DLM for multi-label ASR possessed high recognition accuracy, with 99.8% accuracy for semantic recognition, 99.8% for individual recognition, and 99.8% overall accuracy. The model predicted the semantic and individual labels of the samples separately, rather than directly predicting a combined semantic + individual label. This multi-label classification approach was more suitable for the classification of information-rich samples such as acoustic signals, which enabled a more comprehensive characterization of acoustic signals, including semantic information, individual identity, and emotion. Three separate DLMs for ASR were also established using data from each participant. The accuracy curves, loss curves, and confusion matrices during the training process of the models were presented in Supplementary Figs. S14–S16, while the detailed parameters of the models could be found in Supplementary Tables S2–S4. The curves exhibited fast convergence, with an overall accuracy close to 100% and loss curves steadily approaching 0. The confusion matrices indicated that the acoustic recognition accuracy of the DLMs for each participant reached 98.3%, 100%, and 99.2%, respectively, which demonstrated the effectiveness of acoustic signal classification of individual models. To further evaluate the recognition performance of the DLM for multi-label ASR, the ROC curves for the nine label classifications were plotted from the true positive rate (TPR) and false positive rate (FPR) of the prediction results. Additionally, the area under the ROC curve (AUC) was calculated for each classification. 
From the ROC curves, the AUC values of the model were close to or equal to 1 on both the semantic and the individual classification tasks, with an average AUC value of 0.97, which indicated the excellent classification performance of the DLM for multi-label ASR.
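
For readers unfamiliar with the metric, a minimal sketch of how ROC points and the AUC can be derived for a single label from binary ground truth and sigmoid scores is given below. The function names and the toy data are illustrative assumptions; the AUC is computed through its rank interpretation (the probability that a random positive sample scores above a random negative one), which equals the area under the ROC curve.

```python
def roc_points(labels, scores):
    """(FPR, TPR) pairs obtained by sweeping the threshold over each distinct score."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points

def roc_auc(labels, scores):
    """AUC via pairwise comparison of positive and negative scores (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy one-vs-rest example for a single label (hypothetical scores).
toy_labels = [1, 1, 0, 0, 1, 0]
toy_scores = [0.9, 0.8, 0.7, 0.2, 0.6, 0.1]
toy_auc = roc_auc(toy_labels, toy_scores)
```

In a multi-label setting this calculation would be repeated once per output unit, giving one curve and one AUC value for each of the nine labels.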

To visualize the extraction capability of the DLM for multi-label ASR on critical features of the acoustic signals, the t-SNE algorithm was used to cluster the original acoustic waveforms, the feature spectrograms, and the output feature vectors of the third fully-connected layer, respectively. In the t-SNE cluster maps, various symbols represented six different semantic contents, while distinct colors denoted three different participants. For the original acoustic waveform signals (Fig. 4e-I), the samples from different semantic contents and participants were intermingled and exhibited minimal demarcation and clustering. Notably, the silhouette coefficient score of the original acoustic waveform signals was merely −0.1862, indicating a lack of clear separation and clustering within the samples (Supplementary Fig. S17). For the Log-Mel-spectrograms (Fig. 4e-II), the samples from different participants presented obvious separation and clustering, with an improved silhouette coefficient score of 0.5382. However, there were still obvious regional overlaps between samples of different semantic contents, and the clustering effect was not sufficient to distinguish different types of samples effectively. Furthermore, after feeding the Log-Mel-spectrograms into the model, the output feature values of the third fully-connected layer were extracted and subjected to t-SNE clustering. The clustering efficacy of the output feature values was notable, and there were clearer clustering patterns among different semantic contents and distinct participants (Fig. 4e-III). Minimal overlap was observed between acoustic samples from different categories, resulting in a further improvement of the silhouette coefficient to 0.7841. During the operation of the DLM for multi-label ASR, the convolutional and fully-connected layers were capable of efficiently extracting and retaining the most essential features in the acoustic signals of the Anti-noise TEAS. 
This capability would facilitate the extraction of the recognizable features within acoustic signals, which was helpful in compensating for the lack of semantic features in the acoustic signals.
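
The silhouette coefficient quoted above can be sketched as follows. This is a minimal implementation that assumes Euclidean distance and operates on arbitrary feature vectors or two-dimensional embeddings; the source does not state which representation or distance metric was scored, and the toy points are hypothetical.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient over all samples (Euclidean distance)."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("at least two clusters are required")
    total = 0.0
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            continue  # a singleton cluster contributes a silhouette of 0
        # a: mean distance to the other members of the sample's own cluster
        a = sum(math.dist(points[i], points[j]) for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        if denom > 0:
            total += (b - a) / denom
    return total / len(points)

# Toy 2-D embedding (hypothetical): two tight, well-separated groups.
toy_points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
separated = silhouette_score(toy_points, [0, 0, 1, 1])
intermingled = silhouette_score(toy_points, [0, 1, 0, 1])
```

Scores approach 1 when samples sit much closer to their own class than to any other, fall toward 0 when classes overlap, and become negative when samples are on average closer to another class, which matches the progression from −0.1862 to 0.7841 reported in the text.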

DLMs have exhibited desirable performance in ASR owing to their robust computational capabilities, yet the interpretability of deep learning operations remains a challenge. The internal mechanisms and decision-making processes of DLMs were often opaque, making it difficult to discern how the model precisely reached a recognition conclusion. To address this issue, the Grad-CAM, as a neural network visualization technique, has undergone continuous development and garnered widespread acceptance in deep learning. The Grad-CAM aided in deciphering the decision inference process of the DLM, which could enhance its interpretability without altering the architecture of the model or compromising its accuracy. In this work, the acoustic signals of the test dataset were fed into the DLM for multi-label ASR, and the Grad-CAM was utilized to trace back the decision-making outcomes of the model, aiming to assess the attention scores of different regions of the input feature spectrogram. The representative Grad-CAM heatmaps of 6 different acoustic signals from 3 different participants were exhibited in Supplementary Figs. S18–S20. In the normalized heatmap of the Grad-CAM, regions with higher scores indicated that the model allocated greater attention to the features in those areas, which signified that the important information embedded within those regions contributed to the recognition of acoustic signals. Conversely, regions with lower scores denoted that the model disregarded redundant and irrelevant information within those regions during the decision-making process. After interpolation, superimposition, summation, and normalization, the average heatmaps of the Grad-CAM for different categories of acoustic signals were obtained (Supplementary Figs. S21–S23). A comparison of the average Grad-CAM heatmaps with the input Log-Mel-spectrograms showed that the model’s attention scores were concentrated in the low- and mid-frequency region (below 1500 Hz). 
This observation indicated that these frequency ranges contained significant features crucial for semantic and individual recognition, which was consistent with the frequency ranges of the fundamental acoustic signals detected by the Anti-noise TEAS. Furthermore, the Grad-CAM heatmaps were averaged along the time dimension, retaining only compressed one-dimensional heatmaps of the average score value across frequencies. Subsequently, these compressed one-dimensional heatmaps from all samples were stacked to generate a frequency heatmap, which depicted the distribution of the Grad-CAM scores across frequencies (Supplementary Fig. S24). Figure 4f exhibited the frequency-heatmaps of the Grad-CAM for the 6 acoustic signals from 3 participants (different colors). Darker colors within the heatmap signified higher attention score values of the corresponding frequency regions, indicating the model’s dependence on these frequencies in the decision-making process. While the focused frequency regions of the model varied across different acoustic signals, the regions with the highest scores predominantly fell within the middle and low frequency ranges of the Log-Mel-spectrograms. In addition, the frequency-heatmaps of the Grad-CAM for samples of the same type (30 samples) were summed and averaged to obtain curves indicating the relationship between the model’s attention score values and frequency distribution. The region of highest attention scores for the model varied for different categories of acoustic signals (Fig. 4g). The highest attention scores for most categories of acoustic signals were located below 1000 Hz, while a few other categories were located in the range of 1000–1500 Hz. This demonstrated that, during ASR, the features of highest concern to the DLM for multi-label ASR were mainly concentrated in the middle and low frequency ranges below 1500 Hz. 
Such findings further confirmed the efficacy of the Anti-noise TEAS in capturing fundamental acoustic signals. The Anti-noise TEAS was able to adequately record most of the key information in the speech signals, and had sufficient differentiation of acoustic characteristics, such as different semantic contents and individual objects.
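
The compression of Grad-CAM heatmaps into attention-versus-frequency curves described above can be sketched as follows. The sketch assumes each heatmap is a list of rows indexed by frequency bin with columns indexed by time frame, and that the averaged curve is rescaled by its maximum; both the orientation and the rescaling are illustrative assumptions, and the tiny heatmaps are hypothetical.

```python
def frequency_profile(heatmap):
    """Average a (frequency x time) heatmap along time: one score per frequency bin."""
    return [sum(row) / len(row) for row in heatmap]

def stack_profiles(heatmaps):
    """Stack per-sample profiles into a (sample x frequency) frequency-heatmap."""
    return [frequency_profile(h) for h in heatmaps]

def mean_profile(profiles):
    """Average stacked profiles of one category and rescale to a maximum of 1."""
    n = len(profiles)
    mean = [sum(column) / n for column in zip(*profiles)]
    peak = max(mean)
    return [v / peak for v in mean] if peak > 0 else mean

# Two hypothetical 3-bin x 2-frame heatmaps from the same category.
toy_heatmaps = [
    [[0.0, 0.0], [1.0, 3.0], [0.0, 2.0]],
    [[0.0, 0.0], [2.0, 2.0], [1.0, 1.0]],
]
stacked = stack_profiles(toy_heatmaps)
curve = mean_profile(stacked)
peak_bin = max(range(len(curve)), key=curve.__getitem__)
```

Mapping `peak_bin` back to hertz requires the Mel-band center frequencies used when the Log-Mel-spectrogram was built, which is how a statement such as "highest attention below 1000 Hz" can be read off the curve.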

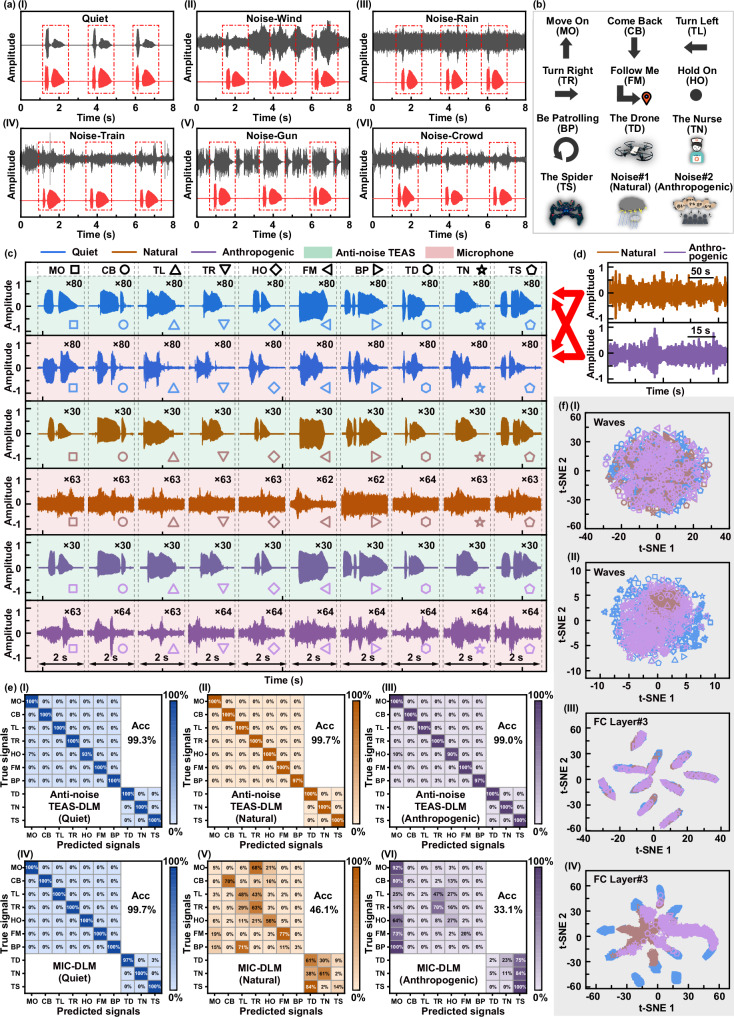

A conventional microphone encountered significant interference in noisy environments, which would hinder efficient and accurate HMVI. Although filtering algorithms and traditional speech processing techniques could solve the problem of noise interference to a certain extent, their efficacy largely depended on the prior recognition of noise categories (i.e., the availability of noise samples beforehand) to optimize signal effectiveness. In the case of unknown noisy environments, traditional speech processing techniques often failed to effectively distinguish and recognize the acoustic signals. Owing to its excellent anti-noise capability, the Anti-noise TEAS could collect fundamental acoustic signals through contact sensing in noisy environments, which could be combined with DLM to achieve semantic recognition and intelligent interaction. With the optimal structure and contact sensing, the Anti-noise TEAS naturally mitigated noise interference during the sensing of mixed-mode acoustic signals. To evaluate the anti-noise capability of the Anti-noise TEAS, the sensor was employed to detect the acoustic signal (help me) in various environments, such as quiet, windy, and crowded environments, and was compared with a commercial microphone. Across various noise scenarios, the acoustic signal waveforms recorded by the Anti-noise TEAS exhibited remarkable consistency with those recorded in the quiet environment (Fig. 5a). Conversely, the acoustic signals detected by the microphone were substantially obscured by ambient noise, which made the contour characteristics of the acoustic signals indistinguishable. The acoustic signals were transformed into spectrograms via STFT. Similarly, the spectrograms obtained by the Anti-noise TEAS exhibited higher consistency with those from the quiet environment, while those from the microphone were significantly impacted by ambient noise, which manifested as pronounced background noise and distortion (Supplementary Fig. S25). 
Therefore, compared with the microphone, the Anti-noise TEAS could effectively detect the acoustic signals in noisy environments.

Fig. 5. Anti-noise and speech recognition performance of the Anti-noise TEAS-DLM.

a Acoustic signals of help me in (I) a quiet environment, and in different noisy environments, such as (II) gusty wind, (III) heavy rain, (IV) train noise, (V) gunshot noise, and (VI) crowd noise. The red curves were the acoustic signals recorded by the Anti-noise TEAS, and the black curves were the acoustic signals captured by the microphone. b Symbols of the seven action commands, three object commands, and two noise environments involved in the human-machine collaborative tasks. c Waveforms of the seven action commands, three object commands captured by the Anti-noise TEAS and microphone in quiet, natural noise, and anthropogenic noise environments, respectively. Among them, different symbols represented different command signals, while different color backgrounds represented the signals of the Anti-noise TEAS and the microphone, respectively. d Waveforms of natural noise and anthropogenic noise. e Confusion matrices of command signal recognition results of the Anti-noise TEAS-DLM in (I) quiet, (II) natural noise, and (III) anthropogenic noise environment. Confusion matrices of command signal recognition results of the MIC-DLM in (IV) quiet, (V) natural noise, and (VI) anthropogenic noise environment. f T-SNE cluster plots of (I–II) original waveforms and (III–IV) output fully-connected layers of the Anti-noise TEAS-DLM (sample size is 1400) and the MIC-DLM (sample size is 2067). Among them, different symbols represented various command categories, and different colors represented various environments.

The advantages of the Anti-noise TEAS for acoustic signal detection in noisy environments were discussed comprehensively in Supplementary Note S4. First, the Anti-noise TEAS was optimized through structure design and parameter selection to achieve physical denoising, which was versatile for buffering unknown types of noise in open and harsh noise scenarios. In contrast, algorithmic denoising or AI-model denoising still struggled with unknown noise interference in variable open scenarios, and imposed certain requirements on the computing capacity of hardware devices. Second, as a flexible contact acoustic sensor, the Anti-noise TEAS could directly acquire the acoustic signals from the mixed-mode weak vibration of the larynx. The Anti-noise TEAS could selectively buffer the interference of the noisy environments, while remaining sensitive enough to record target acoustic signals from the larynx, due to its anisotropic sensing characteristics. Conventional rigid microphones were mainly designed to detect airborne acoustic signals, where the transduction of the target signal was not inherently different from that of the noise signals, and thus the noise could not be selectively buffered. Moreover, the Anti-noise TEAS was also applicable to patients with laryngeal dysfunction, by detecting the vibrations of their laryngeal muscles. Third, the Anti-noise TEAS possessed a unique air-layer structure, where the middle air layer was compressed to change the distance between the two friction layers during the contact sensing. Due to the low modulus of elasticity of the air layer, the inner diaphragm was able to highly compress it during the deformation process, and generate triboelectrification and electrostatic induction with the outer friction layer, thus responding to the acoustic signals sensitively. 
The interference vibrations of external ambient noises mainly affected the deformation of the outer friction layer and the compression of the middle air layer. The Anti-noise TEAS could utilize the inner diaphragm to sense the acoustic vibrations of the larynx, while the outer friction layer isolated the interference of ambient noise. This selective sensing of target throat signals over external noise signals allowed the sensor to resist the noise interference without reducing the detection sensitivity. In addition, due to the low modulus of elasticity of the middle air layer, the inner diaphragm and outer friction layer were in low-resistance free deformation states. Thus, the Anti-noise TEAS demonstrated a high degree of sensitivity to weak acoustic signals of laryngeal vibrations, merely by being comfortably attached to the surface of the larynx. In contrast, a conventional flexible PVDF acoustic sensor recorded a vibration signal from the larynx through the compressive deformation-induced polarization of the PVDF layer. Differing from the Anti-noise TEAS, PVDF piezoelectric acoustic sensors did not possess the anisotropic sensing characteristics. With the adjustment of the PVDF layer, the detection sensitivity of the PVDF acoustic sensor and the resistance to ambient noise were simultaneously diminished. The PVDF piezoelectric sensors also required a high pre-pressure to be attached tightly to the throat surface to achieve sufficient PVDF material deformation for acoustic sensing. Conventional flexible piezoresistive acoustic sensors mainly relied on external pressure to induce a change in contact resistance, typically exhibiting lower dynamic mechanical detection sensitivity. The piezoresistive acoustic sensors also did not possess anisotropic sensing characteristics, which made selective noise buffering infeasible.

The feasibility and accuracy of the Anti-noise TEAS-DLM were also validated by complex human-machine collaborative tasks in noisy environments. In the complex human-machine collaboration (Fig. 5b), there were seven types of action commands, including move on (MO), come back (CB), turn left (TL), turn right (TR), follow me (FM), hold on (HO), and be patrolling (BP), and three object commands, comprising the drone (TD), the nurse (TN), and the spider (TS). There were three types of environments in the complex scenarios: quiet, natural noise (such as rain or thunder), and anthropogenic noise (such as crowd or gunshot sounds). These ten types of command signals were collected by both the Anti-noise TEAS and the microphone in each environment. Specifically, the Anti-noise TEAS recorded 800 sets of command signals in the quiet environment, and 300 sets each in the natural and anthropogenic noise environments. The microphone detected 800 sets of command signals in the quiet environment, 629 sets in the natural noise environment, and 638 sets in the anthropogenic noise environment. Figure 5c exhibited the representative command signals obtained by both the Anti-noise TEAS and the microphone across three distinct environments. The waveform contours of the command signals recorded by the Anti-noise TEAS exhibited notable consistency and repeatability across different environments, and were less affected by the interference of environmental noise. Conversely, the waveform contours of the command signals detected by the microphone in quiet and noisy environments displayed considerable variability, indicating significant interference and distortion. Meanwhile, as shown in the spectrograms (Supplementary Figs. S26 and S27), the command signals acquired by the Anti-noise TEAS in different environments possessed better consistency and repeatability than those of the microphone.

To implement complex human-machine collaborative tasks with the Anti-noise TEAS-DLM in noisy environments, a CNN-based DLM for ASR was constructed. Analogous to the construction of the DLM for multi-label ASR, the command signals were truncated, padded, and normalized to eliminate undesirable effects of anomalous samples during training. After frame-splitting, windowing, STFT, Mel filtering, and logarithmic operations, the command signals were converted into 128 × 128-dimensional Log-Mel-spectrograms, which were more suitable for DLM training. Furthermore, the command samples collected by the Anti-noise TEAS in the quiet environment were divided into a training dataset and a test dataset at a 5:3 ratio. Specifically, the training dataset contained 10 types of command samples, with 50 sets of each signal type, totaling 500 sets of samples. The test dataset included 10 types of command samples, with 30 sets of each signal type, totaling 300 sets of samples. The samples of the training dataset were used to train the CNN-based DLM (Supplementary Fig. S28), thereby obtaining the DLM for ASR. Notably, in this work, the action and object commands were treated as two different types of signals. Thus, the classification outputs of the model were constrained accordingly, i.e., action commands could only be recognized as action labels, while object commands could only be recognized as object labels (Supplementary Figs. S29 and S30). For comparison with the Anti-noise TEAS-DLM, a microphone coupled with the DLM for ASR (MIC-DLM) was also constructed. This model was trained on the training dataset of acoustic signal samples collected by the microphone in the quiet environment (Supplementary Fig. S31). The detailed parameters of the Anti-noise TEAS-DLM and MIC-DLM models were listed in Supplementary Tables S5 and S6.
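The preprocessing chain described here (truncation or padding, normalization, framing, windowing, STFT, Mel filtering, and logarithm) can be sketched in a few lines of NumPy. This is only an illustrative sketch and not the authors' implementation: the sampling rate, FFT size, hop length, Hann window, Mel-scale formula, and peak normalization are all assumptions chosen so that the output is a 128 × 128 array.

```python
import numpy as np

def hz_to_mel(f):
    # Common HTK-style Mel formula (an assumption; the paper does not specify one).
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sr):
    """Triangular Mel filters, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    bank = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (fft_freqs - lo) / (mid - lo)
        falling = (hi - fft_freqs) / (hi - mid)
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def log_mel_spectrogram(signal, sr=16000, n_fft=2048, hop=512,
                        n_mels=128, n_frames=128):
    """Return an (n_mels, n_frames) Log-Mel-spectrogram. All defaults are hypothetical."""
    x = np.asarray(signal, dtype=float)
    # Truncate or zero-pad so that exactly n_frames frames fit.
    target_len = n_fft + hop * (n_frames - 1)
    x = np.pad(x[:target_len], (0, max(0, target_len - x.size)))
    # Peak-normalize the amplitude.
    x = x / (np.max(np.abs(x)) + 1e-12)
    # Frame-splitting and windowing.
    starts = hop * np.arange(n_frames)
    frames = x[starts[:, None] + np.arange(n_fft)[None, :]] * np.hanning(n_fft)
    # STFT power spectrum, Mel filtering, logarithm.
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    mel_energy = mel_filterbank(n_mels, n_fft, sr) @ power.T
    return np.log(mel_energy + 1e-10)
```

Calling `log_mel_spectrogram(waveform)` on a one-dimensional waveform of any length returns a fixed-size 128 × 128 array, which is what allows samples of different durations to share one CNN input shape.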

The test datasets of the acoustic signals recorded by the Anti-noise TEAS and the microphone in different environments were fed into the corresponding DLMs. The recognition results were obtained, and the corresponding confusion matrices were plotted (Fig. 5e). In the quiet environment, both the Anti-noise TEAS-DLM and the MIC-DLM demonstrated remarkable speech recognition accuracy, with overall command recognition accuracies of 99.3% and 99.7%, respectively. In the natural and anthropogenic noise environments, the accuracy of the Anti-noise TEAS-DLM remained essentially unaffected, at 99.7% and 99.0%, respectively. Conversely, the accuracy of the MIC-DLM declined notably due to noise interference, decreasing to 46.1% and 33.1%, respectively. Compared with the MIC-DLM, the Anti-noise TEAS-DLM preserved desirable command recognition efficacy across the various environments. Such anti-noise capability was pivotal for facilitating human-machine collaborative tasks in noisy scenarios. Additionally, ROC curves for the recognition of the 10 commands were plotted for both the Anti-noise TEAS-DLM and the MIC-DLM across the various environments, and the AUC value of each ROC curve was calculated. For the Anti-noise TEAS-DLM (Supplementary Figs. S32–S34), the average AUC value of the object commands in the quiet environment reached 1.00, while that of the action commands was 0.99. Similarly, the average AUC values in the natural and anthropogenic noise environments were all close to 1.00, which indicated the desirable recognition performance of the Anti-noise TEAS-DLM. For the MIC-DLM (Supplementary Figs. S35–S37), the average AUC values of the object and action commands in the quiet environment both reached 1.00. However, the average AUC values of the object and action commands were reduced to 0.59 and 0.65 in the natural noise environment, and to 0.60 and 0.58 in the anthropogenic noise environment. These results indicated the inferior recognition performance of the MIC-DLM, which could not effectively distinguish command signals in noisy environments.
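For readers less familiar with the two metrics used here, the following sketch shows how an overall accuracy is read from a confusion matrix and how a one-vs-rest AUC can be computed from classifier scores. It is a generic illustration, not the authors' evaluation code; the confusion matrix is hypothetical (two arbitrarily placed errors among 300 test samples), and the function names are invented for this example.

```python
import numpy as np

def overall_accuracy(confusion):
    """Fraction of samples on the diagonal (rows: true label, columns: predicted)."""
    confusion = np.asarray(confusion, dtype=float)
    return np.trace(confusion) / confusion.sum()

def one_vs_rest_auc(scores, labels, positive_class):
    """AUC as the probability that a positive sample outscores a negative one.

    Ties count as one half, which equals the area under the ROC curve.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    pos = scores[labels == positive_class]
    neg = scores[labels != positive_class]
    diff = pos[:, None] - neg[None, :]
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (pos.size * neg.size)

# Hypothetical 10-class confusion matrix: 30 test samples per class,
# with two misclassified samples placed arbitrarily.
confusion = np.eye(10) * 30
confusion[0, 0], confusion[0, 1] = 29, 1
confusion[5, 5], confusion[5, 6] = 29, 1
accuracy = overall_accuracy(confusion)  # 298 correct out of 300
```

An AUC of 1.00 means every sample of the target command scored above every other sample, whereas a value near 0.5, as for the MIC-DLM in noise, means the scores carry almost no ranking information.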

To visualize the command recognition capability of the Anti-noise TEAS-DLM and the MIC-DLM, t-SNE cluster maps were generated at several stages, including the original speech waveform signals, the feature spectrograms, the fourth convolution layers, and the third fully-connected layers. The silhouette coefficients of the different t-SNE cluster maps were also calculated (Supplementary Figs. S38 and S39). In the t-SNE cluster maps, ten different symbols represented the ten types of command signals, while three different colors corresponded to the three types of environments. As presented in Fig. 5f-I, II, the t-SNE cluster maps of the original waveform signals collected by the Anti-noise TEAS and the microphone appeared largely mixed, without distinct demarcation or clustering. The silhouette coefficients in the quiet, natural noise, and anthropogenic noise environments were −0.1244, −0.0715, and −0.1204 for the Anti-noise TEAS and −0.1609, −0.1060, and −0.1122 for the microphone, respectively. Upon transforming the original waveforms into Log-Mel-spectrograms, the t-SNE cluster maps of the Anti-noise TEAS-DLM exhibited partial separation and clustering (Supplementary Fig. S40). The silhouette coefficients improved to 0.6062, 0.7238, and 0.6644, respectively, which demonstrated the effectiveness of feature extraction within the Anti-noise TEAS-DLM. After the Log-Mel-spectrogram transformation, the t-SNE cluster maps of the MIC-DLM showed clustering of samples by environment, but samples of different command types still overlapped (Supplementary Fig. S41). The silhouette coefficients were −0.0080, 0.2852, and 0.0134, respectively, which indicated that the feature extraction of the MIC-DLM could effectively differentiate environmental information but not command types. Further, the test datasets of the Anti-noise TEAS-DLM and the MIC-DLM were fed into the corresponding DLMs, and the output values of the fourth convolution layer and the third fully-connected layer were extracted for t-SNE clustering. The t-SNE cluster maps of the fourth convolution layer of the Anti-noise TEAS-DLM exhibited a significant clustering effect, which could enhance the discrimination of command types across various environments. However, the clustering effect of the fourth convolution layer of the MIC-DLM showed only marginal improvement in the quiet environment, while the clustering remained poor in the natural and anthropogenic noise environments. Additionally, the t-SNE cluster maps of the third fully-connected layer of the Anti-noise TEAS-DLM exhibited robust clustering effects (Fig. 5f-III), with silhouette coefficients of 0.7096, 0.6859, and 0.6686, respectively. The same types of command signals from the three different environments were clustered together, indicating that the clustering effect of the Anti-noise TEAS-DLM was not affected by environmental noise. The t-SNE cluster maps of the third fully-connected layer of the MIC-DLM showed clearer clustering in the quiet environment (Fig. 5f-IV), with the silhouette coefficient improving to 0.7960. However, the clustering of the MIC-DLM in noisy environments remained poor and displayed significant overlap, with silhouette coefficients of only −0.0010 and −0.1149. This indicated that the microphone was susceptible to environmental noise in complex scenarios, which led to a notable absence of discernible signal features. Therefore, compared with the MIC-DLM, the Anti-noise TEAS-DLM possessed desirable noise immunity and could effectively differentiate command signals in various noisy environments.
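The silhouette coefficient quoted throughout this comparison can be illustrated with a short NumPy sketch. It follows the standard definition, s = (b − a) / max(a, b) averaged over samples, where a is the mean distance to points with the same label and b is the smallest mean distance to points of any other label. The Euclidean metric and the toy coordinates are assumptions for illustration; they are not the embeddings from the paper.

```python
import numpy as np

def silhouette_coefficient(points, labels):
    """Mean silhouette over all samples, using Euclidean distance.

    Requires at least two distinct labels.
    """
    points = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    classes = np.unique(labels)
    scores = np.zeros(len(points))
    for i in range(len(points)):
        same = labels == labels[i]
        n_same = int(same.sum())
        if n_same == 1:
            continue  # singleton cluster: silhouette is 0 by convention
        # dist[i, i] is 0, so dividing by (n_same - 1) excludes the point itself.
        a = dist[i, same].sum() / (n_same - 1)
        b = min(dist[i, labels == c].mean() for c in classes if c != labels[i])
        if max(a, b) > 0:
            scores[i] = (b - a) / max(a, b)
    return float(scores.mean())

# Toy 2-D embedding: two compact groups far apart (hypothetical coordinates).
embedding = np.array([[0, 0], [0, 1], [1, 0], [1, 1],
                      [10, 10], [10, 11], [11, 10], [11, 11]], dtype=float)
separated = silhouette_coefficient(embedding, [0, 0, 0, 0, 1, 1, 1, 1])
mixed = silhouette_coefficient(embedding, [0, 1, 0, 1, 0, 1, 0, 1])
```

In this toy case the labeling that matches the two groups scores close to 1, while the labeling that cuts across them scores below 0, mirroring the contrast between the well-clustered and overlapping t-SNE maps.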

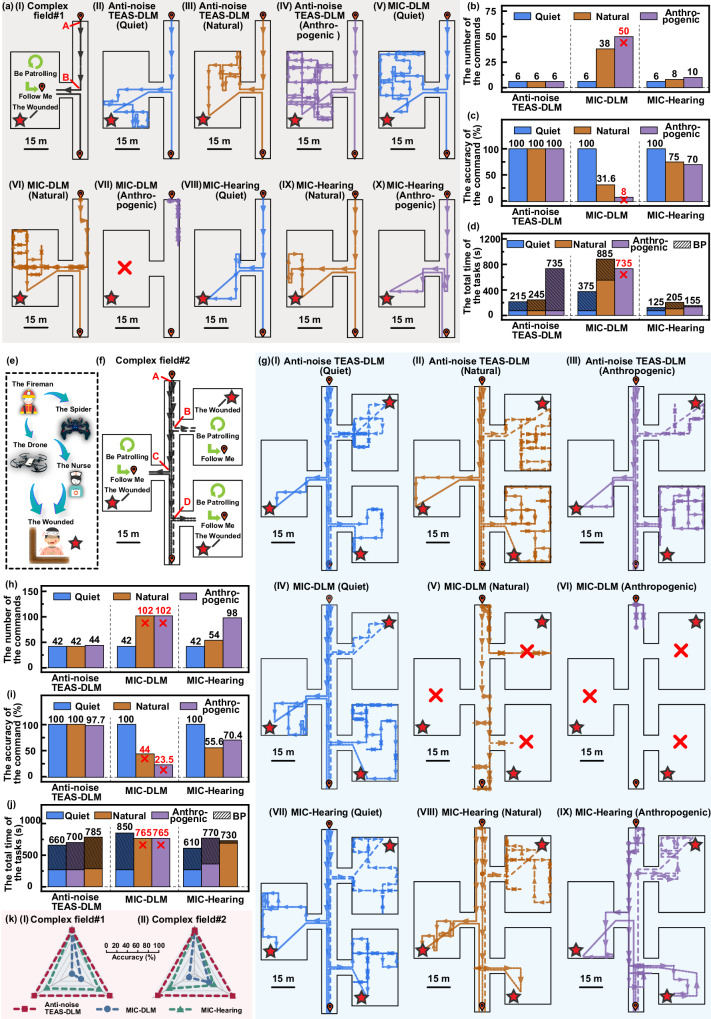

We then evaluated the feasibility and accuracy of the Anti-noise TEAS-DLM by performing complex human-machine collaborative tasks in different harsh scenarios. Three scenarios were designed in the virtual world, namely an open field, complex field#1, and complex field#2, to test the feasibility of the Anti-noise TEAS-DLM in human-machine collaboration. In the open field, the objects were free to move around, while in complex field#1 and complex field#2, the objects were required to perform rescue tasks. As the controller, the fireman leader issued voice commands in the various scenarios, and the voice signals were detected by the Anti-noise TEAS. These acoustic signals were then recognized and parsed into task commands by the DLM. Subsequently, the commands were wirelessly transmitted via the communication device to three objects (drone, spider robot, and nurse), which collaborated to accomplish the tasks. In each field, there were three types of environments: quiet, natural noise, and anthropogenic noise. The leader's acoustic signals were significantly affected by the noise, which made it challenging to transmit them accurately using a conventional microphone. With the action commands, the leader controlled different objects to execute specific human-machine collaborative tasks along defined routes. As the control group, the command signals issued by the leader were simultaneously captured by the microphone. These signals, which were significantly interfered with by the noisy environments, were then converted by the MIC-DLM into command signals and transmitted to the drone, robot, and nurse. To simulate the complex interactions between multiple human and machine entities in real-life scenarios, the nurse (a real person) also acted as an information-receiving entity. The nurse judged the types of command signals recorded by the microphone by listening (MIC-Hearing) and performed the specific human-machine collaborative tasks along the defined routes (Supplementary Fig. S42). The recorded tracks of the different objects in response to the Anti-noise TEAS-DLM (Supplementary Fig. S43), the MIC-DLM (Supplementary Fig. S44), and the MIC-Hearing (Supplementary Fig. S45) in the various harsh scenarios were monitored, and the total number of commands, the command accuracy rate, and the total time for task completion were counted.
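The step in which recognized signals are parsed into task commands can be pictured with the small sketch below. It assumes, purely for illustration, that the DLM emits one score per label for each spoken segment, that the ten labels are ordered as listed in the code, and that a combined command consists of an object segment followed by an action segment. None of these details is specified in the text, and the function names are hypothetical.

```python
import numpy as np

ACTIONS = ["MO", "CB", "TL", "TR", "FM", "HO", "BP"]
OBJECTS = ["TD", "TN", "TS"]
LABELS = ACTIONS + OBJECTS  # hypothetical ordering of the 10 model outputs

def decode(scores, group):
    """Pick the best-scoring label, restricted to one label group.

    This mirrors the constraint that object commands can only be recognized
    as object labels and action commands only as action labels.
    """
    scores = np.asarray(scores, dtype=float)
    indices = [LABELS.index(name) for name in group]
    return group[int(np.argmax(scores[indices]))]

def parse_command(object_scores, action_scores):
    """Turn two score vectors into an (object, action) pair for dispatch."""
    return decode(object_scores, OBJECTS), decode(action_scores, ACTIONS)

# Hypothetical scores: the object segment has a spurious peak on an action label.
object_scores = [0.40, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.35, 0.15, 0.10]
action_scores = [0.05, 0.0, 0.80, 0.05, 0.0, 0.05, 0.0, 0.05, 0.0, 0.0]
target, action = parse_command(object_scores, action_scores)
```

Here the unrestricted maximum of `object_scores` falls on an action label, but the group-restricted decoding still returns the drone, so the parsed pair is the drone with a turn-left command.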

The accuracy of the three types of HMVIs in the open field was verified by employing combined command signals to control object actions. In the open field, the fireman leader controlled the drone, robot, and nurse to move along specific routes, and the scene contained no obstacles to impede their movements (Fig. 6a). To distinguish the object tracks in the plots, the robot's recorded tracks were denoted with solid lines, the nurse's tracks with dense dashed lines, and the drone's tracks with loose dashed lines (Fig. 6b). Additionally, blue, brown, and purple indicated the moving tracks in the quiet, natural noise, and anthropogenic noise environments, respectively, while the position of the leader was indicated by orange coordinates. Figure 6c-I–III illustrated the three theoretical routes in the open field, where route#1 encompassed MO, TL, HO, FM, CB, TR, HO, and FM; route#2 followed MO, TR, MO, TR, MO, MO, TR, HO, and FM; and route#3 involved MO, CB, TL, TR, CB, MO, TR, and TL. Route#1, route#2, and route#3 were each executed in the open field using the Anti-noise TEAS-DLM, the MIC-DLM, and the MIC-Hearing (Supplementary Figs. S46–S48). In route#1, the Anti-noise TEAS-DLM demonstrated commendable performance across the various environments, with only 1 error occurring in a command intended for HO. However, while the MIC-DLM performed well in the quiet environment, its performance degraded markedly in the noisy scenarios, leading to significant deviations in the recorded tracks and an increased occurrence of command errors. Similarly, the MIC-Hearing performed effectively in the quiet environment, but some command errors were observed in the natural and anthropogenic noise environments, resulting in some deviations of the recorded tracks from the theoretical routes. In route#2 (Fig. 6e) and route#3 (Fig. 6f), the Anti-noise TEAS-DLM also executed the tasks well in the various environments, and the recorded tracks were consistent with the theoretical routes. Conversely, the MIC-DLM and the MIC-Hearing successfully executed the specified routes only in the quiet environment; in the two noisy environments there were more command errors, resulting in large deviations of the recorded tracks from the theoretical routes. The number of incorrect commands for the 3 routes across the different environments was counted. As shown in Fig. 6g, the Anti-noise TEAS-DLM had only 1 (1.4%) command error in route#1, while the MIC-DLM and the MIC-Hearing produced multiple command errors in the noisy environments, totaling 25 (34.7%) and 18 (25%) errors, respectively. To provide a more intuitive representation of the performance of these HMVI approaches across the varied environments, the command executions for the three routes were presented as color maps (Fig. 6h). Different colors denoted the various command categories, while error symbols above the colors indicated error levels in command execution. The Anti-noise TEAS-DLM demonstrated desirable performance across the diverse environments and routes, whereas the MIC-DLM and the MIC-Hearing executed the routes proficiently only in the quiet environment, with a higher occurrence of command errors in the noisy environments.
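The error percentages quoted for route#1 can be checked with simple arithmetic. The text does not state the denominator, so the sketch below rests on an inferred assumption: route#1 has 8 commands, and the count is taken over 3 objects and 3 environments, giving 72 command executions. This reading reproduces all three reported percentages, but it remains an inference rather than a figure given in the paper.

```python
# Route#1 command sequence as listed in the text.
ROUTE_1 = ["MO", "TL", "HO", "FM", "CB", "TR", "HO", "FM"]
N_OBJECTS = 3        # drone, spider robot, nurse
N_ENVIRONMENTS = 3   # quiet, natural noise, anthropogenic noise

def error_rate_percent(n_errors, n_total):
    """Command error rate in percent, rounded to one decimal place."""
    return round(100.0 * n_errors / n_total, 1)

# Assumed denominator (inferred, not stated in the source): 8 * 3 * 3 = 72.
total_commands = len(ROUTE_1) * N_OBJECTS * N_ENVIRONMENTS

# Error counts reported in the text for route#1.
reported_errors = {"Anti-noise TEAS-DLM": 1, "MIC-DLM": 25, "MIC-Hearing": 18}
rates = {name: error_rate_percent(n, total_commands)
         for name, n in reported_errors.items()}
```

Under this assumption `rates` evaluates to 1.4, 34.7, and 25.0 percent for the three approaches, in agreement with the values quoted from Fig. 6g.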

Fig. 6. Performance of the Anti-noise TEAS for human-machine collaboration in virtual open fields.