Abstract

Value-driven attentional capture (VDAC) refers to involuntary shifts of attention toward neutral stimuli previously associated with rewards. This phenomenon is relevant to various neuropsychiatric conditions. This study evaluated the reliability of VDAC measured with reaction time (RT) and with data-limited accuracy. Two experiments were conducted: one used the traditional RT-based VDAC paradigm, and the other used a data-limited accuracy-based version. Reliability was assessed with odd-even split-half and test-retest correlations. The RT-based paradigm showed a significant VDAC effect and high reliability when individual RT scores were used. However, the data-limited accuracy paradigm did not show a VDAC effect, possibly because the task was too easy. RT-based VDAC measures are reliable when individual scores are used, whereas data-limited accuracy measures may require more challenging tasks to capture VDAC effectively. We suggest that researchers using experimental approaches use single scores instead of difference scores to improve reliability, because single scores have lower measurement error and higher between-participant variance. Single scores may, however, be meaningful only when certain prerequisites are met: specifically, a reliable VDAC effect must first be observed at the group level.

Keywords: Value-driven attentional capture, Reliability, Reaction time, Data-limited accuracy

Subject terms: Psychology, Human behaviour

Introduction

Value-driven attentional capture (VDAC) describes the involuntary attraction of an individual’s attention to neutral stimuli that have become associated with rewards through learning, even when these stimuli are unrelated to current tasks1–3. Studies indicate that VDAC contributes to sustained involvement with addictive substances or activities by making reward-related cues unusually attractive due to their increased incentive salience, often detracting from healthier behaviors4,5. Studies on VDAC help elucidate the cognitive and behavioral symptoms observed in disorders such as ADHD and schizophrenia, guiding novel therapeutic approaches aimed at diminishing the influence of reward-predictive cues on patient behavior and decision-making processes6. Reward-predictive cues are stimuli that, through prior learning, have become associated with rewards and thus signal the potential for reward when encountered.

In their seminal 2011 study, Anderson, et al.2 had participants complete a training phase in which they learned to associate specific colors (red or green) with different levels of monetary reward: participants searched for colored targets among distractors and received feedback on their monetary gains for correct responses, with one color typically associated with higher rewards. In a subsequent reward-free test phase, participants performed a visual search task in which they identified a unique shape among various colored distractors, including distractors in the previously rewarded colors, even though color was irrelevant to the task. This design assessed the influence of reward-associated colors on attention in situations where these cues were no longer pertinent. Anderson, et al.2 found that the presence of a previously rewarded but currently irrelevant color significantly slowed participants’ reaction times (RT) compared with trials without such colors, demonstrating that past reward associations continue to influence attention. Because this effect could not be attributed to physical salience or task relevance, it indicates an involuntary and distinct form of attentional capture driven by the learned value of the color. These findings highlight the enduring influence of previously rewarded stimuli on perceptual priorities and attentional engagement, even when rewards are absent and the stimuli are unrelated to the immediate task.

In a recent meta-analysis, Rusz, et al.3 quantitatively analyzed a rapidly expanding body of research on the impact of reward-related distractors on cognitive performance. Their meta-analysis provides robust quantitative support for the reliability and generalizability of reward-driven distraction, including the VDAC effect, across various paradigms and task designs. Specifically, they showed that high-reward distractors consistently impair performance more than low-reward or neutral distractors, and that this effect holds even after correcting for publication bias and selection-driven distraction. This supports the theoretical basis of our current work, which builds on their findings by further testing the boundary conditions of the VDAC effect—particularly through comparing the RT-based and data-limited accuracy paradigms under identical conditions. By aligning our study design with their recommendations (e.g., using the high-vs-low reward contrast to isolate reward effects), we also aim to contribute more rigorously to the ongoing methodological refinement in this field.

Reliability of VDAC

In recent years, several studies have begun to examine the reliability of VDAC7–9. In general, they found that RT-based VDAC measures have very low reliability. Using an experimental paradigm similar to that of Anderson, et al.2, Anderson and Kim8 examined the test-retest reliability of VDAC with eye-movement metrics. The study focused on two dependent variables: RT, measured as the time a participant takes to fixate the target, and the percentage of initial fixations on the high- and low-value distractors, which indexes how often participants first fixate a previously rewarded distractor. Anderson and Kim8 replicated previous results, showing that task-irrelevant reward distractors prolonged RT and attracted a higher proportion of initial fixations. The oculomotor measure, which tracks eye movements (EM) toward reward-associated stimuli, showed strong test-retest reliability (r = .80) between an initial session and a follow-up session four weeks later. This suggests that EM tracking is a dependable method for assessing how consistently individuals are influenced by reward cues over time. Conversely, the RT measure, the more traditional metric in this research area, did not show such reliability (r = .12), suggesting that RT measures may not consistently capture individual differences in attentional bias across sessions.

Freichel, et al.7 replicated and extended previous findings on VDAC by incorporating both reward and punishment contexts and exploring the test-retest reliability of the VDAC task. The results demonstrated consistent VDAC effects in reward contexts across two separate studies, indicating stronger attentional capture by distractors associated with high rewards than with low rewards. However, the reliability analysis revealed low test-retest reliability (r = .09) for VDAC.

Garre-Frutos, et al.9 assessed the reliability of VDAC, focusing on its split-half reliability and on how it behaves across rewarded and unrewarded task phases. The study replicated the VDAC effect in a large online cohort, measuring the effect during initial learning with rewards and subsequently without rewards. It also evaluated the reliability of VDAC with a multiverse analysis that considered various data preprocessing options. The VDAC effect increased during the rewarded phase and remained stable after rewards were removed, demonstrating the effect’s resilience to changes in reward conditions. The reliability analysis showed that incorporating more data generally improved reliability. In particular, the most reliable results were obtained by including all available trial blocks and applying relative filters to exclude outlier RTs. Under these conditions, the rewarded phase achieved a corrected split-half reliability estimate of 0.85 (confidence interval: 0.80 to 0.88), and the unrewarded phase reached its highest reliability at 0.74 (confidence interval: 0.67 to 0.80).

RT vs. data-limited accuracy-based measures

The low reliability of the VDAC paradigm may be attributable to its use of RT measures10. Tasks using typical RT measures usually leave the target on screen long enough for it to be fully processed, so performance depends on the amount of processing resources devoted to the stimuli; that is, performance is resource-limited11. RT measures are sensitive to enhanced perceptual representation and to decision processing12. However, because RT reflects the total time needed to execute all processes from sensory encoding to response execution13, a perceptual effect can be attenuated by later processes such as response execution. Multiple cognitive (e.g., decision-making) and motor processes underlie a manual response, making this measure inherently “noisy”10.

Although RT-based VDAC is often reported to have low reliability, this study included it to test whether using individual RT scores instead of difference scores could improve reliability. Our results confirmed that with a clear VDAC effect, individual RT scores yielded high test-retest and split-half reliability, suggesting that RT-based paradigms remain useful when analyzed appropriately.

In addition to the typical RT version, we adopted data-limited accuracy-based measures11–15 within the typical VDAC paradigm developed by Anderson, et al.2. In tasks using data-limited accuracy-based measures, stimuli are presented briefly (about 37 ms) and then masked, and accuracy reflects the efficiency of initial information extraction. Because there is no speed stress, postperceptual processes such as response selection can perform equally well across the manipulated conditions. Performance thus depends solely on the quality of the data extracted in that brief window, not on processing resources11. This type of measure is sensitive to improvements in perceptual representation12,14,15 and therefore indexes early perceptual processing13.

The EM patterns measured in Anderson and Kim8 may be related to data-limited accuracy measures. When participants voluntarily shift their overt attention to the location of the reward distractor, the perceptual representation of this distractor (rather than the target) is enhanced (i.e., it gains qualities such as higher contrast and brightness)16–20. This enhanced distractor representation may reduce the efficiency of extracting information from the target, thereby lowering target-identification accuracy in a data-limited accuracy measure. To create a data-limited condition in the VDAC paradigm, the search display in the unrewarded test phase is flashed briefly and then masked to prevent further processing. The backward mask remains on screen until a response is made.

Difference scores vs. single scores

In addition to the measurement issue described above, the difference score between two conditions, which is often used in experimental studies, is less reliable than the individual measures themselves21. This is partly due to error propagation from the two component measures to the composite score. The primary issue is that any subtraction that effectively reduces between-participant variance (thereby minimizing what is termed “error” in experimental studies) tends to increase the ratio of measurement error to between-participant variance. In the typical VDAC paradigm2, the RT difference between the rewarded-distractor condition and the no-distractor condition is a common index of attentional capture. This RT difference may reduce between-participant variance and thus increase the ratio of measurement error to between-participant variance. This may explain why studies of VDAC reliability7–9 have usually observed low test-retest and internal reliabilities, and why the difference score typically adopted in experimental studies may underestimate the reliability of the task. In addition to the typical difference score, we therefore examine whether reliability improves when an individual RT score without subtraction (e.g., the mean RT in the rewarded-distractor condition) is used. Importantly, a long RT in the rewarded-distractor condition does not necessarily indicate VDAC, because the RT in the distractor-free condition can also be long. Thus, a prerequisite must be met before a single RT is used to calculate reliability: the existence of the VDAC phenomenon must first be confirmed (e.g., with the analysis of variance commonly used in past research).
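The effect of subtraction on reliability can be illustrated with the standard classical-test-theory expression for the reliability of a difference score D = X − Y, assuming that the measurement errors of X and Y are uncorrelated with each other and with the true scores. The sketch below is illustrative only: the function name is hypothetical, and the reliabilities and correlations in the example are made-up values, not data from this study.

```python
def difference_score_reliability(r_xx, r_yy, r_xy, sd_x=1.0, sd_y=1.0):
    """Reliability of D = X - Y under classical test theory.

    r_xx, r_yy: reliabilities of the two condition scores (e.g., mean RT with a
    reward distractor and mean RT with no distractor); r_xy: their correlation
    across participants. Assumes uncorrelated measurement errors.
    """
    cov = r_xy * sd_x * sd_y
    true_var = r_xx * sd_x**2 + r_yy * sd_y**2 - 2 * cov  # true-score variance of D
    total_var = sd_x**2 + sd_y**2 - 2 * cov               # observed variance of D
    return true_var / total_var


# Hypothetical values: two highly reliable, highly correlated condition means.
print(round(difference_score_reliability(0.95, 0.95, 0.94), 3))  # 0.167
# The same components, if uncorrelated, keep their average reliability.
print(round(difference_score_reliability(0.95, 0.95, 0.0), 3))   # 0.95
```

Even when each condition mean is highly reliable, a strong correlation between conditions leaves little true-score variance in their difference, which is the mechanism described above.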

Research aim and hypothesis

In sum, the current study explored the reliability of VDAC with two experiments: the typical RT-version VDAC paradigm2 and a data-limited accuracy-version VDAC paradigm. We hypothesized that both experiments would exhibit typical VDAC effects. Once the VDAC effects were confirmed, we calculated the split-half and test-retest reliabilities of VDAC for each experiment, using both difference scores and individual scores. We hypothesized that, for the RT-version experiment, reliabilities based on individual scores would be above the acceptable level, whereas those based on difference scores would be below it7–9. For the data-limited accuracy version, we hypothesized that the reliabilities of both the difference scores and the individual scores would be above the acceptable level.

Experiment 1

Participants

Sixty-two college students (16 males and 46 females, M = 23.5 years, SD = 4.8 years) participated in this experiment, providing a power of 0.99. This sample size was determined with G*Power using the following parameters: effect size f = 0.25, alpha = 0.05, power = 0.99, number of groups = 1, number of measurements = 6, correlation among repeated measures = 0.5, and nonsphericity correction ε = 1. All participants achieved over 90% accuracy on the task, and all had normal or corrected-to-normal vision. Written informed consent was obtained from all participants.


Apparatus

In both experiments, we used an IBM-compatible laptop with a 21.5-inch monitor (resolution 1920 × 1080, refresh rate 60 Hz). The viewing distance was 50 cm.

Design

The typical RT-based VDAC paradigm (Fig. 1) was similar to that in Experiment 3 in Anderson, et al.2. This task comprised two sequential phases: a training phase and an unrewarded test phase. Participants were required to visit the lab twice, with a gap of approximately 4 to 5 weeks between visits.

Fig. 1.

An example trial of VDAC paradigm in the current study.

The purpose of the training phase was to establish the association between colors and rewards. It was a 2 (Visit: first or second) by 2 (Distractor type: high reward or low reward) within-groups design. Each trial began with a white fixation cross (0.57° × 0.57°) on a black background for 500 ms. The search display then appeared and remained on screen until a response was made or for a maximum of 800 ms, followed by a 1000-ms blank display. The search display consisted of six shapes (2.29° × 2.29° visual angle each) arranged at equal intervals along an imaginary circle with a 5.15° radius (center to center); all were circles, each in a different color drawn from red, green, blue, cyan, pink, orange, yellow, and white. The target was either a red or a green circle, with one target presented on each trial. Inside the target circle, a white line segment was oriented either vertically or horizontally, while inside each nontarget circle, a white line segment was tilted 45° to the left or right. Participants reported the target line’s orientation by pressing the “z” key for a vertical line and the “m” key for a horizontal line. Participants were instructed to respond as quickly as possible while minimizing errors. Correct responses were followed by visual feedback indicating a monetary reward. High-reward targets yielded high-reward feedback (5 NT dollars) on 80% of trials and low-reward feedback (1 NT dollar) on the remaining 20%, whereas for low-reward targets, these percentages were reversed. Participants were not explicitly informed of this reward contingency and had to learn it during the training phase. For half of the participants, red circles were high-reward targets; for the other half, green circles were high-reward targets.
The feedback display, shown for 1,500 ms, informed participants of the reward earned on the trial just completed and the total accumulated reward. Before the formal trials, participants completed 20 practice trials, which were identical to the experimental trials except that no reward feedback was provided. The formal session consisted of 240 trials, with a break after 120 trials, after which participants pressed the space bar to continue. The inter-trial interval (ITI) was 1000 ms.
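The probabilistic reward contingency described above can be sketched as follows. This is a hypothetical illustration, not the authors’ experiment code: the function names are invented, and the alternating red/green rule in `assign_high_reward_color` is an assumed counterbalancing scheme, since the text states only that each color was the high-reward color for half of the participants. Under the stated 80/20 schedule, the expected feedback per correct response is NT$4.2 for the high-reward color and NT$1.8 for the low-reward color.

```python
import random

HIGH_REWARD_NTD = 5  # high-reward feedback (NT dollars)
LOW_REWARD_NTD = 1   # low-reward feedback (NT dollars)


def p_high_feedback(target_color, high_reward_color):
    """80% high-reward feedback for the high-reward color, 20% otherwise."""
    return 0.8 if target_color == high_reward_color else 0.2


def reward_for_correct_response(target_color, high_reward_color, rng):
    """Draw the feedback amount for one correct training-phase response."""
    p = p_high_feedback(target_color, high_reward_color)
    return HIGH_REWARD_NTD if rng.random() < p else LOW_REWARD_NTD


def expected_reward(target_color, high_reward_color):
    """Expected feedback (NT$) per correct response for a target color."""
    p = p_high_feedback(target_color, high_reward_color)
    return p * HIGH_REWARD_NTD + (1 - p) * LOW_REWARD_NTD


def assign_high_reward_color(participant_index):
    """Hypothetical counterbalancing rule: alternate red and green."""
    return "red" if participant_index % 2 == 0 else "green"


high_color = assign_high_reward_color(0)     # "red" for this participant
print(expected_reward("red", high_color))    # about 4.2
print(expected_reward("green", high_color))  # about 1.8
print(reward_for_correct_response("red", high_color, random.Random(1)))  # 5 or 1
```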

The test phase resembled the training phase with a few key differences. It was a 2 (Visit: first or second) by 3 (Distractor type: high reward, low reward, or none) within-groups design. The search display contained either a circle among diamonds or a diamond among circles, and the target on each trial was the unique shape. Each item in the display was uniquely colored. On half of the trials, one of the nontarget items (the distractor) was colored red or green; the target was never red or green. Participants were informed that color was irrelevant to the task and should be ignored. The search display remained on screen until a response was made or for a maximum of 1,200 ms. Participants were instructed to respond as quickly as possible while minimizing errors. The feedback display, shown for 1,000 ms, informed participants only whether their response on the trial just completed had been correct. No reward was provided during the test phase. There were 20 practice trials without a distractor and 240 formal trials. In the formal trials, the target shape (circle or diamond), the target location (six locations), the distractor type (high reward, low reward, or none), and the distractor location (five locations) were fully crossed and randomized. Participants took a break after 120 formal trials. The ITI was 500 ms. Upon completing the experiment, participants were paid the amount of money earned during the training phase.

Procedure

Each participant scheduled an initial visit and a follow-up visit 4 weeks later, both on the same day of the week and at the same time. Participants who could not keep the original appointment were allowed to reschedule the follow-up visit within 1 week of the originally scheduled time. During each visit, participants were tested individually in a dimly lit laboratory room. The experimenter provided written and oral descriptions of the stimuli and procedures to familiarize participants with each task. All participants signed an informed consent form approved by the Institutional Review Board of the Chung-Shan Medical University Hospital (CS2-21181). All methods were performed in accordance with the relevant guidelines and regulations.

Results

The mean correct rate was 0.92 (SD = 0.27) for Visit 1 and 0.94 (SD = 0.24) for Visit 2. For each visit, correct RTs faster than the mean RT minus 3 SD (if this lower cutoff fell below 200 ms, it was set at 200 ms) and those slower than the mean RT plus 3 SD were removed. This resulted in removal rates of 1.67% for Visit 1 and 1.53% for Visit 2. The average interval between the first and second visits was 32.5 days (SD = 4.4 days).
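The trimming rule above can be sketched as a small helper. This is a minimal sketch under stated assumptions, not the authors’ code: the function names are hypothetical, the cutoffs are computed over whatever set of correct RTs is passed in (the text says “for each visit” but does not specify whether cutoffs were computed per participant or across the sample), and the sample SD (`statistics.stdev`) is assumed.

```python
from statistics import mean, stdev


def trim_rts(correct_rts, k=3.0, floor_ms=200.0):
    """Drop correct RTs outside mean +/- k SD, flooring the lower cutoff at 200 ms.

    Assumption: cutoffs are computed over the RTs passed in (e.g., one
    participant's correct RTs for one visit) using the sample SD.
    """
    m, s = mean(correct_rts), stdev(correct_rts)
    lower = max(m - k * s, floor_ms)
    upper = m + k * s
    kept = [rt for rt in correct_rts if lower <= rt <= upper]
    return kept, lower, upper


def removal_rate(n_before, n_after):
    """Proportion of trials removed by trimming."""
    return (n_before - n_after) / n_before


rts = [600.0] * 20 + [5000.0]  # hypothetical: one extremely slow response
kept, lower, upper = trim_rts(rts)
print(len(kept), lower)                               # 20 200.0
print(round(removal_rate(len(rts), len(kept)), 4))    # 0.0476
```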

Examining the existence of VDAC

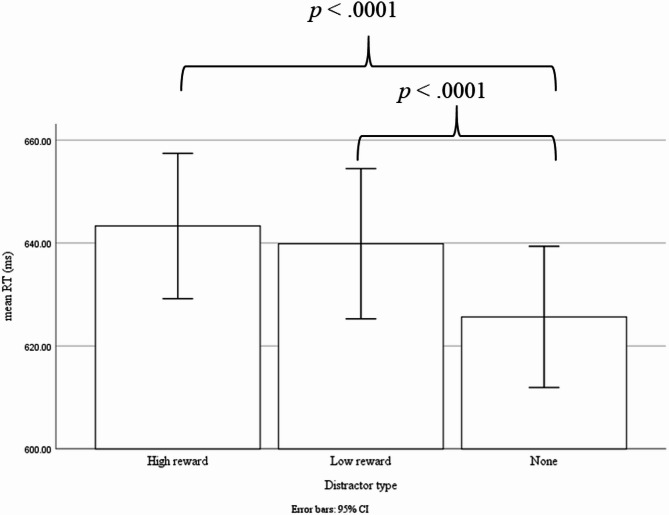

We conducted a 2 (Visit: first or second) by 3 (Distractor type: high reward, low reward, or none) analysis of variance (ANOVA) on the correct RTs (Table 1; Fig. 2). Mauchly’s test indicated no violation of sphericity in either Experiment 1 or Experiment 2. The main effects of Visit (F(1,61) = 55.473, p < .0001, ηp² = 0.476) and Distractor type (F(2,122) = 30.975, p < .0001, ηp² = 0.337) were significant, whereas the interaction was not (F(2,122) = 0.599, p = .551, ηp² = 0.010). Bonferroni post-hoc tests on the main effects showed that the mean correct RT was longer for Visit 1 (M = 657.5 ms, SE = 7.7 ms) than for Visit 2 (M = 615.1 ms, SE = 7.3 ms), possibly indicating a practice effect. More importantly, the mean correct RTs in the high-reward (M = 643.3 ms, SE = 7.1 ms) and low-reward (M = 639.9 ms, SE = 7.3 ms) conditions were longer than that in the no-distractor condition (M = 625.6 ms, SE = 6.9 ms) (all p’s < .0001), indicating a VDAC effect. There was no RT difference between the high- and low-reward distractor conditions (p = .622).
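As a quick consistency check, partial eta squared can be recovered from an F statistic and its degrees of freedom with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The helper below is a hypothetical utility, not part of the authors’ analysis; applied to the F values above, it reproduces the reported 0.476, 0.337, and 0.010.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# F values and degrees of freedom reported above for Experiment 1.
for label, f, df1, df2 in [
    ("Visit", 55.473, 1, 61),
    ("Distractor type", 30.975, 2, 122),
    ("Visit x Distractor type", 0.599, 2, 122),
]:
    print(label, round(partial_eta_squared(f, df1, df2), 3))
```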

Table 1.

Means, with standard errors (SE) of the means in parentheses, for the two experiments.

| Distractor type | Exp. 1 RT (ms), Visit 1 | Exp. 1 RT (ms), Visit 2 | Exp. 2 data-limited accuracy, Visit 1 | Exp. 2 data-limited accuracy, Visit 2 |
|---|---|---|---|---|
| High reward | 663.3 (7.5) | 623.4 (8.0) | 0.80 (0.01) | 0.83 (0.01) |
| Low reward | 661.0 (8.6) | 618.7 (7.3) | 0.78 (0.01) | 0.84 (0.01) |
| None | 648.1 (7.7) | 603.2 (7.3) | 0.79 (0.01) | 0.84 (0.01) |

Fig. 2.

Main effect of Distractor type in Experiment 1.

Test-retest and odd-even split-half reliability for VDAC

Because the VDAC effect was observed, Pearson correlations were computed to estimate test-retest and odd-even split-half reliability (Fig. 3). When the RT cost (RT in the pooled reward-distractor conditions minus RT in the no-distractor condition) was used, the correlation coefficients did not differ significantly from zero, indicating very low reliability. When the RT in the pooled reward-distractor conditions was used, the correlation coefficients were strong, indicating high reliability.

Fig. 3.

Pearson correlations in Experiment 1. The RT cost is computed by subtracting the baseline RT from the pooled reward RT, where the pooled reward RT is the RT pooled across the two reward-distractor conditions. In general, the RT cost led to very low correlation coefficients, whereas the pooled reward RT yielded large correlation coefficients.

Experiment 2

This experiment used data-limited accuracy measures to examine the reliability of VDAC. The procedure was similar to that of Experiment 1; the only difference was in the unrewarded test phase, in which all six stimuli were flashed briefly and then masked (Fig. 1).

Participants

Forty-one college students (17 males and 24 females, M = 22.4 years, SD = 4.1 years) participated in this experiment, providing a power of 0.99 (based on the same G*Power parameters as in Experiment 1). All participants achieved accuracy rates between 60% and 90% on the task, and all had normal or corrected-to-normal vision. Written informed consent was obtained from all participants.


Design

This experiment was similar to Experiment 1, except that the data-limited accuracy-based VDAC paradigm was adopted. In the unrewarded test phase, all six stimuli were presented for only 250 ms and were then replaced by six masks (color mosaic patterns). The 250-ms presentation time was chosen on the basis of a pilot study to keep accuracy between 60% and 90%. The masks remained on screen until the participant responded or for up to 1.5 s. Participants were instructed to respond as accurately as possible.
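With the 60-Hz display described in the Apparatus section, each frame lasts about 16.7 ms, so any presentation time is realized as a whole number of frames. The helper below is a hypothetical utility (not the authors’ presentation code) for checking the durations used here: 250 ms corresponds to exactly 15 frames, and the 500-, 800-, 1,200-, and 1,500-ms intervals are also whole frames, whereas a presentation of about 37 ms, as cited for data-limited tasks, cannot be shown exactly at 60 Hz and would round to 2 frames (about 33 ms).

```python
REFRESH_HZ = 60  # refresh rate reported in the Apparatus section


def ms_to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Return (frames, realized duration in ms, exact?) for a requested duration."""
    exact_frames = duration_ms * refresh_hz / 1000
    frames = round(exact_frames)
    realized_ms = frames * 1000 / refresh_hz
    return frames, realized_ms, abs(exact_frames - frames) < 1e-9


for ms in (250, 500, 800, 1200, 1500, 37):
    print(ms, ms_to_frames(ms))
```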

Procedure

The same as Experiment 1.

Results

The mean correct rate was 0.79 (SD = 0.40) for Visit 1 and 0.84 (SD = 0.37) for Visit 2. The average interval between the first and second visits was 32.2 days (SD = 4.9 days). A 2 (Visit: first or second) by 3 (Distractor type: high reward, low reward, or none) ANOVA was conducted on accuracy rates. Only the main effect of Visit was significant (F(1,40) = 33.39, p < .0001, ηp² = 0.455): the mean accuracy rate for Visit 1 (M = 0.793, SE = 0.010) was lower than that for Visit 2 (M = 0.836, SE = 0.008), possibly reflecting a practice effect. Neither the main effect of Distractor type (F(2,80) = 1.25, p = .292, ηp² = 0.030) nor the interaction (F(2,80) = 2.04, p = .136, ηp² = 0.049) was significant. Because no VDAC effect was observed in this experiment, the reliability analysis was not conducted.

General discussion

To examine the VDAC effect and the reliability of the VDAC paradigm, this study compared the RT-version VDAC paradigm2 with a data-limited accuracy-version VDAC paradigm. The RT version is common in the literature, but several recent studies found that RT-based VDAC measures have very low reliability7–9. By contrast, the highly reliable EM measures in Anderson and Kim8 may be related to data-limited accuracy measures. The current results showed that only the RT version exhibited the VDAC effect. Further analysis showed that individual scores produced high test-retest and split-half reliabilities, whereas the RT cost yielded very low reliability, as in previous studies7–9.

The VDAC effect observed in the current RT-version paradigm is consistent with previous studies. The current results showed a significant RT difference between the reward-distractor and no-distractor conditions, but no difference between the two reward-distractor conditions. In the current unrewarded phase, all shapes were colored; therefore, the reward distractors were not color singletons. As a result, the RT difference between the reward-distractor and no-distractor conditions could not be attributed exclusively to physical salience22. Rusz, et al.3 found that high-reward distractors significantly impaired performance compared with no-distractor conditions, with a standardized mean change of 0.493, 1.4 times larger than the original estimate (0.347). They suggested that published effect sizes may reflect not only a reward-driven process but also a selection-driven process. Consequently, previous studies comparing reward distractors with no distractors may have overestimated the magnitude of reward-driven distraction.

Contrary to our hypothesis, the data-limited accuracy version did not exhibit the VDAC effect. This absence appears to be due primarily to task difficulty rather than to a fundamental flaw in the experimental design. Although we cannot completely rule out design-related issues, the paradigm we adopted has been commonly used in previous studies, so design flaws are unlikely to be the main cause. The line-segment identification task (vertical or horizontal line) in the unrewarded phase may not have been difficult enough: when attention shifts back from the reward distractor to the target line segment, the small amount of “data” extracted within the short time window may already suffice to complete the task. Future research might adopt more challenging tasks, such as letter recognition (e.g., identifying four similar letters, H, V, U, and X, as in23). Increasing the difficulty of the target task could prevent sufficient target information from being extracted in the short period after attention shifts back from the reward distractor to the target, which may in turn reduce target-task performance.

The individual RT score (i.e., the pooled RT from the two reward-distractor conditions) showed high test-retest and split-half reliability, whereas the typical RT difference score showed low reliability, as in previous studies (e.g.,8). In within-participant designs, researchers using the experimental approach often subtract a behavioral baseline (here, the no-distractor condition) to control for unwanted individual differences. Any subtraction that effectively reduces between-individual variance is likely to increase the proportion of measurement error relative to between-individual variance21, thus reducing reliability. The between-individual SD of the individual RT score is about three times that of the RT difference score (i.e., its variance is roughly eight times larger). For Visit 1, for example, the SD of the pooled RT is 61.6 ms, whereas the SD of the RT cost is 21.5 ms. Reliability increases with larger between-individual variance21.
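The shrinkage of between-participant variance by subtraction follows from the variance sum law, SD(X − Y)² = SD(X)² + SD(Y)² − 2·r·SD(X)·SD(Y). The sketch below plugs in values reported in this paper for Visit 1: the pooled-RT SD (61.6 ms) and the correlation between pooled reward RT and baseline RT (r = 0.938, reported in the discussion of score validity). The baseline SD is not reported directly, so it is reconstructed from the Table 1 SE of the no-distractor condition (7.7 ms) under the assumption that SE = SD/√62. Under these assumptions, the implied SD of the RT cost is about 21.5 ms, in line with the reported value; the function name is hypothetical.

```python
import math


def difference_sd(sd_x, sd_y, r_xy):
    """SD of X - Y across participants (variance sum law)."""
    return math.sqrt(sd_x**2 + sd_y**2 - 2 * r_xy * sd_x * sd_y)


n = 62                              # Experiment 1 sample size
sd_pooled = 61.6                    # reported SD of pooled reward RT, Visit 1
sd_baseline = 7.7 * math.sqrt(n)    # assumption: Table 1 SE = SD / sqrt(n)
r_pooled_baseline = 0.938           # reported correlation, Visit 1

sd_cost = difference_sd(sd_pooled, sd_baseline, r_pooled_baseline)
print(round(sd_baseline, 1), round(sd_cost, 1))  # 60.6 21.5
print(round((sd_pooled / sd_cost) ** 2, 1))       # variance ratio, roughly 8
```

Because the two condition means are so strongly correlated, most between-participant variance cancels in the subtraction, leaving a difference score whose variance is dominated by measurement error.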

Although individual RT scores can produce high reliability, can they represent the effect of VDAC? A long pooled reward RT does not necessarily indicate strong VDAC, because the RT in the no-distractor condition (baseline) could also be long. Two participants with the same long pooled reward RT could be interpreted differently depending on their baseline RTs: a longer baseline RT indicates a lower level of VDAC, whereas a shorter baseline RT indicates a higher level of VDAC. Thus, if the pooled reward RT is uncorrelated or only weakly correlated with the baseline RT, the pooled reward RT alone may not effectively represent the degree of VDAC. Conversely, if the pooled reward RT is strongly positively correlated with the baseline RT, it indicates that the pooled reward RT is influenced by VDAC; that is, the pooled reward RT can partially represent VDAC. In the current study, the correlation between the pooled reward RT and the baseline RT was 0.938 for Visit 1 and 0.958 for Visit 2 (all p’s < .0001). Moreover, a significant main effect of distractor type (e.g., reward vs. no reward) in the ANOVA is a critical prerequisite for ensuring the presence of VDAC. If the main effect of distractor type is not significant, the reward distractor has failed to capture attention, meaning there is no VDAC effect; in such cases, any reliability estimate (whether based on a single RT score or an RT difference) is meaningless.

Limitations and future directions

Although this study suggests that single RT scores (rather than RT difference scores) can to some extent represent VDAC and have high reliability, it remains possible that only difference scores, as previously reported, can truly capture the core of VDAC. However, their reliability is still relatively poor. We look forward to future studies exploring the validity of single RT scores as an indicator of VDAC.

Many subsequent studies have modified the original experimental paradigm of Anderson et al.2 to explore various VDAC-related issues3. Examples include the persistence and extinction of VDAC (e.g.,24) and the effect of reward learning on VDAC (e.g.,25). The current study cannot cover all of the VDAC issues that have grown out of the Anderson et al.2 paradigm. It addresses only the reliability of the original paradigm. We hope that future researchers will apply a similar approach (e.g., single RT scores) to investigate reliability in these modified paradigms.

VDAC is linked to addictive behaviors and to cognitive symptoms in disorders such as ADHD and schizophrenia, through its enhancement of the appeal of reward-related cues. In the future, highly reliable single scores may serve as indicators of participants' (e.g., addicts') VDAC and help predict their success in addiction recovery. For instance, addicts with longer RTs may be less likely to overcome addiction, since longer RTs indicate that they are easily attracted by irrelevant high-reward conditioned stimuli (CS).

Conclusion

To conclude, we found that the typical RT version of the VDAC paradigm reliably demonstrated the VDAC effect, whereas the data-limited accuracy version did not. The individual RT scores showed high test-retest and split-half reliability, in contrast to the low reliability of RT difference scores. Furthermore, the pooled reward RT was highly correlated with the baseline RT, indicating that it can effectively represent the degree of VDAC. Based on this study, we suggest that researchers taking an experimental approach consider using single scores (as opposed to difference scores) to achieve reliable measurement. Single scores have lower measurement error and higher between-participant variance than difference scores, which helps improve reliability. However, using single indicators may require certain prerequisites. In this study, for instance, the existence of the VDAC effect (i.e., the main effect of distractor type) must first be confirmed for the single scores to be meaningful.

Author contributions

Chung-Yi Shih: Data Collection, Analysis, Interpretation of data. Shuo-Heng Li: Programming, Data Collection, Interpretation of data. Ming-Chou Ho: Concept, Design, Interpretation of data, Writing. All authors have read and approved the manuscript and agree to its submission.

Funding

We thank the National Science and Technology Council for their financial support of this research (MOST 111-2410-H-040-005-MY2).

Data availability

The experimental data is available at https://osf.io/dnbf3/?view_only=7aa41d3e3b064888a0ed38b0da53018a. None of the reported studies were preregistered.

Declarations

Competing interests

The authors declare no competing interests.

Ethics approval

This study was approved by the Institutional Review Board of the Chung-Shan Medical University Hospital (CS2-21181).

Consent to participate

All participants signed an informed consent form approved by the Institutional Review Board of the Chung-Shan Medical University Hospital.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Anderson, B. A. et al. The past, present, and future of selection history. Neurosci. Biobehav Rev. 130, 326–350. 10.1016/j.neubiorev.2021.09.004 (2021).

- 2. Anderson, B. A., Laurent, P. A. & Yantis, S. Value-driven attentional capture. Proc. Natl. Acad. Sci. 108, 10367–10371. 10.1073/pnas.1104047108 (2011).

- 3. Rusz, D., Le Pelley, M. E., Kompier, M. A., Mait, L. & Bijleveld, E. Reward-driven distraction: A meta-analysis. Psychol. Bull. 146, 872–899. 10.1037/bul0000296 (2020).

- 4. Albertella, L. et al. Reward-related attentional capture is associated with severity of addictive and obsessive–compulsive behaviors. Psychol. Addict. Behav. 33, 495–502. 10.1037/adb0000484 (2019).

- 5. Loganathan, K. Value-based cognition and drug dependency. Addict. Behav. 123, 107070. 10.1016/j.addbeh.2021.107070 (2021).

- 6. Anderson, B. A. Relating value-driven attention to psychopathology. Curr. Opin. Psychol. 39, 48–54. 10.1016/j.copsyc.2020.07.010 (2021).

- 7. Freichel, R. et al. Value-modulated attentional capture in reward and punishment contexts, attentional control, and their relationship with psychopathology. J. Experimental Psychopathol. 14, 1–13. 10.1177/20438087231204 (2023).

- 8. Anderson, B. A. & Kim, H. Test–retest reliability of value-driven attentional capture. Behav. Res. Methods 51, 720–726. 10.3758/s13428-018-1079-7 (2019).

- 9. Garre-Frutos, F., Vadillo, M. A., González, F. & Lupiáñez, J. On the reliability of value-modulated attentional capture: an online replication and multiverse analysis. Behav. Res. Methods 1–18. 10.3758/s13428-023-02329-5 (2024).

- 10. Ataya, A. F. et al. Internal reliability of measures of substance-related cognitive bias. Drug Alcohol Depend. 121, 148–151. 10.1016/j.drugalcdep.2011.08.023 (2012).

- 11. Norman, D. A. & Bobrow, D. G. On data-limited and resource-limited processes. Cogn. Psychol. 7, 44–64. 10.1016/0010-0285(75)90004-3 (1975).

- 12. Prinzmetal, W., McCool, C. & Park, S. Attention: reaction time and accuracy reveal different mechanisms. J. Exp. Psychol. Gen. 134, 73–92. 10.1037/0096-3445.134.1.73 (2005).

- 13. Santee, J. L. & Egeth, H. E. Do reaction time and accuracy measure the same aspects of letter recognition? J. Exp. Psychol. Hum. Percept. Perform. 8, 489–501. 10.1037/0096-1523.8.4.489 (1982).

- 14. Ho, M. C. & Atchley, P. Perceptual load modulates object-based attention. J. Exp. Psychol. Hum. Percept. Perform. 35, 1661–1669. 10.1037/a0016893 (2009).

- 15. Ho, M. C. Object-based attention: sensory enhancement or scanning prioritization. Acta Psychol. (Amst). 138, 45–51. 10.1016/j.actpsy.2011.05.004 (2011).

- 16. Carrasco, M. & Barbot, A. Spatial attention alters visual appearance. Curr. Opin. Psychol. 29, 56–64. 10.1016/j.copsyc.2018.10.010 (2019).

- 17. Carrasco, M., Ling, S. & Read, S. Attention alters appearance. Nat. Neurosci. 7, 308–313. 10.1038/nn1194 (2004).

- 18. Datta, R. & DeYoe, E. A. I know where you are secretly attending! The topography of human visual attention revealed with fMRI. Vis. Res. 49, 1037–1044. 10.1016/j.visres.2009.01.014 (2009).

- 19. Liu, T., Abrams, J. & Carrasco, M. Voluntary attention enhances contrast appearance. Psychol. Sci. 20, 354–362. 10.1111/j.1467-9280.2009.02300.x (2009).

- 20. Abrams, J., Barbot, A. & Carrasco, M. Voluntary attention increases perceived spatial frequency. Atten. Percept. Psychophys. 72, 1510–1521. 10.3758/APP.72.6.1510 (2010).

- 21. Hedge, C., Powell, G. & Sumner, P. The reliability paradox: why robust cognitive tasks do not produce reliable individual differences. Behav. Res. Methods 50, 1166–1186. 10.3758/s13428-017-0935-1 (2018).

- 22. Theeuwes, J. Perceptual selectivity for color and form. Percept. Psychophys. 51, 599–606 (1992).

- 23. Shomstein, S. & Yantis, S. Object-based attention: sensory modulation or priority setting? Percept. Psychophys. 64, 41–51 (2002).

- 24. Milner, A., MacLean, M. & Giesbrecht, B. The persistence of value-driven attention capture is task-dependent. Atten. Percept. Psychophys. 85, 315–341 (2023).

- 25. Le Pelley, M. E., Pearson, D., Griffiths, O. & Beesley, T. When goals conflict with values: counterproductive attentional and oculomotor capture by reward-related stimuli. J. Exp. Psychol. Gen. 144, 158–171 (2015).
