ABSTRACT

This review explores state of the art machine learning and deep learning models for peptide property prediction in mass spectrometry‐based proteomics, including, but not limited to, models for predicting digestibility, retention time, charge state distribution, collisional cross section, fragmentation ion intensities, and detectability. The combination of these models enables not only the in silico generation of spectral libraries but also finds many additional use cases in the design of targeted assays or data‐driven rescoring. This review serves as both an introduction for newcomers and an update for experienced researchers aiming to develop accessible and reproducible models for peptide property predictions. Key limitations of the current models, including difficulties in handling diverse post‐translational modifications and instrument variability, highlight the need for large‐scale, harmonized datasets, and standardized evaluation metrics for benchmarking.

Keywords: deep learning, machine learning, mass spectrometry, peptide property prediction, proteomics

Abbreviations

- AA

amino acid

- AUC

area under the curve

- BiGRU

bidirectional gated recurrent unit

- BiLSTM

bidirectional long short‐term memory

- CCS

collisional cross sections

- CID

collision‐induced dissociation

- CNN

convolutional neural network

- CS

charge state

- CSD

charge state distribution

- DDA

data‐dependent acquisition

- DIA

data‐independent acquisition

- DT

decision tree

- ETD

electron‐transfer dissociation

- FDR

false discovery rate

- GRU

gated recurrent unit

- IMS

ion mobility spectrometry

- iRT

indexed retention time

- LSTM

long short‐term memory

- m/z

mass‐to‐charge ratios

- MAE

mean absolute error

- MAPE

median absolute percent error

- MLP

multi‐layer perceptron

- MS

mass spectrometry

- MSE

mean squared error

- OOD

out‐of‐distribution

- PCC

Pearson correlation coefficient

- PTM

post‐translational modification

- RMSE

root mean squared error

- RNN

recurrent neural network

- RT

retention time

- SVM

support vector machines

1. Introduction

In recent years, the interest in artificial intelligence (AI) has skyrocketed. With prominent examples such as ChatGPT [1], StableDiffusion [2], and Gemini [3], the buzzword AI can be found in many popular media headlines [4, 5]. This cultural phenomenon continues within the scientific domain, where AI is revolutionizing research across different fields. From accelerating drug discovery [6, 7] to improving climate modeling [8, 9], its potential to boost scientific discoveries is becoming increasingly clear. This is underscored by the recent Nobel Prize awarded for the development of AI in biochemistry [10]. For this progress to continue, however, it is critical that researchers stay informed about the latest developments in their field. It is also important that the field remains accessible to a wide range of talent. Inclusivity fosters innovation and encourages the diverse perspectives essential for groundbreaking discoveries that benefit society.

One area profoundly impacted by advances in AI is bioinformatics, particularly in the field of proteomics, where AI models analyze massive data sets and identify patterns and correlations that were previously undetectable [11]. The benefits of AI for proteomics are especially significant in the prediction of peptide properties. Here, it can be used to predict the behavior of peptides in mass spectrometry (MS) experiments. By predicting peptide properties, it is possible to generate expected in silico spectra of known and unknown peptides, allowing for more accurate identification and quantification [12]. To this end, the most commonly studied properties in the field are retention time and fragment ion intensities, while other properties such as precursor charge state distribution (CSD) or protein digestibility are less frequently studied.

1.1. Aim

In this review, we will follow the workflow of a proteomic MS experiment to demonstrate how AI is applied in current prediction models. While the models we focus on have either self‐reported performance gains over recent counterparts or have been identified as superior in comparative studies, it is important to note that this does not mean they are universally optimal. Other models may perform better in certain scenarios or under different conditions. Our goal is twofold: First, to guide new talent into this exciting field, and second, to update more experienced researchers about recent advances beyond the key property (or properties) on which they may currently be focused on. To achieve the first goal, we aim to make the field more accessible by briefly explaining how the key layers of AI models work in the context of selected examples across different peptide properties. This approach ensures that newcomers can develop an intuitive understanding of the fundamental components and how they are applied in real‐world scenarios. The selection of models attempts to cover a wide range of different approaches, architectures, and methodologies to show the diversity of techniques used in peptide property prediction. To keep the barrier of entry as low as possible, we included background information on the fundamentals of machine learning (Supporting Information). While not aiming to be exhaustive to achieve the second goal, we provide a comprehensive collection of the models developed in recent years to provide a clear picture of the field's current state (Table S1). In doing so, we not only highlight the strengths and applications of these tools but also discuss their limitations and identify promising directions for future research.

This paper focuses on models for predicting peptide properties in the context of standard MS‐based proteomics experiments or properties that influence MS‐based proteomics, building on prior work [11, 12, 13, 14], which we encourage to read as well. For work focusing on biomedical properties, such as classification of MHC‐binding peptides, we refer to other reviews [15, 16] with this specific focus. We also do not focus on specialized MS‐based proteomics approaches like for cross‐linked peptides (e.g., pDeepXL [17]) or glycopeptides (e.g., DeepGlyco [18]). Although our focus is on MS, many of the techniques discussed here are also applicable to other domains, such as biomedical properties.

1.2. Limitations in Model Evaluation and Comparison

The process of building and evaluating machine learning (ML) models involves five critical components: (1) training data, including preprocessing steps; (2) model architecture; (3) loss function; (4) evaluation data; and (5) evaluation metrics. Changes in any of these components can significantly influence a model's apparent performance, making fair comparisons between models inherently challenging. This difficulty is exacerbated by the lack of universally accepted benchmark datasets or standardized training sets for the peptide properties discussed in this review. While datasets such as ProteomeTools/PROSPECT [19], which provide synthetic data with reliable labels, and the nine‐species dataset [20] are available, none of these have been consistently adopted across studies. As a result, it becomes nearly impossible to determine which models are truly “better” or “best” and what specific changes contributed to improved performance. For example, consider two models evaluated using the mean absolute error (MAE) metric. One model is trained using an MAE loss function, while the other uses a mean squared error (MSE) loss function on the same dataset. The first model is likely to perform better on the MAE metric, because it was optimized for that specific criterion.

Furthermore, similarity between training and evaluation datasets can significantly influence model evaluation outcomes. Models trained on data that is similar to the evaluation dataset may appear to perform better, not because of superior architecture or methodology, but due to overfitting to specific characteristics of the data. Given these complexities, this review refrains from making judgments about which models, architectures, or approaches are objectively “better” or “best.” Instead, it highlights the challenges of evaluating and comparing models within this domain.

2. MS‐Based Peptide Property Prediction

A standard MS‐based proteomics experiment involves several steps: protein digestion, chromatographic separation, peptide ionization, sometimes followed by optional gas‐phase separation, and peptide fragmentation. This approach is commonly referred to as bottom‐up or shotgun proteomics [21]. Accurate prediction of peptide behavior at various stages of this process can increase confidence in peptide identification and help detect peptides that would otherwise be missed, with both aspects having implications for protein quantification [22]. In data‐independent acquisition (DIA) workflows, the shift toward predictive models has become essential in recent years, moving beyond reliance on experimental libraries alone [23]. Empirical spectral libraries provide key information from experiments, such as the proteins and peptides that may be present, charge states (CS), retention times (RT), and fragmentation patterns, but they are limited, especially in their ability to represent all potential variants. While data‐dependent acquisition (DDA) has traditionally not relied on libraries due to their incomplete nature, targeted approaches, and DIA have become more reliant on them. This reliance has likely led DIA to adopt predictive modeling techniques more quickly. However, DDA is also increasingly shifting towards these methods, driven by advances in computational tools and the need for more comprehensive peptide identification and quantification.

2.1. Digestibility

2.1.1. Background

After protein extraction, which we will not cover in this review, the first step in MS‐based proteomics typically involves the enzymatic digestion of proteins. Specific proteases cleave the proteins at designated sites into smaller peptides. These peptides are then analyzed to identify and quantify their parent proteins [24, 25]. The process of enzymatic digestion is stochastic, meaning that each potential cleavage site in a protein has a specific probability of being targeted by the protease. Rather than cutting equally at every possible site, proteases preferentially cleave certain sites, resulting in different peptides being produced in each digestion event [26]. In theory, given infinite time, the protease would eventually cleave all possible sites, resulting in a consistent outcome. In practice, however, external factors like time, temperature, and pressure as well as peptide properties like their 3D structure impact the digestion process [25, 27, 28]. This variability influences the final set of peptides available for analysis, affecting both the efficiency and coverage of protein identification in proteomics experiments, a principle exploited by limited proteolysis‐coupled mass spectrometry (LiP‐MS) [26]. Considering the likelihood of peptide generation during enzymatic digestion helps to predict which peptides will appear in the analysis [25, 27] and, thus, may provide additional evidence for the presence of certain proteins in a sample [28].

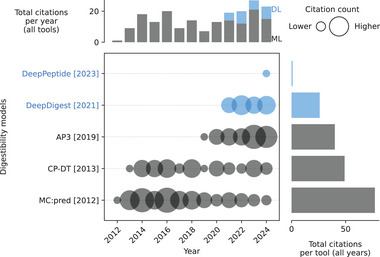

Different models have been proposed to predict the digestibility of peptides (Table S1). The earlier ones are based on classical machine learning methods like support vector machines (SVM) in MC:pred [29] or decision tree (DT)‐based methods like CP‐DT [30] or AP3 [27]. Recently, however, there has been a shift toward DL models applying more complex architectures (Figure 1) [25, 31].

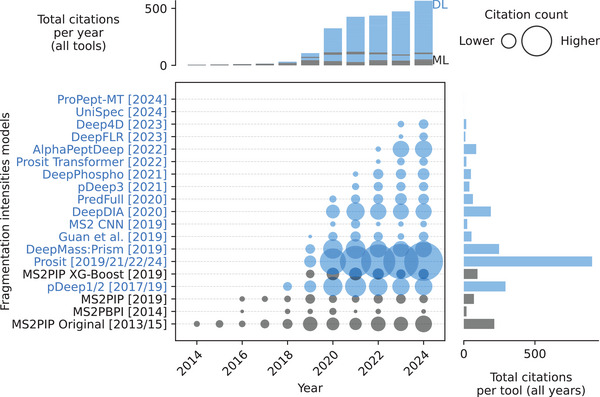

FIGURE 1.

Citations over time for different digestibility models. As of February 18, 2025. The cutoff years are 2010 and 2024. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

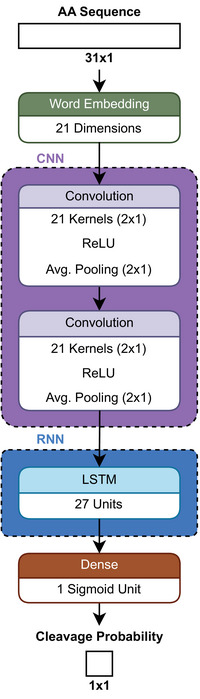

2.1.2. Introduction to DeepDigest

One of these modern deep learning approaches, called DeepDigest [25], predicts cleavage sites in protein sequences and their respective probabilities based on the chosen protease. The architecture of DeepDigest (Figure 2) is designed to consider that proteolytic digestion is influenced not just by the sequence at the cleavage site itself, but also by the surrounding amino acids. During its development, it was found that including more neighboring amino acids improved the model's performance, but this improvement plateaued at about 15 amino acids on either side of the cleavage site. To utilize this information, sequences of 31 amino acids are embedded in a 31 × 21 matrix using word embedding (see Supporting Information). The embedding is fed into a convolutional neural network (CNN) with average pooling to capture the relationships between amino acids and to detect discriminative patterns [25].

FIGURE 2.

Schematic architecture of DeepDigest [25].

CNNs are extensively used in computer vision, where they have significantly improved the ability to identify patterns and features that represent different objects in images [33, 34]. Following the CNN, a type of network known as long short‐term memory (LSTM) is used to capture the dependencies between amino acids based on their positions in the sequence [25]. LSTMs are designed to retain information over long sequences, allowing the model to consider how the presence of one amino acid affects the characteristics of the next one in the sequence. They are a type of recurrent neural network (RNN) and, in principle, able to handle inputs of variable lengths [35]. However, in practical settings, the input is often padded to have a constant length [25]. The outputs of the LSTM are combined in a dense layer (also called a fully connected layer) to form a single value. Finally, a sigmoid function is applied to this output, resulting in a value between 0 and 1 for each 31‐mer, indicating the probability of a cleavage event occurring at that specific site [25].

During the development of DeepDigest, the challenge of unbalanced data became apparent, where the number of used cleavage sites significantly outweighed the number of missed (uncleaved) cleavage sites. Such an imbalance significantly impacts the training process by affecting the loss (see Supporting Information). Specifically, the model is not penalized as often or as strongly for incorrect predictions on the underrepresented class because it encounters these instances less frequently during training. As a result, the model fails to learn how to effectively predict the minority class, leading to poorer performance on that class. This problem is not specific to digestibility and is likely a source of error in many peptide property prediction models. To address this issue, a balanced cross‐entropy loss function has been implemented for DeepDigest:

| (1) |

where ∈ {0, 1} is the ground‐truth of the cleavage site, ∈ [0, 1] is the predicted cleavage probability, and ∈ [0, 1] is an “empirically” adjusted class weight for [25]. Although it was not explained what “empirical” means, it likely refers to the inverse frequency of the classes, making the less frequent class more important. This approach improved the model's sensitivity to the less frequent cleavage sites, leading to a more robust and reliable prediction framework.

For the base model of DeepDigest, the area under the curve (AUC) values (see Supporting Information) ranged from 0.849 to 0.978 across different proteases. The model was further improved through transfer learning (see Supporting Information), where it was first pre‐trained on a large dataset and then fine‐tuned on smaller, protease‐specific datasets to account for varying experimental conditions. This approach led to a significant increase in performance, improving AUC values by up to 13.6% for the lowest‐performing protease, LysN. To validate the model, it was compared to MC:pred on four different trypsin datasets. DeepDigest consistently outperformed MC:pred, with AUC scores of 0.967, 0.987, 0.987, and 0.985, compared to 0.961, 0.945, 0.972, and 0.935 for MC:pred [25].

2.1.3. Limitations and Future Directions

The DeepDigest publication implies that the input sequences are restricted to unmodified amino acids, while the training data include fixed and variable modifications depending on the dataset [25]. This inconsistency could introduce bias, as the model's predictions depend not only on the sequence itself but also on the experimental conditions represented in the training data. However, we were unable to verify that the input is truly restricted to unmodified amino acids, as the model is only available for download over an insecure HTTP connection. Since post‐translational modifications (PTMs) can inhibit protease activity and thus affect proteolysis [36], it is essential to support input of modified amino acid sequences. This adaptation would allow the model to predict digestion more accurately for any given sequence, rather than being confined to the conditions of the training data.

Another potential bias source is that peptide quantification is limited to those peptides detectable in mass spectrometry analysis. If a peptide is unlikely to be detected, due to poor ionization or other factors, it will be marked as absent, even if it is present in the digested sample [25]. This discrepancy could distort the labeling, making it no longer a reliable ground truth. We will only briefly mention this issue here and revisit it in detail in the section on detectability.

DeepDigest provides a modern approach to digestibility prediction and performs well compared to previous models. However, there are a few areas for potential improvement. First, its accessibility could be enhanced by adopting common practices, such as making it available on a trusted platform like GitHub. Following the FAIR (findable, accessible, interoperable, reusable) principles is important for any machine learning model [37]. Second, allowing the specification of PTMs in the input would help reduce biases introduced by experimental conditions. Finally, extending digestibility predictions to include peptides beyond those detectable by mass spectrometry would offer a more complete view. Addressing these areas could make future models even more reliable and comprehensive.

2.2. Retention Time

2.2.1. Background

After proteins are digested into individual peptides, they are separated using liquid chromatography, primarily to reduce the sample complexity. There are several types of liquid chromatography, such as reverse‐phase [38], ion‐exchange [39], and size‐exclusion [40], each suited to specific peptide characteristics. The time it takes for each peptide to pass through the chromatography system, known as the retention time (RT), depends on how the peptide interacts with the stationary phase of the column, the mobile phase, other analytes, and other system parameters (e.g., temperature and pressure). These interactions are influenced by factors such as the peptide hydrophobicity, size, conformation, and the experimental conditions [41, 42]. In most cases, bottom‐up proteomics experiments use a reversed‐phase column in‐line with a mass spectrometer [41].

Knowledge of a peptide's retention time is essential, as it significantly increases confidence in its identification [43]. It is also crucial for targeted DIA analyses, as it allows for the scheduling of peptide precursor extraction windows, improving both specificity and throughput [44]. Databases containing known retention times for each peptide, ideally matching the experimental setup, are therefore needed. However, creating such databases is time‐consuming and expensive due to the large variety of peptides and LC conditions [45].

To overcome the limitation of varying retention times between different runs, the indexed retention time (iRT) was introduced [46]. This metric indicates the retention time of a given peptide relative to a set of reference iRT‐peptides, allowing for the alignment of different measurements and more accurate predictions [46]. Most RT predictors today use some form of normalized RT, most commonly iRT, to harmonize the training data and to achieve some degree of generalization [47, 48]. Consequently, predicted iRTs often require calibration with experimental data to provide meaningful real‐world insights [49].

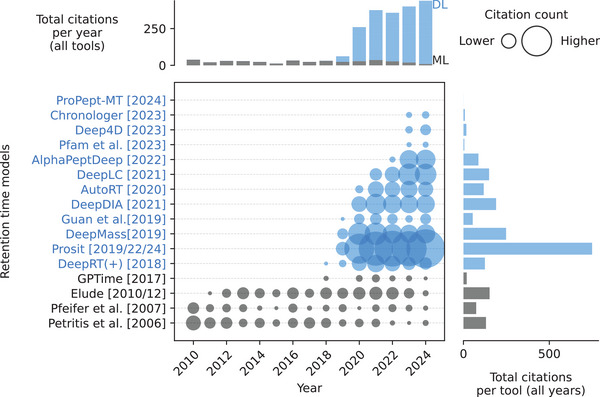

Peptide RT prediction models have evolved significantly, transitioning from early machine learning approaches like multi‐layer perceptrons (MLPs) and SVMs to more sophisticated deep learning techniques (Figure 3 and Table S1). The shift began with DeepRT(+) [50], which uses CNNs, followed by Prosit [51], which uses an architecture known as bidirectional gated recurrent units (BiGRU) in combination with the so‐called attention mechanisms. This DL trend continued with models such as DeepLC [48], using CNNs, and AlphaPeptDeep [47], which incorporates BiLSTMs and attention. While model complexity has increased, the input focus remains on peptide sequences, with variations in loss functions such as MSE, MAE, and root mean squared error (RMSE) (see Supporting Information). A notable trend is the rise of open‐source development, with many models now available on GitHub. Recent models, including Prosit, AlphaPeptDeep, and DeepLC, are rapidly gaining traction, with Prosit seeing a sharp increase in citations, from 20 in 2019 to 181 in 2024. However, it is important to note that AlphaPeptDeep and Prosit do not exclusively predict retention time, which leads to higher citation counts, as they have broader applicability.

FIGURE 3.

Citations over time for different retention time models. As of February 18, 2025. The cutoff years are 2010 and 2024. Chronologer is a preprint. Prosit includes publications from 2019, 2022, and 2024 (preprint). Elude includes publications from 2010 and 2012. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

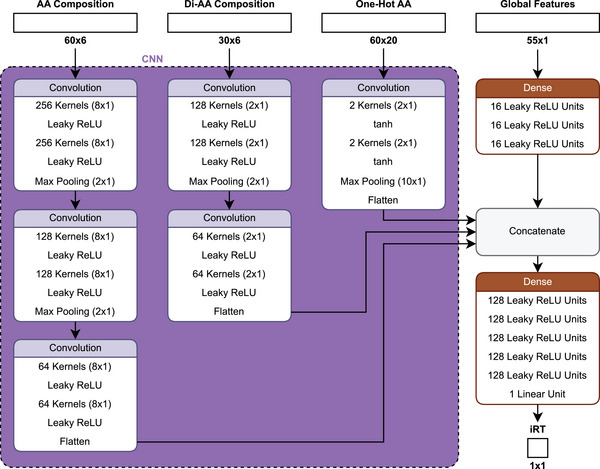

2.2.2. Introduction to DeepLC

DeepLC [48] uses an architecture (Figure 4) that aims to handle PTMs that are not part of the training set. This is approached by three additional inputs to a one‐hot encoding (see Supporting Information) of amino acids as follows: first, an amino acid composition matrix encoding the number of atoms (C, H, N, O, P, and S) of each amino acid. Second, a diamino acid composition matrix that does the same, but for two amino acids at a time. These two‐atom count embeddings allow for the representation of modified amino acids by adding the respective atom counts of the modification. Third, a global numerical feature list, including, for example, the length and mass of the peptide. Each input takes its own path within the network, where all but the global feature inputs are subjected to convolutional layers. The paths are then combined and processed by multiple dense layers. The model is optimized using the MAE as a loss function [48].

FIGURE 4.

Schematic architecture of DeepLC [48].

The multi‐input approach was successful in predicting most modifications that were not included in the training set, but it was not successful for some, particularly phosphorylation and nitrotyrosine modifications. This could be due to the different chemical structures of these modifications compared to those in the training set [48]. It suggests that the model may be overfitting or experiencing data leakage from the modifications used during training, limiting its ability to predict modifications that are chemically distinct. This limitation may arise from an inadequate feature representation or insufficient coverage of the relevant chemical space in the training data, hindering effective generalization. Ultimately, it highlights an ongoing challenge with out‐of‐distribution (OOD) problems for PTMs, despite efforts to address it.

2.2.3. Introduction to Chronologer

As mentioned above, deep learning methods require substantial amounts of training data and typically assume that these data are accurate. However, proteomics data are often imperfect and likely to contain incorrect labels, as it is commonly derived from database search results filtered to a 1% false discovery rate (FDR). Furthermore, creating large datasets, covering the physicochemical space of interest as best as possible, can be cumbersome and expensive [52]. In a recent preprint model called Chronologer [49], these issues were addressed by creating a harmonized dataset from multiple experiments and training a model that considers the error in the data. The datasets included those used to train other models such as DeepLC and Prosit. Harmonization was not done by linearly aligning the gradient using shared reference peptides across all datasets, but by separately predicting the iRT for all Prosit‐predictable peptides in each dataset. A monotonic function was then fitted to align the measured retention times to the respective predicted retention times from Prosit (the Prosit prediction space). Using this function, it was possible to transform all measurements into the Prosit prediction space and thus harmonize all datasets to the same global reference without the need for a shared standard across datasets. The harmonized data were then aligned to a physiochemically meaningful dimension, representing the hydrophobic index of a reversed‐phase high‐performance liquid chromatography. This created a database containing ∼2.2 million unique peptides measured under different experimental setups. In addition, the generated dataset contained 10 different PTMs [49].

Chronologer’s residual CNN was trained using a loss function that dynamically masks datapoints that fall outside of the 99% confidence interval when calculating the errors. To select a good base loss function, harmonized measurements of the same peptides were compared across different experimental conditions. These differences were then analyzed to see whether they followed a Gaussian distribution (related to MSE) or a Laplacian distribution (related to MAE). The analysis found that the empirical distribution was better described by a Laplacian distribution, so the MAE was chosen as the loss function. Together with the masking approach, this resulted in a significant improvement in performance, achieving an MAE of 0.81 compared to the reported MAEs of 1.29 for DeepLC, 1.27 for Prosit, and 1.48 for AlphaPeptDeep [49].

2.2.4. Limitations and Future Directions

While Chronologer is not able to predict unseen modifications out of the box, it has been shown that new modifications can be learned relatively quickly using transfer learning, without requiring excessive training data [49]. DeepLC has demonstrated the ability to predict retention times for peptides with modifications that are chemically similar to those in its training set [48]. However, to our knowledge, no study has shown convincing results indicating that any model can perform well on peptides with chemically dissimilar modifications. Bearing in mind that the Chronologer results have not yet been peer‐reviewed, they show how an error function adapted to the analyzed data can improve the performance of a model. This data‐aware approach could be valuable for other properties as well.

2.3. Charge State Distribution

2.3.1. Background

After chromatographic separation, the sample enters the ion source where peptides are ionized, most commonly by electrospray ionization. The number of charges carried by a peptide is called its charge state (CS). Peptides can exhibit various CS depending on, for example, their amino acid composition and order, their length, and their modifications [53, 54, 55, 56]. The CS is also influenced by experimental factors like the ionization method, the LC mobile phase, and the complexity of the sample matrix [34, 57–61]. But even peptides with the same sequence can have different CS within a single experiment. The ratio of different CS for the same peptide sequence is called charge state distribution (CSD) [53, 62, 63]. Since mass spectrometers measure ions based on their mass‐to‐charge ratios (m/z), predicting the dominant CS and CSD is critical for building accurate spectral libraries, aiding in peptide identification during MS analysis [53].

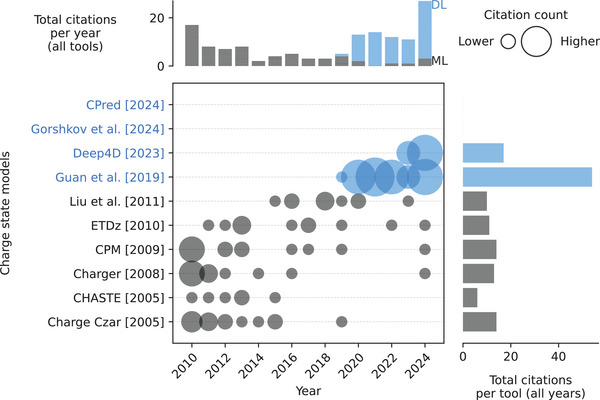

Compared to other properties, such as retention time, there has only been limited published work aimed at predicting CS or CSD of peptides using machine learning (Figure 5 and Table S1). By 2010, several basic machine learning models utilizing linear regression, or SVM, had been developed in the context of electron‐transfer dissociation (ETD) fragmentation. Here either the highest possible or the most abundant precursor charge state given a particular ETD spectrum is estimated. Thus, these models use MS2 spectra as input, often manually curated, which limits the complexity of the training data [64, 65, 66, 67, 68, 69]. In 2019, the Guan et al. model started the deep learning era for more general precursor CS prediction by being the first one on several ends as follows: (1) changing the input to peptide sequences which included some PTMs. (2) Using MS1‐based extracted ion chromatogram data to cover as much of the underlying CSD as possible. (3) Using a BiLSTM model to predict precursor CSD [62]. This development led to an increased interest in CSD prediction.

FIGURE 5.

Citations over time for different charge state and charge state distribution models. As of February 18, 2025. The cutoff years are 2010 and 2024. AlphaPeptDeep is not included as its predictor has not been part of a publication yet. CPM is an abbreviation for Charge Prediction Machine. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with: https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

Building on Guan et al. and retaining most of the concepts, the recent models Gorshkov et al. [53], and CPred [63] reported improvements of various aspects. The former focuses on the MS1‐based data processing and the loss function, and the latter focuses on PTM integration and feature engineering [53, 63]. In parallel to these developments, several other deep learning‐based CS prediction models have been developed to support CCS or MS2 spectra prediction and in some cases are mentioned as sidenotes in the respective main publications. They differ from the above CSD prediction models by either predicting only a single CS or a binary representation of a limited number of CS and are copies of the architecture used for the main prediction task of the publication [70, 71, 72].

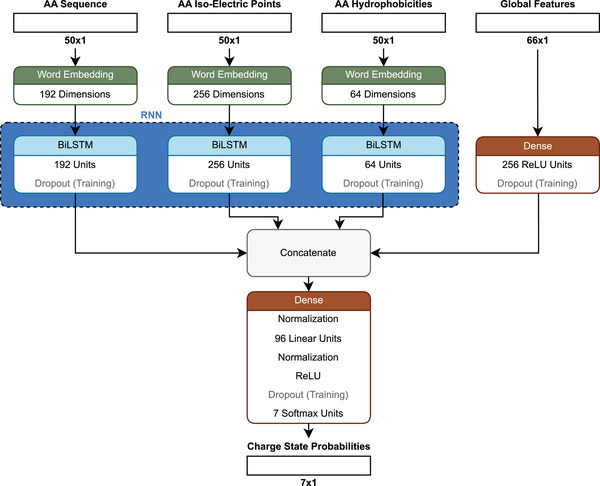

2.3.2. Introduction to CPred

CPred is a model that uses a bidirectional long short‐term memory (BiLSTM) architecture (Figure 6) [63]. A BiLSTM is similar to the already mentioned LSTM in its ability to capture contextual relationships between amino acids in a sequence. However, while an LSTM processes the sequence in a single direction (from start to end), a BiLSTM reads the sequence in both directions, thus extracting information from the beginning and the end simultaneously. This bidirectional approach enables the model to gain a more comprehensive understanding of the sequence by considering both the left and right context [73]. To encode the peptides, a similar approach to the one described for DeepLC was followed where amino acids are reportedly one‐hot‐encoded in addition to providing the atomic compositions separately to capture PTMs. Contrary to DeepLC, CPred does not include atom counts per amino acid, only calculating the peptide‐wide atom count. Other static features describing peptide‐wide characteristics are, for example, peptide length and the fraction of basic amino acids. The sequential features are the already mentioned one‐hot‐encoded amino acids and the per‐amino acid specific characteristics: hydrophobicity, and isoelectric point. The loss function is the MSE [63].

FIGURE 6.

Schematic architecture of CPred [63].

In contrast to the Guan et al. model, CPred does not use MS1‐based, but MS2‐based CSDs. A strong class imbalance in the data was noted, observing that the training data had higher probabilities for +2 and +3 charges compared to, for example, +5 or +7. As a result, the model is not able to accurately predict the most abundant CS, if it is expected to be higher than +4. In the study, it was suggested to retrain the model with a balanced data set [63]. However, it might also be possible to adjust the loss function to address the class imbalances. An example could be the weighted cross entropy loss, as mentioned in the digestibility section. Here the entropy (the difference between a known and a predicted probability) could be weighted by the inverse average probability of each CS [74].

CPred was evaluated based on the median Pearson correlation coefficient (PCC) (see supporting information) between the predicted and experimental probabilities across all charge states. A value of 0.9997 was reported [63]. This metric, however, might not be suitable for the highly imbalanced dataset as it does not represent the infrequent classes of CS larger than +4 well. A CS wise evaluation might be more suitable or if a single value is preferred, the mean of the CS wise correlations.

PTMs were found to mostly have minor impacts on the performance, however, the results in the CPred study indicate that some modifications, such as trimethylation, GlyGlycylation, crotonylation, and biotinylation, show lower PCCs. An in‐depth analysis on this issue was not provided [63], but these lower PCCs raise concerns about the generalizability of the model to unseen modifications, as these specific PTMs differ more structurally from other lysine modifications. For instance, crotonylation is the only lysine‐PTM in the CPred set with an alkene bond, trimethylation is unique in possessing a quaternary ammonium group (although dimethylations can also have a quaternary ammonium, it is less common), GlyGlycylation is distinguished by a peptide bond, and biotinylation contains the distinct biotin structure. These structural differences suggest that CPred might struggle to generalize to unknown chemical features of PTMs, particularly when most lysine modifications in the dataset have different features. While one might argue that the well performing TMT6‐plex should also fall into this category, it stands apart due to its significant representation in the dataset, with 192,452 observations. This is far more than the other PTMs, most of which have fewer than 500 observations. For a study that investigates the impact of different PTMs on retention time, CS, and fragmentation we refer to [75].

A major point that needs to be considered when discussing any deep learning model, here highlighted on CPred, is that we observed sometimes significant discrepancies between the information provided in a manuscript and the implemented code. As mentioned above for CPred, the sequential inputs were reported to be the one‐hot encoded amino acid sequence, as well as the per amino acid isoelectric points and hydrophobicities. However, the supplied code shows that the amino acid sequence is not one‐hot encoded but encoded using word embedding. Further, while the per amino acid isoelectric points and hydrophobicities are used as input they are fed into a word embedding layer, effectively turning the float values into integers and using these integers as indices for the learned embeddings, thus limiting the information input to the rounded down values. This is especially significant for hydrophobicity as these values range between −0.7 and 1. These discrepancies highlight the need for a standardized reporting and common standards for supplied code, allowing reviewers to easily find and highlight such instances. Until this is part of the publication process, checking the source code of models before relying on them is advisable.

2.3.3. Limitations and Future Direction

Due to the median PCC, used by many studies in this area, being an unsuitable metric for determining how well the present models compare against each other, further studies are needed to assess this issue. Generally, the different models for CS and CSD prediction are not directly comparable due to the many variations ranging from training data via label preparation to architectures. Like with DeepLC, the CPred study results suggest that simply adding atom counts does not enable accurate predictions for all unknown peptide modifications. This approach falls short when the modifications have chemically distinctive features from those in the training data. Further, like with DeepDigest, new standards required for publication should be implemented in order to ensure a high quality of models that allow for further development.

2.4. Ion Mobility

2.4.1. Background

LC‐based separation is the preferred method for separating peptides to decrease sample complexity. Yet, some peptides might not be separable by the standard setup used for online LC. Ion mobility spectrometry (IMS) can in some cases separate these peptides, even if they are isomers, based on their different conformations and collisional cross sections (CCS), enabling more refined analysis of complex mixtures [76]. IMS instruments are classified into temporal dispersive, spatial dispersive, and confinement/selective release types, with techniques like drift tube ion mobility spectrometry (DTIMS), traveling wave ion mobility spectrometry (TWIMS), differential mobility spectrometry (DMS), field asymmetric ion mobility spectrometry (FAIMS), and trapped ion mobility spectrometry (TIMS) [77]. TIMS, a common version of IMS used today, works by guiding ions along an electric field with an inert buffer gas flowing into the opposite direction. This gas, usually helium or nitrogen, facilitates the different drift speeds of ions by colliding with them [78].

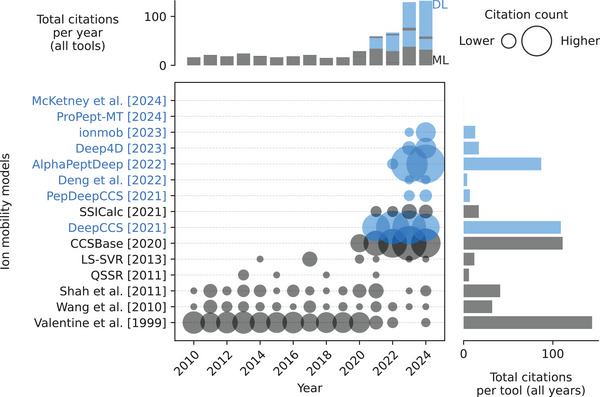

The research landscape of peptide ion mobility prediction indicates an upward trend in research activities and citations over time (Figure 7). Some of the first attempts predicted the drift time, an indication of ion mobility [79]. Initially, a series of traditional ML methods such as single hidden layer neural network [80], partial least square [81, 82], support vector regression [82, 83, 84], and Gaussian process [82] combined with extensive feature engineering on the physico‐chemical properties were published. In recent publications from 2021 to 2024, a notable shift has occurred, transitioning from traditional methods to more advanced deep learning architectures, including CNNs, BiLSTMs, and Transformers, which aim to predict the peptides CCS (Figure 7 and Table S1) [47, 70, 71, 85–88]. Nowadays the common input types across models include sequence information and charge state. Regarding training data, earlier studies relied heavily on in‐house datasets, whereas more recent models frequently utilize the dataset from Meier et al. [70]. Open‐source practices have also improved, with many recent models being released under licenses like MIT and Apache‐2.0, and their code being made available on platforms like GitHub. This code often supports both inference and training, reflecting a trend towards greater accessibility and usability in the research community.

FIGURE 7.

Citations over time for different ion mobility models. As of February 18, 2025. The cutoff years are 2010 and 2024. McKetney et al. is a preprint. DeepCCS is an abbreviation for DeepCollisionalCrossSection. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with: https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

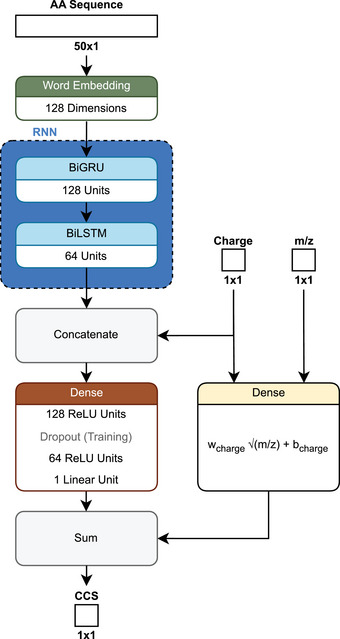

2.4.2. Introduction to ionmob

Since the CCS shows a strong correlation with the square root of m/z [78, 86], ionmob [86] approaches the task of predicting CCS by starting with exactly this square root of m/z. It is used as an initial projection, aiming to circumvent problems regarding unbalanced data, where higher charges are less frequent. It also allows comparison of the predictions against the baseline of the simple square root calculation and thus establishing a minimum required accuracy. The architecture of ionmob follows a dual‐path design (Figure 8). The main path takes the amino acid sequence and embeds it into a vector space using token embeddings (see Supporting Information). While the embedding process was not fully explained in the study, the text implies that individual amino acids were used as tokens, which would then be referred to as word embedding [86].

FIGURE 8.

Schematic architecture of ionmob [86].

The embeddings are then processed by bidirectional gated recurrent units (BiGRU) [86]. Similar to LSTMs, GRUs are a type of recurrent neural network designed to capture long‐term dependencies in sequential data. The key difference between them lies in how they process and manage information. LSTMs use three gates, each governed by sigmoid functions, to control the flow of information. The input gate regulates how much new information is added to the memory, the forget gate determines how much of the previous information is discarded, and the output gate controls how much of the updated memory is passed to the next step. In contrast, GRUs simplify this process by using only two gates: the update gate, which balances the retention of previous information with newly computed information, and the reset gate, which controls how much of the previous information is used to compute the new state [89]. BiGRU, like BiLSTM, refers to a design where information flows in both directions. GRUs were shown to produce smaller errors compared to LSTMs in a study on traffic flow [89]. To our knowledge, however, it is unclear whether this holds true for peptide property predictions based on amino acid sequences.

In ionmob the output of the BiGRUs is processed by two dense layers which also incorporate information about the CS. This output is then combined with a learned square root projection of the mass to charge ratio as an initial projection to result in the predicted CCS value. Measured CCS were aligned to a reference dataset, and training was performed using the MAE between predicted and aligned measurements [86].

The performance was compared against three other recently published CCS predictors AlphaPeptDeep [47], PepDeepCSS [85], and DeepCollisionalCrossSection [70] based on the median absolute percent error (MAPE) (see Supporting Information) to account for differences in training data normalization. ionmob reported comparable performance with PepDeepCSS and DeepCollisionalCrossSection while AlphaPeptDeep performed slightly worse. Depending on the CS, the prediction error changes more strongly across all compared prediction models. Peptides observed with a charge state of +2, +3, and +4 exhibited MAPEs of around 1.1%, 1.9%, and 2.8%–8%, respectively [86]. This indicates that all models do not generalize well for charge states that appear less frequently in the training dataset. While the addition of an initial projection might have helped in addressing that problem, none of the models adjusted their loss functions for class imbalance [47, 70, 85, 86]. A balanced metric like a weighted MAE, where the weights correspond to the inverse frequency of each charge state might allow for predictions that generalize better.

It was not addressed how ionmob performs with previously unseen modifications, likely because it currently supports only a limited set of common modifications [86]. Based on ionmob’s source code, supported modifications include phosphorylation (serine, threonine, and tyrosine), N‐terminal acetylation (lysine), oxidation (methionine), carbamidomethylation (cysteine), and carbamylation (cysteine) [86].

2.4.3. Limitations and Future Direction

As discussed in the previous properties, class imbalance seems to be a problem in current CCS prediction models too. The less frequent a given charge state is, the larger the error of the corresponding CCS gets. In general, this problem seems to be overlooked, as none of the above methods take measures to address it. Further, the problem of accurately predicting modified peptides remains unsolved for CCS. While ionmob, PepDeepCCS, and DeepCollisionalCrossSection only have a fixed limited number of modifications that are embeddable [70, 85, 86], AlphaPeptDeep employs a chemical composition approach similar to the ones discussed earlier [47]. However, the results in the AlphaPeptDeep study, regarding how well it performs on individual PTMs for CCS predictions, are not detailed enough to draw a conclusion about its performance. Therefore, further studies regarding the CCS predictions of peptides with unknown modifications are needed.

2.5. Fragmentation Ion Intensities

2.5.1. Background

To increase sensitivity and specificity, tandem MS is used in most proteomic measurements. Here m/z bands containing selected peptide ions (precursor ions) are selected for fragmentation. This fragmentation can be performed in multiple ways [90, 91]; however, the commonly applied fragmentation in bottom‐up proteomics is collision‐induced dissociation (CID) in the form of resonance‐type CID (referred to as CID on Thermo Fisher instruments) or beam‐type CID (referred to as HCD on Thermo Fisher instruments or CID by most other vendors) [90, 91].

Predicting a peptide fragmentation spectrum can take one of two main approaches: (1) focusing on the prediction of backbone ions (i.e., a, b, c, x, y, and z ions, with or without neutral losses) or (2) predicting the complete spectrum, including non‐backbone ions (e.g., immonium ions, internal ions, or even known‐unknown peaks). In this section, we will concentrate on the backbone‐only approach, as it remains the current standard in the field. However, it is important to note that recent advancements, such as the PredFull [92] model, have explored full‐spectrum predictions. This expanded approach holds promise for capturing a broader range of fragmentation patterns.

The field of fragmentation ion intensity prediction for peptides (Figure 9 and Table S1) has experienced significant advancements, particularly with the transition from traditional ML methods to deep learning techniques. Early models, such as MS2PBPI [93], employ gradient boosting, while more recent models have adopted various neural network architectures, notably BiLSTMs in models like pDeep3 [94] and DeepMass:Prism [44]. The latest trend is the integration of transformer architectures, as seen in AlphaPeptDeep, with some models employing hybrid approaches that combine different architectures. The evolution of these models reflects a broader trend in bioinformatics. Input types remain consistent across these models, typically requiring peptide sequence and CS, with some incorporating additional parameters such as collision energy. The choice of loss functions varies, with common options including PCC and MSE. While there is no universally accepted training dataset, many models are trained on in‐house datasets or utilize publicly available resources like ProteomeTools [52]. Like with other properties there is a clear preference towards open‐source development, most licensed under Apache‐2.0 and available on platforms like GitHub, supplying both training and inference code. Citation trends indicate a dynamic field with continuous innovation. Early traditional ML models gradually increased in citations, while DL models introduced around 2019 saw significantly rising interest. Models like Prosit and MS2PIP have maintained high citation counts, reflecting their foundational impact on the field, while newer models such as AlphaPeptDeep have quickly gained traction. However, it is important to note that both Prosit and AlphaPeptDeep are also used to predict other properties, which likely skews the citation counts.

FIGURE 9.

Citations over time for different fragmentation ion intensity models. As of February 18, 2025. The cutoff years are 2010 and 2024. MS2PIP Original includes publications from 2013 to 2015. Prosit includes publications from 2019, 2021, 2022, 2024, and 2024 (preprint). pDeep includes publications from 2017 and 2019. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with: https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

2.5.2. Introduction to Prosit

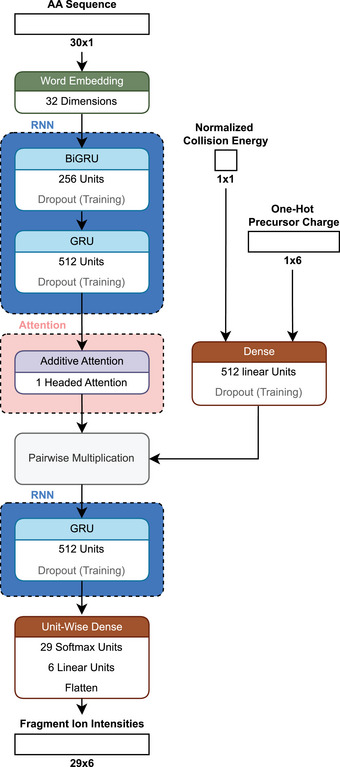

The Prosit model [51] is among the most used peptide property prediction models today. The base version of Prosit was trained on a large, publicly available dataset generated as part of the ProteomeTools project [52] that contained 331,000 synthetic peptides and 5.43 million peptide‐spectrum matches. It encodes the amino acid sequence by word embeddings. In addition, the one‐hot encoded charge state of the peptide ion of interest and a value for the normalized collision energy are fed into a dense neural network (Figure 10). The word embedding is transformed by two stacked GRUs, allowing it to capture the context of the sequence when going along each amino acid. In the original publication, all GRUs are described as bidirectional. However, the model files suggest that only the first GRU layer is a BIGRU [51].

FIGURE 10.

Schematic architecture of Prosit [51].

The information from the GRU layers is then given to an attention layer. The attention mechanism is crucial to the success of the transformer architecture, which has driven the development of powerful large language models like the once behind ChatGPT. In an attention layer, the relative importance of one element to another is evaluated, contrasting sharply with the sequential nature of LSTMs and GRUs. Through sophisticated matrix calculations, a value matrix is generated, indicating how much one value should attend to (or be influenced by) another. These attention values are then used to update the original embeddings, ensuring that primarily relevant information guides the update process [95]. This explanation, however, merely scratches the surface of the topic, and a full explanation is out of the scope for our review. For those interested in a deeper understanding of attention, we recommend exploring the visualizations provided by the 3blue1brown YouTube channel [96]. While not peer reviewed, a visual grasp of the underlying matrix calculations might help newcomers navigate the technical peer‐reviewed papers more easily, lowering the barrier to entry in this field.

Continuing along the Prosit architecture, the processed sequence information is pairwise multiplicated with information on the normalized collision energy and precursor charge to form a single latent space [51]. Latent space refers to a multi‐dimensional representation of data where complex features and patterns are captured in a simplified vectorized form [97]. It is decoded by another GRU and a uni‐twise dense network to a matrix representing the intensities of the 174 supported fragments, covering singly, doubly, and triply charged b‐ and y‐ions for peptides up to 30 amino acids. Currently, the base model of Prosit only supports carbamidomethylated cysteine and oxidation of methionine as modifications. However, this limitation is envisioned to be removed in the future [51].

For training, a normalized spectral contrast loss was used. This function takes two vectors representing the true spectrum and the prediction . These vectors are L2 normalized, turning them into unit vectors. Then the angle between them is calculated by applying the arccos. This angle is normalized to result in a value of 0 if the vectors align, 1 if they are orthogonal or 2 if they are antiparallel:

| (2) |

A recent study [91] compared different deep learning fragmentation prediction models. It was found that Prosit outperforms all other models except for when predictions are made for measurements done on a QExactive instrument using HCD. In this case, Prosit is recommended to be either finetuned or replaced with pDeep3 or AlphaPeptDeep. However, since a QExactive instrument is also using HCD (beam‐type CID) for fragmentation, it may be reasonable to assume that the difference in prediction performance is largely a result of differences in the mass spectrometers (e.g., ion transfer efficiencies) which may result in slight overfitting of the model, rather than a per se difference in prediction performance with respect to the fragmentation method. In the comparison of the different models their published versions were used. In some cases, they were fine‐tuned but it was decided to not retrain them from scratch [91]. For a meaningful comparison of architecture and loss function choices, however, common benchmark datasets and clearly defined evaluation criteria are essential. Unfortunately, this issue is not limited to fragmentation prediction alone. To our knowledge, none of the properties discussed above have community‐wide accepted standards for quantifying model performance. This lack hampers model comparability, making it challenging for researchers to identify areas for improvement and to apply successful approaches from one property to another.

In contrast to Prosit’s normalized spectral contrast loss, pDeep3 uses the PCC to assess the similarity between spectra and AlphaPeptDeep implements an L1 loss [47, 94]. These three loss functions represent different learning approaches. The spectral contrast loss in theory focuses on the general shape of the spectrum, penalizing spectra with poor relative intensities compared to their ground truths. Furthermore, being a measure based on angles in the vector space it is invariant to the actual scale of the values. L1 loss instead focuses on the actual difference in intensity values, which in the case of AlphaPeptDeep were normalized beforehand, as it is sensitive to different scales [47]. The PCC captures the linear relationship between the spectra, making it sensitive to the overall trend, but it does not account for small variations in ratios as effectively as the spectral angle [98], placing it between spectral contrast loss and L1 loss. Given a community wide accepted benchmark dataset, it would be interesting to see how each architecture performs when trained with the different loss functions, to investigate whether the focus on spectrum shape is indeed a factor for driving model performance.

2.5.3. Limitations and Future Direction

An, to the best of our knowledge, overlooked aspect when talking about fragmentation spectrum prediction is that while the training data will also contain a small fraction of false positive peptide spectrum matches (PSMs) (≤ 1% FDR), the FDR at the fragment level, the information the model is trained on, is essentially unknown. Even high‐scoring true positive PSMs are likely to contain incorrectly annotated peaks. Depending on the intensity of these incorrect peaks, the losses used to train a model can be thrown off significantly. This may also explain the long “tails” typically observable in performance distributions.

DL models have improved peptide fragment ion intensity prediction in tandem MS. However, challenges persist, such as limited handling of diverse PTMs, difficulty in generalizing across instruments and fragmentation methods, and a lack of standardized benchmarks for consistent model evaluation. Loss functions vary across models with Prosit’s spectral contrast loss, AlphaPeptDeep’s L1 loss, and pDeep3’s PCC, each emphasizing different facets of spectral similarity. The likely presence of false positive PSMs in training data, combined with poorly defined false discovery rates at the fragment level, adds further complexity. Addressing these limitations could significantly enhance the robustness and applicability of fragmentation prediction models in proteomics.

2.6. Detectability

2.6.1. Background

While the aforementioned properties all are important for the accurate detection and quantification of previously unmeasured peptides and thus proteins, one could view the problem from a more general perspective, asking if a peptide is typically present when investigating a certain protein (proteotypicity), is it able to ionize and travel along the mass spectrometer (flyability), is it then also detectable (detectability), and how well its abundance also represents the parent‐protein's abundance (quantotypicity). These properties are usually discussed together or even used synonymously as they are inadvertently connected to each other and many models have been published aiming to predict them (Figure 11 and Table S1). A peptide can only be quantotypical for a protein if it is proteotypic, able to fly, and accurately detectable. For that, it must be present after digestion and fragment well to be identified consistently. Therefore, detectability and more over quantotypicity should be considered more relevant properties of peptides. Especially quantotypicity includes all other properties like digestibility and flyability. Due to the physical limitations of current mass spectrometers only a subset of peptides can be accurately investigated by MS2 within a reasonable time. Thus, knowledge about the most quantotypical peptides is important to adjust the search space accordingly [91, 92].

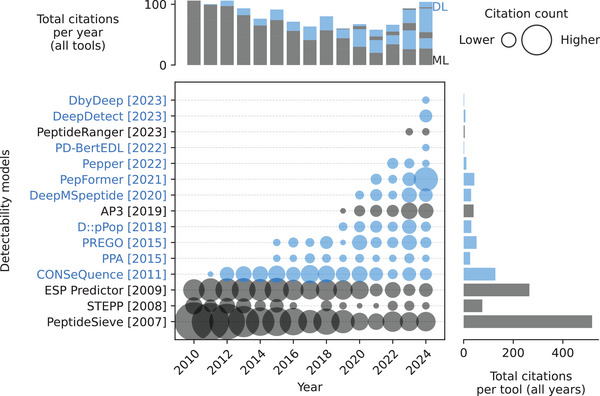

FIGURE 11.

Citations over time for different detectability (flyability) models. As of February 18, 2025. The cutoff years are 2010 and 2024. Pepper is the only flyability model. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with: https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

The models proposed to predict detectability typically use the peptide sequence as input, and in some cases, they also incorporate calculated physicochemical properties. Like with other properties, there has been a shift toward more complex DL models around 2020. Initially, CNNs were the preferred architecture, but current trends have favored sequence‐oriented architectures such as BiLSTMs and transformers. Commonly used loss functions for detectability models include binary cross‐entropy (BCE) and MSE. While earlier models such as PeptideSive [99] and ESP Predictor [100] have seen a decline in popularity, as indicated by decreasing citation counts, newer models like PepFormer [101] and DeepDetect [102] are gaining traction.

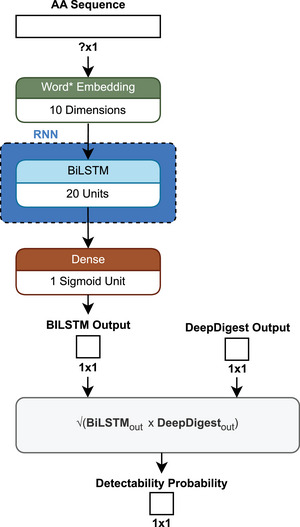

2.6.2. Introduction to DeepDetect

The authors of the previously discussed DeepDigest [25] considered digestion as a significant factor for the prediction of proteotypic peptides, because it directly influences the availability of peptides for subsequent analysis and identification in an MS experiment. They used DeepDigest’s digestibility probabilities in combination with another probability predicted by a simple BiLSTM to get a detectability probability, to build a new model called DeepDetect [102] (Figure 12). The publication does not define the maximum sequence length nor the actual type of embedding. DeepDetect was trained with a BCE loss function to assess whether a given peptide is detectable in the actual measurement. The addition of the digestion probability to the simple BiLSTM showed an increase in the AUC from 0.721–0.949 to 0.845–0.976 depending on the protease used. Further, the digestion probability was added to one of the previous state of the art models, PepFormer, where it increased the AUC from 0.682–0.903 to 0.825–0.972 depending on the protease [102].

FIGURE 12.

Schematic architecture of DeepDetect [102]. *Not clearly defined in the publication but assumed to be word embedding.

Predictions made by DeepDetect were used to filter existent spectral libraries, which were then used to detect proteins in plasma and yeast samples. Searching for only the top 40% of peptides based on detectability decreased the runtime by 42.6% for plasma and 14.8% for yeast, while increasing the precursor identifications by 0.9% and 2.0%, as well as the protein group identifications by 3.6% and 0.3%, respectively [102].

2.6.3. Introduction to PepFormer

PepFormer takes a unique approach to detectability predictions by employing a Siamese network (Figure 13). This architecture aims to generate similar vector representations for peptides with comparable detectabilities. Specifically, a Siamese network processes two different peptides through identical weights, yielding two outputs that are subsequently compared to assess their similarity. For training, the authors applied contrastive loss as follows:

| (3) |

where v 1 and v 2 are the two vector representations for each peptide, D (v 1, v 2) is their Euclidean distance, m is a margin parameter, and is a binary indicator: if both peptides are either detectable or undetectable, and if they differ. Additionally, for each vector representation, an independent cross‐entropy loss was computed for the numerical value of the predicted detectability of each peptide [101].

FIGURE 13.

Schematic architecture of PepFormer [101].

2.6.4. Introduction to Pepper

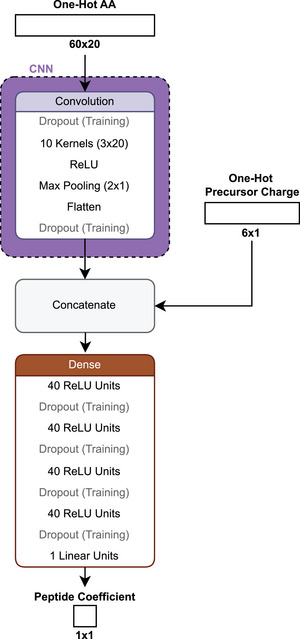

A recent model that was proposed to quantify biases associated with flyability is Pepper [103]. It follows the assumption that peptides stemming from the same parent protein have the same abundance and that their intensities could be quantified as a sequence dependent coefficient multiplied with the adjusted observed parent protein's abundance. Pepper aims to predict these coefficients. To verify the first assumption, multiple sibling peptide pairs were selected, and their observed quantity ratios across different runs were compared. Specifically, the ratio of a peptide in two runs was evaluated against the corresponding ratio of its sibling peptide in the same runs. While the PCC of 0.39 was considered high and used to prove that sibling pairs have equal abundances [103], this correlation should be considered only moderate. Therefore, indicating that if the assumption is true, the peptide‐specific bias is at least not linear. To prove that Pepper reduces this bias for protein quantification, it was shown that the correlation between this adjusted protein quantification and the respective mRNA expression was increased. However, this increase was rather small with a ∼0.03 Pearson correlation, while the absolute Pearson correlation was in the range of ∼0.3 to ∼0.46 which is only moderate at max [103]. This is likely due to the many factors influencing the abundance of a protein outside of mRNA presence like proteolysis or translation rate. While the approach Pepper takes is interesting, there is still more research needed to quantify flyability. In Pepper's architecture (Figure 14), an unusual design choice is the placement of a dropout layer immediately after the one‐hot encoding of the peptide sequence. Dropout, which randomly sets values to zero during training to prevent overfitting, is typically not applied to inputs or one‐hot encoded values, as they already contain mostly zeros. If the layer sets the single “1” in the encoding to zero, it effectively simulates a missing amino acid, or “uncertainty” on the input sequence. The impact of this approach on model performance remains unclear but could worsen model performance substantially.

FIGURE 14.

Schematic architecture of Pepper [103].

2.6.5. Limitations and Future Direction

As mentioned above, both PepFormer and DeepDetect exhibit comparable performance when digestibility predictions are incorporated [102]. This similarity suggests that model architecture alone may not significantly influence predictive capabilities. Instead, meaningful input features, such as digestibility, are likely the primary determinants of model performance. However, it is worth questioning whether DeepDigest does in fact predict digestibility or if it is already a detectability predictor. In its training data, only peptides that were both digested and detected are labeled as positive examples. Peptides that were not detected are assumed to be not digested, which introduces a bias toward detectable peptides. In clearer terms, providing any model with the results it is to predict will likely result in good performance. To predict upstream properties like digestability the training data must not be influenced by any downstream property like flyability or detectability or at least correct for these factors.

While it is questionable whether DeepDetect has actually utilized this approach, the concept of building stacked or hierarchical models remains largely unexplored in MS‐based proteomics. To our knowledge, no viable model currently exists for quantotypicity prediction. However, developing such a model would likely require robust predictions of upstream features. Ideally, such a model could learn directly from the training data. In practice, though, the complexity of quantotypicity poses challenges, as the training data may lack sufficient sample diversity, contain high levels of noise, or have an unbalanced representation of critical peptide features. These limitations might hinder a model's ability to capture all relevant parameters without additional prior information in the form of predictions. In a larger scope, integrating multiple predictive models into a comprehensive framework could enhance data quality estimation and provide ground‐truth data for evaluating search algorithms [12].

In alignment with DeepDigest, DeepDetect does not allow for the incorporation of PTMs [25, 102]. The same is also true for PepFormer [101]. This limitation as discussed earlier is a desirable point of improvement for future models. Detectability, quantotypicity, and flyability are essential properties that build upon many of the previously discussed peptide characteristics. However, while detectability can be predicted reasonably well by incorporating the digestibility predictor DeepDigest, as done in DeepDetect, to our knowledge, no effective models currently exist for predicting flyability or quantotypicity. Further work is needed, particularly to expand models to include modified peptides. The promising approach of incorporating predictors of upstream properties may pave the way for a viable quantotypicity predictor.

3. Related Peptide Property Prediction

We have discussed key peptide properties, including digestibility, retention time, charge states, collisional cross section in ion mobility, fragmentation ion intensities, flyability, detectability, and quantotypicity. However, many additional peptide properties, like surface area, gas‐phase basicity, flexibility or rigidity, stability, and synthesizability, play significant roles in molecular behavior but lie beyond the scope of this review. In the following sections, we highlight two specific properties, hydrophobicity and 3D structure, which are particularly relevant to MS‐based proteomics due to their substantial influence on molecular behavior in general.

3.1. Hydrophobicity

3.1.1. Background

Hydrophobicity is a key property of peptides that directly and indirectly (through protein structure) influences various steps in an MS‐based workflow, such as digestibility, chromatographic behavior, and ionization efficiency [104, 105, 106]. Traditionally, the hydrophobicity of peptides is estimated by applying experimentally derived hydrophobicity scales based on amino acid sequences [107, 108]. However, hydrophobicity should be understood as a broad concept rather than a single numeric value. One widely used measure of hydrophobicity is the octanol‐water partition coefficient (KOW or log p). The traditional method for experimentally determining log p is the shake flask method. Here, a compound is dissolved in both octanol and water, shaken until equilibrium is reached, and the concentration in each solvent is measured. The ratio of the compound's concentration in octanol to water is calculated, and the logarithm of this value gives log p. Positive log p values indicate hydrophobic compounds, while negative values indicate hydrophilic compounds [109, 110, 111, 112]. Another measure, log D, considers the compound's distribution between its charged and uncharged forms across different pH levels. Log D provides a more comprehensive understanding of how a compound behaves in both its ionized and unionized states, which is important in biological environments and especially for peptides [113, 114].

3.1.2. State of the Art

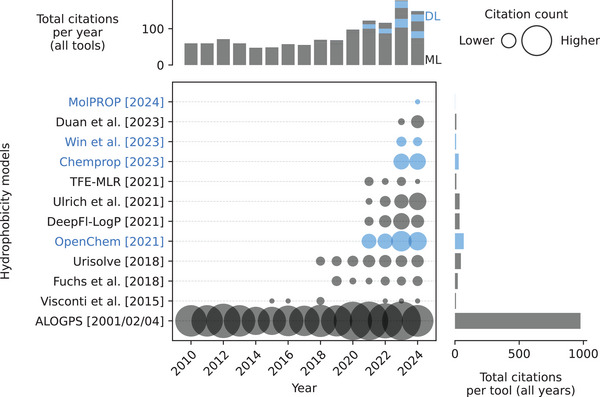

There has been a substantial amount of research aiming to predict hydrophobicity of compounds (Table S1). In fact, most citations regarding hydrophobicity prediction models in general these days still go to one of the original models in this field, ALOGPS [115, 116, 117, 118, 119] (Figure 15). The prediction of hydrophobicity for peptides specifically has not been widely explored, with only a handful of studies [120, 121, 122]. These studies are hindered by small datasets (fewer than 1000 samples) and, because of this, rely on traditional ML techniques and do not use more advanced DL models. The existing models focusing on peptide hydrophobicity demonstrate notable weaknesses in their predictions. One study, Visconti et al. [122], observed R 2 values below 0.91, indicating only moderate predictive accuracy, while another, Fuchs et al. [121], reported an RMSE of 0.6, which is significantly higher than the experimental standard deviation of 0.08. This suggests that the model's prediction errors exceed the natural variability found in experimental data. Notably, in the latter study, 75% of the test data were also used for training, which likely led to an overestimation of model performance due to data leakage (see Supporting Information). When the same model was tested on an in‐house dataset of 15 peptides, it had an RMSE of 0.9, with 46.7% of predictions showing errors greater than 1.0 log units when compared to actual measurements [121].

FIGURE 15.

Citations over time for different hydrophobicity models. As of February 18, 2025. The cutoff years are 2010 and 2024. ALOGPS includes publications from 2001, 2001, 2002, 2004, and 2004. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with: https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

Besides its impact on peptides in MS experiments, hydrophobicity is also a critical factor in drug development (here mostly referred to as lipophilicity), as it greatly influences a drug's mode of action and how well it is absorbed by the body [123]. Thus most models that aim to predict hydrophobicity are developed from a drug development perspective. A recent review [124] on in silico drug absorption provides an extensive overview of models used for predicting log p and log D. For a detailed list of these models, refer to that review. The log D prediction models presented there show comparable results to the peptide specific ones discussed earlier, with reported R 2 values around 0.9 and RMSEs of approximately 0.5 [124]. However, most of these models are primarily trained on datasets of drug‐like molecules, which may include only a limited number of peptides or peptide derivatives. As a result, predicting properties like hydrophobicity or lipophilicity for peptides could fall outside the training distribution of these models. To determine their accuracy in peptide‐specific predictions, a benchmarking study would be necessary to evaluate their performance in predicting peptide hydrophobicity.

3.1.3. Limitations and Future Direction

A key challenge in creating a suitable benchmarking dataset is ensuring a high quality of data. One study [125] identified several issues in the datasets they analyzed, such as incorrect log p values due to transcription errors or improper conversions. Other problems included incorrect molecular identifiers or SMILES notations, unsuitable experimental conditions for determining log p, and inherent measurement errors. Even under ideal conditions, these errors can range between 0.2 and 0.4 log p units, which affects both the reliability of the data, and the models trained on it [125].

Given that hydrophobicity is a key property influencing other properties such as digestion and retention time [48, 126], it is likely that more interest and research in this area will grow, especially if large datasets become available. The peptide specific studies mentioned also lack publicly available code or usable models, which limit their accessibility and reproducibility [121, 122]. Beyond the data limitations, a recent review [120] highlighted another issue. Most studies only consider octanol as the solvent, which could overlook the effects of conformational changes that peptides undergo in different environments. Additionally, they pointed out that no current study incorporates 3D structural information, which becomes crucial when peptides form secondary structures. This missing aspect could be a significant factor in accurately predicting peptide properties [120].

3.2. 3D Structure

3.2.1. Background

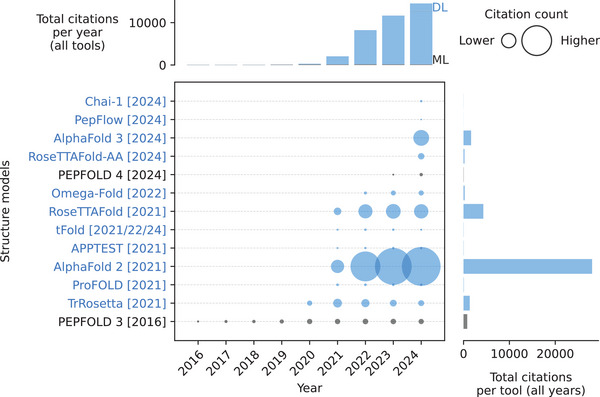

Structural knowledge of proteins and peptides is crucial for various areas in biology, like drug development, where it helps to improve their therapeutic potential by improving solubility, absorption, and permeability [127]. However, determining these 3D structures is a non‐trivial task due to the influence of various environmental factors, such as pH and temperature, on peptide folding [128]. The field of protein structure prediction has enjoyed a lot of interest with many models being proposed over the time (Figure 16 and Table S1).

FIGURE 16.

Citations over time for different 3D structure models. As of February 18, 2025. The cutoff years are 2010 and 2024. tFold includes publications from 2021, 2022 (preprint), and 2024 (preprint). Chai‐1 is a preprint. RoseTTAFold includes two publications from 2021. Omega‐Fold is a preprint. References to all models can be found in Table S1. Models using deep learning (DL) are blue. Models using only classical machine learning (ML) are gray. The exact values of the citation counts per tool and year can be found in Table S2. Created with: https://github.com/jesseangelis/Citation_vis/ and OpenAlex [32].

3.2.2. State of the Art

Given the significance and difficulty of this problem, it is, therefore, no surprise that Google DeepMinds' AlphaFold2 [129] made headlines beyond academia with its remarkable performance in the 2020 protein folding assessment, CASP14, displaying a substantial leap in predictive accuracy [130, 131, 132]. Most notably two key figures behind AlphaFold2 were awarded the Nobel Prize in chemistry [10]. However, when talking about AlphaFold2 it is important to keep in mind that CASP14 evaluates predictions for protein crystal structures, not peptides, and not all targets were successfully predicted [130]. Two years after AlphaFold2’s release, its capabilities in peptide structure prediction were examined [128]. It was found that AlphaFold2’s predictions closely matched experimentally determined structures, particularly when the peptides had well‐defined secondary structures without multiple turns. It was also highlighted that the inherent flexibility of peptides complicates accurate structure prediction, as conformations may vary with specific experimental conditions like pH or temperature. Furthermore, AlphaFold2 might predict alternative low‐energy conformations that differ from those determined experimentally. The study compared AlphaFold2 to other structure prediction models, including PEP‐FOLD3 [133], Omega‐Fold [134], RoseTTAFold [135], and APPTEST [136], demonstrating that AlphaFold2 either outperformed or was at least comparable to these alternatives [128].

Since then, AlphaFold has been updated to AlphaFold3 [137], which focuses on predicting the structures of complexes. It features significantly different architecture. AlphaFold2 uses an “evoformer,” which is a type of network that aims to extract information about evolutionary conserved regions that could be important for the structure. It then predicted relative angles between atoms based on this information [129]. In contrast, AlphaFold3 places less emphasis on evolutionary data, employing a technique called diffusion to generate atom positions [137]. This approach was previously explored in RoseTTAFold All‐Atom [138]. Diffusion models, known for their application in high‐quality image generation (e.g., StableDiffusion [2]), can be used to denoise noisy data/pictures. If they are supplied with random noise, they will instead generate a completely new image [139]. Similarly, AlphaFold3 starts with random atom coordinates and feeds information about the molecules into the diffusion module to derive the final structure through recurrent denoising [137].

AlphaFold3’s architecture consists of different modules that each have a specific task. The input encoder converts unstandardized inputs into a computationally usable array format. The template and multi‐sequence alignment modules incorporate information from structurally similar templates and genetically similar sequences, respectively. The “pairformer” then changes the information‐loaded arrays to represent more abstract features of the input. The diffusion module performs diffusion on random atom positions to infer the final structure [137].

During training, different losses are calculated based on the performance of each module and then combined to the final loss function:

| (4) |