Abstract

This article explores the challenges of bridging the gap between scientists and healthcare professionals in artificial intelligence (AI) integration. It highlights barriers, the role of interdisciplinary research centers, and the importance of diversity, equity, and inclusion. Collaboration, education, and ethical AI development are essential for optimizing AI’s impact in perioperative medicine.

Keywords: AI, Artificial intelligence, Education, DEI

Clinical trial number

Not applicable.

Introduction

Artificial intelligence (AI) is transforming how (healthcare) tasks are performed by enabling artificial agents to process environmental inputs and execute intelligent actions, often enhancing or replacing human interventions [1]. While definitions of AI keep evolving, recently it has been defined as ‘systems that display intelligent behaviour by analysing their environment and taking actions - with some degree of autonomy to achieve specific goals’ [2].

Recent advancements in machine learning algorithms and foundation models have significantly increased AI’s capabilities and accessibility, mainly through natural language processing, multimodal AI models, efficient transformer architectures, and self-supervised learning techniques, enabling more personalized AI assistants and innovations. As a result, AI is rapidly evolving to become a cornerstone in modern healthcare, offering tools for diagnostics, predictive analytics, interventions, care, and workflow optimization [3, 4, 5].

In perioperative medicine, AI can enhance patient care by optimizing drug dosing and predicting complications such as hypoxemia or hemodynamic instability [6]. Moreover, AI can be a powerful tool for safely training new generations of anesthesiologists and surgeons [7]. However, successful AI integration into clinical settings depends not only on technological advancements but also on seamless collaboration between AI scientists and healthcare professionals [8].

As such, introducing a new AI technology within healthcare is more than just adding a technical system: it affects the distribution of tasks, professional identity, and the use of resources [9].

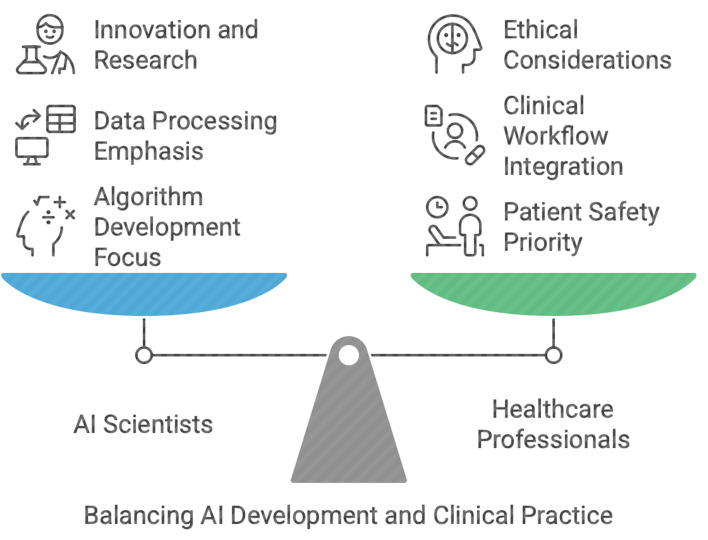

AI scientists and healthcare professionals each bring distinct expertise to the table: AI scientists excel in algorithm development and data modeling, while healthcare professionals focus on patient safety, clinical workflows, and ethical considerations. Inherent differences in their professional views and key performance indicators may lead to communication challenges and misaligned priorities.

This editorial examines the key barriers to interdisciplinary collaboration, explores strategies to enhance cooperation, and discusses the role of DEI (diversity, equity and inclusion) in creating ethical AI tools.

Challenges in interdisciplinary collaboration

AI scientists and physicians operate within vastly different intellectual frameworks and their priorities often diverge [8].

AI scientists focus on machine learning algorithms, data processing, and computational efficiency, often working in spaces far removed from clinical environments. Many of the challenges they tackle have AI solutions that work “most” of the time, building on data in which each data point has a correct (“right”) or incorrect (“wrong”) assignment. In contrast, anesthesiologists prioritize real-time decision-making, patient safety, the nuances of human physiology, and ethical dilemmas. Many of the challenges they tackle do not have a “right” or “wrong” answer; instead, each option comes with advantages and disadvantages that different people may weigh differently, and that the same person may weigh differently over time (Fig. 1).

Fig. 1. Interdisciplinary Collaboration for AI Integration in Perioperative Medicine

These differences can lead to misunderstandings when translating clinical needs into technical specifications. For instance, an AI scientist might design a predictive model for intraoperative complications without fully understanding the dynamic and high-stakes nature of perioperative medicine or the ultimate consequences of these complications [10]. Additionally, the model might not capture the causal relationships between different factors well and might instead rely on spurious correlations. As a result, predictions may be inadequate for the complexity of the clinical task. Conversely, physicians may struggle to grasp the limitations of AI systems, such as their reliance on high-quality data or the risk of overfitting [11]. When AI tools are developed in a vacuum, this can lead to unrealistic expectations and disappointment among healthcare professionals. One example is relying on AI to predict operating room turnovers without accounting for human factors and limitations (such as the complexity of cases or patients not being prepared).
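For readers less familiar with overfitting, the following minimal Python sketch may help make the idea concrete. It is an illustration under stated assumptions, not a clinical model: the data are synthetic, the 20% label noise is arbitrary, and the function names (`make_data`, `predict_1nn`, `accuracy`) are hypothetical. A nearest-neighbour model that simply memorizes its training data scores perfectly on that data but does worse on new cases drawn from the same distribution.

```python
import random

def make_data(rng, n, noise=0.2):
    """Synthetic 1-D data: the true label is x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = x > 0.5
        if rng.random() < noise:
            label = not label  # simulate noisy or mislabeled records
        data.append((x, label))
    return data

def predict_1nn(train, x):
    """Return the label of the closest training point (the model memorizes its data)."""
    return min(train, key=lambda point: abs(point[0] - x))[1]

def accuracy(train, data):
    """Fraction of points in `data` whose label the 1-nearest-neighbour model reproduces."""
    hits = sum(predict_1nn(train, x) == y for x, y in data)
    return hits / len(data)

rng = random.Random(0)          # fixed seed so the run is repeatable
train = make_data(rng, 200)     # data the model has seen
test = make_data(rng, 200)      # new data from the same distribution

train_acc = accuracy(train, train)
test_acc = accuracy(train, test)
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

The gap between the two figures is the practical signature of overfitting, and it is one reason why performance reported on development data alone can create unrealistic expectations.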

Bridging this knowledge gap is essential to ensure that AI tools are technically sound, clinically relevant, and capable of being integrated into healthcare as a complex socio-technical system [12].

Strategies for effective interdisciplinary communication

Medical AI research centers are critical in integrating fundamental and applied research, ensuring that theoretical advancements lead to practical innovations [13]. These centers provide a structured environment where AI scientists and clinicians collaborate from the outset, creating fertile ground for research questions that are both clinically relevant and ethically sound [13]. Differences in understanding are easily resolved at the beginning of a project but can be much harder to accommodate when discovered later. Moreover, these centers make it possible to leverage large funding opportunities aimed at interdisciplinary projects, since many funding bodies prefer to fund centers with a history of successful projects.

Where establishing a research center is too challenging, as it may be for small institutions, another approach is to introduce translational roles that facilitate communication between AI scientists and physicians [14]. These roles, which bring AI scientists and doctors closer together, could be filled by individuals with expertise in both domains, such as clinicians with a background in data science or AI scientists with experience in healthcare. However, with the advent of AI, the required overlap in skillsets has broadened significantly, and experts in both AI and healthcare are paramount. As such, clinicians with an AI background must sit together with AI experts and collaborate from the very beginning, starting with the preprocessing and cleaning of the dataset. This can significantly reduce misunderstandings and ensure that AI tools are designed with clinical applicability in mind, while ensuring expertise in all fields.

By prioritizing the needs and preferences of clinicians, such as optimizing clinical workflows, data scientists can create AI tools that are intuitive, user-friendly, and seamlessly integrated into existing workflows. Techniques such as user interviews, persona development, and usability testing can provide valuable insights into the real-world challenges faced by physicians, guiding the iterative refinement of AI solutions.

A key point here is to understand how these tools are actually used in practice [15].

The creation of these tools needs to be followed by regular feedback loops, recurrent progress reviews, external validation, and the establishment of user-friendly designs based on real-world clinical input. These feedback loops create a collaborative environment where both groups feel heard and valued, fostering a sense of shared ownership over the final product [16].

Early and iterative user testing is crucial for identifying gaps and ensuring that AI tools align with clinical workflows [17]. Customizable interfaces can help accommodate individual preferences without compromising system functionality. For example, physicians might be able to tailor the display of an AI tool to show only the most relevant metrics or alerts, enhancing usability and reducing cognitive load [18].

By involving healthcare professionals in prototype testing, dashboard developers can gather feedback on usability, functionality, and design, enabling continuous improvement and, ultimately, better patient care [19].

Education and training

Early education and training in medical school are essential for bridging the chasm between AI scientists and physicians [20]. Clinicians should receive training on the capabilities and limitations of AI tools, while AI scientists should be exposed to clinical environments to gain firsthand experience with the challenges faced by physicians. Anesthesiology societies should take a leading role in promoting and providing AI training, ensuring that anesthesiologists are equipped with the knowledge and skills necessary to fully understand the implications of using or developing AI-driven tools. All involved would benefit from learning how to collaborate effectively with people of a different profession. It takes time, for example, to learn each other’s language, thinking patterns, and, most of all, values.

Ethical AI

One of the noteworthy limitations of AI systems is the data they are trained on. Unfortunately, many datasets used in healthcare AI are biased, underrepresenting certain populations based on factors such as age, gender, ethnicity, or socioeconomic status [21, 22, 23]. These biases can lead to disparities in care, as AI tools may perform poorly for underrepresented groups. For example, an AI model trained primarily on data from younger, healthier patients may not accurately predict complications in older or more complex patients [24].
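One simple way to make such disparities visible is to report performance separately for each patient subgroup rather than as a single overall figure. The Python sketch below illustrates this under stated assumptions: the records are invented for illustration only, the two age groups are arbitrary, and `sensitivity_by_group` is a hypothetical helper rather than part of any specific library.

```python
from collections import defaultdict

# Hypothetical records: (age_group, complication_occurred, model_flagged)
records = [
    ("under_65", True, True), ("under_65", True, True),
    ("under_65", True, True), ("under_65", True, False),
    ("under_65", False, False), ("under_65", False, False),
    ("65_and_over", True, True), ("65_and_over", True, False),
    ("65_and_over", True, False), ("65_and_over", True, False),
    ("65_and_over", False, False), ("65_and_over", False, True),
]

def sensitivity_by_group(rows):
    """Share of true complications that the model flagged, computed per subgroup."""
    flagged = defaultdict(int)
    positives = defaultdict(int)
    for group, occurred, predicted in rows:
        if occurred:                      # only true complications count toward sensitivity
            positives[group] += 1
            flagged[group] += int(predicted)
    return {group: flagged[group] / positives[group] for group in positives}

per_group = sensitivity_by_group(records)
for group, value in per_group.items():
    print(f"{group}: sensitivity {value:.2f}")
```

In this invented example, the model detects three of four complications in the younger group but only one of four in the older group, a difference that a single pooled figure would hide.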

Addressing these biases is crucial to ensuring that AI-driven decision-making adheres to fairness, transparency, and accountability principles [24, 25, 26]. Bias mitigation techniques, such as re-sampling, re-weighting, or adversarial debiasing, should be employed to ensure equitable performance across patient populations [25, 27]. Additionally, without a robust ethical framework, the potential for these technologies to exacerbate existing disparities remains a significant concern in their implementation. Recently, official bodies in the USA and Europe have issued recommendations for fairness in AI and algorithmic bias management [28, 29]. The underlying assumption is that most problems in complex modern healthcare require more than one perspective to find viable, sustainable, and cost-effective solutions.
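As an illustration of re-weighting, the Python sketch below assigns each training sample a weight inversely proportional to the size of its subgroup, so that every subgroup carries the same total weight during training. This is one common weighting scheme among several, not a recommendation from the cited sources; the cohort composition and the function name `group_balancing_weights` are assumptions made for the example.

```python
from collections import Counter

def group_balancing_weights(groups):
    """Weight each sample by n / (k * n_g) so that every group has equal total weight.

    n is the number of samples, k the number of groups, n_g the size of the sample's group.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

# Hypothetical training cohort: 8 younger patients and 2 older patients.
groups = ["under_65"] * 8 + ["65_and_over"] * 2
weights = group_balancing_weights(groups)

# Sum the weights per group to confirm that both groups now contribute equally.
totals = Counter()
for g, w in zip(groups, weights):
    totals[g] += w
print(dict(totals))
```

Weights of this kind can be supplied to training procedures that accept per-sample weights. Re-weighting does not add information about the underrepresented group, so it complements, rather than replaces, collecting more representative data.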

Diversity within development teams is also critical for creating inclusive AI tools [30]. Teams should include professionals from a range of backgrounds, including physicians, nurses, IT staff, and patient advocates. By incorporating diverse perspectives from key stakeholders, development teams can better anticipate and address potential biases or usability issues, resulting in more equitable and effective solutions.

Future research directions

Developing interdisciplinary training programs early in professional education can equip professionals with the skills needed to navigate the intersection of AI and medicine. For example, medical schools could offer courses on AI basics, while computer science programs could include modules on healthcare applications. In all schools, it would make sense to help students learn to work with people of different backgrounds.

High-quality, in-depth research that explores the barriers to the implementation of AI in perioperative medicine needs to be backed by multiple professional societies through dedicated grants [31].

Moreover, the creation of shared collaboration platforms can facilitate real-time testing, user-friendly design, and refinement of AI solutions. These platforms could include simulation environments where AI scientists and physicians can interact with prototypes, identify issues, and propose improvements. They could also be used to document case studies of successful and unsuccessful AI implementations in perioperative medicine. Such case studies can provide valuable insights into best practices and common pitfalls and serve as a resource for future interdisciplinary projects.

Conclusion

In conclusion, the integration of AI into perioperative medicine holds immense promise but requires effective collaboration between AI scientists and physicians. By addressing communication barriers, valuing interdisciplinary cooperation with all stakeholders, and prioritizing DEI considerations, we can develop AI tools that are technically robust, user-friendly, and clinically practical. A focus on mutual understanding, respect, ethics, and shared goals is essential for realizing AI’s full potential in enhancing patient safety and care.

Author contributions

MG, AD, PLI, PD, JBE, SS: Substantial contribution to conception and design, drafting the article or revising it critically for important intellectual content. All authors have read and approved the final manuscript and agree to be accountable for all aspects of the work, thereby ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Funding

None.

Data availability

No datasets were generated or analysed during the current study.

Declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Declaration of generative AI in scientific writing

During the preparation of this work the authors used ChatGPT 4.0 to improve readability. After using this tool/service, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Mia Gisselbaek and Joana Berger-Estilita contributed equally and share co-first authorship.

References

- 1. Bellman R. An introduction to artificial intelligence. Can Computers Think? Boyd & Fraser Publishing Company; 1978. p. 168.

- 2. Sheikh H, Prins C, Schrijvers E. Artificial Intelligence: Definition and Background. In: Sheikh H, Prins C, Schrijvers E, editors. Mission AI: The New System Technology [Internet]. Cham: Springer International Publishing; 2023 [cited 2025 Apr 22]. pp. 15–41. Available from: 10.1007/978-3-031-21448-6_2

- 3. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44–56.

- 4. Cecconi M, Greco M, Shickel B, Vincent JL, Bihorac A. Artificial intelligence in acute medicine: a call to action. Crit Care. 2024;28(1):258.

- 5. Cascella M, Cascella A, Monaco F, Shariff MN. Envisioning gamification in anesthesia, pain management, and critical care: basic principles, integration of artificial intelligence, and simulation strategies. J Anesth Analgesia Crit Care. 2023;3(1):33.

- 6. Maheshwari K, Cywinski JB, Papay F, Khanna AK, Mathur P. Artificial intelligence for perioperative medicine: perioperative intelligence. Anesth Analg. 2023;136(4):637–45.

- 7. Doctors Receptive to AI Collaboration in Simulated Clinical Case without Introducing Bias [Internet]. 2024 [cited 2025 Jan 25]. Available from: https://hai.stanford.edu/news/doctors-receptive-ai-collaboration-simulated-clinical-case-without-introducing-bias

- 8. Bastian G, Baker GH, Limon A. Bridging the divide between data scientists and clinicians. Intelligence-Based Med. 2022;6:100066.

- 9. Govers M, van Amelsvoort P. A theoretical essay on socio-technical systems design thinking in the era of digital transformation. Gr Interakt Org. 2023;54(1):27–40.

- 10. Challenges and Solutions in Implementing Data Science in Healthcare [Internet]. [cited 2025 Jan 26]. Available from: https://www.skillcamper.com/blog/challenges-and-solutions-in-implementing-data-science-in-healthcare

- 11. Charilaou P, Battat R. Machine learning models and over-fitting considerations. World J Gastroenterol. 2022;28(5):605–7.

- 12. For health AI to work, physicians and patients have to trust it [Internet]. American Medical Association. 2024 [cited 2025 Jan 26]. Available from: https://www.ama-assn.org/about/leadership/health-ai-work-physicians-and-patients-have-trust-it

- 13. Langlotz CP, Kim J, Shah N, Lungren MP, Larson DB, Datta S, et al. Developing a Research Center for Artificial Intelligence in Medicine. Mayo Clinic Proceedings: Digital Health. 2024;2(4):677–86.

- 14. Petrelli A, Prakken BJ, Rosenblum ND. Developing translational medicine professionals: the Marie Skłodowska-Curie action model. J Transl Med. 2016;14:329.

- 15. Hollnagel E. Safety-I and Safety-II: The past and future of safety management. London: CRC; 2018. p. 200.

- 16. Bajwa J, Munir U, Nori A, Williams B. Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthc J. 2021;8(2):e188–94.

- 17. Maleki Varnosfaderani S, Forouzanfar M. The role of AI in hospitals and clinics: transforming healthcare in the 21st century. Bioeng (Basel). 2024;11(4):337.

- 18. Gandhi TK, Classen D, Sinsky CA, Rhew DC, Vande Garde N, Roberts A, et al. How can artificial intelligence decrease cognitive and work burden for front line practitioners? JAMIA Open. 2023;6(3):ooad079.

- 19. Chamorro-Koc M. Prototyping for Healthcare Innovation. In: Miller E, Winter A, Chari S, editors. How Designers Are Transforming Healthcare [Internet]. Singapore: Springer Nature; 2024 [cited 2025 Jan 26]. pp. 103–17. Available from: 10.1007/978-981-99-6811-4_6

- 20. Arango-Ibanez JP, Posso-Nuñez JA, Díaz-Solórzano JP, Cruz-Suárez G. Evidence-Based learning strategies in medicine using AI. JMIR Med Educ. 2024;10(1):e54507.

- 21. Gisselbaek M, Suppan M, Minsart L, Köselerli E, Nainan Myatra S, Matot I, et al. Representation of intensivists’ race/ethnicity, sex, and age by artificial intelligence: a cross-sectional study of two text-to-image models. Crit Care. 2024;28(1):363.

- 22. Gisselbaek M, Köselerli E, Suppan M, Minsart L, Meco BC, Seidel L, et al. Beyond the Stereotypes: Artificial Intelligence (AI) Image Generation and Diversity in Anesthesiology. Front Artif Intell [Internet]. 2024 Oct 9 [cited 2024 Oct 9];7. Available from: https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2024.1462819/full

- 23. Gisselbaek M, Köselerli E, Suppan M, Minsart L, Meco BC, Seidel L, et al. Gender bias in images of anaesthesiologists generated by artificial intelligence. Br J Anaesth. 2024;133(3):692–5.

- 24. Shams RA, Zowghi D, Bano M. AI and the quest for diversity and inclusion: a systematic literature review. AI Ethics [Internet]. 2023 Nov 13 [cited 2024 Nov 24]; Available from: 10.1007/s43681-023-00362-w

- 25. Ueda D, Kakinuma T, Fujita S, Kamagata K, Fushimi Y, Ito R, et al. Fairness of artificial intelligence in healthcare: review and recommendations. Jpn J Radiol. 2024;42(1):3–15.

- 26. Alvarez JM, Colmenarejo AB, Elobaid A, Fabbrizzi S, Fahimi M, Ferrara A, et al. Policy advice and best practices on bias and fairness in AI. Ethics Inf Technol. 2024;26(2):31.

- 27. Crowe B, Rodriguez JA. Identifying and addressing Bias in artificial intelligence. JAMA Netw Open. 2024;7(8):e2425955.

- 28. Artificial Intelligence and Algorithmic Fairness Initiative [Internet]. US EEOC. [cited 2025 Jan 26]. Available from: https://www.eeoc.gov/ai

- 29. The Act Texts | EU Artificial Intelligence Act [Internet]. [cited 2024 Nov 4]. Available from: https://artificialintelligenceact.eu/the-act/

- 30. Leroy H, Buengeler C, Veestraeten M, Shemla M, Hoever IJ. Fostering Team Creativity Through Team-Focused Inclusion: The role of leader harvesting the benefits of diversity and cultivating Value-In-Diversity beliefs. Group Organ Manage. 2022;47(4):798–839.

- 31. Gisselbaek M, Hudelson P, Savoldelli GL. A systematic scoping review of published qualitative research pertaining to the field of perioperative anesthesiology. Can J Anesthesia/Journal Canadien D’anesthésie. 2021;68(12):1811–21.
