Abstract

Introduction

Focused Cardiac Ultrasound (FOCUS) is an essential tool for rapid cardiac assessment across various clinical subspecialties. Consequently, teaching foundational FOCUS skills is of critical importance. This study investigates the effectiveness of e-learning in imparting FOCUS skills.

Materials and methods

This prospective, controlled study assessed competency development among medical students attending a FOCUS workshop (study group) at two time points: T1 (pre-training) and T2 (post-training, after completing the e-learning). The competency level reached by the study group was compared with that of a reference group (control group) of physicians who had also used the e-learning to prepare for a certified FOCUS workshop. Objective competencies were measured using short-answer and multiple-choice theory tests (study group at T1 and T2; reference group at T2). Subjective self-assessments of competencies and opinions of the e-learning were collected through evaluation forms at T2 using a 7-point Likert scale (1 = strongly disagree, 7 = strongly agree). Demographic information was collected at T1, and user behaviour during e-learning was assessed at T2. Primary endpoints were the increase in theoretical competencies (study group) and the comparison of subjective and objective competency levels (study vs. reference group).

Results

A total of 104 participants (study group = 48; reference group = 56) were included. The study group showed a significant increase in theoretical competencies (p < 0.001). However, at T2 the reference group achieved significantly higher theoretical test scores (p < 0.001). One influencing factor was previous practical experience (p = 0.02), which was significantly greater in the reference group (p < 0.001). Both groups rated their competency at the end of preparation at similar levels (4.3 ± 0.9 scale points [SP] versus 4.3 ± 1.0 SP; p = 0.94). Both groups evaluated the e-learning positively, with significantly higher ratings in the reference group (5.8 ± 0.9 SP versus 6.2 ± 0.7 SP; p = 0.04).

Conclusion

Both the improvement in competencies and the positive reception of digital learning media should encourage the increased implementation of e-learning formats. This study shows that such formats in ultrasound training can effectively complement face-to-face workshops and should be included in certified training curricula.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12909-025-07409-y.

Keywords: E-Learning, Focused cardiac ultrasound, Competency-Based education, Blended learning, Ultrasonography, Medical education

Introduction

Digitalisation in sonography training

Digital media and training have profoundly changed medical education [1]. Clinical training now often involves web-based learning platforms or software [1, 2], and digital media are increasingly used in clinical settings and for educational purposes [3]. The COVID-19 pandemic further reinforced this development [4–7]. With in-person lectures almost impossible, online formats became vital for continued teaching and learning, increasing awareness and use of innovative digital teaching methods and media [5, 7–9].

Accordingly, ultrasound teaching increasingly uses a combination of digital and in-person education models (so-called “blended learning”), which are currently being developed and further assessed in research [10–14]. Along with traditional educational media (e.g. books, scripts, slides) and teaching methods (e.g. in-person lectures, small-group in-person courses), new “blended learning” models also incorporate digital educational media (such as e-learning) and teaching methods (such as video-based education, tele-education, and webinars) [1, 12–19]. Current studies suggest that digital educational media and teaching methods are positively received by learners and can effectively aid and complement training and competency development [1, 3, 8, 13, 14, 17, 20].

E-learning, defined as the use of digital media for educational purposes [5], has broadened the range of teaching methods available not only in general medical training but also in ultrasound teaching in particular [1–3, 8, 10, 14, 17, 20]. E-learning can take many forms, from recorded lectures and small-group teaching videos to digital reference works, pictorial or video atlases, e-books, and animated 3D models, and to fully interactive online courses that combine several or all of these media [1, 3, 5, 7, 8, 14, 17]. It is therefore unsurprising that existing e-learning concepts in medical education research vary immensely in structure, duration, and quality [2, 3, 10, 17]. Accordingly, recommendations such as those of the World Interactive Network Focused on Critical Ultrasound (WINFOCUS), and other recommendations for implementing e-learning in sonography training, emphasise structured competency-based training and often form the basis of sonography training programmes worldwide [21–23]. In this context, e-learning can support theoretical and practical competency development [2, 13, 24–34], as has already been shown in the teaching of abdominal ultrasound [14, 18, 19], MSK sonography [35], head and neck ultrasound [31–33], and emergency ultrasound [2, 34, 36].

Blended learning and e-learning courses have also been used to teach Focused Cardiac Ultrasound (FOCUS) [2, 25, 27–30, 37, 38]. These digital methods have been used to impart theoretical competencies like cardiac anatomy and physiology, to promote the acquisition of practical competencies, and to deepen and revise newly learned course content [25, 30, 39].

Physicians often use FOCUS in clinical settings, especially for case-specific clinical decisions or in emergencies [40, 41]. Early training in FOCUS is therefore important, as it provides foundational knowledge of cardiac anatomy and sonography, reinforces anatomical and sonographic skills through interactive content, and prepares students effectively for their future clinical work [28, 30].

For ultrasonography in general and FOCUS in particular, an understanding of anatomical spatial relationships, precise handling and movement of the probe, and visual-spatial ability are important skills [42–45]. These and other competencies are often developed through in-person instruction and guidance by professionals during courses. Nevertheless, pre-course preparation can increase competency gain by enabling more effective and efficient use of in-person course time [20, 30]. In particular, beginners can benefit from high-quality e-learning to learn the basics of complex cardiovascular imaging before a FOCUS course [20, 30, 41].

Research problem and aim

While specialist societies and expert panels have promoted e-learning and increasingly integrated it into sonography teaching, there are no shared quality standards, accreditation methods, or formal certification processes for e-learning formats [10, 20, 41]. More evidence on the effective use of e-learning could indicate ways of formalising e-learning instruction [10], particularly for the teaching of FOCUS [39]. Previous studies have explored the effectiveness and acceptance of e-learning among different user groups, such as students and physicians, but usually in isolation, comparing a single user group with a non-intervention control group [2, 9, 24, 25, 28, 30, 37, 41, 46, 47]. To our knowledge, no existing study compares the competencies and perceptions of students and physicians learning FOCUS through the same e-learning platform.
Because of their different levels of previous exposure to echocardiography, both medical students and physicians were included as participants. For medical students, FOCUS training provides an opportunity to acquire theoretical knowledge and basic skills in echocardiography, filling a gap in traditional curricula and preparing them for later, more advanced training. Physicians, with their clinical experience, provide a meaningful reference group for assessing the effectiveness of e-learning in both novice and experienced learners and for identifying areas for optimization. By comparing competency development through e-learning between students and physicians, using the latter as a reference for skill acquisition, our study addresses this gap and explores how prior clinical experience affects the effectiveness of e-learning in FOCUS training. This comparison provides insight into whether e-learning is equally effective for learners with different levels of clinical exposure.

The primary aim of this study was to investigate the effectiveness of e-learning as a preparatory tool for a FOCUS course, specifically in enhancing theoretical knowledge acquisition among medical students. To contextualize competency gain across different levels of clinical experience, a group of physicians served as a reference (control) group. By comparing both groups, the study explores how well e-learning supports learners with varying baseline competencies in preparing for structured ultrasound training. Secondary aims were to evaluate the quality of the FOCUS-specific e-learning and the general acceptance of e-learning in sonography education. The results provide evidence to support the development of digital teaching and to optimise future teaching concepts.

Materials and methodology

Study design, participant recruitment and study procedure

This prospective, controlled observational trial (Fig. 1) was conducted from 2022 to 2023 [48]. The aim was to investigate the effectiveness of an e-learning-assisted preparation period before an in-person FOCUS workshop. We recruited medical students in their clinical years (study group) to participate in a voluntary extracurricular workshop called “Focused Cardiac Ultrasound (FOCUS)” [45]. A second group, consisting of physicians participating in a certified workshop, was recruited as controls (reference group) [49]. Blended learning served as the didactic approach for this workshop [50]. Participants were recruited via official email invitations: the study group through a mailing list of the university’s Office for Student Affairs, and the reference group via the portal of a specialist society. Both groups received full access to the e-learning module, which they were expected to complete before the FOCUS workshop. Data were collected over four workshops (two for students and two for physicians). At two time points (T1 (pre) = before the e-learning preparation period; T2 (post) = after the preparation period but before the in-person workshop), we collected written and digital evaluation forms (evaluationpre, evaluationpost) to record demographic backgrounds and user behaviour. In addition, theory tests were administered to analyse the effectiveness of the e-learning (study group: theorypre and theorypost; reference group: theorypost only) [51, 52]. The physicians in the reference group did not complete theorypre because, as experienced professionals already familiar with ultrasound examinations, they were exempted from the pre-test to minimise potential bias. Theorypost was used for evaluation purposes only and was not a prerequisite for participation in the workshop. Data collection was completed before the practical phase of the workshop.
Inclusion criteria were successful completion of the first state examination, use of the educational materials, and full participation in evaluations and tests. Primary endpoints were defined as the objective increase in theoretical competencies (study group) and the subjective and objective levels of competency (study versus reference group), measured by the evaluations and theory tests, respectively. Secondary endpoints were the evaluation and acceptance of the e-learning, both assessed by the evaluation forms.

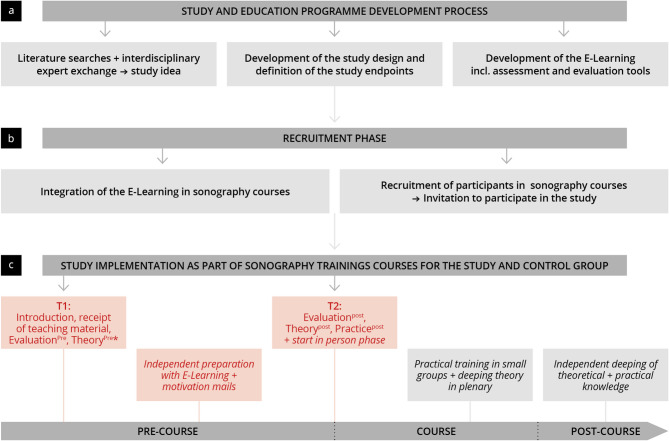

Fig. 1.

Chronological representation of the entire study development and procedure, including the time points of data collection. After the study and the training program were designed (a), participants were recruited (b) to take part in the study through the workshop (c). *The reference group did not complete the T1 theory test.

Process of the preparation period for the workshop

As is typical of blended-learning approaches, the workshop was divided into a pre-course phase (digital introduction and self-guided preparation with the educational medium), a course phase (in-person theoretical and practical training), and a post-course phase (self-guided wrap-up) [50, 53]. The data collection time points are described in the study design and shown in Fig. 1.

After registering for the workshop and attending an introductory plenary session (T1), participants in the study group received access to the e-learning and instructions on how to complete the digital tasks. They were given one to two weeks for digital preparation.

In parallel, the reference group received access to the same e-learning content and a detailed preparation guide upon registration and digital completion of T1. Their preparation period varied from two weeks to four months, depending on the registration date. Reminder emails were sent throughout the preparation phase to sustain motivation for the e-learning.

At the beginning of the in-person workshops both groups completed T2 [51].

Subsequently, theoretical and practical teaching sessions were conducted in small groups of 4–5 participants, each supervised by an experienced instructor. Practical sessions involved hands-on training with standardized patient models, focusing on probe positioning, image acquisition, and interpretation. Participants had continued access to the e-learning module throughout the study period, including after the initial pre-workshop phase, allowing for further self-guided revision.

The workshop consisted of 16 teaching units of 45 min each, based on pre-existing concepts [21, 45, 49]. It was built around the standard transthoracic section planes proposed by WINFOCUS [22]. Data were collected until the end of the preparation period, i.e., the beginning of the workshop.

Structure of the FOCUS E-learning

To align with the defined learning goals of the course concept [21, 45, 49] and the recommendations of specialist societies [21, 22, 54], the e-learning was designed by ultrasound experts, didactics experts, and qualified IT specialists, with the aim of providing high-quality content aligned specifically with the course objectives and informed by established best practices in the field. Its development and implementation followed the waterfall model, systematically refining the project through sequential phases to ensure thorough updates at each stage [55]. The structure of the e-learning course is given in Supplement 1, and example extracts are provided in Supplements 2–3. The web-based e-learning was accessed via an internet browser and, like the subsequent course, was conducted in German. It was structured into two parts: ‘basics’, covering ultrasound physics, image production, and topographic anatomy; and ‘cardio-specific learning’, comprising several similarly structured chapters on standard section planes, tips for probe handling, plane orientation and optimisation, sonoanatomy, and examination questions and protocols. Pathologies were not taught. Individual revision cards (‘slides’) formed the basis of the e-learning. Educational content was presented as continuous text, bullet points, graphics, and/or video clips, all designed to make the content more accessible and to clarify the examination protocol. Further interactive features included tables to be filled in and graphics to be labelled.

Test and evaluation instruments

The test format and evaluation forms were designed by consensus of ultrasound and didactics experts, based on currently available research and the learning goals of the workshop [21, 22, 51]. Evaluationpre and evaluationpost were 10-minute written questionnaires covering five topics: “personal data” (T1), “prior knowledge” (T1), “user behaviour” (T2), “subjective evaluation of competencies” (T2), and “evaluation of e-learning” (T2). Depending on the question type, answers were given on a seven-point Likert scale (1 = strongly disagree, 7 = strongly agree), as dichotomous (yes/no) responses, or as free text. The response options were explicitly labeled to ensure clarity. No system log data were collected for analysing user behaviour. The written theory tests (theorypre and theorypost), each with a maximum of 94 points, examined four areas of competence matching the defined learning objectives: “anatomy” (max. 11 points), “basic skills” (max. 29 points), “assignment tasks” (max. 6 points), and “normal findings / identifying structures in orientational section planes” (max. 48 points). The two tests contained a proportional mix of overlapping and identical questions. Content validity was ensured by aligning questions directly with the e-learning material, learning objectives and validated educational frameworks (e.g., WINFOCUS reference planes) [21]. The point allocation for each section was determined during test development and aligned with the proportional representation of each topic in the e-learning module to ensure fair weighting in the assessment. Questions required very short or multiple-choice answers; example questions are given in Supplements 4 and 5. The theory test was scored using a solution key previously developed by experts. One point was awarded for each correct answer, and no points were deducted for incorrect responses.
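The scoring rule described above (one point per correct answer, no negative marking, section maxima of 11, 29, 6 and 48 points summing to 94) can be sketched in Python as follows. This is a minimal illustration, not the authors' procedure: the section keys, the question IDs, the dictionary layout and the case-insensitive matching in `normalise` are hypothetical assumptions, and each key entry is assumed to be one scorable answer worth one point.

```python
# Section maxima as stated in the test description (sum = 94 points).
SECTION_MAX = {
    "anatomy": 11,
    "basic_skills": 29,
    "assignment_tasks": 6,
    "normal_findings": 48,
}


def normalise(answer):
    """Hypothetical matching rule: ignore case and surrounding whitespace."""
    return answer.strip().lower()


def score_test(answers, key):
    """Score one answer sheet against a solution key.

    key:     question ID -> (section, correct answer)
    answers: question ID -> participant's answer (missing = unanswered)
    One point per correct answer; wrong or missing answers score 0.
    """
    # Reject keys that would let a section exceed its stated maximum.
    per_section = {section: 0 for section in SECTION_MAX}
    for section, _ in key.values():
        per_section[section] += 1
    for section, count in per_section.items():
        if count > SECTION_MAX[section]:
            raise ValueError(f"{section}: {count} items > max {SECTION_MAX[section]}")

    totals = {section: 0 for section in SECTION_MAX}
    for qid, (section, correct) in key.items():
        given = answers.get(qid)
        if given is not None and normalise(given) == normalise(correct):
            totals[section] += 1  # no negative marking for wrong answers
    totals["total"] = sum(totals.values())
    return totals


# Hypothetical three-item key and one answer sheet (not study material).
key = {
    "q1": ("anatomy", "left ventricle"),
    "q2": ("anatomy", "mitral valve"),
    "q3": ("basic_skills", "b"),
}
answers = {"q1": "Left ventricle ", "q2": "aortic valve"}
print(score_test(answers, key))
```

Validating the key against the section maxima first makes a mis-specified key fail loudly instead of silently producing scores above 94.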
To confirm internal consistency, Cronbach’s alpha was calculated for all instruments. The assessments were administered under standardized conditions, without feedback between testing points, and without external guidance, thereby minimizing test-related bias.
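Cronbach's alpha, used here for the theory tests and the evaluation forms, compares the sum of the item variances with the variance of the total score. The sketch below implements the standard formula with Python's `statistics` module; the function name and the toy matrix are illustrative assumptions, not the study's data or code (the analyses were run in R).

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances are used throughout; the n - 1 factors cancel in the ratio.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*scores))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Toy data: 3 respondents x 2 items (hypothetical, not study data).
print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))  # ≈ 0.667
```

Values of roughly 0.7 to 0.8 are conventionally read as acceptable and 0.9 or above as excellent, which is the reading applied in the Results.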

Statistical analysis

Data were collected using the survey and test tool LimeSurvey (LimeSurvey GmbH, Germany), written questionnaires, and practice exam sheets. All data were stored in Microsoft Excel. All statistical analyses were performed in RStudio (RStudio Team [2020]. RStudio: Integrated Development for R. RStudio, PBC, http://www.rstudio.com, last accessed on 20.04.2024) with R 4.0.3 (R: A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, http://www.R-project.org; last accessed on 20.04.2024). Where possible, a main scale score was derived from the average of the subscale scores. Binary and categorical baseline variables are given as absolute numbers and percentages, and continuous data as mean and standard deviation (SD). Categorical variables were analyzed using the chi-squared test. Normality of data distributions was assessed using the Shapiro–Wilk test to determine whether parametric tests were appropriate, and Levene’s test was applied to check for homogeneity of variance. Paired t-tests were used to compare pre- and post-intervention scores within the study group, as these data were approximately normally distributed; this addresses the research question regarding objective competency gain through e-learning. For between-group comparisons (study group vs. reference group), post-test scores were compared using the Mann–Whitney U test because the normality assumption was violated in at least one group. This non-parametric test was chosen to examine differences in theoretical knowledge after e-learning between learners with different levels of baseline experience. Independent-samples t-tests were additionally conducted where normal distribution and homogeneity of variance were confirmed. Together, these analyses address the research objective of assessing whether e-learning can effectively support learners across heterogeneous competency levels.
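To make the two main tests concrete, the following stdlib-only Python sketch computes the paired t statistic (within-group T1 versus T2) and the Mann–Whitney U statistics (study versus reference group, with mid-ranks for ties). It is illustrative only: the study's own R code is not reported, the function names and example scores are hypothetical, and p-values are deliberately omitted because they need the t distribution or the exact/normal-approximated U distribution (in R, `t.test(..., paired = TRUE)` and `wilcox.test()`; in Python, `scipy.stats.ttest_rel` and `scipy.stats.mannwhitneyu`).

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """t statistic and degrees of freedom of a paired t-test on post - pre."""
    if len(pre) != len(post):
        raise ValueError("paired samples must have equal length")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def mann_whitney_u(x, y):
    """Mann-Whitney U statistics (U_x, U_y), using mid-ranks for ties."""
    pooled = sorted(list(x) + list(y))
    rank_of = {}
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + j) / 2 + 1  # average 1-based rank of the tie block
        i = j + 1
    rank_sum_x = sum(rank_of[v] for v in x)
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    return u_x, len(x) * len(y) - u_x


# Hypothetical scores, not study data.
print(paired_t([20, 25, 22, 30], [55, 70, 60, 68]))
print(mann_whitney_u([60, 62, 71], [70, 71, 80, 85]))  # (1.5, 10.5)
```

The rank-based U statistic only uses the ordering of scores, which is why it remains valid when the normality assumption behind the t-test is violated.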

Parametric (ANOVA) and non-parametric (Kruskal–Wallis) analyses of variance were conducted, followed by pairwise post hoc tests (t-test or Mann–Whitney U test), to explore significant differences in responses across multiple participant subgroups. The Bonferroni correction was applied to multiple pairwise comparisons. P-values < 0.05 were considered statistically significant. Effect sizes were calculated using Cohen’s d. To check the internal consistency of the scales used, Cronbach’s alpha was calculated for the theory test (T1 and T2) and for the e-learning evaluation. A power analysis was performed to determine the sample size required to detect a statistically significant effect. Based on an expected effect size of 0.6 (derived from previous literature on e-learning in medical education [3, 26]), a significance level of 0.05, and a power of 0.80 for pairwise t-tests, the required sample size was 90 participants (45 per group). The actual sample size (n = 104; study group: 48, reference group: 56) exceeded this requirement.
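The Bonferroni adjustment and the sample-size calculation can both be checked with a few lines of Python. The sketch below assumes a two-sided, two-sample t-test design; the paper does not name its power-analysis software, so this uses the normal approximation plus a standard small-sample correction term (z²/4), which reproduces the stated 45 per group (90 in total) for d = 0.6. The function names and example p-values are hypothetical.

```python
import math
from statistics import NormalDist


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided two-sample t-test.

    Normal approximation 2 * (z_{1-alpha/2} + z_{power})^2 / d^2, plus the
    small-sample correction term z_{1-alpha/2}^2 / 4, rounded up.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 / d ** 2 + z_alpha ** 2 / 4
    return math.ceil(n)


print(n_per_group(0.6))  # 45 per group, i.e. 90 in total
print(bonferroni([0.01, 0.02, 0.30]))  # hypothetical p-values from three comparisons
```

Without the correction term the normal approximation gives 44 per group; the small additional term accounts for estimating the SD from the sample.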

Results

Group characterisation

A total of n = 104 data sets were included in the statistical analysis (n = 48 study group; n = 56 reference group). The demographic traits, previous clinical experience, user behaviour, and duration of preparation of the study and reference groups were analysed and are depicted in Table 1. The study group showed a lower mean age (p < 0.0001) and reported significantly less experience, specifically regarding “prior participation in ultrasound workshops” (p = 0.002), “echocardiographies seen so far” (p < 0.001), and “independently performed echocardiographies” (p < 0.0001). In the reference group, most participants were resident physicians (87.5%) and working in internal medicine (67.9%).
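As an illustration of the chi-squared tests behind Table 1, the counts for “Previous participation in ultrasound courses” can be re-analysed in a short Python sketch. It assumes that the N/A answers are excluded and that Yates’ continuity correction is applied (the default of R’s `chisq.test` for 2×2 tables); the paper does not state either choice. Under these assumptions the statistic is about 9.2 with p ≈ 0.002, consistent with the reported value, but this is a plausibility check, not the authors’ computation.

```python
import math


def chi2_2x2_yates(table):
    """Pearson chi-squared test with Yates' continuity correction for a 2x2 table.

    table = [[a, b], [c, d]] of observed counts; returns (statistic, p-value), df = 1.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            # Yates: shrink |O - E| by 0.5, but never below zero.
            statistic += max(0.0, abs(observed - expected) - 0.5) ** 2 / expected
    # With one degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Rows: study group, reference group; columns: "No", "Yes" (N/A excluded).
stat, p = chi2_2x2_yates([[29, 17], [17, 38]])
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```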

Table 1.

Baseline characteristics, user behaviour and preparation time in the study and the reference group

| Items | Study Group (Students) | Reference Group (Physicians) | p-Value |
|---|---|---|---|
| Total number of registrations | n = 55 | n = 65 | |
| Approved participants for the workshop | | | |
| Not meeting the inclusion criteria (first state examination completed, use of educational materials, full participation in evaluations and tests) | n = 7 | n = 9 | |
| Participants included in the analysis | n = 48 | n = 56 | |
| T1 | | | |
| | Mean ± SD | Mean ± SD | |
| Age | 25.4 ± 3.3 | 31.7 ± 5.4 | < 0.0001 |
| Gender | N (%) | N (%) | 1.0 |
| Female | 30 (62.5%) | 36 (64.3%) | |
| Male | 18 (37.5%) | 20 (35.7%) | |
| N/A | | | |
| Specialty (Physicians) | N (%) | N (%) | |
| Internal medicine | | 38 (67.9%) | |
| General medicine | | 6 (10.7%) | |
| Anaesthesiology | | 8 (14.3%) | |
| Other (Neurology, Paediatrics, Emergency medicine) | | 4 (7.1%) | |
| Position | N (%) | N (%) | |
| Students | 48 (100.0%) | 0 (0.0%) | |
| Resident | | 49 (87.5%) | |
| Consultant | | 2 (3.6%) | |
| Senior | | 1 (1.8%) | |
| Head of department | | 0 (0.0%) | |
| Physician Assistant | | 2 (3.6%) | |
| Other | | 2 (3.6%) | |
| Previous participation in ultrasound courses | N (%) | N (%) | 0.002 |
| No | 29 (60.4%) | 17 (30.4%) | |
| Yes | 17 (35.4%) | 38 (67.9%) | |
| N/A | 2 (4.2%) | 1 (1.8%) | |
| Shadowed/performed an ultrasound? | N (%) | N (%) | |
| Abdomen | 36 (54.5%) | 48 (49.0%) | 0.190 |
| Head and neck | 11 (16.7%) | 21 (21.4%) | 0.077 |
| Puncture | 19 (28.8%) | 29 (29.6%) | 0.149 |
| Observed an echocardiography | N (%) | N (%) | < 0.001 |
| No | 19 (39.6%) | 8 (14.3%) | |
| Yes | 21 (43.8%) | 48 (85.7%) | |
| N/A | 8 (16.7%) | 0 (0.0%) | |
| Performed an echocardiography | N (%) | N (%) | < 0.0001 |
| No | 38 (79.2%) | 25 (44.6%) | |
| Yes | 2 (4.2%) | 31 (55.4%) | |
| N/A | 8 (16.7%) | 0 (0.0%) | |
| T2 | | | |
| How much time did you spend e-learning? | N (%) | N (%) | 0.28 |
| 1–2 h | 3 (6.3%) | 11 (19.6%) | |
| 2.5–4 h | 28 (58.3%) | 33 (58.9%) | |
| 4.5–6 h | 15 (31.3%) | 7 (12.5%) | |
| 6.5–8 h | 2 (4.2%) | 3 (5.4%) | |
| 8.5–10 h | 0 (0.0%) | 2 (3.6%) | |
| Have you watched the videos in the e-learning? | N (%) | N (%) | 0.84 |
| Yes | 46 (95.8%) | 53 (94.6%) | |
| No | 2 (4.2%) | 3 (5.4%) | |
| Have you had any practical training in echocardiography since receiving the educational media? | N (%) | N (%) | 0.67 |
| No | 27 (56.3%) | 30 (53.6%) | |
| Yes | 21 (43.8%) | 26 (46.4%) | |
| If applicable, to what extent have you had practical training? | N (%) | N (%) | 0.3 |
| 1–4 h | 13 (61.9%) | 16 (61.5%) | |
| 5–10 h | 8 (38.1%) | 6 (23.1%) | |
| 11–20 h | 0 (0.0%) | 1 (3.8%) | |
| > 20 h | 0 (0.0%) | 3 (11.5%) | |

In both groups, a majority had spent 2.5 to 4 h preparing for the workshop (study 58.3% vs. reference 58.9%; p = 0.28), and most had watched the videos in the e-learning (study 95.8% vs. reference 94.6%; p = 0.84). Almost half of the participants in both groups (study group 43.8%; reference group 46.4%) had received practical training in echocardiography since receiving the educational media (p = 0.67).

Subjective level of competency and evaluation of the E-Learning

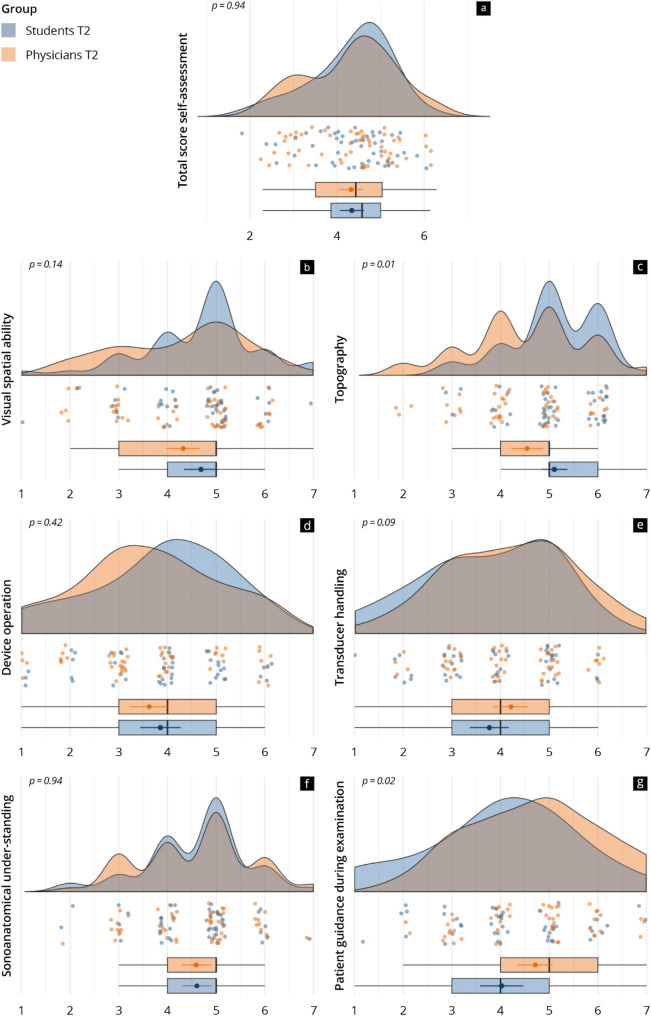

The subjective levels of competency in the study and the reference group at T2 are depicted in Fig. 2. Both groups estimated their competencies at similar levels after the preparation period (study 4.3 ± 0.9 vs. reference 4.3 ± 1.0; p = 0.94). The study group reported higher scores in the subcategory “topography” (p = 0.01), whereas the reference group indicated a higher subjective level of competence in “handling the patient during the examination”.

Fig. 2.

Resulting subjective levels of competencies in the study (blue) and reference group (orange) in the total score (a) as well as in the subcategories (b-g). The raincloud plots visualise the data at T2

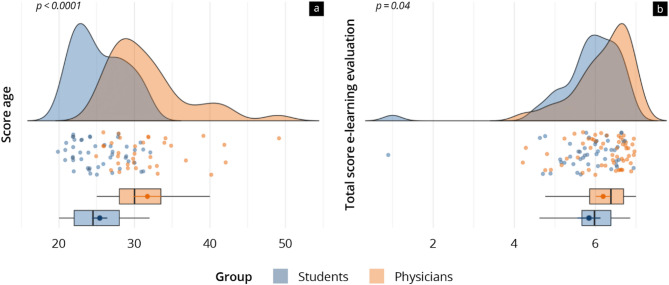

The results of the evaluation of the e-learning are shown in Fig. 3 and Table 2. Both groups rated the e-learning positively (4.0–6.8 scale points). Overall, the reference group gave a significantly higher rating than the study group (study 5.8 ± 0.9 vs. reference 6.2 ± 0.7; p = 0.04). This was also the case for the subitems “structure of the menu” (p < 0.01), “study cards structure” (p = 0.03), “coverage of study cards” (p = 0.02), and “labelling of graphics” (p = 0.01), as well as “recommendation” (p < 0.001). Rated particularly highly (scale points 5.2–6.8) in both groups were “technique”, “handling of the menu”, “video explanations”, “learning progress”, “interactive features”, and “recommendation”. Ratings were somewhat lower (scale points 4.0–5.0) for “labelling of graphics”, “quantity of educational videos”, and “text-graphic relation”. Cronbach’s alpha for the e-learning evaluation was 0.90, indicating excellent internal consistency.

Fig. 3.

Age of the user groups (a) and total evaluation results of the e-learning (b) at T2. Data is presented as raincloud plots (blue = study group; orange = reference group)

Table 3.

Parameters influencing the development of objective competencies in the study group (Students) and the control group (Physicians)

| Items | Theory test score, theorypost (study group), mean ± SD | Theory test score, theorypost (control group), mean ± SD | p-value |
|---|---|---|---|
| Previous participation in ultrasound courses | | | |
| No | (n = 29) 60.1 ± 13.0 | (n = 17) 71.4 ± 16.0 | 0.02 |
| Yes | (n = 17) 65.6 ± 16.9 | (n = 39) 73.1 ± 11.4 | 0.11 |
| Intra-group comparison | 0.26 | 0.69 | |
| Observed an echocardiography | | | |
| No | (n = 19) 58.9 ± 11.7 | (n = 8) 67.1 ± 19.4 | 0.29 |
| Yes | (n = 21) 64.6 ± 16.7 | (n = 48) 73.5 ± 11.4 | 0.03 |
| Intra-group comparison | 0.22 | 0.39 | |
| Performed an echocardiography | | | |
| No | (n = 38) 61.1 ± 14.4 | (n = 25) 68.9 ± 14.4 | 0.04 |
| Yes | (n = 2) 77.5 ± 13.4 | (n = 31) 75.6 ± 10.7 | 0.87 |
| Intra-group comparison | 0.321 | 0.06 | |
| How much time did you spend e-learning? | | | |
| 1–2 h | (n = 3) 40.7 ± 8.5 | (n = 11) 72.09 ± 14.9 | 0.003 |
| 2.5–4 h | (n = 28) 62.8 ± 13.1 | (n = 33) 73.6 ± 10.2 | 0.001 |
| 4.5–6 h | (n = 15) 64.3 ± 16.2 | (n = 7) 66.7 ± 20.6 | 0.79 |
| Have you had any practical training in echocardiography since receiving the educational media? | | | |
| Yes | (n = 19) 65.4 ± 12.4 | (n = 26) 72.2 ± 12.2 | 0.08 |
| No | (n = 29) 59.9 ± 15.6 | (n = 30) 72.9 ± 13.4 | 0.001 |
| Intra-group comparison | 0.18 | 0.84 | |
| If applicable, to what extent have you had practical training? | | | |
| 1–4 h | (n = 13) 63.2 ± 12.8 | (n = 16) 73.3 ± 9.5 | 0.02 |
| 5–10 h | (n = 8) 66.8 ± 13.1 | (n = 6) 72.2 ± 18.2 | 0.55 |
| Intra-group comparison | 0.55 | 0.89 | |

Table 2.

Results of the evaluation of the e-learning by students and physicians

| Items | Study Group (n = 48) | Reference Group (n = 56) | p-Value |
|---|---|---|---|
| | Mean ± SD | Mean ± SD | |
| Total value | 5.8 ± 0.9 | 6.2 ± 0.7 | 0.04 |
| Log-in function | 4.7 ± 1.3 | 5.0 ± 1.3 | 0.34 |
| Technique | 6.8 ± 0.9 | 6.6 ± 1.0 | 0.42 |
| Menu structure | 5.5 ± 1.6 | 6.4 ± 0.9 | 0.002 |
| Menu operability | 6.2 ± 1.5 | 6.5 ± 1.1 | 0.15 |
| Duration of videos | 4.7 ± 1.3 | 5.1 ± 1.0 | 0.11 |
| Quality of videos | 5.1 ± 1.0 | 5.0 ± 1.2 | 0.55 |
| Quantity of videos | 4.1 ± 1.0 | 4.2 ± 0.9 | 0.95 |
| Video explanations | 5.4 ± 1.2 | 5.8 ± 1.5 | 0.14 |
| Learning progress | 5.2 ± 1.3 | 5.7 ± 1.1 | 0.07 |
| Structure study cards | 4.4 ± 1.4 | 5.0 ± 1.2 | 0.03 |
| Workload per study card | 4.4 ± 1.4 | 5.0 ± 1.0 | 0.02 |
| Font size | 4.6 ± 0.6 | 4.5 ± 0.8 | 0.32 |
| Text volume | 4.4 ± 0.9 | 4.5 ± 1.0 | 0.86 |
| Graphic dimensions | 4.5 ± 0.8 | 4.4 ± 1.0 | 0.53 |
| Graphic labels | 4.0 ± 1.3 | 4.6 ± 0.8 | 0.01 |
| Quantity of graphics | 5.3 ± 0.9 | 5.4 ± 1.0 | 0.66 |
| Relation (text/graphic) | 4.4 ± 0.7 | 4.5 ± 0.9 | 0.84 |
| Design | 5.1 ± 1.1 | 5.3 ± 1.1 | 0.38 |
| Interactive features | 6.0 ± 1.2 | 6.1 ± 1.3 | 0.97 |
| Recommendation | 5.3 ± 1.4 | 6.2 ± 0.8 | < 0.001 |

Objective development of competencies

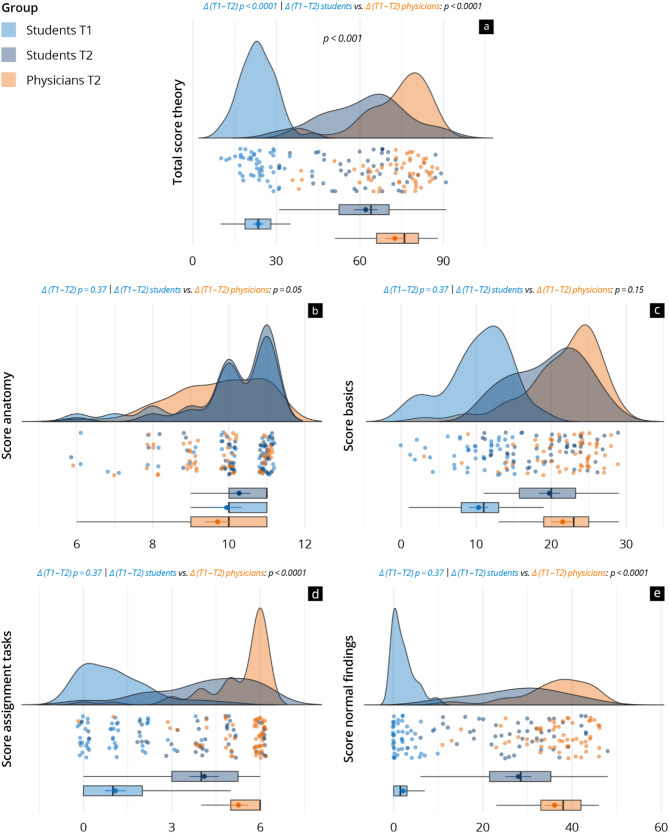

The results of the theory tests in the study and reference groups are shown in Fig. 4 and Supplement 6. From T1 to T2, the study group achieved a significant increase in total competencies (T1: 23.5 ± 6.3 vs. T2: 62.1 ± 14.6; p < 0.001) with a large effect (Cohen’s d = 3.43, 95% CI [2.68, 4.18]), as well as in the subitems “basics” (p < 0.001), “assignment tasks” (p < 0.001), and “normal findings” (p < 0.001). However, no improvement was observed in the subitem “anatomy” (p = 0.37). The reference group, which had more previous practical experience (p < 0.001), achieved significantly higher total scores at T2 (study 62.1 ± 14.6 vs. reference 72.6 ± 12.7; p < 0.001), as well as higher scores in the subitems “assignment tasks” (p < 0.001) and “normal findings” (p < 0.001). In “anatomy” (p = 0.05) and “basics” (p = 0.15), both groups showed similar levels of competency at T2. Cronbach’s alpha for the theory test was 0.77 at T1, indicating acceptable internal consistency, and 0.88 at T2, indicating good internal consistency.
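The reported effect size for the study group can be reproduced from the summary statistics if the mean difference is standardised by the root mean square of the T1 and T2 standard deviations, a convention sometimes labelled d_av for within-subject designs. The paper does not state which variant of Cohen’s d it used, so this is a consistency check under that assumption; an alternative, d_z, divides by the SD of the individual differences and would require the raw paired data. The function name is hypothetical.

```python
import math


def cohens_d_av(mean_pre, sd_pre, mean_post, sd_post):
    """Cohen's d for a pre/post comparison, standardised by the root mean
    square of the two time-point SDs, sqrt((sd_pre^2 + sd_post^2) / 2)."""
    sd_av = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / sd_av


# Summary statistics of the study group's total theory score (T1 vs. T2).
d = cohens_d_av(23.5, 6.3, 62.1, 14.6)
print(round(d, 2))  # 3.43
```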

Fig. 4.

Results of the theory test of the study and reference groups at T1 and T2 in the total score (a) and in the subcategories (b–e). The raincloud plots visualise the results at T1 (light blue = study group) and T2 (dark blue = study group; orange = reference group)

Factors of influence

The analysis of possible influencing factors is shown in Table 3. Multivariable linear regression analysis showed that the factor “Performed an echocardiography – yes” (β = 14.9; p = 0.01) significantly influenced the theory test score at T2.

An intergroup comparison using a differentiation test (binary scale) showed that the reference group achieved significantly better results than the study group, particularly among participants with the characteristics “Previous participation in ultrasound courses – no” (p = 0.02), “Observed an echocardiography – yes” (p = 0.03), “Performed an echocardiography – no” (p = 0.04), and “Have you had any practical training in echocardiography since receiving the educational media?” (p < 0.01). Regarding self-reported preparation time (“How much time did you spend e-learning?”), the reference group achieved significantly better results than the study group for any preparation period of up to 4 h (p < 0.01), whereas no such difference was evident for a preparation period of 4.5–6 h (p = 0.79). In both groups, test results correlated positively with preparation time up to 4 h.
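The paper does not name the correlation coefficient used for preparation time versus test result. As an assumption, the sketch below uses Spearman’s rank correlation (Pearson correlation of average ranks, so ties are handled), restricted to preparation periods of up to 4 h as in the analysis described above. The hours and scores are hypothetical, not study data.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical (hours of e-learning, T2 score) pairs
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 5.0, 6.0]
scores = [48, 52, 55, 61, 66, 70, 64, 69]

# Keep only preparation periods of up to 4 h
pairs = [(h, s) for h, s in zip(hours, scores) if h <= 4.0]
rho = spearman_rho([h for h, _ in pairs], [s for _, s in pairs])
print(f"rho (<= 4 h) = {rho:.2f}")  # rho (<= 4 h) = 1.00
```

A rank-based coefficient is a reasonable default here because self-reported preparation times are coarse and the relationship need not be linear.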

Discussion

The primary objective of this study was to assess the extent to which a preparatory e-learning module could enhance the theoretical FOCUS competencies of medical students (study group) undertaking a training workshop. The study also aimed to compare the objective and subjective competency levels of this study group with those of a reference group (control) of physicians, thereby assessing competencies across different levels of clinical experience. Finally, participants evaluated the quality and acceptance of the e-learning.

The results suggest a significant improvement in the objective theoretical competencies of the study group following self-directed preparation. Although the reference group achieved significantly higher overall objective competency levels, both groups reported similar levels of subjective competency. Both groups evaluated the e-learning platform positively, with the reference group providing a slightly but significantly higher overall rating.

Given the increasing cross-disciplinary application of FOCUS in emergency settings and for specific clinical assessments of cardiovascular status, the need for comprehensive training in this area is growing [40, 41, 56]. This study contributes evidence supporting e-learning as a flexible and practical teaching method and may help foster innovative approaches in medical education.

Discussion of objective competencies

Ongoing research has attempted to measure the effects of e-learning on theoretical competency growth in sonography [1–3, 5, 7, 8, 10, 17, 20, 27]. Consistent with previous studies [25, 30, 37], participants in our study group showed a significant increase in their theoretical skills after completing the FOCUS-specific e-learning. This was particularly evident in “basics”, “assignment tasks”, and “normal findings”. In “anatomy”, participants already had a high level of competency at T1, which may explain the slight, statistically non-significant increase at T2. Presumably, participants benefited from anatomical and physiological knowledge retained from the preclinical stage. Previous studies have linked ultrasound instruction to improvements in anatomy knowledge, although the courses in these studies were specifically designed to teach cardiac anatomy and physiology, unlike the workshop in our study [30, 37, 46]. Our study benefited from combining multiple-choice and very short answer questions, which allowed a clearer and more discriminating assessment of competency growth than in other studies [9, 47].

A defining characteristic of our study was the use of a reference group of physicians (control) who similarly used the FOCUS e-learning in preparation for a one-day FOCUS workshop. Prior studies of FOCUS-specific e-learning tend to include a single study group of inexperienced participants, including students as well as physicians, with some using and some eschewing a control group [2, 25, 39, 46, 47]. Other studies used alternative teaching methods as a control, such as peer teaching, self-guided study, or team-based learning [9, 25]. Unlike studies that focused solely on students or pooled learners into a single group, we introduce a comparative perspective by examining students and physicians as separate groups, which helps to contextualise the effectiveness of e-learning across different learner groups. The results of this study and prior studies suggest that e-learning is not only a promising approach by which learners can acquire new theoretical competencies, but also effective for deepening and revising existing knowledge and skills, especially in ultrasound basics [20, 25, 30, 38]. This is supported by the significant improvements in the study group’s theoretical competencies, especially in the subcategories “basics” and “normal findings”. After the preparation phase, the reference group showed a generally higher level of objective competencies, with significant differences in “assignment tasks” and “normal findings”, but not in “basics” or “anatomy”. This again suggests that the study group’s comparatively strong anatomical competencies may stem from their recent preclinical training, with the e-learning reinforcing prior knowledge [25]. Several factors likely contributed to the difference in overall scores, most notably the physicians’ greater clinical experience; in our regression analysis, having performed an echocardiography significantly influenced the T2 test score. Their more extensive hands-on exposure to echocardiography in daily practice likely gave them a stronger foundation for interpreting ultrasound images and performing related tasks [57–59]. E-learning was effective in improving theoretical competence and may have narrowed the gap between novice and experienced learners, although, as expected, it did not close it entirely.

Discussion of subjective competencies

Recent studies have examined both the objective and subjective increases in learner competencies achieved through e-learning [1, 8, 14, 17, 28, 30]. After the e-learning in this study, both groups estimated their level of skills similarly, suggesting a levelling of the subjective competency gradient. Prior research has also shown that participants with initially low self-assessments tend to reduce their lag during e-learning [14], a phenomenon attributed to the so-called “catch-up effect” [60].

The significantly higher self-assessment of the study group in the “topography” subcategory mirrors their comparatively strong performance in anatomy. Meanwhile, the reference group rated themselves higher in “patient handling during the examination”, which is plausibly explained by their greater time spent in clinical practice and patient care. The discrepancies between subjective and objective competencies may reflect differences in the learning experiences of the two groups: the study group may have overestimated their knowledge because of the theoretical focus of their preparation, a phenomenon consistent with the Dunning–Kruger effect [61]. The reference group, benefiting from more clinical experience, may have assessed themselves more realistically. This suggests that subjective evaluations are influenced by individual experiences and learning strategies and may not always align with objective competency development.

Overall, the results indicate that e-learning supports learning success and is associated with favourable self-assessments of competency. E-learning can, therefore, be a useful tool for target-oriented competency growth.

Discussion of the evaluation of the e-learning

The evaluation of digital learning formats is an important part of quality management, and the literature emphasises the value of high-quality e-learning [1, 10, 20]. Following previous studies, we used a questionnaire to evaluate learners’ perception of the quality of our e-learning [7, 14, 28, 30]. The evaluation of competencies was based on the Kirkpatrick model, focusing on competence development and participant feedback [62].

The e-learning was rated very positively overall, especially in “technique”, “user-friendliness”, and “interactive features”, which together form the foundation of user-friendly e-learning software. To further enhance the user experience, we propose integrating individualised learning paths that tailor content to the diverse needs and preferences of learners. Informed by participant feedback, this approach could improve user-friendliness and foster a more personalised learning journey, thereby optimising overall competency gain. The reference group, whose mean age was significantly higher, rated the e-learning significantly more favourably than the study group. This could be due to the study group’s higher expectations of digital teaching media, as its members could be considered “digital natives”. These differences in expectations highlight an opportunity to develop even more individualised e-learning offers.

Optimisation is also important to ensure ongoing quality. For example, the waterfall model could advance the implementation and revision process of the e-learning by enabling systematic improvements through structured, sequential phases [55, 63]. In prior successful e-learning studies, special emphasis was placed on correcting all errors, adapting to the current scientific standard, optimising features, and implementing extensions [55, 63].

Based on the students’ evaluation, we propose adapting the e-learning to improve its quality. Feedback indicated an indirect wish for more videos: the quantity of videos was rated as “moderate”, whereas their quality and explanation were rated as “above average”. This suggests that participants value the clarity and depth of the video content but would benefit from a larger number of videos. Prior studies confirm that video-based education is increasingly used and popular among students, but they also show that instructional videos alone are insufficient and that more interactive formats are desirable for competency gain and to meet learner preferences [9, 15, 25, 64]. These findings reinforce that no single method guarantees successful learning; rather, a combination of different teaching methods appears to be the most effective way to achieve broad competency growth [9, 10, 15, 20, 38].

In addition to measuring learning success, this study investigated user acceptance of the e-learning. Acceptance was assessed with a multidimensional Likert-scale questionnaire covering aspects such as technical usability, content quality, interactivity, and willingness to recommend the e-learning module. The positive participant feedback suggests that the module was both well received and accepted. These subjective data therefore support the use and further development of e-learning concepts in future ultrasound teaching.

Outlook for e-learning

While the creation of high-quality e-learning is resource-intensive, e-learning has clear benefits for sonography training [10, 20, 38]. E-learning is effective, flexible, motivating, and sustainable for learners and educators [1–3, 7, 8, 17, 20, 25, 27, 38]. Numerous studies, including our own, suggest that participants develop competencies through e-learning [3, 17, 25]. Digital instruction also allows flexibility in the time and place of learning and can accommodate learners’ varying preferences, enabling personalised, demand-driven learning [1, 7, 20, 38]. This flexibility can be especially useful for physicians with a high workload, but it also benefits students [10, 20]. Previous studies have shown that even a very short e-learning course can be a useful preparation tool for ultrasound examinations [14, 27]. The scope and complexity of a course can vary with the topic, allowing e-learning to be matched to the learning goal. In the future, e-learning could facilitate individualised learning concepts customised to the learner’s specific needs [65], for example based on pre-tests and learning preferences, or tailored to specific learning needs or disabilities [65]. Artificial intelligence (AI) could further support this development [66].

E-learning formats such as the one developed in this study can be expanded step by step, for example by adding pathologies with independently created content or by linking to pathology atlases. Website-building toolkits can simplify the structuring of web pages and the realisation of e-learning formats, and high-quality e-learning courses built with such tools have been shown to motivate participants, generate learner interest, and gain wide acceptance [1, 8, 14, 17]. In the future, system logs could be used for a more detailed evaluation of user behaviour, enabling more precise monitoring of the time spent on the platform and the modules completed, and thus a more differentiated and objective analysis of e-learning.
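If system logs were available, time on the platform and modules worked on could be derived per user roughly as follows. This is a minimal sketch under a hypothetical log format (one record per session with pseudonymised user ID, module name, start and end time); the function name `summarise_usage`, the module names and all timestamps are illustrative assumptions, and real platforms log differently.

```python
from collections import defaultdict
from datetime import datetime


def summarise_usage(sessions):
    """Aggregate (user_id, module, start, end) session records per user.

    Returns {user_id: (hours_on_platform, sorted list of modules visited)}.
    Assumes sessions of the same user do not overlap; overlapping sessions
    would have to be merged first to avoid double counting.
    """
    seconds = defaultdict(float)
    modules = defaultdict(set)
    for user_id, module, start, end in sessions:
        if end < start:
            raise ValueError(f"session of {user_id} ends before it starts")
        seconds[user_id] += (end - start).total_seconds()
        modules[user_id].add(module)
    return {user: (seconds[user] / 3600, sorted(modules[user])) for user in seconds}


# Hypothetical log entries (pseudonymised user IDs, placeholder module names)
log = [
    ("p01", "module A", datetime(2024, 5, 1, 18, 0), datetime(2024, 5, 1, 19, 30)),
    ("p01", "module B", datetime(2024, 5, 2, 20, 0), datetime(2024, 5, 2, 20, 45)),
    ("p02", "module A", datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 10, 0)),
]
usage = summarise_usage(log)
print(usage["p01"])  # (2.25, ['module A', 'module B'])
```

Logged session durations of this kind could complement, or be compared with, self-reported preparation times.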

As shown by our data and previous studies, e-learning can reliably build basic knowledge and skills [2, 25, 30, 39]. The sustainable digitalisation of in-person formats could reduce the need for personnel and space [38] and allow more effective use of in-person course time. The results suggest that e-learning effectively facilitates the acquisition of foundational theoretical knowledge, but additional practical components may be needed to fully address the needs of novice learners. Previous studies have also suggested that e-learning can contribute to the development of practical skills [14], although it cannot entirely replace in-person practical training [2, 9].

In summary, our data reflect favourably on integrating e-learning into blended-learning teaching of FOCUS, as also suggested by prior studies [20, 28, 30, 67]. To support under-resourced regions and promote efficient resource use, specialist societies should consider developing recommendations and clear guidelines for these courses. Furthermore, these societies should consider accrediting e-learning to ensure consistent training quality and to develop the format to its full potential for learners [10, 14].

Limitations

This study has several limitations that should be considered when interpreting the findings.

First, there were some systematic differences between the study and reference groups. The lack of an in-person introductory session for the reference group (including the pre-test) limits the ability to determine whether their higher post-test scores reflect learning from the e-learning module or pre-existing knowledge. To ensure validity, the written test was not taken at home, which prevented the use of external aids. The reference group had greater age, clinical experience, prior examinations, and FOCUS exposure, likely contributing to their stronger performance. This interpretation is supported by a trend in the intragroup comparison, which showed that within the reference group, participants who had already performed echocardiography scored better than those who had not (p = 0.06). While these influencing variables were considered in the interpretation of results and included in a regression analysis, the subgroup sizes were small, and the comparisons should be interpreted as indicative trends rather than definitive effects. Preparation time also differed between groups: the study group had a fixed 1–2 week period, whereas the reference group had up to four months to engage with the material. Although this might have allowed some physicians to engage more deeply with the content, it further limits the comparability of the two groups. The reference group was also slightly larger than the study group (by eight participants); although this discrepancy is relatively small, it may still have influenced group comparisons. Overall, these differences limit the interpretation of comparative effects, which means that, as stated earlier, the physicians should primarily be interpreted as a reference group.

Second, the assessments (theorypre and theorypost) contained overlapping and partially identical questions, introducing the possibility of test-retest bias. This was mitigated by a time delay between tests and by withholding feedback after the pre-test, but some recall effects cannot be ruled out.

Third, this study focused exclusively on theoretical knowledge acquisition, assessed through objective tests and subjective evaluations. The assessment of practical competencies was not feasible due to logistical and methodological constraints (e.g., lack of standardized evaluation conditions across multiple sites, need for expert raters and time-intensive evaluation processes). Consequently, the results do not allow conclusions about the acquisition of practical competencies. Future studies should include formats such as Direct Observation of Procedural Skills (DOPS) or Objective Structured Clinical Examinations (OSCEs) to evaluate the effect of e-learning on practical competencies.

Fourth, only students who had completed the first state medical examination were eligible; the findings therefore cannot be extrapolated to students in earlier semesters or to other groups such as nurses or allied health professionals, which limits generalisability to other educational settings and learner populations.

Fifth, although test administration was standardized, self-selection bias remains possible. Participants who chose to take part may have been more motivated or more comfortable with digital learning formats, potentially leading to an overestimation of the e-learning module’s effectiveness.

Finally, the study did not include a second control group without e-learning access (e.g., textbook-only preparation), which would have enabled a more nuanced evaluation of the added value of e-learning compared to analog learning strategies. Furthermore, while participants had access to the e-learning platform after the initial course, no follow-up data were collected to assess long-term competency retention.

Conclusion

The present study highlights the potential of e-learning for enhancing theoretical ultrasound competencies among those undertaking FOCUS training. However, although e-learning proved effective in improving theoretical knowledge, it could not fully compensate for the clinical experience gained through hands-on practice. Users’ positive evaluation of the e-learning underscores its suitability and acceptance as a valuable tool in ultrasound education. Digital learning media, such as e-learning platforms, should therefore be more prominently integrated into future ultrasound training programs. Further investigations are needed to examine the relationship between e-learning and hands-on clinical experience in more depth and to clarify the underlying mechanisms. We propose that clear guidelines for the certification of e-learning platforms, focusing on content quality, user engagement, and assessment standards, be developed in alignment with existing ultrasound education frameworks. Additionally, certification and accreditation by professional societies could ensure a modern, innovative, and forward-looking ultrasound educational practice that aligns with evolving technological advancements and educational needs.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Acknowledgements

We thank all participating students and lecturers for supporting our study. We would also like to thank C. Christe for her help in revising the figures. We would like to express our gratitude to Kay Stankov for his contributions to this publication. His dedicated efforts in consulting, supervising, and meticulously reviewing all statistical aspects have been instrumental in ensuring the rigor and accuracy of our research findings.

Author contributions

Conceptualization: R.K., J.W.M. and J.M.W.; methodology and software: R.K., S.G. and J.M.W.; validation: S.G., R.K., H.B., J.W.M. and J.M.W.; formal analysis: J.R., R.K. and J.M.W.; investigation: J.R., R.K. and J.M.W.; resources: H.B., J.W.M. and J.M.W.; data curation: J.R., R.K., L.A.L., A.M.W., E.W. and J.M.W.; writing – original draft preparation: J.R. and J.M.W.; writing – review and editing: J.R., R.K., S.G., S.W., L.A.L., A.M.W., R.K., E.W., H.B., J.W.M. and J.M.W.; visualization: J.M.W.; supervision: S.G., J.W.M. and J.M.W.; project administration: J.M.W. All authors have read and agreed to the published version of the manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research received no external funding.

Data availability

Data cannot be shared publicly because of institutional and national data policy restrictions imposed by the Ethics committee since the data contain potentially identifying study participants’ information. Data are available upon request from the Johannes Gutenberg University Mainz Medical Center (contact via weimer@uni-mainz.de) for researchers who meet the criteria for access to confidential data (please provide the manuscript title with your enquiry).

Declarations

Ethics approval and consent to participate

Approval for the study was waived by the local ethics committee of the State Medical Association of Rhineland-Palatinate (“Ethik-Kommission der Landesärztekammer Rheinland-Pfalz”, Mainz, Germany). All procedures involving human participants were performed in accordance with the ethical standards of the institutional and national research committee and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. Written informed consent was obtained from all participants.

Consent for publication

Not applicable.

Competing interests

J.W. is one of the Editorial Board Members of BMC Medical Education. The other authors declare no conflicts of interest.

Clinical trial number

Not applicable.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

7/19/2025

This article has been updated to amend the license information

References

- 1.Ruiz JG, Mintzer MJ, Leipzig RM. The impact of E-learning in medical education. Acad Med. 2006;81(3):207–12. [DOI] [PubMed] [Google Scholar]

- 2.Harel-Sterling M. Can you teach a hands-on skill online? A scoping review of e-learning for point-of-care ultrasound in medical education. Can Med Educ J. 2023;14(6):66–77. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Vallee A, Blacher J, Cariou A, Sorbets E. Blended learning compared to traditional learning in medical education: systematic review and Meta-Analysis. J Med Internet Res. 2020;22(8):e16504. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Camargo CP, Tempski PZ, Busnardo FF, Martins MA, Gemperli R. Online learning and COVID-19: a meta-synthesis analysis. Clin (Sao Paulo). 2020;75:e2286. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Darras KE, Spouge RJ, de Bruin ABH, Sedlic A, Hague C, Forster BB. Undergraduate radiology education during the COVID-19 pandemic: A review of teaching and learning strategies [Formula: see text]. Can Assoc Radiol J. 2021;72(2):194–200. [DOI] [PubMed] [Google Scholar]

- 6.Soni NJ, Boyd JS, Mints G, Proud KC, Jensen TP, Liu G, et al. Comparison of in-person versus tele-ultrasound point-of-care ultrasound training during the COVID-19 pandemic. Ultrasound J. 2021;13(1):39. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Stoehr F, Muller L, Brady A, Trilla A, Mahringer-Kunz A, Hahn F, et al. How COVID-19 kick-started online learning in medical education-The digimed study. PLoS ONE. 2021;16(9):e0257394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Delungahawatta T, Dunne SS, Hyde S, Halpenny L, McGrath D, O’Regan A, et al. Advances in e-learning in undergraduate clinical medicine: a systematic review. BMC Med Educ. 2022;22(1):711. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Gradl-Dietsch G, Menon AK, Gursel A, Gotzenich A, Hatam N, Aljalloud A, et al. Basic echocardiography for undergraduate students: a comparison of different peer-teaching approaches. Eur J Trauma Emerg Surg. 2018;44(1):143–52. [DOI] [PubMed] [Google Scholar]

- 10.Blank V, Strobel D, Karlas T. Digital training formats in ultrasound diagnostics for physicians: what options are available and how can they be successfully integrated into current DEGUM certified course concepts? Ultraschall Med. 2022;43(5):428–34. [DOI] [PubMed] [Google Scholar]

- 11.Nourkami-Tutdibi N, Hofer M, Zemlin M, Abdul-Khaliq H, Tutdibi E. TEACHING MUST GO ON: flexibility and advantages of peer assisted learning during the COVID-19 pandemic for undergraduate medical ultrasound education - perspective from the sonobystudents ultrasound group. GMS J Med Educ. 2021;38(1):Doc5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Herbert A, Russell FM, Zahn G, Zakeri B, Motzkus C, Wallach PM, et al. Point-of-Care ultrasound education during a pandemic: from webinar to progressive Dinner-Style bedside learning. Cureus. 2022;14(5):e25141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Weimer AM, Berthold R, Schamberger C, Vieth T, Balser G, Berthold S, et al. Digital transformation in musculoskeletal ultrasound: acceptability of blended learning. Diagnostics (Basel). 2023;13(20):3272. [DOI] [PMC free article] [PubMed]

- 14.Weimer JM, Recker F, Horn L, Kuenzel J, Dirks K, Ille C, et al. Insights into modern undergraduate ultrasound education: prospective comparison of digital and analog teaching resources in a flipped classroom concept – the divan study. Ultrasound Int Open. 2024;10:a23899410. [DOI] [PMC free article] [PubMed]

- 15.Altersberger M, Pavelka P, Sachs A, Weber M, Wagner-Menghin M, Prosch H. Student perceptions of instructional ultrasound videos as Preparation for a practical assessment. Ultrasound Int Open. 2019;5(3):E81–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Hohne E, Recker F, Schmok E, Brossart P, Raupach T, Schafer VS. Conception and feasibility of a digital Tele-Guided abdomen, thorax, and thyroid gland ultrasound course for medical students (TELUS study). Ultraschall Med. 2023;44(2):194–202. [DOI] [PubMed] [Google Scholar]

- 17.Tang B, Coret A, Qureshi A, Barron H, Ayala AP, Law M. Online lectures in undergraduate medical education: scoping review. JMIR Med Educ. 2018;4(1):e11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Hohne E, Recker F, Brossart P, Schafer VS. Teledidactic versus Hands-on teaching of abdominal, thoracic, and thyroid Ultrasound-The TELUS II study. J Gen Intern Med. 2024;39(10):1803–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Hohne E, Schafer VS, Neubauer R, Gotta J, Reschke P, Wittek A, et al. A four year follow-up survey on the Teledidactic TELUS ultrasound course: long-term benefits and implications. BMC Med Educ. 2024;24(1):1022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Cosyns B, De Diego JJ, Stefanidis A, Galderisi M, Ernande L, Underwood SR, et al. E-learning in cardiovascular imaging: another step towards a structured educational approach. Eur Heart J Cardiovasc Imaging. 2015;16(5):463–5. [DOI] [PubMed] [Google Scholar]

- 21.Price S, Via G, Sloth E, Guarracino F, Breitkreutz R, Catena E, et al. Echocardiography practice, training and accreditation in the intensive care: document for the world interactive network focused on critical ultrasound (WINFOCUS). Cardiovasc Ultrasound. 2008;6:49. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Via G, Hussain A, Wells M, Reardon R, ElBarbary M, Noble VE, et al. International evidence-based recommendations for focused cardiac ultrasound. J Am Soc Echocardiogr. 2014;27(7):683.e1–e33. [DOI] [PubMed] [Google Scholar]

- 23.Dietrich CF, Sirli RL, Barth G, Blaivas M, Daum N, Dong Y, et al. Student ultrasound education - current views and controversies. Ultraschall Med. 2024;45(4):389–94. [DOI] [PubMed] [Google Scholar]

- 24.Gallina T, Zulawinska B, Stepniewski J, Magon W, Cebula G, Kopec G. Recognition of emerging cardiac diagnoses by echocardiography in 5th-year medical students - the role of focused e-learning. Kardiol Pol. 2021;79(10):1124–6. [DOI] [PubMed] [Google Scholar]

- 25.Gat T, Galante O, Sadeh R, Kobal SL, Fuchs L. Self-learning of cardiac ultrasound by medical students: can augmented online training improve and maintain manual POCUS skills over time? J Ultrasound. 2024;27(1):73–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Hempel D, Sinnathurai S, Haunhorst S, Seibel A, Michels G, Heringer F, et al. Influence of case-based e-learning on students’ performance in point-of-care ultrasound courses: a randomized trial. Eur J Emerg Med. 2016;23(4):298–304. [DOI] [PubMed] [Google Scholar]

- 27.Lien WC, Lin P, Chang CH, Wu MC, Wu CY. The effect of e-learning on point-of-care ultrasound education in novices. Med Educ Online. 2023;28(1):2152522. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Parra VM, Fita G, Azqueta M, Gonzalez M, Aranda F, Maestre ML, et al. Student survey after ten years of continuous blended teaching of echocardiography. Rev Esp Anestesiol Reanim (Engl Ed). 2024;71(1):8–16. [DOI] [PubMed] [Google Scholar]

- 29.Salerno A, Euerle BD, Witting MD. Transesophageal echocardiography training of emergency physicians through an E-Learning system. J Emerg Med. 2020;58(6):947–52. [DOI] [PubMed] [Google Scholar]

- 30.Torabi AJ, Feigenbaum H, Bateman PV. Introducing echocardiography to medical students: A novel echocardiography E-Learning experience. Echocardiography. 2021;38(4):549–54. [DOI] [PubMed] [Google Scholar]

- 31.Alnabelsi T, Al-Hussaini A, Owens D. Comparison of traditional face-to-face teaching with synchronous e-learning in otolaryngology emergencies teaching to medical undergraduates: a randomised controlled trial. Eur Arch Otorhinolaryngol. 2015;272(3):759–63. [DOI] [PubMed] [Google Scholar]

- 32.Krauss F, Giesler M, Offergeld C. [On the effectiveness of digital teaching of practical skills in curricular ENT education]. HNO. 2022;70(4):287–94. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Pillong L, Bozzato A, Hecker D, Bozzato V, Schick B, Kulas P. Ahead start or a pain in the neck-establishment and evaluation of a video-based hands-on head and neck ultrasound course. Diagnostics (Basel). 2022;12(5):1239. [DOI] [PMC free article] [PubMed]

- 34.Soon AW, Toney AG, Stidham T, Kendall J, Roosevelt G. Teaching Point-of-Care lung ultrasound to novice pediatric learners: Web-Based E-Learning versus traditional classroom didactic. Pediatr Emerg Care. 2020;36(7):317–21. [DOI] [PubMed] [Google Scholar]

- 35.Tamborrini G, Krebs A, Michel M, Michel BA, Ciurea A. [Web-based learning in musculoskeletal ultrasound]. Z Rheumatol. 2011;70(2):154–9. [DOI] [PubMed] [Google Scholar]

- 36.Nilsson PM, Todsen T, Subhi Y, Graumann O, Nolsøe CP, Tolsgaard MG. Cost-Effectiveness of mobile App-Guided training in extended focused assessment with sonography for trauma (eFAST): A randomized trial. Ultraschall Med. 2017;38(6):642–7. [DOI] [PubMed] [Google Scholar]

- 37.Jujo S, Lee-Jayaram JJ, Sakka BI, Nakahira A, Kataoka A, Izumo M, et al. Pre-clinical medical student cardiac point-of-care ultrasound curriculum based on the American society of echocardiography recommendations: a pilot and feasibility study. Pilot Feasibility Stud. 2021;7(1):175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Johri AM, Durbin J, Newbigging J, Tanzola R, Chow R, De S, et al. Cardiac Point-of-Care ultrasound: State-of-the-Art in medical school education. J Am Soc Echocardiogr. 2018;31(7):749–60. [DOI] [PubMed] [Google Scholar]

- 39.Gibson LE, White-Dzuro GA, Lindsay PJ, Berg SM, Bittner EA, Chang MG. Ensuring competency in focused cardiac ultrasound: a systematic review of training programs. J Intensive Care. 2020;8(1):93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Hagendorff A, Tiemann K, Simonis G, von Bardeleben S. Empfehlungen Zur Notfallechokardiographie. 2014;8(1):45–64.

- 41. Via G, Hussain A, Wells M, Reardon R, ElBarbary M, Noble VE, et al. International evidence-based recommendations for focused cardiac ultrasound. J Am Soc Echocardiogr. 2014;27(7):683.e1–e33.

- 42. Weimer J, Ruppert J, Vieth T, Weinmann-Menke J, Buggenhagen H, Kunzel J, et al. Effects of undergraduate ultrasound education on cross-sectional image understanding and visual-spatial ability - a prospective study. BMC Med Educ. 2024;24(1):619.

- 43. Shafqat A, Ferguson E, Thanawala V, Bedforth NM, Hardman JG, McCahon RA. Visuospatial ability as a predictor of novice performance in ultrasound-guided regional anesthesia. Anesthesiology. 2015;123(5):1188–97.

- 44. Clem DW, Donaldson J, Curs B, Anderson S, Hdeib M. Role of spatial ability as a probable ability determinant in skill acquisition for sonographic scanning. J Ultrasound Med. 2013;32(3):519–28.

- 45. Weimer J, Rolef P, Muller L, Bellhauser H, Gobel S, Buggenhagen H, et al. FoCUS cardiac ultrasound training for undergraduates based on current national guidelines: a prospective, controlled, single-center study on transferability. BMC Med Educ. 2023;23(1):80.

- 46. Canty DJ, Hayes JA, Story DA, Royse CF. Ultrasound simulator-assisted teaching of cardiac anatomy to preclinical anatomy students: a pilot randomized trial of a three-hour learning exposure. Anat Sci Educ. 2015;8(1):21–30.

- 47. Chisholm CB, Dodge WR, Balise RR, Williams SR, Gharahbaghian L, Beraud AS. Focused cardiac ultrasound training: how much is enough? J Emerg Med. 2013;44(4):818–22.

- 48. von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. Lancet. 2007;370(9596):1453–7.

- 49. Greim CA, Weber S, Goepfert M. Perioperative fokussierte Echokardiographie in der Anästhesiologie und Intensivmedizin [Perioperative focused echocardiography in anaesthesiology and intensive care medicine]. Anästhesiologie und Intensivmedizin. 2017;58:617–21.

- 50. Stockwell BR, Stockwell MS, Cennamo M, Jiang E. Blended learning improves science education. Cell. 2015;162(5):933–6.

- 51. Höhne E, Recker F, Dietrich CF, Schäfer VS. Assessment methods in medical ultrasound education. Front Med (Lausanne). 2022;9:871957.

- 52. Puthiaparampil T, Rahman MM. Very short answer questions: a viable alternative to multiple choice questions. BMC Med Educ. 2020;20(1):141.

- 53. Tolks D, Schäfer C, Raupach T, Kruse L, Sarikas A, Gerhardt-Szép S, et al. An introduction to the inverted/flipped classroom model in education and advanced training in medicine and in the healthcare professions. GMS J Med Educ. 2016;33(3):Doc46.

- 54. Heinz W. Basisausbildung Notfallsonographie [Basic training in emergency sonography]. DEGUM.de. DEGUM; 2016 [cited 2022 Oct 10]. Available from: https://www.degum.de/fachgebiete/arbeitskreise/notfallsonografie/kurse-kurscurricula/basisausbildung-notfallsonografie.html

- 55. Royce WW. Managing the development of large software systems: concepts and techniques. In: Proceedings of the 9th International Conference on Software Engineering; Monterey, California, USA. IEEE Computer Society Press; 1987. pp. 328–38.

- 56. Neskovic AN, Skinner H, Price S, Via G, De Hert S, Stankovic I, et al. Focus cardiac ultrasound core curriculum and core syllabus of the European Association of Cardiovascular Imaging. Eur Heart J Cardiovasc Imaging. 2018;19(5):475–81.

- 57. Croft H, Gilligan C, Rasiah R, Levett-Jones T, Schneider J. Current trends and opportunities for competency assessment in pharmacy education - a literature review. Pharmacy (Basel). 2019;7(2):67.

- 58. Hani S, Chalouhi G, Lakissian Z, Sharara-Chami R. Introduction of ultrasound simulation in medical education: exploratory study. JMIR Med Educ. 2019;5(2):e13568.

- 59. Recker F, Schafer VS, Holzgreve W, Brossart P, Petzinna S. Development and implementation of a comprehensive ultrasound curriculum for medical students: the Bonn internship point-of-care-ultrasound curriculum (BI-POCUS). Front Med (Lausanne). 2023;10:1072326.

- 60. Saad S, Richmond C, King D, Jones C, Malau-Aduli B. The impact of pandemic disruptions on clinical skills learning for pre-clinical medical students: implications for future educational designs. BMC Med Educ. 2023;23(1):364.

- 61. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77(6):1121–34.

- 62. Kirkpatrick DL, Kirkpatrick JD. Evaluating training programs: the four levels. 3rd ed. San Francisco, CA: Berrett-Koehler; 2006.

- 63. Pressman RS, Maxim BR. Software engineering: a practitioner’s approach. 8th ed. New York, NY: McGraw-Hill Education; 2015.

- 64. Jang HW, Kim KJ. Use of online clinical videos for clinical skills training for medical students: benefits and challenges. BMC Med Educ. 2014;14:56.

- 65. Fontaine G, Cossette S, Maheu-Cadotte MA, Mailhot T, Deschênes MF, Mathieu-Dupuis G, et al. Efficacy of adaptive e-learning for health professionals and students: a systematic review and meta-analysis. BMJ Open. 2019;9(8):e025252.

- 66. Daum N, Blaivas M, Goudie A, Hoffmann B, Jenssen C, Neubauer R, et al. Student ultrasound education, current view and controversies. Role of artificial intelligence, virtual reality and telemedicine. Ultrasound J. 2024;16(1):44.

- 67. Canty D, Barth J, Yang Y, Peters N, Palmer A, Royse A, et al. Comparison of learning outcomes for teaching focused cardiac ultrasound to physicians: a supervised human model course versus an eLearning guided self-directed simulator course. J Crit Care. 2019;49:38–44.


Data Availability Statement

Data cannot be shared publicly because the Ethics Committee has imposed institutional and national data policy restrictions: the data contain potentially identifying information about study participants. Data are available upon request from the Johannes Gutenberg University Mainz Medical Center (contact via weimer@uni-mainz.de) for researchers who meet the criteria for access to confidential data (please provide the manuscript title with your enquiry).