Abstract

Pharmacovigilance is the science of collecting, detecting, and assessing adverse events associated with pharmaceutical products in order to monitor and understand those products’ safety profiles on an ongoing basis. Part of this process, signal management, encompasses the activities of signal detection, signal validation/confirmation, signal evaluation, and, ultimately, a final assessment of whether a safety signal constitutes a new causal adverse drug reaction. Artificial intelligence is a group of technologies, including machine learning and natural language processing, that are revolutionizing multiple industries through intelligent automation. Here, we present a critical evaluation of studies leveraging artificial intelligence in signal management to characterize the benefits and limitations of the technology and the level of transparency of these studies, and to offer our perspective on best practices for the future. To this end, PubMed and Embase were searched cumulatively for terms pertaining to signal management and artificial intelligence, machine learning, or natural language processing. Information pertaining to the artificial intelligence model used, hyperparameter settings, training/testing data, performance, feature analysis, and more was extracted from included articles. Common signal detection methods included k-means, random forest, and gradient boosting machine. Machine learning algorithms generally outperformed traditional frequentist or Bayesian measures of disproportionality across various metrics, showing the potential utility of advanced machine learning technologies in signal detection. In signal validation and evaluation, natural language processing was typically applied. Methodological transparency was mixed, and only some studies leveraged “gold standard” publicly available positive and negative control datasets.
Overall, innovation in pharmacovigilance signal management is being driven by machine learning and natural language processing models, particularly in signal detection, in part because of high-performing tree ensemble methods such as random forest (a bagging method) and gradient boosting machine (a boosting method). These technologies may be well poised to accelerate progress in this field when used transparently and ethically. Future research is needed to assess the applicability of these techniques across various therapeutic areas and drug classes in the broader pharmaceutical industry.

Supplementary Information

The online version contains supplementary material available at 10.1007/s40290-025-00561-2.

Key Points

Artificial intelligence technologies have revolutionized many industries and processes through intelligent automation, including pharmacovigilance.

Across the activities of signal detection, validation, prioritization, and evaluation, most research effort in the artificial intelligence area is applied to signal detection.

Novel machine learning signal detection methodologies (k-means, random forest, gradient boosting machine, and others) generally outperformed traditional frequentist methodologies as measured by various metrics, and have the potential to identify previously unknown safety signals.

With proper transparency and use of gold standards, these technologies will continue to bring considerable value to drug safety science.

Introduction

Pharmacovigilance is the scientific discipline pertaining to the systematic collection, detection, assessment, monitoring, and prevention of adverse events (AEs) associated with pharmaceutical products [1, 2]. Within pharmacovigilance, signal management is a key process that focuses on the identification and evaluation of safety signals, defined as information on a new or known AE that is potentially caused by a medicine and that warrants further investigation [3]. Signal management includes the activities of signal detection, validation, prioritization, and assessment (synonymously referred to herein as “evaluation”). Finally, based on the assessment, appropriate actions are recommended to mitigate the risk and ensure patient safety, including but not limited to labeling newly identified adverse drug reactions (ADRs).

Artificial intelligence (AI), which includes technologies such as machine learning (ML) and natural language processing (NLP), is revolutionizing various fields, including pharmacovigilance [4]. Overall, AI enables organizations to optimize or automate tedious processes, surface data insights more quickly, help draft written documents, and ultimately allow humans to focus on higher-value tasks and analyses. In pharmacovigilance, AI has been effectively deployed in individual case safety report (ICSR) management for tasks such as ICSR processing (i.e., case intake, evaluation, follow-up, and distribution) [5] and causality assessment [6]. These approaches have proven useful in capturing AEs from coronavirus disease 2019 (COVID-19) vaccines reported in social media [7] and in other high-volume scenarios where manual capture would be time consuming, tedious, and prone to human error. AI has even been leveraged to predict ADRs for drug candidates during drug discovery [8], suggesting the utility of the technology in drug safety across the development pipeline. Signal management is another area of pharmacovigilance that has more recently begun to benefit from novel AI models. In particular, signal detection has been a key focus area, because it relies on complex associations between multiple variables in large safety datasets, where AI may surface novel insights that traditional methodologies fail to identify.

While the use of AI in pharmacovigilance, particularly in ICSR management, has been well documented by Salas et al. [9], Kassekert et al. [10], and others [4, 9–12], reviews summarizing the current applications of AI specifically in signal management including detailed information regarding models used and performance metrics are yet to be published. Furthermore, previous reviews summarizing the scope of methods used in signal detection such as those by Kompa et al. [13] and Painter et al. [12] did not focus on emerging advanced AI methods in detail. Additionally, there is a critical lack of information in the literature summarizing the use of AI in signal management downstream of signal detection (i.e., signal validation and evaluation) despite the importance of these steps. Thus, the current review aims to address this gap in the literature by identifying and summarizing the existing uses of AI in signal detection, validation, and assessment with a focus on novel methodologies.

Literature Search

Search Strategy

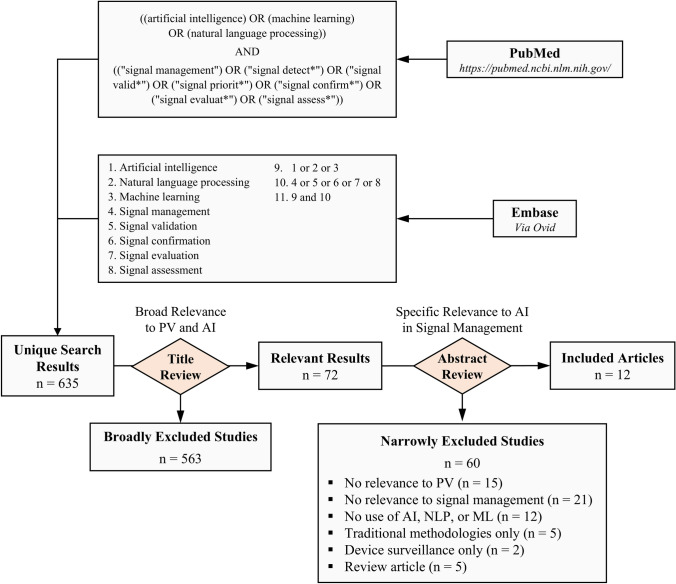

We searched the PubMed and Embase databases cumulatively through 27 February 2024. Search terms pertaining to AI, ML, and NLP were combined with terms capturing different processes within pharmacovigilance signal management (Fig. 1). The search was executed via the public PubMed web portal (https://pubmed.ncbi.nlm.nih.gov/) for PubMed and via the Ovid platform (https://ovidsp.ovid.com/) for Embase, using search term 11 in Fig. 1 (searching the cumulative Embase database without excluding conference abstracts).

Fig. 1.

Literature search strategy. PubMed and Embase databases were queried for publications pertaining to artificial intelligence (AI) and pharmacovigilance signal management by similar search strategies. Search results were screened first by title for broad relevance, then by abstract for narrow relevance, resulting in 12 final included articles. ML machine learning, NLP natural language processing, PV pharmacovigilance

Article Selection

Results from the PubMed and Embase databases were exported to Microsoft Excel, compiled, and deduplicated by title. A two-step screening process was then adopted. First, all article titles were screened for broad topic relevance, and articles with titles deemed potentially relevant to the scope of this review were flagged for further review. Second, flagged articles were screened by abstract for a final determination of relevance to our scope. For the purposes of this review, we adopted the definitions of signal detection, validation, prioritization, and evaluation given in the European Medicines Agency Guideline on good pharmacovigilance practices [1]; “signal assessment” and “signal evaluation” are used interchangeably. Further, signal detection was limited to detection of signals from spontaneous reports and real-world data rather than from statistical alerts or other sources. We also aimed to characterize novel applications of emerging advanced ML techniques for signal detection, targeting those that may improve performance over traditional frequentist (e.g., proportional reporting ratio [PRR], reporting odds ratio [ROR], relative reporting ratio) or Bayesian (e.g., the information component of the Bayesian Confidence Propagation Neural Network) measures of disproportionality, association rule analysis, or older text mining methods. Although these traditional approaches are, strictly speaking, ML methods, studies applying only them were considered out of scope for our analysis. In summary, the following exclusion criteria were applied:

Not related to the field of pharmacovigilance.

Related to the field of pharmacovigilance but not related to signal management processes (e.g., articles pertaining only to non-signal management processes such as upstream AE detection in unstructured sources, case entry, case management, ad hoc or bespoke purposes).

No application of AI, NLP, or ML methodologies.

Signal detection studies exploring traditional methodologies defined above as out of scope.

Studies pertaining only to medical device surveillance.

Review articles.

Studies for which exclusion criteria were unclear were adjudicated by a team discussion among the authors. Articles were not excluded based on year of publication, journal, authorship, or other content-irrelevant factors. Conference abstracts were not excluded. Excluded studies are listed in the Electronic Supplementary Material.
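For orientation, the traditional disproportionality measures treated as out of scope here (and used as comparators in several included studies) are simple functions of a 2 × 2 table of report counts. The sketch below assumes the usual layout (a: reports with the drug and the event; b: the drug with other events; c: other drugs with the event; d: other drugs with other events) and uses the common shrinkage form of the information component, log2((O + 0.5)/(E + 0.5)). It returns point estimates only; the function name and counts are hypothetical, and operational systems additionally apply confidence bounds and signaling thresholds.

```python
import math
from typing import Dict


def disproportionality(a: int, b: int, c: int, d: int) -> Dict[str, float]:
    """Point estimates of PRR, ROR, and a shrunk IC from a 2x2 table of report counts.

    a: reports with the drug and the event
    b: reports with the drug and any other event
    c: reports with other drugs and the event
    d: reports with other drugs and any other event
    Assumes b, c > 0 (no zero cells); no confidence bounds are computed.
    """
    n = a + b + c + d
    # PRR: share of the event among the drug's reports vs. among all other reports
    prr = (a / (a + b)) / (c / (c + d))
    # ROR: odds of the event with the drug vs. odds of the event with other drugs
    ror = (a * d) / (b * c)
    # IC: log2 of observed vs. expected count, with +0.5 shrinkage on both terms
    expected = (a + b) * (a + c) / n
    ic = math.log2((a + 0.5) / (expected + 0.5))
    return {"PRR": prr, "ROR": ror, "IC": ic}


# Hypothetical counts for one product-event combination.
print(disproportionality(a=20, b=80, c=100, d=9800))
```

Because each measure depends only on report counts, none of them can use case-level attributes such as seriousness or dechallenge, which is one motivation for the feature-based ML models reviewed below.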

Data Extraction

For studies focusing on signal detection using supervised ML, we extracted the database from which training/testing data were derived, the corresponding number of product-event combinations (PECs) in that database, the types of event signals tested (if specific clinical concepts were targeted in the analysis), the types of drugs for which PECs were included (if not simply all drugs in a given dataset), the number of positive and negative control PECs used to train the model, the methods used, any performance metrics provided, and any results of a feature analysis. If multiple sets of reference data were used for positive and negative control PECs, we extracted the highest number. If methods were tested on multiple test datasets, the best performance value was extracted unless the authors provided an average (we did not compute averages ourselves). Our results therefore reflect the best-case performance of the models in the included articles. If ML methods were compared against standard methods (e.g., PRR, ROR, relative reporting ratio, information component), performance metrics for those standard methods were also captured. We recorded “Yes” or “No” according to whether the hyperparameters used to train ML models were reported, but did not tabulate the actual values, as these would be of little informative value to the reader. Information was compiled in Microsoft Excel. Given the small number of studies focusing on signal validation and signal evaluation (and on unsupervised ML in signal detection), these were evaluated and summarized qualitatively on a per-study basis.
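The extraction rules above can be made explicit in a few lines. This is a minimal sketch with hypothetical function names and values; it assumes that “highest performance” means the best value for the metric, i.e., the maximum for scores such as AUROC but the minimum for error metrics such as MAE, which is our interpretation rather than a rule stated by the review.

```python
from typing import Optional, Sequence


def extract_metric(per_dataset: Sequence[float],
                   reported_average: Optional[float] = None,
                   higher_is_better: bool = True) -> float:
    """Value recorded for one metric of one method under the extraction rule.

    An average reported by the authors takes precedence; otherwise the best
    value across test datasets is kept. No averages are computed here.
    """
    if reported_average is not None:
        return reported_average
    if not per_dataset:
        raise ValueError("no metric values reported")
    # "Best" means highest for scores such as AUROC, lowest for errors such as MAE.
    return max(per_dataset) if higher_is_better else min(per_dataset)


def extract_control_count(reference_set_sizes: Sequence[int]) -> int:
    """When several reference sets were used, the largest control count is recorded."""
    return max(reference_set_sizes)


print(extract_metric([0.71, 0.68, 0.74]))                         # → 0.74
print(extract_metric([0.71, 0.68, 0.74], reported_average=0.70))  # → 0.7
print(extract_control_count([15, 71]))                            # → 71
```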

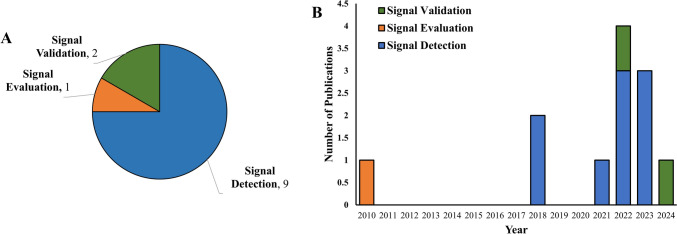

Summary of Studies Leveraging AI in Signal Detection

Our search strategy identified 12 included studies [14–25]: nine pertained to signal detection (one using unsupervised ML [15] and eight using supervised ML [14, 16, 18, 20, 22–25]), two to signal validation [17, 21], and one to signal evaluation [19] (Fig. 2A). Publication years ranged from 2010 to 2024, with a heavy skew toward recent years: nine studies were published in 2021 or later [15–18, 20–22, 24, 25] (Fig. 2B). The nine signal detection studies [14–16, 18, 20, 22–25] applied a range of AI technologies, mostly ML (with some NLP), and particularly supervised ML (eight of nine) [14, 16, 18, 20, 22–25] rather than unsupervised ML (one of nine) [15]. Because our search strategy did not exclude signal detection from real-world data (e.g., claims data or electronic health records [EHRs]), these studies drew on both spontaneous reporting databases and real-world data sources such as EHRs and medication dispensing records (Table 1). Each of these applications of AI to signal detection is discussed below.

Fig. 2.

Characteristics of included studies. A Included studies by topic (signal detection, signal validation, or signal evaluation). B Included studies by publication year, stratified by topic

Applications of Unsupervised ML

Our search strategy identified only one article applying unsupervised ML to signal detection. This is perhaps unsurprising, given that supervised ML offers certain advantages in this use case, as discussed further in Sect. 6. This exploratory study aimed to detect signals associated with the AstraZeneca COVID-19 vaccine by clustering PECs in VigiBase and then retrospectively analyzing the clusters for potential signals [15]. The authors first reduced the number of variables per case from 114 to 10 via multiple correspondence analysis, then applied k-means clustering. Review of the resulting clusters in two dimensions showed that one of the main clusters was dominated by AEs of thrombocytopenia and/or thrombosis, which are known ADRs for that product. The ability of the strategy to detect other known ADRs or previously unknown ADRs was not discussed, although the study was reported only as a conference abstract, which limited how much detail the authors could share.
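As a rough illustration of the clustering step, the sketch below runs Lloyd's k-means on cases that are assumed to have already been reduced to a few numeric coordinates (the multiple correspondence analysis step is not shown). The deterministic farthest-point initialization is our simplification (common implementations use random or k-means++ seeding), the six two-dimensional “cases” are hypothetical, and this is not the authors’ implementation. As in [15], the resulting clusters would still need manual review to judge whether any of them represents a signal.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, max_iter: int = 100) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's k-means with a deterministic farthest-point initialization."""
    # Initialization: start from the first point, then repeatedly add the point
    # that is farthest from all centroids chosen so far.
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))

    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each case goes to its nearest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: move each centroid to the mean of its member cases.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(coord) / len(members) for coord in zip(*members)]
    return labels, centroids


# Hypothetical 2-D coordinates for six cases after dimension reduction.
cases = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
labels, _ = kmeans(cases, k=2)
print(labels)  # → [0, 0, 0, 1, 1, 1]
```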

Applications of Supervised ML

Tables 1 and 2 summarize the included articles that applied supervised ML techniques to signal detection. These eight studies [14, 16, 18, 20, 22–25] leveraged a variety of public or private databases, including the Ajou University Hospital EHR Database, the Australia Pharmaceutical Benefit Scheme Medication Dispensing Database, the Netherlands Pharmacovigilance Centre Lareb, the University of Texas Health Science Center at Houston Clinical Data Warehouse, the Affiliated Brain Hospital of Guangzhou Medical University EHR Database, the US Food and Drug Administration Adverse Event Reporting System, and the Korea Adverse Event Reporting System. Six of the eight studies developed ML models to target any AE (or any “new”, i.e., unlabeled, AE) [14, 18, 20, 22–24], as would be desired for general-purpose signal detection, although one of these six focused solely on drug-laboratory value combinations rather than drug-AE combinations [23]. The remaining two studies developed models to detect a specific set of AEs or a specific laboratory value [16, 25]. Similarly, four of the eight developed models specific to a product or set of products [14, 16, 22, 25], while the other four allowed any (or nearly any) PEC to be detected [18, 20, 23, 24]. The number of cases or PECs studied in these databases ranged from ~1200 to ~17,700,000. Six studies reported positive controls [14, 16, 18, 20, 22, 24], ranging from 67 to 1439 PECs, while the other two did not provide this information [23, 25]. The number of negative controls also varied widely, ranging from 45 to 28,985 PECs [14, 16, 18, 20, 22, 24].

Table 1.

Summary of articles applying novel supervised machine learning methodologies to signal detection

| References | Database | n | Positive controls | Negative controls | Type of controls | Signals targeted | Drugs included | Method(s) | Number of features | Training:testing split (ratio) | Label distribution imbalance adjustment | Hyperparameters provided | Hyperparameter tuning |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jeong et al. [23] | Ajou University Hospital EHR Database | 1674 drug-laboratory combinations | N/P | N/P | N/P | Any unlabeled drug-laboratory event pairs | Any | Random forest; L1 regularized logistic regression; support vector machine; NN | 48 | 70:30 | N/P | Yes | Ten-fold cross-validation |
| Hoang et al. [20] | Australia Pharmaceutical Benefit Scheme Medication Dispensing Database | 1.8 million cases | 67 PECs | 83 PECs | External | Any | Any | Logistic regression; decision tree; support vector machine; NN; random forest; gradient boosting machine; SSA | N/P | N/P | N/P | N/P | Unspecified cross-validation |
| Bae et al. [14] | KAERS | 1244 PECs | 70 PECs; 267 PECs | 15 PECs; 71 PECs | Internal | Any unlabeled AEs | Nivolumab; docetaxel | Gradient boosting machine; random forest; ROR; IC | 23 | 75:25 | SMOTE | Yes | Five-fold cross-validation |
| Gosselt et al. [18] | Netherlands Pharmacovigilance Centre Lareb | 30,424 PECs | 1439 PECs | 28,985 PECs | Internal | Any unlabeled AEs | Any (except vaccines) | Logistic regression; elastic net logistic regression; eXtreme gradient boosting | 19 | 70:30 | Random down sampling | N/P | Five-fold cross-validation |
| Mower et al. [24] | University of Texas Health Science Center at Houston Clinical Data Warehouse | 2.5 million PECs | 163 PECs | 234 PECs | External | Any | Any | EARP; SGNS; PRR/ROR | N/P | N/P | N/P | N/P | N/P |
| Zhu et al. [25] | Affiliated Brain Hospital of Guangzhou Medical University EHR Database | 672 drug-laboratory combinations | N/P | N/P | N/P | Prolactin level | Olanzapine | eXtreme gradient boosting | 383 | 80:20 | N/P | Yes | Ten-fold cross-validation |
| Dauner et al. [16] | FAERS | 17.7 million PECs | 110 PECs | 45 PECs | Internal | Dysglycemia, hepatic decompensation and hepatic failure, angioedema | Targeted list of 60 | Logistic regression; gradient-boosted trees; random forest; support vector machine; PRR | 9 | 80:20 | SMOTE | N/P | Five-fold cross-validation |
| Jang et al. [22] | KAERS | 1893 PECs | 642 PECs | 485 PECs | Internal | Any unlabeled AEs | Ciprofloxacin, gemifloxacin, levofloxacin, moxifloxacin, ofloxacin | Random forest; bagging; ROR; IC | 22 | 75:25 | ROSE | Yes | Five-fold cross-validation |

AE adverse event, EARP Embeddings Augmented by Random Permutations, EHR electronic healthcare record, FAERS US Food and Drug Administration Adverse Event Reporting System, IC information component, KAERS Korea Adverse Event Reporting System, N/P not provided, NN neural network, PEC product event combination, PRR proportional reporting ratio, ROR reporting odds ratio, ROSE random over-sampling example, SGNS skipgram-with-negative-sampling, SMOTE synthetic minority over-sampling technique, SSA sequence symmetry analysis

Table 2.

Summary of performance and feature analysis

| References | Method | Sensitivity | Specificity | PPV | NPV | Accuracy | F1 | Precision | Recall | AUROC | MAE | MSE | RMSE | MRE | Top features |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jeong et al. [23] | Random forest | 0.671 | 0.780 | 0.727 | 0.732 | – | 0.696 | – | – | 0.816 | – | – | – | – | Shape of laboratory result distribution, descriptive statistics, Yule’s Q, EB05 |
| | L1 regularized logistic regression | 0.593 | 0.756 | 0.679 | 0.682 | – | 0.631 | – | – | 0.741 | – | – | – | – | |
| | Support vector machine | 0.569 | 0.796 | 0.709 | 0.680 | – | 0.629 | – | – | 0.737 | – | – | – | – | |
| | NN | 0.793 | 0.619 | 0.645 | 0.777 | – | 0.709 | – | – | 0.795 | – | – | – | – | |
| Hoang et al. [20] | Logistic regression | 0.62 | 0.72 | 0.67 | 0.68 | – | – | – | – | – | – | – | – | – | Statistical features |
| | Decision tree | 0.67 | 0.67 | 0.65 | 0.70 | – | – | – | – | – | – | – | – | – | |
| | Support vector machine | 0.61 | 0.43 | 0.52 | 0.52 | – | – | – | – | – | – | – | – | – | |
| | NN | 0.59 | 0.48 | 0.51 | 0.55 | – | – | – | – | – | – | – | – | – | |
| | Random forest | 0.57 | 0.85 | 0.79 | 0.69 | – | – | – | – | – | – | – | – | – | |
| | Gradient boosting machine | 0.77 | 0.81 | 0.76 | 0.82 | – | – | – | – | – | – | – | – | – | |
| | SSA | 0.56 | 0.78 | 0.65 | 0.72 | – | – | – | – | – | – | – | – | – | |
| Bae et al. [14] | Gradient boosting machine | 1.00 | 0.93 | 0.95 | 1.00 | 0.97 | – | – | – | 0.973 | – | – | – | – | SOC, frequency, seriousness, HCP-reported |
| | Random forest | – | – | – | – | – | – | – | – | 0.952 | – | – | – | – | |
| | ROR | – | – | – | – | – | – | – | – | 0.548 | – | – | – | – | |
| | IC | – | – | – | – | – | – | – | – | 0.486 | – | – | – | – | |
| Gosselt et al. [18] | Logistic regression | – | – | – | – | – | – | – | – | 0.75 | – | – | – | – | Listedness, seriousness, IME, HCP-reported, rechallenge |
| | Elastic net logistic regression | – | – | – | – | – | – | – | – | 0.74 | – | – | – | – | |
| | eXtreme gradient boosting | – | – | – | – | – | – | – | – | 0.74 | – | – | – | – | |
| Mower et al. [24] | EARP | – | – | – | – | – | – | – | – | 0.677 | – | – | – | – | No feature analysis performed |
| | SGNS | – | – | – | – | – | – | – | – | 0.709 | – | – | – | – | |
| | PRR/ROR | – | – | – | – | – | – | – | – | 0.530 | – | – | – | – | |
| Zhu et al. [25] | eXtreme gradient boosting | – | – | – | – | – | – | – | – | – | 0.046 | 0.0036 | 0.06 | 11% | Sex, concomitant medications, age, dose, laboratory values |
| Dauner et al. [16] | Logistic regression | – | – | – | – | 0.710 | 0.824 | 0.741 | 0.955 | 0.606 | – | – | – | – | Frequency, dechallenge, recent reporting, seriousness |
| | Gradient-boosted trees | – | – | – | – | 0.645 | 0.766 | 0.720 | 0.864 | 0.657 | – | – | – | – | |
| | Random forest | – | – | – | – | 0.710 | 0.830 | 0.720 | 1.000 | 0.641 | – | – | – | – | |
| | Support vector machine | – | – | – | – | 0.710 | 0.830 | 0.833 | 1.000 | 0.611 | – | – | – | – | |
| | PRR | – | – | – | – | – | – | 0.673 | 0.321 | 0.458 | – | – | – | – | |
| Jang et al. [22] | Random forest | – | – | – | – | 0.63 | – | – | – | – | – | – | – | – | No feature analysis performed |
| | Bagging | – | – | – | – | 0.59 | – | – | – | – | – | – | – | – | |
| | ROR | – | – | – | – | 0.32 | – | – | – | – | – | – | – | – | |
| | IC | – | – | – | – | 0.43 | – | – | – | – | – | – | – | – | |

Performance columns include “–” in lieu of “Not Provided” for ease of reading

AUROC area under the receiver operating characteristic curve, EARP Embeddings Augmented by Random Permutations, EB05 empirical Bayesian geometric mean lower 95% confidence interval limit, HCP healthcare professional, IC information component, IME important medical event, MAE mean absolute error, MRE mean relative error, MSE mean squared error, NN neural network, NPV negative predictive value, PPV positive predictive value, PRR proportional reporting ratio, RMSE root-mean-squared error, ROR reporting odds ratio, SGNS skipgram-with-negative-sampling, SOC system organ class, SSA sequence symmetry analysis

Across studies, a modest variety of ML methods were used, including tree-based methods (e.g., decision tree, random forest, gradient boosting machine [GBM], or eXtreme GBM), logistic regression, support vector machine, neural network, and some NLP techniques (embeddings augmented by random permutations and skipgram-with-negative-sampling). Five of eight studies included a comparison against a more “traditional” signal detection methodology, usually a disproportionality analysis (e.g., ROR, PRR, or the Bayesian information component) or sequence symmetry analysis [14, 16, 20, 22, 24]. When preparing their data for ML training, authors used 9–383 features, most often based on safety variables (e.g., case-level or PEC-level attributes) but sometimes including special statistical or pharmacological features, depending on the study’s aim [20, 23]. Many features were binary, while others were categorical; for instance, age was commonly divided into child (0–17 years), adult (18–64 years), and elderly (65+ years) groups, each coded with a numeric identifier (e.g., 1, 2, or 3). Products were generally coded to the Anatomical Therapeutic Chemical classification, and AEs to Medical Dictionary for Regulatory Activities preferred terms (PTs) or lower-level terms. Training:testing split ratios ranged from 70:30 to 80:20, with two studies not providing this information [20, 24]. Class (label) imbalance was addressed in half of the studies [14, 16, 18, 22], most commonly with the synthetic minority oversampling technique (SMOTE), followed by random over-sampling examples (ROSE) and random down sampling. Seven of eight studies described their hyperparameter tuning method (most commonly five-fold cross-validation) [14, 16, 18, 20, 22, 23, 25], but only four provided the actual hyperparameter values [14, 22, 23, 25].
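To make the most common imbalance adjustment concrete, the sketch below generates synthetic minority-class points in the spirit of SMOTE: each new point lies on the segment between a randomly chosen minority point and one of its k nearest minority-class neighbors. It is a simplified illustration, not the implementation used in any included study. It assumes continuous numeric features (binary or ordinal codes such as the age groups above would become fractional; variants exist for categorical data), and the function name and data are hypothetical. Oversampling should be applied to the training split only, so that no synthetic points leak into the test set.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def smote(minority: Sequence[Point], n_synthetic: int, k: int = 5, seed: int = 0) -> List[Point]:
    """Synthetic minority-class points interpolated toward nearest minority neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two minority points")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic: List[Point] = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        x = minority[i]
        # Indices of the other minority points, nearest first.
        others = sorted((j for j in range(len(minority)) if j != i),
                        key=lambda j: math.dist(x, minority[j]))
        neighbor = minority[rng.choice(others[:k])]
        gap = rng.random()  # position along the segment x -> neighbor, in [0, 1)
        synthetic.append(tuple(xi + gap * (ni - xi) for xi, ni in zip(x, neighbor)))
    return synthetic


# Hypothetical encoded positive-control PECs (the minority class), training split only.
positives = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
augmented = positives + smote(positives, n_synthetic=4, k=2)
print(len(augmented))  # → 8
```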

Regarding performance, 13 different metrics were used across studies, of which the area under the receiver operating characteristic curve (AUROC) was the most common. Gradient boosting machine and random forest models consistently scored highly across multiple metrics relative to other methods within studies, and were also among the top performers across studies. Importantly, in every study that included a more “traditional” comparator method, at least one ML method performed better [14, 16, 20, 22, 24]. The highest AUROC overall (0.973) was achieved by a GBM model with five-fold cross-validation hyperparameter tuning, trained on a small set of PECs with 23 safety-related features [14]; in that study, ROR and information component had AUROC scores of 0.548 and 0.486, respectively, on the same task. The lowest AUROC among ML models was 0.606, from a logistic regression model tuned by five-fold cross-validation and trained on an extremely large (~17.7 million PEC) dataset [16]. Despite this low performance relative to other studies, it still outperformed PRR (AUROC 0.458). Another perspective on performance is whether ML models can detect new, clinically meaningful safety signals when compared head-to-head with standard signal detection practices. Three of the included studies did identify and investigate new drug-laboratory pairs or PECs that were assessed as clinically meaningful on the basis of the supporting literature [14, 22, 23]. This suggests that the performance metrics described above may, to some extent, translate into real improvements in signal detection.
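Because AUROC is the most common metric here, it helps to recall what it measures: the probability that a randomly chosen positive control PEC is ranked above a randomly chosen negative control PEC, so 0.5 corresponds to random ranking. This is why ROR and information component AUROCs of 0.548 and 0.486 indicate near-chance discrimination on that reference set. The minimal sketch below computes AUROC directly from that pairwise definition (ties count as half, as in the Mann–Whitney formulation); the scores can equally be ML-predicted probabilities or disproportionality statistics, which is what allows head-to-head comparison on the same reference set. The function name and scores are hypothetical.

```python
from typing import Sequence


def auroc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Probability that a random positive control outranks a random negative control.

    Ties count as half a win. O(n_pos * n_neg), adequate for reference sets
    of a few thousand PECs.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative control")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical scores (e.g., model probabilities or ROR values) for reference PECs.
print(auroc([0.9, 0.8], [0.1, 0.85]))  # → 0.75
```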

Finally, six of eight studies performed a feature analysis using methods such as Shapley Additive Explanations or Lasso regression [14, 16, 18, 20, 23, 25]. The most common top features were event-level attributes such as seriousness and reporting by a healthcare professional; PEC-level attributes such as dechallenge, rechallenge, listedness, and report frequency or proportion; and demographic factors such as age and sex. Some studies, such as those by Jeong et al. [23] and Hoang et al. [20], used specialized methodologies with unique laboratory or statistical features, respectively, which makes extrapolation to general-purpose signal detection difficult.
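Lasso-based feature analysis rests on one mechanism: an L1 penalty shrinks the coefficients of uninformative features to exactly zero, so the features that survive can be read as the most informative. The minimal sketch below shows this with cyclic coordinate descent on a least-squares objective. It assumes centered (ideally standardized) features and a centered outcome with no intercept; a study classifying PECs would typically pair the L1 penalty with a logistic loss instead. The function names and data are hypothetical, and this is not the feature-analysis code of any included study.

```python
from typing import List, Sequence


def soft_threshold(r: float, alpha: float) -> float:
    """Shrink r toward zero by alpha; values within [-alpha, alpha] become exactly 0."""
    if r > alpha:
        return r - alpha
    if r < -alpha:
        return r + alpha
    return 0.0


def lasso_cd(X: Sequence[Sequence[float]], y: Sequence[float], alpha: float,
             max_iter: int = 1000, tol: float = 1e-10) -> List[float]:
    """Lasso by cyclic coordinate descent.

    Minimizes (1 / 2n) * ||y - Xw||^2 + alpha * ||w||_1 without an intercept,
    so X columns and y are assumed to be centered.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(max_iter):
        max_change = 0.0
        for j in range(p):
            # Correlation of feature j with the residual that excludes feature j.
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            new_wj = soft_threshold(rho, alpha) / z if z > 0 else 0.0
            max_change = max(max_change, abs(new_wj - w[j]))
            w[j] = new_wj
        if max_change < tol:
            break  # coefficients have stopped changing
    return w


# Hypothetical centered features: column 0 drives the outcome, column 1 is noise.
X = [[1, 1], [-1, 1], [2, -1], [-2, -1], [0.5, 0], [-0.5, 0]]
y = [2 * row[0] for row in X]
print(lasso_cd(X, y, alpha=0.1))  # second coefficient is shrunk to exactly 0.0
```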

Summary of Studies Leveraging AI in Signal Validation

In 2024, Dong et al. [17] performed a proof-of-concept study to explore the application of NLP in optimizing signal management via data derived from the Vaccine Adverse Event Reporting System (VAERS), specifically for COVID-19 vaccines. Validation of signals of disproportionality within the VAERS platform is difficult given the “extreme” rise in the number of AEs after immunization following the approval and marketing of COVID-19 vaccines due to their wide adoption and use. Concomitant with this sharp rise in AEs is a similar rise in signals of disproportionate reporting (SDRs), making the task of manually reviewing these signals to determine whether they represent valid safety signals a difficult and time-consuming task for safety scientists. Furthermore, a fraction of these signals represents non-valid safety signals where the AE in question is already a listed ADR for that product. In many cases (depending on the safety organization in question), exclusion of these listed AEs is performed manually.

To address this, the authors developed an innovative NLP-based approach that automatically excluded disproportionality signals related to listed AEs following immunization. They first identified a group of COVID-19 vaccines, exported VAERS safety data for these vaccines over a 3-year period, and separately compiled a list of known ADRs associated with these products. The ADRs were entered into FACTA+ [26], a tool that identifies and visualizes indirect associations between medical concepts, to generate a plain-language list of signs and symptoms associated with each ADR. The authors then undertook a substantial effort to map these plain-language signs and symptoms to Medical Dictionary for Regulatory Activities PTs or lower-level terms that could be compared against the PT of each VAERS SDR: if the PT of an SDR matched one of the PTs derived from the FACTA+ signs and symptoms, the SDR could be dismissed. Mapping was attempted first by exact string matching, which succeeded for just over half of the terms. For the remaining terms, nine different NLP string similarity techniques were applied and compared with the GPT-3.5 large language model. These techniques included Damerau–Levenshtein distance, longest common subsequence, q-gram, cosine, Jaccard distance, Jaro–Winkler distance, and optimal string alignment distance, among others. They reached a peak accuracy of 65%, well below GPT-3.5’s 78%, suggesting that the large language model captured conceptual similarity between terms better, even without specialized domain knowledge. Ultimately, the approach dismissed 17% of COVID-19 vaccine SDRs in the authors’ dataset (130 of 757). Overall, this article presents a novel approach to the early steps of pharmacovigilance signal management that combines manual techniques with NLP, and demonstrates the potential of NLP to improve the efficiency and accuracy of signal management in the context of vaccine AE reporting.
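The two-stage mapping described above (exact match first, string similarity as a fallback) can be sketched as follows. This minimal version uses only one of the listed measures, Jaccard similarity over character bigrams (q-grams with q = 2); the 0.5 threshold, function names, and vocabulary are hypothetical, and it does not reproduce the authors’ pipeline or FACTA+. The unmapped “fever” example shows the kind of synonym (“Pyrexia”) that purely string-based measures cannot resolve, which is where a model with conceptual knowledge may help.

```python
from typing import Iterable, List, Optional, Set


def qgrams(text: str, q: int = 2) -> Set[str]:
    """Set of overlapping character q-grams of a lower-cased string."""
    s = text.lower()
    return {s[i:i + q] for i in range(len(s) - q + 1)}


def jaccard(a: str, b: str, q: int = 2) -> float:
    """Jaccard similarity of the q-gram sets of two strings (1 - Jaccard distance)."""
    ga, gb = qgrams(a, q), qgrams(b, q)
    if not ga and not gb:
        return 1.0
    return len(ga & gb) / len(ga | gb)


def map_to_pt(term: str, pts: Iterable[str], threshold: float = 0.5) -> Optional[str]:
    """Map a plain-language term to a PT: exact match first, then best fuzzy match."""
    candidates = list(pts)
    for pt in candidates:
        if pt.lower() == term.lower():
            return pt  # exact (case-insensitive) string match
    best = max(candidates, key=lambda pt: jaccard(term, pt), default=None)
    if best is not None and jaccard(term, best) >= threshold:
        return best
    return None  # unresolved: left for manual (or model-assisted) mapping


def dismissible(sdr_pts: Iterable[str], listed_pts: Set[str]) -> List[str]:
    """PTs of SDRs that match a PT derived from a listed ADR and could be dismissed."""
    return [pt for pt in sdr_pts if pt in listed_pts]


# Hypothetical PT vocabulary and plain-language symptom terms.
vocabulary = ["Headache", "Pyrexia", "Myocarditis"]
mapped = {t: map_to_pt(t, vocabulary) for t in ["head ache", "fever"]}
listed = {pt for pt in mapped.values() if pt is not None}
print(mapped)                                            # "fever" stays unmapped
print(dismissible(["Headache", "Myocarditis"], listed))  # → ['Headache']
```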

Our literature search identified one other study of AI for signal validation [21]; in contrast to the study above, it used quantitative ML rather than NLP, with the goal of automatically assigning validation categories to SDRs. The authors first accessed internal SDRs from their safety database, then trained various ML models on SDR/ICSR features. ICSR-derived features included case attributes (e.g., report type, medical confirmation), patient attributes (i.e., demographics), product attributes (e.g., product name, indication), event attributes (e.g., PT, seriousness, outcome), and PEC attributes (e.g., time to onset, dechallenge, rechallenge, listedness, reported/determined causality). Aggregate database features included SDR attributes (e.g., designated medical event, listedness, trending over time) and case counts (i.e., number of cases, number of serious/fatal cases, case frequency). The SDR validation categories that served as potential outputs for the algorithms were “signal” or “no signal”; where “no signal” was the outcome, the following justifications were permitted: (1) listed/expected ADR; (2) no ADR; (3) recently investigated; (4) medical judgment; or (5) confounded by indication. “No signal—medical judgment” was reported as the most common historical SDR outcome in the dataset (~63%).

Four types of models were compared: random forest, linear support vector classifier, logistic regression, and the extreme gradient boosting implementation of gradient boosted trees (XGBoost). The XGBoost model performed best and was chosen for further training and testing on both new and recurring SDRs. The model achieved a macro-average F1 score of 0.53, with per-outcome F1 scores ranging from zero (for outcomes such as “signal”, for which very little training data were available) to 0.89. Certain outcomes benefited greatly from prior SDRs: for example, if an SDR was adjudicated as “no signal—confounded by indication”, that human adjudication was used to retrain the model so that it could reach the same conclusion if the same SDR reappeared. This human-in-the-loop feedback paradigm was also beneficial for several other outcomes, including “no signal—listedness” (i.e., once the model “knows” that the SDR is listed, it can label it as such in the future). Other high-impact features included company-determined causality and listedness. The approach was applied across six drugs (including one biologic) representing multiple classes and therapeutic areas, although the identities of the drugs were not disclosed.
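The gap between a macro-average F1 of 0.53 and a best per-outcome F1 of 0.89 follows from how macro averaging works: each outcome’s F1 is computed one-vs-rest and the results are averaged without weighting by class size, so a rare outcome such as “signal” that is never predicted correctly contributes a zero. The minimal sketch below illustrates this with five hypothetical adjudications and predictions; the labels and function names are illustrative only.

```python
from typing import Dict, Sequence


def per_class_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """One-vs-rest F1 per outcome label; a label never predicted correctly scores 0."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    scores: Dict[str, float] = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # 2TP / (2TP + FP + FN) equals the harmonic mean of precision and recall.
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1: rare outcomes weigh as much as common ones."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)


# Hypothetical adjudications vs. model predictions for five SDRs.
truth = ["medical judgment", "medical judgment", "listed", "listed", "signal"]
predicted = ["medical judgment", "medical judgment", "listed", "medical judgment", "medical judgment"]
print(per_class_f1(truth, predicted))  # "signal" scores 0.0
print(macro_f1(truth, predicted))
```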

Last, the authors tested the XGBoost model prospectively for 3 months alongside traditional signal detection and validation procedures to gather human feedback. Accuracy relative to human validation was stable over this period, ranging from 83 to 86%. New SDRs were classified less accurately than recurring SDRs (~72 vs ~90%, respectively). The platform was generally accepted by the safety scientists, even more so when the features contributing most to each outcome were explained (i.e., when the model was presented transparently). A limitation of this study was that only a single-digit number of SDRs with the outcome “signal” were included in the training set; unsurprisingly, the one SDR the model flagged as “signal” was a misclassification.

Summary of Studies Leveraging AI in Signal Evaluation

In 2010, an abstract presented at the International Society of Pharmacovigilance meeting in Ghana described the use of NLP to identify confounding factors in AE reports of rhabdomyolysis sourced from 4 years of EHR data, totaling 150 records [19]. Rhabdomyolysis may be caused by myopathy induced by certain concomitant medications or by certain concomitant disease states [27]. The performance of an NLP tool called MedLEE [28, 29] was scored against manual human review of the same records. Although this conference abstract did not describe the methodology in detail, it reported a sensitivity of 96.7% and a specificity of 81.4%. Of a total of 687 patients captured in these reports who had an elevated creatine kinase, 522 of these elevations were identified by the approach as clearly attributable to a confounding medical condition or disease. Further, confounding drugs known to elevate creatine kinase were identified, including valproic acid, tenofovir, statins, and haloperidol. The authors claimed a 20-fold return on the time invested in developing the NLP model (i.e., 20 hours saved for every hour of development). The authors described this technique as advantageous in the context of “signal processing”. Terminology differs within the industry, but the approach has clear implications for signal evaluation, the stage of signal management in which many cases are assessed for causality, in part by examining confounding factors and filtering cases accordingly. Because signal evaluation is currently a highly manual process, adopting NLP assistance could yield major time savings.
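Scoring a tool “against manual human review” means treating the reviewer’s judgment as the reference standard and tabulating agreement. The minimal sketch below shows how sensitivity and specificity are derived from such a comparison; the record counts are invented for illustration and do not reconstruct the data in [19], whose methodology was not described in detail.

```python
# Hypothetical illustration: scoring an NLP tool's confounder flags against
# manual human review, treated as the reference standard. Counts are invented.

def sensitivity_specificity(reference, predicted):
    """reference/predicted: parallel lists of booleans (True = confounder present)."""
    tp = sum(r and p for r, p in zip(reference, predicted))
    fn = sum(r and not p for r, p in zip(reference, predicted))
    tn = sum(not r and not p for r, p in zip(reference, predicted))
    fp = sum(not r and p for r, p in zip(reference, predicted))
    sensitivity = tp / (tp + fn)  # share of reviewer-confirmed confounders the tool found
    specificity = tn / (tn + fp)  # share of confounder-free records the tool left unflagged
    return sensitivity, specificity


# 40 records with a confounder (tool misses 4) and 80 without (tool wrongly flags 16).
reference = [True] * 40 + [False] * 80
predicted = [True] * 36 + [False] * 4 + [False] * 64 + [True] * 16

sens, spec = sensitivity_specificity(reference, predicted)
print(f"sensitivity={sens:.1%} specificity={spec:.1%}")  # sensitivity=90.0% specificity=80.0%
```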

Discussion

While AI is not a new concept (it was first conceptualized nearly 70 years ago), its popularity and use in multiple industries, including the pharmaceutical industry, have exploded in recent decades with the increasing availability of digital training data. Artificial intelligence has successfully been deployed to enhance drug discovery and development [30, 31], manufacturing [32], clinical trial design [33], and even marketing [34]. In addition, AI techniques have been used to increase efficiency and consistency in pharmacovigilance, a field built on repetitive tasks and large datasets and therefore a natural candidate for AI automation. Indeed, AI has reduced case processing times, improved information capture (e.g., from spontaneous reports and social media), automated the coding of products/AEs to standard dictionaries, enhanced identification of drug–drug interactions [35], automated causality assessment [36], and more [11].

In this review of AI applications in signal management, we distinguished, with respect to signal detection, between the ‘detection’ of ADRs in written language and the formal process of signal detection, in which qualitative or quantitative methods are applied to identify safety signals in data already imported into a safety database to which statistical alerts have not yet been applied. This distinction matters because many studies use NLP or ML techniques to detect ADRs in unstructured data (e.g., medical charts, EHR databases, social media) [9] without clearly distinguishing this case capture/entry process from the formal signal detection process described above. Historically, signal detection began with qualitative human review of ICSRs, a technique that still allows safety experts to apply clinical judgment to complex safety topics in low case volume scenarios [37]. However, as early as the era of the thalidomide tragedy in the 1960s [38], quantitative techniques to detect SDRs were being conceptualized [39] using a 2 × 2 contingency table format. These frequentist disproportionality analyses (e.g., ROR, PRR, relative risk reduction, chi-square, Yule’s Q, Poisson probability) [40] became increasingly popular with the advent of digital safety databases. Around the turn of the century, the desire to combine quantitative methodologies with expert knowledge and past data in a feed-forward manner led to the adoption of Bayesian statistical methods such as the Bayesian Confidence Propagation Neural Network method [41], the first routinely used method to shift the status quo toward a quantitative-first, qualitative-second review paradigm.
Around this time, association rule analysis (a technique closely related to the Bayesian Confidence Propagation Neural Network) [42] and other techniques, such as shrinkage (Lasso) regression, an early ML technique that could similarly identify patterns in large datasets [42, 43], were being developed and considered for pharmacovigilance signal detection [44]. The Bayesian Confidence Propagation Neural Network, association rule mining, and text mining paradigms, among others, would ordinarily fall within the scope of this review as ML technologies. However, we considered them out of scope because they have already been discussed extensively in the literature [45, 46], and instead focused on emerging ‘advanced’ ML technologies.
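The 2 × 2 contingency-table approach behind the frequentist measures described above can be made concrete in a few lines. The sketch below computes the ROR (with an approximate 95% confidence interval), the PRR, and, for comparison with the Bayesian approach, a simplified shrinkage form of the information component, IC = log2((observed + 0.5)/(expected + 0.5)). That IC form is an assumption on our part: it is a simplification used in later BCPNN work, not the full Bayesian formulation of [41]. The counts are hypothetical, and no signalling thresholds are implied.

```python
import math

# Minimal sketch of classic 2 x 2 disproportionality measures.
# a: reports with the drug and the event      b: the drug, other events
# c: other drugs, the event                   d: other drugs, other events

def ror(a, b, c, d):
    """Reporting odds ratio with an approximate 95% CI (log-normal)."""
    value = (a * d) / (b * c)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return value, math.exp(math.log(value) - 1.96 * se), math.exp(math.log(value) + 1.96 * se)

def prr(a, b, c, d):
    """Proportional reporting ratio: event share for the drug vs all other drugs."""
    return (a / (a + b)) / (c / (c + d))

def information_component(a, b, c, d):
    """Simplified shrinkage IC: log2((observed + 0.5) / (expected + 0.5))."""
    n = a + b + c + d
    expected = (a + b) * (a + c) / n  # count expected if drug and event were independent
    return math.log2((a + 0.5) / (expected + 0.5))

# Hypothetical counts, not taken from any study.
a, b, c, d = 20, 980, 200, 98_800
print(ror(a, b, c, d))
print(prr(a, b, c, d))                    # about 9.9
print(information_component(a, b, c, d))  # about 2.92
```

The +0.5 terms shrink the IC toward zero when counts are small, which is one practical motivation for the Bayesian approaches over raw ratios.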

Indeed, many advanced ML algorithms have recently gained popularity across industries, including pharmacovigilance. Decision tree-based algorithms, including random forest and GBM/gradient-boosted trees, are supervised ML algorithms built on a cascading set of rules. These models can handle complex datasets without assuming any particular relationship between variables, but they require a large training corpus. Six of our included articles applied decision tree ML methods [14, 16, 18, 20, 23, 25]. A common tree-based method was random forest, an “ensemble” method based on bootstrap aggregating (“bagging”) that aggregates results from multiple decision trees, each of which individually “votes” for an outcome [47]. In the study by Jeong et al. [23], random forest had the highest AUROC, outperforming logistic regression, support vector machine, and a neural network. In the other three studies using random forest, it was outperformed by another tree-based method, GBM [14, 16, 20]. Gradient boosting machine, like random forest, is an ensemble method, but in contrast to random forest it builds decision trees sequentially, with each new tree fitted to the remaining errors of the trees built so far, typically for a set number of iterations or until performance stops improving; the final model is the weighted sum of all trees [48]. Gradient boosting machine, or a modification of it (e.g., eXtreme gradient boosting used by Gosselt et al. [18] and Zhu et al. [25] or gradient-boosted trees used by Dauner et al. [16]), was used by five of our included studies [14, 16, 18, 20, 25]. This type of ML model was a consistently high performer across these studies and often outperformed other models, including random forest.
Other models included several non-ensemble methods (e.g., decision tree) and several non-ensemble, non-tree-based methods (e.g., logistic regression and support vector machine), although these rarely outperformed their ensemble counterparts. That these advanced ML algorithms generally outperformed traditional signal detection methodologies highlights the considerable potential of this technology to improve safety science; however, these studies generally lack the standardization, transparency, and information on the timeliness of signal detection needed to conclude firmly that ML algorithms are truly superior. Additionally, these studies generally do not discuss the logistical challenges of operationalizing ML algorithms across a diverse portfolio of products, a significant barrier to full adoption.
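The difference between bagging and boosting can be seen in a toy implementation. The sketch below uses depth-1 trees (“stumps”) rather than full trees, bags them without the random feature subsetting that a full random forest adds, and uses gradient boosting with a squared-error loss, so each new stump is fitted to the residuals of the ensemble built so far. The two features and all data points are hypothetical and are not drawn from any included study.

```python
import random

# Toy contrast between bagging and gradient boosting using depth-1 trees
# ("stumps"). Features and labels are hypothetical PEC examples:
# (disproportionality score, proportion of serious cases) -> 1 = signal.

def fit_stump(X, y):
    """Depth-1 regression tree minimizing squared error.
    Returns (feature, threshold, left_value, right_value)."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            left = [t for row, t in zip(X, y) if row[j] <= thr]
            right = [t for row, t in zip(X, y) if row[j] > thr]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
            if best is None or sse < best[0]:
                best = (sse, j, thr, lm, rm)
    if best is None:  # no valid split (all rows identical): predict the mean
        m = sum(y) / len(y)
        return 0, float("inf"), m, m
    return best[1:]

def predict_stump(stump, row):
    j, thr, left_value, right_value = stump
    return left_value if row[j] <= thr else right_value

def bagging_fit(X, y, n_estimators=25, seed=0):
    """Bagging: each stump is trained independently on a bootstrap resample."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_estimators):
        idx = [rng.randrange(len(X)) for _ in X]  # sample rows with replacement
        stumps.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return stumps

def bagging_predict(stumps, row):
    """Each stump votes; the majority class wins."""
    votes = sum(predict_stump(s, row) >= 0.5 for s in stumps)
    return int(2 * votes > len(stumps))

def boosting_fit(X, y, n_rounds=25, learning_rate=0.3):
    """Gradient boosting with squared-error loss: each stump is fitted to the
    residuals (negative gradient) of the ensemble built so far."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [t - p for t, p in zip(y, pred)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        pred = [p + learning_rate * predict_stump(stump, row) for p, row in zip(pred, X)]
    return base, learning_rate, stumps

def boosting_predict(model, row):
    """Final score is the base value plus the weighted sum of all stumps."""
    base, learning_rate, stumps = model
    score = base + learning_rate * sum(predict_stump(s, row) for s in stumps)
    return int(score >= 0.5)

X = [(0.5, 0.1), (0.8, 0.2), (1.0, 0.1), (1.2, 0.3),
     (2.5, 0.6), (3.0, 0.7), (3.5, 0.5), (4.0, 0.9)]
y = [0, 0, 0, 0, 1, 1, 1, 1]

bagged = bagging_fit(X, y)
boosted = boosting_fit(X, y)
for query in [(0.4, 0.1), (3.8, 0.8)]:
    print(query, bagging_predict(bagged, query), boosting_predict(boosted, query))
```

The design difference is visible in the fitting loops: bagged stumps never see each other’s errors, whereas each boosted stump is trained on what the previous stumps got wrong.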

When training their ML models, the authors of our included studies used a variety of internal or external positive and negative controls to “teach” the algorithms what does and does not constitute a valid signal. Most studies developed their own internal standards; however, most of these studies were developing ML algorithms for specific AEs or specific products, so an internal standard was likely the best choice. In these instances, listed ADRs typically served as positive controls and unlisted AEs as negative controls [14, 16, 18, 22]. Two of the three studies developing models for any AE and any (or almost any) product [20, 24] leveraged externally available “gold standards”, including “Wahab13” [41], “Harpaz14” [49], and the Exploring and Understanding Adverse Drug Reactions and Observational Medical Outcomes Partnership standards. However, even these established standards have documented limitations. For example, a 2016 study by Hauben et al. [50] estimated that 17% of negative controls in the Observational Medical Outcomes Partnership standard may in fact have been misclassified because of methodological oversights. In the future, authors should choose between internal and external standards carefully and, if choosing external standards, be wary of their quality and potential effects on their models.
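To make the consequence of mislabeled negative controls concrete, the worked arithmetic below assumes that mislabeled “negatives” are in fact true ADRs and that a detector flags them at its ordinary sensitivity. Under that assumption, the specificity measured against the reference set is a mixture of the detector’s true specificity and its miss rate. The 17% figure is the estimate cited above from [50]; the detector itself is hypothetical.

```python
# Worked arithmetic under a stated assumption: some "negative controls" are in
# fact true ADRs, and the detector treats them like any other true ADR.

def apparent_specificity(true_specificity, sensitivity, mislabel_rate):
    """Specificity measured against a reference set whose negatives are partly mislabeled.

    Of the labeled negatives, (1 - mislabel_rate) are true negatives, left unflagged
    with probability true_specificity; the remaining mislabel_rate are true ADRs,
    left unflagged with probability (1 - sensitivity).
    """
    return (1 - mislabel_rate) * true_specificity + mislabel_rate * (1 - sensitivity)

# A hypothetically perfect detector scored against negatives of which 17% are mislabeled
print(round(apparent_specificity(1.0, 1.0, 0.17), 2))  # 0.83
```

In this idealized case, even a perfect detector would appear to have a specificity of only 83%, and its apparent false positives would be genuine ADRs.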

One benefit of supervised ML algorithms (vs unsupervised clustering methods) is the opportunity to analyze which input features were given the highest weight during training (i.e., feature analysis). Users of ML algorithms would likely hypothesize that case-level or PEC-level features known by experts to drive causality assessment (e.g., disproportionate reporting frequency, reporting by a healthcare provider, positive dechallenge, positive rechallenge) would emerge as top features. Indeed, several of our included studies used feature analysis tools such as Shapley Additive Explanations feature importance [51] or Lasso regression [52] to identify some of these expected features, as described above.
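Shapley-based feature importance attributes a single prediction to its input features by averaging each feature’s marginal contribution over every possible order in which features could be “revealed”. The sketch below computes exact Shapley values by enumerating coalitions, with absent features set to a single baseline value. This is a simplification: the SHAP tooling described in [51] supports background distributions and faster approximations, and exact enumeration scales as 2^n. The scoring model and its three features are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced by their baseline value, so each
    value answers: how much does revealing this feature move the score, averaged
    over every order in which features could be revealed? Cost grows as 2**n.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical scoring model over [disproportionality, HCP-reported, positive dechallenge]
def model(z):
    return 0.5 * z[0] + 1.0 * z[1] + 2.0 * z[1] * z[2]

x = [3.0, 1, 1]
baseline = [1.0, 0, 0]
phi = shapley_values(model, x, baseline)
print([round(v, 6) for v in phi])  # [1.0, 2.0, 1.0]: the interaction is split between features 1 and 2
print(round(sum(phi), 6), model(x) - model(baseline))  # 4.0 4.0 (efficiency property)
```

The attributions always sum to the difference between the prediction and the baseline prediction, which is what makes them convenient for explaining individual model outputs to reviewers.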

In contrast to the eight studies we found applying supervised ML to signal detection, often in comparison with frequentist or Bayesian disproportionality analyses, only one study used a clustering (i.e., unsupervised ML) approach, in this case based on k-means [15]. This is unsurprising, given that the ultimate outcome of signal detection is a binary classification: signal or no signal. Nonetheless, clustering is a creative solution to the complex task of signal detection. Large industry safety databases contain many PECs, even for a single product. When these PECs are clustered, some clusters will logically contain PECs constituting a safety signal, and others will not. However, with this approach, safety scientists must spend time “back-engineering” each cluster to identify why it was formed and to determine whether a signal is present, a clear limitation of the approach.
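The sketch below shows k-means (Lloyd’s algorithm) applied to PEC feature vectors. The two features and all data points are hypothetical, and the deterministic farthest-point seeding is our simplification; k-means++ is the more common randomized choice, and [15] did not necessarily use either. Note that the output is only cluster memberships and centroids: deciding whether a cluster represents a signal still requires the manual “back-engineering” described above.

```python
# Minimal k-means (Lloyd's algorithm) over hypothetical PEC feature vectors,
# e.g., (disproportionality score, proportion of serious cases).

def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def init_farthest(points, k):
    """Deterministic farthest-point seeding (k-means++ is the usual randomized alternative)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids

def kmeans(points, k, max_iter=100):
    centroids = init_farthest(points, k)
    assignment = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_assignment = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_assignment == assignment:
            break  # converged: no point changed cluster
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignment, centroids

pecs = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.25), (5.0, 5.1), (5.2, 4.9), (4.8, 5.0)]
labels, centers = kmeans(pecs, k=2)
print(labels)   # [0, 0, 0, 1, 1, 1]
print(centers)  # inspecting centroids is part of the "back-engineering" step
```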

While many studies focused on signal detection, relatively few focused on the later stages of signal management: signal validation/confirmation and signal evaluation/assessment. These stages are critical in pharmacovigilance but generally depend less on statistical analysis and more on qualitative expert analysis (e.g., considering the biological plausibility of the topic, the presence of confounding factors, individual causality assessments), which is consistent with the scarcity of ML studies in this area. However, other types of AI, specifically NLP, have clear uses in these stages, as reflected by the studies of Dong et al. [17] and Haerian et al. [19] reviewed above. Large language models such as GPT, BART, Llama, and others appear to capture basic clinical concepts, can identify synonymous terms, and can match terms to the Medical Dictionary for Regulatory Activities or the Anatomical Therapeutic Chemical classification. Further, there is an industry-wide desire for the development of large language models with domain-specific knowledge [53], some of which are already being developed and commercialized.
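For contrast with the large language model capabilities described above, the sketch below shows a purely lexical baseline for matching verbatim terms to a dictionary: an exact synonym lookup followed by fuzzy string matching with Python’s standard `difflib`. It is not an LLM, and the mini-dictionary and synonym table are hypothetical illustrations, not MedDRA content. Its final example shows the limitation: a semantically equivalent phrase with no lexical overlap is not matched, which is the gap language models aim to close.

```python
import difflib

# Hypothetical mini-dictionary for illustration only; not MedDRA content.
PREFERRED_TERMS = ["Rhabdomyolysis", "Myalgia", "Headache", "Hepatotoxicity"]
SYNONYMS = {"muscle pain": "Myalgia", "head pain": "Headache"}

def normalize_term(verbatim, cutoff=0.8):
    """Map a verbatim term to a preferred term: exact synonym lookup first,
    then fuzzy string matching to tolerate misspellings. Returns None if no match."""
    text = verbatim.strip().lower()
    if text in SYNONYMS:
        return SYNONYMS[text]
    by_lower = {term.lower(): term for term in PREFERRED_TERMS}
    match = difflib.get_close_matches(text, list(by_lower), n=1, cutoff=cutoff)
    return by_lower[match[0]] if match else None

for verbatim in ["Rhabdomyolisis", "muscle pain", "sore muscles"]:
    print(verbatim, "->", normalize_term(verbatim))
# "sore muscles" returns None: no synonym entry and too little lexical overlap
```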

The current review must be considered in the context of its inherent limitations. First, we employed a relatively narrow search and screening strategy, reducing 635 unique results to only 12 included articles. There are many applications of AI in pharmacovigilance that are peripheral to signal management or were developed for a specific use case. Although some of these studies may theoretically be applicable to signal management, we did not consider them in scope (many of these types of articles are reviewed elsewhere). Furthermore, our search strategy relied on articles including specific terms or phrases related to signal management and AI/ML/NLP in their keywords, titles, or abstracts. While these terms are common, some studies describing uses of AI in signal management with alternate phraseology may not have been captured. Because this is an emerging field, the number of publications is also growing, and some became available even during the peer-review process for this article. Six such publications pertained to signal detection via advanced ML algorithms such as deep learning, reinforcement learning, and others, although they do not alter the main conclusions of this review [54–59]. Next, we considered device surveillance out of scope, given that there is little standardization across companies’ safety organizations as to how device surveillance is performed (i.e., whether or not it falls under the umbrella of the drug safety function). Our search strategy did identify two device surveillance studies leveraging ML for surveillance of arthroplasty devices and dual-chamber implantable cardioversion devices [60, 61]; the same methodologies, strengths, and limitations apply to them. Last, inconsistent use of performance metrics across studies precluded a robust quantitative comparison of methodologies; a qualitative approach was adopted instead.

Looking forward, the development and use of AI tools in signal management will continue to face technical and regulatory challenges. For instance, in generative AI, synthesis of non-factual information (commonly termed ‘hallucination’), mis-prompting, privacy, and bias remain key challenges [62]. Overcoming these challenges could unlock AI-assisted drafting of ad hoc and periodic safety reports to regulators, a service that some vendors are beginning to supply. Similarly, in discriminative AI systems, bias in training data may be perpetuated by ML algorithms and must be investigated proactively; several technology industry leaders (e.g., IBM, Microsoft) have pledged to do so [63]. Despite the rapid evolution of the technology, concrete regulatory frameworks have not yet been established. However, some publications on current thinking reveal various regulators’ ethical positions on AI. For instance, in 2021 the World Health Organization established ethical principles for AI use in healthcare, including how transparency and explainability (among other principles) must be carefully balanced with performance and innovation [64, 65]. Similarly, the European Medicines Agency recently released a draft reflection paper on the use of AI across the medicinal product lifecycle, which briefly addresses the responsibility of marketing authorization holders to “validate, monitor and document model performance and include AI/ML operations in the pharmacovigilance system” as related to signal detection and classification/severity scoring of AEs [66]. By surmounting these challenges and navigating this dynamic regulatory landscape in a risk-based manner [67], future applications of AI are poised to substantially improve efficiency across signal management, possibly even in the early detection of ‘black swan’ events, which are difficult to predict, have severe and widespread consequences, and are marked by retrospective bias [68].

Conclusions

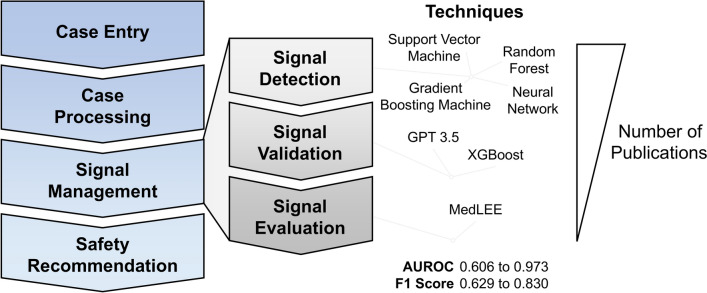

Signal management is the pharmacovigilance process pertaining to the detection, validation, and evaluation of safety signals. Recent advances in ML and NLP models have begun to influence industry practice in these areas, particularly in signal detection (Fig. 3). Ensemble methods such as random forest and GBM have great potential to supplement traditional frequentist and Bayesian statistical approaches to signal detection. Furthermore, NLP models have great potential to transform not only case entry/processing but also signal validation and evaluation. Adopting gold standard positive and negative control PECs (while considering their limitations) and reporting model choice, hyperparameter tuning, and performance scoring transparently will accelerate collective progress in these areas. Future studies are needed to determine the logistical and operational feasibility and true time-saving value of these techniques across therapeutic areas and drug classes. Like many industries, pharmacovigilance is poised to benefit from intelligent automation as AI develops rapidly in the coming years. Safety scientists not versed in complex statistical analysis are encouraged to trial these high-performing ensemble (bagging and boosting) ML models and NLP models, which may shorten turnaround times for fulfilling regulatory obligations. However, adopters must use these technologies transparently and ethically to uphold our duty to patient safety, particularly in the context of a dynamic regulatory landscape.

Fig. 3.

Summary figure. This review summarized publications pertaining to the use of artificial intelligence in signal management. Techniques used in our 12 included studies comprised supervised machine learning (support vector machine, random forest, gradient boosting machine, and neural network), natural language processing (GPT, MedLEE), and others. Artificial intelligence performance in signal detection varied, and overall, the publications were heavily skewed toward signal detection. AUROC area under the receiver operating characteristic curve

Supplementary Information

Below is the link to the electronic supplementary material.

Acknowledgements

We thank John Miller, Richard Walgren, Nick Merker, and David Woodward for their review of the manuscript.

Declarations

Funding

The authors did not receive any funds from any funding agency for the preparation of this article.

Conflict of interest

Jeffrey Warner, Anaclara Prada Jardim, and Claudia Albera are employees of Eli Lilly and Company. Anaclara Prada Jardim and Claudia Albera are shareholders in Eli Lilly and Company. Funding for this work was provided by Eli Lilly and Company.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Availability of data and material

Data are available from the corresponding author upon reasonable request.

Code availability

Not applicable.

Author contributions

Conceptualization: JW, AJ, CA; literature search and data analysis: JW; writing (original draft preparation): JW; writing (review and editing): JW, AJ, CA; supervision: CA. All authors have read and approved the final submitted manuscript and agree to be accountable for this work.

References

- 1. European Medicines Agency. EMA/95595/2022: guidelines on good pharmacovigilance practices (GVP). In: European Medicines Agency, editor. Introductory cover note, last updated with release of Addendum III of Module XVI on pregnancy prevention programmes for public consultation. Amsterdam: Heads of Medicines Agencies; 2022. p. 4.

- 2. World Health Organization. Regulation and prequalification: what is pharmacovigilance? Available from: https://www.who.int/teams/regulation-prequalification/regulation-and-safety/pharmacovigilance. Accessed 6 Sep 2024.

- 3. European Medicines Agency. EMA/827661/2011: guideline on good pharmacovigilance practices (GVP). In: European Medicines Agency, editor. Module IX: signal management (Rev 1). Amsterdam: Heads of Medicines Agencies; 2017.

- 4. Ball R, Dal Pan G. “Artificial intelligence” for pharmacovigilance: ready for prime time? Drug Saf. 2022;45(5):429–38. 10.1007/s40264-022-01157-4.

- 5. Schmider J, Kumar K, LaForest C, Swankoski B, Naim K, Caubel PM. Innovation in pharmacovigilance: use of artificial intelligence in adverse event case processing. Clin Pharmacol Ther. 2019;105(4):954–61. 10.1002/cpt.1255.

- 6. Kreimeyer K, Dang O, Spiker J, Munoz MA, Rosner G, Ball R, et al. Feature engineering and machine learning for causality assessment in pharmacovigilance: lessons learned from application to the FDA Adverse Event Reporting System. Comput Biol Med. 2021;135:104517. 10.1016/j.compbiomed.2021.104517.

- 7. Bhardwaj K, Alam R, Pandeya A, Sharma PK. Artificial intelligence in pharmacovigilance and COVID-19. Curr Drug Saf. 2023;18(1):5–14. 10.2174/1574886317666220405115548.

- 8. Mohsen A, Tripathi LP, Mizuguchi K. Deep learning prediction of adverse drug reactions in drug discovery using Open TG–GATEs and FAERS databases. Front Drug Discov. 2021. 10.3389/fddsv.2021.768792.

- 9. Salas M, Petracek J, Yalamanchili P, Aimer O, Kasthuril D, Dhingra S, et al. The use of artificial intelligence in pharmacovigilance: a systematic review of the literature. Pharm Med. 2022;36(5):295–306. 10.1007/s40290-022-00441-z.

- 10. Kassekert R, Grabowski N, Lorenz D, Schaffer C, Kempf D, Roy P, et al. Industry perspective on artificial intelligence/machine learning in pharmacovigilance. Drug Saf. 2022;45(5):439–48. 10.1007/s40264-022-01164-5.

- 11. Murali K, Kaur S, Prakash A, Medhi B. Artificial intelligence in pharmacovigilance: practical utility. Indian J Pharmacol. 2019;51(6):373–6. 10.4103/ijp.IJP_814_19.

- 12. Painter JL, Kassekert R, Bate A. An industry perspective on the use of machine learning in drug and vaccine safety. Front Drug Saf Regul. 2023. 10.3389/fdsfr.2023.1110498.

- 13. Kompa B, Hakim JB, Palepu A, Kompa KG, Smith M, Bain PA, et al. Artificial intelligence based on machine learning in pharmacovigilance: a scoping review. Drug Saf. 2022;45(5):477–91. 10.1007/s40264-022-01176-1.

- 14. Bae JH, Baek YH, Lee JE, Song I, Lee JH, Shin JY. Machine learning for detection of safety signals from spontaneous reporting system data: example of nivolumab and docetaxel. Front Pharmacol. 2020;11:602365. 10.3389/fphar.2020.602365.

- 15. Bourdeau S, Mouysset S, Ruiz D, Alliot J, Montastruc F. Discussed poster abstracts PM1-015. Fundam Clin Pharmacol. 2023;37(S1):48–70. 10.1111/fcp.12906.

- 16. Dauner DG, Leal E, Adam TJ, Zhang R, Farley JF. Evaluation of four machine learning models for signal detection. Ther Adv Drug Saf. 2023;14:20420986231219470. 10.1177/20420986231219472.

- 17. Dong G, Bate A, Haguinet F, Westman G, Durlich L, Hviid A, et al. Optimizing signal management in a vaccine adverse event reporting system: a proof-of-concept with COVID-19 vaccines using signs, symptoms, and natural language processing. Drug Saf. 2024;47(2):173–82. 10.1007/s40264-023-01381-6.

- 18. Gosselt HR, Bazelmans EA, Lieber T, van Hunsel F, Harmark L. Development of a multivariate prediction model to identify individual case safety reports which require clinical review. Pharmacoepidemiol Drug Saf. 2022;31(12):1300–7. 10.1002/pds.5553.

- 19. Haerian K, Varn D, Chase H, Vaidya S, Friedman C. Electronic health record pharmacovigilance signal extraction: a semi-automated method for reduction of confounding applied to detection of rhabdomyolysis [abstract 93]. 10th Annual Meeting of the International Society of Pharmacovigilance; 7 November, 2010: p. 891–962; Accra.

- 20. Hoang T, Liu J, Roughead E, Pratt N, Li J. Supervised signal detection for adverse drug reactions in medication dispensing data. Comput Methods Progr Biomed. 2018;161:25–38. 10.1016/j.cmpb.2018.03.021.

- 21. Imran M, Bhatti A, King DM, Lerch M, Dietrich J, Doron G, et al. Supervised machine learning-based decision support for signal validation classification. Drug Saf. 2022;45(5):583–96. 10.1007/s40264-022-01159-2.

- 22. Jang MG, Cha S, Kim S, Lee S, Lee KE, Shin KH. Application of tree-based machine learning classification methods to detect signals of fluoroquinolones using the Korea Adverse Event Reporting System (KAERS) database. Expert Opin Drug Saf. 2023;22(7):629–36. 10.1080/14740338.2023.2181341.

- 23. Jeong E, Park N, Choi Y, Park RW, Yoon D. Machine learning model combining features from algorithms with different analytical methodologies to detect laboratory-event-related adverse drug reaction signals. PLoS ONE. 2018;13(11):e0207749. 10.1371/journal.pone.0207749.

- 24. Mower J, Bernstam E, Xu H, Myneni S, Subramanian D, Cohen T. Improving pharmacovigilance signal detection from clinical notes with locality sensitive neural concept embeddings. AMIA Jt Summits Transl Sci Proc. 2022;2022:349–58.

- 25. Zhu X, Hu J, Xiao T, Huang S, Shang D, Wen Y. Integrating machine learning with electronic health record data to facilitate detection of prolactin level and pharmacovigilance signals in olanzapine-treated patients. Front Endocrinol (Lausanne). 2022;13:1011492. 10.3389/fendo.2022.1011492.

- 26. Tsuruoka Y, Miwa M, Hamamoto K, Tsujii J, Ananiadou S. Discovering and visualizing indirect associations between biomedical concepts. Bioinformatics. 2011;27(13):i111–9. 10.1093/bioinformatics/btr214.

- 27. Torres PA, Helmstetter JA, Kaye AM, Kaye AD. Rhabdomyolysis: pathogenesis, diagnosis, and treatment. Ochsner J. 2015;15(1):58–69.

- 28. Friedman C, Alderson PO, Austin JH, Cimino JJ, Johnson SB. A general natural-language text processor for clinical radiology. J Am Med Inform Assoc. 1994;1(2):161–74. 10.1136/jamia.1994.95236146.

- 29. Friedman C, Johnson SB, Forman B, Starren J. Architectural requirements for a multipurpose natural language processor in the clinical environment. Proc Annu Symp Comput Appl Med Care. 1995:347–51.

- 30.Fleming N. How artificial intelligence is changing drug discovery. Nature. 2018;557(7707):S55–7. 10.1038/d41586-018-05267-x. [DOI] [PubMed] [Google Scholar]

- 31.Paul D, Sanap G, Shenoy S, Kalyane D, Kalia K, Tekade RK. Artificial intelligence in drug discovery and development. Drug Discov Today. 2021;26(1):80–93. 10.1016/j.drudis.2020.10.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Gams M, Horvat M, Ozek M, Lustrek M, Gradisek A. Integrating artificial and human intelligence into tablet production process. AAPS PharmSciTech. 2014;15(6):1447–53. 10.1208/s12249-014-0174-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Harrer S, Shah P, Antony B, Hu J. Artificial intelligence for clinical trial design. Trends Pharmacol Sci. 2019;40(8):577–91. 10.1016/j.tips.2019.05.005. [DOI] [PubMed] [Google Scholar]

- 34.Mahajan K, Kumar A. Business intelligent smart sales prediction analysis for pharmaceutical distribution and proposed generic model. Int J Comput Sci Inform Technol. 2017;8(3):407–12. [Google Scholar]

- 35.Datta A, Flynn NR, Barnette DA, Woeltje KF, Miller GP, Swamidass SJ. Machine learning liver-injuring drug interactions with non-steroidal anti-inflammatory drugs (NSAIDs) from a retrospective electronic health record (EHR) cohort. PLoS Comput Biol. 2021;17(7): e1009053. 10.1371/journal.pcbi.1009053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Cherkas Y, Ide J, van Stekelenborg J. Leveraging machine learning to facilitate individual case causality assessment of adverse drug reactions. Drug Saf. 2022;45(5):571–82. 10.1007/s40264-022-01163-6. [DOI] [PubMed] [Google Scholar]

- 37.Egberts TC. Signal detection: historical background. Drug Saf. 2007;30(7):607–9. 10.2165/00002018-200730070-00006. [DOI] [PubMed] [Google Scholar]

- 38.Kim JH, Scialli AR. Thalidomide: the tragedy of birth defects and the effective treatment of disease. Toxicol Sci. 2011;122(1):1–6. 10.1093/toxsci/kfr088. [DOI] [PubMed] [Google Scholar]

- 39.Finney DJ. The design and logic of a monitor of drug use. J Chronic Dis. 1965;18:77–98. 10.1016/0021-9681(65)90054-8. [DOI] [PubMed] [Google Scholar]

- 40.van Puijenbroek EP, Bate A, Leufkens HG, Lindquist M, Orre R, Egberts AC. A comparison of measures of disproportionality for signal detection in spontaneous reporting systems for adverse drug reactions. Pharmacoepidemiol Drug Saf. 2002;11(1):3–10. 10.1002/pds.668. [DOI] [PubMed] [Google Scholar]

- 41.Bate A, Lindquist M, Edwards IR, Olsson S, Orre R, Lansner A, et al. A Bayesian neural network method for adverse drug reaction signal generation. Eur J Clin Pharmacol. 1998;54(4):315–21. 10.1007/s002280050466. [DOI] [PubMed] [Google Scholar]

- 42.Agrawal R, Imieliński T, Swami A. Mining association rules between sets of items in large databases. Proceedings of the 1993 ACM SIGMOD International Conference on Management of data. Washington, DC: Association for Computing Machinery; 1993. p. 207–16.

- 43.Caster O, Norén GN, Madigan D, Bate A. Large-scale regression-based pattern discovery: the example of screening the WHO global drug safety database. Stat Anal Data Min. 2010;3(4):197–208. 10.1002/sam.10078. [Google Scholar]

- 44.Wang C, Guo XJ, Xu JF, Wu C, Sun YL, Ye XF, et al. Exploration of the association rules mining technique for the signal detection of adverse drug events in spontaneous reporting systems. PLoS ONE. 2012;7(7):e40561. 10.1371/journal.pone.0040561. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Bate A, Evans SJW. Quantitative signal detection using spontaneous ADR reporting. Pharmacoepidemiol Drug Saf. 2009;18(6):427–36. 10.1002/pds.1742. [DOI] [PubMed] [Google Scholar]

- 46.Hauben M, Noren GN. A decade of data mining and still counting. Drug Saf. 2010;33(7):527–34. 10.2165/11532430-000000000-00000. [DOI] [PubMed] [Google Scholar]

- 47.Breiman L. Bagging predictors. Mach Learn. 1996;24(2):123–40. 10.1023/A:1018054314350. [Google Scholar]

- 48.Friedman JH. Greedy function approximation: a gradient boosting machine. Ann Stat. 2001;29(5):1189–232. [Google Scholar]

- 49.Harpaz R, Odgers D, Gaskin G, DuMouchel W, Winnenburg R, Bodenreider O, et al. A time-indexed reference standard of adverse drug reactions. Sci Data. 2014;1: 140043. 10.1038/sdata.2014.43. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Hauben M, Aronson JK, Ferner RE. Evidence of misclassification of drug-event associations classified as gold standard ‘negative controls’ by the Observational Medical Outcomes Partnership (OMOP). Drug Saf. 2016;39(5):421–32. 10.1007/s40264-016-0392-2.

- 51. Lundberg S, Lee S-I. A unified approach to interpreting model predictions. In: Proceedings of the 31st Conference on Neural Information Processing Systems. Long Beach, CA, USA: Curran Associates Inc.; 2017. p. 4768–77.

- 52. Tibshirani R. Regression shrinkage and selection via the Lasso. J R Stat Soc Ser B Methodol. 1996;58(1):267–88. 10.1111/j.2517-6161.1996.tb02080.x.

- 53. Pal S, Bhattacharya M, Lee S-S, Chakraborty C. A domain-specific next-generation large language model (LLM) or ChatGPT is required for biomedical engineering and research. Ann Biomed Eng. 2024;52(3):451–4. 10.1007/s10439-023-03306-x.

- 54. Barbieri MA, Abate A, Balogh OM, Petervari M, Ferdinandy P, Agg B, et al. Network analysis and machine learning for signal detection and prioritization using electronic healthcare records and administrative databases: a proof of concept in drug-induced acute myocardial infarction. Drug Saf. 2025. 10.1007/s40264-025-01515-y.

- 55. Bastos E, Allen JK, Philip J. Statistical signal detection algorithm in safety data: a proprietary method compared to industry standard methods. Pharm Med. 2024;38(4):321–9. 10.1007/s40290-024-00530-1.

- 56. Chung CK, Lin WY. ADR-DQPU: a novel ADR signal detection using deep reinforcement and positive-unlabeled learning. IEEE J Biomed Health Inform. 2025;29(2):831–9. 10.1109/JBHI.2024.3492005.

- 57. De Abreu FR, Zhong S, Moureaud C, Le MT, Rothstein A, Li X, et al. A pilot, predictive surveillance model in pharmacovigilance using machine learning approaches. Adv Ther. 2024;41(6):2435–45. 10.1007/s12325-024-02870-5.

- 58. Dijkstra L, Schink T, Linder R, Schwaninger M, Pigeot I, Wright MN, Foraita R. A discovery and verification approach to pharmacovigilance using electronic healthcare data. Front Pharmacol. 2024;15:1426323. 10.3389/fphar.2024.1426323.

- 59. Dimitsaki S, Natsiavas P, Jaulent MC. Causal deep learning for the detection of adverse drug reactions: drug-induced acute kidney injury as a case study. Stud Health Technol Inform. 2024;316:803–7. 10.3233/SHTI240533.

- 60. Cafri G, Graves SE, Sedrakyan A, Fan J, Calhoun P, de Steiger RN, et al. Postmarket surveillance of arthroplasty device components using machine learning methods. Pharmacoepidemiol Drug Saf. 2019;28(11):1440–7. 10.1002/pds.4882.

- 61. Ross JS, Bates J, Parzynski CS, Akar JG, Curtis JP, Desai NR, et al. Can machine learning complement traditional medical device surveillance? A case study of dual-chamber implantable cardioverter-defibrillators. Med Devices (Auckl). 2017;10:165–88. 10.2147/MDER.S138158.

- 62. Templin T, Perez MW, Sylvia S, Leek J, Sinnott-Armstrong N. Addressing 6 challenges in generative AI for digital health: a scoping review. PLOS Digit Health. 2024;3(5):e0000503. 10.1371/journal.pdig.0000503.

- 63. Parikh RB, Teeple S, Navathe AS. Addressing bias in artificial intelligence in health care. JAMA. 2019;322(24):2377–8. 10.1001/jama.2019.18058.

- 64. Pinheiro LC, Kurz X. Artificial intelligence in pharmacovigilance: a regulatory perspective on explainability. Pharmacoepidemiol Drug Saf. 2022;31(12):1308–10. 10.1002/pds.5524.

- 65. World Health Organization. Ethics and governance of artificial intelligence for health: WHO guidance. Geneva: World Health Organization; 2021.

- 66. European Medicines Agency. Draft reflection paper on the use of artificial intelligence (AI) in the medicinal product lifecycle. Committee for Medicinal Products for Human Use. Amsterdam: Heads of Medicines Agencies; 2023.

- 67. Stegmann JU, Littlebury R, Trengove M, Goetz L, Bate A, Branson KM. Trustworthy AI for safe medicines. Nat Rev Drug Discov. 2023;22(10):855–6. 10.1038/s41573-023-00769-4.

- 68. Kjoersvik O, Bate A. Black swan events and intelligent automation for routine safety surveillance. Drug Saf. 2022;45(5):419–27. 10.1007/s40264-022-01169-0.
