Abstract

The “critical brain hypothesis” posits that neural circuitry may be tuned close to a “critical point” or “phase transition”—a boundary between different operating regimes of the circuit. The renormalization group and theory of critical phenomena explain how systems tuned to a critical point display scale invariance due to fluctuations in activity spanning a wide range of time or spatial scales. In the brain this scale invariance has been hypothesized to have several computational benefits, including increased collective sensitivity to changes in input and robust propagation of information across a circuit. However, our theoretical understanding of critical phenomena in neural circuitry is limited because standard renormalization group methods apply to systems with either highly organized or completely random connections. Connections between neurons lie between these extremes, and may be either excitatory (positive) or inhibitory (negative), but not both. In this work we develop a renormalization group method that applies to models of spiking neural populations with some realistic biological constraints on connectivity, and derive a scaling theory for the statistics of neural activity when the population is tuned to a critical point. We show that the scaling theories differ for models of in vitro versus in vivo circuits—they belong to different “universality classes”—and that both may exhibit “anomalous” scaling at a critical balance of inhibition and excitation. We verify our theoretical results on simulations of neural activity data, and discuss how our scaling theory can be further extended and applied to real neural data.

There is little hope of understanding how each of the neurons contributes to the functions of the brain [1]. Even individual brain regions contain millions of neurons [2, 3], more than can be individually mapped out, but enough that the tools of statistical physics can be applied to understand how collective patterns of neural activity may contribute to brain function. Indeed, experimental work has demonstrated that neural circuitry can operate in many different regimes of collective activity [4–8]. Theoretical and computational analyses of these collective dynamics suggest that transitions between different operating regimes may be sharp, akin to phase transitions observed in statistical physics [4, 6, 9–17]. The theory of critical phenomena predicts that at a phase transition the statistical fluctuations of a system span many orders of magnitude in space and time, and the system displays approximate scale invariance [18]. In the brain, scale invariance would lead not only to power-law scaling in neural activity, but also to scaling collapse, in which activity data collected under different conditions can be systematically rescaled to fall onto a single universal curve. Such signatures of criticality have been observed in neural data from the retina [19], visual cortex [20, 21], hippocampus [22], and other cortical areas [23]. These observations have led neuroscientists to hypothesize that circuitry in the brain is actively maintained close to critical points, the dividing lines between phases; this idea has become known as the “critical brain hypothesis” [8, 24–26]. Proponents of this hypothesis argue that these scale-spanning fluctuations could benefit brain function by minimizing circuit reaction times to perturbations, facilitating switches between computations, and maximizing information transfer [25].

However, our understanding of critical phenomena and scaling in neural systems is largely phenomenological, based on analogies with well-studied systems from physics that lack many of the biological features of neural circuits, such as complex network structure and distinct excitatory and inhibitory cell types. This makes it difficult to resolve apparently inconsistent measurements of signatures of criticality across different brain areas. For example, some analyses of neural data appear to suggest that power law exponents are of the “mean-field” type, predictable by standard dimensional analysis, while other studies point towards anomalous exponents that deviate from the mean-field predictions [8, 27, 28]. Anomalous power law exponents come in sets corresponding to different “universality classes,” where the universality class of a system is characterized by symmetries of the dynamics and statistical distributions, and, in lattices and continuous media, the dimension of the system. While universality classes and anomalous scaling are well understood theoretically in lattices and continuous media, the situation on more general networks remains an open problem.

The modern understanding of critical phenomena, and the origin of anomalous scaling, is based on the renormalization group (RG). The RG is a framework for organizing activity into a hierarchy of scales and determining how statistical fluctuations at each scale contribute to the overall statistics of a system. In soft condensed matter physics, these scales are typically distance and time, and the RG reveals how microscopic details influence dynamics and statistics on long spatial and temporal scales. However, it is not clear what the appropriate scales are in neural circuits, as spatial distance between neurons does not necessarily reflect the influence they have on each other through chains of synaptic connections.

Recent work has used ideas from the RG to devise phenomenological schemes for analyzing data, determining that a system is critical if the data can be shown to be approximately scale invariant under repeated coarse-graining of the principal components of neural covariances [22, 29–31] or in time [32]. However, a theoretical understanding of neural systems through the lens of the RG has so far been restricted to models from statistical physics that are re-interpreted in terms of coarse-grained neural signals, such as active/inactive units [16, 29], or to networks of neurons described by firing rates [33, 34], rather than populations of neurons that emit spikes, the fundamental unit of communication in neural circuits.

In this work we establish this missing theoretical foundation and develop a scaling theory for the relaxation of neural activity to a steady state. To the author’s knowledge, these results follow from the first complete RG analysis of neural populations with leaky integrate-and-fire spiking dynamics. We consider models of both in vitro circuits (slices of tissue removed from the brain) and in vivo circuits (recordings made directly from intact brain tissue), and show that they belong to the directed percolation and Ising model universality classes, respectively. We first perform a mean-field analysis of the model to show that it predicts two different types of phase transitions, for which we derive the scaling collapse relations and illustrate the idea of data collapse (Sec. I). We then give an overview of how fluctuations change the mean-field picture and the scaling relations. We show that simulated data from several networks can indeed be collapsed onto universal scaling forms, some yielding mean-field exponents and others anomalous scaling (Sec. III). We end this report by discussing the implications of this work for current theoretical and experimental investigations of collective activity in spiking networks, both near and away from phase transitions (Sec. IV).

I. SPIKING NETWORK MODEL

We consider a network of neurons that stochastically fire action potentials, which we refer to as “spikes.” The probability that neuron fires spikes within a small window is given by a counting process with expected value , where is a non-negative firing rate nonlinearity, conditioned on the current value of the membrane potential . We assume is the same for all neurons, and for definiteness we will take the counting process to be Poisson or Bernoulli, though the properties of the phase transitions should not depend on this specific choice.

The membrane potential of each neuron obeys leaky dynamics,

| (1) |

| (2) |

where is the membrane time constant, is the equilibrium potential of the neuron in the absence of input, and is the weight of the synaptic connection from pre-synaptic neuron to post-synaptic neuron . The synaptic weights are characterized by a strength , and the connections between neurons are encoded by the adjacency matrix , which is 1 if neuron connects onto neuron and 0 otherwise. We take to be negative in order to implement a “soft” refractory effect, resetting a neuron’s membrane potential by a fixed amount after each spike. For simplicity, we model the synaptic input as an instantaneous impulse, referred to as a “pulse-coupled” network. We focus on symmetric networks with a largest real-valued eigenvalue associated with a homogeneous eigenmode, where is the maximum eigenvalue of . That is, phase transitions in these networks will correspond to pattern formation out of a homogeneous state of activity. While real networks are not homogeneous and symmetric, reciprocal pairs of connections are more common than expected for random networks, and tend to be stronger than unidirectional connections [35]. We interpret our networks as an approximation in which unidirectional connections and variance in the synaptic weights can be neglected. Despite the restriction of a leading homogeneous mode, this encompasses a broad and important class of models and networks, such as bump attractor models in neuroscience [5, 6] and, more generally, diffusion on networks [36].
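A minimal Euler-discretized sketch of these dynamics may help fix ideas. Because Eqs. (1)–(2) are not reproduced here, the update rule is an assumption: each potential leaks toward the baseline `E` with time constant `tau`, every spike of neuron `j` kicks `V[i]` by `J[i, j]`, off-diagonal weights are `J0 * A[i, j]`, and the diagonal holds a negative self-coupling `J_self` that plays the role of the soft reset. The ring lattice, function names, nonlinearity, and parameter values are all hypothetical.

```python
import numpy as np

def ring_adjacency(n, k=1):
    """Hypothetical example network: a ring in which each neuron connects
    symmetrically to its k nearest neighbors on either side."""
    A = np.zeros((n, n))
    for i in range(n):
        for d in range(1, k + 1):
            A[i, (i + d) % n] = 1.0
            A[i, (i - d) % n] = 1.0
    return A

def simulate(A, J0, J_self, E, phi, V0, tau=1.0, dt=0.01, steps=1000, seed=0):
    """Euler sketch of pulse-coupled leaky dynamics with Poisson spike counts.

    Assumed update: V <- V + (E - V) * dt / tau + J @ n, with n_j ~ Poisson(phi(V_j) * dt),
    J_ij = J0 * A_ij off the diagonal and J_ii = J_self < 0 (soft reset).
    Returns the final potentials and the population-averaged rate at each step.
    """
    rng = np.random.default_rng(seed)
    J = J0 * A + J_self * np.eye(A.shape[0])
    V = np.array(V0, dtype=float)
    rate = np.empty(steps)
    for t in range(steps):
        spikes = rng.poisson(phi(V) * dt)      # spike counts in the window dt
        V = V + (E - V) * dt / tau + J @ spikes
        rate[t] = spikes.mean() / dt           # population-averaged firing rate
    return V, rate

# Usage: potentiate the whole population (V0 above baseline) and let it relax.
A = ring_adjacency(100, k=2)
phi = lambda v: 1.0 / (1.0 + np.exp(-v))      # hypothetical nonlinearity
V, rate = simulate(A, J0=0.1, J_self=-0.5, E=-1.0, phi=phi, V0=np.full(100, 2.0))
```

Replacing `rng.poisson(phi(V) * dt)` with `rng.random(V.shape) < phi(V) * dt` gives the Bernoulli variant, provided `phi(V) * dt` stays below 1.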

In this work we consider two nonlinearities corresponding to two types of networks, in vitro and in vivo networks:

| (3) |

where sets the slope of the nonlinearities and sets the maximum firing rate of the neurons (equal to for in vitro networks and for in vivo networks). We will choose units such that . The soft threshold is the value that the membrane potential needs to exceed in order for a neuron to have an increased probability of firing a spike.

The key difference between the in vitro and in vivo network nonlinearities is the presence of the rectification in the in vitro network nonlinearity, which creates the absorbing state: when , due to the shift down by , neurons in an in vitro network will not fire. If all neurons’ membrane potentials are below this threshold the network will be completely quiescent and cannot fire spikes without further external input, so this quiescent phase constitutes an absorbing state of the network. In contrast, the instantaneous firing rates of neurons in in vivo networks never vanish for any finite input, so there is always some probability that a neuron can fire a spike, even if that probability is small. Other important features of these networks are the saturation of the instantaneous firing rate and the concavity of the nonlinearity. For example, if the nonlinearity is unbounded the network may become unstable for , leading to runaway excitation of the neurons.
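The qualitative distinction drawn above can be checked with two illustrative nonlinearities. These are hypothetical stand-ins, not the forms of Eq. (3): a rectified hyperbolic tangent for the in vitro case and a logistic function for the in vivo case, both saturating at a maximum rate `lam`, with slope `beta` and soft threshold `theta`.

```python
import numpy as np

def phi_in_vitro(V, lam=1.0, beta=1.0, theta=0.0):
    """Hypothetical rectified nonlinearity: exactly zero below threshold,
    so a population with every V < theta cannot fire (absorbing state)."""
    return lam * np.maximum(0.0, np.tanh(beta * (V - theta)))

def phi_in_vivo(V, lam=1.0, beta=1.0, theta=0.0):
    """Hypothetical logistic nonlinearity: strictly positive for any finite V,
    so a spontaneous spike always has some probability."""
    return lam / (1.0 + np.exp(-beta * (V - theta)))

V = np.linspace(-10.0, 10.0, 2001)
quiescent = V[V < 0.0]
print(phi_in_vitro(quiescent).max())      # 0.0: a sub-threshold population stays silent
print(phi_in_vivo(quiescent).min() > 0)   # True: firing never vanishes
```

Both functions saturate at `lam`, which is what prevents runaway excitation in the sketch.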

In this work we probe the behavior of the network by potentiating or suppressing the membrane potentials of the entire population, and then allowing the network to relax back to a steady state. If the network is tuned to a critical point this relaxation will follow a power law. This procedure would approximate an experimental set-up in which a wide area of neural tissue is optogenetically stimulated or suppressed uniformly. It is similar to experimental setups investigating neural “avalanches,” cascades of neural activity typically originating from a single neuron. However, because we potentiate or suppress the entire network, no single neuron triggers the cascade of activity. This lets us avoid ambiguity in defining avalanches in the spontaneously active in vivo networks, in which it can be unclear whether multiple clusters of activity are really independent events or just non-local parts of a single avalanche.

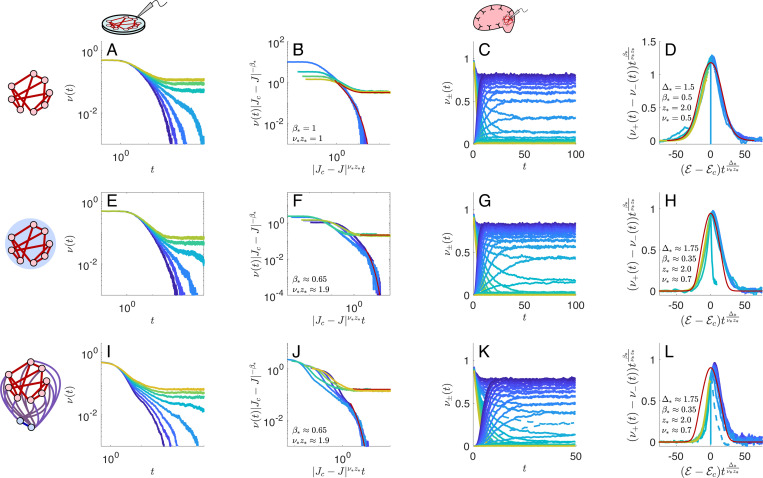

In Fig. 1 we display two types of phase transitions this stochastic spiking model can exhibit. The first type involves a transition between a quiescent, inactive state and a self-sustained active state (Fig. 1A–D). This is an appropriate model for in vitro networks: tissue removed from the brain and maintained or cultured in a dish. Such networks receive little to no external input other than what an experimenter provides, so the network can become quiescent if it cannot sustain its activity through recurrent excitation. The second type of transition occurs in spontaneously active networks, which switch from asynchronous firing to coexisting states of high and low firing (Fig. 1E–H). This is appropriate as a model of in vivo networks: neural tissue that is still part of the brain and receives input from other brain regions. We will first show how to predict these transitions based on a mean-field analysis of the model, and then present the scaling theory that incorporates the effects of stochastic fluctuations, which modify the universal quantitative properties that can be measured in experiments.

FIG. 1. Phase transitions in in vitro versus in vivo neural populations.

Neural activity may differ between recordings from tissue maintained or grown in a Petri dish (“in vitro”; top row) and recordings made directly from neurons in a living organism (“in vivo”; bottom row). These differences partly reflect external input: in vitro tissue may require experimenter-provided stimulation to maintain the activity of neurons, while in vivo neurons are constantly bombarded with input from other brain areas or body systems, leading to spontaneous activity. In both cases qualitative changes in population-level activity may be observed as properties of the network, such as the overall strength of synaptic connections, are modulated. A. Phase diagram of an in vitro network: if the equilibrium resting potential of the neurons is perturbed (e.g., due to external tonic current input), the network’s firing can be suppressed or promoted . At the equilibrium potential (normalized units) the network activity will decay away if the strength of synaptic connections is less than a critical value . For synaptic strengths the network activity is self-sustaining. At the critical value the activity decays to quiescence, but very slowly. B. Example raster plot of spiking activity in a network with subcritical (circle), along the line , showing a fast decay of activity. C. Spiking activity in a network at the approximate critical point (star), showing slow decay of activity. D. Spiking activity in a supercritical network with (square), showing sustained activity. E. Phase diagram of an in vivo network: perturbing the equilibrium resting potential will increase or decrease neural firing. Along a critical line (dashed diagonal line) the network will fire asynchronously. For synaptic strengths there exist states of low or high firing, between which finite networks can spontaneously transition. F. Example raster plot of spiking activity in a network with subcritical (circle), along the approximate critical line , showing asynchronous activity. G. Spiking activity in a network at the approximate critical point (star), showing intermittent high and low spiking activity. H. Spiking activity in a supercritical network with (square), showing apparent transient metastable transitions between high and low firing rate states that are possible in finite-sized network simulations. Excitatory neurons are colored red, inhibitory neurons are colored blue.

II. WIDOM SCALING THEORY

A. General theory

As seen in the simulations in Fig. 1, the spiking activity undergoes a phase transition at some critical synaptic coupling and critical baseline . Because we assume our networks consist of statistically homogeneous neurons, we focus on the dynamics of the population averages of the neurons’ membrane potentials and firing rates and . Away from the critical point, the population activity decays exponentially to a steady state, while at the critical point the activity decays algebraically, i.e., as a power law. However, we can make a stronger statement than this. For networks tuned close to the critical point we anticipate that vestiges of scale invariance will lead to scaling collapse: although we are measuring the population means or as a function of three independent parameters, time , synaptic strength , and baseline , for long times and small enough and we expect that the data can be described by a function of only two combinations of the independent parameters. The generic form of the “Widom scaling relationship” for the population-averaged firing rates is

| (4) |

where is the firing rate at the critical point, is a scaling function of two arguments, and we have introduced the critical exponents , , , and ; we give critical exponents subscripts of to distinguish them from other variables that have similar symbols. The exponents , , and are conventionally called the “correlation length,” “dynamic,” and “anomalous” exponents, while and are derived from these exponents by scaling relations we will introduce later. We will retain the name “correlation length exponent” for , even though there may not be a notion of length in arbitrary networks.

Note that the scaling function has several branches: its form can depend on the signs of , , and . This scaling form holds for both the in vitro and in vivo network models, though the values of the exponents and the scaling function will differ between the two cases because the two types of networks belong to different universality classes, as we will explain. We derive this scaling form within both the mean-field approximation and our renormalization group analysis in Appendix A.

Eq. (4) tells us that if we can determine the correct values of the critical exponents and the critical parameters , , and , then a plot of against and , will “collapse” our three-dimensional dataset onto a two-dimensional surface. In practice, such a collapse is difficult to achieve, and instead one tries to eliminate one of the variables by tuning it to its critical value and then performs the collapse in the remaining variables, in which case the data should fall onto a one-dimensional curve.

In in vitro networks we focus on the case , and we will collapse firing rates for different synaptic strengths using the reduced scaling form

| (5) |

where and we have pulled a factor of out of the scaling function in order to write the prefactor as .
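To make the collapse concrete, the sketch below rescales relaxation curves under an assumed directed-percolation form, $\bar\phi(t) = t^{-\beta/(\nu z)}\,\mathcal{F}(|\Delta J|\, t^{1/(\nu z)})$ with $\Delta J = J - J_c$ at the critical baseline. This is the textbook way of writing density decay near a directed-percolation transition; its prefactor conventions may differ from Eq. (5), and the symbols here are our own labels. With the mean-field values $\beta = 1$, $\nu = 1/2$, $z = 2$ from Table I, synthetic curves built from a known scaling function land exactly on it.

```python
import numpy as np

def collapse_coordinates(t, rate, dJ, beta, nu, z):
    """Rescale one relaxation curve measured at distance |dJ| from criticality.
    Assumes rate(t) = t**(-beta/(nu*z)) * F(|dJ| * t**(1/(nu*z)))."""
    nu_par = nu * z                        # temporal correlation exponent
    x = abs(dJ) * t ** (1.0 / nu_par)      # scaling variable
    y = rate * t ** (beta / nu_par)        # rescaled activity
    return x, y

# Synthetic subcritical data built from F(x) = exp(-x) with mean-field exponents.
beta, nu, z = 1.0, 0.5, 2.0
t = np.logspace(0, 3, 200)
for dJ in (0.001, 0.01, 0.1):
    rate = t ** (-beta / (nu * z)) * np.exp(-dJ * t ** (1 / (nu * z)))
    x, y = collapse_coordinates(t, rate, dJ, beta, nu, z)
    print(np.allclose(y, np.exp(-x)))      # True for every curve
```

Sub- and supercritical curves follow different branches of the scaling function, so in practice each sign of `dJ` is collapsed separately.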

In our in vivo model we will instead consider , for which the scaling form can be reduced to

The practical difficulty with this scaling form is identifying the critical firing rate . This difficulty can be eliminated by performing two paired experiments: one in which the neurons are potentiated and then allowed to relax to the steady state from above, and another in which the neurons are suppressed and then allowed to relax to the steady state from below, with all other parameters being the same in the two experiments. The scaling function will differ in these two scenarios (Appendix A), allowing us to obtain a scaling form for the difference in activity:

| (6) |

where , and the sign subscripts correspond to potentiation (+) or suppression (−).
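The paired protocol works because the unknown critical rate enters both relaxations additively and cancels in their difference. A sketch with synthetic data, assuming (hypothetically) that near the critical line the two relaxations are $\phi_c \pm A\,t^{-1/2}$, which is the mean-field decay implied by Table I for the Ising class ($\beta/(\nu z) = 1/2$):

```python
import numpy as np

def paired_difference(rate_potentiated, rate_suppressed):
    """Difference between relaxations from above (+) and below (-);
    any common offset, such as the critical rate phi_c, cancels."""
    return np.asarray(rate_potentiated) - np.asarray(rate_suppressed)

t = np.logspace(0, 4, 100)
amplitude = 0.3                            # hypothetical non-universal amplitude
for phi_c in (0.2, 0.5, 0.8):              # the unknown critical rate
    up = phi_c + amplitude * t ** -0.5
    down = phi_c - amplitude * t ** -0.5
    diff = paired_difference(up, down)
    # Rescaling by t**0.5 gives the same constant for every phi_c.
    print(np.allclose(diff * t ** 0.5, 2 * amplitude))   # True
```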

Next, we briefly review the mean-field approximation of the network activity and its predictions for the critical exponents, before highlighting the results of the renormalized scaling theory.

B. Mean-field scaling theory

The stochastic system defined by Eqs. (1)–(2) cannot be solved in closed form, and understanding the statistical dynamics of these networks has historically been accomplished through simulations and approximate analytic or numerical calculations. A qualitative picture of the dynamics of the model can often be obtained by a mean-field approximation in which fluctuations are neglected, such that , and solving the resulting deterministic dynamics:

| (7) |

Equations of this form are a cornerstone of theoretical neuroscience [14, 17, 33, 37–39], though often motivated phenomenologically as firing rate models, rather than as the mean-field approximation of a spiking network’s membrane potential dynamics. A wide variety of different types of dynamical behaviors and transitions among behaviors are possible depending on the properties of the connections and nonlinearity [4, 40], including bump attractors [5, 6, 9, 10], pattern formation in networks of excitatory and inhibitory neurons [11–13], transitions to chaos [14, 15], and avalanche dynamics [7, 8, 16]. In many of these examples, networks admit steady-states for which for all as .

Within the mean-field approximation, the dynamics for the population-and-trial-averaged means reduce to

| (8) |

where . The phase transitions of the network can be characterized by analyzing the dynamics of this one-dimensional system. While Eq. (8) cannot be solved exactly, one can show that there is a continuous bifurcation at , where the critical baseline and critical synaptic weight are defined by

| (9) |

| (10) |

in both the in vitro and in vivo network models. The first condition, Eq. (9), corresponds to the baseline potential and the mean synaptic input to each neuron balancing out so that the membrane potentials of the neurons come to rest at , to be determined momentarily. The second condition, Eq. (10), corresponds to this steady state becoming marginally stable: perturbations away from the steady state will neither decay nor grow exponentially. We expect that when there will be multiple steady states, so in order for there to be a single marginal state the critical membrane potential must correspond to the largest value of the gain ,

| (11) |

In the in vivo model this corresponds to , while for the in vitro model (with ), this corresponds to , the activation threshold. It follows that , , and for the in vitro model. For the in vivo model we obtain , , and .
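Because Eqs. (8)–(11) are not reproduced in this text, the following sketch assumes a mean-field drift of the form $F(\bar V) = (E - \bar V)/\tau + J\,\lambda_{\max}\,\phi(\bar V)$, with $\lambda_{\max}$ the leading eigenvalue of the adjacency matrix. Under that assumption the two conditions above read $F(V_c) = 0$ (steady state) and $F'(V_c) = 0$ (marginal stability), with $V_c$ placed at the maximum of the gain $\phi'$. The logistic nonlinearity and all parameter values are hypothetical.

```python
import numpy as np

def mean_field_critical_point(phi, dphi, V_grid, tau, lam_max):
    """Assumed drift F(V) = (E - V)/tau + J * lam_max * phi(V).
    Returns (V_c, J_c, E_c) with F(V_c) = 0 and F'(V_c) = 0."""
    V_c = V_grid[np.argmax(dphi(V_grid))]        # point of maximal gain
    J_c = 1.0 / (tau * lam_max * dphi(V_c))      # F'(V_c) = -1/tau + J lam_max phi'(V_c) = 0
    E_c = V_c - tau * J_c * lam_max * phi(V_c)   # F(V_c) = 0
    return V_c, J_c, E_c

lam, beta, theta = 1.0, 2.0, 0.5                 # hypothetical logistic parameters
phi = lambda V: lam / (1.0 + np.exp(-beta * (V - theta)))
dphi = lambda V: beta * phi(V) * (1.0 - phi(V) / lam)

V_c, J_c, E_c = mean_field_critical_point(phi, dphi, np.linspace(-5, 5, 100001), tau=1.0, lam_max=4.0)
print(V_c, J_c, E_c)   # approximately 0.5, 0.5, -0.5
```

For this logistic choice the gain peaks at the threshold, $\phi'(\theta) = \lambda\beta/4$, so the sketch gives $J_c = 4/(\tau\lambda_{\max}\lambda\beta)$.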

In our in vitro model the phase transition separates an inactive steady state from an active steady state in which recurrent excitation is strong enough to self-sustain activity without external input, as shown in Fig. 1A–D. In our in vivo model the phase transition separates an asynchronous steady state of intermediate firing rates from a bistable state of high- and low-firing rates, as shown in Fig. 1E–H.

At long times and close enough to the critical point we expect to be close to , such that we can expand and truncate at the leading nonlinear order. In our in vitro model the leading nonlinear order is , while in the in vivo model the leading order is . Plugging this expansion into Eq. (7) and solving for , and then approximating to leading order yields the scaling form (4) with exponents and in the in vitro models [41] and and in the in vivo model. For both networks and .
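The mean-field decay exponents can be read off the truncated normal forms. As a hedged check, assume that exactly at the critical point the expansion reduces to $\dot x = -u x^2$ (quadratic, in vitro) or $\dot x = -u x^3$ (cubic, in vivo), where $x$ is the deviation of the population-averaged potential from $V_c$ and $u > 0$ is a non-universal coefficient (both labels are ours). The first decays as $t^{-1}$ and the second as $t^{-1/2}$, matching $\beta/(\nu z) = 1$ and $1/2$ for the mean-field columns of Table I.

```python
import numpy as np

def relax(order, x0=1.0, u=1.0, dt=1e-2, t_max=1e3):
    """Forward-Euler integration of dx/dt = -u * x**order, the assumed normal
    form at the mean-field critical point (2: in vitro, 3: in vivo)."""
    n = int(t_max / dt)
    t = dt * np.arange(1, n + 1)
    x = np.empty(n)
    xi = x0
    for k in range(n):
        xi -= dt * u * xi ** order
        x[k] = xi
    return t, x

def decay_exponent(t, x, t_min=100.0):
    """Minus the slope of log|x| versus log t over the late-time window t >= t_min."""
    late = t >= t_min
    slope, _ = np.polyfit(np.log(t[late]), np.log(np.abs(x[late])), 1)
    return -slope

for order, label in ((2, "in vitro"), (3, "in vivo")):
    t, x = relax(order)
    print(label, round(decay_exponent(t, x), 2))   # approximately 1 and 1/2
```

Forward Euler is adequate here only because the decay is slow and smooth; it is not a general-purpose choice for the full dynamics.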

We verify that numerical solutions of the full mean-field dynamics (Eq. (7)) indeed satisfy the scaling laws when the networks are tuned to their respective critical points, and plot these collapses in Fig. 2 to illustrate the idea of data collapse.

FIG. 2. Mean-field behavior of the spiking network for in vitro networks (top row) and in vivo networks (bottom row).

A,D) A typical nonlinearity for each of the two network types. In the in vitro networks the nonlinearity is rectified, such that the firing rate is zero when a neuron’s membrane potential is negative. In in vivo networks the firing rate is never zero—there is always a non-zero, though possibly small, probability of firing. B,E) The decay of , the population- and trial-averaged membrane potential, starting from an initial value of in in vitro networks and and in in vivo networks. C,F) Widom scaling collapses using Eq. (5) for in vitro networks and Eq. (6) for in vivo networks and the mean-field exponents given in Table I.

C. Renormalized scaling theory

While the mean-field approximation generally paints a qualitatively correct picture of phase transitions in a stochastic model, it is well-known that universal quantities like critical exponents or the Widom scaling functions are often quantitatively incorrect [18], a problem that was ultimately resolved by the development of the renormalization group [42–45].

By developing an RG procedure that can be applied to this spiking network model (detailed in Appendix C), we can capture the effects of stochastic fluctuations on the collective activity of the network. Within our RG approximation scheme, the dynamics of the population averages obey

| (12) |

| (13) |

which is similar to Eq. (7) except that the nonlinearity is replaced with an effective nonlinearity . The key idea behind the RG method presented in Appendix C is that we can compute this effective nonlinearity by iteratively averaging the bare nonlinearity over fluctuations associated with different eigenmodes of the synaptic weight matrix . In lattices these eigenmodes are simply Fourier bases, which can be parametrized in terms of spatial frequencies (“momenta”) which are traditionally coarse-grained in statistical physics. The eigenvalues of the synaptic weight matrix thus generalize the traditional “momentum-shell” RG approach, though it is not the only possible choice; see also [22, 29, 32, 46].
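The statement that lattice eigenmodes are Fourier modes is easy to check numerically. For a ring of $n$ neurons with nearest-neighbor coupling (a hypothetical example, not one of the networks simulated later), the adjacency eigenvalues are $2\cos(2\pi k/n)$ and the mode with the largest eigenvalue is the homogeneous one. Grouping modes by their distance below the maximum eigenvalue is the network analogue of a momentum shell; the shell edges below are arbitrary.

```python
import numpy as np

n = 64
A = np.roll(np.eye(n), 1, axis=1) + np.roll(np.eye(n), -1, axis=1)   # periodic ring
eigvals, eigvecs = np.linalg.eigh(A)          # ascending eigenvalues

# Eigenvalues coincide with the lattice dispersion 2 cos(2 pi k / n).
expected = np.sort(2 * np.cos(2 * np.pi * np.arange(n) / n))
print(np.allclose(eigvals, expected))            # True

# The leading eigenvector is homogeneous (constant up to sign).
lead = eigvecs[:, -1]
print(np.allclose(np.abs(lead), 1 / np.sqrt(n))) # True

# "Shells" of modes ordered by distance below the maximum eigenvalue.
gap = eigvals.max() - eigvals
edges = np.array([0.0, 0.1, 0.5, 1.0, 2.0, 4.0 + 1e-9])   # hypothetical shell edges
counts, _ = np.histogram(gap, bins=edges)
print(counts)
```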

Near the critical synaptic coupling this effective nonlinearity has the form

| (14) |

where is the critical firing rate of a neuron, is the critical membrane potential, is a universal function, and and are universal critical exponents. Plugging (14) into (12)–(13), the scaling forms (5) and (6) follow with non-trivial values of the critical exponents and scaling functions .

The scaling function and the critical exponents are characteristics of the “universality class” of a system, which is determined by the (emergent) symmetries of a model. In lattice systems these universality classes are sub-divided by the spatial dimension of the system; for example, a two-dimensional system will have different critical exponents than a three-dimensional system, despite both having the same underlying symmetries.

While several notions of dimension have been proposed for complex networks [47], it is not immediately clear which, if any, is the appropriate generalization in the context of critical phenomena. In our RG analysis of the spiking network model (Appendix C), we find that the appropriate generalization of dimension is the spectral dimension of the eigenvalue distribution of the synaptic weight matrix , defined by

| (15) |

where is the maximum eigenvalue of the continuous part of the eigenvalue spectrum and is the maximum eigenvalue of the network. If , then the spectrum is continuous near the maximum eigenvalue and ; the definition of here is chosen so that it matches the spatial dimension when the network is a hypercubic lattice with periodic boundary conditions. If , then the largest eigenvalue is an outlier and diverges—this will be relevant in the case of random regular networks we study in Sec. III. The spectral dimension has been identified as the relevant definition of dimension in other work investigating critical dynamics of, e.g., Ising-like models or random walks on networks [48–50].
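Since Eq. (15) is not reproduced here, the sketch below assumes the edge behavior it is designed to capture: near the top of the continuous spectrum, the number of eigenvalues within $\epsilon$ of the edge grows as $\epsilon^{d_s/2}$. For a periodic hypercubic lattice the adjacency eigenvalues are sums of $2\cos(2\pi a/L)$ terms, one per dimension, so the estimator should return $d_s \approx d$. The function names and the fitting window are hypothetical choices.

```python
import numpy as np

def spectral_dimension(eigs, eps):
    """Estimate d_s from the count N(eps) of eigenvalues within eps of the top
    of the spectrum, assuming N(eps) ~ eps**(d_s / 2) for small eps."""
    eigs = np.asarray(eigs)
    gap = eigs.max() - eigs
    counts = np.array([np.count_nonzero(gap < e) for e in eps])
    slope, _ = np.polyfit(np.log(eps), np.log(counts), 1)
    return 2.0 * slope

def torus_eigenvalues(L, d):
    """Adjacency eigenvalues of a d-dimensional periodic lattice with L sites per side."""
    c = 2.0 * np.cos(2.0 * np.pi * np.arange(L) / L)
    grids = np.meshgrid(*([c] * d), indexing="ij")
    return np.sum(grids, axis=0).ravel()

eps = np.logspace(-2, -1, 8)
print(round(spectral_dimension(torus_eigenvalues(100_000, 1), eps), 2))  # close to 1
print(round(spectral_dimension(torus_eigenvalues(400, 2), eps), 2))      # close to 2
```

When the top eigenvalue is an isolated outlier, only the continuous part of the spectrum should be passed in; applied to the full spectrum, the estimator would see an empty window just below the outlier, the finite-size counterpart of $d_s$ diverging.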

We can further show that the in vitro and in vivo models belong to different universality classes. The in vitro model belongs to the “directed percolation” universality class, a ubiquitous non-equilibrium universality class that describes the transition between extinction of activity and self-sustained activity. The directed percolation universality class is characterized by an emergent “rapidity symmetry” that relates the magnitude of the membrane potential to fluctuations in the spiking activity, and three independent exponents, the correlation length exponent , the dynamic exponent , and the anomalous exponent . The other critical exponents can be expressed as and [51]. The directed percolation universality class has an “upper critical dimension” of , which means that networks with spectral dimension above this value will display mean-field scaling. Networks with have , and are above the upper critical dimension. The values of these critical exponents in lattice systems and mean-field are summarized in Table I.

TABLE I.

Critical exponents for the directed percolation (DP) and Ising model universality classes on lattices of 2 and 3 dimensions, compared to the mean-field (MF) prediction. Values are given to the hundredths place; more accurate estimates are available in the cited references.

| Exponent | DP, d = 2 [52] | DP, d = 3 [52] | DP, MF | Ising, d = 2 [18] | Ising, d = 3 [53] | Ising, MF |
|---|---|---|---|---|---|---|
| Correlation length | 0.73 | 0.58 | 1/2 | 1 | 0.63 | 1/2 |
| Dynamic | 1.77 | 1.89 | 2 | 2.17 [54] | 2.02 [55] | 2 |
| Anomalous | −0.41† | −0.17† | 0 | 1/4 | 0.036 | 0 |
| (derived) | 0.28 | 0.82 | 1 | 1/8 | 0.33 | 1/2 |
| (derived) | 1.87† | 1.91† | 2 | 15/8 | 1.56 | 3/2 |

† Derived exponents using the scaling relations and for the directed percolation universality class [51].

Turning to the in vivo model, our RG analysis predicts that the model belongs to the Ising model universality class, which describes transitions from a disordered state to ordered states related by an inversion symmetry. In the spiking network the two ordered states are the high and low firing rate states, and the inversion symmetry implies that close to the phase transition the distribution of fluctuations around the means of the high and low firing rates are identical. The universality class of the non-equilibrium Ising model is characterized by the correlation length exponent , the dynamic exponent , and anomalous exponent , with and . Like the directed percolation universality class, the Ising model has an upper critical dimension of , above which the mean-field approximation predicts the correct scaling. The values of the critical exponents for two- and three-dimensional lattices and mean-field are given in Table I.
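As a consistency check on the Ising columns of Table I, the standard hyperscaling relations $\beta = \nu(d - 2 + \eta)/2$ and $\beta\delta = \beta + \gamma = \nu(d + 2 - \eta)/2$ reproduce the last two rows from the first and third, with the mean-field column evaluated at the Ising upper critical dimension $d = 4$. Identifying those two rows with $\beta$ and $\beta\delta$ is an assumption of this sketch, not a statement taken from the text.

```python
from fractions import Fraction

def ising_derived(nu, eta, d):
    """Standard hyperscaling relations for the Ising class."""
    beta = nu * (d - 2 + eta) / 2
    beta_delta = nu * (d + 2 - eta) / 2      # beta * delta = beta + gamma
    return beta, beta_delta

# (nu, eta, d) from Table I; mean field is evaluated at d = 4.
print(ising_derived(Fraction(1), Fraction(1, 4), 2))    # (Fraction(1, 8), Fraction(15, 8))
print(ising_derived(Fraction(1, 2), Fraction(0), 4))    # (Fraction(1, 2), Fraction(3, 2))
b, bd = ising_derived(0.63, 0.036, 3)
print(round(b, 2), round(bd, 2))                        # 0.33 1.56
```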

The values of the critical exponents are not necessarily the same for neurons arranged in lattices and complex networks, even if they have the same spectral dimension . Our RG scheme, described in Appendix C, does predict that lattices and networks with the same spectral dimension will have the same exponents, but we expect this to be true only approximately. As a first application of the RG to a spiking population model, our method does not capture the effects of the eigenmode structure of the synaptic weight matrix on the critical exponents, which could impact the values of and in particular; our method predicts these to take the mean-field values and . However, our method does predict anomalous values of , , and . Because our RG scheme predicts the universality classes of the in vitro and in vivo networks, we can nevertheless use the full set of anomalous exponents from -dimensional lattices as a starting point for the scaling collapses we perform on our simulated data, allowing us to estimate potential discrepancies between lattices and networks with the same spectral dimension.

Finally, within our RG approximation we can also analytically compute the asymptotic tails of the scaling functions appearing in the Widom scaling forms. For in vitro networks we find

| (16) |

where and the constants , , , and are non-universal constants. In in vivo networks we find that the tails of the scaling function (6) obey

| (17) |

where and is another non-universal constant. Up to the non-universal constants, we show in the next section that not only can we collapse simulated activity data, but the collapses also agree well with the predicted scaling functions.

III. SCALING ANALYSES OF SIMULATED DATA

To validate our scaling theory, we first show that simulated data from neurons arranged on 2- and 3-dimensional lattices with nearest-neighbor excitatory connections are indeed collapsed using the directed percolation or Ising model critical exponents listed in Table I.

We then investigate scaling in networks in which excitatory neurons are sparsely connected with fixed degree and inhibitory neurons, if present, provide broad global inhibition. Our primary goal is to verify that the universality classes of the in vitro and in vivo models are consistent with the directed percolation and Ising universality classes, respectively. We do not seek high-precision estimates of the exponents competitive with state-of-the-art values, instead devoting our computational resources to estimating the exponents on several network types.

A. Excitatory lattices

As shown in Fig. 3A–B, simulated data from in vitro models can be collapsed using the directed percolation critical exponents and the scaling form (5), and simulated data from in vivo models can be collapsed using the Ising critical exponents and the scaling form (6).
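The mechanics of a Widom collapse can be illustrated on the simplest possible case. The sketch below is not the simulation behind Fig. 3, and it does not reproduce Eq. (5). Instead it integrates the mean-field rate equation dρ/dt = Δρ − ρ² for an absorbing-state transition, where ρ is a population activity and Δ is a hypothetical stand-in for the distance of the synaptic strength from its critical value. It then applies the textbook form ρ(t, Δ) ≈ t^(−β/ν∥) F(Δ t^(1/ν∥)) with the mean-field directed percolation exponents β = ν∥ = 1. For a saturated initial state the exact solution gives tρ = F(x) = x/(1 − e^(−x)) with x = Δt, so curves for different Δ should fall onto one curve on each side of the transition. The initial value ρ0 = 10 and the grid of Δ values are arbitrary choices, and the helper names are hypothetical.

```python
import numpy as np


def integrate_mean_field(deltas, rho0=10.0, t_max=800.0, dt=0.02):
    """Fixed-step RK4 integration of d(rho)/dt = delta * rho - rho**2,
    run for several distances from criticality (deltas) at once."""
    deltas = np.asarray(deltas, dtype=float)
    n_steps = int(round(t_max / dt))
    t = dt * np.arange(n_steps + 1)
    rho = np.empty((n_steps + 1, deltas.size))
    rho[0] = rho0

    def rate(r):
        return deltas * r - r**2

    for i in range(n_steps):
        r = rho[i]
        k1 = rate(r)
        k2 = rate(r + 0.5 * dt * k1)
        k3 = rate(r + 0.5 * dt * k2)
        k4 = rate(r + dt * k3)
        rho[i + 1] = r + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return t, rho


def widom_rescale(t, rho_curve, delta, x_grid, beta=1.0, nu_par=1.0):
    """Map one curve to y = t**(beta/nu_par) * rho versus x = delta * t**(1/nu_par)
    and interpolate it onto a shared grid of x values."""
    x = delta * t ** (1.0 / nu_par)
    y = t ** (beta / nu_par) * rho_curve
    order = np.argsort(x)  # np.interp needs increasing sample points (x < 0 if delta < 0)
    return np.interp(x_grid, x[order], y[order])


def scaling_function(x):
    """Exact collapse curve t * rho = x / (1 - exp(-x)) in the limit rho0 -> infinity."""
    return x / (1.0 - np.exp(-x))


deltas = np.array([-0.02, -0.01, -0.005, 0.005, 0.01, 0.02])
t, rho = integrate_mean_field(deltas)

results = {}
for sign in (-1, 1):
    x_grid = sign * np.linspace(0.5, 3.0, 26)  # avoid x near 0, where finite rho0 matters most
    curves = np.array([widom_rescale(t, rho[:, j], d, x_grid)
                       for j, d in enumerate(deltas) if np.sign(d) == sign])
    spread = np.max((curves.max(axis=0) - curves.min(axis=0)) / curves.mean(axis=0))
    deviation = np.max(np.abs(curves / scaling_function(x_grid) - 1.0))
    results[sign] = (spread, deviation)
    side = "subcritical" if sign < 0 else "supercritical"
    print(f"{side}: relative spread {spread:.4f}, max deviation from F {deviation:.4f}")
```

With finite ρ0 the collapse is only approximate. The residual error is of order Δ/ρ0, which is why the grid avoids x close to zero.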

FIG. 3. Widom scaling collapses for simulated activity on excitatory lattices.

Top row (A-D): . Bottom row (E-H): . A, E. Population-and-trial-averaged spike trains versus time in in vitro networks as the synaptic strength is tuned from subcritical (, blue curves) to supercritical (, green-gold curves). B, F. Widom scaling collapse of the data using Eq. (5). Data below and above collapse onto different curves, with tails given by Eq. (16). C, G. Population-and-trial-averaged spike trains versus time in in vivo networks, starting from a high firing rate initial condition and a low firing rate initial condition . Curves correspond to equilibrium potentials (green-gold curves) to (blue curves). D, H. Widom scaling collapse of according to Eq. (6), with tails given by Eq. (17). The critical exponents used to collapse the data, inset in each collapse, are the known values of the critical exponents for the directed percolation (DP) and Ising model (IM) universality classes, given in Table I.

In our in vitro networks the critical synaptic weights are in and in , while in the spontaneous networks the critical parameters are in and in .

B. Sparse excitation and dense inhibition

We now consider networks with slightly more realistic features. Cortical circuits consist of two broad cell types: excitatory and inhibitory. Excitatory cells are typically thought to be the "principal neurons" whose activity is the neural realization of computations within cortical circuitry, while inhibitory cells are often "interneurons" that serve these computations indirectly by regulating the activity of the principal neurons. In cortex, excitatory neurons have been found to make sparse connections to other excitatory neurons [56] and instead to influence one another through densely connected inhibitory interneurons [57]. We therefore consider a network in which excitatory neurons make sparse connections to one another. Specifically, we model excitatory-to-excitatory connections using random regular graphs, in which every neuron makes a fixed number of synaptic connections but the connected pairs are chosen at random, independent of any spatial organization of the network. The remaining connections in the network are dense; for simplicity we take the excitatory-to-inhibitory, inhibitory-to-inhibitory, and inhibitory-to-excitatory connections to be all-to-all.
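The connectivity described above can be written as a single block weight matrix. In the sketch below, the network sizes, the weights, and the 1/N scaling of the dense blocks are hypothetical choices made only for illustration. Rows index postsynaptic neurons and columns index presynaptic neurons, and `random_regular_adjacency` is a helper written for this sketch. The numerical check shows why dense all-to-all blocks see only population-averaged activity. Take any eigenvector v of the sparse E-E adjacency matrix that is orthogonal to the all-ones vector, and pad it with zeros on the inhibitory neurons; the result remains an eigenvector of the full matrix, with eigenvalue Jλ. The two homogeneous modes, by contrast, reduce to a 2×2 matrix acting on the mean excitatory and mean inhibitory activity.

```python
import numpy as np


def random_regular_adjacency(n, k, rng, max_tries=10_000):
    """Hypothetical helper: random k-regular graph via the configuration model,
    rejecting pairings that contain self-loops or repeated edges."""
    stubs = np.repeat(np.arange(n), k)
    if stubs.size % 2:
        raise ValueError("n * k must be even")
    for _ in range(max_tries):
        pairs = rng.permutation(stubs).reshape(-1, 2)
        i, j = pairs[:, 0], pairs[:, 1]
        if np.any(i == j):
            continue
        keys = np.minimum(i, j) * n + np.maximum(i, j)
        if np.unique(keys).size < keys.size:
            continue
        A = np.zeros((n, n))
        A[i, j] = 1.0
        A[j, i] = 1.0
        return A
    raise RuntimeError("no simple graph found; increase max_tries")


# Hypothetical sizes and weights, chosen only for illustration.
n_E, n_I, k = 400, 100, 3
J, w_EI, w_IE, w_II = 0.3, 1.0, 0.2, 0.5   # E->E per synapse, E->I, I->E, I->I
A = random_regular_adjacency(n_E, k, np.random.default_rng(2))

# W[post, pre]: sparse E->E block; dense, 1/N-scaled blocks everywhere else.
W = np.block([
    [J * A, -(w_IE / n_I) * np.ones((n_E, n_I))],
    [(w_EI / n_E) * np.ones((n_I, n_E)), -(w_II / n_I) * np.ones((n_I, n_I))],
])

# A bulk mode of A (orthogonal to the all-ones vector), padded with zeros on the
# inhibitory neurons, is still an eigenvector of W with eigenvalue J * lambda.
lam, V = np.linalg.eigh(A)
v = np.concatenate([V[:, 0], np.zeros(n_I)])
bulk_residual = np.linalg.norm(W @ v - J * lam[0] * v)


def homogeneous_vector(u):
    """Activity u[0] on every excitatory neuron and u[1] on every inhibitory neuron."""
    return np.concatenate([u[0] * np.ones(n_E), u[1] * np.ones(n_I)])


# The homogeneous modes obey a 2x2 system acting on (mean E, mean I) activity.
M = np.array([[J * k, -w_IE],
              [w_EI, -w_II]])
mu, U = np.linalg.eig(M)
hom_residuals = [np.linalg.norm(W @ homogeneous_vector(u) - m * homogeneous_vector(u))
                 for m, u in zip(mu, U.T)]
print(f"bulk mode: J*lambda = {J * lam[0]:.4f}, residual {bulk_residual:.1e}")
print(f"homogeneous modes: eigenvalues {np.round(mu, 4)}, max residual {max(hom_residuals):.1e}")
```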

First, it is useful to consider what happens in networks without inhibition, for which we need only consider the excitatory neurons arranged in a random regular network. If the synaptic strength of each connection is and each neuron has a refractory self-input of strength , then the eigenvalue distribution of the synaptic weight matrix has a maximum eigenvalue associated with the homogeneous mode. This eigenvalue is an outlier. The bulk spectrum of the synaptic weight matrix is given by the McKay law [58] in the limit (modified to include the self-coupling and normalized by ),

where . Note that the bulk spectrum has spectral dimension , independent of the degree . The single outlier eigenvalue contributes negligibly to the effective nonlinearity , but it nonetheless controls the phase transition because it renders the spectral dimension infinite (Eq. (15)). We therefore expect that the excitatory random regular network will exhibit mean-field critical exponents; we confirm this in the scaling collapses of both in vitro and in vivo networks, shown in Fig. 4. We find that the transitions occur approximately at in in vitro networks and in in vivo networks.
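The McKay law can be compared against the spectrum of a finite random regular graph. For simplicity, the sketch below uses the plain adjacency matrix A of a random k-regular graph, without the self-coupling or normalization used in the text; this is an assumption of the sketch. The graph is built with a rejection-sampled configuration model (`random_regular_adjacency`, a helper written for this sketch). The code checks three things:

- the homogeneous-mode outlier sits at exactly k;
- the rest of the spectrum ends near the bulk edge 2√(k−1), and the bulk matches the McKay density;
- the density vanishes as a square root at the edge, which under the assumed convention ρ(λ) ∼ (λ_edge − λ)^(d_s/2 − 1) corresponds to d_s = 3.

```python
import numpy as np


def random_regular_adjacency(n, k, rng, max_tries=10_000):
    """Hypothetical helper: random k-regular graph via the configuration model,
    rejecting pairings that contain self-loops or repeated edges."""
    stubs = np.repeat(np.arange(n), k)
    if stubs.size % 2:
        raise ValueError("n * k must be even")
    for _ in range(max_tries):
        pairs = rng.permutation(stubs).reshape(-1, 2)
        i, j = pairs[:, 0], pairs[:, 1]
        if np.any(i == j):
            continue
        keys = np.minimum(i, j) * n + np.maximum(i, j)
        if np.unique(keys).size < keys.size:
            continue
        A = np.zeros((n, n))
        A[i, j] = 1.0
        A[j, i] = 1.0
        return A
    raise RuntimeError("no simple graph found; increase max_tries")


def kesten_mckay_density(lam, k):
    """McKay law: limiting eigenvalue density of the adjacency matrix of a random
    k-regular graph, supported on |lam| < 2*sqrt(k - 1)."""
    lam = np.asarray(lam, dtype=float)
    edge_sq = 4.0 * (k - 1.0)
    density = np.zeros_like(lam)
    inside = lam**2 < edge_sq
    density[inside] = (k * np.sqrt(edge_sq - lam[inside] ** 2)
                       / (2.0 * np.pi * (k**2 - lam[inside] ** 2)))
    return density


k, n = 3, 1000
eig = np.linalg.eigvalsh(random_regular_adjacency(n, k, np.random.default_rng(0)))
edge = 2.0 * np.sqrt(k - 1.0)
outlier, top_of_bulk = eig[-1], eig[-2]   # eigvalsh returns ascending order

# Fraction of eigenvalues in [-1, 1]: empirical versus McKay (trapezoidal rule).
grid = np.linspace(-1.0, 1.0, 2001)
vals = kesten_mckay_density(grid, k)
frac_theory = np.sum(0.5 * (vals[1:] + vals[:-1]) * np.diff(grid))
frac_empirical = np.mean(np.abs(eig) <= 1.0)

# Local exponent of the density just below the bulk edge: rho ~ eps**(d_s/2 - 1).
eps = np.array([1e-6, 1e-5])
rho_edge = kesten_mckay_density(edge - eps, k)
edge_exponent = np.log(rho_edge[1] / rho_edge[0]) / np.log(eps[1] / eps[0])
d_s = 2.0 * (edge_exponent + 1.0)

print(f"outlier {outlier:.6f} (k = {k}); top of bulk {top_of_bulk:.3f} (edge {edge:.3f})")
print(f"fraction in [-1, 1]: empirical {frac_empirical:.3f}, McKay {frac_theory:.3f}")
print(f"edge exponent {edge_exponent:.3f} -> spectral dimension {d_s:.2f}")
```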

FIG. 4. Widom scaling collapses for simulated activity on networks with random regular excitatory-excitatory connections.

All excitatory neurons make excitatory connections to other neurons. Top row: Simulation results for purely excitatory networks. A. Decay of the population-averaged spiking activity in an absorbing state network for several values of coupling strength , and B. its corresponding data collapse using mean-field predictions for the critical exponents. C. Decay of the population-averaged spiking activity in a spontaneously active network for several values of the input current , and D. its corresponding data collapse using mean-field predictions for the critical exponents. Middle row: Simulation results for an excitatory population with effective inhibitory connections between neurons. E-F and G-H are the same as A-B and C-D, but using anomalous values of the critical exponents. Bottom: Simulation results for a model of separate excitatory and inhibitory populations that reduces to the effective model (Appendix B). I-J and K-L are the same as E-F and G-H, using the same values of the anomalous exponents. In the absorbing state collapses (second column), the analytically estimated asymptotic Widom scaling forms Eq. (16) are plotted in red, scaled by non-universal factors to match the data. Similarly for the spontaneous network collapses (fourth column) using Eqs. (6) and (17); see also Appendix A.

Next, we consider what happens when we turn on the inhibitory connections. Rather than analyzing the full EI population, it is useful to first consider an effective network model consisting of excitatory neurons that excite their random regular neighbors while inhibiting all other neurons in the network. This effective model is a formal reduction of a full population model with explicit excitatory and inhibitory populations; see Appendix B. Suppose the global inhibitory connections have strength , where is the number of excitatory neurons. These inhibitory connections will shift the location of from to , without affecting any other eigenmodes of the network because they are orthogonal to the homogeneous mode. There is then a critical value of for which the maximum eigenvalue is moved to the location of the bulk eigenvalue , closing the gap between the bulk spectrum and the outlier. We then expect the effective dimension to be , and the network may exhibit anomalous scaling instead of mean-field scaling. We verify this for networks with for both the effective EI network and networks with explicit inhibitory neurons.
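The eigenvalue shift described above follows from a rank-one argument. For a k-regular graph, the all-ones vector is an eigenvector of A with eigenvalue k, and every other eigenvector can be chosen orthogonal to it. Subtracting g/N times the all-ones matrix therefore moves only that one eigenvalue, to k − g, and placing it on the bulk edge 2√(k−1) requires g_c = k − 2√(k−1) (about 0.172 for k = 3 in these unnormalized units). The sketch below makes several simplifying assumptions: plain adjacency weights, no self-coupling, and inhibition applied uniformly to every pair, including neighbors. Excluding neighbors and self-pairs from the inhibition, as in the effective model, changes the remaining eigenvalues only at order g/N. `random_regular_adjacency` is a helper written for this sketch.

```python
import numpy as np


def random_regular_adjacency(n, k, rng, max_tries=10_000):
    """Hypothetical helper: random k-regular graph via the configuration model,
    rejecting pairings that contain self-loops or repeated edges."""
    stubs = np.repeat(np.arange(n), k)
    if stubs.size % 2:
        raise ValueError("n * k must be even")
    for _ in range(max_tries):
        pairs = rng.permutation(stubs).reshape(-1, 2)
        i, j = pairs[:, 0], pairs[:, 1]
        if np.any(i == j):
            continue
        keys = np.minimum(i, j) * n + np.maximum(i, j)
        if np.unique(keys).size < keys.size:
            continue
        A = np.zeros((n, n))
        A[i, j] = 1.0
        A[j, i] = 1.0
        return A
    raise RuntimeError("no simple graph found; increase max_tries")


k, n = 3, 400
A = random_regular_adjacency(n, k, np.random.default_rng(1))
eig_A = np.linalg.eigvalsh(A)            # ascending; eig_A[-1] equals k up to rounding
g_c = k - 2.0 * np.sqrt(k - 1.0)         # puts the outlier k - g on the bulk edge 2*sqrt(k-1)

for g in (0.0, 0.5 * g_c, g_c):
    W = A - (g / n) * np.ones((n, n))    # uniform global inhibition of strength g/n
    eig_W = np.linalg.eigvalsh(W)
    # Prediction: the spectrum of A with its single eigenvalue k replaced by k - g.
    predicted = np.sort(np.append(eig_A[:-1], k - g))
    print(f"g = {g:.4f}: outlier k - g = {k - g:.4f}, largest bulk eigenvalue {eig_A[-2]:.4f}, "
          f"max |eig_W - predicted| = {np.max(np.abs(eig_W - predicted)):.1e}")
```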

In the case of the effective EI networks, we find with approximate exponents and in in vitro networks, and , , , in in vivo networks. The same exponents, with and in in vitro and in vivo networks, respectively, produce collapses in simulations with explicit excitatory and inhibitory populations (Fig. 4). While these estimates are not especially precise, the in vitro exponents differ enough from those of the lattices to suggest that the network structure does influence the critical exponents. The universality class of the networks may therefore differ from that of the 3-dimensional lattices, although the exponents of the two remain close.

We see that the collapses for the effective and explicit EI networks are asymmetrically skewed compared to the analytically predicted Widom scaling forms for the Ising universality class, which match the lattice data well. Similar asymmetries have been observed in the scaling collapses of mean neural avalanche shapes, and recent modeling work has shown that inhibitory neurons play a role in producing these asymmetries [59]. The origin of the asymmetry in our simulations is not clear. It may be a finite-size effect that weakens in simulations of much larger networks: unlike for the lattices, a surface plot of the firing rates versus and does not show a sharp jump, suggesting that finite-size effects may be softening the transition. For instance, the dashed curve in Fig. 4K–L corresponds to a value of that is close to the estimated critical , and does not collapse well in our current simulations, but may be more sharply separated from the critical point in larger networks. Alternatively, the asymmetries could be driven by subleading corrections to scaling or by contributions from the network eigenmodes that are not captured by our current RG scheme.

Next, we perform a brief investigation of networks with degree . It is common folk wisdom that mean-field theory becomes accurate in high-dimensional lattices because each unit interacts with more neighbors. However, the spectral dimension of random regular networks does not depend on the number of neighbors . We might therefore wonder whether the critical exponents remain close to the values predicted by our scaling theory, or whether at a sufficiently large we see a reversion to mean-field behavior.
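The McKay law shows directly why the spectral dimension of random regular networks does not depend on k. The sketch below uses the plain adjacency normalization (an assumption) and evaluates the McKay density just below the bulk edge 2√(k−1) for several degrees. It then converts the local edge exponent into d_s using the convention ρ(λ) ∼ (λ_edge − λ)^(d_s/2 − 1). The table also lists the normalized edge 2√(k−1)/k and the gap k − 2√(k−1) between the homogeneous-mode outlier and the bulk edge. Both change with k even though d_s does not. `kesten_mckay_density` is a helper written for this sketch.

```python
import numpy as np


def kesten_mckay_density(lam, k):
    """McKay law: limiting eigenvalue density of the adjacency matrix of a random
    k-regular graph, supported on |lam| < 2*sqrt(k - 1)."""
    lam = np.asarray(lam, dtype=float)
    edge_sq = 4.0 * (k - 1.0)
    density = np.zeros_like(lam)
    inside = lam**2 < edge_sq
    density[inside] = (k * np.sqrt(edge_sq - lam[inside] ** 2)
                       / (2.0 * np.pi * (k**2 - lam[inside] ** 2)))
    return density


eps = np.array([1e-6, 1e-5])          # distances below the bulk edge
spectral_dims = {}
print(" k   bulk edge   edge/k   outlier gap k - edge   d_s")
for k in range(3, 11):
    edge = 2.0 * np.sqrt(k - 1.0)
    rho = kesten_mckay_density(edge - eps, k)
    exponent = np.log(rho[1] / rho[0]) / np.log(eps[1] / eps[0])   # rho ~ eps**exponent
    spectral_dims[k] = 2.0 * (exponent + 1.0)                       # exponent = d_s/2 - 1
    print(f"{k:2d}   {edge:9.4f}   {edge / k:6.3f}   {k - edge:20.4f}   {spectral_dims[k]:.3f}")
```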

We find that networks with and global inhibition strength exhibit mean-field scaling. However, the observed critical strength is lower than the mean-field prediction, in contrast to our other simulations. This suggests that the global inhibition may be too large. The mismatch most likely originates in our RG scheme’s neglect of the eigenmode structure of the network, and a higher-order approximation scheme would be required to identify the precise value of global inhibition at which anomalous scaling is observed for .

We may also ask how robust the anomalous scaling of the network is to perturbations in the number of connections each neuron makes. So far, we have considered networks in which every neuron makes the same number of connections. If we instead consider networks with a fraction of neurons that make synaptic connections and a fraction of neurons that make synaptic connections, we can determine between which fractions the scaling changes from anomalous to mean-field.

The excitatory synaptic connections between neurons are , where is the adjacency matrix of the excitatory connections, formed by randomly pairing up the synapses of degree-3 and degree-4 neurons (and rejecting networks with self-connections or multiple synapses between a single pair of neurons); i.e., this is a configuration model [60]. is the degree of neuron , and is again the all-to-all inhibitory weight chosen to move the leading eigenvalue of to the edge of the bulk spectrum. Although we do not have a closed form for the spectrum of this weight matrix, the limiting cases and (random regular networks of degrees 3 and 4, respectively) have spectral dimension , and so we expect the mixed network to as well. We find that at neurons with degree 4 the anomalous scaling persists, while at we again obtain mean-field scaling.
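The configuration-model construction described above can be sketched as follows. Synapse "stubs" are paired at random, and any draw containing self-connections or repeated pairs is rejected. The helper names are hypothetical, and the sketch builds only the binary adjacency matrix; it does not reproduce the degree normalization of the weights or the choice of the inhibitory weight. Rejection discards non-simple pairings uniformly, so accepted graphs are uniformly distributed over simple graphs with the given degree sequence.

```python
import numpy as np


def configuration_model(degrees, rng, max_tries=10_000):
    """Hypothetical helper: uniformly random simple graph with the given degree
    sequence, by random stub pairing with rejection of self-loops and multi-edges."""
    degrees = np.asarray(degrees, dtype=int)
    n = degrees.size
    stubs = np.repeat(np.arange(n), degrees)
    if stubs.size % 2:
        raise ValueError("sum of degrees must be even")
    for _ in range(max_tries):
        pairs = rng.permutation(stubs).reshape(-1, 2)
        i, j = pairs[:, 0], pairs[:, 1]
        if np.any(i == j):
            continue
        keys = np.minimum(i, j) * n + np.maximum(i, j)
        if np.unique(keys).size < keys.size:
            continue
        A = np.zeros((n, n))
        A[i, j] = 1.0
        A[j, i] = 1.0
        return A
    raise RuntimeError("no simple graph found; increase max_tries")


def mixed_degree_sequence(n, frac_degree4, rng):
    """Hypothetical helper: a random subset of round(frac_degree4 * n) neurons gets
    degree 4; all other neurons get degree 3."""
    degrees = np.full(n, 3)
    n4 = int(round(frac_degree4 * n))
    degrees[rng.permutation(n)[:n4]] = 4
    return degrees


rng = np.random.default_rng(3)
n = 1000
for frac4 in (0.0, 0.1, 0.2, 1.0):
    degrees = mixed_degree_sequence(n, frac4, rng)
    A = configuration_model(degrees, rng)
    eig = np.linalg.eigvalsh(A)
    print(f"fraction of degree-4 neurons {frac4:.1f}: mean degree {degrees.mean():.2f}, "
          f"largest eigenvalue {eig[-1]:.3f}, next {eig[-2]:.3f}")
```

For a mixed network the all-ones vector is no longer an exact eigenvector. The largest eigenvalue therefore lies between the mean degree and the maximum degree, rather than exactly at a single degree.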

While we have not ruled out that there is a different value of global inhibition that can achieve anomalous scaling in networks with mixed connectivity, our results suggest a possible new mechanism that could explain apparently contradictory observations of mean-field versus anomalous scaling in neural avalanche data [8, 27, 28], with the observed scaling depending on the balance of excitatory sparsity and broad inhibition.

IV. DISCUSSION

In this work we have shown that stochastic spiking networks with symmetric connections and homogeneous steady states can undergo at least two types of phase transitions as the strength of their synaptic connections and baseline potentials are tuned: i) an inactive-to-active transition between extinction and self-sustained activity, appropriate as an in vitro model of neural tissue, and ii) a transition from a single asynchronous state of activity to high or low firing rate states, appropriate as a model of in vivo neural tissue.

Using both a mean-field approximation and a renormalization group analysis of the spiking network model, we developed a Widom scaling theory to show that the in vitro network models belong to the directed percolation universality class, while the in vivo network models belong to the Ising universality class. These universality classes are subdivided by “dimension,” which we identified as the spectral dimension of the synaptic weight matrix. If the largest eigenvalue of this spectrum is an outlier, then the spectral dimension is infinite, and we find the mean-field predictions of the critical exponents are correct. However, if the largest eigenvalue is at the edge of the continuous part of the spectrum, then is finite and anomalous scaling may be observed.

This is, to the author’s knowledge, the first renormalization group analysis of a leaky integrate-and-fire model. Previous work modeling phase transitions in neural populations has used Ising-like models or chemical reaction networks of active or inactive neurons, which are phenomenological models of neural activity. Similarly, while other work has investigated the non-perturbative renormalization group in neuroscience contexts, it has been applied only to calculating correlation and response functions in firing rate models [33] and to exploring possible equivalences between the RG and neural sensory coding [46]. This work establishes formally that spiking populations are in the Ising model or directed percolation universality classes.

The value of performing renormalization group calculations on spiking network models is that these calculations help clarify which features of neurons and their connectivity shape the critical exponents measured in data. Experimental recordings of neural avalanches (cascades of neural activity that propagate through neural circuitry [7, 8, 16, 25]) often seem to support mean-field exponents, but deviations have also been reported [8], and the origin of these deviations remains the subject of much debate. Some reports suggest that deviations could be the effects of subsampled recordings of neurons in space or time [61, 62], while RG analysis of firing rate models suggests that the cause could also be logarithmic corrections to critical exponents in networks at their upper critical dimension [34]. Similarly, the “phenomenological RG” method developed by Ref. [22] motivates exponent relations based on analogies with lattice systems and finds anomalous scaling for the dynamical exponents , albeit with values of roughly 0.16 to 0.3, much smaller than predicted by the directed percolation or Ising model universality classes. Similar exponent values are obtained by Ref. [63] in rat visual cortex. Using our foundational RG theory of spiking networks to understand the phenomenological RG method could yield insight into these unexpectedly small dynamical exponents.

In general, a firm theoretical understanding of which properties influence critical exponents will not only help distinguish genuine deviations from mean-field theory from estimates skewed by subsampling, but could also reveal mechanisms by which a system that appears to be in a mean-field universality class could be tuned toward anomalous behavior.

For instance, both the directed percolation and Ising model mean-field universality classes make the same predictions for avalanche exponents, but in lower dimensions the exponents and even the exponent relations differ. In our excitatory-inhibitory network of sparse excitatory connections that interact through dense inhibitory connections, we found that the inhibition could tune the network to a critical point with anomalous exponents for both the directed percolation and Ising universality classes. One could imagine a future closed-loop experimental paradigm in which excitatory neurons are inhibited with wide-field optogenetic stimulation, where the strength of that stimulus depends on the recorded neural activity. Depending on the properties of the excitatory connections (e.g., the degree of sparsity), this could drive a transition toward an anomalous critical state, distinguishing between different mean-field universality classes.

Several other mechanisms have been proposed as possible causes of mean-field or anomalous scaling. Ref. [34] introduced a firing rate model in which nonlinearities in the membrane dynamics cancel out nonlinearities in the mean synaptic input, resulting in a new universality class with upper critical dimension . At this upper critical dimension the critical exponents differ from the mean-field scaling by logarithmic corrections that can depend on the distance to the critical point, introducing apparent anomalous scaling.

Another mechanism well-known to change critical exponents is heterogeneity in network properties, often dubbed “disorder” in the statistical physics literature. In this work we considered only heterogeneity in the pairs of neurons connected, and observed that it potentially alters critical exponents compared to lattice networks with the same effective dimension. We assume our networks can be interpreted as the average connectivity in networks with weak variability that can be neglected. Strong heterogeneity in the synaptic weights, however, can have a variety of possible effects. It can smear out a transition, effectively destroying it, drive the system to a different “strong disorder” universality class with different critical exponents, or lead to anomalously slow temporal scaling or for non-universal exponents and due to the existence of “rare regions” of the network that happen to be close to the critical point of the non-disordered system [64–66].

Because we have shown that the spiking network belongs to the directed percolation or Ising universality classes, we can leverage past work on other systems in the directed percolation [67–69] or Ising classes [70, 71] to motivate hypotheses for how heterogeneity might impact criticality in spiking networks. For example, Ising models with heterogeneity in properties analogous to the baseline potential and synaptic strength are thought to belong to the random field Ising model universality class [70, 71], for which renormalized scaling theories have been derived using extensions of the methods we use to obtain the results reported in this work [72–76].

Heterogeneity in synaptic weights drives perhaps the best-known example of a phase transition in theoretical neuroscience, the celebrated transition to chaos in the Sompolinsky-Crisanti-Sommers model [14, 37], a firing rate model with random recurrent synaptic connections that can be interpreted as the mean-field theory of the spiking network studied in this work. This model and its many descendants have been extensively studied using methods from dynamic mean-field theory, and have become a cornerstone of theoretical neuroscience. The transition to chaos is not in the directed percolation or Ising model universality classes, owing in part to the fact that the synaptic connections have zero mean and are not symmetric. An important direction of future work is to extend our RG scaling theory to such networks and investigate whether stochastic spiking generates anomalous scaling in the transition to chaos. Fluctuations have been added to this family of models through fluctuating external currents or Poisson inputs with rates matching the firing rates of the network, mimicking the effect of spike fluctuations [77, 78], and these studies suggest that mean-field scaling persists in the presence of fluctuations. It remains an open question, however, whether this is robust to adding structure to the synaptic connections, such as the structure present in the networks studied in this work.

That said, all the cases we have considered so far assume fixed synaptic connectivity. This is a reasonable assumption on timescales comparable to the millisecond scales of neural activity. However, on longer timescales these connections can be modified by synaptic plasticity, in which neural activity drives strengthening or weakening of synaptic connections. Including synaptic plasticity in the stochastic spiking model couples the neural dynamics with their synaptic tuning parameters. Ref. [79] investigated plasticity dynamics in a Sompolinsky-Crisanti-Sommers firing rate model using a dynamic mean-field approach, but this phenomenon has yet to be explored using RG scaling analyses. It is often hypothesized that synaptic plasticity and homeostasis will lead to self-organized criticality, with some simulation results supporting the possibility in simple models [80]. This would imply that the synaptic strengths and baseline potentials of the networks are self-regulated towards their critical values over time [81–83]. Understanding the impact of disorder and synaptic plasticity on critical properties is crucial for interpreting neural data in the context of criticality, and the approach presented here establishes an important first step toward analyzing critical phenomena in spiking network models with heterogeneous features.

Supplementary Material

FIG. 5. Mean-field versus anomalous scaling in Excitatory-Inhibitory networks of varying degree.

A. Simulated activity in an effective EI network with degree 4 random regular excitatory-excitatory connections, and B. its corresponding scaling collapse using mean-field exponents. The phase transition occurs at a value of that is less than the mean-field prediction , whereas in our other cases our RG analysis predicts that is larger than the mean-field prediction. C-D. Effective EI network with 20% degree 4 and 80% degree 3 excitatory-excitatory connections. The phase transition occurs at , less than the mean-field prediction . The data can be collapsed using mean-field exponents. E-F. Effective EI network with 10% degree 4 and 90% degree 3 excitatory-excitatory connections. The estimated critical coupling is , comparable to the mean-field prediction of . The data, however, collapse using the same anomalous exponents as the pure degree 3 effective EI network.

ACKNOWLEDGMENTS

The author thanks National Institute of Mental Health and National Institute for Neurological Disorders and Stroke grant UF-1NS115779-01 and Stony Brook University for financial support for this work, and Ari Pakman for feedback on an early version of this manuscript and anonymous referees for valuable feedback.

References

- [1] Kass R. E., Amari S.-I., Arai K., Brown E. N., Diekman C. O., Diesmann M., Doiron B., Eden U. T., Fairhall A. L., Fiddyment G. M., Fukai T., Grün S., Harrison M. T., Helias M., Nakahara H., Teramae J.-N., Thomas P. J., Reimers M., Rodu J., Rotstein H. G., Shea-Brown E., Shimazaki H., Shinomoto S., Yu B. M., and Kramer M. A. Computational neuroscience: Mathematical and statistical perspectives. Annual Review of Statistics and Its Application, 5(1):183–214, 2018.

- [2] Wandell Brian A. Foundations of Vision. Sinauer Associates, 1995.

- [3] Collins Christine E, Airey David C, Young Nicole A, Leitch Duncan B, and Kaas Jon H. Neuron densities vary across and within cortical areas in primates. Proceedings of the National Academy of Sciences, 107(36):15927–15932, 2010.

- [4] Rabinovich Mikhail I, Varona Pablo, Selverston Allen I, and Abarbanel Henry DI. Dynamical principles in neuroscience. Reviews of Modern Physics, 78(4):1213, 2006.

- [5] Wimmer Klaus, Nykamp Duane Q, Constantinidis Christos, and Compte Albert. Bump attractor dynamics in prefrontal cortex explains behavioral precision in spatial working memory. Nature Neuroscience, 17(3):431–439, 2014.

- [6] Kim Sung Soo, Rouault Hervé, Druckmann Shaul, and Jayaraman Vivek. Ring attractor dynamics in the Drosophila central brain. Science, 356(6340):849–853, 2017.

- [7] Beggs John M and Plenz Dietmar. Neuronal avalanches in neocortical circuits. Journal of Neuroscience, 23(35):11167–11177, 2003.

- [8] Friedman N., Ito S., Brinkman B. A. W., Shimono M., DeVille R. E. L., Dahmen K. A., Beggs J. M., and Butler T. C. Universal critical dynamics in high resolution neuronal avalanche data. Phys. Rev. Lett., 108:208102, May 2012.

- [9] Zhang Kechen. Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. Journal of Neuroscience, 16(6):2112–2126, 1996.

- [10] Laing Carlo R and Chow Carson C. Stationary bumps in networks of spiking neurons. Neural Computation, 13(7):1473–1494, 2001.

- [11] Ermentrout G Bard and Cowan Jack D. A mathematical theory of visual hallucination patterns. Biological Cybernetics, 34(3):137–150, 1979.

- [12] Bressloff Paul C. Metastable states and quasicycles in a stochastic Wilson-Cowan model of neuronal population dynamics. Physical Review E, 82(5):051903, 2010.

- [13] Butler Thomas Charles, Benayoun Marc, Wallace Edward, Drongelen Wim van, Goldenfeld Nigel, and Cowan Jack. Evolutionary constraints on visual cortex architecture from the dynamics of hallucinations. Proceedings of the National Academy of Sciences, 109(2):606–609, 2012.

- [14] Sompolinsky H., Crisanti A., and Sommers H. J. Chaos in random neural networks. Phys. Rev. Lett., 61:259–262, Jul 1988.

- [15] Dahmen David, Grün Sonja, Diesmann Markus, and Helias Moritz. Second type of criticality in the brain uncovers rich multiple-neuron dynamics. Proceedings of the National Academy of Sciences, 116(26):13051–13060, 2019.

- [16] Buice Michael A and Cowan Jack D. Field-theoretic approach to fluctuation effects in neural networks. Physical Review E, 75(5):051919, 2007.

- [17] Kadmon Jonathan and Sompolinsky Haim. Transition to chaos in random neuronal networks. Physical Review X, 5(4):041030, 2015.

- [18] Goldenfeld Nigel. Lectures on Phase Transitions and the Renormalization Group. Westview Press, 1992.

- [19] Tkačik Gašper, Mora Thierry, Marre Olivier, Amodei Dario, Palmer Stephanie E, Berry Michael J, and Bialek William. Thermodynamics and signatures of criticality in a network of neurons. Proceedings of the National Academy of Sciences, 112(37):11508–11513, 2015.

- [20] Ma Zhengyu, Turrigiano Gina G, Wessel Ralf, and Hengen Keith B. Cortical circuit dynamics are homeostatically tuned to criticality in vivo. Neuron, 104(4):655–664, 2019.

- [21] Xu Yifan, Schneider Aidan, Wessel Ralf, and Hengen Keith B. Sleep restores an optimal computational regime in cortical networks. Nature Neuroscience, 27(2):328–338, 2024.

- [22] Meshulam Leenoy, Gauthier Jeffrey L, Brody Carlos D, Tank David W, and Bialek William. Coarse graining, fixed points, and scaling in a large population of neurons. Physical Review Letters, 123(17):178103, 2019.

- [23] Cocchi Luca, Gollo Leonardo L, Zalesky Andrew, and Breakspear Michael. Criticality in the brain: A synthesis of neurobiology, models and cognition. Progress in Neurobiology, 158:132–152, 2017.

- [24] Shew Woodrow L, Yang Hongdian, Yu Shan, Roy Rajarshi, and Plenz Dietmar. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. Journal of Neuroscience, 31(1):55–63, 2011.

- [25] Beggs J. and Timme N. Being critical of criticality in the brain. Frontiers in Physiology, 3:163, 2012.

- [26] Shew W. L. and Plenz D. The functional benefits of criticality in the cortex. The Neuroscientist, 19(1):88–100, 2013. PMID: 22627091.

- [27] Yaghoubi Mohammad, Graaf Ty De, Orlandi Javier G, Girotto Fernando, Colicos Michael A, and Davidsen Jörn. Neuronal avalanche dynamics indicates different universality classes in neuronal cultures. Scientific Reports, 8(1):3417, 2018.

- [28] Fontenele Antonio J, Vasconcelos Nivaldo AP De, Feliciano Thaís, Aguiar Leandro AA, Soares-Cunha Carina, Coimbra Bárbara, Porta Leonardo Dalla, Ribeiro Sidarta, Rodrigues Ana João, Sousa Nuno, et al. Criticality between cortical states. Physical Review Letters, 122(20):208101, 2019.

- [29] Bradde Serena and Bialek William. PCA meets RG. Journal of Statistical Physics, 167(3):462–475, 2017.

- [30] Nicoletti Giorgio, Suweis Samir, and Maritan Amos. Scaling and criticality in a phenomenological renormalization group. Physical Review Research, 2(2):023144, 2020.

- [31] Ponce-Alvarez Adrián, Kringelbach Morten L, and Deco Gustavo. Critical scaling of whole-brain resting-state dynamics. Communications Biology, 6(1):627, 2023.

- [32] Sooter J Samuel, Fontenele Antonio J, Ly Cheng, Barreiro Andrea K, and Shew Woodrow L. Cortex deviates from criticality during action and deep sleep: a temporal renormalization group approach. bioRxiv, pages 2024–05, 2024.

- [33] Stapmanns Jonas, Kühn Tobias, Dahmen David, Luu Thomas, Honerkamp Carsten, and Helias Moritz. Self-consistent formulations for stochastic nonlinear neuronal dynamics. Physical Review E, 101(4):042124, 2020.

- [34] Tiberi Lorenzo, Stapmanns Jonas, Kühn Tobias, Luu Thomas, Dahmen David, and Helias Moritz. Gell-Mann–Low criticality in neural networks. Physical Review Letters, 128(16):168301, 2022.

- [35] Song Sen, Sjöström Per Jesper, Reigl Markus, Nelson Sacha, and Chklovskii Dmitri B. Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biology, 3(3):e68, 2005.

- [36] Villegas Pablo, Gili Tommaso, Caldarelli Guido, and Gabrielli Andrea. Laplacian renormalization group for heterogeneous networks. Nature Physics, 19(3):445–450, 2023.

- [37] van Vreeswijk C. and Sompolinsky H. Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science, 274(5293):1724–1726, 1996.

- [38] Martí Daniel, Brunel Nicolas, and Ostojic Srdjan. Correlations between synapses in pairs of neurons slow down dynamics in randomly connected neural networks. Physical Review E, 97(6):062314, 2018.

- [39] Schuecker Jannis, Goedeke Sven, and Helias Moritz. Optimal sequence memory in driven random networks. Physical Review X, 8(4):041029, 2018.

- [40] Amari Shun-ichi. Dynamics of pattern formation in lateral-inhibition type neural fields. Biological Cybernetics, 27(2):77–87, 1977.

- [41] There is an edge case that a reader might wonder about, , for which and the leading-order nonlinearity is cubic rather than quadratic. This represents a tricritical point of the system, at which the mean-field exponents of the in vitro model match those of the in vivo model. In our simulations, which use the value of , we observe the regular critical point because fluctuations induce a quadratic term in the effective nonlinearity. The tricritical point, if it exists, requires a fine-tuned value of that we do not attempt to determine in this work.

- [42].Kadanoff Leo P. Scaling laws for ising models near t c. Physics Physique Fizika, 2(6):263, 1966. [Google Scholar]

- [43].Wilson Kenneth G. Renormalization group and critical phenomena. i. renormalization group and the kadanoff scaling picture. Physical review B, 4(9):3174, 1971. [Google Scholar]

- [44].Wilson Kenneth G. The renormalization group: Critical phenomena and the Kondo problem. Reviews of Modern Physics, 47(4):773, 1975.

- [45].Wilson Kenneth G. The renormalization group and critical phenomena. Reviews of Modern Physics, 55(3):583, 1983.

- [46].Kline Adam G and Palmer Stephanie E. Gaussian information bottleneck and the non-perturbative renormalization group. New Journal of Physics, 24(3):033007, 2022.

- [47].Wen Tao and Cheong Kang Hao. The fractal dimension of complex networks: A review. Information Fusion, 73:87–102, 2021.

- [48].Tuncer Aslı and Erzan Ayşe. Spectral renormalization group for the Gaussian model and ψ^4 theory on nonspatial networks. Physical Review E, 92(2):022106, 2015.

- [49].Millán Ana P, Gori Giacomo, Battiston Federico, Enss Tilman, and Defenu Nicolò. Complex networks with tuneable spectral dimension as a universality playground. Physical Review Research, 3(2):023015, 2021.

- [50].Bighin Giacomo, Enss Tilman, and Defenu Nicolò. Universal scaling in real dimension. Nature Communications, 15(1):4207, 2024.

- [51].Janssen Hans-Karl and Täuber Uwe C. The field theory approach to percolation processes. Annals of Physics, 315(1):147–192, 2005.

- [52].Wang Junfeng, Zhou Zongzheng, Liu Qingquan, Garoni Timothy M, and Deng Youjin. High-precision Monte Carlo study of directed percolation in (d+1) dimensions. Physical Review E, 88(4):042102, 2013.

- [53].Kazmin Stanislav and Janke Wolfhard. Critical exponents of the Ising model in three dimensions with long-range power-law correlated site disorder: A Monte Carlo study. Physical Review B, 105(21):214111, 2022.

- [54].Liu Zihua, Vatansever Erol, Barkema Gerard T, and Fytas Nikolaos G. Critical dynamical behavior of the Ising model. Physical Review E, 108(3):034118, 2023.

- [55].Adzhemyan L Ts, Evdokimov DA, Hnatič M, Ivanova EV, Kompaniets MV, Kudlis A, and Zakharov DV. The dynamic critical exponent z for 2d and 3d Ising models from five-loop expansion. Physics Letters A, 425:127870, 2022.

- [56].Seeman Stephanie C, Campagnola Luke, Davoudian Pasha A, Hoggarth Alex, Hage Travis A, Bosma-Moody Alice, Baker Christopher A, Lee Jung Hoon, Mihalas Stefan, Teeter Corinne, et al. Sparse recurrent excitatory connectivity in the microcircuit of the adult mouse and human cortex. eLife, 7:e37349, 2018.

- [57].Hofer Sonja B, Ko Ho, Pichler Bruno, Vogelstein Joshua, Ros Hana, Zeng Hongkui, Lein Ed, Lesica Nicholas A, and Mrsic-Flogel Thomas D. Differential connectivity and response dynamics of excitatory and inhibitory neurons in visual cortex. Nature Neuroscience, 14(8):1045–1052, 2011.

- [58].McKay Brendan D. The expected eigenvalue distribution of a large regular graph. Linear Algebra and its Applications, 40:203–216, 1981.

- [59].Zaccariello Roberto, Herrmann Hans J, Sarracino Alessandro, Zapperi Stefano, and Arcangelis Lucilla de. Inhibitory neurons and the asymmetric shape of neuronal avalanches. Physical Review E, 111(2):024133, 2025.

- [60].Fosdick Bailey K, Larremore Daniel B, Nishimura Joel, and Ugander Johan. Configuring random graph models with fixed degree sequences. SIAM Review, 60(2):315–355, 2018.

- [61].Nonnenmacher Marcel, Behrens Christian, Berens Philipp, Bethge Matthias, and Macke Jakob H. Signatures of criticality arise from random subsampling in simple population models. PLoS Computational Biology, 13(10):e1005718, 2017.

- [62].Levina Anna and Priesemann Viola. Subsampling scaling. Nature Communications, 8(1):1–9, 2017.

- [63].Castro Daniel M, Feliciano Thaís, Vasconcelos Nivaldo AP de, Soares-Cunha Carina, Coimbra Bárbara, Rodrigues Ana João, Carelli Pedro V, and Copelli Mauro. In and out of criticality? State-dependent scaling in the rat visual cortex. PRX Life, 2(2):023008, 2024.

- [64].Randeria Mohit, Sethna James P, and Palmer Richard G. Low-frequency relaxation in Ising spin-glasses. Physical Review Letters, 54(12):1321, 1985.

- [65].Bray AJ. Nature of the Griffiths phase. Physical Review Letters, 59(5):586, 1987.

- [66].Munoz Miguel A, Juhász Róbert, Castellano Claudio, and Ódor Géza. Griffiths phases on complex networks. Physical Review Letters, 105(12):128701, 2010.

- [67].Hinrichsen Haye and Howard Martin. A model for anomalous directed percolation. The European Physical Journal B, 7(4):635–643, 1999.

- [68].Hinrichsen Haye. On possible experimental realizations of directed percolation. Brazilian Journal of Physics, 30:69–82, 2000.

- [69].Hooyberghs Jef, Iglói Ferenc, and Vanderzande Carlo. Absorbing state phase transitions with quenched disorder. Physical Review E, 69(6):066140, 2004.

- [70].Vives Eduard and Planes Antoni. Avalanches in a fluctuationless first-order phase transition in a random-bond Ising model. Physical Review B, 50(6):3839, 1994.

- [71].Dahmen Karin and Sethna James P. Hysteresis, avalanches, and disorder-induced critical scaling: A renormalization-group approach. Physical Review B, 53(22):14872, 1996.

- [72].Tarjus Gilles and Tissier Matthieu. Nonperturbative functional renormalization group for random-field models: The way out of dimensional reduction. Physical Review Letters, 93(26):267008, 2004.

- [73].Tarjus Gilles and Tissier Matthieu. Nonperturbative functional renormalization group for random field models and related disordered systems. I. Effective average action formalism. Physical Review B, 78(2):024203, 2008.

- [74].Tissier Matthieu and Tarjus Gilles. Nonperturbative functional renormalization group for random field models and related disordered systems. II. Results for the random field O(N) model. Physical Review B, 78(2):024204, 2008.

- [75].Balog Ivan and Tarjus Gilles. Activated dynamic scaling in the random-field Ising model: A nonperturbative functional renormalization group approach. Physical Review B, 91(21):214201, 2015.

- [76].Balog Ivan, Tarjus Gilles, and Tissier Matthieu. Criticality of the random field Ising model in and out of equilibrium: A nonperturbative functional renormalization group description. Physical Review B, 97(9):094204, 2018.

- [77].Brunel Nicolas. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of Computational Neuroscience, 8:183–208, 2000.

- [78].Grytskyy Dmytro, Tetzlaff Tom, Diesmann Markus, and Helias Moritz. A unified view on weakly correlated recurrent networks. Frontiers in Computational Neuroscience, 7:131, 2013.

- [79].Clark David G and Abbott LF. Theory of coupled neuronal-synaptic dynamics. Physical Review X, 14(2):021001, 2024.

- [80].Kessenich Laurens Michiels van, Arcangelis Lucilla De, and Herrmann Hans J. Synaptic plasticity and neuronal refractory time cause scaling behaviour of neuronal avalanches. Scientific Reports, 6(1):32071, 2016.

- [81].Vespignani Alessandro, Zapperi Stefano, and Pietronero Luciano. Renormalization approach to the self-organized critical behavior of sandpile models. Physical Review E, 51(3):1711, 1995.

- [82].Loreto Vittorio, Pietronero Luciano, Vespignani A, and Zapperi S. Renormalization group approach to the critical behavior of the forest-fire model. Physical Review Letters, 75(3):465, 1995.

- [83].Loreto Vittorio, Pietronero Luciano, Vespignani A, and Zapperi S. Loreto et al. reply. Physical Review Letters, 78(7):1393, 1997.

- [84].Gerstner Wulfram, Kistler Werner M, Naud Richard, and Paninski Liam. Neuronal dynamics: From single neurons to networks and models of cognition. Cambridge University Press, 2014.

- [85].We neglect the self-coupling term of the inhibitory neurons, which in the reduced model would just shift the denominator of the population average .

- [86].Canet L., Delamotte B., Deloubrière O., and Wschebor N. Nonperturbative renormalization-group study of reaction-diffusion processes. Physical Review Letters, 92:195703, 2004.

- [87].Canet Léonie, Chaté Hugues, Delamotte Bertrand, Dornic Ivan, and Munoz Miguel A. Nonperturbative fixed point in a nonequilibrium phase transition. Physical Review Letters, 95(10):100601, 2005.

- [88].Machado T. and Dupuis N. From local to critical fluctuations in lattice models: A nonperturbative renormalization-group approach. Physical Review E, 82:041128, 2010.

- [89].Canet L., Chaté H., and Delamotte B. General framework of the non-perturbative renormalization group for non-equilibrium steady states. Journal of Physics A: Mathematical and Theoretical, 44(49):495001, 2011.

- [90].Rançon A. and Dupuis N. Nonperturbative renormalization group approach to strongly correlated lattice bosons. Physical Review B, 84:174513, 2011.

- [91].Winkler A. A. and Frey E. Long-range and many-body effects in coagulation processes. Physical Review E, 87:022136, 2013.

- [92].Homrighausen Ingo, Winkler Anton A., and Frey Erwin. Fluctuation effects in the pair-annihilation process with Lévy dynamics. Physical Review E, 88:012111, 2013.

- [93].Kloss T., Canet L., Delamotte B., and Wschebor N. Kardar-Parisi-Zhang equation with spatially correlated noise: A unified picture from nonperturbative renormalization group. Physical Review E, 89:022108, 2014.

- [94].Canet Léonie, Delamotte Bertrand, and Wschebor Nicolás. Fully developed isotropic turbulence: Symmetries and exact identities. Physical Review E, 91(5):053004, 2015.

- [95].Jakubczyk P. and Eberlein A. Thermodynamics of the two-dimensional XY model from functional renormalization. Physical Review E, 93:062145, 2016.

- [96].Duclut C. and Delamotte B. Nonuniversality in the erosion of tilted landscapes. Physical Review E, 96:012149, 2017.

- [97].Delamotte Bertrand. An introduction to the nonperturbative renormalization group. In Renormalization group and effective field theory approaches to many-body systems, pages 49–132. Springer, 2012.

- [98].Canet Léonie. Reaction–diffusion processes and non-perturbative renormalization group. Journal of Physics A: Mathematical and General, 39(25):7901, 2006.

- [99].Canet L. and Chaté H. A non-perturbative approach to critical dynamics. Journal of Physics A: Mathematical and Theoretical, 40(9):1937–1949, 2007.

- [100].Dupuis Nicolas, Canet L, Eichhorn Astrid, Metzner W, Pawlowski Jan M, Tissier M, and Wschebor N. The nonperturbative functional renormalization group and its applications. Physics Reports, 910:1–114, 2021.

- [101].Ocker G. K., Josić K., Shea-Brown E., and Buice M. A. Linking structure and activity in nonlinear spiking networks. PLOS Computational Biology, 13(6):1–47, 2017.

- [102].Kordovan M. and Rotter S. Spike train cumulants for linear-nonlinear Poisson cascade models, 2020.

- [103].Brinkman Braden AW, Rieke Fred, Shea-Brown Eric, and Buice Michael A. Predicting how and when hidden neurons skew measured synaptic interactions. PLoS Computational Biology, 14(10):e1006490, 2018.

- [104].Ocker Gabriel Koch. Republished: Dynamics of stochastic integrate-and-fire networks. Physical Review X, 13(4):041047, 2023.

- [105].Wetterich C. Exact evolution equation for the effective potential. Physics Letters B, 301(1):90–94, 1993.

- [106].Tarpin Malo, Benitez Federico, Canet Léonie, and Wschebor Nicolás. Nonperturbative renormalization group for the diffusive epidemic process. Physical Review E, 96(2):022137, 2017.

- [107].Henkel Malte, Hinrichsen Haye, and Lübeck Sven. Universality classes different from directed percolation. Non-Equilibrium Phase Transitions: Volume I: Absorbing Phase Transitions, pages 197–259, 2008.

- [108].Although we can choose an initial nonlinearity for which vanishes, this property is not preserved by the flow of the effective nonlinearity , and in general . Because the dimensionless flow equation is only asymptotic for , we may use in place of to define this non-dimensionalization instead.

- [109].Had we not been careful to define z in terms of the difference between the membrane potential and its running set-point, our stability analysis would have found a WF fixed point that was unstable toward a complex-valued “spinodal” critical point that may describe critical metastable transitions.

- [110].There is also information about the initial conditions contained in the constant prefactors of the running scales, which we have suppressed in Eq. (C27).

- [111].Caillol Jean-Michel. The non-perturbative renormalization group in the ordered phase. Nuclear Physics B, 855(3):854–884, 2012.