Abstract

Determining the location of a video is crucial for law enforcement and national security. While outdoor videos can use landmarks or geolocation techniques, indoor videos present a greater challenge due to the lack of visible landmarks. Recently, researchers have explored using the electrical network frequency (ENF), naturally embedded in audio and video sources, for this purpose. Most studies have focused on extracting ENF from different electrical grids (inter-grid scenarios), where ENF from one grid differs significantly from another. However, recent research shows that ENF recordings from different locations within the same grid (intra-grid scenarios) have minor variations that can help estimate the video’s location. Previous work used ENF recordings directly from electrical mains. This paper proposes a technique to extract ENF from smartphone videos in intra-grid scenarios using an improved super-pixel approach. Unlike direct ENF extraction from electrical mains, extracting ENF from smartphone videos is challenging due to sensor noise and aliasing effects. Our improved super-pixel technique mitigates these issues. We validated our approach with real-world smartphone video recordings from various locations within the Australian Eastern grid. By comparing the extracted ENF signatures with ground truth ENF data from anchor locations provided by a power supplier, we demonstrated the ability to estimate the location of smartphone videos within the same electrical grid. To our knowledge, this is the first practical demonstration of location estimation for smartphone videos in an intra-grid scenario.

Subject terms: Engineering, Mathematics and computing

Introduction

The widespread use of multimedia content, especially images and videos, as a primary form of communication has led to a significant increase in its creation and distribution across the internet and social media platforms. Information in these media files about their location of origin is invaluable to law enforcement and national security agencies in their efforts to track and fight crimes such as domestic violence, human trafficking, child exploitation, and other illegal acts1–3. Multimedia geolocation involves identifying the real-world location where a multimedia file, such as an image or video, was captured. This task is gaining significance in digital forensics as the number of multimedia files involved in criminal investigations continues to grow3. Many digital cameras today, including most smartphone cameras, can embed GPS coordinates into the metadata of images or videos. However, these GPS coordinates and other metadata can easily be removed to conceal where the video was captured. For multimedia files captured outdoors, visible features such as street signs, landmarks, and natural terrain can indicate the capture location4.

For outdoor environments, researchers have employed computer vision techniques and big data methodologies to analyze visible patterns and landmarks in images/videos, matching them against extensive geospatial data to accurately determine the geographic location of the images/videos4,5. These technologies still face significant challenges when visible landmarks are absent, for example in outdoor images/videos without distinguishing features. In addition, metadata-based geolocation information is typically removed when multimedia content is shared through instant messaging and social media4. This makes geolocating, geotagging, or finding geographical hints in such content extremely challenging for investigators using these techniques. Furthermore, these techniques are ineffective for images/videos captured indoors, where accurate GPS metadata and diverse visual cues such as landmarks, street signs, and natural features are often unavailable6,7. Such scenarios are prevalent in global efforts to combat crimes like child exploitation and domestic violence, where a substantial share of abusive content is recorded indoors by criminals. The internet has erased national borders, complicating the task of tracking down these criminals. Developing new technologies to determine the locations of such images/videos, even roughly, would significantly enhance global investigations and support law enforcement in the relevant jurisdictions8.

In this study, we investigate the use of a virtually undetectable location-dependent signature, the Electric Network Frequency (ENF) signal, which is naturally embedded in videos, to identify the location of videos captured with a smartphone at the intra-grid level. The ENF is the frequency of the electrical power grid, which fluctuates around its nominal value of 60 Hz in North America and 50 Hz in Europe, Australia, and many other parts of the world9,10. These fluctuations stem from variations in the load on the power grid and are essentially random. An ENF signal is embedded in audio files generated by devices connected to mains power or located in surroundings where electromagnetic interference or acoustic mains hum is detectable9,11,12. In addition, the light intensity of artificial indoor lighting fluctuates at twice the supply frequency, producing a flicker that is barely perceptible in the illuminated surroundings. Consequently, videos recorded with a camera under indoor lighting capture the ENF signal13,14. A recent study has also revealed that an ENF signal can be embedded in an image taken with a rolling shutter camera8. These inherent characteristics make the ENF signal valuable for media forensic analysis9. By leveraging the distinctive ENF patterns present in multimedia recordings, forensic analysts can verify the authenticity of timestamps15,16, detect potential tampering or forgery17–19, identify the recording device20–22, and determine the recording location or grid of origin23,24.

In one of the earliest works, Garg et al.13 introduced the idea of using optical sensing and signal processing to extract ENF signals from digital video recordings. They demonstrated that videos recorded under artificial lighting could capture ENF-induced flickers, which could serve as natural timestamps for video recordings. This foundational work highlighted the potential of ENF as a reliable source for temporal analysis in multimedia files. Building on this, Vatansever et al.25 proposed a super-pixel-based approach to enhance ENF signal detection in videos. By applying super-pixel segmentation to localize regions with high ENF signal strength, they improved the robustness of ENF extraction from noisy environments. More recent efforts have addressed the challenges introduced by rolling shutter cameras, which are common in smartphones and other consumer devices. Choi et al.26 analyzed the impact of rolling shutters on ENF extraction and proposed techniques to mitigate distortions caused by the sequential exposure of pixels. Similarly, Han et al.27 introduced a phase-based approach for ENF extraction from rolling shutter videos, which focused on correcting phase distortions to enhance the accuracy of ENF estimation, especially in dynamic scenes where motion artifacts are prominent. Frijters and Geradts28 explored the application of ENF signals for time estimation in video material, emphasizing its use in forensic investigations. Karantaidis and Kotropoulos29 proposed an automated super-pixel-based approach for ENF estimation based on both static and non-static video recordings. Their method emphasized the importance of handling video dynamics, such as camera motion and scene changes, to ensure accurate ENF extraction even under challenging conditions.

The application of the ENF to determining the location of multimedia recordings, at both the inter-grid and intra-grid levels, is highly significant for recordings made indoors. For inter-grid localization, the unique ENF characteristics of distinct power grids are leveraged to identify a recording's broader geographical region or grid of origin. At this level, recordings made in different grids can be distinguished because ENF fluctuations typically differ across independently operated grids23. Previous studies have extracted unique and relevant features from the ENF signals in test recordings to train machine learning systems that classify ENF signals by grid of origin, particularly when a simultaneously recorded power reference signal is unavailable. For instance, Hajj-Ahmad et al.24 used statistical, linear-predictive, and wavelet-based features extracted from power and audio recordings made in 11 distinct grids across the world to train a multi-class Support Vector Machine (SVM). They achieved overall accuracies of 88.4% and 84.3% in identifying the grid of origin of ENF signals from power and audio recordings, respectively, across the 11 target grids. Šaric et al.30 employed the features used in Hajj-Ahmad et al.24, together with additional features related to the extrema, rising edges, and autocorrelation, to train five classifiers: SVM, K-nearest neighbors, linear perceptron, random forest, and neural networks. The features linked to the extrema and rising edges of the ENF signal improved performance by 3 to 19%. These techniques hold significant potential in security and forensic investigations, aiding in determining the source of ENF-containing files, especially those related to domestic violence, child exploitation and pornography, ransom demands, and terrorist attacks23.

Intra-grid localization, on the other hand, addresses the finer distinctions within a single power grid. Although ENF fluctuations captured simultaneously at different locations within the same grid are intrinsically similar, noticeable differences can still be identified. These differences stem from area-specific shifts in power consumption and from the time needed for load-related changes to propagate throughout the grid. Such disparities can also arise from system events such as power line transitions or generator disconnection23,31. For example, a localized change in load may affect the ENF only within its immediate area, whereas a major system change such as a generator disconnection affects the entire grid31. Garg et al.23 pioneered this area, demonstrating through experiments on power ENF data captured from the Eastern and Western US grids that differences exist between ENF signals recorded simultaneously at various locations within the same grid. They also observed a correlation between ENF signal details and geographical distance in both densely and sparsely populated grid areas. Based on this, they designed two trilateration-based methods to estimate recording locations within the grid. While the power signals used in their study provide the purest form of ENF, noisy video recordings require an ENF signal with a high signal-to-noise ratio (SNR) (above 20 dB) for accurate location tracking32. In addition, ENF estimation from videos captured with smartphone cameras suffers from aliasing because the cameras' temporal sampling rate is lower than the light flicker frequency, so the ENF appears at different frequencies from those in audio files. As a result, the ENF estimation and localization feature extraction approaches proposed in Garg et al.23 cannot reliably extract such high-quality ENF from video recordings.

In this paper, we present an improved super-pixel technique to deal with sensor and environmental noise in videos captured with smartphones. Smartphones, especially those using CMOS sensors with rolling shutters, present several challenges, including motion artifacts and variations in exposure time, which can introduce noise into the ENF extraction process26,33. Additionally, variable lighting conditions and the lower quality of smartphone cameras compared with professional recording equipment can further complicate accurate ENF estimation across sensor types. In our method, we implement a motion compensation technique to reduce distortions and improve consistency between consecutive frames before applying SLIC segmentation. Instead of applying SLIC only to the first frame, we dynamically update the super-pixels across frames to adapt to changing scene characteristics, which helps to better capture variations in scenes with moving objects. A temporal smoothing technique is then applied across frames to refine the intensity profile within each super-pixel, improving the quality of the ENF signal. ESPRIT and STFT are used for ENF estimation. The peak Normalized Cross-Correlation Coefficient (NCC) is used to measure the similarity between the estimated ENF and the reference ENF data, and triangulation based on the NCC values is used to narrow down the location of a video recording. Figure 1 shows an overview of the video location estimation workflow.

Fig. 1.

Overview of video location estimation process or workflow. (12 anchor stations in disk).
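The peak NCC comparison in this workflow can be illustrated with a minimal Python sketch. It assumes that the estimated and reference ENF series have already been brought to a common sampling rate (for example, one value per second, as in the reference data) and that the reference series covers the video's time span plus a search window. The function name `peak_ncc` and the exhaustive lag search are illustrative choices, not the authors' implementation.

```python
import numpy as np


def peak_ncc(estimated, reference, max_lag):
    """Return (peak NCC, lag) of `estimated` slid along `reference`.

    Both inputs are 1-D ENF series sampled at the same rate. At each lag the
    overlapping reference segment is compared with the estimate using the
    Pearson form of normalized cross-correlation (both segments z-scored).
    """
    x = np.asarray(estimated, dtype=float)
    y = np.asarray(reference, dtype=float)
    n = len(x)
    x = (x - x.mean()) / (x.std() + 1e-12)
    best_ncc, best_lag = -np.inf, 0
    for lag in range(max_lag + 1):
        seg = y[lag:lag + n]
        if len(seg) < n:  # ran past the end of the reference series
            break
        seg = (seg - seg.mean()) / (seg.std() + 1e-12)
        ncc = float(np.dot(x, seg) / n)
        if ncc > best_ncc:
            best_ncc, best_lag = ncc, lag
    return best_ncc, best_lag


# Hypothetical data: a 300 s estimate cut from a 600 s reference at offset 120 s.
rng = np.random.default_rng(0)
reference = 50 + 0.01 * np.cumsum(rng.standard_normal(600))
estimate = reference[120:420] + 0.001 * rng.standard_normal(300)
ncc, lag = peak_ncc(estimate, reference, max_lag=300)
print(ncc, lag)
```

Repeating this comparison against each anchor station's reference ENF yields one similarity value per anchor, which is the kind of input the localization step works with.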

This paper makes the following contributions:

The implementation of an improved super-pixel technique for ENF extraction from smartphone videos, addressing the noisy nature of recordings and improving the SNR of the extracted signal.

The application of triangulation for ENF-based location estimation at the intra-grid level. To the best of our knowledge, this work presents the first practical demonstration for the location estimation of smartphone videos within an intra-grid scenario.

The remainder of this paper is structured as follows. The “Theory and methods” section discusses the ENF propagation mechanism, the study area, the ENF estimation and extraction methods, and the evaluation metrics used in the experiments. The “Results” section presents the experimental results and analysis. The “Discussion” section discusses the proposed approach and its relevance to media forensics. The “Conclusion” section provides concluding remarks.

Theory and methods

This section presents details about our study area, the various experiments conducted, the ENF analysis techniques employed, and the evaluation metrics for the results.

ENF signal propagation and location-dependent characteristics

Within a grid, the ENF fluctuates primarily because of dynamic load variations. Power demand and supply in a specific area typically follow cyclic patterns. For instance, residential neighborhoods often see increased demand during the evening hours as households switch on appliances such as air conditioners and lighting. To maintain grid stability, a control mechanism manages changes in load34. When high power demand causes a temporary drop in the supply frequency, this mechanism detects the frequency deviation and compensates by drawing power from neighboring areas. Consequently, the load in the neighboring areas used for compensation also rises, which lowers their instantaneous supply frequency as well. This prompts an overall increase in power supply to counterbalance the escalating demand, while the instantaneous supply frequency drops across the regions in the meantime. The same control mechanism also handles excess power supply, which leads to frequency surges.

While minor load changes may affect the ENF only locally, significant load changes, such as those resulting from a generator failure, can affect the entire grid. Such changes propagate along the grid at speeds that depend on the complexity of the grid network. The experiment conducted by Garg et al.23 demonstrated that ENF signals from cities separated by greater geographical distances yielded lower correlation coefficients than ENF signals from cities closer to each other. They observed that the correlation coefficient varies roughly linearly with the distance separating the cities. Given three cities A, B, and C, and assuming a linear relationship between inter-city distance and correlation coefficient, the authors showed that the correlation values $\rho_{AB}$ and $\rho_{AC}$, together with the respective geographical distances $d_{AB}$ and $d_{AC}$, can be used to derive the distance $d_{BC}$ for a specific observation of $\rho_{BC}$. Using the linear relationship, they expressed an estimate $\hat{d}_{BC}(n)$ for a given $\rho_{BC}(n)$, where $n$ indexes the query segment, as:

$$\hat{d}_{BC}(n) = d_{AB} + \frac{d_{AC} - d_{AB}}{\rho_{AC} - \rho_{AB}}\left(\rho_{BC}(n) - \rho_{AB}\right) \tag{1}$$
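As a sketch of how Eq. (1) is applied, the Python snippet below maps observed correlations onto distances by linear interpolation through the two calibration pairs, and then computes the mean absolute error over query segments that is used as the evaluation measure. The function names and the numbers in the usage lines are hypothetical and serve only as an illustration.

```python
def estimate_distance(rho_ab, rho_ac, d_ab, d_ac, rho_bc):
    """Eq. (1): linearly interpolate the distance d_BC from an observed rho_BC.

    The straight line through (rho_AB, d_AB) and (rho_AC, d_AC) serves as the
    correlation-to-distance model.
    """
    if rho_ac == rho_ab:
        raise ValueError("calibration correlations must differ")
    slope = (d_ac - d_ab) / (rho_ac - rho_ab)
    return d_ab + slope * (rho_bc - rho_ab)


def mean_abs_error(estimates, true_distance):
    """Average |d_hat(n) - d_BC| over the query segments n."""
    return sum(abs(d - true_distance) for d in estimates) / len(estimates)


# Hypothetical calibration: rho_AB = 0.9 at 100 km, rho_AC = 0.7 at 300 km.
segment_rhos = [0.81, 0.79, 0.80]
estimates = [estimate_distance(0.9, 0.7, 100.0, 300.0, r) for r in segment_rhos]
print(estimates, mean_abs_error(estimates, 200.0))
```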

The average distance estimation error is then obtained as the mean absolute difference between $\hat{d}_{BC}(n)$ and the actual geographical distance $d_{BC}$ across the query segments.

The correlation coefficient between data from different locations may depend on the distance between them in a complex way because of factors such as grid topology and density, which affect the transmission of the ENF signal through the power lines. Recognizing that Eq. (1) does not suffice in such cases, the authors developed a localization protocol that estimates the unknown recording location without explicitly modeling the relationship between correlation coefficient and distance. Given a set $\mathcal{K}$ of anchor cities $K_1, K_2, \ldots, K_M$ with known locations $\mathbf{x}_{K_1}, \ldots, \mathbf{x}_{K_M}$ and ENF data, the unknown location of a city node A within the convex hull of $\mathbf{x}_{K_1}, \ldots, \mathbf{x}_{K_M}$, denoted $D$, can be estimated. Because the correlation coefficient decreases with distance, if the ENF of city A correlates less with anchor $K_i$ than with anchor $K_j$, the distance from $K_i$ to city A is taken to be greater than the distance from $K_j$ to city A, and city A is said to lie within the half-plane $\mathcal{H}_{ij}$ given by:

$$\mathcal{H}_{ij} = \begin{cases} \{\mathbf{x} \in D : \|\mathbf{x} - \mathbf{x}_{K_i}\| \ge \|\mathbf{x} - \mathbf{x}_{K_j}\|\}, & \rho_{AK_i} < \rho_{AK_j} \\ \{\mathbf{x} \in D : \|\mathbf{x} - \mathbf{x}_{K_i}\| \le \|\mathbf{x} - \mathbf{x}_{K_j}\|\}, & \rho_{AK_i} > \rho_{AK_j} \end{cases} \tag{2}$$

The conditions in Eq. (2) depend only on the sign of the difference between the correlation coefficients, and pairwise correlation coefficients are noisy. The sign is therefore unreliable when the correlation coefficients of the ENF signal from city A with anchors $K_i$ and $K_j$ are very similar, that is, $|\rho_{AK_i} - \rho_{AK_j}| < \epsilon$ for a small $\epsilon > 0$. To address such cases, the feasible set of Eq. (2) was relaxed by introducing a tolerance $\delta \ge 0$:

$$\mathcal{H}_{ij}^{\delta} = \begin{cases} \{\mathbf{x} \in D : \|\mathbf{x} - \mathbf{x}_{K_i}\| \ge \|\mathbf{x} - \mathbf{x}_{K_j}\| - \delta\}, & \rho_{AK_i} < \rho_{AK_j} \\ \{\mathbf{x} \in D : \|\mathbf{x} - \mathbf{x}_{K_i}\| \le \|\mathbf{x} - \mathbf{x}_{K_j}\| + \delta\}, & \rho_{AK_i} > \rho_{AK_j} \end{cases} \tag{3}$$

Using the correlation values obtained for each of the anchor nodes, the set of possible locations can be narrowed down by intersecting all feasible half-planes:

$$\hat{D}_A = \bigcap_{1 \le i < j \le M} \mathcal{H}_{ij}^{\delta} \tag{4}$$
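The half-plane intersection of Eqs. (2)–(4) can be approximated numerically by testing a grid of candidate points. The Python sketch below assumes planar anchor coordinates, uses the anchors' bounding box in place of the convex hull $D$, applies the tolerance $\delta$ only to anchor pairs whose correlations differ by less than $\epsilon$, and imposes no constraint when two correlations are exactly equal. These are simplifying choices for illustration rather than the exact protocol of Garg et al.

```python
import itertools
import math


def feasible(point, anchors, rho, eps=0.0, delta=0.0):
    """True if `point` lies in every (possibly relaxed) half-plane H_ij."""
    for i, j in itertools.combinations(range(len(anchors)), 2):
        d_i = math.dist(point, anchors[i])
        d_j = math.dist(point, anchors[j])
        tol = delta if abs(rho[i] - rho[j]) < eps else 0.0
        if rho[i] < rho[j] and d_i < d_j - tol:  # A should be nearer K_j
            return False
        if rho[i] > rho[j] and d_j < d_i - tol:  # A should be nearer K_i
            return False
    return True


def localize(anchors, rho, eps=0.0, delta=0.0, step=1.0):
    """Eq. (4): grid points of the anchors' bounding box inside all H_ij."""
    x0, x1 = min(a[0] for a in anchors), max(a[0] for a in anchors)
    y0, y1 = min(a[1] for a in anchors), max(a[1] for a in anchors)
    nx, ny = int((x1 - x0) / step), int((y1 - y0) / step)
    return [
        (x0 + ix * step, y0 + iy * step)
        for ix in range(nx + 1)
        for iy in range(ny + 1)
        if feasible((x0 + ix * step, y0 + iy * step), anchors, rho, eps, delta)
    ]


# Hypothetical anchors on a 10 x 10 square; correlation falls off with distance.
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
true_a = (2.0, 2.0)
rho = [1.0 - 0.01 * math.dist(true_a, k) for k in anchors]
region = localize(anchors, rho)
print(len(region), true_a in region)
```

A finer `step` trades run time for a sharper estimate of the feasible region; raising `eps` and `delta` widens the region to reflect less trustworthy correlation orderings.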

Study area

This study was conducted in southeast Queensland, Australia, where the nominal ENF is 50 Hz. This area is part of the Australian Eastern grid, the largest and most interconnected grid in the country, covering Queensland, New South Wales, Victoria, Tasmania, and South Australia35. Power generated from various sources is transported over vast distances through a sophisticated transmission network, as illustrated in Figure 2. Figure 3 depicts the study area, showing the anchor stations, numbered 1–12, and the recording points, numbered 13–17.

Fig. 2.

Transmission network of Australian eastern grid36.

Fig. 3.

Study area map (created with: ArcGIS Pro version 3.2.0. https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview).

We recorded 10-minute white wall videos and 5-minute videos of complex scenes and of scenes with movement at five locations (recording points): Petrie, Caboolture, Forest Lake, Sunshine Coast, and Gladstone. At each recording point, we captured one white wall video, one video of a complex scene, and one video with movement within a complex scene using each of four smartphone models (iPhone 6s, iPhone 16 Pro Max, Samsung Galaxy S22, and Oppo A5), giving a total of sixty videos. The smartphones were mounted on a tripod, with the back camera pointed either at a white wall or at the room under electric lighting, at various times of the day to ensure diverse conditions. Sample frames of the video recordings are shown in Figure 4. Recording a, the white wall video, provides an ideal scenario for assessing the feasibility of capturing and subsequently estimating ENF variations in a static, relatively noise-free environment. Recordings b, c, and d feature complex static scenes with objects of different colors and textures, captured in a classroom and a meeting room, including both the floor and the background wall. Recordings e and f present a challenging scenario, with a person moving continuously within a room. The movement occurred near the camera, affecting a large portion of the pixels in every frame.

Fig. 4.

Sample frames of video recordings.

To match and verify the quality of the ENF signals extracted from the videos, we obtained ENF data recorded from the power substations managed by Ergon Energy, covering regional southeast Queensland where the video recordings were conducted. These power substations are referred to as anchor stations in this study and are located in Atherton, Childers, Biloela, Dalby, Hughenden, Kingaroy, Toowoomba, Rockhampton, Maryborough, Gladstone, Innisfail, and Emerald. Ergon Energy monitors various power quality and consumption metrics, including ENF, in real time using Elspec G4420 power quality (PQ) analyzers deployed in its stations. These devices are equipped with Elspec’s proprietary compression algorithm, enabling real-time recording of high-resolution data compressed into PQZIP format. We obtained ENF data from twelve anchor stations, corresponding to the days and times of our recordings, in compressed PQZIP format. Using PQSCADA Sapphire software, we decompressed these files and extracted ENF readings matching the days and times of our recordings, storing the data in Excel format. The data was analyzed at a resolution of one sample per second to ensure a more accurate reference ENF signal.

ENF estimation

In videos captured under electric lighting, the ENF is embedded in the light intensity signal. Assuming that the signal is stationary over short time segments, the ENF component can be modeled as:

$$s(t) = A \cos\left(2\pi f_{\mathrm{ENF}}(t)\, t + \phi\right) \tag{5}$$

where $A$ and $\phi$ denote the signal magnitude and phase, respectively, and $f_{\mathrm{ENF}}(t)$ represents the fluctuating frequency of the ENF component. Recent studies have revealed that ENF characteristics can be detected in video recordings made under fluorescent or incandescent lighting because of variations in light intensity37.

Aliasing effect

Light intensity depends on the magnitude of the electric current rather than its sign, so it fluctuates at twice the nominal ENF (e.g., 100 Hz in Australia and 120 Hz in the United States). Because the temporal sampling rate of video cameras is far lower than the light flicker frequency, the ENF signal is heavily aliased and appears at different frequencies from those in recordings captured directly from the electrical mains. However, even though most consumer cameras cannot provide such high frame rates, the illumination frequency can still be estimated from the aliased frequencies29. Assuming that $f_s$ is the sampling frequency (frame rate) of the camera and $f_l$ is the illumination frequency of the light source, the aliased illumination frequency $f_a$ is expressed as29:

$$f_a = \left| f_l - k f_s \right| \tag{6}$$

where $k$ is the integer nearest to $f_l / f_s$, so that $0 \le f_a \le f_s/2$. For example, when a 100 Hz illumination signal from a light source is sampled by a camera with a frame rate of 29.97 Hz, the aliased base frequency of the ENF is 10.09 Hz ($k = 3$), while the aliased second harmonic (200 Hz) appears at 9.79 Hz ($k = 7$). Table 1 lists the aliased frequencies for various camera frame rates and power mains frequencies.

Table 1.

Aliased ENF frequencies in relation to power main frequencies and camera frame rates.

| Power mains (Hz) | Video frame rate (fps) | Aliased base frequency (Hz) | Aliased 2nd harmonic frequency (Hz) |
|---|---|---|---|
| 50 | 29.97 | 10.09 | 9.79 |
| 50 | 30 | 10 | 10 |
| 60 | 29.97 | 0.12 | 0.24 |
| 60 | 30 | 0 | 0 |
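Eq. (6) and Table 1 can be checked with a short Python sketch. It assumes that $k$ is chosen as the integer nearest to $f_l/f_s$, which folds the flicker frequency into the range $[0, f_s/2]$, that the light flickers at twice the mains frequency, and that the second harmonic of the flicker lies at four times the mains frequency.

```python
def aliased_frequency(f_light, f_s):
    """Eq. (6): fold f_light into [0, f_s/2] using the nearest multiple of f_s."""
    k = round(f_light / f_s)
    return abs(f_light - k * f_s)


for mains in (50, 60):
    for fps in (29.97, 30.0):
        base = aliased_frequency(2 * mains, fps)    # flicker at twice the mains frequency
        second = aliased_frequency(4 * mains, fps)  # second harmonic of the flicker
        print(f"{mains} Hz mains, {fps} fps: {base:.2f} Hz, {second:.2f} Hz")
```

The printed values match the rows of Table 1.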

In this study, the videos were captured using an iPhone 6s, iPhone 16 Pro Max, Samsung Galaxy S22, and Oppo A5, all of which employ a complementary metal-oxide-semiconductor (CMOS) sensor with a rolling shutter. Table 2 shows the camera resolutions and frame rates used for the recordings. Although a rolling shutter effectively allows a higher sampling rate, we chose to estimate the ENF using the aliased frequencies derived from the 50 Hz nominal ENF in Australia and the cameras' frame rates, as shown in Table 2. Our proposed ENF estimation method relies on super-pixel segmentation via the Simple Linear Iterative Clustering (SLIC) algorithm, which is effective for ENF estimation regardless of shutter type because it focuses on the intensity variations within each super-pixel, minimizing the effects of motion or temporal misalignment that may arise from the rolling shutter mechanism.

Table 2.

Phone specifications.

| Phone | Resolution | Frame rate (fps) | Aliased frequency (Hz) |
|---|---|---|---|
| iPhone 6s | 1920 × 1080 | 29.98 | 10.6 |
| iPhone 16 Pro Max | 1920 × 1080 | 30 | 10 |
| Samsung Galaxy S22 | 1920 × 1080 | 30.01 | 10.2 |
| Oppo A5 | 1920 × 1080 | 30 | 10 |

ENF extraction

The procedure for estimating the ENF from video recordings differs slightly from that for mains recordings because of differences in the pre-processing stage, which depends on whether the video is static or non-static. For static videos, which have been used in most video-based ENF experiments, contemporary methods compute the mean intensity of each frame, forming a one-dimensional time series from the sequence of two-dimensional (2D) images. For non-static videos, current approaches compute the mean intensity of relatively stationary areas within each frame. In both cases, a one-dimensional time series is generated, and the estimation process mirrors that used for mains recordings. This time series is treated as a raw signal that can be passed through a zero-phase bandpass filter around the frequencies where the ENF is present. The filtering step is crucial for accurate ENF estimation, and the bandpass filter edges must be set around the aliased base frequency determined by the nominal frame rate. In this study, we employed three methods to analyze our video recordings and estimate the ENF.
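A minimal Python sketch of this pre-processing for a static video is shown below. It assumes the frames are already decoded into a grayscale NumPy array of shape (T, H, W) and, for simplicity, implements the zero-phase bandpass as an ideal FFT mask rather than forward-backward filtering (e.g., `scipy.signal.filtfilt`); a real-valued frequency mask introduces no phase shift. The synthetic clip and the 9.5–10.5 Hz band edges around a 10 Hz aliased frequency are illustrative assumptions.

```python
import numpy as np


def frame_mean_series(frames):
    """Collapse a (T, H, W) grayscale video into one mean intensity per frame."""
    return np.asarray(frames, dtype=float).mean(axis=(1, 2))


def zero_phase_bandpass(x, fs, f_lo, f_hi):
    """Ideal bandpass via FFT masking; the real-valued mask adds no phase shift."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x - x.mean())
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < f_lo) | (freqs > f_hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


# Synthetic 10 s, 30 fps "white wall" clip: a 10 Hz aliased flicker,
# a slow 0.2 Hz brightness drift, and per-pixel sensor noise.
fs, T = 30.0, 300
t = np.arange(T) / fs
rng = np.random.default_rng(1)
flicker = 2.0 * np.sin(2 * np.pi * 10.0 * t)
clean = 100 + flicker + 5 * np.sin(2 * np.pi * 0.2 * t)
frames = clean[:, None, None] + 5 * rng.standard_normal((T, 8, 8))
filtered = zero_phase_bandpass(frame_mean_series(frames), fs, 9.5, 10.5)
print(np.corrcoef(filtered, flicker)[0, 1])
```

The ideal mask keeps the example dependency-light; with real recordings, a smoother filter response is usually preferred to limit ringing at the band edges.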

Proposed method

In this section, we propose an improved method that uses the Simple Linear Iterative Clustering (SLIC) algorithm for image segmentation to generate super-pixels, which are regions with similar characteristics within a frame38. Super-pixel techniques group pixels into larger, homogeneous regions that adhere to natural image boundaries, significantly reducing the complexity of image processing tasks38. Compared with processing individual pixels, working with super-pixels allows localized feature analysis, improves computational efficiency, and retains critical structural information. SLIC is a widely adopted super-pixel segmentation technique known for its simplicity and effectiveness. It clusters pixels in a five-dimensional space composed of spatial (x, y) and color (e.g., CIELAB) coordinates. By iteratively refining the clusters with a distance measure that balances spatial proximity and appearance similarity, the algorithm efficiently generates regions with consistent color and texture38. These properties make SLIC particularly suitable for tasks requiring precise region-based analysis, such as ENF estimation.
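To make the distance measure concrete, the sketch below implements the combined color-spatial distance of the original SLIC formulation, $D = \sqrt{d_c^2 + (d_s/S)^2 m^2}$, where $d_c$ is the CIELAB color distance, $d_s$ the pixel distance, $S = \sqrt{N/K}$ the grid interval for $N$ pixels and $K$ requested super-pixels, and $m$ the compactness weight. It shows only the pixel-to-cluster assignment criterion, not a full segmenter; in practice a library routine such as scikit-image's `skimage.segmentation.slic` would typically be used. The parameter values are illustrative.

```python
import math


def slic_distance(pixel, centre, grid_interval, compactness):
    """SLIC distance between a pixel and a cluster centre, both (l, a, b, x, y)."""
    d_c = math.dist(pixel[:3], centre[:3])  # color distance in CIELAB
    d_s = math.dist(pixel[3:], centre[3:])  # spatial distance in pixels
    return math.sqrt(d_c ** 2 + (d_s / grid_interval) ** 2 * compactness ** 2)


def grid_interval(num_pixels, num_superpixels):
    """S = sqrt(N / K): spacing of the initial cluster centres."""
    return math.sqrt(num_pixels / num_superpixels)


S = grid_interval(1920 * 1080, 400)  # illustrative: 400 super-pixels per HD frame
print(S, slic_distance((60, 5, 5, 100, 100), (55, 2, 1, 110, 100), S, 10))
```

A larger compactness $m$ weights spatial proximity more heavily and yields more regular super-pixels; a smaller $m$ lets regions follow color boundaries more closely.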

Our method uses SLIC to focus on regions with high luminance that are not obscured by shadows or dark areas, making it easier to detect light-source variations and estimate the ENF signal accurately. For the first frame $t = 1$, the SLIC algorithm is applied to $I(1)$ to generate $S$ regions (super-pixels) with similar characteristics. The mean intensity values $\mu_s(1)$, $s = 1, \ldots, S$, of all regions in the first frame are then calculated, and only those exceeding a predefined threshold $\tau$ are retained, which filters out super-pixels with low mean intensity. Denoting the number of regions whose mean intensity exceeds the threshold as $N(1) = \sum_{s=1}^{S} H\left(\mu_s(1) - \tau\right)$, the mean intensity value for the first frame is calculated as follows:

$$\mu(1) = \frac{1}{N(1)} \sum_{s=1}^{S} \mu_s(1)\, H\left(\mu_s(1) - \tau\right) \tag{7}$$

Here, $H(\cdot)$ represents the Heaviside step function, where $H(x) = 1$ if $x > 0$, and $H(x) = 0$ otherwise.
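Eq. (7) can be sketched in a few lines of NumPy. The sketch assumes that a super-pixel label map (one integer label per pixel) has already been produced, for example by a SLIC implementation such as scikit-image's `skimage.segmentation.slic`. The function name and the behavior when no region exceeds $\tau$ (returning NaN) are our own choices.

```python
import numpy as np


def thresholded_superpixel_mean(frame, labels, tau):
    """Eq. (7): average the super-pixel mean intensities that exceed tau.

    frame:  (H, W) grayscale intensities
    labels: (H, W) integer super-pixel labels for the same frame
    """
    frame = np.asarray(frame, dtype=float)
    labels = np.asarray(labels)
    region_means = np.array([frame[labels == s].mean() for s in np.unique(labels)])
    bright = region_means > tau  # Heaviside H(mu_s - tau)
    if not bright.any():
        return float("nan")  # N(1) = 0: no region passes the threshold
    return float(region_means[bright].mean())


# Toy 4 x 6 frame with three vertical "super-pixels": bright, bright, dark.
frame = np.hstack([np.full((4, 2), 150.0), np.full((4, 2), 250.0), np.full((4, 2), 20.0)])
labels = np.hstack([np.zeros((4, 2), int), np.ones((4, 2), int), np.full((4, 2), 2)])
print(thresholded_superpixel_mean(frame, labels, tau=100))
```

Each retained super-pixel contributes equally regardless of its size, which matches the $1/N(1)$ weighting in Eq. (7).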

We integrate a motion compensation step based on optical flow to account for object movement between frames39. This involves estimating motion vectors $\mathbf{v}_t(\mathbf{x})$, which give the spatial shift of each pixel $\mathbf{x}$ between frames $t-1$ and $t$, and applying compensation to align the super-pixels, reducing distortion and improving the consistency of ENF estimation. Here, frame $I(t)$ is adjusted for motion as:

$$\tilde{I}(t)(\mathbf{x}) = I(t)\left(\mathbf{x} + \mathbf{v}_t(\mathbf{x})\right) \tag{8}$$
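The warping step of Eq. (8) can be sketched as follows, assuming a dense flow field has already been estimated by an optical flow method (OpenCV's `cv2.calcOpticalFlowFarneback` is one commonly used option). For simplicity, the sketch uses nearest-neighbor sampling and clamps coordinates at the image border; bilinear interpolation would be the usual refinement.

```python
import numpy as np


def motion_compensate(frame, flow):
    """Eq. (8): resample frame t onto the pixel grid of frame t-1.

    flow[..., 0] and flow[..., 1] hold the per-pixel displacement (dx, dy)
    from frame t-1 to frame t; nearest-neighbor sampling, clamped at borders.
    """
    frame = np.asarray(frame, dtype=float)
    h, w = frame.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + flow[..., 1]), 0, h - 1).astype(int)
    return frame[src_y, src_x]


# Toy example: the scene shifts one pixel to the right between frames.
rng = np.random.default_rng(2)
prev = rng.uniform(0, 255, size=(6, 6))
cur = np.roll(prev, 1, axis=1)
flow = np.zeros((6, 6, 2))
flow[..., 0] = 1.0
aligned = motion_compensate(cur, flow)
print(np.allclose(aligned[:, :5], prev[:, :5]))
```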

Next, we make the super-pixel generation adaptive. Instead of applying SLIC only to the first frame as in Karantaidis and Kotropoulos29, we dynamically update the super-pixels across frames to adapt to changing scene characteristics. This adaptive approach better captures variations in frames with object movement and scene complexity. Reapplying the SLIC algorithm to each motion-compensated frame $\tilde{I}(t)$ to generate $S(t)$ super-pixels with updated mean intensity values $\mu_s(t)$, we compute the mean intensity $\mu(t)$ for each frame as:

$$\mu(t) = \frac{1}{N(t)} \sum_{s=1}^{S(t)} \mu_s(t)\, H\left(\mu_s(t) - \tau\right) \tag{9}$$

where $N(t)$ is the number of super-pixels in frame $t$ with mean intensity exceeding $\tau$.

We apply a moving average filter to the time series $\bar{I}(t)$ to reduce noise and outliers within each super-pixel while preserving the spatial details captured during segmentation. This leads to a cleaner signal for ENF estimation:

$$\tilde{I}(t) = \frac{1}{W} \sum_{w=0}^{W-1} \bar{I}(t - w) \tag{10}$$

where W is the number of frames used for smoothing.
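A minimal sketch of the moving-average smoothing, assuming a causal window (each output averages the current frame and the W-1 previous ones); `moving_average` is a hypothetical name. In NumPy this reduces to a convolution with a uniform kernel.

```python
import numpy as np


def moving_average(signal, window):
    """Causal W-point moving average: y(t) = (1/W) * sum_{w=0}^{W-1} x(t - w).

    Only outputs with a full window are returned, so the result has
    len(signal) - window + 1 samples.
    """
    x = np.asarray(signal, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(x, kernel, mode="valid")
```

One design consideration: a W-point moving average is itself a low-pass filter whose frequency response has nulls at integer multiples of f_s/W (other than multiples of f_s). W should therefore be chosen so that no null falls on the aliased ENF frequency; for example, assuming 30 frames per second, a 3-frame average has a null exactly at 10 Hz.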

Two techniques are employed for ENF extraction: the non-parametric Short-Time Fourier Transform (STFT) and the parametric Estimation of Signal Parameters via Rotational Invariance Techniques (ESPRIT). STFT assumes that the signal is stationary within each short-time segment and computes the Discrete-Time Fourier Transform of each segment using the formula40:

$$X_m(\omega) = \sum_{n=-\infty}^{\infty} x(n)\, w(n - mR)\, e^{-j\omega n} \tag{11}$$

Here, frames indexed by $n$ are referred to as samples, and $x(n)$ is the intensity signal. $w(n)$ is a window function of length $M$, $X_m(\omega)$ is the Fourier transform of the windowed data centered about time $mR$, and the hop size (the number of samples between consecutive time segments) is denoted as $R$. A balance between time and frequency resolution is achieved by selecting an appropriate window, and the periodogram of each segment, $P_m(k) = |X_m(\omega_k)|^2$, is computed, where $k$ ranges from 0 to $N_{\mathrm{FFT}} - 1$ with $\omega_k = 2\pi k / N_{\mathrm{FFT}}$. The frequency corresponding to the maximum periodogram value is extracted as an initial ENF estimate, and quadratic interpolation is used to refine it.
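The STFT tracking step (coarse periodogram peak, then quadratic interpolation) might look as follows. Assumptions not taken from the paper: a Hann window, the specific `win_len`, `hop` and `nfft` values, the search band limits, and interpolation on the log-periodogram, which is one common variant of quadratic peak interpolation. `stft_enf` is a hypothetical name.

```python
import numpy as np


def stft_enf(x, fs, win_len, hop, nfft, f_lo, f_hi):
    """Track the dominant frequency in [f_lo, f_hi] for each STFT segment.

    Returns one frequency estimate (Hz) per segment of length win_len,
    with consecutive segments hop samples apart.
    """
    x = np.asarray(x, dtype=float)
    window = np.hanning(win_len)
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)
    band = np.where((freqs >= f_lo) & (freqs <= f_hi))[0]
    estimates = []
    for start in range(0, len(x) - win_len + 1, hop):
        seg = x[start:start + win_len]
        seg = (seg - seg.mean()) * window            # remove DC, apply window
        p = np.abs(np.fft.rfft(seg, n=nfft)) ** 2    # periodogram of this segment
        k = band[np.argmax(p[band])]                 # coarse peak inside the band
        # Parabola through the log-periodogram at bins k-1, k, k+1;
        # delta is the fractional-bin offset of its vertex.
        if 0 < k < len(p) - 1:
            a, b, c = np.log(p[k - 1:k + 2] + 1e-20)
            denom = a - 2 * b + c
            delta = 0.5 * (a - c) / denom if denom != 0 else 0.0
        else:
            delta = 0.0
        estimates.append((k + delta) * fs / nfft)
    return np.array(estimates)
```

Zero-padding to `nfft` only samples the spectrum more finely; the actual frequency resolution is still governed by `win_len`.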

For the ESPRIT technique, let $\hat{\mathbf{R}}$ be the sample covariance matrix given by41:

$$\hat{\mathbf{R}} = \frac{1}{N} \sum_{n=m}^{N} \mathbf{x}(n)\, \mathbf{x}^H(n) \tag{12}$$

where $^H$ denotes conjugate (Hermitian) transposition and

$$\mathbf{x}(n) = \big[x(n),\, x(n-1),\, \dots,\, x(n-m+1)\big]^T \tag{13}$$

is a vector of $m$ lagged time-series samples. $N$ is the number of time steps, and $m$ is the embedding dimension (or the order of the model). Assuming $\hat{\mathbf{S}}$ represents the signal subspace spanned by the $p$ principal eigenvectors of $\hat{\mathbf{R}}$, the matrices $\hat{\mathbf{S}}_1$ and $\hat{\mathbf{S}}_2$ are formed by shifting the subspace matrix:

$$\hat{\mathbf{S}}_1 = \big[\mathbf{I}_{m-1} \;\; \mathbf{0}\big]\, \hat{\mathbf{S}}, \qquad \hat{\mathbf{S}}_2 = \big[\mathbf{0} \;\; \mathbf{I}_{m-1}\big]\, \hat{\mathbf{S}} \tag{14}$$

where $\mathbf{I}_{m-1}$ is the identity matrix of size $(m-1) \times (m-1)$, $\mathbf{0}$ is an $(m-1) \times 1$ zero vector, and the shifts ensure that the subspace matrices correspond to the lagged signals. ESPRIT estimates the angular frequency $\omega_k$ for $k = 1, \dots, p$ as $\hat{\omega}_k = -\arg(\hat{\nu}_k)$, where $\hat{\nu}_k$ are the eigenvalues of the estimated matrix $\hat{\boldsymbol{\Phi}}$39:

$$\hat{\boldsymbol{\Phi}} = \big(\hat{\mathbf{S}}_1^H \hat{\mathbf{S}}_1\big)^{-1} \hat{\mathbf{S}}_1^H \hat{\mathbf{S}}_2 \tag{15}$$

The frequency $f_k$ in Hz is then given by:

$$f_k = \frac{\hat{\omega}_k}{2\pi}\, f_s \tag{16}$$

where $f_s$ is the sampling frequency (the video frame rate), and the $f_k$ nearest to the aliased base frequency is taken as the ENF estimate. Figure 5 shows the schematic of the proposed method. The proposed method is versatile, as it combines video segmentation (via SLIC) with signal processing techniques (STFT and ESPRIT) to estimate ENF from white wall, static complex scene, and dynamic complex scene video recordings.

Fig. 5.

Schematic diagram showing ENF extraction process from smartphone videos using improved super-pixel method.
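The ESPRIT steps (sample covariance, signal subspace, shift invariance, eigenvalue angles) can be condensed into a short NumPy sketch. It assumes a single dominant sinusoid, so `p = 2` (a real tone contributes one complex exponential at +f and one at -f); the embedding dimension `m` and both function names are illustrative choices. `np.linalg.lstsq` solves the least-squares problem whose closed form is $(\hat{\mathbf{S}}_1^H \hat{\mathbf{S}}_1)^{-1} \hat{\mathbf{S}}_1^H \hat{\mathbf{S}}_2$, which is numerically safer than forming the inverse explicitly.

```python
import numpy as np


def esprit_frequencies(x, fs, m, p):
    """ESPRIT frequency estimates in Hz.

    x  : 1-D signal (e.g. the smoothed frame-intensity series)
    fs : sampling rate in Hz (the video frame rate)
    m  : embedding dimension (length of each lagged vector)
    p  : signal-subspace dimension; a real sinusoid needs p = 2
    """
    x = np.asarray(x)
    n_samples = len(x)
    lags = np.arange(m)
    # Columns are lagged vectors [x(n), x(n-1), ..., x(n-m+1)]^T.
    X = np.column_stack([x[n - lags] for n in range(m - 1, n_samples)])
    R = X @ X.conj().T / n_samples                 # sample covariance matrix
    _, eigvecs = np.linalg.eigh(R)                 # eigenvalues in ascending order
    S = eigvecs[:, -p:]                            # p principal eigenvectors
    S1, S2 = S[:-1, :], S[1:, :]                   # drop last row / drop first row
    Phi = np.linalg.lstsq(S1, S2, rcond=None)[0]   # least-squares solution S1 @ Phi = S2
    nu = np.linalg.eigvals(Phi)
    omega = -np.angle(nu)                          # omega_k = -arg(nu_k)
    return omega * fs / (2 * np.pi)


def pick_enf(freqs_hz, f_alias):
    """Keep the estimate whose magnitude is closest to the expected aliased ENF."""
    f = np.abs(freqs_hz)
    return float(f[np.argmin(np.abs(f - f_alias))])
```

For a noise-free 10.05 Hz tone sampled at 30 frames per second, `esprit_frequencies` returns a pair close to +10.05 Hz and -10.05 Hz, and `pick_enf(..., 10.0)` keeps 10.05 Hz.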

Baseline methods

To evaluate the performance of the proposed method, we compare it against two baseline methods: those proposed in Karantaidis and Kotropoulos29 and in Frijters and Geradts28. The method in Frijters and Geradts28 was used only for white wall videos. In their method, the gray values of the individual frames in the video are computed to extract the light intensity signal, where each pixel's gray value represents the light intensity at that pixel. The intensity signal is then passed through a high-pass filter to eliminate the direct current (DC) component. The Fast Fourier Transform (FFT) is then applied to the signal, producing a power spectrum that shows all frequencies present in the signal. A band-pass filter is subsequently applied to remove all frequencies outside the defined passband (9.8 Hz–10.2 Hz). Finally, the STFT is applied to the band-pass filtered signal to extract the ENF signal. The STFT estimates the dominant frequency over short time steps, yielding a frequency trace that varies around the specified frequency component, just as the ENF varies around its nominal 50 Hz. Figure 6 shows the schematic of the method in Frijters and Geradts28.

Fig. 6.

Schematic diagram showing ENF extraction process from smartphone videos using the method in Frijters and Geradts28.
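The passband around 10 Hz follows from aliasing: light from mains-powered lamps flickers at twice the grid frequency (100 Hz on a 50 Hz grid), and sampling at the video frame rate folds this flicker into the band [0, f_s/2]. The helper below is a hypothetical illustration; the frame rates it is applied to are assumptions, not values stated in this section.

```python
def aliased_frequency(f_signal: float, fs: float) -> float:
    """Apparent frequency (Hz) of a tone at f_signal after sampling at fs.

    Frequencies fold into [0, fs/2]: take the remainder modulo fs,
    then reflect anything above the Nyquist frequency back below it.
    """
    r = f_signal % fs
    return min(r, fs - r)
```

Assuming a 30 fps camera, `aliased_frequency(100.0, 30.0)` returns 10.0, consistent with a 9.8 Hz–10.2 Hz passband. At 25 fps the 100 Hz flicker folds to 0 Hz, so under purely frame-level sampling it would merge with the DC component.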

In this study, the proposed method and the two baseline methods were implemented in MATLAB and used to analyze the videos.

Evaluation indices

Normalized cross-correlation coefficient

After analyzing the videos, we compare the frequency variations extracted from the videos with reference ENF data recorded simultaneously at twelve anchor substations. We employ the normalized cross-correlation coefficient (NCC) for this comparison, as it evaluates the similarity of signal patterns independently of their amplitudes and baseline shifts. The NCC of the extracted ENF signal $x$ and the reference signal $y$ is evaluated as:

$$\rho(x, y) = \frac{\sum_{i=1}^{N} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{N} (x_i - \bar{x})^2}\, \sqrt{\sum_{i=1}^{N} (y_i - \bar{y})^2}} \tag{17}$$

where $N$ is the total number of samples, $\bar{x}$ is the mean of the extracted ENF signal $x$, and $\bar{y}$ is the mean of the reference signal $y$. A high correlation value indicates that the ENF signal extracted from the video closely follows the reference ENF, i.e., that the extracted signal is of high quality.
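The NCC formula translates directly into a few lines of NumPy. The sketch below (hypothetical function name `ncc`) also shows why the coefficient ignores amplitude and baseline: scaling a signal by a positive factor and adding an offset leaves the value unchanged.

```python
import numpy as np


def ncc(x, y):
    """Normalized cross-correlation coefficient of two equal-length signals."""
    # Remove each signal's mean (baseline), then normalize by the signal energies.
    x = np.asarray(x, dtype=float) - np.mean(x)
    y = np.asarray(y, dtype=float) - np.mean(y)
    return float(np.sum(x * y) / np.sqrt(np.sum(x ** 2) * np.sum(y ** 2)))
```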

In this study, we used reference ENF signals longer than the videos to compute the peak NCC with the ENF signal extracted from each video. Specifically, we used a one-hour reference signal for the five-minute videos and a two-hour reference signal for the ten-minute videos. Because the reference covers a wide temporal range, the extracted ENF can be compared with the reference at many different time lags, so the peak NCC reflects the highest correlation between the two signals even when their time alignment is unknown or shifted. This makes the evaluation of the similarity between the video ENF and the reference signal more robust.
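The lag search described above can be sketched as a brute-force sliding NCC. The sketch assumes that the video ENF and the reference ENF are sampled at the same rate (for example one estimate per second) and evaluates every integer lag; `peak_ncc` is a hypothetical name.

```python
import numpy as np


def peak_ncc(video_enf, reference_enf):
    """Slide the short video ENF along a longer reference.

    Returns (best NCC, lag in samples at which it occurs).
    """
    v = np.asarray(video_enf, dtype=float)
    r = np.asarray(reference_enf, dtype=float)
    n = len(v)
    v0 = v - v.mean()
    best, best_lag = -np.inf, -1
    for lag in range(len(r) - n + 1):
        seg = r[lag:lag + n]
        seg0 = seg - seg.mean()
        denom = np.sqrt(np.sum(v0 ** 2) * np.sum(seg0 ** 2))
        if denom == 0:
            continue  # flat reference window: NCC undefined
        rho = np.sum(v0 * seg0) / denom
        if rho > best:
            best, best_lag = rho, lag
    return float(best), best_lag
```

Under the one-estimate-per-second assumption, a five-minute video (300 samples) against a one-hour reference (3,600 samples) requires 3,301 candidate lags; an FFT-based correlation would be faster for much longer references.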

Triangulation

Triangulation is a method used to determine the location of an object based on the geometric properties of triangles and mathematical formulations42. It involves two techniques: lateration and angulation. Lateration determines the position of an object by measuring its distance from multiple reference points; in a two-dimensional coordinate system, distances to at least three non-collinear reference points must be measured, as shown in Fig. 7a. Various techniques are used to measure distances in lateration systems, including direct measurement, time-of-arrival, and attenuation. Angulation, on the other hand, uses angles to determine the position of an object; in a two-dimensional coordinate system, it uses two angles and the distance between the reference points, as shown in Fig. 7b42.

Fig. 7.

Lateration and angulation techniques of triangulation.
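To make the lateration principle of Fig. 7a concrete, the sketch below recovers a 2-D position from distances to three or more non-collinear reference points by linearizing the circle equations and solving them in the least-squares sense. This is a generic textbook construction, not the procedure used in this study (which ranks substations by NCC rather than measuring distances); `laterate_2d` is a hypothetical name.

```python
import numpy as np


def laterate_2d(anchors, distances):
    """Least-squares 2-D position from distances to >= 3 non-collinear anchors.

    Subtracting the first circle equation (x - x0)^2 + (y - y0)^2 = d0^2 from
    each of the others removes the quadratic terms, leaving A @ [x, y] = b.
    """
    P = np.asarray(anchors, dtype=float)
    d = np.asarray(distances, dtype=float)
    x0, y0 = P[0]
    A = 2 * (P[1:] - P[0])
    b = (d[0] ** 2 - d[1:] ** 2) + np.sum(P[1:] ** 2, axis=1) - (x0 ** 2 + y0 ** 2)
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution
```

With more than three anchors, the least-squares solve averages out inconsistencies between noisy distance measurements.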

Results

The initial analysis involved static white wall videos recorded at five different locations, which provide an ideal scenario for accurate ENF estimation because the scene is static. For each video, we applied the proposed method and the baseline method proposed in Frijters and Geradts28 to extract the ENF signal. The ENF extracted from each video was then compared with the reference ENF data from each of the twelve anchor substations, and the corresponding NCC values were calculated. Table 3 shows the NCC values obtained from iPhone 6S videos using the proposed super-pixel method and the method in Frijters and Geradts28. As Table 3 shows, the proposed method outperforms the method in Frijters and Geradts28; the same holds for the videos recorded with the iPhone 16 Pro Max, Samsung Galaxy S22, and Oppo A5, whose results are given in the supplementary information (Supplementary Table S1). Subsequently, ENF signals were extracted from video recordings containing complex scenes and movement using the proposed super-pixel method and the baseline method proposed in Karantaidis and Kotropoulos29. The corresponding NCC values are displayed in Tables 4 and 5 for videos with static complex scenes and dynamic complex scenes, respectively. Again, the tables show that the proposed method outperforms the method in Karantaidis and Kotropoulos29 for all videos recorded with all the phones. Note that the method in Frijters and Geradts28 was also applied to the static complex and dynamic complex scene videos, but it failed to extract the ENF from them.

Table 3.

Correlation coefficient between the ENF of white wall videos captured with iPhone 6S and the reference ENFs.

| Substation site | Gladstone (Proposed method) | Gladstone (Frijters and Geradts28) | Forest Lake (Proposed method) | Forest Lake (Frijters and Geradts28) | Caboolture (Proposed method) | Caboolture (Frijters and Geradts28) | Petrie (Proposed method) | Petrie (Frijters and Geradts28) | Sunshine Coast (Proposed method) | Sunshine Coast (Frijters and Geradts28) |
|---|---|---|---|---|---|---|---|---|---|---|
| Atherton | 0.9108 | 0.8744 | 0.9655 | 0.9352 | 0.9144 | 0.8907 | 0.9731 | 0.9458 | 0.8592 | 0.8368 |
| Biloela | 0.9668 | 0.9414 | 0.9730 | 0.9419 | 0.9497 | 0.9269 | 0.9742 | 0.9473 | 0.9606 | 0.9302 |
| Childers | 0.9512 | 0.9308 | 0.9752 | 0.9430 | 0.9764 | 0.9683 | 0.9841 | 0.9596 | 0.9670 | 0.9377 |
| Dalby | 0.9372 | 0.9117 | 0.9783 | 0.9493 | 0.9784 | 0.9610 | 0.9858 | 0.9628 | 0.9645 | 0.9356 |
| Emerald | 0.9485 | 0.9265 | 0.9681 | 0.9367 | 0.9703 | 0.9489 | 0.9646 | 0.9336 | 0.9562 | 0.9159 |
| Gladstone | 0.9721 | 0.9471 | 0.9696 | 0.9389 | 0.9318 | 0.9058 | 0.9709 | 0.9441 | 0.9639 | 0.9348 |
| Hughenden | 0.9350 | 0.9080 | 0.9651 | 0.9348 | 0.9202 | 0.8983 | 0.9672 | 0.9404 | 0.9087 | 0.8870 |
| Innisfail | 0.9335 | 0.9058 | 0.9722 | 0.9408 | 0.9719 | 0.9507 | 0.9773 | 0.9502 | 0.8226 | 0.7897 |
| Kingaroy | 0.9473 | 0.9209 | 0.9801 | 0.9515 | 0.9737 | 0.9535 | 0.9892 | 0.9651 | 0.9692 | 0.9424 |
| Maryborough | 0.9214 | 0.9028 | 0.9742 | 0.9441 | 0.9917 | 0.9702 | 0.9781 | 0.9522 | 0.9617 | 0.9328 |
| Rockhampton | 0.9628 | 0.9393 | 0.9687 | 0.9381 | 0.9725 | 0.9522 | 0.9688 | 0.9427 | 0.9611 | 0.9305 |
| Toowoomba | 0.9461 | 0.9198 | 0.9774 | 0.9472 | 0.9775 | 0.9595 | 0.9835 | 0.9579 | 0.9628 | 0.9338 |

Table 4.

Correlation coefficient between the ENF extracted from static complex scene videos captured with iPhone 6S and the reference ENFs. (Karan. and Kotro.29—Karantaidis and Kotropoulos29).

| Substation site | Gladstone (Proposed method) | Gladstone (Karan. and Kotro.29) | Forest Lake (Proposed method) | Forest Lake (Karan. and Kotro.29) | Caboolture (Proposed method) | Caboolture (Karan. and Kotro.29) | Petrie (Proposed method) | Petrie (Karan. and Kotro.29) | Sunshine Coast (Proposed method) | Sunshine Coast (Karan. and Kotro.29) |
|---|---|---|---|---|---|---|---|---|---|---|
| Atherton | 0.8925 | 0.7971 | 0.9241 | 0.8722 | 0.8998 | 0.8737 | 0.9188 | 0.8833 | 0.8233 | 0.7994 |
| Biloela | 0.9306 | 0.8724 | 0.9419 | 0.8981 | 0.9158 | 0.8924 | 0.9235 | 0.8887 | 0.9267 | 0.8936 |
| Childers | 0.9227 | 0.8592 | 0.9428 | 0.9001 | 0.9231 | 0.9012 | 0.9310 | 0.9095 | 0.9342 | 0.9038 |
| Dalby | 0.9055 | 0.8485 | 0.9473 | 0.9108 | 0.9255 | 0.9065 | 0.9378 | 0.9104 | 0.9319 | 0.9001 |
| Emerald | 0.9191 | 0.8515 | 0.9388 | 0.8846 | 0.9195 | 0.8957 | 0.9144 | 0.8775 | 0.9234 | 0.8907 |
| Gladstone | 0.9334 | 0.8895 | 0.9404 | 0.8884 | 0.9132 | 0.8862 | 0.9226 | 0.8861 | 0.9308 | 0.8981 |
| Hughenden | 0.9015 | 0.8382 | 0.9233 | 0.8701 | 0.9071 | 0.8766 | 0.9152 | 0.8789 | 0.8825 | 0.8595 |
| Innisfail | 0.8987 | 0.8264 | 0.9415 | 0.8902 | 0.9152 | 0.8891 | 0.9248 | 0.8912 | 0.7897 | 0.7801 |
| Kingaroy | 0.9121 | 0.8537 | 0.9481 | 0.9192 | 0.9218 | 0.8981 | 0.9461 | 0.9142 | 0.9376 | 0.9077 |
| Maryborough | 0.8962 | 0.8196 | 0.9439 | 0.9054 | 0.9315 | 0.9104 | 0.9272 | 0.8951 | 0.9285 | 0.8956 |
| Rockhampton | 0.9281 | 0.8692 | 0.9397 | 0.8861 | 0.9208 | 0.8972 | 0.9171 | 0.8808 | 0.9271 | 0.8944 |
| Toowoomba | 0.9108 | 0.8504 | 0.9458 | 0.9085 | 0.9249 | 0.9044 | 0.9298 | 0.8987 | 0.9296 | 0.8973 |

Table 5.

Correlation coefficient between the ENF extracted from dynamic complex scene videos captured with iPhone 6S and the reference ENF. (Karan. and Kotro.29—Karantaidis and Kotropoulos29).

| Substation site | Gladstone (Proposed method) | Gladstone (Karan. and Kotro.29) | Forest Lake (Proposed method) | Forest Lake (Karan. and Kotro.29) | Caboolture (Proposed method) | Caboolture (Karan. and Kotro.29) | Petrie (Proposed method) | Petrie (Karan. and Kotro.29) | Sunshine Coast (Proposed method) | Sunshine Coast (Karan. and Kotro.29) |
|---|---|---|---|---|---|---|---|---|---|---|
| Atherton | 0.8771 | 0.7541 | 0.8816 | 0.7555 | 0.8487 | 0.7228 | 0.9018 | 0.7704 | 0.8071 | 0.7094 |
| Biloela | 0.9076 | 0.7815 | 0.9146 | 0.7819 | 0.8706 | 0.7506 | 0.9027 | 0.7719 | 0.9109 | 0.7847 |
| Childers | 0.9003 | 0.7753 | 0.9151 | 0.7836 | 0.8968 | 0.7737 | 0.9198 | 0.7905 | 0.9186 | 0.7838 |
| Dalby | 0.8894 | 0.7662 | 0.9190 | 0.7887 | 0.8982 | 0.7764 | 0.9212 | 0.7917 | 0.9164 | 0.7812 |
| Emerald | 0.8967 | 0.7725 | 0.9124 | 0.7776 | 0.8884 | 0.7639 | 0.8965 | 0.7614 | 0.9087 | 0.7826 |
| Gladstone | 0.9121 | 0.7878 | 0.9143 | 0.7807 | 0.8591 | 0.7433 | 0.9012 | 0.7696 | 0.9146 | 0.7794 |
| Hughenden | 0.8854 | 0.7628 | 0.8764 | 0.7496 | 0.8553 | 0.7291 | 0.8994 | 0.7658 | 0.8642 | 0.6445 |
| Innisfail | 0.8824 | 0.7605 | 0.9135 | 0.7801 | 0.8901 | 0.7658 | 0.9049 | 0.7749 | 0.7830 | 0.6544 |
| Kingaroy | 0.8920 | 0.7681 | 0.9196 | 0.7903 | 0.8927 | 0.7681 | 0.9237 | 0.7945 | 0.9218 | 0.7875 |
| Maryborough | 0.8788 | 0.7568 | 0.9160 | 0.7855 | 0.9102 | 0.7801 | 0.9072 | 0.7771 | 0.9129 | 0.7868 |
| Rockhampton | 0.9048 | 0.7794 | 0.9131 | 0.7792 | 0.8911 | 0.7672 | 0.9008 | 0.7682 | 0.9121 | 0.7851 |
| Toowoomba | 0.8902 | 0.7673 | 0.9172 | 0.7871 | 0.8980 | 0.7748 | 0.9125 | 0.7832 | 0.9132 | 0.7782 |

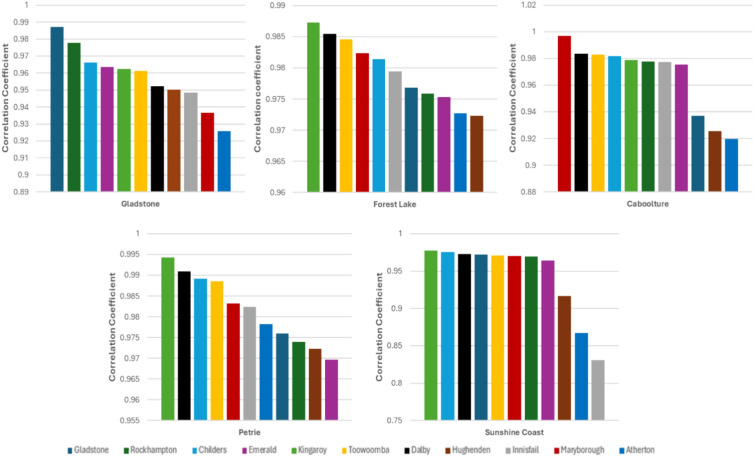

Figures 8 and 9 show the ENF signals extracted from static white wall videos recorded in Gladstone and Caboolture, matched against reference ENF signals from the Gladstone and Maryborough substations, respectively. Figure 10 compares the ENF signal extracted from the Gladstone white wall video with reference ENF data from three substations (Gladstone, Biloela, and Rockhampton). During our recordings, we considered two location (town) scenarios: one with an anchor substation present and another without any anchor substation nearby. In the first scenario, we recorded videos in Gladstone, which has an anchor substation. The ENF signal extracted from the Gladstone recordings showed the highest NCC values when compared with the ENF data from the Gladstone anchor substation, as can be seen in the result tables and in the bar charts presented in Figs. 11, 12, 13, and 14. Importantly, we observed that ENF signals extracted from videos tend to achieve peak NCC values with the substations geographically closest to the recording location, as hypothesized by Garg et al23. However, in the second scenario, with videos recorded in locations without an anchor substation, there are notable inconsistencies in the correlation values across different substations. For example, in Forest Lake, Caboolture, Petrie, and the Sunshine Coast, the best NCC values were often achieved among the three to five substations closest to the recording location, but the peak NCC was not always obtained with the geographically nearest substation. In some cases, a substation farther away achieved a higher NCC value than one geographically closer to the recording point.
For videos recorded in Forest Lake, Petrie, and Sunshine Coast, the peak NCC value was achieved with the reference data from the Kingaroy anchor station, while for videos recorded in Caboolture, it was achieved with the reference data from the Maryborough anchor station. In the result tables, the peak NCC value for each recording point is underlined and the top five NCC values for each recording point are highlighted in bold. The trend observed for the iPhone 6S videos also appears in the results for the other smartphones (iPhone 16 Pro Max, Samsung Galaxy S22, and Oppo A5) across all the methods, as can be seen in the sample bar charts presented in Figs. 11, 12, 13, and 14 and in Supplementary Table S1.

Fig. 8.

ENF signal from a white wall video recorded in Gladstone matched with reference ENF data obtained from the Gladstone substation (frequency signals are normalized around zero).

Fig. 9.

ENF signal from a white wall video recorded in Caboolture matched with reference ENF data obtained from the Maryborough substation (frequency signals are normalized around zero).

Fig. 10.

ENF signal from a white wall video recorded in Gladstone matched with reference ENF data obtained from the Gladstone, Biloela, and Rockhampton substations (frequency signals are normalized around zero).

Fig. 11.

Chart showing the correlations between the ENF extracted from white wall videos for five recording locations captured with iPhone 6S and reference ENF data from 12 anchor substations using the improved super-pixel method.

Fig. 12.

Chart showing the correlations between the ENF extracted from white wall videos for five recording locations captured with iPhone 16 Pro Max and reference ENF data from 11 anchor substations using the linear STFT method.

Fig. 13.

Chart showing the correlations between the ENF extracted from white wall videos for five recording locations captured with Samsung Galaxy S22 and reference ENF data from 11 anchor substations using the improved super-pixel method.

Fig. 14.

Chart showing the correlations between the ENF extracted from white wall videos for five recording locations captured with Oppo A5 and reference ENF data from 11 anchor substations using the improved super-pixel method.

The variability suggests that while geographic proximity is generally a strong predictor of higher NCCs, other factors beyond geographical distance such as grid topology, transmission line paths, and grid infrastructure play a role in the propagation of ENF signals. The variability in NCCs is likely due to differences in how ENF signals travel through the grid’s transmission lines, where road distance may not equal actual transmission distances, and multiple paths may exist for the signal to reach various substations. Additionally, environmental factors such as electromagnetic interference or local grid load variations could also play a role in the observed signal fluctuations.

Despite these inconsistencies, our results demonstrate the effectiveness of triangulating the substations with the top NCC values to narrow down the recording location. In Figs. 15a–c, we apply triangulation to the substations with the top five (pentagonal triangulation), top four (quadrilateral triangulation), and top three (triangular triangulation) NCC values. In each case, the recording location (Gladstone) falls within the triangulated area. This demonstrates that, for a video of unknown origin within the grid, even when the NCC alone cannot pinpoint the location because of signal complexities, combining NCC with triangulation can significantly narrow down the area where the video was recorded. As shown in Fig. 15d, applying triangulation to the Forest Lake video identifies the three closest substations (Kingaroy, Dalby, and Toowoomba) within the triangulated region. Similarly, for videos recorded in Caboolture (Fig. 15e), triangulating the anchor substations that achieved the best NCCs effectively localizes the recording area. The same approach produced consistent results for the recordings made in Petrie (Fig. 15f) and the Sunshine Coast, further validating the method’s capability to narrow down the recording location across varying environments within the grid.
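The text does not specify how the triangulated region is constructed. One possible reading, sketched below purely as an assumption, is to rank substations by NCC, take the top k, form the convex polygon spanned by their coordinates, and test whether a candidate location lies inside it. The NCC values are the Gladstone column (proposed method) of Table 3; the coordinates used for the geometric check are toy planar values rather than real substation positions, and all function names are hypothetical.

```python
def top_k_stations(ncc_by_station, k):
    """Names of the k substations with the highest NCC, best first."""
    return sorted(ncc_by_station, key=ncc_by_station.get, reverse=True)[:k]


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def inside_convex(hull, q):
    """True if q lies inside or on the boundary of a counter-clockwise convex polygon."""
    n = len(hull)
    return all(_cross(hull[i], hull[(i + 1) % n], q) >= 0 for i in range(n))


# NCC values for the Gladstone white wall video, proposed method (Table 3).
gladstone_video_ncc = {
    "Atherton": 0.9108, "Biloela": 0.9668, "Childers": 0.9512, "Dalby": 0.9372,
    "Emerald": 0.9485, "Gladstone": 0.9721, "Hughenden": 0.9350, "Innisfail": 0.9335,
    "Kingaroy": 0.9473, "Maryborough": 0.9214, "Rockhampton": 0.9628, "Toowoomba": 0.9461,
}
```

With these values, `top_k_stations(gladstone_video_ncc, 3)` selects Gladstone, Biloela, and Rockhampton; their actual coordinates would then be passed to `convex_hull` and the candidate location to `inside_convex`.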

Fig. 15.

Triangulation of the substations with the best NCC values for videos recorded in Gladstone (a–c), Forest Lake (d), Caboolture (e), and Petrie (f).

Discussion

Our study presents a simple and effective approach to narrowing down the location of a video file within a grid by analyzing the ENF signal extracted from the video footage. The proposed method could significantly improve the efficiency of locating video recordings, especially in large areas such as the Australian Eastern Grid, which spans over 4,600 km and is one of the largest grids in the world. While previous research mainly focused on identifying the grid of recording among several grids (inter-grid scenario) using ENF features extracted from power and audio recordings, this study shows that ENF signals extracted from noisier videos illuminated by artificial lights can be exploited to narrow down the location of recording within a specific grid (intra-grid scenario). Under ideal conditions, the results demonstrate that ENF signals extracted from videos can be correlated with reference ENF data from nearby substations, providing useful insights for verifying the location of a recording. However, as the inconsistencies in NCC values indicate, especially under non-ideal conditions, the method cannot guarantee precise location identification.

One of the key challenges in accurately interpreting the results arises from the complex nature of ENF signal propagation in electrical grids. While geographical proximity to a substation is a significant factor, our findings show that the peak NCC values do not always correlate perfectly with distance. This suggests that grid topology, specifically the structure and layout of the transmission lines, has a substantial influence on ENF signal behavior. Power lines often follow paths dictated by grid architecture rather than road distances, and signal propagation can be affected by impedance variations, transformer connections, and the specific configuration of transmission networks. These factors complicate the relationship between the ENF signal extracted from a video and the expected correlation with nearby substations. The inconsistencies in NCC values, particularly in regions without a nearby anchor substation, underscore the limitations of relying solely on geographical distance to interpret the correlation, which is why triangulation was incorporated in this study.

In some cases, we observed that the second or third closest substation achieved a higher NCC than the nearest one, suggesting that the signal’s propagation path may differ from the shortest geographical route. This variation could be attributed to the distribution of power loads, the complexity of the electrical grid in the area, and differences in transmission line lengths, which may not correspond to simple physical proximity.

While the approach is effective in narrowing down the location of video recordings, it should not be seen as a tool for pinpointing the exact location with high precision under all grid conditions. The method is sensitive to the complexities of signal propagation, which vary from one region to another based on the grid’s infrastructure. This limitation is particularly evident in cases where recordings were made in areas without anchor substations, where the triangulation of NCC values often captures a broader range of possible locations. To enhance the reliability of the method, future research will explore the impact of grid topology more thoroughly, focusing on regions with varying grid configurations. Expanding the range of experimental conditions such as including areas with complex transmission line networks or regions with multiple transformers would help refine the method’s accuracy and provide deeper insight into the interplay between ENF signal behavior and grid architecture. Additionally, incorporating data on the actual transmission paths could potentially lead to more robust predictions of NCC patterns.

The authors also note that the adaptive use of the SLIC segmentation algorithm adds computational cost, particularly for videos in which many frames must be processed. To address this, processing could be parallelized, or only key frames could be analyzed in certain scenarios to speed up video localization without significantly impacting accuracy.

Conclusion

In this study, we explored the potential of using ENF signals extracted from video recordings for intra-grid location estimation. We developed an improved super-pixel technique to mitigate the sensor and environmental noise prevalent in smartphone-recorded videos, achieving an ENF signal with a higher signal-to-noise ratio (SNR) for location analysis. By applying our method to various types of video recordings (static white wall videos, complex scene videos, and videos with movement), we evaluated its robustness and consistency.

Our findings show that the method holds great promise for narrowing down the possible location of a video recording within the grid. However, its accuracy varies depending on factors such as the proximity of anchor substations, grid topology, and the complexity of signal paths. Although our method successfully correlates ENF signals from videos with those from nearby substations, the observed variability in NCC values indicates that it is better suited for approximating the location within a region of the grid than for providing pinpoint accuracy. The inconsistencies in the correlation values, particularly in areas lacking nearby anchor substations, suggest that the method is influenced by the intricate nature of grid infrastructure and transmission lines, factors that require further investigation.

Future research will focus on expanding the range of experimental conditions, including different grid topologies and regions with varying levels of complexity in transmission networks. Additionally, incorporating more detailed grid data and improving the granularity of ENF databases could further enhance the method’s reliability and accuracy.

Supplementary Information

Acknowledgements

The authors gratefully acknowledge the support from Roly Jarrett and Ergon Energy Network (Part of Energy Queensland) for providing the reference data used in this research.

Author contributions

E.N., L.M.A., M.W. and K.P.S. conceived of the study and approach. E.N. developed the theory and performed the experiments. L.M.A. verified the numerical application and post-processing. E.N. led the writing of the manuscript, and L.M.A., M.W. and K.P.S. helped shape the research, analysis and manuscript.

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-025-89184-w.

References

- 1.“Child Exploitation Investigations Unit, U.S. Department of Homeland Security,” [Online]. Available: https://www.ice.gov/predator, Accessed May 2024.

- 2.“Child Protection from Violence, Exploitation and Abuse, UNICEF at United Nation,” [Online]. Available: https://www.unicef.org/protection/, Accessed May 2024.

- 3.Hemadri, R. V., Singh, A. & Singh, A. Children safety and rescue (censer) system for trafficked children from brothels in india. Proc. AAAI Conf. Artif. Intell.36(11), 11917–11925 (2022). [Google Scholar]

- 4.Bamigbade, O., Sheppard, J. & Scanlon, M. Computer Vision for Multimedia Geolocation in Human Trafficking Investigation: A Systematic Literature Review (2024). arXiv preprint arXiv:2402.15448.

- 5.“Finder (IARPA Research Program),” [Online]. Available: https://www.iarpa.gov/index.php/research-programs/finder, Accessed November 2016.

- 6.Friedland, G., Vinyals, O. & Darrell, T. Multimodal location estimation. In: Proceedings of the 18th ACM International Conference on Multimedia. MM ’10; New York, NY, USA: Association for Computing Machinery (2010), 1245–1252.

- 7.Yuan, Y. Geolocation of images taken indoors using convolutional neural network. Master’s thesis; Monash University, Melbourne, Australia (2022).

- 8.Wong, W., Hajj-Ahmad, A. & Wu, M. Invisible geo-location signature in a single image. In Proceedings of IEEE International Conference on Acoustics Speech Signal Processing (ICASSP), Apr. 2018, pp. 1987–1991.

- 9.Grigoras, C. Digital audio recording analysis: The electric network frequency (ENF) criterion. Int. J. Speech, Lang. Law12(1), 63–76 (2005).

- 10.Ngharamike, E., Ang, K. L. M., Seng, J. K. P. & Wang, M. ENF based digital multimedia forensics: Survey, application, challenges and future work. IEEE Access (2023).

- 11.Sanders, R. W. Digital audio authenticity using the electric network frequency. In Proceedings of Audio Engineering on Society Conference, 33rd Int. Conf., Audio Forensics-Theory Pract., Audio Eng. Soc., Denvor, CO, USA, Jun. 2008, pp. 5–7.

- 12.Brixen, E. B. Techniques for the authentication of digital audio recordings. In Audio Engineering Society Convention. New York, NY, USA: Audio Eng. Soc. (2007).

- 13.Garg, R., Varna, A. L. & Wu, M. Seeing’ ENF: Natural time stamp for digital video via optical sensing and signal processing. In Proceedings of 19th ACM International Conference on Multimedia, Scottsdale, AZ, USA, Nov. 2011, pp. 23–32.

- 14.Garg, R., Varna, A. L., Hajj-Ahmad, A. & Wu, M. ‘“Seeing” ENF: Power signature-based timestamp for digital multimedia via optical sensing and signal processing’. IEEE Trans. Inf. Forensics Secur.8(9), 1417–1432 (2013). [Google Scholar]

- 15.Ferrara, P., Sanchez, I., Draper-Gil, G., Junklewitz, H. & Beslay, L. A MUSIC spectrum combining approach for ENF-based video timestamping. In 2021 IEEE International Workshop on Biometrics and Forensics (IWBF) (pp. 1–6) (2021). IEEE.

- 16.Vatansever, S., Dirik, A. E. & Memon, N. ENF based robust media time-stamping. IEEE Signal Process. Lett. 29, 1963–2196 (2022).

- 17.Wang, Y., Hu, Y., Liew, A.W.-C. & Li, C.-T. ENF based video forgery detection algorithm. Int. J. Digit. Crime Forensics 12(1), 131–156 (2020).

- 18.Wang, Z.-F., Wang, J., Zeng, C.-Y., Min, Q.-S., Tian, Y. & Zuo, M.-Z. Digital audio tampering detection based on ENF consistency. In Proceedings of International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), pp. 209–214 (2018).

- 19.Korgialas, C., Kotropoulos, C. & Plataniotis, K. N. Leveraging Electric Network Frequency Estimation for Audio Authentication. IEEE Access (2024).

- 20.Hajj-Ahmad, A., Berkovich, A. & Wu, M. Exploiting power signatures for camera forensics. IEEE Signal Process. Lett. 23(5), 713–717 (2016).

- 21.Bykhovsky, D. Recording device identification by ENF harmonics power analysis. Forensic Sci. Int. 307, 110100 (2020).

- 22.Ngharamike, E., Ang, L.-M., Seng, K. P. & Wang, M. Exploiting the rolling shutter read-out time for ENF-based camera identification. Appl. Sci. 13(8), 5039 (2023).

- 23.Garg, R., Hajj-Ahmad, A. & Wu, M. Geo-location estimation from electrical network frequency signals. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, pages 2862–2866 (2013a). IEEE.

- 24.Hajj-Ahmad, A., Garg, R. & Wu, M. ENF-based region-of-recording identification for media signals. IEEE Trans. Inf. Forensics Secur. 10(6), 1125–1136 (2015).

- 25.Vatansever, S., Dirik, A. E. & Memon, N. Detecting the presence of ENF signal in digital videos: A super-pixel-based approach. IEEE Signal Process. Lett. 24(10), 1463–1467 (2017).

- 26.Choi, J., Wong, C. W., Su, H. & Wu, M. Analysis of ENF signal extraction from videos acquired by rolling shutters. IEEE Trans. Inf. Forens. Secur. 18, 4229–4242 (2023).

- 27.Han, H., Jeon, Y., Song, B. K. & Yoon, J. W. A phase-based approach for ENF signal extraction from rolling shutter videos. IEEE Signal Process. Lett. 29, 1724–1728 (2022).

- 28.Frijters, G. & Geradts, Z. J. Use of electric network frequency presence in video material for time estimation. J. Forens. Sci. 67(3), 1021–1032 (2022).

- 29.Karantaidis, G. & Kotropoulos, C. An automated approach for electric network frequency estimation in static and non-static digital video recordings. J. Imaging 7(10), 202 (2021).

- 30.Šaric, Ž., Žunic, A., Zrnic, T., Kneževic, M., Despotovic, D. & Delic, T. Improving location of recording classification using electric network frequency (ENF) analysis. In Proceedings of IEEE 14th International Symposium on Intelligent Systems and Informatics (SISY), Aug. 2016, pp. 51–56.

- 31.Elmesalawy, M. M. & Eissa, M. M. New forensic ENF reference database for media recording authentication based on harmony search technique using GIS and wide area frequency measurements. IEEE Trans. Inf. Forens. Secur. 9(4), 633–644 (2014).

- 32.Garg, R., Hajj-Ahmad, A. & Wu, M. Feasibility study on intra-grid location estimation using power ENF signals (2021). arXiv preprint arXiv:2105.00668.

- 33.Liang, C. K., Chang, L. W. & Chen, H. H. Analysis and compensation of rolling shutter effect. IEEE Trans. Image Process. 17(8), 1323–1330 (2008).

- 34.Bollen, M. & Gu, I. Signal Processing of Power Quality Disturbances, Wiley-IEEE Press (2006).

- 35.The Power Grid in Australia. Accessed: April 2024. [Online]. Available: https://www.energymatters.com.au/renewable-news/the-power-grid-in-australia/

- 36.National Electricity Market. Accessed: April 2024. [Online]. Available: https://www.aemc.gov.au/energy-system/electricity/electricity-system/NEM

- 37.Su, H., Hajj-Ahmad, A., Wong, C., Garg, R. & Wu, M. ENF signal induced by power grid: A new modality for video synchronization. In Proceedings of the 2nd ACM International Workshop on Immersive Media Experiences, Orlando, FL, USA, 3–7 November 2014, pp. 13–18.

- 38.Achanta, R. et al. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 34(11), 2274–2282 (2012).

- 39.Su, H., Hajj-Ahmad, A., Garg, R. & Wu, M. Exploiting rolling shutter for ENF signal extraction from video. In 2014 IEEE International Conference on Image Processing (ICIP) (pp. 5367–5371) (2014). IEEE.

- 40.Allen, J. B. & Rabiner, L. R. A unified approach to short-time Fourier analysis and synthesis. Proc. IEEE 65(11), 1558–1564 (1977).

- 41.Stoica, P. & Moses, R. L. Spectral analysis of signals (Vol. 452, pp. 25–26). Upper Saddle River, NJ: Pearson Prentice Hall (2005).

- 42.Gonçalo, G. & Helena, S. A novel approach to indoor location systems using propagation models in WSNs. Int. J. Adv. Netw. Serv. 2(4), 251–260 (2009).


Data Availability Statement

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.