Abstract

Objective.

Video-based pose estimation is an emerging technology that shows significant promise for improving clinical gait analysis by enabling quantitative movement analysis at little cost in money, time, or effort. The objective of this study is to determine the accuracy of pose estimation-based gait analysis when video recordings are constrained to 3 common clinical or in-home settings (ie, frontal and sagittal views of overground walking and sagittal views of treadmill walking).

Methods.

Simultaneous video and motion capture recordings were collected from 30 persons after stroke during overground and treadmill walking. Spatiotemporal and kinematic gait parameters were calculated from videos using an open-source human pose estimation algorithm and from motion capture data using traditional gait analysis. Repeated-measures analyses of variance were then used to assess the accuracy of the pose estimation-based gait analysis across the different settings, and the authors examined Pearson and intraclass correlations with ground-truth motion capture data.

Results.

Sagittal videos of overground and treadmill walking led to more accurate measurements of spatiotemporal gait parameters versus frontal videos of overground walking. Sagittal videos of overground walking resulted in the strongest correlations between video-based and motion capture measurements of lower extremity joint kinematics. Video-based measurements of hip and knee kinematics showed stronger correlations with motion capture versus ankle kinematics for both overground and treadmill walking.

Conclusion.

Video-based gait analysis using pose estimation provides accurate measurements of step length, step time, and hip and knee kinematics during overground and treadmill walking in persons after stroke. Generally, sagittal videos of overground gait provide the most accurate results.

Impact.

Many clinicians lack access to expensive gait analysis tools that can help identify patient-specific gait deviations and guide therapy decisions. These findings show that video-based methods that require only common household devices provide accurate measurements of a variety of gait parameters in persons after stroke and could make quantitative gait analysis significantly more accessible.

Keywords: Artificial Intelligence, Gait, Gait Disorders: Neurologic, Measurement: Applied, Movement, Rehabilitation, Stroke

Introduction

Gait dysfunction is common in many persons with neurologic conditions, including stroke. Persons after stroke exhibit heterogeneous patterns of gait deficits that often include decreased walking speed, shortened step lengths, increased gait asymmetry, and deviations in movement kinematics.1,2 As gait dysfunction after stroke is associated with increased fall risk, reduced independence, decreased quality of life, and long-term disability,3–5 persons after stroke commonly report gait improvement to be among their most important rehabilitation goals.6 However, personalized therapy after stroke depends on accurate assessment of patient-specific gait deficits.

Objective and quantitative clinical gait analysis is often difficult because traditional methods are inaccessible to most clinicians. Marker-based motion capture systems and electronic gait mats are considered gold standards for gait analysis, but these tools are prohibitively expensive and time consuming, can require technical expertise to use, and are unavailable in most clinics. Wearable devices improve accessibility, but these devices also require specific hardware and provide only limited information (ie, a predefined set of gait parameters). Consequently, current methods of gait analysis are insufficient for providing an accessible, comprehensive, objective analysis directly in the clinical setting. There is a clear need for new approaches that can provide a kinematic gait analysis with minimal costs of time, money, and effort.

Recent developments in computer vision have produced algorithms that track human movement from simple digital videos.7–14 These human pose estimation algorithms leverage artificial intelligence to identify and track anatomical landmarks from video recordings automatically. Virtual markers are assigned to these landmarks, providing estimation of the anatomical position (ie, “pose”) of the person. These technologies offer solutions to some of the shortcomings of traditional quantitative gait assessments, as pose estimation enables full-body movement tracking using only a video recorded by a common household device (eg, smartphone, tablet) in virtually any environment (eg, clinic, home).

Recent work from our group and others has leveraged pose estimation to provide quantitative, video-based overground gait analysis.15–20 These studies demonstrated that video-based gait analysis work flows show promise for accurate measurements when compared to ground-truth motion capture recordings. However, we do not yet understand how best to record the videos to optimize the analysis within common clinical constraints. As examples, we consider that rehabilitation clinicians may only have access to long, narrow hallways, and/or stationary treadmills for performing clinical gait analysis; this means that the clinician would likely be unable to record a sagittal video of overground walking (as has been investigated previously19) given the space constraints.

Our previous work considered only frontal and/or sagittal videos of overground gait. We observed differences in the levels of accuracy achieved from the different viewpoints, suggesting that the video recording methods are relevant to the accuracy of the assessment.21 We have not yet compared the accuracy achieved using treadmill-based assessments, which are often accessible in clinical settings and may show improved accuracy due to lesser influence of issues like parallax and changes in perspective since the patient is largely stationary within the field of view. There is a need for understanding how video-based gait analyses compare to ground-truth motion capture analyses in the different scenarios that could be available in clinical settings (eg, sagittal overground videos, frontal overground videos, treadmill videos).

The goal of this study was to compare the accuracy of video-based gait analysis during overground versus treadmill walking in persons after stroke. We determined accuracy by calculating the difference between the video-based measurements and ground-truth marker-based motion capture measurements. We hypothesized that video-based gait parameters measured during treadmill walking would be more accurate than those measured during overground walking and that video-based kinematics from more proximal joints (eg, hip and knee) would be measured more accurately than ankle kinematics.19,21 We aim to provide data that clinicians and researchers can use to optimize the ability to perform accurate, video-based gait analyses using freely available technologies.

Methods

Participants

Thirty persons with chronic stroke (21 men, 9 women; mean age = 63 [standard deviation (SD) = 9] years; height = 1.73 [SD = 0.11] m; body mass = 85.1 [SD = 19.2] kg) participated. Thirteen participants used assistive devices during overground walking (11 used a cane, 3 used an ankle-foot orthosis, and 1 used functional electrical stimulation of the plantarflexors; participants who used orthoses or functional electrical stimulation also used these devices during treadmill walking, and some participants used more than 1 device). The participants included here are a subset of those who were included in previous work21 and who performed both overground and treadmill walking trials. Unfortunately, clinical measures of gross motor impairment (eg, Fugl–Meyer Assessment of Motor Recovery after stroke) were not available for all participants; therefore, we do not provide these data but do provide each participant’s preferred overground and treadmill gait speeds along with demographic data (Tab. 1). All participants provided written informed consent prior to participation in accordance with the protocol approved by the Johns Hopkins University School of Medicine Institutional Review Board.

Table 1.

Participant Demographics and Gait Characteristics

| Participant | Age (y) | Sex | Overground Gait Speed (m/s) | Treadmill Speed (m/s) | Assistive Device |
|---|---|---|---|---|---|
| 1 | 51 | M | 0.83 | 0.90 | N |
| 2 | 65 | F | 0.88 | 1.22 | N |
| 3 | 64 | F | 0.69 | 0.38 | Y |
| 4 | 51 | M | 0.84 | 1.09 | Y |
| 5 | 60 | F | 0.98 | 1.14 | N |
| 6 | 83 | M | 0.75 | 0.83 | N |
| 7 | 53 | M | 0.55 | 0.59 | Y |
| 8 | 70 | M | 0.80 | 1.00 | Y |
| 9 | 60 | M | 0.81 | 0.96 | Y |
| 10 | 56 | M | 0.66 | 0.93 | N |
| 11 | 73 | M | 0.62 | 0.84 | Y |
| 12 | 63 | M | 0.76 | 0.92 | N |
| 13 | 54 | F | 0.74 | 0.74 | Y |
| 14 | 74 | F | 0.15 | 0.16 | Y |
| 15 | 67 | M | 0.24 | 0.28 | Y |
| 16 | 58 | M | 0.78 | 1.15 | Y |
| 17 | 69 | M | 0.63 | 0.90 | N |
| 18 | 52 | M | 0.51 | 0.91 | Y |
| 19 | 67 | M | 1.24 | 1.47 | N |
| 20 | 69 | M | 1.15 | 1.20 | N |
| 21 | 69 | M | 0.49 | 0.40 | Y |
| 22 | 54 | M | 0.89 | 1.14 | N |
| 23 | 69 | M | 0.66 | 0.85 | N |
| 24 | 68 | F | 0.67 | 0.80 | N |
| 25 | 68 | F | 0.84 | 0.99 | Y |
| 26 | 64 | F | 0.85 | 1.02 | N |
| 27 | 70 | M | 0.83 | 0.90 | N |
| 28 | 41 | F | 0.83 | 0.95 | N |
| 29 | 74 | M | 0.70 | 0.79 | N |
| 30 | 56 | M | 1.11 | 1.42 | N |

F = female; M = male; N = no; Y = yes.

Protocol and Data Collection

Participants attended 1 testing session. To measure each participant’s preferred gait speed, each participant first completed 3 overground 10-meter walk tests. We determined each participant’s preferred walking speed as the mean speed across the 3 tests. Each participant then performed 4 overground walking trials across a 4.8-meter walkway at their preferred walking speed with simultaneous 3-dimensional motion capture and video recordings (Fig. 1A). We used a 10-camera motion capture system (Vicon, Denver, CO, USA) to capture the positions of reflective markers as described previously.21 We used 2 tablets (Galaxy A7; Samsung Electronics, Suwon-si, South Korea) to record digital videos (resolution: 1920 × 1080 pixels) of all overground gait trials. One tablet faced parallel to the walkway (ie, frontal view of the participant), and the other faced perpendicular to the walkway (ie, sagittal view of the participant). The participant walked toward the frontal camera during 2 overground gait trials (left side facing the sagittal camera) and away from the frontal camera in the other 2 overground gait trials (right side facing the sagittal camera). Additional details about the video recordings can be found in prior work.21

Figure 1.

(A) Experimental setups for overground gait testing. (B) Experimental setup for treadmill testing. (C) Simplified schematic showing our pose estimation-based workflow for gait analysis. 3D = three-dimensional.

Each participant then also completed one 2-minute treadmill trial at their preferred walking speed (they were allowed to hold onto the handrails as needed). Because this speed was based on the overground 10-m walk tests performed prior to the experiment and the overground motion capture/video trials were performed on a shorter walkway, the treadmill speeds were often faster than the speeds observed in the overground gait trials (Tab. 1). Occasionally, we slowed the treadmill per participant request. During the treadmill trials, we used the same motion capture setup but recorded only 1 video (sagittal plane with the camera facing the participant’s right side) (Fig. 1B) because our video-based gait analysis using frontal videos requires movement toward or away from the camera, and movement in global space is approximately static during treadmill walking.

Data Processing and Analysis

We smoothed the motion capture marker trajectories using a 0-lag fourth-order low-pass Butterworth filter with a 7-Hz cutoff frequency. We detected left and right heel-strikes and toe-offs as the positive and negative peaks, respectively, of the anterior–posterior positions of the left or right lateral malleolus markers relative to the torso.
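The event-detection rule described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names are hypothetical, the filtering step is omitted (the input is assumed to be already smoothed), and peaks are found with a simple neighbor comparison rather than a tuned peak detector.

```python
import math

def find_peaks_simple(signal):
    """Return indices of local maxima, comparing each interior sample with its neighbors."""
    return [i for i in range(1, len(signal) - 1)
            if signal[i - 1] < signal[i] >= signal[i + 1]]

def detect_gait_events(ankle_ap, torso_ap):
    """Heel-strikes are positive peaks and toe-offs are negative peaks of the
    anterior-posterior ankle position relative to the torso (anterior = positive)."""
    relative = [a - t for a, t in zip(ankle_ap, torso_ap)]
    heel_strikes = find_peaks_simple(relative)
    toe_offs = find_peaks_simple([-r for r in relative])
    return heel_strikes, toe_offs

# Synthetic, hypothetical data: the ankle swings sinusoidally about a stationary torso,
# with one gait cycle every 40 frames.
ankle = [math.sin(2 * math.pi * frame / 40) for frame in range(120)]
torso = [0.0] * 120
heel_strikes, toe_offs = detect_gait_events(ankle, torso)
```

With real recordings, noise would produce spurious extrema, so a minimum peak spacing or prominence criterion would likely be needed in addition to the low-pass filter.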

We processed the video recordings using similar methodology to previous work.19,21 Briefly, we first applied OpenPose—an open-source human pose estimation algorithm7,8—to identify 25 anatomical keypoints using the BODY_25 keypoint model.7,8 We then used the frame-by-frame JSON file outputs containing 2-dimensional pixel coordinates of each keypoint to calculate the spatiotemporal and kinematic gait parameters using our custom MATLAB (The MathWorks, Inc, Natick, MA, USA) video-based gait analysis work flows (freely available at github.com/janstenum/GaitAnalysis-PoseEstimation). We illustrate a simplified version of our work flow in Figure 1C; extensive detail about our OpenPose and MATLAB work flows for analysis of frontal and sagittal recordings can be found in prior work.19,21
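As an illustration of the frame-by-frame outputs mentioned above, the following sketch reads one OpenPose JSON frame and extracts lower-limb keypoints. OpenPose stores each person's keypoints as a flat list of (x, y, confidence) triplets under `pose_keypoints_2d`; the helper name `read_keypoints` and the choice to take the first detected person are assumptions made for this example and are not taken from the authors' MATLAB workflow.

```python
import json

# BODY_25 indices of the keypoints most relevant to sagittal lower-limb analysis.
BODY_25 = {"MidHip": 8, "RHip": 9, "RKnee": 10, "RAnkle": 11,
           "LHip": 12, "LKnee": 13, "LAnkle": 14}

def read_keypoints(frame_json, person=0):
    """Return {name: (x, y, confidence)} for one person in one frame, or None if absent."""
    people = json.loads(frame_json)["people"]
    if len(people) <= person:
        return None
    flat = people[person]["pose_keypoints_2d"]  # 25 keypoints x 3 values
    return {name: tuple(flat[3 * i: 3 * i + 3]) for name, i in BODY_25.items()}

# Synthetic frame with placeholder values (not real pose data).
example_frame = json.dumps(
    {"version": 1.3, "people": [{"pose_keypoints_2d": [float(v) for v in range(75)]}]}
)
keypoints = read_keypoints(example_frame)
```

In practice, frames with several detected people or with low-confidence keypoints need additional handling (for example, person tracking and gap filling) before gait parameters are computed.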

We then calculated commonly reported spatiotemporal gait parameters (step length, step time, step length asymmetry, step time asymmetry; asymmetry parameters were calculated as [paretic − nonparetic]/[paretic + nonparetic]) using motion capture and video-based data as well as raw (motion capture minus video-based) and absolute (absolute value of motion capture minus video-based) errors for these parameters to provide information about bias and accuracy, respectively. Note that step length was calculated as the distance between the lateral malleoli markers at heel-strike for the motion capture and sagittal video-based data, but it was calculated as the distance traveled by the torso between consecutive heel-strikes for frontal video-based data.21
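The asymmetry and error definitions above translate directly into a few lines of code. The sketch below uses hypothetical function names and made-up step lengths; only the formulas come from the text.

```python
def asymmetry(paretic, nonparetic):
    """Asymmetry index: (paretic - nonparetic) / (paretic + nonparetic)."""
    return (paretic - nonparetic) / (paretic + nonparetic)

def raw_error(mocap, video):
    """Signed error (bias): motion capture minus video-based value."""
    return mocap - video

def absolute_error(mocap, video):
    """Unsigned error (accuracy): absolute value of motion capture minus video-based value."""
    return abs(mocap - video)

# Hypothetical step lengths in meters.
step_length_asymmetry = asymmetry(paretic=0.40, nonparetic=0.50)
bias = raw_error(mocap=0.50, video=0.53)
accuracy = absolute_error(mocap=0.50, video=0.53)
```

Under this sign convention, a negative raw error means that the video-based measurement is larger than the motion capture measurement.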

We also calculated lower extremity sagittal kinematics for the hip, knee, and ankle bilaterally from motion capture and sagittal video data on a cycle-by-cycle basis (bilateral kinematics were unavailable for 1 participant and nonparetic kinematics were unavailable for another participant due to technical errors). We then normalized all cycles in time and averaged the joint angles across all cycles for each participant to result in a single, normalized ensemble time series across each joint within each limb for each participant. From these ensemble time series data, we calculated cross-correlation coefficients (at 0 time lag) to measure accuracy between the individual participant mean sagittal joint angle profiles at the hip, knee, and ankle as measured by the video-based and motion capture analyses for the treadmill and sagittal overground walking trials. Finally, we calculated peak flexion and extension (or dorsiflexion/plantarflexion) at each joint for each limb in each participant given that peak sagittal joint angles are often of clinical interest.
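The ensemble-averaging and cross-correlation steps can be sketched as follows. This is an illustrative example under stated assumptions, not the authors' code: cycles are resampled to 101 points by linear interpolation, and the zero-lag cross-correlation coefficient is computed on mean-removed, normalized signals (which makes it equal to the Pearson correlation of the two profiles). The text does not specify either choice.

```python
import math

def time_normalize(cycle, n_points=101):
    """Linearly resample one gait cycle to n_points spanning 0-100% of the cycle."""
    last = len(cycle) - 1
    resampled = []
    for k in range(n_points):
        position = k * last / (n_points - 1)
        lower = int(math.floor(position))
        upper = min(lower + 1, last)
        fraction = position - lower
        resampled.append(cycle[lower] * (1 - fraction) + cycle[upper] * fraction)
    return resampled

def ensemble_mean(cycles, n_points=101):
    """Average time-normalized cycles point by point."""
    normalized = [time_normalize(c, n_points) for c in cycles]
    return [sum(values) / len(values) for values in zip(*normalized)]

def zero_lag_xcorr(a, b):
    """Mean-removed, normalized cross-correlation at zero lag."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    numerator = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    denominator = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                            * sum((y - mean_b) ** 2 for y in b))
    return numerator / denominator

def synthetic_cycle(n_frames):
    """Hypothetical knee-like flexion curve in degrees."""
    return [60.0 * math.sin(math.pi * t / (n_frames - 1)) ** 2 for t in range(n_frames)]

mocap_mean = ensemble_mean([synthetic_cycle(90), synthetic_cycle(110)])
video_mean = [0.9 * angle + 5.0 for angle in mocap_mean]  # scaled and offset copy
r = zero_lag_xcorr(video_mean, mocap_mean)
```

Because this coefficient is insensitive to scaling and constant offsets, it reflects agreement in the shape of the joint angle profile rather than agreement in magnitude, which is one reason to examine peak angles separately.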

Statistical Analysis

We performed Pearson correlation analyses and calculated the ICC(1, 1) to measure associations and reliability, respectively, between spatiotemporal gait parameters as measured by the video-based and motion capture analyses for the treadmill walking trials (we report these analyses for the overground gait data in prior work21). We interpreted ICC(1, 1) estimates to be excellent (>0.90), good (between 0.75 and 0.90), moderate (between 0.50 and 0.75), and poor (<0.50) on the basis of their CIs as established previously.22 We also performed 2 (limb: paretic, nonparetic) × 3 (method: treadmill, sagittal overground, frontal overground) repeated-measures analyses of variance (with treadmill and overground speeds included as covariates) on the raw and absolute errors to assess how each approach to video-based gait analysis affected the bias and accuracy, respectively, of the spatiotemporal parameters. To compare the accuracy of the video-based and motion capture measurements of sagittal joint angles at the hip, knee, and ankle across joints and limbs, we performed a 3 (joint: hip, knee, ankle) × 2 (method: treadmill, sagittal overground video) × 2 (limb: paretic, nonparetic) repeated-measures analysis of variance (again with treadmill and overground speeds included as covariates) on the cross-correlation coefficients of the kinematics. Finally, we performed Pearson correlation analyses and calculated ICCs to measure associations and reliability between peak joint angles at the hip, knee, and ankle as measured by the video-based and motion capture analyses for the treadmill walking trials (sagittal joint angles for the overground trials were reported previously21). We used JASP version 0.17.1.0 (University of Amsterdam, Amsterdam, NL) for all analyses, set α at ≤.05, and performed Bonferroni corrections for post hoc comparisons where appropriate.
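For readers unfamiliar with ICC(1, 1), the following sketch computes it from the standard one-way random-effects formula, (MSB − MSW) / (MSB + (k − 1) MSW), where MSB and MSW are the between-subject and within-subject mean squares and k is the number of measurements per subject (here 2: video-based and motion capture). The analyses in this study were run in JASP; the function names and example values below are hypothetical.

```python
def icc_1_1(ratings):
    """ICC(1, 1) for a list of subjects, each with k measurements of the same quantity."""
    n, k = len(ratings), len(ratings[0])
    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    # Between-subject and within-subject mean squares from a one-way ANOVA.
    msb = k * sum((m - grand_mean) ** 2 for m in row_means) / (n - 1)
    msw = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row) / (n * (k - 1))
    return (msb - msw) / (msb + (k - 1) * msw)

def interpret_icc(value):
    """Apply the thresholds stated in the text to a single value (for example, a CI bound)."""
    if value > 0.90:
        return "excellent"
    if value >= 0.75:
        return "good"
    if value >= 0.50:
        return "moderate"
    return "poor"

# Hypothetical data: each row is [video-based, motion capture] for one participant.
example = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
icc = icc_1_1(example)
label = interpret_icc(icc)
```

Because the text bases its interpretation on the confidence interval, `interpret_icc` would be applied to the lower and upper bounds of the interval rather than to the point estimate alone; computing that interval is outside the scope of this sketch.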

Role of the Funding Source

The funders played no role in the design, conduct, or reporting of this study.

Results

Video-Based Estimates of Spatiotemporal Gait Parameters Versus Motion Capture Measurements During Treadmill Walking

We first assessed how well the video-based analyses of treadmill walking could measure step length and step time with respect to motion capture (the overground gait data have been reported previously as part of a larger dataset21). We observed that the video-based measurements of step length were significantly correlated with the motion capture measurements when we analyzed all steps individually and at the participant level (ie, mean values for each participant) (Tab. 2). We observed similar findings for correlations between video-based and motion capture measurements of step time, step length asymmetry, and step time asymmetry when analyzed individually and at the participant level. ICCs indicated excellent reliability for all step length and step time analyses (Fig. 2A and B) and moderate to excellent reliability for step length asymmetry and step time asymmetry analyses (Fig. 3A and B). Full statistical results are shown in Table 2.

Table 2.

Relationships Between Video-Based and Motion Capture Measurements of Spatiotemporal Gait Parameters During Treadmill Walking in Persons After Stroke

| Parameter | Pearson r | P | ICC(1, 1) | 95% CI for ICC(1, 1) |
|---|---|---|---|---|
| Step length | | | | |
| Individual steps | | | | |
| All | 0.97 | <.0001 | 0.964 | 0.962 to 0.965 |
| Paretic | 0.97 | <.0001 | 0.962 | 0.959 to 0.965 |
| Nonparetic | 0.97 | <.0001 | 0.965 | 0.963 to 0.968 |
| Participant level | | | | |
| All | 0.98 | <.0001 | 0.979 | 0.966 to 0.988 |
| Paretic | 0.98 | <.0001 | 0.978 | 0.955 to 0.989 |
| Nonparetic | 0.98 | <.0001 | 0.981 | 0.961 to 0.991 |
| Step time | | | | |
| Individual steps | | | | |
| All | 0.98 | <.0001 | 0.977 | 0.976 to 0.978 |
| Paretic | 0.98 | <.0001 | 0.984 | 0.983 to 0.985 |
| Nonparetic | 0.96 | <.0001 | 0.955 | 0.952 to 0.958 |
| Participant level | | | | |
| All | 0.99 | <.0001 | 0.994 | 0.990 to 0.996 |
| Paretic | 1.00 | <.0001 | 0.996 | 0.993 to 0.998 |
| Nonparetic | 0.99 | <.0001 | 0.986 | 0.971 to 0.993 |
| Step length asymmetry | | | | |
| Individual steps | | | | |
| All | 0.77 | <.0001 | 0.745 | 0.729 to 0.761 |
| Participant level | | | | |
| All | 0.77 | <.0001 | 0.722 | 0.497 to 0.857 |
| Step time asymmetry | | | | |
| Individual steps | | | | |
| All | 0.86 | <.0001 | 0.851 | 0.841 to 0.861 |
| Participant level | | | | |
| All | 0.98 | <.0001 | 0.959 | 0.917 to 0.980 |
| Peak hip flexion | | | | |
| Participant level | | | | |
| All | 0.74 | <.001 | 0.675 | 0.508 to 0.794 |
| Paretic | 0.67 | <.001 | 0.571 | 0.269 to 0.772 |
| Nonparetic | 0.74 | <.001 | 0.682 | 0.429 to 0.836 |
| Peak hip extension | | | | |
| Participant level | | | | |
| All | 0.57 | <.001 | 0.354 | 0.109 to 0.559 |
| Paretic | 0.49 | <.01 | 0.205 | −0.164 to 0.526 |
| Nonparetic | 0.71 | <.001 | 0.543 | 0.231 to 0.755 |
| Peak knee flexion | | | | |
| Participant level | | | | |
| All | 0.94 | <.0001 | 0.928 | 0.882 to 0.957 |
| Paretic | 0.94 | <.0001 | 0.923 | 0.845 to 0.963 |
| Nonparetic | 0.77 | <.001 | 0.712 | 0.477 to 0.853 |
| Peak knee extension | | | | |
| Participant level | | | | |
| All | 0.74 | <.001 | 0.625 | 0.440 to 0.759 |
| Paretic | 0.66 | <.001 | 0.492 | 0.164 to 0.723 |
| Nonparetic | 0.78 | <.001 | 0.725 | 0.497 to 0.860 |
| Peak ankle dorsiflexion | | | | |
| Participant level | | | | |
| All | −0.10 | .48 | 0.000 | −0.255 to 0.256 |
| Paretic | −0.26 | .18 | 0.000 | −0.357 to 0.359 |
| Nonparetic | 0.03 | .87 | 0.048 | −0.314 to 0.400 |
| Peak ankle plantarflexion | | | | |
| Participant level | | | | |
| All | 0.17 | .21 | 0.159 | −0.100 to 0.399 |
| Paretic | 0.18 | .35 | 0.165 | −0.204 to 0.495 |
| Nonparetic | 0.18 | .35 | 0.173 | −0.196 to 0.501 |

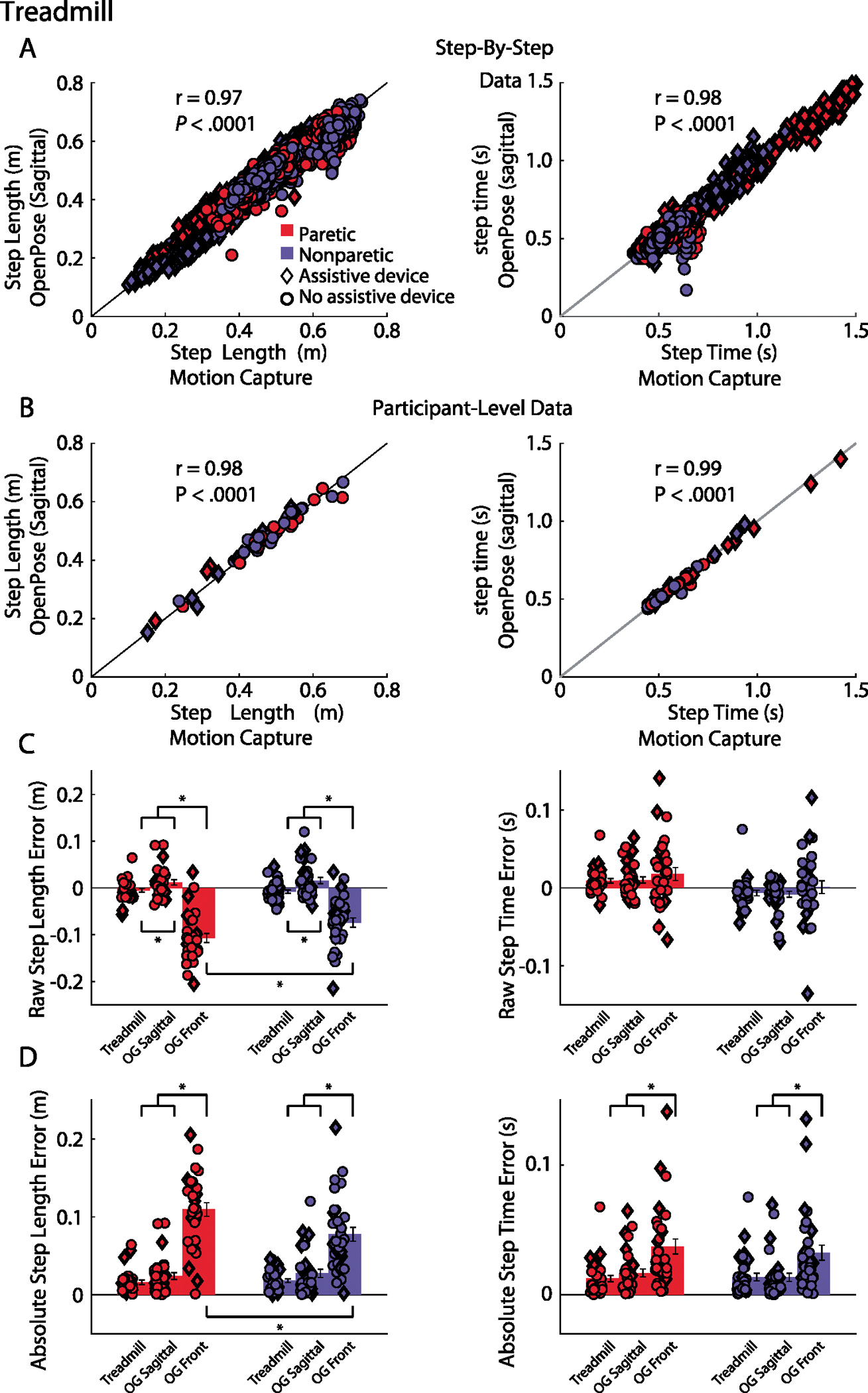

Figure 2.

(A) Relationships between video-based and motion capture measurements of step length (left) and step time (right) for all steps recorded. The parameters that correspond to the paretic leg are shown in blue, and those that correspond to the nonparetic leg are shown in red. The diamond shape indicates that the participant used an assistive device, and the circle indicates that the participant did not. (B) Relationships between video-based and motion capture measurements of step length (left) and step time (right) for participant-level data, where all steps for each participant were averaged into a single value. Color and shape conventions are the same as those for panel A. (C) Raw step length error (left) and raw step time error (right) for the analyses resulting from sagittal treadmill, sagittal overground, and frontal overground video measurements relative to motion capture measurements. (D) Absolute step length error (left) and absolute step time error (right) for the analyses resulting from sagittal treadmill, sagittal overground, and frontal overground video measurements relative to motion capture measurements. *P < .05. OG = overground.

Figure 3.

(A) Relationships between video-based and motion capture measurements of step length asymmetry (left) and step time asymmetry (right) for all steps recorded. The diamond shape indicates that the participant used an assistive device, and the circle indicates that the participant did not. (B) Relationships between video-based and motion capture measurements of step length asymmetry (left) and step time asymmetry (right) for participant-level data, where all steps for each participant were averaged into a single value. Shape conventions are the same as those for Figure 2A. (C) Raw step length asymmetry error (left) and raw step time asymmetry error (right) for the analyses resulting from sagittal treadmill, sagittal overground, and frontal overground video measurements relative to motion capture measurements. (D) Absolute step length asymmetry error (left) and absolute step time asymmetry error (right) for the analyses resulting from sagittal treadmill, sagittal overground, and frontal overground video measurements relative to motion capture measurements. *P < .05. OG = overground.

Comparing the Accuracy of Spatiotemporal Gait Parameters Measured by Video-Based Analyses of Treadmill Versus Overground Walking

We next compared the accuracy of the video-based analyses of treadmill walking to the accuracy of video-based analyses of overground walking from videos recorded from sagittal and frontal viewpoints.21 We display participant-level raw and absolute error magnitudes for step length and step time for both the paretic and nonparetic limbs across the 3 methods (sagittal videos of treadmill walking, sagittal videos of overground walking, and frontal videos of overground walking) in Figure 2C and D, respectively.

When analyzing raw step length error between the video-based and motion capture measurements, we observed a significant main effect of method (F2,54 = 9.17; P < .001) but no significant main effect of limb (F1,27 = 2.51; P = .13) or method × limb interaction (F2,54 = 1.17; P = .32). Post hoc comparisons revealed significant differences in raw step length error bilaterally between the treadmill versus frontal overground (P < .001), treadmill versus sagittal overground (P < .01), and sagittal overground versus frontal overground comparisons (P < .001). Both the treadmill and sagittal overground measurements showed significantly less raw step length error when compared to the frontal overground measurements. The frontal overground measurements showed larger negative values for raw step length error, indicating that the video-based measurement overestimated step length when compared to the motion capture measurement; this discrepancy results in part from the different step length calculation methods used for motion capture and frontal video-based data (see Methods). The treadmill measurements showed significantly more negative error than the overground sagittal measurements, indicating that the video-based measurements tended to slightly overestimate step lengths in the treadmill measurements and slightly underestimate step lengths in the overground sagittal measurements.

When analyzing absolute step length error between the video-based and motion capture measurements, we observed a significant method × limb interaction (F2,54 = 3.18; P = .05) but no significant main effects of method (F2,54 = 2.25; P = .12) or limb (F1,27 = 2.14; P = .16). Results from the post hoc comparisons revealed significant differences in absolute step length error bilaterally between the treadmill versus frontal overground and sagittal overground versus frontal overground comparisons (all Ps < .001), with both the treadmill and sagittal overground measurements showing significantly less absolute step length error. Post hoc comparisons also revealed a significant difference between frontal overground measurements of paretic and nonparetic absolute step length error (P < .01; measurements of nonparetic step length were more accurate than measurements of paretic step length when using the frontal overground measurements).

We also performed similar analyses for raw and absolute step time error. When analyzing raw step time error between the video-based and motion capture measurements, we observed a significant method × limb interaction (F2,54 = 5.64; P < .01) but no significant main effects of method (F2,54 = .50; P = .50) or limb (F1,27 = .01; P = .91). However, there were no statistically significant pairwise post hoc comparisons in raw step time error.

When analyzing absolute step time error between the video-based and motion capture measurements, we observed a significant main effect of method (F2,54 = 4.02; P = .02) but no significant main effect of limb (F1,27 = 1.23; P = .28) and no significant limb × method interaction (F2,54 = 1.67; P = .20). Post hoc comparisons revealed significant differences in absolute step time error bilaterally between the treadmill versus frontal overground and sagittal overground versus frontal overground comparisons (both Ps < .001), with the frontal overground measurements showing significantly larger absolute step time error when compared to the 2 other methods.

We also compared the raw and absolute errors in step length asymmetry and step time asymmetry between the video-based and motion capture measurements (Fig. 3C and D). We observed significant main effects of method in raw step time asymmetry error (F2,54 = 3.88; P = .03) and absolute step length asymmetry error (F2,54 = 16.69; P < .001) but not raw step length asymmetry error (F2,54 = 1.66; P = .20) or absolute step time asymmetry error (F2,54 = 1.98; P = .15). Post hoc comparisons did not reveal any significant pairwise differences in raw step time asymmetry error but did reveal that absolute step length asymmetry error was significantly smaller during treadmill versus frontal overground measurements (P < .001).

Video-Based Estimates of Lower Extremity Joint Angles Versus Motion Capture Measurements

We next compared the degree of correlation (ie, cross-correlation coefficient) between the sagittal lower extremity joint angle profiles measured by the video-based analyses of treadmill walking versus motion capture measurements and the sagittal video-based analysis of overground walking versus motion capture measurements. We show the sagittal joint angle profiles of the hip, knee, and ankles of the paretic and nonparetic limbs as measured by video-based and motion capture analyses of both treadmill and overground walking in Figure 4A.

Figure 4.

(A) Group mean sagittal joint angle profiles for the paretic (left) and nonparetic (right) legs during treadmill (top) and overground (bottom) walking. Colored lines indicate joint angles measured by the video-based analyses, and black lines indicate joint angles measured by motion capture. Error bars show the standard error of the mean. The mean and standard error of the mean cross-correlation coefficients (ie, r values) between video-based and motion capture measurements for each leg and joint are also shown. (B) Relationships between video-based and motion capture measurements of peak hip flexion, hip extension, knee flexion, knee extension, ankle dorsiflexion, and ankle plantarflexion during treadmill walking. Color and shape conventions are the same as those for Figure 2A.

When analyzing the cross-correlation coefficients assessing relationships between video-based and motion capture measurements for treadmill and sagittal overground walking across joints and limbs, we observed significant main effects of joint (F2,50 = 7.24; P < .01) and limb (F1,25 = 9.50; P < .01), a significant joint × method interaction (F2,50 = 3.70; P = .03), and a significant joint × method × limb interaction (F2,50 = 4.65; P = .01) but no significant main effect of method (F1,25 = 2.14; P = .16) and no significant joint × limb (F2,50 = 0.96; P = .39) or method × limb (F1,25 = 2.91; P = .10) interactions. Post hoc comparisons revealed that the cross-correlation coefficients between the video-based and motion capture measurements of sagittal joint angles were significantly larger (ie, more strongly related) for the hip and knee versus the ankle (both Ps < .001); there were no significant differences between the hip and knee (all Ps > .99). The cross-correlation coefficients were also significantly larger for overground versus treadmill walking (P < .001) and for the nonparetic versus paretic limb (P = .01).

We also performed Pearson correlation analyses and calculated ICCs to assess relationships and reliability between peak sagittal joint angles (ie, peak hip flexion, hip extension, knee flexion, knee extension, ankle dorsiflexion, and ankle plantarflexion) as measured by the video-based analyses and motion capture for the paretic and nonparetic limbs during treadmill walking (Fig. 4B). When considering all gait cycles (for both the paretic and nonparetic limbs), we observed significant correlations between the video-based analyses and motion capture for peak hip flexion, hip extension, knee flexion, and knee extension but not ankle dorsiflexion or plantarflexion (Tab. 2). We observed the same pattern when analyzing the paretic limb and the nonparetic limb separately: correlations were significant for peak hip flexion, hip extension, knee flexion, and knee extension but not for ankle dorsiflexion or plantarflexion. ICCs indicated moderate to excellent reliability for some measurements (peak hip flexion, knee flexion, and knee extension) and poor to moderate reliability for others (peak hip extension, ankle dorsiflexion, ankle plantarflexion). Full statistical results are shown in Table 2.

Discussion

In this study, we compared the accuracy of video-based gait analysis to ground-truth motion capture measurements during overground and treadmill walking in persons after stroke. We used video recordings constrained to 3 common clinical or in-home settings: frontal views of overground walking, sagittal views of overground walking, and sagittal views of treadmill walking. In a sample of persons after stroke, we found that video-based measurements of spatiotemporal gait parameters during treadmill walking were strongly correlated with ground-truth motion capture measurements (we previously observed similar results in overground walking21) and showed moderate to excellent reliability; that video-based measurements of spatiotemporal gait parameters were generally more accurate from sagittal videos of overground and treadmill walking than from frontal videos of overground walking; that sagittal videos of overground walking resulted in more accurate measurements of lower extremity joint kinematics than sagittal videos of treadmill walking; and that video-based measurements of hip and knee kinematics were more accurate and reliable than measurements of ankle kinematics. These results demonstrate the ability to measure clinically relevant gait parameters accurately using only simple digital videos (recorded from multiple different perspectives and in different settings) and open-source software.

These findings add to a growing body of literature aimed at improving the accessibility of quantitative, clinical gait analyses. We have previously shown that pose estimation can be used for a comprehensive gait analysis in persons with and without gait impairment,19,21 and others have shown that pose estimation algorithms can be combined with neural networks to predict and estimate a variety of clinical gait assessments and gait parameters using only video recordings.15–17,20 Given the wide and expanding variety of human pose estimation algorithms available,8–11,13,23,24 recent work has also explored which algorithms are most useful for gait analyses.25 We used OpenPose8 simply because we have had previous success using this algorithm for gait analysis applications.19,21

Contrary to our hypothesis, we did not find that our sagittal video-based gait analysis was more accurate during treadmill walking than overground walking (spatiotemporal results were similar between the 2 methods while some kinematic measurements improved during overground walking). We anticipated that a relatively stationary view of the participant would provide more accurate tracking; however, the stationary sagittal view also resulted in consistent occlusion of 1 side of the body (the left side in our videos, given that the camera faced the right side of the participant), which impaired tracking of the occluded leg. This may also have caused the discrepancy that we observed in the measurements of joint kinematics in the paretic versus nonparetic leg during treadmill walking, as the paretic leg was the left (occluded) leg for 22 of the 29 participants included in the kinematic analyses. Although the participant translated across the field of view in the overground walking videos, which introduced susceptibility to parallax and changes in perspective, occlusion was less of an issue because the participants walked back and forth (ie, the camera faced each leg for half of the trials). One solution could be to use 2 recording devices (1 on each side of the patient) during treadmill walking; however, here we sought to investigate analyses that result from only a single device, as we considered this to be the most practical approach for in-clinic or in-home recording.

We also observed some issues with video-based measurements of ankle kinematics during treadmill walking in particular. This was expected to some extent, as we have previously observed larger errors in video-based measurements of ankle kinematics as compared to hip and knee kinematics.19 However, we noted 2 participants who showed unusually large errors in peak ankle angle measurements that were due to poor identification of the foot keypoints during the late stance and early swing phases of the gait cycle (Suppl. Figure). It was not clear what caused the large errors in these participants; it is possible that their footwear (which was similar in color to our treadmill) caused difficulty with the foot identification and tracking.
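To illustrate why a misplaced foot keypoint can produce a large error in a peak ankle angle, the following is a minimal sketch. It assumes the ankle angle is taken as the included 2D angle at the ankle keypoint between the shank (ankle to knee) and the foot (ankle to toe) in image coordinates. This angle definition, the function name, and the pixel coordinates are illustrative assumptions and may differ from the pipeline used in this study.

```python
import numpy as np

def included_angle_deg(proximal, joint, distal):
    """Included angle (degrees) at `joint` between the segments
    joint->proximal and joint->distal, using 2D keypoint coordinates."""
    a = np.asarray(proximal, float) - np.asarray(joint, float)
    b = np.asarray(distal, float) - np.asarray(joint, float)
    cos_theta = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    # Clip guards against values marginally outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))

# Hypothetical keypoints in pixels (image y axis points downward).
knee = (0.0, 0.0)
ankle = (0.0, 100.0)
toe_true = (100.0, 100.0)       # foot horizontal, shank vertical
toe_misplaced = (100.0, 120.0)  # toe keypoint detected 20 px too low

angle_true = included_angle_deg(knee, ankle, toe_true)
angle_bad = included_angle_deg(knee, ankle, toe_misplaced)
print(f"true: {angle_true:.1f} deg, misplaced toe: {angle_bad:.1f} deg")
```

Because the foot segment is short relative to the thigh and shank, a keypoint error of a given size in pixels changes the computed ankle angle more than the same error would change a hip or knee angle. In this toy example, a 20 px error on a 100 px foot segment shifts the angle by roughly 11 degrees.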

Our findings suggest that sagittal videos of overground and treadmill walking result in similarly accurate measurements of spatiotemporal gait parameters, whereas the most accurate measurements of lower extremity kinematics are likely to be obtained using sagittal videos of overground walking (where the patient completes at least 1 pass back and forth). This requires an open space where the camera can be placed far enough from the patient to record their whole body within the frame and multiple strides of walking. If such a space is unavailable, our results show that sagittal treadmill videos or frontal overground videos can also be useful, as our video-based measurements of several gait parameters (spatiotemporal parameters and hip and knee kinematics in the treadmill videos; spatiotemporal parameters in the frontal overground videos21) show strong correlations with motion capture measurements using these approaches. However, accuracy is likely to be reduced for some parameters when using treadmill or frontal overground videos, especially parameters that require significant precision (eg, step length asymmetry).

Limitations

This study was limited to a single clinical population (stroke), single-camera video recordings, and a single pose estimation algorithm (OpenPose). There are also well-documented issues with existing pose estimation algorithms (eg, hardware requirements, occlusions, limitations of training data, requirements of fixed reference frames26–28) and with their usability for end users without significant technical expertise.27 We did not develop neural networks or other machine learning techniques to provide additional postprocessing; such postprocessing may improve the accuracy and reliability of the results.15–17

Conclusions

Quantitative gait analysis tools can help identify patient-specific gait deviations, guide therapy decisions, and monitor response to interventions; however, these tools are often prohibitively expensive and inaccessible to many clinicians and researchers. Here, we showed that video-based gait analysis using only household devices and open-source software provides accurate measurements of step length, step time, and hip and knee kinematics during overground and treadmill walking in persons after stroke. In particular, overground gait videos recorded in the sagittal plane yielded the best results when considering spatiotemporal and kinematic gait parameters collectively.

Acknowledgments

The authors would like to acknowledge Roma Kaur Sahota, MD, for providing helpful discussion about this study.

Funding

This study was funded with grants from the RESTORE Center at Stanford University (NIH grant P2CHD101913), American Heart Association (23IPA1054140), and the Sheikh Khalifa Stroke Institute at Johns Hopkins Medicine.

Footnotes

Disclosures and Presentations

The authors completed the ICMJE Form for Disclosure of Potential Conflicts of Interest and reported no conflicts of interest. Data in this manuscript will be presented as part of the “Advances in Rehabilitation Technology to Improve Health Outcomes Session” at the American Physical Therapy Association’s Combined Sections Meeting; February 15–17, 2024; Boston, MA. K. John and J. Stenum contributed equally as co-first authors. K.M. Cherry-Allen and R.T. Roemmich contributed equally as co-senior authors.

Ethics Approval

This study was approved by the Johns Hopkins University School of Medicine Institutional Review Board.

Data Availability

The data underlying this article are available in GitHub at https://github.com/janstenum/GaitAnalysis-PoseEstimation.

References

- 1. Knutsson E, Richards C. Different types of disturbed motor control in gait of hemiparetic patients. Brain. 1979;102:405–430. 10.1093/brain/102.2.405.

- 2. Olney SJ, Richards C. Hemiparetic gait following stroke. Part I: characteristics. Gait Posture. 1996;4:136–148. 10.1016/0966-6362(96)01063-6.

- 3. Guralnik JM, Ferrucci L, Pieper CF et al. Lower extremity function and subsequent disability: consistency across studies, predictive models, and value of gait speed alone compared with the short physical performance battery. J Gerontol Ser A. 2000;55:M221–M231. 10.1093/GERONA/55.4.M221.

- 4. Chang MC, Lee BJ, Joo NY, Park D. The parameters of gait analysis related to ambulatory and balance functions in hemiplegic stroke patients: a gait analysis study. BMC Neurol. 2021;21:1–8. 10.1186/S12883-021-02072-4.

- 5. Nyberg L, Gustafson Y. Fall prediction index for patients in stroke rehabilitation. Stroke. 1997;28:716–721. 10.1161/01.STR.28.4.716.

- 6. Bohannon RW, Horton MG, Wikholm J. Rehabilitation goals of patients with hemiplegia. Int J Rehabil Res. 1988;11:181–184. 10.1097/00004356-198806000-00012.

- 7. Cao Z, Simon T, Wei SE, Sheikh Y. Realtime multi-person 2D pose estimation using part affinity fields. arXiv. 2017. 10.48550/arXiv.1611.08050.

- 8. Cao Z, Hidalgo Martinez G, Simon T, Wei S-E, Sheikh YA. OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans Pattern Anal Mach Intell. 2019;43:172–186. 10.1109/tpami.2019.2929257.

- 9. Insafutdinov E, Pishchulin L, Andres B, Andriluka M, Schiele B. Deepercut: a deeper, stronger, and faster multi-person pose estimation model. 10.48550/arXiv.1605.03170.

- 10. Pishchulin L, Insafutdinov E, Tang S et al. DeepCut: joint subset partition and labeling for multi person pose estimation. arXiv. 2016. 10.48550/arXiv.1605.03170.

- 11. Insafutdinov E, Andriluka M, Pishchulin L et al. ArtTrack: articulated multi-person tracking in the wild. arXiv. 2016. 10.48550/arXiv.1612.01465.

- 12. Toshev A, Szegedy C. DeepPose: human pose estimation via deep neural networks. arXiv. 2013. 10.48550/arXiv.1312.4659.

- 13. Mathis A, Mamidanna P, Cury KM et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci. 2018;21:1281–1289. 10.1038/s41593-018-0209-y.

- 14. Pang Y, Christenson J, Jiang F et al. Automatic detection and quantification of hand movements toward development of an objective assessment of tremor and bradykinesia in Parkinson’s disease. J Neurosci Methods. 2020;333:108576. 10.1016/j.jneumeth.2019.108576.

- 15. Lonini L, Moon Y, Embry K et al. Video-based pose estimation for gait analysis in stroke survivors during clinical assessments: a proof-of-concept study. Digit Biomarkers. 2022;6:9–18. 10.1159/000520732.

- 16. Cimorelli A, Patel A, Karakostas T, Cotton RJ, Abilitylab SR. Portable in-clinic video-based gait analysis: validation study on prosthetic users. medRxiv. 2022. 10.1101/2022.11.10.22282089.

- 17. Cotton RJ, Mcclerklin E, Cimorelli A, Patel A, Karakostas T. Transforming gait: video-based spatiotemporal gait analysis. Proc Annu Int Conf IEEE Eng Med Biol Soc EMBS. 2022;115–120. 10.48550/arxiv.2203.09371.

- 18. Moro M, Marchesi G, Odone F, Casadio M. Markerless gait analysis in stroke survivors based on computer vision and deep learning: a pilot study. Proc ACM Symp Appl Comput. 2020;2097–2104. 10.1145/3341105.3373963.

- 19. Stenum J, Rossi C, Roemmich RT. Two-dimensional video-based analysis of human gait using pose estimation. PLoS Comput Biol. 2021;17:e1008935. 10.1371/JOURNAL.PCBI.1008935.

- 20. Kidziński Ł, Yang B, Hicks JL, Rajagopal A, Delp SL, Schwartz MH. Deep neural networks enable quantitative movement analysis using single-camera videos. Nat Commun. 2020;11:4054. 10.1038/s41467-020-17807-z.

- 21. Stenum J, Hsu MM, Pantelyat AY, Roemmich RT. Clinical gait analysis using video-based pose estimation: multiple perspectives, clinical populations, and measuring change. medRxiv. 2023. 10.1101/2023.01.26.23285007.

- 22. Koo TK, Li MY. Cracking the code: providing insight into the fundamentals of research and evidence-based practice a guideline of selecting and reporting Intraclass correlation coefficients for reliability research. J Chiropr Med. 2016;15:155–163. 10.1016/j.jcm.2016.02.012.

- 23. Andriluka M, Pishchulin L, Gehler P, Schiele B. 2D human pose estimation: new benchmark and state of the art analysis. IEEE Conference on Computer Vision and Pattern Recognition. 2014:3686–3693. 10.1109/CVPR.2014.471.

- 24. Lugaresi C, Tang J, Nash H et al. MediaPipe: a framework for building perception pipelines. n.d.

- 25. Washabaugh EP, Shanmugam TA, Ranganathan R, Krishnan C. Comparing the accuracy of open-source pose estimation methods for measuring gait kinematics. Gait Posture. 2022;97:188–195. 10.1016/J.GAITPOST.2022.08.008.

- 26. Seethapathi N, Wang S, Saluja R, Blohm G, Kording KP. Movement science needs different pose tracking algorithms. arXiv. 2019. 10.48550/arXiv.1907.10226.

- 27. Stenum J, Cherry-Allen KM, Pyles CO et al. Applications of pose estimation in human health and performance across the lifespan. Sensors. 2021;21:7315. 10.3390/s21217315.

- 28. Cherry-Allen KM, French MA, Stenum J, Xu J, Roemmich RT. Opportunities for improving motor assessment and rehabilitation after stroke by leveraging video-based pose estimation. Am J Phys Med Rehabil. 2023;102:S68–S74. 10.1097/PHM.0000000000002131.
