Dear editor,

Artificial intelligence (AI) has rapidly evolved over the past few years, impacting various disciplines, including medicine. Initially focused on pattern recognition, data processing, and medical imaging analysis, AI has advanced to the level where it can affect clinical decision-making, reduce diagnostic errors, and improve patient outcomes, particularly in specialized fields like ophthalmology.1,2

The role of AI in linking visual evaluations with diagnostic algorithms makes it particularly promising in ophthalmology. AI algorithms have already demonstrated proficiency in detecting diabetic retinopathy,3 retinal detachment,4 age-related macular degeneration,5 and glaucoma6 through image analysis. Such capabilities are likely to further enhance diagnostic efficiency when combined with physician judgment. As AI's role in image-based diagnostics is developing, the potential of large language models (LLMs), which can comprehend and generate human-like text, has remained largely unexplored.

LLMs represent a significant milestone in AI, beginning with OpenAI's release of Generative Pre-trained Transformer 3 (GPT-3) in June 2020.7 GPT-3, trained on 410 billion words from the internet, features 175 billion parameters. Three years later, OpenAI launched ChatGPT-4, followed by the launch of ‘ChatGPT-4o-latest’ (ChatGPT) in August 2024. Described as the fastest and most efficient model yet and dynamically updated from its predecessors, this latest iteration is hypothesized by some experts to contain around 1.8 trillion parameters, although the exact number remains uncertain.8, 9, 10 Similarly, Meta (formerly Facebook) introduced Llama 3.1 in July 2024 as the largest open-source LLM to date.11 Llama 3.1 is considered a new standard, outperforming ChatGPT and other LLMs in reasoning, multilingual capabilities, long-context handling, math, and evaluation metrics.12 Llama 3.1 is available in three sizes: 8 billion (8B), 70 billion (70B), and 405 billion (405B) parameters.

Parameters are the internal variables and weights learned during training, enabling models to perform complex tasks, including natural language processing, medical diagnostics, and decision support. Increasing the number of parameters generally enhances performance, allowing for better model capacity, generalization, fine-tuning, and contextual understanding. However, more parameters also increase the burden on processing power, computational cost, and training time, making the development and deployment of larger models both resource-intensive and technologically demanding.13,14

The progression of LLMs, such as GPT-4o and Llama, represents a significant step forward in AI's capabilities. Recent LLMs have been applied successfully in various medical contexts, including passing standardized exams like the United States Medical Licensing Examination and providing insights into clinical decision-making.15 However, the application of these models in specialized fields, such as ophthalmology, in terms of accuracy, reliability, and utility compared to human experts remains to be addressed. As these models may not have specific pre-training in ophthalmic knowledge, the ability of LLMs to process and respond to textual, knowledge-based medical queries is still under investigation.11, 12, 13 Understanding such performance characteristics will be critical for the employment of LLMs in medical education and the training of physicians.14

Given the implications for medical education and clinical practice, this study aims to evaluate the accuracy of two of the most advanced LLMs to date -- ChatGPT-4o and Llama 3.1 -- in responding to ophthalmology-related test questions. While the performance of LLMs on such questions has previously been explored, it is unknown how much it has advanced with the updated ChatGPT model and the new Meta AI models.

1. Methods

Based on the general examination method of other investigators, a test set consisting of 254 questions was created from the Review Questions in Ophthalmology, Third Edition, a resource recommended by the American Academy of Ophthalmology (AAO) Committee for Resident Education as preparation for the Ophthalmic Knowledge Assessment Program (OKAP) examination.16, 17, 18 Since this book is not indexed by search engines, it is unlikely to have been included in LLM pre-training datasets.

The 254 questions were randomly selected from a total of 1062 questions. The current OKAP examination is structured into 13 sections (20 questions each) while the textbook has 12 (it does not include "general medicine"). The OKAP sections in this study were thus populated by 22 randomly selected questions from the corresponding source chapter. Questions requiring visual interpretation were excluded due to current limitations in LLMs' ability to process image-based inputs. Notably, only 12 pathology questions met this criterion as the majority contained visual material.
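The per-chapter selection described above can be sketched as follows. This is a minimal illustration, not the procedure actually used in the study: the chapter names, the pool sizes, and the function name `sample_test_set` are hypothetical, and the pool is assumed to contain only text-based questions that already passed the image-exclusion criterion.

```python
import random


def sample_test_set(eligible_by_chapter, per_chapter=22, seed=0):
    """Draw up to `per_chapter` questions at random from each chapter.

    If a chapter has fewer eligible questions than requested
    (as with pathology in the text), all of them are used.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    selected = {}
    for chapter, questions in eligible_by_chapter.items():
        k = min(per_chapter, len(questions))
        selected[chapter] = rng.sample(questions, k)
    return selected


# Hypothetical pool: 11 chapters with 90 eligible questions each,
# plus a pathology chapter with only 12 eligible (text-only) questions.
pool = {f"chapter_{i}": [f"c{i}_q{j}" for j in range(90)] for i in range(1, 12)}
pool["pathology"] = [f"path_q{j}" for j in range(12)]

test_set = sample_test_set(pool)
total_questions = sum(len(qs) for qs in test_set.values())
print(total_questions)
```

With 11 chapters contributing 22 questions each and pathology contributing 12, the total is 11 × 22 + 12 = 254, matching the size of the test set reported here.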

To replicate the OKAP examination format, we adhered to a multiple-choice question structure, where each question includes four options with one correct answer. The ChatGPT, Llama 70B, and Llama 405B models were independently prompted with the following preface: "Please select the correct answer and provide an explanation:" followed by the question-and-answer choices. To ensure that no memory or learning bias influenced the outcomes, a new session was initiated for each LLM prior to processing each question.
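As a rough sketch of how each prompt could be assembled, the snippet below joins the quoted preface to a question and its four options. The option labels (A to D), the line layout, the function name `build_prompt`, and the sample question are assumptions made for illustration; the sample question is not taken from the textbook.

```python
PREFACE = "Please select the correct answer and provide an explanation:"


def build_prompt(question, choices):
    """Return the preface followed by the question and its four labeled options."""
    if len(choices) != 4:
        raise ValueError("each question must have exactly four options")
    lines = [PREFACE, question]
    for label, text in zip("ABCD", choices):  # labeling scheme is an assumption
        lines.append(f"{label}. {text}")
    return "\n".join(lines)


# Hypothetical low-cognitive-level item, for illustration only.
prompt = build_prompt(
    "Which cranial nerve innervates the lateral rectus muscle?",
    ["Cranial nerve III", "Cranial nerve IV", "Cranial nerve V", "Cranial nerve VI"],
)
print(prompt)
```

Each such prompt would be submitted in a fresh session, as described above, so that no earlier question or answer is carried over.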

To determine the reliability of outputs from the tested LLMs, multiple trials were performed. Recognizing the probabilistic characteristics of LLMs, some variation in response was anticipated with each run. To evaluate this possibility, the same set of test questions was submitted three times, using identical prompts.

The textbook's question bank did not provide explicit difficulty ratings for each item. To address this, each question was categorized by cognitive level before testing, thereby enabling an analysis of each LLM's performance on questions of varying complexity. A two-tiered cognitive level system was adopted, simplifying the three-level system used by the AAO on real OKAP examinations.19 Low cognitive level questions focused on assessing simple factual recall and basic concept recognition, such as identifying the nerve responsible for a specific ocular movement. High cognitive questions, by comparison, required more advanced reasoning, such as determining the correct intraocular lens power calculation or selecting the best management approach for neovascular age-related macular degeneration.

This experimental design allowed for an evaluation of LLM performance across varying levels of cognitive demand and provided insights into the reproducibility of their outputs in a controlled, clinically relevant context. Three composite variables were created: ChatGPT4oCombinedAnswer, Llama70BCombinedAnswer, and Llama405BCombinedAnswer. These variables represent the common answer selected by the respective LLMs at least twice out of three trials. In cases where the LLMs provided different answers in all trial runs, the final response was recorded as the combined answer.
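The combined-answer rule can be expressed as a short majority vote. In this sketch, "final response" is interpreted as the answer from the third trial, which is an assumption; the function name `combined_answer` is likewise illustrative.

```python
from collections import Counter


def combined_answer(trials):
    """Return the answer given in at least two of three trials.

    If all three trials disagree, fall back to the last trial's answer
    (assumed here to be what the text calls the "final response").
    """
    if len(trials) != 3:
        raise ValueError("expected exactly three trial answers")
    answer, count = Counter(trials).most_common(1)[0]
    if count >= 2:
        return answer
    return trials[-1]


print(combined_answer(["A", "A", "B"]))  # majority answer
print(combined_answer(["A", "B", "C"]))  # no majority, falls back to third trial
```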

Logistic regression was conducted to determine significant associations between AI model accuracy and both the cognitive level and category of the questions, with P-values of <0.05 considered statistically significant. Logistic regression is a statistical modeling tool that estimates the relationship between a binary dependent variable and one or more independent variables. It allows for the control of multiple confounding variables and provides results in the form of adjusted odds ratios, which indicate how the odds of an outcome change with each independent variable. The utility of this model lies in its capacity to account for multiple variables simultaneously while studying a binary outcome measure.
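To make the interpretation of an odds ratio concrete, the sketch below computes an unadjusted odds ratio with a Wald 95% confidence interval from a 2×2 table, and converts an odds ratio into a percentage change in odds. It is a simplification: the study reports adjusted odds ratios from logistic regression in SPSS, whereas this example ignores other covariates. The counts are hypothetical and are not the study's raw data.

```python
import math


def odds_ratio_with_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio and Wald confidence interval.

    a, b: correct / incorrect answers in the comparison group (e.g. level 2)
    c, d: correct / incorrect answers in the reference group (e.g. level 1)
    """
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


def percent_change_in_odds(odds_ratio):
    """Percentage change in odds implied by an odds ratio (negative = decrease)."""
    return (odds_ratio - 1.0) * 100.0


# Hypothetical counts: 61 level-2 questions and 193 level-1 questions.
or_value, ci_low, ci_high = odds_ratio_with_ci(30, 31, 140, 53)
print(round(or_value, 3), round(ci_low, 3), round(ci_high, 3))
print(round(percent_change_in_odds(or_value), 1))
```

An odds ratio below 1 indicates lower odds in the comparison group; for instance, an odds ratio of 0.358 corresponds to a 64.2% decrease in the odds, since (0.358 − 1) × 100 = −64.2.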

The accuracy of each LLM was determined by verifying its responses against the answer provided in the book. Cohen's kappa was employed for reliability analysis to assess the agreement among three responses within each LLM, as well as for the agreement between the LLM combined response and the textbook's correct answer. The kappa statistic, as proposed by Cohen, measures the agreement between two binary variables while accounting for the probability of chance agreement. The kappa coefficient is calculated as the observed agreement minus expected agreement, divided by one minus expected agreement.20 The strength of agreement was classified according to the following thresholds: poor (k ≤ 0.20), fair (0.21 ≤ k ≤ 0.40), moderate (0.41 ≤ k ≤ 0.60), good (0.61 ≤ k ≤ 0.80), very good (0.81 ≤ k ≤ 0.90), and excellent (0.91 ≤ k ≤ 1.00).21 Associations between categorical variables were analyzed using the Chi-square test. All analyses were performed using SPSS v29.
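The kappa calculation and the agreement thresholds can be illustrated with a small sketch. The analyses in this study were run in SPSS; the functions `cohen_kappa` and `agreement_level` below are illustrative names, the input lists are toy data, and values falling between two stated bands (for example 0.205) are assigned to the higher band by assumption.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa: (observed - expected agreement) / (1 - expected agreement)."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    observed = sum(x == y for x, y in zip(ratings_a, ratings_b)) / n
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: product of the two marginal proportions per category.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:  # both raters used a single identical category
        return 1.0
    return (observed - expected) / (1.0 - expected)


def agreement_level(kappa):
    """Map a kappa value to the agreement bands listed in the text."""
    if kappa <= 0.20:
        return "poor"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "good"
    if kappa <= 0.90:
        return "very good"
    return "excellent"


# Toy example: observed agreement 0.75, expected agreement 0.50.
toy_kappa = cohen_kappa([1, 1, 0, 0], [1, 0, 0, 0])
print(toy_kappa, agreement_level(toy_kappa))
```

In the toy example, the two raters agree on three of four items (0.75), chance agreement is 0.5 × 0.25 + 0.5 × 0.75 = 0.50, and kappa is therefore (0.75 − 0.50) / (1 − 0.50) = 0.5.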

2. Results

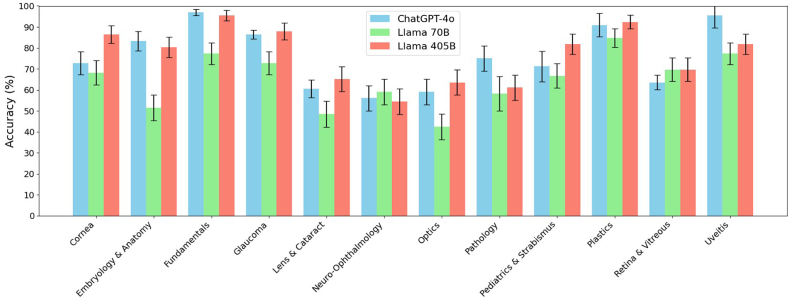

The ChatGPT model achieved an accuracy of 75.9% ± 1.1%. The Llama 3.1 models varied, with the 405B model reaching 77.3% ± 0.6% accuracy and the 70B model 64.9% ± 1.0%. The differences between the 405B and 70B models and between ChatGPT and the 70B model were statistically significant, but the difference between 405B and ChatGPT was not (Table 1). All models performed similarly across categories, with the weakest performances occurring in the lens and cataract, neuro-ophthalmology, and optics categories (Fig. 1).

Table 1.

Accuracy results by model and test set.ᵃ

| AI Model | Test 1 (%) | Test 2 (%) | Test 3 (%) | Mean Accuracy ± SD (%) |
|---|---|---|---|---|
| ChatGPT-4o | 78 | 76 | 74 | 75.9 ± 1.1 |
| Llama 70B | 66.5 | 65.4 | 63 | 64.9 ± 1.0 |
| Llama 405B | 77.2 | 78.3 | 76.4 | 77.3 ± 0.6 |

Results are presented as a percentage of correct answers. Each model completed three identical test sets, comprising 254 questions each, totaling 762 questions (n = 762). The overall mean and standard deviation were calculated across each model's three test sets.

ᵃ ChatGPT-4o and Llama 405B significantly outperformed Llama 70B (P < 0.001) but did not differ significantly from each other (P > 0.05).

Fig. 1.

Model Accuracy Comparison

Comparison of accuracy scores across 12 categories for three models: ChatGPT-4o, Llama 70B, and Llama 405B. Error bars represent standard errors.

Questions of cognitive level 2 were associated with an odds ratio of 0.358 for a correct answer from Llama 3.1 70B relative to questions of cognitive level 1, corresponding to a 64.2% decrease in the odds (Table 2). In contrast, there was no significant association between question cognitive level and the accuracy of 405B or ChatGPT. No significant association existed between the question category and any LLM's accuracy.

Table 2.

Accuracy by cognitive level for AI models.

| AI Model | Odds Ratio | 95% Confidence Interval | P-value |
|---|---|---|---|
| ChatGPT-4o | 1.166 | [0.522, 2.605] | 0.709 |
| Llama 70B | 0.358 | [0.170, 0.754] | 0.007ᵃ |
| Llama 405B | 1.379 | [0.630, 3.018] | 0.421 |

Only Llama 3.1 70B showed a significant decrease in odds of answering questions belonging to cognitive level 2 (n = 61) correctly compared to cognitive level 1 (n = 193).

ᵃ Statistically significant.

All three LLMs demonstrated high internal (test-retest) agreement, with all kappa values at or above 0.85 (Table 3). 405B and ChatGPT had good agreement with the correct answer, whereas 70B exhibited moderate agreement. The 405B and 70B models displayed the least agreement between their answers, while 405B and ChatGPT had the highest agreement of any model pair (Table 4).

Table 3.

Intra-AI agreement.

| AI Model | Comparison | Agreement Level | Kappa (k) Valueᵃ |
|---|---|---|---|
| ChatGPT-4o | Answers 1 & 2 | Very good | 0.879 |
| ChatGPT-4o | Answers 1 & 3 | Very good | 0.879 |
| ChatGPT-4o | Answers 2 & 3 | Very good | 0.874 |
| ChatGPT-4o | Combined vs. Correct | Good | 0.679 |
| Llama 70B | Answers 1 & 2 | Excellent | 0.942 |
| Llama 70B | Answers 1 & 3 | Excellent | 0.921 |
| Llama 70B | Answers 2 & 3 | Excellent | 0.926 |
| Llama 70B | Combined vs. Correct | Moderate | 0.526 |
| Llama 405B | Answers 1 & 2 | Very good | 0.858 |
| Llama 405B | Answers 1 & 3 | Very good | 0.853 |
| Llama 405B | Answers 2 & 3 | Very good | 0.900 |
| Llama 405B | Combined vs. Correct | Good | 0.700 |

Agreement levels and kappa values for comparisons among the three answers given by each model (Llama 70B, Llama 405B, and ChatGPT-4o) and between each model's combined answer and the correct answer. Higher kappa values indicate stronger agreement.

ᵃ All kappa values P < 0.001.

Table 4.

Cross-model agreement.

| Comparison | Agreement Level | Kappa (k) Valueᵃ |
|---|---|---|
| Llama 70B vs. ChatGPT-4o | Good | 0.611 |
| Llama 70B vs. Llama 405B | Moderate | 0.595 |
| Llama 405B vs. ChatGPT-4o | Good | 0.726 |

Agreement levels and kappa values for comparisons between combined answers from Llama 70B, Llama 405B, and ChatGPT-4o. Values indicate the level of agreement between models, with higher kappa values indicating stronger agreement.

ᵃ All kappa values P < 0.001.

3. Discussion

Other studies that evaluated various LLMs on OKAP practice test questions found ChatGPT-3.5 demonstrated an accuracy range of 46%–59.4%,22,23 while ChatGPT-4 achieved between 70% and 81%.16,24 Our results are promising, aligning well with or even surpassing the reported accuracies of previous models assessed on OKAP test results. There is currently no published research evaluating the performance of any Llama model on standardized medical knowledge-test accuracy, which limits direct comparisons. A 71% accuracy on the OKAP exam was reported for other LLMs such as Google Gemini and Bard, further supporting the validity of our findings in relation to existing LLM benchmarks.25 When considering general medicine standardized exams, such as the USMLE, ChatGPT-3 showed an accuracy of 60%,26 and ChatGPT-4 achieved 77% on other specialty board questions.27 These comparisons further underscore the recent advancements in LLMs noted earlier.

The analysis also revealed that the performance of the Llama 3.1 70B model decreased significantly on questions classified as cognitive level 2 compared to level 1, while no such association was observed for the 405B model or ChatGPT-4o (Table 2). This suggests that smaller models may struggle more with complex reasoning tasks, which likely require a deeper understanding of content and emphasizes the importance of model size in handling more sophisticated medical questions.

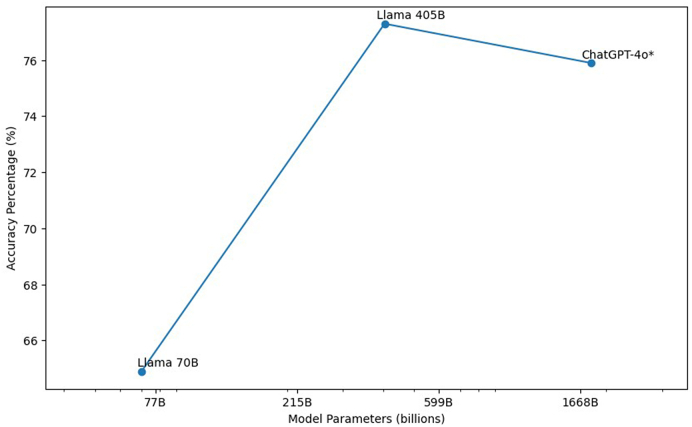

Meta AI's 70B model significantly underperformed compared to its more complex sibling in terms of accuracy (Fig. 2). This disparity, attributable solely to the difference in the number of parameters, aligns with computational studies indicating that increasing the number of parameters generally improves performance, as the model can better capture and leverage complex patterns within the data. Additionally, the unexpected finding that the 70B model's responses aligned more closely with ChatGPT's than with 405B further underscores the possibility that smaller models may adopt different generalization strategies. Due to its reduced complexity, the 70B model may rely on reasoning pathways that are more akin to those used by ChatGPT, rather than its larger counterpart. All of this indicates that models of varying sizes process and generalize medical knowledge differently.

Fig. 2.

AI Model Parameters vs. Accuracy Percentage

Relationship between model size and accuracy for three large language models: ChatGPT-4o, Llama 70B, and Llama 405B. Asterisk (∗) indicates that the 1.8 trillion parameter count for ChatGPT-4o is an estimate. This figure shows that larger models generally achieve higher accuracy; however, the similar performance of the 405B model and ChatGPT-4o suggests diminishing returns as models scale, indicating a potential parameter-performance plateau.

The absence of significant differences between the 405B model and ChatGPT-4o suggests that simply increasing model size may lead to diminishing returns, particularly as models reach a certain scale. While the parameter count of ChatGPT-4o remains uncertain, its comparable performance to the 405B model hints at a parameter-performance plateau. This is consistent with research indicating that beyond a certain point, performance gains from increased parameters become nonlinear.10,14,26 The analysis also highlighted limitations across all models in certain ophthalmology categories such as lens and cataract, neuro-ophthalmology, and optics. This suggests that despite overall strong performance, LLMs struggle with narrowly focused areas of expertise. The findings underscore the potential need for domain-specific pretraining or fine-tuning of LLMs to enhance their utility in ophthalmology. Incorporating public-domain ophthalmology resources like EyeWiki for pretraining could improve the accuracy and reliability of AI-generated responses in clinical settings.

It is essential to recognize that the observed differences in performance may stem from factors beyond parameter count alone. These variations may reveal inherent limitations related to model design and training methodologies. Elements such as a model's architecture, the diversity and quality of its training dataset, and the optimization techniques employed during training can significantly influence its capacity to generalize across complex medical domains. This is particularly relevant to the 70B model discussed above, which may rely heavily on its specific training data to compensate for its smaller parameter count. On the other hand, models like ChatGPT may benefit from access to a more diverse, high-quality training dataset and from advanced optimization techniques that enhance their reasoning abilities beyond what parameter count alone provides.

Understanding these nuances underscores that, while parameter size is significant, strategic improvements in training methodologies and architectural design are crucial for developing models that excel in specialized domains, such as ophthalmology. However, it is important to note that the proprietary nature of these design and training elements complicates direct comparisons and comprehensive discussions between LLMs.

The implications of these findings for medical education and practice are significant. As LLMs are increasingly integrated into educational tools and clinical decision-support systems, understanding their strengths and limitations is crucial. The increased accuracy rates achieved by the larger models suggest that these tools could potentially be valuable in complementing traditional educational resources, particularly in helping prepare students and professionals for standardized examinations. LLMs hold tremendous potential by making education more accessible: they provide cost-effective, personalized explanations of exam material to students and create practice test questions.28 However, it's important to note that larger models often require more advanced computing resources and may be behind paywalls, potentially limiting access for some users. Additionally, the observed deficiencies in specific categories and the variable performance depending on cognitive complexity highlight the need for cautious integration. Overreliance on LLMs without proper oversight could lead to gaps in learning or even propagate misinformation, particularly in areas where the models are less accurate.

This study has several limitations. First, the models were evaluated using questions from a 2015 compendium, which could have affected the results; however, since the questions tend to reflect core factual knowledge and time-vetted clinical practice patterns, that effect is likely small. The pretraining dataset for Llama 3.1 encompasses information up to December 2023, whereas ChatGPT's training data only extends to October 2023, potentially enabling both models to provide more contemporary and different, yet accurate responses.10,12 That said, the LLMs still displayed accuracy superior to that reported in other studies. Another limitation is that the tested question set may not accurately represent a real OKAP exam.

In conclusion, while larger LLMs such as Meta AI's Llama 3.1 405B and ChatGPT-4o-latest models demonstrate improved accuracy in ophthalmology-related questions in comparison to their predecessors and smaller models, the marginal gains observed with increasing model size suggest that further scaling may not significantly enhance performance. Likewise, the persistent deficiencies seen in certain ophthalmology categories indicate that domain-specific pretraining may be necessary to fully realize the potential of LLMs in ophthalmology. These findings contribute to the growing body of literature exploring the capabilities and limitations of LLMs in specialized medical fields like ophthalmology. The integration of written and image content into future models could address some of these limitations. Such models would likely enhance the validity and utility of LLMs in ophthalmic education, training, and exam preparation, where multimodal analysis is critical for practice skills such as interpreting optical coherence tomography (OCT) scans, fundoscopic images, or ocular pathology alongside clinical reasoning.

Study approval

There was no requirement for IRB study approval as this study did not involve human subjects.

Author contributions

TL: Conceptualization, Data curation, Investigation, Methodology, Visualization, Writing – original draft. RL: Writing - Review and Editing. RM: Writing - Review and Editing, Formal Analysis. CM: Writing - Review and Editing, Supervision, Project Administration. All authors reviewed the results and approved the final version of the manuscript.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

Thanks to all the peer reviewers for their opinions and suggestions.

References

- 1. Ting D.S.W., Pasquale L.R., Peng L., et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. 2018;103(2):167–175. doi: 10.1136/bjophthalmol-2018-313173.

- 2. Nath S., Marie A., Ellershaw S., Korot E., Keane P.A. New meaning for NLP: the trials and tribulations of natural language processing with GPT-3 in ophthalmology. Br J Ophthalmol. 2022;106(7):889–892. doi: 10.1136/bjophthalmol-2022-321141.

- 3. Bellemo V., Lim G., Rim T.H., et al. Artificial intelligence screening for diabetic retinopathy: the real-world emerging application. Curr Diabetes Rep. 2019;19(9). doi: 10.1007/s11892-019-1189-3.

- 4. Antaki F., Coussa R.G., Kahwati G., Hammamji K., Sebag M., Duval R. Accuracy of automated machine learning in classifying retinal pathologies from ultra-widefield pseudocolour fundus images. Br J Ophthalmol. 2021;107(1):90–95. doi: 10.1136/bjophthalmol-2021-319030.

- 5. Burlina P.M., Joshi N., Pekala M., Pacheco K.D., Freund D.E., Bressler N.M. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmology. 2017;135(11):1170. doi: 10.1001/jamaophthalmol.2017.3782.

- 6. Hood D.C., De Moraes C.G. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. 2018;125(8):1207–1208. doi: 10.1016/j.ophtha.2018.04.020.

- 7. OpenAI. ChatGPT. chat.openai.com. https://chat.openai.com/chat

- 8. Ayub H. GPT-4o: successor of GPT-4? Medium. Published May 14, 2024. https://hamidayub.medium.com/gpt-4o-successor-of-gpt-4-8207acf9104e#:∼:text=Trained%20on%20a%20diverse%20and

- 9. OpenAI. Models. OpenAI platform. Published August 13, 2024. https://platform.openai.com/docs/models/gpt-4o

- 10. Howarth J. Number of Parameters in GPT-4 (Latest Data). Exploding Topics. Published August 6, 2024. https://explodingtopics.com/blog/gpt-parameters

- 11. Meta. Meta AI. https://www.meta.ai/

- 12. Introducing Llama 3.1: our most capable models to date. Meta AI. Published July 23, 2024. https://ai.meta.com/blog/meta-llama-3-1/

- 13. Radford A., Narasimhan K., Salimans T., Sutskever I. Improving Language understanding by generative pre-training. 2018. https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf

- 14. Brown T.B., Mann B., Ryder N., et al. Language models are few-shot learners. arXiv.org. 2020;4. https://arxiv.org/abs/2005.14165

- 15. Kung T.H., Cheatham M., Medenilla A., et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. Dagan A., editor. PLOS Digital Health. 2023;2(2). doi: 10.1371/journal.pdig.0000198.

- 16. Antaki F., Touma S., Milad D., El-Khoury J., Duval R. Evaluating the performance of ChatGPT in ophthalmology: an analysis of its successes and shortcomings. Ophthalmology Science. 2023;3(4). doi: 10.1016/j.xops.2023.100324.

- 17. Chern K.C., Saidel M.A. Review Questions in Ophthalmology. Third ed. Lippincott Williams & Wilkins; 2015.

- 18. Board Prep Resources for Ophthalmology Residents. American Academy of Ophthalmology. Accessed August 20, 2024. https://www.aao.org/education/board-prep-resources

- 19. OKAP® User's Guide. American Academy of Ophthalmology; 2020. https://www.aao.org/Assets/d2fea240-4856-4025-92bb-52162866f5c3/637278171985530000/user-guide-2020-pdf

- 20. Kelsey J.L., Thompson D.W., Evens A.S. Methods in Observation Epidemiology. New York: Oxford University Press; 1986. Ch 5, pp. 105–127.

- 21. Altman D.G. Practical Statistics for Medical Research. Chapman and Hall/CRC; 1990.

- 22. Haddad F., Saade J.S. Performance of ChatGPT on ophthalmology-related questions across various examination levels: observational study. JMIR Med. Educat. 2024;10. doi: 10.2196/50842.

- 23. Olis M., Dyjak P., Weppelmann T.A. Performance of three artificial intelligence chatbots on Ophthalmic Knowledge Assessment Program materials. Can J Ophthalmol. 2024;59(4). doi: 10.1016/j.jcjo.2024.01.011.

- 24. Teebagy S., Colwell L., Wood E.R., Yaghy A., Faustina M. Improved performance of ChatGPT-4 on the OKAP examination: a comparative study with ChatGPT-3.5. J. Acad. Ophthalmol. 2023;15(2):e184–e187. doi: 10.1055/s-0043-1774399.

- 25. Mihalache A., Grad J., Patil N.S., et al. Google Gemini and Bard artificial intelligence chatbot performance in ophthalmology knowledge assessment. Eye. 2024;38. doi: 10.1038/s41433-024-03067-4.

- 26. Guerra G., Hofmann H., Sobhani S., et al. GPT-4 artificial intelligence model outperforms ChatGPT, medical students, and neurosurgery residents on neurosurgery written board-like questions. World Neurosurg. 2023;179. doi: 10.1016/j.wneu.2023.08.042.

- 27. Hoffmann J., Borgeaud S., Mensch A., et al. Training compute-optimal large language models. arXiv.org. doi: 10.48550/arXiv.2203.15556.

- 28. Heinke A., Radgoudarzi N., Huang B.B., Baxter S.L. A review of ophthalmology education in the era of generative artificial intelligence. Asia-Pacific J. Ophthalmol. 2024;13(4). doi: 10.1016/j.apjo.2024.100089.