Abstract

Deep learning, a cornerstone of artificial intelligence, is driving rapid advances in computational biology. Protein-protein interactions (PPIs) are fundamental regulators of biological function, and the adoption of deep learning in PPI research is transforming the field. A comprehensive assessment of recent developments is therefore needed to guide the improvement of analytical methods and to broaden their biomedical applications. This review assesses deep learning progress in PPI prediction from 2021 to 2025. We evaluate core architectures (GNNs, CNNs, RNNs) as well as emerging approaches: attention-driven Transformers, multi-task frameworks, multimodal integration of sequence and structural data, transfer learning via BERT and ESM, and autoencoders for interaction characterization. We also examine improved algorithms for handling data imbalance, data variation, and high-dimensional feature sparsity, discuss open challenges in the field (including dynamic protein interactions, interactions in non-model organisms, and rare or unannotated protein interactions), and offer perspectives on future directions. In summary, this review systematically summarizes recent advances and remaining challenges of deep learning for protein interaction analysis, providing a reference for researchers in computational biology and deep learning.

Keywords: Deep learning, Protein-protein interactions, Artificial intelligence, PPI prediction, Artificial neural networks, Computational biology, Machine learning

Introduction

Artificial intelligence (AI), and deep learning in particular, has become a central driver of interdisciplinary innovation. Owing to its strong pattern-recognition capabilities, deep learning has produced transformative advances across a wide range of disciplines, with an impact visible in both academic research and practical applications. These advances have shown that computational models can emulate aspects of human reasoning and generate creative outputs, and in some tasks may even exceed conventional human performance [1].

Protein-protein interactions (PPIs) play an essential role in cellular function, influencing biological processes such as signal transduction, cell cycle regulation, transcriptional regulation, and cytoskeletal dynamics [2]. By modulating intracellular signaling pathways in response to external stimuli, PPIs regulate the interaction of transcription factors with their target genes, ensuring precise control over gene expression and the cell cycle [3, 4]. PPIs are also crucial for maintaining cytoskeletal stability and enabling its dynamic remodeling, and they participate in protein folding and quality control, helping to prevent the accumulation of misfolded proteins. PPIs can be categorized in several ways: direct versus indirect, stable versus transient, and homodimeric versus heterodimeric interactions. These interaction types differ in their functional characteristics and work in concert to regulate cellular processes.

Before the advent of deep learning-based predictors, PPI prediction and analysis relied predominantly on experimental methods and rudimentary computational approaches. Techniques such as yeast two-hybrid screening, co-immunoprecipitation (Co-IP), mass spectrometry, and immunofluorescence microscopy were instrumental in elucidating molecular interactions [5–7]. Although effective, these experiments were often time-consuming and resource-intensive, detected a limited number of interactions, and were difficult to scale to large datasets. Concurrently, computational methods based on sequence similarity, structural alignment, and docking were used to predict PPIs, but they were limited by their reliance on manually engineered features and by their difficulty scaling to large, complex biological systems [8, 9].

The application of deep learning in computational biology is enabled largely by its capacity for high-dimensional data processing and automatic feature extraction [10, 11]. Biological data are often complex and high-dimensional, and deep learning can capture nonlinear relationships in such data while extracting meaningful features automatically [12, 13]. In contrast to conventional machine learning algorithms such as support vector machines and random forests, which rely on manually engineered features [14, 15], deep learning can learn contextual representations directly from sequence- and residue-level data [16]. This makes it particularly well suited to large-scale datasets, as evidenced by breakthroughs such as AlphaFold 2 [17], and allows a more comprehensive understanding of PPI networks, yielding new insights into cellular processes and facilitating the discovery of potential therapeutic targets.

Deep learning has the potential to fundamentally transform PPI prediction by improving both accuracy and efficiency. This review systematically surveys recent progress of deep learning in PPI analysis, summarizes existing methods and key technologies, explores their application prospects, and highlights future trends in PPI prediction. We hope it will serve as a reference for researchers in computational biology and artificial intelligence and promote the integration of protein interaction research with deep learning technology.

Data availability and issue description

Database

PPI data encompass diverse types of information that are used primarily to elucidate protein functions and interactions. Protein sequence data (e.g., amino acid sequences) are fundamental to PPI research because sequence characteristics are closely related to interaction propensity. Gene expression data further help infer protein expression and interaction patterns. Protein structure data, encompassing three-dimensional conformations and domain information, illuminate the roles of binding sites and spatial characteristics in mediating interactions. PPI network data, generated through experimental methods, provide comprehensive interaction maps between proteins and thus an integrative overview of their interplay [18–20]. Functional annotation data, such as Gene Ontology (GO) terms and KEGG pathway information, clarify proteins' involvement in specific biological processes [21, 22]. Several publicly available databases and datasets, containing both experimental results and algorithm-based predictions, are widely used in PPI prediction tasks and provide critical support for training and validating deep learning models. Table 1 lists key databases commonly employed in PPI prediction, with brief descriptions and URLs.

Table 1.

Commonly used PPI databases and their descriptions

| Database Name | Description | URL |
|---|---|---|
| STRING | A database for known and predicted protein-protein interactions across various species. | https://string-db.org/ |
| BioGRID | A database of protein-protein and gene-gene interactions from various species. | https://thebiogrid.org/ |
| IntAct | A protein interaction database maintained by the European Bioinformatics Institute. | https://www.ebi.ac.uk/intact/ |
| MINT | A database of protein-protein interactions, particularly from high-throughput experiments. | https://mint.bio.uniroma2.it/ |
| HPRD | A human protein reference database with interaction, enzymatic, and cellular localization data. | http://www.hprd.org/ |
| DIP | A database of experimentally verified protein-protein interactions. | https://dip.doe-mbi.ucla.edu/ |
| Reactome | An open, free database of biological pathways and protein interactions. | https://reactome.org/ |
| CORUM | A database focused on human protein complexes with experimentally validated data. | http://mips.helmholtz-muenchen.de/corum/ |
| PDB | A database storing 3D structures of proteins that also includes interaction data. | https://www.rcsb.org/ |
| I2D | A database of protein-protein interactions, based on literature and experimental data. | http://ophid.utoronto.ca/i2d/ |
| GeneMANIA | A tool for analyzing functional gene and protein interaction networks. | http://genemania.org/ |
| PINA | A protein-protein interaction network analysis database. | https://cbg.garvan.org.au/pina/ |
| APID | A database of protein-protein interactions, with tools for visualization and analysis. | http://apid.dep.usal.es/ |

Common PPI tasks

Common tasks in PPI research include interaction prediction, interaction site identification, cross-species interaction prediction, and the construction and analysis of PPI networks [23–25]. Interaction prediction aims to estimate the probability that two proteins interact, often by analyzing amino acid sequences, structural characteristics, and gene expression data. Interaction site prediction identifies specific regions on the protein surface that are likely to participate in molecular interactions and often relies on high-resolution three-dimensional structural data. Cross-species interaction prediction targets interactions involving proteins from different organisms, or applies models trained on one organism to another, facilitating the integration of data from diverse organisms and enabling transfer learning. The construction and analysis of PPI networks provide insights into global interaction patterns and help identify functional modules, which are essential for understanding the complex regulatory mechanisms governing cellular processes.

Core deep learning models for PPI prediction

Graph-neural networks for protein-protein interactions

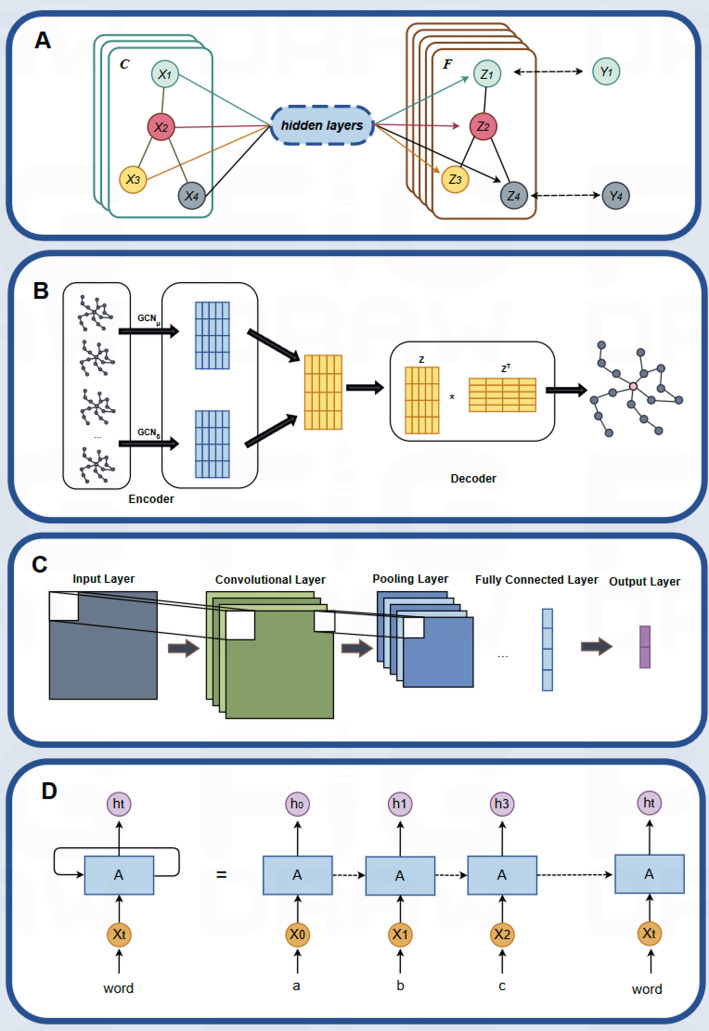

Graph neural networks (GNNs), which operate on graph structures through message passing, capture both local patterns and global relationships in protein structures [26]. By aggregating information from neighboring nodes, GNNs generate node representations that reveal complex interactions and spatial dependencies within proteins (Fig. 1A). Graph convolutional networks (GCN) [27], graph attention networks (GAT), GraphSAGE (Graph Sample and Aggregate), and graph autoencoders (GAE) are four principal GNN architectures; each addresses specific challenges of graph-structured data, and together they provide a flexible toolset for PPI prediction. GCN uses convolution-like operations to aggregate information from neighboring nodes, making it effective for tasks such as node classification and graph embedding. As illustrated in Fig. 1A, the input (denoted C) is processed through successive hidden layers to produce node outputs Z1, Z2, Z3, Z4, and so on, with each layer combining the graph's adjacency matrix with convolution operations on node features. However, because GCN treats all neighbors uniformly, it may struggle to capture heterogeneous relationships in complex graphs [28]. GAT addresses this with an attention mechanism that adaptively weights neighboring nodes according to their relevance, making information propagation more flexible in graphs with diverse interaction patterns [29]. The GAE framework follows an autoencoder design with an encoder and a decoder (Fig. 1B). The encoder passes the graph through a series of GCN layers to generate compact, low-dimensional node embeddings Z, and the decoder uses the inner product ZZᵀ to reconstruct the graph structure; the embeddings can also support predictive tasks such as node classification [30]. GraphSAGE, in turn, is designed for large-scale graphs: it samples a fixed number of neighbors per node and aggregates their features, substantially reducing computational cost and making it well suited to massive graph data [31].

Fig. 1.

Examples of three traditional artificial neural networks. (A) Graph Convolutional Network. (B) Graph autoencoder. (C) Convolutional Neural Network. (D) Recurrent Neural Network
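To make the GCN and GAE descriptions above concrete, the following NumPy sketch implements one GCN propagation step with the symmetric normalization D^(-1/2)(A + I)D^(-1/2) of the original GCN formulation, a two-layer encoder that produces node embeddings Z, and the inner-product decoder sigmoid(ZZᵀ) that graph autoencoders use to score node pairs. The four-node chain graph, feature sizes, and random weights are illustrative assumptions; a real model would learn the weights by minimizing a reconstruction or classification loss.

```python
import numpy as np

def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 used by GCN."""
    a_hat = adj + np.eye(adj.shape[0])        # add self-loops
    deg = a_hat.sum(axis=1)                   # node degrees including the self-loop
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    return d_inv_sqrt @ a_hat @ d_inv_sqrt

def gcn_layer(adj_norm, h, w):
    """One GCN layer: aggregate neighbour features, project them, apply ReLU."""
    return np.maximum(adj_norm @ h @ w, 0.0)

def gae_decoder(z):
    """Inner-product GAE decoder: probability of an edge = sigmoid(Z Z^T)."""
    return 1.0 / (1.0 + np.exp(-(z @ z.T)))

rng = np.random.default_rng(0)
# Toy residue graph: 4 nodes in a chain (0-1-2-3), 5 input features per node.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 5))
a_norm = normalized_adjacency(adj)
h1 = gcn_layer(a_norm, x, rng.normal(size=(5, 8)))   # hidden GCN layer
z = a_norm @ h1 @ rng.normal(size=(8, 2))            # linear output layer -> 2-D embeddings Z
edge_prob = gae_decoder(z)                            # reconstructed adjacency, shape (4, 4)
```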

In this context, researchers have introduced several innovative architectures. Yang et al. developed the AG-GATCN framework, which integrates GAT and temporal convolutional networks (TCNs) to make PPI analysis robust against noise [32]. Zhong et al. developed RGCNPPIS, which integrates GCN and GraphSAGE to extract both macro-scale topological patterns and micro-scale structural motifs [33]. Wu and Cheng introduced the Deep Graph Auto-Encoder (DGAE), which combines canonical autoencoders with graph autoencoding to learn hierarchical representations and optimize low-dimensional embeddings of biomolecular interaction graphs [34].

Temporal message passing over dynamic graphs has emerged as an important framework for modeling protein conformational dynamics. GSALIDP, introduced by Zheng et al., is a hybrid GraphSAGE-LSTM network designed to predict the dynamic interaction patterns of intrinsically disordered proteins (IDPs). It uses GraphSAGE to capture graph-based structural information from multiple IDP conformations and an LSTM to model the temporal evolution of these conformations. By representing the fluctuating conformations of IDPs as dynamic graphs, the approach predicts interaction sites and contact residue pairs between IDPs [35]. Complementarily, Wang et al. formulated the Relational Graph Network (RGN) within this paradigm, building hierarchical graph representations of protein structures by combining spectral graph convolutions with attention-based edge weighting. This two-mechanism architecture enables multi-scale topological feature extraction and substantially improves the precision of PPI trajectory prediction [36].
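GraphSAGE, the structural component of GSALIDP, avoids aggregating over every neighbor by sampling a fixed number of neighbors per node. The sketch below shows one GraphSAGE layer with the mean aggregator; it is a generic illustration rather than the GSALIDP implementation, it omits the LSTM that would consume the per-conformation embeddings, and the toy graph, sample size, and weights are assumptions. It also assumes every node has at least one neighbor.

```python
import numpy as np

def sage_mean_layer(features, neighbors, w_self, w_neigh, num_samples, rng):
    """One GraphSAGE layer with the mean aggregator and fixed-size neighbour sampling.

    features: (N, F) node features; neighbors: dict node id -> list of neighbour ids.
    """
    out = []
    for v in range(features.shape[0]):
        nbrs = neighbors[v]
        # Sample a fixed number of neighbours (with replacement if the node has too few),
        # which bounds the cost per node regardless of its true degree.
        sampled = rng.choice(nbrs, size=num_samples, replace=len(nbrs) < num_samples)
        neigh_mean = features[sampled].mean(axis=0)
        # Combine the node's own features with the aggregated neighbourhood, then ReLU.
        h = np.maximum(features[v] @ w_self + neigh_mean @ w_neigh, 0.0)
        out.append(h / (np.linalg.norm(h) + 1e-12))   # L2-normalise as in GraphSAGE
    return np.stack(out)

rng = np.random.default_rng(1)
neighbors = {0: [1, 2, 3, 4], 1: [0], 2: [0, 3], 3: [0, 2], 4: [0]}   # toy contact graph
x = rng.normal(size=(5, 6))
h = sage_mean_layer(x, neighbors, rng.normal(size=(6, 4)), rng.normal(size=(6, 4)),
                    num_samples=2, rng=rng)
```

In GSALIDP-style models, a layer like this would be applied to each conformation's graph and the resulting embedding sequence passed to a recurrent network.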

Recent architectures leverage residual connections, specialized convolutional kernels, and hybrid dynamic adjustment strategies to improve the modeling of structured data such as graph-based and 3D protein representations. For example, Li et al. integrated residual connections, dense connections, and dilated convolutions into GCNs, substantially increasing trainable depth and training stability [37]. SO(3) denotes the group of all rotations in three-dimensional space, and SO(3)-equivariant neural networks are designed so that their outputs transform consistently when the input structure is rotated (invariant outputs, such as scalar scores, do not change at all). Such networks are typically built on graph or spherical convolutional neural networks. Because protein properties do not depend on the orientation of a structure in space, Aykent and Xia proposed GBPNet [38], an SO(3)-equivariant neural network for protein structure representation, which achieved notable performance gains on downstream tasks.
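The distinction between invariance and equivariance can be checked numerically. The sketch below draws a random rotation in SO(3), applies it (plus a translation) to toy Cα coordinates, and shows that the raw coordinates change while the residue-residue distance matrix does not. Distance features are therefore invariant; an equivariant network such as GBPNet additionally keeps vector features that rotate together with the input, which this minimal example does not attempt to reproduce.

```python
import numpy as np

def random_rotation(rng):
    """Draw a random 3x3 rotation matrix (an element of SO(3)) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q @ np.diag(np.sign(np.diag(r)))   # fix column signs so the factorization is unique
    if np.linalg.det(q) < 0:               # flip one axis to exclude reflections (det = -1)
        q[:, 0] = -q[:, 0]
    return q

def pairwise_distances(coords):
    """Residue-residue distance matrix: invariant to rotations and translations."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.linalg.norm(diff, axis=-1)

rng = np.random.default_rng(42)
ca = rng.normal(scale=10.0, size=(6, 3))                 # toy C-alpha coordinates, 6 residues
rot = random_rotation(rng)
ca_rotated = ca @ rot.T + np.array([5.0, -2.0, 1.0])     # rotate, then translate, the structure

# The coordinates differ, but pairwise_distances(ca) equals pairwise_distances(ca_rotated).
```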

Convolutional neural networks for protein-protein interactions

A typical CNN module consists of convolutional layers, pooling layers, fully connected layers, and, in many modern designs, additional enhancements such as residual shortcuts (Fig. 1C) [39, 40]. Recently, three-dimensional convolutional neural networks (3D-CNNs) have been applied to protein structures because they can model multi-level spatial features directly from 3D structural data.
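As a minimal illustration of the convolution and pooling stages of Fig. 1C, the sketch below one-hot encodes a protein sequence, applies a single 1-D convolutional layer with ReLU (implemented, as in deep learning frameworks, as a cross-correlation), and uses global max pooling to obtain a fixed-length protein vector. The sequence, kernel width of 3, and 16 filters are illustrative assumptions; the fully connected classifier and the 3-D (voxel-grid) variant are not shown.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"   # the 20 standard amino acids

def one_hot(seq):
    """Encode a protein sequence as an (L, 20) one-hot matrix."""
    idx = {aa: i for i, aa in enumerate(AMINO_ACIDS)}
    mat = np.zeros((len(seq), len(AMINO_ACIDS)))
    for pos, aa in enumerate(seq):
        mat[pos, idx[aa]] = 1.0
    return mat

def conv1d_relu(x, kernels, bias):
    """Valid 1-D convolution: x (L, C_in), kernels (K, C_in, C_out) -> (L-K+1, C_out), then ReLU."""
    k = kernels.shape[0]
    windows = np.stack([x[i:i + k] for i in range(x.shape[0] - k + 1)])  # (L-K+1, K, C_in)
    out = np.einsum("wkc,kco->wo", windows, kernels) + bias
    return np.maximum(out, 0.0)

rng = np.random.default_rng(0)
x = one_hot("MKTAYIAKQRQISFVKSHFSRQ")                 # toy 22-residue sequence
feature_map = conv1d_relu(x, rng.normal(size=(3, 20, 16)), np.zeros(16))
protein_vector = feature_map.max(axis=0)                # global max pooling -> (16,) vector
```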

Advances in 3D structural representation, driven mainly by 3D-CNNs and geometric invariance-based approaches, have significantly improved the modeling of spatial and geometric features in protein analysis and drug design. RepVGG, a lightweight convolutional neural network originally designed for image classification, offers high computational efficiency and inference speed. Building on RepVGG, Guo et al. proposed TRScore, a protein docking method based on a 3D RepVGG, which accurately distinguishes favorable near-native conformations from unfavorable non-native docking complexes and performs strongly without requiring additional input features [41]. To address the sensitivity of 3D-CNNs to rotations and translations of the initial structure, Chen et al. introduced Eq. 3DCNN, which integrates rotation-invariant modules to predict protein properties and capture non-geometric features [42]. Zhu et al. proposed DeepRank, a deep learning framework for data mining of 3D PPI interfaces [43]. In drug design, Sree et al. developed a 3D-CNN-based protein structure prediction method to improve the accuracy of drug recommendation systems [44].

Combining several network architectures has also proved effective. Li et al. introduced MARPPI, a PPI prediction model that employs a two-channel framework with a multi-scale residual network design [45]. Zhang et al. developed DeepGOA, which integrates protein sequence data with the PPI network [46]. DeepGOA uses a Bi-LSTM (bidirectional long short-term memory) network with multi-scale CNNs to generate semantic features of protein sequences, and the DeepWalk technique to represent the PPI network. The two representations are used jointly to predict protein functions, and DeepGOA outperformed DeepGO and BLAST in practice. CNN-based architectures have also been used to predict the locations of water molecules on protein structures; Park and Seok introduced GalaxyWater-CNN for this purpose [47].
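DeepWalk, which DeepGOA uses to embed the PPI network, first generates short uniform random walks over the graph and then treats each walk as a "sentence" for a skip-gram word2vec model. The sketch below covers only the walk-generation step on a toy network whose protein identifiers are hypothetical; the resulting walks could then be passed to a skip-gram implementation (for example gensim's `Word2Vec` with `sg=1`) to obtain node embeddings.

```python
import random

def deepwalk_walks(adjacency, walk_length, walks_per_node, seed=0):
    """Generate truncated uniform random walks, the corpus DeepWalk feeds to skip-gram."""
    rng = random.Random(seed)
    walks = []
    for _ in range(walks_per_node):
        nodes = list(adjacency)
        rng.shuffle(nodes)                    # visit start nodes in a random order each pass
        for start in nodes:
            walk = [start]
            while len(walk) < walk_length:
                nbrs = adjacency[walk[-1]]
                if not nbrs:                  # dead end: stop this walk early
                    break
                walk.append(rng.choice(nbrs)) # uniform choice among current node's neighbours
            walks.append(walk)
    return walks

# Toy PPI network with hypothetical protein identifiers, stored as an adjacency list.
ppi = {
    "P1": ["P2", "P3"],
    "P2": ["P1", "P3"],
    "P3": ["P1", "P2", "P4"],
    "P4": ["P3"],
}
walks = deepwalk_walks(ppi, walk_length=5, walks_per_node=2)
```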

Recurrent neural networks for protein-protein interactions

In PPI prediction, recurrent neural networks (RNNs) have been used to process and analyze the semantic information of protein sequences (Fig. 1D) [48]. The input at each time step (e.g., Xt, X0, X1, X2) represents a protein fragment or feature vector and is processed by a shared computational unit A to produce hidden states (e.g., h0, h1, h2, h3). These hidden states carry contextual information from the protein sequence that can reflect potential interactions between proteins. RNNs can thus capture contextual dependencies between amino acids in protein sequences, enabling effective prediction of protein interactions and revealing connections relevant to protein function and biological processes. Because RNN-based models handle sequentially correlated data well at multiple scales, many studies have used them to learn protein sequence representations and have built hybrid models by combining them with other data structures. For example, Alakus and Turkoglu proposed a protein localization approach that combines AVL trees with bidirectional RNNs to identify interactions between SARS-CoV-2 and human proteins [49].
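The recurrence in Fig. 1D can be written as h_t = tanh(W_x x_t + W_h h_(t-1) + b), with the same weights reused at every time step (the shared unit A). The NumPy sketch below runs this recurrence over a toy sequence of per-residue feature vectors; the dimensions and random weights are assumptions for illustration.

```python
import numpy as np

def rnn_forward(inputs, w_x, w_h, b):
    """Vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), same weights at every step."""
    h = np.zeros(w_h.shape[0])                 # initial hidden state h_{-1} = 0
    states = []
    for x_t in inputs:                         # one residue (or fragment) per time step
        h = np.tanh(w_x @ x_t + w_h @ h + b)
        states.append(h)
    return np.stack(states)                    # (sequence length, hidden size)

rng = np.random.default_rng(0)
seq_features = rng.normal(size=(10, 20))       # 10 residues, 20 features each
hidden = rnn_forward(seq_features,
                     rng.normal(size=(8, 20)) * 0.1,   # W_x
                     rng.normal(size=(8, 8)) * 0.1,    # W_h
                     np.zeros(8))                      # b
```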

Long short-term memory (LSTM) networks have become a key component of PPI prediction because they capture sequence order and residue dependencies. By modeling dependencies between residues that are far apart in the sequence, long-range dependency learning has helped clarify the relationship between protein folding and function. Regularization methods have been used to improve the performance of LSTM-based frameworks. For instance, Deng et al. proposed a hybrid deep learning framework combining CNNs and LSTMs, with performance optimized through logistic regression with L1 regularization [50]. Zhou et al. proposed a method for calculating the frustration index by evaluating the additional stabilization energy of residue pairs relative to statistical energy distributions, which has also been used for PPI prediction [51]. The LSTM-PHV model developed by Tsukiyama et al. predicts human-virus PPIs from amino acid sequences by combining LSTM networks with word2vec: amino acid sequences are converted into “word vectors,” and the LSTM learns the complex dependencies within the sequences [52]. The RAPPPID method, proposed by Szymborski and Emad, combines a dual AWD-LSTM network with multiple regularization techniques to address the poor generalization of traditional PPI prediction models to unseen proteins and their susceptibility to data bias; its regularized architecture remains stable on complex datasets [53].
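The gating that lets LSTMs retain long-range information is compact enough to write out. The sketch below implements a single LSTM step in NumPy and runs it over a toy 15-residue sequence; the gate ordering, dimensions, and random weights are assumptions (frameworks differ in how they order the gates), and a bidirectional LSTM would run a second cell over the reversed sequence and concatenate the two hidden states.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, w, u, b):
    """One LSTM step. w: (4H, D), u: (4H, H), b: (4H,); gate order [input, forget, candidate, output]."""
    hdim = h_prev.shape[0]
    z = w @ x_t + u @ h_prev + b
    i = sigmoid(z[:hdim])                 # input gate: how much new information to write
    f = sigmoid(z[hdim:2 * hdim])         # forget gate: how much of the old cell state to keep
    g = np.tanh(z[2 * hdim:3 * hdim])     # candidate cell update
    o = sigmoid(z[3 * hdim:])             # output gate
    c = f * c_prev + i * g                # additive update lets information persist over long ranges
    h = o * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
D, H = 20, 8
w = rng.normal(size=(4 * H, D)) * 0.1
u = rng.normal(size=(4 * H, H)) * 0.1
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(15, D)):      # toy 15-residue sequence
    h, c = lstm_step(x_t, h, c, w, u, b)
```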

Exploring emerging techniques and approaches

Network optimization of attention mechanisms

Attention-based deep learning methods are reshaping PPI prediction [54–56]. The attention mechanism improves prediction accuracy by modeling long-range dependencies at the residue level and by using dynamic weights to capture higher-order relationships between proteins. Cross-attention builds representations that connect different modalities, while gated fusion architectures strengthen multi-scale feature extraction. Representative works include the following. Li et al. proposed SDNN-PPI, which uses amino acid composition (AAC), conjoint triad (CT), and auto covariance (AC) features and employs self-attention to enhance the feature extraction of deep neural networks (DNNs), achieving high prediction accuracy on multiple datasets [57]. Zhai et al. proposed LGS-PPIS, a local-global information aggregation framework that combines an edge-aware graph convolutional network (EA-GCN) with a self-attention (SA-RIM) module for PPI site (PPIS) prediction [58]. Wu et al. proposed AttentionEP, which integrates cross- and self-attention mechanisms, extracts spatial and temporal features using GCN, GAT, and BiLSTM, incorporates subcellular localization data, and uses a ResNet classifier to predict essential proteins [56, 59].
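The self- and cross-attention mechanisms mentioned above share the same core operation, scaled dot-product attention softmax(QKᵀ/√d)V. In the sketch below, self-attention draws queries, keys, and values from one protein's residues, while cross-attention takes queries from protein A and keys and values from protein B, yielding a residue-by-residue weight matrix that can be visualized for interpretation. The random residue features and projection matrices are placeholders; a real model would learn the projections and typically use several heads.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(query_feats, key_feats, w_q, w_k, w_v):
    """Scaled dot-product attention softmax(Q K^T / sqrt(d)) V.

    Self-attention: query_feats and key_feats are the same protein's residues.
    Cross-attention: queries come from protein A, keys/values from protein B.
    """
    q, k, v = query_feats @ w_q, key_feats @ w_k, key_feats @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))   # (L_query, L_key); rows sum to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
d_in, d = 16, 8
w_q, w_k, w_v = (rng.normal(size=(d_in, d)) for _ in range(3))
prot_a = rng.normal(size=(12, d_in))      # 12 residues of protein A
prot_b = rng.normal(size=(30, d_in))      # 30 residues of protein B

self_out, self_w = attention(prot_a, prot_a, w_q, w_k, w_v)     # weights: (12, 12)
cross_out, cross_w = attention(prot_a, prot_b, w_q, w_k, w_v)   # weights: (12, 30)
```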

Attention weights are interpretable: their distribution can be visualized to analyze how a model recognizes protein interfaces. Hierarchical attention models reveal coupling patterns among residue-level features, helping researchers deconstruct how features at multiple scales act together and offering computational evidence about protein conformational selection preferences. Song et al. proposed a method for clustering spatially resolved gene expression data based on graph-regularized convolutional neural networks, supporting biological interpretation of gene clusters in their spatial context [60]. Tang et al. proposed HANPPIS, a hierarchical attention network that combines six feature types, including PSSM, secondary structure, pre-trained vectors, hydrophilicity, and amino acid position [61]. Wang et al. proposed ECA-PHV, an interpretable model based on an efficient channel attention mechanism for predicting human-virus PPIs [62].

Several other innovative PPI prediction frameworks and auxiliary models have also emerged. Li and Liu proposed MuToN, a geometric deep learning framework that uses a geometric attention network to identify mutation-induced changes in the binding interface and to calculate the allosteric effects of amino acids [63]. Ieremie et al. developed TransformerGO, which generates graph representations of GO terms (standardized Gene Ontology descriptors of gene product functions, the biological processes they participate in, and the cellular components in which they are located) using the node2vec algorithm. The model dynamically captures semantic similarity between GO terms and outperformed traditional similarity metrics and existing machine learning methods on Saccharomyces cerevisiae and Homo sapiens datasets [64] (Table 2).

Table 2.

Summary of network optimization strategies using attention mechanisms for PPI prediction

| Author | Model Name | Research method | Evaluation metrics |
|---|---|---|---|
| Li et al. [57] | SDNN-PPI | Uses AAC, CT, and AC features with a self-attention mechanism to enhance DNN feature extraction. | (H. sapiens) ACC = 0.9894 ± 0.0019; MCC = 0.9757 ± 0.0060; AUC = 0.9960 |
| Zhai et al. [58] | LGS-PPIS | Local-global information aggregation framework combining an edge-aware GCN and self-attention modules. | ACC = 0.802; F1 = 0.502; MCC = 0.398; AUROC = 0.819 |
| Wu et al. [59] | AttentionEP | Multi-scale feature fusion combining cross-attention and self-attention mechanisms. | ACC = 0.9610; F-score = 0.8262; AUC = 0.9793; Precision = 0.8627; BACC = 0.8880 |
| Song et al. [60] | CNN | Clustering of spatially resolved gene expression data based on graph regularization and CNNs. | ACC = 0.933923; F1 = 0.932646; AUC = 0.935120 |
| Tang et al. [61] | HANPPIS | Method based on a hierarchical attention mechanism. | ACC = 0.631; Precision = 0.291; F1 = 0.393 |
| Wang et al. [62] | ECA-PHV | Channel attention-based model for predicting human-virus PPIs. | (TR1) ACC = 0.9221; (TR2) ACC = 0.9263; (TS1) ACC = 0.869; (TS2) ACC = 0.880 |
| Li and Liu [63] | MuToN | Geometric deep learning-based framework. | PCC = 0.991; Spearman correlation = 0.62 |
| Ieremie et al. [64] | TransformerGO | Generates graph embeddings of GO terms with node2vec to capture semantic similarity between GO terms dynamically. | S. cerevisiae, GO-set size (10, 20): AUC = 0.973; H. sapiens, GO-set size (10, 30): AUC = 0.953 |
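Because the tables in this review mix accuracy with MCC, F1, and AUC, it helps to recall how the threshold-based metrics follow from confusion-matrix counts. The sketch below computes them for a hypothetical, highly imbalanced interface-prediction result (the counts are invented for illustration): accuracy is 0.93, yet MCC is only about 0.44, which is why MCC and F1 are more informative than accuracy for site prediction, where non-interface residues dominate. AUC and AUPR are not covered, since they require ranked prediction scores rather than counts.

```python
import math

def binary_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, F1 and MCC from confusion-matrix counts."""
    acc = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"acc": acc, "precision": precision, "recall": recall, "f1": f1, "mcc": mcc}

# Hypothetical site-prediction result: 80 interface residues among 1,000.
m = binary_metrics(tp=30, fp=20, tn=900, fn=50)
# m["acc"] is 0.93, while m["mcc"] is only about 0.44
```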

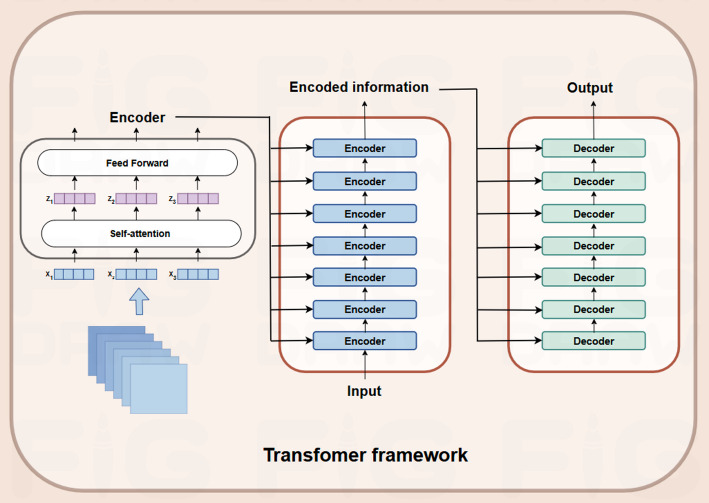

Transformer model structure

The Transformer is a model architecture built from stacks of identical layers; each encoder layer has two main components, a multi-head self-attention mechanism and a simple position-wise fully connected feed-forward network (Fig. 2) [65].

Fig. 2.

The Transformer framework comprises two principal components. The encoder processes an input sequence into an internal representation using self-attention and feed-forward layers: self-attention lets the model emphasize the most relevant parts of the input, and the feed-forward layer transforms each token independently to capture complex patterns. The encoded representation is then passed to the decoder, which uses self-attention, together with attention over the encoded representation, to refine the output
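To complement Fig. 2, the following NumPy sketch implements one Transformer encoder layer: multi-head self-attention and a position-wise feed-forward network, each wrapped in a residual connection followed by layer normalization (the post-norm arrangement of the original Transformer). Several simplifications are assumed: no dropout, no learnable LayerNorm parameters, no positional encodings, and random weights. Without positional encodings, permuting the input residues simply permutes the outputs, which is why sequence models add position information.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """LayerNorm over the feature axis (learnable gain and bias omitted)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, n_heads):
    L, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):
        # (L, d_model) -> (heads, L, d_head): each head attends in its own subspace.
        return t.reshape(L, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)    # (heads, L, L)
    heads = softmax(scores) @ v                            # (heads, L, d_head)
    concat = heads.transpose(1, 0, 2).reshape(L, d_model)  # re-join the heads
    return concat @ w_o

def encoder_layer(x, params, n_heads=4):
    """Post-norm encoder layer: self-attention and position-wise FFN, each with residual + LayerNorm."""
    x = layer_norm(x + multi_head_self_attention(x, *params["attn"], n_heads=n_heads))
    w1, b1, w2, b2 = params["ffn"]
    ffn = np.maximum(x @ w1 + b1, 0.0) @ w2 + b2            # same two-layer MLP at every position
    return layer_norm(x + ffn)

rng = np.random.default_rng(0)
d_model, d_ff = 16, 32
params = {
    "attn": [rng.normal(size=(d_model, d_model)) * 0.2 for _ in range(4)],  # W_q, W_k, W_v, W_o
    "ffn": [rng.normal(size=(d_model, d_ff)) * 0.2, np.zeros(d_ff),
            rng.normal(size=(d_ff, d_model)) * 0.2, np.zeros(d_model)],
}
tokens = rng.normal(size=(10, d_model))   # 10 residue embeddings, no positional encoding
out = encoder_layer(tokens, params)
```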

Architectures built from multiple Transformer layers have been incorporated into ensemble frameworks and combined with pre-trained protein language models, with strong results. EnsemPPIS, proposed by Mou et al., is an ensemble framework based on Transformers and gated convolutional networks: Transformer layers extract residue interactions from protein sequences, and an ensemble learning strategy integrates global and local sequence features [66]. The MaTPIP framework, proposed by X. Li et al., integrates CNN and Transformer architectures and combines a pre-trained protein language model (PLM) with manually curated protein sequence data; it performs well on both human and cross-species PPI benchmark datasets [67]. Kang et al. further extended the Transformer architecture with HN-PPISP, a hybrid neural network that integrates an MLP-Mixer module with a two-stage multi-branch Transformer structure, exploiting attention mechanisms and parallel feature aggregation. This approach markedly improves the accuracy of PPI site prediction and outperforms seven established methods [68].
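Gated convolutional networks, one of the two components of EnsemPPIS, multiply a linear convolution by a sigmoid "gate" computed from the same input, in the style of gated linear units. The sketch below is a generic GLU-style 1-D convolution over per-residue embeddings, with zero padding so that each residue keeps one output; the kernel width, dimensions, and weights are assumptions, and it is not the EnsemPPIS implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_conv1d(x, w_a, w_b, b_a, b_b):
    """GLU-style gated 1-D convolution: (X*W_a + b_a) * sigmoid(X*W_b + b_b).

    x: (L, C_in); w_a, w_b: (K, C_in, C_out) with odd K. Zero 'same' padding keeps L outputs.
    """
    k = w_a.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))                              # pad along the sequence
    windows = np.stack([xp[i:i + k] for i in range(x.shape[0])])     # (L, K, C_in)
    linear = np.einsum("lkc,kco->lo", windows, w_a) + b_a
    gate = sigmoid(np.einsum("lkc,kco->lo", windows, w_b) + b_b)      # values in (0, 1)
    return linear * gate                                              # gate controls what passes

rng = np.random.default_rng(0)
residues = rng.normal(size=(25, 32))     # e.g. per-residue embeddings (hypothetical size)
w_a = rng.normal(size=(3, 32, 16)) * 0.1
w_b = rng.normal(size=(3, 32, 16)) * 0.1
out = gated_conv1d(residues, w_a, w_b, np.zeros(16), np.zeros(16))
```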

Point cloud-based deep learning offers a promising geometry-driven paradigm for representing protein 3D structures. Wang et al. were the first to apply PointNet and PointTransformer to protein-ligand binding affinity prediction, significantly improving prediction accuracy [69]. Chen et al. likewise used PointNet and 3D point cloud neural networks to evaluate protein docking models [70]. These efforts further improved the accuracy of docking evaluation, highlighting the potential of point cloud-based approaches in computational protein structure analysis (Table 3).
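The key idea of PointNet is a shared per-point MLP followed by a symmetric pooling function, so the output does not depend on the order in which atoms or points are listed. The sketch below implements that idea in NumPy and lets one check that shuffling the points leaves the global feature unchanged; the layer sizes and random cloud are assumptions, and PointNet's input-alignment (T-Net) modules and PointTransformer's local attention are omitted.

```python
import numpy as np

def pointnet_global_feature(points, w1, b1, w2, b2):
    """PointNet-style encoder: a shared per-point MLP followed by max pooling over points.

    points: (N, 3) coordinates (per-atom features could be appended as extra columns).
    Max pooling is symmetric, so the output does not depend on the order of the points.
    """
    h = np.maximum(points @ w1 + b1, 0.0)    # same weights applied to every point
    h = np.maximum(h @ w2 + b2, 0.0)
    return h.max(axis=0)                      # order-invariant global descriptor

rng = np.random.default_rng(0)
cloud = rng.normal(size=(200, 3))             # toy point cloud, e.g. interface atom coordinates
params = (rng.normal(size=(3, 32)), np.zeros(32), rng.normal(size=(32, 64)), np.zeros(64))
g = pointnet_global_feature(cloud, *params)
g_shuffled = pointnet_global_feature(cloud[rng.permutation(200)], *params)
```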

Table 3.

Summary of transformer-based architecture for PPI prediction

| Author | Model Name | Research method | Evaluation metrics |
|---|---|---|---|
| Mou et al. [66] | EnsemPPIS | Ensemble framework based on Transformer and gated convolutional networks. | (1jtdB) MCC = 0.760; (1b6cA) MCC = 0.542 |
| X. Li et al. [67] | MaTPIP | Deep learning framework that fuses pre-trained protein language models. | (S. cerevisiae) AUPR = 56.6; F-Score = 54.4; AUROC = 87.5 |
| Kang et al. [68] | HN-PPISP | Hybrid neural network combining an MLP-Mixer module with a two-stage multi-branch Transformer module. | ACC = 0.667; MCC = 0.244; F-measure = 0.427; AUCPR = 0.360 |
| Wang et al. [69] | PointNet and PointTransformer | Point cloud-based deep learning strategy for protein-ligand binding affinity prediction. | Average Rp = 0.827 |
| Chen et al. [70] | PointDE | Protein docking evaluation using a 3D point cloud neural network. | Top 1 success rate = 65.6% (GNN-DOVE: 64.1%); Top 5 = 90.1% (GNN-DOVE: 84.9%); Top 10 = 91.8% (GNN-DOVE: 86.7%) |

Multitasking learning

In PPI prediction, a multi-task learning framework addresses several related tasks through shared layers, so that the optimization of the shared parameters is constrained by all tasks at once. The shared layers learn feature dependencies common to the tasks, while task-specific layers handle each individual task (Fig. 3A). Sharing representations in this way helps the model generalize across tasks and improves overall performance, because the model learns from both the interaction labels and other biologically meaningful properties of the proteins.

Fig. 3.

Overview of multi-task learning and multimodal learning. (A) Multi-task learning mechanism. (B) Interdisciplinary multimodal expansion applications
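The shared-layer design in Fig. 3A (hard parameter sharing) can be sketched as one shared hidden layer feeding two task-specific heads whose losses are summed with a task weight. The toy example below pairs a main interface-residue task with an auxiliary buried-residue task, loosely echoing the auxiliary tasks of Capel et al. [73], and masks residues without interface labels out of the main loss, which is one simple way to use partially annotated data. All data are random, the task weight of 0.5 is a hypothetical choice, and only the forward pass and loss are shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def bce(p, y, eps=1e-7):
    """Binary cross-entropy averaged over examples."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

rng = np.random.default_rng(0)
n_res, d_in, d_hidden = 50, 20, 16
x = rng.normal(size=(n_res, d_in))                  # per-residue input features (toy)
y_interface = rng.integers(0, 2, size=n_res)        # main task labels: interface residue
y_buried = rng.integers(0, 2, size=n_res)           # auxiliary task labels: buried residue
has_label = rng.random(n_res) < 0.3                 # only ~30% of residues have interface labels

w_shared = rng.normal(size=(d_in, d_hidden)) * 0.1  # shared layer used by both tasks
w_main = rng.normal(size=d_hidden) * 0.1            # task-specific head: interface
w_aux = rng.normal(size=d_hidden) * 0.1             # task-specific head: burial

h = np.maximum(x @ w_shared, 0.0)                   # shared representation
loss_main = bce(sigmoid(h[has_label] @ w_main), y_interface[has_label])  # labelled residues only
loss_aux = bce(sigmoid(h @ w_aux), y_buried)        # auxiliary task uses every residue
aux_weight = 0.5                                    # hypothetical task weight, tuned in practice
total_loss = loss_main + aux_weight * loss_aux      # gradients of both terms reach w_shared
```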

Li et al. proposed MgslaPPI, a multi-task graph structure learning method that decomposes PPI prediction into two stages: amino acid residue reconstruction (A2RR) and protein interaction prediction (PIP). Two auxiliary tasks, protein feature reconstruction (PFR) and masked interaction prediction (MIP), are introduced to strengthen the model's ability to predict interactions [71]. Similarly, Yang et al. proposed MpbPPI, which combines a multi-task pre-training strategy with geometric equivariance to predict the effect of amino acid mutations on PPIs. Multi-task learning has also been applied to challenges such as sample bias and missing data [72]. Capel et al. proposed a multi-task learning strategy to address data scarcity in predicting PPI interface residues: by incorporating secondary structure prediction, solvent accessibility prediction, and buried residue identification as additional tasks, the model mitigates the impact of missing PPI annotations [73] (Table 4).

Table 4.

Summary of the contributions of multi-task learning to the study of protein-protein interactions

| Author | Model Name | Research method | Evaluation parameter |
|---|---|---|---|
| Li et al. [71] | MgslaPPI | Two-stage framework (A2RR and PIP) with auxiliary tasks PFR and MIP to enhance interaction prediction. | SHS27K: F1 = 79.95%; SHS148K: F1 = 83.78% |
| Yang et al. [72] | MpbPPI | Multi-task pre-training-based equivariant approach for predicting the effect of amino acid mutations on protein-protein interactions. | S4169: Rp = 0.795 ± 0.004; S1131: Rp = 0.865 ± 0.003; S645: Rp = 0.615 ± 0.013; M1101: Rp = 0.787 ± 0.002 |
| Capel et al. [73] | Customized OPUS-TASS | Multi-task learning to leverage partially annotated data for PPI interface prediction. | AUC-ROC = 0.732 ± 0.004 |

Multimodal approach and integrated model

Amid the rapid advancement of multi-omics data analysis, integrative models that combine diverse data modalities, such as sequence, structural, and functional data, are widely used to improve prediction accuracy and shed light on interaction mechanisms [74]. For instance, Kang et al. introduced a multimodal approach that fuses structural and sequence data: a pre-trained transformer network extracts structural features, and a masked language model encodes sequence information [75]. Similarly, Chen and Hu combined sequence, structure, and adjacency features to predict residue-residue interactions using a stacked meta-learning method [76].
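Feature-level fusion of the kind described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the architecture of Kang et al. or Chen and Hu: random vectors stand in for the outputs of a sequence encoder and a structure encoder, each modality is projected into a shared space and concatenated, and the two proteins are combined with an element-wise product and an absolute difference so that the score does not depend on the order of the pair. All dimensions and weights are hypothetical and untrained.

```python
import numpy as np


def project(x, W):
    """Modality-specific projection into a common space (tanh nonlinearity)."""
    return np.tanh(x @ W)


def fuse(seq_emb, struct_emb, W_seq, W_struct):
    """Feature-level (early) fusion: project each modality, then concatenate."""
    return np.concatenate([project(seq_emb, W_seq), project(struct_emb, W_struct)])


def pair_features(a, b):
    """Symmetric pair representation, so that score(A, B) == score(B, A)."""
    return np.concatenate([a * b, np.abs(a - b)])


def interaction_score(pair, w, bias=0.0):
    """Logistic scoring head on the pair representation."""
    return 1.0 / (1.0 + np.exp(-(pair @ w + bias)))


# Placeholder embeddings standing in for a sequence encoder (dim 32)
# and a structure encoder (dim 16); all weights are random and untrained.
rng = np.random.default_rng(0)
W_seq, W_struct = rng.normal(size=(32, 8)), rng.normal(size=(16, 8))
prot_a = fuse(rng.normal(size=32), rng.normal(size=16), W_seq, W_struct)
prot_b = fuse(rng.normal(size=32), rng.normal(size=16), W_seq, W_struct)
w = rng.normal(size=32)
score_ab = interaction_score(pair_features(prot_a, prot_b), w)
score_ba = interaction_score(pair_features(prot_b, prot_a), w)
```

Concatenation is the simplest fusion choice; attention-based or gated fusion can instead learn how much weight each modality receives per protein.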

In interdisciplinary multimodal applications, Rafiei et al. proposed DeepTraSynergy, a deep learning method that integrates multiple data types, including drug-target interactions, protein-protein interactions, and cell-target interactions. DeepTraSynergy uses a transformer model to extract drug features and node2vec to represent proteins. Through multi-task learning, it simultaneously predicts drug toxicity, drug-target interactions, and drug combination synergy, and it improves prediction accuracy by jointly optimizing multiple loss functions, including a synergy loss and a toxicity loss (Fig. 3B) [77]. Researchers also use knowledge graphs (e.g., of protein families [78]) to represent and integrate associations among data from different modalities. Because neural network components are modular, they can be designed according to their functional purpose and combined into integrated models that exploit the strengths of different architectures for multi-level, multi-dimensional analysis. Baek et al. proposed a three-track neural network that integrates one-dimensional sequence, two-dimensional distance map, and three-dimensional structural information to predict protein structures and interactions, achieving accuracy approaching that of DeepMind's system at CASP14 [79]. Asim et al. presented ADH-PPI, a deep hybrid model that combines FastText embeddings with LSTM, CNN, and self-attention layers, improving accuracy by 4% and the Matthews correlation coefficient (MCC) by 6% relative to prevailing PPI prediction methods [80].
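DeepTraSynergy is described as using node2vec to represent proteins. As background, node2vec generates second-order biased random walks over a network and then trains a skip-gram (word2vec) model on the walks to obtain node embeddings. The sketch below implements only the walk step on a hypothetical unweighted PPI graph; the return parameter `p` and in-out parameter `q` follow the node2vec definitions, but the parameter values and the toy graph are assumptions, and the skip-gram training stage is omitted.

```python
import random


def node2vec_walk(adj, start, length, p=1.0, q=1.0, rng=None):
    """Second-order biased random walk as defined by node2vec (unweighted graph).

    adj: dict mapping each node to the set of its neighbours.
    p: return parameter; a small p makes stepping back to the previous node likely.
    q: in-out parameter; q < 1 favours moving outward (DFS-like),
       q > 1 keeps the walk near its previous node (BFS-like).
    """
    rng = rng or random.Random(0)
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        nbrs = sorted(adj[cur])
        if not nbrs:
            break  # dead end
        if len(walk) == 1:
            walk.append(rng.choice(nbrs))  # the first step is uniform
            continue
        prev = walk[-2]
        weights = []
        for x in nbrs:
            if x == prev:
                weights.append(1.0 / p)  # distance 0 from prev: return
            elif x in adj[prev]:
                weights.append(1.0)      # distance 1 from prev
            else:
                weights.append(1.0 / q)  # distance 2 from prev: explore outward
        walk.append(rng.choices(nbrs, weights=weights, k=1)[0])
    return walk


# Hypothetical toy PPI network (undirected)
edges = [("P1", "P2"), ("P1", "P3"), ("P2", "P3"), ("P3", "P4"), ("P4", "P5")]
adj = {}
for u, v in edges:
    adj.setdefault(u, set()).add(v)
    adj.setdefault(v, set()).add(u)
walks = [node2vec_walk(adj, n, length=10, p=1.0, q=0.5) for n in sorted(adj)]
```

The resulting walks play the role of "sentences" of protein identifiers; a skip-gram model trained on them places proteins that co-occur in walks close together in embedding space.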

Multi-view models built on AlphaFold represent a significant advance in computational protein analysis. For instance, Meng et al. introduced MVGNN-PPIS, a multi-view graph neural network that combines AlphaFold3-predicted structures with transfer learning and outperforms existing methods across multiple PPI datasets [24]. Similarly, the AlphaBridge framework builds on AlphaFold3 to analyze predicted macromolecular complexes [81]. It incorporates AlphaFold3 confidence metrics, namely the predicted local distance difference test (pLDDT), predicted aligned error (PAE), and predicted distance error (PDE) [82], into a graph-based clustering algorithm. This design allows AlphaBridge to identify and characterize the interaction interfaces within macromolecular complexes, whether they involve protein-protein or protein-nucleic acid interactions.
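To make the role of the confidence metrics concrete, the sketch below shows a deliberately simplified, hypothetical way to turn a predicted complex into interface clusters: inter-chain residue pairs count as contacts only if they are spatially close and have a low predicted aligned error (PAE) in both directions, and contacts are grouped into connected components. This is not AlphaBridge's published algorithm; the 8 Å distance cutoff, the 10 Å PAE cutoff, and the function name are illustrative assumptions, and pLDDT and PDE filtering are omitted for brevity.

```python
import numpy as np


def confident_interfaces(coords, chain_ids, pae, dist_cutoff=8.0, pae_cutoff=10.0):
    """Group confident inter-chain residue contacts into connected components.

    coords: (n, 3) coordinates of one representative atom per residue (angstrom).
    chain_ids: length-n sequence of chain labels.
    pae: (n, n) predicted aligned error matrix (angstrom); not symmetric in general.
    Returns a sorted list of residue-index groups, one per candidate interface.
    """
    coords = np.asarray(coords, dtype=float)
    pae = np.asarray(pae, dtype=float)
    n = len(chain_ids)
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    parent = list(range(n))

    def find(i):  # union-find with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    at_interface = set()
    for i in range(n):
        for j in range(i + 1, n):
            if chain_ids[i] == chain_ids[j]:
                continue  # only inter-chain contacts define interfaces here
            confident = max(pae[i, j], pae[j, i]) < pae_cutoff
            if dist[i, j] < dist_cutoff and confident:
                at_interface.update((i, j))
                parent[find(i)] = find(j)
    groups = {}
    for i in sorted(at_interface):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Two chains with two contact patches; the second patch has a high (uncertain) PAE
coords = [[0, 0, 0], [100, 0, 0], [5, 0, 0], [104, 0, 0]]
chains = ["A", "A", "B", "B"]
pae = np.full((4, 4), 5.0)
pae[1, 3] = 20.0
interfaces = confident_interfaces(coords, chains, pae)
```

Here only residues 0 and 2 form a confident interface; the second contact (residues 1 and 3) is equally close in space but is discarded because its PAE is high.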

Researchers have also integrated multi-omics data to predict protein complex formation more accurately, deepening the understanding of diverse biological processes within cells [83]. This integration has, in turn, improved the efficiency of disease prediction and of drug research and development [84]. For example, Schulte-Sasse et al. developed EMOGI, a graph convolutional network that integrates multi-omics pan-cancer data with PPI networks to predict cancer genes [85] (Table 5).

Table 5.

Summary of multimodal approaches and integrated models in PPI prediction

| Author | Model Name | Research method | Evaluation parameter |
|---|---|---|---|
| Kang et al. [75] | AFTGAN | Multi-type PPI prediction based on an attention-free transformer and a graph attention network. | SHS27K: Micro-F1 = 0.867, Hamming loss = 0.087; SHS148K: Micro-F1 = 0.920, Hamming loss = 0.052 |
| Chen and Hu [76] | RRI-Meta | Residue-residue interaction prediction via stacked meta-learning. | 3HMX: AUROC = 0.97; 1ML0: AUROC = 0.74; 1RKE: AUROC = 0.86 |
| Rafiei et al. [77] | DeepTraSynergy | Prediction of drug combination synergy using multimodal deep learning with transformers. | DrugCombDB: ACC = 0.7715, AUC-ROC = 0.8321, F1 = 0.7608; OncologyScreen: ACC = 0.8052, AUC-ROC = 0.8637, F1 = 0.8112 |
| Baek et al. [79] | BAKER-ROSETTASERVER and BAKER | Prediction of protein structures and interactions using a three-track neural network. | Accuracy approaching that of AlphaFold2 |
| Asim et al. [80] | ADH-PPI | Deep hybrid model combining FastText embeddings with LSTM, CNN, and self-attention layers. | ACC = 0.9263, MCC = 0.9144, Precision = 0.9284 |
| Schulte-Sasse et al. [85] | EMOGI | Integration of multi-omics data with graph convolutional networks to identify new cancer genes and their associated molecular mechanisms. | N/A |

Transfer learning and pre-trained models

Transfer learning is a machine learning approach that improves model accuracy and generalizability by pre-training on a large source dataset and then adapting the resulting model to a smaller target dataset. Its widespread use in bioinformatics, for example in gene expression prediction and cancer diagnosis, underscores its value when data are limited. For instance, Qiao et al. proposed ProNEP, a deep learning algorithm that combines transfer learning with a bilinear attention network for high-throughput identification of interactions between NLR receptors and pathogen effectors [86]. Yang et al. combined evolutionary sequence features, Siamese convolutional neural networks, and multilayer perceptrons, and introduced two transfer learning strategies ('freeze' and 'fine-tune') that substantially improved human-virus PPI prediction [87].
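The 'freeze' and 'fine-tune' strategies differ only in which parameters are updated on the target data. The NumPy sketch below illustrates that difference under simple assumptions (a one-layer tanh encoder standing in for a pre-trained model, a new logistic-regression head, full-batch gradient descent on binary cross-entropy); the weights and data are synthetic, and this is not the implementation used by Yang et al.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def adapt(X, y, W_pre, strategy="freeze", lr=0.1, epochs=200, seed=0):
    """Adapt a pre-trained one-layer encoder W_pre to a small binary target task.

    strategy="freeze":    keep the encoder fixed and train only a new logistic head.
    strategy="fine-tune": update the encoder and the new head jointly.
    """
    if strategy not in ("freeze", "fine-tune"):
        raise ValueError(f"unknown strategy: {strategy}")
    rng = np.random.default_rng(seed)
    W = W_pre.copy()                       # leave the source model untouched
    v = rng.normal(0.0, 0.01, W.shape[1])  # task-specific head, trained from scratch
    n = len(y)
    for _ in range(epochs):
        H = np.tanh(X @ W)                 # encoder features
        g = (sigmoid(H @ v) - y) / n       # d(mean BCE) / d(logits)
        grad_v = H.T @ g
        if strategy == "fine-tune":
            # chain rule through tanh: d tanh(a)/da = 1 - tanh(a)^2
            grad_W = X.T @ (np.outer(g, v) * (1.0 - H ** 2))
            W -= lr * grad_W
        v -= lr * grad_v
    return W, v


def accuracy(X, y, W, v):
    return float(np.mean((sigmoid(np.tanh(X @ W) @ v) > 0.5) == y))


# Hypothetical "pre-trained" weights and a small labelled target set
rng = np.random.default_rng(0)
W_pre = rng.normal(0.0, 0.5, (10, 6))
X_small = rng.normal(size=(60, 10))
y_small = (X_small[:, 0] > 0).astype(float)
W_frozen, v_frozen = adapt(X_small, y_small, W_pre, strategy="freeze")
W_tuned, v_tuned = adapt(X_small, y_small, W_pre, strategy="fine-tune")
```

Freezing is usually preferred when the target set is very small, because fewer parameters can overfit; fine-tuning can adapt better when the target task differs more from pre-training, at the cost of needing more labeled data.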

Transformer-based pretrained models such as BERT and ESM have become mainstream tools for transfer learning-based PPI prediction [88, 89]. BERT's effectiveness stems from its bidirectional encoding and its pretrain-then-fine-tune paradigm, which let it learn general language representations from large amounts of unlabeled data. Building on this, Liu et al. proposed MindSpore ProteinBERT (MP-BERT), a transformer-based bidirectional encoder that takes protein pairs as input and supports both PPI identification and interaction-site localization [90]. Warikoo et al. developed LBERT, a transformer model combining local and global context that significantly improved classification accuracy on PPI, DDI, and PER extraction tasks [91]. Like BERT, ESM learns universal protein representations through large-scale pretraining; it relies on the evolutionary information contained in large collections of diverse protein sequences to learn deep sequence representations that capture features related to biological function. Li et al. developed a hybrid model in which ESM-2 encodes protein sequences into high-dimensional embedding representations [92]. In a comparable study, Yang et al. developed TUnA, a hybrid model that combines ESM-2 with transformer encoders and uses a spectral-normalized neural Gaussian process (SNGP) for uncertainty estimation, yielding robust PPI predictions [93] (Table 6).
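A common way to turn per-residue protein language model output into input for a PPI classifier is to mean-pool the residue embeddings into one vector per protein and then combine the two vectors of a candidate pair. The sketch below assumes the ESM-2 token layout (a beginning-of-sequence token, then one position per residue, then an end-of-sequence token and any padding) and uses random arrays in place of real ESM-2 output; the pairing scheme in `pair_vector` is one illustrative choice, not the method of the models cited above.

```python
import numpy as np


def mean_pool(token_reprs, seq_len):
    """Average per-residue embeddings into one per-protein vector.

    Assumes the ESM-2 token layout: position 0 is the BOS/CLS token, positions
    1..seq_len are residues, and everything after is EOS or padding.
    """
    return token_reprs[1 : seq_len + 1].mean(axis=0)


def pair_vector(emb_a, emb_b):
    """Order-invariant input for a downstream PPI classifier."""
    return np.concatenate([emb_a + emb_b, emb_a * emb_b, np.abs(emb_a - emb_b)])


# Stand-in for rows of a (batch, tokens, dim) ESM-2 output. With the fair-esm
# package, such a tensor would come from something like
#   model(tokens, repr_layers=[33])["representations"][33]
# (see the fair-esm README; not executed here).
dim = 1280  # embedding size of the 650M-parameter ESM-2 model
rng = np.random.default_rng(0)
reprs_a = rng.normal(size=(1 + 120 + 1 + 8, dim))  # BOS + 120 residues + EOS + padding
reprs_b = rng.normal(size=(1 + 95 + 1 + 33, dim))  # BOS + 95 residues + EOS + padding
emb_a, emb_b = mean_pool(reprs_a, 120), mean_pool(reprs_b, 95)
features = pair_vector(emb_a, emb_b)
```

Excluding the special tokens and padding matters in batched inference, where shorter sequences are padded to the length of the longest one.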

Table 6.

Summary of transfer learning and pre-trained models used in PPI prediction

| Author | Model Name | Research method | Evaluation parameter |
|---|---|---|---|
| Qiao et al. [86] | ProNEP | Deep learning algorithm combining transfer learning and bilinear attention networks. | AUROC = 0.9292; AUPRC = 0.7134 |
| Yang et al. [87] | Siamese CNN architecture and MLP | Evolutionary sequence graph features and a Siamese convolutional neural network (CNN) architecture combined with a multilayer perceptron. | HIV: ACC = 98.65%, Precision = 95.16%, F1-score = 92.36%, AUPRC = 0.974 |
| Liu et al. [90] | MindSpore ProteinBERT (MP-BERT) | Transformer-based bidirectional encoder representation model that takes protein pairs as input. | H. sapiens: ACC = 0.9818, Precision = 0.9732, F1 = 0.9820, MCC = 0.9639 |
| Warikoo et al. [91] | LBERT | Lexically aware transformer model combining local and global context. | Precision = 0.858, F1-score = 0.855 |
| Li et al. [92] | ESMDNN-PPI | Hybrid model that uses ESM-2 to encode protein sequences as embedding representations. | AUPR = 0.9306; AUROC = 0.9869 |
| Parkinson et al. [93] | TUnA | Combines ESM-2 embeddings with a transformer encoder and a spectral-normalized neural Gaussian process. | AUROC = 0.7, AUPR = 0.69, F1 = 0.65, MCC = 0.3, Balanced accuracy = 0.65 |

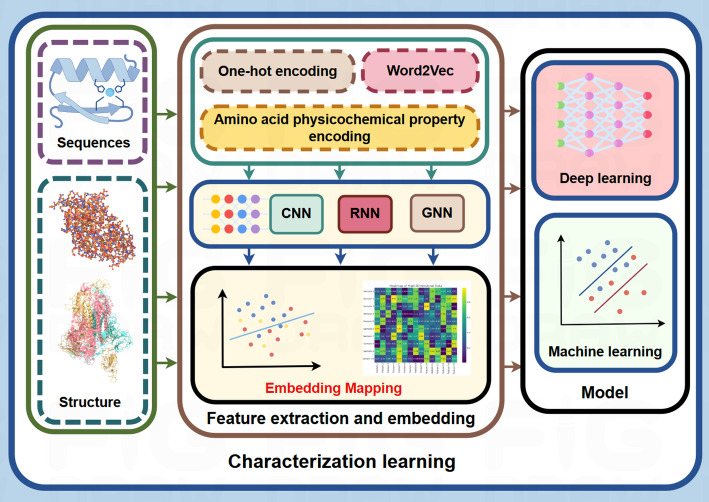

Protein-protein interaction representation learning and autoencoders

Feature extraction is a critical foundational step in PPI prediction. As illustrated in Fig. 4, protein sequences or structures are commonly converted into representative feature vectors. Widely adopted sequence encodings include one-hot encoding, which represents each amino acid as an independent binary vector; physicochemical property encodings such as those collected in AAindex, which quantify properties like polarity, charge, and hydrophobicity; and embedding techniques such as Word2Vec, which map protein sequences into a low-dimensional space by capturing contextual relationships among amino acids. Embedding vectors in particular carry rich semantic information and have strong expressive power. Deep learning models then perform higher-level feature extraction and pattern recognition on these representations, followed by further modeling with machine learning approaches.
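The first two encodings can be sketched in a few lines. The example below builds a one-hot matrix over the 20 standard amino acids and appends one physicochemical property per residue, using the Kyte-Doolittle hydropathy scale as a representative of the scales collected in AAindex. Treating non-standard residues as zero rows is an assumption; real pipelines often add more property scales or a learned (e.g., Word2Vec-style) embedding instead.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}

# Kyte-Doolittle hydropathy scale (one of the property scales collected in AAindex)
KD_HYDROPATHY = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5, "Q": -3.5, "E": -3.5,
    "G": -0.4, "H": -3.2, "I": 4.5, "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8,
    "P": -1.6, "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}


def one_hot(seq):
    """L x 20 binary matrix; non-standard residues (e.g. X) become all-zero rows."""
    mat = np.zeros((len(seq), len(AMINO_ACIDS)), dtype=np.float32)
    for pos, aa in enumerate(seq.upper()):
        idx = AA_INDEX.get(aa)
        if idx is not None:
            mat[pos, idx] = 1.0
    return mat


def hydropathy(seq):
    """Length-L vector of Kyte-Doolittle values (0.0 for unknown residues)."""
    return np.array([KD_HYDROPATHY.get(aa, 0.0) for aa in seq.upper()], dtype=np.float32)


def encode(seq):
    """Concatenate one-hot and hydropathy into an L x 21 per-residue feature matrix."""
    return np.hstack([one_hot(seq), hydropathy(seq)[:, None]])


features = encode("MKTAYIAKQR")  # hypothetical 10-residue fragment
```

Each row of `features` describes one residue, so a sequence model (CNN, RNN, or transformer) can consume the matrix directly.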

Fig. 4.

Protein-protein interaction representation learning

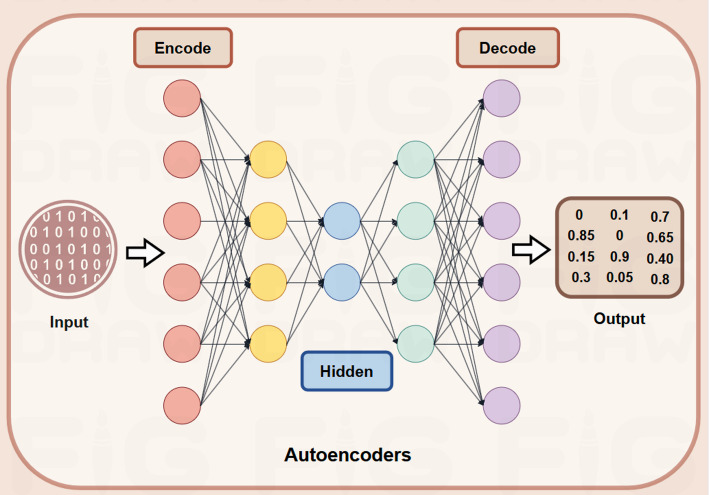

Autoencoders (AEs) are an important tool for representation learning of PPIs and can improve the quality of sequence and structure encodings. An AE is an unsupervised model that learns low-dimensional representations of data, typically for tasks such as data compression and reconstruction [94]. As illustrated in Fig. 5, it maps protein data into a low-dimensional latent space and reconstructs the input from it, which helps capture intrinsic relationships. A graph autoencoder (GAE) extends this concept to graph data: its encoder uses GNNs to compute node embeddings from the graph structure, and its decoder reconstructs the graph (e.g., by predicting which nodes are connected). In essence, a GAE is a graph-specific AE designed to handle graph-structured data [30].
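The GAE idea can be illustrated with a forward pass in the style of Kipf and Welling's graph autoencoder: a two-layer graph convolutional encoder produces node embeddings Z, and an inner-product decoder reconstructs edge probabilities as sigmoid(Z Zᵀ). The sketch below uses random, untrained weights on a hypothetical five-protein chain network and only computes the reconstruction loss; training would minimize this loss with respect to `W1` and `W2`, usually with re-weighting of the rare positive edges.

```python
import numpy as np


def normalize_adjacency(A):
    """Symmetric GCN normalisation: D^-1/2 (A + I) D^-1/2."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gae_forward(A, X, W1, W2):
    """Two-layer GCN encoder and inner-product decoder."""
    A_norm = normalize_adjacency(A)
    H = np.maximum(A_norm @ X @ W1, 0.0)        # GCN layer 1 + ReLU
    Z = A_norm @ H @ W2                         # GCN layer 2 -> node embeddings
    A_rec = 1.0 / (1.0 + np.exp(-(Z @ Z.T)))    # sigmoid(Z Z^T): edge probabilities
    return Z, A_rec


def reconstruction_loss(A, A_rec, eps=1e-9):
    """Binary cross-entropy between the observed and reconstructed adjacency."""
    return float(-np.mean(A * np.log(A_rec + eps) + (1 - A) * np.log(1 - A_rec + eps)))


# Hypothetical five-protein chain network with random node features
rng = np.random.default_rng(0)
A = np.zeros((5, 5))
for u, v in [(0, 1), (1, 2), (2, 3), (3, 4)]:
    A[u, v] = A[v, u] = 1.0
X = rng.normal(size=(5, 4))        # node features (e.g. sequence-derived)
W1 = rng.normal(0.0, 0.5, (4, 8))
W2 = rng.normal(0.0, 0.5, (8, 2))
Z, A_rec = gae_forward(A, X, W1, W2)
loss = reconstruction_loss(A, A_rec)
```

After training, high entries of `A_rec` for protein pairs that are absent from `A` can be read as candidate interactions.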

Fig. 5.

The mechanism of autoencoders

Cui et al. introduced SMG (self-supervised masked graph learning), a method that identifies cancer genes from PPI networks enriched with multi-omics data by applying GNNs within a self-supervised learning paradigm [95]. Cao et al. proposed FFANE, a node representation technique that integrates PPI networks with protein sequence data to improve PPI prediction accuracy [96]. Zhang et al. developed PPII-AEAT, a method for predicting PPI inhibitors based on autoencoders with adversarial training: it extracts key features of small-molecule compounds using extended-connectivity fingerprints and Mordred descriptors, then trains an autoencoder in three stages to learn high-level representations and predict inhibitory activity [97] (Tables 7 and 8).

Table 7.

Summary of representation learning and autoencoders in PPI prediction

| Author | Model Name | Research method | Evaluation parameter |
|---|---|---|---|
| Cui et al. [95] | SMG | Self-supervised masked graph learning for cancer gene identification. | Disease subnetwork identification task: AUPRC = 0.87 |
| Cao et al. [96] | FFANE | Protein feature fusion using attributed network embedding for predicting protein-protein interactions. | Average accuracy: S. cerevisiae = 94.28%, H. sapiens = 97.69%, H. pylori = 84.05% |
| Zhang et al. [97] | PPII-AEAT | Prediction of protein-protein interaction inhibitors based on autoencoders with adversarial training. | Bcl2-Like/Bak-Bax: MCC = 0.84 ± 0.027, F1 = 0.92 ± 0.012, AUROC = 0.93 ± 0.006 |

Table 8.

Key technologies for PPI prediction

| Technology | Advantages | Disadvantages | Applicability |
|---|---|---|---|
| GNN | Effectively captures local and global dependencies in protein networks; handles non-Euclidean data. | High computational complexity for large-scale graphs; prone to overfitting with sparse data. | Best for large-scale, complex network-based protein interaction analysis. |
| CNN | Excellent at extracting local features; handles 3D protein structures well. | Struggles with long-range dependencies in sequences; relies on local features. | Ideal for 3D protein structure analysis, drug design, and protein docking tasks. |
| RNN | Captures long-distance dependencies in protein sequences using LSTM; suitable for sequence-based tasks. | Susceptible to vanishing gradients; training on long sequences can be challenging. | Best for sequence-based tasks, especially protein sequence analysis and prediction. |
| Transformer | Powerful for capturing long-range dependencies in complex interactions; excels in multimodal data fusion. | Requires significant computational resources and large datasets for effective training. | Most effective in tasks involving long-range dependencies and multimodal data integration. |
| Multi-task Learning | Improves model generalization by leveraging shared layers; enhances performance across related tasks. | Requires careful task-specific layer design to avoid interference between tasks. | Suitable when multiple related objectives must be predicted simultaneously. |
| Multimodal Learning | Integrates multiple data types to enhance prediction accuracy; provides a comprehensive understanding of PPIs. | Increases computational complexity; merging heterogeneous data types is challenging. | Effective when various data types (e.g., sequence, structure, and function) must be integrated. |
| Transfer Learning | Improves performance on small datasets by leveraging knowledge from large datasets; enhances generalization. | Performance depends on the similarity between source and target tasks and may be limited when they differ significantly. | Best for tasks with limited data, such as cross-species protein interaction prediction. |

A technical analysis and comparison of PPI

Innovative strategies and challenges for PPI

Data quality and generalization ability

In protein-protein interaction (PPI) prediction, data quality is a pervasive challenge, manifested chiefly as an imbalanced ratio of positive to negative samples, heterogeneous data sources, and sparse high-dimensional features. PPI prediction datasets typically contain far more negative samples (protein pairs without interactions) than positive samples (interacting pairs), so models become biased toward the negative class during training, which impairs predictive performance on the interacting class [98, 99]. Moreover, PPI data are acquired through a variety of experimental methods (e.g., yeast two-hybrid, co-immunoprecipitation, and mass spectrometry) that differ in accuracy and coverage, which increases data heterogeneity. In addition, high-dimensional features derived from protein sequences and structures are often sparse, which further complicates training, particularly in imbalanced settings.
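The effect of imbalance on evaluation can be checked with a small worked example. Assuming a hypothetical test set with one interacting pair for every nine non-interacting pairs, a trivial model that always predicts "no interaction" reaches 90% accuracy while finding no interactions at all; recall, F1, and the Matthews correlation coefficient (MCC) expose this, which is one reason MCC, F1, and AUPRC are reported alongside accuracy in the tables of this review.

```python
import math


def confusion(y_true, y_pred):
    """Counts of true/false positives and negatives for binary labels (1 = interaction)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion(y_true, y_pred)
    acc = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0  # 0 by convention
    return {"accuracy": acc, "precision": precision, "recall": recall, "f1": f1, "mcc": mcc}


# 10 interacting and 90 non-interacting pairs
y_true = [1] * 10 + [0] * 90
all_negative = [0] * 100
print(metrics(y_true, all_negative))
# {'accuracy': 0.9, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0, 'mcc': 0.0}
```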

To address these challenges, researchers have proposed several strategies. Resampling techniques (e.g., SMOTE-based oversampling and undersampling) are widely used to adjust the ratio of positive to negative samples [100, 101]. Cost-sensitive learning methods assign different error penalties to positive and negative samples, increasing the model's sensitivity to the minority class and mitigating the effect of imbalance [102]. Bagging algorithms such as random forests train multiple independent base learners on different subsets of the data (random forests additionally sample random subsets of features), which improves the identification of minority-class samples, whereas boosting algorithms such as gradient-boosted trees train base learners sequentially, each focusing on correcting the errors of its predecessors [103–105]. More recently, generative adversarial networks (GANs) have been used to generate synthetic positive samples that mimic authentic data, increasing the number of positive samples and rebalancing the dataset. By capturing the underlying data distribution, GANs have been reported to outperform traditional oversampling and undersampling methods, improving both accuracy and generalization [103, 106, 107] (Table 9).
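Two of these strategies are simple enough to sketch directly. The NumPy code below shows (i) SMOTE-style oversampling, which creates synthetic minority samples by interpolating between a minority sample and one of its nearest minority neighbors, and (ii) cost-sensitive learning via inverse-frequency class weights plugged into a weighted binary cross-entropy. It is a minimal illustration on synthetic feature vectors; the function names are hypothetical, and production work would typically rely on a maintained implementation such as the imbalanced-learn library.

```python
import numpy as np


def smote_like(X_min, n_new, k=5, seed=0):
    """SMOTE-style oversampling: interpolate between a minority sample and one of
    its k nearest minority-class neighbours (Euclidean distance)."""
    X_min = np.asarray(X_min, dtype=float)
    if len(X_min) < 2:
        raise ValueError("need at least two minority samples")
    rng = np.random.default_rng(seed)
    k = min(k, len(X_min) - 1)
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)               # a point is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]
    synthetic = np.empty((n_new, X_min.shape[1]))
    for s in range(n_new):
        i = rng.integers(len(X_min))
        j = neighbours[i, rng.integers(k)]
        lam = rng.random()                       # position along the segment i -> j
        synthetic[s] = X_min[i] + lam * (X_min[j] - X_min[i])
    return synthetic


def class_weights(y):
    """Inverse-frequency ('balanced') weights: n_samples / (n_classes * count_c)."""
    y = np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    return {int(c): len(y) / (len(classes) * n) for c, n in zip(classes, counts)}


def weighted_bce(y, p, weights, eps=1e-9):
    """Cost-sensitive binary cross-entropy: errors on the minority class cost more."""
    y = np.asarray(y, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)
    w = np.where(y == 1, weights[1], weights[0])
    return float(np.mean(-w * (y * np.log(p) + (1 - y) * np.log(1 - p))))


# Synthetic features: 10 interacting pairs (minority) and 90 non-interacting pairs
rng = np.random.default_rng(42)
X_pos = rng.normal(loc=2.0, size=(10, 3))
y = np.array([1] * 10 + [0] * 90)
X_syn = smote_like(X_pos, n_new=80, k=3)  # would bring the positives up to 90
weights = class_weights(y)                # class 1 weight = 5.0, class 0 weight ~ 0.56
```

Because every synthetic point lies on a segment between two real minority samples, SMOTE-style oversampling cannot produce points outside the minority class's observed range; GAN-based augmentation, by contrast, tries to learn the distribution itself.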

Table 9.

Common PPI benchmark datasets

| Dataset Name | Scale | Data Source | Weight Definition | Applicable Species |
|---|---|---|---|---|
| STRING | > 20 million proteins, 100 million interactions | Experimental data & prediction | Confidence score (0–1) | Multiple species (including human) |
| BioGRID | > 1 million interactions | Experimental validation | Literature support | Multiple species (human, fly, etc.) |
| HINT | > 70,000 human interactions | Experimental data & prediction | Confidence score | Human |
| DIP | > 100,000 interactions | Experimental validation | Literature support | Multiple species |
| MIPS | Multiple yeast interactions | Experimental validation | Experimental support | Yeast |
| IntAct | Hundreds of thousands of interactions | Experimental validation | Experimental methods & literature support | Multiple species (including human) |
| Reactome | Thousands of reactions & interactions | Experimental validation & reaction network | Reaction type annotation | Primarily human |
| PDB | > 170,000 protein structures & interactions | Experimental validation | High-confidence experimental validation | Multiple species (including human) |
| PPI-Disease | Disease-related interactions | Experimental validation & disease annotations | Disease-related annotations | Primarily human |
| CORUM | > 20,000 protein complex interactions | Experimental validation | Reliability annotation | Mammals (primarily human) |
| MINT | Hundreds of thousands of interactions | Literature & experimental validation | Literature support | Multiple species |
| KEGG | Thousands of biological pathways & interactions | Experimental data & prediction models | Confidence score | Multiple species |

Interpretability and multidimensional interaction strategies

In PPI prediction, balancing model complexity with interpretability remains a significant challenge. As data complexity and biological requirements grow, researchers adopt model fusion, hybrid strategies, and innovative architectures to optimize performance, while downstream analyses such as visualization and functional module detection enhance interpretability. For instance, t-SNE dimensionality reduction and clustering visualization can be used to assess how well a model handles heterogeneous and sparse data sources [108]. The open-source tool Cytoscape provides an interactive interface for importing, navigating, filtering, clustering, searching, and exporting networks, which supports the examination of multidimensional protein interaction networks [109, 110].

PPIs are dynamic relationships regulated by multiple factors, including the cellular environment, the cell cycle, and phosphorylation [111, 112]. These factors cause PPI networks to adapt continuously to changes in the intracellular and extracellular environment. Multidimensional interaction strategies in PPI prediction therefore aim to integrate sequence, structure, network, microenvironment, and dynamic information, and multimodal techniques have partially overcome the limitations of single-factor approaches. For instance, Islam et al. reported 100% accuracy in protein category prediction, supporting the value of multimodal data, together with Gene Ontology (GO) term prediction accuracies of 96% for biological process (BP), 97% for cellular component (CC), and 98% for molecular function (MF) [113]. These results indicate that multidimensional interaction strategies offer clear advantages in capturing complex relationships among protein functions and in achieving more accurate functional annotation.

Challenges and opportunities encountered in PPI

Conventional models treat protein interactions as static, yet these interactions fluctuate with cell state, environment, and time [114, 115]. Ignoring this dynamic behavior frequently lowers prediction accuracy across different physiological conditions. Future research should therefore prioritize models that capture these dynamic changes and incorporate uncertainty modeling to integrate temporal and spatial information [116].

Most existing PPI prediction methods and their datasets are based on model organisms (e.g., human, mouse, and baker's yeast), and both the models and the data are closely adapted to these species [117, 118]. Their applicability to non-model organisms is therefore severely limited, mainly because protein function, structure, and interaction networks differ between species. Effectively transferring these model-organism-based methods to other species has consequently become a major challenge in current research.

Predicting interactions involving rare or unannotated proteins is another major challenge. Many unannotated proteins may exhibit distinctive interaction patterns, and prevailing models often perform poorly when data are scarce. Researchers are exploring generative adversarial networks (GANs) to generate synthetic data [119], as well as self-supervised learning and transfer learning, to improve the ability of models to predict infrequent interactions [120, 121]. Nevertheless, prediction bias caused by data scarcity remains a significant challenge.

Discussion

The rapid development of artificial intelligence has driven significant advances in protein-protein interaction (PPI) prediction through deep learning methods, and deep learning is expected to continue transforming protein prediction in the foreseeable future. By reviewing research from the past five years, this article provides a reference point for this rapidly evolving field.

This article reviews the application and progress of deep learning in PPI prediction from 2021 to 2025, summarizing recent cutting-edge technologies and examining these evolving methods from a new perspective. It analyzes how graph neural networks (GNNs), convolutional neural networks (CNNs), recurrent neural networks (RNNs), attention mechanisms, transformer architectures, multi-task and multimodal learning, and transfer learning have been applied to improve prediction accuracy. These methods complement one another, yet each has distinct performance characteristics and advantages. Applied to complex datasets, they can reveal how proteins operate within structural and network contexts in organisms, reshaping our understanding of PPIs and of biological systems more broadly. Deep learning's progress in PPI prediction marks a major step in the application of AI to molecular biology, particularly to the study of protein interactions. Improved algorithms, greater computing power, and more data continue to extend the influence of deep learning in PPI prediction and across computational biology. This evolution is transforming our understanding of cellular processes, protein functions, and regulatory networks, paving the way for more accurate predictive models and further advances in this critical domain.

Acknowledgements

This research was funded by the National Natural Science Foundation of China (62171241, 62403390), the Group Project of Developing Inner Mongolia through Talents (2025TEL25), and the Central Guidance Fund for Local Science and Technology Development (2024ZY0168). The figures were drawn using Figdraw.

Author contributions

Y.Z. and D.Y. Conceptualization. J.C. and S.Y. Writing - Original Draft, Visualization, Formal analysis. L.Y. and Q.X. Writing - Review & Editing.

Data availability

No datasets were generated or analysed during the current study.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Jiafu Cui and Siqi Yang contributed equally to this work.

Contributor Information

Dezhi Yang, Email: imudezhi@hotmail.com.

Yongchun Zuo, Email: yczuo@imu.edu.cn.

References

- 1.Lee M. Recent advances in deep learning for Protein-Protein interaction analysis: A comprehensive review. Molecules. 2023;28(13):35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Wetie AGN, Sokolowska I, Woods AG, Roy U, Loo JA, Darie CC. Investigation of stable and transient protein-protein interactions: past, present, and future. Proteomics. 2013;13(3–4):538–57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Ren HM, Ou QS, Pu Q, Lou YQ, Yang XL, Han YJ, et al. Comprehensive review on bimolecular fluorescence complementation and its application in deciphering protein-protein interactions in cell signaling pathways. Biomolecules. 2024;14(7):859. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Zhang Y, Yang Y, Ren L, Zhan M, Sun T, Zou Q, et al. Predicting intercellular communication based on metabolite-related ligand-receptor interactions with MRCLinkdb. BMC Biol. 2024;22(1):152. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Network YI. High-Quality binary protein interaction map of the. Science. 2008;1158684(104):322. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Koegl M, Uetz P. Improving yeast two-hybrid screening systems. Briefings Funct Genomics Proteom. 2007;6(4):302–12. [DOI] [PubMed] [Google Scholar]

- 7.Borch J, Jørgensen TJ, Roepstorff P. Mass spectrometric analysis of protein interactions. Curr Opin Chem Biol. 2005;9(5):509–16. [DOI] [PubMed] [Google Scholar]

- 8.Fradera X, Knegtel RM, Mestres J. Similarity-driven flexible ligand docking. Proteins Struct Funct Bioinform. 2000;40(4):623–36. [DOI] [PubMed] [Google Scholar]

- 9.Sinha R, Kundrotas PJ, Vakser IA. Docking by structural similarity at protein-protein interfaces. Proteins Struct Funct Bioinform. 2010;78(15):3235–41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Zhang H-Q, Arif M, Thafar MA, Albaradei S, Cai P, Zhang Y, et al. PMPred-AE: a computational model for the detection and interpretation of pathological myopia based on artificial intelligence. Front Med. 2025;12:1529335. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Joshi M, Singh BK. Deep learning techniques for brain lesion classification using various MRI (from 2010 to 2022): review and challenges. Medinformatics. 2024:1529335.

- 12.Abdelkader GA, Kim JD. Advances in Protein-Ligand binding affinity prediction via deep learning: A comprehensive study of datasets, data preprocessing techniques, and model architectures. Curr Drug Targets. 2024;25(15):1041–65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Zulfiqar H, Guo Z, Ahmad RM, Ahmed Z, Cai P, Chen X, et al. Deep-STP: a deep learning-based approach to predict snake toxin proteins by using word embeddings. Front Med. 2024;10:1291352. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.You ZH, Li L, Ji Z, Li M, Guo S. Prediction of protein-protein interactions from amino acid sequences using extreme learning machine combined with auto covariance descriptor. IEEE. 2013;12(1):1–7. [Google Scholar]

- 15.Zubek J, Tatjewski M, Boniecki A, Mnich M, Plewczynski D. Multi-level machine learning prediction of protein–protein interactions in Saccharomyces cerevisiae. PeerJ. 2015;3(1):e1041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zhang B, Hou Z, Yang Y, Wong K-c, Zhu H, Li X. SOFB is a comprehensive ensemble deep learning approach for elucidating and characterizing protein-nucleic-acid-binding residues. Commun Biology. 2024;7(1):679. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Bryant P, Pozzati G, Elofsson A. Improved prediction of protein-protein interactions using AlphaFold2. Nat Commun. 2022;13(1):1265. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wang C, Cai S, Liu Z, Chen Y. Yeast protein-protein interaction network model based on biological experimental data. Appl Math Mechanics-English Ed. 2015;36(6):827–34. [Google Scholar]

- 19.Zhang Y, Lin H, Yang Z, Wang J, Liu Y, Sang S. A method for predicting protein complex in dynamic PPI networks. BMC Bioinformatics. 2016;17(Suppl 7):229. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Bhowmick SS, Seah BS. Clustering and summarizing Protein-Protein interaction networks: A survey. IEEE Trans Knowl Data Eng. 2016;28(3):638–58. [Google Scholar]

- 21.Mao X, Cai T, Olyarchuk JG, Wei L. Automated genome annotation and pathway identification using the KEGG orthology (KO) as a controlled vocabulary. Bioinformatics. 2005;21(19):3787–93. [DOI] [PubMed] [Google Scholar]

- 22.Kanehisa M, Araki M, Goto S, Hattori M, Hirakawa M, Itoh M, et al. KEGG for linking genomes to life and the environment. Nucleic Acids Res. 2007;36(suppl1):D480–4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Szymborski J, Emad A. INTREPPPID—an orthologue-informed quintuplet network for cross-species prediction of protein–protein interaction. Brief Bioinform. 2024;25(5):bbae405.

- 24.Meng L, Wei L, Wu R. MVGNN-PPIS: a novel multi-view graph neural network for protein-protein interaction sites prediction based on AlphaFold3-predicted structures and transfer learning. Int J Biol Macromol. 2025;300:140096.

- 25.Zou HT, Ji BY, Xie XL. A multi-source molecular network representation model for protein–protein interactions prediction. Sci Rep. 2024;14(1):6184.

- 26.Khemani B, Patil S, Kotecha K, Tanwar S. A review of graph neural networks: concepts, architectures, techniques, challenges, datasets, applications, and future directions. J Big Data. 2024;11(1):18.

- 27.Ali F, Khalid M, Almuhaimeed A, Masmoudi A, Alghamdi W, Yafoz A. IP-GCN: A deep learning model for prediction of insulin using graph convolutional network for diabetes drug design. J Comput Sci. 2024;81:102388.

- 28.Kipf TN, Welling M. Semi-supervised classification with graph convolutional networks. Proceedings of the 5th International Conference on Learning Representations (ICLR); 2017. pp. 1–14.

- 29.Veličković P, Cucurull G, Casanova A, Romero A, Liò P, Bengio Y. Graph attention networks. Proceedings of the 6th International Conference on Learning Representations (ICLR); 2018. pp. 1–12.

- 30.Kipf TN, Welling M. Variational graph auto-encoders. Proceedings of the NIPS Workshop on Bayesian Deep Learning; 2016. pp. 1–10.

- 31.Hamilton WL, Ying R, Leskovec J. Inductive representation learning on large graphs. Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS); 2017. pp. 1024–1034.

- 32.Yang P, Lu P, Zhang T. AG-GATCN: A novel method for predicting essential proteins. Chin Phys B. 2023;32(5):058902.

- 33.Zhong J, Zhao H, Zhao Q, Zhou R, Zhang L, Guo F, et al. RGCNPPIS: A residual graph convolutional network for protein-protein interaction site prediction. IEEE/ACM Trans Comput Biol Bioinf. 2024;21(6):1676–84.

- 34.Wu X, Cheng Q. Stabilizing and enhancing link prediction through deepened graph auto-encoders. Proceedings of the 31st International Joint Conference on Artificial Intelligence (IJCAI-22); 2022. pp. 3587–93.

- 35.Zheng Y, Li Q, Freiberger MI, Song H, Hu G, Zhang M, et al. Predicting the dynamic interaction of intrinsically disordered proteins. J Chem Inf Model. 2024;64(17):6768–77.

- 36.Wang S, Chen W, Han P, Li X, Song T. RGN: residue-based graph attention and convolutional network for protein–protein interaction site prediction. J Chem Inf Model. 2022;62(23):5961–74.

- 37.Li G, Mueller M, Qian G, Delgadillo IC, Abualshour A, Thabet A, et al. DeepGCNs: making GCNs go as deep as CNNs. IEEE Trans Pattern Anal Mach Intell. 2023;45(6):6923–39.

- 38.Aykent S, Xia T. GBPNet: universal geometric representation learning on protein structures. Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining; 2022. pp. 4–14.

- 39.Zouari S, Ali F, Masmoudi A, Ghazalah SA, Alghamdi W, Kateb FA, et al. Deep-GB: A novel deep learning model for globular protein prediction using CNN‐BiLSTM architecture and enhanced PSSM with trisection strategy. IET Syst Biol. 2024;18(6):208–17.

- 40.Almusallam N, Ali F, Kumar H, Alkhalifah T, Alturise F, Almuhaimeed A. Multi-headed ensemble residual CNN: a powerful tool for fibroblast growth factor prediction. Results Eng. 2024;24:103348.

- 41.Guo L, He J, Lin P, Huang SY, Wang J. TRScore: a 3D RepVGG-based scoring method for ranking protein docking models. Bioinformatics. 2022;38(9):2444–51.

- 42.Chen H, Cheng Y, Dong J, Mao J, Wang X, Gao Y, et al. Protein property prediction based on local environment by 3D equivariant convolutional neural networks. bioRxiv. 2024:2024.02.07.579261.

- 43.Renaud N, Geng C, Georgievska S, Ambrosetti F, Ridder L, Marzella DF, et al. DeepRank: a deep learning framework for data mining 3D protein-protein interfaces. Nat Commun. 2021;12(1):7068.

- 44.Sree PK. 3D convolutional neural networks for predicting protein structure for improved drug recommendation. EAI Endorsed Trans Pervasive Health Technol. 2024;10:e105685.

- 45.Li X, Han P, Chen W, Gao C, Wang S, Song T, et al. MARPPI: boosting prediction of protein-protein interactions with multi-scale architecture residual network. Brief Bioinform. 2023;24(1):bbac524.

- 46.Zhang F, Song H, Zeng M, Wu F-X, Li Y, Pan Y, et al. A deep learning framework for gene ontology annotations with sequence- and network-based information. IEEE/ACM Trans Comput Biol Bioinf. 2021;18(6):2208–17.

- 47.Park S, Seok C. GalaxyWater-CNN: prediction of water positions on the protein structure by a 3D-convolutional neural network. J Chem Inf Model. 2022;62(13):3157–68.

- 48.Sherstinsky A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D. 2020;404:132306.

- 49.Alakus TB, Turkoglu I. A novel protein mapping method for predicting the protein interactions in COVID-19 disease by deep learning. Interdiscip Sci. 2021;13(1):44–60.

- 50.Deng L, Nie W, Zhao J, Zhang J. A hybrid deep learning framework for predicting the protein-protein interaction between virus and host. 2021. Preprint (Version 1) at 10.21203/rs.3.rs-506156/v1.

- 51.Zhou X, Song H, Li J. Residue-frustration-based prediction of protein-protein interactions using machine learning. J Phys Chem B. 2022;126(8):1719–27.

- 52.Tsukiyama S, Hasan MM, Fujii S, Kurata H. LSTM-PHV: prediction of human-virus protein–protein interactions by LSTM with word2vec. Brief Bioinform. 2021;22(6):bbab228.

- 53.Szymborski J, Emad A. RAPPPID: towards generalizable protein interaction prediction with AWD-LSTM twin networks. Bioinformatics. 2022;38(16):3958–67.

- 54.Hu J, Chen K-X, Rao B, Ni J-Y, Thafar MA, Albaradei S, et al. Protein-peptide binding residue prediction based on protein language models and cross-attention mechanism. Anal Biochem. 2024;694:115637.

- 55.Hu J, Li Z, Rao B, Thafar MA, Arif M. Improving protein-protein interaction prediction using protein language model and protein network features. Anal Biochem. 2024;693:115550.

- 56.Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention is all you need. Adv Neural Inf Process Syst. 2017;30:6000–10.

- 57.Li X, Han P, Wang G, Chen W, Wang S, Song T. SDNN-PPI: self-attention with deep neural network effect on protein-protein interaction prediction. BMC Genomics. 2022;23(1):474.

- 58.Zhai Z, Xu S, Ma W, Niu N, Qu C, Zong C. LGS-PPIS: A local-global structural information aggregation framework for predicting protein-protein interaction sites. Proteins-Structure Function Bioinf. 2025;93(3):716–27.

- 59.Wu C, Lin B, Zhang J, Gao R, Song R, Liu Z-P. AttentionEP: predicting essential proteins via fusion of multiscale features by attention mechanisms. Comput Struct Biotechnol J. 2024;23:4315–23.

- 60.Song T, Markham KK, Li Z, Muller KE, Greenham K, Kuang R. Detecting spatially co-expressed gene clusters with functional coherence by graph-regularized convolutional neural network. Bioinformatics. 2022;38(5):1344–52.

- 61.Tang M, Wu L, Yu X, Chu Z, Jin S, Liu J. Prediction of protein-protein interaction sites based on stratified attentional mechanisms. Front Genet. 2021;12:784863.

- 62.Wang M, Lai J, Jia J, Xu F, Zhou H, Yu B. ECA-PHV: predicting human-virus protein-protein interactions through an interpretable model of effective channel attention mechanism. Chemometr Intell Lab Syst. 2024;247:105103.

- 63.Li P, Liu Z-P. MuToN quantifies binding affinity changes upon protein mutations by geometric deep learning. Adv Sci. 2024;11(35):e2402918.

- 64.Ieremie I, Ewing RM, Niranjan M. TransformerGO: predicting protein-protein interactions by modelling the attention between sets of gene ontology terms. Bioinformatics. 2022;38(8):2269–77.

- 65.Vig J, Belinkov Y. Analyzing the structure of attention in a transformer language model. arXiv preprint arXiv:1906.04284. 2019. pp. 63–76.

- 66.Mou M, Pan Z, Zhou Z, Zheng L, Zhang H, Shi S, et al. A transformer-based ensemble framework for the prediction of protein-protein interaction sites. Research. 2023;6:0240.

- 67.Ghosh S, Mitra P. MaTPIP: A deep-learning architecture with eXplainable AI for sequence-driven, feature mixed protein-protein interaction prediction. Comput Methods Programs Biomed. 2024;244:107955.

- 68.Kang Y, Xu Y, Wang X, Pu B, Yang X, Rao Y, et al. HN-PPISP: a hybrid network based on MLP-Mixer for protein-protein interaction site prediction. Brief Bioinform. 2023;24(1):bbac480.

- 69.Wang Y, Wu S, Duan Y, Huang Y. A point cloud-based deep learning strategy for protein–ligand binding affinity prediction. Brief Bioinform. 2022;23(1):bbab474.

- 70.Chen Z, Liu N, Huang Y, Min X, Zeng X, Ge S, et al. PointDE: protein docking evaluation using 3D point cloud neural network. IEEE/ACM Trans Comput Biol Bioinf. 2023;20(5):3128–38.

- 71.Li J, Li Y-T. Extracting inter-protein interactions via multitasking graph structure learning. arXiv preprint arXiv:2501.17589. 2025.

- 72.Yue Y, Li S, Wang L, Liu H, Tong HHY, He S. MpbPPI: a multi-task pre-training-based equivariant approach for the prediction of the effect of amino acid mutations on protein-protein interactions. Brief Bioinform. 2023;24(5):bbac480.

- 73.Capel H, Feenstra KA, Abeln S. Multi-task learning to leverage partially annotated data for PPI interface prediction. Sci Rep. 2022;12(1):10487.

- 74.Liu T, Huang J, Luo D, Ren L, Ning L, Huang J, et al. Cm-siRPred: predicting chemically modified siRNA efficiency based on multi-view learning strategy. Int J Biol Macromol. 2024;264(Pt 2):130638.

- 75.Kang Y, Elofsson A, Jiang Y, Huang W, Yu M, Li Z. AFTGAN: prediction of multi-type PPI based on attention free transformer and graph attention network. Bioinformatics. 2023;39(2):btad052.

- 76.Chen K-H, Hu Y-J. Residue-residue interaction prediction via stacked meta-learning. Int J Mol Sci. 2021;22(12):6393.

- 77.Rafiei F, Zeraati H, Abbasi K, Ghasemi JB, Parsaeian M, Masoudi-Nejad A. DeepTraSynergy: drug combinations using multimodal deep learning with Transformers. Bioinformatics. 2023;39(8):btad438.

- 78.Zhang D, Kabuka M. Multimodal deep representation learning for protein interaction identification and protein family classification. BMC Bioinformatics. 2019;20:1–14.

- 79.Baek M, DiMaio F, Anishchenko I, Dauparas J, Ovchinnikov S, Lee GR, et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science. 2021;373(6557):871–6.

- 80.Asim MN, Ibrahim MA, Malik MI, Dengel A, Ahmed S. ADH-PPI: an attention-based deep hybrid model for protein-protein interaction prediction. iScience. 2022;25(10):105169.

- 81.Abramson J, Adler J, Dunger J, Evans R, Green T, Pritzel A, et al. Accurate structure prediction of biomolecular interactions with AlphaFold 3 (vol 630, pg 493, 2024). Nature. 2024;636(8042):E4.

- 82.Álvarez-Salmoral D, Borza R, Xie R, Joosten RP, Hekkelman ML, Perrakis A. AlphaBridge: tools for the analysis of predicted macromolecular complexes. bioRxiv. 2024:2024.10.23.619601.

- 83.Csikász-Nagy A, Fichó E, Noto S, Reguly I. Computational tools to predict context-specific protein complexes. Curr Opin Struct Biol. 2024;88:102883.

- 84.Wang X, Zhu H, Jiang Y, Li Y, Tang C, Chen X, et al. PRODeepSyn: predicting anticancer synergistic drug combinations by embedding cell lines with protein–protein interaction network. Brief Bioinform. 2022;23(2):bbab587.

- 85.Schulte-Sasse R, Budach S, Hnisz D, Marsico A. Integration of multiomics data with graph convolutional networks to identify new cancer genes and their associated molecular mechanisms. Nat Mach Intell. 2021;3(6):513–26.

- 86.Qiao B, Wang S, Hou M, Chen H, Zhou Z, Xie X, et al. Identifying nucleotide-binding leucine-rich repeat receptor and pathogen effector pairing using transfer-learning and bilinear attention network. Bioinformatics. 2024;40(10):btae581.