ABSTRACT

The objective of this article is to bring together the key current information on practical considerations when conducting statistical analyses adjusting long‐term outcomes for treatment switching, combining it with learnings from our own experience, thus providing a useful reference tool for analysts. When patients switch from their randomised treatment to another therapy that affects a subsequently observed outcome such as overall survival, there may be interest in estimating the treatment effect under a hypothetical scenario without the intercurrent event of switching. We describe the theory and provide guidance on how and when to conduct analyses using three commonly used complex approaches: rank preserving structural failure time models (RPSFTM), two‐stage estimation (TSE), and inverse probability of censoring weighting (IPCW). Extensions and alternatives to the standard approaches are summarised. Important and sometimes misunderstood concepts such as recensoring and sources of variability are explained. An overview of available software and programming guidance is provided, along with an R code repository for a worked example, reporting recommendations, and a review of the current acceptability of these methods to regulatory and health technology assessment agencies. Since the current guidance on this topic is scattered across multiple sources, it is difficult for an analyst to obtain a good overview of all options and potential pitfalls. This paper is intended to save statisticians time and effort by summarizing important information in a single source. By also including recommendations for best practice, it aims to improve the quality of the analyses and reporting when adjusting time‐to‐event outcomes for treatment switching.

Keywords: inverse probability of censoring weighting, rank preserving structural failure time, recensoring, survival analysis, treatment switching, two stage

1. Introduction

In this paper, we provide details of some of the practical considerations that will be useful for analysts conducting analyses adjusting long‐term outcomes for treatment switching. There are many publications now available on this topic, but often they have a theoretical focus or important points are scattered across multiple sources, so that it is difficult and time‐consuming to develop comprehensive awareness of best practice. The objective of this article is to gather the key information together in one place, combining it with learnings from our own experience in applying these methods, thus providing a useful reference tool for analysts.

The authors are members of the treatment switching subteam of the PSI Health Technology Assessment (HTA) Special Interest Group (SIG), a group of statisticians from across the pharmaceutical industry with a common interest in treatment switching methodology. In 2013, the subteam published an overview paper [1]. Since then, new methods such as the simplified two‐stage model [2] have been developed, and further research and publications have become available. Notably, the publication of the National Institute for Health and Care Excellence (NICE) Decision Support Unit Technical Support Document (DSU TSD) 16 [3] in 2014 has led to more widespread use of treatment switching methods, particularly in HTA. This has recently been updated in the supplementary NICE DSU TSD 24 [4]. Software packages and code have also been developed.

We outline in Sections 2 to 7 the theory behind the commonly used methods, and provide practical guidance on how to perform the analyses, including topics such as recensoring and sources of variability. An overview of available software and programming guidance is provided in Section 8. A supporting repository of R code for a worked example is available. We conclude with reporting recommendations and a review of the current acceptability of these methods to regulatory and HTA agencies (Sections 9 and 10). This is intended to provide an updated and more comprehensive treatise compared to the 2013 publication [1], but can also be read as a standalone publication.

2. General Considerations

Treatment switching occurs in various forms in randomised controlled trials. In the simplest case, patients randomised to the control group may switch to the active group of the trial at some point in time. This is sometimes referred to as treatment crossover (although many statisticians prefer to avoid this term since it can be confused with crossover trials where a patient receives a pre‐planned randomised sequence of treatments). Alternatively, patients might switch to another treatment or treatments not included in the original trial protocol, which may or may not belong to the same class of treatments as the active drug in the trial. Switching could be linked to a specific event, such as disease progression or positive results from an interim analysis. Discontinuation of trial drug could also be considered a treatment switch. Moreover, switching does not necessarily need to be restricted to one of the randomised groups in the trial but might affect all groups.

2.1. Treatment Switching and Estimands

If treatment switching could have affected a subsequently observed outcome of interest, for example overall survival, then there may be a desire to adjust the estimated treatment effect on that outcome to remove the impact of some or all types of switching. This will depend on whether the clinical question of interest, or estimand, addresses a hypothetical scenario in which the switching patterns do not reflect those that occurred in the trial.

It is crucial to clearly define in advance the clinical question of interest, which may be different for various audiences such as different HTA bodies or regulatory agencies. Usually, we wish to adjust to remove the effect of true “nuisance” treatments as subsequent therapies in the specific region; that is, those that are not approved or part of standard regional clinical practice, and that potentially affect the outcome of interest. However, sometimes there is an interest in isolating the effects of just the randomised treatments from any subsequent therapies. It is helpful to frame this within the estimand framework [5], viewing treatment switch as an intercurrent event, and there may be multiple estimands to address different clinical questions from different review bodies. Often, we are interested in modeling a hypothetical scenario with a differing switch pattern to that observed, which is the focus of the methods described in this paper. Others [6] have described methods for a principal stratification estimand, where the interest is to model multiple treatment effects in different strata of patients according to their switch patterns. Manitz et al. [5] and Bell Gorrod et al. [4] noted that the composite and while‐on‐treatment intercurrent event strategies are unlikely to align with clinically meaningful questions about long term outcomes in the presence of treatment switching.

2.2. When to Adjust for Treatment Switching

Adjusting for switching is only necessary when it is likely to influence the treatment effect estimate—for example, when switch treatment is likely to be beneficial, when a reasonable proportion of patients switch, and when there is sufficient follow‐up time post‐switch for the impact to be realised. A graphical illustration of how control‐arm switching to an effective treatment may reduce the observed benefit in overall survival is provided in Figure 1 of Latimer et al. [7].

The most prevalent occurrence of treatment switching and implementation of corresponding methodologies is in oncological studies where patients might switch treatment after disease progression, making the treatment switch an intercurrent event in the context of the overall survival endpoint. However, treatment switching also plays a significant role outside of oncology. Within immunology, the AIDS Clinical Trial Group 021 study [8] showed a significant delay in time to pneumocystis pneumonia, a potentially fatal pulmonary infection, in patients with AIDS randomised to bactrim versus aerosolised pentamidine as prophylaxis therapy. However, the conventional Intent‐To‐Treat (ITT) analysis did not indicate a significant impact on mortality rates. Robins [9] examined whether the lack of a confirmed survival benefit was attributable to the absence of a biological effect on survival or the dilution of a genuine underlying survival benefit due to factors such as loss to follow‐up, discontinuation of all prophylaxis therapy, or treatment switching. This was particularly relevant as patients who were randomised to a specific treatment were permitted to switch to the alternative treatment if they developed pneumocystis pneumonia.

Besides overall survival, any other long‐term outcomes measured post‐switch, including health‐related quality of life measures and adverse effects, are impacted in trials where a substantial number of patients switch treatment. This may be more prevalent in non‐oncology trials where the criteria for switch are not based on a disease progression outcome. For example, in the CENTAUR trial [10] of patients with the fatal neurodegenerative disease amyotrophic lateral sclerosis (ALS), placebo patients were offered switch to active treatment following measurement of the primary score‐based outcome at 6 months, but were then followed up for longer‐term time‐to‐event outcomes. Another example is the SANAD B study in epilepsy with a time‐to‐remission outcome, which had a pragmatic design where patients often experienced multiple treatment changes from randomised treatment over the follow‐up period [11]. Treatment changes included the switching to or addition of other treatments as well as changes in prescribed dosage. There was an inherent clinical interest in not only addressing the pragmatic question of treatment effectiveness under trial conditions (as targeted via intention‐to‐treat analysis), but also in estimating the efficacy (or causal effect) of the randomised treatments, factoring out changes in prescribed treatment from the one originally randomised. This aspect was particularly relevant due to the non‐inferiority design of the trial.

Analyses adjusting for treatment switching can only be applied robustly if the appropriate data have been collected. Data requirements vary dependent on the different methods used to address treatment switching. For instance, the inverse probability of censoring weighting (IPCW) method has the heaviest data collection burden, requiring the availability of all important baseline and time‐dependent information on prognostic factors for the long‐term endpoint of interest (e.g., mortality) as well as on any factors that affect the probability of switching, at all times up until the switch or the outcome occurs. Identifying relevant patient characteristics demands time and cross‐functional input at the trial design stage. Collecting all pertinent patient characteristics (over time) adds complexity and cost, but if it is not done, then the degree of missing data can easily become substantial and undermine the validity of some switch adjustment methods. The analyst should take time to understand the extent and potential impact of missing data when considering which methods are appropriate, taking into account the important distinction between data that are missing just to the analyst and data that are also missing to the clinician/patient at the time of the switch decision [4].

2.3. Method Selection

Different adjustment methods are suitable for the various types of switching, and the underlying assumptions associated with these methods as well as the overall trial design must be considered when selecting among them [3].

In the following sections, we describe each of the commonly used complex treatment switch models and how to fit them—rank preserving structural failure time models (RPSFTM), two‐stage estimation (TSE), and inverse probability of censoring weighting (IPCW). Naïve methods such as excluding or censoring switchers are not considered due to the large biases known to be associated with them when the decision to switch is influenced by variables such as disease progression that are also linked to survival [3]. After defining the estimands, the suitability of a particular model will depend on four questions that should be carefully considered in turn for each case:

Are the appropriate data collected?

If yes, is the model appropriate given the switching mechanism (type, timing and number of patients)?

If yes, are the assumptions of the model reasonable?

If yes and the model is fitted to the data, are the results plausible?

NICE DSU TSD 16 [3] provides a flow diagram that can also be considered when selecting appropriate model(s).

3. Rank Preserving Structural Failure Time Model (RPSFTM)

The Rank Preserving Structural Failure Time Model (RPSFTM) [12] is a randomisation‐based estimator [13] and only requires information on the randomised treatment group, observed event times, and switch treatment start (and sometimes stop) dates to estimate a causal treatment effect. In its standard form, it can only adjust for treatment “crossover” from one arm to the other arm (or to treatments reasonably expected to have the same effect as the other arm, for example in the same class) and not for broader switching to other treatments. Therefore, it is typically used in studies to adjust for the effect of switch from the control arm to the experimental treatment.

The objective for RPSFTM is to determine a shrinkage factor that is applied to the time interval after switch for individual switching control patients, to remove the additional survival due to switching treatment. This is applied in an accelerated failure time (AFT) framework. The total survival time is separated into for the jth patient, where denotes untreated time not spent on experimental treatment (i.e., placebo, control or standard of care comparators) and represents time spent on experimental treatment (or subsequent therapies with similar effect). Then, the RPSFT model estimates a counterfactual survival time which would have been observed on the control if the patient had not switched, based on the following causal model:

| (1) |

where is the true causal parameter and and suggests a beneficial treatment effect. In , is the one‐parameter acceleration factor (AF) associated with the experimental treatment and is the shrinkage factor used to adjust the individual on‐treatment survival time for switchers.

3.1. Assumptions of RPSFTM

A key assumption of RPSFTM is the common (or constant) treatment effect, that is, that is constant during follow‐up time and the same for all patients. This means that the effect of experimental treatment is the same regardless of when it is initiated, for example, at randomisation or after disease progression. This applies on the scale of the causal model structure, that is, a set duration of time on treatment will extend survival by the same absolute amount, regardless of when it is taken. The plausibility of this will depend on the mode of action of the treatment and whether a reduction of effect is anticipated as the disease stage advances, therefore clinical input is required. If the treatment effect distribution is not the same for all patients, then this is a broader consideration for the fundamental trial analysis and interpretation. For example, if there are subgroups known to have markedly different levels of benefit then treatment effects should be interpreted at a subgroup level; RPSFTM could then be applied within subgroups.

Latimer et al. [14] showed that RPSFTM is robust to a modest violation of this assumption. Although the amount of bias increases slightly, it is still smaller than naive approaches in most circumstances if the average treatment effect in switchers is reduced by 20% compared to that in patients randomised to experimental treatment. Hence, in many cases we can apply standard RPSFTM.

If there is a concern about the common treatment effect assumption, then a tipping point analysis could be conducted. The treatment effect for switchers to experimental treatment compared to those originally randomised to experimental treatment is reduced in steps until there is only a pre‐specified difference to the unadjusted intention to treat (ITT) results; this level of effect reduction is then reviewed for plausibility. In this approach, a modified counterfactual survival model is used: , where = 1 for those randomised to experimental and < 1 for switchers. For example, to implement a 20% effect reduction in switchers, = 0.8 would be used. Alternatively, this model could be run using a specified value of for switchers, if a reasonable clinical estimate of this can be obtained.

Another key assumption is that the distribution of the survival in the absence of receiving experimental treatment is the same between arms, that is, that if no patients received any experimental arm treatment, then there would be no difference in survival between randomised arms. This seems reasonable in large‐scale randomised trials where prognostic characteristics are expected to be balanced between arms, as long as being randomised to experimental does not influence survival in other ways than simple treatment receipt. Some caution is advised with smaller trials or subgroups, where balance should be checked for prognostic characteristics that are known and collected, although this cannot rule out an imbalance in other unmeasured factors. Indeed, in this situation, any randomisation‐based inference from the trial may be problematic.

3.2. Other Considerations When Determining Suitability of the RPSFT Model

One advantage of the RPSFTM is that it can be applied when there are very high levels of switching or even when all patients switch in an arm, because the adjustment is done by comparing between randomised arms, rather than comparing switchers and non‐switchers within an arm as in some other methods.

However, the AFT model structure does lead to the following properties of the standard RPSFTM, which may or may not be desirable:

The direction of the unadjusted treatment effect is preserved in the standard RPSFTM adjusted treatment effect due to the model structure—it cannot “flip” the result from favoring the control arm to favoring the experimental arm (assuming more experimental treatment is given in the experimental arm).

If the unadjusted treatment effect is small (e.g., hazard ratio (HR) close to one) then the RPSFTM adjustment will have little effect [15].

The p‐value from the unadjusted treatment effect is preserved for the RPSFTM adjusted treatment effect—any improvement in the magnitude of effect is offset by an increase in variability.

If the time on experimental treatment is similar in the two arms, then model fitting difficulties are likely [15].

To illustrate this further, we note that the shrinkage factor (inverse AF) is estimated by assuming that counterfactual survival would be equal between arms, and therefore any differences in observed survival must be due only to differing amounts of experimental treatment () between arms. So, if the observed survival favours the experimental arm with more time on experimental treatment, experimental treatment must be beneficial, and the estimated shrinkage factor will be < 1. This leads to shorter adjusted survival for switchers in the control arm and an even bigger adjusted survival difference in favour of the experimental arm. Similarly, if observed survival favours the control arm with less time on experimental treatment, then experimental treatment must be detrimental, and the adjusted survival difference becomes more in favour of the control arm. If there is no difference in observed survival, experimental treatment must be ineffective, and adjustment will not change the observed unadjusted result.

If we apply this logic across the distribution of observed survival effects, the proportion of the distribution that favors the experimental arm (e.g., HR < 1) will remain in favor of the experimental arm after adjustment. Similarly, the proportion favoring the control arm (HR > 1) will remain in favor of the control arm. Thus, the distribution is stretched out on either side of the null effect (HR = 1, or logHR = 0), and the point estimate may improve, but the variability increases and the p‐value is unchanged (Figure 1).

FIGURE 1.

Diagram to illustrate preservation of the p‐value with RPSFTM adjustment to remove the effect of control arm switching from a hazard ratio for survival.

If there is a difference in observed survival between arms but no difference in the amount of experimental treatment, there will be no shrinkage factor that can make the counterfactual survival equal between arms. Any situation where the difference between arms in the relative amount of time on experimental treatment is small, such as a large proportion of early switchers [16], may result in model fitting issues such as multiple roots (see Section 3.3.1) and poor performance of the RPSFTM [15].

Although similar principles should apply if re‐censoring is employed (see Section 5), it is noted that they apply on the re‐censored data scale. So, for example, if the standard unadjusted HR is > 1 but after re‐censoring it is < 1, then models will estimate a re‐censored RPSFTM HR < 1. Such a situation is unlikely and should be carefully checked for multiple roots.

3.3. How to Fit the RPSFT Model

Before fitting an RPSFT model, as well as determining whether the appropriate data are available, whether it is appropriate given the switching mechanism and whether the assumptions are likely to be reasonable, a decision must be made about two additional factors: whether to use recensoring, and how to define the time on treatment (). Recensoring will be discussed in detail later in Section 5, as it is also applicable to the two‐stage method.

Time on treatment should be defined in terms of expected duration of treatment effect, as this is the portion of the survival time that the shrinkage factor will be applied to. Two approaches are commonly used. The “on‐treatment” (or “as treated”) approach assumes that the treatment effect acts only whilst the patient is taking the treatment, and so is based on the duration between start and stop dates. If a patient has multiple repeat treatments or treatment breaks, the durations are summed. As a consequence of the common treatment effect assumption and model structure, it is also implied that the relative effect of being “off‐treatment” is the same regardless of arm and timing (e.g., whether pre‐switch in the control arm or post‐randomised treatment in the experimental arm). The plausibility of this should be considered. The “treatment group” (or “ever treated”) approach assumes that the treatment effect starts on the first day of receiving it and persists at the same level until death (or end of follow‐up), so the stop date is not used.

Typically, because these represent two extremes, one approach is selected as the base case based on the mode of action of the treatment and the other may be evaluated as a sensitivity analysis. However, there may be instances where one is clearly inappropriate—for example, the on‐treatment approach is unlikely to be suitable for CAR‐T therapies where treatment is only given once and the mode of action is durable, and the treatment group approach may be unsuitable for short‐acting daily therapies.

It is noted that the “on‐treatment” approach tends to lead to stronger shrinkage factors but is applied over shorter periods of time. This can increase the amount of long‐term survival information loss when recensoring (see Section 5).

The “on‐treatment” approach often also leads to less of a difference in the relative amount of time on treatment between arms than the “treatment group” approach, which increases the likelihood of model fitting difficulties as discussed in the previous section [17].

3.3.1. RPSFT Model 1—Estimate the Causal Parameter

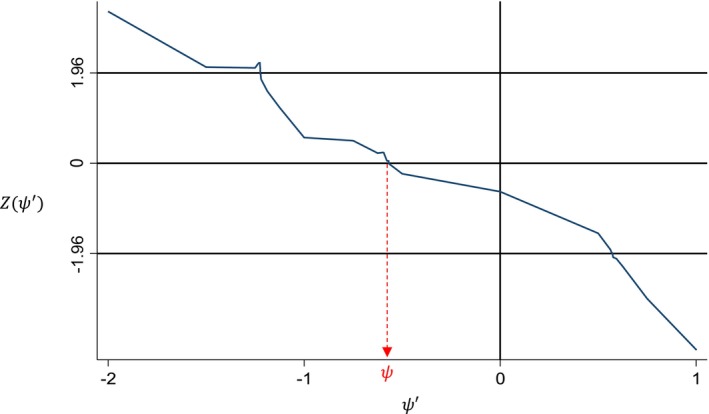

The scalar causal parameter is estimated using G‐estimation [18] based on all patients across both arms. We seek to find that causal parameter that makes both treatment arms as similar as possible if both arms were untreated, that is, experimental arm patients would never have received active treatment and control arm patients would never have switched. A helpful graphical illustration of how G‐estimation works is given in the supplementary appendix of Latimer et al. [18]. Possible choices are chosen from an equidistantly discretised grid range []. For each value in the range we calculate counterfactual (control treated) survival for each patient and compare the counterfactual survival distribution between arms, obtaining a test statistic Z(). The test statistic should ideally be the same as that used for the observed ITT survival analysis, such as a log rank or Cox model based statistic, using the same stratification or covariates. Z() is plotted against and is taken to be the root value satisfying (see Figure 2). If no such roots are identified, the grid range may need to be expanded. If it is still not possible to find any roots across a reasonable range, this may be due to imbalances in prognostic covariates between arms, similar time on treatment between arms, or bias due to the chosen recensoring approach, and alternative methods or recensoring approaches should be considered.

FIGURE 2.

G‐estimation of the causal parameter ψ.

Sometimes, multiple roots may occur, and so the plot in Figure 2 should always be produced to check for this. Although one root could be picked, multiple roots may indicate that the model is unstable, and consideration should be given as to whether results from such an analysis are robust. This may be less of a concern if it occurs very occasionally for samples within a bootstrap to obtain a confidence interval, for example. If selecting one root, some software will pick the one closest to the start of the grid, which may be the most favorable and thus anti‐conservative. Picking the one closest to zero will give the smallest absolute treatment effect which may be preferable, or an average could be taken [4]. Safari et al. [16] discuss some other approaches to handling multiple roots such as changing the grid step size, restricting the grid to a narrower but more plausible range, or using alternative techniques such as iterative parameter estimation (see Section 3.4).

An alternative approach to the grid search is to use interval bisection [13], each time selecting the interval that contains the sign change in the test statistic until a sufficiently small interval for is obtained. However, multiple roots will not be identified by interval bisection, and can only be evaluated via grid search.

A further helpful model fitting check is to generate the Kaplan–Meier (KM) plot of the counterfactual survival for each arm for the chosen value of , which should look similar. Sometimes even though the test statistic indicates no overall difference in counterfactual survival between arms, the distributions may look quite different (e.g., crossing curves) which may raise some concerns. However, bear in mind that if the test statistic is stratified or adjusted for covariates, the effect of covariates will not be reflected in the KM, which may affect their appearance.

3.3.2. RPSFT Model 2—Final Outcome Model (Compare Adjusted Survival Between Arms)

After estimating using Model 1, we next compare the observed survival in the experimental arm to the counterfactual survival in the control arm if no switching to experimental treatment had occurred. For control arm switchers, their counterfactual survival will be different (shorter, if treatment is beneficial) than their observed survival ; for control arm non‐switchers, their counterfactual and observed survival will be the same (). Again, the statistical method used to compare arms should be the same as that used for the observed ITT survival analysis. Kaplan–Meier plots can be directly generated from the adjusted individual patient data. It is also possible to fit flexible survival models to the adjusted data, if extrapolation is the objective.

However, the confidence interval and p‐value that comes directly from this analysis will be incorrect, as unless additional steps are taken to carry through the variability in the estimation of to the ‐adjusted survival analysis, the results will be too precise. should not be treated as a fixed value.

There are two ways to ensure the variability of from Model 1 is correctly carried through to the survival analysis result from Model 2. The first and simplest is to maintain the unadjusted ITT p‐value. By combining this with the adjusted point estimate such as the log HR, the correct variability can be obtained for generating confidence intervals that are consistent with that p‐value [19]. The second way is to bootstrap Model 1 and Model 2 together to obtain the bootstrap distribution of the adjusted effect [19, 20]. In general, the first approach should suffice, unless multiple statistical methods need to be applied, or for some reason the same test statistic is not used for the ITT, G‐estimation and final outcomes model. For example, the primary comparison may be a log rank test which should be used to estimate , with the unadjusted p‐value maintained for the RPSFTM p‐value, but if a supportive HR from a Cox model or restricted mean survival difference estimate from a parametric model is also needed then the associated confidence interval could be bootstrapped. Alternatively the log‐rank p‐value could be used to derive the Cox or parametric model based estimate standard error, acknowledging that there would not be a similar correspondence between these quantities in the unadjusted analysis. Bootstrapping can also be helpful when recensoring is applied since the unadjusted ITT result may be affected by recensoring.

It is noted that, although they are both treatment effects and may be similar in magnitude, exp() from Model 1 and the switch‐adjusted hazard ratio from Model 2 have different interpretations and should not be confused with each other. Both models should be executed to obtain the final adjusted hazard ratio.

3.4. RPSFTM Alternatives and Extensions

Iterative parameter estimation [21] (IPE) is very similar to RPSFTM and often gives similar results. The key difference is that is estimated assuming that the counterfactual survival follows a parametric accelerated failure time distribution, such as a Weibull, and the test statistic Z is based on this. An initial estimate of is obtained using the observed data, this is used to estimate counterfactual survival for switchers, the arms are compared again, and the process continues iteratively until the estimate of converges to a prespecified level of precision. Due to the parametric assumption, there will be a unique root. However, RPSFTM is more commonly used as it does not require the additional distributional assumption of IPE. IPE could be a useful sensitivity analysis for RPSFTM if, for example, there are difficulties in finding a unique root.

Bowden et al. [22] propose a weighted version of RPSFTM using a weighted log rank test, which tends to put greater weight on earlier survival times. Jiménez et al. [23] propose using a modified weighted log rank test (as an alternative to RPSFTM) in the presence of switching. These approaches may increase power and precision; thus, the unadjusted p‐value need not be preserved as with RPSFTM. However, a careful justification of the rationale for using alternative weighting would be required.

To adjust for multiple treatments, Xu et al. [24] proposed to either use a random forest model or a stratified version of RPSFTM with multiple levels of subsequent treatment, where the kth subsequent treatment is associated with causal parameter . The stratified RPSFTM performs a multidimensional grid search over all possible combinations of to , therefore is likely to become computationally intensive as k increases, and may have issues with non‐identifiability of multiple parameters from a single randomisation. It is also likely to require a reasonable number of patients receiving each of the k treatments to avoid model fitting issues. Bell Gorrod et al. [4] provide some further reflections on potential limitations of this method and another “enhanced” RPSFT method proposed by Li et al. [25] for adjusting for two types of switch treatment.

4. Two‐Stage Estimation (TSE)

Two‐stage estimation [2] (TSE) consists of estimating counterfactual survival times for patients who switch treatment, as for RPSFTM, but uses a different statistical methodology to do so.

The TSE adjustment method was originally developed to account for and adjust for treatment switching that occurs at or soon after a particular disease‐related event (such as disease progression), commonly referred as a “secondary baseline”. The counterfactual survival is structured in the same way (see Equation 1) but the acceleration factor is estimated differently. This is done by comparing switchers and non‐switchers from the secondary baseline point, adjusting for important confounders measured at the secondary baseline, that is, treating the second “stage” of the trial after the secondary baseline as an observational study of switching versus not switching. Therefore, a reasonable number of the patients in the subset still at risk at the secondary baseline need to be in the switching and not switching treatment groups for the TSE methodology to be applied, which is a common barrier to its application. Unlike RPSFTM, with TSE the subsequent therapy can be different from that in the other arm of the study; however, due to the requirement that switches only happen at or soon after the secondary baseline, it is often only suited to studies where “crossover” is offered at a pre‐defined point as part of the study design. Similarly, although TSE can be used to adjust for switching occurring in both arms, it is only suitable if both arms are restricted to switch at a specified point, which is not common.

4.1. Assumptions of TSE

The key assumption of the standard TSE model is that there are no important unmeasured confounders; that is, that all of the important covariates that influence both survival and the decision to switch are included in the model comparing post‐secondary baseline survival between switchers and non‐switchers. Identification of potential confounding covariates is discussed further in Section 6.3.

Since only covariates available (or imputed) at the time of secondary baseline are used in the simple TSE model, it is assumed that there is no additional confounding occurring in the time period between secondary baseline and the time of switch. If switch occurs soon after secondary baseline, this assumption should be reasonable. However, if some patients have a longer time period between secondary baseline and switch, this simple TSE method may not be suitable; a potential extension is discussed in 4.4. Defining what length of gap would be acceptable will be study specific and clinical input should be sought; details could be pre‐specified in the protocol.

4.2. Other Considerations When Determining the Suitability of the TSE Model

Many considerations for TSE are similar to IPCW, and we refer the reader to the later IPCW Section 6 on this. Unlike RPSFTM, neither TSE nor IPCW are randomisation‐based methods, and so the adjusted p‐value can differ from the unadjusted p‐value.

An additional consideration for TSE is whether any patients switch before secondary baseline, for example, without confirmed disease progression. Such patients will not be in the risk set at the secondary baseline and may be quite different from those that switch post‐secondary baseline and so, in general, should not be included in the model to estimate the acceleration factor or have their survival adjusted with that acceleration factor. Therefore, their observed survival must be used, without removing the effect of switch treatment. If there are such patients, or at least a non‐negligible proportion, TSE may not be a suitable method. One way to assess their potential influence would be to do two sensitivity analyses, both setting the secondary baseline for these patients as the point of switch, one where their survival is adjusted using the AF from true secondary baseline patients and one where these patients are also included in the estimation of the AF. If either of these was markedly different from the primary analysis, then TSE would not be appropriate. However, such sensitivity analyses should not be over‐interpreted since they are unable to define secondary baseline in the same way for all patients and so these inferences may be biased.

Secondary baseline is typically set as disease progression, but it could be another clearly defined event that would trigger the switch decision. For example, in the PACIFIC study, Ouwens et al. [26] set secondary baseline as initiation of any subsequent therapy and compared patients that switched to immunotherapy versus other therapies (see Section 4.4). However, it is still important that all important confounders are included in the model—for example, if switching were allowed if a patient was unable to tolerate the comparator therapy, the type and severity of that intolerance may influence their switch decision and future outcome. If switching was also allowed at disease progression, then the earlier of those two events would become the secondary baseline, and the type of secondary baseline (progression or intolerance) would likely be an important confounder. Extra care is needed with such a “composite” secondary baseline, since switching patterns and reasons may differ by type of event. Since the standard implementation of TSE applies a single AF to all switching patients, it will be important that the treatment effect does not depend on the type of event. Alternatively, separate AFs could be calculated for the different baseline types, although this will add complexity to the modeling and interpretation. Consideration may need to be given to interactions between secondary baseline type and other confounders. The analyst should check that patients who experience both events (e.g., intolerance followed by disease progression) switch soon after the first; if some switch at the first and some at the second, then the assumption of no unmeasured confounding between secondary baseline and switch may be violated. Analyses which depart from the typical secondary baseline of disease progression should therefore be used and interpreted cautiously.

4.3. How to Fit the TSE Model

As with RPSFTM, before fitting a TSE model, consideration should be given to whether to use recensoring (Section 5), and how to define the time on treatment (; Section 3.3).

4.3.1. TSE Model 1—Estimate the Acceleration Factor

For ease of explanation, we focus on subjects switching from control to experimental treatment (and/or other treatments that we wish to adjust for in the control arm). If both arms are being adjusted, this process is repeated to adjust for switching in the experimental arm.

In the first model, focusing only on control arm patients, one compares post‐secondary baseline survival times for patients who switch onto the experimental treatment to those who did not. A parametric AFT model is used (most commonly Weibull, lognormal, log‐logistic or gamma), the distribution being chosen based on the best fit by, for example, AIC or BIC. The model also controls for prognostic characteristics measured at the secondary baseline time point and includes the time‐dependent (usually) switch indicator. The effect of treatment associated with switching can be obtained from there as an acceleration factor (AF). An alternative to including the covariates directly in the model is to estimate a propensity score for switch treatment [26] and use inverse probability of treatment weights to fit weighted parametric AFT models.

Essentially, this represents a simplification of the approach used by Robins and Greenland [27] and Yamaguchi and Ohashi [28] to adjust for treatment switches, in which a structural nested model was utilised to estimate the treatment effect in the control group, rather than a less complex AFT model as suggested here [2].

4.3.2. TSE Model 2—Final Outcome Model (Compare Adjusted Survival Between Arms)

As in RPSFTM, we next use the shrinkage factor (1/AF) from Model 1 to adjust the survival times in the control arm by replacing them with the counterfactual times. If the experimental arm is also being adjusted to remove the effect of switching, we do the same to the experimental arm survival times using the AF from the experimental arm Model 1 fit. Analysis proceeds as for RPSFT Model 2 (Section 3.3.2). As with RPSFTM, Kaplan–Meier plots and models for extrapolation can be fit directly to the adjusted individual patient data.

Again, we must ensure that the variability in the estimation of the AF from Model 1 is carried through to Model 2, or the results from Model 2 will be too precise. This is commonly done by bootstrapping (Model 1 and Model 2 together) [29].

4.4. TSE Extensions

Latimer et al. [29] describe an extension of simple TSE, referred to as TSEgest, to adjust for additional time dependent confounding between secondary baseline and time of switch, via G‐estimation. Simulations showed that this may be a useful alternative approach in some scenarios, particularly when there is time dependent confounding and a high switch proportion which leads to large bias with standard TSE and IPCW. However, this negates one of the main practical advantages of standard TSE over IPCW; namely that the collection of time dependent covariate data after secondary baseline is not required.

Ouwens et al. [26] describe a modified two‐stage method which was applied in the PACIFIC study of durvalumab versus placebo in non‐small cell lung cancer. Standard TSE using progression as a secondary baseline to adjust for the effects of switching to immunotherapy was not applicable as the switch occurred a median of ~6 months after progression. Instead, the start of any subsequent therapy was used as a secondary baseline, and thus the survival time of patients starting immunotherapy was replaced with an estimated counterfactual survival if they had received other therapy (e.g., traditional chemotherapy). This was done sequentially by line of subsequent therapy, first looking at patients who received second or later subsequent therapy and adjusting for immunotherapy based on the start of second or later subsequent therapy as a secondary baseline, and then similarly looking at first subsequent therapy as a secondary baseline.

Some alternative methods that have been proposed for adjusting time to event outcomes for treatment switching, including random forests, regression imputation, semi‐competing risks models, semi‐parametric copula‐based models, decision analytic modeling, and use of external data, are discussed in Bell Gorrod et al. [4].

5. Re‐Censoring in RPSFTM and TSE

RPSFTM and TSE involve calculation of an acceleration factor which represents the prolonging of survival times for those on the control arm, as a result of switching to the (often beneficial) experimental treatment. Crudely applying this acceleration factor to shrink a patient's survival time can result in informative censoring, as a consequence of shrunken censoring times occurring earlier in switchers than non‐shrunken censoring times in non‐switchers, when switch is linked to prognosis. Hence, censoring time is no longer independent of failure time. Censoring is uninformative on the observed time scale, but is informative on the counterfactual time scale. A method called re‐censoring [13] has been proposed to mitigate the effects of informative censoring bias. However, as will be discussed shortly, this also comes at the price of loss of information. As a result of this necessary trade‐off, it requires due consideration prior to implementing.

Re‐censoring involves breaking the dependence between censoring time and switch status (prognosis) by applying a new censoring rule that affects all patients in the same way. Let be the administrative censoring time (data cut‐off) for patient i. All patients still at risk are then censored at the earlier of their observed administrative censoring time or a new administrative censoring time.

Specifically, the counterfactual survival times of patients are re‐censored at their potential censoring time , which is the minimum of the administrative censoring time observed or the adjusted administrative censoring time after application of the acceleration factor:

| (2) |

If switch treatment is beneficial, as is usual, then . If is less than the counterfactual survival time for patient i (whether event or censored), this time is replaced by , and re‐censoring occurs, which means the event status of patient i is set to censored. In this way, counterfactual survival times of both switchers and non‐switchers are re‐censored at a common earlier point that is independent of switch status/prognosis, thus eliminating informative censoring.

It is noted that an amendment to the definition of can be used in the situation where no switching can occur for any patient for an initial period of time in the trial. For example, switching may not be allowed until after results of an interim analysis that occurs at time after the last subject entered the trial. In that case, only the time after this initial period needs to be adjusted, and we replace with in Equation (2). The impact of re‐censoring will be reduced as increases.

Re‐censoring is applied for data on the counterfactual time scale, and therefore should be applied to all patients in the RPSFTM G‐estimation procedure which compares counterfactual survival between arms to obtain the AF. The exception to this would be if the experimental arm has complete treatment (all patients are on treatment for the entire period, for example, in the “treatment group” approach) (Section 3.3), in which case recensoring is not required in the experimental arm since all patients will be affected by the AF shrinkage equally.

In the subsequent analysis of adjusted survival for both RPSFTM and TSE, observed survival is used for the experimental arm, so recensoring need only be applied to the control arm. For control arm non‐switchers, although their counterfactual survival happens to be equal to their observed survival, re‐censoring still needs to be applied to break the dependency between censoring time and switch status in the control arm. An alternative “hybrid” recensoring approach for RPSFTM, where recensoring is applied in the AF estimation model but not the adjusted survival model, is discussed at the end of this section.

Re‐censoring has been shown to mitigate the problems associated with informative censoring bias; however, it can be associated with missing follow‐up information bias as a result of the shrinkage of survival times and the re‐censoring of events at timepoints earlier than those observed on the trial. The bias associated with missing follow‐up information is apparent when switching to the experimental agent is beneficial for the patient and proportional to the magnitude of benefit seen in the trial—with larger treatment benefits associated with larger acceleration factors and therefore greater shrinkage—shortening the follow‐up time considerably as a result of the shrinkage. This can be seen in examples such as Figure 3, taken from the NICE submission of axicabtagene ciloleucel in relapsed or refractory diffuse large B‐cell lymphoma after first‐line chemotherapy [30]. Here, the last observation for the standard of care therapy (SOCT) RPSFTM adjusted curve with full recensoring in blue is more than 18 months earlier than the SOCT curves for other switch adjustment methods; over half of the follow‐up period has been lost due to recensoring. A similar example from a different study in metastatic melanoma, including TSE, is presented by Latimer et al. [31].

FIGURE 3.

Example of information loss due to full recensoring in RPSFTM (blue curve) in the ZUMA‐7 study of axicabtagene ciloleucel versus standard of care therapy (SOCT) in diffuse large B‐cell lymphoma.

This information loss is often more acute with the “on‐treatment” approach than the “treatment group” approach, as AFs tend to be larger but applied for shorter periods when estimating counterfactual survival (since stops when switch treatment stops). However, they are applied for the whole trial period when defining potential censoring time, meaning occurs early and lots of information after this point is lost to re‐censoring.

In simulations [31], methods that do not account for re‐censoring have been shown to typically overestimate outcomes in the control group. Therefore, bias was directionally positive in these analyses, whereas methods that re‐censor underestimated to a proportionate degree outcomes in the control arm, therefore had negative biases. However, this is situation specific, and in real‐life studies it is not always the case that analyses without re‐censoring estimate better outcomes for control than those with re‐censoring. The simulated magnitude of bias was different for the different methods (RPSFTM, TSE), with the re‐censoring in RPSFTM typically associated with the largest degree of negative bias. TSE typically had the lowest level of bias associated with it, although this varied according to the magnitude of treatment effect and prognosis of switchers, for example.

Re‐censoring therefore has been cautiously put forward as a way to mitigate biases associated with these two treatment switching methods, as it addresses the issue associated with informative censoring bias; however, this comes at the expense of loss of follow‐up information. There is a lot of conflicting guidance in public literature as to whether to re‐censor or not, and no real consensus on which method provides the least biased results. Latimer et al. [31] recommend presenting results both with and without recensoring. For TSE, an approach combining TSE with IPCW has also been suggested as an alternative for handling informative censoring; simulations [32] showed that it could be useful, but its performance compared to recensoring approaches varied depending on the scenario. In our experience, it is often obvious (from comparing the last data timepoint and number of events after recensoring to the observed data) when recensoring causes a major issue with loss of long‐term follow‐up information. Early truncation of the survival curves due to recensoring may have an impact in terms of increased uncertainty when the adjusted survival curves are extrapolated over a longer time horizon, for example, to use in health economic modeling.

Re‐censoring can also be problematic, as it can lead to model misspecification. As re‐censoring typically involves truncation of the survival curves, estimation of the true treatment effect after adjusting for switch is therefore calculated over a shorter follow‐up duration. Where we would typically assume the effect of experimental treatment to diminish over time, this would lead to an over‐estimation of the true treatment effect when applying re‐censoring. Simulation studies have shown that on account of this, RPSFTM analyses can lead to negative bias; that is, they over‐adjusted for the true impact of treatment switching.

For RPSFTM, a potential alternative to full recensoring or no recensoring is a hybrid approach, where recensoring is applied in the AF estimation model (Model 1) but not the adjusted survival model (Model 2), that is, at the point of estimating but not the final survival analysis. This approach was used in the NICE submission of osimertinib in metastatic EGFR and T790M mutation‐positive non‐small cell lung cancer [33]. This may have some advantages in reducing informative censoring bias in the estimation of whilst reducing bias due to information loss in the final survival analysis. However, it does not eliminate the alternative sources of bias in each model. The performance of the hybrid method has not been evaluated in simulations, to our knowledge. Nevertheless, it may be a useful additional approach to present alongside the more usual full and no recensoring results, in scenarios where full recensoring results in a large degree of information loss.

6. Inverse Probability of Censoring Weighting (IPCW)

The inverse probability of censoring weighting (IPCW) method originated in the causal inference literature (as did RPSFTM), and given the assumptions are satisfied, can estimate an unbiased adjusted treatment effect in the presence of time‐varying confounders [9]. Unlike RPSFTM, with IPCW the subsequent therapy can be different from that in the other arm of the study. Both arms can be adjusted, and the therapies adjusted for can be different for the different arms. IPCW differs in approach from RPSFTM and TSE as it does not adjust the individual survival times of patients but instead estimates weights for each patient to define a pseudo‐population.

IPCW extends naïve censoring techniques based on per‐protocol analysis where patients are censored at the time of treatment switching. Naïve artificial treatment censoring at the time of switch introduces informative censoring because the outcomes of patients who switch and those who do not are likely to be different.

In IPCW, the bias associated with informative naive censoring is removed by weighting each patient in the experimental and control arm. The weight for each patient is the inverse of the predicted probability of not being censored (i.e., not switching from planned treatment) at any given time conditional on the observed values of baseline and time‐varying covariates. Uncensored patients are up‐weighted based on the covariate similarity of censored patients [34]. Patients with a low probability of treatment switch (unlikely to switch) are given relatively smaller weights compared to patients with a larger probability of treatment switch. For example, if patients with higher biomarker expression are more likely to switch, patients with higher biomarker expression who have not yet switched are assigned higher weights. By applying this IPC weighting, the differences between censored switchers and uncensored non‐switchers should be eliminated and censoring becomes uninformative, removing the bias associated with the naive censoring approach.

6.1. Assumptions of IPCW

The main challenge in applying IPCW is to establish that the weights adjust appropriately for the bias created by censoring switchers, such that the covariates available at baseline and over time include all important ones that are prognostic for survival and influence the probability of treatment switching [18]. This is the “no unmeasured confounders” assumption that also applies to TSE as previously described in Section 4.1; we can never be sure that we have no unmeasured confounders. Although this is the same assumption as for TSE, for IPCW we can use covariates over the entire study time period to satisfy this assumption, not just those at secondary baseline.

6.2. Other Considerations When Determining the Suitability of the IPCW Model

Unlike RPSFTM, IPCW is not a randomisation‐based method, and so the adjusted p‐value can differ from the unadjusted p‐value.

Since Model 1 of the IPCW method (see Section 6.4.1) compares switchers and non‐switchers within an arm, there needs to be a reasonable number of patients in both of these groups to obtain robust model estimates. So if the proportion of switchers is very high, IPCW will not be suitable; if it is very low, there is little point in adjusting for switch (indeed, this applies to all methods). Simulations [2, 18] have shown IPCW is prone to substantial error if more than 85% of patients switch in a control arm of 250 patients; however, it will also depend on absolute numbers, and smaller studies may be affected at lower switching proportions. Further simulations [14] have suggested that all methods provide close approximations of the true treatment effect when the switching proportion is moderate, defined as less than approximately 60% of the patients eligible to switch. Therefore, we propose that IPCW is best suited to switching proportions of 40%–60%, unsuitable with switch proportions over 85% or under 15%, and used cautiously in other situations, particularly in smaller studies. If there are certain preconditions that need to be met prior to switch being possible (e.g., it is only offered to patients still in the study following an interim analysis, or who have disease progression), then the proportions and group sizes should be assessed in the subset of patients eligible for switch. The same principle applies to TSE, where this should be assessed in the patients with a secondary baseline.

It is expected that there will be at least one time‐varying covariate in an IPCW model, since it is likely that something has changed over time since baseline that has influenced the decision to switch at that point, such as disease worsening. If switch truly only depends on baseline factors then a simpler model can be applied, but such a situation seems unlikely.

The IPCW method is not suitable if there are any covariate patterns which predict with probability equal to one that treatment switching will take place [35]. Additionally, those with probabilities close to one are likely to lead to model fitting difficulties. This means that if there are any important confounding variables that are observed exclusively or near‐exclusively in switchers (or non‐switchers)—for example all/most patients with disease progression chose to switch—then IPCW will not be appropriate. Similar considerations will apply in TSE.

Furthermore, the correctness of model specification is important: Model 1 (weight determining model) and Model 2 (final outcome model to compare adjusted survival data) need to be correctly specified to lead to reasonable bias reduction in the trial.

6.3. Covariate Selection for IPCW and TSE

A suggested approach to defining a set of potentially confounding covariates to adjust for in the IPCW and TSE models is provided in the Data S1.

6.4. How to Fit the IPCW Model

As with RPSFTM and TSE, there are two models in IPCW.

6.4.1. IPCW Model 1—Estimate Weights

At first, a switching model is defined within an arm to estimate the probability that the patient has not yet switched by time t, dependent on baseline and time‐varying covariates. If both arms are being adjusted for subsequent therapies, separate models are typically fitted for each arm, unless the switching behavior can reasonably be expected to be the same for each arm (e.g., the switch therapy is the same and the impact of covariates on the decision to switch does not differ by randomised arm). A patient will be censored at treatment switch and is removed from the at‐risk set.

We wish to fit a time dependent Cox model which typically has a form as shown in Equation (3) [9], where h C is the arm‐specific hazard of switch (censoring), is past covariate history, T is the actual switch time, h 0 is the arm‐specific baseline hazard, and is the baseline and current time varying covariate values:

| (3) |

It is noted that, if desired, the analyst may change the form of this model such as incorporating more complex interactions between covariates or with time; the proportional hazards assumption is discussed further in Section 7. However, the model is structured, we then use this to estimate the probability of switch for each subject and each time at risk, which we then convert into an inverse probability of switch (censoring) weight.

There are two modelling approaches that can be used. We can fit the continuous time Cox model directly, or we can use a discrete time pooled logistic regression model, which should be a good approximation to this under the assumption that the probability for the event is small in the discrete time interval [36]. When this methodology was first proposed, some software could not handle time‐varying subject‐specific weights from the Cox models. So older examples of IPCW tend to use pooled logistic regression for both estimating weights in Model 1 and performing weighted survival analyses in Model 2. Due to the increased availability of suitable software (see Section 8.2), the time‐dependent Cox model is now more commonly fitted directly rather than using the pooled logistic regression model approximation. However, we discuss both methods here for completeness.

6.4.1.1. Handling Missing Data for Covariates

A covariate value is needed for every patient in every time interval where they are at risk to keep them in the analysis set. Potential strategies for handling missing covariate data are discussed in the Data S1.

6.4.1.2. Estimating Weights From a Time Dependent Cox Model

Firstly, the data is restructured into time intervals with breaks at the appropriate event/switch/covariate change times, as described by Graffeo et al. [37]. For each patient, their event status, switch status, and baseline and time‐varying covariate values are set for each interval and should be constant within that interval. Intervals after switch are removed, which is equivalent to censoring at switch. Intervals after death or censoring for the outcome are also removed.

A time‐dependent Cox model for the hazard of switch over time, dependent on baseline and time varying covariates, can then be fit to the restructured data using the counting process approach as described in Section 8.2.

Each individual's unstabilised IPC weights over time are then obtained from this model (see Graffeo et al. [37] Equation (1)). At a given time t, this weight is defined as the inverse of the probability of remaining unswitched until time t, given the observed values of the baseline and time‐varying covariates at t.

6.4.1.3. Estimating Weights From a Pooled Logistic Regression Model

As with the Cox model, the data are firstly restructured into time intervals, event/switch/covariate values are determined in each interval and post‐switch/event/censoring intervals are removed. Commonly in pooled logistic regression, fixed length intervals are used, but this is not mandatory.

A logistic regression model is fit to each interval for the log‐odds of switch dependent on baseline and time‐varying covariates. Due to the large number of intervals, to reduce the number of parameters compared to fitting a separate intercept for each interval, a smooth function such as a natural cubic spline can be applied to the intercept [38, 39]. For spline expansions, a decision is needed regarding the number and position of the knots to be placed [11]. Hence, pooled logistic regression implementations may require more user judgment in the implementation than the Cox model.

Individual probabilities of switching (censoring) over time based on all covariates V, , are then obtained from the model predicted probabilities. The inverse of these, , are the unstabilised IPC weights.

6.4.1.4. Assessing and Stabilizing Weights

Large unstabilised weights can occur, especially towards the end of the observation time when most patients have either experienced the event or have been censored. Alternatively, stabilised weights can be applied which form a narrower distribution with decreased weight variability and increased statistical efficiency [38]. In the weight calculation the numerator is replaced by which is the individual's estimated probability of switch with only the subset of baseline covariates B in the model, rather than the full set of baseline and time varying covariates V. Hence, stabilised weights are . However, these may not fully resolve the issue of large weights.

The distribution of the weights should therefore be reviewed. Graffeo et al. [37] propose a plot of truncated log‐stabilised weights with a boxplot for each time interval since enrolment. However, within a model that includes a large number of intervals, it may be preferable to plot all weights on the y‐axis over all time intervals and patients on the x‐axis and scan for extreme weights. In the case of a strong covariate‐treatment association, weights estimators may still be considerably variable and positively skewed [40]. For this scenario, truncation may be considered where extreme weights are replaced by minimum and maximum weights according to percentiles of weight distribution, for example, the 95th percentile. Truncation of weights is further discussed in Bell Gorrod et al. [4]. Estimated weights that are extreme in value or that in aggregate do not have a mean close to 1 may indicate model misspecification or non‐positivity, and an estimate of survival based on such weights may fail to correct for bias [41].

6.4.2. IPCW Model 2—Compare Adjusted Survival Between Arms

Once the weights for all time intervals in the counting process are estimated across all patients, these weights can be incorporated within an outcomes model. If only one arm is being adjusted, the weights should be set to 1 in the other arm. The outcomes model can be a weighted Cox model with subject‐specific time‐varying weights, applied using the same counting process approach and time intervals as described for Model 1, modeling the hazard of the outcome event (e.g., death) dependent on treatment arm. Or it could be a weighted pooled logistic regression model, with weights set for each patient in each interval, modeling the log odds of the outcome event in a similar way. It is also possible to fit weighted flexible survival models if extrapolation is the objective. As well as treatment arm, if stabilised weights are used, any baseline confounders from the weight determining model should also be included as covariates in the weighted outcomes models. This is because stabilised weights do not adjust for confounding by baseline covariates, as they are included in both the numerator and denominator of the weight [42].

Confidence intervals generated by this weighted model will be inappropriate because the standard errors are not valid. Weights are estimated in IPCW and are not fixed and known, so the variability in this estimation from Model 1 should be carried through to Model 2. The weights also induce within‐subject correlation. To account for within‐subject correlation, standard errors must be calculated in a robust way, such as using the sandwich variance [38]. To account for the estimation of the weights, bootstrapping can be applied [3]. The whole routine of estimating weights and computing the final outcome model should be bootstrapped simultaneously. A weighted analysis is less efficient (with bigger standard errors) than an unweighted analysis; for example, IPCW is performed to decrease bias, not to gain efficiency.

7. Proportional Hazards Assessment After Switch Adjustment

If necessary, the assumption of proportional hazards after switch‐adjustment can be assessed using standard graphical methods. However, the properties of the various tests that are commonly used for proportional hazards assessment are not fully understood when applied to switch‐adjusted results; this is a potential area for future research. Care should be taken to ensure that any test‐based approaches or confidence bands are using the correct variability.

The IPCW method was developed using the Cox model as a basis, which makes the proportional hazards assumption. It may be possible to adapt this to use, for example, an accelerated failure time distribution‐based parametric model if the alternative assumption about the parametric distribution is deemed reasonable. However, if the unadjusted analysis shows strong evidence of non‐proportional hazards, caution should be exercised when using any of the switch adjustment methods described here, as their performance may be impacted. For example, the common treatment effect assumption of RPFSTM is less likely to hold in this situation.

8. Software and Programming Guidance

Some efforts have been made to develop packages and code in various statistical software to implement treatment switching methods. These are described in this section.

To support analysts who wish to use these models, we have developed a repository of R code applied to a worked example. Details are provided in the Code Appendix at the end of this paper (Section 12).

8.1. RPSFTM and IPE

For RPSFTM, the Stata package strbee [13] was originally developed in 2002 and for a long time remained the only well‐known published option. This can also be used for IPE models. It has many helpful features that enable multiple types of RPSFT models to be fitted.

The rpsftm package [43] in R was released in 2018, and has much of the same functionality as strbee. Time on and off treatment for an individual is represented in the rpsftm() function call using rx, the proportion of total time spent on treatment. The test used for G‐estimation can be based on log‐rank, Cox regression, or parametric survival models and covariates or strata can be included. Recensoring can be applied through specifying the censor_time argument; setting autoswitch = TRUE will not recensor an arm if it is completely treated (rx = 1 [or 0] for all patients). It is also possible to use the treat_modifier argument to conduct sensitivity analyses where differs by treatment arm, that is, reduced effect in control arm switchers ( < 1 per Section 3.1) compared to those randomised to treatment.

Outputs include and counterfactual survival times for both arms, and plots of these can be easily produced. Subsequent modelling of adjusted survival (Model 2 in the earlier section) can then be programmed by the analyst according to their requirements, ensuring that the variability of is correctly carried through as discussed previously—for example via rearranging the test statistic (formed of the adjusted log HR estimate and its variability) corresponding to the unadjusted p‐value to obtain the adjusted log HR variability, or bootstrapping.

It is noted that, in the case of multiple roots found for the estimation of , the rpsftm package uses the first root in the interval in subsequent calculations (such as generating individual counterfactual survival times). The analyst should consider if this is appropriate for their objective, as discussed earlier in Section 3.3.1, and if necessary, modify their code to use an alternative root.

For RPSFTM in SAS, analysts often write their own code. A macro for estimating using G‐estimation has been developed by Danner and Sarkar [44]. They show consistent results to the R rpsftm outputs in their example, although they mention it is less efficient. This was based on the log‐rank test and has more recently been extended [45] for Weibull and Cox models. However, the authors of this paper do not yet have experience with the macro and analysts should ensure it is validated for the circumstances of their analysis.

8.2. IPCW

Our original publication [1] describes some approaches that have been used previously to fit IPCW models in Stata and SAS. At the time, the pooled logistic regression approach was commonly used, as described earlier in Section 6.4.1. However, it is now more usual to directly fit a Cox model using a counting process notation [46, 47] for time‐varying covariates in the estimation of IPC weights, and for the weights themselves in the subsequent weighted survival analysis. Start and stop times tstart and tstop are input to define the intervals in which the covariates or weights are constant (see Table 1).

TABLE 1.

Programming syntax for IPCW Cox models in R and SAS.

| Software: function | Counting process for IPC weight model (Model 1) | Robust standard errors for weighted survival analysis (Model 2) |

|---|---|---|

| R: coxph |

Surv(tstart, tstop, switch) ~ <covariates> |

Surv(tstart, tstop, event) ~ arm + cluster(subjid), data = dat, weights = ipcw |

| SAS: PROC PHREG | model (tstart, tstop)*switch = <covariates> |

data=dat covs(agg); class arm; model (tstart, tstop)*event=arm; freq ipcw/notruncate; i d subjid; |

Event occurrence covariates such as time‐varying progression status or specific adverse events can be set up as indicator variables, taking a value of 0 until the event occurs when the value changes to 1. Switch status is similarly constructed, with the value changing from 0 to 1 in the switching interval. Post‐switch intervals are removed.

Much of the effort in fitting IPCW models comes from manipulating the data into this interval structure. The R package ipcwswitch [37] provides some useful functions for assisting with this process, as illustrated in its accompanying publication. Users should be aware that some functions require quoted arguments when others do not, and that the functions used earlier in the process can use dates, but the later ones require this to be converted to a numeric time since baseline. Some functions may not work or may restructure data incorrectly if datasets are not sorted in the expected order (e.g., by subject identifier) or variables are not in the correct format (e.g., arm as a factor). Therefore, the generated datasets should be very carefully checked. The package also includes a function ipcw() to generate the stabilised (and optionally truncated) IPC weights from time‐dependent Cox models once the data structure is established. It is noted that this function contains a loop which can make it time consuming to run, particularly in a large bootstrap sample; the user may wish to re‐write some parts of it to increase efficiency if needed.

The R package ipw [48] is an alternative that can fit a wider range of marginal structural models. The ipwtm() function with family='survival' can similarly be used to generate IPC weights from a time‐dependent Cox model once data has been structured to meet its requirements. It has some small methodological differences to the ipcwswitch::ipcw() function, which means estimated weights will not always be exactly equal from the two packages [37]. ipw::ipwplot() is useful to plot and explore the weights distribution of any dataset, including those generated by other packages.

Mosier [45] describes a process including a macro for conducting an IPCW analysis based on the Cox model rather than pooled logistic regression in SAS. However, the authors of this paper do not yet have experience with implementing this or how it compares to results from the above R packages; analysts should ensure it is validated for the circumstances of their analysis.

It is recommended to conduct the following programming quality control (QC) check for IPCW analyses, which can highlight any errors in the data re‐structuring or model fitting process: set all the weights in each interval to 1 and re‐run the survival model. The results should match those from a naïve survival analysis where patients are censored at switch.

One advantage of using R rather than SAS to fit IPCW models is the ease with which it produces IPC weighted KM curves in a single line of code—the same counting process syntax can be applied in the survdiff() call as in coxph() to apply time‐varying, subject‐specific weights. PROC LIFETEST in SAS does not currently have this functionality, even though PROC PHREG does. It is further noted that for parametric models in R, flexsurvreg allows counting process syntax but survreg does not. Since IPCW is a weighted analysis, the KM numbers at risk across time will not be integers, although they may be rounded for presentation.

As previously discussed, it is important to carry through the variability in the IPC weight estimation into the weighted survival analysis. This can be done via bootstrapping.

Counting process models may generate error messages when a subject dies, is censored for death, or switches on the same day as baseline (time tstart for the first interval)—for example, if they die on the day of randomisation. This is because the length of the first interval becomes zero days. This can be fixed without affecting the estimated hazard ratio by adding 1 day to all times relative to baseline (death/censoring, switch, data cut off, time‐varying covariate change times) or by adding 0.5 days to any death/censoring/switch times occurring on the same day as baseline [49].

8.3. Two Stage Estimation

At present, there are no known packages or macros specifically for TSE, as this tends to use a combination of already established methods. Some of the approaches described above for IPCW can be applied when writing code for TSE. R code for a worked example is provided in the repository that accompanies this paper.

9. Reporting Recommendations

Sullivan et al. [20] reviewed the quality of reporting on the implementation of switching adjustment methods in oncology trials as reported in published literature and NICE HTA submissions. The authors noted that the quality of reporting was generally poor; for example, few studies discussed whether the adjustment method was pre‐specified, why a particular method had been chosen, how the methods were implemented, and whether any sensitivity analyses were applied to probe assumptions. These limitations made it difficult to assess the validity of the adjusted treatment effect estimates. Bell Gorrod et al. [4] performed a review of recent NICE TAs and similarly found sub‐optimal reporting and reviewing and proposed their own detailed reporting guidelines. Hence, motivated by the authors' recommendations to improve reporting, we present additional modified checklists for outputs common to all treatment switching adjustment methods in Table 2 and further outputs specific to a given adjustment method in Table 3.

TABLE 2.

Reporting checklist for general outputs—all treatment switch adjustment methods.

| 1 | Discuss whether assumptions are plausibly satisfied for treatment adjustment methods. |

| 2 | Outline treatment‐switching mechanism: Who could switch and when during study? Optionally, report intercurrent events in the estimands framework following Manitz et al. table 1 [5], for example, distinguishing intercurrent event strategies for switches to investigational drugs and drugs available in a given market. |

| 3 | Report number of switchers, when switching occurred and how many were eligible to switch. |

| 4 | If covariates adjusted for: What covariates, how they are selected, amount of missingness, and are they fixed or time‐varying covariates? Process for variable selection (targeted literature review, clinical consultation, statistical variable selection etc.). |

| 5 | Was the selected adjustment approach (including model fitting steps) pre‐specified? |