ABSTRACT

Background/objective

Artificial intelligence (AI) is embedded in healthcare education and practice. Pre‐service training on AI technologies allows health professionals to identify the best use of AI. This systematic review explores health students'/academics' perception of using AI in their practice. The authors aimed to identify any gaps in the health curriculum related to AI training that may need to be addressed.

Methods

Medline (EBSCO), Web of Science, CINAHL (EBSCO), ERIC, Google Scholar, and Scopus were searched using key terms including health students, health academics, AI, and higher education. Quantitative and qualitative studies published in the last seven years were reviewed. JBI SUMARI was used to facilitate study selection, data extraction, and quality assessment of included articles. Thematic and descriptive data analyses were used to retrieve data. This systematic review has been registered in PROSPERO (CRD42023448005).

Results

Twelve studies, including seven quantitative and five mixed‐method studies, provided novel insights into health students’ perceptions of using AI in health education or practice. Quantitative findings reported significant variations in attitudes and literacy levels regarding AI across different disciplines and demographics. Senior students and those with doctoral degrees exhibited more favourable outlooks compared with their less experienced counterparts (p < 0.001). Students intending to pursue careers in research demonstrated greater optimism towards AI adoption than those planning to work in clinical practice (p < 0.001). A review of qualitative data, particularly in the nursing discipline, revealed four themes: limited AI literacy, replacement of health specialties with AI vs. providing support, optimism vs. cautiousness about using AI in practice, and ethical concerns. Only one study explored health academics’ experiences with AI in education, highlighting a gap in the current literature. Students consistently agreed that universities are the best setting for learning about AI technologies in healthcare, which highlights the need to embed AI training into health curricula to prepare future healthcare professionals.

Conclusion and implications for nursing/health policy

This systematic review recommends embedding AI training in health curricula, offering direction for health education providers and curriculum developers responsible for preparing next‐generation healthcare professionals, particularly nurses. Ethical considerations and the future role of AI in healthcare practice remain central concerns to be addressed in both curriculum development and future research. Further research is required to address the implications and cost‐effectiveness of embedding AI training into health curricula.

Keywords: artificial intelligence, health academics, health students, higher education

1. Introduction

Artificial intelligence (AI) refers to the field of science and engineering focused on creating intelligent machines that use algorithms or predefined rules to replicate human cognitive functions, such as learning and problem‐solving (Xu et al. 2021). The role of generative AI, like the use of large language models or image synthesis tools in the field of health education, has been discussed in the literature (Safranek et al. 2023; Abd‐Alrazaq et al. 2023). Healthcare applications, such as clinical decision support systems, are another concept that should be evaluated. In everyday health practice, AI‐enabled systems can improve the accuracy of diagnosis, reliability of data analysis, efficiency of care planning, and consistency of treatment activities for a wide range of diseases (Lee and Yoon 2021; Esmaeilzadeh 2020). In addition, AI‐based technologies have the potential to benefit the healthcare system by providing the best quality patient care, saving time and resources, reducing workload, improving staffing, augmenting roles, and even predicting the risk of diseases (Ahuja 2019). However, there are concerns that benefits may be overstated, and risks underplayed owing to a misunderstanding of AI learning systems (Babic et al. 2021).

The benefits and challenges arising from the rapid uptake of these technologies have important implications for health professionals (Ali et al. 2023). As AI technologies continue to gain prominence within clinical settings, higher education institutions are acknowledging the imperative to prepare health students with requisite skills and knowledge for the effective utilisation of AI tools (Knopp et al. 2023). In response, higher education institutions are formulating curricula that embed core AI concepts and tools across diverse health disciplines (Xu et al. 2024; Jackson et al. 2024). This encompasses a thorough understanding of machine learning, data analytics, and the ethical implications associated with the deployment of AI tools in healthcare settings. Furthermore, several institutions, such as the Duke Institute for Health Innovation and Stanford University, are at the forefront of initiatives that seamlessly integrate AI into health education (Paranjape et al. 2019; Weidener and Fischer 2024). These efforts are realised through collaborative projects, specialised coursework and hands‐on experiences with AI technologies, all geared towards enhancing healthcare services delivery and fostering innovation.

Preparation for professional practice and practice readiness, among other key graduate attributes, are predictors of a successful transition to professional practice. However, the first year of professional practice is already challenging for new health professionals with multiple learning priorities (Murray‐Parahi 2020) who may not be motivated enough or have the available time to learn new skills once in the workforce. Therefore, higher education curricula or pre‐service training is the most likely and appropriate phase of professional education to embed knowledge of AI‐based technologies relevant to specific disciplines. Knowledge of AI technologies will allow health professionals to understand their use and critically examine the benefits and risks before beginning their careers (Meskó and Görög 2020). The integration of AI technologies in health education creates a diverse landscape, with significant differences across disciplines based on their unique needs and challenges (Bajwa et al. 2021). As the sector evolves with technology, it is essential to adopt tailored educational approaches to equip future healthcare professionals with the skills to effectively use AI in their fields (Lambert et al. 2023). This focus not only aims to improve patient‐health outcomes but also addresses the ethical implications of implementing these transformative technologies.

Previous research into AI in health education and practice has focused on educational outcomes (Feigerlova et al. 2025), the use of generative AI in student assessment, and student perceptions of using AI (González‐Calatayud et al. 2021). There is limited research comparing students’ and academics’ knowledge, perceptions, and experiences of AI integration in education and clinical practice. This systematic review aims to provide a unique insight into health students’ and academics’ knowledge, experiences, and perceptions of using AI‐based technologies in education and clinical practice. In doing so, it aims to offer insights from students and academics that can inform future policies and educational strategies. This review will also identify any gaps in the health curriculum specific to AI training.

2. Materials and methods

2.1. Protocol and Registration

The protocol of this systematic review has been registered in PROSPERO (CRD42023448005). To uphold precise and transparent reporting, the research followed the Preferred Reporting Items for Systematic Reviews and Meta‐Analyses (PRISMA) guidelines (Sarkis‐Onofre et al. 2021).

The focus of this review was exclusively on studies that present the experiences of health students and academics in using AI‐based technologies to obtain a tertiary degree. The current systematic review defines health students and academics as individuals who study or teach in a health discipline, including but not limited to nursing, midwifery, medicine, pharmacology, radiology, or physiotherapy, in a tertiary education institute such as a university or college.

2.2. Search Strategy

An initial MEDLINE (EBSCO) search string using MeSH and key terms was developed in consultation with a research librarian (Table 1). The four key terms for this systematic review were health students, health academics, AI, and higher education. The search strings were applied to the Medline (EBSCO), Web of Science, CINAHL (EBSCO), ERIC, Google Scholar, and Scopus databases. The reference lists of included studies were searched to identify additional related studies. The search was limited to studies published in English since 2017. The last seven years were selected because the literature shows a significant growth of 43% in the application of AI‐based technologies in higher education since 2017 (Zawacki‐Richter et al. 2019).

TABLE 1.

Search conducted in September 2024.

| # | Searches | Records retrieved |
|---|---|---|
| S1 | (MH ‘Students, Nursing’) | 30,488 |
| S2 | (MH ‘Students, Health Occupations’) | 3,360 |
| S3 | (MH ‘Education, Health’) | 34,690 |
| S4 | (MH ‘Education, Health, Graduate’) | 8,404 |
| S5 | (MH ‘Education, Health, Associate’) | 1,779 |
| S6 | (MH ‘Education, Health, Baccalaureate’) | 21,259 |
| S7 | (MH ‘Faculty+’) | 39,771 |
| S8 | AB (Student*(nurs* OR medic*)) OR TI (Student*(nurs* OR medic*)) | 95,460 |
| S9 | AB (Nurs* educat*(graduat* OR associat* OR baccalaureate)) OR TI (Nurs* educat*(graduat* OR associat* OR baccalaureate)) | 2,509 |
| S10 | AB (Faculty (nurs* OR health OR medic* OR pharmacy OR dent*)) OR TI (Faculty (nurs* OR health OR medic* OR pharmacy OR dent*)) | 27,751 |
| S11 | S1 OR S2 OR S3 OR S4 OR S5 OR S6 OR S7 OR S8 OR S9 OR S10 | 195,900 |
| S12 | (MH ‘Artificial Intelligence’) | 38,825 |
| S13 | AB (artificial intelligence OR AI OR A.I.) OR TI (artificial intelligence OR AI OR A.I.) | 42,904 |
| S14 | AB AI‐based technolog* OR TI AI‐based technolog* | 122 |
| S15 | S12 OR S13 OR S14 | 74,386 |
| S16 | (MH ‘Universities’) | 52,378 |
| S17 | (MH ‘Education’) | 21,520 |
| S18 | AB (Universit* OR college* OR higher education OR tertiary education) OR TI (Universit* OR college* OR higher education OR tertiary education) | 639,209 |
| S19 | S16 OR S17 OR S18 | 672,277 |
| S20 | S11 AND S15 AND S19 | 107 |
| S21 | S11 AND S15 AND S19 (Limiters: English; Date of publication: 2017–2024) | 75 |

The full search was undertaken in September 2024.
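The logic by which the numbered search lines in Table 1 are combined can be sketched in code. The Python snippet below is an illustration only, not the search itself: each search line is modelled as a set of record identifiers, and the identifiers are made up for the example. Lines within a concept block are joined with OR (set union), and the three concept blocks are then joined with AND (set intersection), mirroring S11, S15, S19, and S20.

```python
from functools import reduce


def combine_or(*result_sets):
    """Union of result sets, as in 'S1 OR S2 OR ...'."""
    return reduce(set.union, result_sets, set())


def combine_and(*result_sets):
    """Intersection of result sets, as in 'S11 AND S15 AND S19'."""
    return reduce(set.intersection, result_sets)


# Hypothetical record IDs for illustration; NOT real database output.
population_lines = [{1, 2, 3}, {3, 4}, {5}]   # stands in for S1..S10
ai_lines = [{2, 3, 6}, {4, 7}]                # stands in for S12..S14
setting_lines = [{1, 3, 4, 8}, {2}]           # stands in for S16..S18

s11 = combine_or(*population_lines)   # health students / academics block
s15 = combine_or(*ai_lines)           # artificial intelligence block
s19 = combine_or(*setting_lines)      # higher education block
s20 = combine_and(s11, s15, s19)      # records matching all three blocks

print(sorted(s20))
```

Because the blocks are intersected, a record is retained only if it matches at least one term in every block, which is why the combined line retrieves far fewer records than any single block.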

2.3. Eligibility Criteria and Study Selection

Studies of qualitative, quantitative, and mixed‐method design that examined experiences and perspectives of health students and academics using AI‐based technologies were selected for review. Interventional research and non‐original research, including reviews, policies, and guidelines, were excluded, as this review aims to synthesise current literature on students’ and academics’ perceptions rather than to seek to understand the effectiveness of AI or review institutional decision‐making. Studies that are related to using AI‐based technologies in schools, interventions, and training programmes to examine the impact of the technologies were excluded from the analysis phase. This approach has been adopted to mitigate potential biases stemming from expert opinions, interventions, or training programmes supported by AI‐based technology supporters.

Articles retrieved from electronic databases were downloaded and stored in Joanna Briggs Institute (JBI) SUMARI (Adelaide, Australia) (Munn et al. 2019). The Endnote program was used to eliminate duplicated publications. JBI SUMARI was used to facilitate study selection, data extraction, and quality assessment of the included articles. JBI SUMARI was used due to its reputation for quality appraisal of health‐related research and for providing a systematic and structured approach to evaluating and synthesising research (Munn et al. 2019).

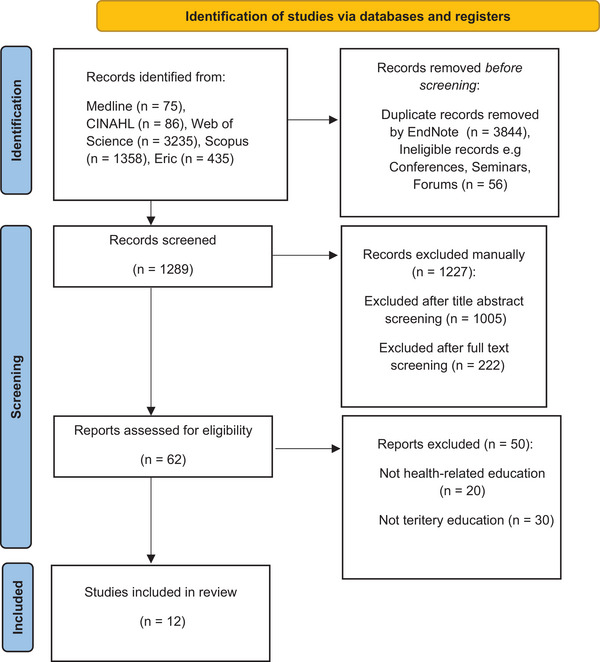

A decision tree was used as a methodological guide for the research team to ensure consistency and transparency in including or excluding articles and to minimise the risk of bias. Two reviewers independently screened the titles and abstracts of the articles against the inclusion and exclusion criteria for this review. Any disagreement was reviewed by the third reviewer and resolved through a team discussion. After completing the title/abstract screening phase, three reviewers conducted full‐text screening against the inclusion and exclusion criteria using the decision tree. Decisions on the inclusion or exclusion of articles were entered in an Excel spreadsheet. In case of discrepancies, the first author reviewed the articles and resolved disagreements through discussions with the reviewers. The selection process was documented with a PRISMA flow chart (Figure 1).

FIGURE 1.

PRISMA 2020 flow diagram for new systematic reviews.

2.4. Quality Assessment and Data Extraction

JBI quality assessment tools, including analytical cross‐sectional study and qualitative research tools, were used to appraise and determine the risk of bias in the included articles. Two reviewers independently appraised all articles. After conducting a thorough quality assessment, 10 out of 12 articles were evaluated as having a low risk of bias in research methodology, and two articles were determined as having a moderate risk of bias. All articles were retained in the review due to the recognition that even lower‐quality articles contribute significant insights into AI‐based technologies in health education and practice (see Table 2).

TABLE 2.

Summary of included studies.

| Title | Author/year/country | Aim | Research design | Participants | Findings | Limitations | Risk of bias |
|---|---|---|---|---|---|---|---|
| Chatbots for future docs: Exploring medical students’ attitudes and knowledge towards artificial intelligence and medical chatbots | Moldt et al./2023 Germany | To determine the existing levels of knowledge of medical students about AI chatbots in particular in the healthcare setting. | Mixed‐method study (quantitative survey and an in‐depth analysis of the collected qualitative data) | 12 medical students from Faculty of Tuebingen/University of Luebeck | | Small number of participants; study was conducted in a short period of time. However, the state of knowledge and perceptions of medical students regarding AI and chatbots in medicine was obtained. | Low |
| Awareness and level of digital literacy among students receiving health‐based education | Aydınlar et al./2024 Turkiye | To determine the digital literacy level and awareness of students receiving health‐based education and to prepare to support the current curriculum with digital literacy courses when necessary. | Survey (questionnaire consisted of 24 queries evaluating digital literacy in seven fields), group interviews, and Delphi | 476 students who have been receiving undergraduate education for at least four years of health‐based education at Acibadem University | | Biomedical engineering and medicine students enter the programmes with high scores, and in this study they were evaluated together with other students, which may have affected participants’ interest, knowledge, and skills in use of AI. | Low |
| Artificial intelligence readiness, perceptions, and educational needs among dental students: A cross‐sectional study | Halat et al./2024 Qatar | Examines dental students’ perceptions, educational needs, and readiness to use AI in health education and healthcare. | Cross‐sectional online survey | 94 dental students | | The cross‐sectional design does not gauge changes in readiness, perceptions, and needs over time; with a single‐centre design, findings cannot be generalised; participants were limited to undergraduate dental students | Low |
| Relationship between individual innovativeness levels and attitudes toward artificial intelligence among nursing and midwifery students | Erciyas et al./2024 Turkiye | To explore the connection between individual innovativeness levels and attitudes towards AI among nursing and midwifery students. | Cross‐sectional, descriptive, and exploratory correlational design | 500 nursing and midwifery students | | Cross‐sectional design of the study | Low |
| Medical students’ knowledge and attitude towards artificial intelligence: An online survey | Al‐Saad/2022 Jordan | To investigate the attitudes of Jordanian medical students regarding AI and machine learning (ML). To estimate the level of knowledge and understanding of the effects of AI on medical students. | Electronic pre‐validated questionnaire (Google Forms) | 900 medical students from 6 universities in Jordan | | Participants were not asked background questions about AI; a possible selection bias may occur as students with more positive attitude and motivation to use AI might complete the survey; random selection of answers from different years could reduce the risk of bias. | Low |
| Digital proficiency: assessing knowledge, attitudes, and skills in digital transformation, health literacy, and artificial intelligence among university nursing students | Abou Hashish/2024 Saudi Arabia | Assess the perceived knowledge, attitudes, and skills of nursing students about digital transformation, and their digital health literacy (DHL) and attitudes towards AI. Investigate the potential correlations of variables. | Descriptive correlational design | 266 female nursing students | | Reliance on a single setting limited generalisability of the findings; self‐reported data increased risk of bias; personal experiences, organisational digital culture, and individual perceptions might affect responses regarding knowledge and attitudes | Low |
| Perceptions of the use of intelligent information access systems in university‐level active learning activities among teachers of biomedical subjects | Aparicio/2018 Spain | To gather biomedical and health sciences teachers’ opinions regarding the benefits of using complementary methods that are supported by intelligent systems, and the benefits of using these methods to the learning activities. | Mixed‐method including open‐ended and close‐ended questions | Bilingual/medical science higher education: 11 teachers (9 female and 2 male) with biomedical science degrees | | Small number of participants; interviewing teachers from different backgrounds might increase the variability of perceptions | Low |
| Machine learning in clinical psychology and psychotherapy education: A mixed‐methods pilot survey of postgraduate students at a Swiss university | Blease/2021 Switzerland | To explore postgraduate clinical psychology students’ familiarity and formal exposure to topics related to artificial intelligence and machine learning (AI/ML) during their studies. | Mixed‐methods online survey; thematic analysis of free‐text data | 37 clinical psychology students enrolled in a two‐year master’s programme at a Swiss university | | Small sample size through a convenience sample of students at a single academic centre; the survey was administered during the COVID‐19 pandemic, and this may have affected willingness to respond; reading AI/ML mental health journal articles might affect their responses | Moderate |
| Investigating students’ perceptions towards artificial intelligence in medical education | Buabbas/2023 Kuwait | To investigate students’ perceptions of AI in medical education. | A cross‐sectional survey was conducted using an online questionnaire | 352 medical students in the Faculty of Medicine at Kuwait University | | A single‐centre study; selection bias based on characteristics of participants who were willing to participate in the study; self‐reported questionnaire; discussion was based on comparing findings of this study with studies from other populations with different culture; lack of understanding of why some students did not receive AI training. | Moderate |
| The importance of introducing artificial intelligence to the medical curriculum assessing practitioners’ perspectives | Dumić‐Čule/2020 Croatia | To assess the attitude about the importance of introducing education on AI in medical schools’ curricula among physicians whose everyday job is significantly impacted by AI. | An anonymous electronic survey (Google Forms, Google LLC) was distributed via email at the national level in Croatia | 144 radiologists and radiology residents practising in primary, secondary, and tertiary health care institutions, both in the private and public sectors | | A voluntary survey risks over‐representing those who are highly motivated and interested in the topic. | Low |
| Assessment of awareness, perceptions, and opinions towards artificial intelligence among healthcare students in Riyadh, Saudi Arabia | Syed/2023 Saudi Arabia | To evaluate awareness, perceptions, and opinions towards AI among pharmacy undergraduate students at King Saud University (KSU). | A cross‐sectional, questionnaire‐based study | 157 pharmacy students at KSU College of Pharmacy | | Single discipline from a single setting of study limited generalisation of findings to other students; an online, self‐administered survey; the cross‐sectional study limited assessment of causal relationships; convenience sampling introduces selection bias. | Low |
| Health care students' perspectives on artificial intelligence: Countrywide survey in Canada | Teng/2022 Canada | To explore and identify gaps in the knowledge that Canadian healthcare students have regarding AI, capture how healthcare students in different fields differ in their knowledge and perspectives on AI, and present student‐identified ways that AI literacy may be incorporated into the healthcare curriculum. | A survey including 15 items; descriptive statistics, nonparametric statistics, and thematic analysis conducted to analyse quantitative and qualitative data | 2,167 entry‐to‐practice medical, nursing, and allied health students from all year levels | | Only medical students from a single setting limited equitable representation; selection bias due to participation of those who are highly motivated and interested. | Low |

Articles were divided between the reviewers for data extraction, and the first author conducted an independent data extraction of all included articles. To ensure consistency and increase the relevance of the extracted data, the reviewers used the JBI template, which included title, author/s, year, country, aim, research design, participants’ characteristics, and main findings.

2.5. Data Analysis

2.5.1. Qualitative Data

A thematic analysis was used to identify codes and generate themes and sub‐themes from qualitative data (Clarke and Braun 2017). The records included for full‐text analysis were divided between the reviewers for coding and creating preliminary themes and sub‐themes. The first author conducted an independent analysis of all records. The codes, statements, and themes were entered into a spreadsheet for further team discussion and agreement. A meta‐aggregative synthesis approach in JBI SUMARI was applied to pool the qualitative data (Lockwood et al. 2015). Through this approach, relevant qualitative data were summarised and combined to demonstrate a collective meaning. All unclear and doubtful findings were either excluded from the synthesis or resolved through discussion between all reviewers.

2.5.2. Quantitative Data

Descriptive data analysis was undertaken to retrieve data about the various types of AI‐based technologies that are used by students and academics in health education and practice. The analysis also sought to determine to what extent AI‐based technologies are being used.

3. Results

The systematic search of the six databases and manual searching identified 5,029 articles, with 1,185 articles remaining after duplicates were removed in EndNote. After removing records from conferences, seminars, and forums (n = 56), 1,129 records were imported to JBI SUMARI for screening. After title and abstract screening, 62 articles remained for full‐text screening, of which 12 articles met the review criteria for analysis (Figure 1). Of the 12 included articles, 7 were quantitative and 5 were mixed‐methods studies (Table 2).
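The screening counts in the paragraph above can be checked with simple arithmetic. The short Python sketch below uses only the figures stated in the text; the derived values (duplicates removed and records excluded at each screening stage) are calculated here for illustration, are not reported in the text itself, and assume that no other removal steps took place between the stated stages.

```python
# Counts reported in the Results text.
identified = 5029            # records from six databases plus manual searching
after_deduplication = 1185   # records remaining after duplicate removal
non_article_records = 56     # conference, seminar, and forum records removed
screened = 1129              # records imported for title/abstract screening
full_text = 62               # articles assessed in full text
included = 12                # articles included in the review

# The reported figures should be internally consistent.
assert after_deduplication - non_article_records == screened

# Derived values (assumption: no other removal steps between stages).
duplicates_removed = identified - after_deduplication
excluded_title_abstract = screened - full_text
excluded_full_text = full_text - included

print(duplicates_removed, excluded_title_abstract, excluded_full_text)
```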

3.1. Characteristics of Included Studies and Participants

Twelve studies, including seven quantitative and five mixed‐method designs, were reviewed in the current study. The articles included a variety of health disciplines in higher education such as nursing, midwifery, medicine, dentistry, allied health, and psychology in different countries.

Across 32 university settings and one hospital, 4,666 participants were included in the current study, comprising 4,655 students and 11 university teachers. Among the students, 4,474 were undergraduate students, 37 were master's students, and 144 were medical residents undertaking their clinical education in a hospital setting. The studies were conducted in Jordan, Spain, Croatia, Saudi Arabia, Kuwait, Canada, Turkiye, Qatar, Germany, and Switzerland.

3.2. Quantitative Descriptive Data

In a study by Teng et al. (2022) of healthcare students enrolled in entry‐to‐practice programmes in Canada, the authors found a positive attitude towards using AI‐based technologies in health education and practice, with no significant difference between ages, genders, or regions of study. However, years of study and level of education significantly affected participants’ attitudes (Teng et al. 2022). For instance, first‐year students had less positive outlooks towards AI‐based technologies than advanced students (p < 0.001). Students who had completed high school only had less positive attitudes towards AI‐based technologies than those who had already completed a tertiary degree (p = 0.001) or held a PhD (p = 0.004). Participants who intended to pursue a research or business career after graduation were more positive towards using AI technologies in education than those intending to work in the clinical field (p < 0.001) (Teng et al. 2022).

The level of AI literacy among health students was examined in the reviewed studies. Aydinlar et al. (2024) conducted a survey study in a health faculty in Turkiye and reported lower AI literacy and familiarity with new health‐related technologies among nursing students than among those from the biomedical engineering and medicine disciplines. In another study in Turkiye, 94% of nursing and midwifery students had not received any AI education in their programme (Erciyas et al. 2024). Similarly, Teng et al. (2022) identified gaps in Canadian health students’ knowledge of AI and captured how students in different fields differ in their knowledge and perspectives on AI. In contrast, Abou Hashish and Alnajjar (2024) reported good knowledge of and positive attitudes towards AI technologies among female nursing students in Saudi Arabia. Jordanian medical students in a study by Al Saad et al. (2022) reported some familiarity with AI technologies, but not enough to work confidently with AI in clinical practice. Consistent with findings from a study of pharmacy students in Saudi Arabia (Syed et al. 2023), Jordanian medical students believed AI literacy can improve their clinical performance when they commence practice (Al Saad et al. 2022). In the study by Teng et al. (2022) of a variety of health students in Canada, about half of the respondents acknowledged low literacy of AI technologies (1107/2167, 51.08%) and nearly a third had incorrect knowledge of them (676/2167, 31.2%), while they strongly believed that AI technology would affect their careers within the coming decade. Pharmacy students in Saudi Arabia perceived AI‐based technologies as helpful in reducing errors in medical practice (75.1%), increasing patients’ access to healthcare services (63%), facilitating healthcare providers’ access to information (77%), and supporting more accurate care plans and decisions (82.8%) (Syed et al. 2023).
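The proportions quoted above from Teng et al. (2022) follow directly from the stated counts. As a minimal sketch, the Python snippet below recomputes the two percentages from the numerators and denominator given in the text; the helper name `as_percent` is introduced here purely for illustration.

```python
def as_percent(count, total, digits=2):
    """Return count/total as a percentage rounded to `digits` decimals."""
    return round(100 * count / total, digits)


total_respondents = 2167     # respondents in Teng et al. (2022), as stated
low_literacy = 1107          # acknowledged low literacy of AI technologies
incorrect_knowledge = 676    # had incorrect knowledge of AI technologies

low_literacy_pct = as_percent(low_literacy, total_respondents)
incorrect_pct = as_percent(incorrect_knowledge, total_respondents, digits=1)

print(low_literacy_pct, incorrect_pct)
```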

In six of the 12 studies with quantitative content, respondents, regardless of their health discipline, believed that university is the best place for learning about health‐related AI technologies (p < 0.001) (Teng et al. 2022; Blease et al. 2021; Dumić‐Čule et al. 2020; Buabbas et al. 2023; Syed et al. 2023; Al Saad et al. 2022); these studies also presented student‐identified ways in which AI literacy may be incorporated into the health curriculum.

Those who had some knowledge of AI through taking private lessons outside university believed that incorporating AI courses into their curricula would improve the learning process and help students approach clinical practice with more confidence (Buabbas et al. 2023). Students in these studies were willing to have AI courses in their curriculum; however, the ethical implications of using AI in healthcare practice were a significant concern for some respondents (p < 0.001) (Teng et al. 2022; Syed et al. 2023; Moldt et al. 2023; Aydinlar et al. 2024). Medical students in Saudi Arabia and medical and midwifery students in Canada expressed concerns about the violation of confidentiality and the loss of humanistic aspects of their profession when using AI technologies in healthcare. Therefore, they requested that AI courses be integrated into their curricula along with ethics training (Teng et al. 2022; Syed et al. 2023).

The replacement of health professions by AI‐based technologies was another concern raised by some respondents in the reviewed studies (Buabbas et al. 2023; Al Saad et al. 2022). While more than half of the respondents in both studies (68% and 69%, p < 0.05) agreed that some health specialties will be replaced by AI in the future, they disagreed with the statement that AI will replace physicians’ role (78% and 65%) (Buabbas et al. 2023; Al Saad et al. 2022). This finding is in line with the views of the 83% of medical students in Germany who did not express any concern about being replaced by AI in the future (Moldt et al. 2023).

Among the 12 included studies, only one article addressed health academics’ experience of using AI‐based technologies in health education. All lecturers (n = 11) reported using AI‐based technologies to facilitate active learning through designing lecture content and running class activities (Aparicio et al. 2018).

3.3. Qualitative Findings

In this review, five studies included qualitative data. Four of them sought the experiences and perceptions of health students regarding AI‐based technologies in health education and practice: one included 37 clinical psychology students enrolled in a two‐year master’s programme at a Swiss university, one included 12 medical students in Germany, one included 476 health students in Turkiye, and one included 2,167 entry‐to‐practice medical, nursing, and allied health students in Canada. One study presented 11 biomedical and health sciences teachers’ opinions regarding the benefits of using AI‐based technologies in teaching. Analysis of the qualitative data revealed four main themes that highlight the most common insights of health students and academics into using AI in education, clinical practice, and learning.

3.4. Limited AI Literacy

A limited understanding of the concept of AI and its application in health education and clinical practice was evident in the included articles. A review of the students’ responses showed that most respondents did not have basic knowledge of AI‐based technologies, although they were willing to be trained while at university (Teng et al. 2022; Blease et al. 2021; Aydinlar et al. 2024).

I have not learnt much of AI and how it can be used in nursing, this survey has sparked my interest, and it is something I am going to read up on. (Nursing student)

In addition to their interest in learning about AI and its application in health education, students were also keen to understand how AI would help them with their clinical practice, either as part of their education programme or in their future professional practice (Teng et al. 2022; Blease et al. 2021).

I think AI may play a large role in training future clinicians, but not in clinical work or practice. (Speech language pathology student)

While some medicine students expressed concern about having AI training integrated into their intensive curriculum, other students expressed disappointment that AI training was missing from their higher degree programme (Teng et al. 2022).

It scares me that MD students/healthcare professionals on top of everything else will one day have to learn AI, this is similar to learning statistics to be able to do research properly. It is simply not our field and expertise, and it stresses me out. (Medicine student)

I always hear about it, but I don't see a lot of opportunities to learn about it. (Pharmacy student)

3.5. Replacement of Health Specialties With AI vs Providing Support

Students from various disciplines raised concerns about the development of AI‐based technologies. The literature shows that students, particularly those who intended to work in a clinical field, were worried about being replaced by AI‐based technologies. They expressed their concerns in two ways: the first related to limited job opportunities for health specialties in the future due to the development of AI‐based technologies, and the second to the patient–health professional relationship (Teng et al. 2022; Blease et al. 2021). For example, a midwifery student stated, ‘It does not have a place in midwifery, you cannot teach empathy and comfort measures for a woman in labour’. Some psychology students also doubted the capacity of AI to replace health professionals, considering the patient–clinician relationship the core feature of psychotherapy (Blease et al. 2021).

But I think the key in psychotherapy is the relationship between the patient and therapist. [It's] a work [which] is only effective when patients feel and experience real contact to the therapist a human being. I cannot imagine that AI can substitute us rather I think [it'll] be a support‐tool for us. (Psychology student)

However, in the same study, other students believed that using AI‐based technologies could save practitioners time and improve the patient–practitioner relationship in clinical practice: ‘I rather believe that it would help psychologists to have enough time in order to build good relationships with their clients’. Similarly, medical students in Germany highlighted the importance of AI in easing administrative tasks and developing health‐related research. However, they believed that the technical implementation of AI is currently limited and requires further development (Moldt et al. 2023).

3.6. Optimism vs Cautiousness About Use of AI in Health Education and Practice

Medical students in a study by Moldt et al. (2023) in Germany expressed optimism about using AI as a therapy tool that reduces barriers such as patients’ shyness about disclosing personal information. However, it was also thought that the use of AI may impair patient–doctor interaction.

Medicine, midwifery, and social work students frequently expressed positive views about the use of AI‐based technologies in their clinical practice (Teng et al. 2022). ‘[AI] will greatly improve the practice of medicine to be more efficient and reliable’ and ‘[it] could prevent mistakes and increase efficiency’ and ‘It may be inevitable for AI to be involved in my field to some degree in the future.’ (Medical and Midwifery students)

Other students from the same health disciplines agreed on the usefulness of AI in health practice; however, they believed that it must be used with caution.

‘If it helps patient outcomes, I'm in’ and ‘If AI is used for enhancement of the field rather than replacement of skilled workers then my comfort increases, however I am apprehensive of the potential misuse of the technology and the risk of job loss to physicians’. (Medicine student)

Similar to medicine and midwifery students, a nursing student expressed caution about using AI in their practice: ‘I think it can have great impacts but still need to be monitored for safety’ (Teng et al. 2022).

3.7. Ethical Concerns in Using AI in Health Education and Practice

While studies reflected a general willingness of health students and academics to use AI in their education and clinical practice, some ethical considerations must be addressed. For instance, despite their strong intention to use AI, nursing students were still worried about patients’ rights and the risk of patients’ identities being disclosed through the use of AI: ‘AI can pose huge confidentiality issues for patient healthcare records’.

Psychology students also expressed their concern about the disclosure of patients’ information when using AI in their clinical practice; ‘Of course, security and data protection are a crucial issue in the field of AI especially when it comes to sensitive information like mental health’ (Blease et al. 2021).

3.8. Curriculum Development

This review found a shared intention to integrate AI training into health programme curricula. Considering the significant impacts of AI‐based technologies on different health professions, including but not limited to providing quality patient care, saving time and resources, reducing workload, improving staffing, and augmenting roles (Ahuja 2019), introducing AI courses into the health curriculum would be beneficial. Healthcare students from various disciplines such as nursing, midwifery, medicine, psychology, and allied health believed that early exposure to AI training at university is an important step to facilitate and ease the use of health‐related technologies in graduates’ future careers (Blease et al. 2021; Teng et al. 2022; Syed et al. 2023).

Interestingly, even those who opposed the development of AI in their practical fields (235/2167, 10.84%) agreed on the importance of having health graduates trained in AI‐based technologies. However, the authors did not clarify the reason for this view (Teng et al. 2022).

Radiology residents (89%) overwhelmingly believed that AI training must be mandatory in medical curricula due to its dynamic nature and being affected by ongoing technological innovations (Dumić‐Čule et al. 2020).

From the academic perspective, biomedical lecturers in a study in Spain expressed their satisfaction with using intelligent systems such as BioAnnote, CLEiM, and MedCMap in the development of active learning for health students from bilingual backgrounds. Positive aspects of the systems included their usefulness as a medical glossary, their usefulness as an assessment system, their value as a bilingual aid to teachers, and their reliability (Aparicio et al. 2018). The lecturers found the systems useful; however, they were initially unfamiliar with AI‐related terms and unaware that they were using intelligent systems in their teaching. They realised that they were using AI‐based technologies in teaching only after the researchers defined and clarified the terms. They agreed to use AI‐based technologies in health higher education and integrate them as a mandatory part of the curricula (Aparicio et al. 2018).

4. Discussion

This systematic review revealed the implications of AI‐based technologies in clinical practice. Considering the focus of this review on the perception of health professionals or emerging health professionals of using AI in their clinical practice during study or after completion of their course of study, articles that addressed generative AI like ChatGPT were excluded from this review. The qualitative findings supported the quantitative findings, and both showed that health students believed that they lack AI literacy, which raises the need to learn about AI (Teng et al. 2022; Blease et al. 2021; Al Saad et al. 2022).

Postgraduate students, including those pursuing PhD degrees, shared a common concern about not being trained and prepared to use the technologies in their clinical placements and in clinical practice after graduation (Teng et al. 2022; Blease et al. 2021). The studies revealed a need for comprehensive AI education in health science programmes, similar to that offered in fields such as computer and engineering sciences (Abichandani et al. 2023). A study on radiology residents by Hu et al. (2023) revealed that AI training workshops significantly improved the knowledge and confidence of the participants about using AI in their clinical practice. Consistent with six studies included in this review, the participants expressed their intention to continue AI education and to have the training become part of the radiology residency curriculum (Hu et al. 2023). However, medicine students in the Teng et al. (2022) study expressed apprehension regarding the additional burden that acquiring AI knowledge might impose on them, particularly due to time constraints and the demands of their existing workload or studies. This reveals a complex balance between recognising the importance of AI literacy and the practical challenges students face in integrating such learning into their already busy academic schedules. Similarly, Wood et al. (2021) reported degrees of concern regarding the integration of AI education into medical curricula due to time restrictions in delivering the training over the whole course of study. These concerns should be considered when examining the effectiveness of AI education as an intra‐curricular programme, as well as the willingness of health students and academics to integrate AI education into the curriculum for different health disciplines.

This review found that students exhibit divergent perspectives on the use of AI in clinical settings. Some are enthusiastic about its implementation, expressing optimism about its potential to prevent mistakes and enhance efficiency (Teng et al. 2022). In contrast, another group of students voiced apprehension about the adoption of AI, citing concerns related to potential negative impacts on employment and sociological aspects (Buabbas et al. 2023; Al Saad et al. 2022). These sentiments might stem from a limited comprehension of AI, but they should not be dismissed outright. In contrast to the findings of this review, Groeneveld et al. (2024) did not suggest any concern about nurses being replaced by AI‐based technology. Instead, they suggested the novel idea of a ‘new digital colleague’ that complements nurses’ human qualities and integrates into nurses’ workflow. On the other hand, the study presented some potential risks of integrating AI in nursing practice, such as decreased nurse–patient contact, poor patient empowerment, and lack of transparency, which align with the current review and other literature. Additional research is needed to explore and address these concerns more comprehensively (Sit et al. 2020; Groeneveld et al. 2024).

The findings of the current review demonstrate that health students are concerned about ethical issues related to using AI in the clinical space. This is more related to patients’ confidentiality and data management and storage (Teng et al. 2022; Syed et al. 2023). Students expressed concern about the protection of sensitive mental health data, particularly for vulnerable patients (Blease et al. 2021). Robust data encryption and access control mechanisms should be implemented, and clear guidelines and protocols for handling sensitive data must be established to underscore the importance of privacy (Farhud and Zokaei 2021). Ethics training should be integrated into the curriculum for health students and professionals, specifically addressing the unique ethical challenges associated with AI in clinical settings. Ongoing education ensures that individuals are kept informed about evolving ethical standards and best practices in the dynamic landscape of AI in healthcare (Kooli and Al Muftah 2022).

In addition, Teng et al. (2022) identified that students’ self‐rated understanding of the ethical implications of AI differed by profession, with students studying physiotherapy and dentistry reporting greater awareness than students in medicine, nursing, and midwifery. Health students should be educated about their professional ethical obligations and how to consider these when using AI‐based technologies. Registered healthcare practitioners are bound by professional codes and principles, which vary by profession. For example, nurses are bound by the ICN code of ethics (International Council of Nurses 2021). This code outlines a nurse's obligations in relation to ethical principles such as maintaining the privacy and confidentiality of people in their care (International Council of Nurses 2021). Further research into the curriculum design of healthcare courses could identify gaps in knowledge regarding ethical principles and the use of AI in health practice.

Pilot studies have shown positive outcomes of AI integration in medical training programmes, including the perception of impact, increased efficiency, and reduced clinician workload (Banerjee et al. 2021). AI chatbots have become popular in curriculum design, providing feedback in a scalable way (Neumann et al. 2021), while AI‐supported mentors provide immediate and democratised access to expert guidance but may raise ethical challenges including privacy and confidentiality (Köbis and Mehner 2021). This highlights that there needs to be more stringent regulatory controls and education around ethical concerns of using AI‐based technologies in healthcare in the curriculum. Also, discussion around the regulatory frameworks related to the use of AI‐based technologies would be beneficial according to the health legislation of each state or country (Pesapane et al. 2021).

In this review, only one study reflected directly on academics’ perception of using AI‐based technologies in health higher education (Aparicio et al. 2018). Considering the importance of AI in teaching and learning development, the study emphasised the integration of AI training into the curriculum. The adoption of AI in curriculum development in health teaching and learning has the potential to revolutionise education by providing personalised and dynamic learning experiences (Pedro et al. 2019). The integration of AI into tertiary health education presents a dual landscape of advantages and drawbacks. On the positive side, AI can enhance the curriculum by providing personalised learning experiences, and tailoring content to individual student needs and learning styles (Dave and Patel 2023). AI algorithms can analyse data on student performance to identify gaps in knowledge and suggest targeted interventions. Additionally, AI can assist in keeping curriculum content up to date by continuously monitoring advancements in health education and recommending relevant updates (Cardona et al. 2023).

While interest in, development of, and potential for AI in professional health education and practice continue, evidence of measurable education and clinical outcomes is limited and lacks methodological rigour (Joshi et al. 2025; Feigerlova et al. 2025). Cost‐effectiveness analyses and sustainability are also important metrics. Data scaling and sampling, training data quality, and algorithm selection could help reduce computational costs and improve the efficiency of AI training programmes (Kim and Basu 2021).

5. Conclusion and Implications for Nursing and Health Policy

The findings of the current systematic review and the accompanying discussion revealed a gap in health education with respect to AI‐based technologies. This study recommends embedding AI training programmes in the health curriculum, offering direction for health educators and curriculum developers responsible for preparing next‐generation healthcare professionals, in particular nursing graduates. The training should give health students confidence in the concepts, implications, challenges, and ethical considerations of AI‐based technologies.

In addition, this systematic review found a gap in the literature regarding research about AI education in health‐related courses. Since there is a scarcity of studies addressing AI training in health education in terms of its implication and cost‐effectiveness, further qualitative and quantitative research must be conducted on both health students and academics.

Author Contributions

SS conducted a systematic search through the databases with the consultation of a health librarian. SS and PMP screened titles and abstracts of the articles independently regarding inclusion and exclusion criteria for this review. Any disagreement was reviewed by the third reviewer (XL) and resolved through a team discussion. Three reviewers (XL, PMP, and SM) conducted full‐text screening against the inclusion and exclusion criteria. In case of discrepancies, SS reviewed the articles and resolved disagreements through discussions with the reviewers. All authors (SS, PMP, EA, SM, XL) contributed to quality assessment, data extraction, data analysis and drafting the manuscript. SS supervised and led the project. Study conception/design: SS. Data collection/analysis: SS, PMP, XL, and SM. Drafting of manuscript: SS, PMP, XL, SM, and EA. Critical revisions: SS, EA, and XL. Administrative/technical/material support: SS. Study supervision: SS.

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

Open access publishing facilitated by Western Sydney University, as part of the Wiley ‐ Western Sydney University agreement via the Council of Australian University Librarians.

Funding: The authors received no financial support for the research and authorship of this article. Open access was enabled with financial support from Western Sydney University.

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article and/or its supplementary materials.

References

- Abd‐Alrazaq, A., AlSaad R., Alhuwail D., et al. 2023. “Large Language Models in Medical Education: Opportunities, Challenges, and Future Directions.” JMIR Medical Education 9, no. 1: e48291.

- Abichandani, P., Iaboni C., Lobo D., and Kelly T. 2023. “Artificial Intelligence and Computer Vision Education: Codifying Student Learning Gains and Attitudes.” Computers and Education: Artificial Intelligence 5: 100159.

- Abou Hashish, E. A., and Alnajjar H. 2024. “Digital Proficiency: Assessing Knowledge, Attitudes, and Skills in Digital Transformation, Health Literacy, and Artificial Intelligence Among University Nursing Students.” BMC Medical Education 24, no. 1: 508.

- Ahuja, A. S. 2019. “The Impact of Artificial Intelligence in Medicine on the Future Role of the Physician.” PeerJ 7: e7702. 10.7717/peerj.7702.

- Al Saad, M. M., Shehadeh A., Alanazi S., et al. 2022. “Medical Students' Knowledge and Attitude towards Artificial Intelligence: An Online Survey.” The Open Public Health Journal 15, no. 1.

- Ali, O., Abdelbaki W., Shrestha A., Elbasi E., Alryalat M. A. A., and Dwivedi Y. K. 2023. “A Systematic Literature Review of Artificial Intelligence in the Healthcare Sector: Benefits, Challenges, Methodologies, and Functionalities.” Journal of Innovation & Knowledge 8, no. 1: 100333. 10.1016/j.jik.2023.100333.

- Aparicio, F., Morales‐Botello M. L., Rubio M., et al. 2018. “Perceptions of the Use of Intelligent Information Access Systems in University Level Active Learning Activities Among Teachers of Biomedical Subjects.” International Journal of Medical Informatics 112: 21–33.

- Aydınlar, A., Mavi A., Kütükçü E., et al. 2024. “Awareness and Level of Digital Literacy Among Students Receiving Health‐Based Education.” BMC Medical Education 24, no. 1: 38.

- Babic, B., Gerke S., Evgeniou T., and Cohen I. G. 2021. “Beware Explanations From AI in Health Care.” Science 373, no. 6552: 284–286. 10.1126/science.abg1834.

- Bajwa, J., Munir U., Nori A., and Williams B. 2021. “Artificial Intelligence in Healthcare: Transforming the Practice of Medicine.” Future Healthcare Journal 8, no. 2: e188–e194.

- Banerjee, M., Chiew D., Patel K. T., et al. 2021. “The Impact of Artificial Intelligence on Clinical Education: Perceptions of Postgraduate Trainee Doctors in London (UK) and Recommendations for Trainers.” BMC Medical Education 21: 429. 10.1186/s12909-021-02870-x.

- Blease, C., Kharko A., Annoni M., Gaab J., and Locher C. 2021. “Machine Learning in Clinical Psychology and Psychotherapy Education: A Mixed Methods Pilot Survey of Postgraduate Students at a Swiss University.” Frontiers in Public Health 9: 273.

- Buabbas, A. J., Miskin B., Alnaqi A. A., et al. 2023. “Investigating Students' Perceptions Towards Artificial Intelligence in Medical Education.” Healthcare 11, no. 9: 1298.

- Cardona, M. A., Rodríguez R. J., and Ishmael K. 2023. Artificial Intelligence and the Future of Teaching and Learning. https://www2.ed.gov/documents/ai-report/ai-report.pdf

- Clarke, V., and Braun V. 2017. “Thematic Analysis.” The Journal of Positive Psychology 12, no. 3: 297–298.

- Dave, M., and Patel N. 2023. “Artificial Intelligence in Healthcare and Education.” British Dental Journal 234, no. 10: 761–764.

- Dumić‐Čule, I., Orešković T., Brkljačić B., Kujundžić Tiljak M., and Orešković S. 2020. “The Importance of Introducing Artificial Intelligence to the Medical Curriculum: Assessing Practitioners' Perspectives.” Croatian Medical Journal 61, no. 5: 457–464.

- Educational , Scientific and Cultural Organization. https://repositorio.minedu.gob.pe/bitstream/handle/20.500.12799/6533/Artificial%20intelligence%20in%20education%20challenges%20and%20opportunities%20for%20sustainable%20development.pdf.

- Erciyas, Ş. K., Ekrem E. C., and Edis E. K. 2024. “Relationship Between Individual Innovativeness Levels and Attitudes Toward Artificial Intelligence Among Nursing and Midwifery Students.” CIN: Computers, Informatics, Nursing 10–1097.

- Esmaeilzadeh, P. 2020. “Use of AI‐Based Tools for Healthcare Purposes: A Survey Study From Consumers' Perspectives.” BMC Medical Informatics and Decision Making 20, no. 1: 1–19.

- Farhud, D. D., and Zokaei S. 2021. “Ethical Issues of Artificial Intelligence in Medicine and Healthcare.” Iranian Journal of Public Health 50, no. 11: i.

- Feigerlova, E., Hani H., and Hothersall‐Davies E. 2025. “A Systematic Review of the Impact of Artificial Intelligence on Educational Outcomes in Health Professions Education.” BMC Medical Education 25, no. 1: 129. 10.1186/s12909-025-06719-5.

- González‐Calatayud, V., Prendes‐Espinosa P., and Roig‐Vila R. 2021. “Artificial Intelligence for Student Assessment: A Systematic Review.” Applied Sciences 11, no. 12: 5467. 10.3390/app11125467.

- Groeneveld, S., Bin Noon G., den Ouden M. E., et al. 2024. “The Cooperation Between Nurses and a New Digital Colleague “AI‐Driven Lifestyle Monitoring” in Long‐Term Care for Older Adults.” JMIR Nursing 7: e56474.

- Halat, D., Shami R., Daud A., Sami W., Soltani A., and Malki A. 2024. “Artificial Intelligence Readiness, Perceptions, and Educational Needs Among Dental Students: A Cross‐Sectional Study.” Clinical and Experimental Dental Research 10, no. 4: e925.

- Hu, R., Rizwan A., Hu Z., Li T., Chung A. D., and Kwan B. Y. 2023. “An Artificial Intelligence Training Workshop for Diagnostic Radiology Residents.” Radiology: Artificial Intelligence 5, no. 2: e220170.

- International Council of Nurses. 2021. The ICN Code of Ethics for Nurses. https://www.icn.ch/sites/default/files/inline-files/ICN_Code-of-Ethics_EN_Web.pdf.

- Jackson, P., Ponath Sukumaran G., Babu C., et al. 2024. “Artificial Intelligence in Medical Education‐perception Among Medical Students.” BMC Medical Education 24, no. 1: 804.

- Joshi, S., Urteaga I., van Amsterdam W. A. C., et al. 2025. “AI as an Intervention: Improving Clinical Outcomes Relies on a Causal Approach to AI Development and Validation.” Journal of the American Medical Informatics Association. 10.1093/jamia/ocae301.

- Kim, D. D., and Basu A. 2021. “How Does Cost‐effectiveness Analysis Inform Health Care Decisions?” AMA Journal of Ethics 23, no. 8: E639–647. 10.1001/amajethics.2021.639.

- Knopp, M. I., Warm E. J., Weber D., et al. 2023. “AI‐enabled Medical Education: Threads of Change, Promising Futures, and Risky Realities Across Four Potential Future Worlds.” JMIR Medical Education 9: e50373.

- Köbis, L., and Mehner C. 2021. “Ethical Questions Raised by AI‐Supported Mentoring in Higher Education.” Frontiers in Artificial Intelligence 4. 10.3389/frai.2021.624050.

- Kooli, C., and Al Muftah H. 2022. “Artificial Intelligence in Healthcare: A Comprehensive Review of Its Ethical Concerns.” Technological Sustainability 1, no. 2: 121–131.

- Lambert, S. I., Madi M., Sopka S., et al. 2023. “An Integrative Review on the Acceptance of Artificial Intelligence Among Healthcare Professionals in Hospitals.” NPJ Digital Medicine 6, no. 1: 111.

- Lee, D., and Yoon S. N. 2021. “Application of Artificial Intelligence‐based Technologies in the Healthcare Industry: Opportunities and Challenges.” International Journal of Environmental Research and Public Health 18, no. 1: 271.

- Lockwood, C., Munn Z., and Porritt K. 2015. “Qualitative Research Synthesis: Methodological Guidance for Systematic Reviewers Utilizing Meta‐Aggregation.” JBI Evidence Implementation 13, no. 3: 179–187.

- Meskó, B., and Görög M. 2020. “A Short Guide for Medical Professionals in the Era of Artificial Intelligence.” NPJ Digital Medicine 3, no. 1: 126.

- Moldt, J. A., Festl‐Wietek T., Madany Mamlouk A., Nieselt K., Fuhl W., and Herrmann‐Werner A. 2023. “Chatbots for Future Docs: Exploring Medical Students' Attitudes and Knowledge Towards Artificial Intelligence and Medical Chatbots.” Medical Education Online 28, no. 1: 2182659.

- Munn, Z., Aromataris E., Tufanaru C., et al. 2019. “The Development of Software to Support Multiple Systematic Review Types: The Joanna Briggs Institute System for the Unified Management, Assessment and Review of Information (JBI SUMARI).” JBI Evidence Implementation 17, no. 1: 36–43.

- Murray‐Parahi P. G. 2020. Preparing Nurses for Primary Health Care Roles: Barriers and Enablers to the Successful Transition of Next‐generation Nurses into the Primary Health Care Environment [PhD Thesis, University of Technology Sydney]. OPUS. Sydney Australia. https://opus.lib.uts.edu.au/bitstream/10453/147360/2/02whole.pdf.

- Neumann, A. T., Arndt T., Köbis L., et al. 2021. “Chatbots as a Tool to Scale Mentoring Processes: Individually Supporting Self‐Study in Higher Education.” Frontiers in Artificial Intelligence 4. 10.3389/frai.2021.668220.

- Paranjape, K., Schinkel M., Panday R. N., Car J., and Nanayakkara P. 2019. “Introducing Artificial Intelligence Training in Medical Education.” JMIR Medical Education 5, no. 2: e16048.

- Pedro, F. , Subosa M., Rivas A., and Valverde P. 2019. Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development. The United Nations.

- Pesapane, F., Bracchi D. A., Mulligan J. F., et al. 2021. “Legal and Regulatory Framework for AI Solutions in Healthcare in EU, US, China, and Russia: New Scenarios After a Pandemic.” Radiation 1, no. 4: 261–276.

- Safranek, C. W., Sidamon‐Eristoff A. E., Gilson A., and Chartash D. 2023. “The Role of Large Language Models in Medical Education: Applications and Implications.” JMIR Publications 9: e50945.

- Sarkis‐Onofre, R., Catalá‐López F., Aromataris E., and Lockwood C. 2021. “How to Properly Use the PRISMA Statement.” Systematic Reviews 10, no. 1: 1–3.

- Sit, C., Srinivasan R., Amlani A., et al. 2020. “Attitudes and Perceptions of UK Medical Students Towards Artificial Intelligence and Radiology: A Multicentre Survey.” Insights Into Imaging 11: 1–6.

- Syed, W., Basil M., and Al‐Rawi A. 2023. “Assessment of Awareness, Perceptions, and Opinions Towards Artificial Intelligence Among Healthcare Students in Riyadh, Saudi Arabia.” Medicina 59, no. 5: 828.

- Teng, M., Singla R., Yau O., et al. 2022. “Health Care Students' Perspectives on Artificial Intelligence: Countrywide Survey in Canada.” JMIR Medical Education 8, no. 1: e33390.

- Weidener, L., and Fischer M. 2024. “Artificial Intelligence in Medicine: Cross‐Sectional Study Among Medical Students on Application, Education, and Ethical Aspects.” JMIR Medical Education 10, no. 1: e51247.

- Wood, E. A., Ange B. L., and Miller D. D. 2021. “Are We Ready to Integrate Artificial Intelligence Literacy Into Medical School Curriculum: Students and Faculty Survey.” Journal of Medical Education and Curricular Development 8: 23821205211024078.

- Xu, Y., et al. 2021. “Artificial Intelligence: A Powerful Paradigm for Scientific Research.” The Innovation 2, no. 4: 100179. 10.1016/j.xinn.2021.100179.

- Xu, Y., Jiang Z., Ting D. S. W., et al. 2024. “Medical Education and Physician Training in the Era of Artificial Intelligence.” Singapore Medical Journal 65, no. 3: 159–166.

- Zawacki‐Richter, O., Marín V. I., Bond M., and Gouverneur F. 2019. “Systematic Review of Research on Artificial Intelligence Applications in Higher Education: Where Are the Educators?” International Journal of Educational Technology in Higher Education 16, no. 1: 1–27.
