Abstract

In the information age, novel hardware solutions are urgently needed to efficiently store and process increasing amounts of data. In this scenario, memory devices must evolve significantly to provide the necessary bit capacity, performance, and energy efficiency needed in computation. In particular, novel computing paradigms have emerged to minimize data movement, which is known to contribute the largest amount of energy consumption in conventional computing systems based on the von Neumann architecture. In-memory computing (IMC) provides a means to compute within data with minimum data movement and excellent energy efficiency and performance. To meet these goals, resistive-switching random-access memory (RRAM) appears to be an ideal candidate thanks to its excellent scalability and nonvolatile storage. However, circuit implementations of modern artificial intelligence (AI) models require highly specialized device properties that need careful RRAM device engineering. This work addresses the RRAM concept from materials, device, circuit, and application viewpoints, focusing on the physical device properties and the requirements for storage and computing applications. Memory applications, such as embedded nonvolatile memory (eNVM) in novel microcontroller units (MCUs) and storage class memory (SCM), are highlighted. Applications in IMC, such as hardware accelerators of neural networks, data query, and algebra functions, are illustrated by referring to the reported demonstrators with RRAM technology, evidencing the remaining challenges for the development of a low-power, sustainable AI.

1. Introduction

According to the von Neumann architecture, a computer consists of two essential parts, namely, the central processing unit (CPU) and the memory. The latter must support both instructions and data for the computation, which is executed in the CPU. With the massive increase of data and the widespread use of artificial intelligence (AI) in our modern digital society, memory and computing demand have seen an exponential increase which dictates the introduction of novel memory technologies and computing paradigms. In particular, there is a need to introduce a novel memory concept that can provide a large density combined with a high performance in terms of data access time, thus unifying the properties of storage and memory modules. In addition, for modern AI and machine learning applications, the processing time and energy consumption become limited by the data movement between the memory and the CPU. Overcoming this fundamental gap of performance requires the introduction of novel computing paradigms, such as in-memory computing (IMC), capable of moving a large portion of the computation within the memory, thus alleviating the memory bottleneck. ,

In this scenario, memory technologies acquire paramount importance, as they must provide a broad scope of properties, including nonvolatile storage, low voltage/current operation, high scaling capability, compatibility with the CMOS process flow, and integration in the back end of the line (BEOL). The last 25 years have seen the introduction of several emerging nonvolatile memory (NVM) technologies, such as resistive switching random-access memory (RRAM), phase change memory (PCM), magnetic random-access memory (MRAM), and ferroelectric random-access memory (FeRAM). , Historically, these technologies have been known for a relatively long time due to pioneering research works on a variety of materials and devices, such as oxides or chalcogenides. Currently, these memory concepts can hardly replace existing established technologies, such as static random-access memory (SRAM), dynamic random-access memory (DRAM), and nonvolatile flash memory, due to insufficient performance and excessive cost. On the other hand, emerging memories can provide a unique solution for embedded memories, where a high-capacity memory needs to be integrated into the same chip of a computing system, such as a microcontroller unit (MCU) for edge computing. Emerging memories also provide an improved radiation hardness compared to conventional CMOS-based memories, such as Flash memories. In particular, RRAM has been shown to have excellent radiation hardness, which is crucial for radiation-tolerant systems in spaceborne applications. , Also, emerging memory devices combining nonvolatile storage and high density are a suitable platform for IMC circuits for AI applications.

Among the emerging NVM technologies, RRAM displays a simple device structure and fabrication process that are amenable to crossbar array (CBA) architecture and 3D integration to achieve extremely high density. The nonvolatile switching behavior ensures good retention even at elevated temperatures, while program/erase cycling shows strong endurance, making RRAM an ideal solution for embedded nonvolatile memory (eNVM). The properties of RRAM devices match well with the requirements of several computing applications, such as nonvolatile behavior, multilevel operation, good scaling capability, and high linearity. RRAM can also offer unconventional properties such as stochastic phenomena and short-term memory effects, which are useful in selected computing applications. All of these properties can be tuned and optimized by careful materials and device engineering as well as circuit design. Usually, computing application requirements are met by a detailed design/technology co-optimization (DTCO), where the most convenient solution is provided by a specific set of materials, stack, process steps, device geometry, and circuit design.

Overall, thanks to its simple structure and flexible concept, RRAM appears as a strong candidate for advanced memory technology and IMC. However, many challenges still need to be addressed, including the optimization of the programming precision, linearity, and endurance, as well as the feasibility and energy efficiency of the overall RRAM computing system, which also includes ancillary circuits such as the analog-digital converters, the programming periphery circuits, the select/unselect decoders, and the digital controller. To solve these fundamental challenges, a cross-disciplinary research approach is essential, where materials engineering, device technology, circuit design, conceptual architecture, and final application, including its requirements, are fully understood and carefully monitored.

To meet these goals, the purpose of this work is to provide a comprehensive overview of RRAM from materials, devices, circuits, systems, and applications viewpoints. The review is organized as follows. Section provides an overview of RRAM devices including device structure, characteristics, and operation. Section describes the RRAM cell and array structure for memory applications, including a summary of presented demonstrators in the literature. Section presents RRAM circuits for computing primitives, such as matrix-vector multiplication (MVM) and inverse matrix calculation. Section addresses RRAM-based computing applications, focusing on various AI, neural networks, and other popular machine learning tasks and highlighting the specific requirements which are essential for each computing task. Section provides a conclusion and a perspective on the open research challenges.

2. RRAM Devices

Resistive switching random-access memory (RRAM) is a memory device capable of changing its resistance upon the application of electrical pulses. − Most typically, the RRAM structure consists of a metal–insulator–metal (MIM) stack, where the insulating layer can be modified by the presence and growth of a conductive filament (CF) shunting the two metal electrodes. This is shown in Figure a, indicating the MIM where a CF connects the metal electrodes across the insulating layer. Modification of the CF leads to a resistance change of the MIM structure, which is thus responsible for the resistance switching effect. The CF is generally first introduced in the MIM structure by an electrical forming operation, also known as electroforming, which consists of a controlled voltage-induced breakdown operation of the insulating layer. Then, the CF can be activated or deactivated by generation of a depleted gap across the filament as shown in Figure b. Figure c shows the typical current–voltage (I–V) curve for a RRAM device, displaying the set transition for the switching from high to low resistance and the reset transition for the switching from high to low resistance. The type of switching displayed in Figure c is the unipolar switching of RRAM, where the set and reset transition can take place at the same voltage polarity. , Most relevant for the memory and computing applications of RRAM is the bipolar characteristic in Figure d, where the set and reset transitions take place at opposite polarities. The set transition generally shows a steep slope in the I–V curve from high to low resistance, which is attributed to the negative differential resistance (NDR) due to CF formation and the consequent growth of a low resistance path across the oxide. The reset transition instead shows a more gradual, continuous change in the I–V curve, as the CF is gradually disconnected or retracted in response to the electric field.

1.

Sketch of RRAM and its switching characteristics. (a, b) Sketch of the RRAM device, including the conductive filament (CF). (c) Schematic I–V curve for a unipolar switching RRAM device. (d) Schematic I–V curve for a bipolar switching RRAM device. Reproduced from ref . Copyright 2016 IOP Publishing Ltd. with Creative Commons Attribution 3.0 license https://creativecommons.org/licenses/by/3.0/.

In the case of unipolar switching, although set/reset operations occur at the same polarity, they differ by the current condition, in that the set operation requires a limitation in current known as the compliance current (CC) to prevent the destructive breakdown of the device. The CC is generally adopted for the set process of bipolar switching as well, to minimize degradation and enable tight control of the final resistive state. The two stable states of RRAM are known as the high resistance state (HRS) and the low resistance state (LRS), which are obtained after the reset and set transition, respectively.

Early reports about resistive switching (RS) have been published in the 1960s within studies of the reversible breakdown phenomena in thin metal oxides, such as SiO x , Al2O3, Ta2O5, ZrO2, and TiO2. In general, these layers displayed an NDR effect which was explained by a space-charge-limited current (SCLC) originating from the trapping of electrons in localized states. Studies in niobium oxide (Nb2O5) layers demonstrated bistable RS between two stable states. Figure shows one of the first reported I–V curves for RS, where the LRS (a) is first subject to a reset transition to the HRS at negative voltage (b), followed by a set transition back to the LRS at positive voltage (c). Both the HRS and LRS were found to be stable, thus supporting the possibility of conceiving a nonvolatile memory (NVM) from a RRAM device.

2.

Measured I–V curves of a MIM stack with a Nb2O5 insulating layer. Resistance switching is demonstrated where the application of a negative voltage (b) causes a reset transition from LRS (a) to HRS, and the application of a positive voltage (c) causes a set transition from HRS to LRS. Reproduced with permission from ref . Copyright 1965 American Institute of Physics.

Those early studies were mostly aimed at elucidating the fundamental transport properties of insulating layers, such as transition metal oxide. However, in the early 2000s interest in RS phenomena rose significantly for studying NVM applications. Similar to phase change memory (PCM), ferroelectric random access memory (FeRAM), and magnetic random access memory (MRAM), RRAM devices were extensively studied with the specific purpose of developing a new class of memory technology. In particular, research on these emerging memory concepts was aimed at assessing the scalability, density, performance, energy consumption, reliability, and cost of the technology, to be compared to conventional memories of complementary metal-oxide-semiconductor (CMOS) technology, such as static random access memory (SRAM), dynamic random access memory (DRAM) and Flash NVM. Given the excellent combination of speed, reliability, low voltage operation, and endurance, RRAM devices were even targeted as potential ‘universal’ memory, capable of satisfying the requirements of all major device technologies, from SRAM to Flash.

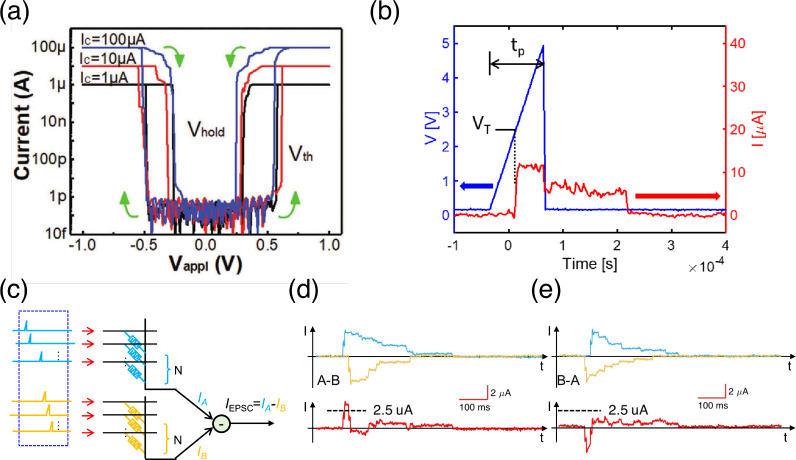

2.1. Unipolar Switching RRAM

Unipolar switching in NiO-based RRAM devices first attracted interest as a high-density NVM technology. Figure shows the measured I–V curve for polycrystalline NiO films deposited on Pt/Ti/SiO2/Si substrates, indicating unipolar switching for both positive and negative applied voltages, also referred to as nonpolar switching. Two types of switching are shown in Figure , namely, nonvolatile, or memory, switching (Figure a) and volatile, or threshold, switching (Figure b), where the set transition results in an unstable LRS, which spontaneously switches back to HRS within a short retention time. Memory and threshold switching in NiO were found for different ratios of Ni and O concentrations in the NiO film, which were Ni/O = 1.05 and 0.95 in Figure a and b, respectively. Memory switching took place under the same polarity by applying a proper k during the set transition. On the other hand, threshold switching leads to a transition from HRS to LRS with CC of 3 mA; however, the HRS was recovered as the voltage decreased below a characteristic holding voltage VH. Although not useful for NVM technology, threshold switching has a significant role in several applications for both storage, e.g. select devices in CBAs, and computing, such as short-term memory and oscillating circuits. Unipolar memory switching with RRAM was explored given the simplicity of the circuit integration, where not only a field-effect transistor (FET) but also unipolar diodes or bipolar junction transistors can be adopted for high-density NVM CBAs. Metal-oxide p–n diodes suitable for integration in the back-end of the line (BEOL) of the CMOS process flow were demonstrated as RRAM selectors, thus enabling 3D stackable high-density CBAs.

3.

Measured I–V curves for Pt/NiO/Pt RRAM devices with memory switching (a) and threshold switching (b) under either polarity, thus demonstrating nonpolar unipolar switching. The different behaviors are due to different Ni/O ratios in the switching layer, namely, 1.05 and 0.95 in (a) and (b), respectively. Reproduced with permission from ref . Copyright 2004 AIP Publishing.

2.2. Bipolar Switching RRAM

While advantageous from an integration viewpoint, unipolar switching RRAM devices showed poor reproducibility of switching mainly due to the lack of control of the CF size and resistance in the LRS. Unipolar switching is mainly explained by thermochemical oxidation and diffusion of the material locally at the CF as a result of Joule heating, which lacks directionality. Bipolar switching RRAM then attracted interest because of the improved ability to control the ionic migration responsible for CF growth and disconnection. Contrary to thermally induced oxidation and diffusion, field-induced migration can be directed toward either electrode side, thus enabling the controllable modulation of CF resistance. After the seminal works of the 1960s, studies on bipolar switching of metal oxides were revived by covering perovskite materials, such as SrZrO3, and binary metal oxides such as TiO2 and HfO2. ,, Among the latter materials, HfO2 raised considerable interest, mostly thanks to the relevance of this material as a high-k gate dielectric for the logic CMOS technology. ,

Figure a shows the measured I–V curves of HfO2-based RRAM, indicating bipolar switching with controllable LRS resistance via the CC. The bipolar switching effect can be understood by the directional migration of ionic species, such as oxygen vacancies responsible for the higher local conductivity in the CF. During reset, field- and temperature-induced ionic migration cause the opening of a depleted gap across the CF, thus bringing the device into an HRS. By increasing the time and/or the voltage of the reset operation, the gap length increases its length, thus resulting in a higher resistance and enabling tight control of the HRS resistance. During the set transition, the applied field causes the migration of ions in the opposite direction, thus replenishing the previously opened gap and restoring the LRS conductance. The CC plays a key role during the set transition by limiting the final resistance of the LRS to the value R = V C /I C , where V C is a critical voltage, characteristic of the microscopic ion-migration process, and I C is the CC. , The critical voltage V C represents the voltage value for the acceleration of the CF growth by ionic migration at the time scale characteristic of the experiment, e.g. about 1 s for a typical quasi-static experiment. Experimental results indicate that this voltage increases at decreasing times during the set transition. Due to the weak dependence of V C among different RRAM materials, the LRS resistance was found to follow a universal behavior when plotted as a function of the CC. Note that ionic migration is a directional process guided by the field, thus supporting the repeatability of the set-reset process at the basis of cycling endurance. Cycle-to-cycle variability is also strongly reduced compared to unipolar switching RRAM, as the same defects are consistently reused during bipolar set/reset processes, thus mitigating defect-number variation. HfO2-based RRAM also showed excellent switching speed and scaling in the 10 nm range, thus supporting this materials system as a promising solution for scalable RRAM.

4.

Measured I–V curves for bipolar switching RRAM devices, namely Ti/HfO2/Pt RRAM (a) and Cu/AlO x conductive-bridge random-access memory (CBRAM) device (b). Panel (a) is adapted with permission from ref . Copyright 2008 IEEE. Panel (b) is adapted with permission from ref . Copyright 2013 IEEE.

2.3. RRAM Stack Optimization

Despite the outstanding performance of HfO2-based RRAM, it was soon realized that RRAM optimization requires an overarching engineering effort aimed at the whole RRAM stack, including both metal oxide and metal electrodes, in terms of composition profile, material structure, and interfaces. Several RRAM stacks were then reported with the objective of optimizing the device behavior from various perspectives.

Figure b shows the I–V curve for a conductive-bridge random-access memory (CBRAM), also known as the electrochemical metallization (ECM) device. In CBRAM, the top electrode material is replaced by an active metal, such as Cu, ,− Ag, or CuTe. Application of a positive voltage to the top electrode causes the field-induced oxidation and migration of electrode cations across the insulating layer, also known as the electrolyte. The latter consists of a chalcogenide layer, such as GeSe or GeS2, or an oxide layer, such as Al2O3, ZrO x , SiO2 or GdO x . Compared to conventional oxide-based RRAM devices, CBRAMs display a larger resistance window, in a range of 104 compared to about 102 for the case of metal-oxide RRAM. The higher resistance window can be explained by the higher ionic mobility of Cu and Ag in CBRAM compared to oxygen vacancies and enables the design and integration in high-density memory arrays. The relatively high ionic mobility of Cu and Ag can be challenging due to thermally induced diffusion during the BEOL process at 400 °C. Process-induced Cu diffusion was reduced by diffusion barriers such as TiW inserted between the electrolyte and the Cu injecting electrode without compromising the memory performance. Thanks to the large resistance window, multilevel operation over a resistance range of 6 orders of magnitude of the LRS was demonstrated by CC-control of the set transition. Due to the high mobility of the cation species, especially in the case of Ag, the CF generally displays a short retention time, which enables short-term memory and other dynamic properties that become useful in neuromorphic computing (Section ).

Binary metal oxide layers also require careful design and engineering to improve the electrical performance. Figure a shows the transmission electron microscopy (TEM) image of the cross-section of a RRAM device with a TiN/Ti/HfO x /TiN stack. A thin Ti cap was introduced between TiN and HfO x to enable oxygen exchange according to the following reaction:

5.

Top electrode engineering for bipolar switching RRAM devices. (a) TEM image of a TiN/Ti/HfO x /TiN stack for a bipolar switching RRAM device. (b) XPS depth profile of a TiN/Ti/HfO2/TiN stack indicating the presence of the OEL. Panel (a) is adapted with permission from ref . Copyright 2008 IEEE. Panel (b) is adapted with permission from ref . Copyright 2009 IEEE.

| 1 |

thus resulting in an intermediate oxygen exchange layer (OEL) with a high concentration of oxygen vacancies. The OEL is clearly shown in Figure b, reporting the X-ray photoelectron spectroscopy (XPS) profile of the stack in Figure a and indicating a relatively wide transition region between the TiN and HfO x layers. The generation of oxygen vacancies provides an initial reservoir of defects available for migration during forming, set, and reset operation of the RRAM device, thus supporting good performance and reliability of the device. Similar cap layers to form the OEL were adopted in several RRAM reports, with the cap consisting of Ti, , Hf, or Ta. ,

To better assess the impact of the OEL on the device performance, Figure a shows the measured I–V curves for a HfO x -based RRAM device under forming, set, and reset operation. The forming voltage is critical for the device, since it dictates the size of the selector and decoder transistors, which must sustain part of the applied voltage soon after the forming event. The forming voltage is directly linked to the leakage current across the pristine device, as shown in Figure b. Here, the leakage was increased and the forming voltage was decreased by increasing the thickness of the Ti cap layer in the RRAM stack, resulting in a more extensive O exchange and, hence, a larger concentration of oxygen vacancies. Optimizing the metal cap and thermal annealing to activate the O exchange are essential to control and minimize the forming voltage. Forming-free RRAM devices have also been developed to mitigate the forming issue.

6.

Impact of OEL on forming. (a) Measured I–V curves showing set, reset and forming characteristics for a HfO x -based RRAM. (b) Measured I–V curves showing an increase of leakage current and a decrease of forming voltage for increasing thickness of the Ti cap layer. Panel (a) is adapted with permission from ref . Copyright 2011 IEEE. Panel (b) is adapted with permission from ref . Copyright 2013 IEEE.

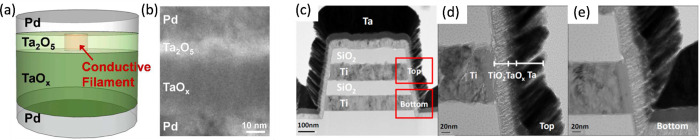

Stack optimization is important not only for forming but also for set operation. A key issue of both forming and set is the abrupt transition to a lower resistance, which can result in a high-voltage degradation of the select transistor, as well as in current overshoot effects causing device overprogramming and excessive reset currents. To minimize the overshoot effects, a local series resistance can be integrated close to the RRAM device to accommodate part of the applied voltage at the set/forming transition. Such close integration of the switching device and the conductive device can be achieved by bilayer structures, where one layer acts as a series resistance while the other layer acts as the proper switching layer. This is the case for the Ta2O5/TaO x bilayer structures shown in Figure a, consisting of a relatively thick conductive TaO x layer and a relatively thin switching Ta2O5 layer. Figure b shows the cross-sectional TEM image of the RRAM stack, including the Pd top and bottom electrodes. As shown in Figure a, the CF extends across only the thin switching layer, whereas the conductive layer only serves as a series resistance to prevent excessive degradation to the select transistors and overshoot effects. The integration of the series resistance within the RRAM stack enables high scalability and low parasitic capacitances. Bilayer structures based on the TiN/TaO x /HfO2/TiN stack, where TaO x and HfO2 serve as conductive and switching layers, respectively, were recently reported to enable analog-type switching with improved control of the HRS and LRS states.

7.

Bilayer RRAM structures. (a) Sketch and (b) cross-sectional TEM image of a Ta2O5/TaO x bilayer, where Ta2O5 and TaO x layers serve as the switching and the conductive layers, respectively. (c) Cross-sectional TEM of a vertical RRAM device with TiO2/TaO x bilayers, with close-up images of the (d) top and (e) bottom device in the vertical structure. Panels (a) and (b) are reprinted from ref . Copyright 2014 ACS. Panels (c,d,e) are adapted with permission from ref . Copyright 2016 IEEE.

Similar RRAM structures consisting of TiO2/TaO x bilayers were reported to enable analog switching in vertical RRAM devices as shown in Figure c,d,e. ,− Atomic layer deposition (ALD) is generally adopted as a deposition tool to tightly control the thickness, uniformity, composition, and structure of each layer in the bilayer stack. Analog switching was optimized in bilayer stacks, such as HfO2/Al:TiO2 and TaO x /HfO2, to achieve high linearity, high symmetry and high endurance, which are essential in hardware accelerators for supervised training of neural networks (see Section ).

In addition to the top electrode and oxide layers, bottom electrode engineering is also essential, particularly for reliability optimization. Cycling endurance in bipolar RRAM devices was shown to be limited by the unwanted set transition occurring under negative polarity when the normal set process was expected under positive voltage. To prevent a negative set, the bottom electrode should be as chemically inert as possible. RRAM with bottom electrodes based on inert materials such as Pt, C, and Ru has been shown to display excellent retention, thanks to a reduced chemical ionization of the bottom electrode and reduced interaction with the oxide layer.

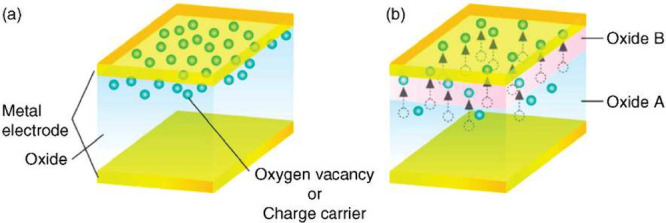

2.4. Nonfilamentary RRAM Devices

Although most of the RRAM implementation relies on the filamentary concept, RRAM devices based on uniform (or interface) switching were also reported. In these devices, resistance switching results from a change in the resistivity which extends uniformly across the active device area via an electrically induced change of the stack composition impacting the local conductivity. A possible physical mechanism for the uniform switching is illustrated in Figure , showing the density profile of oxygen vacancies in the LRS (a) and the HRS (b). First, oxygen vacancies are uniformly distributed in an OEL at the top-electrode side, thus resulting in relatively high conductivity across the RRAM oxide layer. The application of a negative voltage to the top electrode results in the migration of oxygen ions toward the OEL, thus causing the reoxidation of the OEL with a local increase of resistivity due to the formation of a Schottky barrier. The reoxidized layer is indicated as Oxide B in the figure, where reoxidation has taken place via partial depletion of oxygen from the Oxide A layer.

8.

Uniform switching RRAM concept illustrating the defect distribution in the LRS (a) and HRS (b). Migration of oxygen vacancies from the top electrode side to the bottom electrode side under a negative applied bias in (b) causes the top electrode reoxidation of the OEL at the top electrode (oxide B in the figure), thus causing an enhanced Schottky barrier, and hence reduced carrier injection characterizing the HRS. Reproduced with permission from ref . Copyright 2008 IEEE.

The most typical materials showing uniform switching are perovskites, such as manganites, − where switching was shown to occur by oxygen transfer from the manganite layer to an active electrode, such as Al or Sm. Uniform switching can be generally recognized by the absence of an abrupt set transition and from the linearity of LRS and HRS resistance on the device area. Given the area-scaling property of the programming current, uniform switching RRAM has been considered for ultralow-power RRAM suitable in high-density 3D CBAs.

2.5. RRAM Area Scaling

Device scaling is among the most important properties of any memory concept, to support area scaling, bit-cost reduction, and competitiveness compared to conventional CMOS-based memory concepts, such as SRAM, DRAM, and Flash. RRAM scaling has been supported by several reports, evidencing the ability to reduce both the area and thickness scaling. Figure a shows a top-view SEM image of a RRAM device with a TiN/Hf/HfO x /TiN RRAM stack where the size of both the top and bottom electrodes was defined in the range of 10 nm. This is shown in Figure b and c, reporting the TEM cross-sectional images of the device along the top and bottom electrodes, respectively. A key concern of area downscaling was shown to be the forming voltage, which tends to increase according to the Poisson area scaling of time-dependent dielectric breakdown (TDDB). To compensate for such an area dependence, the switching layer thickness can be reduced and optimized by composition profiling. For optimized scaling behavior of the forming voltage, an amorphous structure of the oxide layer is preferred compared to a polycrystalline structure, where grain boundaries might induce local nonuniformities.

9.

Scaling of RRAM devices. (a) Top view SEM image of TiN/Hf/HfO x /TiN RRAM device with CBA structure. (b) Cross-sectional TEM image of the same device along the top electrode direction, exhibiting the 10 nm width of the bottom electrode. (c) Cross-sectional TEM image along the bottom electrode direction, exhibiting the 10 nm width of the Hf cap at the top electrode side. Reproduced with permission from ref . Copyright 2011 IEEE.

Sub-10 nm scaling of RRAM devices was shown by advanced techniques based on vertical film deposition and fin exposure. RRAM CBA circuits with a 2 nm width of the top and bottom electrodes were demonstrated, while a line pitch of about 12 nm was achieved, corresponding to a device density in the range of 4.5 terabits per square inch. The switching of the TiO2/HfO2 stack was shown to occur with a low current in the range of about 50 nA.

2.6. RRAM Based on 2D Materials

In addition to area and pitch scaling, thickness scaling is essential to enable a good aspect ratio of the device geometry and a low forming voltage. Toward this goal, RRAM with atomic thickness was demonstrated by adopting a 2D transitional metal dichalcogenide (TMD) monolayer as the switching layer. Various types of single-layer TMDs were demonstrated as switching layers, including MoS2, MoSe2, WS2, and WSe2 with Ag and Au electrodes. This device was dubbed ‘atomristor’ to highlight its ability for thickness miniaturization to the atomic scale. The TMD monolayer was deposited by chemical vapor deposition (CVD) or metal–organic CVD (MOCVD) and then transferred on the bottom electrode and completed with top electrode deposition and patterning. Figure a shows the sketch of the device while Figure b shows the cross-section of the RRAM devices, evidencing the atomically thin MoS2 monolayer between Au top and bottom electrodes. Figure c shows the crystalline atomic structure of the MoS2 layer, indicating the presence of S vacancies, which potentially influence the forming and switching behavior of the device.

10.

RRAM device with TMD switching layer. (a) Sketch of the device with CBA structure evidencing the TMD layer sandwiched between the top and the bottom electrodes. (b) TEM cross-sectional image illustrating the Au/MoS2/Au stack with monolayer thickness and atomic smoothness of the interface. (c) Scanning tunneling microscopy (STM) image of a monolayer MoS2 evidencing the S vacancy defects. Reprinted from ref . Copyright 2017 ACS.

Thickness scaling was further demonstrated in van der Waals (vdW) structures, where both the switching layer and the electrode consist of a 2D material. The vdW heterostructure graphene/MoS2–x O x /graphene was demonstrated in a RRAM device with switching endurance of up to 107 and with the possibility for deposition on flexible organic substrates. RRAM devices based on hexagonal boron nitride (hBN), an insulating 2D material, were demonstrated in combination with MoS2-based select transistors, thus supporting the feasibility of 2D-based one-transistor/one-resistor (1T1R) memory in the BEOL at relatively low temperature. Wafer-scale integration and full-CMOS integration at the 180 nm node were recently demonstrated for hBN-based RRAM devices.

2D semiconductors provide an attractive solution as active channel materials for scalable CMOS transistors, thanks to their atomic-scale thickness and their capability for 3D, BEOL integration. , Significant progress has been recently reported to support 2D semiconductors as a feasible technology to extend the Moore’s law of CMOS transistor scaling. −

Memory devices based on 2D semiconductors include not only RRAM but also charge-based concepts such as floating gate memories , and charge trap memories. , RRAM and transistor functionalities were merged in a new device named ‘memtransistor’, consisting of a 3-terminal device with a 2D-semiconductor channel controlled by a gate and contacted by source and drain. − The device can operate as a conventional transistor, where the gate voltage enables control of the channel conductivity. However, the application of a relatively large voltage across the drain and source can result in RS of the channel conductance, similar to RRAM operation. The switching mechanism in MoS2-based memtransistors has been explained by the field-induced dislocation migration in the polycrystalline MoS2 channel , or the modulation of the Schottky barrier at the metal–semiconductor contact.

Figure a shows a top-view SEM image of a memtransistor device based on a MoS2 channel with Ag source and drain separated by a 18 nm gap. The channel conduction was controlled by the gate voltage V G applied to the Si back gate, with a SiO2 layer of thickness 285 nm. To initiate the RS behavior, a forming operation was initially carried out by applying a voltage of 1.8 V across the source and drain. Figure b shows the I–V curves after formation, indicating a set transition at about V DS = 0.9 V from HRS to LRS, followed by a spontaneous decay from LRS to HRS as V DS is reduced below a characteristic holding voltage V hold of about 0.2 V. The volatile switching can be attributed to the formation of a conductive bridge shunting the source and drain as a result of voltage-induced Ag migration on the surface of the MoS2 channel. The decay of the Ag CF can be explained by its instability as a result of the large surface energy, which is minimized by collapsing the elongated CF shape into isolated nanoparticles, as already shown by in situ experimental results and simulations. More detailed time-resolved studies indicate a retention time in the range of about 100 ms. Figure c shows the measured V set and V hold as a function of V GS from Figure b, indicating that the set and holding voltage do not depend on the applied gate voltage, which only controls the channel leakage current in the HRS. Similar memtransistor devices were reported, although with an asymmetric structure of source and drain electrode materials. , Thanks to the controllability of the gate and the drain, memtransistors are a promising device technology for neuromorphic computing applications.

11.

MoS2-based memtransistor device. (a) Top-view SEM image of the back-gated MoS2-based transistor with a Ag source and drain. (b) I–V curves of the set transition at V set followed by a spontaneous collapse to the HRS at the characteristic holding voltage V hold . Changing the gate voltage affects only the HRS current without any impact on V set or LRS resistance, with the latter being controlled by the CC. (c) Measured V set and V hold as a function of V GS . Reproduced with permission from ref . Copyright 2022 Wiley VCH.

3. RRAM Cell and Array Structure

For memory and computing applications, the RRAM device element can be replicated several times to realize a device array arranged in rows and columns, usually referred to as word lines (WLs) and bit lines (BLs). Figure shows a summary of the various structures for the RRAM cell and the array, including one-resistor (1R) structure (a), one-selector/one-resistor (1S1R) structure (b), one-transistor/one-resistor (1T1R) structure (c), and one-capacitor (1C) structure (d). In the 1R structure, every RRAM device is connected between a row and a column of the array. While being particularly attractive from a density point of view, the 1R array, also referred to as passive CBA, is prone to disturb effects during set/reset programming and to sneakpath problems during readout.

12.

RRAM cell and array structure. (a) One-resistor (1R) array, where RRAM is connected between each row and column in the CBA. (b) One-selector/one-resistor (1S1R) array, where each RRAM element is combined with a selector device in series. (c) One-transistor/one-resistor (1T1R) array, where each RRAM element is combined with a transistor device requiring an additional line for connecting the transistor gates. (c) One-capacitor (1C) array, where the RRAM is operated as a capacitor and is connected between each row and column in the CBA. Reproduced from ref . Copyright 2023 AIP Publishing with Creative Commons Attribution 4.0 license https://creativecommons.org/licenses/.

3.1. 1S1R Arrays

To prevent the sneakpath current, a nonlinear selector element can be added in series to the device in the 1S1R structure. − Thanks to the nonlinear element, when a device is selected by applying a voltage to its row and column, all other devices are subject to a smaller voltage, which translates into an exponentially lower current.

The adoption of a two-terminal selector element, such as an antifuse element, a p-n diode, or an ovonic threshold switch (OTS) device, allows maintenance of a small cell area of only 4F, where F is the lithography feature of the technology.

The selector element must satisfy a number of challenging properties, including (i) a sufficient nonlinearity, to enable safe select/unselect bias schemes where the cumulated unselected device current is negligible compared to the selected device one, (ii) a sufficient on-state current, to support the programming current of the memory RRAM device in both set and reset processes, (iii) a sufficient endurance, to enable several set, reset, and read operations of the device, (iv) a low variation of threshold switching voltage and on/off-state currents, (v) a high speed to enable fast transition from select to unselect bias modes, and (vi) a bipolar operation, where the selector device can operate under both positive and negative voltage polarities to support set and reset of bipolar RRAM devices. Oxide-based p-n diodes generally display unipolar operation due to their p-n structure; thus, they are compatible only with the class of unipolar RRAM devices. Mixed ionic/electronic conduction (MIEC) devices have been shown to display a high nonlinearity combined with a bipolar operation, although their operating voltage is relatively low compared with that of typical RRAM devices. Similarly, tunneling-based selector devices with a barrier engineered stack of oxide layers show excellent bipolar characteristics with high nonlinearity, although dielectric breakdown may critically affect endurance at the high operating voltages needed to set/reset RRAM devices.

Selector devices with threshold switching characteristics have also been explored with a range of different materials, including reversible insulator–metal transition metal oxides such as VO2 and NbO2. These metal oxides display a reversible threshold switching from an off-state to an on-state, which can be used to select and unselect devices within a crosspoint array. However, the on/off current ratio is generally not sufficient to enable cell selection within a relatively large memory array. An on/off ratio of several orders of magnitude is offered by field-assisted superlinear threshold (FAST) devices and diffusive memristors consisting of a volatile RRAM device made of an Ag electrode and an oxide layer, such as SiO2. In this case, key concerns are the stochastic variation of the threshold voltage and the relatively long retention time for the transition from on-state to the off-state, which is generally limited by the rediffusion of cations to dissolve the conductive filament responsible for the on-state conduction. The OTS selector device shows excellent properties, including high on/off current ratio, high speed and high endurance, which must be sufficient for both the programming and the read operations. A relatively high on/off ratio is generally achieved by operating the device at a high threshold switching voltage, which, however, affects the power consumption and the design of the front-end transistors in the peripheral circuits. To improve the trade-off between threshold voltage and nonlinearity, low-voltage OTS devices were recently developed. Most recently, OTS device technology gained a renewed interest in selector-only memory (SOM) devices, where a nonlinear OTS layer can both serve as a selector and store memory states consisting of different threshold voltages. Up to 8 levels of different SOM threshold voltages were demonstrated, although the mechanism for the threshold voltage variation is still under debate. −

3.2. 1T1R Arrays

The drawbacks of the 1S1R devices are alleviated in the 1T1R structure, where the 2-terminal selector device is replaced by a 3-terminal MOS transistor as shown in Figure c. − The select transistor allows for better current control during the set transition as well as minimizing the leakage current from half-selected and unselected devices, at the expense of an additional line, usually called the WL, to access the gate terminal. Another limitation of the 1T1R structure is the need for a relatively large selector device to sustain the programming current of the device. As a result, the cell area is generally much larger than 4F, which prevents achievement of a large integration density. Figure d shows the 1C passive array, where the device memory bit is encoded in the capacitance instead of the device resistance, which is typical of ferroelectric materials and devices.

3.3. RRAM Array Demonstrators

Table reports a summary of RRAM technology demonstrators, namely prototypes of memory arrays with a density of at least 1 kb. ,− Prototypes are listed for increasing years of the report, between 2011 and 2023, evidencing a consistent decrease of the technology node from 180 nm to 12 nm. All demonstrators adopted a 1T1R structure, except for ref , where a 1S1R structure with a high capacity of 32 Gbit was reported.

1. Summary of RRAM Integrated Demonstrators ,− .

| Year | Node [nm] | Capacity | Institution | Stack | Ref |

|---|---|---|---|---|---|

| 2011 | 180 | 4 Mb | ITRI | TiN/Ti/HfO2/TiN | |

| 2011 | 130 | 384 kb | Adesto | Ag/GeS2 | |

| 2011 | 180 | 4 Mb | Sony | CuTe/GdO x | |

| 2012 | 180 | 8 Mb | Panasonic | TaN/TaO2/Ta2O5/Ir | |

| 2013 | 180 | 500 kb | Panasonic | TaN/TaO2/Ta2O5/Ir | |

| 2013 | 24 | 32 Gb | Sandisk/Toshiba | Metal Oxide | |

| 2014 | 28 | 1 Mb | TSMC | Metal Oxide | |

| 2014 | 27 | 16 Gb | Micron/Sony | Cu-based/oxide | |

| 2015 | 90 | 2 Mb | Renesas | Metal/Ta2O5/Ru | |

| 2017 | 90 | 500 kb | Winbond | TiN/HfO2/Ti/TiN | |

| 2018 | 40 | 11.3 Mb | TSMC | ||

| 2019 | 22 | 3.6 Mb | Intel | ||

| 2020 | 22 | 13.5 Mb | TSMC | ||

| 2020 | 28 | 500 kb | TSMC | ||

| 2020 | 28 | 1.5 Mb | TSMC/IMECAS | ||

| 2021 | 14 | 1 Mb | IMECAS | Cu-based/oxide | |

| 2022 | 28 | 800 kb | Infineon/TSMC | ||

| 2023 | 12 | 1 Mb | TSMC |

Figure shows the array capacity (a) and the technology node (b) as a function of the year of the demonstrator. In most cases, the prototypes in Table display relatively small capacity, aiming at the demonstration of eNVM capable of being integrated into the same chip as analog and digital circuits for sensing and processing, such as microcontroller units (MCUs). RRAM technology is among the most promising thanks to the BEOL integration requiring only metal and insulator layers for the active cell, while the CMOS select transistor can be integrated in the front-end of the line. This solution allows for overcoming the difficult integration of Flash devices in advanced CMOS nodes beyond the 28 nm node, where CMOS transistors adopt the high-k/metal-gate (HKMG) process.

13.

Summary of RRAM technology scaling according to Table . (a) Array capacity and (b) technology nodes of the reported RRAM demonstrators.

In just two cases, the capacity in Table exceeds the Gbit level, which evidences the effort to achieve RRAM arrays with high capacity approaching the typical range of Flash and DRAM. , This was possible thanks to an extremely small cell area of 4F for the 1S1R structure and 6F for the 1T1R structure.

This technological trend generally goes under the name of storage class memory (SCM), which identifies a memory technology capable of filling the gap in the memory hierarchy between volatile DRAM, characterized by relatively high performance and relatively large area, and nonvolatile Flash NAND storage, characterized by relatively small area, low cost and slow access times.

Figure a shows the TEM cross-section along the BL direction of the 1T1R array with 6F cell area in the 27 nm node. The cross-section evidences the V-shaped recess access transistors with elongated channels and buried WL and the RRAM devices sharing the same TE line, which minimizes the cell footprint along the BL direction. Figure b shows the cumulative distributions of the measured read current for the LRS and the HRS after 103 cycles for various cells, namely the integrated cell in Figure a, the intrinsic cell with larger RRAM active area and the scaled cell with larger pitch. The distributions show a similar shape and similar read window, suggesting that the integration process does not significantly affect the RRAM cell behavior. Note the relatively large statistical spread of the HRS read current, which makes the effective read window relatively small in the large array.

14.

1T1R RRAM array with 6F cell area. (a) TEM cross-section of the array along the bit line (BL), evidencing the select transistors with recess geometry and the RRAM devices sharing the TE. (b) Cumulative distributions of read current for the LRS and HRS for the intrinsic cell, integrated cell and scaled cell after 103 cycles. Reproduced with permission from ref . Copyright 2014 IEEE.

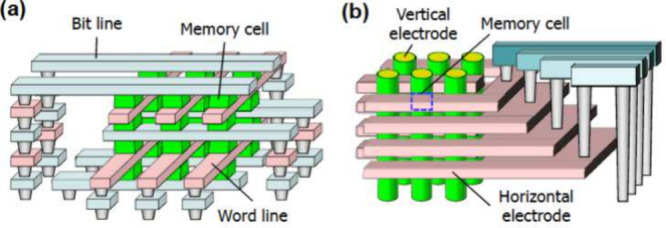

An even higher capacity was achieved by the 1S1R array in ref thanks to (i) the 2-terminal structure of the selector element thus enabling a CBA architecture and (ii) the 3D stacking, where two devices occupy the same cell area. This is the horizontal 3D approach evidenced in Figure a, where multiple CBAs are stacked on top of each other to minimize the effective cell area and, hence, maximize the bit density. A horizontal 3D RRAM array with 6 layers was demonstrated with a Cu/Ta/TaN/TaON/Cu stack in 28 nm HKMG CMOS technology. A similar horizontal 3D approach has been pursued in the 3DXP technology consisting of stacked 1S1R CBAs of a PCM element combined with an OTS selector. , However, horizontal stacking is prone to layer-to-layer variation due to thermal degradation during the fabrication process. Most importantly, the process yield decreases sharply with the number of stacked layers, due to the repetition of critical lithography masks, similar to the case of 3D flash NAND technology.

15.

3D RRAM arrays. (a) Horizontal 3D RRAM array, where two or more CBAs are stacked to share the same area. (b) Vertical RRAM array, where the memory cells are obtained at the interface between vertical and horizontal electrodes. Reproduced with permission from ref . Copyright 2011 IEEE.

To maximize the bit density while maintaining a high process yield, the vertical 3D RRAM technology of Figure b was developed. Here, each bit cell is located at the crossing between a horizontal metal plane or electrode and a vertical electrode. The processing yield is maximized with this approach, since there is just one critical lithography mask to realize the vertical holes to be filled with the oxide/electrode stack. 3D vertical RRAM concepts were demonstrated with both filamentary-type HfO x -based RRAM and uniform-switching RRAM with TiO2/TaO x bilayers (see also Figure c,d,e ,, ). In all vertical 3D RRAM implementations, conformal deposition techniques such as ALD become critical for the deposition of the vertical oxide/electrode stack within holes or trenches with a high aspect ratio.

4. RRAM Circuits for Computing

RRAM has been initially developed for memory applications and identified as a promising technology for SCM and eNVM for consumer, industrial and automotive microcontrollers. Besides the pure memory application, RRAM can provide an enabling technology in computing applications, where the memory plays a crucial role within the von Neumann architecture. In fact, bringing the compute function near (or even inside) the memory can provide several advantages for data-intensive computing tasks. ,,, Such a memory- or data-centric approach, as opposed to the conventional compute-centric one, is a promising paradigm to accelerate modern computing tasks such as data search, data analytics, machine learning, and artificial intelligence (AI). This constitutes the so-called in-memory computing (IMC) concept, where computing in situ within the memory can alleviate or suppress the data movement which is responsible for most of the energy consumption and latency in conventional digital computing systems.

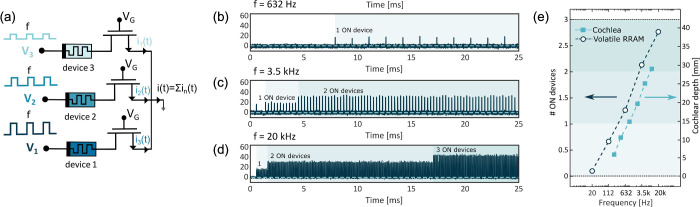

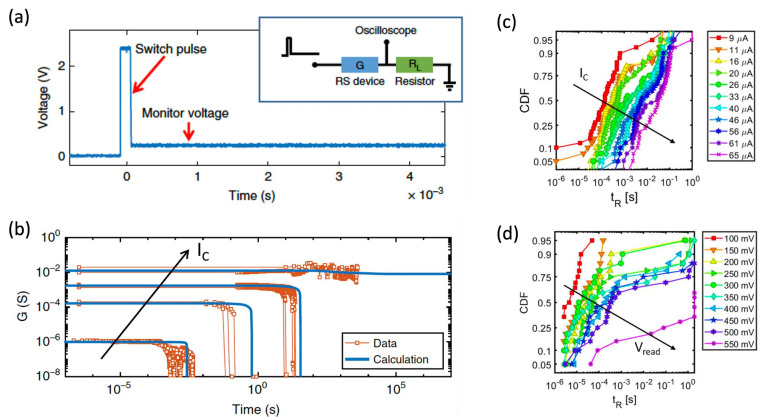

RRAM features several advantages for IMC, such as high density, scalability, low-power operation, nonvolatile storage, multilevel operation, and CMOS-compatible BEOL integration. Memory applications in computing generally rely on the ability to perform analog-domain operations with high parallelism within the RRAM array, typically exploiting Kirchhoff’s law for summation and Ohm’s law for multiplication. Several concepts for such physical computing within RRAM have been proposed in the literature, as summarized in Figure . The schematic I–V curve in Figure a highlights two potential regimes for IMC operation, namely (i) the static regime at low voltage, where RRAM can store a pretrained, preprogrammed parameter for computation (Figure b), and (ii) the dynamic regime at high voltage across the switching regime where the device can dynamically change its programmed state to mimic spike integration, learning, adaptation, and other linear or nonlinear functions (Figure c).

16.

Applications of RRAM Devices for in-memory computing (IMC). (a) Schematic I–V curve of a RRAM device with low-voltage and high-voltage regimes, corresponding to static and dynamic IMC, respectively. (b) Examples of static IMC, where pretrained parameters are stored in the memory to perform physical computation tasks, such as matrix-vector multiplication (MVM). (c) Examples of dynamic IMC, where pulses are applied in the switching regime to induce dynamic changes in the conductance for reproducing adaptation, learning, and other types of linear/nonlinear computing functions. Reproduced from ref . Copyright 2023 AIP Publishing with Creative Commons Attribution 4.0 license https://creativecommons.org/licenses/.

Among the static IMC functions, matrix-vector multiplication (MVM) is probably the most popular and explored, due to its implication in the deep neural network (DNN) for both the inference and the training processes. Similarly, a RRAM CBA can be used for inverse MVM, where a linear system is solved, thus facilitating the calculation of inverse, pseudoinverse matrices, eigenvectors and singular value decomposition. Other static functions include content addressable memory (CAM) for data search and query, , combinatorial optimization, , physical unclonable function (PUF) , and Bayesian inference.

Dynamic IMC aims at exploiting the programming property of the RRAM to reproduce dynamic functions, such as nonlinear neuron activation, stateful Boolean logic gates, , synaptic plasticity, , and learning in supervised/unsupervised neural networks. − Typically, the dynamic regime leverages controlled switching close to the set or reset voltage to modify the conductance of the RRAM device in response to the applied pulse width and amplitude. Randomized switching in the dynamic set/reset range can be used to develop circuits for true random number generation (TRNG). − Steep-slope logic devices have also been proposed based on the abrupt set transition in the dynamic regime of RRAM devices.

A key issue with dynamic RRAM computing is the limited set/reset endurance of RRAM devices as well as the energy consumption required by the set/reset operations. On the other hand, static IMC provides nonvolatile storage of computational weights for the execution of standardized tasks, such as neural network inference. Dynamic and static IMC can be generally combined in the same platform to provide energy-efficient processing capable of learning and adaptation. ,

4.1. RRAM Crossbar Arrays for Matrix-Vector Multiplication

The CBA circuit of RRAM devices has been widely used for accelerating MVM, or dot product, which, among various use cases, is one of the dominating bottlenecks in accelerating inference and training of neural network models. Figure a shows a circuit for performing MVM based on CBAs, namely a dot-product engine (DPE). The CBA can be a passive 1R array (Figure a) or a selected-memory CBA, such as the 1S1R array (Figure b) or the 1T1R array (Figure c). Thanks to the possibility of programming analog parameters into a RRAM device, the RRAM CBA can be adopted as a physical transcription of a matrix, where each RRAM cell serves as a matrix entry. A matrix is programmed in the CBA such that each RRAM conductance is given by G ij = A ij × G 0, where G 0 is a suitable unit conductance. An analog voltage vector is applied to the rows that are connected to the RRAM top electrodes. By connecting the M columns at ground, the resulting vector of currents i is given by

17.

Matrix-vector multiplication (MVM) with RRAM CBAs. (a) Circuit schematic of a dot-product engine (DPE) with RRAM devices. Input voltages are applied on the TE (rows), and currents are accumulated on the columns and sensed using a transimpedance amplifier (TIA). (b) I–V curves of the RRAM devices programmed in multiple conductance states, indicating both the multiple achievable states and the linear (ohmic) conduction for relatively high conductance. (c) Image of an integrated circuit including multiple 1T1R arrays of various sizes. (d) Correlation plot between the experimentally measured MVM with linear correction and the ideal MVM performed in software. (e) Equivalent MVM bit-precision as a function of memristor bit accuracy for multiple array sizes, i.e., the number of rows in the CBAs. (f) MVM throughput as a function of array size of DPE and digital ASIC. Panels (a,c,e,f) are adapted with permission from ref . Copyright 2016 Association for Computing Machinery. Panel (b) is adapted from ref . Copyright 2021 Nature Publishing Group with Creative Commons Attribution 4.0 license http://creativecommons.org/licenses/by/4.0/. Panel (d) is adapted with permission from ref . Copyright 2018 Wiley VCH.

| 2 |

which corresponds to a MVM or dot product. The output current i can be sensed by a transimpedance amplifier (TIA) which converts the current into a voltage, which can, in turn, be converted into a digital word with an analog-to-digital converter (ADC).

Figure b shows the current–voltage characteristic for several RRAM devices in the read (low-voltage) regime, with multiple stable conductance levels having ohmic, i.e. linear, conduction, which is essential for performing dot products without errors due to nonlinear parasitic effects. To avoid nonlinearity issues due to the non-ohmic behavior of RRAM devices for some states, a 1T1R structure can be used with the TE voltage fixed to a convenient voltage V read and the gate voltage equal to the logic binary input, while the summation current is accumulated along the column. After conversion of the current to a digital word, further summation can be achieved with the help of a shift-and-add operation in the digital domain. Such a binary-input approach also comes with the advantage of effectively eliminating the need for a digital-to-analog converter (DAC) at the CBA input rows, with benefits of reduced area and improved energy efficiency.

Figure c shows the physical implementation of multiple CBAs with a maximum size of N = 64 rows and M = 128 columns of 1T1R cells. The chip in the figure was used to demonstrate an on-chip MVM and to assess the impact of the array size on the accuracy of the dot-product operation. Figure d shows a correlation plot of the measured analog output as a function of the expected software output. A linear correction was applied to address the column-wise error due to IR-drop, and the results show a good agreement between the software and experiments. However, as the array size increases, the impact of the parasitic wire resistance of the TE and BE becomes increasingly relevant, thus limiting the effectiveness of linear correction. In fact, the array wire resistance causes a current resistance (IR) drop along the rows and columns. Considering the same current I flowing in each device, the voltage drop ΔV IR across the wire can be estimated by

| 3 |

where r is the cell-to-cell wire resistance. Considering, for instance, r = 1 Ω, I = 10 μA and N = 128, we obtain an estimate for the total IR drop along the line of ΔV IR ≈ 8 mV, which can contribute significantly to the dot-product error. By performing a dot product on arrays of different sizes and comparing it with the error obtained by performing a digital MVM with reduced precision, such as fixed-point INT8 or INT4, it is possible to correlate the array size with a given bit precision, as shown in Figure e. If only one value is programmed per column, the computational accuracy equals the RRAM accuracy (worst case); however, 7-bit computational precision can be reached for dense matrices of 6-bit RRAMs, given that noise is assumed uncorrelated among multiple devices. Computational accuracy saturates to 8-bit even for a large number of bits stored in the RRAM, due to the IR drop becoming the dominant factor compared to device noise. By properly modeling the IR drop, it is possible to introduce compensation techniques at both circuit level , and system level. Large arrays are desirable to maximize the equivalent throughput, defined as the number of operations (two in the case of multiply accumulate) performed in a unit of time. Figure f shows the throughput as a function of matrix size N for a RRAM-based DPE and a digital ASIC, demonstrating that DPE can reach a higher computing speed than a digital counterpart for N ≈ 128, and be 10 times faster for N > 512.

4.2. RRAM CBA Circuits with Analog Feedback for Inverse MVM

By modifying the analog peripherals of the CBA, it is possible to perform inverse linear algebra operations, such as computing the solution of linear systems, extracting the eigenvectors of a matrix, performing linear regression , and others. Figure a shows the circuit schematic of the CBA and its analog peripherals required for computing the solution of a linear system. The columns and rows of the CBA are connected to the input and output terminals of operational amplifiers, respectively, to provide a feedback loop. The conductance G ij values of the RRAM devices in the CBA are programmed with the coefficients of a positive-definite matrix A; then a current vector i is injected at the column terminals, which are kept at virtual ground potential by the feedback loop. The application of the input current pulse stimulates an analog output voltage vector v:

18.

Inverse MVM in CBAs with a feedback loop connection. (a) Circuit schematic of a closed-loop CBA for the solution of linear systems. The inset shows a programmed 3 × 3 matrix. (b) The conceptual schematic highlighting the difference with conventional circuits for the direct operation of CBAs. (c) Experimental and analytical output voltages for the solution of a linear system. (d) Output voltage as a function of the input parameters β with i in = βi ref . (e) Correlation plot of analytical and measured inverse matrix computation. Reproduced with permission from ref . Copyright 2019 National Academy of Sciences.

| 4 |

which is the same as eq but referred to the output voltage. Equation provides the solution of the linear system with matrix A = G and the known vector i.

Figure b shows the concept of this circuit and its relationship with the open-loop MVM of Section . While the MVM circuit is similar to the simple case of a voltage applied to a conductance, resulting in a scalar product I = GV, the inverse MVM case resembles the TIA circuit, where the applied current is converted to a voltage V = −G –1 I thanks to the concept of feedback loop enabled by the operational amplifiers.

Figure c shows an experimental demonstration of the concept, with the measured output of a linear system with 3 equations closely matching the analytical result. The result is confirmed for various inputs in Figure d, where parameter β provides the relative amplitude of the applied input current. The same circuit can be used for computing the inverse of a matrix by applying the vectors of an identity matrix as input and collecting the various obtained output voltage vectors to form the inverse matrix. Figure e shows the correlation plot comparing the elements of the inverse matrix computed with the analytical formula to those obtained from the experimental output voltage, indicating a good accuracy of the inverse matrix circuit. This circuit with the CBA in analog feedback can be used for various applications beyond the solutions of linear systems, as further illustrated in Section .

4.3. Content Addressable Memories

The content addressable memory (CAM) is a fundamental memory structure that operates in a complementary way with respect to the random access memory (RAM). As shown in Figure a, reading a RAM circuit consists of selecting an address as input and obtaining a data bit stored at the address location as output. On the other hand, reading a CAM requires that a content is presented as input, while the output yields the memory address where that specific content is stored. Figure b shows a ternary CAM (TCAM), where each cell verifies whether the input is equal to the stored value. A wildcard (‘X’, or don’t care) is added to match both 0s and 1s as input. If all of the TCAM cells in a row are matched, a match value is returned on the match line (ML), which can be then converted into the specific address.

19.

CAM architectures. (a) Conceptual circuit schematic of the RAM. (b) Conceptual circuit schematic of TCAM. (c) TCAM cell with 4T2M structure. (d) TCAM cell with 2T2M structure. (e) TCAM cell with 3T1M. (f,g,h) Search latency for 4T1M, 2T2M, and 3T1M cell structures, respectively. (i,j,k) Search margin for 4T1M, 2T2M and 3T1M cell structures, respectively. The RRAM resistive window ΔR was changed to assess its impact on the circuit performance. Adapted with permission from ref . Copyright 2019 IEEE.

TCAMs are ubiquitous in several applications, particularly in networking. , However, the broader use of TCAMs for computing has been hindered by the large area and power consumption of SRAM-based TCAMs, which require 16 transistors for storing and searching ternary values. From this viewpoint, RRAM-based TCAM implementation is attractive, as it enables a smaller cell area and hence a higher bit density. Various designs of RRAM-based TCAM cells have been proposed, including 4-transistors/2-memories (4T2M, Figure c), 2-transistors/2-memories (2T2M, Figure d), and 3-transistors/1-memory (3T1M, Figure e). In general, a TCAM circuit is operated by connecting all the input search line (SL) terminals along the column direction and all the output ML terminals along the row direction for creating the array in Figure b. For the TCAM search operation, the ML is initially precharged to a convenient potential, and then the input data are applied to the SL. If the input data match the value stored in the RRAM devices, the ML remains at the precharged potential; otherwise, a pull-down transistor is activated to discharge the ML. A sense amplifier connected to the ML is used for sensing and latching the output after a given search time.

In the case of the 4T2M TCAM in Figure d, two RRAM devices are programmed to represent a 0, 1, or X in [HRS,LRS], [LRS,HRS] or [LRS,LRS], respectively. As an example of the TCAM operation, when a ‘1’ is applied to the SL (and a ‘0’ on SLn), if a ‘0’ is stored, then a voltage divider between the two RRAMs activates the pull-down transistor, thus causing the discharge of the ML. A similar behavior can be derived for other cases such as search ‘1’ store ‘1’, search ‘0’ store ‘0’, and search ‘0’ store ‘1’. In the case of an ‘X’ stored, the voltage divider node is the mean voltage which is tuned to be below the pull-down threshold. In the case of the 2T2M cell, the encoding of the cell state into the RRAM device is the same; however, the input transistors act directly as pull-down transistors, thus avoiding the need for an additional transistor.

The 3T1M cell utilizes three RRAM states, namely, LRS, medium resistance state (MRS), and HRS to store 1, X, and 0, respectively. When a ‘1’ is searched, both data lines (DL) are kept at the ground, with only SLp at 1. If the cell stores a 0, most of the voltage drop is on the RRAM, thus activating the pull-down node and vice versa in the case where a 1 is stored. The conductance of the MRS is tuned such that the voltage drop on the RRAM device is not sufficient to activate the pull-down transistor. Note that TCAM operations can also be emulated by conventional CBAs, although this requires significant additional peripheral overhead.

Figure f,g,h shows the worst-case search latency as a function of array word length for 4T2M, 2T2M, and 3T1M simulated with the same technology node, i.e. CMOS 180 nm. The worst case is defined as a 1-bit mismatch since only one pull-down transistor is activated to remove the charge from the ML, thus resulting in a relatively long search time. Search time scales linearly with the word length since a drain-source parasitic capacitance on the ML is added for each cell on a row, increasing the overall ML capacitance. Figure i,j,k shows the read margin as a function of the word length for 4T2M, 2T2M, and 3T1M, respectively, under a similar simulation on the CMOS 180 nm technology node. The readout margin is defined as the difference between the voltage on the ML during the worst-case match (all ‘X’s) and the worst-case mismatch (1-bit). Longer columns have larger leakage, thus significantly reducing the read margin, due to the nonactivated pull-down transistors providing a parasitic contribution to the discharge of the ML.

While 2T2M has the most compact structure, it requires a large HRS (e.g. >1 MΩ) to minimize the parasitic discharge leakage and, hence, maximize the array size. On the other hand, the 3T1M architecture provides the fastest response, which comes at the cost of a relatively large static power consumption during search operation. The 4T2M cell design displays a larger area; however, it can provide a suitable trade-off between conductance window requirements, latency, and power consumption. The proposed RRAM-based TCAM cells have been demonstrated in various compute applications, including regular expression matching, genomics, and hyperdimensional computing. ,

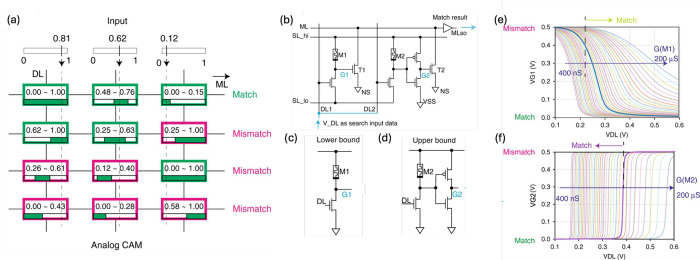

By leveraging the analog operation of emerging NVMs, an analog CAM (Figure a) was recently proposed, where TCAM columns are merged in ranges, to return a match in a cell if the analog input is within the stored range. For this purpose, two RRAM devices can be used for the lower and upper bound, as shown in the circuit of Figure b with a 6-transistors/2-memories (6T2M) design. If the input voltage applied to the DL is high enough, T1 is turned on, effectively pulling down the gate of T2, which is switched off and thus plays no role in affecting the ML, which returns a match. Figure c,d shows the lower and upper bound circuits. The lower bound circuit operation is shown in Figure e with the voltage on the gate of the lower bound pull-down transistor as a function of the input voltage on the data line (DL) for multiple programmed conductances in M1. By increasing the M1 conductance, it is possible to move the lower bound to a higher value. Complementary to the lower bound, the upper bound is realized by adding an inverter between the voltage divider on M2 and the pull-down transistor. Figure f shows the voltage on the upper bound pull-down gate G2 and a function of the input voltages for multiple conductance values programmed on M2. Similarly, by increasing the conductance on M2, it is possible to extend the upper bound. Analog CAM has been demonstrated for the acceleration of multiple compute workloads, including tree-based machine learning, one-shot learning and query processing.

20.

Analog CAM concept. (a) Conceptual circuit schematic. (b) Circuit design of the 6T2M TCAM cell. (c) Lower bound subcell controlling the G1 pull-down transistor. (d) Upper bound subcell controlling the pull-down transistor G2. (e) Lower and (f) upper bound pull-down voltages as a function of input voltage V DL for multiple programmed conductance. Adapted from ref . Copyright 2020 Nature Publishing Group with Creative Commons Attribution 4.0 license http://creativecommons.org/licenses/by/4.0/.

5. Computing Applications

IMC is extremely promising for the execution of data processing tasks directly in the memory, thus reducing the energy consumption and taking advantage of the extreme parallelism and analog operation of the memory array circuit. Table summarizes the most relevant computing applications that have been explored for IMC. The mainstream applications attracting widespread interest are inference and training of AI models, such as DNNs and large language models (LLMs). Other computing applications include solving linear equations, linear regression problems, principal component analysis (PCA), decision trees, combinatorial optimization of complexes, multiple-variable problems, stochastic computing and spiking neural networks in neuromorphic computing. Each of these computing applications generally relies on a different IMC circuit primitive. For instance, DNN inference generally requires MVM to support the extensive weighted summation that takes place in each fully connected or convolutional layer. On the other hand, linear regression requires IMVM to support the pseudoinverse matrix calculation. Most importantly, each circuit/application combination may require a different set of properties of the RRAM device. The device requirements that should be fulfilled for each specific computing application are summarized in Table and include multilevel operation, data retention, endurance, linear conductance update, linear conduction, and short-term memory.

2. Summary of the Most Relevant Computing Applications and Corresponding RRAM Requirements.

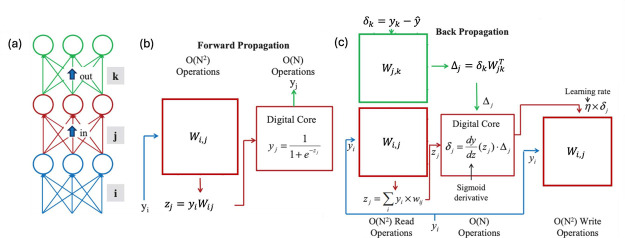

5.1. Neural Network Inference

The DPE circuit for MVM can be used for accelerating neural network inference. In fact, each layer of a feedforward DNN performs the dot product of the i-th layer neurons with their corresponding synaptic weights, to be accumulated at the i+1-th layer neurons as input for the nonlinear activation function. Thus, each layer of a DNN can be mapped to one or more CBAs, whose outputs are then accumulated and sent to an activation function unit. Recently, multiple fully integrated DNN accelerators have been realized using RRAM-based CBAs. − Such accelerators generally include the CBA circuit, all of the sensing units required for operating it, and a bus or network-on-chip to control the data flow.

A notable example is NeuRRAM, consisting of 48 cores, each one of them able to realize multiplication of vectors with 256 × 256 stored matrices. Figure a illustrates the NeuRRAM circuit schematic of the core architecture. Each core consists of 16 × 16 corelets that share common bit-lines and word-lines along the rows and source-lines along the columns. Each corelet (Figure b) comprises a 16 × 16 RRAM CBA and one neuron circuit. CBAs in NeuRRAM can be connected in multiple configurations, including forward, where inputs are applied on the rows, for typical MVM, and backward, where input are applied on the column to perform the transposed MVM, which can be used for computing gradients during training. Compared to conventional DPE circuits performing MVM with current mode sensing (Figure c), NeuRRAM performs voltage mode sensing (Figure d) to reduce energy consumption. Accumulating the current of multiple devices can result in a large power consumption, thus requiring large TIAs. Also, as shown in Figure e, different DNN models, having different weight distributions, would result in strongly different current distributions to sense. By performing voltage mode sensing, the output is normalized by the total equivalent conductance seen by each neuron, resulting in a more uniform current distribution. Thanks to voltage-mode sensing, the energy-delay product in the NeuRRAM circuit outperforms other accelerators − despite being designed in a relatively old technology, as shown in Figure f. To improve the classification accuracy during inference, NeuRRAM employs several hardware-software codesign techniques, such as noise-aware training and on-chip fine-tuning.

21.

Illustration of DNN inference in NeuRRAM based on 1T1R arrays of RRAM devices. (a) Overall architecture and (b) individual corelet of the NeuRRAM chip. Each corelet of size 16 × 16 is connected to a neuron. 16 × 16 corelets are organized in a core, which has a resulting size of 256 × 256. (c) Illustration of current mode sensing and (d) voltage mode sensing. (e) Comparison of the distribution of output for current- and voltage-mode sensing. (f) Energy delay product as a function of bit-precision for multiple taped-out RRAM-based accelerators. (g) Distribution of programmed conductance during programming and (h) after 30 min for multiple programmed levels. (i) Classification accuracy for the data set of the Canadian Institute for Advanced Research with 10 classes (CIFAR-10) of NeuRRAM with various operation modes. (j) Layer-wise accuracy comparison with and without fine-tuning. Adapted from ref . Copyright 2022 Nature Publishing Group with Creative Commons Attribution 4.0 license http://creativecommons.org/licenses/by/4.0/.

Figure g shows the distribution of conductances programmed in 8 equally spaced levels, corresponding to 3 bits. The programming resolution is limited by several factors, including IR drops, capacitive coupling, and limited ADC range. Three bits are not enough to reach good classification accuracy, with most networks requiring 4 or even 8 bits for quantization for good enough results. Moreover, as shown in Figure h, after 30 min it is possible to observe a distribution broadening. To solve this issue, instead of quantizing a pretrained model or training a quantized network, which is equivalent to injecting uniformly distributed noise into weights, networks are trained with floating point precision with Gaussian distributed noise extracted from RRAM characterization. Figure i shows the simulated and experimentally verified result of such operation while performing inference on the CIFAR-10 data set, improving accuracy from 25.35% to 83.67%. Moreover, a fine-tuning training with chip-in-the-loop is performed to further increase the accuracy. Weights are programmed and finely adjusted in each layer while the network is undergoing training, to avoid multiple reprogramming while keeping hardware-awareness during the training operation. First, the model is trained completely offline. Then the first layer is programmed into the chip. Afterward, inference with the training set is performed by using experimental activations coming from the on-chip first layer and offline software activation for the other layers. The weights of all of the offline layers are adjusted to minimize the loss. The operation is repeated by programming the second layer on the chip, performing fine-tuning of all others, and so on until all of the model is programmed. The overall effect of such operation and noise injection is shown at the right-most bar in Figure i, resulting in an accuracy of 85.66%, with the layer-wise comparison between training with and without fine-tuning shown in Figure j.

A key advantage of using RRAM compared to traditional CMOS memories, such as SRAMs, for IMC is the multilevel programming capability, which results in improved computational efficiency per unit area and reduced complexity of the peripheral circuits. Multiple conductance states can be achieved by properly modulating the pulse parameters during the set operation or during a reset operation. Figure a shows the cumulative distribution function (CDF) of the conductance obtained after a set operation in a 1T1R RRAM device by changing the gate voltage of the select transistor at a fixed TE voltage, namely using an incremental gate pulse programming algorithm. Similarly, Figure b shows the CDF of conductance obtained after the reset operation, where the stop voltage was gradually increased during reset at a fixed gate voltage, which is referred to as the incremental reset pulse programming algorithm. These results demonstrate the ability to tune the average analog conductance via set or reset operations; however, the conductance variations are relatively large, which suggests that adoption of a closed-loop program-verify (PV) algorithm is advantageous.

22.