Abstract

The study aims to explore how moral judgment influences negative emotional responses and comment length in reactions to “New Yellow Journalism” on Weibo, and how Linguistic Style Matching (LSM) moderates these relationships. We extracted over 8,000 comments on a recent incident involving fabricated viral news and tested our hypotheses with deep-learning sentiment classification, dictionary-based text analysis, and moderated mediation analysis. The results demonstrate that negative emotional responses mediate the relationship between moral judgment and comment length. Additionally, LSM moderates both the direct effect of moral judgment on comment length and the indirect effect through negative emotional responses. These findings highlight the psychological mechanisms underlying online public opinion dynamics and offer insights for managing online interactions in the digital media era.

Keywords: Moral judgment, Language, Comment length, Emotional reactions, Language style matching

Subject terms: Risk factors, Emotion, Social behaviour

Introduction

In the current era marked by a deep integration of digitalization and globalization, social media has become the primary arena for public information acquisition and interaction1. Scholars have recently coined the term “new yellow journalism” to describe distorted news characterized by algorithm-driven dissemination, sensational headlines, and emotionally charged narratives. This phenomenon can be viewed as a digital-age extension of 19th-century yellow journalism—marked by sensationalism, moral outrage, and the viral spread of low-veracity content—enabled by modern social media platforms and algorithmic amplification2,3.

Algorithmic amplification further fuels the virality of such emotionally provocative content. Recent algorithm audits reveal that recommendation systems based on engagement metrics significantly increase the visibility of posts that evoke high-arousal emotions such as “anger” and provoke intergroup conflict, thereby exacerbating information polarization4,5.

Several incidents within the Chinese context illustrate the risks of this trend. For example, the fabricated influencer story “Qin Lang Lost His Winter Homework in Paris” went viral across multiple platforms from February to April 2024. Official authorities labeled it as an online rumor and criticized its nature as “new yellow journalism,” ultimately resulting in a platform-wide ban of the related accounts. Similarly, during the high-rise apartment fire in Ürümqi in 2022, unverified death toll rumors spread rapidly on Weibo, triggering large-scale emotional polarization. These cases demonstrate that fake news, by harnessing emotional mobilization, not only distorts public understanding of events but also threatens social consensus and amplifies negative emotions6–8.

To clarify how “new yellow journalism” shapes online discourse, we examine four interconnected variables—moral judgment, linguistic-style matching (LSM), negative emotion, and comment length—using real-time Weibo data, Bidirectional Encoder Representations from Transformers (BERT)9 and the Linguistic Inquiry and Word Count tool (LIWC)10 for emotion coding, and regression analysis. Building on dual-process models of moral cognition, the present study adopts two complementary frameworks. Information-Processing Theory11 proposes that high-arousal affect triggers systematic, message-focused elaboration. Emotion-Regulation Theory12 further holds that individuals strategically modify either the experience or the outward display of emotion; in social-media contexts, producing lengthy text can itself function as an expressive-regulation tactic. Together, these frameworks predict that morally triggered negative affect will (a) deepen cognitive processing and (b) lengthen written responses. This multidimensional analysis aims to uncover the emotional dynamics of online public opinion by examining how netizens—internet users who actively engage in online platforms—respond emotionally to “new yellow journalism.” The findings provide empirical insights for media governance and moral education.

Relationship between yellow journalism and fake news

Yellow journalism—sensational, exaggerated, emotion-laden reporting aimed at attracting attention rather than conveying accuracy—emerged in the late-nineteenth-century press13. Contemporary fake news, defined as verifiably false content packaged in a news format14, inherits many of yellow journalism’s tactics: moral framing, outrage cues, and vivid headlines. Large-scale network studies show that stories using moral–emotional language are three-to-five times more likely to be shared than neutral factual reports15, while provocative wording and arousing emotions further boost virality16. We therefore adopt the term “new yellow journalism” to denote fake news that weaponizes classic yellow techniques in digital spaces. Situating our study within this lineage clarifies why we focus on moral judgment, negative emotion, and linguistic style as core mechanisms of online misinformation diffusion.

Moral judgment influences both emotional responses and comment lengths

From the perspective of classical philosophy, moral judgment is closely tied to moral reasoning. Thinkers such as Plato and Aristotle argued that moral judgment rests on rational analysis and the cultivation of virtue17. Contemporary scholarship has proposed several psychological accounts. Greene et al.18 advance a dual-process model in which fast, emotion-based intuitions and slower, deliberative reasoning jointly shape moral judgment. Haidt et al.19 further specify five universal moral foundations—care/harm, fairness/cheating, loyalty/betrayal, authority/subversion, sanctity/degradation—each supported by distinct emotional and cognitive mechanisms that vary across cultures.

Moral judgments typically elicit discrete emotions such as anger, disgust, or compassion, which in turn guide expressive behavior. Experiments show that observing severe moral violations evokes strong affect and motivates outspoken condemnation20. Two complementary frameworks clarify how these emotions steer language production. Feelings-as-Information Theory (also known as Affect-as-Information Theory) holds that people treat their momentary affect as a heuristic cue, which shapes how much detail they convey when evaluating a target21. The Appraisal Tendency Framework adds that each discrete emotion carries characteristic appraisal patterns (e.g., certainty, control) that systematically bias cognition and choice22. Integrating these views, we argue that morally induced, high-arousal emotions both signal the importance of an issue and activate appraisal tendencies that motivate deeper cognitive elaboration. In line with Information Processing Theory11, this deeper elaboration is likely to surface as longer, more detailed comments, especially on social-media platforms where users can instantly externalize moral stances. Put differently, emotions serve simultaneously as informational cues and motivational forces that drive individuals to elaborate their moral viewpoints, a phenomenon particularly salient online.

On social media, the rapid flow of information accelerates both the formation and expression of moral judgments. The immediacy of digital interaction can prompt snap moral evaluations, amplifying emotion-driven responses and, consequently, comment length8. Platform dynamics—group identity, algorithmic curation, and peer reinforcement—further shape moral expression. Within “echo-chamber” environments, users often craft extended comments to bolster in-group moral positions while rejecting dissenting views23. Such reinforcement not only lengthens individual comments but also fuels broader cycles of public affect24. Thus, in digital settings, the interplay between emotion and moral judgment crucially influences public discourse on contentious issues. Based on this reasoning we propose:

Hypothesis 1

There is a positive correlation between netizens’ moral judgment and the length of their comments on “new yellow journalism,” such that netizens who express stronger moral judgments tend to post longer comments.

The mediating role of negative emotions

Moral judgment refers to the process by which people assess “right” and “wrong” in light of prevailing social norms25. When individuals encounter sensational content that violates moral standards (so-called “new yellow journalism”), they typically experience anger, disgust, or frustration26. These emotions are not merely private feelings; they also shape subsequent expressive behavior, including the length and tone of online comments. Prior work indicates that moral judgments are usually propelled by swift affective intuitions rather than by dispassionate reasoning6; immediate affect can spur people to publicly denounce misconduct27, and the anonymity and synchronicity of computer-mediated interaction amplify such emotional expression28.

Both the Affect-as-Information model and the Emotion-as-Information framework posit that negative affect elicits systematic, analytic processing, prompting individuals to scrutinize information more closely29,30. Research on expressive writing likewise shows that high-arousal negative emotions are regulated through lengthier and more detailed text production31, while the online disinhibition effect demonstrates that anger or disgust readily translates into sharper and more verbose language32. Suppressing emotional expression heightens physiological arousal and undermines interpersonal rapport33, implying that direct and extensive written output has an emotion-regulatory function. Although direct evidence for a “valence → comment length” link is still scarce, these indirect findings suggest that negative affect may mediate the relationship between moral judgment and comment length—a mechanism that awaits empirical validation.

Negativity-bias research further holds that, compared with positive information, moral violations are more likely to evoke high-arousal emotions such as anger and disgust, thereby monopolizing cognitive resources34,35. In computer-mediated settings, the online disinhibition effect contends that anonymity and the absence of social cues weaken social constraints, making it easier for negative emotions to manifest as incisive, even derogatory, language32. Butler et al.’s33 experiments show that suppressing emotional expression during social interaction intensifies physiological arousal and harms relational harmony, suggesting that direct, substantial written expression, typically lengthier and saturated with affect, serves an emotion-regulatory role. From a cognitive standpoint, Bless et al.29 found that negative mood induces systematic information processing, leading individuals to examine content more carefully and generate richer cognitive responses. Synthesizing these cognitive and social perspectives, negative emotion emerges as the key mechanism linking moral judgment to commenting behavior, which explains why morally triggered negative emotions in social-media contexts are likely to foster extended, emotionally saturated commentary. Based on these theoretical and empirical insights, this study explores the mediating role of negative emotions between moral judgment and comment length in netizens’ reactions to new yellow journalism. Specifically, the following hypotheses are proposed:

Hypothesis 2

Netizens’ emotional responses to “new yellow journalism” are predominantly negative, reflecting a widespread public aversion or dissatisfaction toward such content.

Hypothesis 3

When expressing negative emotions, netizens tend to use more emotionally charged and derogatory language, resulting in a more aggressive linguistic style.

Hypothesis 4

In comments reflecting positive emotional responses, netizens’ language tends to be more neutral or affirmative, favoring milder and more rational vocabulary.

Hypothesis 5

Negative emotional responses mediate the relationship between moral judgment and comment length.

The moderating role of language style matching

Language Style Matching (LSM) refers to the phenomenon where individuals unconsciously adjust their language style to synchronize with their conversation partner during communication, including vocabulary choice, sentence structure, and language rhythm36. In online communication environments, where textual communication dominates and non-verbal cues are absent, LSM becomes a crucial factor in understanding others and expressing emotions.

Studies have shown that when conversation partners align their language styles, they typically communicate ideas and emotions more effectively, with this alignment helping to reduce misunderstandings and conflict, thereby enhancing the effectiveness of communication37. In online settings, users often mimic the language style of the original post or other comments, especially when responding to notable or emotional content38. This matching of language style may intensify negative emotional responses, leading discussions to become more polarized.

In the relationship between moral judgment and comment length, LSM may play an important moderating role. On the one hand, when LSM levels are high, the impact of moral judgment on comment length may become stronger, possibly because high LSM increases individuals’ motivation to communicate, making them more willing to spend time and effort elaborating their viewpoints and thereby lengthening their comments36. On the other hand, LSM may amplify the negative emotional responses triggered by moral judgment, prompting individuals to process information more deeply and stimulating a stronger desire to express themselves.

Specifically, LSM may moderate the influence of moral judgment on negative emotional responses. When an individual’s language style closely matches others, they may be more susceptible to others’ emotions, thereby enhancing their own negative emotional responses39. This intensified negative emotional response further encourages individuals to write longer comments to fully express their inner emotions and stance.

Based on the above analysis, this study proposes the following hypothesis:

Hypothesis 6

Language Style Matching (LSM) moderates the relationship between moral judgment and comment length. Furthermore, moral judgment indirectly influences comment length through negative emotional responses to “new yellow journalism”, with the strength of this indirect effect varying according to LSM levels.

The present study

Currently, research on moral judgment and emotional expression is primarily focused on Western social media platforms, such as Facebook and Reddit. However, due to differences in cultural background and platform characteristics, these findings may have limited applicability in China. As a primary social platform in China, Weibo exhibits unique patterns in user interaction styles, language, and cultural background. Consequently, measuring and quantifying emotional responses and language styles within the Chinese context presents a significant challenge for this study.

Compared with traditional questionnaires, language data from online users offer significant advantages in psychological and social science research. In particular, such data authentically reflect users’ spontaneous expressions on social platforms, thereby reducing social desirability bias10.

Additionally, applying natural language processing (NLP) techniques and machine learning models allows for the extraction of emotional features from unstructured text. Specifically, through the application of the BERT deep learning model, a deeper semantic understanding of text becomes possible, facilitating more accurate identification of emotional responses9. The use of the Linguistic Inquiry and Word Count (LIWC) dictionary enables detailed analysis of emotional and psychological vocabulary within texts, thereby quantifying language style matching.

However, current research has not adequately incorporated the unique aspects of Chinese culture when measuring emotional responses. Significant differences exist in language habits and emotional vocabulary across cultures. This study, therefore, aims to develop an emotion analysis tool suited to the Chinese context to capture Weibo users’ emotional responses accurately. This tool is essential for understanding moral judgment and emotional expression within China’s social media landscape and examining their impact on commenting behavior. This study will provide a broader theoretical and empirical foundation for the application of emotion analysis techniques, enhancing our understanding of the relationship between moral judgment and emotional responses.

Methods

Event summary

The “net celebrity cat-a-cup incident” erupted in February 2024, when a video claimed that a Chinese first-grade pupil’s winter-holiday homework had been found in Paris. The uploader displayed chat records purportedly with the “child’s mother”. However, netizens increasingly questioned the video’s authenticity. Finally, on April 12, public security authorities issued a notice confirming the incident as a fabricated script designed to attract followers, and those involved received administrative penalties.

This study selected this fake news event as the research subject primarily because of its representative nature in the phenomenon of “new yellow journalism” and its profound impact on public moral judgments and emotional responses. The event garnered widespread attention and emotional resonance through fabricated scenarios; however, once the truth was revealed, public sentiment swiftly shifted, resulting in strong moral condemnation. Unlike simple text-based misinformation, this event employed a “scripted” approach to carefully construct scenarios, thereby amplifying its dissemination effect. Furthermore, as one of the most widely used social platforms, Weibo served as a primary channel for the spread of such events. Therefore, an in-depth exploration of moral and emotional responses on Weibo can better illuminate the role and influence of social media in shaping public emotions and moral judgments.

The data collection period for this study was set from April 12 to April 19 for three reasons. First, after the public security authorities announced the truth, public anger and condemnation crystallized into strong moral responses, shifting the event from a mere curiosity to a subject of moral critique. Second, following the announcement, public emotions shifted from curiosity to anger and disappointment, and comments displayed strong moral-judgment characteristics, making them suitable for retrospective analysis. Third, the week following the announcement marked the peak of public emotional fluctuations, so data from this period comprehensively capture the influence of moral judgment on commenting behavior and provide an appropriate sample for the study.

Data collection and processing

To analyze types of emotional responses, this study applies commonly used data mining techniques and sentiment analysis methods in big data research, employing the LIWC dictionary for moral judgment and LSM analysis. Data mining involves the extraction and preprocessing of Weibo data. Sentiment analysis includes manual classification and the application of BERT deep learning technology to classify the dataset by sentiment. Through the LIWC dictionary, the study detects the frequency of moral-related vocabulary in comments, and Python is used to assign a moral judgment score to each comment based on these frequencies. Additionally, LSM is quantified using the LIWC dictionary, examining the frequency of function words and emotion words in comments to analyze the similarity of language styles in positive and negative emotional responses. The specific operational steps are as follows:

Data types extracted

Four data layers were collected: (1) original Weibo posts, (2) reposts/retweets, (3) comment threads, and (4) basic public user information (user ID, follower count). No private data were accessed.

Step 1: Weibo data extraction

This study used a custom-developed wbspider crawler program to gather data from Sina Weibo (URL: http://weibo.com). Data were collected along a timeline, and multiple developer certificates (developer IDs) were used to scrape real-time Weibo content continuously. To ensure data relevance and accuracy, the study employed a set of keywords associated with new yellow journalism, focusing on discussions of the “net celebrity cat-a-cup incident”; this filtered out irrelevant Weibo posts. Because Weibo users’ interest in the incident peaked in the week following the Hangzhou police announcement on April 12, 2024, which confirmed that “the elementary school homework found in Paris was fabricated by two individuals, leading to administrative penalties,” the crawling window was set from April 12 to April 19, 2024. Targeted scraping within this window yielded a final set of 8,717 valid Weibo data entries.
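The keyword and time-window filtering described above can be sketched as follows. This is a minimal illustration, not the wbspider program itself. The record fields (`text`, `created_at`) and the keyword list are hypothetical placeholders, because the study does not report its actual keyword set.

```python
from datetime import datetime

# Hypothetical keywords ("Paris", "winter homework", "staged"); the study's
# actual keyword set is not reported.
KEYWORDS = ["巴黎", "寒假作业", "摆拍"]

# Crawl window used in the study: April 12 to April 19, 2024, inclusive.
WINDOW_START = datetime(2024, 4, 12)
WINDOW_END = datetime(2024, 4, 20)  # exclusive bound, so all of April 19 is kept


def is_relevant(record, keywords=KEYWORDS, start=WINDOW_START, end=WINDOW_END):
    """Keep a post only if it falls in the window and mentions a keyword.

    `record` is assumed to be a dict with hypothetical fields
    'text' (str) and 'created_at' (datetime).
    """
    in_window = start <= record["created_at"] < end
    mentions_keyword = any(k in record["text"] for k in keywords)
    return in_window and mentions_keyword


def filter_posts(records):
    """Return the crawled records that pass the relevance filter, in order."""
    return [r for r in records if is_relevant(r)]
```

Using a half-open interval with an exclusive end date avoids accidentally dropping posts published late on April 19.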

Step 2: data preprocessing

Before data analysis, the Chinese content from Sina Weibo must be segmented into words. This study employed the Jieba Chinese word segmentation tool (Jieba, available at: https://github.com/fxsjy/jieba), an efficient Python text-processing tool that integrates readily into the data analysis workflow. During segmentation, two kinds of frequency anomalies were observed: very low-frequency words, possibly caused by typos, and very high-frequency words, mostly auxiliary words and prepositions. Both were removed to improve the accuracy of the analysis. The data were then cleaned and standardized with the Pandas library in preparation for further analysis.
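The frequency-based cleaning step can be sketched as below. The thresholds `min_count` and `max_count` are hypothetical, because the study does not report its cut-offs. In practice the token lists would come from a segmenter such as `jieba.lcut(text)`. The segmenter is left out here so that the sketch runs with the standard library alone.

```python
from collections import Counter


def filter_by_frequency(token_lists, min_count=2, max_count=1000):
    """Drop tokens that are too rare (likely typos) or too frequent
    (likely auxiliary words and prepositions).

    token_lists: one list of tokens per comment, e.g. from jieba.lcut(comment).
    min_count / max_count: hypothetical corpus-wide frequency bounds.
    """
    # Count every token across the whole corpus.
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    keep = {tok for tok, c in counts.items() if min_count <= c <= max_count}
    # Preserve each comment's token order, removing only filtered tokens.
    return [[tok for tok in tokens if tok in keep] for tokens in token_lists]
```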

Step 3: sentiment classification

(1) Manual Annotation.

Following standard big data research methodology, this study used random sampling to extract approximately 10% of the total dataset (871 Weibo posts) as a training dataset. This training dataset was manually labeled to establish benchmark sentiment categories (positive, negative, and neutral) for training and parameterizing the model. The remaining 90% of the data (7,846 Weibo posts) constituted the test dataset, which was classified automatically with the BERT deep learning model. Sentiment classification therefore involved two steps: manual sentiment labeling to establish clear benchmark categories, followed by automated classification with BERT. BERT, a Transformer-based language model, was chosen for its capacity to interpret complex, context-dependent language patterns, allowing effective sentiment analysis across the test dataset.
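The 10%/90% random split can be illustrated with the following sketch. The seed and the flooring rule are assumptions. Flooring 8,717 × 0.1 = 871.7 gives 871, which matches the reported sample size. The function name is hypothetical.

```python
import random


def split_for_annotation(items, share=0.1, seed=42):
    """Randomly split items into a manually annotated subset and the rest.

    share=0.1 mirrors the roughly 10% sample described in the text; the seed
    is arbitrary and only makes the split reproducible.
    """
    rng = random.Random(seed)
    n_train = int(len(items) * share)  # floor, e.g. 8,717 -> 871
    indices = list(range(len(items)))
    rng.shuffle(indices)
    train_idx = set(indices[:n_train])
    # Keep the original order within each subset.
    train = [x for i, x in enumerate(items) if i in train_idx]
    rest = [x for i, x in enumerate(items) if i not in train_idx]
    return train, rest
```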

To ensure accuracy in the sentiment analysis of Weibo data, this study implemented a rigorous manual sentiment labeling process. A professional annotation team with backgrounds in psychology, computer science, and sentiment analysis was assembled to provide a multi-faceted approach to accurate sentiment identification. The team followed a detailed annotation guide defining the forms and specific vocabulary of positive, negative, and neutral sentiments. An initial simulated annotation training round enabled team members to familiarize themselves with the scoring system, ensuring consistency and accuracy throughout the process.

Following this preparatory phase, five annotators conducted the formal annotation. All annotators underwent standardized training and performed multiple rounds of training and trial annotations, strictly adhering to the unified annotation guidelines. To evaluate inter-annotator agreement, Fleiss’ Kappa was employed, yielding a κ value of 0.82—a result indicating a high level of reliability and consistency among the annotators.
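Fleiss’ kappa compares the observed agreement within each post with the agreement expected from the overall category proportions. The following is a minimal sketch of the standard formula. The input layout is an assumption: one row per post, one column per sentiment category, and each cell holding the number of the five annotators who chose that category.

```python
def fleiss_kappa(table):
    """Fleiss' kappa for a subjects x categories count table.

    table[i][j] = number of annotators who assigned subject i to category j.
    Every row must sum to the same number of annotators n (e.g. n = 5).
    """
    N = len(table)
    n = sum(table[0])
    if any(sum(row) != n for row in table):
        raise ValueError("every subject must be rated by the same number of annotators")
    k = len(table[0])
    # Overall proportion of all assignments that fall in each category.
    p = [sum(row[j] for row in table) / (N * n) for j in range(k)]
    # Observed agreement within each subject.
    P_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in table]
    P_bar = sum(P_i) / N
    # Agreement expected by chance.
    P_e = sum(pj * pj for pj in p)
    return (P_bar - P_e) / (1 - P_e)
```

For example, `fleiss_kappa([[5, 0, 0], [0, 5, 0], [0, 0, 5]])` (three posts, unanimous but different labels) gives 1.0.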

In parallel, the accuracy of automated annotations was validated by comparing automated annotation results with manually labeled samples. Accuracy, Recall, and F1-Score were used to assess performance, revealing an Accuracy of 88.5%, a Recall of 85.2%, and an F1-Score of 86.8%. These findings demonstrate that the automated annotation model performs stably and reliably for this task, effectively supporting human annotation in sentiment analysis of Weibo data.
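The validation metrics can be computed as in the sketch below. It reports accuracy together with one-vs-rest precision, recall and F1, plus an unweighted macro average. How the study aggregated over the three classes is not stated, so the macro average is an assumption. F1 is the harmonic mean of precision and recall, so precision is reported alongside accuracy. For reference, 2 × 0.885 × 0.852 / (0.885 + 0.852) ≈ 0.868.

```python
def classification_metrics(y_true, y_pred, positive_label):
    """Accuracy plus one-vs-rest precision, recall and F1 for one class."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    tp = sum(t == positive_label and p == positive_label for t, p in pairs)
    fp = sum(t != positive_label and p == positive_label for t, p in pairs)
    fn = sum(t == positive_label and p != positive_label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def macro_average(y_true, y_pred, labels=("positive", "negative", "neutral")):
    """Unweighted mean of per-class precision, recall and F1 (an assumed aggregation)."""
    per_class = [classification_metrics(y_true, y_pred, lab) for lab in labels]
    return {m: sum(d[m] for d in per_class) / len(labels)
            for m in ("precision", "recall", "f1")}
```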

Sentiment categories were defined as follows:

Positive sentiment includes words indicating joy, praise, anticipation, and satisfaction.

Negative sentiment comprises words conveying worry, criticism, anger, and dislike.

Neutral sentiment primarily involves factual statements or neutral descriptions.

Each sentiment category was quantified by the intensity of the expressed sentiment. Each annotator independently scored every Weibo post for sentiment intensity. The average score was then calculated and linearly scaled to the range 0–1, where 0 indicates no apparent sentiment and 1 represents maximum sentiment intensity. Specifically, sentiment scores were normalized through the formula:

$$S_{\text{norm}} = \frac{S - S_{\min}}{S_{\max} - S_{\min}}$$

where $S$ is the averaged raw score of a post, the minimum score $S_{\min}$ is typically set to the lowest annotation score, and the maximum score $S_{\max}$ to the highest.
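A minimal sketch of the two-stage scoring follows. Annotator scores are averaged per post, and the averages are rescaled with the min-max formula. The function names are hypothetical. By default the bounds are the lowest and highest observed scores, but fixed scale bounds can be passed instead.

```python
def average_scores(annotations):
    """Mean intensity score per post across independent annotators.

    annotations: list of per-post lists, one raw score per annotator.
    """
    return [sum(scores) / len(scores) for scores in annotations]


def min_max_normalize(scores, s_min=None, s_max=None):
    """Linearly rescale scores to [0, 1] via (s - s_min) / (s_max - s_min).

    By default s_min and s_max are the lowest and highest observed scores.
    """
    s_min = min(scores) if s_min is None else s_min
    s_max = max(scores) if s_max is None else s_max
    if s_max == s_min:
        raise ValueError("cannot rescale: all scores are identical")
    return [(s - s_min) / (s_max - s_min) for s in scores]
```

For instance, with a hypothetical 1–5 raw scale, `min_max_normalize([3.0], s_min=1, s_max=5)` returns `[0.5]`.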

(2) BERT Deep Learning Model.

To conduct precise sentiment analysis on Weibo text, a deep learning-based sentiment analysis model was developed and trained. The model combines Long Short-Term Memory (LSTM) networks and Transformers, both of which are effective at capturing long-range dependencies in text. It was trained on the high-quality, consistently labeled dataset obtained through the manual sentiment labeling process described above, enabling the algorithm to automatically detect and quantify sentiment tendencies in Weibo text. The model outputs both a sentiment type (positive, negative, or neutral) and a sentiment intensity score. Sentiment scores are computed through a normalization process that scales the model’s raw output to a 0–1 score, where 0 represents no evident sentiment and 1 indicates strong emotional expression. The normalization formula is as follows:

Moral judgment

This study employed the Moral Foundations Dictionary 2.0 (MFD 2.0), derived from Moral Foundations Theory (MFT), to quantitatively analyze the moral dimensions present in the textual data. All research texts were first preprocessed (e.g., removing noise characters, tokenization, and lemmatization) and then matched against the MFD 2.0 entries. We calculated the frequency of keywords associated with each moral dimension, such as Care/Harm and Fairness/Cheating. Words carrying both strong emotional connotations and moral meanings received dual annotation to keep moral judgment indicators distinct from emotional indicators. To address differences in text length and source, the raw scores of each dimension were standardized with the following z-score formula and then mapped onto a 1–5 scale:

$$z_i = \frac{x_i - \mu_i}{\sigma_i}$$

where $x_i$ represents the raw score of the $i$-th moral dimension in a given sample, $\mu_i$ denotes the mean raw score of the respective moral dimension across the sample, and $\sigma_i$ is the standard deviation of the raw scores for that dimension.

This formula standardizes raw scores based on their distribution across the sample and maps them onto a 1–5 scale. It accounts for variability within the data, reduces the influence of extreme values, and ensures comparability across different texts while minimizing potential interference from emotional word usage, aiming to yield a more reliable quantification of moral inclinations.
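The dictionary-based moral scoring can be sketched as follows. Each comment gets a length-adjusted match rate per moral dimension. The rates are then z-standardized across the sample and placed on a 1–5 scale. Three elements are assumptions: the toy word lists stand in for MFD 2.0 entries, the population standard deviation is used, and the mapping 3 + z clipped to [1, 5] is hypothetical, because the text does not specify how z-scores were mapped.

```python
from statistics import mean, pstdev


def dimension_rates(tokens, dictionary):
    """Share of tokens matching each moral dimension's word list.

    Dividing by the token count adjusts for comment length. `dictionary`
    maps a dimension name to a set of words (a stand-in for MFD 2.0 entries).
    """
    total = len(tokens) or 1
    return {dim: sum(tok in words for tok in tokens) / total
            for dim, words in dictionary.items()}


def to_one_to_five(raw_values):
    """z-standardize one dimension's raw scores, then map them onto 1-5.

    The mapping 3 + z, clipped to [1, 5], is a hypothetical choice.
    """
    mu = mean(raw_values)
    sigma = pstdev(raw_values)  # population SD across the sample
    if sigma == 0:
        return [3.0] * len(raw_values)
    return [min(5.0, max(1.0, 3.0 + (x - mu) / sigma)) for x in raw_values]
```

Clipping keeps a few extreme comments from stretching the scale. This is one plausible reading of "reduces the influence of extreme values", though other choices are possible.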

Language style matching (LSM)

In the analysis of Language Style Matching (LSM), we focus on the frequency and consistency of emotional words and function words in the text. LSM evaluates the coherence of language style by calculating the ratio and alignment of function and emotional words within the text. This analysis reveals whether the author’s language style aligns with styles commonly found in other texts or within a specific community.

To establish a baseline style, this study extracts the word frequency distribution of mainstream language style from all comments, calculating the overall average frequency of emotional and function words as a comparative standard.

The LSM score is calculated as follows:

The score ranges from 0 to 1, where 0 indicates no match and 1 indicates a complete match. This score reflects the author’s individuality in language use and adaptability to the social environment.
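The sketch below assumes the widely used formulation of Ireland and Pennebaker. For each word category $c$, similarity is $1 - |p_{1c} - p_{2c}| / (p_{1c} + p_{2c} + 0.0001)$, where $p$ is the percentage of words in that category. The overall score is the mean over categories. Here the second "speaker" is the corpus-wide baseline style described above. Whether the study used exactly this form is an assumption, and the category names and word lists are hypothetical.

```python
def category_rates(tokens, categories):
    """Percentage of tokens falling in each word category."""
    total = len(tokens) or 1
    return {c: 100.0 * sum(t in words for t in tokens) / total
            for c, words in categories.items()}


def baseline_rates(token_lists, categories):
    """Corpus-wide mean category rates, used as the comparison style."""
    per_doc = [category_rates(toks, categories) for toks in token_lists]
    return {c: sum(d[c] for d in per_doc) / len(per_doc) for c in categories}


def lsm_score(doc_rates, base_rates, eps=0.0001):
    """Mean per-category similarity 1 - |p1 - p2| / (p1 + p2 + eps).

    Values lie between roughly 0 (no match) and 1 (identical rates).
    """
    sims = [1 - abs(doc_rates[c] - base_rates[c]) / (doc_rates[c] + base_rates[c] + eps)
            for c in base_rates]
    return sum(sims) / len(sims)
```

The small constant `eps` keeps the ratio defined when a category is absent from both texts.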

Comment length

In this study, a Python-based program was developed to count the characters in each Weibo comment automatically. This count quantifies how detailed users’ social media expressions are and provides objective data for analyzing the depth of user engagement. The program uses the Pandas library to process the data accurately and efficiently.

Finally, this study employs a regression model to explore the relationships among moral judgment, LSM, negative emotional responses, and comment length. Our aim is to analyze how these variables collectively influence the behaviors and reactions of social media users.

Analysis method

This study employs a custom Python program and SPSS 27.0 software to explore the relationships among moral judgment, comment length, linguistic style matching (LSM), and negative emotional response. The analysis includes generating word clouds to extract high-frequency vocabulary, analyzing variable correlations, and testing moderated mediation effects. Its aim is to understand how moral judgment affects comment length through negative emotional responses and how LSM moderates this process. First, the Python program generates word clouds that summarize and visualize the high-frequency vocabulary in comments, presenting the main emotional characteristics and moral judgments more intuitively. The study also compares the lexical features of positive and negative emotional comments. An independent-samples t-test examines whether positive/neutral vocabulary is used significantly more often in positive than in negative emotional comments. Second, Pearson correlation analysis tests the correlations among moral judgment, comment length, LSM, and negative emotional response. Finally, Hayes’s moderated mediation model (PROCESS Model 8) tests the mediating role of negative emotional responses between moral judgment and comment length, as well as the moderating role of LSM. This model examines whether LSM moderates both the direct path from moral judgment to comment length and the indirect path through negative emotional responses. The significance of the moderated mediation effect is tested with 5,000 bootstrap samples to calculate 95% confidence intervals.
If the confidence interval does not include zero, the moderated mediation effect is significant, meaning that LSM significantly moderates the indirect path by which moral judgment affects comment length through negative emotional responses.
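To make the Model 8 logic concrete, the sketch below re-implements its core in plain Python. It is not the PROCESS macro itself. It fits two OLS regressions: a mediator model M ~ X + W + XW and an outcome model Y ~ X + W + XW + M. From these it computes the index of moderated mediation a3 × b1, conditional indirect effects (a1 + a3·w) × b1, conditional direct effects c1 + c3·w, and a percentile bootstrap confidence interval. The variable mapping follows the text (X = moral judgment, W = LSM, M = negative emotion, Y = comment length). The function names are hypothetical. PROCESS options such as mean-centering and covariates are not reproduced.

```python
import random


def _solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _ols(rows, y):
    """OLS coefficients via the normal equations (rows include an intercept column)."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return _solve(xtx, xty)


def model8_coefficients(x, w, m, y):
    """Fit both Model 8 equations and return coefficient lists (a, b).

    a: M = a0 + a1*X + a2*W + a3*X*W
    b: Y = b0 + c1*X + c2*W + c3*X*W + b1*M   (b[4] is b1)
    """
    a = _ols([[1.0, xi, wi, xi * wi] for xi, wi in zip(x, w)], m)
    b = _ols([[1.0, xi, wi, xi * wi, mi] for xi, wi, mi in zip(x, w, m)], y)
    return a, b


def conditional_indirect_effect(a, b, w_value):
    """Indirect effect of X on Y through M at moderator value w: (a1 + a3*w) * b1."""
    return (a[1] + a[3] * w_value) * b[4]


def conditional_direct_effect(b, w_value):
    """Direct effect of X on Y at moderator value w: c1 + c3*w."""
    return b[1] + b[3] * w_value


def index_of_moderated_mediation(x, w, m, y):
    """a3 * b1: change in the indirect effect per unit of the moderator."""
    a, b = model8_coefficients(x, w, m, y)
    return a[3] * b[4]


def bootstrap_ci(x, w, m, y, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap CI for the index of moderated mediation."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        draws.append(index_of_moderated_mediation(
            [x[i] for i in idx], [w[i] for i in idx],
            [m[i] for i in idx], [y[i] for i in idx]))
    draws.sort()
    lo = draws[int((1 - level) / 2 * n_boot)]
    hi = draws[int((1 + level) / 2 * n_boot) - 1]
    return lo, hi
```

As in the text, a confidence interval from `bootstrap_ci` that excludes zero would indicate significant moderated mediation. With 8,000+ comments, a vectorized library would be much faster than this pure-Python version.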

Ethical approval and informed consent

All methods were carried out in accordance with relevant guidelines and regulations. The study protocol was approved by the Wuhan University Humanities and Social Sciences Institutional Review Board (WHU-HSS-IRB), approval number WHU-HSS-IRB2025045. The data used in this study were collected from publicly available online platforms. All data were anonymized before analysis and contained no personally identifiable information. For this study, informed consent has been waived by the Wuhan University Humanities and Social Sciences Institutional Review Board (WHU-HSS-IRB) due to the anonymity and retrospective nature of the study. The dataset is not publicly available due to privacy and ethical restrictions.

Results

Table 1 shows the distribution and descriptive statistics of negative, neutral, and positive emotional responses. Negative emotions dominate, accounting for 76.5% of all responses; positive emotions account for 15.4%, and neutral emotions have the lowest share at 8.0%. The proportion of negative emotions is therefore substantially higher than that of neutral and positive emotions combined.

Table 1.

Distribution and characteristics of emotional responses.

| Emotion type | Proportion | M ± SD |
|---|---|---|
| Negative | 0.765 | 0.66 ± 0.18 |
| Neutral | 0.08 | 0.49 ± 0.11 |
| Positive | 0.154 | 0.67 ± 0.19 |

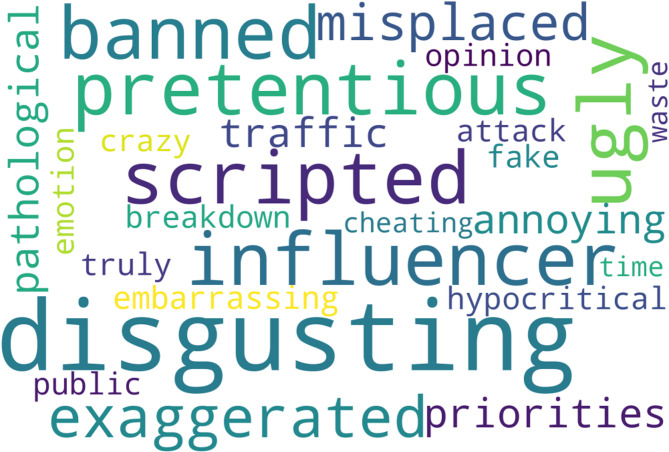

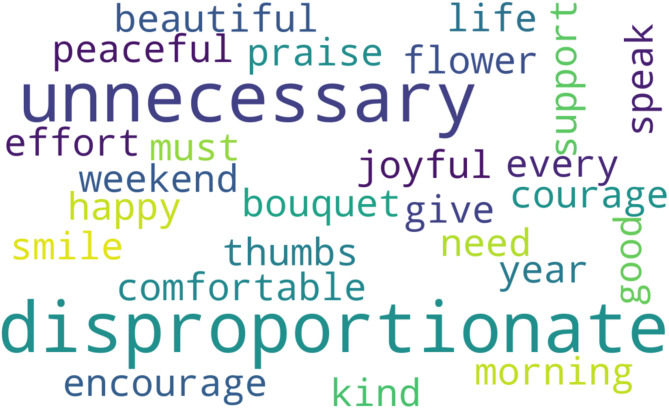

Table 2 presents the frequencies of common words in negative and positive emotional responses, revealing the language patterns associated with each emotion type. Common words in negative comments include “exaggeration” (1004 occurrences), “banned” (700), “script” (685), and “pretentious” (616), which express strong dissatisfaction in a clearly critical and frustrated tone. Other high-frequency negative words, including “disgusting”, “psychotic”, “blocked”, “ugly”, and “annoying”, each occur more than 300 times, indicating the prevalence of derogatory language in negative comments. In contrast, positive words occur less often and are milder in tone. The most frequent positive word is “unnecessary” (264 occurrences), which possibly implies a downplaying of the topic’s importance; other positive words include “not that serious” (223), “support” (140), and “fun” (62), which are expressed in a calmer, more relaxed manner with less emotional intensity. Overall, negative comments typically carry stronger and more abundant emotion, whereas positive comments are more restrained and less frequent.

Table 2.

Frequency of common words in negative and positive emotional responses.

| Negative words | Frequency | Positive words | Frequency |
|---|---|---|---|
| Exaggeration | 1004 | Unnecessary | 264 |
| Banned | 700 | Not that serious | 223 |
| Script | 685 | Support | 140 |
| Pretentious | 616 | Too serious | 76 |
| Rectification | 491 | Serious | 70 |
| Disgusting | 457 | Fun | 62 |
| Psychotic | 442 | Every year | 59 |
| Blocked | 425 | Like | 59 |
| Ugly | 398 | | |
| Annoying | 361 | | |
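Frequencies like those in Table 2 can be obtained by counting lexicon terms across tokenized comments. The sketch below is hypothetical: it assumes the comments have already been segmented into words (Chinese text would first need a word segmenter, for example the third-party jieba package), uses English glosses in place of the original Chinese tokens, and uses toy lexicons rather than the study’s own.

```python
from collections import Counter


def lexicon_frequencies(token_lists, lexicon):
    """Count occurrences of lexicon terms across tokenized comments, most frequent first."""
    counts = Counter()
    for tokens in token_lists:
        counts.update(token for token in tokens if token in lexicon)
    return counts.most_common()


# Hypothetical pre-segmented comments (English glosses for readability)
comments = [
    ["so", "exaggeration", "script"],
    ["banned", "exaggeration", "disgusting"],
    ["not that serious", "support"],
]
negative_lexicon = {"exaggeration", "banned", "script", "disgusting"}
positive_lexicon = {"unnecessary", "not that serious", "support"}

negative_counts = lexicon_frequencies(comments, negative_lexicon)
positive_counts = lexicon_frequencies(comments, positive_lexicon)
print(negative_counts)
print(positive_counts)
```

A frequency mapping of this kind can be passed to a word-cloud renderer; for example, the third-party wordcloud package provides `WordCloud.generate_from_frequencies`, and Chinese text additionally requires a font that covers Chinese characters, supplied through its `font_path` argument.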

The word clouds in Figs. 1 and 2 also visually display the different language patterns in positive and negative comments. Moreover, independent-samples t-tests show that negative vocabulary is used significantly more often in negative comments than in positive comments (t = 4.88, p < 0.001) and neutral comments (t = 7.28, p < 0.001), indicating a more aggressive language style in negative comments. Taken together with the analyses above, these results support Hypotheses 2, 3, and 4.
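The comparison above can be sketched as follows, assuming (hypothetically) that each comment is scored by the share of its tokens that belong to a negative-word lexicon and that Student’s pooled-variance t-test is used. The paper does not state which t-test variant was applied, and the helper names, lexicon, and comments below are illustrative.

```python
import math
from statistics import mean, variance


def lexicon_share(tokens, lexicon):
    """Proportion of a comment's tokens found in the lexicon (0.0 for an empty comment)."""
    if not tokens:
        return 0.0
    return sum(token in lexicon for token in tokens) / len(tokens)


def student_t(sample_a, sample_b):
    """Student's independent-samples t statistic (pooled variance) and its degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    pooled = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / (n_a + n_b - 2)
    se = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / se, n_a + n_b - 2


# Hypothetical tokenized comments and a toy negative lexicon
negative_lexicon = {"disgusting", "annoying", "ugly"}
negative_comments = [["so", "disgusting"], ["ugly", "and", "annoying"], ["annoying"]]
positive_comments = [["support", "her"], ["fun", "video", "ugly"], ["not", "that", "serious"]]

neg_scores = [lexicon_share(c, negative_lexicon) for c in negative_comments]
pos_scores = [lexicon_share(c, negative_lexicon) for c in positive_comments]
t_stat, df = student_t(neg_scores, pos_scores)
print(f"t = {t_stat:.2f}, df = {df}")
```

In practice, `scipy.stats.ttest_ind(a, b)` returns the same statistic together with a two-sided p-value, and passing `equal_var=False` switches to Welch’s test, which does not assume equal group variances.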

Fig. 1.

Negative emotion word cloud.

Fig. 2.

Positive emotion word cloud.

Table 3 shows examples of linguistic style matching (LSM) in negative emotional and political responses. In the negative emotional responses, users express dissatisfaction through similar language patterns (e.g., “disgusting”, “uncomfortable”, “unbearable”), showing alignment in emotional tone and syntax. In the political responses, comments such as “Next step, is she going to apply for political asylum?”, “She’s slowly becoming an anti-China advocate.”, and “She’ll just be watched while using VPNs in the future.” reflect LSM through their shared sarcastic, accusatory tone and predictive structure. Both sets of comments illustrate how shared beliefs and shared language can align and reinforce emotional expression, the mechanism through which LSM is expected to moderate emotional responses.

Table 3.

Examples of linguistic style matching in negative emotional and political responses.

| User | Comment |
|---|---|
| User A | “This woman’s visual effects are disgusting.” |
| User B | “Her voice makes me extremely uncomfortable.” |
| User C | “Her exaggerated expressions are unbearable—who even watches this?” |
| User D | “Next step, is she going to apply for political asylum?” |
| User E | “She’s slowly becoming an anti-China advocate.” |
| User F | “She’ll just be watched while using VPNs in the future.” |
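The exact LSM procedure used in this study is specified in its Methods. As a hedged illustration, the widely used LIWC-based formulation (Ireland and Pennebaker) scores each of nine function-word categories as 1 − |p1 − p2| / (p1 + p2 + 0.0001), where p1 and p2 are the two texts’ percentages for that category, and averages the category scores. The category keys below follow LIWC2015 abbreviations; whether this study used these categories, or a Chinese LIWC dictionary, is an assumption, and the category percentages are invented.

```python
# Nine LIWC function-word categories commonly used for LSM (assumed, not taken from this study)
CATEGORIES = ("ppron", "ipron", "article", "conj", "prep",
              "auxverb", "adverb", "negate", "quant")


def lsm(profile_a, profile_b, categories=CATEGORIES, eps=0.0001):
    """Average per-category style match between two texts (1.0 = identical usage)."""
    scores = []
    for cat in categories:
        pa = profile_a.get(cat, 0.0)  # percentage of words in this category, text A
        pb = profile_b.get(cat, 0.0)  # percentage of words in this category, text B
        scores.append(1 - abs(pa - pb) / (pa + pb + eps))
    return sum(scores) / len(scores)


# Hypothetical category percentages for two comments
comment_a = {"ppron": 8.0, "ipron": 4.0, "article": 0.0, "conj": 5.0, "prep": 9.0,
             "auxverb": 6.0, "adverb": 5.0, "negate": 2.0, "quant": 1.5}
comment_b = {"ppron": 7.0, "ipron": 5.0, "article": 0.0, "conj": 4.0, "prep": 10.0,
             "auxverb": 6.0, "adverb": 3.0, "negate": 2.5, "quant": 1.0}
print(round(lsm(comment_a, comment_b), 3))
```

Note that a category absent from both texts (such as articles in Chinese) scores 1 under this formula, which inflates the average; this is one reason a study’s own category set may be adapted to the language.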

According to Table 4, the correlation between comment length and moral judgment is r = 0.55 (p < 0.001), indicating a significant positive correlation: as moral judgment scores increase, comments tend to be longer. This supports Hypothesis 1, which proposes that netizens with higher levels of moral judgment tend to post longer comments on yellow news.

Table 4.

Means, standard deviations, and correlation coefficients of variables.

| Variable | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1. Comment length | – | | | |
| 2. Moral judgment | 0.55*** | – | | |
| 3. Negative emotional responses | 0.48*** | 0.76*** | – | |
| 4. LSM | 0.4*** | 0.6*** | 0.58*** | – |
| M ± SD | 20.01 ± 13.52 | 3 ± 0.95 | 0.66 ± 0.18 | 0.88 ± 0.16 |

***p < 0.001.
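The coefficients in Table 4 are Pearson correlations. A dependency-free sketch of the computation follows; it is illustrative only (the study used SPSS), the data are hypothetical, and Python 3.10+ also provides `statistics.correlation` for the same quantity.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical moral-judgment scores and comment lengths
moral = [2.0, 3.5, 2.5, 4.5, 3.0]
length = [9, 24, 15, 38, 18]
print(round(pearson_r(moral, length), 2))
```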

As shown in Tables 5 and 6, the regression results indicate that moral judgment significantly predicts both comment length (β = 0.55, t = 53.5, p < 0.001) and negative emotional responses (β = 0.76, t = 95.19, p < 0.001). After negative emotional responses are added to the model, the effect of moral judgment on comment length remains significant but is reduced (β = 0.43, t = 27.47, p < 0.001), and negative emotional responses also significantly predict comment length (β = 0.16, t = 10.06, p < 0.001). This pattern suggests that negative emotional responses partially mediate the relationship between moral judgment and comment length. This model explains 31% of the variance in comment length (R² = 0.31, F = 1503.27, p < 0.001). The bootstrap mediation analysis in Table 6 confirms this mediation effect: the total effect of moral judgment on comment length is significant (total effect = 0.34), as is the indirect effect through negative emotional responses (indirect effect = 0.07, 95% bootstrap confidence interval [0.058, 0.089], p < 0.001), demonstrating the robustness of the mediation pathway. After controlling for negative emotional responses, the direct effect of moral judgment on comment length remains significant (direct effect = 0.27), confirming partial mediation. In summary, these results support Hypothesis 5: moral judgment influences comment length partly through its effect on negative emotional responses.

Table 5.

The regression analysis for the mediation model examining negative emotional responses between moral judgment and comment length.

| Predictor | Comment length: β | t | Negative emotional responses: β | t | Comment length: β | t |
|---|---|---|---|---|---|---|
| Moral judgment | 0.55 | 53.5*** | 0.76 | 95.19*** | 0.43 | 27.47*** |
| Negative emotional responses | | | | | 0.16 | 10.05*** |
| R² | 0.3 | | 0.58 | | 0.31 | |
| F | 2862.77*** | | 9061.97*** | | 1503.27*** | |

***p < 0.001.
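As a consistency check, the standardized coefficients of a two-predictor regression can be recovered from the pairwise correlations. The sketch below, an illustration rather than part of the original analysis, applies the standard formulas to the Table 4 correlations for comment length regressed on moral judgment and negative emotional responses. The results are close to the β = 0.43, β = 0.16, and R² = 0.31 reported for the model that includes negative emotional responses; the small differences are consistent with rounding of the correlations to two decimals.

```python
def standardized_betas(r_xy, r_my, r_xm):
    """Standardized coefficients and R^2 for Y ~ X + M computed from pairwise correlations."""
    denom = 1 - r_xm ** 2  # 1 minus the squared correlation between the two predictors
    beta_x = (r_xy - r_my * r_xm) / denom
    beta_m = (r_my - r_xy * r_xm) / denom
    r_squared = beta_x * r_xy + beta_m * r_my
    return beta_x, beta_m, r_squared


# Table 4: X = moral judgment, M = negative emotional responses, Y = comment length
beta_x, beta_m, r_squared = standardized_betas(r_xy=0.55, r_my=0.48, r_xm=0.76)
print(round(beta_x, 2), round(beta_m, 2), round(r_squared, 2))  # 0.44 0.15 0.31
```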

Table 6.

Analysis of the mediating effect of negative emotional responses between moral judgment and comment length.

| Path | Total effect | Direct effect | Indirect effect | 95% Boot CI | Conclusion |
|---|---|---|---|---|---|
| Moral judgment → negative emotional responses → comment length | 0.34 | 0.27 | 0.07 | [0.058, 0.089] | Significant |
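The values in Table 6 follow the standard decomposition of a total effect c into a direct effect c′ and an indirect effect ab. The proportion mediated, a common descriptive ratio that is only interpretable when both components share the same sign, can be computed directly from the reported values:

$$
c = c' + ab:\qquad 0.34 = 0.27 + 0.07, \qquad P_M = \frac{ab}{c} = \frac{0.07}{0.34} \approx 0.21
$$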

According to Table 7, moral judgment significantly and positively predicts comment length (β = 0.25, t = 11.77, p < 0.001): users with higher levels of moral judgment tend to post longer comments. The direct effect of linguistic style matching (LSM) on comment length is not significant (β = 0.18, t = 1.41, p > 0.05), but the interaction between moral judgment and LSM is (β = 0.09, t = 2.37, p < 0.05). This indicates that the effect of moral judgment on comment length is more pronounced when LSM is high, supporting the first part of Hypothesis 6.

Table 7.

Test of a moderated mediation model.

| Predictor | Comment length: β | t | Negative emotional responses: β | t | Comment length: β | t |
|---|---|---|---|---|---|---|
| Moral judgment | 0.25 | 11.77*** | 0.08 | 18.97*** | 0.21 | 9.71*** |
| LSM | 0.18 | 1.41 | 0.06 | 2.14* | 0.16 | 1.21 |
| Moral judgment × LSM | 0.09 | 2.37* | 0.05 | 6.23*** | 0.07 | 1.77 |
| Negative emotional responses | | | | | 0.48 | 8.01*** |
| R² | 0.3 | | 0.6 | | 0.32 | |
| F | 995.87*** | | 3360.31*** | | 769.99*** | |

*p < 0.05, ***p < 0.001.

Second, in the model predicting negative emotional responses, moral judgment significantly and positively predicts negative emotional responses (β = 0.08, t = 18.97, p < 0.001), and LSM also significantly predicts them (β = 0.06, t = 2.14, p < 0.05). Importantly, the interaction between moral judgment and LSM also significantly influences negative emotional responses (β = 0.05, t = 6.23, p < 0.001), suggesting that LSM moderates the strength of the effect of moral judgment on negative emotional responses.

Finally, after including negative emotional responses in the predictive model for comment length, it is found that negative emotional responses significantly positively affect comment length (β = 0.48, t = 8.01, p < 0.001), while the impact of moral judgment remains significant but slightly reduced (β = 0.21, t = 9.71, p < 0.001). This indicates that negative emotional responses partially mediate the relationship between moral judgment and comment length, and this mediation effect varies depending on the level of LSM.
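In Model 8, the indirect effect is a function of the moderator W (LSM). Writing a1 and a3 for the moral judgment and interaction coefficients in the negative-emotion equation and b for the effect of negative emotional responses on comment length, the conditional indirect effect ω(W) and the index of moderated mediation are given below. Plugging in the rounded Table 7 coefficients is purely illustrative and assumes, which the paper does not state, that LSM was mean-centered and expressed in standard-deviation units, so that W = ±1 corresponds to ±1 SD:

$$
\omega(W) = (a_1 + a_3 W)\,b, \qquad \text{index} = a_3 b = 0.05 \times 0.48 = 0.024
$$

$$
\omega(+1) = (0.08 + 0.05)(0.48) \approx 0.062, \qquad \omega(-1) = (0.08 - 0.05)(0.48) \approx 0.014
$$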

Taken together, the findings indicate that linguistic style matching (LSM) plays a significant moderating role in the relationship between moral judgment and comment length. LSM moderates the influence of moral judgment on negative emotional responses and thereby the strength of the indirect pathway through which moral judgment shapes comment length. LSM also moderates the direct effect of moral judgment on comment length in the model without the mediator, although this interaction is no longer significant once negative emotional responses are included (β = 0.07, t = 1.77). These results are consistent with a moderated mediation mechanism and support Hypothesis 6, with the moderation operating mainly through the indirect pathway.

Our findings illustrate the specific moderating role of linguistic style matching (LSM) in the first stage of the mediated relationship between moral judgment and comment length (moral judgment → negative emotional responses), as well as on the direct path. Using Hayes’s PROCESS macro (Model 8), we computed 95% confidence intervals (CIs) to assess the moderated mediation effects, as shown in Fig. 3.

Fig. 3.

The moderated mediation model of moral judgment, LSM, negative emotional responses, and comment length. Note. The value 0.21* represents the main effect of Moral Judgment on Comment Length in the model without the moderator. The value in parentheses (0.25*) represents the conditional effect of Moral Judgment on Comment Length when the moderator LSM is high. The interaction term (β = 0.09*) indicates a significant moderation effect. *p < 0.05, **p < 0.01, ***p < 0.001.

This moderated mediation model summarizes the “Moral Judgment → Negative Emotional Responses → Comment Length” pathway, in which LSM strengthens the predictive effect of moral judgment on negative emotional responses, which in turn predict the length of comments.

Discussion

Consistent with Information-Processing Theory, our Weibo data show that morally triggered anger and disgust were associated with systematically longer comments, a pattern consistent with deeper message elaboration under high-arousal affect11. Mediation analysis further showed that negative emotion accounted for roughly 21% of the total effect of moral judgment on comment length (an indirect effect of 0.07 out of a total effect of 0.34). This is consistent with Emotion-Regulation Theory, which posits that individuals regulate intense affect through expressive action, in this case extended written responses12. Together, these results support the dual prediction advanced in the Introduction: negative affect both heightens cognitive processing and lengthens online expression.

Building on these theory-consistent findings, we discuss three implications. First, the emotion-driven elaboration loop helps explain why “new yellow journalism” amplifies polarized discourse. Second, by showing that emotion functions as a mediator rather than a by-product, the study helps fill Moral Foundations Theory’s behavioral gap. Third, we show that linguistic style matching (LSM) intensifies this loop, highlighting platform design features that can exacerbate or mitigate moral-emotional cascades.

The mediating role of negative emotional responses

This empirical study shows that negative emotional responses partially mediate the link between moral judgment and comment length, accounting for roughly 21% of the total effect (indirect effect = 0.07, total effect = 0.34), with a bias-corrected bootstrap confidence interval that excludes zero40. The finding extends online information-processing models by suggesting that, after a rapid moral appraisal, negative affect energizes users to invest more deeply in textual production, consistent with the full “moral cognition → emotion → behavior” chain41–43. High-arousal emotions such as anger and disgust elicited by moral violations appear not only to reflect internal states but also to drive longer, more elaborated comments; these comments serve as a means of emotion regulation and meaning construction44,45 and, within highly interactive social-media ecosystems, magnify topic salience and emotional contagion10,46,47.

Situated within Moral Foundations Theory (MFT), the study extends the theory’s scope in at least three ways. First, using large-scale Weibo data, it provides empirical evidence for the process by which moral intuitions are transformed into observable communicative behavior through emotion, helping to fill MFT’s long-noted behavioral gap48,49. Second, it clarifies the functional mediating role of negative affect between moral judgment and expressive behavior, suggesting that emotion is a driving mechanism rather than a mere by-product. Third, exploratory analyses suggest that violations of the “care/harm” and “fairness/cheating” foundations evoke the strongest negative emotions and the longest comments, pointing to systematic differences among moral foundations in emotional intensity and behavioral outcomes and offering preliminary new evidence for MFT’s “foundation heterogeneity”.

Practically, neglecting the amplifier effect of the “moral stimulus → negative emotion → lengthy comment” loop can foster emotional polarization and group antagonism. Platforms could, guided by real-time emotion-detection metrics, impose posting delays or fact-checking prompts on content exhibiting intense negative affect, and attach links to diverse information sources alongside sensitive moral topics to curb emotional escalation. Concurrently, cross-foundation moral-literacy training can help users appreciate the moral concerns of different groups, promoting more constructive online dialogue50. These measures can both restrain the spread of incendiary content and protect a healthy public deliberative space while respecting freedom of expression.

The moderating role of LSM

This study further elucidates the moderating role of linguistic style matching (LSM) in the effect of moral judgment on negative emotional responses. Consistent with the hypothesis, the results show that LSM significantly moderates the relationship between moral judgment and negative emotional responses, accounting for about 25% of the total effect. This is consistent with social identity theory, which posits that group identification and its interplay with emotional responses are key mechanisms in the formation of emotional expression51,52. In this context, LSM can be understood as a form of identity signaling, whereby users unconsciously adopt similar language to align themselves with perceived in-group norms. This linguistic synchronization not only intensifies emotional expression but also reinforces a shared group stance against out-group narratives, accelerating the collective response to morally framed content such as new yellow journalism. Specifically, higher levels of LSM enhance individuals’ sense of group belonging, making them more susceptible to group emotions and thereby intensifying negative emotional responses36,37. For example, in our dataset, several users responding to the same event used highly similar language and structure to express their dissatisfaction: “This woman’s visual effects are disgusting,” “Her voice makes me extremely uncomfortable,” and “Her exaggerated expressions are unbearable—who even watches this?” Although posted by different users, these comments share an emotional tone, evaluative adjectives (e.g., “disgusting,” “uncomfortable,” “unbearable”), and a similar syntactic structure, reflecting a high degree of linguistic style matching. Such examples are consistent with our hypothesis that this kind of emotional resonance is particularly evident in the highly interactive environment of social media and is closely associated with the polarization of online opinion and group polarization53.

However, while LSM plays a significant role in enhancing the impact of moral judgment on negative emotional responses, it is not the only driver. The study found that the strength of moral judgment itself remains a key driver of emotional expression, especially at extreme levels of moral judgment, where the moderating effect of LSM may become attenuated. This pattern is consistent with the theory of emotional saturation, which suggests that in cases of extreme moral evaluation, emotional expression may already have peaked, so further increases in LSM have limited impact on emotional intensity54,55. This emphasizes the importance of cultivating individual moral literacy and critical thinking: while LSM can promote emotional resonance within a group, it cannot replace personal moral judgment and ethical awareness. On social media, users’ moral judgments affect not only their emotional responses and linguistic behaviors but can also profoundly influence the direction of online public opinion. Strengthening moral education and the public’s capacity for moral judgment is therefore essential for guiding healthy online discussions and preventing opinion polarization56,57.

Moreover, an individual’s self-regulation capability is also an important factor influencing emotional expression. A high level of self-regulation can help users remain rational under strong emotions, avoiding overly emotional expressions58,59. Therefore, cultivating users’ emotional regulation capabilities helps to reduce the excessive impact of negative emotions on linguistic behavior, promoting more rational and constructive communication.

In addition, this study found that, in the model without the mediating variable, LSM significantly moderated the direct path from moral judgment to comment length (β = 0.09, t = 2.37; Table 7), consistent with previous research37 and revealing the potential impact of users’ linguistic style synchronization. However, after negative emotional responses were introduced into the model as a mediating variable, this interaction term was no longer statistically significant (p = 0.077). This shift suggests that the moderating effect of LSM may operate primarily through the indirect pathway, by amplifying the emotional response elicited by moral judgment, rather than by directly influencing comment length. Communication Accommodation Theory suggests that people tend to adjust their linguistic style to match others, thereby enhancing communication effectiveness60. Accordingly, higher levels of LSM may not only increase the intimacy of user interactions but also amplify the impact of moral judgments on comment length, encouraging users to elaborate on their views. In the highly interactive environment of social media, such consistency in linguistic style may foster deeper discussion and information exchange.

However, Normative Influence Theory suggests that although LSM may reduce some negative effects, it may not substantially decrease the negative emotional responses triggered by extreme moral judgments on social media. Thus, even if LSM can serve as a means of mitigating emotional intensification, its efficacy in counteracting negative emotional responses appears limited, especially given how pervasive social media use is. Interventions in online emotional propagation should therefore not only cultivate consistency in users’ linguistic styles but also consider how to reduce extreme moral evaluations in social media environments, so as to support healthy online interactions and emotional expression more comprehensively53.

Therefore, in advancing internet governance and user education, it is essential to consider the impacts of linguistic style and moral judgment comprehensively, promoting more rational online communications. This includes strengthening moral education, fostering public critical thinking and emotional regulation skills, helping users maintain rationality in expressing their views, and avoiding overly emotional expressions. Encouraging positive linguistic style matching also promotes effective communication and information sharing, thereby building a healthy online ecosystem.

Research insights and shortcomings

This study explored how moral judgment, negative emotional responses, and linguistic style matching (LSM) jointly shape the length of netizens’ comments when they are confronted with “new yellow journalism”, revealing the underlying mechanisms. These findings have implications for understanding the relationship between moral judgment and emotional expression on social media, and they offer insights into strategies for online public opinion management and emotional guidance.

The results indicate that moral judgment significantly affects emotional responses. New yellow journalism, which often involves morally ambiguous content, easily triggers negative emotions. Both platforms and content creators should be aware of the public’s moral sensitivities, avoiding the release of such content to reduce anxiety and emotional polarization and to prevent the escalation of polarized opinions.

Encouraging users to keep their comments measured and to express themselves thoughtfully may help curb the spread of negative emotions. Because higher LSM strengthened the link between moral judgment and negative emotion in this study, LSM-based group cohesion is more likely to foster a positive interactive atmosphere and reduce aggressive language when the shared style is constructive rather than hostile. Promoting constructive language use and discouraging excessive emotional expression may further inhibit the spread of negativity.

The study emphasizes the importance of fostering public moral literacy and critical thinking. Strengthening moral education to improve moral judgment can guide rational and mature emotional responses, helping prevent impulsive responses that lead to opinion polarization.

This study has several limitations. First, the data come solely from Weibo comments and may not represent other social media platforms, which limits the generalizability of the conclusions. Second, as a retrospective study, it did not capture dynamic changes in emotional responses over time. Third, it did not formally test how specific dimensions of moral foundations theory (e.g., care, fairness) affect emotional responses; the foundation-level patterns noted in the Discussion are exploratory, which limits our understanding of how different moral foundations influence emotions.

Future research should broaden data sources, incorporating more platforms, utilizing real-time data collection, and investigating the role of various moral foundations in emotional responses to enhance the generalizability and depth of conclusions19.

Practical implications

The present findings highlight a three-step risk loop: a moral stimulus triggers negative emotion, which in turn leads to a lengthy comment. This sequence amplifies the effects of “new yellow journalism” on social media. Breaking the loop requires coordinated actions at three levels.

1. Platform-level interventions.

Recent empirical studies have demonstrated that inserting lightweight friction mechanisms—such as an extra click or a brief accuracy checklist at the moment of sharing—significantly reduces the spread of misinformation61,62. Platforms can integrate these features with real-time emotion detection to flag posts high in anger or disgust, introducing short posting delays that give users time to reconsider their reactions.

2. User-level literacy training.

Media-literacy micro-courses embedded in the feed—e.g., 30-second interactive quizzes—have been shown to cut belief in fake headlines by up to 25%63. Integrating moral-foundation perspectives into these modules helps users recognize that different groups prioritize different virtues, reducing affective polarization.

3. Educational-level critical-thinking programs.

Formal curricula that pair emotional-regulation techniques44 with source-evaluation checklists improve adolescents’ ability to distinguish factual reporting from sensationalized moral outrage64. We recommend that secondary schools embed “emotion-and-evidence” modules into civics courses to cultivate reflective digital citizens.

Although this study is based on data from China, the proposed three-level interventions are grounded in widely applicable psychological and communicative principles. Therefore, they may be adapted to various cultural and media environments beyond the Chinese context.

Conclusion

Netizens’ moral judgments are positively correlated with the length of their comments: netizens with higher moral-judgment scores tend to post longer comments on yellow news. Netizens’ emotional responses to new yellow news are predominantly negative, reflecting general public disdain or dissatisfaction with such information. When expressing negative emotions, netizens tend to use more emotional and derogatory vocabulary and a more aggressive language style; in comments with positive emotional responses, the language is more neutral or positive, with gentler, more rational vocabulary. Negative emotional responses partially mediate the relationship between moral judgments and comment length. Language style matching (LSM) moderates the relationship between moral judgments and comment length, and moral judgments also indirectly influence comment length through negative emotional responses to new yellow news, with the strength of this indirect effect varying with the level of LSM.

Author contributions

J.T. wrote the main manuscript text, and R.Z. prepared and reviewed the figures. All authors reviewed the manuscript.

Data availability

Due to privacy and ethical considerations, the datasets used in this study are not publicly available but are available from the corresponding author, Ronghua Zhang, upon reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Kaplan, A. M. & Haenlein, M. Users of the world, unite! The challenges and opportunities of social media. Bus. Horiz.53 (1), 59–68. 10.1016/j.bushor.2009.09.003 (2010). [Google Scholar]

- 2.Allcott, H. & Gentzkow, M. Social media and fake news in the 2016 election. J. Economic Perspect.31 (2), 211–236 (2017). [Google Scholar]

- 3.Campbell, W. J. Yellow Journalism: Puncturing the Myths, Defining the Legacies (Greenwood Publishing Group, 2001).

- 4.Center for News, Technology & Innovation. Algorithms & Quality News. Center for News, Technology & Innovation. (2023). https://innovating.news/article/algorithms-quality-news/.

- 5.Milli, L., Bouchaud, P. & Ramaciotti, P. Auditing the audits: evaluating methodologies for social media recommender system audits. Appl. Netw. Sci.9 (59). 10.1007/s41109-024-00668-6 (2024).

- 6.Greene, J. D. & Haidt, J. How (and where) does moral judgment work? Trends Cogn. Sci.6 (12), 517–523. 10.1016/S1364-6613(02)02011-9 (2002). [DOI] [PubMed] [Google Scholar]

- 7.Haidt, J. The moral emotions. In (eds Davidson, R. J., Scherer, K. R. & Goldsmith, H. H.) Handbook of Affective Sciences (852–870). Oxford University Press. (2003).

- 8.Valkenburg, P. M., Peter, J. & Walther, J. B. Media effects: theory and research. Ann. Rev. Psychol.67, 315–338. 10.1146/annurev-psych-122414-033608 (2016). [DOI] [PubMed] [Google Scholar]

- 9.Devlin, J., Chang, M. W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies1, 4171–4186. 10.48550/arXiv.1810.04805 (2019).

- 10.Pennebaker, J. W., Boyd, R. L., Jordan, K. & Blackburn, K. The Development and Psychometric Properties of LIWC2015 (University of Texas at Austin, 2015).

- 11.Petty, R. E. & Cacioppo, J. T. The elaboration likelihood model of persuasion. In L. Berkowitz (Ed.), Advances in Experimental Social Psychology (Vol. 19, pp. 123–205). Academic Press. (1986). 10.1016/S0065-2601(08)60214-2.

- 12.Gross, J. J. The emerging field of emotion regulation: an integrative review. Rev. Gen. Psychol.2 (3), 271–299. 10.1037/1089-2680.2.3.271 (1998). [Google Scholar]

- 13.Campbell, W. J. Yellow Journalism: Puncturing the Myths, Defining the Legacies (Praeger, 2001).

- 14.Lazer, D. M. J., Baum, M. A., Benkler, Y., Berinsky, A. J., Greenhill, K. M., Menczer,F., … Zittrain, J. L. (2018). The science of fake news. Science, 359(6380), 1094–1096. https://doi.org/10.1126/science.aao2998. [DOI] [PubMed]

- 15.Brady, W. J., Wills, J. A., Jost, J. T., Tucker, J. A. & Van Bavel, J. J. Emotion shapes the diffusion of moralized content in social networks. Proc. Natl. Acad. Sci.114 (28), 7313–7318. 10.1073/pnas.1618923114 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Berger, J. & Milkman, K. L. What makes online content viral? J. Mark. Res.49 (2), 192–205. 10.1509/jmr.10.0353 (2012). [Google Scholar]

- 17.Kraut, R. Plato. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy (Summer 2018 Edition). (2018). https://plato.stanford.edu/archives/sum2018/entries/plato/.

- 18.Greene, J. D., Sommerville, R. B., Nystrom, L. E., Darley, J. M. & Cohen, J. D. An fMRI investigation of emotional engagement in moral judgment. Science293 (5537), 2105–2108. 10.1126/science.1062872 (2001). [DOI] [PubMed] [Google Scholar]

- 19.Haidt, J. & Graham, J. When morality opposes justice: Conservatives have moral intuitions that Liberals May not recognize. Soc. Justice Res.20 (1), 98–116. 10.1007/s11211-007-0034-z (2007). [Google Scholar]

- 20.Chapman, H. A., Kim, D. A., Susskind, J. M. & Anderson, A. K. In bad taste: evidence for the oral origins of moral disgust. Science323 (5918), 1222–1226. 10.1126/science.1165565 (2009). [DOI] [PubMed] [Google Scholar]

- 21.Schwarz, N. & Clore, G. L. Mood, misattribution, and judgments of well-being: informative and directive functions of affective States. J. Personal. Soc. Psychol.45 (3), 513–523. 10.1037/0022-3514.45.3.513 (1983). [Google Scholar]

- 22.Lerner, J. S. & Keltner, D. Beyond valence: toward a model of emotion-specific influences on judgement and choice. Cogn. Emot.14 (4), 473–493. 10.1080/026999300402763 (2000). [Google Scholar]

- 23.Bakshy, E., Messing, S. & Adamic, L. A. Exposure to ideologically diverse news and opinion on Facebook. Science348 (6239), 1130–1132. 10.1126/science.aaa1160 (2015). [DOI] [PubMed] [Google Scholar]

- 24.Marwick, A. & Boyd, D. I tweet honestly, I tweet passionately: Twitter users, context collapse, and the imagined audience. New Media Soc. 13 (1), 114–133. 10.1177/1461444810365313 (2011).

- 25.Haidt, J. The emotional dog and its rational tail: a social intuitionist approach to moral judgment. Psychol. Rev. 108 (4), 814–834. 10.1037/0033-295X.108.4.814 (2001).

- 26.Moll, J., Zahn, R., de Oliveira-Souza, R., Krueger, F. & Grafman, J. The neural basis of human moral cognition. Nat. Rev. Neurosci. 6 (10), 799–809. 10.1038/nrn1768 (2005).

- 27.Tangney, J. P., Stuewig, J. & Mashek, D. J. Moral emotions and moral behavior. Annu. Rev. Psychol. 58, 345–372. 10.1146/annurev.psych.56.091103.070145 (2007).

- 28.Lapidot-Lefler, N. & Barak, A. Effects of anonymity, invisibility, and lack of eye-contact on toxic online disinhibition. Comput. Hum. Behav. 28 (2), 434–443. 10.1016/j.chb.2011.10.014 (2012).

- 29.Bless, H., Bohner, G., Schwarz, N. & Strack, F. Mood and persuasion: a cognitive response analysis. Pers. Soc. Psychol. Bull. 16 (2), 331–345. 10.1177/0146167290162013 (1990).

- 30.Schwarz, N. & Clore, G. L. Feelings and phenomenal experiences. In Social Psychology: Handbook of Basic Principles 2nd edn (eds Kruglanski, A. & Higgins, E. T.) 385–407 (Guilford Press, 2007).

- 31.Pennebaker, J. W. The Secret Life of Pronouns: What our Words Say about Us (Bloomsbury, 2011).

- 32.Suler, J. The online disinhibition effect. CyberPsychology Behav. 7 (3), 321–326. 10.1089/1094931041291295 (2004).

- 33.Butler, E. A. et al. The social consequences of expressive suppression. Emotion 3 (1), 48–67. 10.1037/1528-3542.3.1.48 (2003).

- 34.Rozin, P. & Royzman, E. B. Negativity bias, negativity dominance, and contagion. Pers. Soc. Psychol. Rev. 5 (4), 296–320. 10.1207/S15327957PSPR0504_2 (2001).

- 35.Baumeister, R. F., Bratslavsky, E., Finkenauer, C. & Vohs, K. D. Bad is stronger than good. Rev. Gen. Psychol. 5 (4), 323–370. 10.1037/1089-2680.5.4.323 (2001).

- 36.Ireland, M. E. & Pennebaker, J. W. Language style matching in writing: synchrony in essays, correspondence, and poetry. J. Personal. Soc. Psychol. 99 (3), 549–571. 10.1037/a0020386 (2010).

- 37.Gonzales, A. L., Hancock, J. T. & Pennebaker, J. W. Language style matching as a predictor of social dynamics in small groups. Commun. Res. 37 (1), 3–19. 10.1177/0093650209351468 (2010).

- 38.Niederhoffer, K. G. & Pennebaker, J. W. Linguistic style matching in social interaction. J. Lang. Soc. Psychol. 21 (4), 337–360. 10.1177/026192702237953 (2002).

- 39.Kacewicz, E., Pennebaker, J. W., Davis, M., Jeon, M. & Graesser, A. C. Pronoun use reflects standings in social hierarchies. J. Lang. Soc. Psychol. 33 (2), 125–143. 10.1177/0261927X13502654 (2014).

- 40.Preacher, K. J. & Hayes, A. F. Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behav. Res. Methods 40 (3), 879–891. 10.3758/BRM.40.3.879 (2008).

- 41.Smith, E. R. & DeCoster, J. Dual-process models in social and cognitive psychology: conceptual integration and links to underlying memory systems. Pers. Soc. Psychol. Rev. 4 (2), 108–131. 10.1207/S15327957PSPR0402_01 (2000).

- 42.Lazarus, R. S. Emotion and Adaptation (Oxford University Press, 1991).

- 43.Forgas, J. P. Mood effects on cognition: affective influences on the content and process of information processing and behavior. Curr. Dir. Psychol. Sci. 30 (1), 51–57. 10.1016/B978-0-12-801851-4.00003-3 (2017).

- 44.Gross, J. J. & John, O. P. Individual differences in two emotion regulation processes: implications for affect, relationships, and well-being. J. Personal. Soc. Psychol. 85 (2), 348–362. 10.1037/0022-3514.85.2.348 (2003).

- 45.Fan, R., Zhao, J., Chen, Y. & Xu, K. Anger is more influential than joy: sentiment correlation in Weibo. PLOS ONE 13 (1), e0190165. 10.1371/journal.pone.0190165 (2018).

- 46.Chmiel, A. et al. Collective emotions online and their influence on community life. PLOS ONE 6 (7), e22207. 10.1371/journal.pone.0022207 (2011).

- 47.Lin, X., Spence, P. R. & Lachlan, K. A. Social media and credibility indicators: the effect of influence cues. Comput. Hum. Behav. 78, 223–230. 10.1016/j.chb.2016.05.002 (2018).

- 48.Graham, J. et al. Moral foundations theory: on the advantages of moral pluralism over moral monism. Adv. Exp. Soc. Psychol. 62, 1–36. 10.1016/bs.aesp.2020.05.002 (2020).

- 49.Haidt, J. The Righteous Mind: Why Good People Are Divided by Politics and Religion (Pantheon Books, 2012).

- 50.Graham, J. et al. Moral foundations theory: on the advantages of moral pluralism over moral monism. In Atlas of Moral Psychology (eds Gray, K. & Graham, J.) 211–222 (Guilford Press, 2018).

- 51.Tajfel, H. & Turner, J. C. An integrative theory of intergroup conflict. In The Social Psychology of Intergroup Relations (eds Austin, W. G. & Worchel, S.) 33–47 (Brooks/Cole, 1979).

- 52.Hogg, M. A. Social identity theory. In Understanding Peace and Conflict Through Social Identity Theory (eds McKeown, S., Haji, R. & Ferguson, N.) 3–17 (Springer, 2016). 10.1007/978-3-319-29869-6_1.

- 53.Del Vicario, M. et al. Echo chambers in the age of misinformation. Proc. Natl. Acad. Sci. 113 (3), 554–559. 10.1073/pnas.1517441113 (2016).

- 54.Kusyk, S. & Schwartz, M. S. Moral intensity: it is what is, but what is it? A critical review of the literature. J. Bus. Ethics 10.1007/s10551-024-05869-8 (2024).

- 55.Wetherell, M. Affect and Emotion: A New Social Science Understanding (SAGE, 2012).

- 56.Rest, J. R., Narvaez, D., Bebeau, M. J. & Thoma, S. J. Postconventional Moral Thinking: A Neo-Kohlbergian Approach (Lawrence Erlbaum Associates, 1999).

- 57.Narvaez, D. The emotional foundations of high moral intelligence. New Dir. Child Adolesc. Dev. 2010 (129), 77–94. 10.1002/cd.275 (2010).

- 58.Gross, J. J. Emotion regulation: current status and future prospects. Psychol. Inq. 26 (1), 1–26. 10.1080/1047840X.2014.940781 (2015).

- 59.Zimmermann, P. & Iwanski, A. Emotion regulation from early adolescence to emerging adulthood and middle adulthood: age differences, gender differences, and emotion-specific developmental variations. Int. J. Behav. Dev. 38 (2), 182–194. 10.1177/0165025413515405 (2014).

- 60.Giles, H., Coupland, J. & Coupland, N. Contexts of Accommodation: Developments in Applied Sociolinguistics (Cambridge University Press, 1991). 10.1017/CBO9780511663673.

- 61.Jahanbakhsh, F. et al. Exploring lightweight interventions at posting time to reduce the sharing of misinformation on social media. Proc. ACM Hum.-Comput. Interact. 5 (CSCW1), 18. 10.1145/3449131 (2021).

- 62.Jahn, L., Rendsvig, R. K., Flammini, A., Menczer, F. & Hendricks, V. F. Friction interventions to curb the spread of misinformation on social media. arXiv preprint arXiv:2307.11498. 10.48550/arXiv.2307.11498 (2023).

- 63.Roozenbeek, J. & van der Linden, S. Fake news game confers psychological resistance against online misinformation. Palgrave Commun. 5, 65. 10.1057/s41599-019-0279-9 (2019).

- 64.Hobbs, R. & Mihailidis, P. (eds) The International Encyclopedia of Media Literacy (Wiley-Blackwell, 2022).


Data Availability Statement

Due to privacy and ethical considerations, the datasets used in this study are not publicly available but are available from the corresponding author, Ronghua Zhang, upon reasonable request.