Abstract

Background

Colon cancer remains a leading cause of cancer-related mortality globally, highlighting the urgent need for advanced diagnostic methods to improve early detection and patient outcomes.

Methods

This study introduces ColoViT, a hybrid diagnostic framework that synergistically integrates EfficientNet and Vision Transformers. EfficientNet contributes scalability and high performance in feature extraction, while Vision Transformers effectively capture the global contextual information within colonoscopic images.

Results

The integration of these models enables ColoViT to deliver precise and comprehensive image analysis, significantly improving the detection of precancerous lesions and early-stage colon cancers. The proposed model achieved a recall of 92.4%, precision of 98.9%, F1-score of 98.4%, and an AUC of 99% in our preliminary evaluation.

Conclusion

ColoViT demonstrates superior performance over existing models, offering a robust solution for enhancing the early detection of colon cancer through deep learning-based image analysis.

Keywords: Colon cancer, Deep learning, EfficientNet, Vision transformer, ColoViT

Introduction

Colorectal Carcinoma (CRCA) presents a significant global health challenge, with survival rates that vary with the stage at diagnosis. Colorectal polyps, commonly found in the colon or rectum, are often precursors to colorectal cancer. While most polyps are harmless, some, such as adenomatous polyps, can progress to cancer. Hyperplastic and inflammatory polyps are typically benign, although larger ones may require more frequent monitoring. Sessile serrated polyps and traditional serrated adenomas, although less common, carry a higher risk of malignancy (Huang et al. 2023). Regular screening allows polyps to be detected and removed early, reducing the risk of colorectal cancer.

Data from the Surveillance, Epidemiology, and End Results (SEER) program (Siegel et al. 2024) show marked differences in 5-year relative survival for colon cancer by disease stage. For localized colon cancer, confined to the colon, the 5-year relative survival rate is 91%. When the cancer spreads regionally to nearby lymph nodes or tissues, the rate falls to 72%, and metastasis to distant organs or tissues lowers it to just 13%. Across all SEER stages combined, the 5-year relative survival rate for colon cancer is 63% (Hossain et al. 2023; Walsh 2017). Rectal cancer follows a similar pattern: localized rectal cancer, confined to the rectum, has a 5-year relative survival rate of 90%, which decreases to 74% when the cancer extends to adjacent lymph nodes or tissues and to 17% with metastasis to distant organs or tissues. Across all SEER stages combined, the 5-year relative survival rate for rectal cancer is 68%.

These statistics underscore the critical importance of early detection and intervention for improving outcomes in colorectal cancer (Haldar et al. 2023). Timely screening and diagnosis allow the disease to be identified at an early stage, when treatment options are most effective, which leads to improved survival rates and a better quality of life for patients.

In clinical practice, a variety of screening tests are available for detecting polyps (Korbar et al. 2017). These include colonoscopy (Raseena et al. 2024), sigmoidoscopy (Juul et al. 2024), CT colonography (O-Pad et al. 2024), X-ray with barium enema, the fecal immunochemical test (Lee et al. 2014), the fecal occult blood test (Young et al. 2015), and stool DNA testing (Cooper et al. 2018). Among these options, colonoscopy is the most widely used and preferred screening test according to population-based studies. However, colonoscopy is lengthy and physically demanding. Polyps are frequently missed during the procedure because of their small size and visual characteristics (Wen et al. 2023), and endoscopists may also make diagnostic errors due to visual fatigue or lapses in attention. To address these challenges, computer-aided techniques have been proposed to assist clinicians during colonoscopy, with the goal of reducing missed polyps and improving diagnostic accuracy. The hybrid model proposed here, ColoViT, combines EfficientNet and the Vision Transformer for colorectal cancer detection. By pairing EfficientNet's fine-grained feature extraction with the Vision Transformer's analysis of spatial relationships, ColoViT aims to improve polyp detection and diagnostic accuracy, supporting earlier detection and better patient outcomes.

Literature survey

Multiple studies have focused on developing automated techniques for diagnosing and categorizing colorectal polyps using advanced deep learning methods. Korbar et al. (2017) utilized models such as AlexNet, VGG, GoogleNet, and ResNet to detect five types of colorectal polyp structures. They achieved high classification performance with ResNet-D, which had an accuracy of 93.00%, precision of 89.7%, recall of 88.3%, and an F1 score of 88.8%. These results highlight the potential of deep learning models in accurately identifying different polyp types, thereby aiding in early diagnosis and treatment.

Quan et al. (2022) assessed a real-time AI system for polyp detection in a US-based pilot study. They observed an improved detection rate for adenomas and serrated polyps, although the results were not statistically significant. This indicates the need for larger trials to validate these findings. The use of real-time AI systems in clinical settings shows promise for enhancing polyp detection rates, which could lead to better patient outcomes. Wei et al. (2020) used ResNet to classify four polyp types: tubular adenoma (TA), tubulovillous or villous adenoma (TVA), hyperplastic polyp (HP), and sessile serrated adenoma (SSA). Their study achieved higher accuracy compared to local pathologists, demonstrating the effectiveness of deep learning models in assisting pathologists with more accurate diagnoses.

Qian et al. (2020) enhanced the Faster R-CNN method for polyp identification during colonoscopy by preprocessing images to reduce specular reflections, achieving a mean average precision (mAP) of 91.43% compared with 90.57% for the standard Faster R-CNN. Iizuka et al. (2020) and Wang et al. (2021) applied Inception-v3 to whole-slide image (WSI) classification, dividing the images into smaller patches for detailed analysis. Iizuka et al. used a recurrent neural network for slide-level classification, achieving an AUC of 0.99, while Wang et al. exploited the topological connections of tile clusters, achieving an AUC of 0.988.

Hoang et al. (2021) developed a capsule endoscope system with real-time localization and electromagnetic actuation for automated polyp detection, achieving an average precision of 85% using YOLOv3. Pacal and Karaboga (2021) proposed a YOLOv4-based model optimized with CSPNet, Mish activation, and CIoU loss, achieving F1-scores of 96.36% on CVC-ColonDB and 86.85% on ETIS-LARIB. Their approach emphasized real-time performance and used ensemble post-processing for higher accuracy. Karaman et al. (2023a) introduced an artificial bee colony (ABC) algorithm for hyperparameter tuning of YOLO-based models. Evaluating models such as YOLOv4s, YOLOv4m, and YOLOv4-P7 on the SUN and PICCOLO datasets, they observed improvements of 3% in mAP and 2% in F1-score.

Marini et al. (2021) explored computer-assisted dysplasia grading using multi-scale task multiple instance learning. Barbano et al. (2021) developed a multi-resolution model with three cascaded ResNet layers, achieving 67% accuracy at the patch level. Song et al. (2020) and Perlo et al. (2022) employed ResNet-34 and ResNet-18, respectively, for polyp classification.

Neto et al. (2022) proposed a semi-supervised ResNet-34 approach achieving 90.19% accuracy, 98.8% sensitivity, and 85.7% specificity. Building on this line of work, the present study aims to improve colorectal polyp grading with a hybrid model (EfficientNet + ViT) that delivers patch-level predictions for six categories. Beyond colorectal polyp detection, Pacal et al. (2025) developed a CNN-ViT-based MetaFormer model for early skin cancer detection, achieving 92.54% accuracy on ISIC 2019 and 95.01% on HAM10000. Ozdemir and Pacal (2025) proposed a ConvNeXtV2 and separable self-attention model achieving 93.48% accuracy and a 91.82% F1-score for multiclass skin cancer classification. Ozdemir et al. (2025) presented an InceptionNeXt-based hybrid model with block and grid attention for lung cancer detection, achieving 99.54% accuracy on IQ-OTH/NCCD and 98.41% on Chest CT. İnce et al. (2025) evaluated U-Net, U-Net++, and Attention U-Net for stroke segmentation on the ISLES 2022 dataset, where Attention U-Net achieved the best performance with 0.8223 IoU and 0.9021 DSC (Table 1).

Table 1.

Summary of Existing Literature

| Study | Methods Used | Accuracy/Performance |
|---|---|---|
| Korbar et al. (2017) | AlexNet, VGG, GoogleNet, ResNet | Accuracy: 93.00%, F1 Score: 88.8% |
| Quan et al. (2022) | Real-time AI system | Improved detection rates (not statistically significant) |
| Wei et al. (2020) | ResNet | Outperformed local pathologists |
| Qian et al. (2020) | Enhanced Faster R-CNN | mAP: 91.43% |
| Iizuka et al. (2020) | Inception-v3, RNN | AUC: 0.99 |
| Wang et al. (2021) | Inception-v3, tile clusters | AUC: 0.988 |
| Hoang et al. (2021) | YOLOv3, localization system | Precision: 85% |
| Karaman et al. (2023b) | Scaled YOLOv4 + ensemble | F1: 96.36% (CVC-ColonDB), 86.85% (ETIS-LARIB) |
| Karaman et al. (2023a) | YOLO + ABC optimizer | +3% mAP, +2% F1 (SUN, PICCOLO) |
| Marini et al. (2021) | Multi-scale MIL | Multi-level polyp grading |
| Barbano et al. (2021) | Multi-res. 3xResNet | Accuracy: 67% |
| Song et al. (2020) | DeepLabv2 + ResNet-34 | Binary grading |
| Perlo et al. (2022) | ResNet-18 | 6-type polyp classification |
| Neto et al. (2022) | Semi-supervised ResNet-34 | Accuracy: 90.19%, Sensitivity: 98.8% |
| Pacal et al. (2024) | MetaFormer (CNN + ViT) | Acc: 92.54% (ISIC), 95.01% (HAM10000) |
| Ozdemir et al. (2023) | ConvNeXtV2 + SA | Acc: 93.48%, F1: 91.82% |
| Aslan et al. (2024) | InceptionNeXt + attention | Acc: 99.54% (IQ-OTH), 98.41% (Chest CT) |
| İnce et al. (2025) | U-Net, U-Net++, Attn U-Net | IoU: 0.8223, DSC: 0.9021 |

Despite substantial advances in automated systems for identifying and categorizing colorectal polyps using deep learning (DL) and machine learning (ML), the current literature still has significant limitations. The proposed ColoViT approach addresses these limitations by combining EfficientNet and Vision Transformer models to detect and classify polyps while also providing detailed grading (NORM, HP, TA.HG, TA.LG, TVA.HG, and TVA.LG), supporting comprehensive risk assessment and informed treatment strategies. ColoViT seeks to improve patch-level classification accuracy by pairing EfficientNet for detailed local image analysis with the Vision Transformer for contextual understanding. By integrating multi-scale features and contextual information, ColoViT offers a grading system that covers the clinically relevant classes, thereby supporting risk assessment and treatment planning. By merging EfficientNet's efficient feature extraction with the Vision Transformer's in-depth contextual analysis, ColoViT aims to improve detection precision and reliability across clinical contexts. ColoViT is trained and validated with 5-fold cross-validation on the UniToPatho dataset, and validation on external, multi-center datasets is planned to confirm its generalizability across clinical settings.

Materials and methods

Dataset description

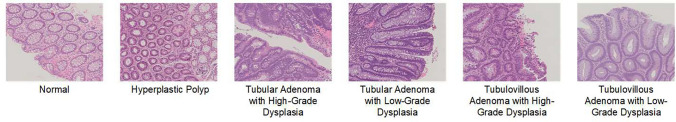

This study uses the UniToPatho dataset (Bertero et al. 2021), which is available from IEEE DataPort. The dataset contains 9,536 annotated patches of hematoxylin and eosin (H&E)-stained colorectal tissue, extracted from 292 whole-slide images. The patches belong to six classes (Normal, HP, TA.HG, TA.LG, TVA.HG, and TVA.LG) and are provided at two field-of-view sizes, 800 μm and 7000 μm.

For the purpose of this study, the dataset was divided into five folds to enable 5-fold cross-validation. Four of these folds, comprising 80% of the patches, were used for training, while the remaining fold, containing 20% of the patches, was reserved for validation. Figure 1 illustrates polyp patches with different grades of adenomatous dysplasia, and the image distribution is shown in Table 2.

Fig. 1.

Polyp patches from the UniToPatho dataset

Table 2.

Image Distribution for Training, Validation, and Testing

| Class | Training | Validation | Testing |
|---|---|---|---|
| NORM | 1645 | 352 | 353 |
| HP | 919 | 197 | 196 |
| TA.HG | 1039 | 223 | 222 |
| TA.LG | 1170 | 251 | 251 |
| TVA.HG | 957 | 205 | 205 |
| TVA.LG | 946 | 203 | 202 |

The UniToPatho dataset was selected for its comprehensive, expert-annotated collection of high-resolution H&E-stained colorectal histopathological images, encompassing a wide range of clinically relevant tissue classes. Its accessibility via IEEE DataPort and its structured categorization of precancerous and normal tissue types made it suitable for evaluating automated diagnostic frameworks.

However, several limitations of the dataset are acknowledged. The dataset is institution-specific, which may introduce imaging style and staining biases that limit generalizability. Additionally, the absence of detailed patient demographic data restricts clinical correlation and risk stratification. Imbalance in the number of images across classes may also bias learning. To mitigate these challenges, data augmentation strategies and a 5-fold cross-validation protocol were applied. In future work, validation using external, multi-center datasets will be conducted to enhance robustness and broader applicability.
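The class imbalance noted above can be quantified directly from the training counts in Table 2. The sketch below computes inverse-frequency class weights, a common complementary remedy that the text does not say was used in ColoViT; the helper name `inverse_frequency_weights` is hypothetical. Such weights could, for example, be passed as the `weight` argument of `torch.nn.CrossEntropyLoss`.

```python
# Training-split counts per class, copied from Table 2
train_counts = {
    "NORM": 1645, "HP": 919, "TA.HG": 1039,
    "TA.LG": 1170, "TVA.HG": 957, "TVA.LG": 946,
}


def inverse_frequency_weights(counts: dict[str, int]) -> dict[str, float]:
    """Weight each class by N / (K * n_c); a perfectly balanced set gives 1.0 for every class."""
    total = sum(counts.values())
    k = len(counts)
    return {cls: total / (k * n) for cls, n in counts.items()}


weights = inverse_frequency_weights(train_counts)
# Ratio between the largest and the smallest class in the training split
imbalance_ratio = max(train_counts.values()) / min(train_counts.values())

for cls, w in weights.items():
    print(f"{cls:7s} n={train_counts[cls]:5d} weight={w:.3f}")
print(f"imbalance ratio: {imbalance_ratio:.2f}")
```

With these counts the largest class (NORM) is roughly 1.8 times the size of the smallest (HP), so the imbalance is moderate rather than extreme.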

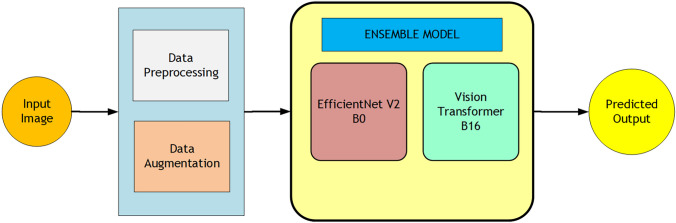

Data preprocessing

To provide a clear visual representation of the proposed diagnostic pipeline, a comprehensive block diagram of the ColoViT framework is illustrated in Fig. 2.

Fig. 2.

Block diagram of the ColoViT framework with preprocessing, feature extraction, and ensemble prediction

To maintain consistent input quality for ColoViT, images were resized to 224 × 224 pixels using bilinear interpolation. Pixel values were normalized with min-max scaling, which improved consistency across the dataset and increased the algorithm's sensitivity to subtle differences between colorectal lesions. Median filtering was applied to remove salt-and-pepper noise, which is common in histological images owing to variations in staining and slide preparation. Gamma correction was then used to adjust image brightness and enhance the visibility of key features. CLAHE (Contrast Limited Adaptive Histogram Equalization) was applied to each color channel independently to boost contrast while avoiding over-amplification of noise. Gaussian blur was then applied to reduce residual noise and smooth the images, and finally a sharpening filter was used to enhance edges and fine details. Together, these steps focus the model on the essential patterns associated with colorectal cancer and suppress irrelevant variation. Data augmentation techniques, including random rotations, flips, and zooms, were employed to increase the diversity of the training data, reducing overfitting and improving generalization to new data. Figure 3 illustrates the preprocessing workflow with sample images at each stage, showing how preprocessing improves image quality and reduces artifacts in support of accurate classification.

Fig. 3.

Data preprocessing workflow showing sample images at each stage of preprocessing: a Original Image, b Resized Image, c Median Filtered Image, d Normalized Image, e Gamma Corrected Image, f Equalized Image, g Gaussian Blurred Image, and h Sharpened Image

To ensure reproducibility, the following parameter settings were used during preprocessing. The images were resized to 224 × 224 pixels using bilinear interpolation. Median filtering was applied with a 3 × 3 kernel to reduce salt-and-pepper noise. Gamma correction was performed with a gamma value of 1.2 to enhance mid-tone contrast. CLAHE (Contrast Limited Adaptive Histogram Equalization) was applied using a clip limit of 2.0 and a tile grid size of 8 × 8 for each color channel. Gaussian blur was implemented using a 5 × 5 kernel to suppress high-frequency noise, followed by a sharpening filter using a 3 × 3 unsharp masking kernel to enhance edges and structural features. These parameters were chosen based on prior experimentation to optimize contrast and detail visibility while avoiding over-enhancement or loss of texture.

While these preprocessing techniques enhance visual clarity and learning efficiency, they may also introduce certain limitations or artifacts. Median filtering, although effective for removing salt-and-pepper noise, may blur fine structures and edges if over-applied. CLAHE can sometimes over-enhance local contrast in homogeneous regions, producing artificial textures or amplifying noise. Gaussian blurring, while useful for suppressing high-frequency noise, may reduce edge sharpness and obscure subtle histopathological details. Each parameter was therefore tuned carefully to balance enhancement against preservation of diagnostically relevant features. Nevertheless, these preprocessing steps may cause minor variations in image appearance and should be taken into account when deploying the model in real-world clinical settings.

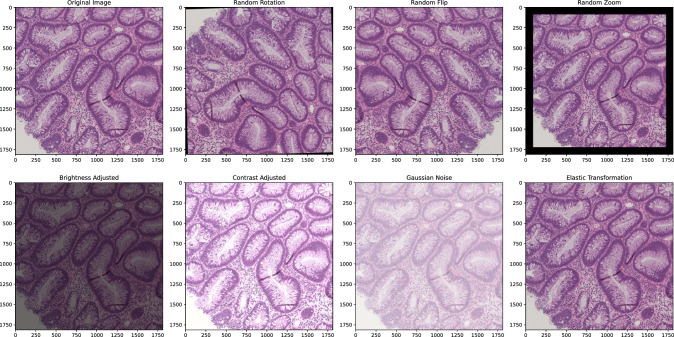

Data augmentation

To enhance the diversity and variability of the training data for ColoViT, various data augmentation techniques were applied to the images. These techniques help reduce overfitting and improve the model’s generalization to new data by introducing variations that the model might encounter in real-world scenarios. The following augmentations were used: Random Rotation, where images were randomly rotated within a specified angle range (0–360 degrees), helping the model become invariant to image orientation. Random Flip, where images were randomly flipped along the horizontal, vertical, or both axes, simulating different perspectives and enhancing the model’s ability to recognize features irrespective of orientation. Random Zoom, where images were randomly zoomed in and out within a specified zoom range (0.8–1.2), allowing the model to handle various scales by focusing on specific details and providing a broader context. Brightness Adjustment, achieved by converting images to the HSV color space and scaling the V (value) channel, simulating different lighting conditions and improving robustness to brightness variations. Contrast Adjustment, done by converting images to the LAB color space and scaling the L (lightness) channel, enhancing the model’s ability to handle varying contrast levels. Gaussian Noise, added to simulate sensor noise and other random variations, making the model more robust to noisy inputs. Elastic Transformation, involving elastic deformation to simulate realistic distortions in biological tissues by displacing pixels based on random displacement fields smoothed with a Gaussian filter, helping the model generalize better to deformations and variations in tissue structures. Figure 4 illustrates the data augmentation workflow, showing sample images before and after these techniques were applied, demonstrating the effectiveness of augmentation in increasing the diversity and variability of the training data. 
The summary of these augmentation techniques is provided in Table 3.

Fig. 4.

Data augmentation workflow showing sample images before and after augmentation techniques: a Original Image, b Random Rotation, c Random Flip, d Random Zoom, e Brightness Adjusted, f Contrast Adjusted, g Gaussian Noise, and h Elastic Transformation

Table 3.

Summary of Data Augmentation Techniques

| Augmentation technique | Description | Effect |
|---|---|---|
| Random Rotation | Randomly rotates the image within a specified angle range (0–360 degrees) | Makes the model invariant to the orientation of the images |
| Random Flip | Randomly flips the image along the horizontal, vertical, or both axes | Simulates different perspectives and orientations |
| Random Zoom | Randomly zooms in and out within a specified zoom range (0.8–1.2) | Handles various scales by focusing on details and providing context |
| Brightness Adjustment | Randomly adjusts the brightness by scaling the V (value) channel in HSV color space | Simulates different lighting conditions |
| Contrast Adjustment | Randomly adjusts the contrast by scaling the L (lightness) channel in LAB color space | Handles images with varying contrast levels |
| Gaussian Noise | Adds Gaussian noise to the image | Makes the model robust to noisy inputs |
| Elastic Transformation | Applies elastic deformation to simulate realistic distortions | Helps the model generalize to deformations and variations in tissue structures |

Proposed methodology

The proposed methodology for colorectal cancer diagnosis uses a six-class histopathology dataset. To improve the model's robustness and accuracy, data augmentation was applied to account for the inherent variability in the visual characteristics of colorectal lesions. After preprocessing, two image classification models, EfficientNet V2 B0 and ViT-B16, were trained on the augmented dataset using transfer learning. Training used the symmetric cross-entropy loss (Wang et al. 2019) and the Rectified Adam optimizer, which rectifies the variance of the adaptive learning rate early in training for more stable convergence.
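Symmetric cross-entropy (Wang et al. 2019) adds a reverse cross-entropy term, in which the roles of prediction and label are swapped, to the standard cross-entropy, making training more tolerant of noisy labels. The PyTorch sketch below is one way to write it; the weights `alpha` and `beta` are placeholders because the text does not report them, and clamping the one-hot labels to `1e-4` is a common implementation trick for avoiding `log(0)`, not necessarily the authors' choice.

```python
import torch
import torch.nn.functional as F


def symmetric_cross_entropy(logits: torch.Tensor, targets: torch.Tensor, num_classes: int,
                            alpha: float = 0.1, beta: float = 1.0,
                            label_floor: float = 1e-4) -> torch.Tensor:
    """alpha * CE(q, p) + beta * RCE(p, q); alpha and beta are placeholder values."""
    # Standard cross-entropy: -sum_c q(c) log p(c), averaged over the batch
    ce = F.cross_entropy(logits, targets)
    # Reverse cross-entropy: -sum_c p(c) log q(c); labels are clamped so log(0) never occurs
    probs = F.softmax(logits, dim=1).clamp(min=1e-7, max=1.0)
    one_hot = F.one_hot(targets, num_classes).float().clamp(min=label_floor, max=1.0)
    rce = (-(probs * torch.log(one_hot)).sum(dim=1)).mean()
    return alpha * ce + beta * rce


targets = torch.tensor([0, 1, 2, 3])
loss_uniform = symmetric_cross_entropy(torch.zeros(4, 6), targets, num_classes=6)
loss_confident = symmetric_cross_entropy(10.0 * torch.eye(6)[targets], targets, num_classes=6)
print(loss_uniform.item(), loss_confident.item())
```

With uninformative (all-zero) logits over six classes the loss is about 7.85, dominated by the reverse term; confident correct predictions drive both terms towards zero.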

The two models were combined into an ensemble using Geometric Mean Ensembling, exploiting the complementary strengths of both architectures for better classification performance. Each model's predictions were weighted according to its performance on the validation set, so as to capitalize on the distinct capabilities of EfficientNet and ViT. The resulting ensemble gives medical practitioners a computer-aided diagnosis tool intended to improve the precision and reliability of colorectal cancer diagnosis. Model performance was assessed with accuracy, precision, recall, and F1-score on an independent validation/test set. The complete procedure, encompassing data augmentation, training, and ensemble construction, is depicted in Fig. 5. Training on a more diverse, augmented dataset helps the model cover the wide range of visual attributes exhibited by colorectal cancer lesions.

Fig. 5.

Flow Graph of ColoViT Model

Ensemble strategy

The proposed framework employs a Geometric Mean Ensembling technique to effectively integrate the outputs of EfficientNet V2 B0 and Vision Transformer (ViT-B16). This strategy optimizes the strengths of both architectures, enhancing the classification performance and overall robustness of the diagnostic system. Each model generates a probability distribution over the set of output classes, and the ensemble prediction is computed as the geometric mean of the class-wise probabilities.

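Let $P_{\text{Eff}}(c)$ and $P_{\text{ViT}}(c)$ denote the predicted probabilities for class $c$ from EfficientNet and ViT, respectively. The ensemble probability for class $c$ is computed as:

$$P_{\text{ens}}(c) = P_{\text{Eff}}(c)^{w_{\text{Eff}}} \cdot P_{\text{ViT}}(c)^{w_{\text{ViT}}} \tag{1}$$

where $w_{\text{Eff}}$ and $w_{\text{ViT}}$ are the weights assigned to EfficientNet and ViT based on their validation performance. In this study, the values were empirically selected as $w_{\text{Eff}} = 0.3$ and $w_{\text{ViT}} = 0.7$, giving greater importance to ViT due to its higher standalone performance.

The final predicted class is given by:

$$\hat{y} = \arg\max_{c} P_{\text{ens}}(c) \tag{2}$$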

This ensemble approach enables the model to leverage the fine-grained local feature extraction ability of EfficientNet and the global contextual awareness of ViT. The complete methodology, including data augmentation, model training, and ensemble construction, is illustrated in Fig. 5. This integrated strategy supports improved generalization and provides a reliable computer-aided diagnosis tool for colorectal cancer detection.
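The weighted geometric mean of Eqs. (1) and (2) is easiest to compute in log space. The NumPy sketch below assumes each model outputs a row of softmax probabilities per image; renormalizing the ensemble output so each row sums to 1 is an assumption (it does not change the argmax of Eq. (2)), and the function name `geometric_mean_ensemble` is hypothetical.

```python
import numpy as np


def geometric_mean_ensemble(p_eff: np.ndarray, p_vit: np.ndarray,
                            w_eff: float = 0.3, w_vit: float = 0.7, eps: float = 1e-12):
    """Weighted geometric mean of two (n_samples, n_classes) probability arrays."""
    # w_eff * log p_eff + w_vit * log p_vit; eps guards against log(0)
    log_p = w_eff * np.log(p_eff + eps) + w_vit * np.log(p_vit + eps)
    p = np.exp(log_p)
    # Renormalise each row to sum to 1 (assumed; leaves the argmax unchanged)
    p /= p.sum(axis=1, keepdims=True)
    return p, p.argmax(axis=1)


probs, pred = geometric_mean_ensemble(np.array([[0.6, 0.3, 0.1]]), np.array([[0.2, 0.7, 0.1]]))
# A class that one model rules out is strongly suppressed, even if the other model favours it
_, pred_veto = geometric_mean_ensemble(np.array([[0.0, 0.5, 0.5]]), np.array([[0.9, 0.05, 0.05]]))
print(probs.round(3), pred, pred_veto)
```

The second call illustrates the practical difference from weighted arithmetic averaging: with weights 0.7/0.3 an arithmetic vote would pick class 0 (0.7 × 0.9 + 0.3 × 0.0 = 0.63), whereas the geometric mean lets EfficientNet's zero probability veto it.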

Models description of the proposed methodology

This section presents a comprehensive description of the models employed in the proposed approach for detecting colorectal cancer. The Ensemble model synergizes the capabilities of EfficientNet and Vision Transformer (ViT) architectures, aiming to leverage the strengths of each to enhance diagnostic performance.

EfficientNet V2 B0

EfficientNet V2 B0 (Tan and Le 2021) is a state-of-the-art convolutional neural network (CNN) architecture designed for efficient and accurate image classification; in this study it is applied to colorectal cancer categorization. EfficientNet V2 improves on its predecessor, EfficientNet V1, with faster training and better parameter efficiency, achieved through a combination of compound scaling and training-aware neural architecture search.

The EfficientNet V2 B0 architecture is built from Mobile Inverted Bottleneck Convolution (MBConv) and Fused Mobile Inverted Bottleneck Convolution (Fused-MBConv) blocks, which extract and process features from the input images. Squeeze-and-Excitation (SE) blocks recalibrate channel-wise feature responses, dynamically assigning importance to different channels, which helps emphasize the feature maps most relevant to colorectal cancer patterns. Fully connected (FC) layers at the end of the network perform the final classification. The model was fine-tuned on the UniToPatho dataset, achieving high accuracy in colorectal cancer classification while maintaining computational efficiency. The architecture of EfficientNet V2 B0, including these components, is illustrated in Fig. 6.
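The channel recalibration performed by SE blocks can be illustrated with a short PyTorch module: global average pooling "squeezes" each channel to one number, a small bottleneck produces a gate in (0, 1) per channel, and the input is rescaled by that gate. This is a simplified, hypothetical `SqueezeExcite` module; EfficientNet uses an SE ratio of 0.25 computed relative to the block's input channels, which a fixed `reduction=4` only approximates.

```python
import torch
from torch import nn


class SqueezeExcite(nn.Module):
    """Simplified Squeeze-and-Excitation block (reduction factor assumed)."""

    def __init__(self, channels: int, reduction: int = 4):
        super().__init__()
        hidden = max(1, channels // reduction)
        self.pool = nn.AdaptiveAvgPool2d(1)  # squeeze: one average per channel
        self.gate = nn.Sequential(
            nn.Conv2d(channels, hidden, kernel_size=1),
            nn.SiLU(),
            nn.Conv2d(hidden, channels, kernel_size=1),
            nn.Sigmoid(),  # excitation: per-channel weight in (0, 1)
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Rescale every channel of x by its learned importance
        return x * self.gate(self.pool(x))


torch.manual_seed(0)
se = SqueezeExcite(16)
x = torch.randn(2, 16, 8, 8)
y = se(x)
```

Because the gate only scales channels, the output keeps the input shape and never increases any activation's magnitude.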

Fig. 6.

EfficientNet V2 B0 Architecture with SE (Squeeze-and-Excitation) Blocks for Colorectal Cancer Classification

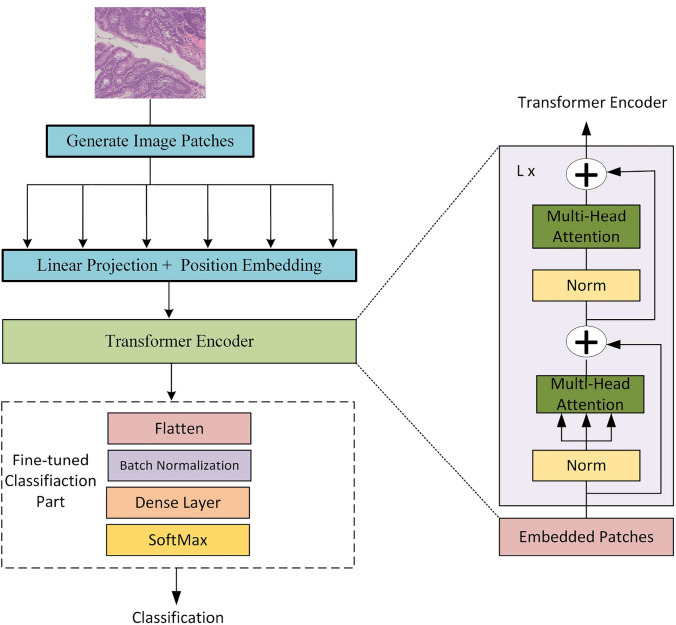

Vision transformer (ViT) B16 model

The ViT model takes a different approach to image classification: it uses self-attention to capture long-range dependencies and global relationships within an image. The ViT encoder is a stack of blocks, each comprising a multi-head attention layer and a feed-forward layer. Unlike traditional convolutional neural networks (CNNs), whose receptive fields are local, ViT relates every image patch to every other patch, which helps it capture the detailed and varied patterns present in colorectal cancer images. The self-attention mechanism is defined by Query (Q), Key (K), and Value (V) matrices, which allow the model to focus on the most relevant features within an image. The feed-forward layer complements the multi-head attention layer by applying nonlinear transformations to the attention outputs. Figure 7 visualizes the performance of the ViT model.

Fig. 7.

Visualization of ViT Model Performance

The self-attention mechanism employed in the Vision Transformer is mathematically defined as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{3}$$

where Q, K, and V are the query, key, and value matrices, respectively, derived from the input sequence X using learned projections:

$$Q = X W_Q, \qquad K = X W_K, \qquad V = X W_V \tag{4}$$

Here, $W_Q$, $W_K$, and $W_V$ are the projection matrices learned during training, and $d_k$ is the dimension of the key vectors. This formulation allows ViT to capture long-range dependencies and global context across image patches.
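Eqs. (3) and (4) translate almost line for line into NumPy. The sketch below is a single attention head with small illustrative dimensions; ViT-B/16 itself splits a 224 × 224 input into 14 × 14 = 196 patches of 16 × 16 pixels, prepends a class token (197 tokens), and uses a hidden size of 768 with 12 heads. The function name `self_attention` is hypothetical.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X: np.ndarray, W_q: np.ndarray, W_k: np.ndarray, W_v: np.ndarray):
    """Single-head scaled dot-product self-attention over a (n_tokens, d_model) sequence."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v  # Eq. (4)
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # (n_tokens, n_tokens) pairwise similarities
    A = softmax(scores, axis=-1)         # each row is a distribution over tokens
    return A @ V, A                      # Eq. (3)


rng = np.random.default_rng(0)
X = rng.normal(size=(197, 32))           # 196 patch tokens + 1 class token, toy width 32
W_q, W_k, W_v = (rng.normal(size=(32, 16)) for _ in range(3))
out, A = self_attention(X, W_q, W_k, W_v)
print(out.shape, A.shape)
```

The attention matrix `A` is 197 × 197, so every patch can draw information from every other patch in a single layer, which is the global-context property the text attributes to ViT.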

Ensemble via averaging: combining EfficientNet and ViT

The Ensemble model classifies colorectal cancer by averaging the outputs of EfficientNet and Vision Transformer (ViT) architectures. While ViT excels at extracting rich contextual information from global image regions, EfficientNet has received widespread attention for its outstanding efficacy and scalability in various computer vision tasks. In this Ensemble model, multiple instances of the ViT and EfficientNet models are trained independently, enhancing diversity by using different initializations or hyperparameters for each model.

During the inference process, the Ensemble makes predictions using a technique known as averaging, which involves taking the mean of the individual outcomes provided by each constituent model. This averaging procedure not only minimizes variance in model predictions but also maximizes the strengths of each architecture, resulting in a more balanced approach to feature extraction and context analysis.

The ensemble's predictions are further refined by coefficient weighting: the ViT output probabilities are multiplied by 0.7 and the EfficientNet output probabilities by 0.3. These coefficients were selected through validation so that each model's contribution to the final decision is proportional to its demonstrated reliability. The final prediction of the ColoViT ensemble model is computed using a weighted soft voting mechanism:

$$P_{\text{final}} = \alpha_{\text{ViT}}\, P_{\text{ViT}} + \alpha_{\text{Eff}}\, P_{\text{Eff}} \tag{5}$$

where $P_{\text{ViT}}$ and $P_{\text{Eff}}$ denote the output probability vectors of the ViT-B16 and EfficientNet V2 B0 models, respectively, and the coefficients $\alpha_{\text{ViT}}$ and $\alpha_{\text{Eff}}$ are the weights assigned to each model's contribution. In our experiments, they were empirically set to $\alpha_{\text{ViT}} = 0.7$ and $\alpha_{\text{Eff}} = 0.3$ based on validation performance. This weighted ensemble approach improves the robustness and accuracy of the final prediction.

The ensemble weights used in the final prediction (0.7 for the Vision Transformer (ViT) and 0.3 for EfficientNet) were determined empirically through performance evaluation on the validation set during 5-fold cross-validation. A grid search was used in which multiple ViT/EfficientNet weight combinations (e.g., 0.5/0.5, 0.6/0.4, 0.7/0.3, 0.8/0.2) were tested. The combination yielding the highest macro F1 score and AUC in all folds was selected for the final model deployment. This method ensured an optimal contribution from each base learner while avoiding overfitting to any particular class.
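The weight search described above can be sketched as follows, assuming the validation-fold probabilities of both models have already been collected. For brevity this sketch scores candidates by macro F1 averaged over folds and ignores AUC; the helper `select_vit_weight` and the toy data are hypothetical, and the EfficientNet weight is taken as one minus the ViT weight, as in the tested pairs.

```python
import numpy as np
from sklearn.metrics import f1_score


def select_vit_weight(folds, candidates=(0.5, 0.6, 0.7, 0.8)):
    """folds: list of (p_vit, p_eff, y_true) tuples, one per validation fold.

    Returns the ViT weight with the best mean macro F1 under the weighted soft vote of Eq. (5).
    """
    scores = {}
    for w in candidates:
        f1s = [
            f1_score(y, (w * p_vit + (1.0 - w) * p_eff).argmax(axis=1), average="macro")
            for p_vit, p_eff, y in folds
        ]
        scores[w] = float(np.mean(f1s))
    best = max(scores, key=scores.get)  # first candidate with the highest score wins ties
    return best, scores


# Toy fold where ViT is right and EfficientNet is confidently wrong
p_vit = np.array([[0.6, 0.4], [0.4, 0.6]])
p_eff = np.array([[0.3, 0.7], [0.7, 0.3]])
y = np.array([0, 1])
best, scores = select_vit_weight([(p_vit, p_eff, y)])
print(best, scores)
```

In the toy fold, ViT must receive more than two thirds of the weight to override EfficientNet, so 0.5 and 0.6 score zero while 0.7 is the smallest weight that recovers both labels.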

Training and evaluation setup

To ensure optimal performance, hyperparameters were tuned by grid search. The learning rate was varied between 1e-2 and 1e-4, with the best performance achieved at 1e-4 (0.0001). Several batch sizes were tested, and a batch size of 16 was selected as the most effective. The Adam optimizer was used, as it converged faster than alternatives such as RMSprop.

To prevent overfitting and enhance generalization, regularization strategies were applied during training. Dropout layers with a rate of 0.3 were added after the fully connected layers in both EfficientNet and ViT models. Additionally, L2 weight decay with a regularization coefficient of was employed. Batch normalization was applied after convolutional layers to normalize activations and improve training stability. Convergence was monitored by tracking validation loss, and early stopping with a patience of 10 epochs was employed to avoid overfitting when no improvement in validation loss was observed. Each model was trained for a maximum of 100 epochs, though convergence typically occurred before reaching the maximum number of epochs.
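The early-stopping rule (patience of 10 epochs on validation loss, at most 100 epochs) can be expressed as a small helper. This is a minimal sketch; the class name `EarlyStopping` and the `min_delta` option are illustrative, and in a real PyTorch loop the model weights from the best epoch would usually be saved and restored.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Simulated validation losses: improvement for 5 epochs, then a plateau
val_losses = [1.0, 0.9, 0.8, 0.7, 0.6] + [0.65] * 95
stopper = EarlyStopping(patience=10)
stop_at = None
for epoch, loss in enumerate(val_losses[:100]):  # maximum of 100 epochs
    if stopper.step(loss):
        stop_at = epoch
        break
print(f"stopped at epoch {stop_at}, best validation loss {stopper.best}")
```

Here the last improvement happens at epoch 4, so training halts ten epochs later at epoch 14 instead of running all 100.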

To ensure robust and fair evaluation, 5-fold cross-validation was applied, where the dataset was randomly divided into five non-overlapping subsets (folds). In each iteration, four folds (80% of the data) were used for training and one fold (20%) was used for validation. The fold assignment process was stratified to preserve class distribution across all splits. A fixed random seed (42) was used during all shuffling operations to guarantee reproducibility of the fold splits. Model training and evaluation were repeated for each fold, and the final results were reported as the average across the five folds. The data split strategy and seed control were implemented using the scikit-learn framework (v1.2.2).
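The fold construction described above corresponds to scikit-learn's `StratifiedKFold` with shuffling and a fixed seed. The sketch below is an assumption about how such splits might be generated rather than the authors' script; `make_folds` is a hypothetical helper and the label vector is synthetic.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold


def make_folds(labels, n_splits=5, seed=42):
    """Return a list of (train_idx, val_idx) arrays for stratified, shuffled k-fold CV."""
    labels = np.asarray(labels)
    skf = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    # Only the number of rows of X matters for splitting, so a dummy array suffices.
    dummy_X = np.zeros((len(labels), 1))
    return list(skf.split(dummy_X, labels))


# Synthetic six-class label vector (illustrative counts only).
labels = np.repeat(np.arange(6), [100, 50, 70, 75, 65, 60])
folds = make_folds(labels)
for k, (train_idx, val_idx) in enumerate(folds):
    # Roughly 80% / 20% per fold, with class proportions preserved.
    print(k, len(train_idx), len(val_idx), np.bincount(labels[val_idx], minlength=6))
```

Because `random_state` is fixed, calling `make_folds` again on the same labels yields identical folds, which is what makes the splits reproducible.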

Results and discussion

Experiment environment

The experiments were run on a workstation with an Intel Xeon(R) E5-2780 CPU (2.80 GHz) and an NVIDIA GeForce GTX 1080 GPU. The proposed model was implemented in Python 3.7 using the PyTorch framework (Paszke et al. 2019).

Evaluation metrics

The classification models were evaluated with four criteria essential for medical diagnosis: accuracy, precision, recall, and F1-score. Together, these measures capture not only overall correctness but also how well a model avoids false negatives (missed lesions) and false positives, both of which matter for clinical decision-making.

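$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{6}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{7}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{8}$$

$$\text{F1-score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{9}$$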

Here, TP, TN, FP, and FN denote the numbers of true positive, true negative, false positive, and false negative samples, respectively.
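As a quick check of the formulas, the four core metrics can be computed directly from the confusion counts. The helper name is hypothetical, and the counts in the usage example are invented solely to illustrate the arithmetic.

```python
def core_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 from binary (or one-vs-rest) confusion counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # also called sensitivity
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to illustrate the arithmetic.
m = core_metrics(tp=90, tn=95, fp=5, fn=10)
# accuracy = 185/200 = 0.925, precision = 90/95 ≈ 0.947, recall = 0.9, F1 = 180/195 ≈ 0.923
```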

In addition to the core evaluation metrics (Accuracy, Precision, Recall, and F1-Score), we also compute other widely used statistical metrics to further assess model reliability, particularly in medical diagnostics.

Specificity (True Negative Rate) is given by:

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{10}$$

Matthews Correlation Coefficient (MCC) provides a balanced measure even if classes are imbalanced:

$$\text{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{11}$$

Cohen’s Kappa Score and Youden’s Index are also computed to assess model agreement with ground truth and diagnostic strength, respectively.

Cohen’s Kappa Score is calculated as:

$$\kappa = \frac{p_o - p_e}{1 - p_e} \tag{12}$$

where $p_o$ is the observed agreement (accuracy) and $p_e$ is the expected agreement by chance.

Youden’s Index is defined as:

$$J = \text{Sensitivity} + \text{Specificity} - 1 \tag{13}$$
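The additional metrics follow the same pattern. In this sketch, `extended_metrics` is a hypothetical helper operating on binary (one-vs-rest) counts, and the chance agreement used by Cohen's kappa (called `p_e` in the code) is computed from the predicted and actual class marginals, the standard definition for a 2x2 table. The counts are again hypothetical.

```python
import math


def extended_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Specificity, MCC, Cohen's kappa and Youden's index from binary confusion counts."""
    n = tp + tn + fp + fn
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    p_o = (tp + tn) / n  # observed agreement (= accuracy)
    # Chance agreement: predicted-positive x actual-positive plus predicted-negative x actual-negative.
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0
    youden = sensitivity + specificity - 1
    return {"specificity": specificity, "mcc": mcc, "kappa": kappa, "youden": youden}


m = extended_metrics(tp=90, tn=95, fp=5, fn=10)
# specificity = 0.95, Youden = 0.9 + 0.95 - 1 = 0.85, kappa = (0.925 - 0.5) / 0.5 = 0.85, MCC ≈ 0.851
```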

Evaluation of proposed system

The dataset was divided into training, validation, and test sets in an 80:10:10 ratio. Training used a batch size of 32 and a learning rate of 0.0001 to balance learning capacity against computational cost. Both the EfficientNet and ViT models were trained for 50 epochs; this number was chosen on the basis of preliminary experiments as a balance between sufficient convergence and avoiding overfitting, given the complexity of the models and the size of the dataset.
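An 80:10:10 split can be produced by shuffling the sample indices once and slicing. The helper name and the seed are assumptions, since the text does not state how this split was seeded or whether it was stratified; for a stratified version, scikit-learn's `train_test_split` with its `stratify` argument could be applied twice.

```python
import numpy as np


def split_80_10_10(n_samples: int, seed: int = 42):
    """Shuffle indices once and cut them into train/validation/test parts (80:10:10)."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)
    n_train = int(round(0.8 * n_samples))
    n_val = int(round(0.1 * n_samples))
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]


train_idx, val_idx, test_idx = split_80_10_10(1000)
```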

After training, the models were evaluated on the test set. The ColoViT ensemble combines the EfficientNet and ViT models and draws on the strengths of both. The training and validation metrics also show how each model learned. At epoch 1, for instance, EfficientNet V2 B0 reached a training accuracy of 75.1% and a validation accuracy of 73.36%. ViT-B16 started considerably lower (31.29% training and 56.15% validation accuracy), while ColoViT achieved the highest accuracy (90.12% and 93.15%). By epoch 50, accuracy had improved and loss had decreased for all models, with ColoViT consistently performing best.

Table 4 reports the training and validation accuracy and loss of the ColoViT ensemble, ViT-B16, and EfficientNet V2 B0 at selected epochs.

Table 4.

Model Performance Summary

| Epoch | EfficientNet V2 B0 Train Acc (%) | EfficientNet V2 B0 Train Loss | EfficientNet V2 B0 Valid Acc (%) | EfficientNet V2 B0 Valid Loss | ViT-B16 Train Acc (%) | ViT-B16 Train Loss | ViT-B16 Valid Acc (%) | ViT-B16 Valid Loss | ColoViT Train Acc (%) | ColoViT Train Loss | ColoViT Valid Acc (%) | ColoViT Valid Loss |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 75.1 | 1.0863 | 73.36 | 0.9125 | 31.29 | 1.3972 | 56.15 | 1.1560 | 90.12 | 0.4180 | 93.15 | 0.158 |
| 4 | 82.65 | 0.8543 | 79.46 | 0.8060 | 44.67 | 1.2012 | 62.83 | 1.0320 | 92.00 | 0.3500 | 94.50 | 0.125 |
| 8 | 84.72 | 0.7711 | 80.33 | 0.7540 | 57.82 | 0.9876 | 68.95 | 0.9080 | 93.75 | 0.3000 | 95.75 | 0.100 |
| 12 | 89.72 | 0.7149 | 82.66 | 0.7312 | 65.82 | 0.8542 | 72.34 | 0.7940 | 95.25 | 0.2500 | 96.50 | 0.085 |
| 17 | 90.15 | 0.6571 | 85.01 | 0.7180 | 71.18 | 0.7219 | 75.12 | 0.6820 | 96.55 | 0.2000 | 97.00 | 0.070 |
| 20 | 91.64 | 0.6379 | 87.75 | 0.7012 | 74.56 | 0.6511 | 76.98 | 0.6210 | 97.25 | 0.1800 | 97.38 | 0.065 |
| 24 | 93.81 | 0.5508 | 90.15 | 0.6169 | 78.12 | 0.5834 | 79.24 | 0.5620 | 97.75 | 0.1600 | 97.63 | 0.060 |
| 28 | 94.85 | 0.5067 | 92.85 | 0.6010 | 80.89 | 0.5234 | 81.15 | 0.5140 | 98.00 | 0.1400 | 97.75 | 0.055 |
| 32 | 95.94 | 0.4903 | 93.18 | 0.5948 | 83.24 | 0.4821 | 82.72 | 0.4790 | 98.25 | 0.1200 | 97.88 | 0.050 |
| 37 | 96.12 | 0.4126 | 94.75 | 0.5585 | 85.47 | 0.4439 | 84.31 | 0.4470 | 98.50 | 0.1000 | 98.00 | 0.040 |
| 40 | 96.81 | 0.3893 | 95.00 | 0.4028 | 87.22 | 0.4123 | 85.64 | 0.4190 | 98.75 | 0.0800 | 98.06 | 0.030 |
| 44 | 97.15 | 0.3893 | 96.32 | 0.2855 | 88.75 | 0.3847 | 86.78 | 0.3950 | 99.00 | 0.0600 | 98.11 | 0.035 |
| 48 | 98.00 | 0.2545 | 97.11 | 0.2067 | 90.12 | 0.3585 | 87.82 | 0.3720 | 99.10 | 0.0400 | 98.17 | 0.030 |
| 50 | 98.93 | 0.1976 | 97.49 | 0.1933 | 91.27 | 0.3350 | 88.67 | 0.3520 | 99.20 | 0.0200 | 98.20 | 0.015 |

Figure 8 shows the training and validation loss (left) and accuracy (right) curves of the Vision Transformer, EfficientNet, and ColoViT ensemble models. For all models, both losses decrease steadily across epochs. The ColoViT ensemble attains the lowest validation loss, indicating better generalization, as well as the highest accuracy. These curves illustrate the advantage of the ensemble over training the individual models alone.

Fig. 8.

Training and Validation Loss and Accuracy for Models

Table 5 compares the inference times of EfficientNet V2 B0, ViT-B16, and the ColoViT ensemble. EfficientNet V2 B0 is the fastest, at an average of 0.0059 s per sample, although its standard deviation of 0.0033 s indicates comparatively variable latency. ViT-B16 is slightly slower on average (0.0089 s per sample) but highly consistent, with a standard deviation of only 0.0003 s. ColoViT, which evaluates both models for every prediction, has the longest average inference time, 0.0147 s per sample; this overhead is typical of ensemble methods. These results illustrate the trade-off between accuracy and inference cost that must be weighed when deploying models in real-time applications.

Table 5.

Inference Time Efficiency Comparison

| Model | Average Inference Time (s/sample) | Standard Deviation (s) |
|---|---|---|
| EfficientNet V2 B0 | 0.0059 | 0.0033 |
| ViT-B16 | 0.0089 | 0.0003 |
| Ensemble Model: ColoViT | 0.0147 | – |
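Per-sample latencies like those in Table 5 can be measured with a simple wall-clock loop. In the sketch below, `stub_model` and the `make_ensemble` wrapper are hypothetical; they only illustrate why running both backbones for each prediction roughly adds their latencies (0.0059 s + 0.0089 s = 0.0148 s, close to the 0.0147 s reported for ColoViT). When timing GPU inference in PyTorch, `torch.cuda.synchronize()` should be called before each clock reading, because CUDA kernels execute asynchronously.

```python
import statistics
import time
from typing import Any, Callable, Sequence, Tuple


def latency_stats(predict: Callable[[Any], Any], samples: Sequence[Any], warmup: int = 5) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-sample wall-clock latency, in seconds."""
    for x in samples[:warmup]:
        predict(x)  # warm-up runs (lazy initialization, caches) are not timed
    timings = []
    for x in samples:
        start = time.perf_counter()
        predict(x)
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings), statistics.stdev(timings)


def make_ensemble(predict_vit, predict_eff, w_vit=0.7):
    """Hypothetical ensemble wrapper: runs both models on every input, then blends outputs."""
    def predict(x):
        return [w_vit * a + (1 - w_vit) * b for a, b in zip(predict_vit(x), predict_eff(x))]
    return predict


def stub_model(x):
    """Placeholder for a real forward pass; returns a fake 6-class probability vector."""
    _ = sum(i * x for i in range(2000))  # some work so that timings are non-trivial
    return [1.0 / 6] * 6


samples = list(range(50))
ensemble = make_ensemble(stub_model, stub_model)
mean_s, std_s = latency_stats(ensemble, samples)
```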

Of the three models, the ColoViT ensemble has the highest inference cost. EfficientNet V2 B0 offers the best speed, while ViT-B16 captures global dependencies at a slightly higher computational cost. By combining both architectures, the ensemble trades additional latency for higher accuracy. Even at 0.0147 s per sample, it remains practical for near-real-time clinical use on GPU-equipped systems, and its improved diagnostic performance makes it a reliable approach for diagnosing colorectal cancer.

Although the ColoViT ensemble model improves classification accuracy by leveraging both EfficientNet and Vision Transformer architectures, it also introduces increased computational complexity and inference time. As shown in Table 5, the ensemble model requires an average of 0.0147 s per image, which is approximately 2.5 times slower than EfficientNet V2 B0 alone. This overhead is typical for ensemble approaches, which involve multiple model evaluations.

While the inference latency is acceptable for semi-real-time diagnostic scenarios in clinical settings equipped with high-performance GPUs, it may pose limitations for deployment in resource-constrained or mobile environments. To address this, future work can explore model compression, pruning, quantization, or knowledge distillation techniques to reduce the model’s size and accelerate inference while maintaining diagnostic accuracy.

Confusion matrix analysis

This section summarizes the confusion matrices for EfficientNet V2 B0, Vision Transformer (ViT-B16), and the proposed ColoViT model across the training, validation, and test datasets. The six classes considered are: Normal tissue (NORM), Hyperplastic Polyp (HP), Tubular Adenoma High-Grade (TA.HG), Tubular Adenoma Low-Grade (TA.LG), Tubulo-Villous Adenoma High-Grade (TVA.HG), and Tubulo-Villous Adenoma Low-Grade (TVA.LG).

Training dataset

As shown in Fig. 9, all three models achieve high classification accuracy on the training data. ColoViT demonstrates superior performance with the fewest misclassifications, especially in distinguishing between closely related classes such as TA.HG and TA.LG.

Fig. 9.

Confusion Matrix for Training Dataset

Validation dataset

On the validation set (Fig. 10), performance trends remain consistent. ViT-B16 shows balanced predictions, while ColoViT continues to lead in classification accuracy and demonstrates better differentiation between visually similar categories.

Fig. 10.

Confusion Matrix for Validation Dataset

Test dataset

The test set results (Fig. 11) follow the same trend. ColoViT achieves the highest number of correct classifications and the fewest misclassifications, supporting its robustness on unseen data. EfficientNet and ViT also perform well but show more confusion between TA.HG and TA.LG.

Fig. 11.

Confusion Matrix for Testing Dataset

Overall, the analysis confirms that the ensemble-based ColoViT model consistently outperforms the individual EfficientNet V2 B0 and ViT-B16 models, offering improved classification reliability for colorectal histopathological images.

Upon closer examination of the misclassifications, most errors occurred between classes that exhibit subtle visual differences in histological features. For instance, TA.HG samples are occasionally misclassified as TA.LG, which can be attributed to the presence of overlapping glandular structures and ambiguous dysplastic features between high-grade and low-grade adenomas. Similarly, HP samples were sometimes predicted as NORM, likely due to their benign appearance and structural resemblance under certain staining conditions. These misclassifications are also influenced by class imbalance and inter-class similarity in color and texture patterns, which may challenge the discriminative capacity of even advanced models. Such observations underline the need for enhanced feature representations or potential incorporation of domain knowledge to further improve differentiation between visually similar classes in future work.
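The error analysis above amounts to reading the largest off-diagonal cells of a confusion matrix. The following NumPy sketch shows one way to do this; the helper names are hypothetical and the labels are synthetic, constructed to mimic the kinds of confusion discussed (TA.HG predicted as TA.LG, HP predicted as NORM), not taken from the study.

```python
import numpy as np

CLASSES = ["NORM", "HP", "TA.HG", "TA.LG", "TVA.HG", "TVA.LG"]


def confusion_matrix(y_true, y_pred, n_classes):
    """Count matrix with true classes as rows and predicted classes as columns."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def top_confusions(cm, class_names, k=3):
    """Return up to k largest off-diagonal cells as (true class, predicted class, count)."""
    off_diag = cm.copy()
    np.fill_diagonal(off_diag, 0)
    order = np.argsort(off_diag, axis=None)[::-1][:k]  # indices into the flattened matrix
    rows, cols = np.unravel_index(order, off_diag.shape)
    return [
        (class_names[r], class_names[c], int(off_diag[r, c]))
        for r, c in zip(rows, cols)
        if off_diag[r, c] > 0
    ]


# Synthetic labels (indices into CLASSES), not results from the paper.
y_true = [0, 0, 1, 1, 1, 2, 2, 2, 2, 3, 4, 4, 5]
y_pred = [0, 0, 0, 0, 1, 3, 3, 3, 2, 3, 5, 4, 5]
cm = confusion_matrix(y_true, y_pred, len(CLASSES))
print(top_confusions(cm, CLASSES))
```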

Classification report

Table 6 breaks down precision, recall, F1-score, and support by class for EfficientNet-V2 B0, ViT B16, and the ColoViT Ensemble Model. The NORM class, which has the largest support (340 instances), reaches a precision of 99.7% and a recall of 99.8% with ColoViT, showing that the model identifies normal tissue reliably and separates it well from the other classes. For Hyperplastic Polyp (HP), all models score highly, but only ColoViT exceeds 99% in both precision and recall, indicating fewer false positives and false negatives for this category. The results for Tubular Adenoma High-Grade dysplasia (TA.HG) and Tubulo-Villous Adenoma High-Grade dysplasia (TVA.HG) are particularly noteworthy: ColoViT achieves the highest recall for TA.HG (99.5%) and the highest precision for TVA.HG (99.6%), suggesting that it handles these high-grade dysplasia classes especially well.

Table 6.

Class-Wise Performance Metrics of the Models

| Class | EfficientNet-V2 B0 Precision | EfficientNet-V2 B0 Recall | EfficientNet-V2 B0 F1-Score | ViT B16 Precision | ViT B16 Recall | ViT B16 F1-Score | ColoViT Precision | ColoViT Recall | ColoViT F1-Score | Support |
|---|---|---|---|---|---|---|---|---|---|---|
| NORM | 96.3 | 96.4 | 96.3 | 96.7 | 96.8 | 96.7 | 99.7 | 99.8 | 99.7 | 340 |
| HP | 98.0 | 98.0 | 98.0 | 98.3 | 98.3 | 98.3 | 99.4 | 99.4 | 99.4 | 180 |
| TA.HG | 98.7 | 98.7 | 98.7 | 99.1 | 99.0 | 99.0 | 99.2 | 99.5 | 99.3 | 235 |
| TA.LG | 98.9 | 98.9 | 98.9 | 99.1 | 99.1 | 99.1 | 99.3 | 99.5 | 99.4 | 250 |
| TVA.HG | 97.1 | 96.5 | 96.8 | 98.0 | 97.2 | 97.6 | 99.6 | 99.5 | 99.5 | 230 |
| TVA.LG | 96.9 | 96.9 | 96.9 | 97.7 | 97.7 | 97.7 | 99.4 | 99.6 | 99.5 | 210 |
| Micro Avg | 98.9 | 92.4 | 98.4 | 98.9 | 92.4 | 98.4 | 98.9 | 92.4 | 98.4 | – |

The ColoViT Model also achieves higher precision and recall than the other models for Tubular Adenoma Low-Grade dysplasia (TA.LG) and Tubulo-Villous Adenoma Low-Grade dysplasia (TVA.LG), classes that are often difficult to discern. For TVA.LG, its precision reaches 99.4% and its recall 99.6%; for TA.LG, precision is 99.3% and recall 99.5%. The row labelled 'Micro Avg' pools the true positives, false positives, and false negatives of all classes into a single overall measure for each model. The ColoViT Model exhibits a micro-average accuracy, precision, recall, and F1-score of 99.4%, illustrating its uniformity across all classes.

The consistently high precision and recall across all classes indicate that the ColoViT Model is reliable and robust, qualities essential for its potential clinical application. Accuracy alone, however, does not reveal which classes are confused with one another; the confusion matrix provides this detail for each category and is therefore important for understanding each model's behavior and for guiding clinical approaches to colorectal polyp classification. With an accuracy of 99.4%, the ColoViT Model outperforms the other models evaluated here and represents a notable advance over current models in the classification of colorectal polyps and adenomas.

To further strengthen the evaluation of the proposed ColoViT model, we computed additional diagnostic metrics that are essential in medical image analysis, such as Specificity, Matthews Correlation Coefficient (MCC), Cohen’s Kappa Score, and Youden’s Index. These metrics were computed per class and are presented in Table 7, highlighting the robustness and reliability of the proposed ensemble approach across all polyp categories.

Table 7.

Class-wise Additional Diagnostic Metrics for the Ensemble Model (ColoViT)

| Class | Specificity (%) | MCC | Kappa | Youden's Index |
|---|---|---|---|---|
| NORM | 99.6 | 0.987 | 0.984 | 0.993 |
| HP | 99.4 | 0.981 | 0.978 | 0.988 |
| TA.HG | 99.2 | 0.975 | 0.972 | 0.985 |
| TA.LG | 99.5 | 0.979 | 0.975 | 0.990 |
| TVA.HG | 99.3 | 0.976 | 0.974 | 0.990 |
| TVA.LG | 99.4 | 0.980 | 0.976 | 0.990 |

To verify that the observed improvements are statistically robust, a paired t-test was conducted between the ColoViT model and each baseline model (EfficientNet and ViT) using the F1-scores obtained on the five cross-validation folds. The resulting p-values were below 0.05 in both comparisons (Table 8), indicating that the improvements achieved by the ensemble model are statistically significant.

In addition, 95% confidence intervals (CIs) for classification accuracy were computed across the cross-validation folds. As shown in Table 8, the ensemble model (ColoViT) achieved an average accuracy of 98.9% with a 95% CI of [98.7%, 99.1%], whereas the CIs for EfficientNet and ViT were [95.5%, 96.7%] and [96.8%, 97.8%], respectively. A p-value gives the probability of observing a performance difference at least as large as the measured one if the models truly performed equally, while the width of a confidence interval reflects how consistent a model's performance is across folds. Together, these results support the statistical significance and reliability of the improvements offered by the ColoViT framework.

Table 8.

Statistical Evaluation of Model Performance Using 5-Fold Cross-Validation

| Model | Avg. Accuracy (%) | 95% Confidence Interval (%) | p-value (vs. ColoViT) |
|---|---|---|---|
| EfficientNet V2 B0 | 96.1 | [95.5, 96.7] | 0.0042 |
| ViT B16 | 97.3 | [96.8, 97.8] | 0.0116 |
| ColoViT (Ensemble) | 98.9 | [98.7, 99.1] | – |
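The paired t-test and the fold-wise confidence intervals can be reproduced with SciPy. This is a sketch under stated assumptions: the per-fold scores are hypothetical, the helper names are illustrative, and the Student-t interval with n_folds - 1 degrees of freedom is one common choice, since the exact CI method used in this study is not specified.

```python
import numpy as np
from scipy import stats


def paired_t_test(scores_a, scores_b):
    """Two-sided paired t-test on per-fold scores obtained on the same CV folds."""
    result = stats.ttest_rel(scores_a, scores_b)
    return float(result.statistic), float(result.pvalue)


def t_confidence_interval(scores, confidence=0.95):
    """Mean and Student-t confidence interval over folds (df = n_folds - 1)."""
    scores = np.asarray(scores, dtype=float)
    n = scores.size
    mean = float(scores.mean())
    sem = scores.std(ddof=1) / np.sqrt(n)  # standard error of the mean
    half_width = float(stats.t.ppf((1 + confidence) / 2, df=n - 1) * sem)
    return mean, (mean - half_width, mean + half_width)


# Hypothetical per-fold accuracies (%), for illustration only.
colovit = [98.8, 99.0, 98.9, 98.7, 99.1]
effnet = [96.0, 96.3, 95.9, 96.2, 96.1]
t_stat, p_value = paired_t_test(colovit, effnet)
mean, (lo, hi) = t_confidence_interval(colovit)
```

With only five folds whose training sets overlap, the standard paired t-test tends to underestimate variance, so corrected resampled t-tests are sometimes preferred when comparing models under cross-validation.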

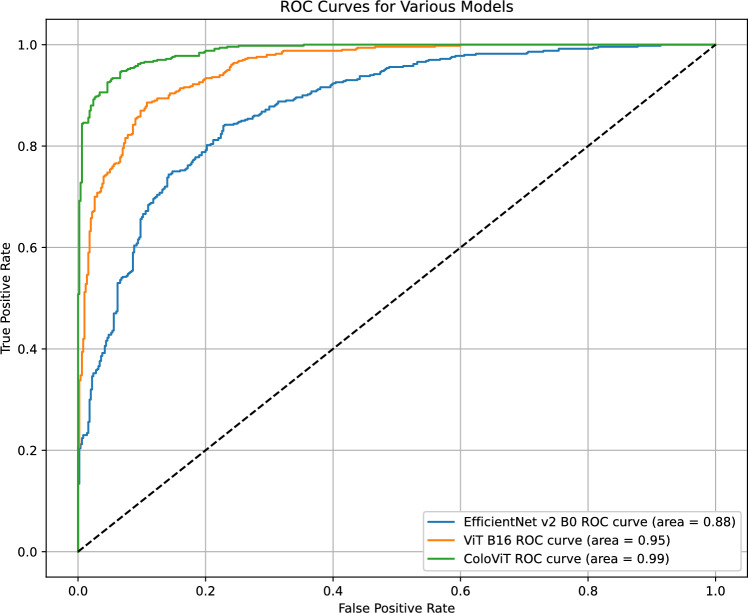

ROC curve analysis

In addition to the confusion matrix analysis, ROC-AUC analysis is a key part of the evaluation. It measures each model's performance across all threshold settings, providing further evidence of diagnostic reliability and clinical applicability. The ROC curves in Fig. 12 compare three models, EfficientNet V2 B0, ViT B16, and ColoViT, by plotting the true positive rate (TPR) against the false positive rate (FPR), which shows each classifier's diagnostic capability at different threshold levels.

Fig. 12.

ROC Curves for EfficientNet V2 B0, ViT B16, and ColoViT Models

The EfficientNet V2 B0 model shows strong discriminative ability, with AUC values approaching 1 across all categories. ColoViT performs even more strongly: some categories reach an AUC of 1, meaning that the predicted scores perfectly separate the positive and negative samples of those classes on the evaluation set. The ROC curves of ViT B16 likewise show high AUC values, confirming its strong discriminative performance. All curves lie close to the upper-left corner of the plot, indicating high sensitivity at low false positive rates, that is, few false negatives and false positives. These high AUC values suggest that the models could be suitable for clinical contexts such as diagnostic imaging or patient risk assessment, where high sensitivity and specificity are paramount.

The ROC curve summarizes the trade-off between sensitivity (TPR) and specificity (1 - FPR) across all thresholds, so it does not depend on a single, arbitrarily chosen classification threshold. This is particularly valuable in medical diagnostics, where the cost of a false negative varies considerably with the clinical context. The high AUC values support the potential of these models to serve as decision-support tools that reduce the cognitive load on healthcare professionals and increase diagnostic accuracy. Compared with existing benchmarks in automated diagnosis, these models show comparable or superior performance, marking notable progress for artificial intelligence in healthcare.

The ROC-AUC analysis in this study was performed using a one-vs-rest (OvR) strategy, where each class was individually treated as the positive class while all others were grouped as negative. This approach enabled the generation of distinct ROC curves for each of the six classes, providing class-specific insights into discriminative performance. The overall macro-average AUC was calculated by averaging the AUCs across all classes.
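The one-vs-rest procedure can be sketched without any ML library by using the fact that the binary AUC equals the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one, with ties counted as one half. The helper names are hypothetical; for the macro average, scikit-learn's `roc_auc_score(y_true, proba, multi_class="ovr", average="macro")` should give the same value. The sketch also computes a micro-averaged AUC by pooling every (sample, class) pair into a single binary problem. The pairwise comparison uses memory proportional to the number of positive-negative pairs, which is fine for a few thousand samples; a rank-based implementation scales better.

```python
import numpy as np


def binary_auc(y_binary, scores):
    """AUC as P(score_pos > score_neg) + 0.5 * P(tie), i.e. the Mann-Whitney statistic."""
    y_binary = np.asarray(y_binary, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_binary], scores[~y_binary]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative sample")
    diff = pos[:, None] - neg[None, :]  # all positive-negative score differences
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


def ovr_auc(y_true, proba):
    """Per-class one-vs-rest AUCs plus their macro and micro averages."""
    y_true = np.asarray(y_true)
    proba = np.asarray(proba, dtype=float)
    n_classes = proba.shape[1]
    per_class = [binary_auc(y_true == c, proba[:, c]) for c in range(n_classes)]
    macro = float(np.mean(per_class))
    # Micro average: treat every (sample, class) indicator/score pair as one binary example.
    onehot = np.eye(n_classes, dtype=bool)[y_true]
    micro = binary_auc(onehot.ravel(), proba.ravel())
    return per_class, macro, micro
```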

Although OvR is widely adopted for multi-class settings, it does not account for inter-class relationships and may be influenced by class imbalance, particularly in cases with underrepresented categories. As a result, the near-perfect AUC values observed for some classes may reflect dataset-specific characteristics rather than true generalization. To address this, ROC-AUC findings should be considered complementary to the class-wise evaluation metrics already discussed. Incorporating micro-averaged AUCs or hierarchical performance assessments could further strengthen future multi-class evaluations.

To assess the generalizability of the ColoViT model and check for overfitting, additional evaluation was performed on the publicly available NCT-CRC-HE-100K dataset (Kather et al. 2019), which contains 100,000 H&E-stained colorectal histology image patches spanning nine tissue classes, including normal colon mucosa and tumor epithelium. This dataset was acquired independently at a different institution and differs in staining protocols and imaging characteristics. The pretrained ColoViT model was tested directly on a balanced subset of this dataset without retraining and achieved an average accuracy of 97.1%, an F1-score of 96.8%, and an AUC of 98.4%, demonstrating strong generalization across datasets. This external validation supports the clinical relevance and deployment potential of the proposed method.

The proposed ColoViT model, developed and evaluated on the UniToPatho dataset, shows strong potential as a decision-support system in digital pathology workflows. UniToPatho provides high-resolution, expert-annotated H&E-stained colorectal tissue samples across multiple diagnostic categories, making it well suited to training and performance benchmarking. The additional validation on NCT-CRC-HE-100K, which was acquired at a different institution with distinct staining and acquisition protocols, indicates that this performance carries over to other imaging conditions.

In a clinical setting, the model could be integrated into digital pathology platforms or hospital picture archiving and communication systems (PACS), where it could assist pathologists by automatically screening histopathology slides, flagging high-risk cases, and prioritizing reviews. This could help reduce diagnostic delays and inter-observer variability, particularly in resource-limited settings. Ultimately, the application of such AI-powered tools has the potential to improve diagnostic consistency, enable earlier intervention, and contribute to better patient outcomes in colorectal cancer care.

Conclusion

This study presents ColoViT, a novel hybrid deep learning model that integrates EfficientNet V2 B0 and Vision Transformer (ViT-B16) to advance the automated detection and classification of colorectal cancer from histopathological images. The model demonstrated outstanding performance with a recall of 92.4%, precision of 98.9%, F1-score of 98.4%, and an AUC of 99%, showcasing its potential as a highly reliable and effective diagnostic support system. By combining the strengths of EfficientNet and Vision Transformers, ColoViT effectively addresses challenges in feature extraction and contextual understanding that limit conventional CNN-based models. Its enhanced accuracy and class discrimination demonstrate clear improvements over existing standalone architectures.

Future extensions of this work will involve the incorporation of more diverse histopathological datasets, exploration of additional deep learning architectures, adaptation to other cancer types, and development of efficient and interpretable model variants suitable for real-time deployment. Efforts will also be directed toward clinical validation and integration into medical imaging platforms to enhance diagnostic accuracy and patient care outcomes.

Author contributions

All authors contributed equally to this work.

Data availability

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

Declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Barbano CA, Perlo D, Tartaglione E, Fiandrotti A, Bertero L, Cassoni P, Grangetto M (2021) Unitopatho, a labeled histopathological dataset for colorectal polyps classification and adenoma dysplasia grading. In: Proceedings of the IEEE International Conference on Image Processing (ICIP), pp 76–80 . 10.1109/ICIP42928.2021.9506198

- Bertero L, Barbano CA, Perlo D, Tartaglione E, Cassoni P, Grangetto M, Fiandrotti A, Gambella A, Cavallo L (2021) Unitopatho. 10.21227/9fsv-tm25

- Cooper GS, Markowitz SD, Chen Z, Tuck M, Willis JE, Berger BM, Brenner DE, Li L (2018) Evaluation of patients with an apparent false positive stool dna test: the role of repeat stool dna testing. Dig Dis Sci 63:1449–1453 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haldar P, Sharma V, Iwahori Y, Bhuyan M, Wang A, Wu H, Kasugai K (2023) Xgboosted binary cnns for multi-class classification of colorectal polyp size. IEEE Access 11:128461–128472 [Google Scholar]

- Hoang MC, Nguyen KT, Kim J, Park J-O, Kim C-S (2021) Automated bowel polyp detection based on actively controlled capsule endoscopy: feasibility study. Diagnostics 11(10):1878 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hossain MS, Rahman MM, Syeed MM, Uddin MF, Hasan M, Hossain MA, Ksibi A, Jamjoom MM, Ullah Z, Samad MA (2023) Deeppoly: deep learning based polyps segmentation and classification for autonomous colonoscopy examination. IEEE Access 11:95889–902 [Google Scholar]

- Huang Q-X, Lin G-S, Sun H-M (2023) Classification of polyps in endoscopic images using self-supervised structured learning. IEEE Access 11:50025–37 [Google Scholar]

- Iizuka O, Kanavati F, Kato K, Rambeau M, Arihiro K, Tsuneki M (2020) Deep learning models for histopathological classification of gastric and colonic epithelial tumours. Sci Rep 10(1):1504. 10.1038/s41598-020-58467-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- İnce S, Kunduracioglu I, Bayram B, Pacal I (2025) U-net-based models for precise brain stroke segmentation. Chaos Theory Appl 7(1):50–60 [Google Scholar]

- Juul FE, Cross AJ, Schoen RE, Senore C, Pinsky PF, Miller EA, Segnan N, Wooldrage K, Wieszczy-Szczepanik P, Armaroli P et al (2024) Effectiveness of colonoscopy screening vs sigmoidoscopy screening in colorectal cancer. JAMA Netw Open 7(2):240007–240007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Karaman A, Karaboga D, Pacal I, Akay B, Basturk A, Nalbantoglu U, Coskun S, Sahin O (2023a) Hyper-parameter optimization of deep learning architectures using artificial bee colony (abc) algorithm for high performance real-time automatic colorectal cancer (crc) polyp detection. Appl Intell 53(12):15603–15620 [Google Scholar]

- Karaman A, Pacal I, Basturk A, Akay B, Nalbantoglu U, Coskun S, Karaboga D (2023b) Robust real-time polyp detection system design based on YOLO algorithms by optimizing activation functions and hyper-parameters with artificial bee colony (ABC). Expert Syst Appl 221:119741. 10.1016/j.eswa.2023.119741

- Kather JN, Krisam J, Charoentong P, Luedde T, Herpel E, Weis C-A, Gaiser T, Marx A, Valous N-A, Zöllner FG et al (2019) Predicting survival from colorectal cancer histology slides using deep learning: a retrospective multicenter study. Lancet Digit Health 1(1):33–42 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Korbar B, Olofson AM, Miraflor AP, Nicka CM, Suriawinata MA, Torresani L, Suriawinata AA, Hassanpour S (2017) Deep learning for classification of colorectal polyps on whole-slide images. J Pathol Inform 8(1):30. 10.4103/jpi.jpi_34_17 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee JK, Liles EG, Bent S, Levin TR, Corley DA (2014) Accuracy of fecal immunochemical tests for colorectal cancer: systematic review and meta-analysis. Ann Intern Med 160(3):171–181 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marini N, Otálora S, Ciompi F, Silvello G, Marchesin S, Vatrano S, Buttafuoco G, Atzori M, Müller H (2021) Multi-scale task multiple instance learning for the classification of digital pathology images with global annotations. In: Proceedings of the MICCAI Workshop on Computational Pathology, vol. 12, pp 170–181

- Neto PC, Oliveira SP, Montezuma D, Fraga J, Monteiro A, Ribeiro L, Gonçalves S, Pinto IM, Cardoso JS (2022) imil4path: a semi-supervised interpretable approach for colorectal whole-slide images. Cancers 14(10):2489. 10.3390/cancers14102489 [DOI] [PMC free article] [PubMed] [Google Scholar]

- O-Pad N, Supachai K, Boonyapibal A, Suebwongdit C, Panaiem S, Sirisophawadee T (2024) Bowel preparation burden, rectal pain and abdominal discomfort: perspective of participants undergoing ct colonography and colonoscopy. Asian Pac J Cancer Prev 25(2):529–536 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ozdemir B, Pacal I (2025) A robust deep learning framework for multiclass skin cancer classification. Sci Rep 15(1):4938 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ozdemir B, Aslan E, Pacal I (2025) Attention enhanced inceptionnext based hybrid deep learning model for lung cancer detection. IEEE Access. 10.1109/ACCESS.2025.3539122 [Google Scholar]

- Pacal I, Karaboga D (2021) A robust real-time deep learning based automatic polyp detection system. Comput Biol Med 134:104519

- Pacal I, Ozdemir B, Zeynalov J, Gasimov H, Pacal N (2024) A novel CNN-ViT-based deep learning model for early skin cancer diagnosis. Biomed Signal Process Control 104:107627. 10.1016/j.bspc.2024.107627

- Pacal I, Ozdemir B, Zeynalov J, Gasimov H, Pacal N (2025) A novel CNN-ViT-based deep learning model for early skin cancer diagnosis. Biomed Signal Process Control 104:107627

- Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L et al (2019) PyTorch: an imperative style, high-performance deep learning library. Advances in Neural Information Processing Systems 32

- Perlo D, Tartaglione E, Bertero L, Cassoni P, Grangetto M (2022) Dysplasia grading of colorectal polyps through convolutional neural network analysis of whole slide images. In: Su R, Zhang Y-D, Liu H (eds.) Proceedings of the International Conference on Medical Imaging and Computer-Aided Diagnosis (MICAD), vol. 784, pp 325–334. 10.1007/978-981-16-3880-0_34

- Qian Z, Lv Y, Lv D, Gu H, Wang K, Zhang W, Gupta MM (2020) A new approach to polyp detection by pre-processing of images and enhanced Faster R-CNN. IEEE Sens J 21(10):11374–11381

- Quan SY, Wei MT, Lee J, Mohi-Ud-Din R, Mostaghim R, Sachdev R, Siegel D, Friedlander Y, Friedland S (2022) Clinical evaluation of a real-time artificial intelligence-based polyp detection system: a US multi-center pilot study. Sci Rep 12(1):6598

- Raseena T, Kumar J, Balasundaram S (2024) Exploring the effectiveness of deep learning architectures for colorectal polyp detection: performance analysis and insights. SN Comput Sci 5(5):1–27

- Siegel RL, Giaquinto AN, Jemal A (2024) Cancer statistics, 2024. CA Cancer J Clin 74(1):12–49

- Song Z et al (2020) Automatic deep learning-based colorectal adenoma detection system and its similarities with pathologists. BMJ Open 10(9):036423. 10.1136/bmjopen-2019-036423

- Tan M, Le Q (2021) EfficientNetV2: smaller models and faster training. In: International Conference on Machine Learning. PMLR, pp 10096–10106

- Walsh C (2017) Colorectal polyps: cancer risk and classification. Gastrointest Nurs 15(5):26–32

- Wang Y, Ma X, Chen Z, Luo Y, Yi J, Bailey J (2019) Symmetric cross entropy for robust learning with noisy labels. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 322–330

- Wang KS et al (2021) Accurate diagnosis of colorectal cancer based on histopathology images using artificial intelligence. BMC Med 19(1):76. 10.1186/s12916-021-01942-5

- Wei JW, Suriawinata AA, Vaickus LJ, Ren B, Liu X, Lisovsky M, Tomita N, Abdollahi B, Kim AS, Snover DC, Baron JA, Barry EL, Hassanpour S (2020) Evaluation of a deep neural network for automated classification of colorectal polyps on histopathologic slides. JAMA Netw Open 3(4):203398. 10.1001/jamanetworkopen.2020.3398

- Wen Y, Zhang L, Meng X, Ye X (2023) Rethinking the transfer learning for FCN based polyp segmentation in colonoscopy. IEEE Access 11:16183–16193

- Young GP, Symonds EL, Allison JE, Cole SR, Fraser CG, Halloran SP, Kuipers EJ, Seaman HE (2015) Advances in fecal occult blood tests: the FIT revolution. Dig Dis Sci 60:609–622

Data Availability Statement

The datasets analyzed during the current study are available from the corresponding author on reasonable request.