Abstract

We used functional MRI to test the hypothesis that emotional states can selectively influence cognition-related neural activity in lateral prefrontal cortex (PFC), as evidence for an integration of emotion and cognition. Participants (n = 14) watched short videos intended to induce emotional states (pleasant/approach related, unpleasant/withdrawal related, or neutral). After each video, the participants were scanned while performing a 3-back working memory task having either words or faces as stimuli. Task-related neural activity in bilateral PFC showed a predicted pattern: an Emotion × Stimulus crossover interaction, with no main effects, with activity predicting task performance. This highly specific result indicates that emotion and higher cognition can be truly integrated, i.e., at some point of processing, functional specialization is lost, and emotion and cognition conjointly and equally contribute to the control of thought and behavior. Other regions in lateral PFC showed hemispheric specialization for emotion and for stimuli separately, consistent with a hierarchical and hemisphere-based mechanism of integration.

Emotion and cognition are two major aspects of human mental life that are widely regarded as distinct but interacting (1–3). Emotion–cognition interactions are intuitively intriguing and theoretically important. However, there are many different kinds or forms of interaction, and in principle these differ widely in what they imply about the functional organization of the brain. Some forms of interaction are diffuse or nonspecific, whereas others are more nuanced and imply a greater complexity in brain organization. The typical folk psychological view of emotion–cognition interaction is nonspecific: for better or worse, emotional states diffusely impact higher cognition (e.g., pleasant emotions are nonspecifically beneficial, whereas unpleasant emotions are nonspecifically detrimental). A nonspecific view is parsimonious: complexity should not be posited without compelling evidence. Here, we report neural evidence for a strong highly constrained form of emotion–cognition interaction. These data reveal a complexity that implies an integration of emotion and cognition in brain functional organization.

Neuroimaging data are widely used to provide evidence for a specialization or fractionation of psychological function (based on double-dissociation logic; e.g., ref. 4). Intuitively, integration is the opposite: a convergence and merging of specialized subfunctions into a single more general function (5–7). Formally, we define integration in terms of a specific experimental design and a logically sufficient pattern of results (see Fig. 1). Given such a design, emotion–cognition integration is implied by the existence of a brain region having a crossover interaction between the emotional and cognitive factors, with no main effects of either factor. Although emotion and cognition may be mostly separable, the presence of such a highly specific pattern means that the emotional and cognitive influences are also inseparable. At that point of processing, functional specialization is lost. If the integrated signal has a functional role, emotion and cognition can conjointly and equally contribute to the control of thought, affect, and behavior. Integration does not mean that emotion is an intrinsic aspect of cognition or vice versa, or that emotion and cognition are completely identical (in fact, they can be integrated only if they are also separable). Multiple processing streams may exist, not all of which need be integrated.

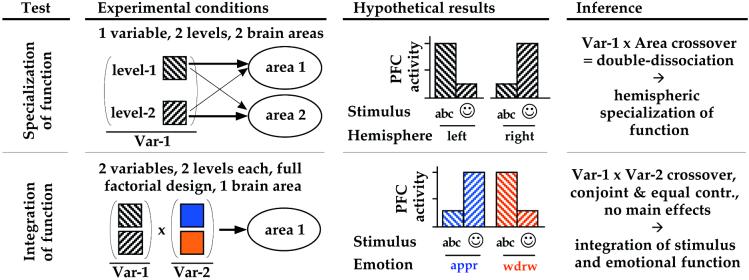

Figure 1.

Two formal tests of brain functional organization based on neuroimaging data used in this paper. A variable represents a psychological function (e.g., working memory); levels of a variable represent subfunctions (e.g., verbal, nonverbal). The form of the observed interaction is critical (crossover or not), but the direction is not. (Upper) A test for specialization assesses whether a psychological function must be separable into subfunctions, on the basis of a double-dissociation by area (see ref. 4). The null hypothesis is that the function is unitary, and fractionation is not possible; this is falsified if there are two distinct brain areas for which processing associated with one subfunction activates area1 but not area2, and processing associated with the other subfunction activates area2 but not area1. That is, a Function × Area (e.g., Stimulus × Hemisphere) crossover interaction shows that the two subfunctions are distinct or separable for at least one point of processing. (Double-dissociations can also be shown in the time domain.) (Lower) A test for integration assesses whether two psychological functions must be inseparable within some brain region. The null hypothesis is that two functions (e.g., working memory and emotion) are completely separable. This is falsified if there is at least one brain area in which activity (i) is not predictable given only information about either function in isolation, and yet (ii) is influenced conjointly and equally by the two functions in combination; i.e., in a full factorial design, there are no main effects of either function and yet there is a Function1 × Function2 crossover interaction (e.g., Stimulus × Emotion). These conditions and results are sufficient to show integration: If the pattern holds, integration must be occurring; without the pattern, no inference about integration can be drawn. 
The locus where integration is revealed is not necessarily the locus where it occurred: it could be revealed at a point of processing that is subsequent to, yet correlated with, its actual occurrence. abc, verbal stimuli;  , nonverbal stimuli; appr, approach-related; var, variable; wdrw, withdrawal-related.


In our model of emotion–cognition interaction (8–10), approach- and withdrawal-motivated emotional states (11, 12) are associated with different modes of information processing. The efficiency of specific cognitive functions is held to depend on the particular state or mode (see refs. 13–15). Emotional states are postulated to transiently enhance or impair some functions but not others, doing so relatively rapidly, flexibly, and reversibly. In this way, they could adaptively bias the overall control of thought and behavior to meet situational demands more effectively (8, 16, 17). For example, the active maintenance of withdrawal-related goals should be prioritized during threat-related or withdrawal-motivated states but deemphasized during reward-expectant or approach-related states, yet just the opposite should be true for approach-related goals (8, 9, 15). A functional integration of emotion and cognition would allow the goal-directed control of behavior to depend on the emotional context. Goal-directed behavior is a complex control function mediated neurally by lateral prefrontal cortex (PFC) (18) and involves higher cognitive processes such as working memory (19). Consequently, lateral PFC is a possible site for the occurrence of integration.

Our model currently draws support from three behavioral studies (8). Induced emotional states consistently exerted opposite effects on working memory for verbal versus nonverbal information, showing a selective effect. An unpleasant emotional state (anxiety, withdrawal-motivated) impaired verbal working memory yet improved spatial working memory. A pleasant emotional state (amusement, approach-motivated) produced the opposite pattern (improved verbal working memory and impaired spatial working memory). The manipulations of working memory and emotional state were well controlled methodologically. The tasks were variants of the n-back paradigm, and the verbal and spatial versions differed in instructions but not in difficulty or even task stimuli. The effect was a crossover interaction—the strongest kind of evidence for a selective rather than nonspecific effect. Moreover, the behavioral effect was specific to approach–withdrawal emotional states, as shown by a striking specific association between the size of the behavioral effect and individual differences in trait sensitivity to reward and threat, which act as selective amplifiers of emotion inductions (20). Stronger inductions lead to a stronger effect on performance, and both were predicted by validated measures of emotional reactivity. This result held when further controlling for individual differences in a neutral condition, strongly suggesting an effect of approach–withdrawal emotional states.

These data and much other work suggest that theories of PFC function will need to incorporate both cognitive and affective variables (8, 14, 15, 19, 21–26). Several theories suggest a segregated model in which ventromedial and orbitofrontal areas subserve emotional function, whereas lateral and dorsal areas subserve cognitive function (27–29). Most segregated models are agnostic about selective effects or integration. In contrast, other models actively or tacitly predict selective effects and, by implication, integration (8, 11, 14, 15, 26). Although there has been a great deal of work on emotion–cognition interaction, little or none of it has examined whether and where emotion and higher cognition are integrated in the brain. To our knowledge, there are no imaging studies that can be reinterpreted as evidence for such integration, because the minimum experimental design requirements have not been met. Single-task studies (e.g., emotion and verbal fluency; ref. 30) cannot show integration, because showing a crossover interaction requires two tasks.

We used functional MRI (fMRI) to examine the conjoint effects of cognitive task and emotional state manipulations on brain activity, focusing on lateral PFC as a potential site of emotion–cognition integration. Previous work has suggested that lateral PFC is critical for working memory and goal-directed behavior (18, 31–33) and for some forms of integration (5–7). Lateral PFC is also thought to be sensitive to approach–withdrawal emotion specifically (11, 12, 15, 34). Thus it is possible that lateral PFC is further sensitive to the integration of emotion and cognition. To test this hypothesis, we sought evidence that cognitive task and emotional state factors would contribute jointly and equally to lateral PFC activity during performance of a working memory task.

We also sought to identify brain regions sensitive to emotional and cognitive factors separately. We did so to test the hypothesis that emotion–cognition integration within lateral PFC is related to hemispheric specialization of function in this region. This hypothesis is based on a pioneering neuropsychological model that holds that the effects of emotion on cognition can often be predicted if the task depends on regional neural systems (26, 35). Positive emotional states§ are held to relatively enhance performance on tasks that depend on the left frontal lobe, whereas negative states are held to enhance performance on tasks that depend on the right frontal lobe.¶ The model is partly based on research suggesting that lateralized effects of experienced emotion are specific to frontal regions and do not hold throughout the entire brain (11, 12, 15, 26, 34); it is also based on a lateralization of cognitive function. In general, verbal processing tends to be left lateralized, and nonverbal processing tends to be right lateralized (38, 39). This verbal–nonverbal specialization also appears to hold in tasks that recruit lateral PFC (33, 40–42).

Thus, hemispheric specialization within lateral PFC for emotion and cognition separately might support their integration. For example, a hierarchical arrangement is possible, in which segregated sources of emotional and cognitive information within lateral PFC serve as the input to another higher-order lateral PFC region that integrates them. However, in addition to a lack of knowledge regarding emotion–cognition integration in lateral PFC, it has also not yet been demonstrated within a single dataset that both emotional and cognitive hemispheric asymmetries occur within lateral PFC. We predicted and found evidence for both emotion–cognition integration and for lateralized effects of emotion and cognition separately within lateral PFC.

Methods

We used fMRI to assess brain activity as participants performed a demanding cognitive task after a preceding emotional video had ended. We tested a larger sample off-line to assess the reliability of effects of the emotion induction on behavioral performance.

Participants and Procedure.

In the fMRI study (see ref. 43 for more detail), 14 neurologically normal right-handed participants (six male; age 19–27 years) gave informed consent and were paid $25 per hour. They had 15 familiarization trials of each version of the 3-back task. Inside the scanner, they had structural scans followed by six functional scans in a factorial design: 2 (stimulus type: face or word) × 3 (emotion video-type: pleasant–approach, neutral, or unpleasant–withdrawal), order counterbalanced. For each functional scan, the 3-back task and scan began simultaneously after a short video had ended.

In the sample that was scanned, we expected to find the same behavioral effect (an Emotion × Stimulus crossover interaction) as in our previous work (8), in terms of effect size (r = 0.24). Because such an effect would not be statistically reliable in a small sample, we assessed the behavioral effect using data from an additional 52 participants tested in a similar design (total n = 66).

There were six different videos for the emotion inductions (two versions of three emotion types). The videos were presented digitally but were otherwise the same as used in previous work (8): 9- to 10-min selections from comedy (amusement, pleasant, approach-related), documentary (neutral, calm), or horror (anxiety, unpleasant, withdrawal-related) films.

The 3-back tasks had four initial fixation trials to allow tissue magnetization to reach steady-state. These were followed by 16 3-back trials and 10 fixation trials, with the task-plus-fixation sequence repeated three more times (108 trials total, each trial 2.5 s long). The task instructions were to press a target button if the stimulus currently on the screen was the same as the one seen three trials previously and to press a nontarget button for any other stimulus. The stimuli were either concrete English nouns, one to four syllables in length, or unfamiliar male and female faces intermixed (41).
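The trial counts above can be checked with a short sketch. Only the counts and the 2.5-s trial length come from the text; the constant and function names are illustrative and are not taken from the original study materials.

```python
# Illustrative sketch of one scanning run's trial sequence.
# Counts and trial duration come from the text; all names are hypothetical.
INITIAL_FIXATION = 4   # fixation trials while magnetization reaches steady state
TASK_TRIALS = 16       # 3-back trials in each task block
FIXATION_TRIALS = 10   # fixation trials after each task block
N_BLOCKS = 4           # the task-plus-fixation sequence occurs four times in all
TRIAL_SECONDS = 2.5


def build_run():
    """Return the ordered list of trial labels for one run."""
    run = ["fixation"] * INITIAL_FIXATION
    for _ in range(N_BLOCKS):
        run += ["task"] * TASK_TRIALS + ["fixation"] * FIXATION_TRIALS
    return run


run = build_run()
print(len(run))                  # 108 trials
print(len(run) * TRIAL_SECONDS)  # 270.0 seconds per run
```

The total of 108 trials matches the 108 image sets acquired per scanning run.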

After each scan, participants used the response buttons to rate how they had felt after the last video just before the scan, from 0 (not at all) to 4 (very much) for each of six emotion terms presented in random order (calm, amused, anxious, energetic, fatigued, and gloomy).

Image Acquisition and Analysis.

Whole-brain images were acquired on a Siemens 1.5 Tesla Vision System (Erlangen, Germany). Structural images were acquired by using a high-resolution MP-RAGE T1-weighted sequence (44). Functional images were acquired by using an asymmetric spin-echo echo-planar sequence (45). Each scanning run gave 108 sets of 16 contiguous 8-mm-thick axial images (3.75 × 3.75 mm in-plane resolution). After movement correction, functional images were scaled to a whole-brain mode value of 1,000 for each scanning run and were temporally aligned within each whole-brain volume. Functional images were resampled into 3-mm isotropic voxels, transformed into atlas space (46), and smoothed with a Gaussian filter (8 mm full width half maximum).

Analyses were conducted to identify different kinds of regions: integration sensitive, stimulus sensitive, and emotion sensitive. To ensure that these analyses were conservative, we subjected the data to multiple statistical tests, each test being a t test or ANOVA with participants as the random effect and a voxel-wise significance threshold of P < 0.05. Regions were identified only if they had at least eight contiguous voxels, with each voxel simultaneously passing several such tests. Although we collected whole-brain images, our a priori search space was lateral PFC, defined as |X| > 25, Y > 0, and 0 < Z < 48. We report signal change as the task-related percent change from fixation.‖

To be identified as integration sensitive, all voxels in a region had to survive tests requiring greater activity in task than fixation, an Emotion × Stimulus interaction, and no main effects of emotion or stimulus. To be identified as stimulus sensitive, a region had to have greater activity in task than fixation and greater activity for a preferred than for a nonpreferred stimulus type. To be identified as emotion sensitive, a region had to have greater activity in task than fixation and greater activity for the preferred than for the nonpreferred emotion. Hemispheric specialization was inferred on the basis of an interaction of hemisphere with the factor of interest by using ANOVA.
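The conjunction logic for an integration-sensitive voxel, together with the a priori search space, can be sketched as follows. This is a hypothetical illustration rather than the analysis code used in the study: the function names are invented, "no main effect" is assumed here to mean a P value at or above the 0.05 threshold, and the requirement of at least eight contiguous voxels is not implemented.

```python
# Hypothetical sketch of the voxel-wise conjunction; not the authors' code.
ALPHA = 0.05  # voxel-wise significance threshold given in the text


def in_search_space(x, y, z):
    """A priori lateral PFC search space: |X| > 25, Y > 0, 0 < Z < 48."""
    return abs(x) > 25 and y > 0 and 0 < z < 48


def is_integration_voxel(p_task, task_gt_fixation, p_interaction,
                         p_emotion, p_stimulus):
    """True if a voxel passes every test required for integration sensitivity.

    Assumption: absence of a main effect is operationalized as P >= ALPHA.
    """
    return (
        p_task < ALPHA and task_gt_fixation  # more activity in task than fixation
        and p_interaction < ALPHA            # Emotion x Stimulus interaction
        and p_emotion >= ALPHA               # no main effect of emotion
        and p_stimulus >= ALPHA              # no main effect of stimulus
    )


print(in_search_space(-32, 48, 30))   # True: a dorsolateral PFC coordinate
print(in_search_space(-23, -96, 0))   # False: an occipital coordinate
```

A full implementation would additionally keep only clusters of at least eight contiguous voxels that all pass these tests.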

A specific pattern of activation across the six experimental conditions was of interest a priori (8): a crossover Emotion × Stimulus interaction. We used contrast weights to formalize this prediction and to express the observed strength of the predicted pattern, collapsing the six values from the six conditions into a single numerical index. Because performance is best in the verbal–pleasant and nonverbal–unpleasant conditions, these conditions were both assigned contrast weights of 1. Performance is poorest in the verbal–unpleasant and nonverbal–pleasant conditions (assigned weights of −1) and intermediate in the verbal and nonverbal neutral conditions (assigned weights of 0). To summarize the observed strength of the predicted pattern across conditions, the value from each condition was multiplied by its contrast weight, and the resulting products were summed together. A higher sum indicates a more strongly selective effect of emotion. In different analyses, the contrast weights were applied to group or individual data to retain information about the conjoint (interactive) modulation of activation or performance by the two experimental factors (task stimuli, emotion induction) and remove information about the mean levels.
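The contrast-weight summary described above can be sketched as follows. The weights are those given in the text (word stimuli are the verbal condition and face stimuli the nonverbal condition); the dictionary layout, the function name, and the example values are illustrative assumptions and are not data from the study.

```python
# Contrast weights from the text; the data layout and names are illustrative.
WEIGHTS = {
    ("word", "pleasant"): 1,
    ("word", "neutral"): 0,
    ("word", "unpleasant"): -1,
    ("face", "pleasant"): -1,
    ("face", "neutral"): 0,
    ("face", "unpleasant"): 1,
}


def contrast_score(values):
    """Multiply each condition's value by its weight and sum the products."""
    return sum(WEIGHTS[cond] * values[cond] for cond in WEIGHTS)


# Made-up values for one hypothetical participant (not data from the study).
example = {
    ("word", "pleasant"): 2.6,
    ("word", "neutral"): 2.4,
    ("word", "unpleasant"): 2.2,
    ("face", "pleasant"): 2.3,
    ("face", "neutral"): 2.5,
    ("face", "unpleasant"): 2.7,
}
print(round(contrast_score(example), 2))  # 0.8
```

Because the weights sum to zero, adding a constant to all six values leaves the score unchanged, which is how the index removes information about mean levels while retaining the interactive pattern.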

Technical problems resulted in unusable fMRI images from one scan for one subject (face-unpleasant condition) and the loss of behavioral data from one scan from another subject (face-neutral condition).

Results

Behavioral Data.

The face and word versions of the task were matched for difficulty. Repeated-measures ANOVAs (emotion and stimulus conditions within-subject) indicated no main effects of stimulus type on mean accuracy (expressed using the signal detection index d′: face = 2.56, word = 2.36, F (1, 12) = 2.17, P > 0.10), or mean response time (RT: face = 1,112 ms, word = 1,063 ms, F (1, 12) = 2.50, P > 0.10).
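For readers unfamiliar with d′, the standard signal detection definition is the difference between the z-transformed hit rate and the z-transformed false-alarm rate. The sketch below assumes that textbook definition; the text does not state how hit and false-alarm rates were computed or whether extreme rates were corrected, so the function name and inputs are hypothetical.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Standard d': z(hit rate) minus z(false-alarm rate).

    Both rates must lie strictly between 0 and 1; handling of rates of
    exactly 0 or 1 is left out because the text does not specify it.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


# Equal hit and false-alarm rates mean no discrimination, so d' is zero.
print(d_prime(0.5, 0.5))  # 0.0
```

Higher values indicate better discrimination of targets from nontargets.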

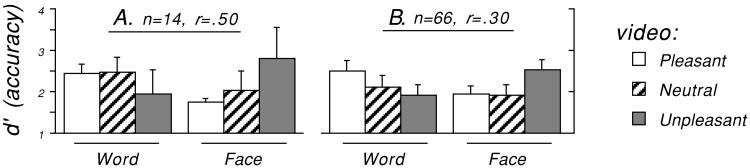

The effect of emotion on task performance was a crossover interaction (as tested by a focused contrast; see Methods). In the sample that was scanned (n = 14), Fig. 2A, this held in the direction expected (d′, effect size r = 0.50; RT, r = 0.41). In the larger sample (n = 66), Fig. 2B, the same behavioral effect held and was statistically reliable (d′, r = 0.30, P = 0.019; RT, r = 0.24, P = 0.063). That is, word 3-back performance was enhanced by a pleasant state and impaired by an unpleasant state, whereas face 3-back performance showed the reverse (replicating and extending ref. 8).

Figure 2.

A selective effect of the emotion induction on 3-back task performance (Emotion × Stimulus interaction within subjects) in (A) the 14 participants who were scanned, and (B) 66 participants (52 off-line, 14 scanned). Higher d′ indicates more accurate performance.

Participants' self-report ratings verified that the emotion induction was successful at eliciting the intended emotional states. We compared how strongly participants rated feeling an intended emotion after the appropriate videos compared with the other videos (e.g., the average rating of anxious after the two unpleasant videos minus the average of anxious after the other four). The emotion induction produced selectively larger self reports for anxious after the unpleasant videos [mean difference = 1.5; t(13) = 5.87, P < 0.001], calm after the neutral videos [mean = 0.9, t(13) = 3.70, P < 0.003], and amused after the pleasant videos [mean = 1.3, t(13) = 5.80, P < 0.001]. Emotional arousal was assessed by comparing how strongly participants rated feeling energetic. Ratings of energetic were smaller after the neutral videos (mean rating = 1.4), as compared with either the pleasant [mean = 2.5; t(13) = 2.38, P = 0.03] or unpleasant [mean = 2.7; t(13) = 3.03, P = 0.01] videos. The energetic ratings did not differ between pleasant and unpleasant videos [t(13) = 0.41, P = 0.68], suggesting equal arousal.

Neuroimaging Data. Integration-sensitive regions.

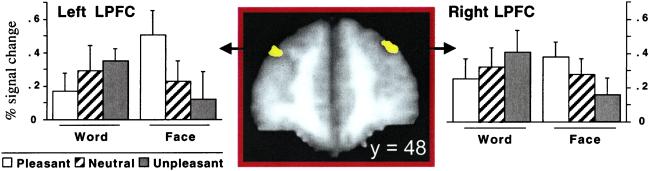

Candidate regions were identified on the basis of voxels that were part of the task network and showed an Emotion × Stimulus interaction. The results of this conjunction analysis across the whole brain are summarized in Table 1. Two regions of interest (ROI) were within lateral PFC (Fig. 3). They showed no hemispheric specialization (Fs < 1 for all interactions with hemisphere) nor main effects of the stimulus or emotion factors (when treated either as two separate regions or as a single combined region, all main effect Fs < 1.05, Ps > 0.30). We considered them to be a single ROI and refer to it as the integration-sensitive ROI. The pattern of activity in the integration-sensitive ROI across the six conditions was a crossover interaction (Fig. 3), indicating that the emotion induction selectively influenced task-related brain activity. Neural activity was greatest in the word-unpleasant and face-pleasant conditions, intermediate in the neutral conditions, and lowest in the word-pleasant and face-unpleasant conditions. This region met all formal criteria for integration (Fig. 1).

Table 1.

Integration-sensitive ROIs across the whole brain

| Region | Brodmann's Area | Talairach coordinates | Size, voxels |
|---|---|---|---|
| Dorsolateral PFC | 9 | −32, 48, 30 | 8 |
| | 9 | 33, 41, 32 | 24 |
| Occipital cortex | 18 | −23, −96, 0 | 39 |
| | 18 | 26, −99, −3 | 141 |
| | | −8, −105, −12 | 30 |
| Midbrain | | −8, −21, −12 | 13 |

Only the dorsolateral PFC ROIs were within the a priori search space.

Figure 3.

Integration-sensitive regions in lateral PFC (LPFC; Brodmann Area 9). Percent signal change reflects how much more neural activity was present during the task blocks as compared with the fixation blocks. ROIs are shown in a coronal view (left on left, top at top), at Y = 48 mm. Task stimuli: word or face; emotion induction: pleasant, neutral, or unpleasant video.

The crossover pattern in the integration-sensitive ROI was reminiscent of the behavioral effect (Fig. 2). Conditions with the most neural activity were the most difficult behaviorally, consistent with a functional relationship. To test this idea, we computed correlations between activity and performance. We first created summary scores for each participant using the contrast weights (see Methods). This yielded two scores for each participant summarizing the strength of the selective effect for (i) brain activation and (ii) performance, removing mean activation and mean performance. The pattern of neural activity predicted the pattern of d′ (r = −0.66, P = 0.013). The correlation held for the left and right regions separately (rs = −0.57, −0.64, Ps < 0.05) but not for the non-PFC ROIs in Table 1 (|rs| < 0.43, Ps > 0.10). These analyses bolster the primary finding: both activity and performance were modulated by the emotion induction, and in lateral PFC only, the degree of modulation of one predicted the degree of modulation of the other.
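The brain-behavior analysis above correlates two per-participant summary scores across participants. A minimal sketch of that step is given below, assuming a plain Pearson correlation; the function name and the example scores are hypothetical and are not data from the study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences of scores."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Made-up contrast scores for four hypothetical participants.
activation_scores = [0.1, 0.3, -0.2, 0.4]    # selective effect on activity
performance_scores = [0.5, 0.1, 0.9, -0.2]   # selective effect on d'
r = pearson_r(activation_scores, performance_scores)
print(r < 0)  # True: the two sets of made-up scores are inversely related
```

Because each score is built from zero-sum contrast weights, the correlation reflects covariation in the strength of the selective effect rather than in mean activation or mean performance.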

Stimulus-sensitive regions.

We found effects of stimulus type in lateral PFC in the neutral conditions (see Table 2). ROI analyses revealed the expected pattern of hemispheric specialization (Stimulus × Hemisphere interaction: dorsolateral PFC, F(1,12) = 18.9, P < 0.001; ventrolateral PFC, F(1,12) = 39.3, P < 0.001). Word stimuli preferentially activated the left hemisphere, and face stimuli preferentially activated the right hemisphere (replicating ref. 41). The emotion inductions did not modulate activity in these ROIs (i.e., there were no Emotion × Hemisphere or Emotion × Stimulus × Hemisphere interactions, Fs < 1).

Table 2.

Stimulus-sensitive and emotion-sensitive ROIs

| Region | Left hemisphere | Right hemisphere |
|---|---|---|
| Stimulus sensitive | | |
| Asymmetric | | |
| DLPFC (BA 9) | −37, 42, 24 | 46, 24, 33 F |
| VLPFC (BA 45) | −40, 6, 21 | 47, 12, 18 F |
| Symmetric | | |
| MPFC (BA 32) | −17, 24, 24 | 11, 24, 24 |
| Parietal | −23, −27, 33 | 38, −30, 33 |
| Single | | |
| LPFC | | 26, 57, 33 |
| Emotion sensitive | | |
| Asymmetric | | |
| DLPFC (BA 9) | −37, 36, 33 U | 35, 48, 24 |
| Symmetric | | |
| MPFC | −8, 6, 18 | 20, 18, 18 |
| Parietal | −50, −24, 24 | 59, −36, 24 |
| | −23, −42, 33 | 17, −48, 33 |
| Single | | |
| DLPFC | | 35, 18, 33 |
| VLPFC | | 59, 3, 24 |

The Talairach coordinates of all ROIs in lateral PFC are reported; parietal ROIs are reported as controls. Stimulus-sensitive regions were identified in the two neutral conditions and had more activity in the word than face condition unless noted (F, face). Emotion-sensitive regions were identified in the four nonneutral conditions (collapsing word and face conditions) and had more activity in the pleasant than unpleasant condition, unless noted (U, unpleasant). Asymmetric, a significant interaction (P < 0.05) of hemisphere with the stimulus or emotion effect on % signal change; Symmetric, no such interaction (P > 0.05). BA, Brodmann's area; DLPFC, dorsolateral PFC; LPFC, lateral PFC; MPFC, medial PFC; VLPFC, ventrolateral PFC.

Emotion-sensitive regions.

We found effects of the emotion induction in lateral PFC in the emotional conditions (see Table 2). ROI analyses indicated that lateral PFC showed hemispheric specialization (Emotion × Hemisphere interaction, F (1, 12) = 28.3, P < 0.001), with greater right-hemisphere task-related activation during the pleasant emotion conditions and greater left-hemisphere task-related activation during the unpleasant emotion conditions (see Discussion regarding the direction of activation). Stimulus type did not influence activity in the emotion-sensitive ROIs (i.e., there were no Emotion × Stimulus × Hemisphere interactions nor Stimulus × Hemisphere interactions, Fs < 2, Ps > 0.10). In posterior areas, there were several emotion-sensitive ROIs but no hemispheric asymmetries (Emotion × Hemisphere interactions, Fs < 1.7, Ps > 0.2).

Discussion

As participants performed psychometrically matched verbal and nonverbal versions of a working memory task, neural activity in a bilateral region in lateral PFC depended conjointly and equally on the emotional and stimulus conditions: a crossover interaction with no main effects. The existence of such a remarkable pattern within a discrete region directly supports our primary hypothesis that lateral PFC is sensitive to an integration of emotion and cognition. Lateral PFC was the only brain region to show the crossover interaction and have such activity predict behavioral performance, consistent with a functional influence of emotion on higher cognition specifically.

Two other kinds of regions were evident in lateral PFC: stimulus sensitive and emotion sensitive. These regions showed hemispheric specialization but not Emotion × Stimulus interactions. Because these regions were present in lateral PFC, the data are consistent with the possibility that lateral PFC is the site of emotion–cognition integration, not merely sensitive to it.

To our knowledge, the current experiment is the most direct test of, and evidence for, the hypothesis that prefrontal hemispheric asymmetries for cognition and emotion separately might mediate selective interactions (8, 14, 15, 26). Developing and testing more specific models of selective interactions will be important. One model is that emotion should modulate stimulus-sensitive areas in a hemisphere selective manner (or conversely, cognition should modulate emotion-sensitive areas). Another model is that lateralized emotional and cognitive signals are first represented in separate regions in lateral PFC and are then combined hierarchically. The resulting integrated signal is represented in a third, integration-sensitive region.

Lateral PFC activity in the current paradigm appears to reflect psychological load: how much top-down support is needed to maintain behavioral performance at a given set point (32, 47). The current data support the idea that PFC activity reflects psychological load in response to the separate and conjoint emotional and task demands. Because the effect of emotion was selective, the emotional load cannot be attributed merely to general or nonspecific effects such as effort, motivation, or arousal.

In addition to finding emotion–cognition integration, this study is the first we are aware of to use fMRI or positron-emission tomography and report an emotion-related asymmetry in PFC and not posterior cortex. Because lateralization has been suggested only on the basis of anatomically nonspecific reports (e.g., refs. 12, 34, and 48), it is of note that we observed it in lateral PFC. The n-back task may have created relatively uniform neural activity as a baseline against which a subtle or nonfocal influence of emotion could then be detected as a bias on the task-related activity.

Although the self-report, behavioral, and imaging data show that emotional states were induced and their effects persisted during the task (also shown in ref. 8), emotional states are dynamic and may have been altered by engaging in the task. This idea is consistent with our view of emotion–cognition integration: not only should emotion contribute to the regulation of thought and behavior, but also cognition should contribute to the regulation of emotion (for a convergent fMRI study, see ref. 49). We do not assume that emotional states persisted statically during the task, but rather that their induction exerted an emotional bias on subsequent cognitive function (cf. ref. 50).

Although the existence of the emotion-related hemispheric asymmetry was as predicted, its apparent direction was surprising (see refs. 11, 12, and 34). The emotion effect was an apparent modulation of activity in the task network; the question concerns the direction of modulation. The interpretation we favor is that the emotion inductions both reduced and imposed a psychological load, doing so differentially within each hemisphere (i.e., pleasant-approach states leading to a lower load on the left, facilitating verbal performance, and leading to lower activity). In the emotion-sensitive ROIs, the emotional load modulated the task-related load, doing so in a hemisphere-specific manner. Another interpretation is that emotion-specific activity was greater during fixation and reduced during the task (e.g., the task tending to suppress the emotional state). If so, then emotion-specific signal changes should be assessed as fixation minus task (i.e., opposite in direction from what we report). Either interpretation is consistent with previous work.

Although emotion has high psychological validity and unique strengths as a challenge paradigm for investigating PFC function, caveats are warranted regarding statistical power. We may not have been able to detect all important effects. For example, the integration-sensitive analyses also revealed six voxels in left ventrolateral PFC that each showed an Emotion × Stimulus interaction, not meeting the cluster-size threshold. Further, all of the identified interactions had a crossover form. Weaker interactions might be mechanistically important but may have failed to reach statistical threshold because of the greater power needed to detect such effects (51). Finally, individual differences in emotional reactivity may have made it harder to detect group-level effects. For example, the anterior cingulate cortex (ACC) is critical for both cognitive control and emotion (27), yet we did not find evidence for integration in ACC. However, we did find large meaningful variation in ACC activity as a function of personality (43). Thus, for several reasons, future fMRI studies in this domain are likely to benefit from relatively large sample sizes.

Finally, the current data provide converging evidence that working memory and lateral PFC activity can be influenced by affective variables (8, 50, 52). The distinction between affective states and stimuli is important in the context of hemispheric asymmetries (11). It is therefore notable that working memory-related activity in the lateral PFC can be influenced by induced affective states, in addition to affective stimuli (50, 52).

Acknowledgments

We thank Dr. Deanna M. Barch for helpful comments and discussion. This work was supported by a grant from the National Science Foundation (BCS 0001908) and the McDonnell Center for Higher Brain Function, Washington University. Preliminary reports were presented in New York at the 8th Annual Meeting of the Cognitive Neuroscience Society, March 25–27, 2001, and in Brighton, U.K., at the 7th Annual Human Brain Mapping Conference, June 10–14, 2001.

Abbreviations

- PFC, prefrontal cortex

- fMRI, functional MRI

- ROI, region of interest

Footnotes

This paper was submitted directly (Track II) to the PNAS office.

Recent work suggests that an approach–withdrawal distinction best explains the prefrontal emotion-related hemispheric asymmetries (10–12, 34). Perhaps the strongest evidence is that induced anger—a subjectively unpleasant but approach-motivated emotion—is associated with greater left frontal activity, arguing strongly for an approach–withdrawal account of the prefrontal asymmetry (12). This account might also explain some apparent exceptions (36, 37).

This procedure is obviously appropriate for identifying task-specific activations but is not clearly so for identifying the direction of emotion-specific activity within a task-related network; see Discussion. The identification of emotion–cognition interactions and hemispheric differences is uninfluenced by this consideration.

The model is a generalization from diverse data. It might be argued that emotion-related activation should interfere with cognitive activity in the same brain area (for discussion, see ref. 9; J.R.G., unpublished work). However, the opposite behavioral effect is observed (8). If emotion-related activity was due to an independent source of activation within a region (i.e., competing with on-going cognitive activity), it should produce crosstalk or dual-task interference. If emotion modulated ongoing activity, it could produce enhancement.

References

- 1. Ekman P, Davidson R J. The Nature of Emotion. New York: Oxford; 1994.

- 2. Dalgleish T, Power M J. Handbook of Cognition and Emotion. New York: Wiley; 1999.

- 3. Martin L L, Clore G L. Theories of Mood and Cognition. Mahwah, NJ: Erlbaum; 2001.

- 4. Smith E E, Jonides J, Koeppe R A, Awh E, Schumacher E H, Minoshima S. J Cognit Neurosci. 1995;7:337–356. doi: 10.1162/jocn.1995.7.3.337.

- 5. Damasio A R. Neural Comp. 1989;1:123–132.

- 6. Prabhakaran V, Narayanan K, Zhao Z, Gabrieli J D E. Nat Neurosci. 2000;3:85–90. doi: 10.1038/71156.

- 7. Rao S C, Rainer G, Miller E K. Science. 1997;276:821–824. doi: 10.1126/science.276.5313.821.

- 8. Gray J R. J Exp Psychol: General. 2001;130:436–452. doi: 10.1037//0096-3445.130.3.436.

- 9. Gray J R, Braver T S. In: Emotional Cognition. Moore S C, Oaksford M R, editors. Amsterdam: Benjamins; 2002, in press.

- 10. Gray J R. Psychol Rev. 2002, in press.

- 11. Davidson R J. In: Brain Asymmetry. Davidson R J, Hugdahl K, editors. Cambridge, MA: MIT Press; 1995. pp. 361–387.

- 12. Harmon-Jones E, Sigelman J. J Person Soc Psychol. 2001;80:797–803.

- 13. Hobson J A, Stickgold R. In: The Cognitive Neurosciences. Gazzaniga M S, editor. Cambridge, MA: MIT Press; 1995. pp. 1373–1389.

- 14. Tucker D M, Williamson P A. Psychol Rev. 1984;91:185–215.

- 15. Tomarken A J, Keener A D. Cognit Emot. 1998;12:387–420.

- 16. Gray J R. Pers Soc Psychol Bull. 1999;25:65–75.

- 17. Carver C S, Sutton S K, Scheier M F. Pers Soc Psychol Bull. 2000;26:741–751.

- 18. Duncan J, Emslie H, Williams P, Johnson R, Freer C. Cognit Psychol. 1996;30:257–303. doi: 10.1006/cogp.1996.0008.

- 19. Braver T S, Cohen J D. In: Attention and Performance XVIII. Monsell S, Driver J, editors. Cambridge, MA: MIT Press; 2000. pp. 713–738.

- 20. Larsen R J, Ketelaar E. J Person Soc Psychol. 1991;61:132–140. doi: 10.1037//0022-3514.61.1.132.

- 21. Damasio A R. Descartes' Error: Emotion, Reason and the Human Brain. New York: Grosset/Putnam; 1994.

- 22. Bechara A, Damasio A R, Damasio H, Anderson H W. Cognition. 1994;50:7–15. doi: 10.1016/0010-0277(94)90018-3.

- 23. Simpson J R, Snyder A Z, Gusnard D A, Raichle M E. Proc Natl Acad Sci USA. 2001;98:683–687. doi: 10.1073/pnas.98.2.683.

- 24. Simpson J R, Drevets W C, Snyder A Z, Gusnard D A, Raichle M E. Proc Natl Acad Sci USA. 2001;98:688–693. doi: 10.1073/pnas.98.2.688.

- 25. Arnsten A F, Goldman-Rakic P S. Arch Gen Psychiat. 1998;55:362–368. doi: 10.1001/archpsyc.55.4.362.

- 26. Heller W. In: Psychological and Biological Approaches to Emotion. Stein N, Leventhal B L, Trabasso T, editors. Hillsdale, NJ: Erlbaum; 1990. pp. 167–211.

- 27. Bush G, Luu P, Posner M I. Trends Cognit Sci. 2000;4:215–222. doi: 10.1016/s1364-6613(00)01483-2.

- 28. Dias R, Robbins T W, Roberts A C. Nature (London) 1996;380:69–72. doi: 10.1038/380069a0.

- 29. Liotti M, Tucker D M. In: Brain Asymmetry. Davidson R J, Hugdahl K H, editors. Cambridge, MA: MIT Press; 1995. pp. 389–423.

- 30. Baker S C, Frith C D, Dolan R J. Psychol Med. 1997;27:565–578. doi: 10.1017/s0033291797004856.

- 31. Goldman-Rakic P S. In: Handbook of Physiology–The Nervous System V. Plum F, Mountcastle V, editors. Vol. 5. Bethesda, MD: Am. Physiol. Soc.; 1987. pp. 373–417.

- 32. Braver T S, Cohen J D, Nystrom L E, Jonides J, Smith E E, Noll D C. Neuroimage. 1997;5:49–62. doi: 10.1006/nimg.1996.0247.

- 33. Smith E E, Jonides J. Science. 1999;283:1657–1661. doi: 10.1126/science.283.5408.1657.

- 34. Sutton S K, Davidson R J. Psychol Sci. 1997;8:204–210.

- 35. Heller W, Nitschke J B. Cognit Emot. 1997;11:637–661.

- 36. Chua P, Krams M, Toni I, Passingham R, Dolan R. Neuroimage. 1999;9:563–571. doi: 10.1006/nimg.1999.0407.

- 37. Heller W, Nitschke J B. Cognit Emot. 1998;12:421–447.

- 38. Hellige J B. Hemispheric Asymmetry. Cambridge, MA: Harvard Univ. Press; 1993.

- 39. Banich M T. Neuropsychology. Boston: Houghton Mifflin; 1997.

- 40. D'Esposito M, Aguirre G K, Zarahn E, Ballard D, Shin R K, Lease J. Cognit Brain Res. 1998;7:1–13. doi: 10.1016/s0926-6410(98)00004-4.

- 41. Braver T S, Barch D M, Kelley W M, Buckner R L, Cohen N J, Meizin F M, Snyder A Z, Olinger J M, Conturo T E, Akbudak E, et al. Neuroimage. 2001;14:48–59. doi: 10.1006/nimg.2001.0791.

- 42. Courtney S M, Ungerleider L G, Keil K, Haxby J V. Cereb Cortex. 1996;6:39–49. doi: 10.1093/cercor/6.1.39.

- 43. Gray J R, Braver T S. Cognit Affect Behav Neurosci. 2002, in press.

- 44. Mugler J P I, Brookeman J R. Magn Reson Med. 1990;15:152–157. doi: 10.1002/mrm.1910150117.

- 45. Conturo T E, McKinstry R C, Akbudak E, Snyder A Z, Yang T, Raichle M E. Society for Neuroscience. Vol. 26. Washington, DC: Society for Neuroscience; 1996. p. 7.

- 46. Talairach J, Tournoux P. Co-planar Stereotaxic Atlas of the Human Brain. New York: Thieme; 1988.

- 47. Bunge S A, Klingberg T, Jacobsen R B, Gabrieli J D E. Proc Natl Acad Sci USA. 2000;97:3573–3578. doi: 10.1073/pnas.050583797.

- 48. Canli T, Desmond J E, Zhao Z, Glover G, Gabrieli J D. NeuroReport. 1998;9:3233–3239. doi: 10.1097/00001756-199810050-00019.

- 49. Ochsner K N, Bunge S A, Gross J J, Gabrieli J D E. Proceedings of the Cognitive Neuroscience Society. Vol. 8. Hanover, NH: Cognitive Neuroscience Society; 2001. p. 31.

- 50. Perlstein W M, Elbert T, Stenger V A. Proc Natl Acad Sci USA. 2002;99:1736–1741. doi: 10.1073/pnas.241650598.

- 51. Wahlsten D. Psychol Bull. 1991;110:587–595.

- 52. Watanabe M. Nature (London) 1996;382:629–632. doi: 10.1038/382629a0.