Abstract

The human Ether-à-go-go-Related Gene (hERG) potassium channel is crucial for repolarizing the cardiac action potential and regulating the heartbeat. Molecules that inhibit this protein can cause acquired long QT syndrome, increasing the risk of arrhythmias and sudden fatal cardiac arrests. Detecting compounds with potential hERG inhibitory activity is therefore essential to mitigate cardiotoxicity risks. In this article, we present a new hERG data set of unprecedented size, comprising nearly 300,000 molecules reported in PubChem and ChEMBL, approximately 2000 of which were confirmed hERG blockers identified through in vitro assays. Multiple structure-based artificial intelligence (AI) binary classifiers for predicting hERG inhibitors were developed, employing, as descriptors, protein–ligand extended connectivity (PLEC) fingerprints fed into random forest, extreme gradient boosting, and deep neural network (DNN) algorithms. Our best-performing model, a stacking ensemble classifier with a DNN meta-learner, achieved state-of-the-art classification performance, accurately identifying 86% of molecules having half-maximal inhibitory concentrations (IC50s) not exceeding 20 µM in our challenging test set, including 94% of hERG blockers whose IC50s were not greater than 1 µM. It also demonstrated superior screening power compared to virtual screening schemes that used existing scoring functions. This model, named “HERGAI,” along with relevant input/output data and user-friendly source code, is available in our GitHub repository (https://github.com/vktrannguyen/HERGAI) and can be used to predict drug-induced hERG blockade, even on large data sets.

Supplementary Information

The online version contains supplementary material available at 10.1186/s13321-025-01063-8.

Keywords: hERG, Inhibitor, Binary classification model, Machine learning, Deep learning, Deep neural network, Random forest, Extreme gradient boosting, PLEC fingerprint, Stacking ensemble

Scientific contribution

We present the largest and most complex hERG inhibition data set for AI research, integrating meticulously curated experimental data from PubChem and ChEMBL. This realistic and challenging data set enables the training and evaluation of advanced models for predicting hERG blockers. We also introduce “HERGAI,” a novel stacking ensemble classifier with strong classification and screening performance, leveraging state-of-the-art machine learning/deep learning techniques and incorporating PLEC fingerprints, for the first time, as descriptors of hERG-bound ligand conformations.

Introduction

The human Ether-à-go-go-Related Gene (hERG) potassium (K+) channel is essential for cardiac electrophysiology, as it regulates the rapid delayed rectifier K+ current in cardiomyocytes. This current is critical during the repolarization phase of the cardiac action potential, ensuring the correct rhythm of each heartbeat. Dysfunction of this ion channel, whether caused by genetic mutations or by drug inhibition, can prolong the QT interval and induce long QT syndrome (LQTS) [1]. This condition is linked to a higher risk of life-threatening arrhythmias such as torsades de pointes (TdP) [1], which can degenerate into ventricular fibrillation, potentially causing sudden cardiac death [2]. Given its critical role in maintaining cardiac rhythm, identifying compounds that block the hERG channel is a major priority in drug safety evaluation. Many therapeutic drugs (including antihistamines, antipsychotics, and antibiotics) have been withdrawn from the market due to their unintended hERG inhibitory effects [3], making hERG inhibition one of the leading causes of drug attrition in clinical development [4]. Regulatory agencies such as the US Food and Drug Administration (FDA) and the European Medicines Agency (EMA) require thorough hERG liability assessments for new drug candidates to minimize the risk of drug-induced arrhythmias [5]. These observations underscore the need for early hERG inhibitor detection to prevent costly late-stage failures and minimize cardiotoxicity risks, ensuring the development of safer pharmaceuticals.

Computational approaches in cheminformatics and bioinformatics have been extensively employed to predict hERG inhibition [6–11]. Traditional techniques such as quantitative structure–activity relationship (QSAR) modeling, pharmacophore modeling, and molecular docking have been integrated with modern machine learning (ML) and deep learning (DL) methodologies, resulting in hERG inhibitor prediction tools that were trained and evaluated on diverse data sets [9, 12–15]. The past decade has seen a surge in artificial intelligence (AI)-based programs designed to achieve this goal. In 2020, a comprehensive comparison of such hERG effect predictors was performed, assessing models based on classical approaches such as random forests (RF) and gradient boosting (GB), and those relying on modern AI techniques including deep neural networks (DNN) and recurrent neural networks (RNN) [16]. A year later, a DL framework named “CardioTox net,” based on step-wise training, was introduced to predict hERG channel blockade by small molecules, outperforming pre-existing methods across various evaluation metrics [17]. In 2022, two new tools were presented: one based on a directed message passing neural network (D-MPNN) that took moe206 descriptors as input [18], and a voting-based consensus model employing RF, extreme GB (XGB), and DNN [11]. Another study published in 2023 constructed a series of binary classification models, either using four types of molecular fingerprints and molecular descriptors fed into four ML algorithms, or based on graph convolutional neural networks (GNN) [19]. Its findings demonstrated the superior classification power of GNN, suggesting their ability to effectively capture molecular features relevant to hERG inhibition directly from the original input [19].
These results were corroborated by another study published in the same year, underscoring the predictive power and generalizability of GNN as a foundation for comprehensive automation in predictive toxicology [20]. “AttenhERG,” a novel GNN framework introduced in 2024, was reported to significantly improve the interpretability and accuracy of hERG blocker prediction by integrating uncertainty estimation [21]. In January 2025, Lee and Yoo combined a graph attention mechanism and a gated recurrent unit to develop “hERGAT,” a new DL model focusing on the importance of different molecular substructures by capturing complex interactions at atomic and molecular levels, providing insights into the features contributing to hERG inhibition [22]. Another publication from May 2025 introduced a strategy combining XGB with isometric stratified ensemble (ISE) mapping to build robust models less affected by class imbalance, demonstrating strong performance in both recall and specificity [23]. By estimating the model’s applicability domain and enabling more reliable prediction confidence and compound prioritization through data stratification, ISE mapping enhanced the utility of the XGB-ISE framework as a promising AI-based tool for early cardiotoxicity assessment related to drug-induced hERG inhibition. Table S1 (Supporting Information) provides an overview of the hERG blocker predictors listed above.

Though differing in methodology, most of the hERG inhibitor detection tools described above were trained on relatively limited data sets, typically small to medium in size, with most containing no more than 20,000 molecules (and some even fewer than 10,000) [9–19, 21]. As a result, the vast amount of hERG-related experimental data available in public repositories such as PubChem remains underutilized for model training. Furthermore, many test sets used to assess hERG blocker predictors were either class-balanced or enriched with positive instances [13, 15, 17, 18, 21, 22], failing to reflect a realistic virtual screening (VS) deck where inactive molecules vastly outnumber active ones [24, 25]. This may lead to an overestimation of these tools’ screening performance, particularly when they are applied to VS tasks [24, 25]. On another note, the source code for some hERG-specific AI models was neither published alongside their original articles nor made publicly available elsewhere [9, 11]. This lack of accessibility hinders the ability to retrain these models on larger data sets, reproduce the reported results, and apply them to large-scale data. In light of the above remarks, in this study, we aimed to:

i. Retrieve, curate, and maximize the use of available hERG blocker/non-blocker data from public experimental repositories to construct a realistic data set for training and evaluating hERG inhibitor prediction models;

ii. Develop a new AI-based hERG blocker prediction tool using state-of-the-art ML/DL techniques and assess its performance with appropriate evaluation metrics;

iii. Make all input/output data and source code publicly available in a GitHub repository, allowing other researchers to reproduce our findings, enhance our model, and use it to predict hERG inhibitory activity, even on large-scale data sets.

Specifically, we assembled and curated both high-throughput screening (HTS) and non-HTS data from PubChem and ChEMBL by following a thorough procedure recommended in the literature [24, 26], producing a realistic, high-quality hERG blocker/non-blocker data set of nearly 300,000 molecules strategically split into a training set and a test set. Using these data, we present a new AI-based hERG-specific scoring function (SF) that is a stacking ensemble binary classifier for detecting hERG inhibitors. This model, named “HERGAI,” employs a DNN trained on the output of three base models, each of which was built using protein–ligand extended connectivity (PLEC) fingerprints extracted from hERG-bound docking poses of our training molecules and fed into the RF, XGB, or DNN algorithm. HERGAI demonstrated strong classification and screening power on our challenging test set, and avoided the pitfall of overfitting to positive instances. All data and source code are publicly available at https://github.com/vktrannguyen/HERGAI.

Results and discussion

Computational workflow

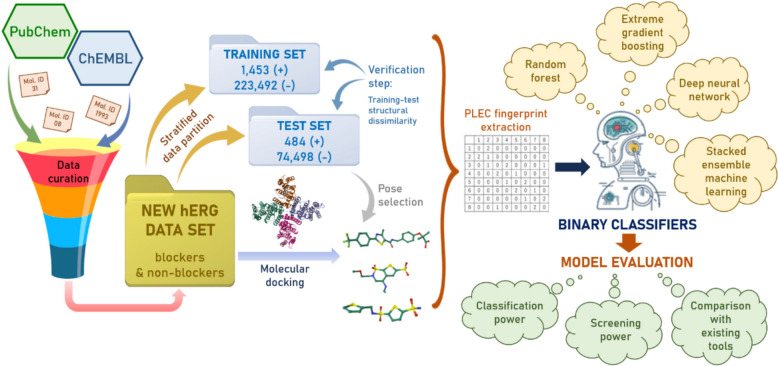

The computational workflow of our study is shown in Fig. 1. First, small-molecule data related to hERG inhibitory assays (both HTS and non-HTS), including all ligand identifiers (IDs), their canonical SMILES strings, and recorded half-maximal inhibitory concentration (IC50) values (in µM), were retrieved from PubChem and ChEMBL. These molecules underwent a curation process that aimed at: (i) removing ambiguous data points (active compounds without any potency value, active readouts from primary assays with no confirmatory result) and duplicates; (ii) defining a universal threshold to distinguish between active and inactive ligands; (iii) determining an overall activity label and potency value for each molecule; (iv) removing potential false positive (assay-interfering) instances such as luciferase inhibitors, promiscuous aggregators, and auto-fluorescent substances; and (v) removing inorganic and inactive ligands whose physicochemical properties fell outside the commonly observed ranges for drug-like molecules (see “Methods”). These steps follow a recently published protocol [26] and were previously applied during the development of LIT-PCBA, a well-known data collection designed for ML-based VS [24]. All remaining molecules were then docked with Smina (v.2019-10-15) [27] into a hERG template structure chosen from the Protein Data Bank (PDB), in the same binding site as that of a hERG blocker whose conformations had been experimentally solved in complex with hERG. A stratified data split was then performed on the successfully docked molecules, creating a training set and a test set whose structural dissimilarity was confirmed in a subsequent verification step. Among Smina [27], RF-Score-VS [28] and the recently introduced ClassyPose [29], one SF was selected as the docking pose selection method, based on the performance of the VS schemes that used each of them on our test data.
PLEC fingerprints were then extracted from all selected docking poses [30], and those of training molecules were used as input to train and evaluate six AI-based binary classifiers for hERG inhibitor prediction, using RF, XGB, and DNN as learning algorithms. Two models were built per algorithm, half of them with a stacked ensemble ML approach. This approach trains a meta-classifier to combine the outputs of several base models, thereby leveraging the strengths of diverse algorithms. The meta-model learns how best to integrate their predictions, which often improves predictive performance and robustness, especially in complex classification tasks [31]. The classification and screening power of all models was finally evaluated on the test set and compared with state-of-the-art hERG blocker predictors recently reported in the literature.

Fig. 1.

Computational workflow of our study. A series of AI-based binary classifiers for hERG inhibitor prediction were built and evaluated (half of which were from a stacked ensemble ML approach). These models incorporated RF, XGB, and DNN as learning algorithms, using PLEC fingerprints extracted from docking poses of nearly 300,000 hERG blockers and non-blockers retrieved and curated from PubChem and ChEMBL

Data retrieval, data curation and stratified data split

From PubChem and ChEMBL, 299,927 molecules (1937 true actives and 297,990 true inactives) remained after the data retrieval and data curation procedure (see “Methods”). The IC50 of 20 µM, corresponding to a potency value (pIC50 = -log10 IC50, with IC50 in M) of 4.7, was chosen as the universal threshold to distinguish between experimental hits and non-hits, as it was the most common threshold used across all available hERG inhibitory assays. The remaining ligands were structurally diverse: only 1.50% of the pairs that could be formed among the true actives had a Tanimoto similarity above 0.30, in terms of Morgan fingerprints (2048 bits, radius 2) (Figure S1, Supporting Information); the proportion was 0.46% for true inactives. Moreover, these true hits and non-hits shared wide physicochemical property ranges, posing a particular challenge for any VS procedure and SF (Figure S2, Supporting Information). All of them were successfully docked into the structure of hERG, in the same binding site as that of astemizole, a well-known blocker of this potassium channel (see “Methods”).
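The potency conversion and labeling rule above can be sketched as follows. This is a minimal illustration, assuming IC50 values already expressed in µM and an inclusive cutoff (IC50 ≤ 20 µM counts as a blocker, matching "not exceeding 20 µM"); the function names are hypothetical and not taken from the HERGAI repository.

```python
import math

# Universal activity threshold stated in the text: IC50 = 20 µM.
IC50_THRESHOLD_UM = 20.0


def pic50_from_um(ic50_um: float) -> float:
    """pIC50 = -log10(IC50 in M); for an IC50 in µM this equals 6 - log10(IC50)."""
    if ic50_um <= 0:
        raise ValueError("IC50 must be positive")
    return 6.0 - math.log10(ic50_um)


def activity_label(ic50_um: float, threshold_um: float = IC50_THRESHOLD_UM) -> int:
    """Return 1 (hERG blocker) if IC50 <= threshold, else 0 (non-blocker)."""
    return int(ic50_um <= threshold_um)


print(round(pic50_from_um(20.0), 2))  # 4.7
print(activity_label(1.0), activity_label(50.0))  # 1 0
```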

From these remaining active and inactive ligands, 1048 and 82,909 Bemis-Murcko (BM) scaffold cores were extracted, respectively, based on which the composition of the training set and that of the test set were determined [32]. The principle guiding data set partitioning ensured that each active/inactive BM scaffold was randomly assigned as a whole to either the training set or the test set (no further split on any BM scaffold was performed), such that the training-to-test ratio (in terms of ligand population) was 3/1. Table 1 provides details about our final data partitions.
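A scaffold-grouped, label-stratified split of this kind can be sketched in plain Python. The sketch assumes that Bemis-Murcko scaffolds have already been computed (for example with RDKit) and that whole scaffold groups are drawn at random into the test set until it holds about a quarter of each class; the greedy fill rule and the `scaffold_split` name are illustrative assumptions, not the authors' code.

```python
import random
from collections import defaultdict


def scaffold_split(records, test_fraction=0.25, seed=0):
    """Scaffold-grouped, label-stratified train/test split (illustrative sketch).

    records: iterable of (ligand_id, scaffold, label) tuples, where `scaffold` is a
    precomputed Bemis-Murcko scaffold string and label is 1 (active) or 0 (inactive).
    Returns (train_ids, test_ids); a scaffold of a given class is never split.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)  # (label, scaffold) -> ligand IDs
    for lig_id, scaffold, label in records:
        groups[(label, scaffold)].append(lig_id)

    train_ids, test_ids = [], []
    for label in sorted({key[0] for key in groups}):
        keys = sorted(k for k in groups if k[0] == label)
        rng.shuffle(keys)  # random scaffold order within this class
        target = test_fraction * sum(len(groups[k]) for k in keys)
        n_test = 0
        for key in keys:
            # Whole scaffold groups go to the test set until its target size is reached.
            if n_test < target:
                test_ids.extend(groups[key])
                n_test += len(groups[key])
            else:
                train_ids.extend(groups[key])
    return train_ids, test_ids
```

Because entire groups are moved, the final ratio is only approximately 3/1; with very large groups a more careful assignment (for example, filling the test set with the largest groups first) may be needed.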

Table 1.

Final composition of our training set and test set

| Count | Activity label | Training set | Test set |
|---|---|---|---|
| Number of BM scaffolds | Active | 796 | 252 |
| Number of BM scaffolds | Inactive | 61,464 | 21,445 |
| Number of ligands | Active | 1453 | 484 |
| Number of ligands | Inactive | 223,492 | 74,498 |

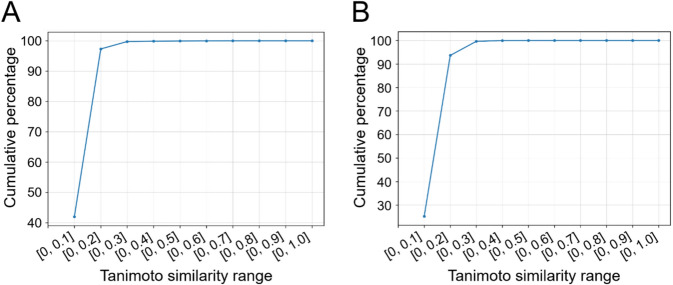

We also sought to ensure that the training set was structurally different from the test set, in order to avoid training-test biases and data leakage during subsequent ML model training. Because different BM scaffolds may still be structurally very similar to each other (which would lead to performance overestimation if our ML models were trained and tested on ligands with similar structures), we took precautions to minimize training-test analog biases. To this end, Morgan fingerprints (2048 bits, radius 2) were extracted from all training and test set ligands, after which the Tanimoto similarity of every training active-test active pair and training inactive-test inactive pair was computed (see “Methods”). Very few similar molecules were found across the two data partitions, as shown in Fig. 2.

Fig. 2.

Cumulative percentage of training active-test active pairs (a) and training inactive-test inactive pairs (b) whose Tanimoto similarity coefficients fell within defined ranges, in terms of Morgan fingerprints (2048 bits, radius 2)

It is observed that 99.77% of all training active-test active pairs (n = 703,252) and 99.64% of all training inactive-test inactive pairs (n = 16,649,707,016) had Tanimoto similarity coefficients not exceeding 0.30, in terms of Morgan fingerprints (2048 bits, radius 2). This highlights the very low structural similarity between our training and test ligands, and justifies the BM scaffold-based stratified data split that we performed.
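The cross-partition check can be sketched as below. For self-containment, fingerprints are represented as sets of on-bit indices (such as those returned by RDKit's `GetOnBits()` on a Morgan bit vector); the helper names are hypothetical. A pure-Python double loop is only practical for small sets: the roughly 16.6 billion inactive pairs reported above would require a vectorized or bulk similarity routine.

```python
from itertools import product


def tanimoto(a: frozenset, b: frozenset) -> float:
    """Tanimoto coefficient of two fingerprints given as sets of on-bit indices."""
    if not a and not b:
        return 0.0  # convention assumed here for two empty fingerprints
    inter = len(a & b)
    return inter / (len(a) + len(b) - inter)


def fraction_not_exceeding(train_fps, test_fps, cutoff=0.30):
    """Fraction of all training-test pairs whose Tanimoto similarity is <= cutoff."""
    n_pairs = 0
    n_ok = 0
    for a, b in product(train_fps, test_fps):
        n_pairs += 1
        n_ok += tanimoto(a, b) <= cutoff
    return n_ok / n_pairs
```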

On another note, our data set, particularly the test partition, is especially challenging due to the presence of overlapping BM scaffolds between active and inactive molecules (Figure S3, Supporting Information). Specifically, 24 BM scaffolds are shared between test actives and test inactives, with 38 test actives sharing scaffolds with 952 test inactives. This overlap increases the difficulty of the prediction task and mirrors real-world screening collections, where active compounds may differ from inactives by only subtle structural changes (e.g., a halogen substituent or a heteroatom in a cyclic moiety) which are not always captured by BM scaffolds. Such overlap better reflects the realistic complexity of drug discovery data and helps avoid overestimating model performance in both classification and VS tasks. The challenging nature of our test set is further evidenced by the poor performance of an RF classification model trained solely on ligand-based features (Morgan fingerprints, 2048 bits, radius 2) using default hyperparameters. Even after applying random oversampling of the minority class in the training set, consistent with the oversampling strategy subsequently used in our AI/ML models, this RF classifier achieved only a balanced accuracy of 0.525 (barely above random) and a recall of 0.050 (given a decision threshold of 0.5). These results underscore the minimal bias in our stratified train-test split, reinforcing the robustness of our partition strategy. They also suggest that ligand-based features, which omit protein-related information, are suboptimal for developing accurate hERG inhibitor prediction models.

Choosing a pose selection method

All target-bound conformations of each docked molecule in the test set were scored by three different SFs: Smina [27], RF-Score-VS (v.2) [28], and ClassyPose [29]. Smina is the classical SF native to the namesake docking program used to generate all docking poses in this study [27]. RF-Score-VS is a generic RF-based SF that was trained for predicting ligand-target binding affinity [28]. ClassyPose is a support vector machine (SVM) binary classifier introduced in 2024 as a pose classification tool that exhibited good performance in terms of docking power and was proven effective for pose selection in various VS tasks [29]. Note that, for ClassyPose, a PLEC fingerprint had to be extracted from each docking pose obtained from the test set, using our in-house Python code (see “Methods”). Only one best-scored docking pose determined by each of these three SFs was retained for each test set ligand, before ligand ranking was carried out by either Smina or RF-Score-VS. This strategy led us to the following six VS schemes performed on our test data:

Scheme 1: Smina/Smina (pose selection and ligand ranking by Smina);

Scheme 2: RF-Score-VS/Smina (pose selection by RF-Score-VS, ligand ranking by Smina);

Scheme 3: ClassyPose/Smina (pose selection by ClassyPose, ligand ranking by Smina);

Scheme 4: Smina/RF-Score-VS (pose selection by Smina, ligand ranking by RF-Score-VS);

Scheme 5: RF-Score-VS/RF-Score-VS (pose selection and ligand ranking by RF-Score-VS);

Scheme 6: ClassyPose/RF-Score-VS (pose selection by ClassyPose, ligand ranking by RF-Score-VS)
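The six schemes combine two independent choices: which SF picks the single retained pose per ligand, and which SF scores that pose for ranking. A minimal sketch of this logic, assuming per-pose scores are already available in a dictionary and assuming score directions (Smina energies: lower is better; RF-Score-VS and ClassyPose outputs treated as higher is better); the key names are hypothetical.

```python
# Direction of each scoring function (assumption for this sketch):
# Smina scores are binding energies (lower = better); RF-Score-VS and ClassyPose
# outputs are treated as "higher = better".
HIGHER_IS_BETTER = {"smina": False, "rfscore_vs": True, "classypose": True}

# Schemes 1-6 in the order listed in the text: (pose selector, ligand ranker).
SCHEMES = [(sel, rank) for rank in ("smina", "rfscore_vs")
           for sel in ("smina", "rfscore_vs", "classypose")]


def run_scheme(poses, selector, ranker):
    """poses: {ligand_id: [{sf_name: score, ...}, ...]}, one dict per docking pose.

    Keeps the single best pose per ligand according to `selector`, then ranks
    ligands by the `ranker` score of that pose. Returns ligand IDs, best first.
    """
    pick = max if HIGHER_IS_BETTER[selector] else min
    ranking_score = {}
    for lig, pose_list in poses.items():
        best_pose = pick(pose_list, key=lambda pose: pose[selector])
        ranking_score[lig] = best_pose[ranker]
    return sorted(ranking_score, key=ranking_score.get,
                  reverse=HIGHER_IS_BETTER[ranker])
```

Keeping selection and ranking as separate parameters makes it easy to see why pose selection alone can change the final ranking even when the ranking SF stays fixed.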

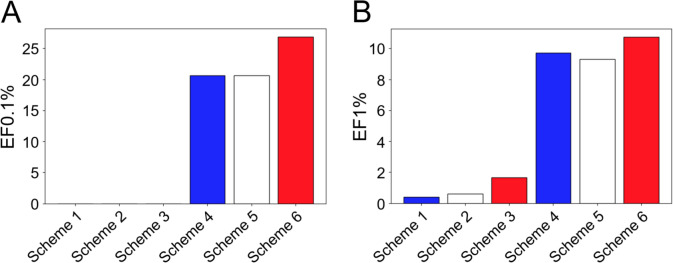

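The performance of each VS scheme, in terms of the enrichment factor (EF) of true actives among the top 0.1% ranked molecules (EF0.1%) and among the top 1% (EF1%), is shown in Fig. 3. The EF is the proportion of true actives among the top-ranked molecules divided by their proportion in the whole test set, so a value of 1 corresponds to random ranking.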

Fig. 3.

Performance on the test set, in terms of EF0.1% (a) and EF1% (b), of six VS schemes involving docking pose selection by Smina, RF-Score-VS or ClassyPose followed by ligand ranking by either Smina or RF-Score-VS. For a given ligand ranking SF, the VS schemes employing ClassyPose for pose selection (Schemes 3, 6) achieved the highest EF0.1% and EF1% (red bars), except for EF0.1% with ligand ranking by Smina, where all schemes gave EF0.1% = 0.0

As shown in Fig. 3, pose selection by ClassyPose led to the highest EF1% whether Smina or RF-Score-VS was subsequently used for ligand ranking. Compared with the second-best pose selection SF, ClassyPose improved EF1% by 166.67% when ligands were ranked by Smina and by 10.64% when they were ranked by RF-Score-VS. A similar observation held when the proportion of virtual hits was narrowed from the top 1% to only the top 0.1%: when RF-Score-VS was responsible for ligand ranking, pose selection by ClassyPose retrieved 30% more true actives than pose selection by either Smina or RF-Score-VS. These results agree with the VS results reported in the original ClassyPose publication [29], which demonstrated the strong performance of this SVM-based model in the ligand posing challenges involved in in silico screening. ClassyPose was therefore chosen as the SF to select one best docking pose per ligand in both the training set and the test set for the subsequent development and evaluation of our hERG-specific AI models.
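The EF values and relative improvements discussed above can be computed with a few lines of code. This sketch assumes the labels are already sorted from best to worst score and that the top-x% cutoff is rounded to the nearest whole molecule; conventions for that cutoff differ between studies, so small deviations from published values are possible.

```python
def enrichment_factor(ranked_labels, fraction):
    """EF at a given top fraction of a ranked list (illustrative sketch).

    ranked_labels: activity labels (1 = true active, 0 = true inactive), ordered
    from the best-scored to the worst-scored molecule.
    EF = (actives in top / size of top) / (all actives / all molecules).
    """
    n = len(ranked_labels)
    n_actives = sum(ranked_labels)
    if n == 0 or n_actives == 0:
        raise ValueError("need at least one molecule and one active")
    # Assumption: the top-x% cutoff is rounded to the nearest integer (at least 1).
    n_top = max(1, round(fraction * n))
    hits_top = sum(ranked_labels[:n_top])
    return (hits_top / n_top) / (n_actives / n)


def relative_improvement(new, reference):
    """Percentage improvement of `new` over `reference`."""
    return 100.0 * (new - reference) / reference
```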

Developing AI-based binary classifiers for predicting hERG inhibitors

For each training set ligand, the PLEC fingerprint of its docking pose with the highest ClassyPose score was used as input to train our binary classifiers for predicting hERG inhibitors, employing one of three ML algorithms: RF, XGB, or DNN. Hyperparameter tuning was carried out for each algorithm by fivefold cross-validation (CV) on the training set (training-to-validation fold ratio of 4/1), maximizing the average balanced accuracy (recommended for class-imbalanced data [33]) across validation folds. Because of the class imbalance of our training set (active-to-inactive ratio of approximately 1/154), we performed random oversampling of active ligands (the minority class) in each training fold during fivefold CV, i.e., after the training-validation fold split. This practice avoided data leakage from the training fold to the validation fold and thus enabled a more reliable evaluation of model performance. Based on preliminary experiments with different oversampling ratios (data not shown), we chose to multiply the active ligand population of each training fold by 100, making it much more class-balanced. The optimal hyperparameters are listed in Table S2 (Supporting Information) and were used to train our final binary classifiers on the entire training set (oversampled in the same manner as each training fold). These hERG-specific SFs were then applied to the external test set (whose docking poses had also been selected by ClassyPose). Based on the “active probability” predicted by each ML model and the decision threshold determined from fivefold CV, all test set ligands were classified as active or inactive and ranked from the most to the least likely to be active.
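The key point in this protocol is the order of operations: split first, then oversample only the training fold. The sketch below illustrates it in plain Python. The stratified random fold assignment is an assumption (the text does not specify how folds were drawn), and `factor=100` means the actives in each training fold end up 100 times as numerous, with extra copies drawn at random with replacement.

```python
import random


def stratified_folds(labels, k=5, seed=0):
    """Assign indices to k folds, keeping the class ratio similar in every fold."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return folds


def oversample_actives(train_idx, labels, factor=100, seed=0):
    """Random oversampling of actives (label 1) within one training fold only."""
    rng = random.Random(seed)
    actives = [i for i in train_idx if labels[i] == 1]
    extra = [rng.choice(actives) for _ in range((factor - 1) * len(actives))] if actives else []
    return list(train_idx) + extra


def cv_splits(labels, k=5, factor=100, seed=0):
    """Yield (oversampled_train_idx, val_idx) for each of the k CV rounds."""
    folds = stratified_folds(labels, k, seed)
    for v in range(k):
        val_idx = folds[v]
        train_idx = [i for f in range(k) if f != v for i in folds[f]]
        # Oversample AFTER the split, so no duplicated active leaks into validation.
        yield oversample_actives(train_idx, labels, factor, seed + v), val_idx
```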

We also implemented a stacked ensemble ML approach using the three previously generated base classification models, called RF_BC, XGB_BC, and DNN_BC from this point. Each of the same three algorithms (RF, XGB, and DNN) was used as the meta-learner to build a stacking binary classifier. For this, the active probabilities of the ligands in each of the five validation folds (partitioned from the training set) were predicted by each base model, trained on the corresponding training fold, using its respective optimal hyperparameters. To account for the optimal decision threshold determined for each base model (which changed from one model to another, and impacted their classification results), we adjusted the active probabilities predicted by a base model, dividing them by the corresponding decision threshold. The adjusted scores from the three base classifiers for all training set ligands were used as input features for meta-model training. Hyperparameter tuning was performed with fivefold CV on the training set, after which the optimal hyperparameters were used to train the stacking classifiers, called RF_SC, XGB_SC, and DNN_SC from this point, on the entire training set. It is important to note that: (i) the fold compositions of the training set and the search space for hyperparameter tuning remained consistent during the training of both base and stacking models, and (ii) oversampling was applied to each training fold (during fivefold CV) and to the entire training set in the same manner as previously described.
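The construction of the meta-learner input can be sketched as follows: each base model is refit on every training fold, predicts the held-out fold, and its probability is divided by its own decision threshold, so a value of at least 1 means "predicted active" for every base model. The learner interface (a function that fits on training data and returns a predictor) is a hypothetical simplification, and oversampling of each training fold is omitted for brevity.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: a learner fits on (X_train, y_train) and returns a
# function mapping feature rows to active probabilities.
Predictor = Callable[[Sequence[Sequence[float]]], List[float]]
Learner = Callable[[Sequence[Sequence[float]], Sequence[int]], Predictor]


def out_of_fold_meta_features(X, y, folds, base_learners: Dict[str, Learner],
                              thresholds: Dict[str, float]):
    """Return (base model names, meta-feature rows) built from out-of-fold predictions."""
    names = sorted(base_learners)
    meta = [[0.0] * len(names) for _ in X]
    for v, val_idx in enumerate(folds):
        train_idx = [i for f, fold in enumerate(folds) if f != v for i in fold]
        X_tr = [X[i] for i in train_idx]
        y_tr = [y[i] for i in train_idx]
        X_va = [X[i] for i in val_idx]
        for j, name in enumerate(names):
            predict = base_learners[name](X_tr, y_tr)  # fit on the training fold only
            for i, p in zip(val_idx, predict(X_va)):
                meta[i][j] = p / thresholds[name]  # threshold-adjusted score
    return names, meta
```

Using only out-of-fold predictions prevents the meta-learner from seeing base-model outputs on ligands those base models were trained on.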

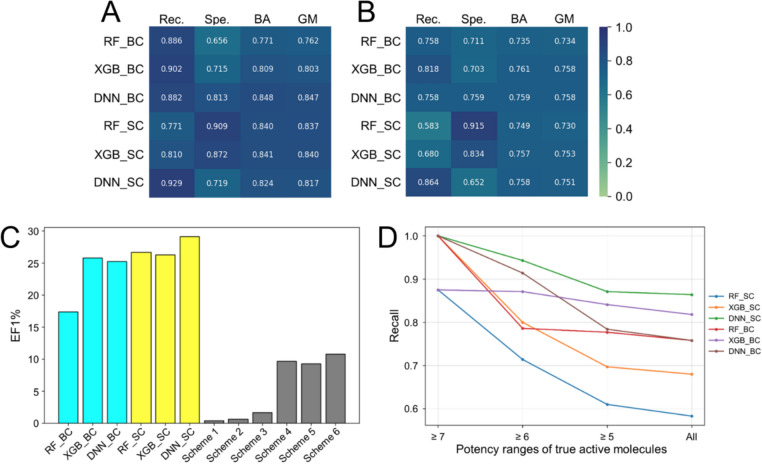

We evaluated the performance of our six binary classifiers in terms of screening power and classification power. The screening power was assessed on the external test set using the area under the receiver operating characteristic curve (ROC-AUC), the EF0.1% and the EF1%, enabling direct comparisons with six VS schemes involving existing generic SFs (see the previous section). The classification power, on the other hand, was evaluated both during fivefold CV and on the test set, primarily based on the recall, and secondarily on the balanced accuracy, the specificity, and the geometric mean of recall and specificity (G-mean). We chose these metrics to assess our models because: (i) our primary goal was to build classifiers capable of identifying hERG inhibitors, which pose a fatal risk of cardiotoxicity to patients (thus, recall should be the primary evaluation metric); and (ii) other commonly used metrics, such as the Matthews correlation coefficient (MCC), the precision, and the F1-score, though widely cited in the literature, may not be suitable for highly imbalanced data. Indeed, when the test set is severely class-imbalanced and based on an imperfect reference standard (as in our case, with a low prevalence of 0.006), even a good classification model can yield a very low or near-zero MCC, precision value, and F1-score. This occurs particularly when only a small proportion of negative instances are misclassified, yet their population far outweighs that of true positives, which drastically lowers these metrics and does not fairly reflect the model’s classification power. This issue was raised and analyzed in a recent article [34], highlighting the importance of selecting the most appropriate evaluation metric(s) for the task’s purpose and the test data composition. Figures 4, S4 and Table S3 (Supporting Information) illustrate the performance of our six AI-based binary classifiers for hERG inhibitor prediction in the scenarios outlined above.

Fig. 4.

Performance of our six AI-based binary classifiers for hERG inhibitor prediction. Their classification power was assessed during fivefold CV (a) and on the test set (b), in terms of recall (Rec.), specificity (Spe.), balanced accuracy (BA), and G-mean (GM). For (a), average values across the five folds are reported. The screening power of these models was evaluated on the test set, reflected by the EF1% values portrayed in (c) (the performance of six VS schemes involving existing generic SFs is shown for comparison). The recall values of our hERG-specific classifiers on sub-populations of test actives corresponding to different potency ranges are portrayed in (d)
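To make the point about low prevalence concrete, the sketch below computes these metrics from confusion-matrix counts. TN = 48,557 and FP = 25,941 are the DNN_SC counts on test inactives given later in this article; TP = 418 and FN = 66 are back-calculated here (an assumption) from the reported recall of 0.864 on 484 test actives, so the outputs are approximate.

```python
import math


def binary_metrics(tp, fn, fp, tn):
    """Common binary classification metrics from confusion-matrix counts."""
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "recall": recall,
        "specificity": specificity,
        "balanced_accuracy": (recall + specificity) / 2,
        "g_mean": math.sqrt(recall * specificity),
        "precision": precision,
        "f1": f1,
        "mcc": mcc,
    }


# TN/FP: DNN_SC on test inactives; TP/FN: back-calculated from recall (approximation).
m = binary_metrics(tp=418, fn=66, fp=25941, tn=48557)
```

With these counts, balanced accuracy is about 0.76, whereas precision falls below 0.02 and MCC below 0.1, because roughly 26,000 false positives vastly outnumber about 400 true positives.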

As shown in Figure S4a, S4b and S4c (Supporting Information), our hERG-specific ML classifiers significantly outperformed all six VS schemes involving existing generic SFs on the external test set in terms of screening power, according to all evaluation metrics (ROC-AUC, EF0.1% and EF1%). This observation aligns with findings from other studies highlighting the superior screening power of target-specific MLSFs over generic ones, even on challenging test sets composed of ligands structurally different from those in the training set and containing a small proportion of positive instances [26, 35–37]. Our six AI models performed better than random classification (balanced accuracy = 0.5) and, despite the class imbalance, avoided the pitfall of predicting nearly all test data as belonging to the dominant class of the training set (which would give a G-mean close to 0). Among these classifiers, the stacking models gave classification power comparable to that of their base components (Figs. 4a–c, S4d–g). However, the stacked ensemble classifiers gave significantly better EF1% than the base ones (Figs. 4c, S4h). Notably, the stacking model DNN_SC gave the highest EF of true actives at both the top 0.1% and the top 1% of our ranked test population (Figs. 4c, S4i), demonstrating its ability to rank true positives above true negatives based on predicted active probability scores. It also gave the highest recall (our primary evaluation metric) both during fivefold CV and on the test set (Fig. 4a, b). Although all models showed performance drops from fivefold CV to test set evaluation, mostly due to reduced recall, DNN_SC showed only a modest drop in both balanced accuracy and recall, indicating good generalization and limited overfitting. A closer look at sub-populations of test active ligands corresponding to different potency ranges (Fig. 4d) showed that DNN_SC recognized 94.29% (n = 66) of the test actives with pIC50 ≥ 6 (IC50 ≤ 1 µM, n = 70), including 100% of the strongest hERG blockers, whose IC50s did not exceed 0.1 µM (n = 8). The potency threshold corresponding to an IC50 of 1 µM is commonly used in pharmacology and toxicology studies to distinguish strong hERG blockers, which are associated with an elevated risk of cardiotoxicity, from weaker ones [38]. Despite their structural differences from the active ligands used in model training (Table S4, Supporting Information), the most potent true hits were correctly labeled by our DNN_SC model. Moreover, DNN_SC achieved higher recall and substantially better screening power on the full test set (Table 2) than two state-of-the-art hERG prediction tools, CardioTox net [17] and AttenhERG [21], demonstrating its robustness as a hERG inhibitor detector.
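The potency-stratified recall can be sketched as a recall restricted to test actives above a pIC50 cutoff (pIC50 ≥ 6 corresponds to IC50 ≤ 1 µM, and pIC50 ≥ 7 to IC50 ≤ 0.1 µM). The inputs below are synthetic and the function name is hypothetical; only the 66-of-70 proportion mirrors the figure reported above.

```python
def recall_at_or_above(pic50s, predicted, cutoff):
    """Recall over test actives whose pIC50 >= cutoff.

    pic50s and predicted (1 = predicted active) describe test actives only.
    Returns (recall, number of actives in the subset).
    """
    subset = [p for v, p in zip(pic50s, predicted) if v >= cutoff]
    if not subset:
        return float("nan"), 0
    return sum(subset) / len(subset), len(subset)


# Synthetic example: 70 strong actives (66 recovered) plus 10 weaker actives.
pic50s = [6.5] * 70 + [5.0] * 10
predicted = [1] * 66 + [0] * 4 + [1] * 10
print(recall_at_or_above(pic50s, predicted, 6.0))
```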

Table 2.

Classification and screening performance of DNN_SC, CardioTox net and AttenhERG on the full test set (BA: balanced accuracy, GM: G-mean)

| Classification performance | Screening performance | ||||||

|---|---|---|---|---|---|---|---|

| Recall | Specificity | BA | GM | ROC-AUC | EF0.1% | EF1% | |

| DNN_SC | 0.864 | 0.652 | 0.758 | 0.751 | 0.863 | 64.05 | 29.13 |

| CardioTox net | 0.603 | 0.821 | 0.712 | 0.704 | 0.770 | 16.53 | 5.58 |

| AttenhERG | 0.698 | 0.737 | 0.718 | 0.717 | 0.789 | 51.65 | 16.74 |

Notwithstanding our primary goal of building classifiers with high recall, and acknowledging the inherent trade-off between the recall and the specificity, it is important to note that a minority of negative instances, particularly in the external test set, were mislabeled as positives by our six hERG-specific ML classifiers (including DNN_SC). This, in turn, lowered their specificity. While decision threshold tuning was performed in this study to alleviate the drop in specificity, cost-sensitive learning techniques such as class-weighted loss functions may help mitigate this trade-off by better balancing false positives and false negatives. We aimed to examine this issue more closely and investigated whether HergSPred, another recent hERG blocker predictor, would face similar challenges when applied to a data set of unprecedented size and complexity like ours.
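Class-weighted loss functions are mentioned here as a possible remedy; HERGAI itself relied on oversampling and decision threshold tuning. A minimal sketch of one such loss, a weighted binary cross-entropy in which errors on actives are scaled by a `pos_weight` factor, follows. It has the same form as the `pos_weight` option of PyTorch's `BCEWithLogitsLoss`, but is written on probabilities rather than logits; the weight value is an illustrative assumption (for instance, the inactive-to-active ratio of about 154 in our training set).

```python
import math


def weighted_bce(y_true, p_pred, pos_weight, eps=1e-7):
    """Mean binary cross-entropy with the loss on actives (y = 1) scaled by pos_weight."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)
```

Raising `pos_weight` makes missed actives costlier during training, which shifts the model toward higher recall; whether this yields a better recall-specificity balance than threshold tuning would have to be tested empirically.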

Comparing DNN_SC to HergSPred, a state-of-the-art hERG inhibitor prediction tool

HergSPred is a consensus hERG inhibitor prediction tool that combines 30 individual classification models by voting [11]. Unlike our structure-based hERG-specific ML classifiers, HergSPred is a ligand-based model employing DNN, RF, and XGB as learning algorithms, each trained and evaluated using MACCS keys and Morgan fingerprints extracted from nearly 7000 ligands during tenfold CV [11]. Introduced in 2022, it was reported to achieve strong classification performance, exceeding that of pre-existing tools on multiple test sets [11]. To determine whether HergSPred would also produce many false positives, as observed for our best model (DNN_SC), we set out to apply it to our inactive test population of 74,498 instances.

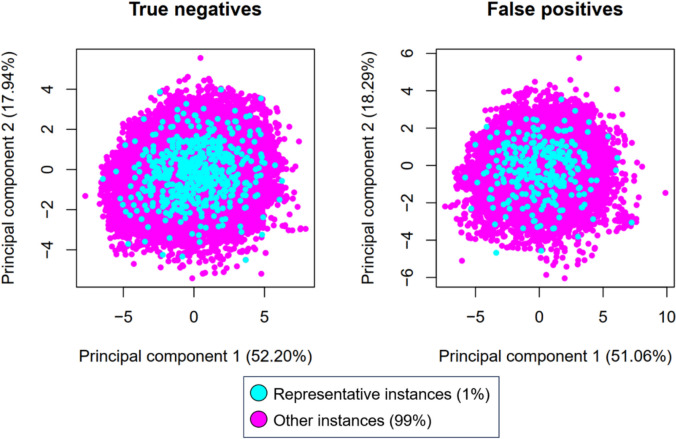

However, HergSPred can only be accessed via a web server (at http://www.icdrug.com/ICDrug/T), and no source code is available to run the program on large data sets. Since the web server can only process a limited number of molecules at a time (to avoid overloading), it was not feasible to run predictions for over 70,000 ligands on the website. We therefore selected a representative subset corresponding to 1% of our inactive test population (n = 744), a size comparable to that of most test sets published in the literature [11–15]. To this end, we first divided our test inactives into two categories, true negatives (TN, n = 48,557) and false positives (FP, n = 25,941), according to the classification predictions of our best model (DNN_SC). This allowed us to verify (i) whether HergSPred would correctly label the TN, and (ii) whether it would make the same labeling mistakes on the FP as DNN_SC. Using Open Babel (v.3.1.1) [39], nine physicochemical properties, namely molecular weight, number of atoms, number of bonds, numbers of single/double/aromatic/rotatable bonds, topological polar surface area (TPSA), and lipophilicity (logP), were computed for each molecule. K-means clustering was performed separately on the TN and FP populations, using the nine scaled properties as input and our in-house R code (see “Methods”), such that 485 and 259 clusters were created among the TN and FP, respectively. Finally, one molecule was randomly selected from each cluster. This procedure (i) yielded a subset containing 1% of our inactive test data, and (ii) ensured that each cluster was represented by one of its members, so that the chosen subset was representative of the inactive test population in chemical space. 
Indeed, a principal component analysis (PCA) of the scaled properties (see “Methods”) showed that the selected 1% TN/FP samples were well distributed across the 2D space defined by the first two principal components, which also encompassed the remaining 99% of instances (Fig. 5). Further statistical analyses using the Mann–Whitney U test with Bonferroni correction detected no significant difference between the chosen 1% subset and the other 99% in terms of key structural properties (all p-values exceeding 0.05, Figure S5, Supporting Information). These observations suggest that the selected 485 TN and 259 FP instances were representative of the entire inactive test population and suitable for HergSPred evaluation.
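The comparison of the 1% subset with the remaining 99% amounts to one two-sided Mann–Whitney U test per property, followed by a Bonferroni adjustment (multiplying each p-value by the number of tests, nine here, and capping at 1). The standard-library Python sketch below uses the tie-corrected normal approximation without continuity correction, so its p-values may differ slightly from those of `scipy.stats.mannwhitneyu` with default settings. It is an illustration, not the code used to produce Figure S5, and the example data are synthetic.

```python
import math
import random


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation with tie correction)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    u1 = sum(average_ranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0:  # all values identical: no evidence of a difference
        return u1, 1.0
    z = (u1 - mu) / math.sqrt(var)
    return u1, min(1.0, math.erfc(abs(z) / math.sqrt(2)))


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: p * m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Synthetic example for one property: a 1% subset vs. the other 99%.
rng = random.Random(42)
population = [rng.gauss(350.0, 60.0) for _ in range(1000)]
subset, others = population[:10], population[10:]
u, p = mann_whitney_u(subset, others)
print(u, p, bonferroni([p] * 9)[0])
```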

Fig. 5.

Projection of 48,557 TN and 25,941 FP instances predicted by our best model, DNN_SC, onto the 2D space defined by the first two principal components from a PCA performed on nine ligand-based physicochemical properties. The representative instances (1%) appear in cyan, while the remaining 99% are in magenta. It can be observed that the representatives are well-distributed across the space occupied by the other instances, including the edge regions. This confirms the representativeness of the chosen 1% inactive test subset in chemical space. Similar observations were made using t-SNE dimensionality reduction with Morgan fingerprints (2048 bits, radius 2) as descriptors (Figure S6, Supporting Information)

Like DNN_SC, HergSPred mislabeled all 259 selected FP instances as hERG blockers (all active probabilities ≥ 0.63, with 75.68% of them ≥ 0.90). Moreover, among the 485 TN instances, only 15 were correctly labeled as inactive by HergSPred (active probability threshold set at 0.50). In other words, on a non-blocker population whose size was comparable to those of existing test sets (n = 744), HergSPred predicted almost all molecules (97.98%) as hERG inhibitors. This finding is all the more striking given that over 60% of our chosen inactive subset was assigned active probabilities exceeding 0.90 by HergSPred. Its specificity on this non-blocker subset was thus only 0.020, far lower than that of DNN_SC (0.652). Note that HergSPred was trained with compounds whose IC50s exceeded 10 µM labeled as inactives [11]; it should therefore have been able to correctly classify the non-blockers in our data set, whose IC50s were higher than 20 µM. The above observations also suggest a high likelihood that HergSPred, despite being built on a much smaller data set than ours, was overtrained on active instances, a limitation much less severe in our classification models. A closer inspection of the structures of negative instances in our test set provided insights into why the evaluated classifiers made many FP predictions. Most structural and physicochemical features which, according to the HergSPred authors [11], tend to increase the hERG inhibitory activity of compounds were also present in many of the non-blockers that we assembled. They included halogen atoms (most commonly F and Cl), aromatic rings (98.79% of our test inactives contained at least one aromatic ring), and lipophilicity-increasing fragments (reflected by a logP higher than 0, which was the case for 99.36% of our test inactives). These findings echo a 2010 study by Doddareddy et al. [12], which showed that certain additional features or substructures (also present in our test non-blockers) can counteract common hERG blocker signatures and reduce hERG liability as “compensating (detoxifying) features.” They also highlight the considerable challenge that our data set, especially its test molecules, presents to any hERG inhibitor prediction tool.
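The specificity values quoted above follow directly from the subset counts. The Python sketch below reproduces them (a HergSPred specificity of 0.020, i.e., 97.98% of the subset called active) and also shows that DNN_SC's specificity on the 744-molecule subset matches its specificity on the full inactive test population, as expected from sampling 1% of each of the TN and FP groups.

```python
# Counts reported for the representative 1% inactive test subset.
N_TN_FULL, N_FP_FULL = 48_557, 25_941   # DNN_SC predictions on all test inactives
n_tn, n_fp = 485, 259                   # representatives drawn from each group
hergspred_true_negatives = 15           # all from the TN group; all 259 FP were called active

n_subset = n_tn + n_fp
hergspred_specificity = hergspred_true_negatives / n_subset
hergspred_called_active = 1 - hergspred_specificity
dnn_sc_specificity_subset = n_tn / n_subset
dnn_sc_specificity_full = N_TN_FULL / (N_TN_FULL + N_FP_FULL)

print(f"subset size: {n_subset}")
print(f"HergSPred specificity: {hergspred_specificity:.3f} "
      f"({100 * hergspred_called_active:.2f}% called active)")
print(f"DNN_SC specificity: {dnn_sc_specificity_subset:.3f} (subset), "
      f"{dnn_sc_specificity_full:.3f} (full inactive test set)")
```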

Conclusions

In this study, we assembled and curated a comprehensive hERG data set comprising 1937 blockers and 297,990 non-blockers from PubChem and ChEMBL, all of which had been experimentally validated by in vitro assays. A stratified split was performed on this data set, yielding a training set (75%) and a test set (25%) that proved challenging for VS methods and can be directly used to train and evaluate future hERG inhibition prediction tools. To the best of our knowledge, this is by far the largest and most complex properly curated hERG inhibition data set published for ML/AI research. Using our training set (n = 224,945), we trained a series of base and stacking binary classifiers employing RF, XGB, and DNN as learning algorithms, with hyperparameter tuning through fivefold CV. Due to the severely imbalanced class distribution in our training data (active-to-inactive ratio of 1/154), we also performed random oversampling of active (positive) instances and tuned the decision threshold along with the hyperparameters. Based on the results obtained from fivefold CV (on the training set) and on the external test set (n = 74,982), the stacking model DNN_SC achieved the best recall (0.931 and 0.864, respectively), recognizing 94.29% of test molecules with IC50s not exceeding 1 µM, including all hERG blockers with IC50s ≤ 0.1 µM. This classifier also exhibited the strongest screening power on the test data, compared with VS schemes involving existing SFs, the other models that we developed, and CardioTox net and AttenhERG, two recently introduced state-of-the-art hERG inhibitor prediction tools. This highlights the potential of properly designed stacked ensemble ML in both classification and screening tasks. 
Our model training strategy also helped avoid overtraining on active instances, an issue that causes a classifier to label nearly all molecules as hERG inhibitors (reflected in a very low specificity), as seen with HergSPred, another recent hERG blocker predictor. The source code of our best model (DNN_SC), henceforth referred to as “HERGAI,” along with relevant data, is available in our GitHub repository, allowing users to experiment with our code, reproduce our results, and make predictions on their own data, even at a large scale.

Methods

Data retrieval, data curation and stratified data split

Data retrieval and curation

Raw data tables containing experimental activity data points reported for hERG were downloaded from PubChem and ChEMBL, using the UniProt ID Q12809, according to the steps described in a recently published protocol [26]. These data tables contained the following information on each molecule tested in vitro on hERG: canonical SMILES string, ID(s), phenotype(s) and corresponding label(s) (active/inactive), the bioassay(s) that provided the reported readout(s) (assay ID(s) and assay type: primary or confirmatory), and activity concentration(s) (if available). Information on the bioassays performed was also retrieved. Here, we only considered data instances reported for hERG inhibitory activity. These raw data tables then underwent the following data curation steps:

- Step 1: Removal of ambiguous data points and duplicates:

All “active” readouts with no accompanying IC50 values or from primary assays without any confirmatory result were discarded. After this step, the remaining active-labeled instances consisted of data points with one or more IC50 values determined from at least one confirmatory screen. Duplicates were removed according to the practice described in a recent protocol [26].

- Step 2: Determination of a universal threshold to distinguish active from inactive ligands:

The activity threshold (in terms of IC50, in µM) to determine the label of each tested molecule was retrieved from each inhibitory assay performed on hERG. The most common threshold used across these assays was considered the universal threshold to distinguish between active and inactive ligands in this study.

- Step 3: Determination of an overall activity label and potency value for each molecule:

Based on the universal threshold determined in Step 2, each data instance with an accompanying IC50 value was reassigned a label (either active or inactive).

In case a molecule (an SID) had more than one activity readout, the following scenarios were considered:

- If the label of the molecule was unanimous across readouts: its label remained the same, and its overall pIC50 (−log10 of the molar IC50) was the average of all reported pIC50 values (the overall pIC50 was obligatory for active molecules, and optional for inactive ones).

- If there were contrasting labels for the same molecule across readouts, two sub-scenarios were considered:

  - If all readouts of the molecule had accompanying IC50 values: the average pIC50 across readouts and its standard deviation (SD) were calculated. If the average pIC50 was higher than the SD, it was considered the overall pIC50 of the molecule, based on which its overall label was determined (according to the universal threshold). Otherwise (average pIC50 ≤ SD), the molecule was discarded (variation too large to be considered reliable).

  - If at least one readout of the molecule did not have an accompanying IC50 value (which occurs when it was labeled “inactive” in one or more bioassays), the dominant label across readouts became its overall label. If it was “inactive,” the overall pIC50 was left blank. If it was “active,” the average of all reported IC50s was calculated and considered the overall IC50 of the molecule. If there was no dominant label, the molecule was discarded.

- Step 4: Removal of potential false positive (assay-interfering) instances:

Potential false positives, such as luciferase inhibitors, promiscuous aggregators and auto-fluorescent substances, were removed from our data set. The criteria used for this step are listed in the original LIT-PCBA article [24].

- Step 5: Removal of inorganic ligands and of inactive ligands whose physicochemical properties fell outside the commonly observed ranges for drug-like molecules:

Ligands containing at least one atom other than H, C, N, O, P, S, F, Cl, Br, and I were considered inorganic molecules and were, thus, discarded from our data set.

Inactive-labeled ligands whose physicochemical properties fell within the following ranges were retained: (i) molecular weight from 150 to 800 Da, (ii) logP (computed by Open Babel, v.3.1.1) from − 3.0 to 5.0, (iii) number of rotatable bonds lower than 15, (iv) hydrogen bond acceptor count lower than 10, (v) hydrogen bond donor count lower than 10, and (vi) total formal charge from − 2.0 to + 2.0. These ranges were used as filtering layers to create the LIT-PCBA data collection [24]. All inactive ligands that failed to meet at least one of these criteria were discarded. Note that these physicochemical property filters were applied only to the inactive ligands. Inactives retrieved from PubChem and ChEMBL may include compounds with physicochemical properties markedly different from those of actives (e.g., low molecular weight fragments such as simple alcohols or aldehydes). Including such inactives, particularly in the test set, would lead to an overestimation of screening performance, as they are trivially distinguishable from more complex, drug-like actives. Excluding them helps reduce the physicochemical disparity between the active and inactive populations, thereby creating a more realistic and challenging benchmark for evaluating screening methods.
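Steps 2 and 3 can be summarized as a small decision procedure. The Python sketch below is an illustrative reading of the rules above, not the curation code used in this study. Its assumptions (all hypothetical) are: the readouts of one molecule are given as IC50 values in µM, with `None` standing for an “inactive” readout reported without an IC50 (after Step 1, every active readout carries an IC50); a readout is active when its IC50 does not exceed the universal threshold; the SD is the sample standard deviation; and pIC50 is −log10 of the molar IC50.

```python
import math
import statistics
from collections import Counter


def universal_threshold(assay_thresholds_um):
    """Step 2: the most common assay-level IC50 threshold (in µM)."""
    return Counter(assay_thresholds_um).most_common(1)[0][0]


def pic50(ic50_um):
    """pIC50 = -log10(IC50 in mol/L), so 1 µM gives 6."""
    return -math.log10(ic50_um * 1e-6)


def consensus(readouts_um, threshold_um):
    """Step 3 sketch for one molecule.

    readouts_um: IC50 values in µM; None marks an 'inactive' readout without IC50.
    Returns (label, overall pIC50 or None), or None if the molecule is discarded.
    """
    labels = ["inactive" if v is None or v > threshold_um else "active" for v in readouts_um]
    pic50s = [pic50(v) for v in readouts_um if v is not None]

    # Unanimous label: keep it; overall pIC50 = mean of the reported pIC50s (if any).
    if len(set(labels)) == 1:
        return labels[0], statistics.mean(pic50s) if pic50s else None

    # Contrasting labels, every readout has an IC50: use the mean pIC50 if it exceeds the SD.
    if len(pic50s) == len(readouts_um):
        avg, sd = statistics.mean(pic50s), statistics.stdev(pic50s)
        if avg <= sd:
            return None  # variation too large to be reliable
        return ("active" if avg >= pic50(threshold_um) else "inactive"), avg

    # Contrasting labels, some readouts lack an IC50: majority vote.
    (top, n_top), *rest = Counter(labels).most_common()
    if rest and rest[0][1] == n_top:
        return None  # no dominant label
    if top == "inactive":
        return "inactive", None
    # Dominant 'active': average the reported IC50s, as stated in the text, then convert.
    return "active", pic50(statistics.mean(v for v in readouts_um if v is not None))


threshold = universal_threshold([10, 20, 20, 30, 20])
print(threshold, consensus([1.0, 100.0], threshold), consensus([None, None, 5.0], threshold))
```

Note that the “average pIC50 > SD” test is reproduced exactly as described; it only rejects molecules whose readouts disagree by several orders of magnitude.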

Molecular docking

Molecular docking of all remaining active (n = 1937) and inactive (n = 297,990) molecules was carried out with Smina (v.2019-10-15) [27], using the PDB template structure 7CN1. This is a cryo-electron microscopy structure of the potassium-bound hERG channel in which the binding site of astemizole, a well-known hERG inhibitor, is well defined [40]. The search space for Smina was defined as a grid box centered at the coordinates x = 141.438, y = 141.442, z = 174.151, with a volume of 8000 Å³ (20 Å × 20 Å × 20 Å). This search space covered the key amino acid residues necessary for the binding of hERG channel blockers, including Y652 (chains A, B, C, D), S624 (chains C, D), T623, V625, F656 (chain C), G648 and S649 (chain D), and corresponded to the binding site of astemizole [40]. The protein structure was prepared using the ‘DockPrep’ tool of Chimera (v.2.3.1) [41], with parameters set as recommended by dos Santos et al. [42]. The ligands in our data set, on the other hand, were prepared from their SMILES strings using Open Babel (v.3.1.1) [39]: 3D conformations were generated with the MMFF94 force field, hydrogen atoms were added explicitly, and protonation states were assigned at physiological pH (7.4). All input and output files of the docking command were in mol2 format (a maximum of 10 docking poses were retained for each successfully docked ligand).
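For readers who wish to set up a comparable run, the Python sketch below assembles Open Babel and Smina command lines matching the parameters described above (grid box center, 20 Å edges, up to 10 poses, mol2 files). It only builds argument lists; each list can be executed with `subprocess.run(cmd, check=True)` on a machine where `obabel` and `smina` are installed. The file names and the placeholder SMILES are hypothetical, the Open Babel flags (`--gen3d`, `-p 7.4`) are our assumption of an equivalent ligand preparation rather than the exact command used in this study, and options not mentioned in the text (e.g., exhaustiveness, seed) are left at Smina's defaults.

```python
import shlex

# Grid box reported for the astemizole site of PDB 7CN1 (coordinates and edges in Å).
BOX_CENTER = (141.438, 141.442, 174.151)
BOX_EDGES = (20.0, 20.0, 20.0)


def obabel_prep_command(smiles: str, out_mol2: str) -> list:
    """SMILES -> 3D mol2 with hydrogens added for pH 7.4 (assumed equivalent flags)."""
    return ["obabel", f"-:{smiles}", "--gen3d", "-p", "7.4", "-O", out_mol2]


def smina_dock_command(receptor: str, ligand: str, out_mol2: str, num_modes: int = 10) -> list:
    """Smina docking restricted to the grid box, keeping up to `num_modes` poses."""
    cmd = ["smina", "-r", receptor, "-l", ligand, "-o", out_mol2, "--num_modes", str(num_modes)]
    for axis, center, edge in zip("xyz", BOX_CENTER, BOX_EDGES):
        cmd += [f"--center_{axis}", str(center), f"--size_{axis}", str(edge)]
    return cmd


if __name__ == "__main__":
    # Hypothetical file names and a placeholder SMILES; print instead of executing.
    prep = obabel_prep_command("c1ccccc1", "ligand_0001.mol2")
    dock = smina_dock_command("7CN1_prepared.mol2", "ligand_0001.mol2", "ligand_0001_docked.mol2")
    print(shlex.join(prep))
    print(shlex.join(dock))
```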

Stratified data split

BM scaffolds were extracted from the SMILES strings of all remaining active and inactive molecules, using the ‘MurckoScaffoldSmiles’ function from the ‘MurckoScaffold’ module of ‘rdkit’ (v.2023.03.3) [43]. The BM method removes the substituents/side chains from the structure of an input molecule and keeps only its main core, which normally consists of ring systems and linkers. It is worth noting that molecular chirality was ignored during the BM scaffold extraction process (‘includeChirality = False’, default option).

After the training and test sets were determined based on the BM scaffolds of all remaining ligands, we wanted to verify that analog bias between our two data partitions was minimal. For this, Morgan fingerprints (2048 bits, radius 2) were first extracted from the SMILES strings of all training and test set ligands, using the ‘GetMorganFingerprintAsBitVect’ function from the ‘AllChem’ module of ‘rdkit’ (v.2018.03.4) [43]. The Tanimoto similarity, in terms of Morgan fingerprints, of every training active–test active pair and every training inactive–test inactive pair was computed using the ‘BulkTanimotoSimilarity’ function from rdkit’s ‘DataStructs’ module [43]. The Python code to perform these tasks is provided with a recent protocol [26].
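The Tanimoto coefficient between two binary fingerprints A and B is |A ∩ B| / |A ∪ B|, the number of bits set in both divided by the number of bits set in either. The NumPy sketch below computes a full training-versus-test similarity matrix for fingerprints stored as 0/1 arrays. It is meant to show the quantity that rdkit’s ‘BulkTanimotoSimilarity’ returns row by row, not to replace it; the random fingerprints are hypothetical, and assigning a similarity of 0 to a pair of all-zero fingerprints is a convention chosen for this sketch.

```python
import numpy as np


def pairwise_tanimoto(fps_a, fps_b):
    """Tanimoto similarity between every row of fps_a and every row of fps_b (0/1 arrays)."""
    a = np.asarray(fps_a, dtype=np.int64)
    b = np.asarray(fps_b, dtype=np.int64)
    common = a @ b.T  # number of bits set in both fingerprints
    union = a.sum(axis=1)[:, None] + b.sum(axis=1)[None, :] - common
    # Avoid 0/0 for two empty fingerprints; such pairs are left at 0.
    return np.divide(common, union, out=np.zeros(common.shape), where=union > 0)


# Hypothetical 2048-bit fingerprints for 3 training and 2 test molecules.
rng = np.random.default_rng(42)
train_fps = rng.random((3, 2048)) < 0.05
test_fps = rng.random((2, 2048)) < 0.05
sim = pairwise_tanimoto(train_fps, test_fps)
print(sim.shape, sim.max(axis=0))  # highest similarity of each test molecule to the training set
```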

Choosing a pose selection method

Three SFs were evaluated as pose selection tools in this study: Smina [27], RF-Score-VS (v.2) [28], and ClassyPose [29]. They were employed to score all docking poses of our test set ligands, after which only the best-scored pose was retained per ligand for each SF.

First, the docking pose with the most negative docking score for each ligand was retained as “selected by Smina.” Then, after all docking poses were rescored by RF-Score-VS, pose selection was carried out again: the pose having the highest score issued by this SF for each docked ligand was kept as “selected by RF-Score-VS.” The steps required to accomplish these tasks were detailed in a recently published protocol [26].

For ClassyPose, PLEC fingerprints of all test set docking poses were extracted using the ‘PLEC’ function from the Open Drug Discovery Toolkit (ODDT, v.0.7) [44]. The parameters were set according to recommendations in the original PLEC paper [30] and a recently published protocol [26]. The Python code used for PLEC fingerprint extraction is provided in our GitHub repository (see “Data availability”). For each ligand, the pose receiving the highest “good pose probability” score was retained as “selected by ClassyPose.”
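The three pose selection rules differ only in the direction of the comparison: the most negative Smina score, the highest RF-Score-VS score, and the highest ClassyPose “good pose probability.” A minimal Python sketch, assuming that per-ligand lists of pose scores have already been parsed from the docking and rescoring outputs (the data layout and ligand names are hypothetical):

```python
from typing import Dict, List

# For each scoring function: is a higher score better?
HIGHER_IS_BETTER = {"Smina": False, "RF-Score-VS": True, "ClassyPose": True}


def select_poses(scores: Dict[str, List[float]], sf: str) -> Dict[str, int]:
    """Return, for each ligand, the 0-based index of the pose retained by scoring function `sf`."""
    pick = max if HIGHER_IS_BETTER[sf] else min
    return {ligand: pick(range(len(s)), key=s.__getitem__) for ligand, s in scores.items()}


smina_scores = {"lig_1": [-9.1, -10.2, -8.7], "lig_2": [-7.5, -7.9]}
classypose_probs = {"lig_1": [0.81, 0.12, 0.64], "lig_2": [0.33, 0.29]}
print(select_poses(smina_scores, "Smina"))           # most negative docking score
print(select_poses(classypose_probs, "ClassyPose"))  # highest good-pose probability
```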

Since ClassyPose was later chosen as the pose selection method for subsequent phases of the study (see “Results and Discussion”), PLEC fingerprints of all training set docking poses were extracted as well, using the same Python code for extracting those of the test set. For each ligand, only one pose having the highest “good pose probability” score determined by ClassyPose was kept, and had its PLEC fingerprint retained.

Developing AI-based binary classifiers for predicting hERG inhibitors

PLEC fingerprints extracted from ClassyPose-chosen training set docking poses were provided as input for three ML algorithms (RF, XGB, DNN) used to train our base binary classification models (RF_BC, XGB_BC, and DNN_BC). The ‘RandomForestClassifier’ class from ‘sklearn’ (v.1.0.2) and the ‘XGBClassifier’ class from ‘xgboost’ (v.1.5.1) were used to train our RF and XGB models, respectively [45, 46]. For DNN classifiers, the ‘keras’ API of ‘tensorflow’ (v.2.11.0) was employed [47]. For each ML algorithm, hyperparameter tuning was carried out using ‘hyperopt’ (v.0.2.7) [48]: fivefold CV was performed to select the combination of hyperparameters giving the highest average balanced accuracy (10 evaluations). During CV, the original training set was divided into training and validation folds using the ‘StratifiedKFold’ class from ‘sklearn’ (v.1.0.2), with ‘n_splits’ set at 5 [45], which preserved the active-to-inactive ratio across folds. Oversampling of active molecules (the minority class) was performed solely on each training fold, using the ‘RandomOverSampler’ class from ‘imbalanced-learn’ (v.0.8.1) [49], so that no data leaked into the corresponding validation fold; the number of actives was increased 100-fold in each training fold. We also tuned the decision threshold of each binary classifier during CV, alongside the hyperparameters. All random seeds were set at 42 (where possible) to ensure reproducibility. Finally, each binary classifier was trained with its algorithm’s optimal hyperparameters on the entire training set (oversampled in the same way as the training folds) and applied to the external test set (PLEC features from ClassyPose-selected docking poses only). The recall, specificity, balanced accuracy and G-mean obtained with the optimal hyperparameters across the five validation folds and on the test set were computed using ‘sklearn’ (v.1.0.2) [45]. 
The ROC-AUC, the EF0.1% and the EF1% were computed using our in-house Python code. The stacking models (RF_SC, XGB_SC, and DNN_SC) were trained using the scores adjusted from the active probabilities that the three base models predicted for training set ligands (validation fold predictions after model training on the corresponding training fold, using model-specific optimal hyperparameters), and were evaluated in the same manner as the base classifiers, as described in the “Results and Discussion” section. All relevant code is provided in our GitHub repository (see “Data availability”). Figure 6 provides a comprehensive diagram summarizing all input, output, and key components associated with our best-performing model (DNN_SC), which has been renamed “HERGAI.”
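The key safeguard in the CV procedure described above is that oversampling happens only after the training/validation split, so duplicated actives never appear in a validation fold. The NumPy sketch below illustrates this ordering, together with a simple decision threshold search that maximizes balanced accuracy on validation predictions. It is a simplified stand-in for the ‘StratifiedKFold’/‘RandomOverSampler’/‘hyperopt’ pipeline: the round-robin fold assignment, sampling with replacement and grid search over thresholds are our own illustrative choices (in the study, the threshold was tuned jointly with the hyperparameters), and model fitting is omitted.

```python
import numpy as np


def stratified_folds(y, n_splits=5, seed=42):
    """Fold index per sample; each class is dealt round-robin so folds keep the class ratio."""
    rng = np.random.default_rng(seed)
    folds = np.empty(len(y), dtype=int)
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))
        folds[idx] = np.arange(idx.size) % n_splits
    return folds


def oversample_actives(train_idx, y, factor=100, seed=42):
    """Add random copies of active training indices until actives are `factor` times more numerous."""
    rng = np.random.default_rng(seed)
    actives = train_idx[y[train_idx] == 1]
    extra = rng.choice(actives, size=actives.size * (factor - 1), replace=True)
    return np.concatenate([train_idx, extra])


def balanced_accuracy(y, proba, threshold):
    """Mean of recall and specificity when predicting 'active' for proba >= threshold."""
    pred = proba >= threshold
    return (pred[y == 1].mean() + (~pred[y == 0]).mean()) / 2


def tune_threshold(y, proba, grid=None):
    """Threshold from `grid` giving the highest balanced accuracy (first one on ties)."""
    grid = np.linspace(0.05, 0.95, 19) if grid is None else np.asarray(grid)
    scores = [balanced_accuracy(y, proba, t) for t in grid]
    return float(grid[int(np.argmax(scores))])


# Toy labels with a 1:154 active-to-inactive ratio.
y = np.array([1] * 10 + [0] * 1540)
folds = stratified_folds(y)
for k in range(5):
    val_idx = np.flatnonzero(folds == k)
    train_idx = np.flatnonzero(folds != k)
    train_os = oversample_actives(train_idx, y)
    # No training row (original or duplicated) belongs to the validation fold.
    assert not np.isin(train_os, val_idx).any()
    # A model would be fitted on train_os here, then tune_threshold() applied to val_idx predictions.
```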

Fig. 6.

Architecture of HERGAI, a DNN model trained on the output of three base models: RF, XGB, and DNN, each built using PLEC fingerprints derived from docking poses of hERG-bound molecules. The diagram summarizes key input, output, and model components
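The stacking scheme summarized in Fig. 6 relies on out-of-fold predictions: each base model is trained on the training folds and predicts only the held-out fold, so the meta-learner never sees a probability produced by a model that was fitted on the same molecule. The NumPy sketch below illustrates this idea with a hypothetical toy base learner (`CentroidModel`) standing in for the RF, XGB and DNN base models. It uses raw active probabilities as meta-features, whereas HERGAI uses scores adjusted from them, and the DNN meta-learner itself is omitted. Note also that, unlike scikit-learn classifiers, whose `predict_proba` returns one column per class, the toy model returns the active-class probability directly.

```python
import numpy as np


class CentroidModel:
    """Hypothetical toy base learner: probability from distances to the two class centroids."""

    def fit(self, X, y):
        self.c_act = X[y == 1].mean(axis=0)
        self.c_inact = X[y == 0].mean(axis=0)
        return self

    def predict_proba(self, X):
        d_act = np.linalg.norm(X - self.c_act, axis=1)
        d_inact = np.linalg.norm(X - self.c_inact, axis=1)
        return 1.0 / (1.0 + np.exp(d_act - d_inact))  # closer to actives -> higher probability


def out_of_fold_meta_features(X, y, model_factories, folds):
    """Column j = validation-fold active probabilities of base model j for every training sample."""
    meta = np.zeros((len(y), len(model_factories)))
    for k in np.unique(folds):
        train, val = folds != k, folds == k
        for j, make_model in enumerate(model_factories):
            model = make_model().fit(X[train], y[train])
            meta[val, j] = model.predict_proba(X[val])
    return meta


rng = np.random.default_rng(42)
X = np.vstack([rng.normal(2.0, 1.0, (20, 4)), rng.normal(-2.0, 1.0, (20, 4))])
y = np.array([1] * 20 + [0] * 20)
folds = np.arange(len(y)) % 5
meta = out_of_fold_meta_features(X, y, [CentroidModel, CentroidModel], folds)
print(meta.shape)  # one row per training molecule, one column per base model
```

The meta-learner is then trained on `meta`; at prediction time, the base models are refitted on the whole training set and their test set probabilities form the meta-features.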

The classification and screening performance of two state-of-the-art hERG blocker prediction tools, CardioTox net [17] and AttenhERG [21], was evaluated on our full test set using their public GitHub repositories (https://github.com/Abdulk084/CardioTox and https://github.com/Tianbiao-Yang/AttenhERG). Both tools output a probability score for each molecule, with a decision threshold of 0.5 used to assign binary class labels. These probabilities were used to calculate classification metrics (recall, specificity, balanced accuracy, and G-mean), and were also ranked to compute screening metrics (ROC-AUC, EF0.1%, EF1%). In cases where a tool failed to return a prediction for a molecule (each tool missed one molecule, and not the same one), we assigned a probability of 0 and the label “Inactive” for evaluation purposes. All predictions from CardioTox net and AttenhERG on our test set are provided in a CSV file in our GitHub repository.
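Once predicted probabilities are available, the screening metrics can be computed from the ranked list. The NumPy sketch below shows one common formulation, not our in-house code: ROC-AUC through the rank-sum (Mann–Whitney) identity, with tied scores sharing their average rank, and the enrichment factor at a fraction x as the hit rate among the top-ranked max(1, round(x·N)) molecules divided by the overall active rate. Conventions for the size of the top fraction and for ties vary between implementations. Missing predictions (NaN) are replaced by a probability of 0, as described above; the example data are hypothetical.

```python
import numpy as np


def average_ranks(scores):
    """1-based ranks of `scores` (ascending); tied scores share the mean of their ranks."""
    order = np.argsort(scores, kind="mergesort")
    bounds = np.flatnonzero(np.diff(scores[order])) + 1
    starts = np.concatenate(([0], bounds))
    ends = np.concatenate((bounds, [scores.size]))
    ranks = np.empty(scores.size)
    for s, e in zip(starts, ends):
        ranks[order[s:e]] = (s + 1 + e) / 2
    return ranks


def roc_auc(y, scores):
    """Probability that a random active is scored above a random inactive (ties count 1/2)."""
    y = np.asarray(y)
    scores = np.asarray(scores, dtype=float)
    n_pos, n_neg = int((y == 1).sum()), int((y == 0).sum())
    rank_sum = average_ranks(scores)[y == 1].sum()
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def enrichment_factor(y, scores, fraction):
    """Hit rate in the top `fraction` of the ranked list divided by the overall hit rate."""
    y = np.asarray(y)
    scores = np.asarray(scores, dtype=float)
    n_top = max(1, int(round(fraction * y.size)))
    top = np.argsort(-scores, kind="mergesort")[:n_top]
    return y[top].mean() / y.mean()


# Hypothetical predictions; NaN marks a molecule the tool failed to score.
y_true = np.array([1, 0, 1, 0, 0, 0, 0, 0])
proba = np.nan_to_num(np.array([0.9, 0.8, np.nan, 0.3, 0.2, 0.1, 0.05, 0.6]), nan=0.0)
print(roc_auc(y_true, proba), enrichment_factor(y_true, proba, 0.25))
```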

Comparing DNN_SC to HergSPred, a state-of-the-art hERG inhibitor prediction tool

K-means clustering on the TN and FP populations

K-means clustering was performed separately on the TN and FP populations (n = 48,557 and 25,941, respectively, according to the prediction results of our best model, DNN_SC). The ‘kmeans’ function in R (v.4.3.3) was employed, using as input the nine physicochemical properties computed by Open Babel (v.3.1.1) [39] (see “Results and Discussion”) for all TN/FP molecules, after scaling with the ‘scale’ R function. The ‘nstart’ parameter, i.e., the number of random initializations performed by the algorithm, was set at 25; among these, the clustering with the lowest total within-cluster sum of squares (‘tot.withinss’) was retained. The ‘centers’ parameter was set at 485 and 259 for the TN and FP populations, respectively, yielding 485 TN clusters and 259 FP clusters. As one member was then randomly selected from each cluster, this procedure produced a representative subset corresponding to 1% of our inactive test population (n = 744). All other parameters were kept at their default values. Relevant data are provided in our GitHub repository (see “Data availability”).
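The representative-subset procedure (scale the nine properties, run k-means with k equal to 1% of the group size, keep one random member per cluster) can be sketched in Python as follows. This is not our R code: R’s ‘kmeans’ uses the Hartigan–Wong algorithm by default, whereas this NumPy sketch uses plain Lloyd iterations with ‘nstart’-style random restarts, keeping the run with the lowest total within-cluster sum of squares. The cluster counts follow from rounding 1% of each group down: 1% of 48,557 and of 25,941 gives 485 and 259 clusters, i.e., 744 representatives. The toy data are hypothetical.

```python
import numpy as np


def scale(X):
    """Column-wise z-scores, like R's scale() (sample standard deviation)."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)


def sq_dist(X, C):
    """Squared Euclidean distances between the rows of X and the rows of C."""
    return (X ** 2).sum(axis=1)[:, None] - 2 * X @ C.T + (C ** 2).sum(axis=1)[None, :]


def kmeans(X, k, nstart=25, max_iter=100, seed=42):
    """Lloyd's k-means with `nstart` random starts; keeps the lowest total within-cluster SS."""
    rng = np.random.default_rng(seed)
    best_labels, best_tot = None, np.inf
    for _ in range(nstart):
        centers = X[rng.choice(len(X), size=k, replace=False)]
        for _ in range(max_iter):
            labels = sq_dist(X, centers).argmin(axis=1)
            new_centers = np.array([
                X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                for j in range(k)
            ])
            converged = np.allclose(new_centers, centers)
            centers = new_centers
            if converged:
                break
        labels = sq_dist(X, centers).argmin(axis=1)
        tot = ((X - centers[labels]) ** 2).sum()
        if tot < best_tot:
            best_labels, best_tot = labels, tot
    return best_labels, best_tot


def one_per_cluster(labels, seed=42):
    """Randomly pick the index of one member from each non-empty cluster."""
    rng = np.random.default_rng(seed)
    return np.array([rng.choice(np.flatnonzero(labels == j)) for j in np.unique(labels)])


def n_clusters_for_one_percent(n):
    """Number of clusters (= representatives) for a 1% subset, rounded down."""
    return int(n * 0.01)


# Toy data: two well-separated groups of 10 molecules described by 9 properties.
rng = np.random.default_rng(0)
props = np.vstack([rng.normal(0.0, 0.1, (10, 9)), rng.normal(5.0, 0.1, (10, 9))])
labels, tot = kmeans(scale(props), k=2)
reps = one_per_cluster(labels)
print(labels, reps)
```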

PCA on the representative and remaining TN/FP instances

To verify whether the chosen 1% TN and FP subsets adequately represented the chemical space occupied by all TN/FP instances, a PCA was performed using the ‘PCA’ function from the R ‘FactoMineR’ package (v.2.11) [50], with the same scaled properties (previously used for k-means clustering) as input. All TN/FP instances (the representative 1% and the remaining 99%) were projected onto the 2D space defined by the first two principal components and colored accordingly, using the ‘plot’ and ‘points’ R functions.
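Conceptually, the PCA step centers the scaled property matrix, extracts its principal axes and projects every molecule onto the first two. The NumPy sketch below does this with a singular value decomposition; it mirrors the idea rather than FactoMineR’s exact conventions (for instance, the signs of the axes are arbitrary), and plotting is left out. The random input matrix and the `is_representative` mask are hypothetical.

```python
import numpy as np


def pca_project(X, n_components=2):
    """Return PC scores (n x n_components) and the fraction of variance each PC explains."""
    Xc = np.asarray(X, dtype=float)
    Xc = Xc - Xc.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    scores = Xc @ vt[:n_components].T
    explained = s ** 2 / (s ** 2).sum()
    return scores, explained[:n_components]


rng = np.random.default_rng(42)
X_scaled = rng.normal(size=(1000, 9))  # stand-in for the nine scaled properties
is_representative = np.zeros(1000, dtype=bool)
is_representative[rng.choice(1000, size=10, replace=False)] = True  # hypothetical 1% subset
scores, explained = pca_project(X_scaled)
rep_xy, rest_xy = scores[is_representative], scores[~is_representative]
print(explained)
```

The two point sets `rep_xy` and `rest_xy` would then be drawn in different colors, as in Fig. 5.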

Running HergSPred predictions on the TN and FP representatives

The HergSPred website (http://www.icdrug.com/ICDrug/T, accessed in January 2025) takes as input the SMILES string of each molecule whose hERG inhibitory activity is to be predicted. To avoid overloading the server, we submitted at most 50 SMILES strings at a time. An active probability (“probability of Yes”) for hERG inhibition was assigned to each molecule in the “Toxicity” section of the output. All results are provided in our GitHub repository (see “Data availability”).
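Splitting the 744 representative SMILES strings into submissions of at most 50 is simple chunking: 744 molecules give 15 batches, the last one containing 44 SMILES. A minimal Python helper is shown below; it is hypothetical and only prepares the batches, without interacting with the HergSPred website.

```python
from typing import Iterator, List, Sequence


def batches(items: Sequence[str], size: int = 50) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


smiles = [f"SMILES_{i}" for i in range(744)]  # placeholders for the 744 representatives
chunks = list(batches(smiles))
print(len(chunks), len(chunks[-1]))
```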

Supplementary Information

Acknowledgements

We would like to thank the Erasmus Mundus Joint Master’s Program “ChEMoinformatics+” for a two-year scholarship awarded to U.F.R. We also express our gratitude to Université Paris Cité for supporting this study.

Author contributions

V-K.T-N. and O.T. established the research direction and supervised the work. U.F.R. was responsible for data retrieval and data curation. V-K.T-N. led the experimental design and data modeling, was responsible for interpreting the results and preparing the initial version of the manuscript. All authors reviewed, revised and approved the final manuscript.

Funding

Not applicable.

Data availability

All relevant source code, data sets and results reported in this article are available free of charge in our GitHub repository at https://github.com/vktrannguyen/HERGAI. Please read the README file for more information.

Declarations

Ethics approval and consent to participate

Not applicable.

Competing interests

A conflict of interest is declared concerning the authors of the following article, as our study demonstrated that our model outperformed theirs and highlighted limitations in their model’s performance: Zhang X, Mao J, Wei M, et al. (2022) HergSPred: Accurate Classification of hERG Blockers/Nonblockers with Machine-Learning Models. J Chem Inf Model 62:1830–1839. 10.1021/acs.jcim.2c00256.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Viet-Khoa Tran-Nguyen and Ulrick Fineddie Randriharimanamizara have contributed equally.

Contributor Information

Viet-Khoa Tran-Nguyen, Email: viet-khoa.tran-nguyen@u-paris.fr.

Olivier Taboureau, Email: olivier.taboureau@u-paris.fr.

References

- 1.Sanguinetti MC, Tristani-Firouzi M (2006) hERG potassium channels and cardiac arrhythmia. Nature 440:463–469 [DOI] [PubMed] [Google Scholar]

- 2.Vandenberg JI, Perry MD, Perrin MJ et al (2012) hERG K⁺ channels: structure, function, and clinical significance. Physiol Rev 92:1393–1478 [DOI] [PubMed] [Google Scholar]

- 3.Redfern WS, Carlsson L, Davis AS et al (2003) Relationships between preclinical cardiac electrophysiology, clinical QT interval prolongation, and torsade de pointes. J Cardiovasc Electrophysiol 14:S156–S162 [DOI] [PubMed] [Google Scholar]

- 4.Rodriguez B, Eason JC, Rudy Y (2014) Pharmacologic ion channel blockade: strengths and limitations of in silico prediction to assess QT prolongation. J Mol Cell Cardiol 72:89–99 [Google Scholar]

- 5.Gintant G, Sager PT, Stockbridge N (2016) Evolution of strategies to improve preclinical cardiac safety testing. Nat Rev Drug Discov 15:457–471 [DOI] [PubMed] [Google Scholar]

- 6.Li Q, Jørgensen FS, Oprea T et al (2008) HERG classification model based on a combination of support vector machine method and GRIND descriptors. Mol Pharm 5:117–127 [DOI] [PubMed] [Google Scholar]

- 7.Villoutreix BO, Taboureau O (2015) Computational investigations of hERG channel blockers: new insights and current predictive models. Adv Drug Deliv Rev 86:72–82 [DOI] [PubMed] [Google Scholar]

- 8.Jing Y, Easter A, Peters D et al (2015) In silico prediction of hERG inhibition. Future Med Chem 7:571–586 [DOI] [PubMed] [Google Scholar]

- 9.Braga RC, Alves VM, Silva MFB et al (2015) Pred-hERG: a novel web-accessible computational tool for predicting cardiac toxicity. Mol Inform 34:698–701 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Creanza TM, Delre P, Ancona N et al (2021) Structure-based prediction of hERG-related cardiotoxicity: a benchmark study. J Chem Inf Model 61:4758–4770 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Zhang X, Mao J, Wei M et al (2022) HergSPred: accurate classification of hERG blockers/nonblockers with machine-learning models. J Chem Inf Model 62:1830–1839 [DOI] [PubMed] [Google Scholar]

- 12.Doddareddy MR, Klaasse EC, Shagufta et al (2010) Prospective validation of a comprehensive in silico hERG Model and its applications to commercial compound and drug databases. ChemMedChem 5:716–729 [DOI] [PubMed] [Google Scholar]

- 13.Zhang C, Zhou Y, Gu S et al (2016) In silico prediction of hERG potassium channel blockage by chemical category approaches. Toxicol Res 5:570–582 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Li X, Zhang Y, Li H, Zhao Y (2017) Modeling of the hERG K+ channel blockage using online chemical database and modeling environment (OCHEM). Mol Inform 36:1700074 [DOI] [PubMed] [Google Scholar]

- 15.Cai C, Guo P, Zhou Y et al (2019) Deep learning-based prediction of drug-induced cardiotoxicity. J Chem Inf Model 59:1073–1084 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Siramshetty VB, Nguyen D-T, Martinez NJ et al (2020) Critical Assessment of Artificial Intelligence Methods for Prediction of hERG Channel Inhibition in the “Big Data” Era. J Chem Inf Model 60:6007–6019 [DOI] [PubMed] [Google Scholar]

- 17.Karim A, Lee M, Balle T et al (2021) CardioTox net: a robust predictor for hERG channel blockade based on deep learning meta-feature ensembles. J Cheminform 13:60 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Shan M, Jiang C, Chen J et al (2022) Predicting hERG channel blockers with directed message passing neural networks. RSC Adv 12:3423–3430 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Chen Y, Yu X, Li W et al (2023) In silico prediction of hERG blockers using machine learning and deep learning approaches. J Appl Toxicol 43:1462–1475 [DOI] [PubMed] [Google Scholar]

- 20.Ylipää E, Chavan S, Bånkestad M et al (2023) hERG-toxicity prediction using traditional machine learning and advanced deep learning techniques. Curr Res Toxicol 5:100121 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Yang T, Ding X, McMichael E et al (2024) AttenhERG: a reliable and interpretable graph neural network framework for predicting hERG channel blockers. J Cheminform 16:143 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Lee D, Yoo S (2025) hERGAT: predicting hERG blockers using graph attention mechanism through atom- and molecule-level interaction analyses. J Cheminform 17:11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Falcón-Cano G, Morales-Helguera A, Lambert H et al (2025) hERG toxicity prediction in early drug discovery using extreme gradient boosting and isometric stratified ensemble mapping. Sci Rep 15:15585 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Tran-Nguyen V-K, Jacquemard C, Rognan D (2020) LIT-PCBA: an unbiased data set for machine learning and virtual screening. J Chem Inf Model 60:4263–4273

- 25. Tran-Nguyen V-K, Rognan D (2020) Benchmarking data sets from PubChem BioAssay data: current scenario and room for improvement. Int J Mol Sci 21:4380

- 26. Tran-Nguyen V-K, Junaid M, Simeon S et al (2023) A practical guide to machine-learning scoring for structure-based virtual screening. Nat Protoc 18:3460–3511

- 27. Koes DR, Baumgartner MP, Camacho CJ (2013) Lessons learned in empirical scoring with Smina from the CSAR 2011 benchmarking exercise. J Chem Inf Model 53:1893–1904

- 28. Wójcikowski M, Ballester PJ, Siedlecki P (2017) Performance of machine-learning scoring functions in structure-based virtual screening. Sci Rep 7:46710

- 29. Tran-Nguyen V-K, Camproux AC, Taboureau O (2024) ClassyPose: a machine-learning classification model for ligand pose selection applied to virtual screening in drug discovery. Adv Intell Syst 6:2400238

- 30. Wójcikowski M, Kukiełka M, Stepniewska-Dziubinska MM et al (2019) Development of a protein–ligand extended connectivity (PLEC) fingerprint and its application for binding affinity predictions. Bioinformatics 35:1334–1341

- 31. Trinh T-C, Falson P, Tran-Nguyen V-K, Boumendjel A (2025) Ligand-based drug discovery leveraging state-of-the-art machine learning methodologies exemplified by Cdr1 inhibitor prediction. J Chem Inf Model 65:4027–4042

- 32. Bemis GW, Murcko MA (1996) The properties of known drugs. 1. Molecular frameworks. J Med Chem 39:2887–2893

- 33. Thölke P, Mantilla-Ramos Y-J, Abdelhedi H et al (2023) Class imbalance should not throw you off balance: choosing the right classifiers and performance metrics for brain decoding with imbalanced data. Neuroimage 277:120253

- 34. Foody GM (2023) Challenges in the real world use of classification accuracy metrics: from recall and precision to the Matthews correlation coefficient. PLoS ONE 18:e0291908

- 35. Tran-Nguyen V-K, Simeon S, Junaid M et al (2022) Structure-based virtual screening for PDL1 dimerizers: evaluating generic scoring functions. Curr Res Struct Biol 4:206–210

- 36. Caba K, Tran-Nguyen V-K, Rahman T et al (2024) Comprehensive machine learning boosts structure-based virtual screening for PARP1 inhibitors. J Cheminform 16:40

- 37. Gómez-Sacristán P, Simeon S, Tran-Nguyen V-K et al (2025) Inactive-enriched machine-learning models exploiting patent data improve structure-based virtual screening for PDL1 dimerizers. J Adv Res 67:185–196

- 38. Kramer J, Obejero-Paz CA, Myatt G et al (2013) MICE models: superior to the HERG model in predicting torsade de pointes. Sci Rep 3:2100

- 39. O'Boyle NM, Banck M, James CA et al (2011) Open Babel: an open chemical toolbox. J Cheminform 3:33

- 40. Asai T, Adachi N, Moriya T et al (2021) Cryo-EM structure of K+-bound hERG channel complexed with the blocker astemizole. Structure 29:203–212.e4

- 41. Pettersen EF, Goddard TD, Huang CC et al (2004) UCSF Chimera: a visualization system for exploratory research and analysis. J Comput Chem 25:1605–1612

- 42. Dos Santos RN, Ferreira LG, Andricopulo AD (2018) Practices in molecular docking and structure-based virtual screening. In: Gore M, Jagtap UB (eds) Computational Drug Discovery and Design. Springer, New York, pp 31–50

- 43. RDKit: open-source cheminformatics. https://www.rdkit.org

- 44. Wójcikowski M, Zielenkiewicz P, Siedlecki P (2015) Open drug discovery toolkit (ODDT): a new open-source player in the drug discovery field. J Cheminform 7:26

- 45. Pedregosa F, Varoquaux G, Gramfort A et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

- 46. Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, San Francisco, CA, USA, pp 785–794

- 47. Abadi M, Barham P, Chen J et al (2016) TensorFlow: a system for large-scale machine learning. arXiv:1605.08695. 10.48550/arXiv.1605.08695

- 48. Bergstra J, Yamins D, Cox DD (2013) Making a science of model search: hyperparameter optimization in hundreds of dimensions for vision architectures. In: Proceedings of the 30th International Conference on Machine Learning (ICML 2013). Atlanta, GA, USA, pp I-115–I-123

- 49. Lemaître G, Nogueira F, Aridas CK (2017) Imbalanced-learn: a Python toolbox to tackle the curse of imbalanced datasets in machine learning. J Mach Learn Res 18:1–5

- 50. Lê S, Josse J, Husson F (2008) FactoMineR: an R package for multivariate analysis. J Stat Softw 25:1–18


Data Availability Statement

All relevant source code, data sets and results reported in this article are available free of charge in our GitHub repository at https://github.com/vktrannguyen/HERGAI. Please read the README file for more information.