Abstract

Various diseases, such as colon cancer, gastric cancer, celiac disease, and bleeding, pose a significant risk to the gastrointestinal (GI) tract, which is a fundamental component of the human body. Endoscopy and colonoscopy devices provide a less invasive way to observe the inner surface of the GI tract for disease recognition. However, gastroenterologists find it challenging to identify pathological findings at the high frame rates of video endoscopy, a detailed examination requires an experienced gastroenterologist, and the conventional procedure makes disease classification time-consuming. A machine-learning-based computer-aided diagnostic system (CADx) is therefore in high demand to help gastroenterologists diagnose GI tract diseases with high accuracy (Acc). CADx diagnoses diseases in very little time and supports the training of clinicians. Used alongside a gastroenterologist, CADx can help reduce the mortality rate by finding diseases in their early stages. This review presents an extensive examination of CADx, focusing on diseases affecting the GI tract, the various imaging modalities, and the different forms of CADx and their techniques. These encompass preprocessing, feature extraction (both handcrafted and deep learning features), feature selection, and classification. In addition, future research directions in automatic disease identification and classification from endoscopic frames are discussed based on the existing literature.

Keywords: CADx, Disease classification, GI tract, Machine learning, Segmentation

Introduction

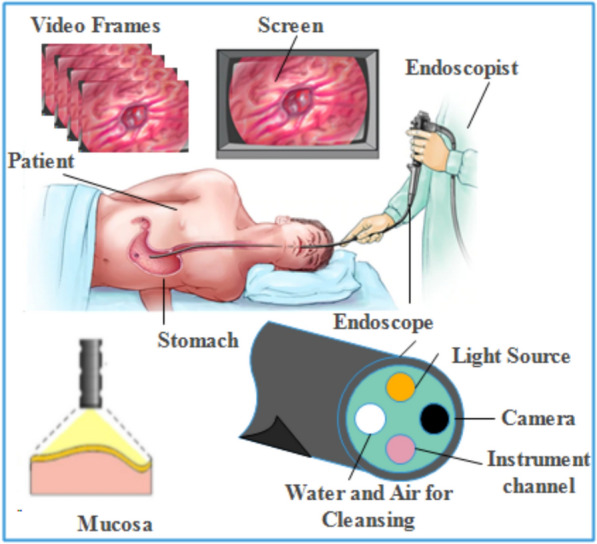

Visual data are very important and dominate other forms of data. Efficient algorithms are used to process visual data and extract the necessary information. Such systems have multiple applications, including disease recognition and classification, face recognition on social networks, remote sensing, and security systems [1]. In addition, AI-powered systems can locate disasters in remote locations by analyzing satellite images. Similarly, the health care system promptly generates significant information about diseases from visual data. Once the multimedia data have been processed and analyzed, the results must be acted on promptly to address the challenges of the existing healthcare system. Advances in medical technology can improve healthy living. Thanks to these advances, clinicians can nowadays visualize and inspect the inner parts of the human body through procedures such as endoscopy, which were not available in the past. The gastroenterologist examines the interior of the GI tract by inserting a camera on a long wire tube to identify any potential diseases. The digestive system, sometimes referred to as the GI system, is essential to the process of breaking down food and absorbing its nutrients. The GI tract is composed of eleven parts. Endoscopy procedures fall into two categories, upper endoscopy and colonoscopy. In upper endoscopy, the tube is inserted through the mouth to observe the stomach and small intestine, whereas in colonoscopy, the long tube is inserted through the rectum to examine the colon, large intestine, and rectum. The GI tract is vulnerable to several diseases, such as colon and gastric cancer, which affect the bowel and stomach, respectively. The literature shows that 0.7 million cases of gastric cancer are reported every year [2].
It is essential to comprehend the intricacies of the gastrointestinal system in order to preserve general health and avoid digestive diseases. A balanced diet, adequate hydration, and proper nourishment all support this vital physiological system's optimal operation. Figure 1 shows the pictorial view of the GI tract.

Fig. 1.

Anatomy of GI tract [3]

According to the National Center for Health Statistics, 1,762,450 new cancer cases and 606,880 cancer deaths were estimated in the United States. Moreover, stomach cancer is the fifth deadliest cancer, resulting in an estimated 783,000 deaths in 2018 [4]. Internationally, cancer is reported to be most prevalent in Central Asian and Middle Eastern countries. The burden of GI diseases has increased in the United States of America, where 60–70 million people were reported to be affected by bowel cancer (colorectal cancer) [5], and 700,000 cancer deaths and 1.3 million new cases were reported in the 2018 statistics [6]. The World Health Organization (WHO) international agency declared that colorectal malignancy is the third and second most common cancer in men and women, respectively. The abnormal growth of cells leads to the development of various diseases, including cancer. The abnormal cells are produced from affected older cells that never die. Compared with normal cells, cancer cells have different characteristics, including growth into other tissues [7]. Cancer arises when the deoxyribonucleic acid (DNA) of a cell becomes damaged, which can turn it into a cancer cell. The types of diseases in the GI tract are illustrated in Fig. 2.

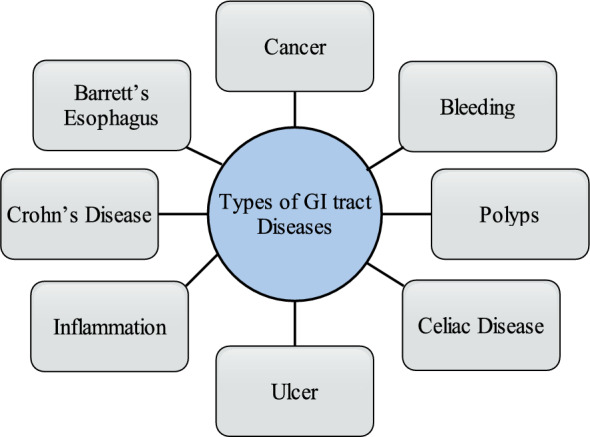

Fig. 2.

Categories of GI tract diseases

Normally, when the DNA of a cell is damaged, either the damaged cell is repaired or the cell dies. The dangerous case arises when neither happens and the cell produces new cells that carry the same damaged DNA as their parent [8]. Bleeding is a medical condition that can result from various abnormalities affecting the GI tract [9]. GI tract bleeding is the most common abnormality, and its detection is particularly important in screening [10]. A polyp is an unusual mucosal growth that is typically benign but, like cancer, can be very dangerous. Some colorectal polyps are diminutive yet still very dangerous. If polyps are not treated at an early stage, they can affect other regions of the GI tract [11]. Polyps can grow in any region of the GI tract, but the colon and small bowel are the most common sites [12]. Celiac disease has become more complex to diagnose [13]. This disorder affects the small intestine [14]. Excessive acidity in the stomach can cause ulcer disease in the abdomen [15]. Disease caused by gastric juices damaging cells is known as peptic disease [16]. Inflammation of the gastric lining is associated with dyspepsia and acid reflux [17]. Helicobacter pylori (H. pylori) is the main cause of gastric inflammation [18]. Infection or inflammation of the mucosal surface can lead to Crohn's disease [19]. Crohn's disease can sometimes also damage the ileum (part of the small intestine) [20]. In Barrett's esophagus (BE), the normal squamous esophageal epithelium is replaced by metaplastic intestinal epithelium containing goblet cells [21]. A pictorial view of all the GI tract diseases discussed is given in Fig. 3. Gastric cancer clinical conditions are presented in Table 1.

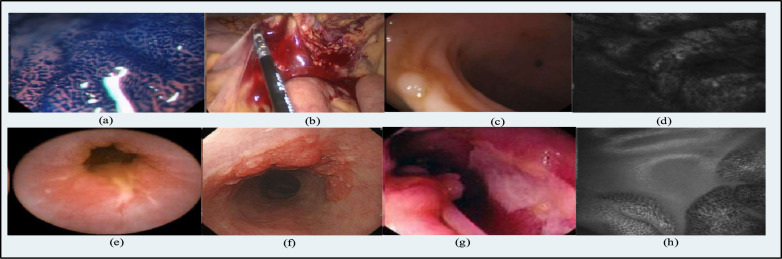

Fig. 3.

Pictorial view of GI tract diseases: a cancer, b bleeding, c polyps, d celiac disease, e ulcer, f inflammation, g Crohn’s disease, h Barrett’s esophagus [22]

Table 1.

Gastric cancer clinical conditions

| No | Name | Clinical conditions |
|---|---|---|
| 1 | Normal | The mucosa is free from irregular structure and abnormal lining. |
| 2 | Metaplasia | Gastric metaplasia is the presence of gastric-type mucus-secreting cells in the epithelium of the duodenal mucosa. Gastric foveolar epithelium replaces the normal absorptive and goblet cells, and the condition is limited to the duodenal bulb [23]. |
| 3 | Dysplasia | Stomach epithelial dysplasia appears when the cells of the gastric mucosa become abnormal. Adenocarcinoma, the most common type of stomach cancer, forms when this cell irregularity appears [24]. |
| 4 | SPARC | Integrin beta, MMP-2, and SPARC protein levels are upregulated in stomach tumors compared with adjacent noncancerous tissues [25]. |
| 5 | Atrophic | Atrophic gastritis is also known as Type A or Type B gastritis. It is a process of chronic inflammation of the gastric lining, leading to the loss of gastric epithelial cells and their eventual replacement by intestinal and fibrous tissue [26]. |

When a disease is examined and detected at an early stage, timely recovery may be possible, which saves human lives. Early examination of the GI tract can therefore have a significant impact on human health. However, the screening procedure is time-consuming and costly, which causes discomfort and dissatisfaction. For example, tests conducted in Norway and the US cost $450 and $1100 per case, respectively [27]. Various visually identifiable diseases, such as gastric cancer, bleeding, and stomach ulcers, can affect the GI tract [28]. Locating a region of interest in the GI tract is a broad problem within the medical field. The exponential growth of multimedia data, including videos and images, poses challenges in terms of storage, processing power, and bandwidth requirements. Multimedia data come in various formats, resolutions, and encoding schemes, which makes it difficult to handle the heterogeneity of data sources. This heterogeneity introduces challenges in data integration, interoperability, and compatibility across different multimedia platforms. Extracting meaningful information from multimedia data involves challenges in content understanding, feature extraction, object recognition, and semantic analysis [29]. Endoscopy and colonoscopy frames exhibit variations caused by devices from different manufacturers, differing specifications, the orientation of diseases, cleansing quality, and illumination effects. Different types of image modalities are discussed in the following section.

Image modalities

Medical image acquisition procedures that do not require a biopsy are known as modalities. Screening of the GI tract is performed using endoscopy, an invasive method in which an endoscope, a long wire-attached instrument with a camera, is inserted into the tract [30]. Endoscopy techniques are diverse. Several types have been introduced, but the major categories are traditional wired video endoscopy and wireless capsule endoscopy, both of which are used for screening the GI tract. Some types of endoscopy procedures are discussed below:

Traditional wired video endoscopy (WVE): WVE is composed of several parts, including a light source, a charge-coupled device (CCD) camera, a flexible wire, and a liquid crystal display (LCD) screen or monitor for observing individual frames. WVE is a less invasive method in which a secondary channel is used for biopsy or for taking tissue samples from lesion areas [31]. The procedure requires the gastroenterologist to accurately control the movement of the endoscope during the cleansing process using the accessory channel [32]. The wired endoscopy procedure, with the camera at the front end of the endoscope, is shown in Fig. 4.

Wireless capsule endoscopy (WCE): WCE is a safer, more convenient, and more efficient way of observing the GI tract. WCE is a noninvasive procedure, but it faces some challenges: the cost of the capsule and of the expert, screening without camera control, and the lack of a secondary channel. Unlike in WVE, its field of view (FOV) cannot be controlled while visualizing the small bowel. However, WCE still provides a painless alternative to WVE [34]. An advantage of WCE over other techniques is that it captures long videos: the examination of a single patient takes 45 min to 8 h and generates more than 50,000 frames. Manual screening of these frames is time-consuming, and the time needed depends on the specialist endoscopist and on the specific region of the GI tract being observed [35].

High-definition video endoscopy (HDVE): The density of transistors on a single chip keeps increasing as technology advances. HDVE can magnify the microscopic view: the targeted area can be visualized at 150 times magnification, and the microvascular structure of the mucosa can be seen more clearly with HDVE than with WVE [36].

Zoom/magnifying endoscopy (ME): ME is an improved endoscopy technique in which a lens is used to magnify the mucosal surface. HDVE and ME have similar features, such as magnifying the microvascular structure of the mucosa [37]. A standard WVE fitted with a lens filter is used for magnification [38].

Chromoendoscopy (CH): Chromoendoscopy is the traditional process for investigating the mucosal structure, in which dyes are sprayed into the GI tract during visualization to make diseased or cancerous areas clearer. It has been reported to be useful in many cases [39].

Virtual chromoendoscopy (VCH): VCH is more user-friendly than CH. VCH relies on image processing algorithms and bandpass filters that produce a dye-like effect. Consequently, endoscopic procedures conducted with VCH do not require dye to be applied by spraying or removed by suction. VCH and HDVE can be used interchangeably and toggled with a single on/off switch, which makes them more sophisticated and user-friendly [40]. The terms digital, electronic, and dye-less chromoendoscopy are also used for VCH in the literature [41].

Narrow band imaging (NBI): NBI highlights mucosal irregularities associated with polyps and dysplasia [42]. NBI is a more convenient and refined procedure because it uses filters to highlight the microvascular structure, veins, and capillaries without using dye [43].

Confocal laser endomicroscopy (CLE): CLE is a novel tool in the endoscopist's armamentarium. It provides on-site histological information. Gastroenterologists are able to interpret the microscopic information that appears in various screening procedures in the GI tract. Recently, its field of application has been extended to hepatobiliary and intra-abdominal CLE imaging. CLE permits "smart," targeted biopsies and can guide endoscopic interventions. CLE is also translational in its approach and permits functional imaging that influences our understanding of gastrointestinal diseases. Molecular imaging with CLE permits the detection and characterization of lesions and may even be used to predict the response to targeted therapy [44]. All the endoscopy apparatuses discussed are illustrated in Fig. 5.

Fig. 4.

Image acquisition and setup for endoscopic screening [33]

Fig. 5.

Illustrations of endoscopic apparatus: a WVE, b WCE, c HDVE, d ME, e CH, f VCH, g NBI, h CLE [45]

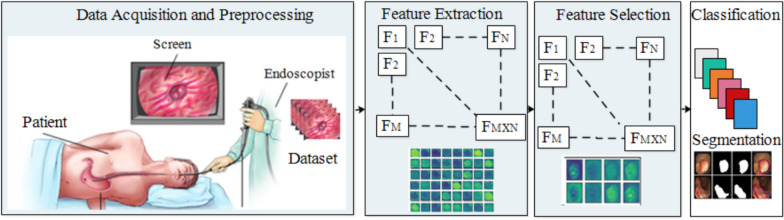

However, ongoing development and the limited availability of training data mean that an appropriate technique for processing visual data is still needed. Effective, reliable, and scalable data processing techniques are therefore required to handle substantial amounts of data. In addition, within the healthcare system, visual data require parallel processing and elastic heterogeneous resources to achieve fast processing [46]. Choosing the right feature extraction technique is an essential decision in CADx, since the extracted features play a significant role in tasks such as segmentation and classification. Both image preprocessing and image segmentation are important for obtaining image descriptors [47]. This study covers several aspects of GI tract screening, including the different modalities that can be used. In addition, it discusses feature selection techniques and the classification methods employed in this field. A generalized model of CADx is shown in Fig. 6.

Fig. 6.

An overview of the CADx

Numerous approaches are used in CADx. Harshala Gammulle et al. proposed two methods for disease recognition using handcrafted and deep learning features [48]. Vajira et al. presented five distinct ML models using global features and deep NN models for multiple disease detection and classification in the GI tract [49]. Novel techniques with good accuracy apply data mining together with neural networks for GI tract disease detection and classification [50]. A CADx model has also been proposed for disease classification [51]. Another study describes the benefits of automatic disease detection and classification from endoscopy images, which is fast and accurate, over manual findings [52]. A further study concentrates on the real-time use of CAD technologies that give endoscopists instant feedback, making it easier to integrate CAD systems into clinical processes [53]. Using AI-based diagnostic techniques on video data is a crucial step toward this objective. In a recent study, NBI zoom video clips of esophageal adenocarcinoma (EAC) and non-dysplastic Barrett's esophagus (NDBE) were used to assess a deep learning model that had been built on static images.

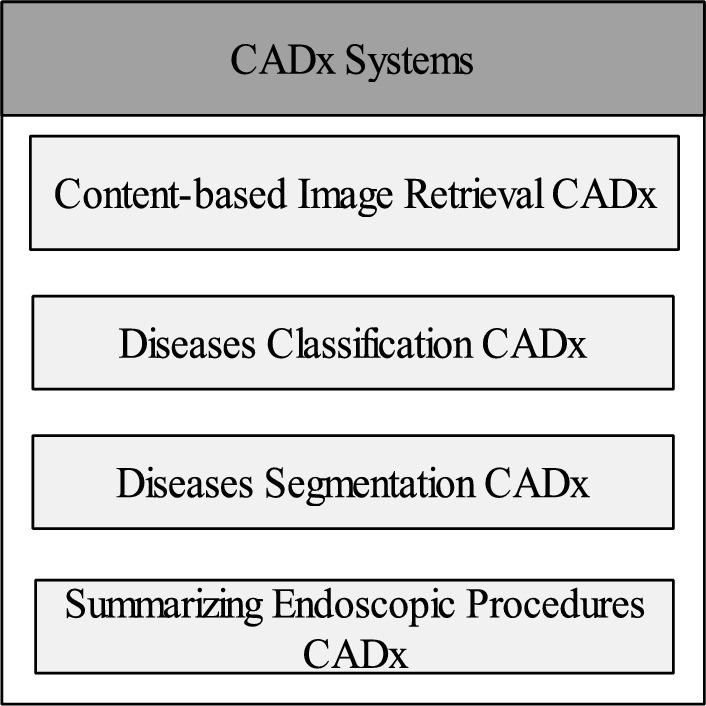

Output-based CADx systems are depicted in Fig. 7.

Content-based image retrieval CADx: Content-based image retrieval (CBIR) algorithms produce a descriptor for the query image, condensed by feature extraction, and use it to retrieve well-matched images or collections of images from the database [54]. CBIR is a straightforward solution that aids gastroenterologists in identifying abnormalities by allowing them to choose the required insights from a sequence of frames [55]. All data from endoscopic procedures that show similar pathological conditions, such as bleeding, inflammation, and ulcers, are retrieved [56]. CBIR is therefore regarded as a form of CADx: a query image is fed into it, and after feature matching, a single image or a set of images is provided at its output [57]. Generally, discrimination between normal and abnormal regions in the frames is achieved by the classification and segmentation methods described next.

Disease classification CADx: A classification-based CADx system classifies lesion and nonlesion areas in endoscopy frames. It can also perform multilevel classification that further checks the grade or severity level of the disease so that treatment can start at an early stage [58]. Modern approaches to disease classification are based on supervised (labeled, data-driven) and unsupervised (unlabeled) learning. In supervised learning, a descriptor is extracted from each frame and combined with its class label, so that every feature vector in the dataset carries a class label. After training, the model predicts the label of the relevant class. In unsupervised learning, labels are not required: clustering based on feature similarity is performed in the training phase, and the model can then separate normal and abnormal areas in endoscopy frames [59].

Disease segmentation CADx: Segmentation, also referred to as perceptual grouping of pixels, is the process of selecting the required area from an image based on similar features. Pixels with similar characteristics delineate the disease area, also called the subimage of the frame [60]. Segmentation-based CADx systems take an image as input and return the annotated image. Detecting diseases using segmentation remains difficult because various factors in the GI tract, such as blood, polyps, and bubbles, introduce variations. Segmentation can be performed in real time or later on recorded video frames [61].

Summarizing endoscopic procedures CADx: Recall (Rec), also referred to as sensitivity, hit rate, or true positive rate, is one statistic used to evaluate the effectiveness of CADx systems. Recall is the proportion of actual positive samples that are correctly identified. The false positive rate (FPR) and false negative rate (FNR) measure the prediction errors for the negative and positive classes, respectively. Other metrics include precision (Prec), accuracy, specificity (Spec), F1 score, and region of interest. These measures quantify how accurately the system classifies and segments data.
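The evaluation measures named above can be illustrated with a minimal sketch that derives them from the four confusion-matrix counts of a binary lesion/nonlesion classifier. The class name `ConfusionCounts`, the helper `classification_metrics`, and the example counts are hypothetical and are not taken from any of the reviewed systems.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts for a binary classifier (positive = lesion frame)."""
    tp: int  # lesion frames predicted as lesion
    fp: int  # normal frames predicted as lesion
    tn: int  # normal frames predicted as normal
    fn: int  # lesion frames predicted as normal


def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def classification_metrics(c):
    """Compute common CADx evaluation metrics from confusion counts."""
    recall = safe_div(c.tp, c.tp + c.fn)          # sensitivity / TPR
    specificity = safe_div(c.tn, c.tn + c.fp)     # true negative rate
    precision = safe_div(c.tp, c.tp + c.fp)
    accuracy = safe_div(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
        "fpr": safe_div(c.fp, c.fp + c.tn),  # false positive rate
        "fnr": safe_div(c.fn, c.fn + c.tp),  # false negative rate
    }


if __name__ == "__main__":
    # Illustrative counts only, not results from any dataset.
    for name, value in classification_metrics(ConfusionCounts(80, 10, 90, 20)).items():
        print(f"{name}: {value:.3f}")
```

Note that FPR equals 1 minus specificity and FNR equals 1 minus recall, so reporting recall and specificity together already determines both error rates.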

Fig. 7.

Output-based CADx systems
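As a rough illustration of the content-based retrieval output described above, the following sketch ranks stored frames by the Euclidean distance between their feature vectors and the descriptor of a query frame. The function names, the frame identifiers, and the three-element feature vectors are hypothetical; the reviewed CBIR systems may use other descriptors and similarity measures.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def retrieve_similar(query, database, top_k=3):
    """Return the top_k (frame_id, distance) pairs closest to the query.

    database maps a frame identifier to its precomputed feature vector.
    """
    scored = [(frame_id, euclidean(query, vec)) for frame_id, vec in database.items()]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return scored[:top_k]


if __name__ == "__main__":
    # Hypothetical descriptors, e.g. fractions of red, pale, and dark pixels.
    database = {
        "frame_a": [0.90, 0.10, 0.00],
        "frame_b": [0.10, 0.80, 0.10],
        "frame_c": [0.85, 0.10, 0.05],
    }
    query = [0.88, 0.10, 0.02]
    for frame_id, distance in retrieve_similar(query, database, top_k=2):
        print(frame_id, round(distance, 4))
```

A linear scan like this is adequate for small collections; larger frame databases would typically need an index structure, which is outside the scope of this sketch.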

The complete review is organized as follows: Sect. "Preprocessing of endoscopic frames" offers a detailed analysis of the various preprocessing techniques applied in prior studies. Sect. "Polyps segmentation methods" outlines the approaches employed for segmenting the affected area in the frames. The methods for feature extraction, including both deep learning and handcrafted techniques, are discussed in Sect. "Feature extraction methods". Sect. "Features selection methods" delves into the approaches for feature selection, while Sect. "Disease classification methods" focuses on classification methods. In Sects. "Datasets" and "Performance measures", the datasets used and the evaluation metrics employed are described, respectively. Finally, Sect. "Challenges and findings" highlights the challenges and potential avenues for future research, and Sect. "Conclusion" presents the conclusions drawn from the review.

Preprocessing of endoscopic frames

CADx includes preprocessing as an initial stage because endoscopic frames pose many challenges, including poor cleansing, bubbles, inserted instruments, lens distortion, illumination variation, and the presence of food particles [62]. Endoscopic images also suffer from factors such as poor contrast and varying disease orientation [63]. Thresholding techniques are applied to parts of the frames to eliminate noise, while image clipping methods isolate particular regions and break the background down into small noise elements such as edges, points, and lines. Simple and median filters reduce noise in the frames by acting as effective noise filters [64]. Preprocessing covers various operations, including image resizing, noise removal, median filtering, color space transformation, and histogram equalization, to improve the visualization of the frames. Image enhancement makes the image more appealing and reveals content that is not perceived by the naked eye [65]. It is done by adjusting the contrast and pixel color values, removing noise, and normalizing the endoscopic frames [66]. Brightness preserving dynamic fuzzy histogram equalization (BPDFHE) is employed to enhance the brightness of the images [67]. Different color spaces, including RGB, CIEXYZ, CIELAB, YIQ, HSV, YUV, and HSI, are used to improve the quality of the frames [68]. Applying contrast limited adaptive histogram equalization (CLAHE) as a preprocessing step improves the classification of colorectal cancer conditions [69]. Endoscopy frames are typically available in the RGB color space, and histogram equalization improves their visualization [70]. Preprocessing with HSV, CIELAB, and other color spaces supports color feature extraction [71]. Color statistics and moments are used as color features. The HSI color space has three channels: hue, saturation, and intensity [72]. The three channels are used with radial basis filters, also known as kernels. Disease detection and classification must be considered for endoscopy-based images. Only a few of the frames captured in the GI tract contain abnormalities. Sometimes the abnormal areas cannot be identified by the human eye because of poor image capture quality [73]. Image quality is improved through image enhancement, color space selection, and size and texture adjustment, so that existing techniques can handle all these issues [74]. Variation in color across image frames may cause false detection of abnormalities during classification [75]. Frames captured from the GI tract by endoscopic procedures have a low-contrast color space. Hence, to improve Acc, several preprocessing methods are applied to make abnormal areas easier to detect [76]. Random rotation occurs in endoscopic frames because of variation in the field of view, and several methods have been developed to determine these variations in the visual area [77]. Changes in lighting and in the distance to the mucosa in gastroscopy images may cause scale variation in the endoscopic frames [78]. Preprocessing helps to segment the abnormal area of the GI tract. Polyps are detected by CNN-based approaches using annotated image datasets prepared by experienced colonoscopists. In the following section, different segmentation approaches are described comprehensively.
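Two of the preprocessing operations mentioned above, median filtering and histogram equalization, can be sketched on a single-channel frame stored as a list of rows. This is a simplified, dependency-free illustration with hypothetical function names; it implements plain global equalization, not the BPDFHE or CLAHE variants cited in the text, and practical pipelines would normally rely on an image processing library.

```python
def median_filter3(gray):
    """Apply a 3x3 median filter; borders are handled by edge replication."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                gray[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            out[y][x] = sorted(window)[4]  # middle of the 9 sorted values
    return out


def equalize_histogram(gray, levels=256):
    """Global histogram equalization for integer intensities in [0, levels-1]."""
    hist = [0] * levels
    for row in gray:
        for p in row:
            hist[p] += 1
    # Cumulative distribution of intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in gray]
    # Lookup table that maps each input level to its equalized level.
    lut = [
        max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min)))
        for c in cdf
    ]
    return [[lut[p] for p in row] for row in gray]


if __name__ == "__main__":
    noisy = [[50, 50, 50], [50, 255, 50], [50, 50, 50]]  # one bright speck
    print(median_filter3(noisy))
    low_contrast = [[50, 60], [70, 80]]
    print(equalize_histogram(low_contrast))
```

The median filter removes the isolated bright pixel while leaving the uniform background unchanged, and the equalization step spreads a narrow intensity range over the full scale, which is the contrast improvement the text attributes to histogram equalization.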

Polyps segmentation methods

In recent times, automatic disease detection, particularly polyp detection and segmentation, has become an important research area. Consequently, numerous algorithms and effective approaches have been devised to detect polyps [79]. Polyps are analyzed using texture- and color-based methods that use manually designed descriptors to capture and learn distinctive characteristics from the images [80]. In the research community, CNNs have gained considerable prominence because of their notable achievements in public challenges [81]. Novel software modules and algorithms employing CNNs have been developed specifically for the detection of edge and polyp shots [82]. Moreover, a range of methods is used for detecting polyps in colonoscopy data, with CNN models employed in transfer learning and postprocessing approaches [83]. Similarly, generative adversarial networks have been used to generate polyp images and thereby enhance a model's ability to detect polyps [84]. You only look once (YOLO), a high-sensitivity algorithm that identifies patterns in images in real time, is used to detect and localize polyps [85]. A semi-supervised method is used to segment the abnormal area of the disease [86]. Moreover, the specificity (Spec) and sensitivity (Sen) of these systems are high [87]. Recently, data-driven methods have raised the standard of computer vision approaches, including segmentation, where feature maps are obtained from the layers of deep CNN models [88]. The different deep learning models employed in the literature for disease segmentation are shown in Table 2.

Table 2.

A summary of results compiled over the Kvasir-SEG dataset

The segmentation approach was initially introduced with the fully convolutional network (FCN) [96]. The FCN architecture was then modified and expanded to create UNet [97]. UNet is composed of two pathways, the encoder and the decoder, which serve as the analysis and synthesis components, respectively. The encoder explores the information deeply using filters of different depths while decreasing the dimensions of the feature maps, and the decoder combines the information and provides the segmented output. The encoder–decoder architecture, exemplified by UNet and FCN, is of great importance in semantic segmentation [98]. Various versions of UNet have been documented in the literature for biomedical segmentation. Pretrained networks such as VGG16 and VGG19, trained on the ImageNet dataset, can also replace the encoder for segmentation tasks [99]. Similarly, ResNet50 and other residual networks have achieved remarkable success in transfer learning [100]. As described in the upcoming section, feature extraction plays a significant role in disease segmentation and classification.
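The encoder and decoder roles described above can be made concrete by tracing feature-map shapes through a UNet-style network. The sketch below is only a shape calculation, not a trainable model, and it assumes a common configuration that the text does not specify: 64 base filters, channel doubling at each level, 2x downsampling and upsampling, and padded convolutions that preserve spatial size. The function name `unet_shapes` is hypothetical.

```python
def unet_shapes(height, width, in_channels=3, base_filters=64, depth=4, num_classes=1):
    """Trace (name, channels, height, width) through a UNet-style network."""
    if height % (2 ** depth) or width % (2 ** depth):
        raise ValueError("height and width must be divisible by 2**depth")

    trace = [("input", in_channels, height, width)]
    skips = []
    h, w = height, width

    # Encoder (analysis path): more channels, smaller feature maps.
    for level in range(depth):
        c = base_filters * 2 ** level
        trace.append((f"enc{level}", c, h, w))
        skips.append((c, h, w))      # kept for the skip connection
        h, w = h // 2, w // 2        # 2x2 pooling halves each dimension

    trace.append(("bottleneck", base_filters * 2 ** depth, h, w))

    # Decoder (synthesis path): upsample, merge the skip, reduce channels.
    for level in reversed(range(depth)):
        h, w = h * 2, w * 2
        skip_c, skip_h, skip_w = skips[level]
        if (h, w) != (skip_h, skip_w):
            raise RuntimeError("decoder and skip feature maps do not align")
        trace.append((f"dec{level}", skip_c, h, w))

    # Final 1x1 convolution maps features to per-pixel class scores.
    trace.append(("output", num_classes, h, w))
    return trace


if __name__ == "__main__":
    for name, c, h, w in unet_shapes(256, 256):
        print(f"{name:>10}: {c:4d} x {h:3d} x {w:3d}")
```

The trace shows why the output mask has the same spatial size as the input frame: every halving in the encoder is undone by a doubling in the decoder, and the skip connections supply the fine spatial detail lost during downsampling.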

Feature extraction methods

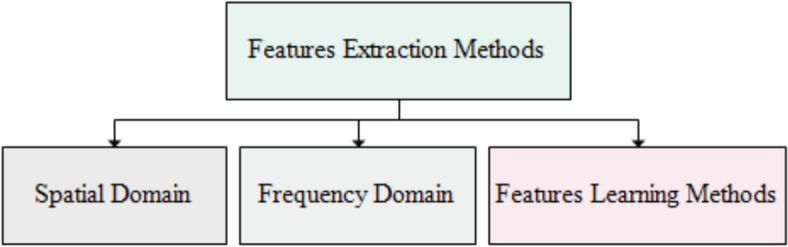

Color information (pixel intensity) is transformed into numeric data, known as features, that capture the patterns and representation of instances in the images. The recent literature reports methods for extracting descriptors (the most important information or features) from frames using different feature engineering methods. The low-level information about diseases that classifiers require is extracted in this way [58]. It is difficult to describe lesions in GI tract frames explicitly because of conspicuous variations in shape and color [101]. The important features are color, texture, geometric, hybrid, and deep learning features. There are three classes of feature extraction: the spatial domain, which deals with pixels directly; the frequency domain, which works on the frequency content of the image using techniques such as the Fourier transform and wavelets; and deep features, which are learned automatically by deep models. The spatial domain deals with pixel intensities, while the frequency domain describes the rate of change in pixel intensity. The spatial–temporal domain also has some feature extraction methods [102]. The process of extracting features is categorized into four groups, namely color features, geometric features, texture features, and feature learning. Texture and color information is commonly used to characterize GI tract diseases. Texture features are categorized into three types: local binary patterns (LBP), which capture local information using 8 x 8 sliding windows; filter-based features; and shape-based features, all of which are crucial for GI tract disease identification [103]. Frames of video endoscopy are transformed into the HSI color space for feature exploration [104]. Feature sets are built by splitting the images into patches and calculating chromaticity moments [105]. CIELAB color space methods are used for detecting abnormal regions using local patches [106]. The texture and shape of the image help to find a region of interest in the image frame [107]. A single frame is subdivided into small partitions to extract insights from each patch. Texture analysis is used to find abnormal parts of the frames and serves as a descriptor for pattern analysis of gastric tumors or malignancies [108]. Feature extraction methods used for texture analysis include the histogram of oriented gradients (HOG), which applies a threshold and extracts information using 9 bins based on gradient magnitude (the rate of change) and orientation (angle); the scale-invariant feature transform (SIFT) [109]; segmentation-based fractal texture analysis (SFTA) [110], which collects fractal information from the images; LBP; and Otsu's method [111]. Homogeneous textures are used to identify lesions in endoscopic frames [112]. Texture is computed using the discrete Fourier transform, and statistical analysis of normalized gray-level co-occurrence matrices is used to represent the texture of the images [113]. Discrete wavelet transform (DWT) based features are combined with LBP features to extract texture information from WCE frames, and classifiers are trained on these features [114]. Feature extraction methods are depicted in Fig. 8.
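To illustrate how a texture descriptor such as LBP turns pixel intensities into a feature vector, the sketch below computes the basic LBP code of each interior pixel and summarizes a patch as a normalized 256-bin histogram. It assumes the classic 3x3 neighborhood with 8 neighbors, which may differ from the window configuration used in the cited work, and the function names are hypothetical.

```python
# Neighbor offsets (dy, dx), clockwise starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_image(gray):
    """Return the 8-bit LBP code for every interior pixel of a 2-D list."""
    h, w = len(gray), len(gray[0])
    codes = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            center = gray[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                # Set the bit when the neighbor is at least as bright as the center.
                if gray[y + dy][x + dx] >= center:
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(gray):
    """Normalized 256-bin histogram of LBP codes, usable as a texture feature."""
    hist = [0] * 256
    for row in lbp_image(gray):
        for code in row:
            hist[code] += 1
    total = sum(hist)
    return [count / total for count in hist] if total else [0.0] * 256


if __name__ == "__main__":
    patch = [
        [10, 20, 30],
        [40, 50, 60],
        [70, 80, 90],
    ]
    print(lbp_image(patch))  # a single code for the center pixel
```

In a patch-based pipeline, one such histogram would be computed per patch and passed to the classifier, alone or concatenated with other descriptors such as the DWT features mentioned above.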

Fig. 8.

Features extraction methods

Spatial domain

Spatial features refer to the intensity of local pixels in the image. They are used in texture analysis (identifying patterns and structures within an image), color analysis (identifying the colors present in an image and their distribution), and shape analysis (identifying the shapes of objects in an image). Spatial features are extracted by domain experts, who design a specific technique according to their knowledge. It is difficult to extract complex patterns using such handcrafted techniques. Like preprocessing and segmentation, feature extraction is an important step in finding GI tract diseases and a key emphasis in disease detection. Feature extraction methods in the spatial domain are depicted in Fig. 9. Feature extraction in the spatial domain manipulates pixels directly: every pixel of the endoscopic frames is examined to recognize abnormalities [108]. Several feature extraction methods have been developed for finding infections and obtaining perceptual information. The methods of feature extraction in the spatial domain are discussed in the following sections.

Fig. 9.

Overview of feature extraction methods in the spatial domain

Color characteristics

The basic visual features extracted from endoscopic frames are colors, which represent the visible spectrum of mixed light at various bandwidths. The mucosal surface is visualized using the color information of gastric frames acquired with NBI and CH. Colors are very important in clinical pathology findings such as inflammation, bleeding, and ulcers. Endoscopic frames carry color information in which each component is represented as a color channel (e.g., R, G, and B), whereas gray images consist of a single intensity channel. Most colon and gastric frames have a low-contrast color space [115]. Endoscopic frames are represented in different color spaces such as CIE-LAB, CIELUV, CIEXYZ, HSV, and RGB in various applications, but which one is most suitable for a particular malignancy has not been determined.

Color histograms: The histogram is the basic information that can be derived from an image channel [116]. A histogram gives the probability of each pixel intensity and provides information on the color distribution. In zoom endoscopy, the different channels of a single color space are studied and the individual channels are combined into a single frame [117]. Likewise, histograms from two color spaces, RGB and HSI, are utilized. Color histogram and RGB histogram features are used to detect the specific region of the disease. The HSV color histogram is considered better than the RGB color histogram in some experiments; therefore, RGB is converted into other color spaces to improve results. Bleeding frames are classified using the HSV color space, which shows greater uniformity of colors [118]. HSV color histograms are thus given more weight than local RGB color histograms. The primary colors are used to compute thresholds for the segmentation of colonoscopy images, and the segmentation error is reduced by using the CIE-LAB color space [119].
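As an illustration of the HSV color histogram mentioned above, the following minimal sketch converts RGB pixels to HSV and builds a normalized hue histogram. It is not taken from any of the cited works: the function name `hue_histogram`, the choice of 8 bins, and the toy pixel values are assumptions made only for this example.

```python
import colorsys

def hue_histogram(pixels, bins=8):
    """Return a normalized hue histogram for (r, g, b) pixels in 0..255."""
    counts = [0] * bins
    for r, g, b in pixels:
        h, _s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        # h lies in [0, 1); clamp the index so it stays inside the last bin.
        counts[min(int(h * bins), bins - 1)] += 1
    total = len(pixels)
    return [c / total for c in counts] if total else counts

# Hypothetical toy frame: three reddish pixels and one green pixel.
frame = [(200, 30, 30), (180, 20, 25), (220, 40, 35), (30, 200, 40)]
hist = hue_histogram(frame)
```

Because hue is an angle, reddish pixels fall into both the first and the last bin; a practical bleeding descriptor would need to account for this wrap-around.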

Color moments: Colors are very sensitive to lighting. They change within the complex GI tract when data are acquired under different lighting conditions, which poses challenges when using colors as descriptors to identify gastric anomalies. Color moments are meant to cope with the color variations caused by lighting changes. Polynomial methods and the HSI color space are used to detect diseases such as bleeding and ulcers in endoscopic frames. Bleeding regions are identified and classified using color arrangements [120]. Several feature extraction methods use different statistical approaches to describe the color characteristics of RGB frames. Diseases including precancerous lesions are identified from statistical characteristics of endoscopic frames [121]. Optimum multithresholding smooths the boundaries of diseased regions, and morphological operations are performed to fill holes for disease detection [122]. Owing to the lack of control over camera movement in the WCE procedure, camera distortion is a major problem in various systems, and the variable distance of the camera from the mucosal wall creates undesirable effects. Uniformly spaced channels and light reflections are distinguished using the CIE-LAB color space. Kurtosis, variance, and entropy are the color moments calculated from image channels to detect ulcers and bleeding [123]. Color features are extracted in the HSI color space for visual perception [124]. Similarly, numerical statistical measurements of various composite channels are computed over a variety of color attributes for bleeding detection in WCE frames.
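The color moments described above can be sketched as per-channel statistics. The example below is a minimal illustration under stated assumptions: the helper names `channel_moments` and `color_moments` are hypothetical, population (not sample) statistics are used, and the particular set of moments (mean, variance, skewness, kurtosis) is chosen for brevity rather than copied from a cited method.

```python
import math

def channel_moments(values):
    """Mean, variance, skewness, and kurtosis of one color channel."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    std = math.sqrt(var)
    if std == 0:
        # A flat channel has no spread; higher moments are set to zero here.
        return {"mean": mean, "variance": 0.0, "skewness": 0.0, "kurtosis": 0.0}
    skew = sum(((v - mean) / std) ** 3 for v in values) / n
    kurt = sum(((v - mean) / std) ** 4 for v in values) / n
    return {"mean": mean, "variance": var, "skewness": skew, "kurtosis": kurt}

def color_moments(pixels):
    """Compute the moments of each channel of a list of (c1, c2, c3) pixels."""
    return [channel_moments(list(channel)) for channel in zip(*pixels)]

# Hypothetical toy patch of RGB pixels.
patch = [(200, 30, 30), (180, 20, 25), (220, 40, 35), (190, 35, 30)]
features = color_moments(patch)
```

Concatenating the per-channel values gives a short feature vector (here 3 channels × 4 moments) that can be passed to a classifier.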

Salient color features: Salient color features are specific patterns in the frames. RGB is converted to another color space (CMYK, CIE-LAB) to detect salient regions such as polyps and bleeding [125]. First-order moments are computed to form the feature set. Moreover, in CIE-LAB color space, characteristics of particular pixels are defined for identifying affected regions, where RGB is transformed to HSV [126]. Furthermore, HSV color space is converted back to RGB color space for the detection of bleeding regions [46].

Local color features: Local color information is obtained by splitting an image into small patches, from which valuable features (descriptors) are extracted [127]. The local information from the frames is determined and analyzed. Descriptors can include the spatial position of pixels as well as color information. Similarly, the distance between color pixels is used to compute abnormalities in CH frames [128].

Texture features

A recurring pattern in an image is recognized as a texture, which provides information about surface characteristics such as roughness and flatness. Texture in frames is classified by a difference-based micro-block technique in which multiscale symmetry is discriminated [129]. Pixel-level and dense macroblock changes are referred to as local features that combine the K-rotation with a Gaussian distribution. A descriptor based on higher-order local features is proposed that processes local information to encode global descriptors [130]. Average spatial pyramid pooling is merged with a texture descriptor based on multiscale difference (MSD) and micro-block difference (MD), created by multiscale SDMD [131]. A multiscale frequency-based classification approach is proposed that includes the color difference-based representation (CDR) method for texture exploration. Local and differential excitation vectors are used to extract a variety of textural formations, and combinations of CDRs are used to create various texture features [132]. Texture analysis is widely used and has gained considerable significance in medical imaging, for example in detecting cancerous regions and representing texture in endoscopic frames.

Local binary pattern: LBP is a very useful texture descriptor in which each neighboring pixel is compared with the central pixel and a binary code is assigned to every pixel; this code defines the simple LBP pattern and is then converted into a decimal value. A histogram of the occurrences of these codes represents the local texture of the image. Numerous developments have been made to increase the representational power of LBP. Illumination variations are handled by multiscale LBP [133]. LBP works with neighborhoods of various sizes (8, 16, 24 pixels) and is also employed with other LBP variants (LBP59, LBP256, LBP10) to extract features for image classification [134]. Similarly, vector quantization is used with uniform LBP for abnormality detection [135]. Refined local pattern features based on LBP are introduced for texture grouping, and a more advanced technique extracts a refined completed LBP (RCLBP), which is combined to create the JRLP texture descriptor [136].
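The basic 8-neighbor LBP described above can be sketched as follows. This is a minimal illustration, not the implementation of any cited variant: the function names, the clockwise bit ordering starting at the top-left neighbor, and the "greater than or equal" comparison are assumptions of this example (other orderings give different but equivalent codes).

```python
# Offsets of the 8 neighbors, clockwise from the top-left pixel (assumed order).
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img, r, c):
    """Basic LBP code of the pixel at (r, c) in a 2D list of gray levels."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBORS):
        # A neighbor at least as bright as the center contributes a 1 bit.
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code

def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist

# Hypothetical 3x3 gray-level patch; only the center pixel has 8 neighbors.
patch = [[5, 9, 1],
         [4, 6, 7],
         [2, 3, 8]]
code = lbp_code(patch, 1, 1)
```

For this patch, the neighbors 9, 7, and 8 are not smaller than the center value 6, so bits 1, 3, and 4 are set and the code is 2 + 8 + 16 = 26.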

Statistical texture features: Statistical measures are commonly used to represent the texture of images, since statistics of the intensity distribution provide details about image texture. The gray-level co-occurrence matrix (GLCM) records how frequently pairs of pixel values occur together. From these matrices, several statistics including contrast, energy, similarity, and correlation are determined to describe the texture of images [137]. Similarly, anomalies are detected from the texture of endoscopic images using Haralick's features [138]. Moreover, the YIQ color space with statistical features is employed to approximate diseases in the GI tract. Texture information for disease classification is obtained by computing the GLCM. Texture features such as entropy, inverse difference moment, energy (angular second moment), and correlation are used for the analysis of GI tract diseases [139]. In short, statistical metrics (mean, standard deviation) are used for texture feature analysis. Common statistical texture features include GLCM, LBP, and gray-level run length matrix (GLRLM) features. GLCM features describe the second-order statistics of an image, which capture the spatial relationship between pairs of pixels. LBP features describe the distribution of local binary patterns within an image, which captures the texture information in a more local and fine-grained way than GLCM features. GLRLM features describe the distribution of runs of pixels with the same gray level, which captures information about the length and orientation of texture patterns.
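To make the GLCM idea concrete, the sketch below counts co-occurring gray levels for one pixel offset and derives three common statistics. It is an illustrative example under assumptions: the function names are hypothetical, the matrix is built for a single horizontal offset and is not symmetrized, and the image is assumed to be already quantized to a small number of gray levels.

```python
def glcm(img, levels, dr=0, dc=1):
    """Normalized co-occurrence matrix for the pixel offset (dr, dc)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[img[r][c]][img[r2][c2]] += 1
                total += 1
    return [[v / total for v in row] for row in counts]

def glcm_features(p):
    """Contrast, energy, and homogeneity of a normalized GLCM."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in cells),
        "energy": sum(p[i][j] ** 2 for i, j in cells),
        "homogeneity": sum(p[i][j] / (1 + abs(i - j)) for i, j in cells),
    }

# Hypothetical 3x3 image quantized to gray levels 0..2.
image = [[0, 0, 1],
         [0, 1, 1],
         [2, 2, 2]]
features = glcm_features(glcm(image, levels=3))
```

In practice, matrices for several offsets and directions are usually computed and their statistics averaged or concatenated.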

Geometric features

Geometric features contain information about edges, corners, points, lines, blobs, ridges, image texture with salient points, and shape-based features, as addressed in the following sections.

Edge-based features: Normally, colorectal cancer has no distinctive form or scale. However, features (edges or contours) of some lesions such as polyps and tumors are detected using a geometric model [140]. Similarly, the Sobel operator, which is well known for detecting sharp intensity variations, and the Canny edge detector are used to design a model that finds the shapes and edges of polyps [141]. Features of gastric cancer are extracted using edge-based features [142]. Normal and abnormal celiac disease tissues are examined with a geometric feature extraction approach [143].
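The Sobel operator mentioned above can be illustrated with a short sketch that computes the gradient magnitude of a gray-level image. The function name `sobel_magnitude`, the decision to leave border pixels at zero, and the toy image are assumptions of this example; it does not reproduce the polyp model of the cited work.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude of a 2D list of gray levels (borders left at 0)."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = sum(SOBEL_X[i][j] * img[r + i - 1][c + j - 1]
                     for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * img[r + i - 1][c + j - 1]
                     for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out

# Hypothetical image with a vertical step edge between columns 2 and 3.
image = [[0, 0, 0, 10, 10] for _ in range(4)]
edges = sobel_magnitude(image)
```

The response is zero in the flat region and large on both sides of the step, which is the kind of sharp variation that edge-based features rely on.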

Shape-based features: Shape-based modeling, including smooth spiral, fractal dimension, and Koch-snowflake methods, is performed on NBI frames [144]. Important features are extracted from multiscale objects in optical colonoscopy images [145]. Some geometrical features of polyps are determined by the HLAC method [146]. The HLAC technique applies a product–sum formula over auto-correlation to extract features from endoscopic images [147]. Elliptical pattern features are used to recognize polyp abnormalities in colonoscopy frames [148]. Geometric features are also used with fuzzy logic to construct 3D trajectories from endoscopy frames [65].

Hybrid features

In the spatial domain, frames are processed to extract various descriptors. Under particular conditions, these feature extraction methods may work well (for example, color features for bleeding detection). However, when used separately, these characteristics have limited power to discriminate complicated anomalies (e.g., cancer, polyps, and ulcers). Hybrid features address environmental problems such as variations in rotation, size, and illumination in the images. To achieve stronger discrimination, two or more types of features are combined. The hybrid methods used for the automatic diagnosis of lesions are described below.

Color texture features: The inflamed region in the GI tract is characterized by combining color characteristics, such as the red color, with filter-based texture [149]. Composite edge-based, texture, and color characteristics help to recognize diseases in the GI tract [150].

Local binary pattern and color texture features: Modified LBP features are computed from endoscopic frames using a combination of RGB and HSV [151]. Color and texture details are combined in various research works: for example, the HV histogram from HSV, the I channel of HSI (which contains detailed information), combined RGB and RG histograms, and the hue histogram are used together [152]. Superpixels are the raw material from which LBP texture, color histogram, and PHOG features are extracted. Mixing multiple color channels supports the extraction of more refined texture detail from the images. Gaussian-filtered LBP (GF-LBP) combined features are used to extract texture from endoscopic images [153]. The pyramidal histogram is used in the extraction of texture information. In the same way, texture-based patches are isolated from the LBP characteristics for classification using patch information [154]. LBP texture is paired with color moments. Texture and color information is acquired by combining the color histogram with LBP texture [155]. A combined description of color features and texture descriptors is reported in which various components are fused, including color histogram, HSV, and LBP components [156]. Furthermore, LBP with 8 and 16 neighboring pixels around the central pixel is extracted to combine texture and color information. LBP and uniform features are combined and used to obtain information from endoscopic frames [157]. The chrominance is determined from the histogram after an RGB to HSI transformation of the endoscopic images.

Statistical color texture features: An image histogram is used to compute statistical moments (entropy, standard deviation) that represent the HSI and RGB color spaces. Eight dominant colors are computed from GLCM frames, where texture and color characteristics are combined [158, 159]. In the same way, color characteristics are represented by the bi-dimensional ensemble empirical mode decomposition approach, in which intrinsic mode functions (IMFs) are computed from endoscopic frames [160].

Color and geometric with texture features

Geometric texture features: Texture features (LBP, SIFT, HOG, etc.) are combined with geometric (PHOG) and color (RGB) information to form a descriptor from which significant deep unsupervised features are extracted [161]. SIFT is combined with shape-based HOG and LBP features to classify endoscopic frames [162]. Statistical moments help determine the topological characteristics of the image [163]. Physical (geometric) characteristics are determined to classify potentially affected regions in NBI images [164]. A high-order kernel is used to design a graph matching algorithm for clustering the information in the frames [165]. A graph is a combination of nodes and edges, where nodes represent pixels and edges represent the similarity relationships between nodes.

Geometric and color features: Standard deviation and mean statistics are calculated for polyp detection in endoscopy frames [166]. Heterogeneous color and point-based SIFT characteristics are combined to segment lesions in endoscopy frames [167]. Salient features are extracted using SIFT techniques in person re-identification [168]. For segmenting disease, visual data (edges, colors, and textures) are used, and an edge-based model, ACWE, is suggested [169]. The following section describes the feature extraction approaches used in the frequency domain.

Frequency domain

Images are used without transformation in most cases for disease prediction. Frequency domain procedures operate on pixel intensities and the rate of change in intensities. Features are extracted using exponential Fourier analysis, which determines the rate of change in pixel intensities. In most cases, a transformation (z, Laplace, or Fourier) into the frequency domain is performed before feature extraction [170]. The feature extraction methods in the frequency domain are shown in Fig. 10.

Fig. 10.

Overview of feature extraction methods in the frequency domain

The frequency domain offers multiple methods, such as color, texture, and hybrid feature extraction. The color texture method is further classified into Gabor-based and GLCM-based color texture, LBP, and statistical color texture in the wavelet domain. Similarly, geometric, color, and texture features are used in combined form. Frequency domain methods for texture features, which include LBP in the wavelet domain and statistical texture, are used for disease identification and classification [146]. Color feature methods in the frequency domain are DWT-based and Fourier filter-based.

Color features

Discrete wavelet transform-based color features: Different methods are used to derive color features (color information) from endoscopic frames. Wavelet coefficients obtained by a pyramidal discrete wavelet transform are employed to extract color information from endoscopic frames [171]. Statistical measures including mean and standard deviation, together with scale and rotation, are used to extract features with the dual-tree discrete wavelet transform (DT-DWT) approach [172]. A novel method produces feature vectors by computing six complex orientation sub-bands at every decomposition scale [173]. Eight color sub-bands are proposed to extract features. The stationary wavelet transform represents the de-correlated detail sub-bands by computing variance and performs better than DT-DWT-based features. Before the DWT is computed, images are transformed into CIE-LAB color space for feature extraction [174]. Fourier filters also support the extraction of color features for disease identification.
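The idea of computing statistics on wavelet sub-bands can be sketched with a one-level 2D Haar transform applied to a single color channel. This is only an illustration: the Haar wavelet is an assumption of this example (the cited works use pyramidal, dual-tree, and stationary transforms), the function names are hypothetical, and the labeling of the LH and HL detail bands follows one of several conventions.

```python
import math

def haar_dwt2(channel):
    """One-level orthonormal 2D Haar transform of an even-sized 2D list."""
    bands = {"LL": [], "LH": [], "HL": [], "HH": []}
    for r in range(0, len(channel), 2):
        rows = {name: [] for name in bands}
        for c in range(0, len(channel[0]), 2):
            a, b = channel[r][c], channel[r][c + 1]
            d, e = channel[r + 1][c], channel[r + 1][c + 1]
            rows["LL"].append((a + b + d + e) / 2)  # local average
            rows["LH"].append((a + b - d - e) / 2)  # change between rows
            rows["HL"].append((a - b + d - e) / 2)  # change between columns
            rows["HH"].append((a - b - d + e) / 2)  # diagonal change
        for name in bands:
            bands[name].append(rows[name])
    return bands

def subband_stats(bands):
    """Mean and standard deviation of every sub-band, as a flat feature dict."""
    feats = {}
    for name, band in bands.items():
        vals = [v for row in band for v in row]
        mean = sum(vals) / len(vals)
        feats[name + "_mean"] = mean
        feats[name + "_std"] = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
    return feats

# Hypothetical 4x4 channel (for example, the L channel after a CIE-LAB conversion).
channel = [[1, 2, 1, 2],
           [3, 4, 3, 4],
           [1, 2, 1, 2],
           [3, 4, 3, 4]]
features = subband_stats(haar_dwt2(channel))
```

Applying the same two functions to each channel of a color space and concatenating the results yields a DWT-based color feature vector.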

Color features based on Fourier filter: Endoscopy frames are filtered using ring filters after transformation into the Fourier domain [175]. Ring-shaped band-pass filters with ring widths from a minimum of 1 to a maximum of 15 are used for the analysis of multiscale objects. RGB frames are filtered with ring-shaped filters of different sizes to obtain statistical information [176]. The information is obtained by splitting the RGB space into separate R, G, and B channels. The Fourier filter can be applied to any separate channel of a color space such as RGB, LAB, and CIELAB [177].
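A ring-shaped band-pass filter in the Fourier domain can be illustrated with the following sketch, which sums the spectral energy of one channel inside a ring of radii `r_min` to `r_max`. The naive DFT (suitable only for tiny images), the function names, and the use of ring energy as the summary statistic are assumptions made for this example; the cited works may use different statistics.

```python
import cmath
import math

def dft2(channel):
    """Naive 2D discrete Fourier transform of a small 2D list."""
    n_r, n_c = len(channel), len(channel[0])
    spectrum = [[0j] * n_c for _ in range(n_r)]
    for u in range(n_r):
        for v in range(n_c):
            spectrum[u][v] = sum(
                channel[x][y] * cmath.exp(-2j * math.pi * (u * x / n_r + v * y / n_c))
                for x in range(n_r) for y in range(n_c))
    return spectrum

def ring_energy(spectrum, r_min, r_max):
    """Sum of squared magnitudes whose frequency radius lies in [r_min, r_max]."""
    n_r, n_c = len(spectrum), len(spectrum[0])
    total = 0.0
    for u in range(n_r):
        for v in range(n_c):
            # Map indices to signed frequencies so the radius is measured from DC.
            fu = u if u <= n_r // 2 else u - n_r
            fv = v if v <= n_c // 2 else v - n_c
            if r_min <= math.hypot(fu, fv) <= r_max:
                total += abs(spectrum[u][v]) ** 2
    return total

# Hypothetical 4x4 channel containing one horizontal oscillation per row.
channel = [[1, 0, -1, 0] for _ in range(4)]
spectrum = dft2(channel)
low = ring_energy(spectrum, 0, 0)    # energy at the DC term only
ring = ring_energy(spectrum, 1, 1)   # energy in the ring of radius 1
```

Repeating `ring_energy` for several ring widths on each of the R, G, and B channels gives a multiscale descriptor in the spirit of the ring filters described above.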

Texture features

Image surfaces and superpixels are analyzed statistically to extract texture features (softness or stiffness) in the frequency domain. An image is transformed into the frequency domain before texture information is extracted. Generally, statistical measures computed on the result of the transformation represent the texture.

Statistical features: Image classification tasks are performed with statistical methods on wavelet sub-bands. Adjacent sub-band descriptors are modeled using linear regression, which distinguishes between the sample and the texture of the class [178]. In the wavelet sub-band, four local features define the texture descriptors, where wavelet coefficients are modeled by the heterogeneous and incrementally generated histogram (HIGH). Concatenating the texture of all sub-bands creates the image vector, the non-negative multiresolution vector (NNMV). Low-dimensional WCE frames are employed to compute the linear subspace of NNMVs [179]. DFTs are computed to transform the WCE frames [180]. A log transform is applied to the magnitudes for normalization of the co-occurrence matrices. Various statistics are computed from the co-occurrence matrices of WCE images to represent texture features. Different patches found in the images are processed with the DWT for lesion detection, where four statistical measurements of the GLCM are used [181]. The Gabor wavelet transform (GWT) is compared with DT-DWT and DWT for obtaining texture features [182].

Local binary pattern: Images are transformed and texture features are extracted using the curvelet transform. The image texture for ulcer classification is represented by uniform LBP features computed on the coefficients of the transformed domain [183].

Texture analysis based on Gabor filter: Likewise, log-Gabor filters are applied to WCE frames after the contourlet transform. The mean and standard deviation of the filter responses represent the texture features [184]. Gabor texture features are designed to be invariant to scale, rotation, shift, and illumination variations [185].

Hybrid features

Additional discriminative power is achieved by combining various types of features, referred to as hybrid features. Hybrid features are widely used in both the spatial and frequency domains. Combinations of color and texture features in the frequency domain are reported to provide better results than in the spatial domain. The hybrid methods in the frequency domain are described below.

Color and texture features based on Gabor filters: Gabor-based texture features are fused with color components by taking the mean and variance of each sample. A random forest classifier is trained on Gabor-based color texture features [186]. The ratios of various color components are used to analyze WCE frames. Cancerous and noncancerous cells are differentiated by extracting texture, shape, and intensity-based features for disease classification [187]. Both color and texture information is obtained by combining homogeneous texture features, where log-Gabor filters (LoG) are applied in RGB color space. A composite of Gabor-based texture and scalable color descriptors is used to partition WCE frames of the GI tract. WCE frames are classified using MPEG-7 features [188], from which edge histogram features and dominant color descriptors are extracted to help detect abnormal regions in Crohn's disease.

Color and texture based on gray-level co-occurrence matrices: Texture and color features are extracted in LUV color space with WCC matrices, and gray-level co-occurrence matrices (GLCM) are extended by computing GLCM statistics from wavelet sub-bands [189]. RGB color channels are exploited by combining GLCM-based texture features with color information. After each channel is transformed, GLCM-based characteristics are computed from the DWT sub-bands. The DWT is applied to WCE frames to extract GLCM features, and statistical measures are then computed in RGB and HSV color spaces [190]. GLCM and color moments are combined, computing features from images and channels respectively, to form a complete feature set [191].

Local binary pattern and color features in wavelet domain: RGB is transformed to CIE-XYZ color space, and LBP is computed after the contourlet transform, to extract features from WCE images [192]. Bleeding is identified in the testing phase using HSI and RGB color. The CIE-XYZ color space gives a better response than other color spaces. A new approach is introduced in which the DFT is first applied to the color channels and the GLCM is then calculated in the wavelet domain [193]. Intra-color histograms and color channels support feature extraction with the help of the opponent color-local binary pattern (OC-LBP). Using texture information from the images, DWT and LBP features are extracted from middle-level sub-band images. WCE images are captured in RGB and HSI formats, from which DWT-based LBP features are extracted for classification [194]. The YCbCr color space, which carries color information, is employed to extract DWT-based LBP features that carry texture information [195].

Statistical color texture using wavelet domain: In general, statistical measurements including skewness, mean, standard deviation, variance, and kurtosis are calculated on the sub-bands of images, while energy, inverse difference, entropy, moment, covariance, and contrast are calculated on the features of the sub-bands. DCT transforms are used with color curvelet covariance (3C): images are converted from RGB to HSV color space and the 3C of the images is used to compute second-order statistics. Leukocytes are categorized using a wavelet decomposition approach [196]. A feature set is formed by 3C for the detection of tumors in the small bowel [197]. Texture features are computed in HSI color space, and texture and color features are fused into what are recognized as statistical features [198]. Likewise, the integration of Hu moments and Fourier descriptors creates the final feature set. The DT-CWT is applied to ME frames, and texture features are computed from the six-level sub-band frames. Irregularities in the form of pit-pattern features are represented and computed by statistics or Weibull parameters [199].

Combination of geometric and texture features: Polyps are segmented by the watershed method [100]. A marker-based feature combination approach is proposed in which k-means clustering and Gabor texture are fused for polyp shape analysis. The term motility describes the muscle contractions that mix and propel contents. Moreover, for the classification of a specific area in WCE frames, contractions are detected using the Gabor filter for edge detection [200]. Crisp segments are shaped in colonoscopy frames with edge detection by LoG and the SUSAN edge detector [201].

Combination of texture features: The discrimination power increases when texture is combined with another type of feature. The sign pattern (SP) and the magnitude pattern (MP) map the texture, and a multiscale wavelet transform-based technique known as MRIR splits the texture as a step function [202]. The MP with wavelet sub-bands is fitted by the step function and calculated by the SDMVs with frequency vectors (FVs) of the SP [203]. LBP features are combined with Gaussian filters of multiple shapes and sizes, named Leung-Malik LBP (LM-LBP), which helps to detect several pathological conditions in endoscopy frames [204]. In general, texture features are a powerful tool for analyzing and classifying gastrointestinal frames. They capture information about the patterns and variations in intensity and contrast within an image, which can be indicative of underlying tissue structures and pathologies. Different texture features can be combined by simply concatenating their feature vectors; for example, Gabor and Haralick features extracted from an image are merged into a single feature vector. A summary of the feature extraction methods employed for the classification of GI tract diseases is shown in Table 3.

Table 3.

Summary of feature extraction methods and GI tract disease classification

| Refs | Years | Methods | Datasets | Modality | Results |
|---|---|---|---|---|---|
| [205] | 2023 | Feature Engineering methods are employed for GI tract disease detection and classification. | 8000 images | VE | 99.24% Acc |
| [206] | 2023 | Hybrid approach is used for GI tract disease classification. | 8000 images | VE | 97.25% Acc |
| [207] | 2022 | Deep features are extracted for GI tract disease identification. | 8000 images | VE | 97.00% Acc |
| [29] | 2021 | Transfer learning approaches are used with variants of SVM classifiers GI tract diseases are categorized. | 4000 images | VE | 95.02% Acc |
| [161] | 2020 | CS-LBP and auto color correlogram are employed for feature extraction and K-mean and SVM classify the frames of endoscopy. | 200 images | WCE | 95.00% Acc |
| [208] | 2020 | GLRLM-based features are used for colorectal polyp findings using SVM. | 86 videos | VE | 98.83% Acc |
| [209] | 2020 | Color and texture features are employed for Polyps identification and classification using an SVM classifier. | 300 Images | WCE | 86.00% Rec |
| [210] | 2019 | ASWSVD is used for feature extraction and multiple classifiers classify the diseases of GI tract | 5,293 and 8,740 images | WCE | 86.00% Pre |
| [211] | 2019 | An ulcer is classified by an SVM classifier using color and texture features. | 9000 images | WCE | 99.00% Acc |
| [212] | 2018 | Cancer is identified using GLCM and Gabor texture methods and disease classification is performed by multiple classifiers. | 176 Images | CH | 87.20% Acc |
| [106] | 2018 | Cancer identification and classification are performed by RF and KNN methods using Fourier, HIS, and Statistical techniques for feature extraction. | 280 images | VE | 86.00% Sen |
| [213] | 2017 | Polyps are detected using super pixel-based clustering and SVM classifiers. | 39 images | WCE | 94.00% Acc |

Feature learning methods

Feature extraction methods are canonically divided into two types: bag of features (BOF) and deep learning approaches. Feature representation methods are employed to build the dictionary of visual words, while neural networks are used for classification and deep CNNs are used for feature extraction from visual data in the form of images. Feature extraction methods including BOF and deep learning methods are given in Fig. 11. The details of each method are given in the following sections.

Fig. 11.

An overview of feature learning methods

Bag of features

The Bag of Features (BoF) model is an extension of the Bag of Words (BoW) model used in natural language processing; BoF is applied to image representation in computer vision rather than to text. In the BoF model, local image descriptors (e.g., SIFT) are clustered into a visual vocabulary, and images are represented as histograms of these visual words, so BoF generates structured numerical representations [214]. The BOF model, or bag of visual words, is widely used in various classification tasks, where an image feature representation is denoted as a visual word. The features in endoscopy frames are represented as visual words that are used to learn the dictionary or codebook. Feature selection methods, including filter (variance thresholding, TF-IDF), wrapper (e.g., SVM-RFE), and hybrid (PCA + wrapper) approaches, are employed to refine BoF features by removing irrelevant or redundant elements, improving model efficiency and accuracy. Combining BoF with feature selection enhances performance in tasks like image classification and object recognition by reducing dimensionality, minimizing noise, and optimizing discriminative power [215]. The histogram is used as the feature vector for every frame [216]. Distinct visual words are obtained from SIFT features and are referred to as the dictionary [217]. Characteristics are represented through vector quantization by computing the histogram of visual words. These features are used for the classification and clustering of diseases in the frames. SIFT is used to extract dense features for disease classification [218]. The k-means clustering algorithm produces the visual vocabulary and quantized feature set to recognize cancer tissues in endoscopic frames. BOF methods are employed with large-scale SIFT and dense detectors for pCLE imagery [219].
Visual word-based color histograms of endoscopic frames are tested in the LAB, CMYK, RGB, HSV, and YCbCr color spaces for bleeding detection [220]. Moreover, two-level saliency detects the bleeding area in the GI tract, and homomorphic filters are used to normalize the colors and to filter the illumination in the L component. Similarly, an adaptive color histogram produces a vocabulary in the form of visual words [221]. The deep feature methods are discussed in the following section.
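The BoF pipeline described in this section (cluster local descriptors into a codebook, then represent each frame as a histogram of visual words) can be sketched as follows. This is a minimal illustration under assumptions: the 2D toy descriptors stand in for real SIFT descriptors, the function names are hypothetical, and the k-means routine uses a simple deterministic initialization (the first k descriptors) rather than any scheme from the cited works.

```python
def nearest_word(descriptor, codebook):
    """Index of the codebook entry closest to the descriptor (squared L2)."""
    distances = [sum((a - b) ** 2 for a, b in zip(descriptor, word))
                 for word in codebook]
    return distances.index(min(distances))

def kmeans_codebook(descriptors, k, iters=10):
    """Learn k visual words with plain k-means (first k descriptors as seeds)."""
    codebook = [list(d) for d in descriptors[:k]]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for d in descriptors:
            groups[nearest_word(d, codebook)].append(d)
        for i, group in enumerate(groups):
            if group:  # keep the old word if no descriptor was assigned to it
                codebook[i] = [sum(col) / len(group) for col in zip(*group)]
    return codebook

def bof_histogram(descriptors, codebook):
    """Normalized histogram of visual-word occurrences for one frame."""
    hist = [0] * len(codebook)
    for d in descriptors:
        hist[nearest_word(d, codebook)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist

# Hypothetical training descriptors forming two clusters, and one new frame.
training = [(0, 0), (10, 10), (0, 1), (10, 9), (1, 0), (9, 10)]
codebook = kmeans_codebook(training, k=2)
frame_hist = bof_histogram([(0, 0), (1, 1), (9, 9)], codebook)
```

The resulting histogram has one entry per visual word and can be used directly as the per-frame feature vector for a classifier.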

Deep learning methods for feature extraction

The existing deep learning models and their architectures are discussed in this section. The models are employed for the detection and classification of GI tract diseases (ulcers, polyps, bleeding, stomach cancer, colorectal cancer, and colon disease). The literature reports that supervised CNN-based models use labels (class names) for classification. In unsupervised learning, the model creates clusters as it learns from the data itself. GANs and auto-encoders (AEs) are the unsupervised learning models known in the medical field for data generation, image encoding, and augmentation. Generally, unsupervised models obtain features without annotated image datasets, which are difficult to acquire.

Artificial neural networks: An artificial neural network (ANN) is an ML model in which neurons are connected in cascade and organized into input, hidden, and output layers. The hidden part, located between the input and output layers, can consist of more than one layer; adding more hidden layers leads to a deep neural network (DNN) [222]. Disease segmentation is performed using DNNs and CNNs for feature extraction [223]. When every neuron in one layer is connected to every neuron in the next, the result is a fully connected ANN, and the structure of the ANN is adapted to the problem at hand [224]. The general model of an ANN is shown in Fig. 12.

The ANN model is bio-inspired: it mathematically formalizes the behavior of biological neurons [226]. The multilayer perceptron (MLP) is used for image analysis and classification [227]. However, the MLP is considered hard to train and analyze efficiently because of its dense structure [228].
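To make the input, hidden, and output layer structure concrete, the following is a minimal sketch of a forward pass through a tiny fully connected network. The weights, layer sizes, and the choice of ReLU and sigmoid activations are hypothetical values picked for illustration; they are not taken from any of the cited models.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w . x + b) for each output neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical fixed weights: 3 inputs -> 2 hidden neurons -> 1 output neuron.
W_hidden = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
b_hidden = [0.0, 0.1]
W_out = [[1.0, -1.0]]
b_out = [0.0]

x = [1.0, 2.0, 3.0]                              # input layer
hidden = dense(x, W_hidden, b_hidden, relu)      # hidden layer
output = dense(hidden, W_out, b_out, sigmoid)    # output layer (a score in (0, 1))
print(hidden, output)
```

Every input feeds every hidden neuron, which is the dense connectivity that makes larger MLPs costly to train on full-resolution images.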

Convolutional neural networks: The deep CNN is the primary supervised model for feature extraction and classification in computer vision. Convolutional, pooling, and soft-max layers are the fundamental building blocks of a CNN and make up most of its layers. Additional convolutional layers capture more image detail [229]. Feature maps are down-sampled by average or max pooling after the convolutional layers. In addition, a dense CNN model with max-pooling layers is built for feature classification tasks [230]. Backpropagation and stochastic gradient descent are crucial for training the CNN model [231]. The model is trained on a labeled image dataset, predicts class labels, and its Acc is then computed [232]. The classification results are compiled using 5-fold cross-validation. At each convolutional layer l, the input is convolved with a set of n kernels and a bias is added to each result, so that each kernel produces a new feature map; a nonlinear transformation is then applied to these feature maps at each convolutional layer l (“*” denotes the convolution operation).
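The layer operation described above is commonly written as X_k = σ(W_k * X + b_k), where X is the layer input, W_k and b_k are the k-th kernel and bias, σ is the nonlinearity, and X_k is the resulting feature map. These symbols are assumptions introduced here for illustration, not the source's own notation. A minimal sketch of one kernel followed by ReLU and max pooling, using a toy frame and a hypothetical edge kernel, might look as follows.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """'Valid' 2-D cross-correlation (the usual CNN 'convolution') plus a bias."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            + bias
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise nonlinearity (sigma in the formula above)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[r * size + di][c * size + dj]
                for di in range(size)
                for dj in range(size)
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Toy 5x5 "frame" containing a vertical edge, and a hypothetical 2x2 edge kernel.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
kernel = [[-1, 1], [-1, 1]]

feature_map = relu(conv2d_valid(image, kernel, bias=0.0))  # 4x4 feature map
pooled = max_pool(feature_map)                             # 2x2 after down-sampling
print(feature_map)
print(pooled)
```

The feature map responds only where the edge lies, and pooling halves each spatial dimension while keeping that response. A real CNN learns many such kernels per layer by backpropagation instead of using a hand-set one.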

Transfer learning (TL) models, including LeNet, AlexNet, and VGG-16, extract features and classify diseases using endoscopy frames [233]. Benign and malignant diseases are segmented and classified using TL techniques for feature extraction followed by serial fusion [234]. Deep models such as ResNet, DenseNet, and GoogLeNet have more complex network structures that improve efficiency and the training procedure [235]. In TL, a new dataset is supplied to a modified pre-trained model, and features can be extracted from its layers [230].

Region-level CNNs: Selecting a particular region of an image with a bounding box for disease analysis is an active research area in the medical domain. The prominent deep models, each an incremental improvement on its predecessor, are region-CNN (R-CNN) [236], Fast R-CNN [237], and Faster R-CNN [238], which detect diseases by selecting a specific area of the image. The well-known selective search method of the R-CNN model performs region-level recognition by generating thousands of object proposals [239]. The Swin Transformer is employed for deep feature extraction [240]. In addition, the region is selected by a bounding box in classification problems, and regression refines the bounding box [241]. Generally, R-CNN-style models warp each proposal into a square and extract its features for disease identification [242]. Deep learning models perform well in clinical diagnosis, including cancer segmentation [243]. Fast R-CNN introduces a Region-of-Interest (RoI) pooling layer, which reshapes the proposals to a fixed size before they are passed on for classification [244]. The region proposal network (RPN) is adopted by Faster R-CNN, where features are extracted to classify each proposal as an object or non-object [244]. Like the RPN, the single-shot detector (SSD) predicts class probabilities and multiple bounding boxes simultaneously [245]. Similarly, YOLO and RetinaNet offer faster prediction speed [246]. The YOLO model detects nodules in the lung using CT scan images [247].
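The idea behind RoI pooling, reducing proposals of different sizes to one fixed-size output so that a single classifier can process them, can be sketched as below. This is a simplified illustration rather than the implementation of any cited detector: the function name, the box convention (top, left, bottom, right with exclusive bottom and right), and the integer bin edges are assumptions made here.

```python
def roi_max_pool(feature_map, box, out_size=2):
    """Max-pool the region `box` of a 2-D feature map to out_size x out_size.

    box = (top, left, bottom, right), with bottom/right exclusive. Bin edges use
    integer division, so bins may differ in size by one cell. The region must be
    at least out_size cells high and wide, otherwise a bin would be empty.
    """
    top, left, bottom, right = box
    height, width = bottom - top, right - left
    pooled = []
    for r in range(out_size):
        r0 = top + (r * height) // out_size
        r1 = top + ((r + 1) * height) // out_size
        row = []
        for c in range(out_size):
            c0 = left + (c * width) // out_size
            c1 = left + ((c + 1) * width) // out_size
            row.append(
                max(feature_map[i][j] for i in range(r0, r1) for j in range(c0, c1))
            )
        pooled.append(row)
    return pooled


# Toy 6x6 feature map whose value at (i, j) is i * 6 + j.
feature_map = [[i * 6 + j for j in range(6)] for i in range(6)]

small = roi_max_pool(feature_map, box=(0, 0, 4, 4))   # 4x4 proposal
large = roi_max_pool(feature_map, box=(1, 2, 6, 6))   # 5x4 proposal
print(small, large)
```

Both proposals come out as 2x2 grids even though their input sizes differ, which is what allows the subsequent classification and bounding-box regression layers to have a fixed input dimension.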

Deep learning models outperform handcrafted methods, and pixel-wise segmentation in particular has advanced considerably. Segmentation is performed pixel-wise with FCNs and U-Net, where features are extracted by down- and up-sampling and pixel-to-pixel spatial correspondence is achieved [248]. U-Net combines low-level and high-level features to obtain accurate predictions. Similarly, 3-D image analysis is performed by a U-Net variant based on a volumetric CNN [249]. The key element of pixel-wise segmentation is the up-sampling in U-Net, which increases the spatial dimension and bridges the gap between coarse and dense feature maps in disease prediction. In addition, instance detection and segmentation are performed by an advanced framework referred to as Mask R-CNN [250]. Mask R-CNN extends Faster R-CNN by predicting a segmentation mask in parallel, trained against ground-truth masks [251]. Unsupervised deep models include auto-encoders and GANs.

Auto-encoders (AE): Among deep models, the AE, an encoder–decoder architecture, is a typical unsupervised learning approach. The encoder block encodes the pixel intensities into low-dimensional attributes, whereas the decoder block reconstructs the intensities of the original frames from the learned low-dimensional features. The AE learns discriminative image features automatically by minimizing the difference between the input and its reconstruction [252]. Because the representational power of a single-layer AE is limited for complicated, highly nonlinear patterns, several AE blocks are composed into a stacked AE (SAE), which increases performance [253]. The different layers of an SAE represent the complex patterns inherent in the frames at multiple levels of information: lower layers represent simple patterns in the input, and higher layers represent more complex ones. Moreover, SAE-based models are generally used as unsupervised feature encoders in the medical domain [254]. For example, features learned by CNN and SAE models are used in disease classification methods [255]. For accurate organ segmentation, 2-D + time DCE-MRI is represented with spatial and sequential features [256]. Moreover, model performance reportedly improves by 50% when subsequent histogram matching is applied and tissue is classified with an SAE [257].
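The encode, decode, and minimize-reconstruction-error loop described above can be illustrated with the smallest possible case: a linear auto-encoder with tied weights that compresses 2-D inputs to a single code value. Everything in this sketch is an assumption made for illustration (toy data, tied weights, a hand-derived gradient, the learning rate); practical AEs and SAEs use nonlinear layers and a deep learning framework.

```python
def encode(w, x):
    """Encoder: project the input onto a single low-dimensional code value."""
    return sum(wi * xi for wi, xi in zip(w, x))


def decode(w, code):
    """Decoder with tied weights: map the code back to input space."""
    return [wi * code for wi in w]


def reconstruction_loss(w, data):
    """Mean squared reconstruction error over the data set."""
    total = 0.0
    for x in data:
        x_hat = decode(w, encode(w, x))
        total += sum((xi - hi) ** 2 for xi, hi in zip(x, x_hat))
    return total / len(data)


def train(w, data, lr=0.01, steps=500):
    """Gradient descent on the reconstruction loss (gradient derived by hand)."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x in data:
            code = encode(w, x)
            residual = [xi - wi * code for xi, wi in zip(x, w)]
            w_dot_r = sum(wi * ri for wi, ri in zip(w, residual))
            for i in range(len(w)):
                # d/dw_i of ||x - w (w . x)||^2
                grad[i] += -2.0 * (code * residual[i] + w_dot_r * x[i])
        w = [wi - lr * gi / len(data) for wi, gi in zip(w, grad)]
    return w


# Toy 2-D inputs that all lie on one line, so a 1-D code is sufficient.
data = [(1.0, 1.0), (2.0, 2.0), (-1.0, -1.0), (0.5, 0.5)]
w_init = [1.0, 0.0]

w_trained = train(w_init, data)
initial_loss = reconstruction_loss(w_init, data)
final_loss = reconstruction_loss(w_trained, data)
print(initial_loss, final_loss, w_trained)
```

After training, the single code value is enough to reconstruct each input almost exactly, which is the sense in which the encoder has learned a compact feature. Stacking several such encoder blocks, each trained on the codes of the previous one, gives the SAE structure mentioned above.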

Generative adversarial network: In unsupervised learning, the GAN is considered a promising model for feature extraction and classification problems [258]. GAN models are important for image analysis and are composed of two neural networks, a generator and a discriminator. The generator learns to produce images, while the discriminator distinguishes simulated frames from real ones. GANs exist in several variants that perform well, including deep convolutional GANs [259]. The StarGAN model is trained simultaneously on various domains [260]. Cycle- and shape-consistency constraints are introduced into the GAN model for disease segmentation [261]. Moreover, the GAN model uses contextual information to synthesize CT images from MRI for radiation planning [262]. A GAN model is used for the analysis of intestinal mucositis detected in cancer patients [263]. TL refers to the strategy in which knowledge acquired in one task is used to solve several related problems simply by changing the input and output layers [264]. TL is a way to deal with limited data. Deep networks demand large datasets for training, but annotated medical image datasets are much smaller than natural image datasets, which makes training a deep model from scratch impractical; instead, knowledge gained from natural images is transferred to solve medical imaging problems. In this way, different diseases are classified automatically; for example, prostate cancer is staged with multiparametric MRI and TL models [265]. A GAN detects colorectal tumors automatically and further identifies tumor buds in the GI tract [266]. A comprehensive study describes methods for diagnosing colorectal cancer using deep learning approaches, including GANs for feature learning [267]. 
The GAN model is composed of a data generator, a discriminator (which categorizes information as real or fake), and a selector switch that toggles between generated data and real data. The architecture of the GAN model is illustrated in Fig. 13.
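The interplay between generator and discriminator is usually expressed through two loss functions. The sketch below uses the binary cross-entropy discriminator loss and the non-saturating generator loss from the standard GAN formulation; whether the cited works use exactly these losses is an assumption, and the discriminator scores are hypothetical numbers rather than outputs of a trained network.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: real frames should score near 1, generated ones near 0.

    Scores are discriminator outputs D(x) and must lie strictly inside (0, 1).
    """
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs for a small batch of real and generated frames.
confident = discriminator_loss(real_scores=[0.9, 0.8], fake_scores=[0.1, 0.2])
undecided = discriminator_loss(real_scores=[0.5, 0.5], fake_scores=[0.5, 0.5])
print(confident, undecided, generator_loss([0.1, 0.2]))
```

Feeding the discriminator alternately with real and generated batches plays the role of the selector switch in the description above. Training alternates between lowering the discriminator loss and lowering the generator loss, and a discriminator that outputs 0.5 everywhere can no longer tell the two sources apart.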

Fig. 12.

Architecture of artificial neural network [225]

Fig. 13.

Illustration of the structure of the GAN model

The frames are given to TL models as input to obtain features, and the tumor is subsequently classified into different levels [268]. Feature learning approaches mostly target disease detection and classification, where the deep learning models employed demand more images for better feature learning [269]. In short, the above discussion shows that feature extraction approaches (handcrafted and deep learning) obtain valuable information from endoscopy frames and help to classify diseases of the GI tract. Feature selection is a crucial technique that minimizes feature redundancy and reduces the computational cost of the classifiers [270–272]. In summary, handcrafted features are designed by human experts to capture specific characteristics of an image, while deep features are learned automatically by neural networks [98, 205, 273–277]. Handcrafted features include edge detectors, texture descriptors, and shape features, while deep features are typically learned by convolutional neural networks (CNNs) trained on large datasets. Handcrafted features are often simpler and faster to compute than deep features, but may not perform as well on complex tasks such as object recognition. Deep features can capture more abstract and complex characteristics of an image and can adapt to different datasets and tasks. Deep features require large amounts of data and computational resources for training, while handcrafted features can be designed with much smaller datasets and fewer computational resources. Handcrafted features are often used with traditional machine learning algorithms, while deep features are typically used with deep learning models. Handcrafted features are interpretable, meaning that they can be easily understood and visualized. 
On the other hand, deep features are often more opaque and difficult to interpret [278–280]. Deep features have been shown to outperform handcrafted features on many image-processing tasks such as segmentation and classification. Handcrafted features are nevertheless still widely used in applications where computational resources are limited or where interpretability is important. The choice between handcrafted and deep features depends on the specific task, the available data, and the computational resources; both types have strengths and weaknesses. The important feature selection methods are described in the following section. Feature learning methods for computer-aided diagnosis of abnormalities in the GI tract are presented in Table 4.

Table 4.

Summary of feature-learning methods for GI tract disease classification

| Refs | Years | Methods | Datasets | Modality | Results |
|---|---|---|---|---|---|
| [281] | 2020 | LSST feature-based multiple diseases of the GI tract are classified by SVM, RBF Kernel. | 50 videos | WCE | 92.23% Acc |
| [282] | 2020 | The colorectal disease is classified by ANN using CNN-based features. | 4000 frames | VE | 93.00% F1 |
| [283] | 2020 | Multiple diseases of GI tract are classified by ANN using CNN features. | 130,00 frames | VE | 92.00% Acc |
| [284] | 2018 | FCN extracts feature for ANN-based classification of various GI tract illnesses | 20,000 frames | VE | 78.70% Acc |
| [285] | 2018 | ANN-based classification is performed using WCNN deep features. | 10,000 frames | VE, WCE | 96% Acc |
| [286] | 2018 | Bleeding is classified by SVM using YIQ features method. | 100 frames | WCE | 98% Acc |

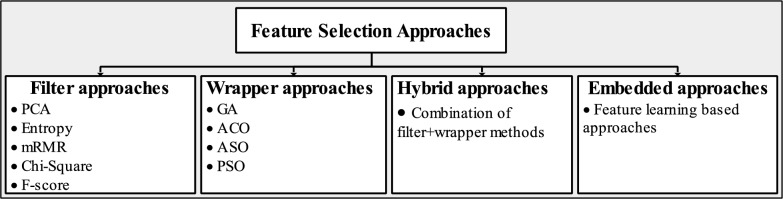

Feature selection methods

Feature selection methods are essential for reducing the dimensionality of large datasets and enabling the model to achieve high accuracy. They are divided into supervised and unsupervised categories and include filter, wrapper, hybrid, and embedded approaches. Figure 14 depicts a summary of feature selection techniques.

Fig. 14.

An overview of feature selection methods

Filter methods

Filter methods use statistical measures to score the correlation between input variables. The most relevant features are filtered by applying supervised feature selection techniques. Filter approaches choose an ideal subset from the given feature set without relying on a learning method. Ordinarily, certain evaluation criteria are adopted to calculate a score for each feature, and the features with the best scores are then picked from the given feature vectors. The evaluation criteria may be multivariate or univariate measures. Univariate measures assess each feature independently, whereas multivariate measures consider more than two-way interactions within the feature set. Consequently, multivariate measures can recognize redundant features and are therefore treated as more general. The three methods PCA, entropy, and mRMR are described below.
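Before turning to those three methods, the univariate scoring idea can be illustrated with a minimal sketch: each feature column is scored independently, here by its absolute Pearson correlation with the class label, and the top-scoring features are kept. The scoring criterion, function names, and toy data are assumptions chosen for illustration; this is not the PCA, entropy, or mRMR procedure.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 for a constant column."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def filter_select(rows, labels, k):
    """Score each feature column independently and keep the k best indices."""
    columns = list(zip(*rows))
    scores = [abs(pearson(col, labels)) for col in columns]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k]), scores


# Toy data: 3 features per frame. Feature 0 tracks the label, feature 1 is
# weakly related, and feature 2 is constant (carries no information).
rows = [
    [0.1, 5.0, 1.0],
    [0.2, 3.0, 1.0],
    [0.9, 4.0, 1.0],
    [0.8, 4.5, 1.0],
]
labels = [0, 0, 1, 1]

selected, scores = filter_select(rows, labels, k=1)
print(selected, scores)
```

Because each feature is scored on its own, this kind of filter is fast and classifier-independent, but it cannot detect that two highly scored features are redundant with each other; that is the gap multivariate criteria address.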