Abstract

Purpose

Online consumer health forums serve as a way for the public to connect with medical professionals. While these medical forums offer a valuable service, online Question Answering (QA) forums can struggle to deliver timely answers due to the limited number of available healthcare professionals. One way to solve this problem is by developing an automatic QA system that can provide patients with quicker answers. One key component of such a system could be a module for classifying the semantic type of a question. This would allow the system to understand the patient’s intent and route them towards the relevant information.

Methods

This paper proposes a novel two-step approach to address the challenge of semantic type classification in Indonesian consumer health questions. We acknowledge the scarcity of Indonesian health domain data, a hurdle for machine learning models. To address this gap, we first introduce a novel corpus of annotated Indonesian consumer health questions. Second, we utilize this newly created corpus to build and evaluate a data-driven predictive model for classifying question semantic types. To enhance the trustworthiness and interpretability of the model’s predictions, we employ an explainable model framework, LIME. This framework facilitates a deeper understanding of the role played by word-based features in the model’s decision-making process. Additionally, it empowers us to conduct a comprehensive bias analysis, allowing for the detection of “semantic bias”, where words with no inherent association with a specific semantic type disproportionately influence the model’s predictions.

Results

The annotation process revealed moderate agreement between expert annotators. In addition, not all words with high LIME probability could be considered true characteristics of a question type. This suggests a potential bias in the data used and the machine learning models themselves. Notably, XGBoost, Naïve Bayes, and MLP models exhibited a tendency to predict questions containing the words “kanker” (cancer) and “depresi” (depression) as belonging to the DIAGNOSIS category. In terms of prediction performance, Perceptron and XGBoost emerged as the top-performing models, achieving the highest weighted average F1 scores across all input scenarios and weighting factors. Naïve Bayes performed best after balancing the data with Borderline SMOTE, indicating its promise for handling imbalanced datasets.

Conclusion

We constructed a corpus of query semantics in the domain of Indonesian consumer health, containing 964 questions annotated with their corresponding semantic types. This corpus served as the foundation for building a predictive model. We further investigated the impact of disease-biased words on model performance. These words exhibited high LIME scores, yet lacked association with a specific semantic type. We trained models using datasets with and without these biased words and found no significant difference in model performance between the two scenarios, suggesting that the models might possess an ability to mitigate the influence of such bias during the learning process.

Keywords: Text mining, Consumer health question-answering system, Semantic annotation scheme, Semantic type classification, Consumer health questions

Introduction

Internet-based consumer health forums represent a growing avenue for public healthcare inquiries. However, their effectiveness is often hampered by limited physician availability, leading to delayed responses. To address this critical bottleneck, several authors proposed the integration of intelligent question-answering (QA) systems [1–6]. While such technology has demonstrably enhanced user experience in chatbot-based applications, its implementation in the consumer health domain remains fraught with challenges. The complexity of healthcare information, question understanding, and the relative scarcity of labeled data pose significant hurdles to the development of robust and reliable automated medical QA systems [7].

Within automated consumer health question-answering systems, a key component lies in the question semantic type classification, analyzing user questions and extracting their underlying semantic content [1, 8–10]. This extracted information serves a dual purpose. First, it aids the system in comprehending the true intent of the user’s question, facilitating the development of appropriate response strategies [1, 10–13]. Second, it allows the system to discern whether the query falls within its competency or necessitates human intervention from a qualified physician [13]. Ultimately, semantic type classification empowers the system to effectively navigate medical information sources – such as encyclopedias and research websites – to select the most pertinent and reliable answers for user consumption [1].

Roberts et al. [14] harvested a collection of 2,937 consumer questions, where each question was annotated with a semantic category, such as “management” or “susceptibility”. While Roberts et al. [12] provided valuable insights into semantic type classification for consumer health QA systems, their work primarily focused on an English dataset comprising 13 semantic types. This poses two issues when applying their findings to the Indonesian context. First, there is a scarcity of annotated Indonesian consumer health question datasets for training machine learning models. Second, the suitability of the 13 chosen semantic types for capturing the nuances of Indonesian medical language remains untested. Adding to the challenges, Roberts et al. [12] noted that the distribution of the 13 semantic labels was highly imbalanced, posing further difficulties in adapting their findings to the Indonesian context. Hakim et al. [15] constructed a corpus of 214 consumer health questions sourced from Indonesian websites, categorized into six semantic groups. While this corpus offers valuable insights into public health information-seeking behavior, its annotation solely by the authors (non-medical professionals) raises concerns regarding the accuracy and reliability of the assigned semantic labels. Using the previously constructed dataset [12], Roberts et al. [16] presented a classification model to identify question types using a multi-class Support Vector Machine (SVM) [17] with an automatic feature selection technique [18]. In another work, Roberts et al. [14] used SVM to classify medical sentences into background, ignore, or question. Sarrouti and Ouatik El Alaoui [19] also used SVM with handcrafted lexico-syntactic features to automatically classify biomedical questions into one of four categories: (1) yes/no, (2) factoid, (3) list, and (4) summary. Kilicoglu et al. [20] proposed a more fine-grained task: identifying phrase-level semantic entities in health questions using Conditional Random Fields [21]. However, the impact of word-level features, such as bias words, on model performance remains largely unexplored. We posit that investigating word-level bias enhances the explainability of the automatic semantic type classification task.

This paper presents a two-pronged approach to tackle semantic type classification in Indonesian consumer health questions. First, we introduce a novel corpus of annotated Indonesian consumer health questions categorized by semantic types, aimed at addressing the scarcity of Indonesian health domain data for machine learning models. Building upon the initial efforts of Hakim et al. [15], who compiled a corpus of consumer health questions from Indonesian websites, we present a refined iteration annotated with a more granular category set. Using the detailed schema proposed by Roberts et al. [14], we then hired medical professionals and students to assign semantic labels to each question within our dataset. Second, we leverage this corpus to build and evaluate a predictive model for classifying question types. To enhance trust in the model’s predictions, we employ an explainable model framework that helps us understand the effect of word-based features. This also allows for bias analysis, enabling us to detect and assess the influence of “semantic bias”, that is, words not inherently associated with any specific semantic type. Furthermore, we conducted two sets of experiments: (1) to address the imbalanced label distribution within the corpus using oversampling techniques; and (2) to analyze the impact of bias words on the model’s performance. These experiments ultimately contribute to determining the model’s suitability and trustworthiness for semantic type classification in Indonesian consumer health inquiries.

Background

Equipping clinicians with the best available patient-centered medical evidence is crucial in evidence-based medicine, as it empowers them to make informed decisions when managing patient care [22]. Clinicians usually make use of medical text retrieval systems, such as PubMed, to retrieve relevant information from a collection of millions of life sciences articles, most of which come from MEDLINE. However, medical search engines, such as PubMed, are not designed to generate a short answer to a user’s question. Instead, they provide a ranked list of snippets and links to a set of biomedical journals. This makes it challenging for users to quickly find the answer to their question, since they need to skim several top-ranked articles. On the other hand, QA systems can immediately provide a short and precise summary as a response to a natural language question [1–5], allowing users to effectively pinpoint the needed information from a larger set of documents.

One approach to modeling and representing medical questions involves classifying them into different semantic categories [11, 13, 23, 24]. Ely et al. [13] categorized medical questions into two broad taxonomies: topics and generic questions. The topic taxonomy consists of approximately 63 categories, such as “endocrinology” and “gynaecology”, taken from medical specialties and designed based on a family practice article filing system [25]. Templates for generic questions were also developed using an iterative annotation process. Some of the templates are: “what is the cause of symptom X?” and “what is the drug of choice for condition X?”. In a subsequent work, Ely et al. [24] categorized generic questions into four levels of specificity. The first level includes five classes: “diagnosis”, “treatment”, “management”, “epidemiology”, and “non-clinical” questions. Patrick and Li [11] collected 550 questions from ICU visits and designed a hierarchical set of question types based on answering strategy. Those types include “comparison”, “reason”, and “external knowledge”. For example, the question “is he neurological better?” belongs to the “comparison” class and “why was the dialysis stopped?” is coded as “reason”. Athenikos et al. [23] argued that the first-level classes proposed by Ely et al. [24] are not sufficient to identify semantic relations among questions. They then devised a four-level categorization whose first-level classes are semantic relations, including “cause-effect”, “method”, “indication”, “efficacy”, “quantity”, “discrimination”, and “significance”.

Medical QA has largely targeted professional clinicians seeking answers from biomedical literature [1, 4, 5, 8, 26–28]. However, medical questions can also be posed by consumers, particularly on consumer health forums, such as MedlinePlus, Genetics Home Reference, or the Indonesian Alodokter. Furthermore, several consumer health QA systems are also commercially available, such as Cleveland Clinic Health Q&A, Florence, and MedWhat. While clinicians may delve deeper into specific mechanisms or clinical guidelines, consumers focus on general information, causes, and prognosis [29, 30]. Zhang [31] and Roberts et al. [14] further noted that consumer health questions often contain abbreviations and misspellings, and tend to bundle multiple questions into a single post. To address misspellings in consumer health questions, Kilicoglu et al. [32] developed a dataset for spelling correction, containing 472 questions collected from the U.S. National Library of Medicine (NLM). Roberts and Demner-Fushman [33] found that questions posed by professionals tend to focus on treatments and tests, while consumer questions discuss symptoms and diseases. In addition, professional questions tend to be shorter than consumer questions [33]. Medical professionals provide little background information in their questions, while consumers usually support their questions with more background stories taken from online resources.

There have been several works dealing with consumer health QA systems focusing on question understanding [10, 12, 29–31, 33–36]. Roberts and Demner-Fushman [33] investigated the main characteristics of consumer questions using Natural Language Processing tools on lexical, syntactic, and semantic levels. In early work, Roberts et al. [14] proposed 13 semantic categories for consumer health questions. Based on a corpus collected from the Genetic and Rare Diseases Information Center (GARD), the five most common types are: “Management” (prevention and treatment), “Information” (general information), “Susceptibility” (how a disease is acquired or transmitted), “OtherEffect” (the effect of a disease without signs and complications), and “Prognosis” (expected success of a treatment). The level of agreement between the annotators who classified the questions by type was substantial according to Cohen’s Kappa, implying a shared understanding of the question types. With the same GARD dataset, Roberts et al. [14] also decomposed complex consumer questions into fine-grained individual sub-questions, each labeled with three general semantic elements: “focus” (the main theme), “background” (contextual information), and “question” (the main question). In contrast to the question-level classification scheme proposed by Roberts et al. [14], Kilicoglu et al. [20] proposed a named entity annotation scheme for consumer questions, where the unit of annotation is at the token or phrase level. The three most common entity types are: “problem” (e.g. HIV and cholesterol), “anatomy” (e.g. head, arm, and stomach), and “person” (e.g. daughter and female).

To develop effective tools for extracting relevant semantic content from medical questions, it is critical to have question corpora annotated with semantic categories or with formal representations. Several works have developed question corpora collected from consumer health websites or forums [10, 12, 20, 33, 37], while other authors have collected questions posed by professionals via various sources, including NLM and PubMed [13, 33, 38, 39]. Roberts et al. [14] collected 2,937 consumer questions harvested from GARD and annotated with 13 semantic categories. Kilicoglu et al. [20] annotated 1,548 medical questions, identifying a total of 15,052 medical entities across these questions. Kilicoglu et al. [10] constructed a corpus that is divided into two parts: (1) a collection of 1,740 questions coming from emails sent to the NLM customer service between 2013 and 2015; and (2) another collection of 874 questions originating from MedlinePlus query logs. Those questions were annotated with a number of semantic labels: named entities, question triggers/types, question topics, and frames.

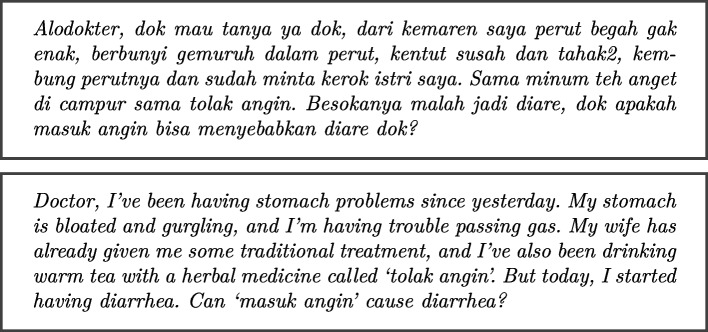

We argue that extracting semantic information from a question on a consumer health website requires a method that is specific to a particular domain and language. Guo et al. [40] developed a corpus of Chinese consumer health questions with a two-layered annotation scheme. This corpus also covers several Chinese-specific topics, such as Chinese traditional medicine. Indonesian consumer questions likewise exhibit language-specific characteristics. Figure 1 shows a consumer health question taken from an Indonesian consumer health website, Alodokter. The question contains culturally specific terms [41, 42]. For example, “masuk angin” is a language-specific idiom for a common Indonesian illness thought to be caused by exposure to cold wind or drafts. Several Indonesian terms are ill-formed [43]: “kemaren” (yesterday), “gak” (not), and “anget” (warm) are non-standard forms, and “besokanya” (tomorrow) is a misspelling. Furthermore, some questions contain code-mixed writing in which users mix English with Indonesian [44].

Fig. 1.

Example of a consumer health question taken from the “Alodokter” website. English translation is provided to assist non-native Indonesian speakers in understanding the content

Therefore, understanding Indonesian consumer health questions requires, at a minimum, a dedicated corpus. To the best of our knowledge, the only semantically annotated Indonesian question dataset is the collection constructed by Hakim et al. [15]. This dataset contains 86,731 questions harvested from five Indonesian consumer health websites, of which a subset of 214 questions has been annotated with six semantic categories: “diagnosis”, “symptom”, “treatment”, “effect”, “information”, and “other”. Our work builds upon the dataset of Hakim et al. [15] by expanding it with additional questions annotated by several medical students. Furthermore, we refine the annotation scheme by introducing more semantic categories, providing a richer resource for the development and evaluation of Indonesian-language consumer health QA systems.

Semantic category taxonomy

This work adopts the semantic type scheme proposed by Roberts et al. [14]. However, to ensure its suitability for the Indonesian dataset, we conducted expert validation through interviews with three doctors. The interviews, lasting 30–45 minutes each, were held via video conferencing. Prior to each interview, informed consent was obtained from the participant to guarantee data confidentiality and credibility. Subsequently, the doctors were presented with the annotation guidelines and asked a series of questions, including one on the scheme’s validity for the Indonesian context. The following is a list of questions presented to the doctors during the interviews:

Does the field of medicine have established taxonomies for categorizing patient questions? If so, could you elaborate on their structure?

In your expert opinion, can the semantic type scheme proposed by Roberts et al. [14] be effectively adapted for the Indonesian dataset?

From your experience, are there any semantic types within the scheme whose definitions you find ambiguous?

Based on your interactions with patients, are there any question types categorized within the scheme that you believe patients rarely ask?

Who do you consider to be suitable for accurately categorizing patient questions? Can final-year medical students be considered experts in this context?

The expert panel comprised three doctors with diverse backgrounds: (1) The first doctor is a general practitioner working at a government research and technology institution. They also have experience as a freelance data analyst and as an interactive medical consultant for a healthcare startup, giving them a unique perspective on patient question documentation in medical QA systems. (2) The second doctor is an internal medicine specialist with 20 years of experience encompassing both clinical practice and the academic journey required to become a doctor and specialist. (3) The third doctor is a dermatologist with 9 years of experience following graduation from medical school in 2014. They have further enriched their experience by providing consultations via two healthcare startup applications. The following list summarizes the key takeaways from the interviews with the three doctors:

The findings suggest that semantic types from the Roberts et al. [14] study can be adapted for use with Indonesian medical data;

Two out of the three doctors interviewed expressed agreement on potentially merging the “Complication” and “OtherEffect” semantic types due to their perceived similarity in definition. However, a dissenting opinion emerged from the third doctor, who argued that a distinction exists between these types. According to this doctor, “Complication” refers specifically to the impact of the disease on the patient’s health, whereas “OtherEffect” encompasses broader social and economic consequences. Further investigation is warranted to reconcile these viewpoints;

The interviews revealed that medical students in the pre-clinical stage might possess sufficient medical knowledge to participate in the annotation process. This finding suggests potential for involving such students in data annotation.

We further conducted a validation process to assess the appropriateness of sample questions in Indonesian for the annotation guideline. These sample questions were translations of those used in the study by Roberts et al. [14], with modifications to align with commonly asked questions in Indonesian healthcare settings. Following this doctor-led validation process, a total of twelve semantic types were identified:

Anatomy (ANT). Anatomy-related questions focus on specific body structures potentially impacted by a particular disease. These questions typically seek answers within the anatomical or physiological sections of reference materials. Example: “Apakah saya bisa hidup dengan satu ginjal?” (Can I live with one kidney?)

Cause (CAU). Cause-related questions explore the factors directly or indirectly responsible for initiating a disease, including those that increase susceptibility. These questions often target the etiology or causal factors of a patient’s condition. Example: “Mengapa kaki bengkak saat hamil?” (Why do feet become swollen during pregnancy?)

Complication (CMP). Complication-related questions address potential secondary issues arising from a specific disease. These questions often focus on patient-specific risks associated with the disease progression. Example: “Apakah pemakaian kacamata yang tidak teratur menyebabkan silinder saya bertambah dok?” (Does irregular use of glasses cause my astigmatism to worsen, doctor?)

Diagnosis (DGN). Diagnosis-related questions center on the methods and tools employed for identifying a specific disease. These questions may explore the diagnostic process itself, relevant diagnostic tests, or specific inquiries related to diagnosis confirmation. Example: “Apakah down syndrome dapat dideteksi sebelum anak dilahirkan?” (Is it possible to detect Down syndrome before a child is born?)

Information (INF). Information-related questions focus on broad knowledge about a particular disease. These questions may seek definitions, disease descriptions, or an overall understanding of the condition. Example: “Bagaimana cara membedakan darah haid dan flek tanda kehamilan?” (How to distinguish menstrual blood and pregnancy spotting?)

Management (MNA). Management-related questions explore available interventions for a specific disease. These inquiries may focus on treatment options, medications, disease controls, or preventative measures. Example: “Apakah ada terapi jenis baru untuk menangani penyakit diabetes?” (Are there any new types of therapy for diabetes?)

Manifestation (MNF). Manifestation-related questions delve into the signs and symptoms associated with a specific disease. These questions explore how a disease presents itself in the body. Example: “Seperti apa ciri-ciri kehamilan yang sehat itu ya dok?” (What are the characteristics of a healthy pregnancy, doctor?)

PersonORG (PRS). Questions labeled as “PersonORG” focus on identifying individuals associated with a specific disease. These may include requests for information on specialists, research institutions, or healthcare facilities. Example: “Saya membutuhkan informasi mengenai dokter spesialis jantung di daerah Jakarta Barat.” (I need information about heart specialist doctors in West Jakarta.)

Prognosis (PRG). Prognosis-related questions explore the expected course of a disease, including potential outcomes such as life expectancy, quality of life, or treatment success rates. Example: “Apakah pengobatan hipnoterapi dapat menghilangkan phobia saya?” (Can hypnotherapy cure my phobia?)

Susceptibility (SSC). Susceptibility-related questions explore factors that influence an individual’s risk of developing a particular disease. These inquiries may delve into genetic predispositions or transmission patterns, including both contagious and non-contagious diseases. Example: “Apakah wasir ini berbahaya bagi bayi saya jika tidak diobati semasa hamil?” (Could this hemorrhoid pose a risk to my baby if left untreated during pregnancy?)

Other (OTH). Questions labeled as “Other” encompass a range of topics related to a specific disease that fall outside the realm of medical diagnosis, treatment, or prognosis. These may include questions regarding financial aspects, historical background, or social implications of the disease. Answers to such inquiries are often found in non-medical resources such as encyclopedias or social science studies. Example: “Berapa biaya operasi usus buntu dok?” (How much does an appendix surgery cost, doctor?)

NotDisease (NDD). Questions labeled as “NotDisease” encompass questions that fall outside the system’s capabilities or intended use. These may include queries related to religious faiths and practices, personal experiences, or topics beyond the domain of medical information. Example: “Dok, saya sudah haid selama 14 hari, apakah saya bisa solat?” (Doctor, I’ve been on my period for 14 days, am I allowed to pray now?)
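For downstream processing, the twelve semantic types can be kept in a single lookup table. The following is a minimal sketch; the names `SEMANTIC_TYPES` and `validate_label` are hypothetical and are introduced here only for illustration.

```python
# Label codes and names as listed in the taxonomy above.
SEMANTIC_TYPES = {
    "ANT": "Anatomy",
    "CAU": "Cause",
    "CMP": "Complication",
    "DGN": "Diagnosis",
    "INF": "Information",
    "MNA": "Management",
    "MNF": "Manifestation",
    "PRS": "PersonORG",
    "PRG": "Prognosis",
    "SSC": "Susceptibility",
    "OTH": "Other",
    "NDD": "NotDisease",
}


def validate_label(code):
    """Return the normalized label code, or raise if it is not one of the twelve types."""
    normalized = code.strip().upper()
    if normalized not in SEMANTIC_TYPES:
        raise ValueError(f"unknown semantic type: {code!r}")
    return normalized
```

Validating annotator input against such a table is one simple way to catch typos in label codes before agreement is computed.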

Data annotation

The dataset used in this work is secondary data collected from the research conducted by Ekakristi et al. [45] and Nurhayati [46]. These data were obtained by crawling medical consultation forum sites, namely Alodokter, Dokter Sehat, detikHealth, KlikDokter, and Tanyadok, from 9 February 2016 to 18 March 2016. The two sources differ in that the data from Ekakristi et al. [45] had already been decomposed into three parts: Background, Ignore, and Question. However, several segments labeled as Question contain more than one inquiry, necessitating further decomposition. The data for this research was derived from 489 documents: 191 from Ekakristi et al. [45] and 298 from Nurhayati [46]. This process identified a total of 965 individual questions.

Following data collection, we prepared an annotation guideline to ensure consistency among annotators during the labeling process. Before starting the annotation process, we held a meeting to assess the annotators’ understanding of the task. This meeting provided an opportunity for annotators to ask questions and discuss the definitions and examples of the semantic types. The annotation process was conducted over a 10-hour period, spread across three weeks. We recruited four pre-clinical medical faculty students as annotators and assigned each data instance to two annotators for cross-labeling.

To evaluate the annotation quality, we measure inter-annotator agreement using Cohen’s Kappa coefficient [47]. The Cohen’s Kappa score is expressed by the following equation:

$$\kappa = \frac{P(a) - P(e)}{1 - P(e)} \tag{1}$$

where $a$ represents actual agreement; $e$ represents expected agreement; $P(a)$ denotes the probability of actual agreement; and $P(e)$ denotes the probability of expected agreement.
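As an illustration of how the Kappa score can be computed for two annotators, the sketch below estimates the probability of actual agreement from matching labels and the probability of expected agreement from each annotator's label distribution. The function name `cohen_kappa` and the toy labels are hypothetical and are not taken from the corpus.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's Kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # p_a: observed proportion of items on which the annotators agree
    p_a = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: agreement expected by chance, from each annotator's label frequencies
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (p_a - p_e) / (1 - p_e)


# Toy labels (hypothetical, for illustration only)
annotator_1 = ["DGN", "MNA", "MNA", "CAU", "INF", "DGN"]
annotator_2 = ["DGN", "MNA", "INF", "CAU", "INF", "CAU"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 4))
```

In this toy case the annotators agree on 4 of 6 items, chance agreement is 8/36, and the resulting Kappa is 4/7.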

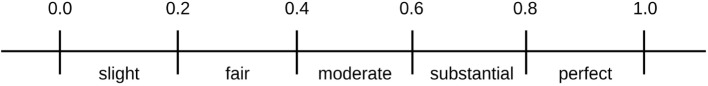

Our evaluation using Cohen’s Kappa coefficient revealed a moderate level of agreement between the annotators, with a Kappa score of 0.48. We follow Landis and Koch [48] (as illustrated in Fig. 2) to interpret Cohen’s Kappa in this study. At the label level, the Kappa scores vary considerably, indicating that some semantic categories are more ambiguous than others. Table 1 presents the per-label Kappa scores.

Fig. 2.

Interpretation of Cohen’s Kappa values based on Landis and Koch [48]. Note that negative values represent “no-agreement”

Table 1.

Cohen’s Kappa scores for 12 semantic labels

| Label | Kappa | Label | Kappa | Label | Kappa |
|---|---|---|---|---|---|
| INF | 0.43 | CAU | 0.61 | MNA | 0.87 |
| DGN | 0.69 | PRS | 0.97 | MNF | 0.23 |
| CMP | 0.55 | PRG | 0.67 | SSC | 0.47 |
| NDD | 0.18 | ANT | −0.01 | OTH | 0.71 |
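To make the per-label scores easier to read, the sketch below maps each Kappa value in Table 1 to an agreement band. It assumes the commonly cited Landis and Koch thresholds (slight up to 0.20, fair up to 0.40, moderate up to 0.60, substantial up to 0.80, almost perfect up to 1.00), with negative values treated as no agreement; the helper name `interpret_kappa` is hypothetical, and the handling of exact boundary values is an assumption.

```python
def interpret_kappa(kappa):
    """Map a Kappa value to an agreement band (assumed Landis and Koch thresholds)."""
    if kappa < 0:
        return "no agreement"
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, name in bands:
        if kappa <= upper:
            return name
    raise ValueError("kappa cannot exceed 1")


# Per-label scores copied from Table 1
table_1 = {
    "INF": 0.43, "CAU": 0.61, "MNA": 0.87, "DGN": 0.69, "PRS": 0.97, "MNF": 0.23,
    "CMP": 0.55, "PRG": 0.67, "SSC": 0.47, "NDD": 0.18, "ANT": -0.01, "OTH": 0.71,
}
for label, kappa in sorted(table_1.items(), key=lambda item: item[1]):
    print(f"{label}: {kappa:+.2f} -> {interpret_kappa(kappa)}")
```

Under these assumed thresholds, “Anatomy” falls in the no-agreement band and “NotDisease” in the slight band, while “PersonORG” and “Management” reach almost perfect agreement.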

Subsequently, disagreements were resolved through an adjudication process. We asked the annotators to discuss why they had assigned different labels to a given instance. For example, the “Diagnosis” and “Cause” categories were interpreted differently. One annotator thought that questions related to a patient’s condition should be categorized as “Diagnosis”. In contrast, another annotator interpreted “Diagnosis” more strictly, limiting it to questions explicitly referencing a specific disease. Under this stricter reading, questions like “Why am I feeling this way, doctor?” belonged to the “Cause” category because the answer might not necessarily be a diagnosis. Another annotator considered the ideal “Diagnosis” question to be a confirmation query, like “Do I have disease X?”. This distinction contrasted with the first annotator’s broader interpretation, which encompassed both confirmation and cause-oriented questions under “Diagnosis”.

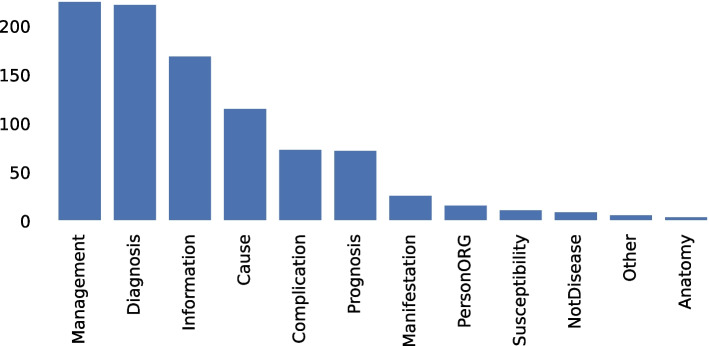

The gold standard dataset includes all instances where both annotators agreed on the label, as well as those where they initially disagreed but later reached consensus through adjudication. Figure 3 shows the distribution of the annotated data, which is imbalanced across semantic types. Several semantic categories have many instances, e.g., “Management” and “Diagnosis”, while “Other” and “Anatomy” have very few. This uneven distribution is concerning, as a model trained on such data may exhibit high overall accuracy but struggle to predict minority classes. To address this challenge, we employed several oversampling techniques to balance the dataset (see the Modeling semantic category section).

Fig. 3.

Statistics of data across semantic categories. y-axis represents frequency
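The idea behind oversampling can be illustrated with its simplest variant, random oversampling, which duplicates minority-class questions until every class is as large as the largest one. This is a stand-in for illustration only and is not necessarily one of the techniques used in this work; the function name `random_oversample` and the toy data are hypothetical.

```python
import random
from collections import Counter


def random_oversample(texts, labels, seed=0):
    """Duplicate minority-class examples until every class matches the largest one."""
    rng = random.Random(seed)
    by_label = {}
    for text, label in zip(texts, labels):
        by_label.setdefault(label, []).append(text)
    target = max(len(items) for items in by_label.values())
    out_texts, out_labels = [], []
    for label, items in by_label.items():
        # Sample with replacement to fill the gap to the majority class size
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels


# Toy data (hypothetical): three MNA, two DGN, one ANT
texts = ["q1", "q2", "q3", "q4", "q5", "q6"]
labels = ["MNA", "MNA", "MNA", "DGN", "DGN", "ANT"]
balanced_texts, balanced_labels = random_oversample(texts, labels)
print(Counter(balanced_labels))
```

Oversampling should be applied to the training split only, so that duplicated or synthetic examples do not leak into the evaluation data.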

Modeling semantic category

To support an Indonesian consumer health question-answering (QA) system, we developed a data-driven model for automatic question semantic type identification. Formally, we seek a classification function $g$ that maps a question $q$ to one of the question semantic labels in a label set $C$. First, we performed two essential preprocessing steps prior to model training: stop word removal and text processing. Stop word removal involves eliminating a predefined set of common words with minimal semantic meaning, in our case, including 5% of the most frequent and least frequent words in the dataset. Text processing encompasses a series of steps to normalize the text data, including lowercasing, removal of digits, punctuation, and excessive whitespace, and finally, stemming. Second, we investigated the performance of two distinct machine learning paradigms for text classification: conventional models and deep learning models. Conventional models rely on hand-crafted features capturing informative characteristics within the text data. For this approach, we employed a variety of algorithms including XGBoost [49], Naïve Bayes, Perceptron, Multi-Layer Perceptron (MLP), Decision Tree, and Support Vector Machine (SVM) [50]. In contrast, deep learning models automatically extract features from the text data, which eliminates the need for manual feature engineering. We used a BERT-based model [51] for this purpose, adding a classifier layer on top to perform the final text categorization.
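The preprocessing steps can be sketched as follows. This is a minimal illustration under several assumptions: normalization is applied before stop word selection, the 5% rule is read as removing the top and bottom 5% of the vocabulary ranked by corpus frequency, and stemming is omitted because it requires an Indonesian stemmer that the text does not name. The function names and example questions are hypothetical.

```python
import re
import string
from collections import Counter

# Replace each ASCII punctuation character with a space
_PUNCT_TABLE = str.maketrans({ch: " " for ch in string.punctuation})


def normalize(text):
    """Lowercase, drop digits and punctuation, and collapse whitespace."""
    text = text.lower()
    text = re.sub(r"\d+", " ", text)
    text = text.translate(_PUNCT_TABLE)
    return re.sub(r"\s+", " ", text).strip()


def frequency_stop_words(token_lists, fraction=0.05):
    """Top and bottom `fraction` of the vocabulary by corpus frequency (assumed reading)."""
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    ranked = [tok for tok, _ in counts.most_common()]
    k = max(1, int(len(ranked) * fraction))
    return set(ranked[:k]) | set(ranked[-k:])


# Toy questions (hypothetical)
questions = [
    "Dok, apakah demam 3 hari itu berbahaya?",
    "Apakah   batuk berdahak perlu obat, dok?",
]
tokens = [normalize(q).split() for q in questions]
stop_words = frequency_stop_words(tokens)
filtered = [[t for t in toks if t not in stop_words] for toks in tokens]
print(filtered)
```

With a vocabulary this small, ties in frequency make the selected stop words somewhat arbitrary; on a full corpus the frequency ranking is far more stable.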

Conventional machine learning models receive input in the form of TF-IDF representation using unigram, bigram, and unigram-bigram combinations. Unigrams represent individual words, while bigrams represent pairs of consecutive words.

Suppose $V = \{t_1, t_2, \ldots, t_{|V|}\}$ is the vocabulary of a training collection; a unigram vector of a question q is represented by $(w(t_1, q), w(t_2, q), \ldots, w(t_{|V|}, q))$. The term weight, w(t, q), is computed as:

$$w(t, q) = f(t, q) \times \log \frac{N}{df(t)}$$

where f(t, q) is the number of times the term t occurs in q; N is the number of questions in the training collection; and df(t) is the number of questions that contain term t. For bigrams, t is replaced by a pair of consecutive terms. By combining unigram and bigram representations, we aim to capture both local and contextual information within the text data.
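As a worked illustration of the weighting, the sketch below computes term weights from f(t, q), N, and df(t), assuming the common unsmoothed form w(t, q) = f(t, q) · log(N / df(t)). The helper names are hypothetical. Note that scikit-learn's `TfidfVectorizer` applies a smoothed IDF and L2 normalization by default, so its values would differ from this sketch.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (joined by a space) in a token sequence."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tfidf_vectors(token_lists, ns=(1,)):
    """Sparse TF-IDF vectors; ns=(1,) unigram, (2,) bigram, (1, 2) combined."""
    docs = [Counter(g for n in ns for g in ngrams(toks, n)) for toks in token_lists]
    n_docs = len(docs)
    # df(t): number of questions that contain term t at least once
    df = Counter(term for doc in docs for term in doc)
    return [
        {term: freq * math.log(n_docs / df[term]) for term, freq in doc.items()}
        for doc in docs
    ]
```

A term that occurs in every question receives weight zero under this form, which is why very frequent words carry little information for the classifier.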

Meanwhile, the BERT model received the raw text as input, along with positional embeddings and masking vectors. These additional elements allow the BERT model to capture the contextual relationships between words and to focus on relevant portions of the text, respectively. In addition, we employed a pre-trained language model for our BERT architecture. This model builds upon “indobert-base-p2” [52] by incorporating further fine-tuning on a consumer health domain dataset. This fine-tuning process specializes the model’s understanding and representation of consumer health language, with the aim of enhancing its performance on our question semantic classification task.

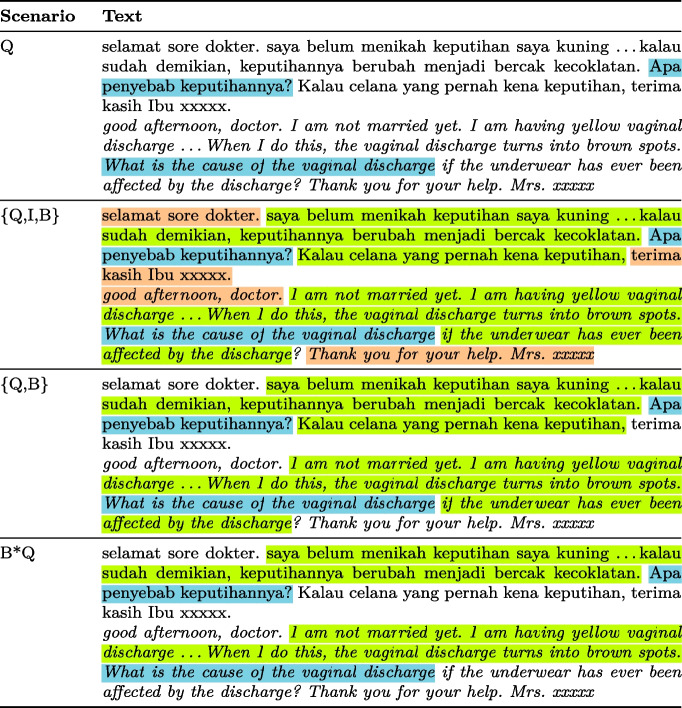

Question text in consumer health forums usually consists of multiple sentences. Mahendra et al. [53] propose a sentence recognition task that decomposes a question into sentences and classifies each sentence into one of three types: Question, Background, and Ignore. For the semantic classification task, we consider four different input scenarios based on sentence type:

Scenario Q: Only sentences identified as “Question” are used as input.

Scenario {Q,I,B}: All sentences, regardless of type (“Question”, “Background”, or “Ignore”), are used as input.

Scenario {Q,B}: Only sentences identified as “Question” or “Background” are used as input; “Ignore” sentences are omitted.

Scenario B*Q: The input consists of the sentence identified as “Question” and all preceding sentences labeled as “Background”.

Table 2 presents an example of how these different scenarios are applied to construct input for the semantic classification task.

Table 2.

Example of a consumer health question. Each sentence is marked as a Background, Question, or Ignore sentence. Highlighted text is used as input for the semantic classification task.
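The four input scenarios can be sketched as a small filter over tagged sentences. The function name, the scenario keys, and the tag codes are hypothetical. For scenario B*Q, the sketch assumes that “preceding” means every Background sentence located before the last Question sentence; the paper does not spell out the case of several Question sentences.

```python
def build_input(sentences, scenario):
    """Build classifier input from (text, tag) pairs, tag in {"Q", "B", "I"}."""
    if scenario == "Q":
        keep = [text for text, tag in sentences if tag == "Q"]
    elif scenario == "QIB":
        keep = [text for text, _ in sentences]
    elif scenario == "QB":
        keep = [text for text, tag in sentences if tag in ("Q", "B")]
    elif scenario == "B*Q":
        q_positions = [i for i, (_, tag) in enumerate(sentences) if tag == "Q"]
        last_q = q_positions[-1] if q_positions else -1
        # assumption: keep Background sentences that occur before the last Question
        keep = [
            text
            for i, (text, tag) in enumerate(sentences)
            if tag == "Q" or (tag == "B" and i < last_q)
        ]
    else:
        raise ValueError(f"unknown scenario: {scenario}")
    return " ".join(keep)
```

Under this reading, B*Q differs from {Q,B} only in dropping Background sentences that follow the final question.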

Furthermore, to address the challenges of imbalanced data distribution and limited data availability—crucial factors for building well-generalized models—we employ oversampling techniques. Three specific oversampling approaches are implemented: (1) data-driven oversampling using SMOTE [54], ADASYN [55], and BORDERLINE-SMOTE [56]; (2) keyword-based oversampling; and (3) oversampling with pseudo-labeling.

Data-Driven Oversampling.

In SMOTE, an instance x is randomly selected from a minority class. Subsequently, SMOTE identifies the k-nearest neighbors of x, denoted by $N_k(x)$, within the same minority class. For each selected neighbor $x_i \in N_k(x)$, SMOTE randomly generates a synthetic data point that lies along the line segment connecting x and the neighbor $x_i$. ADASYN [55] is an extension of the SMOTE method in which the number of samples produced is adjusted according to the local concentration of the minority class. BORDERLINE-SMOTE [56] is a variant of SMOTE that only considers borderline instances as a basis for oversampling. All these techniques were employed to augment the data in each class with synthetic samples until a balanced distribution was achieved, where all classes have a similar quantity of data points. It is important to note that this approach is primarily applicable to the conventional machine learning models, whose inputs are fixed-length feature vectors.
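The interpolation step at the heart of SMOTE can be illustrated with a short standard-library sketch. It is not the implementation used in the experiments (the paper does not name one; libraries such as imbalanced-learn provide SMOTE, ADASYN, and Borderline-SMOTE), and the function name and defaults are hypothetical.

```python
import random


def smote_samples(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic points from a list of minority-class vectors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # k nearest neighbours of x within the same class (squared Euclidean distance)
        neighbours = sorted(
            (p for p in minority if p is not x),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(x, p)),
        )[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from x to the neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(x, nb)])
    return synthetic
```

Because every synthetic point is a convex combination of two existing minority points, the new samples stay inside the region already occupied by that class. The sketch requires at least two minority instances.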

Keyword-based Oversampling.

This approach addresses class imbalance by augmenting the training data with synthetic question samples generated from unlabeled data. The process uses characteristic words identified for each semantic label through two approaches: (1) the conditional probability of a label given a word, denoted by $P(l \mid t)$, and (2) the contribution of words to labels calculated using LIME, C(t, l). The former identifies, for each semantic type, the words most likely associated with it by calculating semantic type probabilities from word occurrences. The estimate of $P(l \mid t)$ is computed as follows:

$$P(l \mid t) = \frac{n(t, l)}{n(t)}$$

where $n(t, l)$ represents the number of times the term t appears in questions belonging to class l; and $n(t)$ is the number of times the term t appears in the training data.
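The conditional probability estimate is a ratio of two counts, so it can be sketched directly with counters. The function name and the input layout (one token list and one label per question) are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def label_given_term(token_lists, labels):
    """Estimate P(label | term) as count(term in label) / count(term)."""
    term_total = Counter()
    term_label = defaultdict(Counter)
    for tokens, label in zip(token_lists, labels):
        for tok in tokens:
            term_total[tok] += 1
            term_label[tok][label] += 1
    return {
        tok: {lab: cnt / term_total[tok] for lab, cnt in by_label.items()}
        for tok, by_label in term_label.items()
    }
```

Terms that occur only a handful of times can reach a probability of 1.0 by chance, which is one reason a second filter such as the LIME-based contribution is useful.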

In addition to using conditional probability, the distinctive features of semantic types can be associated with a word’s contribution to the semantic type’s prediction value using several machine learning models. To achieve this, we utilized LIME [57], a local model-agnostic explainability tool, to calculate the average contribution of each word towards a semantic type, allowing us to detect words that exert a disproportionate influence on the semantic type assignment. With LIME, the explanation model for question q, denoted by E(q), is the interpretable linear model g that minimizes a loss function L, measuring how close g(q) is to the prediction of the original model f(q). Formally, E(q) is $g_{\theta^*}$, such that:

$$\theta^* = \arg\min_{\theta} L(f, g_\theta, \pi_q)$$

where $g_\theta$ is a “white-box” linear model with a set of parameters $\theta = \{\theta_1, \ldots, \theta_{|V|}\}$; and $\pi_q$ determines the weight of data points surrounding instance q that are included when explaining its characteristics. Each parameter $\theta_i$ corresponds to a certain term $t_i$ in the vocabulary V. When f predicts that a question q belongs to label l, $\theta_i$ can be interpreted as the contribution of term $t_i$ to the probability of belonging to the label l, so that removing $t_i$ from the set of features used to represent the question q changes this probability by approximately $-\theta_i$. For example, suppose f assigns an instance q a probability of 0.40 of being positive. After fitting $g_\theta$ to f, a parameter $\theta_i$ equal to $-0.12$ means that removing term $t_i$ will increase the probability of being positive by about 0.12 ($0.40 + 0.12 = 0.52$).

To identify the top-n terms with the highest average contributions for each semantic type, the LIME approach was executed for the explanation of three different models: XGBoost, Multinomial Naïve Bayes, and Multilayer Perceptron. Let $D = [q_1, \ldots, q_n]$ be a list of questions in the dataset; and $\theta_i^{(j)}$ be the value of $\theta_i$ after fitting $g_\theta$ to f with respect to the question $q_j$ and label l. The average contribution of word $t_i$ with respect to label l, denoted by $C(t_i, l)$, is computed using LIME as follows:

$$C(t_i, l) = \frac{1}{n} \sum_{j=1}^{n} \theta_i^{(j)}$$
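Averaging per-question explanation weights can be sketched as below. The sketch assumes that each explanation has already been produced (for example by a LIME text explainer) and is stored as a dictionary from (term, label) pairs to weights, and that a term absent from a question contributes zero to the average. Both the data layout and the function names are hypothetical.

```python
from collections import defaultdict


def average_contribution(explanations):
    """Average weights over questions; explanations is a list of {(term, label): weight}."""
    totals = defaultdict(float)
    for weights in explanations:
        for key, w in weights.items():
            totals[key] += w
    n = len(explanations)
    # assumption: divide by the number of all questions, not only those containing the term
    return {key: total / n for key, total in totals.items()}


def top_terms(contrib, label, n=20):
    """Return the n terms with the highest average contribution for a label."""
    scored = [(t, c) for (t, lab), c in contrib.items() if lab == label]
    ranked = sorted(scored, key=lambda tc: tc[1], reverse=True)
    return [t for t, _ in ranked[:n]]
```

Dividing by the number of questions that actually contain the term is an equally plausible normalization and would favor rarer terms.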

Starting with several keyword candidates having the highest $P(l \mid t)$ scores, we then filtered them down using C(t, l) scores to select the twenty keywords with the highest average contribution for each semantic type. Some of the identified keywords can be seen in Table 10. These keywords are used to identify relevant sentences within the unlabeled data pool. Specifically, the approach targets sentences classified as Question type that contain keywords of one label and do not contain keywords associated with other labels.

Table 10.

Unbiased terms used as features for semantic type identification

| Semantic Type | Term |
|---|---|
| MNA | obat, solusi, saran (drugs, solution, recommendation) |
| DGN | derita, ganggu (suffering, bother) |
| INF | program (program) |
| PRG | sembuh (cured) |
| CAU | faktor (factor) |
| SSC | tular (transmitted) |
| ANT | tubuh (body) |
| PRS | spesialis (specialist) |
| CMP | efek, bahaya (effect, danger) |
| OTH | biaya (cost) |
| MNF | ciri (characteristic) |
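The keyword filter used for keyword-based oversampling can be sketched as follows: an unlabeled Question sentence is kept only when it matches the keywords of exactly one semantic type. The function name and the input layout are hypothetical, and the sketch assumes the sentences have already been tokenized and stemmed in the same way as the keywords.

```python
def select_by_keywords(sentences, keywords):
    """Return (tokens, label) pairs for sentences matching keywords of exactly one label.

    sentences: token lists of unlabeled sentences already classified as Question.
    keywords: dict mapping a label to its set of characteristic terms.
    """
    selected = []
    for tokens in sentences:
        present = set(tokens)
        matched = [lab for lab, terms in keywords.items() if present & terms]
        if len(matched) == 1:  # skip sentences with no match or with competing labels
            selected.append((tokens, matched[0]))
    return selected
```

For example, with the keywords `{"MNA": {"obat", "solusi", "saran"}, "CAU": {"faktor"}}` taken from Table 10, a sentence containing both “obat” and “faktor” would be discarded as ambiguous.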

Oversampling with Pseudo-labeling.

Similar to keyword-based oversampling, this approach utilizes unlabeled data to address class imbalance. However, it employs a different strategy. The approach involves querying the unlabeled data and using a model to predict labels for these data points. These label prediction models can be traditional machine learning models, ensemble learning models, or even deep learning models, all trained on the entire dataset. In this work, we utilized XGBoost and Perceptron as they demonstrated strong performance in scenarios without oversampling.
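A minimal sketch of pseudo-labeling is shown below. The paper does not state how many pseudo-labeled items are added per class, so the sketch assumes that a class stops receiving items once it reaches the size of the largest class. The `predict` callable stands in for a trained model such as XGBoost or Perceptron, and all names are hypothetical.

```python
from collections import Counter


def pseudo_label(train, unlabeled, predict):
    """Augment (item, label) training pairs with model-labeled items from a pool."""
    counts = Counter(label for _, label in train)
    target = max(counts.values())  # assumption: balance up to the majority class size
    augmented = list(train)
    for item in unlabeled:
        label = predict(item)
        if counts[label] < target:
            augmented.append((item, label))
            counts[label] += 1
    return augmented
```

Since the pseudo-labels inherit the errors of the labeling model, a confidence threshold on the prediction would be a natural extension of this sketch.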

Experiments and analysis

To mitigate the limitations imposed by our dataset size, we adopted a repeated hold-out strategy when evaluating all proposed models. This approach involved splitting the data into training and testing sets with a 70%/30% ratio twenty times. To address the potential impact of imbalanced class distributions, two approaches were investigated: (1) no-oversampling, where we evaluated model performance without oversampling the data; and (2) oversampling, where we balanced the label distribution within the training sets of each iteration by oversampling minority classes. We conducted preliminary experiments using all feature types: unigrams, bigrams, and their combination. Because unigram-only features consistently outperformed the others in these preliminary trials, we present results based solely on unigram features. We evaluated all models using the F1 score, a widely used metric that balances precision and recall. To assess the generalizability of performance across different data splits, we averaged the F1 scores obtained from all twenty iterations. Additionally, for each experiment, we conducted paired t-tests with a significance level of 0.05 to statistically compare the performance of different models. Pairing accounts for the dependency that arises because the compared models are evaluated on the same training and testing splits.
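The evaluation protocol can be sketched with a weighted F1 function and a repeated hold-out loop. This is an illustration under assumptions: the splits here are plain random shuffles (the paper does not say whether they were stratified), `fit_predict` is a hypothetical callable that trains on the training pairs and returns predictions for the test items, and the function names are not from the paper.

```python
import random
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Per-label F1 averaged with weights proportional to each label's support."""
    support = Counter(y_true)
    total = 0.0
    for label, n in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = n - tp
        denom = 2 * tp + fp + fn
        total += n * (2 * tp / denom if denom else 0.0)
    return total / len(y_true)


def repeated_holdout(data, fit_predict, repeats=20, test_ratio=0.3, seed=0):
    """Mean weighted F1 over repeated random train/test splits of (x, y) pairs."""
    rng = random.Random(seed)
    scores = []
    for _ in range(repeats):
        shuffled = data[:]
        rng.shuffle(shuffled)
        cut = int(len(shuffled) * (1 - test_ratio))
        train, test = shuffled[:cut], shuffled[cut:]
        y_pred = fit_predict(train, [x for x, _ in test])
        scores.append(weighted_f1([y for _, y in test], y_pred))
    return sum(scores) / len(scores)
```

Keeping the per-split scores instead of only their mean would provide the paired samples needed for the t-test described above.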

Our evaluation commenced with a comparison of model performance under two conditions: with and without oversampling of training data. This approach aimed to mitigate potential biases arising from imbalanced class distributions. Table 3 presents the results for the scenario without oversampling. Notably, for every model except BERT, input scenario Q achieved the highest weighted average F1 score compared to the other scenarios ({Q,B}, {Q,I,B}, and B*Q). Perceptron and XGBoost emerged as the top performers based on the weighted F1 score. The BERT-based model, however, underperformed in this setting. As a possible explanation, the limited size of our training dataset might not have provided sufficient information for effective learning in the BERT architecture. Another potential explanation is that the base BERT model may require pre-training on a specialized corpus of consumer health documents. This phenomenon warrants further investigation in our future studies.

Table 3.

Weighted average F1 scores for all input scenarios (no-oversampling)

| Model | Q | {Q,I,B} | {Q,B} | B*Q |
|---|---|---|---|---|
| XGBoost | 0.52 | 0.44 | 0.43 | 0.43 |
| Naïve Bayes | 0.42 | 0.30 | 0.30 | 0.30 |
| Perceptron | 0.56 | 0.44 | 0.43 | 0.43 |
| MLP | 0.37 | 0.25 | 0.26 | 0.26 |
| Decision Tree | 0.46 | 0.35 | 0.35 | 0.35 |
| SVM | 0.42 | 0.28 | 0.28 | 0.28 |
| BERT | 0.08 | 0.09 | 0.09 | 0.09 |

Table 4 shows the performance details for each label using input scenario Q in the no-oversampling setting. Building upon the finding that input scenario Q yielded the highest performance without oversampling (Table 3), we further investigated the impact of various oversampling techniques (Tables 5, 6, 7, and 8). Several oversampling approaches were employed: SMOTE, ADASYN, Borderline-SMOTE, keyword-based oversampling, and pseudo-labeling oversampling. The pseudo-labeling method uses Perceptron as the labeling model because Perceptron achieves the best weighted average F1 score without oversampling; the model was trained on the dataset used in this research. Due to the inherent characteristics of the BERT model, we only applied keyword-based and pseudo-labeling oversampling to it, excluding the three data-driven techniques.

The effectiveness of oversampling techniques varies across models and libraries. This is evident from the weighted average F1 scores, which exhibit minimal changes for some models with oversampling. Moreover, specific oversampling approaches may not be universally beneficial. For instance, applying SMOTE resulted in a performance decline for the Decision Tree model. On the other hand, Borderline-SMOTE enhanced the performance of the SVM model. These observations highlight the importance of careful selection and evaluation of oversampling techniques for optimal model performance. The significant improvement observed in the Naïve Bayes model with both SMOTE and Borderline-SMOTE exemplifies this point (t-test, p-value $< 0.05$).

Table 4.

Results for scenario Q stratified by semantic labels (no-oversampling)

| Label | Metric | XGBoost | N. Bayes | Perceptr. | MLP | Dec. Tree | SVM | BERT |
|---|---|---|---|---|---|---|---|---|
| INF | F1 | 0.37 | 0.38 | 0.42 | 0.29 | 0.36 | 0.36 | 0.06 |
| | P | 0.34 | 0.48 | 0.44 | 0.30 | 0.26 | 0.46 | 0.03 |
| | R | 0.40 | 0.31 | 0.40 | 0.33 | 0.57 | 0.30 | 0.15 |
| CAU | F1 | 0.51 | 0.23 | 0.54 | 0.21 | 0.44 | 0.28 | 0.06 |
| | P | 0.54 | 0.91 | 0.59 | 0.31 | 0.57 | 0.93 | 0.04 |
| | R | 0.49 | 0.13 | 0.53 | 0.21 | 0.37 | 0.17 | 0.15 |
| MNA | F1 | 0.72 | 0.72 | 0.76 | 0.65 | 0.62 | 0.56 | 0.07 |
| | P | 0.71 | 0.59 | 0.75 | 0.55 | 0.68 | 0.40 | 0.04 |
| | R | 0.73 | 0.93 | 0.77 | 0.82 | 0.57 | 0.95 | 0.20 |
| DGN | F1 | 0.63 | 0.59 | 0.64 | 0.55 | 0.56 | 0.68 | 0.07 |
| | P | 0.59 | 0.44 | 0.63 | 0.46 | 0.58 | 0.63 | 0.04 |
| | R | 0.68 | 0.92 | 0.66 | 0.72 | 0.54 | 0.74 | 0.20 |
| PRS | F1 | 0.25 | 0.00 | 0.44 | 0.00 | 0.20 | 0.07 | 0.03 |
| | P | 0.40 | 0.00 | 0.47 | 0.00 | 0.46 | 0.20 | 0.02 |
| | R | 0.20 | 0.00 | 0.44 | 0.00 | 0.13 | 0.04 | 0.10 |
| MNF | F1 | 0.22 | 0.00 | 0.25 | 0.00 | 0.20 | 0.00 | 0.05 |
| | P | 0.32 | 0.00 | 0.30 | 0.00 | 0.35 | 0.00 | 0.03 |
| | R | 0.19 | 0.00 | 0.22 | 0.00 | 0.15 | 0.00 | 0.15 |
| CMP | F1 | 0.44 | 0.07 | 0.47 | 0.08 | 0.31 | 0.24 | 0.02 |
| | P | 0.48 | 0.52 | 0.51 | 0.14 | 0.48 | 0.85 | 0.01 |
| | R | 0.40 | 0.04 | 0.45 | 0.06 | 0.24 | 0.14 | 0.05 |
| PRG | F1 | 0.45 | 0.10 | 0.50 | 0.13 | 0.35 | 0.15 | 0.00 |
| | P | 0.49 | 0.75 | 0.56 | 0.21 | 0.45 | 0.89 | 0.00 |
| | R | 0.42 | 0.05 | 0.47 | 0.10 | 0.31 | 0.08 | 0.00 |
| SSC | F1 | 0.04 | 0.00 | 0.11 | 0.00 | 0.00 | 0.00 | 0.00 |
| | P | 0.07 | 0.00 | 0.16 | 0.00 | 0.00 | 0.00 | 0.00 |
| | R | 0.03 | 0.00 | 0.10 | 0.00 | 0.00 | 0.00 | 0.00 |
| NDD | F1 | 0.06 | 0.00 | 0.12 | 0.00 | 0.10 | 0.00 | 0.00 |
| | P | 0.09 | 0.00 | 0.11 | 0.00 | 0.20 | 0.00 | 0.00 |
| | R | 0.05 | 0.00 | 0.15 | 0.00 | 0.07 | 0.00 | 0.00 |
| ANT | F1 | 0.03 | 0.00 | 0.11 | 0.00 | 0.00 | 0.00 | 0.00 |
| | P | 0.03 | 0.00 | 0.10 | 0.00 | 0.00 | 0.00 | 0.00 |
| | R | 0.03 | 0.00 | 0.20 | 0.00 | 0.00 | 0.00 | 0.00 |
| OTH | F1 | 0.53 | 0.00 | 0.23 | 0.00 | 0.42 | 0.00 | 0.00 |
| | P | 0.51 | 0.00 | 0.21 | 0.00 | 0.43 | 0.00 | 0.00 |
| | R | 0.60 | 0.00 | 0.35 | 0.00 | 0.50 | 0.00 | 0.00 |

Table 5.

Weighted average F1 score for input scenario Q (oversampling)

| Model | No Overs. | SMOTE | B. SMOTE | ADASYN | Pseudo. | Keywo. |
|---|---|---|---|---|---|---|
| XGBoost | 0.52 | 0.55 | 0.54 | 0.52 | 0.52 | 0.52 |
| Naïve Bayes | 0.42 | 0.58 | 0.59 | 0.42 | 0.42 | 0.42 |
| Perceptron | 0.56 | 0.55 | 0.55 | 0.56 | 0.56 | 0.56 |
| MLP | 0.37 | 0.36 | 0.38 | 0.35 | 0.37 | 0.35 |
| Decision Tree | 0.46 | 0.37 | 0.39 | 0.45 | 0.46 | 0.46 |
| SVM | 0.42 | 0.52 | 0.28 | 0.43 | 0.42 | 0.42 |
| BERT | 0.08 | – | – | – | 0.09 | 0.00 |

Note that we only applied keyword-based and pseudo-labeling oversampling for the BERT-based classifier.

Table 6.

Weighted average F1 score for input scenario {Q,I,B} (oversampling)

| Model | No Overs. | SMOTE | B. SMOTE | ADASYN | Pseudo. | Keywo. |
|---|---|---|---|---|---|---|
| XGBoost | 0.52 | 0.44 | 0.43 | 0.44 | 0.43 | 0.43 |
| Naïve Bayes | 0.42 | 0.33 | 0.29 | 0.33 | 0.30 | 0.30 |
| Perceptron | 0.56 | 0.42 | 0.43 | 0.43 | 0.43 | 0.43 |
| MLP | 0.37 | 0.25 | 0.24 | 0.27 | 0.25 | 0.25 |
| Decision Tree | 0.46 | 0.28 | 0.33 | 0.31 | 0.34 | 0.34 |
| SVM | 0.42 | 0.29 | 0.29 | 0.29 | 0.28 | 0.28 |

Table 7.

Weighted average F1 score for input scenario {Q,B} (oversampling)

| Model | No Overs. | SMOTE | B. SMOTE | ADASYN | Pseudo. | Keywo. |
|---|---|---|---|---|---|---|
| XGBoost | 0.52 | 0.44 | 0.43 | 0.44 | 0.43 | 0.43 |
| Naïve Bayes | 0.42 | 0.33 | 0.29 | 0.33 | 0.30 | 0.30 |
| Perceptron | 0.56 | 0.42 | 0.43 | 0.43 | 0.43 | 0.43 |
| MLP | 0.37 | 0.27 | 0.25 | 0.27 | 0.25 | 0.25 |
| Decision Tree | 0.46 | 0.29 | 0.34 | 0.32 | 0.34 | 0.34 |
| SVM | 0.42 | 0.29 | 0.29 | 0.29 | 0.27 | 0.27 |

Table 8.

Weighted average F1 score for input scenario B*Q (oversampling)

| Model | No Overs. | SMOTE | B. SMOTE | ADASYN | Pseudo. | Keywo. |
|---|---|---|---|---|---|---|
| XGBoost | 0.52 | 0.44 | 0.43 | 0.44 | 0.01 | 0.43 |
| Naïve Bayes | 0.42 | 0.33 | 0.29 | 0.01 | – | 0.30 |
| Perceptron | 0.56 | 0.43 | 0.43 | 0.43 | 0.01 | 0.43 |
| MLP | 0.37 | 0.27 | 0.24 | 0.27 | 0.01 | 0.26 |
| Decision Tree | 0.46 | 0.32 | 0.34 | 0.32 | 0.01 | 0.34 |
| SVM | 0.42 | 0.29 | 0.29 | 0.29 | 0.01 | 0.27 |

Bias Identification.

This work also investigated “semantic bias”, referring to words spuriously associated with a specific semantic type. This investigation was motivated by the concern that certain words might disproportionately influence the labeling process despite not being truly indicative of that type. Such bias could arise from the limited data used in this study. Specifically, we aimed to identify disease-related words exhibiting semantic bias, as disease terminology ideally should not be inherently tied to a particular semantic type. We initially considered the top-20 terms with the highest C(t, l) as candidates for potentially biased terms with respect to a particular semantic type l. This step is similar to the keyword generation process for the oversampling mechanism (see Modeling semantic category section). These terms were then categorized into two groups: (1) terms that serve as characteristics and (2) potentially biased terms. Biased terms are defined as those among the top-20 contributing terms that should not be associated with a specific semantic type.

In summary, this paper proposes a three-step approach to identify biased terms and investigate their effects on machine learning models:

Candidate Identification. Potentially biased words are identified based on pre-computed C(t, l) scores. This work focuses specifically on disease-related terms as candidates. Let B denote this set of candidates;

Template Construction. A list of question templates T is constructed, with each template associated with a specific label (e.g., DGN and MNA). These templates, detailed in Table 9, are derived from validated examples provided by Roberts et al. [14];

Performance Evaluation and Bias Detection. The performance of machine learning models is evaluated on predicting labels for questions instantiated from the template set T. Biased words are detected based on model predictions. A term is considered unbiased if the label predicted for an instantiated question matches the label of its template. Conversely, if the predicted label is pulled towards a specific semantic type regardless of the template, the term is flagged as biased. For example, the template “what is the right treatment for disease {disease name}?” is labelled as MNA. Suppose a question q is instantiated from this template by replacing {disease name} with “cancer”. If a machine learning model m consistently predicts question q as DGN, it is likely that the term “cancer” is biased with respect to m.
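The three steps above can be sketched as a loop over candidate terms and templates. The paper describes a term as biased when a model “consistently” predicts the wrong label; the sketch makes this concrete with a hypothetical `threshold` on the share of templates that are mispredicted as the same label. The function name and the `predict` callable are likewise assumptions.

```python
from collections import Counter


def find_biased_terms(templates, candidates, predict, threshold=0.5):
    """Map each biased term to the label it attracts.

    templates: list of (template, expected_label) with a "{disease name}" slot.
    candidates: iterable of disease-related terms (the set B).
    predict: callable mapping a question string to a predicted label.
    """
    biased = {}
    for term in candidates:
        wrong = Counter()
        for template, expected in templates:
            predicted = predict(template.replace("{disease name}", term))
            if predicted != expected:
                wrong[predicted] += 1
        if wrong:
            label, count = wrong.most_common(1)[0]
            # assumption: "consistently" means at least `threshold` of all templates
            if count / len(templates) >= threshold:
                biased[term] = label
    return biased
```

Running the same candidates through several models and comparing the resulting dictionaries gives a per-model view of which terms attract which label.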

Table 9.

List of question templates for detecting biased terms

| No | Sentence | Semantic Type |
|---|---|---|
| 1 | apakah saya menderita penyakit {disease name} (do I have disease {disease name}) | Diagnosis |
| 2 | apakah saya terkena {disease name} (do I have disease {disease name}) | Diagnosis |
| 3 | pengobatan yang tepat untuk penyakit {disease name} (what is the right treatment for disease {disease name}) | Management |
| 4 | bagaimana cara mengatasi {disease name} (how to deal with disease {disease name}) | Management |
| 5 | apa obat yang cepat untuk mengatasi {disease name} (what is the right medicine to overcome {disease name}) | Management |
| 6 | apa penyebab {disease name} (what causes {disease name}) | Cause |
| 7 | apa gejala {disease name} (what are the symptoms of {disease name}) | Manifestation |
| 8 | apa ciri-ciri {disease name} (what are the characteristics of {disease name}) | Manifestation |
| 9 | dokter spesialis apa yang sebaiknya saya temui untuk menangani {disease name} (what specialist do I have to see to treat {disease name}) | PersonORG |
| 10 | saya menderita {disease name} dokter spesialis apa yang sebaiknya saya temui (I have {disease name} what specialist should I see) | PersonORG |
| 11 | penjelasan terkait {disease name} (explanation of {disease name}) | Information |
| 12 | apakah {disease name} bisa sembuh (can {disease name} be cured) | Prognosis |
| 13 | apakah {disease name} berbahaya bagi kesehatan saya (is {disease name} bad for my health) | Complication |
| 14 | berapa kira-kira biaya perawatan {disease name} (what is the approximate cost of treating {disease name}) | Other |

Terms categorized as characteristic of a particular semantic type can be seen in Table 10, while terms categorized as biased are listed in Table 11. Finally, we investigated the impact of biased terms on model performance (XGBoost, Naïve Bayes, and Multilayer Perceptron) by comparing their outcomes when trained with and without biased terms as features. We used input scenario Q with unigram features for this analysis. The performance of each model remained statistically unchanged, suggesting that the removal of biased features did not affect their overall effectiveness. This might be attributed to the fact that biases were evaluated on synthetic and highly specific data. Further research is necessary to determine if similar biases persist in the newly generated corpus.

Table 11.

Biased terms identified when utilizing three machine learning models

| XGBoost term | XGBoost type | Naïve Bayes term | Naïve Bayes type | MLP term | MLP type |
|---|---|---|---|---|---|
| kanker (cancer) | DGN | kanker (cancer) | DGN | kanker (cancer) | DGN |
| depresi (depression) | DGN | depresi (depression) | DGN | depresi (depression) | DGN |
| hepatitis | MNF | hepatitis | DGN | hepatitis | DGN |
| silinder (astigmatism) | MNF | silinder (astigmatism) | INF | maag (gastritis) | CAU |
| maag (gastritis) | MNF | tumor (tumor) | CAU | | |
| wasir (hemorrhoids) | CMP | wasir (hemorrhoids) | CMP | | |

Conclusion

A key component for a consumer health question-answering system is a module to understand the user’s intent by classifying the question’s semantic type. This study investigates a method for automatically classifying the semantic type of Indonesian consumer health questions, allowing the QA system to direct users to the most relevant information. To address the scarcity problem of Indonesian health domain data, we built a new corpus of Indonesian consumer health queries with 964 questions labeled with their corresponding semantic types. The semantic types were adapted from Roberts et al. [14] and validated by a medical expert. A pre-medical student was also recruited to annotate the questions, and the inter-annotator agreement for semantic type determination was 0.48 (a moderate agreement level).

We used our newly created corpus to develop and evaluate a data-driven predictive model for classifying question semantic types. The model construction involved a variety of machine learning algorithms, encompassing both conventional models requiring manual feature engineering and deep learning models capable of automatic textual feature extraction. To address the challenges of imbalanced data distribution and limited data availability, several oversampling approaches were also employed: SMOTE, ADASYN, Borderline-SMOTE, keyword-based oversampling, and pseudo-labeling oversampling. In general, Perceptron and XGBoost emerged as the top-performing models, achieving the highest weighted average F1 scores across all input scenarios and weighting factors. Our study also revealed that the effectiveness of oversampling techniques varied significantly between models. For example, applying SMOTE worsened the performance of the Decision Tree model. Conversely, Borderline-SMOTE led to improvements in the SVM and Naïve Bayes models. These findings emphasize the crucial role of carefully selecting and evaluating oversampling techniques to achieve optimal model performance.

To enhance the trustworthiness and interpretability of the model’s predictions, we employed the LIME framework, which facilitates a deeper understanding of how word-based features influence the model’s decision-making process. We propose an approach using LIME to identify potentially biased terms that disproportionately influence predictions and to investigate their effects on model performance. We define biased terms as those that lack a strong association with a specific semantic type but still significantly influence the model’s predictions. LIME-based analysis can reveal these terms. For example, the word “ciri” (“characteristic”) might be considered a characteristic of the MNF (Manifestation) semantic type. However, not all high-scoring terms represent true semantic type characteristics. Some words, like “hepatitis”, might be erroneously associated with a particular type (e.g., DGN or Diagnosis) due to bias in the training data. To assess the impact of biased terms, we conducted an experiment where disease-related words with high LIME scores but weak semantic type association were removed from the test data. This experiment revealed no significant difference in model performance with or without these biased words.

One crucial direction for future work is expanding the size and diversity of our dataset. This will enable us to explore more advanced deep learning approaches, which often require large amounts of labeled data for optimal performance. As our experiments have shown, BERT-based models perform worse than conventional approaches in our current setting. We will investigate efficient and cost-effective strategies to increase the size of our dataset, including active learning methods that acquire labels for the most informative data points, maximizing the gain in model performance with minimal annotation effort. From an application perspective, semantic types can be combined with named entities [58] and keywords [59] extracted from questions to produce a more comprehensive question understanding module in a consumer health QA system.

Abbreviations

- ADASYN: Adaptive Synthetic Sampling Approach for Imbalanced Learning

- ANT: Anatomy

- BERT: Bidirectional Encoder Representations from Transformers

- CAU: Cause

- CMP: Complication

- DGN: Diagnosis

- GARD: Genetic and Rare Diseases Information Center

- HIV: Human Immunodeficiency Virus

- ICU: Intensive Care Unit

- INF: Information

- LIME: Local Interpretable Model-agnostic Explanations

- MLP: Multi-Layer Perceptron

- MNA: Management

- MNF: Manifestation

- NDD: Not a Disease

- NLM: National Library of Medicine

- OTH: Other

- PRG: Prognosis

- PRS: PersonORG

- QA: Question Answering

- SMOTE: Synthetic Minority Over-sampling Technique

- SSC: Susceptibility

- SVM: Support Vector Machine

- TF-IDF: Term Frequency - Inverse Document Frequency

- XGBoost: Extreme Gradient Boosting

Authors’ contributions

R.N.H., R.M., and A.F.W. designed the work; R.N.H. performed the acquisition, analysis, and interpretation of data and created the new software used in the work. R.N.H. wrote the first draft of the manuscript text; R.M. and A.F.W. wrote and revised the manuscript. All authors reviewed the manuscript.

Funding

This work was supported by the Faculty of Computer Science, Universitas Indonesia, through Grant NKB-004/UN2.F11.D/HKP.05.00/2023.

Data availability

Data is available at https://github.com/ranianhanami/semantic_class_idn_chqa.

Declarations

Ethics approval and consent to participate

Not applicable.

Competing interests

The authors declare the following potential competing interests. Rahmad Mahendra is currently a PhD student at the School of Computing Technologies at RMIT University, working on a research project at the ARC Training Centre in Cognitive Computing for Medical Technologies. He is also affiliated with the School of Computing and Information Systems, the University of Melbourne.

Footnotes

We mostly made use of sklearn (scikit-learn), a Python library for machine learning.

However, this does not hold true for BERT, as evidenced by the results reported in Table 3.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Weissenborn D, Tsatsaronis G, Schroeder M. Answering Factoid Questions in the Biomedical Domain. In: BioASQ@CLEF. Heidelberg: Springer; 2013.

- 2. Wiese G, Weissenborn D, Neves M. Neural Domain Adaptation for Biomedical Question Answering. In: Levy R, Specia L, editors. Proceedings of the 21st Conference on Computational Natural Language Learning (CoNLL 2017). Vancouver: Association for Computational Linguistics; 2017. pp. 281–9. https://aclanthology.org/K17-1029. 10.18653/v1/K17-1029.

- 3. Yang Z, Zhou Y, Nyberg E. Learning to Answer Biomedical Questions: OAQA at BioASQ 4B. In: Kakadiaris IA, Paliouras G, Krithara A, editors. Proceedings of the Fourth BioASQ workshop. Berlin: Association for Computational Linguistics; 2016. pp. 23–37. https://aclanthology.org/W16-3104. 10.18653/v1/W16-3104.

- 4. Ben Abacha A, Zweigenbaum P. MEANS: a medical question-answering system combining NLP techniques and semantic web technologies. Inf Process Manag. 2015;51(5):570–94. 10.1016/j.ipm.2015.04.006.

- 5. Cao Y, Liu F, Simpson P, Antieau L, Bennett A, Cimino JJ, et al. AskHERMES: an online question answering system for complex clinical questions. J Biomed Inform. 2011;44(2):277–88. 10.1016/j.jbi.2011.01.004.

- 6. Jin Q, Yuan Z, Xiong G, Yu Q, Ying H, Tan C, et al. Biomedical Question Answering: A Survey of Approaches and Challenges. ACM Comput Surv (CSUR). 2021;55:1–36.

- 7. Lamurias A, Sousa D, Couto FM. Generating biomedical question answering corpora from Q&A forums. IEEE Access. 2020;8:161042–51. 10.1109/ACCESS.2020.3020868.

- 8. Yang Z, Gupta N, Sun X, Xu D, Zhang C, Nyberg E. Learning to Answer Biomedical Factoid & List Questions: OAQA at BioASQ 3B. In: Conference and Labs of the Evaluation Forum. Heidelberg: Springer; 2015.

- 9. Lally A, Prager JM, McCord MC, Boguraev BK, Patwardhan S, Fan J, et al. Question analysis: how Watson reads a clue. IBM J Res Dev. 2012;56(3.4): 2:1-2:14. 10.1147/JRD.2012.2184637.

- 10. Kilicoglu H, Abacha AB, Mrabet Y, Shooshan SE, Rodriguez LM, Masterton K, et al. Semantic annotation of consumer health questions. BMC Bioinformatics. 2018;19. 10.1186/s12859-018-2045-1.

- 11. Patrick J, Li M. An ontology for clinical questions about the contents of patient notes. J Biomed Inform. 2012;45(2):292–306.

- 12. Roberts K, Masterton K, Fiszman M, Kilicoglu H, Demner-Fushman D. Annotating question types for consumer health questions. In: Proceedings of the Fourth LREC Workshop on Building and Evaluating Resources for Health and Biomedical Text Processing. Paris: European Language Resources Association (ELRA); 2014.

- 13. Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, et al. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319(7206):358–61.

- 14. Roberts K, Masterton K, Fiszman M, Kilicoglu H, Demner-Fushman D. Annotating Question Decomposition on Complex Medical Questions. In: Calzolari N, Choukri K, Declerck T, Loftsson H, Maegaard B, Mariani J, et al., editors. Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC‘14). Reykjavik: European Language Resources Association (ELRA); 2014. pp. 2598–602. https://aclanthology.org/L14-1156/.

- 15. Hakim AN, Mahendra R, Adriani M, Ekakristi AS. Corpus development for Indonesian consumer-health question answering system. In: 2017 International Conference on Advanced Computer Science and Information Systems (ICACSIS). Piscataway: Institute of Electrical and Electronics Engineers (IEEE); 2017. pp. 222–7. https://api.semanticscholar.org/CorpusID:19151762.

- 16. Roberts K, Kilicoglu H, Fiszman M, Demner-Fushman D. Automatically classifying question types for consumer health questions. In: AMIA Annual Symposium Proceedings. Vol. 2014. Arlington: American Medical Informatics Association; 2014. p. 1018.

- 17. Fan RE, Chang KW, Hsieh CJ, Wang XR, Lin CJ. LIBLINEAR: a library for large linear classification. J Mach Learn Res. 2008;9:1871–4.

- 18. Pudil P, Novovičová J, Kittler J. Floating search methods in feature selection. Pattern Recogn Lett. 1994;15(11):1119–25.

- 19. Sarrouti M, Ouatik El Alaoui S. A Machine Learning-based Method for Question Type Classification in Biomedical Question Answering. Methods Inf Med. 2017;56. 10.3414/ME16-01-0116.

- 20. Kilicoglu H, Abacha AB, M’rabet Y, Roberts K, Rodriguez L, Shooshan S, et al. Annotating named entities in consumer health questions. In: Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16). Paris: European Language Resources Association (ELRA); 2016. pp. 3325–32.

- 21. Lafferty JD, McCallum A, Pereira FCN. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In: Proceedings of the Eighteenth International Conference on Machine Learning. ICML ’01. San Francisco: Morgan Kaufmann Publishers Inc.; 2001. pp. 282–9.

- 22. Straus SE, Glasziou P, Richardson WS, Haynes RB. Evidence-based medicine E-book: how to practice and teach EBM. Edinburgh: Elsevier Health Sciences; 2019.

- 23. Athenikos SJ, Han H, Brooks AD. Semantic analysis and classification of medical questions for a logic-based medical question-answering system. In: 2008 IEEE International Conference on Bioinformatics and Biomedicine Workshops. Piscataway: IEEE; 2008. pp. 111–2.

- 24.Ely JW, Osheroff JA, Gorman PN, Ebell MH, Chambliss ML, Pifer EA, et al. A taxonomy of generic clinical questions: classification study. BMJ. 2000;321(7258):429–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Reynolds RD. A family practice article filing system. J Fam Pract. 1995;41:583–90. [PubMed] [Google Scholar]

- 26.Goodwin TR, Harabagiu SM. Medical question answering for clinical decision support. In: Proceedings of the 25th ACM international on conference on information and knowledge management. New York: Association for Computing Machinery (ACM); 2016. pp. 297–306. [DOI] [PMC free article] [PubMed]

- 27.Goodwin TR, Harabagiu SM. Knowledge representations and inference techniques for medical question answering. ACM Trans Intell Syst Technol. 2017;26. 10.1145/3106745.

- 28.Cairns B, Nielsen RD, Masanz JJ, Martin JH, Palmer M, Ward WH, et al. The MiPACQ clinical question answering system. AMIA Ann Symp Proc. 2011;2011:171–80. [PMC free article] [PubMed] [Google Scholar]

- 29.Kilicoglu H, Fiszman M, Demner-Fushman D. Interpreting Consumer Health Questions: The Role of Anaphora and Ellipsis. In: Cohen KB, Demner-Fushman D, Ananiadou S, Pestian J, Tsujii J, editors. Proceedings of the 2013 Workshop on Biomedical Natural Language Processing. Sofia: Association for Computational Linguistics; 2013. pp. 54–62. https://aclanthology.org/W13-1907.

- 30.Liu F, Antieau LD, Yu H. Toward automated consumer question answering: automatically separating consumer questions from professional questions in the healthcare domain. J Biomed Inform. 2011;44(6):1032–8. 10.1016/j.jbi.2011.08.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Zhang Y. Contextualizing Consumer Health Information Searching: An Analysis of Questions in a Social Q &A Community. In: Proceedings of the 1st ACM International Health Informatics Symposium. IHI ’10. New York: Association for Computing Machinery; 2010. pp. 210–9. 10.1145/1882992.1883023.

- 32.Kilicoglu H, Fiszman M, Roberts K, Demner-Fushman D. An ensemble method for spelling correction in consumer health questions. In: AMIA Annual Symposium Proceedings. Vol. 2015. Arlington: American Medical Informatics Association; 2015. p. 727. [PMC free article] [PubMed]

- 33.Roberts K, Demner-Fushman D. Interactive use of online health resources: a comparison of consumer and professional questions. J Am Med Inform Assoc. 2016;23(4):802–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Andersen U, Braasch A, Henriksen L, Huszka C, Johannsen A, Kayser L, et al. Creation and use of Language Resources in a Question-Answering eHealth System. In: Calzolari N, Choukri K, Declerck T, Doğan MU, Maegaard B, Mariani J, et al., editors. Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC‘12). Istanbul, Turkey: European Language Resources Association (ELRA); 2012. pp. 2536–42. https://aclanthology.org/L12-1274/.

- 35.Van Der Volgen J, Harris B, Demner-Fushman D. Analysis of consumer health questions for development of question–answering technology. In: Proceedings of one HEALTH: Information in an interdependent world, the 2013 annual meeting and exhibition of the Medical Library Association (MLA). Chicago: Medical Library Association; 2013.

- 36.Roberts K, Kilicoglu H, Fiszman M, Demner-Fushman D. Decomposing Consumer Health Questions. In: Cohen K, Demner-Fushman D, Ananiadou S, Tsujii Ji, editors. Proceedings of BioNLP 2014. Baltimore: Association for Computational Linguistics; 2014. pp. 29–37. https://aclanthology.org/W14-3405/.

- 37.Surdeanu M, Ciaramita M, Zaragoza H. Learning to Rank Answers on Large Online QA Collections. In: Moore JD, Teufel S, Allan J, Furui S, editors. Proceedings of ACL-08: HLT. Columbus: Association for Computational Linguistics; 2008. pp. 719–27. https://aclanthology.org/P08-1082/.

- 38.Ely JW, Osheroff JA, Ferguson KJ, Chambliss ML, Vinson DC, Moore JL. Lifelong self-directed learning using a computer database of clinical questions. J Fam Pract. 1997;45(5):382–90. [PubMed] [Google Scholar]

- 39.D’Alessandro DM, Kreiter CD, Peterson MW. An evaluation of information-seeking behaviors of general pediatricians. Pediatrics. 2004;113(1):64–9. [DOI] [PubMed] [Google Scholar]

- 40.Guo H, Na X, Li J. Qcorp: an annotated classification corpus of Chinese health questions. BMC Med Inform Decis Making. 2018;18(S–1):39–47. 10.1186/S12911-018-0593-Y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Wibowo H, Fuadi E, Nityasya M, Prasojo RE, Aji A. COPAL-ID: Indonesian Language Reasoning with Local Culture and Nuances. In: Duh K, Gomez H, Bethard S, editors. Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers). Mexico City: Association for Computational Linguistics; 2024. pp. 1404–22. https://aclanthology.org/2024.naacl-long.77/. 10.18653/v1/2024.naacl-long.77.

- 42.Koto F, Mahendra R, Aisyah N, Baldwin T. Indoculture: exploring geographically influenced cultural commonsense reasoning across eleven Indonesian provinces. Trans Assoc Comput Linguist. 2024;12:1703–19. 10.1162/tacl_a_00726. [Google Scholar]

- 43.Wibowo HA, Prawiro TA, Ihsan M, Aji AF, Prasojo RE, Mahendra R, et al. Semi-Supervised Low-Resource Style Transfer of Indonesian Informal to Formal Language with Iterative Forward-Translation. In: 2020 International Conference on Asian Language Processing (IALP). 2020. pp. 310–5. 10.1109/IALP51396.2020.9310459.

- 44.Barik AM, Mahendra R, Adriani M. Normalization of Indonesian-English Code-Mixed Twitter Data. In: Xu W, Ritter A, Baldwin T, Rahimi A, editors. Proceedings of the 5th Workshop on Noisy User-generated Text (W-NUT 2019). Hong Kong: Association for Computational Linguistics; 2019. pp. 417–24. 10.18653/v1/D19-5554.

- 45.Ekakristi AS, Mahendra R, Adriani M. Finding Questions in Medical Forum Posts Using Sequence Labeling Approach. In: Gelbukh A, editor. Computational Linguistics and Intelligent Text Processing. Cham: Springer Nature Switzerland; 2023. p. 62–73. [Google Scholar]

- 46.Nurhayati S. Pencarian pertanyaan serupa pada forum konsultasi kesehatan online dengan pendekatan perolehan informasi [Bachelor’s thesis]. Depok: Faculty of Computer Science, Universitas Indonesia; 2019.

- 47.Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20(1):37–46. [Google Scholar]

- 48.Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–74. [PubMed] [Google Scholar]

- 49.Chen T, Guestrin C. XGBoost: A Scalable Tree Boosting System. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD ’16. New York: Association for Computing Machinery; 2016. pp. 785–94. 10.1145/2939672.2939785.

- 50.Vapnik VN. The Support Vector method. In: Gerstner W, Germond A, Hasler M, Nicoud JD, editors. Artificial Neural Networks – ICANN’97. Berlin: Springer; 1997. pp. 261–71.

- 51.Devlin J, Chang MW, Lee K, Toutanova K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In: Burstein J, Doran C, Solorio T, editors. Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). Minneapolis: Association for Computational Linguistics; 2019. pp. 4171–86. https://aclanthology.org/N19-1423/. 10.18653/v1/N19-1423.

- 52.Wilie B, Vincentio K, Winata GI, Cahyawijaya S, Li X, Lim ZY, et al. IndoNLU: Benchmark and Resources for Evaluating Indonesian Natural Language Understanding. In: Wong KF, Knight K, Wu H, editors. Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing. Suzhou: Association for Computational Linguistics; 2020. pp. 843–57. https://aclanthology.org/2020.aacl-main.85/. 10.18653/v1/2020.aacl-main.85.

- 53.Mahendra R, Hakim AN, Adriani M. Towards question identification from online healthcare consultation forum post in bahasa. In: 2017 International Conference on Asian Language Processing (IALP). 2017. pp. 399–402. 10.1109/IALP.2017.8300627.

- 54.Chawla N, Bowyer K, Hall L, Kegelmeyer W. SMOTE: synthetic minority over-sampling technique. J Artif Intell Res. 2002;16:321–57. 10.1613/jair.953. [Google Scholar]

- 55.He H, Bai Y, Garcia EA, Li S. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In: 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence). 2008. pp. 1322–8. 10.1109/IJCNN.2008.4633969.

- 56.Han H, Wang WY, Mao BH. Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning. In: Huang DS, Zhang XP, Huang GB, editors. Advances in Intelligent Computing. Berlin: Springer; 2005. pp. 878–87.

- 57.Ribeiro MT, Singh S, Guestrin C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, August 13-17, 2016. New York: Association for Computing Machinery (ACM); 2016. pp. 1135–44.

- 58.Suwarningsih W, Supriana I, Purwarianti A. ImNER Indonesian medical named entity recognition. In: 2014 2nd International Conference on Technology, Informatics, Management, Engineering & Environment. 2014. pp. 184–8. 10.1109/TIME-E.2014.7011615.

- 59.Saputra IF, Mahendra R, Wicaksono AF. Keyphrases Extraction from User-Generated Contents in Healthcare Domain Using Long Short-Term Memory Networks. In: Demner-Fushman D, Cohen KB, Ananiadou S, Tsujii J, editors. Proceedings of the BioNLP 2018 workshop. Melbourne: Association for Computational Linguistics; 2018. pp. 28–34. 10.18653/v1/W18-2304.

Data Availability Statement

Data is available at https://github.com/ranianhanami/semantic_class_idn_chqa.