Abstract

PURPOSE

For patients with cancer and their doctors, prognosis is important for choosing treatments and supportive care. Oncologists’ life expectancy estimates are often inaccurate, and many patients are not aware of their general prognosis. Machine learning (ML) survival models could be useful in the clinic, but there are potential concerns involving accuracy, provider training, and patient involvement. We conducted a qualitative study to learn about patient and oncologist views on potentially using a ML model for patient care.

METHODS

Patients with metastatic cancer (n = 15) and their family members (n = 5), radiation oncologists (n = 5), and medical oncologists (n = 5) were recruited from a single academic health system. Participants were shown an anonymized report from a validated ML survival model for another patient, which included a predicted survival curve and a list of variables influencing predicted survival. Semistructured interviews were conducted using a script.

RESULTS

Every physician and patient who completed their interview said that they would want the option for the model to be used in their practice or care. Physicians stated that they would use an AI prognosis model for patient triage and increasing patient understanding, but had concerns about accuracy and explainability. Patients generally said that they would trust model results if presented by their physician but wanted to know if the model was being used in their care. Some reacted negatively to being shown a median survival prediction.

CONCLUSION

Patients and physicians were supportive of use of the model in the clinic, but had various concerns, which should be addressed as predictive models are increasingly deployed in practice.

INTRODUCTION

Physicians caring for patients with cancer assess risks/benefits of therapies and educate patients about their disease. Most patients want to know their prognosis and chance of cure, and physicians often inform patients when their cancer is curable as it instills hope and increases resiliency for side effects.1,2 When a cancer is incurable, sharing prognosis with the patient allows them to make informed decisions.3,4

Concerns about the inaccuracy of prognosis estimates and about patients’ emotional well-being cause many physicians to forgo prognosis conversations with patients.5,6 Prognostic conversations occur infrequently; while 71% of patients indicated that they wanted to discuss prognosis with their physician, only 17.6% reported having these critical conversations.5 The absence of these conversations can cause misunderstandings about goals of care. In one study, up to 81% of patients receiving palliative chemotherapy believed that the treatment intent was curative.7 Patients who do not discuss prognosis with their physician are more likely to overestimate it.3 Inaccurate estimates can impede the transition away from active treatment or cause patients to forgo effective therapies.8

Machine learning/artificial intelligence (ML/AI) could improve the accuracy of prognosis estimates and aid physicians in determining which patients need these conversations most. Patients and physicians are becoming increasingly familiar with technology and expect its clinical use.9,10 ML has been used in multiple models aimed at improving the prediction of survival time in patients with cancer.10–13 However, many questions remain about how these models can be used and incorporated into clinical practice.9,14,15

A few studies have been performed on AI/ML acceptance in health care; only some of those have considered specific applications or showed participants what a report from an AI model would look like.14,16–18 We previously developed a ML prognostic model for patients with metastatic cancer and found that it performed slightly better than physicians.13,19,20 However, issues remain regarding how to implement this model in the clinical setting, chief among them being patient and provider attitudes toward such a tool and how it may affect decisions. We therefore conducted a qualitative study to better understand patients’ and oncologists’ understanding, perceptions, and feelings toward this computer model, which included showing them a model report of a real patient.

METHODS

The ML survival model was trained using the electronic health records of 12,588 patients with metastatic cancer.13 Over 4,000 predictor variables are considered by the model when making predictions, including laboratory values, vital signs, diagnosis codes, and term frequencies from the note text.13 A list of the features causing the largest increase and decrease in predicted survival for each patient is generated.20 The ML survival model was used to create a sample report for a real patient with prostate cancer that could be shown to their physician; personally identifiable information was changed (Fig 1).

FIG 1.

Anonymized model report shown to participants. MCH, mean corpuscular hemoglobin; MCHC, mean corpuscular hemoglobin concentration; MCV, mean corpuscular volume; PSA, prostate-specific antigen.
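The report shown to participants included a predicted survival curve, and some patients reacted to seeing a median survival prediction. As a minimal sketch of how a median could be read off a discretized survival curve, the Python below returns the first time point at which the predicted survival probability falls to 0.5 or lower. The function name, time grid, and probabilities are hypothetical illustrations and are not taken from the study's model or from the report in Fig 1.

```python
from typing import Optional, Sequence


def median_survival(times: Sequence[float],
                    survival: Sequence[float]) -> Optional[float]:
    """Return the first time at which survival probability is <= 0.5.

    Returns None when the curve never reaches 0.5 within the time grid,
    in which case the median is not reached over the follow-up shown.
    """
    for t, s in zip(times, survival):
        if s <= 0.5:
            return t
    return None


# Hypothetical predicted curve (months, probability of being alive).
months = [0, 3, 6, 12, 24, 36]
surv = [1.0, 0.85, 0.70, 0.48, 0.25, 0.10]

print(median_survival(months, surv))
```

A single median hides the spread of the curve, which may be one reason to present the full curve alongside it.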

From July 2021 to February 2022, patients and oncologists were approached to participate in semistructured interviews. Patients were required to be at least 18 years old, have metastatic cancer (excluding leukemia or lymphoma), be English-speaking, and not have severe cognitive impairment. See the study Protocol for full inclusion criteria. Participants were shown the sample ML model report (Fig 1) and then asked standardized questions, which can be found in the study Protocol.

All patients received care at the Stanford Cancer Institute and were referred by medical or radiation oncologists. Caregivers were welcomed but not required to be part of the interview. Interviews occurred in person or via Zoom, and patients were not interviewed by their treating physician to ensure unbiased discussions. Physicians were recruited for interviews in batches to include a range of sexes and subspecialties. Patient household incomes were estimated using 2019 US Census ZIP Code data.

Interview transcripts were uploaded to qualitative data management software (NVivo 1.6.1). A research team member (R.D.H.) read the transcripts and inductively created codes corresponding to the ideas being discussed. The codes were used to form a codebook following an iterative process. Transcripts were reviewed by two study members (R.D.H. and A.N.E.), and codes were assigned. Discrepancies were discussed until consensus was reached. Coded excerpts were then analyzed thematically by the entire study team, following the constant comparative method, where codes were grouped to enable pattern finding.21

This study was approved by the Institutional Review Board, and all participants provided informed consent with no compensation.

RESULTS

Fifteen patients, 10 physicians, and five caregivers were interviewed (demographics in Table 1), meeting the study’s accrual goal. Twelve physicians had been approached for participation, for an enrollment rate of 83%; the number of patients approached was not recorded. Patient interviews ranged from 12 to 29 minutes (median, 19). Physician interviews ranged from 13 to 38 minutes (median, 31). One patient interview was stopped early at the patient’s request.

TABLE 1.

Participant Characteristics

| Patient Demographic | n = 15 |
|---|---|
| Sex, No. (%) | |
| Male | 8 (53) |
| Female | 7 (47) |
| Age, years, No. (%) | |
| 50–59 | 2 (13) |
| 60–69 | 3 (20) |
| 70–79 | 7 (47) |
| 80–89 | 3 (20) |
| Race, No. (%) | |
| White | 8 (53) |
| Asian | 6 (40) |
| Others | 1 (7) |
| Ethnicity, No. (%) | |
| Non-Hispanic/non-Latino | 13 (87) |
| Hispanic/Latino | 2 (13) |
| Household income (USD), No. (%) | |
| 50,000–99,999 | 8 (53) |
| 100,000–149,999 | 3 (20) |
| 150,000–199,999 | 3 (20) |
| Not reported | 1 (7) |
| Residency, No. (%) | |
| California | 13 (87) |
| Non-California | 2 (13) |
| Education, No. (%) | |
| High school/GED | 3 (20) |
| BA/BS/associates | 5 (33) |
| Postgraduate degree | 6 (40) |
| Unknown | 1 (7) |
| Caregiver(s) present during interview,ᵃ No. (%) | 4 (27) |
| Physician Demographic | n = 10 |
| Occupation, No. (%) | |
| Medical oncologist | 5 (50) |
| Radiation oncologist | 5 (50) |
| Sex, No. (%) | |
| Male | 5 (50) |
| Female | 5 (50) |
| Years in practice, No. (%) | |
| 0–9 | 3 (30) |
| 10–19 | 5 (50) |
| 20–29 | 2 (20) |
| Practice type, No. (%) | |
| Tertiary care center | 8 (80) |
| Outreach site | 2 (20) |

Abbreviations: GED, General Educational Development; USD, US dollars.

ᵃ One patient had two caregivers present.

At the time of analysis, 3 of 15 patients interviewed were deceased, with an average of 140 days between interview and death.

Patient Statistics

Most patients interviewed (n = 9, 60%) had not discussed prognosis with a physician; of those who had, most (n = 4, 67%) found the conversation useful (Table 2). Of those who had not discussed prognosis, most (n = 6, 67%) reported wanting to have this conversation. All patients who completed the interview (n = 14, 93% of those interviewed) said that they would want the option for a prognostic model to be used in their care, and the majority of these (n = 11, 79%) would want to see their report if the model was run on them.

TABLE 2.

Patient Responses

| Item | Yes, No. (%) | No, No. (%) | Unsure, No. (%) | Unanswered/Not Applicable, No. (%) |
|---|---|---|---|---|
| Have discussed prognosis with a physician | 6 (40) | 9 (60) | 0 (0) | 0 (0) |
| Found the prognosis discussion helpful | 4 (27) | 2 (13) | 0 (0) | 9 (60) |
| Have not had a prognosis discussion but want to | 6 (40) | 3 (20) | 0 (0) | 6 (40) |
| Want the option for a prognostic model to be used in their care | 14 (93) | 0 (0) | 0 (0) | 1 (7) |
| Believe they have the right to know if a prognostic computer model is used in their care | 11 (73) | 3 (20) | 0 (0) | 1 (7) |
| Would want to see the model report if it was run on them | 11 (73) | 1 (7) | 2 (13) | 1 (7) |
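Percentages in Table 2 are computed over all 15 patients interviewed, whereas some percentages in the text are computed over the 14 patients who completed the interview. The short Python sketch below reproduces that arithmetic for one item so the two denominators can be compared directly; the helper name `pct` and the constant names are illustrative only, and the counts are those reported in this article.

```python
def pct(count: int, denominator: int) -> int:
    """Percentage of count over denominator, rounded to a whole number."""
    return round(100 * count / denominator)


INTERVIEWED = 15  # all patients interviewed (Table 2 denominator)
COMPLETED = 14    # patients who completed the full interview

want_report = 11  # patients who would want to see their model report

print(pct(want_report, INTERVIEWED))  # share of all patients interviewed
print(pct(want_report, COMPLETED))    # share of patients who completed
print(pct(10, 12))                    # physician enrollment: 10 of 12 approached
```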

Physician Statistics

While 8 of 10 oncologists reported that they inform patients if their cancer is incurable, only three would show any patient the model report if asked (Table 3). Two would show the report in certain instances, two after greater validation, and three would rarely or never show the report.

TABLE 3.

Physician Responses

| Item | Medical Oncologists (n = 5), No. (%) | Radiation Oncologists (n = 5), No. (%) |
|---|---|---|
| Prognosis communication | | |
| Typically inform patients if their cancer is incurable | 5 (100) | 3 (60) |
| Typically discuss prognosis with patients | 3 (60) | 1 (20) |
| Only discuss prognosis with patients if directly asked | 1 (20) | 2 (40) |
| Model feedback | | |
| Would want option to use model in clinical care | 5 (100) | 5 (100) |
| Would show patients model report if asked | 1 (20) | 2 (40) |
| Would only show model reports to select patients | 1 (20) | 1 (20) |
| Would only show patients model report if more validated | 2 (40) | 0 (0) |
| Would rarely or never show patients model report | 1 (20) | 2 (40) |

Physician Themes

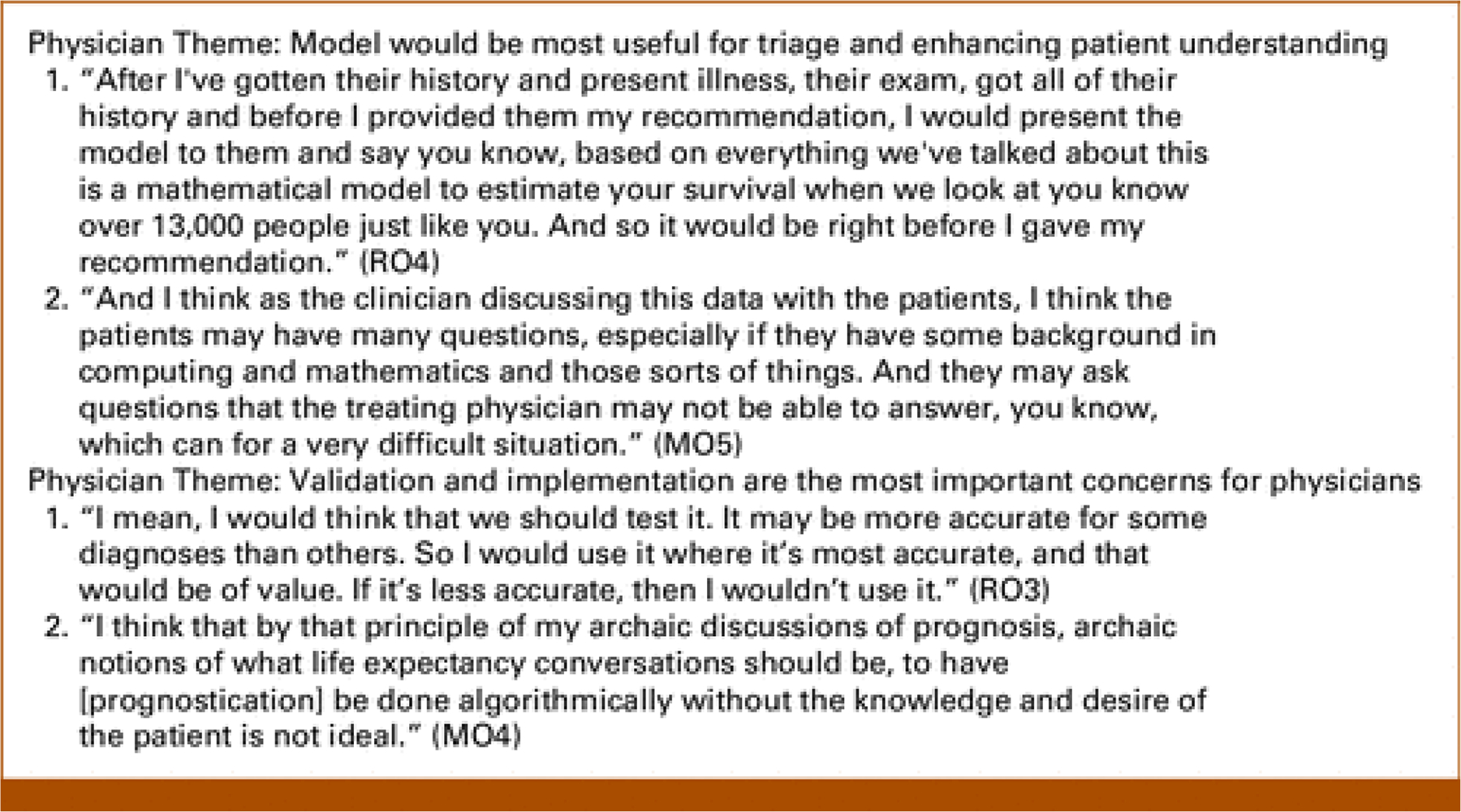

Theme: Model Would Be Most Useful for Triage and Enhancing Patient Understanding

Every physician interviewed thought that the model could be helpful in clinical practice. None of the physicians suggested that it would replace them making their own prognostic estimates, rather emphasizing its utility as a supporting tool. The usefulness for triaging patients was one of the most compelling features, helping physicians prioritize those in the greatest need of advance care planning (ACP) conversations. One physician stated, “I know I need to talk to everybody [about ACP] every time, but I can’t do that, so I think [the model] has to be used as a triage system” (MO2).

Physicians said that the prognostic model could be useful in deciding between more aggressive and palliative treatments. For example, knowing that the patient had a short life expectancy could help the radiation oncologist decide to “shorten their radiation course…or probably just omit radiation” (RO3).

Patient understanding of their prognosis was a major concern for many of the physicians interviewed. Most physicians said that they struggled with balancing the need to affirm patient understanding without being cruel or focusing on death. Even after extensive ACP conversations, one physician found many patients “surprised… things are not going well” near the end of life, emphasizing the importance of clear communication, “to help the patients internalize and accept the knowledge” (MO5). Vague prognosis estimates can make it harder for patients to accept the terminal nature of their situation, with physicians potentially overestimating life expectancy to not dishearten the patient.

Multiple physicians said that the model could enhance patient understanding of their prognosis. Possible uses relied on its objectivity when making treatment decisions. Physicians said that they would specifically highlight its personalization to the patient and lack of bias, especially when the model confirms their own prognosis estimate.

Although most physicians were open to using the model for patient care, they also expressed concerns about becoming too reliant on it. One physician worried that dependence on the model would cause doctors to “override [their] own clinical judgment … which may not be so great, but some of us are pretty good … we know patients much better than the model does, and we can account for variations the model is not taking into account” (RO3).

Theme: Model Validation and Implementation Are Important Concerns

Physicians were interested in prospectively evaluating this model before incorporating it into their clinical practice. Although the example model report included some of the considered factors, the black box nature of the predictions unsettled some interviewees. For physicians to trust the model, “It’s not going to be enough [for a model] to spit out a number, it’s going to need to explain why” because “patients will need a little bit more” than a limited list of considerations (MO5).

Some physicians said that they found it difficult to accept the reported outcome of the model if it differed from their expectations. Having been informed that the model had performed slightly better than physicians in a prospective study, one doctor wondered if those physicians were just poor prognosticators, wanting to see how the model compared with his own judgment prospectively before trusting it (RO3). However, another physician cautioned against being overly hesitant to adopt the model into practice: “Do we really say no to good information, because basically it’s good information, it’s adding to our already present information” (MO2).

Physicians were split on whether patients should be notified about model usage, with only one (20%) of the radiation oncologists and four (80%) of the medical oncologists saying that this should be required. Those who were against informing patients mainly cited the vast number of tools they already use to make clinical decisions without patient knowledge. Those who felt that notification should be required focused on transparency in treatment decision making: “if [patients] weren’t notified it would probably cause a scandal” (MO3). Another physician worried that patients would question physicians about how the model came to its prediction, putting the physician in a very difficult situation if they did not have answers (MO5).

Physicians who believed that patient consent was required before using the model on them mainly believed that mortality predictions required special consideration: “To have [life expectancy conversations] be done algorithmically without the knowledge and desire of the patient is not ideal” (MO4).

Selected quotations from physicians are presented in Figure 2.

FIG 2.

Physician quotes.

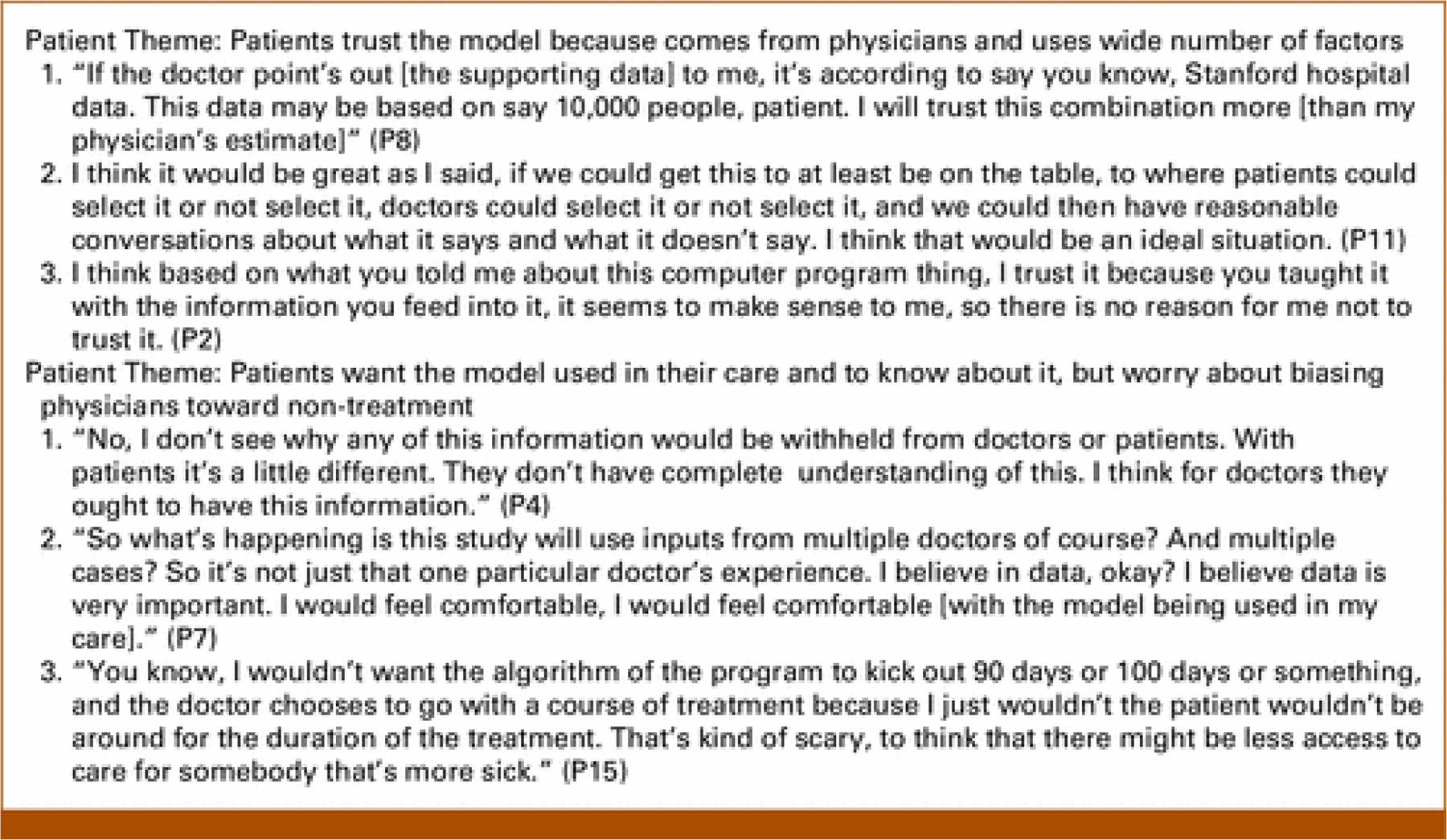

Patient Themes

Theme: Patients Trust the Model Because It Comes From Physicians and Uses Many Predictors

All 14 patients who completed the full interview wanted the option to have the model used in their care. Patients were especially interested in the wide number of factors the model considers as “it’s very easy [for a physician] to forget something that’s a minor issue or a minor thing…” (P13), unintentionally biasing the physician’s prognostic estimate. Multiple patients said that time demands on providers often make it challenging to take all the relevant factors for prognosis into consideration, so the comprehensiveness of the model was appealing.

Some patients trusted the model more than their physician for their prognostic estimate. They felt the model ensured that their prognosis was based on a full view of their condition, with one patient commenting that a tool using “data from thousands and thousands of cases” was superior to a doctor relying on their “own opinion from their own personal experiences” (P7). This was especially important to patients who were more skeptical of their physicians, but nearly all patients were in favor of using supplemental tools that enhance physician knowledge.

Most of the patients implicitly trusted the validity of the model if its results were being presented by a physician. While most patients wanted to know if the model was being used, others viewed the model as just another tool that physicians could use in the background (Table 2). One patient thought that it was up to the doctor if they shared the results with the patient, as patients “don’t have complete understanding” of what various results would mean (P4). A caregiver echoed this, noting “we don’t ask [the doctor] about what papers or textbooks he’s basing his decisions on,” implying the burden of choosing which sources to trust fell on the doctor rather than the patient (caregiver to P4).

Theme: Patients Want the Model to Be Used in Their Care and to Know About It

In most interviews, patients expressed full trust in their doctors’ judgment, alluding to the significant number of tools and resources patients know that doctors draw on to make treatment decisions. Many trusted their physicians implicitly to act in their patients’ best interests; still, 11 of the 14 patients who completed the full interview (79%) said that they would want to be informed if the model was used in their care (Table 2). Most patients (n = 11, 79%) stated that they would want to see the report if the model was run on them, including three patients who did not believe that they had the right to know if the model was being used in their care.

Many patients compared the model with other tools currently being used to assist with treatment decisions. One patient commented on how the model could help standardize prognoses given by different physicians even within the same care team, as “everybody’s going to put value on different things… to just have the facts sometimes is really important” (P15).

Theme: Patients Worry About the Model Biasing Physicians Toward Nontreatment

Multiple patients were concerned that a shorter prognosis would bias physicians toward not providing treatments they would otherwise offer. Over-reliance on the model prediction, especially if its prediction persuaded against treatment, was of specific concern to patients. Caregivers expressed similar worries of physicians being less aggressive with their treatment plans if the patient has a shorter predicted lifespan: “I would fear it would, is it objective or subjective to determine how aggressive you want to treat a patient’s cancer, and how would these results impact that?” (caregiver of P6).

Selected quotations from patients are presented in Figure 3. Additional themes from physician and patient interviews are found in Appendix 1.

FIG 3.

Patient quotes.

DISCUSSION

Clinicians, patients, and caregivers had generally positive views about the use of a ML prognostic model in patient care, but had concerns about validity and implementation. The positive response from patients mirrors results from a previous study examining a mortality prediction tool for inpatients.18

One significant obstacle to physicians using ML prognostic models in practice is their lack of understanding of AI. Distrust of factors contrary to their clinical intuitions and concerns about answering patient questions were some of the most common hesitations that physicians cited. Explainable AI, which encompasses the ways that AI applications are described using user-centered terms, or providing standardized model cards describing the model could possibly increase provider trust.15,22 In addition, providing detailed information about the data set that the model was trained on could assuage questions about whether the model’s accuracy varies by disease site and other characteristics.

Physicians differed in how much they wanted to understand the model’s methodology. Most physicians commented on high PSA being listed as a factor increasing survival, which goes against clinical knowledge. The requirement for a cancer diagnosis to be included in the training set may explain this, as training set patients with prostate cancer (and, consequently, elevated PSA) had a more favorable prognosis than those with more rapidly fatal cancer types, an example of Simpson’s paradox.23 Physicians generally do not have extensive training in statistics, making it difficult for them to understand why counterintuitive factors affecting survival are accurate for this particular population.24 Since the primary purpose of showing some factors considered was to increase transparency and bolster physician trust, variations such as showing the weighting of variables or providing confidence intervals could assuage some concerns.
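The PSA observation can be illustrated with a small worked example. The Python sketch below uses entirely hypothetical group sizes and mean survival times (they are not data from the training set) to show how a marker can be associated with longer survival in a pooled cohort while being associated with shorter survival within each cancer type.

```python
# Hypothetical cohort: (cancer type, marker level) -> (patients, mean survival in months).
# All numbers are invented for illustration only.
groups = {
    ("prostate", "high"): (80, 30.0),
    ("prostate", "low"): (20, 36.0),
    ("other", "high"): (10, 6.0),
    ("other", "low"): (90, 8.0),
}


def pooled_mean(level: str) -> float:
    """Mean survival across all cancer types for one marker level."""
    rows = [(n, m) for (_, lvl), (n, m) in groups.items() if lvl == level]
    total_patients = sum(n for n, _ in rows)
    return sum(n * m for n, m in rows) / total_patients


def within_type_mean(cancer_type: str, level: str) -> float:
    """Mean survival for one marker level within a single cancer type."""
    return groups[(cancer_type, level)][1]


# Pooled across types, a high marker appears favorable...
print(round(pooled_mean("high"), 1), round(pooled_mean("low"), 1))

# ...but within each type, a high marker is unfavorable.
for cancer_type in ("prostate", "other"):
    print(cancer_type,
          within_type_mean(cancer_type, "high"),
          within_type_mean(cancer_type, "low"))
```

In this toy cohort the reversal arises because most high-marker patients belong to the cancer type with longer survival, so the marker partly acts as a proxy for cancer type.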

There was a notable discrepancy between patients and physicians: patients mostly trusted model results as they would other test results, as long as they were presented by their physicians, whereas physicians largely viewed the ML model as separate from other tools commonly used in clinical practice because it deals with mortality and relies on AI. All patients who completed the interview (n = 14) were amenable to the model being used in their care, in contrast to a study of the general public where most respondents were uncomfortable with AI involvement in medical applications.14 Physicians were much more cautious in trusting the model predictions and, in turn, were more hesitant to share the results with patients as they worried the results would be accepted as truth. However, 79% (n = 11) of patients who completed the interview felt that they had the right to know if the model was being used in their care, similar to other studies where the majority of patients expected to be informed, but each patient has their own preferences about how much they want to know.25 Ethical concerns about patient consent and access to model results will be integral to incorporation into clinical practice.

Several studies have assessed patient and clinician attitudes about the use of AI in prognostication, but patient respondents typically were younger than the average patient, and clinician respondents were often not in specialties most likely to use similar models routinely in practice.9,14 One advantage of our study is that participating patients had disease and demographic profiles matching the model’s intended demographic and practitioners were all oncologists who routinely take care of patients with metastatic cancer. Patients were of a high-risk population; 20% (n = 3) of the patients interviewed passed away within 2 months of the final interview. In addition, showing participants a sample report run on a real patient made the more abstract AI concepts tangible, unlike most previous studies which investigated physician and patient views on abstract AI applications.

This study was limited by not using randomized selection from a large pool of patients, leading to a potential bias as physicians might have been more likely to approach patients comfortable with discussing prognosis. In addition, all patients were treated in a tertiary care center in the highly educated San Francisco Bay Area and accordingly might have had greater average education and income than the overall cancer patient population.26 Future studies with a more diverse patient population will be required to assess patient trust of similar models across a wide variety of care settings.

In conclusion, most patients and physicians were comfortable with a ML prognostic model being used for patients with metastatic cancer. Although patients largely said that they trusted the model when results were presented by a physician, some physicians expressed skepticism about the model’s accuracy or suitability for clinical use. The generally positive response encourages further investigation into incorporating prognostic models into the clinic and ways to encourage physician trust of the results.

CONTEXT.

Key Objective

To understand the views and concerns of patients, caregivers, and oncologists regarding utilization of machine learning/artificial intelligence (ML/AI) models to predict the survival of patients with metastatic cancer.

Knowledge Generated

Patients and oncologists were generally positive about integrating the model into patient care. Although patients largely trusted the model results when presented by a physician, most oncologists wanted further proof of accuracy before widely using the model in clinical care.

Relevance

AI-powered programs are becoming more accepted in clinical care and can improve patient outcomes when implemented correctly. These results highlight the positive attitudes of patients toward ML/AI models for mortality prediction, an especially sensitive subject. Continuous efforts to demonstrate accuracy and utility to oncologists will increase physician confidence in using the model, improving prognostic accuracy and assisting in treatment decision making.

ACKNOWLEDGMENT

We thank the patients and their family members, and Drs Hilary Bagshaw, Curtis Chong, Millie Das, Gregory Heestand, Saad Khan, Carol Marquez, Kavitha Ramchandran, Scott Soltys, Nicholas Trakul, and Sandra Zaky for participating in the study.

AUTHORS’ DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted.

I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO’s conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/cci/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Daniel T. Chang

Research Funding: RefleXion Medical, ViewRay

Other Relationship: ViewRay

Kavitha J. Ramchandran

Consulting or Advisory Role: Drishti, GroupWell, Varian Medical Systems

Michael F. Gensheimer

Stock and Other Ownership Interests: Roche/Genentech

Research Funding: Varian Medical Systems, X-RAD Therapeutics

Open Payments Link: https://openpaymentsdata.cms.gov/physician/431774

No other potential conflicts of interest were reported.

Footnotes

PRIOR PRESENTATION

Presented in part at the American Society for Radiation Oncology Annual Meeting, San Antonio, TX, October 23–26, 2022.

REFERENCES

1. Step MM, Ray EB: Patient perceptions of oncologist–patient communication about prognosis: Changes from initial diagnosis to cancer recurrence. Health Commun 26:48–58, 2011
2. Wallberg B, Michelson H, Nystedt M, et al: Information needs and preferences for participation in treatment decisions among Swedish breast cancer patients. Acta Oncol 39:467–476, 2000
3. Enzinger AC, Zhang B, Schrag D, et al: Outcomes of prognostic disclosure: Associations with prognostic understanding, distress, and relationship with physician among patients with advanced cancer. J Clin Oncol 33:3809–3816, 2015
4. Epstein AS, Prigerson HG, O’Reilly EM, et al: Discussions of life expectancy and changes in illness understanding in patients with advanced cancer. J Clin Oncol 34:2398–2403, 2016
5. Abernethy ER, Campbell GP, Pentz RD: Why many oncologists fail to share accurate prognoses: They care deeply for their patients. Cancer 126:1163–1165, 2020
6. Krishnan M, Temel J, Wright A, et al: Predicting life expectancy in patients with advanced incurable cancer: A review. J Support Oncol 11:68–74, 2013
7. Weeks JC, Catalano PJ, Cronin A, et al: Patients’ expectations about effects of chemotherapy for advanced cancer. N Engl J Med 367:1616–1625, 2012
8. Patel MI, Periyakoil VS, Moore D, et al: Delivering end-of-life cancer care: Perspectives of providers. Am J Hosp Palliat Care 35:497–504, 2018
9. Blease C, Kaptchuk TJ, Bernstein MH, et al: Artificial intelligence and the future of primary care: Exploratory qualitative study of UK general practitioners’ views. J Med Internet Res 21:e12802, 2019
10. Bohr A, Memarzadeh K: The rise of artificial intelligence in healthcare applications, in Bohr A, Memarzadeh K (eds): Artificial Intelligence in Healthcare. Amsterdam, The Netherlands, Elsevier, 2020, pp 25–60
11. Li J, Zhou Z, Dong J, et al: Predicting breast cancer 5-year survival using machine learning: A systematic review. PLoS One 16:e0250370, 2021
12. Liu D, Wang X, Li L, et al: Machine learning-based model for the prognosis of postoperative gastric cancer. Cancer Manag Res 14:135–155, 2022
13. Gensheimer MF, Henry AS, Wood DJ, et al: Automated survival prediction in metastatic cancer patients using high-dimensional electronic medical record data. J Natl Cancer Inst 111:568–574, 2019
14. Khullar D, Casalino LP, Qian Y, et al: Perspectives of patients about artificial intelligence in health care. JAMA Netw Open 5:e2210309, 2022
15. Mitchell M, Wu S, Zaldivar A, et al: Model cards for model reporting, in: Proceedings of the Conference on Fairness, Accountability, and Transparency. ACM, 2019, pp 220–229
16. Parikh RB, Manz CR, Nelson MN, et al: Clinician perspectives on machine learning prognostic algorithms in the routine care of patients with cancer: A qualitative study. Support Care Cancer 30:4363–4372, 2022
17. Haan M, Ongena YP, Hommes S, et al: A qualitative study to understand patient perspective on the use of artificial intelligence in radiology. J Am Coll Radiol 16:1416–1419, 2019
18. Saunders S, Downar J, Subramaniam S, et al: mHOMR: The acceptability of an automated mortality prediction model for timely identification of patients for palliative care. BMJ Qual Saf 30:837–840, 2021
19. Gensheimer MF, Aggarwal S, Benson KR, et al: Automated model versus treating physician for predicting survival time of patients with metastatic cancer. J Am Med Inform Assoc 28:1108–1116, 2021
20. Gupta D, Fardeen T, Teuteberg W, et al: Use of a computer model and care coaches to increase advance care planning conversations for patients with metastatic cancer. J Clin Oncol 39, 2021 (suppl 28; abstr 8)
21. Boeije HA: Purposeful approach to the constant comparative method in the analysis of qualitative interviews. Qual Quant 36:391–409, 2002
22. Pawar U, O’Shea D, Rea S, et al: Explainable AI in healthcare, in: 2020 International Conference on Cyber Situational Awareness, Data Analytics, and Assessment (CyberSA). IEEE, 2020, pp 1–2
23. Kievit RA, Frankenhuis WE, Waldorp LJ, et al: Simpson’s paradox in psychological science: A practical guide. Front Psychol 4:513, 2013
24. Bartlett G, Gagnon J: Physicians and knowledge translation of statistics: Mind the gap. CMAJ 188:11–12, 2016
25. Aneja S, Chang E, Omuro A: Applications of artificial intelligence in neuro-oncology. Curr Opin Neurol 32:850–856, 2019
26. Census: Census 2010 DP-1. Census.gov. https://www.census.gov/data/developers/data-sets/decennial-census.2010.html#sf1
