Abstract

The aim of this work was to study the relationship between cortical activity and visual perception. To do so, we developed a psychophysical technique that dissociates the visual percept from the visual stimulus and thus distinguishes brain activity reflecting the perceptual state from activity reflecting other stages of stimulus processing. We used dichoptic color fusion to make identical monocular stimuli of opposite color contrast “disappear” at the binocular level and thus become “invisible” as far as conscious visual perception is concerned. By imaging brain activity while subjects discriminated between face and house stimuli presented in this way, we found that house-specific and face-specific brain areas are always activated in a stimulus-specific way, regardless of whether the stimuli are perceived. Absolute levels of cortical activation, however, were lower with invisible than with visible stimulation. We conclude that there is no terminal “perceptual” area in the visual brain. Instead, the brain regions involved in processing a visual stimulus are also involved in its perception, and the difference between the two is a higher level of activity in the specific brain region when the stimulus is perceived.

What is the relationship between brain activity and perception, and how can we chart those parts of the brain whose activity is the neural equivalent of our subjective perceptual experience (1)? To dissect processing from perception, we need experimental methods that dissociate the stimulus from the percept. Previous studies (2–5) have used binocular rivalry for this purpose: a different image is presented to each eye, and perception alternates between the two. Binocular rivalry might reflect a competition between the two eyes (6), although evidence suggests that it arises at a later stage of the system through competition between alternative stimulus interpretations (refs. 7 and 8, but see also ref. 9). By monitoring brain activity under these conditions of constant stimulus and alternating perception, one might hope to distinguish areas that respond simply to stimulation from those whose response is dictated by the percept. Such stimuli have not given straightforward physiological results, however. All visual areas contain cells that follow either the stimulus or the percept, and some neurons show even more unexpected properties, responding when their preferred stimulus is perceptually suppressed (2, 3). In humans, fluctuations in the blood oxygen level-dependent signal between the fusiform face area and the parahippocampal place area during binocular rivalry have been reported to mirror perceptual alternations (5). During such bistable perceptual phenomena, however, suppressive frontoparietal brain mechanisms are also engaged (10). The covariation between perception and activation of the specialized areas therefore does not necessarily imply that the two are equivalent. Any relationship between the unstable percept of binocular rivalry and brain activity reveals only the stages at which the neural competition between the two images has been resolved, not the areas that mediate the percept (2–5). Binocular rivalry studies thus have not distinguished the neural activity underlying perception from that underlying other stages of visual processing.

In binocular fusion, the two monocular inputs are combined before rivalry between them has time to develop. Two different colors briefly presented at corresponding retinal locations of the two eyes result in the perception of a single mixed color (11, 12). If a red square is flashed to one eye and an identical green square to the corresponding retinal location of the other eye, the subject perceives a yellow square. Continuous red/green fusion, and thus continuous perception of the yellow square, can be maintained with repetitive brief flashes (<100 ms) separated by longer (>100 ms) nonstimulation intervals (13). Based on this principle, we developed a method that makes any two-color image “invisible” by reversing its color contrast in one eye. The same image is clearly recognizable when both eyes receive identical stimuli. Our aim was to learn how brain areas specialized for faces and objects (14) respond to stimuli that are perceived clearly when either eye is stimulated alone but become invisible when viewed simultaneously through both eyes. Our paradigm allowed us to compare brain activity produced by nonperceived face/house stimuli of dichoptically opposite color contrast with that produced by two other kinds of stimulation: (i) face/house stimuli of the same color contrast in the two eyes, which are therefore perceived, and (ii) dichoptically opposite uniform color fields, which lead to the same null percept as dichoptically opposite face/house stimulation. We were thus able to dissociate the stimulus from the percept both in the same way as binocular rivalry (i, same stimulus/varying percept) and in the opposite way (ii, same percept/varying stimulus). No additional suppressive brain mechanisms have been described in binocular fusion, so this approach should give a more direct indication of the relationship between brain activity and perception.

Methods

Stimulus Description.

Stimuli were isoluminant two-color drawings of faces and houses with low color contrast, obtained by adding a small amount of either green or red to an initial gray. Two opposite images were thus produced: red on green and green on red. A Gaussian function with a radius of 3 pixels was used to blur the edges and thus aid the “disappearance” of opposite stimuli (see below). Uniform reddish and greenish fields were used as controls. Each eye was stimulated separately, and the two monocular images were fused into a single binocular percept. There were two types of stimulation. In “same” stimulation the two monocular images were identical, and in “opposite” stimulation they had opposite color contrast but were otherwise identical. The total number of conditions was therefore 12: four (two same and two opposite) for each of the three stimulus types (faces, houses, and controls). Stimuli of all conditions appeared in random order and subtended 7 × 5°. Each was surrounded by a white frame with a small central fixation cross. Black cardboard obstructed the contralateral hemifield from each eye, and +3 lenses were used to help subjects fuse and focus. The frames and crosses were visible at all times. Before the experiment, they were used to determine, for each subject, the best position of the two monocular stimuli for complete fusion. In the scanner, the two monocular stimuli were presented simultaneously as repetitive ON flashes of ≈50 ms separated by OFF intervals of ≈150 ms, during which only the frame and fixation cross were visible. Continuous fusion (instead of binocular rivalry) was thus achieved (13). Each stimulus train lasted 1.5 s and was followed by a 1.5-s rest period (frame and cross still visible). During the rest period, subjects reported their percept by pressing one of three buttons: “face,” “house,” or “nothing.” Scanning was divided into two 10-min sessions, each consisting of 192 events of 3 s.
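The cancellation behind the opposite condition can be illustrated with a toy model. The Python sketch below assumes that binocular color combination is a simple per-pixel average of the two eyes' images, which is a deliberate simplification. It uses hypothetical values for the gray level, the amount of added red or green, and the blur, treating the 3-pixel blur as a Gaussian with a sigma of 3 pixels. It also ignores the luminance calibration that was set for each subject.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def make_pair(mask, gray=0.5, delta=0.04, blur_sigma=3.0):
    """Return (red_on_green, green_on_red) RGB images built from a binary drawing.

    mask: 2-D array, 1 on the drawing (figure) and 0 on the background.
    gray, delta, blur_sigma are hypothetical values chosen for illustration.
    """
    # Blur the figure edges so that the two images cancel more smoothly.
    m = gaussian_filter(mask.astype(float), sigma=blur_sigma)
    base = np.full(mask.shape + (3,), gray)
    red_on_green = base.copy()
    red_on_green[..., 0] += delta * m          # reddish figure
    red_on_green[..., 1] += delta * (1 - m)    # greenish background
    green_on_red = base.copy()
    green_on_red[..., 0] += delta * (1 - m)    # reddish background
    green_on_red[..., 1] += delta * m          # greenish figure
    return red_on_green, green_on_red

def fuse(left, right):
    """Toy binocular combination: average the two eyes' images (an assumption)."""
    return 0.5 * (left + right)

def red_green_contrast(img):
    """Spatial spread of the red-minus-green signal; 0 means a uniform field."""
    return float(np.std(img[..., 0] - img[..., 1]))

mask = np.zeros((64, 64))
mask[16:48, 24:40] = 1.0    # stand-in "drawing": a filled rectangle
rg, gr = make_pair(mask)
print(red_green_contrast(fuse(rg, rg)) > 0.01)   # same pair: True
print(red_green_contrast(fuse(rg, gr)) < 1e-12)  # opposite pair: True
```

Under this model a same pair keeps its full red/green contrast. An opposite pair averages to a uniform field, because every pixel receives equal amounts of added red and green, whether it lies on the figure or on the background.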

Subjects and Task.

Seven healthy right-handed subjects (three female, four male) with normal or corrected-to-normal vision were scanned. All gave informed consent, and the experiment was approved by the ethics committee of the Institute of Neurology (London). Before scanning, subjects were trained on the task for a few minutes inside the scanner until they could fuse the two monocular images correctly and felt confident with the discrimination. For each subject, the stimulus parameters (size, position, isoluminance point, color, and ON/OFF times) were adjusted around the baseline values given above. Adjustment continued until the subject verbally reported a house/face percept with same stimulation and its absence with opposite stimulation. Because sensitivity differed between subjects, the stimulus had to be adjusted individually. During scanning, subjects were instructed to make one of three choices (face, house, or neither) depending on what they saw. The psychophysical performances of different subjects during scanning were similar (see Fig. 1). This similarity reflects the individual adjustment made beforehand and argues against each subject adopting an idiosyncratic reporting criterion. The small variation between subjects reflects an objective perceptual effect rather than individual answering strategies. Fig. 1 also shows that 5–10% of opposite stimuli were perceived because of occasional imperfect fusion of the two images (e.g., when an eye movement changed the focal plane). Because subjects had the neither option, such cases could be detected and excluded from the analysis.

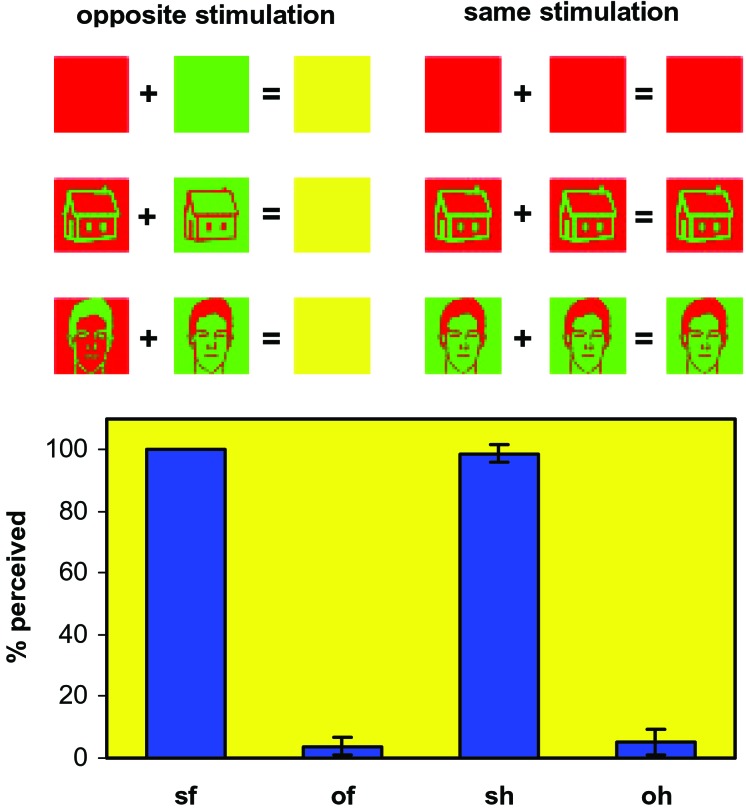

Figure 1.

A schematic presentation of the stimulation method used in this study and the averaged performance of the seven subjects in the face/house/nothing discrimination task. Dichoptic stimuli of opposite color contrast between the two eyes were invisible (opposite stimulation), whereas identical stimuli of the same color contrast were perceived on the vast majority of trials (same stimulation). Pictures of houses, faces, and uniformly colored controls were used. The upper panels show the input to the two eyes and the expected perceptual output (Upper). The lower panels show the subjects' actual psychophysical performance (Lower). Continuous fusion of the stimuli was achieved by using repetitive brief presentations (see Methods). sf, same faces; of, opposite faces; sh, same houses; oh, opposite houses. The averaged percentage of stimuli perceived is shown together with the standard error between subjects; each stimulus category comprised 448 presentations in total, i.e., 64 per subject. Control stimuli were never perceived as either a face or a house. The few trials in which subjects perceived an opposite stimulus were modeled separately in the design matrix (see Methods) and thus did not influence the pattern of brain activation.

The invisibility of opposite stimuli was also tested before scanning by using a two-alternative forced-choice (2AFC) method. In all experiments we used eight different faces and eight different houses as stimuli. For the 2AFC task, each of these stimuli was shown once in each of the four possible configurations, giving a total of (8 + 8) × 4 = 64 trials. Half of the trials were thus same and half were opposite conditions. Each scanning session had twice as many face/house trials (128), plus 64 monochromatic controls. In the same conditions, 98.2% of the answers were correct (SD = 2.5). In the opposite conditions, average 2AFC performance was 52.7% (SD = 4.2); 4/7 subjects scored above 50%, the highest score being 59.4%. We attribute this slightly above-chance performance to occasional imperfect fusion, which is why we did not use a 2AFC task during scanning.

A 2AFC task would also complicate the grouping of individual events into categories for functional MRI (fMRI) analysis. Opposite stimulation events with a correct response cannot be used, so the only valid data from the opposite category would be events with a wrong response (i.e., the stimulus was not perceived). However, nothing is necessarily perceived in these events. In a forced-choice paradigm, where subjects are asked to guess rather than to report their percept, it is easier to “imagine” stimuli that are not there. Such imaginary events cannot be identified, and the brain activation they produce could distort the analysis. The same problem applies to the uniform color controls, both same and opposite. Because there is never a correct answer, the experimenter cannot detect aberrant events and must assume that the responses never reflect perception. Finally, a 2AFC task may not reflect the perceptual state because of the possibility of “blindsight” under dichoptically opposite conditions (15), although such claims have been questioned (16).
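The trial counts above can be cross-checked with a few lines of Python, and the same code can compare an individual 2AFC score against chance. This is a sketch under stated assumptions. It infers 32 opposite trials per subject from the design (half of 64) and reads the best score of 59.4% as 19 of 32 correct. The one-sided exact binomial test is an illustrative choice, not an analysis reported in this study.

```python
from math import comb

N_FACES = N_HOUSES = 8
N_CONFIGS = 4  # two same and two opposite color-contrast configurations

# Pre-scan 2AFC: each face and house shown once in each configuration.
afc_trials = (N_FACES + N_HOUSES) * N_CONFIGS            # 64
afc_opposite = afc_trials // 2                            # 32 opposite trials

# One scanning session: twice the face/house trials plus the uniform controls.
session_events = 2 * afc_trials + 64                      # 192
EVENT_S = 1.5 + 1.5  # stimulus train + response period, in seconds
session_seconds = session_events * EVENT_S                # 576 s, roughly 10 min

def binomial_p_at_least(k, n, p=0.5):
    """One-sided exact binomial probability of k or more correct out of n by chance."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

best_correct = round(0.594 * afc_opposite)                # 59.4% of 32, i.e. 19
p_best = binomial_p_at_least(best_correct, afc_opposite)
```

The bookkeeping reproduces the 192 events per session given in Stimulus Description. The binomial function can be applied to any subject's opposite-condition score to see how far it departs from the 50% expected by chance.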

Image Acquisition and Data Analysis.

Scanning was done in a 2-T Magnetom Vision fMRI scanner with a head-volume coil (Siemens, Erlangen, Germany) while subjects viewed the visual display via an angled mirror, as described previously (17). A gradient-echo, echo planar-imaging sequence was selected to maximize blood oxygen level-dependent contrast (echo time 40 ms, repetition time 4.5 s). Each brain volume was acquired in 48 slices of 64 × 64 pixels, each slice 2 mm thick with 1-mm gaps. There were two scanning sessions, each consisting of 156 image volumes. A T1-weighted anatomical image was acquired at the end of the two sessions. Data were analyzed with SPM99 (Wellcome Department of Imaging Neuroscience, London, U.K.). The echo planar images were realigned spatially and normalized to the Montreal Neurological Institute template provided in SPM99 (18). They were then smoothed spatially with an 8-mm isotropic Gaussian kernel and filtered temporally with a band-pass filter. The filter had a low-frequency cutoff period of 171 s and a high-frequency cutoff shaped to the spectral characteristics of SPM99's canonical hemodynamic response function. Global changes in activity were removed by proportional scaling. Before spatial normalization, the data were “realigned in time” by sinc interpolation (19). This temporal realignment adjusted the whole-brain images to approximate those that would have been obtained had the whole volume been acquired instantaneously, two-thirds of the way through the actual scan (slices were acquired from top to bottom). Each event was modeled with a separate box-car function, convolved with SPM99's canonical hemodynamic response function, in a fixed-effects multiple-regression analysis (20). Each of the 12 stimulation conditions was modeled separately in the design matrix. Events for which the subject's answer was not the expected one were categorized and modeled separately (e.g., “perceived opposite faces,” “nonperceived same houses”).
The resulting parameter estimates for each regressor at each voxel were compared with t tests to determine whether a given comparison of conditions yielded significant activation. Unless otherwise stated, the statistical results are based on a single-voxel t threshold of 3.12 (corresponding to P < 0.001, uncorrected for multiple comparisons). We made no further corrections for multiple comparisons within visual areas, for which we had a priori hypotheses. Outside these areas, we had no prior hypothesis for nonvisual areas, so a correction was made for multiple comparisons across the whole-brain volume.
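The event modeling and t contrasts can be sketched in simplified form. The Python below builds one regressor per condition by convolving 1.5-s box-cars with a double-gamma function that resembles SPM's canonical HRF (shape parameters 6 and 16, undershoot ratio 1/6). This function approximates SPM99's implementation and does not reproduce it. The regressors are sampled at the 4.5-s repetition time, an ordinary least-squares model is fitted to simulated data, and a t statistic is computed for a contrast. The sketch omits slice-timing correction, temporal filtering, global scaling, and SPM99's effective degrees of freedom. The onsets, effect sizes, and noise level are invented for illustration.

```python
import numpy as np
from scipy.stats import gamma, t as t_dist

TR = 4.5  # repetition time in seconds, as in the text

def canonical_hrf(dt, length=32.0):
    """Double-gamma HRF resembling SPM's canonical shape: a gamma peak
    (shape 6) minus a smaller gamma undershoot (shape 16, ratio 1/6)."""
    tt = np.arange(0.0, length, dt)
    h = gamma.pdf(tt, 6) - gamma.pdf(tt, 16) / 6.0
    return h / h.sum()

def event_regressor(onsets, duration, n_scans, dt=0.1):
    """Box-car for each event, convolved with the HRF and sampled once per TR."""
    n_fine = int(round(n_scans * TR / dt))
    boxcar = np.zeros(n_fine)
    for onset in onsets:
        start = int(round(onset / dt))
        boxcar[start:start + int(round(duration / dt))] = 1.0
    response = np.convolve(boxcar, canonical_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * TR / dt).astype(int)
    return response[scan_idx]

def glm_t(Y, X, c):
    """Ordinary least-squares fit of Y (scans x voxels) on X (scans x regressors);
    returns the t statistic of contrast c at every voxel and the residual dof."""
    beta, _, rank, _ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ beta
    dof = X.shape[0] - rank
    sigma2 = (resid ** 2).sum(axis=0) / dof
    c = np.asarray(c, dtype=float)
    var_c = c @ np.linalg.pinv(X.T @ X) @ c
    return (c @ beta) / np.sqrt(sigma2 * var_c), dof

# Simulated session: 192 back-to-back 3-s events with 1.5-s trains, 156 scans.
rng = np.random.default_rng(0)
n_scans = 156
onsets = np.arange(192) * 3.0
labels = rng.permutation(np.repeat(["face", "house", "control"], 64))
X = np.column_stack(
    [event_regressor(onsets[labels == k], 1.5, n_scans) for k in ("face", "house", "control")]
    + [np.ones(n_scans)]  # constant term
)
# Two invented voxels: one prefers faces to houses, one responds to nothing.
true_beta = np.array([[3.0, 0.0], [1.0, 0.0], [0.0, 0.0], [100.0, 100.0]])
Y = X @ true_beta + rng.normal(scale=0.1, size=(n_scans, 2))
t_vals, dof = glm_t(Y, X, [1, -1, 0, 0])  # 'face - house' contrast
p_vals = t_dist.sf(t_vals, dof)             # one-tailed, uncorrected
```

The uncorrected P that corresponds to a fixed t threshold such as 3.12 depends on the degrees of freedom. `t_dist.sf(3.12, dof)` shows this dependence directly.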

Results

Fig. 1 shows all combinations of monocular stimuli used, together with their expected perceptual output. There were two modes of stimulation: same, when the two monocular stimuli were identical, and opposite, when they differed only in having opposite color contrast. Same stimuli were perceived clearly, whereas opposite stimuli, which cancelled out at the binocular level, were not. The graph in Fig. 1 shows the subjects' performance during scanning. Dichoptically presented same stimuli were virtually always perceived, whereas opposite ones were perceived only ≈5% of the time because of occasional imperfect superposition of the images on the two retinas. In a few cases, same stimuli were not (correctly) perceived or opposite stimuli were perceived (correctly or incorrectly). These cases were modeled separately in the design matrix and excluded from comparisons.

We first wanted to see which brain areas would be activated by our invisible faces and houses and to compare them with the areas activated by the same face and house stimuli when they were perceived. To exclude any activation caused by general differences between same and opposite stimulation, we used a corresponding control for each. We therefore calculated the contrasts “opposite face-opposite control” and “opposite house-opposite control,” as well as “same face-same control” and “same house-same control.” Fig. 2 shows the brain areas activated by perceived (dichoptically same) and nonperceived (dichoptically opposite) faces and houses. As expected, perceived (same) faces and houses activated extensive parts of the visual cortex when compared with uniform-colored controls. Both houses and faces activated the majority of these regions, with some differences (see below). But nonperceived face and house stimuli activated much the same visual areas. The activation was less widespread than with perceived stimuli. Even so, it is surprising that these invisible stimuli activate many “higher,” binocularly driven areas of the brain when compared with the (perceptually equivalent) uniform controls. One might have expected our monocularly present but binocularly absent stimuli to activate only the monocularly driven part of striate cortex. Our results show, however, that monocular information is well preserved in later, binocularly driven parts of the visual cortex. Moreover, the activation of these higher areas by nonperceived stimuli suggests that their role in perception is not as straightforward as previously imagined (see Discussion).
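Each contrast above can be written as a weight vector over the 12 condition regressors. The minimal Python sketch below rests on three assumptions. The regressors are ordered by stimulation mode, stimulus type, and color-contrast variant. The variant labels "a" and "b" are hypothetical. Both color variants of a condition receive equal weight. The separately modeled "unexpected response" regressors would receive zero weight and are left out here.

```python
from itertools import product

MODES = ("same", "opposite")
STIMULI = ("face", "house", "control")
VARIANTS = ("a", "b")  # hypothetical labels for the two color-contrast assignments

# One regressor per condition: 2 modes x 3 stimulus types x 2 variants = 12.
CONDITIONS = list(product(MODES, STIMULI, VARIANTS))

def contrast(plus, minus):
    """Weight vector over CONDITIONS: +1 for every regressor of `plus`, -1 for
    every regressor of `minus`; each argument is a (mode, stimulus) pair."""
    weights = []
    for mode, stim, _variant in CONDITIONS:
        if (mode, stim) == plus:
            weights.append(1)
        elif (mode, stim) == minus:
            weights.append(-1)
        else:
            weights.append(0)
    return weights

opp_face_vs_control = contrast(("opposite", "face"), ("opposite", "control"))
opp_house_vs_control = contrast(("opposite", "house"), ("opposite", "control"))
same_face_vs_control = contrast(("same", "face"), ("same", "control"))
same_house_vs_control = contrast(("same", "house"), ("same", "control"))

# Stimulus-specificity and perceived-vs-nonperceived comparisons use the same helper.
same_face_vs_house = contrast(("same", "face"), ("same", "house"))
same_vs_opposite_face = contrast(("same", "face"), ("opposite", "face"))
```

Every vector sums to zero. Each of the four control contrasts therefore compares two conditions that differ only in stimulus type within a single mode of stimulation.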

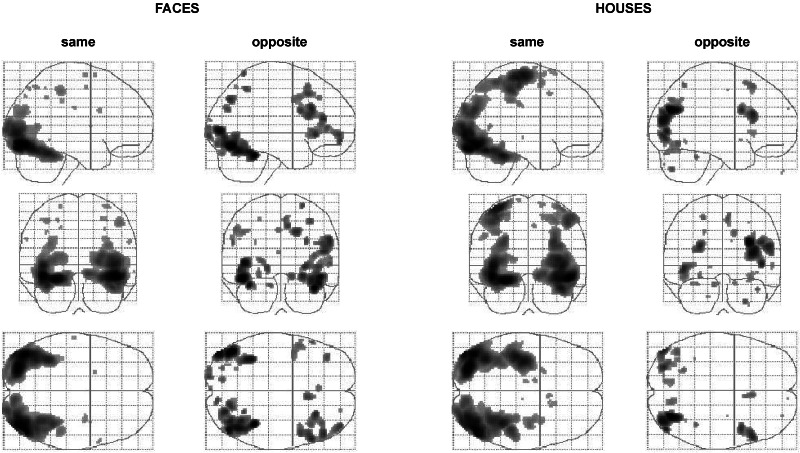

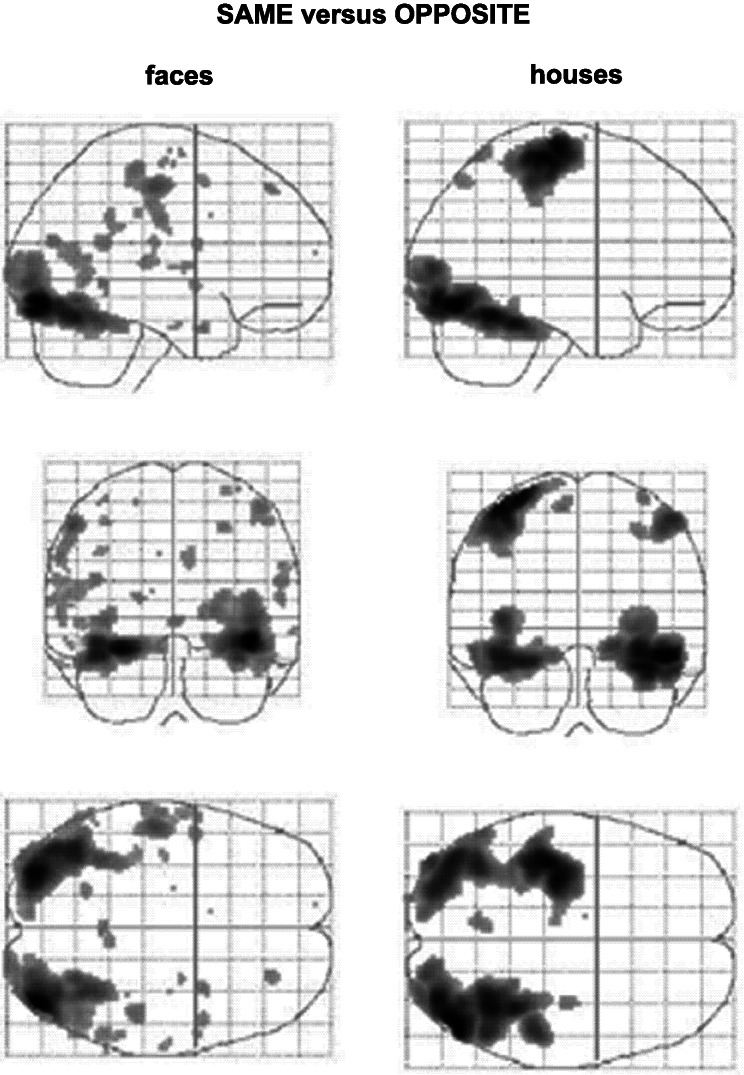

Figure 2.

Glass-brain presentations of group results of the activation produced by opposite and same dichoptic stimulation with pictures of faces and houses (each compared with its corresponding control). Large regions of binocularly driven prestriate visual areas are activated even when the two monocular stimuli cancel each other out at the binocular level. In that case the percept in the two opposite stimulations (opposite face/house-opposite control) is null and identical to that of the control. Opposite stimulation activates much the same brain regions as same stimulation, but less extensively. With same houses there is also activity in the motor cortex. This may be because subjects reported “house” with the third finger, a button press that was more difficult than the other two.

To investigate the relationship between brain activity and perception, it is necessary to find areas that respond in a stimulus-specific way and to learn whether their activation covaries with perception. Face-specific and house-specific activity has been demonstrated in the fusiform and parahippocampal gyri (14). We used the contrasts same face-same house and same house-same face to define face-specific and house-specific areas, respectively. Their anatomical locations are shown in the upper rows of Fig. 3 (B and C, and A, respectively). Face-specific activity was found in the fusiform gyrus and house-specific activity in the parahippocampal gyrus, confirming previous reports (14). By calculating the contrasts opposite face-opposite house and opposite house-opposite face, we examined whether the activation of these areas by invisible stimuli is also stimulus-specific. These contrasts revealed both face-specific and house-specific activation. The anatomical locations, shown in the lower rows of Fig. 3 (B and C, and A, respectively), are almost identical to those produced by the visible stimuli. In fact, activations caused by opposite stimuli (significant at P < 0.001 uncorrected; see Methods) became significant at P < 0.05 corrected under a small-volume correction (r = 10 mm) based on the results of the same stimulation. The same brain areas are therefore activated in the same stimulus-specific way, regardless of whether the stimuli are perceived. This result cannot be explained in terms of expectation or attention, because stimuli appeared in random order, so the occurrence of invisible faces and houses was not predictable.
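The small-volume correction restricts the search to a sphere around a location defined by the same-stimulation contrast. The Python sketch below builds such a 10-mm sphere around a hypothetical peak voxel. It assumes 2-mm isotropic voxels in a 79 × 95 × 68 normalized grid and a hypothetical number of degrees of freedom. It then applies a Bonferroni bound over the voxels inside the sphere. This is a swapped-in simplification: SPM bases its small-volume correction on random field theory, which accounts for spatial smoothness and is less conservative. The Bonferroni threshold only illustrates how a reduced search volume lowers the corrected threshold.

```python
import numpy as np
from scipy.stats import t as t_dist

def sphere_mask(shape, center_vox, radius_mm, voxel_mm):
    """Boolean mask of voxels whose centers lie within radius_mm of center_vox."""
    grid = np.indices(shape).reshape(3, -1).T            # every voxel index (i, j, k)
    offsets_mm = (grid - np.asarray(center_vox)) * np.asarray(voxel_mm)
    dist_mm = np.linalg.norm(offsets_mm, axis=1)
    return (dist_mm <= radius_mm).reshape(shape)

# Assumed geometry: 2-mm isotropic voxels in a 79 x 95 x 68 normalized grid.
shape = (79, 95, 68)
voxel_mm = (2.0, 2.0, 2.0)
center = (40, 30, 30)        # hypothetical peak voxel from a 'same' contrast
svc = sphere_mask(shape, center, radius_mm=10.0, voxel_mm=voxel_mm)
n_voxels = int(svc.sum())    # size of the reduced search volume

# Crude Bonferroni bound over the sphere (more conservative than random field theory).
dof = 1000                   # hypothetical degrees of freedom
t_threshold = t_dist.isf(0.05 / n_voxels, dof)
```

A search volume of a few hundred voxels gives a corrected threshold far below that for the whole brain. This is why activations near the uncorrected threshold can survive correction inside the sphere.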

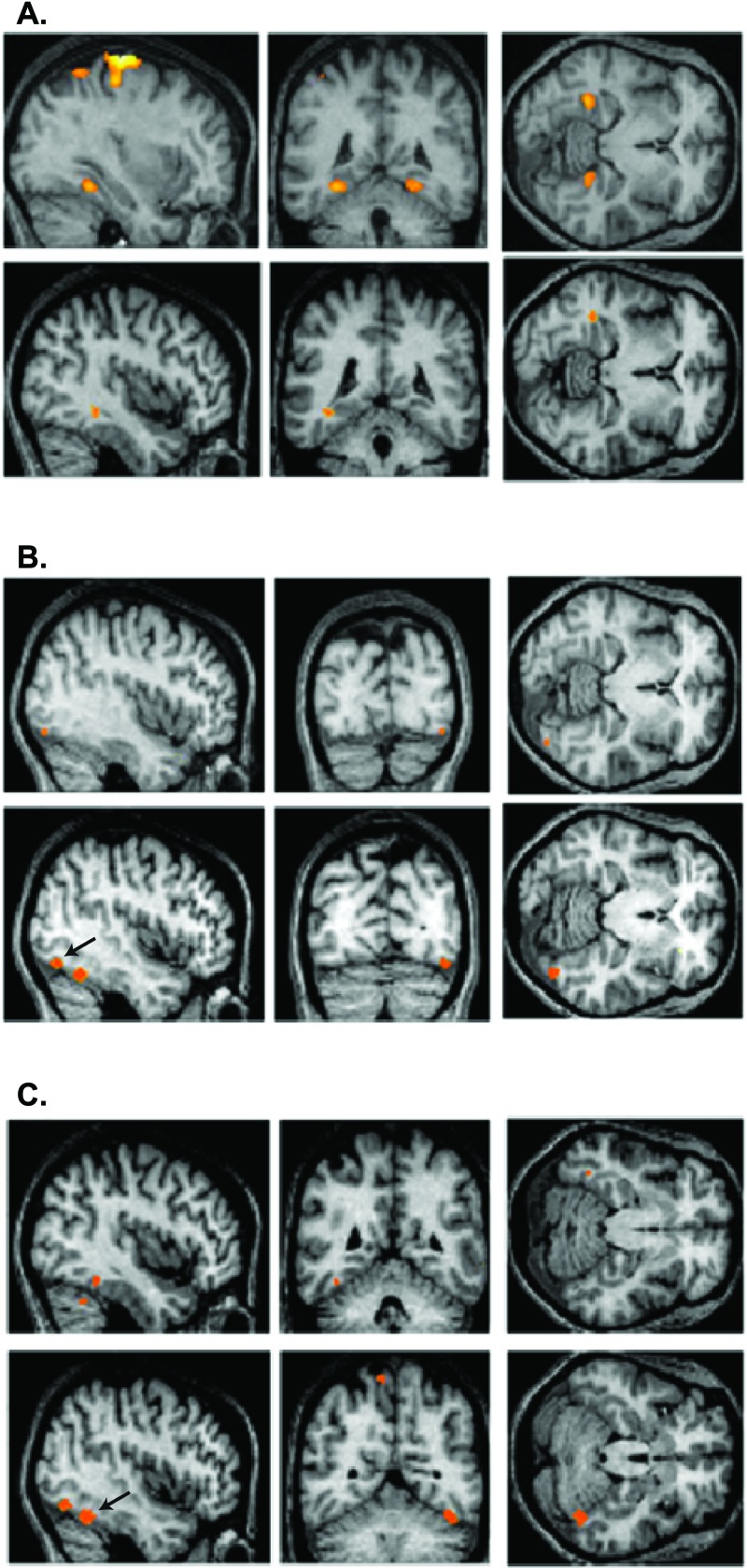

Figure 3.

Group results of brain regions showing stimulus-specific activation under conditions of same and opposite stimulation, revealing that such activation occurs under both perceived and nonperceived conditions. (A Upper) The contrast same houses-same faces shows bilateral stimulus-specific activation in the parahippocampal gyrus (Talairach coordinates −30, −44, −12 and 26, −44, −10). (Lower) The contrast opposite houses-opposite faces shows unilateral stimulus-specific activation in the same region (−38, −42, −10). (B Upper) The contrast same faces-same houses reveals stimulus-specific activation in a region of the fusiform gyrus (42, −82, −12). (Lower) The contrast opposite faces-opposite houses reveals stimulus-specific activation in the same brain region (44, −74, −14), indicated by the arrow. (C Upper) The contrast same faces-same control, exclusively masked by the contrast same houses-same control, revealed another region in the fusiform gyrus (−38, −48, −18) showing face-specific activation. (Lower) The corresponding region in the opposite hemisphere (44, −56, −22) also shows much more significant face-specific activation under the contrast opposite faces-opposite houses. The arrow distinguishes this area from the second area revealed by the same contrast.

Stimulus-specific activation of higher visual areas is thus not in itself the neural equivalent of visual perception (see Discussion). Some conditions present different stimuli that produce the same (null) percept. Comparisons between such conditions show that the activation of these areas follows the stimulus rather than the percept (Fig. 3). What, then, determines whether a stimulus is perceived? Fig. 4 shows how each of our six types of stimuli activated the seven most significant voxels of the house/face-specific brain areas (shown in Fig. 3). It again shows that cortical activation always correlates with the stimulus, irrespective of whether the stimulus is perceived. Furthermore, each voxel's preference for a specific stimulus (face or house) is always the same in the same and the opposite conditions. In some voxels this preference reaches statistical significance in the former and in others in the latter. In most cases, however, the percentage of blood oxygen level-dependent activation is larger when the stimuli are perceived than when they are not. The same areas are thus activated more strongly when a percept arises. Comparisons between conditions in which identical stimulation leads to different percepts also show this. Fig. 5 illustrates the contrasts same house-opposite house and same face-opposite face. All regions of the brain involved in processing these visual stimuli (Fig. 2) show differential activation between perceived and nonperceived stimuli.

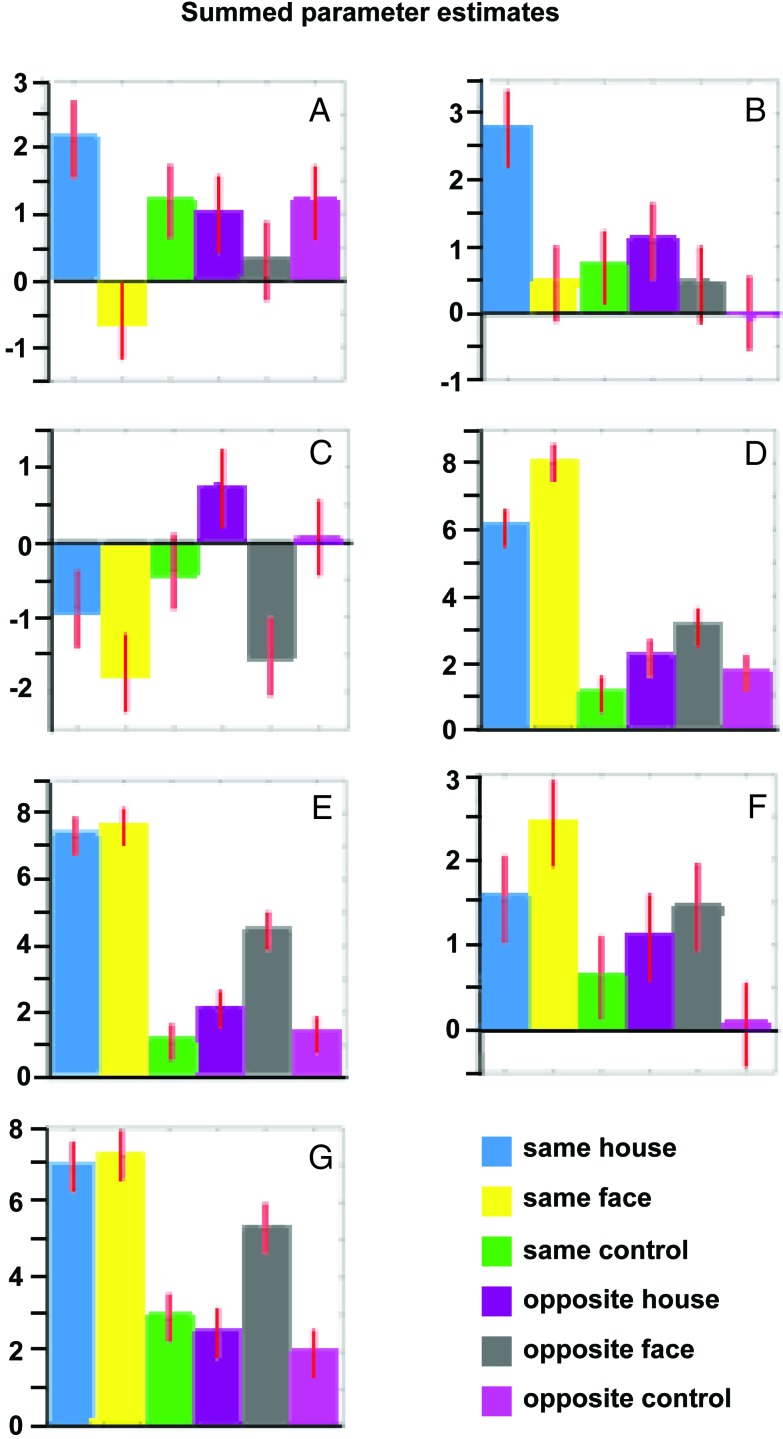

Figure 4.

Group results of summed parameter estimates for the six stimulation conditions in the seven brain regions shown in Fig. 3 (mean parameter estimates can be calculated by dividing the y-axis values by 28). Talairach coordinates: A, −30, −44, −12; B, 26, −44, −10; C, −38, −42, −10; D, 42, −82, −12; E, 44, −74, −14; F, −38, −48, −18; G, 44, −56, −22. In each region the preference for face or house is identical in the same and opposite conditions. In some voxels this preference is statistically significant for the former and in others for the latter (with the exception of F). Also, the parameter estimates for the same condition are usually higher than those for the opposite condition. The same brain regions are involved and show stimulus-specific activation in both conditions, but the level of this activation is higher when there is a perceptual outcome.

Figure 5.

Glass-brain presentations of group results of the activation produced by the comparisons same face-opposite face (Left) and same house-opposite house (Right). These contrasts reveal differential activation under conditions in which the same stimulus results in a different percept. Comparison with Fig. 2 shows that all the areas involved in processing faces and houses are more active when stimulus processing results in a visual percept than when it does not.

Discussion

In this study we combined brain imaging with a psychophysical method that dissociates stimulation from perception, to investigate the relationship between cortical activation and visual perception. We monitored brain activation under conditions in which stimulation was constant but the percept varied, and vice versa. Our results show three things: (i) monocular information can activate brain areas located well beyond the point at which the inputs from the two eyes converge and in which most cells are binocularly driven (21); (ii) areas responding selectively to faces and houses show stimulus specificity irrespective of whether the stimulus is perceived; and (iii) the absolute level of cortical activation in all areas involved in processing faces and houses is higher when the processing results in a percept.

Beyond the processing vs. perception problem, we also wanted to learn whether monocular visual information is preserved after the inputs from the two eyes are combined in binocularly driven neurons. If it is not, our invisible stimuli should activate only monocularly driven V1 neurons, because the two opposite monocular stimuli cancel each other out and disappear at the binocular level. But our monocular stimuli activated large regions of the visual cortex, almost identical to, and nearly as extensive as, the regions activated by normal binocular stimulation. This result may seem surprising at first. However, it is unlikely that the prestriate cortex is blind to differences between the inputs from the two eyes, because such information is important for various visual functions, binocular disparity being the most obvious (for a review of the problem and a theoretical model, see ref. 22). Our fMRI results constitute clear evidence that purely monocular visual information can activate these higher visual areas.

By using two groups of stimuli, faces and houses, we were able to investigate further whether the brain activation caused by our nonperceived stimuli maintained the stimulus specificity reported under normal stimulation (14). We used our dichoptically same stimuli to define brain regions responding more strongly to faces than to houses and vice versa. We then tested whether dichoptically opposite stimulation produced activation with the same stimulus specificity in these areas. We found that the responses of these areas are dictated by the stimulus rather than the percept, and that stimulus specificity is maintained regardless of whether perception occurs. This result is the opposite of what happens under binocular rivalry, where activity in higher areas has been found to correlate with the percept rather than the stimulus (2–5). In humans specifically, a previous fMRI study using binocular rivalry showed that activity in the face- and house-specific areas fluctuates in step with perceptual alternations (5). Our results, however, show that these areas can maintain their selectivity during stimulus processing that does not result in a percept. This eliminates the possibility that their activation is the neural equivalent of conscious visual perception. Such dissociations between neural activity in these areas and perception account well for the case of a parietal patient. He could not see faces and houses presented in his neglected hemifield, even though stimulus-specific activation was found in his corresponding specialized brain areas (23). It seems, therefore, that the problem of consciousness cannot be solved simply by finding “perceptual areas” whose activation is the neural equivalent of our perceptual experience. We cannot exclude the possibility that our imaging methods were not sensitive enough to detect additional perceptual areas; absence of proof is not proof of absence. But our study does show that the fusiform face area and the parahippocampal place area are not such areas, because both can be activated selectively in the absence of any face/house perception.

Our results further show that the strength of brain activation (as reflected in the blood oxygen level-dependent signal; see ref. 24) correlates better with the presence or absence of perception than stimulus-specific activity as such. Specialized processing of the visual input takes place throughout the visual brain whether or not there is a perceptual output. This activity, however, is stronger when a percept arises than when it does not. The idea of a direct relationship between perception and the intensity of cortical activation was first put forward in an fMRI study of patient GY, who could or could not see motion depending on the strength of activation in area V5 (25). With hindsight, the same relationship is also evident in earlier electrophysiological results from macaque area V5, which correlated neural activity with the animals' psychophysical performance (26). These results have since been confirmed with fMRI in humans (27). A problem with these two V5 studies is that the activation/perception correlation is indirect and thus expected, because both perception and brain activation also correlate with the (parametrically varied) stimulus. Activity in the fusiform gyrus has recently been reported to correlate with confidence levels in an object-recognition task (28). In that study, however, identical stimuli were sometimes perceived and sometimes not. This suggests that attention and/or visual priming, as well as various other factors (29), modulate the neural response. Our results, on the other hand, clearly demonstrate a relationship between brain activation and perception in areas that process higher-order stimuli such as faces and houses. The present study further suggests that there are no separate processing and perceptual areas (30). Instead, the same cortical regions are involved both in processing and in generating a conscious visual percept. They generate a percept when certain levels of activation are reached, probably in combination with the activation of other areas.

Acknowledgments

We thank Gabriel Caffarena for computer programming, Martin Cook for help in preparing the manuscript, and Richard Perry and Andreas Bartels for useful comments on the manuscript. This work was supported by The Wellcome Trust (London).

Abbreviations

- 2AFC: two-alternative forced choice
- fMRI: functional MRI

References

1. Frith C, Perry R, Lumer E. Trends Cogn Sci. 1999;3:105–114. doi: 10.1016/s1364-6613(99)01281-4.
2. Logothetis N K, Schall J D. Science. 1989;245:761–763. doi: 10.1126/science.2772635.
3. Leopold D A, Logothetis N K. Nature (London) 1996;379:549–553. doi: 10.1038/379549a0.
4. Sheinberg D L, Logothetis N K. Proc Natl Acad Sci USA. 1997;94:3408–3413. doi: 10.1073/pnas.94.7.3408.
5. Tong F, Nakayama K, Vaughan J T, Kanwisher N. Neuron. 1998;21:753–759. doi: 10.1016/s0896-6273(00)80592-9.
6. Blake R. Psychol Rev. 1989;96:145–167. doi: 10.1037/0033-295x.96.1.145.
7. Logothetis N K, Leopold D A, Sheinberg D L. Nature (London) 1996;380:621–624. doi: 10.1038/380621a0.
8. Kovacs I, Papathomas T V, Yang M, Feher A. Proc Natl Acad Sci USA. 1996;93:15508–15511. doi: 10.1073/pnas.93.26.15508.
9. Lee S H, Blake R. Vision Res. 1999;39:1447–1454. doi: 10.1016/s0042-6989(98)00269-7.
10. Lumer E D, Friston K J, Rees G. Science. 1998;280:1930–1934. doi: 10.1126/science.280.5371.1930.
11. Gunter R. Br J Psychol. 1951;42:363–372.
12. Perry N W, Childers D G, Dawson W W. Vision Res. 1969;9:1357–1366. doi: 10.1016/0042-6989(69)90072-8.
13. Ono H, Komoda M, Mueller E R. Percept Psychophys. 1971;9:343–347.
14. Tovée M J. Neuron. 1998;21:1239–1242. doi: 10.1016/s0896-6273(00)80644-3.
15. Kolb F C, Braun J. Nature (London) 1995;377:336–338. doi: 10.1038/377336a0.
16. Morgan M J, Mason A J, Solomon J A. Nature (London) 1997;385:401–402. doi: 10.1038/385401b0.
17. McKeefry D J, Zeki S. Brain. 1997;120:2229–2242. doi: 10.1093/brain/120.12.2229.
18. Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. Stuttgart: Thieme; 1988.
19. Schanze T. IEEE Trans Sig Process. 1995;43:1502–1503.
20. Friston K J, Holmes A P, Worsley K J, Poline J B, Frith C D, Frackowiak R S J. Hum Brain Mapp. 1995;2:189–210. doi: 10.1002/(SICI)1097-0193(1996)4:2<140::AID-HBM5>3.0.CO;2-3.
21. Zeki S. J Physiol (London) 1978;277:273–290. doi: 10.1113/jphysiol.1978.sp012272.
22. Lumer E D. Cereb Cortex. 1998;8:553–561. doi: 10.1093/cercor/8.6.553.
23. Rees G, Wojciulik E, Clarke K, Husain M, Frith C, Driver J. Brain. 2000;123:1624–1633. doi: 10.1093/brain/123.8.1624.
24. Logothetis N K, Pauls J, Augath M, Trinath T, Oeltermann A. Nature (London) 2001;412:150–157. doi: 10.1038/35084005.
25. Zeki S, Ffytche D H. Brain. 1998;121:25–45. doi: 10.1093/brain/121.1.25.
26. Newsome W T, Britten K H, Movshon J A. Nature (London) 1989;341:52–54. doi: 10.1038/341052a0.
27. Rees G, Friston K, Koch C. Nat Neurosci. 2000;3:716–723. doi: 10.1038/76673.
28. Bar M, Tootell R B, Schacter D L, Greve D N, Fischl B, Mendola J D, Rosen B R, Dale A M. Neuron. 2001;29:529–535. doi: 10.1016/s0896-6273(01)00224-0.
29. Aguirre G K. Neuron. 2001;29:317–319. doi: 10.1016/s0896-6273(01)00207-0.
30. Zeki S, Bartels A. Conscious Cogn. 1999;8:225–259. doi: 10.1006/ccog.1999.0390.