Abstract

Objective

Recent advances in artificial intelligence (AI) are revolutionizing ophthalmology by enhancing diagnostic accuracy, treatment planning, and patient management. However, a significant gap remains in practical guidance for ophthalmologists who lack AI expertise to effectively analyze these technologies and assess their readiness for integration into clinical practice. This paper aims to bridge this gap by demystifying AI model design and providing practical recommendations for evaluating AI imaging models in research publications.

Design

Educational review: synthesizing key considerations for evaluating AI papers in ophthalmology.

Participants

This paper draws on insights from an interdisciplinary team of ophthalmologists and AI experts with experience in developing and evaluating AI models for clinical applications.

Methods

A structured framework was developed based on expert discussions and a review of key methodological considerations in AI research.

Main Outcome Measures

A stepwise approach to evaluating AI models in ophthalmology, providing clinicians with practical strategies for assessing AI research.

Results

This guide offers broad recommendations applicable across ophthalmology and medicine.

Conclusions

As the landscape of health care continues to evolve, proactive engagement with AI will empower clinicians to lead the way in innovation while concurrently prioritizing patient safety and quality of care.

Financial Disclosure(s)

Proprietary or commercial disclosure may be found in the Footnotes and Disclosures at the end of this article.

Keywords: Artificial intelligence, Glaucoma detection, Machine learning, Ophthalmology

Recent advances in artificial intelligence (AI) have highlighted its potential for earlier detection and more precise treatment of ocular conditions.1 Artificial intelligence algorithms can analyze medical images to detect conditions such as glaucoma, diabetic retinopathy, and age-related macular degeneration with impressive accuracy.2 By automating these diagnostic processes, AI not only augments the capabilities of ophthalmologists but also improves accessibility and efficiency in eye care, potentially leading to timelier diagnosis and improved patient outcomes.

However, AI integration into ophthalmology faces unique challenges compared with other medical specialties. Unlike radiology, in which imaging is more standardized, ophthalmology contends with a lack of universal imaging standards, smaller datasets, and the absence of universal diagnostic criteria for diseases such as glaucoma.3 For example, noninteroperable imaging outputs from different OCT device manufacturers complicate model generalizability.4 Despite these challenges, the integration of AI into ophthalmic care is rapidly gaining traction due to the field's high reliance on imaging for diagnosis of intraocular and systemic disease.

There is a notable gap in the availability of practical guidance for clinicians encountering AI-based literature and product development. The most recent practical guide created for ophthalmologists, published by Ting et al in 2019, preceded the rapid expansion of AI research and the growing accessibility of toolkits for building and testing medical AI models. As a result, ophthalmologists and trainees now need more fundamental, detailed awareness of the nuances of training data in the context of ophthalmology (e.g., demographic composition, data augmentation, and pretraining), model interpretability approaches, and critical evaluation of model performance (e.g., subgroup analysis and ethical considerations).5 The seemingly constant influx of new technical information poses a challenge for clinicians seeking to understand how they might integrate AI into their practice while safeguarding their patients.

This paper aims to fill this gap by demystifying the design and evaluation of AI models as presented in scientific literature. Our framework was developed through a literature review of AI publications in ophthalmology and interdisciplinary discussions based on our team's experiences in developing and applying AI to ophthalmic imaging. To explain the fundamental steps of designing AI imaging models, this paper uses practical examples, including an AI model designed to detect glaucoma from OCT reports.6,7 By highlighting key considerations and potential pitfalls in AI model design and validation, this paper aims to help clinicians critically evaluate AI-based ophthalmic literature and, by extension, AI products as they reach clinical implementation.

Training the Model

Training data are the foundation of any AI model, and their characteristics significantly influence the model's performance (Table 1). The training dataset, which consists of images or other types of information, allows the AI model to learn patterns and relationships. This training enables AI to make predictions when presented with new, unseen data. An appropriately representative training dataset helps ensure that the model performs well across diverse groups. The importance of training data in evaluating AI bias risk and efficacy cannot be overstated, yet training data are often underreported and underdiscussed. In a review of ophthalmology AI trials, only 27 out of 37 trials included information on training data.8

Table 1.

Key Considerations for Data Used to Train an AI Model

| Category | Key Questions |
|---|---|
| Dataset size | |
| Dataset scope | |
| Labeling methods | |
| Pretraining | |

AI = artificial intelligence.

Although the teaching tool in this paper uses a set of OCT reports as training data for simplicity, the future of AI in ophthalmology likely lies in multimodal approaches that integrate diverse data sources.9,10 For glaucoma detection, these approaches may integrate multiple imaging modalities, such as en face images, deviation maps, and raw B-scans, alongside functional tests and clinical risk factors to achieve more reliable diagnosis.11 Expanding beyond single-modality training data could lead to more robust and generalizable models that better reflect clinical decision-making.

Demographic Information

When evaluating training data, it is essential to consider whether the data are demographically representative of the patient population one serves. A review of existing ophthalmology datasets found that most unevenly represented disease populations and poorly reported demographic characteristics such as age, sex, and ethnicity.12 Only 7 out of 27 models identified in ophthalmology reported age and gender composition, and just 5 out of 27 included race and ethnicity data.8 Additionally, much of the training data come from clinical trials in ophthalmology, which have historically lacked sociodemographic diversity.13 This raises concerns that many AI models in ophthalmology might perform poorly for underrepresented groups if broadly implemented in clinical care, as has been documented in other specialties using AI.14,15 In ophthalmology, machine learning approaches have shown high accuracy in detecting glaucoma; however, when one model's performance was evaluated across different ethnicities, significant variations in performance were observed.16 Some approaches, such as FairCLIP and fair error-bound scaling, address this issue by intentionally improving performance on data from underrepresented groups.17,18 The National Institutes of Health's All of Us Research Program is actively curating a dataset that includes participants from different races, ethnicities, age groups, regions of the country, gender identities, sexual orientations, educational backgrounds, disabilities, and health statuses.19 Such efforts, along with similar initiatives to diversify training data, will help to limit bias.

Labels

The accuracy of a model's predictions depends heavily on the quality of the labels in the training data, often referred to as the “ground truth” or “reference standard.” In ophthalmology, this labeling process might involve attaching a single diagnostic label to an image or annotating OCT reports to identify pathology. Ideally, these labels are assigned by experts, such as experienced ophthalmologists. However, the expertise of the labelers can vary significantly: data labeled by a resident physician may not be as accurate as data labeled by a senior physician. A consensus method, in which multiple experts review and agree on labels, can further improve accuracy and has been successfully implemented by some groups.20
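Consensus can be reached in several ways. As one minimal sketch, assuming each report has been graded independently by several specialists, a simple majority vote can be used, with ties flagged for adjudication. The tie rule, the report names, and the grades below are hypothetical and are not taken from the cited studies.

```python
from collections import Counter


def consensus_label(grader_labels):
    """Return the majority label among graders, or None if there is no clear majority.

    A tie between the most common labels returns None so the image can be sent
    for adjudication (a hypothetical rule chosen for this illustration).
    """
    counts = Counter(grader_labels).most_common()
    if not counts:
        return None  # no grader labeled this image
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None  # tie: no clear majority
    return counts[0][0]


# Hypothetical grades from glaucoma specialists for four OCT reports
reports = {
    "report_01": ["glaucoma", "glaucoma", "not glaucoma"],
    "report_02": ["not glaucoma", "not glaucoma", "not glaucoma"],
    "report_03": ["glaucoma", "not glaucoma"],
    "report_04": ["glaucoma", "glaucoma", "glaucoma"],
}

for report_id, labels in reports.items():
    print(report_id, consensus_label(labels))
```

In this example, report_03 was graded by only two specialists who disagree, so it returns None and would need a third opinion.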

Unlike supervised algorithms, which require large quantities of hand-annotated or labeled data, self-supervised learning relies on inexact labels. For example, expert gaze on OCT reports, recorded by eye-tracking technology, can serve as a pseudo-labeling method.21 This technique rests on the premise that an expert has learned (perhaps subconsciously) which aspects of an image are most likely to be diagnostic and will therefore spend the most time looking at those regions. Stember et al22 have shown that gaze annotations are comparable in accuracy to hand annotations while offering a more clinically valid and organic alternative, because gaze data can be collected in bulk during routine diagnostic workflows using automated gaze tracking. However, integrating gaze data into AI models requires more complex processing than traditional label-based annotations. Once a self-supervised model is trained to correlate gaze patterns with diagnoses, pseudo-labels can be inferred for new data, offering a more scalable and interpretable alternative to traditional expert image annotation.
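The premise that dwell time marks diagnostic regions can be made concrete by accumulating fixation durations into a coarse heat map. The sketch below assumes the eye tracker has already produced fixations as (x, y, duration) tuples in pixel coordinates; the grid size, image size, and fixation values are hypothetical, and this is not the pipeline used by Stember et al.

```python
def gaze_heatmap(fixations, width, height, grid=4):
    """Accumulate fixation dwell time into a coarse grid over an image.

    fixations: list of (x, y, duration_ms) tuples in pixel coordinates,
    assumed to be already extracted by the eye-tracking software.
    Returns a grid x grid list of lists whose values sum to 1.
    """
    heat = [[0.0] * grid for _ in range(grid)]
    for x, y, duration in fixations:
        # Map the pixel position to a grid cell; clamp points on the far edge
        cell_col = min(int(x * grid / width), grid - 1)
        cell_row = min(int(y * grid / height), grid - 1)
        heat[cell_row][cell_col] += duration
    total = sum(sum(row) for row in heat)
    if total > 0:
        heat = [[value / total for value in row] for row in heat]
    return heat


def hottest_cell(heat):
    """Return (row, col) of the cell where the expert looked longest."""
    cells = ((r, c) for r in range(len(heat)) for c in range(len(heat[0])))
    return max(cells, key=lambda rc: heat[rc[0]][rc[1]])


# Hypothetical fixations on a 400 x 400 pixel OCT report
fixations = [(50, 60, 300), (60, 70, 500), (350, 380, 200)]
heat = gaze_heatmap(fixations, width=400, height=400)
print(hottest_cell(heat))
```

Here 80% of the dwell time falls in the top-left cell, which would be treated as the region the expert considered most diagnostic.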

In contrast, unsupervised learning methods do not rely on labeled data. Rather, unsupervised learning algorithms analyze the data independently to find structures and patterns. This approach can be useful when labeled data are difficult to obtain. There is a risk that the model will focus on and identify clinically irrelevant patterns. However, unsupervised learning also has the potential to uncover entirely new and important insights into disease pathology.
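k-means clustering is one classic unsupervised method. The minimal sketch below groups unlabeled points into k clusters without ever seeing a diagnosis; the two features and their values are hypothetical, and in practice features would usually be standardized first, because unscaled features (as here) let the larger-valued one dominate the distance.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group unlabeled 2-D points into k clusters (Lloyd's k-means algorithm)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, clusters


# Hypothetical two-feature measurements from six unlabeled scans
points = [(95, 0.30), (98, 0.35), (92, 0.32), (65, 0.70), (60, 0.75), (68, 0.72)]
centroids, clusters = kmeans(points, k=2)
for members in clusters:
    print(members)
```

The algorithm finds two groups, but it cannot say what they mean; whether a cluster corresponds to disease, to a device difference, or to something clinically irrelevant still requires expert review.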

Pretraining and Fine-Tuning

Pretrained models are first trained on large, general-purpose datasets, often using self-supervised learning to extract knowledge from unlabeled images. ImageNet, a dataset containing over 14 million images, is frequently used for pretraining.23 During this process, the model learns to recognize important visual features such as edges, textures, and shapes. This broad understanding of visual patterns can then be applied to new, specialized tasks.

Transfer learning, commonly performed through fine-tuning, builds on pretraining by adapting these generalized models to specific tasks.24,25 By training the model further on a smaller, task-specific dataset, fine-tuning allows it to specialize in areas such as glaucoma detection. This approach improves the model's performance while reducing the computational resources and time required to train a model from scratch. In ophthalmology, the RETFound model was pretrained on 1.6 million unlabeled retinal images. This foundational training, followed by fine-tuning on task-specific datasets, enabled RETFound to outperform similar models in diagnostic accuracy.26,27
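One common fine-tuning strategy keeps the pretrained layers frozen and trains only a small new output layer (the "head"); other strategies update some or all pretrained layers. The toy sketch below illustrates the frozen-backbone variant. The backbone weights and the four training examples are invented for illustration; real backbones such as RETFound are deep networks with far more parameters.

```python
import math

# Hypothetical "pretrained" backbone: weights assumed to have been learned
# elsewhere (e.g., on a large image dataset). They stay frozen below.
BACKBONE = [[0.5, -0.2, 0.1],
            [-0.3, 0.8, 0.4]]  # maps 3 input values to 2 features


def extract_features(x):
    """Frozen backbone: linear projection followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, head_w, head_b):
    """Probability of the positive class from the trainable head."""
    f = extract_features(x)
    return sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)


def fine_tune_head(data, epochs=200, lr=0.5):
    """Train only a logistic-regression head; the backbone is never updated."""
    head_w, head_b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)
            error = predict(x, head_w, head_b) - y  # log-loss gradient w.r.t. the logit
            head_w = [w - lr * error * fi for w, fi in zip(head_w, f)]
            head_b -= lr * error
    return head_w, head_b


# Small hypothetical task-specific dataset: (inputs, label)
training_data = [([1.0, 0.0, 0.0], 1), ([0.9, 0.1, 0.0], 1),
                 ([0.0, 1.0, 0.0], 0), ([0.1, 0.9, 0.2], 0)]
head_w, head_b = fine_tune_head(training_data)
print([round(predict(x, head_w, head_b), 2) for x, _ in training_data])
```

Only three numbers (two head weights and a bias) are learned here, which is why fine-tuning a frozen backbone is much cheaper than training every layer from scratch.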

Data Augmentation

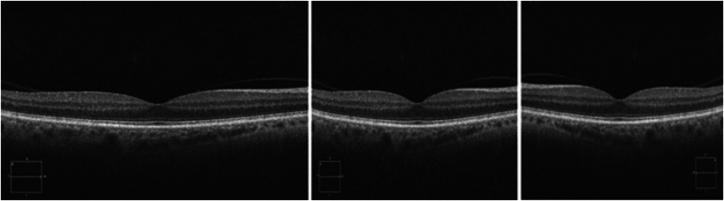

Data augmentation is a technique that some researchers use to artificially increase the size and diversity of a dataset. This method involves creating additional training images by transforming existing data through rotations, zooming, flipping, or other computer manipulations (Fig 1). These augmentations can help the model prepare for variability, such as low image quality, by exposing it to a broader range of scenarios during training.

Figure 1.

Three variations of the same OCT image using data augmentation techniques. The original image (left) is modified to create new computer-generated versions by adding Gaussian noise (center) and applying a horizontal flip (right). These transformations simulate variability in the dataset, enhancing the AI model's ability to generalize. AI = artificial intelligence.
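The two transformations in Figure 1 can be sketched on a toy grayscale image stored as rows of 0 to 255 intensity values. This is an illustrative assumption about representation; real pipelines typically use an imaging library on full-size arrays, and the noise level here is arbitrary.

```python
import random


def add_gaussian_noise(image, sigma, seed=0):
    """Return a noisy copy of a grayscale image given as rows of 0-255 values."""
    rng = random.Random(seed)
    return [[min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def augment(image):
    """Original image plus a noisy and a flipped variant, as in Figure 1."""
    return [image, add_gaussian_noise(image, sigma=10.0), horizontal_flip(image)]


# A tiny hypothetical 2 x 3 "image"; real OCT scans are far larger
image = [[10, 120, 250],
         [30, 200, 90]]
variants = augment(image)
print(len(variants))
```

One source image becomes three training images, which is why an image count alone can overstate how many unique patients a model has seen.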

Although these augmentations help anticipate variations due to user error and other factors, concerns remain regarding the clinical relevance of augmented data. For example, although flipping images adds variety to the training set, it may also expose the model to images that do not match normal anatomical configurations. Understanding the extent and type of augmentation used is crucial because it affects the model's performance and reliability in clinical settings. Although a model might be advertised as being trained on 10 000 images, it is important to evaluate how many unique patients these images represent and whether any augmentation techniques were applied.

Ideal image datasets should be large and diverse, using authentic images collected from multiple centers to capture variability. Although augmentation can be a valuable tool, particularly for smaller datasets, its effect on long-term performance and generalizability warrants further investigation. For example, in a glaucoma detection model, a good training dataset would ideally consist of thousands of OCT images labeled as either “glaucoma” or “not glaucoma,” collected from demographically and geographically diverse sources, and labeled by a consensus of experienced glaucoma specialists. However, given the practical challenges of data sharing due to regulatory and privacy barriers, augmentation may serve as a reasonable and effective alternative for enhancing model performance.28,29

Information Processing and Deep Learning: The “Black Box”

Neural networks, inspired by the human brain's structure and function, form the core of many AI models. A neural network is composed of interconnected nodes, or “neurons,” organized into layers: an input layer that receives raw data (e.g., an image of the retina), hidden layers that transform these data, and an output layer that generates the final result (e.g., a diagnosis of glaucoma). The network processes numerous labeled examples, makes predictions, and adjusts based on feedback, thus refining its accuracy through trial and error.
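The layered structure described above can be sketched as a single forward pass through a tiny network: three input values, a hidden layer of two neurons, and one output neuron that produces a score between 0 and 1. The weights here are hand-picked for illustration; in a trained network they would be learned from labeled examples, and real image models have many more layers and neurons.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum per neuron, then an activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(pixels):
    """Input layer -> hidden layer -> output layer (illustrative weights only)."""
    # Hidden layer: 2 neurons, each looking at all 3 input values
    hidden = dense(pixels, [[0.4, -0.6, 0.2], [0.3, 0.8, -0.5]], [0.0, 0.1], relu)
    # Output layer: 1 neuron giving a probability-like "glaucoma" score
    (score,) = dense(hidden, [[1.5, -2.0]], [0.2], sigmoid)
    return score


# Three hypothetical pixel intensities scaled to the 0-1 range
print(forward([0.9, 0.2, 0.5]))
```

Training consists of nudging these weights after each prediction so that the output moves closer to the correct label.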

Although understanding the technical aspects of AI is valuable, the root causes of model bias or clinical inaccuracy are often best identified by examining the more practical aspects of data input, output, and interpretability.

Outputs: Classification vs. Generation

Classification models, such as those used for detecting glaucoma from OCT images, produce outputs that indicate the presence or absence of disease. These models make straightforward classifications based on learned patterns in the training data. In contrast, generative models can create new data that mimic patterns in the training dataset. Returning to the example of glaucoma detection, generative models could synthesize new retinal images showing signs of glaucoma for training purposes. ChatGPT, a generative AI model familiar to many ophthalmologists, has recently been adapted to interpret images. This tool could be used for ophthalmic image analysis by providing preliminary interpretations or assisting with diagnostic workflows.30,31 However, pretrained models, like ChatGPT, may lack ophthalmology-specific expertise and their decision process is not transparent. Generative AI, like discriminative AI, can learn and reproduce biases or errors present in training data. Generative AI models can also “hallucinate” or produce outputs that seem plausible but are factually incorrect.

Interpretability

The decision-making processes of AI models are not easily understood, which is why these models are frequently referred to as a “black box.” In this respect, the black box resembles human learning. Throughout life, one is exposed to billions of experiences that shape knowledge through a complex network of neuronal connections. This is why it is possible to recognize a familiar face in a crowd almost instantly, yet it is difficult to pinpoint the specific feature that allows the face to be recognized. Similarly, AI identifies patterns from large datasets without being able to specify where it learned each individual feature.

To increase interpretability, techniques like saliency maps, Gradient-Weighted Class Activation Maps, and eye-tracking heat maps can be used to visualize which parts of an image are influencing the model's decisions.21,32 This helps make the outputs more interpretable by highlighting the regions that contributed most to the model's predictions (Fig 2). By using these techniques, clinicians can better understand and critically evaluate the AI model's decision-making process. However, there are limitations to these techniques. Different variants of Gradient-Weighted Class Activation Maps and other saliency-based methods can produce inconsistent results, even when applied to the same dataset and model.33,34 Interpretability techniques can also oversimplify a complex decision-making process or lead to bias in users. Ideally, clinicians would corroborate insights from multiple interpretability techniques and combine them with clinical expertise to ensure accuracy.

Figure 2.

Grad-CAM highlights the regions most important in the AI model's decision-making process, enhancing interpretability. In this case, the Grad-CAM (right) illustrates that the model relies on the arcuate region of the RNFL probability map to assess glaucoma, as indicated by the yellow highlights. This provides a visual explanation of the AI's focus during analysis. The color scale (yellow to blue) indicates the relevance of different regions, with yellow showing the highest relevance. AI = artificial intelligence; Grad-CAM = Gradient-Weighted Class Activation Maps; GCL = ganglion cell layer; RNFL = retinal nerve fiber layer; VF = visual fields.
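Grad-CAM weights each feature map of a chosen convolutional layer by the average gradient of the class score with respect to that map, sums the weighted maps, and keeps only positive values. The sketch below shows that final combination step, assuming the activations and gradients have already been obtained from a deep learning framework's backpropagation (not shown). The toy arrays are hypothetical, and the usual upsampling of the map to the input image size is omitted.

```python
def grad_cam(activations, gradients):
    """Combine feature maps into a Grad-CAM heat map scaled to 0-1.

    activations, gradients: lists of K feature maps, each an H x W list of lists.
    gradients[k][i][j] is the gradient of the class score with respect to
    activations[k][i][j], assumed to be supplied by a deep learning framework.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of that channel's gradients
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    cam = [[0.0] * width for _ in range(height)]
    for w, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += w * fmap[i][j]
    # ReLU keeps only regions that push the score toward the class
    cam = [[max(0.0, v) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]  # normalize for display
    return cam


# Two hypothetical 2 x 2 feature maps and their gradients
activations = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
gradients = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
print(grad_cam(activations, gradients))
```

The first map, whose gradients favor the class, lights up its top-left region; the second map has negative weight, so its region is suppressed by the ReLU.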

Model Testing

Retrospective Testing

Testing data are important for the development and validation of models. They are used to evaluate the model's performance after training by assessing how well the model performs with novel data (Table 2). Although training data teach the model, testing data provide a measure of its effectiveness. For a model to be deemed reliable, the testing data, like the training data, must be representative of the patient population they are intended to serve.

Table 2.

Types of AI Model Testing

| Testing Type | Description | Considerations |
|---|---|---|
| Internal (retrospective) | Testing on a held-out portion of the dataset used for training, commonly with an 80/20 train/test split. | A good first step but insufficient to prove robustness or generalizability because it can inflate accuracy and fail to represent true performance. |
| External (retrospective) | Testing on an entirely different dataset. | Provides a more robust measure of performance but may be limited by the availability of appropriate datasets. |
| Prospective | Real-time testing in a clinical setting. | Considered the gold standard for evaluating model performance but requires acquiring new data from patients, which can be time consuming and resource intensive. |
| Subgroup analysis | Evaluating AI performance across distinct subgroups, such as disease severity levels and demographic groups. | Essential in both retrospective and prospective testing for identifying and mitigating potential biases or disparities across disease severities and demographic groups. |

It is common for studies to report high model success rates, such as 95% accuracy. However, it is important to scrutinize these claims. Often, these metrics are derived from a single dataset split into subsets, commonly an 80/20 split, wherein 80% of the data are used for training and 20% are used for testing. Some researchers use a three-way split (80/10/10), in which an additional validation set helps to optimize model parameters before final testing. Data splits should always occur at the participant level rather than the eye or image level to prevent data leakage, in which the same patient's data appear in both training and testing sets and artificially inflate model performance. One must additionally consider whether any of the data are augmented, as discussed earlier. This train/test split is an effective validation method in early stages of model development but is not sufficient for model implementation into clinical practice. Robust testing data should come from a dataset separate from the training data. Ideally, this external dataset would encompass diverse demographic and geographic patient data so that the testing data accurately reflect the intended patient population. In ophthalmology, publicly available datasets, such as UK Biobank and AI-READI, are often used for this purpose.35,36,37 After strong performance with retrospective testing data, a model is ready for prospective testing.
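A participant-level split can be sketched by shuffling patient identifiers rather than images, so that every image from a given patient (both eyes, all visits) lands on the same side of the split. The record fields and the hypothetical dataset below are assumptions for illustration; a real study might also stratify by diagnosis or site, which this plain random split does not do.

```python
import random


def patient_level_split(records, test_fraction=0.2, seed=42):
    """Split image records into train/test sets so no patient appears in both.

    records: list of dicts with at least a "patient_id" key (one dict per image).
    """
    patient_ids = sorted({r["patient_id"] for r in records})
    rng = random.Random(seed)
    rng.shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_patients = set(patient_ids[:n_test])
    train = [r for r in records if r["patient_id"] not in test_patients]
    test = [r for r in records if r["patient_id"] in test_patients]
    return train, test


# Hypothetical dataset: 10 patients x 2 eyes x 2 scans = 40 images
records = [{"patient_id": f"P{p:02d}", "eye": eye, "image": f"P{p:02d}_{eye}_{n}.png"}
           for p in range(10) for eye in ("OD", "OS") for n in range(2)]
train, test = patient_level_split(records)
print(len(train), len(test))
```

The 40 images come from only 10 patients, so the test set contains 2 patients (8 images); splitting at the image level instead could place a patient's left-eye scan in training and right-eye scan in testing.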

Prospective Testing

Prospective testing involves evaluating the AI model on new patient data as they become available, mimicking clinical implementation. Prospective trials are essential for validating the model's performance and identifying implementation errors across different clinical environments. Conducting these trials at multiple centers ensures that the model can be used across various patient populations and clinical settings.

Subgroup Analysis

Subgroup analysis is vital for understanding AI model performance across different disease severities and demographic groups in both retrospective and prospective testing. Models trained on datasets with a disproportionate representation of severe diseases might perform better in advanced cases but struggle with earlier, subtler presentations. For example, in glaucoma detection, early-stage cases might be underrepresented in training datasets, as these datasets are typically curated using clear diagnostic criteria that may favor more advanced disease. This might limit the model's ability to perform well in the early cases in which clinicians most need assistance.

Demographic subgroup analysis is equally important to ensure that AI models perform well across all patient populations. By examining performance metrics for different demographic subgroups, researchers can identify and address potential inequities in model performance before model deployment. The growing trend of authors reporting performance metrics with subgroup analysis is a promising step toward ensuring AI models serve all patient populations.
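A subgroup analysis can be as simple as computing the same metric separately for each group. The sketch below computes sensitivity per subgroup from hypothetical (subgroup, true label, predicted label) triples; the same function works with demographic groups as keys. In this made-up example, the pooled sensitivity (4 of 7 diseased eyes detected) hides a large gap between early and advanced disease.

```python
from collections import defaultdict


def sensitivity_by_subgroup(cases):
    """Sensitivity (recall) per subgroup, among cases that truly have disease.

    cases: iterable of (subgroup, true_label, predicted_label) with labels 1/0.
    """
    detected = defaultdict(int)
    diseased = defaultdict(int)
    for group, truth, pred in cases:
        if truth == 1:
            diseased[group] += 1
            if pred == 1:
                detected[group] += 1
    return {group: detected[group] / diseased[group] for group in diseased}


# Hypothetical results grouped by disease stage
cases = [("early", 1, 1), ("early", 1, 0), ("early", 1, 0), ("early", 0, 0),
         ("advanced", 1, 1), ("advanced", 1, 1), ("advanced", 1, 1), ("advanced", 1, 0)]
print(sensitivity_by_subgroup(cases))
```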

Performance Metrics

Outcome measures are the final component of the assessment of AI models. Key performance metrics include sensitivity, specificity, precision, and accuracy. Sensitivity, or recall, is the proportion of actual positive cases (e.g., eyes with glaucoma) that the model correctly identifies. Specificity is the proportion of actual negative cases that the model correctly identifies. Precision, or positive predictive value, is the proportion of the model's positive predictions that are true positives. Accuracy is the overall proportion of correct predictions.
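These definitions reduce to simple ratios of confusion-matrix counts. The counts below describe a hypothetical screening population of 1000 eyes with 50 glaucoma cases (5% prevalence); they show how a model can reach 90% sensitivity, specificity, and accuracy while fewer than one in three of its positive calls is correct.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute common metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),   # recall: share of diseased eyes detected
        "specificity": tn / (tn + fp),   # share of healthy eyes correctly cleared
        "precision": tp / (tp + fp),     # positive predictive value
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical screening results: 50 eyes with glaucoma, 950 without
metrics = classification_metrics(tp=45, fp=95, tn=855, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```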

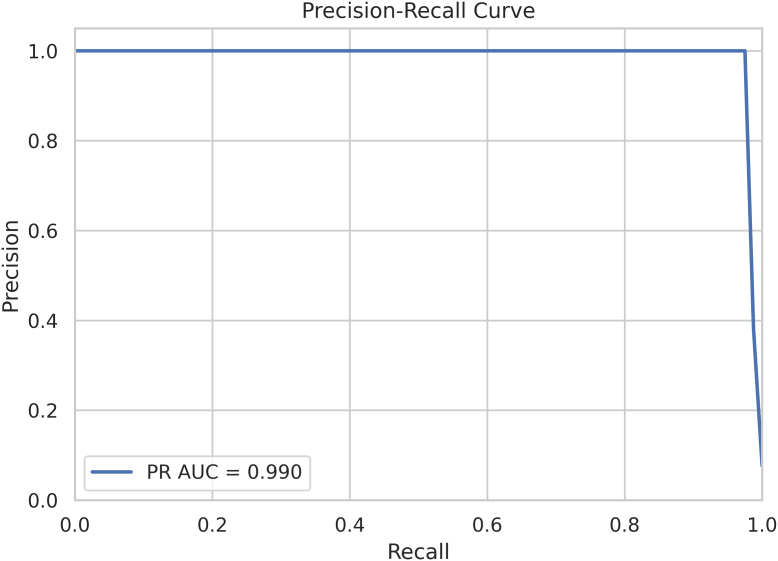

Both precision-recall (PR) curves and receiver operating characteristic (ROC) curves are used to highlight different aspects of model performance. The PR curve, which plots precision against recall across classification thresholds, is particularly useful for evaluating models on imbalanced datasets in which the number of positive instances is much lower than the number of negative instances. The area under the PR curve (AUC-PR) provides a single metric to summarize performance and is particularly useful in cases of rare diseases or class imbalance. For example, PR curves can be used to evaluate AI models in glaucoma detection, in which disease prevalence is often low in screening populations. High AUC-PR values indicate strong performance in identifying the rarer positive class. Figure 3 shows a sample PR curve with an AUC-PR of 0.990, indicating exceptional model performance (with perfect performance being 1.0). This near-perfect curve indicates that the model can capture almost all true positives with very few false positives.

Figure 3.

Precision-recall curve. AUC = area under the curve; PR = precision-recall.
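AUC-PR is often estimated as average precision: sweep the threshold from the highest score down and add up precision times each increase in recall. The sketch below uses that step-wise definition on hypothetical model scores; it is one of several estimators, so reported AUC-PR values can differ slightly depending on the method, and tied scores are not grouped here for simplicity.

```python
def precision_recall_points(scores, labels):
    """(precision, recall) at every threshold, sweeping from the highest score down."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    total_pos = sum(labels)
    tp = fp = 0
    points = []
    for _, label in ranked:
        if label == 1:
            tp += 1
        else:
            fp += 1
        points.append((tp / (tp + fp), tp / total_pos))
    return points


def average_precision(scores, labels):
    """Step-wise area under the PR curve: sum of precision x recall increments."""
    ap, prev_recall = 0.0, 0.0
    for precision, recall in precision_recall_points(scores, labels):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Hypothetical scores for six eyes (1 = glaucoma, 0 = no glaucoma)
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(round(average_precision(scores, labels), 3))
```

Here one healthy eye is ranked above the third glaucoma case, which lowers average precision from a perfect 1.0 to 11/12 (about 0.917).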

The ROC curve plots the true-positive rate (sensitivity) against the false-positive rate (1 − specificity) across all classification thresholds. The AUC-ROC is a widely used summary metric that indicates the model's ability to distinguish between classes. Receiver operating characteristic curves are generally better suited for evaluating overall performance, especially in balanced datasets.38 Figure 4 presents a sample ROC curve with an AUC of 0.91, indicating strong performance; an AUC of 1.0 represents perfect discrimination between classes. In an ideal scenario, the ROC curve would rise sharply and hug the top left corner, reflecting near-perfect sensitivity and specificity. The dotted line represents random guessing (AUC = 0.5).

Figure 4.

Receiver operating characteristic curve. ROC = receiver operating characteristic.
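An ROC curve can be built the same way: sweep the threshold downward, record (false-positive rate, true-positive rate) after each case, and integrate with the trapezoidal rule. The sketch below reuses the hypothetical scores from a toy example and, for simplicity, does not group tied scores.

```python
def roc_points(scores, labels):
    """(false-positive rate, true-positive rate) pairs, sweeping the threshold down."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    pos = sum(labels)
    neg = len(labels) - pos
    tp = fp = 0
    points = [(0.0, 0.0)]
    for _, label in ranked:
        if label == 1:
            tp += 1
        else:
            fp += 1
        points.append((fp / neg, tp / pos))
    return points


def roc_auc(scores, labels):
    """Area under the ROC curve by the trapezoidal rule."""
    pts = roc_points(scores, labels)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


# Hypothetical scores for six eyes (1 = glaucoma, 0 = no glaucoma)
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(round(roc_auc(scores, labels), 3))
```

The AUC-ROC also equals the probability that a randomly chosen diseased eye receives a higher score than a randomly chosen healthy eye: here 8 of the 9 diseased/healthy pairs are ranked correctly, matching the AUC of 8/9 (about 0.889).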

When interpreting these metrics, consider the clinical implications of missed pathology (false-negatives) and overdiagnosis (false-positives). A balanced evaluation using both PR and ROC curves, alongside subgroup analyses, helps confirm that the model performs well across different patient groups and clinical scenarios.

Clinical Implementation

Implementing an AI model in a clinical setting involves several practical considerations beyond its performance metrics. Challenges to clinical implementation, such as regulatory approval, data privacy, and user training, must be addressed to ensure successful clinical integration. Although these considerations may not always be explicitly discussed in scientific literature, they are critical to evaluating an AI model's readiness for clinical use and should be kept in mind when reviewing papers.

From a practical perspective, several steps are necessary before integrating a model into clinical practice. First, ensure that the practice has the necessary infrastructure and resources to integrate the AI model, including compatibility with existing practice equipment. A model trained on a specific OCT device might not perform well with data from another OCT device without another layer of AI to translate image formats.39 Standardization of data using Digital Imaging and Communications in Medicine standards will enhance future data transferability and system interoperability.4 The adoption of the Observational Medical Outcomes Partnership Common Data Model in ophthalmology provides a framework for standardizing diverse datasets, enabling large-scale research and promoting interoperability across health care systems.40

Second, ensure that the care team has a thorough understanding of how the model works. This knowledge is necessary for providing patients with informed consent, explaining the role of AI in their diagnosis or treatment, and addressing any questions or concerns they may have. Collaboration between clinicians and data scientists is essential during implementation to ensure that the model is not only technically robust but also clinically relevant and seamlessly integrated into the workflow.

Third, evaluate whether the model accounts for the full clinical context. For example, can glaucoma be accurately diagnosed based solely on an OCT report? Consider if the model integrates other relevant clinical data or if additional diagnostic tools and clinical judgment are required for a complete assessment. As AI advances, multimodal AI that incorporates all these components will become more prevalent.

Finally, consider practical aspects of delivering AI results in the clinical workflow. Will AI-generated results appear directly in the electronic health record, on a separate dashboard or application, or integrated into imaging systems? The content and format of AI reports are important. Clinicians need clear outputs that facilitate decision making rather than introduce confusion. Ensuring that AI-generated results are accessible and seamlessly integrated into existing workflows remains difficult when balancing regulatory requirements and interoperability with various health IT systems.

Ethical Considerations

The integration of AI into ophthalmology presents a range of ethical issues that must be carefully addressed. Artificial intelligence systems introduce unique challenges to the informed consent process. Physicians may not fully understand the intricacies of the AI models they use, raising concerns about their ability to adequately explain the technology, its benefits, and its limitations to patients. Simultaneously, patients may struggle to comprehend how AI contributes to their care given the abstract nature of machine learning algorithms. Furthermore, the black-box nature of AI models raises issues of responsibility, particularly in cases of errors or misdiagnosis.40 In addition, although AI may reduce clinician burnout, trainees may become less skilled due to overreliance on automated systems. Papers like this one, which aim to demystify AI, will hopefully help move the field toward more informed consent by equipping clinicians with the knowledge they need.

Additionally, the development of AI relies heavily on patient data. While efforts are made to deidentify data, there is growing recognition that advances in technology could reidentify data previously considered anonymous.41 This raises concerns about patient privacy and data breaches.42 In addition, questions of ownership and compensation emerge: if patients' data are instrumental in building models, should patients have a stake in any benefits derived from those models?

Research Reporting Guidelines

Existing guidelines related to AI in research and medicine focus on how to report trials in AI. The Standard Protocol Items: Recommendations for Interventional Trials–Artificial Intelligence (SPIRIT-AI) and Consolidated Standards of Reporting Trials–Artificial Intelligence (CONSORT-AI) extensions were developed to provide minimum guidelines for reporting clinical trial protocols and results, and these guidelines have been successfully applied in some of the early trials in ophthalmology.43,44 Although no ophthalmology journals mandate CONSORT-AI in their online submission guidelines, Ophthalmology Science recommends its use. Despite the absence of strict mandates, approximately 90% of AI studies were found to be compliant with CONSORT-AI guidelines.45,46 Although these guidelines assist in evaluating the design and conduct of trials, they do not emphasize the intricacies of model development.

Model cards and the Minimum Information About Clinical Artificial Intelligence Modeling checklist facilitate easier interpretation of AI models. They encourage authors to summarize the key components outlined in this guide.47,48 As more authors adopt these tools, information accessibility in the field will likely improve.

Conclusion

As AI continues to advance, its potential to transform clinical practice, particularly in image-heavy fields like ophthalmology, becomes increasingly evident. This paper has outlined a practical guide for assessing AI imaging models, emphasizing the importance of understanding model development, training data quality, performance metrics, and implementation. By critically evaluating these aspects, physicians can make informed decisions about integrating AI technologies into their practices. As the landscape of health care continues to evolve, proactive engagement with AI will empower clinicians to lead the way in innovation, ensuring that patient safety and care quality remain at the forefront of medical practice.

To maximize the benefits of AI, clinicians should ensure that models are rigorously validated across diverse populations, imaging devices, and clinical settings before adoption. Prospective testing of models in the clinic will reveal whether AI tools can be seamlessly integrated into existing workflows. Regulatory approval from governing bodies and institutional review are also necessary steps before AI algorithms can be integrated into clinical workflows, to ensure safety, efficacy, and compliance with ethical and legal standards. By critically evaluating AI literature as it evolves, physicians can make safer decisions about AI integration into clinical practice.

Manuscript no. XOPS-D-24-00417.

Footnotes

All authors have completed and submitted the ICMJE disclosures form.

The Article Publishing Charge (APC) for this article was paid by Department of Ophthalmology, Columbia University Irving Medical Center.

The authors have made the following disclosures:

Supported in part by an unrestricted grant to the Columbia University Department of Ophthalmology from Research to Prevent Blindness, Inc., New York, NY, USA. Author K.A.T. receives research funding from Topcon Healthcare, Inc.

HUMAN SUBJECTS: No human subjects were included in this study.

No animal subjects were used in this study.

Author Contributions:

Conception and design: McCarthy

Data collection: McCarthy

Analysis and interpretation: McCarthy, Valenzuela, Chen, Dagi Glass, Thakoor

Obtained funding: N/A

Overall responsibility: McCarthy, Valenzuela, Chen, Dagi Glass, Thakoor

References

- 1. Li Z., Wang L., Wu X., et al. Artificial intelligence in ophthalmology: the path to the real-world clinic. Cell Rep Med. 2023;4. doi: 10.1016/j.xcrm.2023.101095.

- 2. Ting D.S.W., Pasquale L.R., Peng L., et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. 2019;103:167–175. doi: 10.1136/bjophthalmol-2018-313173.

- 3. Radgoudarzi N., Hallaj S., Boland M.V., et al. Barriers to extracting and harmonizing glaucoma testing data: gaps, shortcomings, and the pursuit of FAIRness. Ophthalmol Sci. 2024;4. doi: 10.1016/j.xops.2024.100621.

- 4. Lee A.Y., Campbell J.P., Hwang T.S., et al. Recommendations for standardization of images in ophthalmology. Ophthalmology. 2021;128:969–970. doi: 10.1016/j.ophtha.2021.03.003.

- 5. Ting D.S.W., Lee A.Y., Wong T.Y. An ophthalmologist's guide to deciphering studies in artificial intelligence. Ophthalmology. 2019;126:1475–1479. doi: 10.1016/j.ophtha.2019.09.014.

- 6. Hood D.C., La Bruna S., Tsamis E., et al. Detecting glaucoma with only OCT: implications for the clinic, research, screening, and AI development. Prog Retin Eye Res. 2022;90. doi: 10.1016/j.preteyeres.2022.101052.

- 7. Al-Aswad L.A., Ramachandran R., Schuman J.S., et al. Collaborative community for ophthalmic imaging executive committee and glaucoma workgroup. Artificial intelligence for glaucoma: creating and implementing artificial intelligence for disease detection and progression. Ophthalmol Glaucoma. 2022;5:e16–e25. doi: 10.1016/j.ogla.2022.02.010.

- 8. Chen D., Geevarghese A., Lee S., et al. Transparency in artificial intelligence reporting in ophthalmology-A scoping review. Ophthalmol Sci. 2024;4. doi: 10.1016/j.xops.2024.100471.

- 9. Wang S., He X., Jian Z., et al. Advances and prospects of multi-modal ophthalmic artificial intelligence based on deep learning: a review. Eye Vis. 2024;11:38. doi: 10.1186/s40662-024-00405-1.

- 10. Chen J., Wu X., Li M., et al. EE-explorer: a multimodal artificial intelligence system for eye emergency triage and primary diagnosis. Am J Ophthalmol. 2023;252:253–264. doi: 10.1016/j.ajo.2023.04.007.

- 11. Lim W.S., Ho H.Y., Ho H.C., et al. Use of multimodal dataset in AI for detecting glaucoma based on fundus photographs assessed with OCT: focus group study on high prevalence of myopia. BMC Med Imaging. 2022;22:206. doi: 10.1186/s12880-022-00933-z.

- 12. Khan S.M., Liu X., Nath S., et al. A global review of publicly available datasets for ophthalmological imaging: barriers to access, usability, and generalisability. Lancet Digit Health. 2021;3:e51–e66. doi: 10.1016/S2589-7500(20)30240-5. [Published correction appears in Lancet Digit Health. 2021 Jan;3(1):e7.]

- 13. Nakayama L.F., Mitchell W.G., Shapiro S., et al. Sociodemographic disparities in ophthalmological clinical trials. BMJ Open Ophthalmol. 2023;8. doi: 10.1136/bmjophth-2022-001175.

- 14. Tschandl P. Risk of bias and error from data sets used for dermatologic artificial intelligence. JAMA Dermatol. 2021;157:1271–1273. doi: 10.1001/jamadermatol.2021.3128.

- 15. Bachina P., Garin S.P., Kulkarni P., et al. Coarse race and ethnicity labels mask granular underdiagnosis disparities in deep learning models for chest radiograph diagnosis. Radiology. 2023;309. doi: 10.1148/radiol.231693.

- 16. Li C., Chua J., Schwarzhans F., et al. Assessing the external validity of machine learning-based detection of glaucoma. Sci Rep. 2023;13:558. doi: 10.1038/s41598-023-27783-1.

- 17. FairCLIP: harnessing fairness in vision-language learning. arXiv. 2024. https://arxiv.org/html/2403.19949v2

- 18. Lucy Z., Yan L., Tian Y., et al. Improving cup-rim segmentation by fair error-bound scaling with segment anything model (SAM). Invest Ophthalmol Vis Sci. 2024;65:2413.

- 19. National Institutes of Health (NIH). All of Us Research Program overview. 2020. https://allofus.nih.gov/about/program-overview

- 20. Phene S., Dunn R.C., Hammel N., et al. Deep learning and glaucoma specialists: the relative importance of optic disc features to predict glaucoma referral in fundus photographs. Ophthalmology. 2019;126:1627–1639. doi: 10.1016/j.ophtha.2019.07.024.

- 21. Akerman M., Choudhary S., Liebmann J.M., et al. Extracting decision-making features from the unstructured eye movements of clinicians on glaucoma OCT reports and developing AI models to classify expertise. Front Med (Lausanne). 2023;10. doi: 10.3389/fmed.2023.1251183.

- 22. Stember J.N., Celik H., Krupinski E., et al. Eye tracking for deep learning segmentation using convolutional neural networks. J Digit Imaging. 2019;32:597–604. doi: 10.1007/s10278-019-00220-4.

- 23. Deng J., Dong W., Socher R., et al. ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition. Miami, FL, USA; 2009:248–255.

- 24. Gulshan V., Peng L., Coram M., et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216.

- 25. Poplin R., Varadarajan A.V., Blumer K., et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. 2018;2:158–164. doi: 10.1038/s41551-018-0195-0.

- 26. Zhou Y., Chia M.A., Wagner S.K., et al. A foundation model for generalizable disease detection from retinal images. Nature. 2023;622:156–163. doi: 10.1038/s41586-023-06555-x.

- 27. Chuter B., Huynh J., Hallaj S., et al. Evaluating a foundation artificial intelligence model for glaucoma detection using color fundus photographs. Ophthalmol Sci. 2024;5. doi: 10.1016/j.xops.2024.100623.

- 28. Goceri E. Medical image data augmentation: techniques, comparisons and interpretations. Artif Intell Rev. 2023;56:12561–12605. doi: 10.1007/s10462-023-10453-z.

- 29. Joo J., Kim S.Y., Kim D., et al. Enhancing automated strabismus classification with limited data: data augmentation using StyleGAN2-ADA. PLoS One. 2024;19. doi: 10.1371/journal.pone.0303355.

- 30. Mihalache A., Huang R.S., Popovic M.M., et al. Accuracy of an artificial intelligence chatbot's interpretation of clinical ophthalmic images. JAMA Ophthalmol. 2024;142:321–326. doi: 10.1001/jamaophthalmol.2024.0017.

- 31. Antaki F., Touma S., Milad D., et al. Evaluating the performance of ChatGPT in ophthalmology: an analysis of its successes and shortcomings. Ophthalmol Sci. 2023;3. doi: 10.1016/j.xops.2023.100324.

- 32. Selvaraju R.R., Cogswell M., Das A., et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. 2020;128:336–359.

- 33. Fan R., Alipour K., Bowd C., et al. Detecting glaucoma from fundus photographs using deep learning without convolutions: transformer for improved generalization. Ophthalmol Sci. 2022;3. doi: 10.1016/j.xops.2022.100233.

- 34. Malerbi F.K., Nakayama L.F., Prado P., et al. Heatmap analysis for artificial intelligence explainability in diabetic retinopathy detection: illuminating the rationale of deep learning decisions. Ann Transl Med. 2024;12:89. doi: 10.21037/atm-24-73.

- 35. UK Biobank. 2019. https://www.ukbiobank.ac.uk/

- 36. AI-READI Consortium. AI-READI: rethinking AI data collection, preparation and sharing in diabetes research and beyond. Nat Metab. 2024;6:2210–2212. doi: 10.1038/s42255-024-01165-x.

- 37. Kulyabin M., Zhdanov A., Nikiforova A., et al. OCTDL: optical coherence tomography dataset for image-based deep learning methods. Sci Data. 2024;11:365. doi: 10.1038/s41597-024-03182-7.

- 38. Hicks S.A., Strümke I., Thambawita V., et al. On evaluation metrics for medical applications of artificial intelligence. Sci Rep. 2022;12:5979. doi: 10.1038/s41598-022-09954-8.

- 39. Wu Y., Olvera-Barrios A., Yanagihara R., et al. Training deep learning models to work on multiple devices by cross-domain learning with no additional annotations. Ophthalmology. 2023;130:213–222. doi: 10.1016/j.ophtha.2022.09.014.

- 40. Cai C.X., Halfpenny W., Boland M.V., et al. Advancing toward a common data model in ophthalmology: gap analysis of general eye examination concepts to standard observational medical outcomes partnership (OMOP) concepts. Ophthalmol Sci. 2023;3. doi: 10.1016/j.xops.2023.100391.

- 41. Evans N.G., Wenner D.M., Cohen I.G., et al. Emerging ethical considerations for the use of artificial intelligence in ophthalmology. Ophthalmol Sci. 2022;2. doi: 10.1016/j.xops.2022.100141.

- 42. American Academy of Ophthalmology Board of Trustees. Special commentary: balancing benefits and risks: the case for retinal images to be considered as nonprotected health information for research purposes. Ophthalmology. 2025;132:115–118. doi: 10.1016/j.ophtha.2024.07.031.

- 43. Abdullah Y.I., Schuman J.S., Shabsigh R., et al. Ethics of artificial intelligence in medicine and ophthalmology. Asia Pac J Ophthalmol (Phila). 2021;10:289–298. doi: 10.1097/APO.0000000000000397.

- 44. Rivera S.C., Liu X., Chan A.W., et al. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI Extension. BMJ. 2020;370. doi: 10.1136/bmj.m3210.

- 45. Liu X., Cruz Rivera S., Moher D., et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat Med. 2020;26:1364–1374. doi: 10.1038/s41591-020-1034-x.

- 46. Martindale A.P.L., Ng B., Ngai V., et al. Concordance of randomised controlled trials for artificial intelligence interventions with the CONSORT-AI reporting guidelines. Nat Commun. 2024;15:1619. doi: 10.1038/s41467-024-45355-3.

- 47. Chen D.K., Modi Y., Al-Aswad L.A. Promoting transparency and standardization in ophthalmologic artificial intelligence: a call for artificial intelligence model card. Asia Pac J Ophthalmol (Phila). 2022;11:215–218. doi: 10.1097/APO.0000000000000469.

- 48. Norgeot B., Quer G., Beaulieu-Jones B.K., et al. Minimum information about clinical artificial intelligence modeling: the MI-CLAIM checklist. Nat Med. 2020;26:1320–1324. doi: 10.1038/s41591-020-1041-y.