Abstract

Diabetic retinopathy (DR) is a microvascular complication of diabetes that can lead to blindness if left untreated. Regular monitoring is crucial for detecting early signs of referable DR and its progression to moderate-to-severe non-proliferative DR, proliferative DR (PDR), and macular edema (ME), the most common cause of vision loss in DR. Currently, aside from considerations during pregnancy, sex is not factored into DR diagnosis, management or treatment. Here we examine whether DR manifests differently in male and female patients, using a dataset of retinal images and leveraging convolutional neural networks (CNNs) integrated with explainable artificial intelligence (AI) techniques. To minimize confounding variables, we curated 2,967 fundus images from a larger dataset of DR patients acquired from EyePACS, matching male and female groups for age, ethnicity, severity of DR, and hemoglobin A1c levels. Next, we fine-tuned two pre-trained VGG16 models (one pre-trained on the ImageNet dataset and another on a sex classification task using healthy fundus images), achieving AUC scores of 0.72 and 0.75, respectively, both significantly above chance level. To uncover how these models distinguish between male and female retinas, we used the Guided Grad-CAM technique to generate saliency maps, highlighting critical retinal regions for correct classification. Saliency maps showed that the CNNs focused on different retinal regions by sex: the macula in females, and the optic disc and peripheral vasculature along the arcades in males. This pattern differed noticeably from the saliency maps generated by CNNs trained on healthy eyes. These findings raise the hypothesis that DR may manifest differently by sex, with women potentially at higher risk of developing ME and men potentially at greater risk of PDR.

Introduction

Men and women are affected differently across a range of medical conditions from cardiovascular to mental health disorders [1–5]. Although clinical manifestations and presentation of many diseases vary between men and women [6–8] key differences remain under-recognized due to a paucity of research that systematically takes sex and gender into consideration [9, 10]. Biases in diagnosis and treatment contribute to poorer outcomes across all sexes and genders, including men, women, and gender-diverse individuals. For example, osteoporosis is often underdiagnosed and undertreated in men, significantly impacting their morbidity and quality of life [3]. Similarly, while diabetes is more prevalent among men [11], women are diagnosed at an older age, greater disease severity, and higher body fat mass than men [12–15]. In addition, growing evidence suggests that diabetic complications manifest differently in women and men [16, 17]. For instance, some studies indicate that women and girls may be at greater risk of developing diabetic kidney disease compared to age-matched men and boys [18, 19]. Computational modeling predictions further support sex-specific differences in diabetes-induced changes in kidney function, highlighting distinct physiological responses in males and females [20]. Elucidating specific fine-grained diagnostic markers for men and women would enhance diagnostic precision and enable earlier detection of disease across diverse populations.

Diabetic retinopathy (DR), a microvascular complication of diabetes and a leading cause of blindness, affected an estimated 103 million people worldwide in 2020, with projections rising to 160 million by 2045 [21]. Delays in the diagnosis of DR pose significant risks, including progression to vision-threatening stages and systemic complications. Identifying novel sex-specific markers would likely enhance the diagnostic accuracy of DR, enabling earlier and more precise detection of the disease. There is some evidence in the literature that sex and gender play a role in how this condition develops, manifests and progresses [22–26]. However, aside from considerations during pregnancy, sex is not factored into the diagnosis, management or treatment of DR. This lack of sex- and gender-specific medical guidelines for DR highlights the need for more research into the underlying mechanisms of these differences to inform more precise diagnostic and treatment strategies. Here, we investigate sex-specific manifestations of DR using artificial intelligence to analyze retinal fundus images.

To achieve this, we utilize a comprehensive dataset of retinal images to compare male and female patients diagnosed with DR. We employ a recently developed methodological pipeline that integrates convolutional neural networks (CNNs) with explainable artificial intelligence (AI) techniques to analyze retinal fundus images [27]. AI applications have become increasingly popular in medical image analysis, particularly in ophthalmology, where machine learning models have been successfully used to detect various eye diseases, including DR, with high sensitivity and precision [28–34]. However, the broader adoption of deep learning in medicine is challenged by the "black box" nature of these models. While CNNs are powerful, they often operate in a way that is not transparent, making it difficult to understand how they arrive at their decisions. This lack of clarity poses a significant barrier to integrating AI into clinical settings, where decision-making transparency is critical. Explainable AI methods, such as post-hoc interpretability algorithms, offer a possible solution by providing visualizations that highlight important areas of medical images, like the heat maps generated by the Grad-CAM algorithm [35–37].

The rationale of the present work is as follows: Previous studies have shown that CNNs can successfully classify fundus images based on sex [38–40] and have identified several retinal markers that differ between healthy male and female eyes [27]. Here, we investigate whether a CNN model can be trained to identify sex in retinal fundus images of DR patients. If sex differences in the manifestation of DR exist, the model would likely extract and utilize these differences, in addition to any known markers of sex. Thus, we proceed by first training a CNN model to classify the sex of patients based on fundus images from those with DR, and then applying the Guided Grad-CAM technique to explore sex differences in these retinas, aiming to identify distinct manifestations of DR in males and females.

Materials and methods

Fundus dataset

We used a private EyePACS dataset [41] (access date: February 18, 2022), which contains approximately 90,000 fundus images, of which 9,944 were labeled with a DR diagnosis. After matching male and female images for age, ethnicity, severity of DR, and hemoglobin A1c (HbA1c) level, and including only images of good and excellent quality, we obtained a subset of 2,967 DR-labeled images, of which 1,491 were from female patients and 1,476 were from male patients. The DR levels include mild nonproliferative, moderate nonproliferative, severe nonproliferative, and proliferative DR. This subset was partitioned into training, validation, and testing sets containing 2,071, 448, and 448 images, respectively. The composition of this dataset in terms of age, sex, ethnicity, severity of DR, and HbA1c level is shown in Table 1. The dataset composition, presented separately for the training, validation, and test sets, is provided in S1–S3 Tables. Matching of male and female groups for age, HbA1c, ethnicity, and severity of DR was done to prevent the model from relying on these potentially confounding variables when classifying a patient’s sex. After matching, the effect size of differences in age and HbA1c between males and females was 0.16 and 0.01, respectively, while the total variation distance in ethnicity and DR severity between the two groups was 0.09 and 0.08, respectively. Each fundus image was cropped into a square format by detecting the circular contour of the fundus and centring it within a square with equal height and width. The dataset did not contain any identifiable information.
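
The total variation distances quoted above can be checked against the ethnicity and DR severity counts listed in Table 1. The following is a minimal sketch, not the authors' code: the function names are hypothetical, and the `cohens_d` helper assumes that the reported "effect size" is a standardized mean difference with a pooled standard deviation, which the text does not state explicitly.

```python
from math import sqrt
from statistics import mean, variance

# Counts copied from Table 1 as (female, male) pairs.
ETHNICITY = {
    "Latin American": (1118, 1011),
    "Caucasian": (136, 240),
    "Multi-racial": (79, 58),
    "Asian": (52, 53),
    "African Descent": (46, 60),
    "Other": (34, 26),
    "Native American": (14, 16),
    "Indian Subcontinent Origin": (7, 10),
    "Unknown": (5, 2),
}
SEVERITY = {
    "Mild NPDR": (679, 568),
    "Moderate NPDR": (696, 794),
    "Severe NPDR": (57, 73),
    "Proliferative DR": (59, 41),
}


def total_variation_distance(counts):
    """Half the L1 distance between the two groups' category proportions."""
    n_f = sum(f for f, _ in counts.values())
    n_m = sum(m for _, m in counts.values())
    return 0.5 * sum(abs(f / n_f - m / n_m) for f, m in counts.values())


def cohens_d(a, b):
    """Standardized mean difference with a pooled SD (assumed definition)."""
    pooled_var = ((len(a) - 1) * variance(a) + (len(b) - 1) * variance(b)) / (
        len(a) + len(b) - 2
    )
    return (mean(a) - mean(b)) / sqrt(pooled_var)


print(round(total_variation_distance(ETHNICITY), 2))  # 0.09
print(round(total_variation_distance(SEVERITY), 2))   # 0.08
```

Both values agree with the distances reported for ethnicity and DR severity. The per-patient age and HbA1c values are not available here, so `cohens_d` is shown only as a definition.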

Table 1. Composition of the CNN Development Set. The CNN Development Set was balanced with respect to the size of the female vs. male sets, which were matched for age, ethnicity, severity of DR, HbA1c level, and years with diabetes. NPDR: Non-proliferative DR.

| CNN Development Set | Female | Male | Total |
|---|---|---|---|
| N | 1491 | 1476 | 2967 |
| Age (M ± SD) | | | |
| Ethnicity (N) | | | |
| Latin American | 1118 | 1011 | 2129 |
| Caucasian | 136 | 240 | 376 |
| Multi-racial | 79 | 58 | 137 |
| Asian | 52 | 53 | 105 |
| African Descent | 46 | 60 | 106 |
| Other | 34 | 26 | 60 |
| Native American | 14 | 16 | 30 |
| Indian Subcontinent Origin | 7 | 10 | 17 |
| Unknown | 5 | 2 | 7 |
| Severity of DR (N) | | | |
| Mild NPDR | 679 | 568 | 1247 |
| Moderate NPDR | 696 | 794 | 1490 |
| Severe NPDR | 57 | 73 | 130 |
| Proliferative DR | 59 | 41 | 100 |
| HbA1c (M ± SD) | | | |

CNN architecture

Following our previous work [27], we used the VGG16 architecture [42] and fine-tuned two pre-trained models: (1) one pre-trained on the ImageNet dataset [43], and (2) another pre-trained on a sex classification task using fundus images from healthy individuals (no DR). The original VGG16 model, designed for 1000 output classes for the ImageNet classification contest, was modified for our binary sex classification task by replacing the final fully connected (FC) layer with a randomly initialized FC layer. This new layer had 4096 input features (corresponding to the VGG model’s output features) and two output classes (male and female).

Training procedure

Our training approach combined transfer learning with fine-tuning. For the first two epochs, we kept the network’s weights frozen, allowing only the newly added classifier layer to learn the task. This practice prevents the initial random weights of the FC layer from steering the network parameters in undesired directions. After these initial two epochs, once the classifier was partially trained, we unfroze the network’s weights and allowed them to be updated over the next 100 epochs. Hyperparameters were optimized by testing various combinations and selecting those with the best validation performance. A summary of the hyperparameters used for model architecture, training and evaluation is provided in Table 2.
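
The two-phase schedule can be written out as a small, framework-independent plan. This is a sketch under stated assumptions: the constants follow the values in Table 2, the function name `epoch_plan` is hypothetical, and it assumes that the learning-rate annealing counter starts at the first epoch of the whole run, which the text does not specify.

```python
FROZEN_EPOCHS = 2        # only the new classifier layer is trained
FINE_TUNE_EPOCHS = 100   # all weights are trainable
INITIAL_LR = 3e-4
DECAY_FACTOR = 0.5
DECAY_EVERY = 20


def epoch_plan(epoch: int) -> dict:
    """Return the training configuration for a zero-based epoch index."""
    # Assumption: the step decay is counted from epoch 0 of the whole run.
    lr = INITIAL_LR * DECAY_FACTOR ** (epoch // DECAY_EVERY)
    return {"epoch": epoch, "backbone_frozen": epoch < FROZEN_EPOCHS, "lr": lr}


plan = [epoch_plan(e) for e in range(FROZEN_EPOCHS + FINE_TUNE_EPOCHS)]
print(len(plan))                   # 102
print(plan[0]["backbone_frozen"])  # True
print(plan[2]["backbone_frozen"])  # False
print(plan[20]["lr"])              # 0.00015
```

The total of 102 epochs matches the "number of epochs" entry in Table 2 (2 frozen plus 100 fine-tuning epochs).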

Table 2. Summary of the hyperparameters used for training and evaluating the model.

| Training Hyperparameters | |
|---|---|
| Optimizer | |
| method | Adam |
| batch size | 128 |
| number of epochs | 102 |
| initial learning rate | 0.0003 |
| learning rate annealing | 0.5 every 20 epochs |
| Criterion | |
| loss function | binary cross-entropy |
| class weights (Female, Male) | (0.463, 0.537) |
| Network | |
| architecture | VGG16 |
| input image resolution | 224 × 224 |
| number of features (hidden layer) | 4096 |
| number of output classes | 2 |
| Training Transforms | |
| random rotation | -10 to +10 degrees |
| resize | 224 × 224 |
| Validation Transforms | |
| resize | 224 × 224 |

Data augmentation and transforms

During CNN training, we implemented data augmentation techniques to prevent the model from merely memorizing image-label pairs. We first applied random rotations to the images, ranging uniformly from -10 to +10 degrees. We also introduced an innovative approach specifically tailored to fundus image datasets. Since the left and right retinas are mirror images with approximate symmetry along the vertical axis, they introduce significant image-level variation (left vs. right). However, this source of variation is not relevant to the sex classification task. To address this, we horizontally flipped all right-eye images so that they resemble left-eye fundus images. This horizontal flipping transformation reduces task-irrelevant image variance in the dataset, improving model performance in sex classification [27]. The rationale for this improvement is that horizontal flipping ensures the model encounters the same anatomical retinal features in consistent locations (e.g., optic disc on the left, and fovea on the right), facilitating more efficient feature learning.
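
The orientation normalization and the rotation sampling can be illustrated with NumPy. This is a minimal sketch: the function names and the `is_right_eye` flag are hypothetical (the text does not say how laterality is stored), and only the rotation angle is sampled here, not the rotation itself.

```python
import numpy as np


def to_left_eye_orientation(image: np.ndarray, is_right_eye: bool) -> np.ndarray:
    """Mirror right-eye images so every image has a left-eye layout."""
    # image has shape (height, width, channels); reversing axis 1 mirrors left-right.
    return image[:, ::-1, :] if is_right_eye else image


def sample_rotation_angle(rng: np.random.Generator, max_degrees: float = 10.0) -> float:
    """Draw a rotation angle uniformly from [-max_degrees, +max_degrees)."""
    return float(rng.uniform(-max_degrees, max_degrees))


rng = np.random.default_rng(0)
toy = np.arange(2 * 3 * 1).reshape(2, 3, 1)  # tiny stand-in for a fundus image
flipped = to_left_eye_orientation(toy, is_right_eye=True)
print(flipped[..., 0].tolist())  # [[2, 1, 0], [5, 4, 3]]
angle = sample_rotation_angle(rng)
```

The flip is deterministic and applied according to laterality, so it acts as a normalization step rather than as a random augmentation.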

Model evaluation

We used the validation set to assess the model’s performance and tune the hyperparameters based on the AUC score. In addition to AUC, we monitored accuracy and binary cross-entropy (BCE) loss on both training and validation sets throughout the epochs to track training progress. To determine the best-performing model, we selected the epoch with the highest validation AUC score and saved the model’s weights at this point as "the best model’s weights." Subsequently, we reloaded this best model to evaluate and report its performance on an unseen test set.
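
The selection rule can be sketched in plain Python. The AUC is computed here from its rank interpretation (the probability that a randomly chosen positive example scores higher than a randomly chosen negative one); the function names and the toy numbers are illustrative only and are not taken from the study.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def best_epoch(val_auc_per_epoch):
    """Index of the epoch with the highest validation AUC (first one on ties)."""
    return max(range(len(val_auc_per_epoch)), key=val_auc_per_epoch.__getitem__)


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))         # 0.75
print(best_epoch([0.61, 0.70, 0.68]))  # 1
```

In a training loop, the weights would be saved whenever the current epoch becomes the best one, and that checkpoint is the one evaluated on the test set.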

Generation of saliency maps (Grad-CAM)

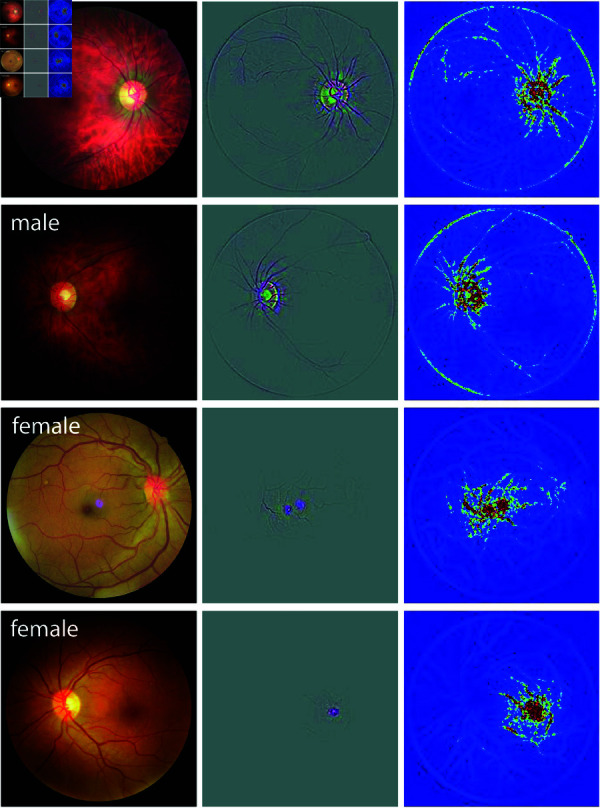

To generate saliency maps, we used the Grad-CAM (Gradient-weighted Class Activation Mapping) technique, introduced by Selvaraju et al. [37]. The input images were fed into the trained CNN for a forward pass, during which we saved the predicted labels. The model’s output was one-hot encoded, designating the predicted class as one and the other class as zero. The Grad-CAM saliency map was generated by backpropagating the gradient of the predicted label to the last convolutional layer. In parallel, we computed the Guided Backpropagation map through a deconvolutional network designed as the inverse of the trained model (see [36] for details). Finally, the outputs of these two methods were combined through pixel-wise multiplication to generate the Guided Grad-CAM saliency maps. In the saliency maps, each colour channel reflects the attention given to its corresponding colour (middle column in Figs 1 and 2). To provide a clearer view of the overall attention distribution across the image, we also converted the colour saliency maps into amplitude-only versions by aggregating the three colour channels. These amplitude maps are colour-coded and displayed in the right column of Figs 1 and 2.
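
The combination step can be illustrated with NumPy on synthetic arrays. This is a sketch, not the authors' pipeline: the activations, gradients, and guided-backpropagation map are random stand-ins, nearest-neighbour upsampling is an assumption (bilinear interpolation is also common), and the Euclidean norm is only one possible way of "aggregating the three colour channels", since the text does not specify the aggregation.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Coarse Grad-CAM map from last-conv activations and gradients, both (K, h, w)."""
    weights = gradients.mean(axis=(1, 2))             # one weight per channel
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum -> (h, w)
    return np.maximum(cam, 0.0)                       # ReLU keeps positive evidence


def guided_grad_cam(cam: np.ndarray, guided_backprop: np.ndarray) -> np.ndarray:
    """Pixel-wise product of the upsampled Grad-CAM map and an (H, W, 3) map."""
    height, width, _ = guided_backprop.shape
    # Assumption: nearest-neighbour upsampling by an integer factor.
    block = np.ones((height // cam.shape[0], width // cam.shape[1]))
    upsampled = np.kron(cam, block)
    return guided_backprop * upsampled[..., None]


def amplitude(saliency: np.ndarray) -> np.ndarray:
    """Collapse the three colour channels into one map (assumed: Euclidean norm)."""
    return np.linalg.norm(saliency, axis=-1)


rng = np.random.default_rng(0)
acts = rng.random((512, 14, 14))            # VGG16 last conv output for a 224 x 224 input
grads = rng.standard_normal((512, 14, 14))  # stand-in for backpropagated gradients
gbp = rng.standard_normal((224, 224, 3))    # stand-in for the guided-backprop map
saliency = guided_grad_cam(grad_cam(acts, grads), gbp)
print(saliency.shape, amplitude(saliency).shape)  # (224, 224, 3) (224, 224)
```

The Grad-CAM map localizes class-relevant regions at low resolution, while the guided-backpropagation map contributes pixel-level detail; their product keeps fine detail only inside the regions that Grad-CAM marks as relevant.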

Fig 1. Saliency map results of sample fundus images from two male and two female patients for the ImageNet pre-trained model.

In each panel, the original fundus image, the Guided Grad-CAM output (3-channel image), and its colour-coded amplitude (single-channel image) are shown from left to right.

Fig 2. Saliency map results of sample fundus images from two male and two female patients for the sex-trained model.

In each panel, the original fundus image, the Guided Grad-CAM output (3-channel image), and its colour-coded amplitude (single-channel image) are shown from left to right.

Results

Sex classification

The models were tested on the unseen test set, and the performance results are reported in Table 3. To determine the significance of the results and calculate p-values, we compared performance metrics achieved by each model to chance-level performance using a non-parametric t-test with bootstrapping. The ImageNet pre-trained model achieved an average test AUC of 0.72 and test accuracy of 0.66, both significantly above the chance level (p-value <0.001). The model pretrained on sex classification showed improved performance, achieving an AUC of 0.75 and an accuracy of 0.69 on the test set, both significantly higher than chance (p-value <0.001).
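
One plausible reading of the bootstrap comparison against chance is sketched below for accuracy; the same resampling idea applies to AUC. The details are assumptions rather than the authors' procedure: the function name, the number of resamples, the percentile interval, and the one-sided p-value definition are illustrative, and the count of 297 correct predictions is chosen only because 297 of 448 test images reproduces the reported accuracy of 0.663.

```python
import random


def bootstrap_accuracy(correct, n_resamples=2000, seed=0):
    """Percentile interval for accuracy and a one-sided p-value against chance (0.5)."""
    rng = random.Random(seed)
    n = len(correct)
    stats = sorted(sum(rng.choices(correct, k=n)) / n for _ in range(n_resamples))
    lower = stats[int(0.025 * n_resamples)]
    upper = stats[int(0.975 * n_resamples) - 1]
    # Share of resamples at or below chance, with +1 smoothing so p is never exactly 0.
    p_value = (sum(s <= 0.5 for s in stats) + 1) / (n_resamples + 1)
    return lower, upper, p_value


# Assumed per-image outcomes: 297 correct and 151 incorrect out of 448 test images.
correct = [1] * 297 + [0] * 151
lower, upper, p_value = bootstrap_accuracy(correct)
print(round(sum(correct) / len(correct), 3))  # 0.663
print(p_value < 0.001)                        # True
```

With these assumptions, none of the resampled accuracies falls at or below 0.5, which is consistent with the reported p-value below 0.001.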

Table 3. Model performance on the test set. AUCs and accuracies with their corresponding confidence intervals and p-values are reported. An asterisk (*) denotes statistically significant results.

| Model | AUC (CI) | p-value | Accuracy (CI) | p-value |
|---|---|---|---|---|
| pre-trained on ImageNet | 0.718 (0.668, 0.769) | < .001* | 0.663 (0.621, 0.708) | < .001* |
| pre-trained on sex classification | 0.745 (0.700, 0.788) | < .001* | 0.692 (0.650, 0.737) | < .001* |

Model interpretation

Figs 1 and 2 depict sample saliency maps for eight patients (two male and two female for each model), using the ImageNet and sex-trained models, respectively. The original fundus images, Guided Grad-CAM outputs, and the colour-coded saliency maps are shown in the left, middle, and right panels, respectively. The images were selected from the correctly classified examples in the test set. These sample maps show that the network focuses on distinct anatomical regions of the retina for male and female DR patients, with the highlights in male eyes consistently differing from those in female eyes. Specifically, the heatmaps for female eyes with DR predominantly highlight the macular region while largely ignoring the optic disc and peripheral regions. Conversely, heatmaps for male eyes with DR focus on the optic disc and peripheral vasculature along the arcades, with minimal emphasis on the central macular region. This pattern was consistently observed across the remaining correctly identified images in the test set (S1 and S2 Figs), raising the hypothesis that, in the presence of DR, the information needed for sex classification from fundus photographs is located in different regions of the retina in male and female eyes.

Discussion

Here, we show that sex can be successfully predicted from fundus images of patients with DR by fine-tuning pre-trained CNNs, even though psychophysical evidence indicates that ophthalmologists cannot detect this attribute above chance levels [27]. The performance of machine learning models, especially deep neural networks, largely depends on the size of the training dataset. Given this dependence, our study achieved strong performance in sex classification from fundus photographs, particularly considering the small size of our training set compared to previous studies that used much larger datasets [39, 40]. As shown in Table 4, the performance achieved in the present study is on par with, or even higher than, that of previous work that used small datasets [27, 38], despite using fewer training samples. This suggests that, in patients with DR, the retina may exhibit additional sex-specific features beyond those known to differ in healthy eyes, potentially contributing to the improved predictive power of CNNs trained on eyes with DR. Importantly, the primary aim of this study was not sex classification from fundus images, but rather leveraging the trained model to investigate potential sex differences in the manifestation of DR.

Table 4. Sex classification results of the previous studies and the current study.

In addition, post-hoc interpretation of the trained model shows that it focuses on different regions in male and female eyes. This is in stark contrast to saliency maps generated for healthy eyes [27], where the highlighted areas were similar for both male and female eyes: the optic disc, vasculature, and sometimes the macula. This focus on the same structures suggests that sex differences in healthy eyes may involve variations within the same retinal structures (e.g., the optic disc). However, in our study of DR-affected eyes, we observed distinct saliency map patterns for males and females: the model focused on the optic disc and the peripheral vasculature for males, avoiding the macular area, while for females, it concentrated on the macula with no focus on the optic disc or periphery. This was true for both of our models, including the one fine-tuned from a model already trained for sex classification on healthy eyes. Based on this, we hypothesize that sex differences in DR-affected eyes may be reflected in the model’s attention to different retinal regions, though the specific features driving this behaviour remain unclear.

DR, from its onset at mild non-proliferative stages through moderate and severe non-proliferative DR to PDR and macular edema, manifests with hallmark lesions such as hard exudates, cotton wool spots, microaneurysms, and hemorrhages. Based on the interpretation of our models trained on eyes with DR, it is possible that the manifestation of DR differs between males and females. For instance, saliency maps generated by our model suggest that the macular region may play a more significant role in DR classification for females, whereas peripheral regions, particularly around the optic disc and along the vascular arcades, are more relevant for males. These patterns raise the hypothesis that women might be at a higher risk for macular edema, while men might be at a higher risk for PDR. However, it is important to note that saliency maps only highlight the regions the model attends to when making its predictions; while they inform us about which areas are important for the classification task, they do not definitively indicate which features or lesions, such as neovascularization or macular edema, are being used by the model. Further research is needed to validate this hypothesis; if confirmed, it could have significant implications for the diagnosis, management, and treatment of DR.

Differences in the manifestation of DR between males and females have not been extensively investigated, and our findings are among the first to suggest that such differences may exist. Failing to account for these differences in diagnostic approaches may lead to higher false positive and false negative rates. For example, diagnostic algorithms or clinical assessments that consider all symptoms (i.e., the union of symptoms in both sexes) may result in increased false positives, while those that focus only on common symptoms could overlook sex-specific features, increasing false negatives. By identifying and incorporating sex-specific features, diagnostic methods could be refined to reduce both types of errors, enable more accurate decisions, and mitigate the risks of delayed diagnosis by facilitating earlier detection of DR onset.

The risks of delayed diagnosis of DR, the leading cause of blindness among persons of working age in the industrialized world, are substantial and include progression to severe vision-threatening stages and systemic complications [44]. Early diagnosis alerts patients to the systemic onset of microvascular damage, signaling that their diabetes has progressed to a critical stage [45]. This awareness often prompts patients to adopt stricter glycemic control, especially for those who have not yet prioritized diabetes management. DR is also strongly correlated with diabetic kidney disease (DKD), and an early diagnosis of DR encourages closer monitoring of kidney function, including routine blood work, which can help detect and address kidney complications before they progress. Improved diabetes management and regular monitoring can reduce the risk of future complications, including cardiovascular disease, neuropathy, and kidney failure [46].

From an ocular perspective, early diagnosis is particularly beneficial for patients in the mild and moderate stages of DR, as the disease often progresses silently without symptoms in its early stages, delaying intervention. Regular follow-ups and monitoring at these stages provide an opportunity for timely intervention, which can help prevent progression to advanced stages [47]. More importantly, it is particularly crucial to identify and manage high-risk non-proliferative DR patients due to their approximately likelihood of progressing to proliferative DR (PDR) within a year [48]. PDR, the advanced stage of DR, can cause blindness through retinal neovascularization-related complications such as vitreous hemorrhages, tractional retinal detachments, and neovascular glaucoma. At this advanced stage, even with treatment, the likelihood of meaningful visual recovery is significantly reduced [49].

Current treatment options for DR, including pan-retinal photocoagulation (PRP) and intravitreal anti-VEGF and glucocorticosteroid injections, have revolutionized outcomes for DR patients [47]. Severe vision loss in DR was once common, affecting approximately of patients with PDR in the pre-treatment era [50]. Today, these rates have decreased in patients receiving appropriate treatment. However, the effectiveness of these treatments is closely tied to the stage of DR at the time of intervention. The chance of preventing vision loss or achieving visual recovery diminishes significantly as the disease progresses to advanced stages [51]. Thus, early and more accurate diagnosis not only reduces diagnostic errors but also enables timely intervention, which can prevent the progression of DR and significantly improve patient outcomes.

Our analysis was based on the binary ‘patient-sex’ field in the dataset, which classifies individuals as either male or female. The observed differences may reflect biological aspects of sex, such as hormonal or anatomical variations, or may be influenced by social factors commonly associated with gender, including healthcare access and lifestyle behaviours. However, as our dataset lacks sociocultural and behavioural information, we are unable to disentangle these influences. Future studies incorporating more gender-specific data will be essential to better understand the distinct contributions of sex and gender to DR manifestation.

Another limitation of our study is the absence of healthy control images in the primary analysis. The EyePACS dataset used in this study includes only individuals with some degree of diabetic retinopathy, preventing direct comparison with healthy eyes. While previous work has investigated sex-based differences in healthy fundus images, we did not include a healthy cohort in our model training or evaluation. Incorporating a healthy control group from a different dataset would introduce data distribution shifts due to differences in imaging protocols, equipment, or population characteristics. Such shifts could confound the model’s behaviour and make it hard to isolate effects specifically related to DR. Future studies using harmonized datasets that include both healthy and DR-affected eyes from the same source would be valuable in disentangling general sex-based retinal differences from those specific to disease manifestation.

In addition to these directions, future work could benefit from external validation on independent datasets such as Messidor [52] or IDRiD [53] to assess the generalizability of our findings across different populations and imaging conditions. Moreover, although the EyePACS dataset includes individuals from diverse ethnic backgrounds, we did not explore whether sex-related differences in retinal presentation vary across ethnic groups. Understanding how sex and ethnicity may interact in the context of DR could offer valuable insights into disease manifestation and the model’s behaviour, and represents an important avenue for future research.

Finally, this study serves as a proof-of-concept, demonstrating the potential of deep learning-based analysis of fundus images to uncover novel retinal biomarkers, enabling more precise diagnosis and management of retinal diseases. Our findings suggest that DR may manifest differently in males and females, highlighting the need for sex-specific diagnostic approaches. While our model successfully identified sex-based retinal differences, further research is required to validate these findings in larger, more diverse datasets and to determine their clinical significance. Ultimately, leveraging AI-driven insights could help refine clinical decision-making, reduce diagnostic errors, and improve outcomes for patients with DR.

Supporting information

S1 Table. (PDF)

S2 Table. (PDF)

S3 Table. (PDF)

S1 Fig. Sixteen images were randomly chosen to demonstrate the consistency of the saliency map results. (TIF)

S2 Fig. Sixteen images were randomly chosen to demonstrate the consistency of the saliency map results. (TIF)

Data Availability

Data cannot be shared publicly because data are owned by a third party and we do not have permission to share the data. The dataset used in this study was obtained from EyePACS, LLC under a standard licensing agreement. We confirm that we did not receive any special access privileges that other researchers would not have. Researchers can request a license to access the data by contacting EyePACS at contact@eyepacs.org, or at 1-800-228-6144, or visiting their website https://www.eyepacs.com. More information on licensing and access conditions can be obtained by contacting EyePACS. The code utilized to generate the results presented in this manuscript is available in the following GitHub repository: https://github.com/Fundus-AI/DR_sex_difference.

Funding Statement

(OY) NSERC Discovery Grant (22R82411) (OY) Pacific Institute for the Mathematical Sciences (PIMS) CRG 33 (IO) NSERC Discovery Grant (RGPIN-2019-05554) (IO) NSERC Accelerator Supplement (RGPAS-2019-00026) (IO & OY) UBC DSI Grant (no number) (IO) UBC Faculty of Science STAIR grant (IO & OY) UBC DMCBH Kickstart grant (IO & OY) UBC Health VPR HiFi grant. The sponsors or funders did not play any role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Regitz-Zagrosek V. Sex and gender differences in health: science & society series on sex and science. EMBO Rep. 2012;13(7):596–603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Mehta LS, Beckie TM, DeVon HA, Grines CL, Krumholz HM, Johnson MN. Acute myocardial infarction in women: a scientific statement from the American Heart Association. Circulation. 2016;133(9):916–47. [DOI] [PubMed] [Google Scholar]

- 3.Willson T, Nelson SD, Newbold J, Nelson RE, LaFleur J. The clinical epidemiology of male osteoporosis: a review of the recent literature. Clin Epidemiol. 2015;:65–76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Albert PR. Why is depression more prevalent in women?. JPN. 2015;40(4):219–21. doi: 10.1503/jpn.150205 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Jaffer S, Foulds HJA, Parry M, Gonsalves CA, Pacheco C, Clavel M-A, et al. The Canadian Women’s Heart Health Alliance ATLAS on the epidemiology, diagnosis, and management of cardiovascular disease in women—Chapter 2: Scope of the problem. CJC Open. 2021;3(1):1–11. doi: 10.1016/j.cjco.2020.10.009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Patwardhan V, Gil GF, Arrieta A, Cagney J, DeGraw E, Herbert ME, et al. Differences across the lifespan between females and males in the top 20 causes of disease burden globally: a systematic analysis of the Global Burden of Disease Study 2021. The Lancet Public Health. 2024;9(5):e282–94. doi: 10.1016/s2468-2667(24)00053-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Bagenal J, Khanna R, Hawkes S. Not misogynistic but myopic: the new women’s health strategy in England. The Lancet. 2022;400(10363):1568–70. doi: 10.1016/s0140-6736(22)01486-6 [DOI] [PubMed] [Google Scholar]

- 8.Hirst JE, Witt A, Mullins E, Womersley K, Muchiri D, Norton R. Delivering the promise of improved health for women and girls in England. The Lancet. 2024;404(10447):11–4. [DOI] [PubMed] [Google Scholar]

- 9.Haupt S, Carcel C, Norton R. Neglecting sex and gender in research is a public-health risk. Nature. 2024;629(8012):527–30. [DOI] [PubMed] [Google Scholar]

- 10.Witt A, Politis M, Norton R, Womersley K. Integrating sex and gender into biomedical research requires policy and culture change. NPJ Women’s Health. 2024;2(1):23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Ivers NM, Jiang M, Alloo J, Singer A, Ngui D, Casey CG. Diabetes Canada 2018 clinical practice guidelines: key messages for family physicians caring for patients living with type 2 diabetes. Canadian Family Physician. 2019;65(1):14–24. [PMC free article] [PubMed] [Google Scholar]

- 12.Kautzky-Willer A, Leutner M, Harreiter J. Sex differences in type 2 diabetes. Diabetologia. 2023;66(6):986–1002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Rossi MC, Cristofaro MR, Gentile S, Lucisano G, Manicardi V, Mulas MF, et al. Sex disparities in the quality of diabetes care: biological and cultural factors may play a different role for different outcomes. Diabetes Care. 2013;36(10):3162–8. doi: 10.2337/dc13-0184 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Wannamethee S, Papacosta O, Lawlor D, Whincup P, Lowe G, Ebrahim S. Do women exhibit greater differences in established and novel risk factors between diabetes and non-diabetes than men? The British Regional Heart Study and British Women’s Heart Health Study. Diabetologia. 2012;55:80–7.

- 15.Westergaard D, Moseley P, Sørup FKH, Baldi P, Brunak S. Population-wide analysis of differences in disease progression patterns in men and women. Nat Commun. 2019;10(1):666.

- 16.Shepard BD. Sex differences in diabetes and kidney disease: mechanisms and consequences. Am J Physiol-Renal Physiol. 2019;317(2):F456–62. doi: 10.1152/ajprenal.00249.2019

- 17.Maric-Bilkan C. Sex differences in diabetic kidney disease. Mayo Clinic Proc. 2020;95(3):587–99. doi: 10.1016/j.mayocp.2019.08.026

- 18.Bjornstad P, Cherney DZ. Renal hyperfiltration in adolescents with type 2 diabetes: physiology, sex differences, and implications for diabetic kidney disease. Curr Diab Rep. 2018;18:1–7.

- 19.Kajiwara A, Kita A, Saruwatari J, Miyazaki H, Kawata Y, Morita K. Sex differences in the renal function decline of patients with type 2 diabetes. J Diab Res. 2016;2016(1):4626382. doi: 10.1155/2016/4626382

- 20.Swapnasrita S, Carlier A, Layton AT. Sex-specific computational models of kidney function in patients with diabetes. Front Physiol. 2022;13:741121.

- 21.Teo ZL, Tham Y-C, Yu M, Chee ML, Rim TH, Cheung N, et al. Global prevalence of diabetic retinopathy and projection of burden through 2045. Ophthalmology. 2021;128(11):1580–91. doi: 10.1016/j.ophtha.2021.04.027

- 22.Seghieri G, Policardo L, Anichini R, Franconi F, Campesi I, Cherchi S, et al. The effect of sex and gender on diabetic complications. Curr Diabetes Rev. 2017;13(2):148–60. doi: 10.2174/1573399812666160517115756

- 23.Yokoyama H, Uchigata Y, Otani T, Aoki K, Maruyama A, Maruyama H, et al. Development of proliferative retinopathy in Japanese patients with IDDM: Tokyo Women’s Medical College Epidemiologic Study. Diabetes Res Clin Pract. 1994;24(2):113–9. doi: 10.1016/0168-8227(94)90028-0

- 24.Kajiwara A, Miyagawa H, Saruwatari J, Kita A, Sakata M, Kawata Y, et al. Gender differences in the incidence and progression of diabetic retinopathy among Japanese patients with type 2 diabetes mellitus: a clinic-based retrospective longitudinal study. Diabetes Res Clin Pract. 2014;103(3):e7–10. doi: 10.1016/j.diabres.2013.12.043

- 25.Jervell J, Moe N, Skjaeraasen J, Blystad W, Egge K. Diabetes mellitus, pregnancy—management, results at Rikshospitalet and Oslo 1970–1977. Diabetologia. 1979;16:151–5.

- 26.Moloney JBM, Drury MI. The effect of pregnancy on the natural course of diabetic retinopathy. Am J Ophthalmol. 1982;93(6):745–56. doi: 10.1016/0002-9394(82)90471-8

- 27.Delavari P, Ozturan G, Yuan L, Yilmaz Ö, Oruc I. Artificial intelligence, explainability, and the scientific method: a proof-of-concept study on novel retinal biomarker discovery. PNAS Nexus. 2023;2(9). doi: 10.1093/pnasnexus/pgad290

- 28.Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–10.

- 29.Ting DSW, Cheung CYL, Lim G, Tan GSW, Quang ND, Gan A. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318(22):2211–23.

- 30.Gharaibeh N, Al-Hazaimeh OM, Al-Naami B, Nahar KM. An effective image processing method for detection of diabetic retinopathy diseases from retinal fundus images. Int J Signal Imaging Syst Eng. 2018;11(4):206–16.

- 31.Al-Hazaimeh OM, Abu-Ein AA, Tahat NM, Al-Smadi MA, Al-Nawashi MM. Combining artificial intelligence and image processing for diagnosing diabetic retinopathy in retinal fundus images. Int J Online Biomed Eng. 2022;18(13).

- 32.Grzybowski A, Jin K, Zhou J, Pan X, Wang M, Ye J. Retina fundus photograph-based artificial intelligence algorithms in medicine: a systematic review. Ophthalmol Therapy. 2024;13(8):2125–49.

- 33.Coan LJ, Williams BM, Krishna Adithya V, Upadhyaya S, Alkafri A, Czanner S, et al. Automatic detection of glaucoma via fundus imaging and artificial intelligence: a review. Surv Ophthalmol. 2023;68(1):17–41. doi: 10.1016/j.survophthal.2022.08.005

- 34.Singh P, Yang L, Nayar J, Matias Y, Corrado G, Webster D. Real-world performance of a deep learning diabetic retinopathy algorithm. Investigat Ophthalmol Visual Sci. 2024;65(7):2323.

- 35.Simonyan K, Vedaldi A, Zisserman A. Deep inside convolutional networks: visualising image classification models and saliency maps. arXiv preprint 2013. arXiv:1312.6034

- 36.Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part I. Springer; 2014. p. 818–33.

- 37.Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision; 2017. p. 618–26.

- 38.Berk A, Ozturan G, Delavari P, Maberley D, Yılmaz Ö, Oruc I. Learning from small data: classifying sex from retinal images via deep learning. PLoS One. 2023;18(8):e0289211.

- 39.Korot E, Pontikos N, Liu X, Wagner SK, Faes L, Huemer J. Predicting sex from retinal fundus photographs using automated deep learning. Sci Rep. 2021;11(1):1–8.

- 40.Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. 2018;2(3):158–64.

- 41.Cuadros J, Bresnick G. EyePACS: an adaptable telemedicine system for diabetic retinopathy screening. J Diabetes Sci Technol. 2009;3(3):509–16.

- 42.Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint 2014. arXiv:1409.1556

- 43.Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L. ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition. IEEE; 2009. p. 248–55.

- 44.Kollias AN, Ulbig MW. Diabetic retinopathy: early diagnosis and effective treatment. Deutsches Ärzteblatt Int. 2010;107(5):75.

- 45.Cheung N, Mitchell P, Wong TY. Diabetic retinopathy. Lancet. 2010;376:124–36.

- 46.Saini DC, Kochar A, Poonia R. Clinical correlation of diabetic retinopathy with nephropathy and neuropathy. Indian J Ophthalmol. 2021;69(11):3364–8. doi: 10.4103/ijo.ijo_1237_21

- 47.Chong DD, Das N, Singh RP. Diabetic retinopathy: screening, prevention, and treatment. Cleve Clin J Med. 2024;91(8):503–10. doi: 10.3949/ccjm.91a.24028

- 48.Early Treatment Diabetic Retinopathy Study Research Group. Grading diabetic retinopathy from stereoscopic color fundus photographs—an extension of the modified Airlie House classification: ETDRS report number 10. Ophthalmology. 1991;98(5):786–806.

- 49.Wykoff CC, Khurana RN, Nguyen QD, Kelly SP, Lum F, Hall R. Risk of blindness among patients with diabetes and newly diagnosed diabetic retinopathy. Diabetes Care. 2021;44(3):748–56.

- 50.Diabetic Retinopathy Study Research Group. Photocoagulation treatment of proliferative diabetic retinopathy: clinical application of Diabetic Retinopathy Study (DRS) findings, DRS report number 8. Ophthalmology. 1981;88(7):583–600.

- 51.Zhao Y, Singh RP. The role of anti-vascular endothelial growth factor (anti-VEGF) in the management of proliferative diabetic retinopathy. Drugs in Context. 2018;7.

- 52.Decencière E, Zhang X, Cazuguel G, Lay B, Cochener B, Trone C. Feedback on a publicly distributed image database: the Messidor database. Image Anal Stereol. 2014:231–4.

- 53.Porwal P, Pachade S, Kamble R, Kokare M, Deshmukh G, Sahasrabuddhe V, et al. Indian Diabetic Retinopathy Image Dataset (IDRiD): a database for diabetic retinopathy screening research. Data. 2018;3(3):25. doi: 10.3390/data3030025