Abstract

This study addressed the critical challenge of filling gaps in PM2.5 time series data from Pavlodar, Kazakhstan. We developed and evaluated a comprehensive hierarchy of 46 gap-filling methods across five representative gap lengths (5–72 hours), introducing dynamic models capable of adapting to gaps of variable duration. Tree-based models with bidirectional sequence-to-sequence architectures delivered superior performance, with XGB Seq2Seq achieving a mean absolute error of 5.231 ± 0.292 μg/m3 for 12-hour gaps, representing a 63% improvement over basic statistical methods. The advantage of multivariate models incorporating meteorological variables increased substantially with gap length, from modest improvements of 2–3% for 5-hour gaps to significant enhancements of 16–18% for 48–72 hour gaps. Dynamic multivariate models demonstrated remarkable operational flexibility by successfully processing real-world gaps ranging from 1 to 191 hours despite being trained on maximum lengths of 72 hours. Analysis of the reconstructed complete time series revealed that 61.2% of monitored hours exceeded the WHO daily threshold of 15 μg/m3, with strong seasonal patterns and pronounced diurnal cycles. This research advances environmental monitoring capabilities by providing robust methodological tools for addressing data continuity challenges that currently limit the utility of PM2.5 measurements for public health applications and scientific analysis.

Introduction

Air quality challenges and PM2.5 monitoring

Fine particulate matter (PM2.5) represents one of the most significant air pollutants affecting human health and environmental quality globally. With aerodynamic diameters smaller than 2.5 μm, these particles can penetrate deep into the respiratory system, cross the blood-air barrier, and cause widespread systemic effects [1,2]. Continuous monitoring of PM2.5 concentrations has become essential for environmental management, epidemiological research, and public health protection [3]. Such monitoring provides critical data for understanding temporal patterns, identifying pollution sources, evaluating regulatory compliance, and developing effective mitigation strategies [4].

The health implications of PM2.5 exposure are substantial and well-documented. Short-term exposure is associated with increased respiratory infections, exacerbation of asthma and chronic obstructive pulmonary disease, and cardiovascular events including stroke and heart attacks [5]. Long-term exposure contributes to reduced lung function development, chronic cardiovascular diseases, increased cancer risk, and premature mortality [6]. Recent studies have also linked PM2.5 exposure to adverse pregnancy outcomes, neurodevelopmental disorders, and accelerated cognitive decline [7,8]. The World Health Organization has progressively tightened its air quality guidelines for PM2.5, most recently to a daily mean concentration of 15 μg/m3 and annual mean of 5 μg/m3, reflecting mounting evidence that even low levels of exposure can harm health [9].

Beyond health impacts, PM2.5 contributes significantly to environmental degradation. These particles can transport toxic compounds over long distances, deposit in sensitive ecosystems, reduce visibility, and influence regional climate patterns through their effects on radiative forcing and cloud formation [10]. In urban areas, PM2.5 concentrations are influenced by a complex interplay of emission sources, meteorological conditions, and local topography, creating substantial spatial and temporal variability that requires dense monitoring networks [11].

In Central Asia, including Kazakhstan where our study is centered, air quality challenges are compounded by factors such as rapid industrialization, coal-dependent energy systems, aging infrastructure, continental climate extremes, and transitioning regulatory frameworks [12–14]. These conditions create both unique air pollution patterns and specific challenges for maintaining continuous monitoring operations [15].

Missing data challenges in environmental monitoring

Despite the crucial importance of continuous air quality monitoring, measurement systems frequently encounter operational challenges resulting in data gaps. These discontinuities arise from various factors including sensor malfunctions, power outages, routine maintenance, calibration issues, and extreme weather events [16,17]. Studies have reported data completeness rates ranging from 60% to 85% in typical monitoring networks, with some stations experiencing significantly worse coverage [18,19]. Such gaps undermine data analysis efforts, potentially leading to biased estimates of pollution levels, mischaracterization of temporal trends, and reduced effectiveness of public health warning systems [20].

Data collection disruptions can be broadly categorized as technical, operational, and environmental factors [21]. Technical causes include sensor malfunctions (component failures, measurement drift, calibration errors) [22,23], while operational interruptions stem from routine maintenance procedures like filter replacements and software updates [24]. Infrastructure challenges, particularly power outages and Internet connectivity issues in remote locations or regions with unstable grids, create additional discontinuities [25,26].

Environmental conditions present further complications, especially in regions with continental climates like Central Asia, where temperature extremes (−40°C to +40°C) can exceed equipment specifications and cause systematic seasonal gaps [27–30]. Compounding these issues are anthropogenic factors such as vandalism and resource limitations that prevent prompt repairs, often extending short gaps into prolonged missing periods [31,32].

These data gaps significantly impact the reliability and utility of air quality information across multiple applications. From an analytical perspective, missing values compromise statistical analysis by reducing sample sizes, introducing potential bias, and limiting the applicability of standard time series methods that assume continuous data [33]. When gaps coincide with important pollution events, such as severe episodes or seasonal transitions, critical information may be lost, leading to underestimation of pollution severity and population exposure [25]. Temporal pattern identification becomes particularly challenging when gaps occur non-randomly, potentially masking diurnal, weekly, or seasonal variations that are essential for understanding pollution dynamics [20].

For regulatory compliance and policy development, incomplete datasets may yield unreliable annual statistics, affecting attainment status determinations and policy effectiveness assessments [34,35]. Public health applications suffer similarly, as gaps in real-time monitoring can delay or prevent timely health advisories during pollution episodes when protection is most needed [36]. Research applications face even broader impacts, with missing data limiting the development of accurate forecasting models, exposure assessments, and source apportionment studies [37].

The importance of developing effective gap-filling methodologies extends beyond simply achieving dataset completeness. The quality of imputed values significantly affects downstream analyses and decisions. Methods that merely insert statistically convenient values (such as means or medians) without accounting for temporal patterns may satisfy basic continuity requirements but can introduce artificial patterns or suppress real variability [38]. Conversely, sophisticated approaches that leverage underlying data structures, correlations with meteorological factors, and known temporal patterns have the potential to reconstruct missing segments with high fidelity to actual conditions [39].

The problem of missing data is particularly acute in developing regions and transition economies where monitoring infrastructure may be less robust, maintenance resources more limited, and operational challenges more prevalent [40]. Addressing these data gaps is essential for ensuring the continuity and reliability of air quality information that underpins environmental policy, public health interventions, and scientific research. As monitoring networks expand globally and generate increasingly high-resolution temporal data, effective methodologies for handling missing values have become a critical component of environmental data management systems [41].

As environmental monitoring networks generate increasingly high-resolution data and support more complex applications, the development of advanced gap-filling approaches has become a critical research area. Effective imputation methods must balance competing demands: preserving temporal patterns while avoiding introducing artificial structures, maintaining statistical properties of the original data, accommodating gaps of varying lengths, functioning reliably with limited computational resources, and adapting to diverse pollutants and monitoring contexts. Meeting these challenges requires innovative methodological approaches that combine statistical rigor with practical deployability in operational monitoring systems.

Existing approaches for gap-filling in PM2.5 time series

Researchers have employed a wide spectrum of methods to fill gaps in PM2.5 time series, from simple statistical imputations to sophisticated machine learning models.

Traditional statistical methods offer straightforward solutions. For example, replacing missing values with summary statistics (mean or median) or carrying the last observed value forward are common practices [42]. Simple interpolation (linear or spline) across a gap is also frequently used. These techniques are easy to implement but often inadequate for complex environmental data: they tend to smooth out or miss important variability. Notably, basic interpolation cannot recover sharp pollution peaks or low troughs, leading to biased daily averages when data are missing [25]. Such limitations motivate more advanced approaches.

Time-series modeling and classical machine learning provide more dynamic gap-filling strategies. Autoregressive models like ARIMA (Auto-Regressive Integrated Moving Average) leverage temporal correlations in the PM2.5 series to predict missing values. ARIMA and its seasonal extensions (e.g., SARIMA/SARIMAX) have been widely applied in air quality time series analysis and serve as strong benchmarks for gap-filling [43]. In some evaluations, ARIMA-based methods achieved comparable or better accuracy than modern nonlinear models for short gaps [44]. Their strength lies in statistical rigor and the ability to model seasonality; however, they require assumptions of stationarity and can propagate errors when used to impute long consecutive gaps (by iteratively forecasting each missing point). To incorporate additional predictors and nonlinear relationships, researchers have turned to ensemble learning methods. Tree-based models like Random Forests and gradient boosting (e.g., XGBoost) have been used to predict missing PM2.5 readings from correlated variables and historical data [44]. For instance, Xiao et al. [45] developed an ensemble model that combined decision-tree learners (including Random Forest and XGBoost) to reconstruct historical PM2.5 concentrations [44]. These machine learning models can capture complex, non-linear interactions and often handle multivariate inputs (such as meteorological factors or neighboring station data) seamlessly. Studies report that such models provide more accurate imputations than simple univariate interpolation, especially when pollutant levels depend on external factors (weather, traffic, etc.) [42]. A key advantage of tree ensembles is their robustness and relatively low risk of overfitting for moderate dataset sizes, but they do not inherently account for time dependencies unless temporal features (e.g., time lags or timestamps) are included. Consequently, their performance can degrade for long gaps where the model has to extrapolate far beyond the last known observation.

Deep learning approaches have gained traction for gap-filling in recent years, owing to their ability to learn sequential patterns. Recurrent neural networks (RNNs), particularly Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, are well-suited to time series imputation because they maintain an internal “memory” of past values [46]. LSTM-based models have been applied to PM2.5 datasets to predict missing intervals by learning from historical sequences [47]. Researchers have found LSTM can outperform simpler methods like mean fill or moving averages, achieving lower error when sufficient training data are available [48]. GRU networks, which are a streamlined variant of LSTMs, have also shown promise; in one study a GRU model achieved a mean absolute percentage error of ~11%, outperforming conventional LSTM and even other machine learning methods for imputation of hourly PM2.5 data [44]. Deep learning models excel at capturing complex temporal dynamics and can naturally handle multivariate inputs. Moreover, advanced architectures (e.g., sequence-to-sequence models, denoising autoencoders, and attention-based networks) have been proposed to directly reconstruct long missing segments by learning from the context before and after the gap [49]. These methods often yield improvements in accuracy, for example by better preserving diurnal patterns or seasonal cycles that simpler models might miss. However, neural networks require large datasets for training and careful tuning; otherwise they may underperform simpler models.

The most commonly adopted neural network architectures for time series applications include LSTM networks, GRU networks, Convolutional Neural Networks (CNN), and Echo State Networks (ESN) [50]. LSTM represents a specialized type of recurrent neural network designed to capture long-term dependencies in sequential data through gating mechanisms that selectively retain or discard information, effectively addressing vanishing and exploding gradient problems that limit traditional RNNs [51]. GRU networks offer a streamlined alternative to LSTM with fewer parameters while maintaining similar capabilities for temporal modeling. CNN architectures apply convolution operations to identify local patterns and temporal structures, while ESNs leverage reservoir computing principles for efficient training of recurrent architectures.

Indeed, a recent comparative study showed that a basic imputer like k-nearest neighbors or a well-tuned SARIMAX model can outperform vanilla deep networks for extended gaps, highlighting that deep learning is not guaranteed to excel without optimization [52]. Another drawback is the computational cost: training an LSTM/GRU model can be resource-intensive [25], which may be impractical for real-time gap-filling applications.

Limitations of current methods. Despite the variety of available techniques, gap-filling in PM2.5 time series remains challenging, especially for long-duration gaps. Many traditional methods perform adequately when the missing span is short (e.g., a few timestamps) and surrounded by known data, but their accuracy degrades for longer gaps [25,42]. Autoregressive models like ARIMA, when used to fill consecutive missing hours or days, suffer from cumulative forecast uncertainty: errors compound with each step, often leading to divergence from true values over long gaps. Machine learning models, while more flexible, face a data availability problem: for a prolonged gap, there may be limited recent information to inform the model. Unless supplemented by additional context (nearby station readings, meteorology, or seasonal profiles), even advanced models effectively operate semi-blind over long voids in the data. Researchers have noted that most gap-filling techniques partially fail when confronted with prominent (extended) gaps or anomalous events [53]. For instance, methods that ignore the local daily cycle can badly miss peak pollution hours when filling a multi-day gap. Moreover, many studies historically focused on short gaps, and consecutive missing periods spanning 12–24+ hours received comparatively little attention until recently [44]. As a result, existing approaches often struggle to reliably impute long stretches of missing PM2.5 data. In summary, while traditional statistical imputation, classical time-series models (ARIMA), tree-based regressors, and deep learning techniques each have demonstrated successes in gap-filling, they all exhibit important limitations. Simpler methods lack fidelity to complex pollution dynamics, and more advanced models can be data-hungry or prone to error propagation. These gaps in capability are especially pronounced for long missing intervals, underscoring the need for continued research into more robust gap-filling methodologies.

Despite significant advances in gap-filling methodologies, the practical implementation of these approaches faces substantial limitations in air quality monitoring systems. For continental climate conditions characteristic of cities like Pavlodar (Kazakhstan), the challenge of selecting an optimal model remains critical, particularly when dealing with extended gaps (≥24 hours) that constitute over 15% of our dataset. These constraints motivate the development of more adaptive and robust methodological frameworks specifically tailored to local environmental patterns and operational constraints.

Research aim and objectives.

The primary aim of this study is to develop and rigorously evaluate a robust, length-adaptive gap-filling framework for hourly PM2.5 time series that maintains high accuracy across diverse missing data patterns while ensuring operational feasibility in real-world monitoring systems. This research specifically addresses the challenges of data continuity in continental climate cities with frequent extended outages and limited computational resources.

To achieve this aim, we pursue the following specific objectives:

Establish a comprehensive benchmark for PM2.5 gap-filling by developing a unified testing framework that systematically evaluates 46 methods (8 statistical, 28 univariate, 8 multivariate, 2 dynamic) across five representative gap lengths (5, 12, 24, 48, and 72 hours), identifying the strengths and limitations of each approach under standardized conditions.

Quantify the relative contributions of methodological factors (model architecture, directionality, forecasting strategy) and environmental variables (meteorological parameters, temporal indicators) to imputation accuracy across different gap scenarios, with particular attention to the performance-stability trade-off as gap length increases.

Design and implement dynamic univariate and multivariate sequence-to-sequence models capable of automatically adapting to gaps of varying lengths with a single set of model weights, optimizing the balance between prediction accuracy and computational efficiency.

Validate the proposed models using both synthetic test cases and real-world gaps encountered in operational monitoring, assessing their effectiveness for reconstructing complex pollution patterns during extended data outages up to 191 hours while maintaining temporal pattern fidelity.

Assess the current state of air quality in Pavlodar using the reconstructed complete time series, analyzing temporal patterns, evaluating compliance with international health standards, and identifying critical pollution episodes that may have been obscured by data gaps in the original measurements.

Through these objectives, the research aims to advance environmental monitoring capabilities by providing robust methodological tools for addressing data continuity challenges that currently limit the utility of PM2.5 measurements for public health applications, regulatory compliance, and scientific analysis.

Scientific novelty of the research.

This study introduces several significant methodological advances in environmental time series gap-filling. First, we develop a comprehensive hierarchical framework systematically evaluating 46 imputation methods across varying gap lengths. Unlike previous studies focusing on limited approaches or specific gap durations, our structured comparison reveals performance differences and critical trade-offs between accuracy, computational efficiency, and model stability. This establishes standardized evaluation protocols for objective method selection based on monitoring requirements.

Second, we introduce novel dynamic modeling architectures addressing fundamental limitations of conventional fixed-context approaches. While previous gap-filling models require predetermined context windows and separate implementations for different gap lengths, our dynamic models automatically adjust context processing based on gap characteristics. This innovation allows a single model to effectively handle gaps ranging from one hour to over one week without reconfiguration or retraining, a significant advance over previous work in which performance deteriorated substantially when models were applied to gap durations other than those they were built for.

Third, we provide rigorous empirical comparison between univariate and multivariate modeling approaches across diverse gap scenarios. Our research systematically quantifies the added value of incorporating meteorological variables for different gap lengths and model architectures. This reveals that multivariate modeling benefits increase substantially with gap length, from minimal improvements for short gaps (2–3%) to significant enhancements for extended intervals (16–18%). These findings provide practical guidance for selecting appropriate model complexity based on gap characteristics and available contextual data.

Our contribution emphasizes operational adaptability for real-world monitoring systems rather than algorithmic novelty, addressing the practical challenge of maintaining a single model capable of handling diverse gap scenarios encountered in environmental monitoring networks.

These innovations extend current knowledge regarding environmental time series reconstruction and provide practical solutions for challenging operational conditions in air quality monitoring networks.

Methods

Data description and preprocessing

Data sources and study area.

Measurements were performed automatically using a stationary monitoring unit equipped with an AQS008A air quality sensor (Sichuan Weinasa Technology Co., Ltd., The International Creative Federation Cross-Border E-Commerce Industry Park, Mianyang, China) [54]. The AQS008A sensor uses laser-based detection principles for PM2.5 concentration measurement. Data were collected from 23 May 2024 to 19 January 2025 at a 1-minute sampling interval. The measured PM2.5 concentrations are provided as an SQLite database (freely available at https://doi.org/10.5281/zenodo.15305392).

The station operates within a research project framework and is installed on a building belonging to Margulan University in Pavlodar, Kazakhstan (coordinates: 52°17′56.6″N, 76°57′18.3″E). This location is partially shielded from direct traffic exposure by the surrounding academic buildings; however, several potential emission sources are present within a short distance: railway tracks are located approximately 200 m to the north, warehouses and garages about 100 m to the east, and one of the city’s major roads approximately 200 m to the south.

Meteorological data.

In addition to PM2.5, air temperature, and relative humidity readings collected by the AQS008A sensor, a complementary set of meteorological variables (T, P0, P, U, DD, Ff, VV) was downloaded from the RP5 weather archive for Pavlodar (airport, METAR) [55]. These variables represent, respectively, temperature (T, °C), sea-level pressure (P0, mmHg), station-level pressure (P, mmHg), relative humidity (U, %), wind direction (DD, compass points), wind speed (Ff, m/s), and horizontal visibility range (VV, km). Timestamps from the downloaded dataset were synchronized with PM2.5 sensor data to create a unified time series. Meteorological data are available in S1 File.

Initial data filtering and quality checks.

Outlier Detection: To address anomalously high or low PM2.5 measurements, two complementary checks are performed. First, suspiciously large spikes are flagged if they exceed a threshold (for instance, 200 µg/m3) and are more than triple the previous reading. Second, the interquartile range (IQR) method locates points lying outside [Q1 − 1.5 × IQR, Q3 + 1.5 × IQR] (Fig 1). Rather than discarding these outlier points, the script converts them into missing values (NaN), thus preserving the possibility of gap-filling them in subsequent steps.

Fig 1. PM2.5 measurements showing detected outliers (red dots) based on threshold and IQR methods.
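The two checks described above can be sketched as follows. This is a minimal illustration rather than the script used in the study: the function name `flag_outliers` and its parameter names are hypothetical, the 200 µg/m3 threshold and the factor of three are the example values quoted in the text, and the IQR fences are assumed to be computed over the whole series.

```python
import numpy as np
import pandas as pd


def flag_outliers(pm: pd.Series, spike_threshold: float = 200.0,
                  spike_ratio: float = 3.0, iqr_k: float = 1.5) -> pd.Series:
    """Return a copy of `pm` in which suspected outliers are set to NaN."""
    prev = pm.shift(1)
    # Check 1: value above the threshold AND more than `spike_ratio` times
    # the previous reading (comparisons against a missing neighbour are False).
    spike = (pm > spike_threshold) & (pm > spike_ratio * prev)
    # Check 2: value outside [Q1 - k*IQR, Q3 + k*IQR]; fences are computed
    # over the whole series here, which is an assumption of this sketch.
    q1, q3 = pm.quantile(0.25), pm.quantile(0.75)
    iqr = q3 - q1
    outside = (pm < q1 - iqr_k * iqr) | (pm > q3 + iqr_k * iqr)
    # Outliers become NaN instead of being dropped, so they can be gap-filled.
    return pm.mask(spike | outside)
```

Because flagged points are masked rather than removed, the series keeps its original length and index, which is what allows the later alignment and gap-filling steps to treat them like any other missing value.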

Time Range Formation: Once outliers are marked, the dataset is aligned to a uniform minute-by-minute timeline. Any timestamps absent in the original data become rows filled with NaN, ensuring that the entire period of interest is fully represented and simplifying future interpolation or time-based analyses.

Delayed Measurement Correction: Some readings arrive slightly late and might cause spurious gaps of just a few seconds. If the timestamp delay is under a predefined threshold (for instance, 15 s), the reading is shifted back to the intended minute. This prevents artificially introduced breaks, yielding a cleaner series with fewer false gaps.

Short Gap Interpolation: After outlier marking and time alignment, consecutive NaN segments are checked. If a gap is shorter than a chosen duration (e.g., five consecutive minutes), it is filled by linear interpolation. This approach retains most of the data continuity while ensuring that only genuinely larger gaps remain unfilled for further advanced methods.
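A minimal sketch of the alignment, delay-correction, and short-gap steps is given below. The function names are hypothetical, the 15 s and five-minute limits are the example values from the text, and two details are assumptions of this sketch: readings delayed by more than the limit are dropped, and when two readings land on the same minute the first one is kept.

```python
import numpy as np
import pandas as pd


def align_to_minute_grid(pm: pd.Series, max_delay_s: float = 15.0) -> pd.Series:
    """Snap slightly delayed readings to their minute, then reindex to a full 1-minute grid."""
    minute = pm.index.floor("min")
    delay_s = (pm.index - minute).total_seconds()
    keep = np.asarray(delay_s < max_delay_s)  # assumption: later readings are discarded
    snapped = pd.Series(pm.to_numpy()[keep], index=minute[keep])
    snapped = snapped[~snapped.index.duplicated(keep="first")]
    full_grid = pd.date_range(minute.min(), minute.max(), freq="min")
    return snapped.reindex(full_grid)  # absent minutes become NaN rows


def fill_short_gaps(series: pd.Series, max_gap: int = 5) -> pd.Series:
    """Linearly interpolate only NaN runs shorter than `max_gap` samples."""
    is_nan = series.isna()
    run_id = (is_nan != is_nan.shift()).cumsum()          # label consecutive runs
    run_len = is_nan.groupby(run_id).transform("sum")     # NaN count per run
    short = is_nan & (run_len < max_gap)
    interpolated = series.interpolate(method="linear", limit_area="inside")
    return series.where(~short, interpolated)             # longer gaps stay NaN
```

The run-length mask is used instead of the `limit` argument of `interpolate` because `limit` would also fill the first few points of a long gap, whereas the procedure described here leaves long gaps entirely untouched.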

Hourly Aggregation and Secondary Outlier Filtering: Because many analyses use hourly data, minute-level observations are averaged to hourly blocks, provided each hour has enough valid measurements (for instance, at least 40 non-NaN minutes). Any hour lacking sufficient data remains NaN, indicating insufficient coverage for a reliable hourly mean. After aggregation, an additional outlier detection is applied on the hourly data. This secondary filtering flags hours with values exceeding 270 µg/m3 or values over 200 µg/m3 that are more than three times higher than the previous hourly reading. The threshold value of 270 µg/m3 was established based on statistical analysis of the value distribution and corresponds to the 99.61st percentile in the dataset, meaning that only 0.39% of all observations exceed this threshold (Fig 2). This approach effectively identifies abnormally high values while preserving the natural variability of the data. The additional criterion for sharp spikes helps detect short-term anomalies that may be caused by measurement interference or brief episodes of severe pollution. Outliers detected at this aggregated level are similarly converted to NaN to preserve the potential for gap-filling, so that the hourly time series passed to the gap-filling stage is free of implausible extremes.

Fig 2. Secondary outlier detection in hourly aggregated PM2.5 measurements, highlighting abnormal spikes (red points) identified by exceeding threshold criteria (>270 µg/m3) or showing disproportionate increases (>200 µg/m3 and >3 × previous value).
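The hourly aggregation rule and the secondary filter can be illustrated with the short sketch below. The function names are hypothetical; the limits (40 valid minutes, 270 µg/m3, 200 µg/m3, factor of three) are those stated in the text, and the input is assumed to be a minute-level series with a `DatetimeIndex`.

```python
import numpy as np
import pandas as pd


def to_hourly(minute_pm: pd.Series, min_valid: int = 40) -> pd.Series:
    """Hourly mean, kept only where the hour has at least `min_valid` non-NaN minutes."""
    grouped = minute_pm.resample("h")
    hourly_mean = grouped.mean()
    valid_minutes = grouped.count()  # count() ignores NaN
    return hourly_mean.where(valid_minutes >= min_valid)


def flag_hourly_outliers(hourly: pd.Series, hard_limit: float = 270.0,
                         spike_threshold: float = 200.0,
                         spike_ratio: float = 3.0) -> pd.Series:
    """Set hours above the hard limit, or sharp spikes above the threshold, to NaN."""
    prev = hourly.shift(1)
    too_high = hourly > hard_limit
    spike = (hourly > spike_threshold) & (hourly > spike_ratio * prev)
    return hourly.mask(too_high | spike)
```

For example, an hour with 45 valid minutes keeps its mean, while an hour with only 30 valid minutes is returned as NaN and is treated as a gap downstream.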

Final Checks: As a last step, graphical overviews (heatmaps and missing-data plots) verify that outliers were properly flagged, time gaps handled consistently, and minute-to-hour resampling performed accurately. Fig 3 presents a comprehensive visualization of data completeness across the entire study period, revealing significant variability in data availability between months (ranging from 23.7% to 81.4% completeness) and highlighting the temporal patterns of gaps that will require treatment. These visual checks confirm the dataset is now ready for advanced gap-filling or modeling.

Fig 3. Hourly PM2.5 data completeness visualization after quality checks and outlier removal.

The heatmaps display data availability by month from May 2024 to January 2025, with each cell representing an hour of the day (x-axis) for each day of the month (y-axis). Legend: Green = Data Available, Red = Missing Values, White = Outside Measurement Period. Monthly completeness percentages are shown in the top-right corner of each panel.

Final dataset specification.

After preprocessing, the final hourly dataset spans from May 23, 2024 to January 19, 2025, comprising 5,791 hourly timestamps (242 days). Overall data completeness for PM2.5 measurements is 73.3%, with 1,546 missing values representing 26.7% of the total time series. Analysis of the gap distribution reveals that most gaps are relatively short: 84.9% of gaps are short (≤12 hours), 12.6% are medium-length (13–48 hours), and only 2.5% are extended periods (>48 hours), with the longest continuous gap spanning 191 hours. In total, 159 distinct gaps were identified across 25 different gap lengths, with the most frequent being 3-hour gaps (35 occurrences) (Fig 4). These gaps are not uniformly distributed throughout the monitoring period, as demonstrated in Fig 3, with August exhibiting the lowest completeness (55.6%) and June showing the highest (85.7%). This pattern of missing data presents a significant challenge for environmental analysis and necessitates advanced imputation techniques to produce a continuous time series suitable for pollution trend analysis and forecasting applications.

Fig 4. Distribution of PM2.5 data gaps by length (in hours).
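Gap statistics of this kind can be derived from the hourly series by measuring runs of consecutive NaN values. The sketch below is illustrative only: the function names are hypothetical, and the class boundaries (≤12, 13–48, >48 hours) are the ones used in the text.

```python
import numpy as np
import pandas as pd


def gap_lengths(series: pd.Series) -> pd.Series:
    """Length of every run of consecutive NaN values, in order of occurrence."""
    is_nan = series.isna()
    run_id = (is_nan != is_nan.shift()).cumsum()  # label consecutive runs
    return is_nan[is_nan].groupby(run_id[is_nan]).size().reset_index(drop=True)


def gap_class_shares(lengths: pd.Series) -> pd.Series:
    """Share of gaps that are short (<=12 h), medium (13-48 h), or extended (>48 h)."""
    classes = pd.cut(lengths, bins=[0, 12, 48, np.inf],
                     labels=["short", "medium", "extended"])
    return classes.value_counts(normalize=True).sort_index()
```

Calling `gap_lengths(hourly).value_counts()` on an hourly series would give the number of occurrences of each gap length, which is the quantity plotted in a histogram such as Fig 4.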

Experimental framework for gap-filling evaluation

Synthetic gap creation and testing infrastructure.

To effectively evaluate and compare various gap-filling methodologies for PM2.5 data, we developed a comprehensive synthetic gap testing framework. This approach provides several key advantages over relying solely on naturally occurring data gaps.

Real-world environmental monitoring data often contains gaps with irregular distribution, varying lengths, and unknown ground truth values. These characteristics make it difficult to reliably evaluate the performance of different imputation methods. By introducing artificial gaps into complete data segments, we create a controlled testing environment with the following benefits:

By artificially removing values that actually exist in the dataset, we retain access to the true values for accurate performance assessment.

We can systematically vary gap lengths and patterns to evaluate model performance under different scenarios.

Multiple randomized runs with different gap placements allow for more reliable performance metrics and confidence intervals.

All methods are evaluated on identical gap patterns, ensuring comparative results reflect actual method differences rather than data peculiarities.

The synthetic gap generation process implemented in our framework follows a systematic approach. For each experiment, we:

Create a copy of the original dataset to preserve the source data.

Calculate the number of gaps to introduce based on a specified missing fraction (typically 5% of the total dataset).

Randomly select starting indices for gaps, ensuring they are sufficiently distant from dataset boundaries.

Replace original values with NaN for each designated gap.

Return the modified dataset with artificial gaps and the indices of these gaps for later evaluation.
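The five steps above can be sketched as a single function. This is a hedged illustration, not the framework's actual code: the name `create_synthetic_gaps` and its parameters are hypothetical, the number of gaps is assumed to be the missing fraction times the series length divided by the gap length, and the sketch additionally assumes that gaps are placed only on fully observed, non-overlapping windows.

```python
import numpy as np
import pandas as pd


def create_synthetic_gaps(series: pd.Series, gap_length: int,
                          missing_fraction: float = 0.05,
                          margin=None, seed: int = 0):
    """Return (series with artificial gaps, list of positional index arrays per gap)."""
    rng = np.random.default_rng(seed)
    gapped = series.astype(float)                 # step 1: work on a copy
    n = len(series)
    margin = gap_length if margin is None else margin
    # Step 2: number of gaps implied by the target missing fraction (assumed formula).
    n_gaps = max(1, int(n * missing_fraction / gap_length))
    # Step 3: candidate starts that stay at least `margin` points from both ends.
    candidates = np.arange(margin, n - margin - gap_length + 1)
    observed = series.notna().to_numpy()
    taken = np.zeros(n, dtype=bool)
    gap_indices = []
    for start in rng.permutation(candidates):
        window = slice(start, start + gap_length)
        # Assumption: only fully observed, not-yet-used windows are eligible.
        if observed[window].all() and not taken[window].any():
            taken[window] = True
            gap_indices.append(np.arange(start, start + gap_length))
            if len(gap_indices) == n_gaps:
                break
    for idx in gap_indices:                       # step 4: blank out the values
        gapped.iloc[idx] = np.nan
    return gapped, gap_indices                    # step 5
```

Since the original series is left untouched, the true values for any gap remain available as `series.iloc[idx]`, and fixing `seed` lets every method be scored on identical gap placements.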

To comprehensively evaluate method performance across different missing data scenarios, we tested multiple gap lengths:

- Short gaps: 5, 12 hours (representing brief sensor malfunctions or maintenance)

- Medium gaps: 24 hours (representing daily outages)

- Long gaps: 48, 72 hours (representing extended equipment failures)

Each model’s performance was evaluated across all gap lengths and runs, resulting in a comprehensive assessment of its capabilities under various missing data scenarios.

Data preparation for model training and testing.

Proper data preparation is critical for developing robust gap-filling models. Our methodology incorporates several key steps to ensure appropriate scaling, prevent data leakage, and transform time series data into formats suitable for different modeling approaches.

Data Scaling and Normalization. All numerical features were standardized with scikit-learn's StandardScaler, which transforms features to have zero mean and unit variance. This preprocessing step is essential for time series modeling because it (i) brings all features to a comparable scale, which is particularly important for multivariate models incorporating diverse environmental parameters; (ii) accelerates model convergence, especially for neural network architectures; and (iii) improves model stability by preventing larger-magnitude features from dominating the learning process.

For univariate models, only the target variable (PM2.5) was scaled. For multivariate approaches, all included features (PM2.5, air temperature, humidity, and derived temporal features) were normalized. Critically, to prevent data leakage, the scaler was fitted exclusively on the training portion of the data, and the same transformation parameters were then applied to the validation and test sets. This ensures that no information from the test set influences the scaling process, maintaining the integrity of model evaluation.

Time-Based Data Splitting. To maintain the temporal integrity of the data and prevent future information from influencing past predictions, we implemented a strict time-based splitting approach:

The dataset was chronologically ordered by timestamp

The first 80% of the data points were allocated to the training set

The remaining 20% were reserved for testing

This approach differs from traditional random splitting in machine learning, as it respects the temporal structure of the data. It also simulates real-world conditions where models must predict future or missing values based solely on past observations. Importantly, the test set represents a future time period that the model has not seen during training, creating a realistic evaluation scenario.
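A minimal sketch of the chronological split combined with leakage-free scaling is given below. It uses plain NumPy and reproduces the zero-mean, unit-variance transformation that StandardScaler performs; the function name and return layout are illustrative assumptions, and the input rows are assumed to be sorted by timestamp already.

```python
import numpy as np

def time_split_and_scale(features, train_fraction=0.8):
    """Split chronologically, then standardize using training statistics only."""
    X = np.asarray(features, dtype=float)
    if X.ndim == 1:
        X = X.reshape(-1, 1)              # treat a single series as one feature column
    split = int(len(X) * train_fraction)
    train, test = X[:split], X[split:]    # first 80% for training, remainder for testing
    mean = train.mean(axis=0)             # statistics come from the training part only
    std = train.std(axis=0)
    std[std == 0] = 1.0                   # guard against constant columns
    return (train - mean) / std, (test - mean) / std, (mean, std)
```

The test portion is transformed with the training mean and standard deviation, so its own distribution never enters the scaling parameters.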

Data Transformation for Different Model Types. The preparation of training and testing data varied according to the imputation approach:

1) Simple and Window-Based Methods. For baseline methods (mean, median) and window-based approaches (linear, polynomial interpolation), the data required minimal preprocessing beyond scaling. These methods utilize local information from specified time windows surrounding gaps.

2) Sequence-to-Sequence Models. For models designed to predict entire gaps at once, we extracted fixed-length contexts before and after each gap. Both univariate and multivariate inputs were structured as time windows (typically 32 time steps) containing either PM2.5 values alone or multiple environmental parameters.

3) Autoregressive Models. For models predicting one step at a time, we created sliding window inputs where each window predicts the subsequent value. During inference, these models recursively incorporate newly predicted values into the input window for subsequent predictions.

4) Unidirectional vs. Bidirectional Models. Our framework implements two distinct prediction strategies:

- Unidirectional approaches utilize a single model that processes data in one direction. While these models can incorporate both past and future data as context, they produce a single prediction through one forward pass. This approach is computationally efficient but may not fully leverage temporal patterns from both directions.

- Bidirectional methods employ two separate models working in opposite directions. The forward model predicts future values based on past observations, while the backward model predicts in reverse, forecasting past values based on future data (using reversed time series). This produces two independent predictions, which are then combined using weighted linear interpolation, giving more weight to predictions closer to their respective input contexts.

For all model types, we maintained a strict separation between training and testing data, ensuring that validation metrics genuinely reflect model performance on unseen data patterns. This methodology provides a realistic assessment framework for comparing various imputation techniques across different gap scenarios.
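The combination step of the bidirectional strategy can be illustrated with a short sketch. The exact weighting scheme is not specified in the text, so the linear weights below are an assumption: the forward prediction dominates near the left edge of the gap and the backward prediction dominates near the right edge.

```python
import numpy as np

def blend_bidirectional(forward_pred, backward_pred):
    """Blend two gap predictions with linearly varying weights.

    Both inputs are in chronological order; the backward model's output
    is assumed to have been flipped back before this call.
    """
    forward_pred = np.asarray(forward_pred, dtype=float)
    backward_pred = np.asarray(backward_pred, dtype=float)
    n = len(forward_pred)
    # Forward weight falls linearly with distance from the pre-gap context.
    w_forward = 1.0 - (np.arange(n) + 1) / (n + 1)
    return w_forward * forward_pred + (1.0 - w_forward) * backward_pred
```

For a three-step gap, the forward weights are 0.75, 0.5, and 0.25, so each model contributes most where it is closest to observed data.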

Evaluation methodology.

To systematically assess the performance of gap-filling models, we established a comprehensive evaluation framework combining quantitative metrics and statistical analysis across multiple experimental runs.

The evaluation process follows a consistent pattern: after training, each model is applied to synthetic gaps created in the testing dataset. The predicted values are then compared with the original values (temporarily removed during the gap creation process). This provides a direct measure of how accurately each model can reconstruct the missing data.

We employed four complementary metrics to evaluate different aspects of prediction accuracy:

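1. Mean Absolute Error (MAE): measures the average magnitude of errors without considering their direction [56]:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{1}$$

where $y_i$ and $\hat{y}_i$ are the i-th observed and predicted values, respectively, and $n$ is the number of evaluated points.

2. Root Mean Square Error (RMSE): emphasizes larger errors by squaring them before averaging [56]:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{2}$$

3. Mean Absolute Percentage Error (MAPE): expresses errors as a percentage of true values [57]:

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\frac{\left|y_i - \hat{y}_i\right|}{\left|y_i\right| + \varepsilon} \tag{3}$$

where $\varepsilon$ is a small constant ($10^{-8}$) to prevent division by zero.

4. Coefficient of Determination (R2): measures the proportion of variance explained by the model [58]:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{4}$$

where $\bar{y}$ is the mean of the observed values.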

These metrics provide complementary insights: MAE offers an intuitive measure of prediction error, RMSE penalizes large individual errors, MAPE provides a percentage-based metric for interpretability, and R2 evaluates how well the model captures data patterns.
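For reference, the four metrics can be computed with a few lines of NumPy. This is a sketch under one stated assumption: the constant ε is added to the absolute true value in the MAPE denominator, which is a common convention but is not confirmed by the text.

```python
import numpy as np

def gap_metrics(y_true, y_pred, eps=1e-8):
    """Compute MAE, RMSE, MAPE (in percent), and R2 for one set of filled gaps."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mae = np.mean(np.abs(err))
    rmse = np.sqrt(np.mean(err ** 2))
    # Assumed placement of eps: added to |y_true| to avoid division by zero.
    mape = 100.0 * np.mean(np.abs(err) / (np.abs(y_true) + eps))
    ss_res = np.sum(err ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "RMSE": rmse, "MAPE": mape, "R2": r2}
```

MAE and RMSE are returned in the units of the input, so values computed on standardized data need to be converted back to μg/m3 before reporting.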

To ensure statistical robustness, each experiment was repeated five times (n_runs = 5) with different random seeds controlling gap placement. For each metric and model configuration, we calculated the mean across all runs and the standard deviation to quantify variability.

This approach allows us to report not just point estimates of performance but also the consistency and reliability of each method. When reporting results, we present metrics in the format “mean±standard deviation” to properly characterize each model’s performance distribution.

For systematic model comparison, we organized results by gap length (5, 12, 24, 48, and 72 hours) and model category. This structure enables identification of:

Which models perform best for specific gap lengths

How performance degrades as gap length increases

The relative benefit of model complexity for different scenarios

The added value of multivariate versus univariate approaches

The evaluation methodology was implemented within a unified testing framework that ensures identical conditions across all models, enabling fair and objective comparison of diverse gap-filling approaches.

Model selection criteria.

Final model selection was based on multiple performance criteria evaluated through systematic comparison across all gap lengths. The primary selection criterion was Mean Absolute Error (MAE) due to its direct interpretability in physical units (μg/m3) and robustness to outliers. Secondary criteria included Root Mean Square Error (RMSE) for assessing sensitivity to large errors, coefficient of determination (R2) for evaluating explanatory power, and computational efficiency measured by average runtime. Models were ranked within each category (statistical, univariate machine learning, multivariate, dynamic) based on consistent performance across all tested gap lengths (5–72 hours). The final recommended models (XGB Seq2Seq for accuracy-critical applications and Dynamic Multivariate XGB for operational deployment) were selected based on their superior and stable performance across diverse gap scenarios, combined with acceptable computational requirements for practical implementation.

Model combination framework.

To ensure fair and systematic comparison across different gap-filling approaches, we developed a unified testing framework called ImputationCombiner. This framework streamlines the evaluation process by standardizing data preparation, model training, gap creation, performance evaluation, and results analysis.

The ImputationCombiner provides a consolidated environment where various imputation methods—from simple statistical approaches to complex deep learning models—can be evaluated under identical conditions. This infrastructure eliminates methodological inconsistencies that could bias comparisons and ensures that performance differences reflect genuine algorithmic capabilities rather than implementation variations.

The core components of the framework include:

A centralized data processing pipeline that handles scaling, splitting, and formatting

Standardized synthetic gap generation with controlled parameters

Uniform evaluation protocols applying consistent metrics

Automated result aggregation and statistical analysis

The framework employs a modular design that facilitates seamless integration of diverse imputation methods. Each model is registered with the combiner through a standardized interface that specifies:

- The model’s name and category

- Training and forecasting functions

- Forecast type (e.g., “seq2seq”, “autoreg”, “uniseq2seq”)

- Additional parameters such as window size or feature columns

This modular structure allows new methods to be incorporated with minimal code changes, enabling rapid prototyping and evaluation of novel approaches. The system also accommodates both univariate and multivariate methods through a flexible data preparation pipeline that adapts to each model’s requirements.
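To make the registration idea concrete, the sketch below shows one way such an interface could look. It is not the ImputationCombiner code: `ModelSpec`, `ModelRegistry`, and all field names are hypothetical and only mirror the four items listed above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class ModelSpec:
    """Hypothetical description of one registered gap-filling method."""
    name: str
    category: str
    train_fn: Callable
    forecast_fn: Callable
    forecast_type: str                      # e.g. "seq2seq", "autoreg", "uniseq2seq"
    window_size: int = 32
    feature_columns: Optional[List[str]] = None

class ModelRegistry:
    """Hypothetical stand-in for the registration part of a combiner-style framework."""

    def __init__(self):
        self._models: Dict[str, ModelSpec] = {}

    def register(self, spec: ModelSpec) -> None:
        # Refuse duplicate names so results cannot be silently overwritten.
        if spec.name in self._models:
            raise ValueError(f"model already registered: {spec.name}")
        self._models[spec.name] = spec

    def by_type(self, forecast_type: str) -> List[str]:
        # Names of all registered models that use the given forecast type.
        return [s.name for s in self._models.values() if s.forecast_type == forecast_type]
```

With a structure of this kind, adding a method amounts to one `register` call, and the evaluation loop can dispatch on `forecast_type` without knowing model internals.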

The ImputationCombiner executes a comprehensive testing workflow that:

Processes each registered model across multiple gap lengths

Conducts several experimental runs with different random seeds

Computes performance metrics and their statistical distributions

Records execution times for performance benchmarking

Results are systematically organized and stored in structured formats for subsequent analysis. The framework generates detailed performance tables showing metrics for each model, gap length, and experimental run. These results include not only average performance metrics but also standard deviations, allowing for statistical significance analysis.

Additionally, the system offers visualization capabilities to generate comparative charts, scatter plots of predicted versus actual values, and time series visualizations of filled gaps. These visual tools complement numerical metrics by providing qualitative insights into each method’s behavior.

This unified framework played a crucial role in our study by ensuring methodological consistency across all experiments, enabling objective identification of the most effective approaches for different gap-filling scenarios.

Hierarchy of gap-filling methods

We developed and evaluated a comprehensive taxonomy of gap-filling approaches, classified by their directionality, variable usage, and forecasting methodology. Table 1 summarizes the key characteristics and hyperparameters of all implemented methods.

Table 1. Gap-filling methods hierarchy and parameters.

| # | Category | Direction¹ | Variables² | Method | Architecture⁴ | Key Parameters | Context Length³ |

|---|---|---|---|---|---|---|---|

| 1 | Simple | – | Uni | Mean Imputation | Statistical | – | – |

| 2 | Simple | – | Uni | Median Imputation | Statistical | – | – |

| 3 | Local | – | Uni | Local Mean | Statistical | Window size = Variable | 15 + gap + 15 |

| 4 | Local | – | Uni | Local Median | Statistical | Window size = Variable | 15 + gap + 15 |

| 5 | Window | – | Uni | Linear Interpolation | Mathematical | – | 10 |

| 6 | Window | – | Uni | Polynomial Interpolation | Mathematical | Degree = 3 | Variable |

| 7 | Window | – | Uni | B-spline Interpolation | Mathematical | – | Variable |

| 8 | Window | – | Uni | ARIMA Imputation | Statistical | Order=(1,0,0) | 100 |

| 9 | Autoreg | Uni | Uni | UniAR LSTM | Neural Network | Units = 64, Activation = tanh | 32 |

| 10 | Autoreg | Uni | Uni | UniAR GRU | Neural Network | Units = 64, Activation = tanh | 32 |

| 11 | Autoreg | Uni | Uni | UniAR RNN | Neural Network | Units = 64, Activation = tanh | 32 |

| 12 | Autoreg | Uni | Uni | UniAR CNN | Neural Network | Filters = 32, Kernel = 3 | 32 |

| 13 | Autoreg | Uni | Uni | UniAR TCN | Neural Network | Filters = 32, Dilations=[1,2,4,8] | 32 |

| 14 | Autoreg | Uni | Uni | UniAR RF | Tree-based | Estimators = 50 | 32 |

| 15 | Autoreg | Uni | Uni | UniAR XGB | Tree-based | Estimators = 50 | 32 |

| 16 | Autoreg | Uni | Multi | UniAR XGB Multi | Tree-based | Estimators = 50, Features = 5 | 32 |

| 17 | Seq2Seq | Uni | Uni | UniSeq2Seq LSTM | Neural Network | Units = 64, Bidirectional | 32/32 |

| 18 | Seq2Seq | Uni | Uni | UniSeq2Seq GRU | Neural Network | Units = 64, Bidirectional | 32/32 |

| 19 | Seq2Seq | Uni | Uni | UniSeq2Seq RNN | Neural Network | Units = 64, Bidirectional | 32/32 |

| 20 | Seq2Seq | Uni | Uni | UniSeq2Seq CNN | Neural Network | Filters = 32/64, Kernel = 3 | 32/32 |

| 21 | Seq2Seq | Uni | Uni | UniSeq2Seq TCN | Neural Network | Filters = 64, Dilations=[1,2,4,8] | 32/32 |

| 22 | Seq2Seq | Uni | Uni | UniSeq2Seq RF | Tree-based | Estimators = 50 | 32/32 |

| 23 | Seq2Seq | Uni | Uni | UniSeq2Seq XGB | Tree-based | Estimators = 50 | 32/32 |

| 24 | Seq2Seq | Uni | Multi | UniSeq2Seq LSTM Multi | Neural Network | Units = 64, Bidirectional, Features = 5 | 32/32 |

| 25 | Seq2Seq | Uni | Multi | UniSeq2Seq CNN Multi | Neural Network | Filters = 32, Kernel = 3, Features = 5 | 32/32 |

| 26 | Seq2Seq | Uni | Multi | UniSeq2Seq RF Multi | Tree-based | Estimators = 50, Features = 5 | 32/32 |

| 27 | Seq2Seq | Uni | Multi | UniSeq2Seq XGB Multi | Tree-based | Estimators = 50, Features = 5 | 32/32 |

| 28 | Autoreg | Bi | Uni | LSTM Autoreg | Neural Network | Units = 64, Activation = tanh | 32 |

| 29 | Autoreg | Bi | Uni | GRU Autoreg | Neural Network | Units = 64, Activation = tanh | 32 |

| 30 | Autoreg | Bi | Uni | RNN Autoreg | Neural Network | Units = 64, Activation = tanh | 32 |

| 31 | Autoreg | Bi | Uni | CNN Autoreg | Neural Network | Filters = 32, Kernel = 3 | 32 |

| 32 | Autoreg | Bi | Uni | TCN Autoreg | Neural Network | Filters = 32, Dilations=[1,2,4,8] | 32 |

| 33 | Autoreg | Bi | Uni | RF Autoreg | Tree-based | Estimators = 50 | 32 |

| 34 | Autoreg | Bi | Uni | XGB Autoreg | Tree-based | Estimators = 50 | 32 |

| 35 | Autoreg | Bi | Multi | XGB Autoreg Multi | Tree-based | Estimators = 50, Features = 5 | 32 |

| 36 | Seq2Seq | Bi | Uni | LSTM Seq2Seq | Neural Network | Units = 64 | 32 |

| 37 | Seq2Seq | Bi | Uni | GRU Seq2Seq | Neural Network | Units = 64 | 32 |

| 38 | Seq2Seq | Bi | Uni | RNN Seq2Seq | Neural Network | Units = 64 | 32 |

| 39 | Seq2Seq | Bi | Uni | CNN Seq2Seq | Neural Network | Filters = 32, Kernel = 3 | 32 |

| 40 | Seq2Seq | Bi | Uni | TCN Seq2Seq | Neural Network | Filters = 32, Dilations=[1,2,4] | 32 |

| 41 | Seq2Seq | Bi | Uni | RF Seq2Seq | Tree-based | Estimators = 50 | 32 |

| 42 | Seq2Seq | Bi | Uni | XGB Seq2Seq | Tree-based | Estimators = 50 | 32 |

| 43 | Seq2Seq | Bi | Multi | XGB Seq2Seq Multi | Tree-based | Estimators = 50, Features = 5 | 32 |

| 44 | Seq2Seq | Bi | Multi | RF Seq2Seq Multi | Tree-based | Estimators = 50, Features = 5 | 32 |

| 45 | Dynamic | Uni | Uni | Dynamic Uni Seq2Seq XGB | Tree-based | Estimators = 50, Variable Context | Variable |

| 46 | Dynamic | Uni | Multi | Dynamic Multi Seq2Seq XGB | Tree-based | Estimators = 50, Features = 5, Variable Context | Variable |

¹ Direction: Uni = Unidirectional, Bi = Bidirectional

² Variables: Uni = Univariate (PM2.5 only), Multi = Multivariate (PM2.5 + meteorological and temporal features)

³ Context Length: For bidirectional models, values represent pre/post gap context lengths

⁴ Neural network models used the Adam optimizer with learning rate = 0.001 and were trained for up to 30 epochs with early stopping (patience = 15). For all models, batch size = 32 was used during training

Baseline statistical methods.

The simplest approaches to gap-filling rely on basic statistical operations without requiring model training. These methods serve as foundational benchmarks for more complex techniques.

Simple Imputers replace missing values with a single statistic calculated from the available data. We implemented Mean and Median imputation, which substitute gaps with the average or median value of the entire time series, respectively.

Local Statistical Methods operate within a defined window surrounding each gap. Local Mean and Local Median calculate the respective statistic using only values within this window, providing more contextually relevant replacements than global statistics.

Window-Based Interpolation methods construct mathematical functions that pass through known points surrounding gaps. Linear interpolation fits straight lines between adjacent points, while Polynomial interpolation (degree = 3) and B-spline interpolation fit more complex curves that can capture non-linear patterns. These methods excel at preserving local trends but may struggle with longer gaps.

ARIMA Imputation applies time series modeling principles to extrapolate missing values using an ARIMA(1,0,0) model trained on values preceding each gap. This method can capture temporal dependencies but is computationally intensive compared to simpler approaches.
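Two of the baselines described above are simple enough to sketch directly. The functions below are illustrative only: the names are assumptions, and the default window of 15 points on each side follows the "15 + gap + 15" context listed in Table 1.

```python
import numpy as np

def fill_local_mean(series, start, gap_length, window=15):
    """Fill one gap with the mean of up to `window` points on each side."""
    data = np.asarray(series, dtype=float).copy()
    lo = max(0, start - window)
    hi = min(len(data), start + gap_length + window)
    # Use only values outside the gap; nanmean skips neighbouring missing points.
    context = np.concatenate([data[lo:start], data[start + gap_length:hi]])
    data[start:start + gap_length] = np.nanmean(context)
    return data

def fill_linear(series):
    """Fill every NaN by linear interpolation between the nearest known points."""
    data = np.asarray(series, dtype=float).copy()
    idx = np.arange(len(data))
    missing = np.isnan(data)
    data[missing] = np.interp(idx[missing], idx[~missing], data[~missing])
    return data
```

The local mean yields a flat segment, whereas linear interpolation connects the gap boundaries, which is why the latter preserves local trends better for short gaps.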

Machine learning approaches.

Beyond statistical methods, we implemented machine learning models with varying architectures, directionality, and forecasting strategies.

Forecasting strategies

Autoregressive Models predict one step at a time, using each new prediction as input for subsequent forecasts. While this approach can propagate errors, it adapts well to changing patterns within gaps.

Sequence-to-Sequence (Seq2Seq) Models directly predict the entire gap as a single output. This approach avoids error accumulation but requires the model to capture longer-term dependencies.
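The autoregressive strategy can be sketched as a sliding-window dataset plus a recursive inference loop. In this illustration `predict_one` is a hypothetical stand-in for any trained one-step model; it is not part of the study's code.

```python
import numpy as np

def make_windows(series, window):
    """Build (input window, next value) training pairs from a 1-D series."""
    series = np.asarray(series, dtype=float)
    X = np.array([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]
    return X, y

def fill_autoregressive(context, gap_length, predict_one):
    """Fill a gap one step at a time, feeding each prediction back as input."""
    window = [float(v) for v in context]
    preds = []
    for _ in range(gap_length):
        nxt = float(predict_one(np.asarray(window)))
        preds.append(nxt)
        window = window[1:] + [nxt]      # slide the window over the new prediction
    return np.array(preds)
```

Because every step consumes earlier predictions, any error made early in the gap is carried forward, which is the error propagation that the Seq2Seq formulation avoids by emitting the whole gap at once.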

Directionality approaches

Unidirectional Models use context from only one temporal direction, typically forecasting forward using past values. These models include UniAR (Unidirectional Autoregressive) and UniSeq2Seq (Unidirectional Sequence-to-Sequence) variants.

Bidirectional Models utilize context from both past and future observations. These methods train two separate models: a forward model predicting from past to future, and a backward model predicting from future to past (using reversed time series). The final imputation combines these predictions with distance-weighted averaging, giving more weight to predictions closer to their input context.

Model architectures

Our framework incorporates diverse predictive models, each with unique strengths:

Tree-Based Models (Random Forest, XGBoost) effectively capture non-linear patterns without requiring extensive hyperparameter tuning. These models process flattened input windows rather than sequential data structures.

Recurrent Neural Networks (SimpleRNN, LSTM, GRU) specialize in sequential data, with LSTM and GRU offering sophisticated mechanisms to maintain long-term dependencies while addressing vanishing gradient problems.

Convolutional Models (CNN, TCN) apply convolution operations to capture local patterns and dependencies. TCN (Temporal Convolutional Network) specifically employs dilated convolutions to expand receptive fields for capturing longer-range dependencies.

Multivariate extensions.

To enhance predictive performance, we extended key models to incorporate additional contextual features beyond PM2.5 values:

Feature Selection included air temperature, air humidity, hour of day, and season indicators. These features were selected based on their known correlations with air quality patterns and their availability in practical deployment settings.

Input Representation for multivariate models included multiple channels for each time step. For example, instead of a single PM2.5 value per time step, multivariate models received vectors containing PM2.5, temperature, humidity, and temporal features.

Model Adaptations required architecture modifications to handle multi-dimensional inputs. For neural networks, this involved increasing input layer dimensions; for tree-based models, feature vectors were flattened while preserving their relationships.
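For tree-based models, the flattening step can be pictured as follows. This is a sketch with assumed inputs: five feature series of equal length (the season is assumed to be an integer code) are stacked per time step and then flattened in row-major order, so the features belonging to one time step stay adjacent in the final vector.

```python
import numpy as np

def build_flat_input(pm25, temperature, humidity, hour, season):
    """Stack five feature series into (time_steps, 5) and flatten to one vector."""
    window = np.column_stack([pm25, temperature, humidity, hour, season]).astype(float)
    # Row-major flattening: [t0 features..., t1 features..., ...].
    return window.reshape(-1)
```

A 32-step window with five features therefore becomes a 160-element input vector for Random Forest or XGBoost.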

Table 1 summarizes the key characteristics and hyperparameters of all implemented methods, organized by their classification within our hierarchy.

This comprehensive framework allowed us to systematically evaluate the impact of model architecture, directionality, variable usage, and forecasting strategy on gap-filling performance across different gap lengths.

Dynamic models development.

In addition to the fixed-context models described above, we developed dynamic models capable of adapting their context processing to gap characteristics. Our approach centers on three key innovations:

First, we implemented dynamic context sizing, where the effective context window adjusts proportionally to gap length. For gaps ≤10 hours, we used a factor of 3 × the gap length (e.g., 15-hour context for a 5-hour gap). For longer gaps, we capped context at 32 time steps to maintain computational efficiency while preserving sufficient information. This allows the model to function effectively even when full context is unavailable, such as near the edges of the time series or between closely spaced gaps.

Second, we developed a unified training methodology that exposes the model to gaps of various lengths during a single training process. This creates a generalized model capable of handling diverse gap scenarios without requiring separate specialized models. Instead of maintaining 25 different models, one for each observed gap length, a single dynamic model can handle any gap length from 1 to 191 hours, dramatically simplifying operational deployment.

Third, we incorporated explicit metadata into the input features, including gap length being predicted, dynamic context size being used, and position within the gap being filled. This metadata provides the model with explicit information about the prediction task’s characteristics, allowing it to adjust its internal processing accordingly.
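The dynamic context sizing rule from the first innovation can be written down compactly. The sketch below follows the stated rule (3 × gap length for gaps of up to 10 hours, otherwise 32 time steps); the function names and the helper that clips the context near series edges are assumptions added for illustration.

```python
def dynamic_context_size(gap_length, factor=3, short_gap_limit=10, max_context=32):
    """Context length for a gap: proportional for short gaps, capped for long ones."""
    if gap_length <= short_gap_limit:
        return factor * gap_length
    return max_context

def available_context(gap_start, gap_length, series_length, wanted):
    """Clip the wanted context to what exists on each side of the gap."""
    left = min(wanted, gap_start)
    right = min(wanted, series_length - (gap_start + gap_length))
    return left, right
```

A 5-hour gap thus receives a 15-step context, while 12-hour and longer gaps receive the 32-step maximum, reduced further if the gap lies near an edge of the series.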

Technical Implementation Details. Our primary dynamic architecture employs XGBoost regression with several adaptations for time series gap filling. The model processes:

Left context (pre-gap): Variable-length observations before the gap, padded to a maximum size (C_max = 32);

Right context (post-gap): Variable-length observations after the gap, similarly padded;

Metadata features: Gap length, context size, and position indicators.

For gaps of varying lengths, the model outputs predictions for the maximum supported gap length, with only the relevant portion used for shorter gaps. This approach creates a single model capable of handling diverse gap scenarios.

The preprocessing pipeline dynamically selects appropriate context windows based on the gap. During training, we create a comprehensive dataset that includes examples of all target gap lengths (5, 12, 24, 48, and 72 hours), with appropriately sized context windows for each. This exposes the model to diverse gap patterns and their optimal context requirements simultaneously.

A critical component of our implementation is the position-aware padding procedure. Since the model requires consistent input dimensions, we standardize all inputs to a fixed maximum size through padding:

- For the left context, we pad with zeros on the left side, preserving the most recent values closest to the gap

- For the right context, we pad with zeros on the right side, preserving the earliest values after the gap

- This creates input vectors where actual data points are positioned closest to the gap, with zero padding in the more distant positions

This padding approach ensures that the most relevant temporal information (closest to the gap boundaries) is preserved in a consistent position within the input vector, allowing the model to learn more effectively from the available context.
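The position-aware padding can be sketched as below. The layout of the final input vector (left context, right context, then metadata) and the function names are assumptions; the per-position indicator mentioned earlier is omitted for brevity.

```python
import numpy as np

C_MAX = 32  # maximum context size on each side, as stated in the text

def pad_left_context(values, c_max=C_MAX):
    """Keep the most recent values next to the gap; zero-pad the far (left) side."""
    values = np.asarray(values, dtype=float)[-c_max:]
    return np.concatenate([np.zeros(c_max - len(values)), values])

def pad_right_context(values, c_max=C_MAX):
    """Keep the earliest post-gap values next to the gap; zero-pad the far (right) side."""
    values = np.asarray(values, dtype=float)[:c_max]
    return np.concatenate([values, np.zeros(c_max - len(values))])

def build_dynamic_input(left, right, gap_length, context_size):
    """Assumed layout: [padded left | padded right | gap length, context size]."""
    meta = np.array([gap_length, context_size], dtype=float)
    return np.concatenate([pad_left_context(left), pad_right_context(right), meta])
```

With this layout, the last element of the left block and the first element of the right block always hold the observations adjacent to the gap, regardless of how much context was available.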

Gap-Filling Algorithm for Real-World Applications. For operational deployment, we implemented a comprehensive gap-filling algorithm capable of addressing the challenges presented by real-world missing data patterns:

The algorithm first identifies all missing data segments in the time series, determining the start index and length of each gap.

It then assesses the available context around each gap, adapting to whatever context is available, even if significantly shorter than the ideal 32 time steps due to edge effects or nearby gaps.

For standard gaps shorter than or equal to the maximum training length (72 hours), the model directly predicts the entire sequence using whatever context is available from both sides of the gap.

For extended gaps exceeding the maximum training length (e.g., the 191-hour gap in our dataset), the algorithm segments them into manageable chunks plus a remainder. Each chunk is predicted separately with appropriate context overlap, with predictions subsequently concatenated to form a complete sequence.

This approach allows our dynamic model to handle real-world gaps of any length, even those extending well beyond the maximum length used during training, and regardless of their position within the time series or proximity to other gaps.
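The gap identification and segmentation steps can be illustrated with two small helpers. This is a simplified sketch: the names are hypothetical, and the context overlap between consecutive chunks mentioned above is not modeled here.

```python
import numpy as np

def find_gaps(series):
    """Return (start index, length) for every run of NaN values."""
    missing = np.isnan(np.asarray(series, dtype=float))
    padded = np.concatenate([[False], missing, [False]])
    change = np.diff(padded.astype(int))
    starts = np.where(change == 1)[0]     # first missing index of each run
    ends = np.where(change == -1)[0]      # index just past the end of each run
    return [(int(s), int(e - s)) for s, e in zip(starts, ends)]

def split_long_gap(start, length, max_length=72):
    """Split a gap into chunks no longer than the maximum training length."""
    chunks = []
    offset = 0
    while offset < length:
        size = min(max_length, length - offset)
        chunks.append((start + offset, size))
        offset += size
    return chunks
```

Under this scheme, the 191-hour gap is handled as two 72-hour chunks followed by a 47-hour remainder.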

We developed both univariate and multivariate variants of our dynamic models:

- The univariate model (DynamicUniSeq2SeqXGB) uses only historical PM2.5 data as input, focusing solely on temporal patterns in the target variable.

- The multivariate model (DynamicMultiSeq2SeqXGB) incorporates additional features identified as significant in our correlation analysis: wind speed (Ff), wind direction (DD), air temperature, air humidity, hour of day, and season.

By incorporating position-aware padding, metadata enrichment, and unified training across gap lengths, our dynamic models can automatically adjust their processing to the specific characteristics of each gap encountered, regardless of its length or position within the time series. This approach eliminates the need for maintaining multiple specialized models and provides robust performance across the diverse gap scenarios encountered in real-world environmental monitoring data.

Our dynamic approach differs conceptually from existing adaptive methods in time series imputation. While RNN-based dynamic models like those proposed by Waqas [48] focus on sequential state updating for streaming data, and state-space models emphasize hidden state evolution, our method addresses the specific challenge of retrospective gap-filling with known boundaries. Unlike online dynamic methods that process data sequentially without future context, our approach leverages both pre- and post-gap information while dynamically adjusting context windows based on gap characteristics. This operational focus distinguishes our contribution from algorithmic innovations in neural architectures, emphasizing practical deployment flexibility over novel learning mechanisms.

Implementation tools and environment

All computations were performed on a system with Intel(R) Core(TM) i7-14700KF CPU (14 physical cores, 28 logical cores) and NVIDIA GeForce RTX 4080 SUPER GPU with 16GB VRAM. The software environment included CUDA 12.4, NVIDIA driver version 572.16, and TensorFlow 2.18.0. This hardware configuration provided sufficient computational resources for training all models within a reasonable timeframe, including the more complex deep learning architectures. The entire methodology was implemented using Python 3.12 with the libraries specified in Table 2.

Table 2. Primary software libraries used in this study.

| Category | Libraries |

|---|---|

| Data processing | pandas 1.3.5, NumPy 1.21.5 |

| Machine learning | scikit-learn 1.0.2, XGBoost 1.6.1 |

| Deep learning | TensorFlow 2.9.1, Keras 2.9.0 |

| Specialized components | tensorflow-tcn 0.5.0 (for TCN models) |

| Visualization | Matplotlib 3.5.1, Seaborn 0.11.2 |

| Statistical analysis | SciPy 1.7.3 |

| Utility libraries | joblib 1.1.0 (for model serialization) |

Our gap-filling models were implemented using a combination of Python-based machine learning frameworks. Standard machine learning models (Random Forest, XGBoost) were developed using scikit-learn and XGBoost libraries, while neural network architectures were implemented in TensorFlow with Keras API. For specialized temporal convolutional networks (TCN), we utilized the tensorflow-tcn extension library.

All dynamic models were built using XGBoost (version 1.6.1) regressors wrapped in scikit-learn's MultiOutputRegressor to handle variable-length outputs. This allowed for efficient training and prediction across different gap lengths while maintaining a consistent model architecture.

The code implementation prioritized computational efficiency and scalability. For neural network models, we employed batch processing with early stopping to optimize training time while preventing overfitting. Memory management techniques were implemented for processing longer time series, including efficient data generators and gradient accumulation where necessary.

Integration with testing framework

The dynamic models were seamlessly integrated into our unified testing infrastructure through the ImputationCombiner framework. This integration required several adaptations:

Custom preprocessing pipelines: We developed specialized data transformation functions to handle dynamic context sizing, padding, and metadata enrichment.

Evaluation protocol adaptation: The standard evaluation process was extended to properly handle variable-length predictions, ensuring fair comparison with fixed-length models.

Results aggregation: The performance metrics calculation system was modified to account for the dynamic nature of the models, particularly in handling the different confidence levels across various positions within filled gaps.

This integration allowed direct comparison between traditional fixed-context models and our dynamic approaches across identical testing conditions, ensuring valid performance comparisons.

Application considerations.

The dynamic models offer several practical advantages for real-world deployment:

Deployment simplicity: A single dynamic model can replace multiple specialized models, reducing operational complexity and resource requirements.

Robustness to varied gaps: The models can handle irregularly distributed gaps of unpredictable lengths without requiring reconfiguration.

Performance consistency: By adapting to gap characteristics automatically, these models maintain more consistent performance across various missing data patterns.

Resource efficiency: The dynamic context sizing optimizes computational resources by utilizing only the necessary context for each gap.

However, there are also implementation considerations to note:

Training complexity: The unified training process with variable-length gaps is more complex than training separate specialized models.

Inference overhead: The dynamic preprocessing pipeline adds a small computational overhead during inference compared to simpler fixed models.

Data requirements: Effective training requires examples of diverse gap patterns, potentially necessitating more comprehensive training data.

These tradeoffs should be considered when selecting appropriate models for specific application requirements, particularly in resource-constrained environments.

All models were benchmarked under identical conditions to ensure fair comparison of computational efficiency and performance. The complete implementation, including data preprocessing pipelines, model architectures, and evaluation protocols, has been organized into a modular codebase to facilitate reproducibility and extension to additional datasets and gap-filling scenarios.

Results

Correlation analysis and feature selection

Correlation patterns between PM2.5 and meteorological parameters.

To identify relationships between PM2.5 concentrations and meteorological factors, we conducted a comprehensive correlation analysis using the preprocessed hourly dataset.

The correlation matrix in Fig 5 illustrates the interrelationships between PM2.5 and various meteorological parameters. The statistical significance of these correlations is indicated by asterisks, with most correlations showing high significance (p < 0.001).

Fig 5. Correlation matrix between PM2.5 and meteorological parameters.

The color intensity and numerical values represent Pearson correlation coefficients, with red indicating negative correlations and blue indicating positive correlations. Data measured at our station: PM2.5 (µg/m3), T’–air temperature (°C), U’–air humidity (%). Data from an open source (rp5.com, weather archive for Pavlodar airport, METAR [55]): T–air temperature (°C), P0–station-level pressure (mmHg), P–sea-level pressure (mmHg), U–relative humidity (%), DD–wind direction (compass points), Ff–wind speed (m/s), and VV–horizontal visibility range (km). *–p < 0.05 (statistically significant result), **–p < 0.01 (highly significant result), ***–p < 0.001 (extremely significant result).

The analysis revealed that air temperature (T’) showed a weak negative correlation with PM2.5 concentrations (r = –0.22, p < 0.001), suggesting a modest trend of higher PM2.5 levels during colder periods. This inverse relationship aligns with the expected seasonal patterns of air pollution in urban environments, where winter conditions can contribute to the accumulation of particulate matter.

Interestingly, relative humidity (U’) demonstrated only a weak positive correlation with PM2.5 levels (r = 0.10, p < 0.001), indicating a limited direct relationship between humidity and particulate matter concentrations in our study area. This finding differs from some previous studies that reported stronger humidity effects.

Wind speed (Ff) exhibited a moderate negative correlation with PM2.5 (r = −0.30, p < 0.001), confirming the expected dilution effect of stronger winds on air pollutants. This finding highlights the importance of atmospheric dispersion processes in regulating local PM2.5 concentrations. Wind direction (DD) showed a moderate positive correlation with PM2.5 (r = 0.25, p < 0.001), suggesting that certain wind directions may be associated with higher pollutant levels, possibly due to the transport of emissions from specific source areas.

Visibility (VV) showed a weak negative correlation with PM2.5 (r = −0.15, p < 0.001), which is consistent with the expected relationship between particulate matter and atmospheric transparency, though the association was not as strong as might be anticipated.

The correlation analysis also revealed notable interactions among the meteorological parameters themselves. For instance, air temperature and relative humidity displayed a strong negative correlation (r = −0.59, p < 0.001), while station-level pressure (P0) and sea-level pressure (P) were perfectly correlated (r = 1.00, p < 0.001), as expected. The strong correlation between air temperature measured at our station (T’) and at the airport station (T) (r = 0.99, p < 0.001) indicates that the two measurements are consistent. These interrelationships among predictors are important considerations for multivariate modeling approaches.
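A single cell of such a correlation matrix, together with its significance marker, could be computed roughly as below. This is a sketch under stated assumptions: the function name `pearson_with_stars` is hypothetical, and the two-sided p-value uses a large-sample normal approximation to the t-distribution, which is reasonable for thousands of hourly observations but is not necessarily the test used in the study.

```python
import math

import numpy as np


def pearson_with_stars(x, y):
    """Pearson r, approximate two-sided p-value, and significance stars."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    keep = ~(np.isnan(x) | np.isnan(y))  # pairwise deletion of missing hours
    x, y = x[keep], y[keep]
    n = x.size

    r = float(np.corrcoef(x, y)[0, 1])
    if abs(r) >= 1.0:
        p = 0.0
    else:
        # t statistic for H0: rho = 0; for large n it is close to standard normal.
        t = r * math.sqrt((n - 2) / (1.0 - r * r))
        p = math.erfc(abs(t) / math.sqrt(2.0))

    if p < 0.001:
        stars = "***"
    elif p < 0.01:
        stars = "**"
    elif p < 0.05:
        stars = "*"
    else:
        stars = ""
    return r, p, stars
```

Applying the function to every pair of columns yields the full matrix; with sample sizes of this order, even weak correlations such as r = 0.10 reach p < 0.001, which is why significance alone says little about effect size.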

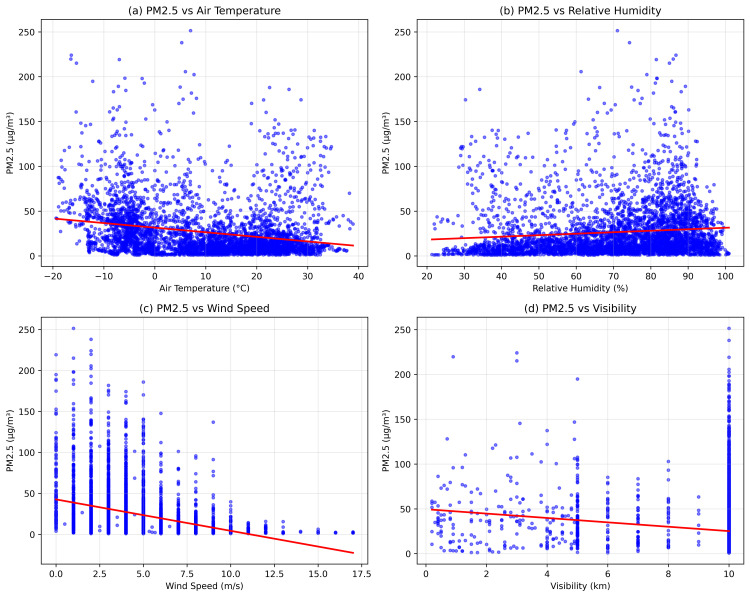

Fig 6 presents the scatter plots of PM2.5 against four key meteorological parameters, visualizing the relationships discussed above. The plots clearly demonstrate the inverse relationship with temperature (panel a, r = –0.26) and wind speed (panel c, r = –0.30), as well as the weak positive association with humidity (panel b, r = 0.13). The visibility plot (panel d) shows that as PM2.5 increases, visibility tends to decrease (r = –0.15), though with considerable variance at lower PM2.5 concentrations.

Fig 6. Scatter plots showing relationships between PM2.5 and key meteorological variables: (a) air temperature, (b) relative humidity, (c) wind speed, and (d) visibility.

Blue points represent individual hourly measurements, and red lines indicate best-fit trends.

To investigate potential non-linear relationships that might not be captured by Pearson correlation coefficients, we applied logarithmic regression analysis using log-transformed PM2.5 concentrations as the dependent variable. The logarithmic transformation was applied to normalize the distribution of PM2.5 values and better capture non-linear relationships with meteorological parameters.

After addressing multicollinearity issues by removing highly correlated variables, the final logarithmic regression model explained approximately 29.1% of the variance in log-transformed PM2.5 concentrations (R2 = 0.291, F = 289.9, p < 0.001). This moderate explanatory power is consistent with the complex nature of particulate matter dynamics in urban environments, where multiple factors beyond meteorological conditions influence concentration levels.
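The fit statistics quoted above follow from ordinary least squares. The sketch below shows one way to obtain them with NumPy alone; the function names `fit_ols` and `f_from_r2` are illustrative, and the design matrix is assumed to contain the already transformed predictors.

```python
import numpy as np


def f_from_r2(r2, k, dof):
    """Overall F statistic from R^2, k predictors, and residual dof."""
    return (r2 / k) / ((1.0 - r2) / dof)


def fit_ols(X, y):
    """OLS with intercept: coefficients, standard errors, t-values, fit stats."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    A = np.column_stack([np.ones(n), X])  # prepend the constant term

    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())

    dof = n - k - 1
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / dof

    # Classical (homoskedastic) covariance of the coefficient estimates.
    cov = (ss_res / dof) * np.linalg.inv(A.T @ A)
    se = np.sqrt(np.diag(cov))
    return {
        "beta": beta, "se": se, "t": beta / se,
        "r2": r2, "adj_r2": adj_r2,
        "f": f_from_r2(r2, k, dof), "dof": (k, dof),
    }
```

As a consistency check, `f_from_r2(0.291, 6, 4238)` evaluates to approximately 289.9, in agreement with the reported F statistic for six predictors and n = 4245.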

The regression analysis identified wind parameters as the strongest predictors (Table 3).

Table 3. Logarithmic regression model results for PM2.5 concentrations.

| Variable | Coefficient (β) | Std. Error | t-value | p-value |
|---|---|---|---|---|
| Constant | 5.206 | 14.027 | 0.371 | 0.711 |
| Air temperature | –0.020 | 0.002 | –9.291 | <0.001*** |
| Log(Air humidity) | 0.146 | 0.062 | 2.371 | 0.018* |
| Log(P) | –0.291 | 2.091 | –0.139 | 0.889 |
| Wind direction (DD) | 0.047 | 0.002 | 18.972 | <0.001*** |
| Wind speed (Ff) | –0.144 | 0.006 | –23.590 | <0.001*** |
| Log(Visibility) | –0.252 | 0.046 | –5.515 | <0.001*** |

Note: R2 = 0.291, Adjusted R2 = 0.290, F(6,4238) = 289.9, p < 0.001, n = 4245. Significance levels: *p < 0.05, ***p < 0.001.

Wind speed (Ff) demonstrated a highly significant negative relationship with PM2.5 levels (β = −0.144, t = −23.59, p < 0.001), confirming the important role of atmospheric dispersion in reducing particulate concentrations. Wind direction (DD) showed a significant positive association (β = 0.047, t = 18.97, p < 0.001), suggesting that certain wind directions may transport pollution from nearby emission sources.

Air temperature exhibited a significant negative relationship with PM2.5 (β = –0.020, t = –9.29, p < 0.001), supporting our correlation findings and highlighting the seasonal patterns of particulate pollution. Visibility (log-transformed) also maintained a significant negative association (β = –0.252, t = –5.52, p < 0.001), while relative humidity showed a weaker but still significant positive relationship (β = 0.146, t = 2.37, p = 0.018).
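Because the dependent variable is log-transformed, the coefficients are easier to read as percentage changes in PM2.5. The helper functions below are a sketch that assumes natural logarithms were used throughout (the source does not state the base); the function names are hypothetical.

```python
import math


def pct_change_per_unit(beta):
    """Percent change in PM2.5 per one-unit increase of an untransformed predictor.

    Assumes ln(PM2.5) as the dependent variable (an assumption, see lead-in).
    """
    return (math.exp(beta) - 1.0) * 100.0


def pct_change_for_ratio(beta, ratio):
    """Percent change in PM2.5 when a log-transformed predictor is multiplied by `ratio`."""
    return (ratio ** beta - 1.0) * 100.0


wind_effect = pct_change_per_unit(-0.144)      # per additional 1 m/s of wind speed
temp_effect = pct_change_per_unit(-0.020)      # per additional 1 degree C
vis_effect = pct_change_for_ratio(-0.252, 2)   # for a doubling of visibility
```

Under this assumption, each additional 1 m/s of wind speed corresponds to roughly 13% lower PM2.5, each additional degree Celsius to about 2% lower PM2.5, and a doubling of visibility to about 16% lower PM2.5, holding the other predictors fixed.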

The selection of variable transformations was guided by both theoretical considerations and empirical testing. While logarithmic transformation was applied to several variables (air humidity, pressure, visibility) to normalize their distributions and linearize relationships, wind parameters were deliberately maintained in their original linear form for several compelling reasons.

Wind direction (DD) was kept in its original scale as it represents a directional measure where logarithmic transformation would distort its physical meaning. The circular nature of wind direction data, where values indicate specific compass bearings, makes linear representation most appropriate for capturing the transport of pollutants from particular source areas.

For wind speed (Ff), we tested both logarithmic and linear specifications in preliminary analyses. The linear form was ultimately selected based on three key factors: (1) it provided superior model fit, with R2 decreasing from 0.291 to 0.262 when the logarithmic transformation was applied; (2) atmospheric dispersion theory suggests a predominantly linear relationship between wind speed and pollutant dilution in urban environments; and (3) the presence of zero values (calm conditions) in the dataset would require artificial adjustments for logarithmic transformation, potentially introducing bias. The larger absolute t-statistic for wind speed in its linear form (−23.59 versus −19.25 for the logarithmic form) further confirmed that this representation better captured its relationship with PM2.5 concentrations.

This mixed transformation approach, applying logarithmic transformation selectively where appropriate while maintaining linear relationships for wind parameters, produced the most statistically robust and physically interpretable model specification.
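The comparison between linear and logarithmic wind-speed specifications can be illustrated with a simplified, single-predictor sketch. The study compared full multivariate models; here only wind speed is used, and the function names and the `offset` added before taking logarithms (needed because calm hours have zero wind speed) are assumptions for illustration.

```python
import numpy as np


def r_squared(x, y):
    """R^2 of a simple least-squares line y ~ a + b*x (squared Pearson r)."""
    r = np.corrcoef(x, y)[0, 1]
    return float(r * r)


def compare_wind_specs(wind_speed, log_pm, offset=0.5):
    """Compare linear and log(wind + offset) specifications for one predictor.

    `offset` is the kind of artificial adjustment required by calm (zero)
    observations; its value here is arbitrary.
    """
    wind_speed = np.asarray(wind_speed, dtype=float)
    log_pm = np.asarray(log_pm, dtype=float)
    return {
        "linear": r_squared(wind_speed, log_pm),
        "log": r_squared(np.log(wind_speed + offset), log_pm),
        "n_calm": int((wind_speed == 0).sum()),
    }
```

The result for the logarithmic form depends on the chosen offset, which illustrates why calm conditions complicate that specification.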

Diagnostic plots of this model revealed an approximately normal distribution of residuals, although with some tendency toward underestimation at extreme PM2.5 values. The scatter plot of predicted versus actual log(PM2.5) values (Fig 7) demonstrated the model’s ability to capture general trends while also highlighting the inherent variability not explained by meteorological factors alone. The concentration of points around the central region (approximately 2.5–3.5 log(PM2.5)) demonstrates the model’s tendency toward regression to the mean, with some underestimation of high concentration events and overestimation of low values. This pattern is typical for regression models addressing complex environmental phenomena and reflects the challenge of capturing extreme pollution events using meteorological variables alone.

Fig 7. Scatter plot of predicted versus actual log-transformed PM2.5 concentrations from the logarithmic regression model.

The histogram in Fig 8 displays the distribution of model residuals, showing an approximately normal pattern centered around zero. This distribution suggests that the logarithmic transformation successfully addressed much of the non-linearity in the relationships between PM2.5 and meteorological parameters. The slight positive skew in the right tail indicates some systematic underestimation of higher pollution events, potentially related to episodic emission sources or complex atmospheric conditions not fully captured by the included predictors.

Fig 8. Distribution of residuals from the logarithmic regression model of PM2.5 concentrations.

Fig 9 presents boxplots, which summarize the distribution of PM2.5 concentrations within each bin of a meteorological parameter. The rectangular box represents the interquartile range (IQR), encompassing the central 50% of observations between the 25th and 75th percentiles. The horizontal line within the box indicates the median value. Vertical “whiskers” show the data’s spread, typically extending to the most extreme observations lying within 1.5 times the IQR of the box edges. Individual points beyond the whiskers are outliers, that is, observations that deviate markedly from the bulk of the distribution.

Fig 9. Distribution of PM2.5 Concentrations Across Meteorological Parameters: (a) air temperature, (b) relative humidity, (c) wind speed, and (d) wind direction.

Boxplots display the median (central line), interquartile range (box), distribution (whiskers), and outliers (circles) of PM2.5 concentrations within each categorical bin. Wind directions indicate the direction from which the wind blows.
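The quantities drawn in each boxplot can be computed directly. The sketch below assumes the common 1.5 × IQR whisker convention and half-open bins; the function names and the bin edges passed by the caller are illustrative rather than taken from the study.

```python
import numpy as np


def box_stats(values):
    """Median, quartiles, whisker ends, and outliers (1.5 * IQR convention)."""
    v = np.asarray(values, dtype=float)
    v = v[~np.isnan(v)]
    q1, med, q3 = np.percentile(v, [25, 50, 75])
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr

    # Whiskers end at the most extreme observations inside the fences.
    inside = v[(v >= lo_fence) & (v <= hi_fence)]
    outliers = np.sort(v[(v < lo_fence) | (v > hi_fence)])
    return {
        "q1": float(q1), "median": float(med), "q3": float(q3),
        "whisker_low": float(inside.min()), "whisker_high": float(inside.max()),
        "outliers": outliers.tolist(),
    }


def binned_box_stats(x, pm, edges):
    """Boxplot statistics of `pm` within bins of `x`: edges[i-1] <= x < edges[i]."""
    x = np.asarray(x, dtype=float)
    pm = np.asarray(pm, dtype=float)
    idx = np.digitize(x, edges)
    out = {}
    for i in range(1, len(edges)):
        sel = pm[idx == i]
        sel = sel[~np.isnan(sel)]
        if sel.size:  # skip empty bins
            out[(edges[i - 1], edges[i])] = box_stats(sel)
    return out
```

For example, `binned_box_stats(wind_speed, pm25, [0, 2, 4, 6, 8, 10])` would return one set of statistics per 2 m/s wind-speed class, where `wind_speed` and `pm25` are hypothetical arrays of hourly values.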

The figure illustrates the distribution of PM2.5 concentrations across four meteorological parameters: air temperature, relative humidity, wind speed, and wind direction. These boxplots reveal nuanced relationships between environmental conditions and particulate matter concentrations.

Temperature analysis (Fig 9a) demonstrates a non-linear relationship between PM2.5 levels and air temperature. Notably, extremely low temperatures (between –25 and –20°C) correspond to elevated particulate concentrations. As temperatures rise to 0–10°C, a significant reduction in PM2.5 levels occurs, potentially attributable to decreased emission intensity and altered atmospheric dynamics. A moderate increase in concentrations is observed at temperatures above 25°C, which may result from enhanced photochemical reactions and specific particulate matter dispersion characteristics.

Relative humidity distribution (Fig 9b) reveals a pronounced trend of increasing PM2.5 concentrations with humidity elevation. The 20–50% humidity range exhibits relatively low particulate levels, while concentrations substantially increase at 80–100% humidity. This pattern likely stems from complex interactions involving particle deposition, atmospheric transformation processes, and meteorological conditions affecting pollutant dispersal.

Wind speed analysis (Fig 9c) illustrates a classic pattern of pollutant dispersion. Minimal wind speeds (0–2 m/s) correspond to maximum PM2.5 concentrations, indicative of air stagnation and pollutant accumulation. A sharp concentration decline occurs as wind speeds increase to 4–6 m/s, demonstrating effective atmospheric mixing and particle transport. Concentrations stabilize at low levels when wind speeds exceed 8 m/s, highlighting the wind regime’s critical role in atmospheric pollution dynamics.

Wind direction distribution (Fig 9d) shows how particulate concentrations vary with the direction of air flow. The highest median concentrations are observed with northerly (N, NNE) and southerly (S, SSE) winds, potentially reflecting emission source locations or local topographical influences. Westerly winds (W, WNW) correspond to relatively lower concentrations. However, the wide spread of values across all directions underscores the complex, multifactorial nature of atmospheric particulate transport.