Abstract

This work synthesizes and updates findings from previous systematic reviews and meta-analyses on open-label placebos (OLPs). For the first time, it directly tests whether OLP effects differ between clinical and non-clinical samples, and between self-report and objective outcomes. We searched eight databases up to November 9, 2023, and included 60 randomized controlled trials (RCTs), comprising 63 separate comparisons. OLPs yielded a small positive effect across various health-related outcomes (k = 63, n = 4554, SMD = 0.35, CI 95% = 0.26; 0.44, p < 0.0001, I2 = 53%). The effect differed between clinical (k = 24, n = 1383, SMD = 0.47) and non-clinical samples (k = 39, n = 3171, SMD = 0.29; Q = 4.25, p < 0.05), as well as between self-reported (k = 55, n = 3919, SMD = 0.39) and objective outcomes (k = 17, n = 1250, SMD = 0.09; Q = 7.24, p < 0.01). Neither the level of suggestiveness nor the type of control moderated the effect. These findings confirm that OLPs are effective for both clinical and non-clinical samples, particularly when effects are assessed via self-reports.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-025-14895-z.

Keywords: Open-label placebo, Objective outcome, Meta-analysis, Systematic-review

Subject terms: Psychology, Medical research, Outcomes research

Introduction

The placebo effect is a well-known psychological phenomenon that can lead to significant improvements in both clinical and non-clinical populations for self-report outcomes (e.g., self-rated questionnaires) and objective outcomes (e.g., physiological or behavioral variables). Placebos have been shown to have beneficial effects on health-related outcomes and conditions such as allergies, anxiety, Alzheimer’s disease, Parkinson’s disease, depression, fatigue, and pain, as well as on physical performance and various physiological systems1–3. However, traditional placebos, which involve deceiving recipients about their treatment, raise ethical concerns, which limits their applicability in clinical and general practice2. An alternative to deceptive placebos is the use of open-label placebos (OLPs), where recipients are informed transparently that they are receiving a placebo with no pharmacologically active ingredients4. Since the pioneering OLP trial by Kaptchuk et al.5, numerous studies have explored various aspects of OLPs6.

Previous meta-analyses have demonstrated beneficial effects of OLP interventions, particularly in clinical populations and for self-report outcomes. While Spille et al.7 found moderate OLP effects in non-clinical samples for self-reports, Charlesworth et al.8 and von Wernsdorff et al.9 reported moderate to large effects of OLPs in clinical samples. Buergler et al.10 conducted the first network meta-analyses in the field, examining how OLP effects vary depending on treatment expectation, comparator, administration route, and population. Their findings suggest that OLPs are generally more effective in clinical than non-clinical samples, that positive treatment expectations play a key role, and that the type of comparator influences effect sizes, whereas the route of administration had no substantial impact.

These findings highlight the need to consider both the population type (clinical vs. non-clinical) and the form of outcome (self-report vs. objective) when evaluating the effectiveness of OLPs. However, none of the existing meta-analyses statistically integrated or directly compared clinical and non-clinical samples, nor did they analyze differences between self-report and objective outcomes. To date, no meta-analysis has quantified the overall effect of OLPs across both population type and outcome forms in a single model.

A key concept in OLP research is the induction of treatment expectations through verbal suggestions in OLP interventions11. Most OLP interventions are accompanied by a standardized rationale originally developed by Kaptchuk et al.12, which typically includes the following four suggestive elements: (1) the placebo effect is powerful, (2) the body responds automatically to placebos, (3) a positive attitude towards placebos is not necessary, and (4) adherence to the placebo regimen is important. Initial meta-analytic evidence suggests that OLPs delivered with such a suggestive rationale tend to be more effective than those without it. For example, Buergler et al.10 found that OLP interventions with a suggestive rationale were more effective than those without, though the available data were limited. Spille et al.7 reported that suggestiveness influenced objective outcomes in non-clinical populations but had no clear effect on self-reports. Taken together, these findings suggest that building expectations is a key factor in OLP effectiveness, but it remains unclear when and for which outcomes suggestive rationales actually improve treatment effects.

The type of control condition plays a crucial role in determining the observed effect size of OLP interventions. This is well established in psychotherapy research, where comparisons to waiting list (WL) control groups often yield larger effect sizes than comparisons to no treatment (NT)13. Buergler et al.10 found that OLPs in clinical samples were more effective than NT and WL, but not more effective than treatment as usual (TAU). In non-clinical samples, Spille et al. introduced the concept of covert placebo (CP)7, a condition where participants receive the same treatment as in the OLP group, but with a rationale designed to divert attention away from placebo-related expectations (e.g., participants are given a technical explanation for receiving the placebo treatment). Unlike deceptive placebos, CPs aim to avoid creating specific expectations in recipients about the treatment’s outcome. CPs were proposed as a potential alternative to NT, aiming to minimize expectation effects without deception. However, the limited data available from Spille et al. necessitate a reevaluation of their findings7.

In summary, to address these unresolved questions, we conducted a systematic review and meta-analysis that builds on and extends previous work in the field7,9,10,14. We derived the following research questions (RQs): First, do OLP interventions offer greater benefits compared to control groups across various outcomes and populations (RQ1)? Second, do these benefits differ between clinical and non-clinical populations (RQ2)? Third, do these benefits differ between self-report and objective outcomes (RQ3)? Fourth, does the level of expectation induction through suggestion influence the OLP effect (RQ4)? Fifth, do effect sizes vary based on the type of control group used (RQ5)?

Methods

This systematic review and meta-analysis utilized the same databases and search terms as Spille et al.7 and von Wernsdorff et al.9. The study report adhered to the revised Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 statement15 (Supplemental digital appendix A1). We pre-registered the study with PROSPERO (CRD42023421961) prior to data collection.

Eligibility criteria

We included randomized controlled trials (RCTs) that investigated the effects of OLPs on various health-related outcomes in both clinical and non-clinical populations. The OLP intervention could include any form of substance without a pharmacologically active ingredient, such as placebo pills, capsules, nasal sprays, dermatological creams, injections, acupuncture, or verbal suggestions16. It was essential that the OLP was administered transparently, with recipients fully informed that they were receiving a placebo6,16. OLPs had to be compared with one of the following control groups: CP, NT, TAU, or WL. We included only trials reporting outcomes on a continuous scale, whether self-report (e.g., self-rated questionnaire) or objective measures (i.e., physiological or behavioral variables). In accordance with the Cochrane Handbook for Systematic Reviews17, we included crossover trials only if data from the initial phase of the trial (i.e., prior to the crossover) were available. If these data were not reported, we contacted the authors. If the authors could not provide the data or did not respond, we excluded the trial from the analyses.

Information sources and search strategy

This study builds upon and updates two previous systematic reviews on open-label placebos7,9, which served as primary sources and starting points for identifying eligible studies. In addition, we conducted a comprehensive systematic database search on November 9, 2023, including EMBASE via Elsevier, MEDLINE via PubMed, APA PsycINFO and PSYNDEX Literature with PSYNDEX Tests via EBSCO, the Web of Science Core Collection, and the most recent edition of the Cochrane Central Register of Controlled Trials (CENTRAL, The Cochrane Library, Wiley). We used search terms identical to those employed by von Wernsdorff et al.9 and Spille et al.7, focusing on variants of ‘open-label placebo’ (e.g., ‘placebo’, ‘open-label’, ‘non-blind’, and ‘without deception’). The number of hits for each search term in these databases is provided in Supplemental digital appendix A2. We restricted our search to publications from January 2020 onward (i.e., the search date of von Wernsdorff et al., 2021). For non-clinical samples, we included trials published from April 15, 2021 (i.e., the search date of Spille et al., 2023). Additionally, we screened all entries in the Journal of Interdisciplinary Placebo Studies database (JIPS, https://jips.online/) from January 2020, as well as the complete database of the Program in Placebo Studies & Therapeutic Encounter (PiPS, http://programinplacebostudies.org/), using keywords in the publication titles (e.g., ‘analgesia’, ‘expectation’, ‘non-deceptive’, ‘open-label’, ‘placebo’, ‘suggestion’). No restrictions were applied regarding the language of publication or the age of participants.

Selection process

Results from the literature databases, including hits from the JIPS and PiPS databases, were exported into the systematic review management software Rayyan18. Duplicates were removed using Rayyan’s semi-automated duplicate-detection feature. Two researchers (C.T. and P.S.) independently assessed study eligibility. First, they screened all titles and abstracts, followed by a full-text assessment of reports deemed potentially eligible in the first stage by one of the investigators (see Supplemental digital appendix A3 for excluded full-texts with reasons for exclusion). Disagreements were resolved through discussion, with the supervision of two additional researchers (J.C.F. and S.S.).

Data items and collection process

Two researchers (C.T. and P.S.) independently extracted data from the included studies in a standardized Excel form, which was piloted with three records. Data extraction covered the following areas: study details (i.e., author, year, title), sample characteristics (i.e., type of population, population size, distribution of participants), intervention and control groups (e.g., pill, cream, spray, duration), outcomes (i.e., baseline, post-intervention or change scores), and outcome form (i.e., self-report or objective). We extracted data for the outcome defined as primary outcome in the respective report. If a primary outcome was not specified, we extracted all outcomes related to the OLP intervention to minimize bias from selective outcome choice based on effect size and hypothesis fit19. In trials where baseline outcome scores were unavailable prior to experimental exposure, we extracted post-intervention values only.

Participants were classified into clinical populations if they had a medical condition (e.g., allergic rhinitis, cancer-related fatigue, chronic low back pain, menopausal hot flashes) or a mental disorder (e.g., major depressive disorder), as diagnosed by a clinician or psychologist20. Those classified as non-clinical populations were generally healthy individuals. Subclinical traits, such as test anxiety or low levels of well-being, did not qualify participants for the clinical population and were therefore categorized as non-clinical. The degree of suggestiveness of the treatment rationale was independently evaluated by two researchers (C.T. and P.S.) based on the description of the OLP administration. Rationales of OLP interventions featuring one or more elements from Kaptchuk et al. (2010, see Introduction)12 were rated as having a ‘high’ degree of suggestiveness, while those lacking elements of suggestive expectation induction were rated as having a ‘low’ degree of suggestiveness. In studies with different treatment rationales across separate intervention groups, both groups were extracted as distinct trials and coded accordingly as OLP+ (‘high’ degree of suggestiveness) and OLP− (‘low’ degree of suggestiveness). To ensure comparability across clinical trials, all control groups (i.e., NT, TAU, WL, and CP) were checked for compliance with given definitions of comparators21. Thus, NT refers to a group in which no alternative treatment is provided, while TAU included access to standard treatment practices for the condition. If participants were offered an OLP intervention following the OLP group, the control group was labeled as a WL group. For non-clinical trials, NT refers to no intervention, and CP involved the same physical treatment as the OLP group, but with a rationale designed to avoid creating specific expectations regarding the outcome7. Missing values were addressed by contacting the authors. If the authors did not respond or could not provide the data, the study was excluded. All extracted data were cross-checked using Excel’s data validation feature. Discrepancies were resolved through discussion and consensus, supervised by two additional researchers (J.C.F. and S.S.).

The study at hand is an extended update of the reviews by von Wernsdorff et al.9 and by Spille et al.7. Therefore, one reviewer (C.T.) re-evaluated and extracted data from the studies included in these reviews. In cases of discrepancies regarding selection and extraction due to methodological differences (e.g., von Wernsdorff et al.9 extracted consistently post-intervention values only), a second reviewer (P.S.) independently re-assessed these reports. In cases where changes were necessary, both reviewers (C.T. and P.S.) independently extracted any additional data. The same data cross-checking process, as illustrated above, was applied to ensure accuracy.

Risk of bias assessment

We assessed the risk of bias of the included trials using the Cochrane Risk of Bias tool (RoB 2.0)22. The RoB 2 assesses bias arising from the randomization process (domain 1), deviations from intended interventions (domain 2), missing outcome data (domain 3), measurement of the outcome (domain 4), and selection of the reported result (domain 5)22. The results of the five domains are aggregated into an overall risk of bias rating, which is equivalent to the worst rating in any of the domains. In line with the previous meta-analyses on OLPs7,9,10, we applied the same special rules to the RoB 2 to account for the unblinded nature of OLPs. When a rating of ‘high’ risk of bias in domain 4 resulted solely from signaling question 4.5 (‘Is it likely that the assessment of the outcome was influenced by knowledge of the intervention received?’), we overrode the suggestion of the algorithm for this domain and labeled it with ‘some concerns’. Since eliciting treatment expectation is a crucial mode of action of OLPs, the effect of knowing about the group allocation cannot be separated from the placebo or nocebo effect (i.e., excitement or disappointment, respectively)9. Moreover, we would have lost all variance in the assessment, as all studies would have received a ‘high’ overall rating as a consequence. The RoB 2 for the newly included studies was assessed by two researchers independently (C.T. and P.S.), with discrepancies resolved through discussion and consensus. The results from the risk of bias assessments of the studies included in previous versions of the review by Spille et al. (2023)7 and von Wernsdorff et al. (2021)9 and the present one are presented in the Supplemental digital appendix A4.
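The aggregation rule described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the function name, the domain labels D1 to D5, and the boolean flag for signaling question 4.5 are hypothetical and are not part of the RoB 2 tool or its official algorithm.

```python
# Illustrative only: names and labels are assumptions, not part of RoB 2.
RATING_ORDER = {"low": 0, "some concerns": 1, "high": 2}


def overall_rob(domains, d4_high_only_from_q45=False):
    """Return the overall rating as the worst rating across the domains.

    domains: dict mapping "D1".."D5" to "low", "some concerns", or "high".
    d4_high_only_from_q45: True if domain 4 is 'high' solely because of
    signaling question 4.5; domain 4 is then relabeled 'some concerns'.
    """
    ratings = dict(domains)  # copy so the caller's dict is not modified
    if d4_high_only_from_q45 and ratings.get("D4") == "high":
        ratings["D4"] = "some concerns"
    return max(ratings.values(), key=RATING_ORDER.__getitem__)


example = {"D1": "low", "D2": "low", "D3": "low", "D4": "high", "D5": "low"}
print(overall_rob(example, d4_high_only_from_q45=True))  # some concerns
print(overall_rob(example))                              # high
```

Without the override, any unblinded trial with self-reported outcomes would end up with a ‘high’ overall rating, which is the loss of variance the special rule is meant to avoid.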

Data synthesis and analysis

Statistical analyses were performed using R, version 4.5.0 (ref. 23). To evaluate the effects of the OLP interventions, we calculated standardized mean differences (SMDs) by subtracting the mean pre-post change in the intervention group from the mean pre-post change in the control group and dividing the result by the pooled pre-intervention standard deviation24. For studies that reported only post scores, SMDs were calculated based on these values. Standard errors of the means were converted into SDs following the guidelines outlined in the Cochrane Handbook17. We used Hedges’ g, which corrects for bias due to small sample sizes25. We interpreted values of 0.20, 0.50, and 0.80 as small, moderate, and large effects, respectively26. Whenever a primary outcome was clearly defined in a study, this outcome was used for effect size calculation. In studies without a defined primary outcome27–51, we computed SMDs for all relevant outcomes (whether self-report or objective) individually and then averaged them to obtain an overall SMD estimate for the respective trial52. This was done with the aggregate function from the metafor package in R53, under the assumption of an intra-study correlation coefficient of ρ = 0.6 (ref. 54). In studies where the type of administration of the OLP intervention varied (e.g., one group received OLP nasal spray and another intervention group received OLP pills)29,38,43,55,56, the mean pre- and post-values along with their SDs were aggregated prior to data analysis, in accordance with the guidelines outlined in the Cochrane Handbook17. We aggregated the intervention groups of these trials as follows: for Barnes et al.56, we combined the ‘Semi-Open Label’ and ‘Fully-Open Label’ intervention groups; for El Brihi et al.34, we combined the groups receiving one and four OLP pills per day; for Kube et al.38, we aggregated the ‘OLP-H’ (hope) and ‘OLP-E’ (expectation) groups; for Olliges et al.43, we combined the ‘OLP pain’ and ‘OLP mood’ groups; and for Winkler et al.29, we merged the ‘OLP nasal active’, ‘OLP nasal passive’, and ‘OLP capsule’ groups. In studies where the OLP rationale was manipulated across two independent samples32,42,57, the SMDs were calculated separately for each intervention group (OLP+ and OLP−, respectively) and treated as two distinct trials. To prevent unit-of-analysis error due to double counting, the control group data was divided in half for each intervention group in these cases17. Similarly, if a trial contributed to more than one comparison (i.e., assessing both self-report and objective outcomes)27,31,36,38,42,49,58, the sample size was divided by the number of comparisons to prevent unit-of-analysis error due to double counting. To ensure consistent interpretation of the direction of effects, where a positive SMD value indicates a beneficial effect of the OLP intervention for recipients, the means of some studies were multiplied by −1, as outlined in the Cochrane Handbook17.
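The effect size computation can be illustrated with a minimal Python sketch. It is not the code used in the analysis, which relied on R; all function names and input numbers are hypothetical. The small-sample correction uses the common approximation J = 1 − 3/(4·df − 1), and the composite uses a simple unweighted average of correlated outcomes, which may differ from how metafor's aggregate function weights outcomes.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def hedges_correction(n1, n2):
    """Approximate small-sample correction J = 1 - 3 / (4 * df - 1)."""
    df = n1 + n2 - 2
    return 1 - 3 / (4 * df - 1)


def change_score_g(change_olp, change_ctrl, sd_pre_olp, n_olp,
                   sd_pre_ctrl, n_ctrl, sign=1):
    """Bias-corrected SMD of pre-post changes, scaled by the pooled pre SD.

    sign=-1 flips the direction so that positive values favor the OLP
    group when lower scores mean improvement.
    """
    d = (change_olp - change_ctrl) / pooled_sd(sd_pre_olp, n_olp,
                                               sd_pre_ctrl, n_ctrl)
    return sign * hedges_correction(n_olp, n_ctrl) * d


def composite(effects, variances, rho=0.6):
    """Unweighted mean of correlated effects and the variance of that mean,
    assuming a common correlation rho between all outcome pairs."""
    m = len(effects)
    var_sum = sum(variances)
    for i in range(m):
        for j in range(m):
            if i != j:
                var_sum += rho * math.sqrt(variances[i] * variances[j])
    return sum(effects) / m, var_sum / m**2


# Hypothetical trial: symptom score where lower is better, 30 per group.
g = change_score_g(-4.0, -1.0, 6.0, 30, 6.0, 30, sign=-1)
print(round(g, 3))  # 0.494

# Hypothetical trial with two outcomes and no defined primary outcome.
print(composite([0.2, 0.4], [0.04, 0.04]))
```

With ρ = 0.6, the variance of the composite is larger than it would be for independent outcomes, so a trial reporting many correlated outcomes does not gain undue weight.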

After aggregating all effect sizes within studies, we conducted a meta-analysis using the meta package in R59. Anticipating heterogeneity among trials, we employed a random-effects model with the inverse-variance weighting method60. We weighted the trials based on post-intervention sample sizes, which are more conservative than pre-intervention sample sizes. All tests were two-tailed. Heterogeneity among studies was assessed using the Q-statistic and quantified with the I2 index as well as prediction intervals. The I2 values indicate the percentage of total variance between effects that is due to true effect variation and are interpreted as follows: 0 to 40% might not be important; 30 to 60% may represent moderate heterogeneity; 50 to 90% may represent substantial heterogeneity; and 75 to 100% is considered to constitute considerable heterogeneity17. The prediction intervals indicate true effect variation and represent the range in which the true effect sizes of comparable populations are expected to fall61. If the prediction interval lies entirely on the positive side (i.e., does not include zero), favoring the OLP intervention, it suggests that, despite variations in effect sizes, the OLP is likely to be beneficial; however, it is important to note that broad prediction intervals are relatively common and reflect inherent variability in the data54.
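The quantities named above (pooled effect, Q, I2, τ, and the prediction interval) can be sketched in plain Python. This is a simplified illustration, not the analysis code: it assumes the DerSimonian-Laird estimator for τ², which the text does not specify, and it uses a normal quantile for the prediction interval where meta-analysis software typically uses a t quantile. The function name and the input values are hypothetical.

```python
import math
from statistics import NormalDist


def random_effects(effects, variances, level=0.95):
    """Inverse-variance random-effects pooling (DerSimonian-Laird tau^2)."""
    k = len(effects)
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)           # between-study variance
    i2 = max(0.0, (q - (k - 1)) / q) * 100 if q > 0 else 0.0
    w_re = [1 / (v + tau2) for v in variances]   # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)    # normal quantile (assumption)
    half_pi = z * math.sqrt(tau2 + se**2)
    return {
        "pooled": pooled,
        "ci": (pooled - z * se, pooled + z * se),
        "q": q,
        "i2": i2,
        "tau": math.sqrt(tau2),
        "pi": (pooled - half_pi, pooled + half_pi),
    }


# Hypothetical effect sizes and sampling variances for three trials.
result = random_effects([0.1, 0.5, 0.9], [0.01, 0.01, 0.01])
for key, value in result.items():
    print(key, value)
```

Because the prediction interval adds τ² to the squared standard error of the pooled effect, it can include zero even when the confidence interval does not, which is the pattern reported for several analyses in this review.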

Sensitivity analysis

We conducted four sensitivity analyses to examine the robustness of results. First, we excluded outliers using the “non-overlapping confidence intervals” approach, which regards a comparison as an outlier if the 95% confidence interval of its effect size does not overlap with the 95% confidence interval of the pooled effect size54. Second, we excluded studies rated as having a ‘high’ risk of bias according to the RoB 2 assessment. We chose the criterion ‘high’ because almost all studies were rated at least ‘some concerns’ due to the lack of blinding of the OLP interventions and the use of self-reported outcomes. Third, we considered potential publication bias using Duval and Tweedie’s trim and fill procedure, which provides an estimate of the pooled effect size after adjusting for asymmetry in the funnel plot62. Fourth, we estimated the overall OLP effect using a hierarchical three-level meta-analytic model, with effect sizes nested in studies54.
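The outlier rule from the first sensitivity analysis reduces to a simple interval comparison. The Python sketch below uses the pooled 95% confidence interval reported for the main analysis (0.26 to 0.44); the three study intervals and the function name are hypothetical.

```python
def is_outlier(study_ci, pooled_ci):
    """True if the study CI lies entirely above or below the pooled CI."""
    study_lo, study_hi = study_ci
    pooled_lo, pooled_hi = pooled_ci
    return study_hi < pooled_lo or study_lo > pooled_hi


pooled = (0.26, 0.44)  # pooled 95% CI of the main analysis
studies = {            # hypothetical study-level 95% CIs
    "A": (0.50, 1.20),
    "B": (-0.10, 0.60),
    "C": (-0.90, 0.10),
}
flagged = [name for name, ci in studies.items() if is_outlier(ci, pooled)]
print(flagged)  # ['A', 'C']
```

Under this rule, imprecise studies with wide intervals are rarely flagged, whereas precise studies with extreme estimates are.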

Reporting bias assessment and certainty assessment

We assessed publication bias by creating a funnel plot, which plots effect estimates (SMDs) from individual studies against their standard error (SE). We visually inspected the funnel plot for asymmetry, conducted Egger’s regression test, which regresses the SMDs against their SE63, and calculated Rosenthal’s Fail-Safe-N64.
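Both statistics can be sketched with the Python standard library. The sketch assumes a weighted least-squares formulation of Egger’s test (SMD regressed on SE with weights 1/SE²) and Rosenthal’s formula with a one-tailed α of 0.05; it returns only the t statistic, because the p-value requires a t distribution that the standard library does not provide. Function names and data are hypothetical, and the results are not expected to match the output of the R packages used in the analysis exactly.

```python
import math
from statistics import NormalDist


def egger_test(effects, ses):
    """Weighted regression of effect sizes on their standard errors.

    Returns (slope, t); a slope far from zero suggests funnel asymmetry.
    """
    w = [1 / s**2 for s in ses]
    sw = sum(w)
    x_bar = sum(wi * s for wi, s in zip(w, ses)) / sw
    y_bar = sum(wi * y for wi, y in zip(w, effects)) / sw
    sxx = sum(wi * (s - x_bar) ** 2 for wi, s in zip(w, ses))
    sxy = sum(wi * (s - x_bar) * (y - y_bar)
              for wi, s, y in zip(w, ses, effects))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    rss = sum(wi * (y - intercept - slope * s) ** 2
              for wi, s, y in zip(w, ses, effects))
    se_slope = math.sqrt(rss / (len(effects) - 2) / sxx)
    return slope, slope / se_slope


def rosenthal_failsafe_n(effects, ses, alpha=0.05):
    """Number of null studies needed to make the combined z non-significant."""
    z = [y / s for y, s in zip(effects, ses)]
    z_crit = NormalDist().inv_cdf(1 - alpha)  # one-tailed critical value
    return sum(z) ** 2 / z_crit**2 - len(z)


# Hypothetical effect sizes and standard errors for five trials.
effects = [0.10, 0.35, 0.30, 0.55, 0.60]
ses = [0.08, 0.12, 0.15, 0.22, 0.30]
print(egger_test(effects, ses))
print(rosenthal_failsafe_n(effects, ses))
```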

Results

Trial selection

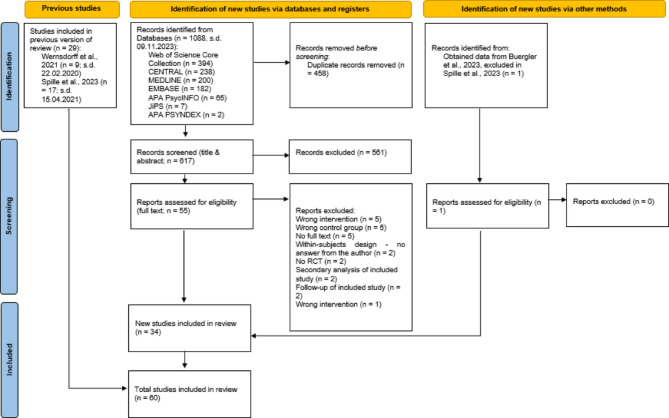

We included 60 RCTs with 63 separate comparisons. Of these, 9 trials were from von Wernsdorff et al.9, 17 from Spille et al.7, and 34 were identified in our updated search (for a flow chart see Fig. 1). The chance-corrected agreement between raters after the full-text screening in the updated search was substantial (κ = 0.78)65. We further applied a set of additional selection and exclusion steps based on eligibility criteria, data availability, trial design (e.g., follow-ups, crossover studies), and control group definitions; a detailed list of these decisions is provided in Supplemental digital appendix A5.

Fig. 1.

PRISMA flow diagram for study selection. In four cases, the individual article reported data from two independent experiments each, which were considered as two distinct studies.

Trial characteristics

Detailed qualitative characteristics of included trials are presented in Supplemental digital appendix A6, and detailed numerical characteristics are presented in Supplemental digital appendix A7. The trials were published in English between 2001 and 2023. The 60 RCTs (37 non-clinical and 23 clinical trials) involved a total of 4648 participants, with 2492 individuals randomized to an OLP intervention and 2156 individuals randomized to control groups. The mean age of participants weighted for the pre-intervention sample size was 30.5 years (SD = 8.7) with an age range from 14 to 70 years. The weighted mean percentage of females was 72%. Sample sizes ranged from 9 to 133 individuals. The duration of the trials ranged from 1 to 90 days (Mean/SD = 12.4/16.6, Median = 7). Studies were conducted in Germany29,31,38–41,43–47,49,57,66,67,78–84, the USA12,35,37,69,76,85–93, Australia32,34,51,56,94, Austria28,48,58,95–97, Switzerland42,50,55,98, Israel99, Japan27, the Netherlands89, New Zealand30, Portugal33, and the United Kingdom36.

Non-clinical trials investigated OLP effects on experimentally induced emotional distress of various types, including distressing pictures35,58,67,95, experimentally induced sadness78–80, guilt98, intrusive memories40, and distress related to social exclusion50; experimentally induced pain38,42,83,90; physical and mental well-being32,34,44; arousal and well-being31,49; psychological distress29; physiological processes such as nausea56, wound healing30, experimentally induced itch89, sleep quality28, caffeine withdrawal symptoms94, and physiological recovery51; cognitive performance36,82; test anxiety45,55; promoting beneficial behaviors such as Progressive Muscle Relaxation96 and Acts of Kindness97; and experimentally induced acute stress46.

Clinical trials investigated OLP effects on allergic rhinitis39,41,47,57,84, chronic low-back pain27,33,81,85, and major depressive disorder48,87,99. Additionally, trials focused on cancer-related fatigue37,91,92, irritable bowel syndrome12,69,88, the impact of OLPs on knee osteoarthritis pain43, and the reduction of experimentally induced emotional distress in women diagnosed with major depressive disorder66. Trials also included a conditioning OLP paradigm, of which two investigated the reduction of opioid medication after surgery76,93, and one on opioid use disorder86.

The type of OLP administration varied across the primary trials: 33 trials used OLP pills, 11 trials used active or passive nasal sprays (i.e., with or without a prickling sensation), four trials administered the OLP in the form of drops, three as a dermal cream, three as a decaffeinated coffee, and one each in the form of imaginary pills, oral spray, oral vapor, saline injection, sham acupuncture, sham deep brain stimulation, syrup, and verbal suggestion. Seven trials used CP as control group, 38 trials NT, six trials TAU, and nine trials WL. Three trials applied a conditioning design.

Regarding the OLP rationale, 52 trials used a treatment rationale with ‘high’ levels of suggestiveness, five trials used a treatment rationale without suggestive elements and three trials manipulated the treatment rationale, resulting in one intervention group with suggestion (OLP+) and one without (OLP−). In total, 84 self-report and 61 objective outcomes were extracted from non-clinical trials, and 29 self-report and five objective outcomes from clinical trials. The chance-corrected agreement between raters regarding the outcomes to be extracted was substantial (κ = 0.61)65.

Risk of bias in studies

Supplemental digital appendix A4 presents the risk of bias assessment. In the updated review, nine trials27–29,85,86,91,93,94,96 (26%) were rated as having a high risk of bias. Eight of these trials had missing outcome data27,28,85,86,91,93,94,96, while one trial29 had concerns about the randomization process and one trial failed to analyze the data according to intention-to-treat96. Twenty-four trials (71%) were rated as having a moderate risk of bias or ‘some concerns’. This was primarily because the majority of the trials collected self-report data, which could be influenced by participants’ knowledge of their group allocation. In the overall sample, 12 primary trials (20%) were rated as having a ‘high’ risk of bias, 46 trials (77%) had ‘some concerns’, and two trials (3%) had a ‘low’ risk of bias.

Effects of OLPs

Table 1 summarizes the results of the main, sensitivity, and subgroup analyses. Figure 2 displays the forest plot for the main analysis of OLPs. We analyzed 63 comparisons across 60 trials, including three trials that manipulated treatment rationales in two distinct intervention groups (OLP + and OLP−)32,42,57. The meta-analysis revealed a small but significant positive effect of OLP interventions compared to control groups (k = 63, n = 4554, SMD = 0.35, CI 95% = 0.26; 0.44, p < 0.0001; I2 = 53%), with moderate heterogeneity between trials (RQ1). The prediction interval ranged from − 0.17 to 0.87, indicating that while the average effect favors OLPs, true effects in future settings could vary and may include no effect. However, the sensitivity analyses produced similar results, demonstrating the robustness of the results.

Table 1.

Effects of open-label placebos (OLPs). Main, sensitivity analyses, and subgroup analyses.

| Analyses | k | Nᵃ | g | CI_lb | CI_ub | I2 | I2_lb | I2_ub | τ | PI_lb | PI_ub | p | Q | p_sg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Main and sensitivity analyses | | | | | | | | | | | | | | |
| All comparisons | 63 | 4554 | 0.35 | 0.26 | 0.44 | 52.64 | 36.85 | 64.49 | 0.25 | − 0.17 | 0.87 | **** | | |
| Outliers excluded | 56 | 3938 | 0.40 | 0.33 | 0.46 | 4.45 | 0.00 | 29.81 | 0.05 | 0.27 | 0.52 | **** | | |
| High risk excluded | 51 | 3651 | 0.32 | 0.22 | 0.42 | 53.82 | 36.55 | 66.39 | 0.26 | − 0.21 | 0.86 | **** | | |
| Adjusted for publication bias | 71 | 5012 | 0.28 | 0.18 | 0.37 | 61.26 | 49.88 | 70.05 | 0.31 | − 0.34 | 0.90 | **** | | |
| 3-Level Model | 179 | 4554ᵇ | 0.33 | 0.23 | 0.43 | 56.38 | – | – | 0.32 | − 0.40 | 1.06 | **** | | |
| Subgroup analyses | | | | | | | | | | | | | | |
| Population | | | | | | | | | | | | | | |
| Clinical | 24 | 1383 | 0.47 | 0.36 | 0.57 | 0.00 | 0.00 | 44.61 | 0.00 | 0.35 | 0.58 | **** | 4.25 | * |
| Non-clinical | 39 | 3171 | 0.29 | 0.17 | 0.42 | 63.41 | 48.46 | 74.02 | 0.30 | − 0.33 | 0.91 | **** | 39 | |
| Form of outcomeᶜ | | | | | | | | | | | | | | |
| Self-report | 55 | 3919 | 0.39 | 0.30 | 0.49 | 50.78 | 32.83 | 63.93 | 0.25 | − 0.11 | 0.90 | **** | 7.24 | ** |
| Objective | 17 | 1250 | 0.09 | − 0.12 | 0.29 | 66.33 | 43.91 | 79.79 | 0.33 | − 0.65 | 0.83 | | | |
| Suggestiveness | | | | | | | | | | | | | | |
| High | 55 | 4131 | 0.38 | 0.30 | 0.47 | 42.20 | 20.15 | 58.16 | 0.20 | − 0.03 | 0.80 | **** | 2.20 | |
| Low | 8 | 423 | 0.11 | − 0.25 | 0.46 | 70.31 | 38.40 | 85.69 | 0.42 | − 0.97 | 1.19 | | | |
| Type control | | | | | | | | | | | | | | |
| NT | 41 | 2967 | 0.29 | 0.17 | 0.41 | 61.09 | 45.36 | 72.29 | 0.30 | − 0.34 | 0.91 | **** | 5.43 | |
| WL | 9 | 517 | 0.41 | 0.24 | 0.59 | 0.00 | 0.00 | 64.80 | 0.00 | 0.21 | 0.62 | **** | | |
| CP | 7 | 666 | 0.50 | 0.34 | 0.65 | 0.00 | 0.00 | 70.81 | 0.00 | 0.30 | 0.69 | **** | | |
| TAU | 6 | 404 | 0.50 | 0.27 | 0.73 | 17.85 | 0.00 | 62.65 | 0.12 | 0.06 | 0.94 | **** | | |

ᵃ Sample sizes reflect post-intervention or change-score Ns used for variance estimation for meta-analytic weighting, and may therefore differ from baseline sample sizes reported in text.

ᵇ For the 3-Level Model, n refers to the total number of unique participants included across all studies, although multiple effect sizes per study were modeled.

ᶜ Study count k and number of participants n in the form of outcome subgroups do not sum to the overall total of all comparisons, as some studies included both self-report and objective outcomes. Adjusted sample sizes were used in subgroup comparisons to correct for unit-of-analysis issues in cases where such overlap occurred.

k number of studies, CI confidence interval, lb lower bound of 95% CI, ub upper bound of 95% CI, PI prediction interval, sg subgroup, NT no treatment, TAU treatment as usual, CP covert placebo, WL waiting list.

*p < 0.05; **p < 0.01; ***p < 0.001; ****p < 0.0001.

Fig. 2.

Forest plot of the effects of open-label placebos vs. control conditions on all outcomes. Studies were weighted using the inverse-variance method. The size of the grey squares indicates the weight of each study, while the whiskers represent the 95% confidence intervals. The overall SMD is shown as a black diamond. In trials employing and comparing several treatment rationales, intervention groups receiving a suggestive treatment rationale are denoted by author names and year followed by a ‘+’, whereas those receiving a rationale without suggestion are denoted by author names and year followed by a ‘−’.

OLP interventions had a small significant effect in both clinical samples (k = 24, n = 1383, SMD = 0.47, CI 95% = 0.36; 0.57, p < 0.0001; I2 = 0%) and non-clinical samples (k = 39, n = 3171, SMD = 0.29, CI 95% = 0.17; 0.42, p < 0.0001; I2 = 63%), with lower heterogeneity in clinical samples. The effectiveness differed significantly between clinical and non-clinical samples (Q = 4.25, p < 0.05, RQ2). The prediction interval for clinical samples did not include zero (0.35 to 0.58), suggesting that future studies are consistently likely to show a positive effect. In contrast, the prediction interval for non-clinical samples ranged from − 0.33 to 0.91, indicating that effects may vary more widely and could include no effect in some settings.
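As a rough plausibility check, the between-subgroup Q can be approximated from the rounded estimates and confidence intervals reported above. The Python sketch below assumes normal-based 95% confidence intervals and two independent subgroups; the function names are hypothetical. With the published, rounded values it yields a Q of roughly 4.7 rather than the reported 4.25, a gap that is within what rounding the inputs to two decimals can produce and that may also reflect the interval method used by the software.

```python
import math
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)


def se_from_ci(lower, upper):
    """Back out a standard error from a normal-based 95% CI (assumption)."""
    return (upper - lower) / (2 * Z95)


def q_between_two(est1, ci1, est2, ci2):
    """Between-subgroup Q for two subgroups and its chi-square p-value (1 df)."""
    var_sum = se_from_ci(*ci1) ** 2 + se_from_ci(*ci2) ** 2
    q = (est1 - est2) ** 2 / var_sum
    # For 1 df, the chi-square tail equals the two-sided normal tail of sqrt(q).
    p = 2 * (1 - NormalDist().cdf(math.sqrt(q)))
    return q, p


# Reported (rounded) subgroup results: clinical vs. non-clinical samples.
q, p = q_between_two(0.47, (0.36, 0.57), 0.29, (0.17, 0.42))
print(round(q, 2), round(p, 3))
```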

OLP interventions significantly improved self-report outcomes (k = 55, n = 3919, SMD = 0.39, CI 95% = 0.30; 0.49, p < 0.0001; I2 = 51%) but showed no significant effect on objective outcomes (k = 17, n = 1250, SMD = 0.09, CI 95% = −0.12; 0.29, p = 0.41; I2 = 66%), with moderate to substantial heterogeneity. The difference in effectiveness between self-report and objective outcomes was significant (Q = 7.24, p < 0.01, RQ3). The prediction interval for self-report outcomes ranged from −0.11 to 0.90, suggesting the possibility of no effect in future studies. For objective outcomes, the interval ranged from −0.65 to 0.83, clearly encompassing zero, indicating high uncertainty and that OLPs may not consistently affect objective measures.
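
To illustrate how a prediction interval differs from a confidence interval, the sketch below uses the common formula for a random-effects prediction interval, pooled estimate ± t(k − 2) × sqrt(τ² + SE²). The between-study variance τ² is not reported in this section, so the value used here is a hypothetical one, chosen only so that the result lands near the interval reported for objective outcomes. The standard error is back-calculated from the reported confidence interval under a normal approximation, which is also an assumption.

```python
import math


def prediction_interval(mu, se, tau2, t_crit):
    """95% prediction interval for the effect in a new study.

    mu     : pooled effect estimate
    se     : standard error of the pooled estimate
    tau2   : between-study variance
    t_crit : 0.975 quantile of the t distribution with k - 2 degrees of freedom
    """
    half_width = t_crit * math.sqrt(tau2 + se ** 2)
    return mu - half_width, mu + half_width


# Objective outcomes as reported: SMD = 0.09, 95% CI -0.12 to 0.29, k = 17
mu = 0.09
se = (0.29 - (-0.12)) / (2 * 1.96)  # assumption: normal-based CI
tau2 = 0.33 ** 2                    # HYPOTHETICAL value, not reported in the text
t_crit = 2.131                      # t quantile (0.975) for df = 17 - 2 = 15

low, high = prediction_interval(mu, se, tau2, t_crit)
print(f"Prediction interval: {low:.2f} to {high:.2f}")
```

Because τ² enters the formula directly, a prediction interval can span zero even when the pooled estimate is measured precisely; this is why the intervals in heterogeneous subgroups are so much wider than the corresponding confidence intervals.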

Trials with a highly suggestive treatment rationale showed a small significant OLP effect (k = 55, n = 4131, SMD = 0.38, CI 95% = 0.30; 0.47, p < 0.0001; I2 = 42%), whereas trials with low suggestiveness did not (k = 8, n = 423, SMD = 0.11, CI 95% = −0.25; 0.46, p = 0.55; I2 = 70%). However, the difference between these subgroups based on suggestiveness was not significant (Q = 2.20, p = 0.14, RQ4). The prediction interval for high suggestiveness (−0.03 to 0.80) slightly included zero, indicating small remaining uncertainty about whether effects will consistently be positive. For low suggestiveness, the interval was wider (−0.97 to 1.19), suggesting high variability and low confidence in consistent benefit.

Finally, small to medium significant effects of OLPs were observed across all types of control groups (Table 1). The difference between control subgroups was not significant (Q = 5.43, p = 0.14, RQ5). Prediction intervals varied between control types: they included zero for no-treatment, but did not for treatment-as-usual (0.06 to 0.94), waitlist (0.21 to 0.62), and covert placebo (0.30 to 0.69), suggesting more robust and consistent effects of OLPs in those contexts.

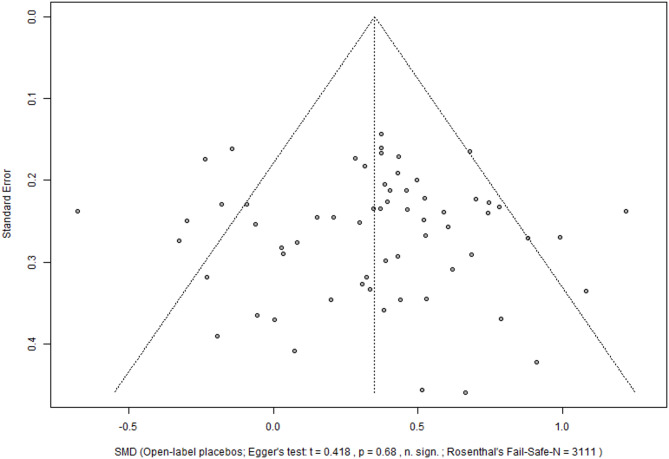

Reporting bias

Visual inspection of the funnel plot (Fig. 3) and Egger’s regression showed no evidence of publication bias for the main meta-analysis across outcomes and samples (t = 0.42, p = 0.68). The fail-safe N was 3111 studies.
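
For readers unfamiliar with Egger’s regression, the sketch below shows the classical form of the test: each study's standardized effect (SMD divided by its standard error) is regressed on its precision (1 divided by the standard error), and the intercept is tested against zero. The effect sizes and standard errors are invented toy values, not data from the included trials, and the plain least-squares implementation is an illustration rather than the routine used in the original analysis.

```python
import math


def egger_test(effects, std_errors):
    """Classical Egger regression for funnel plot asymmetry.

    Regresses the standardized effect (effect / SE) on precision (1 / SE)
    by ordinary least squares and returns (intercept, t statistic of the
    intercept). The t statistic has n - 2 degrees of freedom.
    """
    x = [1.0 / se for se in std_errors]
    y = [d / se for d, se in zip(effects, std_errors)]
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    resid_var = sse / (n - 2)
    se_intercept = math.sqrt(resid_var * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, intercept / se_intercept


# Toy data (hypothetical): SMDs and standard errors of six imaginary trials
toy_smds = [0.33, 0.40, 0.30, 0.42, 0.28, 0.45]
toy_ses = [0.10, 0.15, 0.20, 0.25, 0.30, 0.35]
intercept, t_stat = egger_test(toy_smds, toy_ses)
print(f"Egger intercept = {intercept:.2f}, t = {t_stat:.2f}")
```

An intercept close to zero, as in the result reported above, indicates that small and large studies scatter symmetrically around the pooled effect.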

Fig. 3.

Funnel plot of meta-analysis across populations and outcomes. The funnel plot plots the effect estimates (SMDs) from individual studies against their standard error. SMD Standardized mean difference.

Discussion

This systematic review and meta-analysis updates and synthesizes findings from four previous meta-analyses of randomized controlled trials (RCTs) on open-label placebos (OLPs)7–10. With the inclusion of 34 additional trials, our review includes a total of 60 trials with 4648 participants and is substantially larger than previous meta-analyses. This expansion allowed for novel statistical comparisons, offering deeper insights into OLP effects. For the first time, the review facilitated direct comparisons between self-report and objective outcomes and synthesized data from clinical and non-clinical samples. The review encompasses various OLP interventions, evaluates different outcomes, and compares these interventions across diverse control groups and sample types.

The results indicate that OLP interventions produce a small but clinically relevant positive effect across a wide range of health-related outcomes compared to control groups (SMD = 0.35; RQ1). In addition, OLPs had a small effect in both clinical and non-clinical samples, with a significant difference in effectiveness between these populations (RQ2). OLPs improved self-report outcomes but had no significant effect on objective outcomes, with a significant difference between the two (RQ3). OLPs were effective when the treatment rationale was highly suggestive (i.e., inducing treatment expectations) but not when it was not, though the difference between these groups was not significant (RQ4). Lastly, OLPs exhibited small to medium significant effects against all kinds of control groups, with no significant differences between these control subgroups (RQ5). The overall heterogeneity was moderate in the primary analysis, but decreased substantially when excluding outliers, focusing solely on clinical samples, or comparing OLPs to TAU, covert placebos or waiting list controls. Consistently, prediction intervals in these subsets did not include zero, suggesting more reliable and generalizable effects in those conditions despite remaining variability elsewhere.

To our knowledge, this systematic review and meta-analysis is the most comprehensive synopsis of OLP research to date. The overall positive effect of OLPs (RQ1) aligns with previous meta-analyses7–10, confirming with extensive data that placebos are effective even when recipients are aware they are receiving a treatment that is not pharmacologically active4. This finding suggests that OLPs can serve as a viable alternative to deceiving participants, at least in certain clinical and research settings2.

Although OLP interventions benefit both clinical and non-clinical samples, they affect these groups differently (RQ2). Our meta-analysis shows a larger effect in clinical samples (SMD = 0.47) compared to non-clinical samples (SMD = 0.29). This difference likely arises from the greater need for relief in clinical samples due to disease-related impairments, which provides a larger potential for improvement101,102. For example, Barnes et al.32 found in a non-clinical sample larger OLP effects on wellbeing only for participants whose baseline scores were below the average, suggesting that higher distress levels might enhance OLP effects. Additionally, clinical trials lasted longer than non-clinical trials, with a mean duration of 23.5 days (SD = 21.6) compared to 5.5 days (SD = 6.2) for non-clinical samples. This extended duration may allow patients to develop a stronger connection to the healing ritual aspects of OLPs compared to non-clinical samples6. It may also leave more time for the intervention to unfold. The results for clinical samples align with findings of Buergler et al.10, who reported an almost identical effect for OLP pills compared to NT (SMD = 0.46). This effect is somewhat smaller than those suggested by earlier meta-analyses on OLPs in clinical samples (SMD = 0.88 in Charlesworth et al.8 and SMD = 0.72 in von Wernsdorff et al.9). However, the earlier meta-analyses were based on a relatively small body of evidence with small sample sizes. The reduction in magnitude of the effect sizes may indicate a time-lag bias, where significant results were more likely to be reported early in OLP research, while studies with non-significant results were more likely to be published later10. Similarly, earlier studies may have had lower methodological quality. A sensitivity analysis excluding trials with a high risk of bias in von Wernsdorff et al. revealed a reduced effect size of SMD = 0.49, which is very close to our finding9.

The beneficial effects of OLP interventions in non-clinical samples (SMD = 0.29) are smaller than in clinical samples. They are also smaller than those in the prior meta-analysis by Spille et al.7 and than the significant findings for studies applying nasal spray in non-clinical samples reported by Buergler et al.10. This may be due to our pooling of studies with self-report and objective outcomes, which were analyzed separately in Spille et al. (2023). With respect to Buergler et al. (2023), methodological differences may also account for these variations. For example, they excluded trials with balanced-placebo designs as well as those with CP as a control group10, while our analysis included such trials (see eligibility criteria). In addition, they included non-randomized trials74,75, which we excluded. Moreover, Buergler et al. (2023) used a different classification for clinical and non-clinical samples10, based on the investigated states/conditions rather than on the health status of the participants/patients. They therefore classified some trials as clinical that were considered non-clinical by our approach34,45,82,96. Lastly, Buergler et al. included studies with deceptive placebo control groups10, which were excluded in our analysis. These methodological differences resulted in fewer trials in the non-clinical network compared to our analysis (12 trials in Buergler et al.10 vs. 37 in the present analysis).

OLPs appear to affect self-report and objective outcomes differently (RQ3). While OLPs have a beneficial effect on self-report outcomes across both clinical and non-clinical populations, they show no significant effect on objective outcomes. Despite increasing the number of trials (from 17 to 55 comparisons for self-report outcomes and from eight to 17 comparisons for objective outcomes), the effect sizes remain broadly consistent with those reported by Spille et al. (2023) (self-report SMDs: 0.43 vs. 0.39; objective SMDs: −0.02 vs. 0.09). The results suggest that OLPs have a positive impact on individuals’ perceptions of their health, but that there is currently no meta-analytical evidence that they also alter biological or behavioral variables. This finding corresponds to meta-analytical findings on deceptive placebos in clinical trials, in which patient-reported outcomes also demonstrate larger effect sizes (SMD = 0.26) than observer-rated outcomes (SMD = 0.13), although there the latter are significant103. The non-significant SMD of 0.09 in the present meta-analysis may indicate either that OLPs have no effect on objective outcomes or that there are currently too few studies to reveal a significant effect.

In the history of placebo research, deceptive placebos were initially thought to be merely suggestive to the patient, with no corresponding biological effect. This picture changed when the endogenous opioid system was identified as one biological mechanism of placebo analgesia104,105. Today, there is a large body of literature that clearly demonstrates the biological mechanisms and effects of deceptive placebos106. Whether the research on OLPs will take the same avenue remains to be seen. To date, to our knowledge, there is only one experimental study demonstrating a biological mechanism of OLP analgesia107, as well as a few fMRI studies on healthy volunteers reporting neurobiological changes67,95,108. Our finding that the effect size for objective measures of OLPs is not significant, unlike the one for deceptive placebos, highlights the need for further investigations into the psychological and biological mechanisms behind OLP effects. As more trials become available in the future, we will be able to break down the category of objective outcomes by type of variable (e.g. biological, behavioral, etc.) and time-span (e.g. short-term vs. long-term). Of particular interest are also the variables suggestion and expectation, especially with regard to interpersonal differences. Another fruitful focus could be to understand OLPs as a healing ritual, in particular addressing its embodied aspects109–111.

The degree of suggestiveness in the treatment rationale accompanying the administration of OLPs appeared to influence their effectiveness, although the difference between the subgroups was not statistically significant (RQ4). Trials with a suggestive rationale that aimed to build treatment expectations showed a small positive effect (SMD = 0.38), while those without such a rationale did not demonstrate significant effects (SMD = 0.11). These results align with Buergler et al. (2023), emphasizing that building expectancy is crucial for OLP effectiveness and that merely administering an inert treatment is not sufficient10. This supports the prevailing view that OLP effects are based on the elicitation of treatment expectations through a suggestive rationale6,112.

Regarding the impact of different types of control groups on OLP effectiveness (RQ5), we compared OLP interventions against four types of comparators: 41 used no treatment controls (NT), nine used waiting list (WL) controls, seven used a covert placebo (CP), and six used treatment as usual (TAU). The inferential analysis revealed no significant differences (p = 0.14) among these control groups, indicating that OLPs yield beneficial effects across all control types. However, as “absence of evidence is not evidence of absence”113, these findings should be interpreted carefully. Moreover, these findings somewhat contrast with Buergler et al., who concluded that OLPs performed better than ‘nothing’ but only slightly better than ‘something’ and that comparator groups should be chosen carefully10. As for population types, methodological differences between our study and Buergler et al.10 may account for these discrepancies in results (see above).

The presented systematic review and meta-analysis has several strengths. First, it adhered to rigorous methodology, including a pre-registered search protocol and data synthesis, with two independent researchers assessing eligibility, extracting data, and evaluating risk of bias. Second, it incorporated 23 additional RCTs compared to the most recent previous meta-analysis of OLPs10, thereby strengthening the robustness of the results. Third, we directly compared differences in the effectiveness of OLPs between self-report and objective outcomes as well as between clinical and non-clinical samples and these differences yielded valuable insights. Finally, we conducted multiple sensitivity analyses, which confirmed the robustness of the main findings and reinforced observed trends in OLP effects.

Several limitations should be considered when interpreting the results. First, most of the trials included in the review had relatively small sample sizes (i.e., fewer than 100 participants). As already noted by Buergler et al., this limitation raises the possibility of a so-called ‘small study effect’10, where smaller trials often report larger treatment effects than larger trials63,114,115. Second, we combined effect sizes across different populations (e.g., across various clinical conditions) and forms of outcomes (e.g., self-report vs. objective), which could bias the observed OLP effect, as various populations and outcomes may respond differently to OLP administration. In a similar vein, we independently aggregated multiple treatment arms with varying degrees of suggestiveness in the treatment rationale (i.e. OLP+ and OLP−) and analyzed these arms separately in the analysis. This approach may skew effect sizes due to over-representation and inter-correlation of the outcomes and treatment arms in question. However, we tried to avoid over-representation in terms of unit-of-analysis error due to double counting by splitting the sample sizes of the respective control groups. Moreover, we tried to model the potential dependency of multiple effect size estimates for one sample by conducting a sensitivity analysis using a hierarchical three-level meta-analytic model, with effect sizes nested in studies, leading to comparable results for the main analysis. Third, we did not perform a GRADE rating, because we consider the GRADE system to be suboptimal for use in our OLP review. In particular, we see the inability to blind patients/participants to the intervention, which affects the risk of bias rating, and the inclusion of a wide variety of outcomes in our review as problematic with respect to GRADE.
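
The control-group splitting mentioned above can be sketched as follows. When one control group serves as comparator for two OLP arms (for example OLP+ and OLP−), its sample size is divided between the comparisons so that no control participant is counted twice. This is a minimal illustration with invented numbers: the helper names are hypothetical, and the plain post-test Hedges' g used here is an assumption that may differ from the effect size estimator applied in the actual analysis.

```python
import math


def split_control(n_control, n_arms):
    """Divide a shared control group's sample size across n_arms comparisons."""
    base, extra = divmod(n_control, n_arms)
    return [base + (1 if i < extra else 0) for i in range(n_arms)]


def hedges_g(m_t, sd_t, n_t, m_c, sd_c, n_c):
    """Bias-corrected standardized mean difference and its sampling variance."""
    df = n_t + n_c - 2
    sd_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (m_t - m_c) / sd_pooled
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    g = j * d
    var_g = j ** 2 * ((n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c)))
    return g, var_g


# Hypothetical trial: two OLP arms share one control group of 41 participants
n_ctrl_plus, n_ctrl_minus = split_control(41, 2)

# Means and SDs are invented; the control mean and SD stay unchanged, only n is split
g_plus, var_plus = hedges_g(6.0, 2.0, 40, 5.0, 2.0, n_ctrl_plus)
g_minus, var_minus = hedges_g(5.4, 2.0, 40, 5.0, 2.0, n_ctrl_minus)
print(n_ctrl_plus, n_ctrl_minus)
print(f"OLP+: g = {g_plus:.2f} (variance {var_plus:.3f})")
print(f"OLP-: g = {g_minus:.2f} (variance {var_minus:.3f})")
```

Splitting leaves the point estimates essentially unchanged but inflates their variances, so each comparison from a multi-arm trial receives less weight in the pooled analysis than it would if the full control group were reused.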

Our systematic review has identified several research gaps. First, trials with larger sample sizes are essential to enhance the robustness of the findings. Second, longer study durations are required, as most trials in non-clinical populations lasted only one day, and as long-term follow-up trials on OLP interventions in clinical samples have yielded mixed results72,73. This raises questions about the durability of OLP effects and underscores the need for further research on long-term effects in both clinical and non-clinical settings. Third, to increase the validity of the results, future trials should include more representative samples. Non-clinical trials, in particular, often included younger and predominantly female participants compared to clinical populations. Fourth, as OLPs affect self-report and objective outcomes differently, future research should put a stronger focus on objective outcomes, particularly in clinical settings. Finally, this systematic review and meta-analysis included several trials published by the same research groups, indicating the need for research teams with less allegiance to OLPs to mitigate potential bias.

In summary, the results of the presented systematic review and meta-analysis indicate several key findings: (1) OLP interventions demonstrate a small but significant positive overall benefit across various populations and outcomes in a larger sample of studies. (2) OLPs are more effective in clinical than in non-clinical samples. (3) OLPs are effective on self-report measures but their effect is unclear for objective measures. This finding raises important research questions about their biological and psychological mechanisms, particularly in comparison to deceptive placebos. (4) The suggestiveness of the treatment rationale in OLP interventions appears to be important, as trials lacking suggestive elements did not yield significant beneficial effects. (5) Different comparator groups produced similar estimates of OLP effectiveness.

Acknowledgements

We thank all the authors of the studies included in this systematic review and meta-analysis for their valuable contributions. We would like to thank Ted Kaptchuk for his valuable support in retrieving additional data and for his critical comments on our manuscript.

Author contributions

J.C.F. contributed to the conceptualization of the study and shared project administration responsibilities with S.S. He supervised the study’s execution, developed methodological considerations, and conducted the final formal analysis. Additionally, he wrote the original draft based on C.T.’s master’s thesis. C.T. co-conceptualized the study, was responsible for the literature search, screening and data extraction, curated the data, and contributed to the methodological development. He provided the foundation for the original draft with his master’s thesis, performed the initial data analysis, played a key role in the formal analysis, and was responsible for reviewing and editing the manuscript. P.S. served as a co-reviewer and, together with C.T., conducted the screening process of the studies and assisted in data curation. He contributed to the methodology and edited the final version of the paper. J.G. supervised the project throughout its various phases and reviewed and edited the manuscript. S.S. conceived the idea for the study and shared project administration with J.C.F. He was responsible for the study’s conceptualization, contributed to the methodology, supervised the various phases, and reviewed and edited the final version of the draft.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Data availability

All data generated or analysed during this study are either included in this published article and its supplementary information file or have been deposited at https://osf.io/pxzcg/.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Benedetti, F. Mechanisms of placebo and placebo-related effects across diseases and treatments. Annu. Rev. Pharmacol. Toxicol. 48, 33–60 (2008).

- 2. Evers, A. W. M. et al. Implications of placebo and nocebo effects for clinical practice: expert consensus. Psychother. Psychosom. 87, 204–210 (2018).

- 3. Finniss, D. G., Kaptchuk, T. J., Miller, F. & Benedetti, F. Biological, clinical, and ethical advances of placebo effects. Lancet 375, 686–695 (2010).

- 4. Evers, A. W. M. et al. What should clinicians tell patients about placebo and nocebo effects? Practical considerations based on expert consensus. Psychother. Psychosom. 90, 49–56 (2021).

- 5. Kaptchuk, T. J., Friedlander, E., Kelley, J. M., Sanchez, M. N., Kokkotou, E., Singer, J. P., Kowalczykowski, M., Miller, F. G., Kirsch, I. & Lembo, A. J. Placebos without deception: A randomized controlled trial in irritable bowel syndrome. PLoS One 5, e15591 (2010).

- 6. Kaptchuk, T. J. Open-label placebo: reflections on a research agenda. Perspect. Biol. Med. 61, 311–334 (2018).

- 7. Spille, L., Fendel, J. C., Seuling, P. D., Göritz, A. S. & Schmidt, S. Open-label placebos—a systematic review and meta-analysis of experimental studies with non-clinical samples. Sci. Rep. 13, 3640 (2023).

- 8. Charlesworth, J. E. G. et al. Effects of placebos without deception compared with no treatment: A systematic review and meta-analysis. J. Evid. Based Med. 10, 97–107 (2017).

- 9. von Wernsdorff, M., Loef, M., Tuschen-Caffier, B. & Schmidt, S. Effects of open-label placebos in clinical trials: A systematic review and meta-analysis. Sci. Rep. 11, 3855 (2021).

- 10. Buergler, S., Sezer, D., Gaab, J. & Locher, C. The roles of expectation, comparator, administration route, and population in open-label placebo effects: A network meta-analysis. Sci. Rep. 13, 11827 (2023).

- 11. Colloca, L. & Miller, F. G. How placebo responses are formed: A learning perspective. Philos. Trans. R. Soc. B 366, 1859–1869 (2011).

- 12. Kaptchuk, T. J. et al. Placebos without deception: A randomized controlled trial in irritable bowel syndrome. PLoS One 5, e15591 (2010).

- 13. Michopoulos, I. et al. Different control conditions can produce different effect estimates in psychotherapy trials for depression. J. Clin. Epidemiol. 132, 59–70 (2021).

- 14. Charlesworth, J. E. G. et al. Effects of placebos without deception compared with no treatment: A systematic review and meta-analysis. J. Evid. Based Med. 10, 97–107 (2017).

- 15. Page, M. J. et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Int. J. Surg. 88, 105906 (2021).

- 16. Blease, C., Colloca, L. & Kaptchuk, T. J. Are open-label placebos ethical? Informed consent and ethical equivocations. Bioethics 30, 407–414 (2016).

- 17. Higgins, J. P. T. et al. Cochrane Handbook for Systematic Reviews of Interventions (Wiley, 2019).

- 18. Ouzzani, M., Hammady, H., Fedorowicz, Z. & Elmagarmid, A. Rayyan—a web and mobile app for systematic reviews. Syst. Rev. 5, 210 (2016).

- 19. López-López, J. A., Page, M. J., Lipsey, M. W. & Higgins, J. P. T. Dealing with effect size multiplicity in systematic reviews and meta-analyses. Res. Synth. Methods 9, 336–351 (2018).

- 20. Rothman, K. J., Greenland, S. & Lash, T. L. Modern Epidemiology (Wolters Kluwer Health-Lippincott Williams & Wilkins, 2008).

- 21. Mohr, D. C. et al. The selection and design of control conditions for randomized controlled trials of psychological interventions. Psychother. Psychosom. 78, 275–284 (2009).

- 22. Sterne, J. A. C. et al. RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ 366, l4898 (2019).

- 23. R Core Team. R: A language and environment for statistical computing. (2021). https://www.R-project.org/

- 24. Morris, S. B. Estimating effect sizes from pretest-posttest-control group designs. Org. Res. Methods 11, 364–386 (2008).

- 25. Borenstein, M. Effect sizes for continuous data. In The Handbook of Research Synthesis and Meta-analysis 2nd edn 221–235 (Russell Sage Foundation, 2009).

- 26. Cohen, J. Statistical power analysis. Curr. Dir. Psychol. Sci. 1, 98–101 (1992).

- 27. Ikemoto, T. et al. Open-label placebo trial among Japanese patients with chronic low back pain. Pain Res. Manag. 2020, e6636979 (2020).

- 28. Potthoff, J. & Schienle, A. Effects of (non)deceptive placebos on reported sleep quality and food cue reactivity. J. Sleep Res. 10.1111/jsr.13947 (2023).

- 29. Winkler, A., Hahn, A. & Hermann, C. The impact of pharmaceutical form and simulated side effects in an open-label-placebo RCT for improving psychological distress in highly stressed students. Sci. Rep. 13, 6367 (2023).

- 30. Mathur, A., Jarrett, P., Broadbent, E. & Petrie, K. J. Open-label placebos for wound healing: A randomized controlled trial. Ann. Behav. Med. 52, 902–908 (2018).

- 31. Walach, H., Schmidt, S., Bihr, Y. M. & Wiesch, S. The effects of a caffeine placebo and experimenter expectation on blood pressure, heart rate, well-being, and cognitive performance. Eur. Psychol. 6, 15–25 (2001).

- 32. Barnes, K., Babbage, E., Barker, J., Jain, N. & Faasse, K. The role of positive information provision in open-label placebo effects. Appl. Psychol. Health Well Being 10.1111/aphw.12444 (2023).

- 33. Carvalho, C. et al. Open-label placebo treatment in chronic low back pain: A randomized controlled trial. Pain 157, 2766–2772 (2016).

- 34. El Brihi, J., Horne, R. & Faasse, K. Prescribing placebos: an experimental examination of the role of dose, expectancies, and adherence in open-label placebo effects. Ann. Behav. Med. 53, 16–28 (2019).

- 35. Guevarra, D. A., Moser, J. S., Wager, T. D. & Kross, E. Placebos without deception reduce self-report and neural measures of emotional distress. Nat. Commun. 11, 3785 (2020).

- 36. Heller, M. K., Chapman, S. C. E. & Horne, R. Beliefs about medicines predict side-effects of placebo modafinil. Ann. Behav. Med. 56, 989–1001 (2022).

- 37. Hoenemeyer, T. W., Kaptchuk, T. J., Mehta, T. S. & Fontaine, K. R. Open-label placebo treatment for cancer-related fatigue: A randomized-controlled clinical trial. Sci. Rep. 8, 2784 (2018).

- 38. Kube, T. et al. Deceptive and nondeceptive placebos to reduce pain: an experimental study in healthy individuals. Clin. J. Pain 36, 68–79 (2020).

- 39. Kube, T., Hofmann, V. E., Glombiewski, J. A. & Kirsch, I. Providing open-label placebos remotely—a randomized controlled trial in allergic rhinitis. PLoS One 16, (2021).

- 40. Kube, T., Kirsch, I., Glombiewski, J. A. & Herzog, P. Can placebos reduce intrusive memories? Behav. Res. Ther. 158, (2022).

- 41. Kube, T., Kirsch, I., Glombiewski, J. A., Witthöft, M. & Bräscher, A. K. Remotely provided open-label placebo reduces frequency of and impairment by allergic symptoms. Psychosom. Med. 84, 997–1005 (2022).

- 42. Locher, C. et al. Is the rationale more important than deception? A randomized controlled trial of open-label placebo analgesia. Pain 158, 2320–2328 (2017).

- 43. Olliges, E. et al. Open-label placebo administration decreases pain in elderly patients with symptomatic knee osteoarthritis – a randomized controlled trial. Front. Psychiatry 13, (2022).

- 44. Rathschlag, M. & Klatt, S. Open-label placebo interventions with drinking water and their influence on perceived physical and mental well-being. Front. Psychol. 12, 658275 (2021).

- 45. Schaefer, M. et al. Open-label placebos reduce test anxiety and improve self-management skills: A randomized-controlled trial. Sci. Rep. 9, 13317 (2019).

- 46. Schaefer, M., Hellmann-Regen, J. & Enge, S. Effects of open-label placebos on state anxiety and glucocorticoid stress responses. Brain Sci. 11, 508 (2021).

- 47. Schaefer, M., Zimmermann, K. & Enck, P. A randomized controlled trial of effects of open-label placebo compared to double-blind placebo and treatment-as-usual on symptoms of allergic rhinitis. Sci. Rep. 13, 8372 (2023).

- 48. Schienle, A. & Jurinec, N. Open-label placebos as adjunctive therapy for patients with depression. Contemp. Clin. Trials Commun. 28, (2022).

- 49. Schneider, R. et al. Effects of expectation and caffeine on arousal, well-being, and reaction time. Int. J. Behav. Med. 13, 330–339 (2006).

- 50. Stumpp, L., Jauch, M., Sezer, D., Gaab, J. & Greifeneder, R. Effects of an open-label placebo intervention on reactions to social exclusion in healthy adults: A randomized controlled trial. Sci. Rep. 13, 15369 (2023).

- 51. Urroz, P., Colagiuri, B., Smith, C. A., Yeung, A. & Cheema, B. S. Effect of acupuncture and instruction on physiological recovery from maximal exercise: A balanced-placebo controlled trial. BMC Complement. Altern. Med. 16, 227 (2016).

- 52. Marín-Martínez, F. & Sánchez-Meca, J. Averaging dependent effect sizes in meta-analysis: A cautionary note about procedures. Span. J. Psychol. 2, 32–38 (1999).

- 53. Viechtbauer, W. Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 36, 1–48 (2010).

- 54. Harrer, M., Cuijpers, P., Furukawa, T. & Ebert, D. Doing Meta-Analysis with R: A Hands-on Guide (Chapman and Hall/CRC, 2021). 10.1201/9781003107347

- 55. Buergler, S. et al. Imaginary pills and open-label placebos can reduce test anxiety by means of placebo mechanisms. Sci. Rep. 13, 2624 (2023).

- 56. Barnes, K., Yu, A., Josupeit, J. & Colagiuri, B. Deceptive but not open label placebos attenuate motion-induced nausea. J. Psychosom. Res. 125, 109808 (2019).

- 57. Schaefer, M., Sahin, T. & Berstecher, B. Why do open-label placebos work? A randomized controlled trial of an open-label placebo induction with and without extended information about the placebo effect in allergic rhinitis. PLoS One 13, e0192758 (2018).

- 58. Schienle, A., Unger, I. & Schwab, D. Changes in neural processing and evaluation of negative facial expressions after administration of an open-label placebo. Sci. Rep. 12, 6577 (2022).

- 59. Balduzzi, S., Rücker, G. & Schwarzer, G. How to perform a meta-analysis with R: A practical tutorial. BMJ Ment. Health 22, 153–160 (2019).

- 60. Marín-Martínez, F. & Sánchez-Meca, J. Weighting by inverse variance or by sample size in random-effects meta-analysis. Educ. Psychol. Meas. 70, 56–73 (2010).

- 61.Borenstein, M., Higgins, J. P. T., Hedges, L. V. & Rothstein, H. R. Basics of meta-analysis: I2 is not an absolute measure of heterogeneity. Res. Synth. Methods. 8, 5–18 (2017). [DOI] [PubMed] [Google Scholar]

- 62.Duval, S. & Tweedie, R. Trim and fill: A simple Funnel-Plot–Based method of testing and adjusting for publication bias in Meta-Analysis. Biometrics56, 455–463 (2000). [DOI] [PubMed] [Google Scholar]

- 63. Page, M. J., Sterne, J. A. C., Higgins, J. P. T. & Egger, M. Investigating and dealing with publication bias and other reporting biases in meta-analyses of health research: A review. Res. Synth. Methods 12, 248–259 (2021).

- 64. Rosenthal, R. The file drawer problem and tolerance for null results. Psychol. Bull. 86, 638–641 (1979).

- 65. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 20, 37–46 (1960).

- 66. Haas, J. W., Rief, W., Glombiewski, J. A., Winkler, A. & Doering, B. K. Expectation-induced placebo effect on acute sadness in women with major depression: an experimental investigation. J. Affect. Disord. 274, 920–928 (2020).

- 67. Schaefer, M., Kühnel, A., Schweitzer, F., Enge, S. & Gärtner, M. Neural underpinnings of open-label placebo effects in emotional distress. Neuropsychopharmacology 48, 560–566 (2023).

- 68. Flowers, K. M. et al. Conditioned open-label placebo for opioid reduction after spine surgery: A randomized controlled trial. Pain 162, 1828–1839 (2021).

- 69. Nurko, S. et al. Effect of open-label placebo on children and adolescents with functional abdominal pain or irritable bowel syndrome: A randomized clinical trial. JAMA Pediatr. 176, 349–356 (2022).

- 70. Braescher, A. K., Ferti, I. E. & Witthöft, M. Open-label placebo effects on psychological and physical well-being: A conceptual replication study. (2022). https://doi.org/10.32872/cpe.7679

- 71. Lee, S., Choi, D. H., Hong, M., Lee, I. S. & Chae, Y. Open-label placebo treatment for experimental pain: A randomized-controlled trial with placebo acupuncture and placebo pills. J. Integr. Complement. Med. 28, 136–145 (2022).

- 72. Carvalho, C. et al. Open-label placebo for chronic low back pain: A 5-year follow-up. Pain 162, 1521–1527 (2021).

- 73. Kleine-Borgmann, J., Dietz, T. N., Schmidt, K. & Bingel, U. No long-term effects after a 3-week open-label placebo treatment for chronic low back pain: A 3-year follow-up of a randomized controlled trial. Pain 164, 645–652 (2023).

- 74. Disley, N., Kola-Palmer, S. & Retzler, C. A comparison of open-label and deceptive placebo analgesia in a healthy sample. J. Psychosom. Res. 140 (2021).

- 75. Haas, J. W., Winkler, A., Rheker, J., Doering, B. K. & Rief, W. No open-label placebo effect in insomnia? Lessons learned from an experimental trial. J. Psychosom. Res. 158 (2022).

- 76. Morales-Quezada, L. et al. Conditioning open-label placebo: a pilot pharmacobehavioral approach for opioid dose reduction and pain control. Pain Rep. 5, e828 (2020).

- 77. De Vita, M. J. et al. The effects of cannabidiol and analgesic expectancies on experimental pain reactivity in healthy adults: A balanced placebo design trial. Exp. Clin. Psychopharmacol. 30, 536–546 (2022).

- 78. Friehs, T., Rief, W., Glombiewski, J. A., Haas, J. & Kube, T. Deceptive and non-deceptive placebos to reduce sadness: A five-armed experimental study. J. Affect. Disord. Rep. 9, 100349 (2022).

- 79. Glombiewski, J. A., Rheker, J., Wittkowski, J., Rebstock, L. & Rief, W. Placebo mechanisms in depression: an experimental investigation of the impact of expectations on sadness in female participants. J. Affect. Disord. 256, 658–667 (2019).

- 80. Hahn, A., Göhler, A. C., Hermann, C. & Winkler, A. Even when you know it is a placebo, you experience less sadness: first evidence from an experimental open-label placebo investigation. J. Affect. Disord. 304, 159–166 (2022).

- 81. Kleine-Borgmann, J., Schmidt, K., Hellmann, A. & Bingel, U. Effects of open-label placebo on pain, functional disability, and spine mobility in patients with chronic back pain: A randomized controlled trial. Pain 160, 2891 (2019).

- 82. Kleine-Borgmann, J. et al. Effects of open-label placebos on test performance and psychological well-being in healthy medical students: A randomized controlled trial. Sci. Rep. 11, 2130 (2021).

- 83. Rief, W. & Glombiewski, J. A. The hidden effects of blinded, placebo-controlled randomized trials: an experimental investigation. Pain 153, 2473–2477 (2012).

- 84. Schaefer, M., Harke, R. & Denke, C. Open-label placebos improve symptoms in allergic rhinitis: A randomized controlled trial. Psychother. Psychosom. 85, 373–374 (2016).

- 85. Ashar, Y. K. et al. Effect of pain reprocessing therapy vs placebo and usual care for patients with chronic back pain: A randomized clinical trial. JAMA Psychiatry 79, 13–23 (2022).

- 86. Belcher, A. M. et al. Effectiveness of conditioned open-label placebo with methadone in treatment of opioid use disorder: A randomized clinical trial. JAMA Netw. Open 6, e237099 (2023).

- 87. Kelley, J. M., Kaptchuk, T. J., Cusin, C., Lipkin, S. & Fava, M. Open-label placebo for major depressive disorder: A pilot randomized controlled trial. Psychother. Psychosom. 81, 312–314 (2012).

- 88. Lembo, A. et al. Open-label placebo vs double-blind placebo for irritable bowel syndrome: A randomized clinical trial. Pain 162, 2428–2435 (2021).

- 89. Meeuwis, S. et al. Placebo effects of open-label verbal suggestions on itch. Acta Derm. Venereol. 98, 268–274 (2018).

- 90. Mundt, J. M., Roditi, D. & Robinson, M. E. A comparison of deceptive and non-deceptive placebo analgesia: efficacy and ethical consequences. Ann. Behav. Med. 51, 307–315 (2017).

- 91. Yennurajalingam, S. et al. Open-label placebo for the treatment of cancer-related fatigue in patients with advanced cancer: A randomized controlled trial. Oncologist 27, 1081–1089 (2022).

- 92. Zhou, E. S. et al. Open-label placebo reduces fatigue in cancer survivors: A randomized trial. Support Care Cancer 27, 2179–2187 (2019).

- 93. Flowers, K. M. et al. Conditioned open-label placebo for opioid reduction after spine surgery: A randomized controlled trial. Pain 162, 1828–1839 (2021).

- 94. Mills, L., Lee, J. C., Boakes, R. & Colagiuri, B. Reduction in caffeine withdrawal after open-label decaffeinated coffee. J. Psychopharmacol. 37, 181–191 (2023).

- 95. Schienle, A., Kogler, W. & Wabnegger, A. A randomized trial that compared brain activity, efficacy and plausibility of open-label placebo treatment and cognitive reappraisal for reducing emotional distress. Sci. Rep. 13, 13998 (2023).

- 96. Schienle, A. & Unger, I. Open-label placebo treatment to improve relaxation training effects in healthy psychology students: A randomized controlled trial. Sci. Rep. 11, 13073 (2021).

- 97. Schienle, A. & Unger, I. Non-deceptive placebos can promote acts of kindness: A randomized controlled trial. 13, (2023).

- 98. Sezer, D., Locher, C. & Gaab, J. Deceptive and open-label placebo effects in experimentally induced guilt: A randomized controlled trial in healthy subjects. Sci. Rep. 12, 21219 (2022).

- 99. Nitzan, U. et al. Open-label placebo for the treatment of unipolar depression: results from a randomized controlled trial. J. Affect. Disord. 276, 707–710 (2020).

- 100. Pan, Y. et al. Open-label placebos for menopausal hot flushes: A randomized controlled trial. Sci. Rep. 10, 20090 (2020).

- 101. Hyland, M. E. Motivation and placebos: do different mechanisms occur in different contexts? Philos. Trans. R. Soc. B: Biol. Sci. 366, 1828–1837 (2011).

- 102. Kaptchuk, T. J. & Miller, F. G. Open label placebo: Can honestly prescribed placebos evoke meaningful therapeutic benefits? BMJ 363, k3889 (2018).

- 103. Hróbjartsson, A. & Gøtzsche, P. C. Placebo interventions for all clinical conditions. Cochrane Database Syst. Rev. https://doi.org/10.1002/14651858.CD003974.pub3 (2010).

- 104. Levine, J. D., Gordon, N. C., Jones, R. T. & Fields, H. L. The narcotic antagonist naloxone enhances clinical pain. Nature 272, 826–827 (1978).

- 105. Benedetti, F. The opposite effects of the opiate antagonist naloxone and the cholecystokinin antagonist proglumide on placebo analgesia. Pain 64, 535–543 (1996).

- 106. Benedetti, F., Carlino, E. & Pollo, A. How placebos change the patient’s brain. Neuropsychopharmacology 36, 339–354 (2011).

- 107. Benedetti, F., Shaibani, A., Arduino, C. & Thoen, W. Open-label nondeceptive placebo analgesia is blocked by the opioid antagonist naloxone. Pain 164, 984–990 (2023).

- 108. Schienle, A. & Wabnegger, A. Neural correlates of expected and perceived treatment efficacy concerning open-label placebos for reducing emotional distress. Brain Res. Bull. 219, 111121 (2024).

- 109. Wilhelm, M. et al. Working with patients’ treatment expectations – what we can learn from homeopathy. Front. Psychol. 15 (2024).

- 110. Kaptchuk, T. J. The placebo effect in alternative medicine: can the performance of a healing ritual have clinical significance? Ann. Intern. Med. 136, 817–825 (2002).

- 111. Schmidt, S. & Walach, H. Making sense in the medical system: placebo, biosemiotics, and the pseudomachine. 5, 195–215 (2016).

- 112. Blease, C. R., Bernstein, M. H. & Locher, C. Open-label placebo clinical trials: is it the rationale, the interaction or the pill? BMJ Evid. Based Med. 25, 159–165 (2020).

- 113. Feres, M. & Feres, M. F. N. Absence of evidence is not evidence of absence. J. Appl. Oral Sci. 31, ed001 (2023).

- 114. Sterling, T. D., Rosenbaum, W. L. & Weinkam, J. J. Publication decisions revisited: the effect of the outcome of statistical tests on the decision to publish and vice versa. Am. Stat. 49, 108–112 (1995).

- 115. Sterne, J. A. C., Gavaghan, D. & Egger, M. Publication and related bias in meta-analysis: power of statistical tests and prevalence in the literature. J. Clin. Epidemiol. 53, 1119–1129 (2000).

Associated Data

Data Availability Statement

All data generated or analysed during this study are either included in this published article and its supplementary information file or have been deposited at https://osf.io/pxzcg/.