Abstract

Background

Artificial intelligence is reshaping cancer care, but little is known about how people with cancer perceive its integration into their care. Understanding these perspectives is essential to ensuring artificial intelligence adoption aligns with patient needs and preferences while supporting a patient-centered approach.

Objective

The aim of this study is to synthesize existing literature on patient attitudes toward artificial intelligence in cancer care and identify knowledge gaps that can inform future research and clinical implementation.

Methods

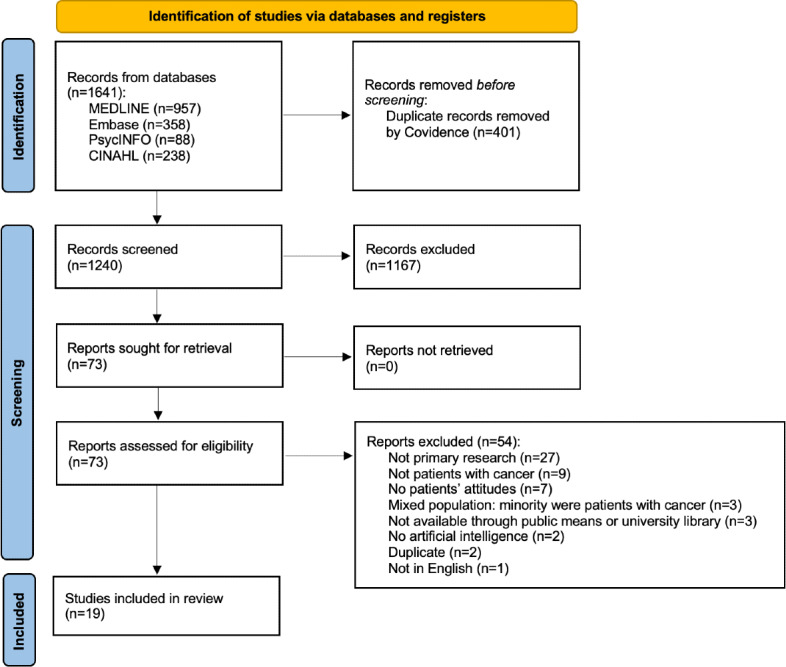

A scoping review was conducted following the PRISMA-ScR (Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews) guidelines. MEDLINE, Embase, PsycINFO, and CINAHL were searched for peer-reviewed primary research studies published until February 1, 2025. The Population-Concept-Context framework guided study selection, focusing on adult patients with cancer and their attitudes toward artificial intelligence. Studies with quantitative or qualitative data were included. Two independent reviewers screened studies, with a third resolving disagreements. Data were synthesized into tabular and narrative summaries.

Results

Our search yielded 1240 citations, of which 19 studies met the inclusion criteria, representing 2114 patients with cancer across 15 countries. Quantitative methods such as questionnaires or surveys were the most common study design (9/19, 47%). The most studied cancers were melanoma (375/2114, 17.7%), prostate (323/2114, 15.3%), breast (263/2114, 12.4%), and colorectal cancer (251/2114, 11.9%). Although patients with cancer generally supported artificial intelligence when used as a physician-guided tool (9/19, 47%), concerns about depersonalization, treatment bias, and data security highlighted challenges in implementation. Trust in artificial intelligence (10/19, 53%) was shaped by physician endorsement and patient familiarity, with greater trust when artificial intelligence was physician-guided. Geographic differences were observed, with greater artificial intelligence acceptance in Asia, while skepticism was more prevalent in North America and Europe. Additionally, patients with metastatic cancer (99/2114, 5%) were underrepresented, limiting insights into artificial intelligence perceptions in this population.

Conclusions

This scoping review provides the first synthesis of patient attitudes toward artificial intelligence across all cancer types and highlights concerns unique to patients with cancer. Clinicians can use these findings to enhance patient acceptance of artificial intelligence by positioning it as a physician-guided tool and ensuring its integration aligns with patient values and expectations.

Introduction

Artificial intelligence (AI) refers to computer systems that simulate human intelligence to perform tasks such as learning, decision-making, and pattern recognition with minimal human input [1]. In health care, AI technologies include structured machine learning, natural language processing, and computer vision, which are increasingly applied to improve diagnosis, prognosis, and treatment planning [2-4]. In cancer care, AI is advancing detection, diagnosis, and treatment by analyzing complex data to support clinical decisions. These technologies are being applied to improve diagnostic accuracy, predict survival outcomes, and personalize treatment strategies [5-12]. However, AI’s impact depends not only on its technical capabilities but also on patient acceptance and trust. Understanding these perspectives is essential to ensuring a successful patient-centered approach to AI implementation [13].

Although the attitudes of general patient populations toward AI have been studied, research specifically examining the perspectives of patients with cancer remains limited [14]. The chronic and severe nature of cancer creates unique psychosocial challenges for patients, which may shape their attitudes toward AI in ways distinct from other medical contexts [15,16]. The few existing studies primarily focus on individual cancer types, such as breast and skin cancer [17-20], leaving gaps in understanding how AI is perceived across diverse cancer care contexts. A more comprehensive evaluation is needed to capture the full spectrum of patients’ attitudes toward AI in cancer care.

This scoping review maps the existing literature, identifies knowledge gaps, and highlights opportunities for future research regarding patients’ attitudes toward AI in cancer care. Given its broad and exploratory scope, this review provides a foundation for guiding the patient-centered development and implementation of AI in cancer care [21-23].

Methods

Overview

This scoping review followed a 6-stage methodological framework and adhered to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews guideline (PRISMA-ScR) as seen in Checklist 1 [22,24]. The review was registered with Open Science Framework and was conducted according to the published protocol [25].

Search Strategy

To formulate the research question, we applied a conceptual framework for evaluating patient attitudes toward AI in health care [26]. Following the Joanna Briggs Institute’s recommended 3-step search strategy, we conducted an initial limited search of MEDLINE and Embase to identify keywords and relevant index terms [21-24,27]. These keywords and index terms were used to develop a final search strategy and to conduct a literature search across the identified databases for this review. The MEDLINE, Embase, PsycINFO, and CINAHL databases were searched for English-language primary qualitative and quantitative studies published in peer-reviewed journals from inception until February 1, 2025. The reference lists of literature included in this scoping review were searched for additional relevant studies. The final search strategy, found in Multimedia Appendix 1, was developed with support from subject librarians at the University of British Columbia.

Inclusion and Exclusion Criteria

The eligibility criteria were determined using the Population-Concept-Context framework [27].

Population

We included studies with adult patients diagnosed with any type or stage of cancer. Studies with mixed populations (eg, patients with and without cancer) were included if patients with cancer made up the majority or if the population was composed of those with cancer and those with nonmalignant tumors receiving specialized care. We also included mixed populations of patients with cancer and physicians or caregivers, but only if patient attitudes were reported independently. For a study to be included, AI must have been involved, and we defined AI as a computer system modeling intelligent behavior with minimal human intervention [1].

Concept

We focused on patient attitudes toward AI in cancer care. Attitudes were broadly defined as any form of input from patients such as thoughts, feelings, emotions, perspectives, attitudes, opinions, sentiments, beliefs, and experiences.

Context

We included English-language primary qualitative and quantitative studies published in peer-reviewed journals until February 1, 2025, and included all geographies and clinical settings. We excluded non-English studies, gray literature, and secondary research.
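The eligibility criteria above can be read as a screening checklist. The following is a minimal sketch of that checklist, not the authors' screening tool: the `StudyRecord` class, its field names, and the `is_eligible` function are hypothetical, and treating the February 1, 2025 cutoff as inclusive is an assumption.

```python
from dataclasses import dataclass, replace
from datetime import date

SEARCH_END = date(2025, 2, 1)  # search cutoff stated in the review (assumed inclusive)


@dataclass
class StudyRecord:
    """Hypothetical screening record; field names are illustrative only."""
    adult_cancer_patients: bool        # adults diagnosed with any cancer type or stage
    mixed_population_ok: bool          # not mixed, or the mix meets the stated exceptions
    patient_attitudes_separable: bool  # patient attitudes reported independently
    involves_ai: bool                  # AI is involved in the study
    reports_patient_attitudes: bool    # thoughts, feelings, opinions, experiences, etc.
    english: bool
    peer_reviewed_primary: bool        # excludes gray literature and secondary research
    published: date


def is_eligible(s: StudyRecord) -> bool:
    """Apply the Population, Concept, and Context checks in turn."""
    population = (
        s.adult_cancer_patients
        and s.mixed_population_ok
        and s.patient_attitudes_separable
    )
    concept = s.involves_ai and s.reports_patient_attitudes
    context = s.english and s.peer_reviewed_primary and s.published <= SEARCH_END
    return population and concept and context


# Hypothetical records, not studies from the review
candidate = StudyRecord(True, True, True, True, True, True, True, date(2024, 6, 1))
gray_literature = replace(candidate, peer_reviewed_primary=False)
print(is_eligible(candidate), is_eligible(gray_literature))
```

Keeping the three framework components as separate boolean expressions makes it easier to record which criterion led to an exclusion during full-text screening.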

Data Screening, Extraction, and Synthesis

The searches identified 1641 sources, of which 401 were duplicates. This left 1240 potential sources that were reviewed for inclusion by 2 independent reviewers, with discrepancies resolved by a third reviewer when necessary. To evaluate the reliability of the screening process, interrater reliability was calculated for both title and abstract screening and full-text screening. For title and abstract screening, the interrater reliability was 90% agreement, with a κ statistic of 0.74, indicating substantial agreement between reviewers. For full-text screening, the interrater reliability was 95% agreement, with a κ statistic of 0.39, indicating moderate agreement between reviewers. Nineteen studies met the inclusion criteria. The following data items were collected: journal name, study design, sample size, methodology, key findings, type of AI used, cancer type, and patient attitudes toward AI. We used an inductive approach to thematic synthesis to identify key themes across studies. One reviewer (DH) conducted open coding of extracted data using Excel (Microsoft Corp), generating codes directly from patient-reported attitudes, experiences, and perspectives. Similar codes were then grouped and refined iteratively to form broader thematic categories, with consensus reached with a co-reviewer (NN). Attitude themes included preferences for physician-only care, AI-only care, or physician-guided AI, as well as patient outlook, trust, satisfaction, and fear. Table 1 provides a summary of the included studies, while details about methodology, frequency counts, and key findings are provided in narrative form in Multimedia Appendix 2.
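The interrater statistics reported above pair a high percentage agreement with a lower κ, which is expected when both reviewers exclude most records: chance agreement is then already high, and Cohen's κ = (p_o − p_e) / (1 − p_e) corrects for it. The sketch below illustrates this with hypothetical counts; the function name `cohen_kappa` and the 2×2 counts are assumptions for illustration and are not the review's screening data.

```python
def cohen_kappa(both_include: int, only_a: int, only_b: int,
                both_exclude: int) -> tuple[float, float]:
    """Return (observed agreement, Cohen's kappa) for two reviewers'
    include/exclude decisions summarized as a 2x2 table of counts."""
    n = both_include + only_a + only_b + both_exclude
    p_o = (both_include + both_exclude) / n      # observed agreement
    a_inc = (both_include + only_a) / n          # reviewer A's include rate
    b_inc = (both_include + only_b) / n          # reviewer B's include rate
    # Agreement expected by chance if the reviewers decided independently
    p_e = a_inc * b_inc + (1 - a_inc) * (1 - b_inc)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return p_o, (p_o - p_e) / (1 - p_e)


# Hypothetical counts for 100 records (not taken from the review)
p_o, kappa = cohen_kappa(both_include=2, only_a=3, only_b=2, both_exclude=93)
print(f"{p_o:.2f} {kappa:.2f}")  # prints "0.95 0.42"
```

In this hypothetical table the reviewers agree on 95 of 100 records, yet κ is only about 0.42 because nearly all of that agreement comes from joint exclusions.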

Table 1. Summary of 19 studies included.

| Source | Sample size<sup>a</sup> | Cancer type(s) | Attitudes toward AI<sup>b</sup> use in cancer care | | | | |
|---|---|---|---|---|---|---|---|
| | | | AI versus physician<sup>c</sup> | Patient outlook<sup>d</sup> | Trust<sup>d</sup> | Satisfaction<sup>d</sup> | Fear<sup>d</sup> |
| Au et al, 2023 [28] | 1 | Choroidal metastasis | N/A<sup>e</sup> | N/A | N/A | + | N/A |
| Fransen et al, 2025 [29] | 206 | Prostate cancer | Combined | N/A | + | N/A | N/A |
| Goessinger et al, 2024 [30] | 205 | Melanoma | Combined | N/A | + | N/A | − |
| Hildebrand et al, 2023 [31] | 15 | Metastatic cancer | Combined | +, − | + | N/A | N/A |
| Jutzi et al, 2020 [17] | 154 | Melanoma | Combined | +, − | N/A | N/A | N/A |
| Kenig et al, 2024 [32] | 20 | Breast cancer | N/A | N/A | + | N/A | N/A |
| Klotz et al, 2024 [33] | 17 | Pancreatic cancer | N/A | N/A | N/A | + | N/A |
| Lee et al, 2020 [34] | 285 | Breast, colorectal, gastric, gynecological, liver, lung, thyroid | N/A | N/A | N/A | + | N/A |
| Leung et al, 2022 [35] | 48 | Breast, gynecological, colorectal, head and neck | N/A | N/A | N/A | + | N/A |
| Lysø et al, 2024 [36] | 30 | Prostate cancer | Combined | +, − | N/A | N/A | + |
| Manolitsis et al, 2023 [37] | NA | Prostate cancer | N/A | N/A | + | N/A | N/A |
| McCradden et al, 2020 [38] | 18 | Meningioma | N/A | − | U | N/A | N/A |
| Nally et al, 2024 [39] | 28 | Colorectal | Combined | +, − | − | N/A | + |
| Nelson et al, 2020 [18] | 32 | Melanoma, nonmelanoma skin cancer | Combined | +, − | N/A | N/A | N/A |
| Rodler et al, 2024 [40] | 257 | Prostate cancer | Combined | N/A | + | N/A | N/A |
| Šafran et al, 2024 [41] | 166 | Breast, colorectal | N/A | N/A | N/A | + | N/A |
| Temple et al, 2023 [42] | 95 | N/A | N/A | − | − | N/A | N/A |
| van Bussel et al, 2022 [43] | 135 | Breast, colorectal, gynecological, N/A | N/A | + | U | N/A | N/A |
| Yang et al, 2019 [19] | 402 | Lung, breast, pharyngeal, lymphoma, others<sup>f</sup> | Combined | +, − | N/A | N/A | N/A |

<sup>a</sup>Patient population with cancer in the included study only. For detailed information on participant size and characteristics, please see Multimedia Appendix 2.

<sup>b</sup>AI: artificial intelligence.

<sup>c</sup>Sources were identified as either having a patient attitude that prefers AI, a physician, or a combined AI plus physician model.

<sup>d</sup>Predominant attitudes in each paper are indicated by positive (+), negative (−), or undefined (U) to describe patients’ feelings with respect to the given attitude.

<sup>e</sup>N/A: not applicable or not assessed.

<sup>f</sup>The top 4 types of cancer included in the study have been included in this list. For more detailed information, please see Multimedia Appendix 2.

Results

General Characteristics of Included Studies

The 19 included studies, published between 2019 and 2025, represented 2114 patients from 15 countries, with the majority originating from Germany, China, and South Korea [17-19,28-43]. The majority of studies were published after 2022. Cancer types most frequently examined included prostate, melanoma, breast, colorectal, and lung cancers and are detailed in Multimedia Appendix 3. Quantitative methods were the most common (9/19, 47%), primarily using questionnaires or surveys, while qualitative studies (5/19, 26%) relied on interviews. The remaining mixed methods studies used a combination of surveys with both quantitative and qualitative answers in addition to interviews. The process of study selection is detailed in Figure 1.

Figure 1. PRISMA diagram of articles included in the review. PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses.

Review Findings

Attitudes

Patient attitudes were categorized into 6 key themes: preferences for AI-driven care, concerns about AI, optimism, trust, satisfaction, and fear. These are summarized in Table 1.

Preference for AI-Driven, Physician-Led, or Combined Care

Nine studies explored patient preferences for care delivered by AI alone, by a physician alone, or by a physician using AI (a combined model) [17-19,29-31,36,39,40]. The majority of patients preferred the combined model, in which physicians use AI as a decision-support tool rather than AI acting autonomously [17-19,29-31,36,39,40]. One study reported that 83.4% of patients (166/199) preferred AI-assisted screening over physician-only or AI-only screening [30]. Patients valued AI’s ability to process large datasets, believing that its integration with physician expertise could improve clinical decision-making [18,36,39]. However, a subset of patients expressed willingness to trust AI alone, particularly when it demonstrated superior diagnostic accuracy [17,19,29-31,40].

Concerns for AI Use in Care

Eight studies identified recurring concerns regarding AI integration [17-19,31,36,38,39,42]. Patients frequently questioned the impact of AI on quality of care, with concerns that AI could lead to shorter appointment times, reduced patient-physician communication, and fewer opportunities for questions [17-19,31,36,39,42]. Many also feared that AI could weaken the physician-patient relationship, contributing to depersonalized interactions [17-19,36,42]. Concerns about AI’s susceptibility to false positives and false negatives were also raised, particularly regarding its lack of accountability in clinical decisions [17-19,36]. Some patients also questioned whether AI systems might introduce or reinforce bias, particularly if training data lacked representation from diverse populations [17,38]. Similarly, concerns about the collection and storage of patient data emerged, with patients emphasizing the need for explicit consent before data use and assurances that their information would be deidentified and protected from commercialization [17,18,31,38].

Optimism for AI Use in Care

Despite these concerns, 7 studies highlighted patients’ optimism regarding AI’s role in their care [17-19,31,36,39,43]. Many patients recognized AI’s potential to improve diagnostic accuracy, particularly in identifying subtle patterns that might be overlooked by physicians [17-19,31,36,39]. AI was also seen as enhancing early detection and treatment efficiency, potentially reducing the need for invasive procedures and leading to less intensive treatment strategies [17,36]. Additionally, AI was perceived as a tool that could improve the overall efficiency of their care and the health care system by reducing wait times, alleviating health care burdens, and lowering costs [17-19,36]. Some patients believed that AI could also help reduce physician bias and ensure more consistent, data-driven treatment recommendations [17,19,31,39].

Trust

Trust in AI varied across studies [29-32,37-40,42,43]. Although some studies found high levels of trust, this was primarily dependent on whether AI was integrated with physician oversight [30,32,37-39]. Multiple studies found that trust in AI was often rooted in physician endorsement rather than the AI itself, suggesting that patient trust in AI is closely tied to physician guidance [31,39,40]. Education level played a role in AI trust; two studies found that patients with postsecondary education were more likely to participate in AI research [29,37]. Education level was also correlated with previous exposure to and understanding of AI technologies, which was another factor that positively influenced trust in AI [19,29]. Conversely, another study found that older patients and those with lower education levels were more likely to trust AI assessments [30].

Satisfaction

Five studies examined patient satisfaction with AI-assisted care [28,33-35,41]. Patients reported greater satisfaction when AI improved diagnostic accuracy and increased health care efficiency [34]. A study comparing an AI chatbot to specialists found that patients rated the AI’s responses slightly higher in empathy, comprehensibility, and content quality [33]. One patient who used AI-driven emotional support tools, such as AI chatbots, reported reduced distress and anxiety following their cancer diagnosis [28]. However, another study found that fewer than half of its participants were satisfied with care received from AI, emphasizing the importance of integrating patients’ experiences into the development of AI technologies [41].

Fear

Three studies explored fear as a patient response to AI integration [30,36,39]. Some patients feared that AI could replace human interactions and limit their autonomy in decision-making [36,39]. However, AI-driven diagnoses were better received when physicians confirmed the AI’s findings, underscoring the importance of physician oversight in maintaining patient trust [30].

Research User Engagement

As recommended by the Joanna Briggs Institute and outlined in our protocol, we reviewed initial findings of this scoping review with research users at a BC Cancer Summit workshop [25,27]. The workshop was attended by over 60 research users including clinicians, decision makers, researchers, and patients. Participants provided informal feedback, which broadly aligned with our findings, with many identifying more with the optimistic aspects of AI in cancer care. This engagement was intended to validate the relevance of our results and inform future research directions.

Discussion

Principal Findings

As AI adoption in cancer care accelerates, understanding patient perspectives is critical for ensuring its effective and ethical implementation. This scoping review provides the first synthesis of patient attitudes toward AI across all cancer types, revealing both areas of support and challenges for its integration in cancer care. Although patients with cancer generally support AI when it is physician-guided, concerns about depersonalization, treatment bias, and data security highlight the need for careful implementation. This review identified unique emotional and psychological concerns for patients with cancer, in contrast to the existing literature, which focused more on general patient and public perspectives of AI, particularly related to its impact on diagnostic accuracy, efficiency, and cost-saving in health care [14].

This review highlights a gap in understanding how AI is perceived across different stages of cancer, specifically among patients with metastatic disease. Despite AI’s potential to assist in prognosis and treatment planning, only 3 of the 19 included studies (16%) reported data on cancer staging, and patients with metastatic cancer represented less than 5% (99/2114) of the total study population [31,34,40]. This underrepresentation limits insights into how AI is received by those with chronic and/or advanced cancer and those receiving end-of-life care [44,45]. Patients with metastatic disease may have distinct concerns regarding AI, including fears that AI-driven prognostic models could recommend less aggressive treatment options if the predicted prognosis is poor, rather than prioritizing patient preferences [15,16,31,46]. Future research should explore how AI can better support decision-making for patients with metastatic cancer, particularly in treatment planning, physician-patient communication, and prognostic discussions.

This review highlights a geographic imbalance in studies examining patient attitudes toward AI in cancer care. Most research has been conducted in Europe (1288/2114 patients, 60.9%) [17,29,30,32,33,36,37,39-43] and Asia (688/2114, 32.5%) [19,28,34], with North American patient populations notably underrepresented (138/2114, 6.5%) [18,31,35,38]. Studies from Asia have reported more optimistic attitudes toward AI, with some patients expressing trust in AI-assisted care without concerns [19,34]. In contrast, studies from North America and Europe revealed more skepticism, with patients citing concerns related to overreliance on AI [17,31,42], treatment bias [31,36], and data security [17,38]. These findings align with prior literature suggesting that trust and AI adoption are generally higher in Asia compared to Europe and North America [43,47,48]. These differences may reflect broader cultural and health care system factors. Greater acceptance in some Asian countries may be linked to higher trust in technology and centralized health care systems [47]. In contrast, skepticism in North America and Europe may reflect stronger emphasis on individual autonomy and privacy [48]. These contextual differences underscore the need for culturally sensitive AI implementation strategies aligned with local values and expectations of care. Future research should aim to incorporate more geographically diverse populations to ensure that AI implementation strategies reflect the values, concerns, and trust levels of patients across different health care settings.
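The regional shares quoted above follow directly from the patient counts. As a quick arithmetic check, the sketch below recomputes them; the patient counts come from this paragraph, while the variable names are illustrative.

```python
# Patient counts by region as reported in the paragraph above
patients_by_region = {"Europe": 1288, "Asia": 688, "North America": 138}

total = sum(patients_by_region.values())

# Percentage share of the total study population, rounded to 1 decimal place
shares = {
    region: round(100 * count / total, 1)
    for region, count in patients_by_region.items()
}

print(total)   # prints 2114
print(shares)  # prints {'Europe': 60.9, 'Asia': 32.5, 'North America': 6.5}
```

The three regional counts sum to the full 2114 patients, so the percentages describe the whole study population rather than a subset.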

The influence of educational background on patient attitudes toward AI in cancer care was underrepresented within the studies included in our review. Although several studies explored the role of age and education on attitudes toward AI, a bias toward highly educated populations in these studies limited the generalizability of the findings [17-19,29-31,37,38]. AI technologies have been shown to produce biased and inequitable outcomes across different patient backgrounds, and this underrepresentation in our review highlights a notable gap in research [49,50]. It is essential that future research and AI implementation in cancer care incorporate the perspectives of diverse educational and socioeconomic groups to ensure that AI-driven care benefits all patients equitably.

Young adult patients with cancer were underrepresented in the studies included in this review. It is critical to understand the attitudes of this population as these patients have differences in disease biology, distribution, and survivorship compared to older patients with cancer [51]. Greater exposure to AI technology among younger patients may foster increased trust in its applications [19,37], but some evidence suggests that a deeper understanding of AI’s complexity may also contribute to skepticism towards its diagnostic accuracy [30,52]. Conversely, data suggesting that older patients were more likely to trust AI diagnoses raise concerns that misconceptions of AI technologies may result in misplaced trust and lead to uninformed or misguided decision-making around AI involvement in their cancer care [30]. These generational differences in AI familiarity and usage will shape future patient attitudes as AI becomes more integrated into clinical practice. Further research is needed to ensure that perspectives of both younger and older populations are adequately represented in studies on AI implementation in cancer care [51].

The successful integration of AI into cancer care depends on aligning these technologies with patient expectations and addressing concerns about trust, depersonalization, and ethics. Patients consistently expressed a preference for AI as a decision-support tool rather than an autonomous decision maker [17-19,30,31,36,40]. AI researchers and developers should therefore prioritize human-AI collaboration models, ensuring that AI augments, rather than replaces, physician expertise. To build and maintain patient trust, AI developers must address concerns about algorithmic bias by seeking training data that reflects diverse populations and ensuring that systems are evaluated for equitable performance [53]. To improve the trust and usability of AI tools, researchers and developers should also co-develop these tools alongside patients with cancer. Given the limited research on AI attitudes across different cancer stages, particularly metastatic cancer, future AI developments should consider how disease progression and prognosis influence treatment decisions. Developers must also recognize that AI acceptance varies globally, highlighting the need for regionally tailored AI implementation strategies that reflect diverse patient perspectives. Future AI development should incorporate the perspectives of young adult patients and individuals with diverse educational and socioeconomic backgrounds to promote more inclusive and patient-centered implementation.

For clinicians, clear communication and transparency about AI’s role in care are essential for building patient trust [54]. Clinicians should proactively discuss how AI is being used, emphasizing that it supports rather than replaces their expertise. Preserving a strong physician-patient relationship is essential, as patients remain concerned that AI could depersonalize their care [17-19,36,42]. Given that patient trust in AI is often linked to physician endorsement, clinicians can facilitate patient acceptance by integrating AI in a way that strengthens the human connection in care. Clinicians also play a role in the ethical implementation of AI by selecting tools that align with patient values and addressing concerns identified in this review, including depersonalization, bias, and data security. Lastly, clinicians can provide feedback to AI developers, ensuring that AI models are refined to better align with patient priorities and improve trust in AI-assisted care.

Strengths and Limitations

This scoping review makes several novel contributions to the existing literature by being the first to comprehensively examine the attitudes of patients with cancer toward AI across all cancer types and treatment settings. Although previous research has focused on AI acceptance in narrow contexts such as melanoma and breast cancer screening, this review provides a broader, more comprehensive synthesis of patient perspectives [14,17,18]. Another strength of this review is its robust methodology, adhering to PRISMA-ScR guidelines, systematically identifying knowledge gaps, and synthesizing findings across diverse populations [25]. Additionally, this review provides a patient-centered focus, offering insights that can inform AI development and guide clinical implementation.

Several limitations should be considered. As a scoping review, this study did not assess the quality of included articles, which is standard practice given that scoping reviews aim to map existing literature rather than evaluate methodological rigor. We observed considerable variability in study quality, especially in sample size, methodological rigor, and reporting of participant demographics and cancer staging. Recognizing this heterogeneity is important, as it influences the strength and applicability of the findings of this review. Future research should prioritize transparent reporting and the use of standardized tools to improve consistency and enable more meaningful cross-study comparisons. In addition, the relatively small number of studies (n=19) included in the review may restrict the generalizability of our findings. This low number of studies is expected given the emerging nature of AI in cancer care. Although this highlights a gap in the current literature, it also suggests that there is the opportunity for further research to build on the findings presented in this review. Other limitations include the exclusion of non-English studies, secondary research, and gray literature. Although secondary research could have offered relevant insights, we excluded it to avoid duplication and ensure that the review focused solely on primary literature. Although this review aimed to capture global perspectives, we limited inclusion to English-language, peer-reviewed primary studies due to resource constraints for translation and the challenges of systematically identifying and appraising gray literature. We recognize that this may introduce language and publication bias, potentially underrepresenting patient perspectives from non–English-speaking regions or low- and middle-income countries. 
However, our review still included studies from several non–English-speaking countries, such as China and South Korea, indicating some geographic diversity despite the language restriction. Future reviews should consider collaborative efforts with multilingual teams and the use of gray literature databases to ensure broader inclusion and enhance the global relevance of findings. As the literature in this area expands, future work may also benefit from applying a theoretical framework to better interpret emerging patterns in patient attitudes. Despite these limitations, the insights provided by this review offer a foundation for future research and will help guide the patient-centered implementation of AI into cancer care.

Conclusions

This scoping review is the first to synthesize patient attitudes toward AI across all cancer types. We identified 19 studies representing diverse geographies, cancer types, and AI applications. Patients generally supported the use of AI in their care when it complemented physician decision-making, but expressed concerns about depersonalization, treatment bias, and data security. The attitudes of patients varied across regions, cancer types, and stages of illness. Clinicians can use these findings to integrate AI in cancer care in ways that align with patient priorities, maintain human connection, and enhance trust, while researchers should address gaps in understanding AI perceptions among patients with advanced cancer and young adult patients.

Supplementary material

Acknowledgments

The authors are grateful to the University of British Columbia librarian, Jane Jun, for her contributions to the search strategy. The authors acknowledge the use of ChatGPT (GPT-4o, OpenAI) for language copyediting to enhance the fluency of the manuscript on February 1, 2025. All ideas, concepts, and original content were independently developed by the authors, and ChatGPT had no role in shaping the intellectual content. The authors take full responsibility for the accuracy and integrity of the manuscript. The authors received no specific funding for this work.

Abbreviations

- AI: artificial intelligence

- PRISMA-ScR: Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews

Footnotes

Authors’ Contributions: Concept and design: DH, JJN, NN.

Acquisition, analysis or interpretation of data: DH, NN.

Drafting of the manuscript: DH, NN.

Critical revision of the manuscript for important intellectual content: DH, NN, JJN, ATB.

Administrative, technical, or material support: DH.

Supervision: JJN, ATB.

Data Availability: All relevant data from this study are provided in Multimedia Appendix 2.

Conflicts of Interest: JJN has received research funding through an unrestricted research grant from Pfizer Canada. ATB reports receiving unrestricted grant funding from Pfizer Inc to BC Cancer, allocated to the Psychiatry Department, during the conduct of the study. DH and NN have no competing interests to declare.
