Abstract

Football videos are playing an increasingly important role in event analysis and tactical evaluation within computer vision. Traditional object detection methods, which rely on region proposals and anchor generation, struggle to balance real-time performance and accuracy in complex scenarios involving multiple viewpoints, motion blur, and small objects. Transformer-based methods, meanwhile, have difficulty capturing fine-grained target information because of their high computational cost and slow training convergence. To address these problems, we propose a novel end-to-end detection framework, Football Transformer (FoT). By introducing the Local Interaction Aggregation Unit (LIAU) and the Multi-Scale Feature Interaction Module (MFIM), FoT achieves an efficient balance between global semantic expression and local detail capture. Specifically, LIAU reduces the complexity of self-attention from $O(N^2)$ to $O(N)$ through feature aggregation within local windows and a window offset mechanism. MFIM integrates low-level details with high-level semantics through multi-scale feature alignment and progressive fusion, significantly improving small object detection performance. Experimental results show that FoT achieves a 3.0% mAP improvement over the best baseline on the Soccer-Det dataset and a 1.3% gain on the FIFA-Vid dataset while maintaining real-time inference speed. These results validate the effectiveness and robustness of the proposed method in complex football video scenarios.


Keywords: Football video analysis, Object detection, Transformer, Real-time detection

Subject terms: Computer science, Computational science

Introduction

With the growing demand for event analysis and automated understanding, football videos have become an important application scenario in computer vision research. By accurately identifying and locating key targets such as the football, players, and referees on the field, football video analysis can not only provide richer real-time information for viewers but also assist coaches and analysts in quantitatively evaluating player performance after a match. For example, tactical analysis based on object detection can statistically analyze player movements, pass and reception counts, positioning gaps, and other metrics, providing data support for team strategy formulation; real-time object detection and tracking methods can also be applied in Video Assistant Referee (VAR) systems and goal determination, further improving the accuracy and fairness of refereeing decisions. As a result, high-precision and real-time object detection algorithms are becoming increasingly important for research and application in the football field1,2.

Traditional object detection methods, such as Faster R-CNN3, SSD4, and the YOLO series5, use convolutional neural networks (CNNs)6,7 to extract features and rely on region proposals, anchor generation, and non-maximum suppression as manually designed components. While these methods perform well in general scenarios, their efficiency and accuracy are limited in complex environments such as multi-view switching, motion blur, and small object recognition. The introduction of Transformers has brought new ideas to object detection by transforming it into an end-to-end set matching problem (e.g., DETR8 and Deformable DETR9), significantly simplifying the traditional process and demonstrating strong relational modeling capabilities. However, these methods have two major limitations: first, the self-attention mechanism is computationally expensive and converges slowly during training, making it difficult to meet real-time application demands; second, their ability to detect small objects is insufficient, and they struggle to capture detailed features in complex scenes. In the high-complexity application scenario of football matches, these methods still face challenges in both real-time performance and small object detection accuracy.

To address these challenges, we propose an end-to-end detection framework called Football Transformer (FoT). The framework introduces the Local Interaction Aggregation Unit (LIAU) and the Multi-Scale Feature Interaction Module (MFIM), which significantly reduce computational complexity while preserving global semantic expression, and improve both detection accuracy and real-time performance for fine-grained small targets. Specifically, the LIAU in FoT aggregates features within local windows and captures relationships between features in the local scope, achieving attention computation of linear complexity and reducing the complexity of traditional self-attention from $O(N^2)$ to $O(N)$. To compensate for the potential lack of global information caused by local computation, the LIAU introduces a window offset mechanism between network layers that enables cross-window information interaction. This significantly increases training and inference speed while maintaining detection accuracy. In football matches, target sizes are unevenly distributed, and distant players or fast-moving balls occupy only a small number of pixels; for such scenarios we designed the MFIM. This module upsamples, aligns (via convolution), and progressively fuses features from different levels, efficiently integrating low-level details with high-level semantics. It thereby preserves small-target information during global context modeling and significantly improves small object detection accuracy. Our method delivers consistent gains on both the Soccer-Det and FIFA-Vid datasets, with mAP improvements of 3.0% and 1.3%, respectively, over the strongest baselines. In addition, FoT sustains real-time inference speed, highlighting its practical value for accurate and efficient detection in complex football scenarios.

The main contributions of this paper can be summarized as follows:

We propose the FoT end-to-end detection framework, integrating the Local Interaction Aggregation Unit (LIAU) and Multi-Scale Feature Interaction Module (MFIM) to significantly reduce computational complexity while ensuring global semantic expression, and achieving efficient capture of fine-grained small targets.

We design the LIAU module, which aggregates features within local windows and uses a window offset mechanism to achieve cross-window information interaction. It reduces the complexity of traditional self-attention from $O(N^2)$ to $O(N)$, significantly improving training and inference speed while maintaining detection accuracy.

We propose the Multi-Scale Feature Interaction Module (MFIM), which upsamples, aligns, and progressively fuses features from different levels to create a unified representation that balances low-level details and high-level semantics. This effectively alleviates the problem of uneven target size distribution and significantly improves small target detection accuracy.

On the Soccer-Det dataset, FoT achieves a 3.0% mAP improvement over the best-performing baseline; on the FIFA-Vid dataset, it achieves a 1.3% improvement. In both cases, FoT maintains real-time detection performance, demonstrating its efficiency and strong generalization capability in football video understanding.

Related work

Real-time detection and efficient attention mechanisms

With the introduction of Transformers into visual tasks, many recent works have sought to incorporate global modeling capabilities into object detection frameworks. Swin Transformer10 computes self-attention within non-overlapping local windows and introduces a shifted window scheme to enable cross-window interactions, achieving a balance between computation and performance. Pyramid Vision Transformer11 employs a hierarchical pyramid structure to reduce spatial resolution progressively while preserving rich semantics across scales. CSWin Transformer12 designs cross-shaped window attention and local-enhanced position encoding to expand the receptive field and improve spatial modeling. Neighborhood Attention Transformer13 restricts attention to fixed local neighborhoods, reducing the quadratic complexity of self-attention to linear and significantly improving runtime efficiency. Similarly, SG-Net14 explores spatial granularity for video instance segmentation, demonstrating the effectiveness of hierarchical spatial modeling. More recently, RT-DETR15 proposes a real-time end-to-end detection framework based on a hybrid encoder and uncertainty-aware query initialization, achieving both high accuracy and fast inference without non-maximum suppression.

Despite the progress, these approaches share common limitations: most rely on fixed local windows or neighborhood constraints, limiting global context integration; others like Prototypical Transformer16 focus on temporal dynamics but overlook spatial computation efficiency. Recent work on deep nearest centroids17 demonstrates that local feature aggregation through prototype learning can achieve efficient recognition, which inspires our local window design. To address these issues, we propose the Local Interaction Aggregation Unit (LIAU), which achieves linear attention computation within local windows while enabling cross-window information interaction through a window-shifting strategy. This design significantly improves the training and inference efficiency while enhancing the global semantic representation needed for real-time detection scenarios.

Small object detection and multi-scale feature fusion

Detecting small objects remains a long-standing challenge in object detection due to their limited pixel footprint and susceptibility to information loss in high-level features. YOLOF18 demonstrates that single-layer features, when combined with dilated encoders and uniform label assignment, can achieve competitive detection performance without feature pyramids. RFLA19 proposes a receptive field-based label assignment scheme that models receptive field distributions as Gaussians, improving positive sample assignment for extremely small targets. CFINet20 introduces a coarse-to-fine region proposal network and a feature imitation branch to enhance the discriminative representation of small objects. IMFA21 designs an iterative multi-scale fusion paradigm guided by sparse keypoints, effectively integrating low-level details and high-level semantics within Transformer detectors. CluSTSeg22 shows that clustering-based feature organization can improve multi-scale representation. In aerial imagery, DQ-DETR23 leverages density-aware dynamic queries to handle dense small objects by predicting the target count and generating adaptive query embeddings.

However, existing methods still struggle with accurate semantic alignment and spatial consistency across scales. Standard pyramid-based approaches often suffer from feature misalignment due to resolution differences, and simple fusion strategies such as summation or concatenation can degrade fine-grained details critical for small object detection. To overcome these challenges, we introduce the Multi-Scale Feature Interaction Module (MFIM), which performs progressive upsampling, convolutional alignment, and cross-level attention fusion to construct a unified multi-scale representation. This design effectively integrates complementary features while preserving small object information, significantly boosting detection performance in complex scenes with diverse object sizes.

Method

Overview

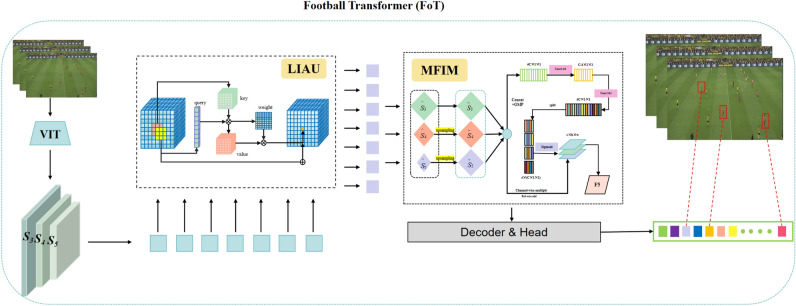

The proposed Football Transformer (FoT) is an end-to-end object detection framework specifically designed for complex football video scenarios, aiming to achieve both high accuracy and real-time efficiency. As illustrated in Figure 1, FoT first employs a vision Transformer-based backbone to extract hierarchical feature maps ($F_3$, $F_4$, $F_5$) from the input frame, which capture visual cues at different spatial resolutions. These multi-scale features are then individually processed by a Local Interaction Aggregation Unit (LIAU), which performs lightweight self-attention within non-overlapping windows and incorporates a shifted window mechanism to enable cross-region interaction while maintaining linear computational complexity. The refined features from all levels are subsequently passed into the Multi-Scale Feature Interaction Module (MFIM), where they are spatially aligned, upsampled, and fused into a unified representation that balances fine-grained detail and semantic abstraction, which matters especially for small or occluded objects such as the ball. Finally, the fused features are fed into a Transformer decoder and a detection head to generate query-based object predictions. The architecture thus integrates global context modeling with local detail preservation, enabling FoT to handle the diverse spatial layouts and detection challenges commonly encountered in football scenes. The following subsections describe each component in detail.

Fig. 1.

Overall pipeline of the proposed Football Transformer (FoT) framework. The backbone network extracts multi-level features ($F_3$, $F_4$, $F_5$) from the input image. Each scale is encoded by a dedicated Local Interaction Aggregation Unit (LIAU), which performs window-based self-attention and cross-window interaction. The Multi-Scale Feature Interaction Module (MFIM) fuses features across scales to construct a unified representation. Finally, a Transformer decoder and detection head produce object-level predictions.

Backbone feature extraction network

The first stage of FoT is a feature extraction backbone that transforms the input RGB image into hierarchical feature maps at multiple spatial resolutions. Let the input image be denoted as $I \in \mathbb{R}^{H \times W \times 3}$. A hierarchical vision Transformer is used to extract features at three different levels:

$$\{F_3, F_4, F_5\} = \mathrm{Backbone}(I), \qquad F_i \in \mathbb{R}^{H_i \times W_i \times C_i}, \tag{1}$$

where the feature maps $F_3$, $F_4$, and $F_5$ correspond to low-level, mid-level, and high-level representations, respectively. These multi-scale features capture complementary information: shallow features preserve edge and texture details critical for small object detection, while deep features encapsulate global semantic patterns.

We discard the initial stages $F_1$ and $F_2$, as they mainly capture low-level texture or edge information with limited semantic value and incur a high memory cost due to their large spatial resolution. Instead, we select $\{F_3, F_4, F_5\}$ as our multi-scale representations, which provide a better trade-off between spatial detail and semantic abstraction for downstream detection. The extracted feature maps are then fed into the LIAU module for further enhancement via local attention and inter-window information flow.

Local interaction aggregation unit (LIAU)

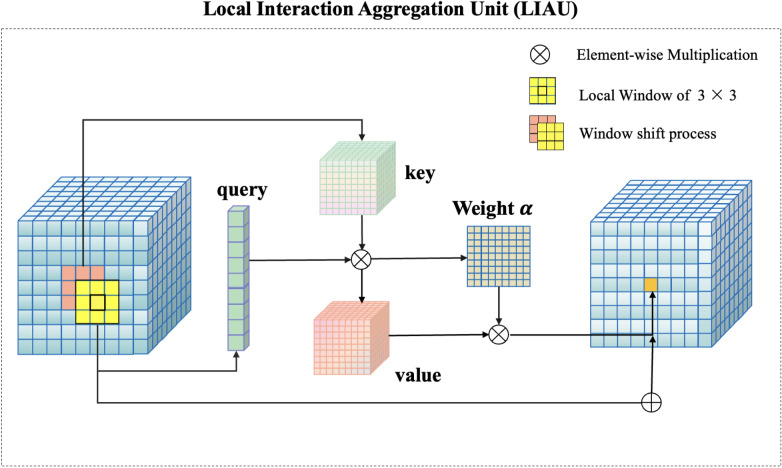

The Local Interaction Aggregation Unit (LIAU) aims to efficiently model spatial dependencies within localized regions while also enabling cross-window information flow in a hierarchical and scalable manner. As illustrated in Figure 2, LIAU first divides each input feature map into non-overlapping $M \times M$ windows and performs self-attention independently within each window, reducing the computational complexity from quadratic to linear in the number of tokens. To avoid isolated modeling within fixed regions, we apply a shifted window strategy across successive layers, allowing tokens on window boundaries to interact with neighboring windows. This mechanism enlarges the receptive field without incurring the cost of global attention, and it is especially beneficial for high-resolution football scenes, where small targets (e.g., the ball and distant players) require fine-grained spatial sensitivity. The output of each LIAU layer is fused via residual connections to retain both the original position encoding and the aggregated context.

Fig. 2.

Illustration of the Local Interaction Aggregation Unit (LIAU). Each feature map is first divided into non-overlapping local windows (highlighted in yellow), and attention is performed within each window independently. To enable cross-window interaction, a shifted window scheme (shown in red) is applied, allowing tokens on different sides of the boundary to aggregate information in subsequent layers. The module uses linear attention computation (query-key-value) with element-wise multiplication and residual aggregation to maintain efficiency and representation fidelity.

Input and representation

Let the input feature map be $X \in \mathbb{R}^{H \times W \times C}$. It is first partitioned into non-overlapping local windows of size $M \times M$, resulting in $\frac{HW}{M^2}$ windows, where $M$ is a fixed constant. Each window is processed independently, and self-attention is performed only within the local region, without interaction across windows.
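The partition step can be sketched in a few lines of plain Python. This is our own illustration, not the authors' code: it assumes the feature map is a nested list of shape H × W (each entry one token) with H and W divisible by M, and the helper name `partition_windows` is hypothetical.

```python
def partition_windows(feature, m):
    """Split an H x W grid (nested lists) into non-overlapping m x m windows.

    Returns one flat, row-major list of tokens per window.
    Assumes H and W are divisible by m.
    """
    h, w = len(feature), len(feature[0])
    if h % m or w % m:
        raise ValueError("H and W must be divisible by the window size m")
    windows = []
    for top in range(0, h, m):
        for left in range(0, w, m):
            # Gather the m x m block whose top-left corner is (top, left).
            windows.append([feature[r][c]
                            for r in range(top, top + m)
                            for c in range(left, left + m)])
    return windows


# Usage: an 8 x 8 map whose tokens are tagged with their (row, col) position.
grid = [[(r, c) for c in range(8)] for r in range(8)]
wins = partition_windows(grid, 4)
print(len(wins), len(wins[0]))  # 4 16  (8*8 / 4**2 windows of 4*4 tokens)
```

Each window is then handled on its own, so the work per window depends only on $M$, not on the full image size.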

Local neighborhood attention mechanism

Within each local window, self-attention is restricted to a fixed neighborhood of size $M \times M$. For any location $p$ in a window, the attention output is computed as:

$$\mathrm{Attn}(p) = \sum_{r \in \mathcal{N}(p)} a_{p,r}\, V_r, \tag{2}$$

$$a_{p,r} = \frac{\exp\!\left(Q_p K_r^{\top} / \sqrt{d}\right)}{\sum_{r' \in \mathcal{N}(p)} \exp\!\left(Q_p K_{r'}^{\top} / \sqrt{d}\right)}, \tag{3}$$

where $\mathcal{N}(p)$ denotes the local neighborhood centered at $p$, $Q$, $K$, and $V$ are the projected query, key, and value features, and $d$ is their channel dimension. This restriction reduces the computational overhead from the $O(N^2)$ of global attention to $O(NM^2)$, which is effectively linear when $M^2 \ll N$.
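For a single window, the attention of Eqs. (2) and (3) reduces to ordinary scaled dot-product attention over that window's tokens. The sketch below is a hedged illustration: it takes the neighborhood to be the whole window, assumes the query, key, and value projections have already been applied, uses the standard $1/\sqrt{d}$ scaling, and omits multi-head splitting and position bias. The name `window_attention` is hypothetical.

```python
import math


def window_attention(queries, keys, values):
    """Scaled dot-product attention among the tokens of one window.

    queries, keys, values: equal-length lists of float vectors, one entry per
    token in the window. Each query attends only to keys of the same window.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        # Scaled similarity between this query and every key in the window.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        peak = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]  # softmax over the window
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(wt * v[j] for wt, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 1.0], [1.0, 1.0]]  # identical keys -> uniform attention weights
v = [[2.0, 0.0], [0.0, 4.0]]
print(window_attention(q, k, v))  # [[1.0, 2.0], [1.0, 2.0]]
```

Because the loop over keys only runs over the tokens of one window, the cost per query is proportional to $M^2$ rather than to the total number of tokens.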

Shifted window interaction

A naive window partition results in non-overlapping, isolated regions, which limits the receptive field. To overcome this limitation, LIAU adopts a shifted window strategy that introduces inter-window communication:

In even-numbered layers, window partitioning starts from the top-left corner with no offset.

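In odd-numbered layers, the partition is shifted by $(\lfloor M/2 \rfloor, \lfloor M/2 \rfloor)$ tokens, so that tokens belonging to different windows in the previous layer now reside in the same window.

The shifting mechanism is formulated as:

$$\mathcal{W}^{(l)} = \mathrm{Partition}\big(X^{(l)}, M\big), \quad l \text{ even}, \tag{4}$$

$$\mathcal{W}^{(l)} = \mathrm{Partition}\big(\mathrm{Shift}(X^{(l)}, \lfloor M/2 \rfloor, \lfloor M/2 \rfloor), M\big), \quad l \text{ odd}, \tag{5}$$

where $\mathcal{W}^{(l)}$ is the set of windows at layer $l$ and $\mathrm{Shift}(\cdot)$ offsets the partition grid by the given number of rows and columns.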

To prevent boundary overflow, mirror padding is applied along the edges. This alternating partitioning scheme allows tokens to aggregate information from neighboring windows across layers, effectively eliminating the local isolation effect and enhancing cross-window feature fusion.
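The effect of the alternating partition can be checked by asking which window a token falls into. In this hypothetical helper, a shifted layer offsets the grid by $\lfloor M/2 \rfloor$ in both axes (our reading of the text); border handling such as the mirror padding is not modelled, so positions before the first shifted boundary simply receive index -1.

```python
def window_id(row, col, m, shifted):
    """Return the (row, col) index of the m x m window that contains a token.

    Unshifted layers tile the map from the top-left corner; shifted layers
    move the grid by m // 2 in both directions. Border padding is not
    modelled, so positions before the first shifted boundary get index -1.
    """
    offset = m // 2 if shifted else 0
    return ((row - offset) // m, (col - offset) // m)


# Tokens (3, 3) and (4, 4) sit in different windows when M = 4 ...
print(window_id(3, 3, 4, False), window_id(4, 4, 4, False))  # (0, 0) (1, 1)
# ... but share a window once the partition is shifted by M // 2 = 2.
print(window_id(3, 3, 4, True), window_id(4, 4, 4, True))    # (0, 0) (0, 0)
```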

Output reprojection and residual fusion

Each window outputs an updated feature patch $\hat{X}_w$, which is processed by a shared linear projection $W_o$ and then merged with the original input via a residual connection:

$$Z(p) = X(p) + W_o\, \hat{X}_{w(p)}(p), \tag{6}$$

where $w(p)$ denotes the index of the window to which position $p$ belongs. The final output $Z$ encodes both the original representation and the enriched local context, and is passed to the multi-scale fusion module for further processing.

Complexity analysis

Let the total number of tokens be $N = HW$. Since each token attends to a fixed-size neighborhood of $M^2$ elements, the computation cost per position is $O(M^2 C)$. The total complexity is thus:

$$\Omega_{\mathrm{LIAU}} = O\big(N M^2 C\big), \tag{7}$$

which scales linearly with the spatial resolution for a fixed window size. Compared with the $O(N^2 C)$ cost of conventional global self-attention, LIAU significantly reduces the computational burden while maintaining strong local representation capability, making it well suited for real-time detection tasks.
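A quick count makes the difference concrete. The function below is a rough sketch that estimates only the multiply-accumulates in the two attention products ($QK^\top$ and the weighted sum over $V$), ignoring projections and softmax. The feature-map size, channel width, and window size in the usage lines are illustrative values, not FoT's configuration.

```python
def attention_cost(h, w, c, m=None):
    """Rough multiply-accumulate count of the two attention products.

    Each of the N = h*w tokens scores `partners` keys (Q K^T) and mixes as
    many values (weights @ V), each costing c MACs. Projections and the
    softmax are ignored. m=None means global attention over all N tokens;
    otherwise every token attends to the m*m tokens of its window.
    """
    n = h * w
    partners = n if m is None else m * m
    return 2 * n * partners * c


# Illustrative sizes only (not FoT's configuration).
global_cost = attention_cost(56, 56, 96)
window_cost = attention_cost(56, 56, 96, m=7)
print(global_cost // window_cost)  # 64, i.e. N / M^2 = 3136 / 49
```

With these numbers the windowed variant is 64 times cheaper, and doubling the resolution multiplies its cost by 4 (linear in $N$), versus 16 for global attention.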

Analysis of local vs. global attention

We argue that local attention is better suited than global attention to football video detection, for three reasons:

Spatial Locality Principle. Object detection in football videos exhibits strong spatial locality: adjacent pixels typically belong to the same object or background region. Global attention computes relationships between all $N^2$ pixel pairs, including many spatially distant pairs with negligible correlation. Our local window design focuses on spatially adjacent regions where correlation is highest, eliminating redundant long-range computations.

Multi-scale Receptive Field Construction. Through the shifted window mechanism, LIAU's effective receptive field expands progressively. After $L$ layers with a window shift of $M/2$, the receptive field reaches approximately $LM \times LM$ feature-map positions. This progressive expansion preserves the fine-grained local details crucial for small object detection while providing sufficient global context for semantic understanding.

Small Object Feature Preservation. In global attention, small objects (e.g., footballs occupying only a tiny fraction of the image area) suffer from feature dilution when attention is averaged across all spatial positions. LIAU's local computation ensures that small objects retain higher relative attention weights within their local windows, preventing their features from being submerged in the global context.
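The receptive-field argument above can be checked with a one-dimensional simulation. It is an illustration under simplifying assumptions of our own: windows of size M alternate between offset 0 and M/2, each layer lets every token aggregate its whole window, and an interior token is queried so that border padding plays no role. The function name is hypothetical.

```python
from collections import defaultdict


def receptive_field(length, window, num_layers, token):
    """Width of the input span that can influence `token` after num_layers.

    Even layers partition positions into [k*window, (k+1)*window); odd layers
    shift the partition by window // 2. Within a window every token
    aggregates all others, so its span becomes the union of their spans.
    """
    shift = window // 2
    span = [(i, i) for i in range(length)]  # before any layer: only itself
    for layer in range(num_layers):
        offset = 0 if layer % 2 == 0 else shift
        groups = defaultdict(list)
        for i in range(length):
            groups[(i - offset) // window].append(i)
        new_span = [None] * length
        for members in groups.values():
            # Members are consecutive positions whose spans overlap or touch,
            # so min/max gives the union of their spans.
            lo = min(span[i][0] for i in members)
            hi = max(span[i][1] for i in members)
            for i in members:
                new_span[i] = (lo, hi)
        span = new_span
    lo, hi = span[token]
    return hi - lo + 1


for layers in range(1, 5):
    print(layers, receptive_field(64, 4, layers, token=32))
# 1 4
# 2 8
# 3 12
# 4 16
```

Each additional layer widens the span by M positions, so in two dimensions the receptive field after L layers covers roughly LM × LM feature-map positions.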

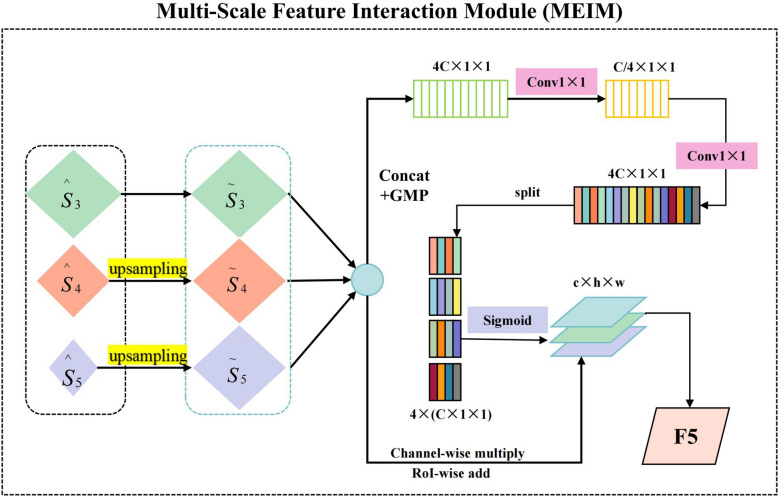

Multi-scale feature interaction module (MFIM)

The Multi-Scale Feature Interaction Module (MFIM) fuses the hierarchical feature maps ($Z_3$, $Z_4$, $Z_5$) produced by the LIAU modules, bridging the semantic gap across resolutions. As shown in Figure 3, MFIM first performs spatial alignment by upsampling the lower-resolution features ($Z_4$, $Z_5$) to match the resolution of the shallowest feature $Z_3$, yielding aligned features $\tilde{Z}_3$, $\tilde{Z}_4$, $\tilde{Z}_5$. These aligned features are concatenated and globally pooled, followed by a channel-wise projection using $1 \times 1$ convolutions to form a compressed descriptor. This descriptor passes through a sigmoid gating mechanism, producing channel-wise weights that recalibrate the concatenated feature maps through element-wise multiplication and residual addition. The resulting fused feature $F_{\mathrm{fuse}}$ serves as the input to the downstream decoder.

Fig. 3.

Structure of the multi-scale feature interaction module (MFIM). The input features $Z_3$, $Z_4$, $Z_5$ are first spatially aligned via upsampling, resulting in $\tilde{Z}_3$, $\tilde{Z}_4$, $\tilde{Z}_5$. These features are concatenated and globally pooled, followed by a series of $1 \times 1$ convolutions that generate channel-wise weights via a sigmoid gate. The fused representation is obtained through channel-wise multiplication and residual addition, producing the final output $F_{\mathrm{fuse}}$ for decoding.

Cross-scale alignment and channel projection

Each feature map $Z_i$ is first projected to a common channel dimension $D$ using a $1 \times 1$ convolution $\phi_i$ and then upsampled to the spatial size of the shallowest feature $Z_3$. The aligned feature maps are denoted as:

$$\tilde{Z}_i = \mathrm{Up}\big(\phi_i(Z_i),\, (H_3, W_3)\big) \in \mathbb{R}^{H_3 \times W_3 \times D}, \tag{8}$$

where $(H_3, W_3)$ is the resolution of $Z_3$, and $i \in \{3, 4, 5\}$.
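A minimal sketch of the alignment in Eq. (8): a $1 \times 1$ convolution is the same $D \times C$ matrix applied at every pixel, followed by resizing to the grid of the shallowest map. The text does not specify the interpolation mode, so nearest-neighbor resizing is used here as an assumption, and all function names are hypothetical.

```python
def conv1x1(feature, weight):
    """1 x 1 convolution: apply the same D x C matrix `weight` at every pixel."""
    return [[[sum(w_dc * x_c for w_dc, x_c in zip(row, pixel)) for row in weight]
             for pixel in line]
            for line in feature]


def upsample_nearest(feature, out_h, out_w):
    """Nearest-neighbor resize of an H x W x C nested list to out_h x out_w."""
    h, w = len(feature), len(feature[0])
    return [[feature[r * h // out_h][c * w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def align(feature, weight, out_h, out_w):
    """Project to the common width D, then resize to the shallowest grid."""
    return upsample_nearest(conv1x1(feature, weight), out_h, out_w)


# Toy 2 x 2 x 3 map aligned to a 4 x 4 grid with D = 2 output channels.
deep = [[[float(r), float(c), 1.0] for c in range(2)] for r in range(2)]
proj = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
out = align(deep, proj, 4, 4)
print(len(out), len(out[0]), len(out[0][0]))  # 4 4 2
```

Projecting before upsampling, as the text describes, means the channel projection runs on the smaller grid, which is cheaper than projecting after resizing.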

Progressive feature fusion

The aligned features $\tilde{Z}_3$, $\tilde{Z}_4$, $\tilde{Z}_5$ are fused progressively to construct a unified multi-scale representation. Starting from the deepest level $\tilde{Z}_5$, each level is combined with the next via a gated attention mechanism that adaptively balances their contributions:

$$G_5 = \tilde{Z}_5, \tag{9}$$

$$G_i = \alpha_i \odot \tilde{Z}_i + (1 - \alpha_i) \odot G_{i+1}, \quad i = 4, 3, \tag{10}$$

where the attention weights $\alpha_i \in (0, 1)^{D}$ are computed via:

$$\alpha_i = \sigma\Big(\mathrm{Conv}_{1\times 1}\big(\mathrm{GAP}([\tilde{Z}_i \,\|\, G_{i+1}])\big)\Big), \tag{11}$$

with GAP indicating global average pooling, $[\cdot \,\|\, \cdot]$ denoting channel-wise concatenation, and $\sigma$ being the sigmoid function.

Unified output representation

The final fused representation from MFIM is defined as:

$$F_{\mathrm{fuse}} = G_3 \in \mathbb{R}^{H_3 \times W_3 \times D}, \tag{12}$$

which integrates low-level detail and high-level semantics into a single enhanced feature map. This output is then passed to the Transformer decoder for object-level prediction.
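The progressive fusion can be sketched as follows. The assumptions are ours: each gate is a single linear layer (standing in for the $1 \times 1$ convolution) followed by a sigmoid, the gated combination is convex, fusion runs from the deepest level to the shallowest, and the last result is what goes to the decoder. Because global average pooling acts channel by channel, pooling the concatenation equals concatenating the two pooled vectors, which the code exploits. All names are hypothetical.

```python
import math


def gap(feature):
    """Global average pooling: H x W x C nested list -> length-C vector."""
    pixels = [p for line in feature for p in line]
    return [sum(p[ch] for p in pixels) / len(pixels) for ch in range(len(pixels[0]))]


def gated_fuse(z, g_next, weight, bias):
    """One fusion step: out = a * z + (1 - a) * g_next with a per-channel gate a.

    a = sigmoid(weight @ [GAP(z) || GAP(g_next)] + bias); `weight` (D x 2D)
    and `bias` (length D) stand in for the 1 x 1 convolution.
    """
    # GAP is per channel, so GAP of the concatenation = concatenated GAPs.
    pooled = gap(z) + gap(g_next)
    alpha = [1.0 / (1.0 + math.exp(-(sum(w * x for w, x in zip(row, pooled)) + b)))
             for row, b in zip(weight, bias)]
    return [[[a * zc + (1.0 - a) * gc for a, zc, gc in zip(alpha, zp, gp)]
             for zp, gp in zip(zl, gl)]
            for zl, gl in zip(z, g_next)]


def progressive_fusion(aligned, params):
    """Fuse aligned maps ordered shallowest first, from deepest to shallowest.

    params = [(weight, bias) for the shallowest level, (weight, bias) for the
    middle level]. Returns the shallowest fused map.
    """
    fused = aligned[-1]  # start from the deepest level
    for z, (weight, bias) in zip(reversed(aligned[:-1]), reversed(params)):
        fused = gated_fuse(z, fused, weight, bias)
    return fused


z3, z4, z5 = [[[1.0]]], [[[2.0]]], [[[4.0]]]  # 1 x 1 maps with D = 1
neutral = ([[0.0, 0.0]], [0.0])               # zero weights -> gate 0.5
print(progressive_fusion([z3, z4, z5], [neutral, neutral]))  # [[[2.0]]]
```

With neutral gates each step averages the two inputs (4 and 2 give 3, then 3 and 1 give 2); learned gates instead let each channel decide how much shallow detail to keep.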

Transformer decoder

The Transformer Decoder receives the fused feature map  and predicts object classes and bounding boxes through a fixed set of learnable queries. The feature map is first flattened and added with positional encoding:

and predicts object classes and bounding boxes through a fixed set of learnable queries. The feature map is first flattened and added with positional encoding:

|

13 |

A set of queries  is iteratively updated through

is iteratively updated through  decoder layers, each consisting of self-attention, cross-attention, and a feed-forward block:

decoder layers, each consisting of self-attention, cross-attention, and a feed-forward block:

|

14 |

Each output query  is decoded into a class probability and a bounding box:

(15)

The final prediction set contains  objects; bipartite matching assigns predictions to ground-truth objects during training, and confidence thresholding selects the final detections during inference.
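A sketch of how each output query could be turned into a detection, assuming DETR conventions: a softmax over the object classes plus a trailing background ("no object") class, and a sigmoid that maps four box outputs to normalized (cx, cy, w, h). The threshold is a free parameter, since the value used for FoT is not reported in this section.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_query(class_logits, box_logits):
    """Turn one query's raw outputs into (class probabilities, normalized box).

    Assumes the last logit is the background class and the box is predicted
    as sigmoid-normalized (cx, cy, w, h).
    """
    probs = softmax(class_logits)
    box = [1.0 / (1.0 + math.exp(-x)) for x in box_logits]
    return probs, box


def select_detections(outputs, threshold):
    """Keep queries whose most likely foreground class clears the threshold."""
    detections = []
    for class_logits, box_logits in outputs:
        probs, box = decode_query(class_logits, box_logits)
        foreground = probs[:-1]  # drop the background probability
        score = max(foreground)
        if score >= threshold:
            detections.append((foreground.index(score), score, box))
    return detections
```

Because each query predicts at most one object and training discourages duplicates, this thresholding replaces the non-maximum suppression step of anchor-based detectors.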

Optimization objective

The entire FoT framework is optimized end-to-end using a combination of classification and localization losses. Following the DETR-style formulation, the objective is defined over a fixed set of  predictions, where each predicted object is matched to a ground-truth instance via bipartite Hungarian matching. For each matched pair, the total loss is defined as:

$$\mathcal{L} = \lambda_{\mathrm{cls}}\,\mathcal{L}_{\mathrm{cls}} + \lambda_{L1}\,\mathcal{L}_{L1} + \lambda_{\mathrm{GIoU}}\,\mathcal{L}_{\mathrm{GIoU}} \tag{16}$$

where $\mathcal{L}_{\mathrm{cls}}$ is the cross-entropy loss for object classification (including a background class), $\mathcal{L}_{L1}$ is the $L_1$ loss for bounding box regression, and $\mathcal{L}_{\mathrm{GIoU}}$ is the Generalized IoU loss for spatial alignment. The weights $\lambda_{\mathrm{cls}}$, $\lambda_{L1}$, and $\lambda_{\mathrm{GIoU}}$ are set to balance the contributions of each term.
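The three loss terms for a single matched pair can be sketched as below. Boxes are assumed to be in corner format (x1, y1, x2, y2) for simplicity (DETR computes the L1 term on normalized center-size boxes), and `w_cls`, `w_l1`, `w_giou` are placeholders for the balancing weights, whose values are not given in this section.

```python
import math


def box_area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def giou(a, b):
    """Generalized IoU for boxes given as (x1, y1, x2, y2); ranges over (-1, 1]."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    iou = inter / union
    # Smallest axis-aligned box enclosing both inputs.
    hull = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return iou - (hull - union) / hull


def matched_pair_loss(class_probs, target_class, pred_box, gt_box,
                      w_cls, w_l1, w_giou):
    """Weighted sum of cross-entropy, L1 and GIoU losses for one matched pair."""
    loss_cls = -math.log(class_probs[target_class])
    loss_l1 = sum(abs(p - g) for p, g in zip(pred_box, gt_box))
    loss_giou = 1.0 - giou(pred_box, gt_box)
    return w_cls * loss_cls + w_l1 * loss_l1 + w_giou * loss_giou
```

Unlike plain IoU, GIoU stays informative for non-overlapping boxes: two disjoint unit squares one unit apart have IoU 0 but GIoU of -1/3, so the loss still pushes the prediction toward the target.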

During training, unmatched predictions are supervised as background, and the total loss is averaged over all object queries.
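The matching step that decides which predictions are supervised as background can be sketched with an exhaustive search over assignments. This search is exact but exponential, so it only stands in for the Hungarian algorithm used in practice (for example `scipy.optimize.linear_sum_assignment`). The cost matrix is assumed to be precomputed from the classification and box terms, and the helper names are hypothetical.

```python
from itertools import permutations


def match_predictions(cost):
    """Minimum-cost one-to-one assignment of ground truths to predictions.

    cost[i][j] is the matching cost between prediction i and ground truth j,
    with at least as many predictions as ground truths. Returns {pred: gt}.
    """
    num_pred, num_gt = len(cost), len(cost[0])
    best, best_total = None, float("inf")
    # Each candidate assigns prediction preds[g] to ground truth g.
    for preds in permutations(range(num_pred), num_gt):
        total = sum(cost[p][g] for g, p in enumerate(preds))
        if total < best_total:
            best, best_total = preds, total
    return {p: g for g, p in enumerate(best)}


def training_targets(cost, gt_labels, background):
    """Matched predictions get their ground-truth label; the rest are background."""
    matches = match_predictions(cost)
    return [gt_labels[matches[i]] if i in matches else background
            for i in range(len(cost))]
```

With 100 queries and a handful of objects per frame, most queries end up with the background target, which is why the classification term must include a background class.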

Experiments

We evaluate the proposed Football Transformer (FoT) on two challenging football video datasets, focusing on both detection accuracy and real-time performance. This section presents the datasets, implementation details, and quantitative comparisons with existing methods.

Dataset description

Soccer-Det

Soccer-Det is a benchmark dataset constructed for fine-grained football object detection. It consists of high-resolution broadcast frames annotated with bounding boxes for players, referees, and the ball. The dataset contains 16,000 training images and 4,000 validation images from a variety of matches and viewing angles. Object categories exhibit large scale variations, with small and occluded instances occurring frequently.

FIFA-Vid

FIFA-Vid is a video-based football dataset built from FIFA World Cup broadcast footage. It includes over 25,000 frames sampled from 50 video clips, annotated with bounding boxes for the same categories as Soccer-Det. The dataset emphasizes temporal continuity and realistic motion blur, making it suitable for evaluating detection robustness under challenging visual conditions. For evaluation, we follow standard train/val/test splits as defined in prior works.

Implementation details

The FoT model is implemented in PyTorch. We use Swin-T [10] as the backbone, initialized with ImageNet-1K pre-trained weights. The feature maps  are extracted after the 2nd, 3rd, and 4th stages of the backbone. The window size for LIAU is set to 8, with local attention computed over 5 × 5 neighborhoods. MFIM projects all features to a unified channel dimension of  . The Transformer decoder uses 6 layers, 100 object queries, and a hidden dimension of 256. We adopt the AdamW optimizer with a base learning rate of  , a weight decay of  , and a cosine learning rate schedule. The model is trained for 50 epochs on 4 NVIDIA V100 GPUs with a batch size of 32. During training, we apply standard data augmentations, including random cropping, horizontal flipping, and color jittering. Non-maximum suppression is not applied at inference, because the DETR-style set prediction trained with bipartite matching already assigns one query per object. Evaluation metrics include mean Average Precision (mAP) at IoU thresholds of 0.5 and 0.75, as well as frames per second (FPS) for runtime analysis.
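The mAP@0.5 and mAP@0.75 metrics rest on per-class average precision at a fixed IoU threshold. The sketch below computes all-point interpolated AP for one class, with detections greedily matched in descending score order and each ground-truth box matched at most once. It is an assumption about the protocol: the exact AP definition (for example VOC-style versus COCO 101-point interpolation) is not specified in this section, and mAP would average this value over classes.

```python
def iou(a, b):
    """Intersection over union for boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, gt_boxes, iou_threshold):
    """All-point interpolated AP for one class.

    detections: list of (score, box); gt_boxes: non-empty list of boxes.
    """
    matched = [False] * len(gt_boxes)
    hits = []
    # Greedy matching: highest-scoring detections claim ground truths first.
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_j, best_iou = -1, iou_threshold
        for j, gt in enumerate(gt_boxes):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
        hits.append(best_j >= 0)

    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gt_boxes))
    # Interpolate: precision at each rank becomes the max precision at any later rank.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum interpolated precision over each recall increment.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

A box with IoU 0.6 against its target counts as a hit at the 0.5 threshold but as a false positive at 0.75, which is why mAP@0.75 rewards tighter localization.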

Quantitative results

We compare our FoT framework with several representative detectors drawn from prior state-of-the-art work. These baselines span anchor-based, Transformer-based, and small-object-focused detection methods. All results are reported under consistent training settings.

Results on Soccer-Det

Table 1 compares performance on the Soccer-Det dataset. Among anchor-based methods, YOLOv5 [5] achieves the highest FPS (42.1) but lower mAP, especially at the stricter IoU threshold. Faster R-CNN [3] is both the least accurate detector and among the slower ones. Transformer-based models, including DETR [8] and Deformable DETR [9], offer better mAP but remain weak on small targets. RT-DETR [15] and NAT [13] improve efficiency and spatial modeling, yet still lag behind FoT. Small-object-focused methods such as IMFA [21] and DQ-DETR [23] narrow the gap but require additional modules such as keypoint guidance or query density estimation. In contrast, FoT achieves the best accuracy (79.7% mAP@0.5, 61.5% mAP@0.75) while running at 34.5 FPS, second only to YOLOv5, which we attribute to its integrated local attention and multi-scale fusion design.

Table 1.

Comparison on Soccer-Det dataset. mAP is reported at IoU thresholds 0.5 and 0.75.

| Method | mAP@0.5 (%) | mAP@0.75 (%) | FPS |
|---|---|---|---|
| Faster R-CNN [3] | 71.3 | 52.5 | 21.3 |
| YOLOv5 [5] | 73.6 | 55.4 | **42.1** |
| DETR [8] | 74.8 | 56.0 | 13.4 |
| Deformable DETR [9] | 76.5 | 58.3 | 23.7 |
| RT-DETR [15] | 77.0 | 59.2 | 31.8 |
| NAT [13] | 75.8 | 57.9 | 28.4 |
| IMFA [21] | 76.2 | 58.8 | 22.5 |
| DQ-DETR [23] | 76.7 | 59.0 | 20.6 |
| FoT (Ours) | **79.7** | **61.5** | 34.5 |

Significant values are in bold.

Results on FIFA-Vid

Table 2 presents results on the FIFA-Vid dataset, where similar trends hold. YOLOv5 delivers the fastest inference but lower accuracy, particularly under motion blur and multi-scale variation. Faster R-CNN performs worst, which we attribute to the inefficiency of its two-stage pipeline on dynamic video frames. DETR-based models improve detection robustness but still trail FoT. RT-DETR and NAT achieve higher mAP than earlier Transformers but lack specialized mechanisms for preserving small, fast-moving targets. IMFA and DQ-DETR remain competitive, yet FoT outperforms them in both accuracy and speed. FoT achieves the best results, 77.2% mAP@0.5 and 59.8% mAP@0.75, supporting its effectiveness on real broadcast video.

Table 2.

Comparison on FIFA-Vid dataset. mAP is reported at IoU thresholds 0.5 and 0.75.

| Method | mAP@0.5 (%) | mAP@0.75 (%) | FPS |
|---|---|---|---|
| Faster R-CNN [3] | 69.8 | 51.0 | 19.7 |
| YOLOv5 [5] | 72.2 | 54.3 | **40.8** |
| DETR [8] | 73.1 | 55.2 | 12.9 |
| Deformable DETR [9] | 75.0 | 57.0 | 22.9 |
| RT-DETR [15] | 76.1 | 58.0 | 30.5 |
| NAT [13] | 74.3 | 56.5 | 27.9 |
| IMFA [21] | 75.6 | 57.5 | 21.8 |
| DQ-DETR [23] | 75.9 | 57.9 | 20.1 |
| FoT (Ours) | **77.2** | **59.8** | 33.1 |

Significant values are in bold.
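The margin of FoT over the strongest baseline in each accuracy column can be recomputed directly from Tables 1 and 2. The short script below does so, with the numbers copied from the tables; `margins_over_best_baseline` is a hypothetical helper.

```python
# mAP@0.5, mAP@0.75 and FPS copied from Tables 1 and 2.
RESULTS = {
    "Soccer-Det": {
        "Faster R-CNN": (71.3, 52.5, 21.3),
        "YOLOv5": (73.6, 55.4, 42.1),
        "DETR": (74.8, 56.0, 13.4),
        "Deformable DETR": (76.5, 58.3, 23.7),
        "RT-DETR": (77.0, 59.2, 31.8),
        "NAT": (75.8, 57.9, 28.4),
        "IMFA": (76.2, 58.8, 22.5),
        "DQ-DETR": (76.7, 59.0, 20.6),
        "FoT": (79.7, 61.5, 34.5),
    },
    "FIFA-Vid": {
        "Faster R-CNN": (69.8, 51.0, 19.7),
        "YOLOv5": (72.2, 54.3, 40.8),
        "DETR": (73.1, 55.2, 12.9),
        "Deformable DETR": (75.0, 57.0, 22.9),
        "RT-DETR": (76.1, 58.0, 30.5),
        "NAT": (74.3, 56.5, 27.9),
        "IMFA": (75.6, 57.5, 21.8),
        "DQ-DETR": (75.9, 57.9, 20.1),
        "FoT": (77.2, 59.8, 33.1),
    },
}


def margins_over_best_baseline(table, ours="FoT"):
    """Margin (percentage points) over the best baseline for each mAP column."""
    baselines = [scores for name, scores in table.items() if name != ours]
    return tuple(round(table[ours][col] - max(b[col] for b in baselines), 1)
                 for col in range(2))


for dataset, table in RESULTS.items():
    print(dataset, margins_over_best_baseline(table))
# Soccer-Det (2.7, 2.3)
# FIFA-Vid (1.1, 1.8)
```

On both datasets the strongest baseline in each mAP column is RT-DETR.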

Ablation study

Effect of core modules

We first evaluate the contribution of each core module in FoT by incrementally activating the Local Interaction Aggregation Unit (LIAU) and the Multi-Scale Feature Interaction Module (MFIM). All experiments are conducted with the same Swin-T backbone and decoder settings. Results are shown in Table 3.

Table 3.

Ablation on core modules. ✓ indicates the module is enabled.

| LIAU | MFIM | mAP@0.5 (%) | mAP@0.75 (%) | FPS |
|---|---|---|---|---|
|  |  | 73.4 | 53.6 | **36.1** |
| ✓ |  | 77.2 | 57.8 | 34.9 |
|  | ✓ | 76.1 | 56.4 | 35.3 |
| ✓ | ✓ | **79.7** | **61.5** | 34.5 |

Significant values are in bold.

Analysis

Introducing LIAU improves the baseline by +3.8% mAP@0.5 and +4.2% mAP@0.75, demonstrating the effectiveness of localized self-attention and efficient spatial context modeling. Adding MFIM alone yields +2.7% mAP@0.5 and +2.8% mAP@0.75, showing its effectiveness for multi-scale feature fusion. Enabling both modules gives +6.3% mAP@0.5 and +7.9% mAP@0.75. At mAP@0.75 the joint gain exceeds the sum of the individual gains (+7.0%), indicating a synergistic effect under stricter localization; at mAP@0.5 the joint gain is close to that sum (+6.5%), so the contributions there are essentially additive. This suggests that LIAU's enhanced local features provide better input for MFIM's multi-scale fusion, particularly for precise localization, and that the two modules are complementary rather than redundant. Together they improve fine-grained detection at a cost of only 1.6 FPS relative to the baseline (36.1 to 34.5).
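The ablation arithmetic can be checked directly from Table 3. The snippet computes each configuration's gain over the baseline and the difference between the joint gain and the sum of the individual gains, where a positive value indicates a super-additive effect.

```python
# (mAP@0.5, mAP@0.75) copied from Table 3.
BASELINE = (73.4, 53.6)
LIAU_ONLY = (77.2, 57.8)
MFIM_ONLY = (76.1, 56.4)
BOTH = (79.7, 61.5)


def gain(row):
    """Improvement over the baseline, in percentage points, per metric."""
    return tuple(round(r - b, 1) for r, b in zip(row, BASELINE))


def synergy(metric):
    """Joint gain minus the sum of individual gains (> 0 means super-additive)."""
    return round(gain(BOTH)[metric] - gain(LIAU_ONLY)[metric] - gain(MFIM_ONLY)[metric], 1)
```

Here `synergy(0)` is -0.2 (mAP@0.5) and `synergy(1)` is 0.9 (mAP@0.75), matching the observation that the interaction between the modules matters most at the stricter threshold.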

Effect of local window size in LIAU

We further analyze the effect of varying the window size in the LIAU module. A smaller window gives finer attention granularity but restricts context aggregation and adds overhead, because the feature map is split into more windows. A larger window captures wider context but may weaken local structure modeling. Table 4 summarizes the results.

Table 4.

Ablation on LIAU window size. A window size of 1 degenerates to standard pixel-wise attention.

| Window size | mAP@0.5 (%) | mAP@0.75 (%) | FPS |
|---|---|---|---|
| 1 | 74.0 | 54.8 | 30.5 |
| 3 | 76.2 | 56.9 | 32.8 |
| 6 | 78.4 | 59.5 | **34.8** |
| 8 | **79.7** | **61.5** | 34.5 |
| 10 | 78.8 | 60.3 | 34.3 |
| 12 | 77.8 | 58.7 | 34.1 |

Significant values are in bold.

Analysis

With a window size of 1, attention is computed independently for each pixel, which amounts to dense per-token modeling. This yields the lowest accuracy and the slowest speed, owing to the explosion in the number of attention windows and the lack of context aggregation. Increasing the window size to 3 and 6 improves both accuracy and efficiency by enabling more stable local feature interaction. The best accuracy is achieved with a window size of 8, which is large enough to capture meaningful context while preserving local detail, at a negligible speed cost relative to 6 (34.5 vs. 34.8 FPS). For window sizes of 10 and 12, accuracy drops slightly as the windows become too large, reducing the model's ability to focus on fine spatial structures. These results indicate that a moderate window size (8 in our setting) best balances precision and efficiency for football detection, supporting the LIAU design choice in FoT.
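The link between window size and attention cost can be illustrated by partitioning a token grid into non-overlapping windows, as in Swin-style local attention. The sketch assumes a hypothetical 48 × 48 feature map whose sides are divisible by the window size, and it counts only query-key score computations, ignoring projections and per-window overhead. Both helper names are hypothetical.

```python
def partition_windows(height, width, window):
    """Split an H x W grid of token indices into non-overlapping window x window blocks.

    Assumes H and W are multiples of the window size (Swin-style models pad otherwise).
    """
    if height % window or width % window:
        raise ValueError("grid must be divisible by the window size")
    windows = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            windows.append([r * width + c
                            for r in range(top, top + window)
                            for c in range(left, left + window)])
    return windows


def attention_pairs(height, width, window):
    """Number of query-key scores computed when attending only inside each window."""
    return sum(len(w) ** 2 for w in partition_windows(height, width, window))
```

With 2,304 tokens, global attention computes 2,304² (about 5.3 million) scores, while windows of size 8 compute 147,456 across 36 windows. The score count keeps shrinking as windows get smaller, so the slowdown at a window size of 1 in Table 4 is consistent with per-window overhead (2,304 separate windows) rather than with score computations.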

Effect of neighborhood size in LIAU

In the LIAU module, local attention is computed over square neighborhoods, and the neighborhood size controls the receptive field of each attention operation. Smaller neighborhoods focus more on local interactions, while larger ones widen the context window and increase the computational burden. Table 5 presents results for neighborhood sizes of 3, 5, 7, and 9.
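Neighborhood-restricted attention can be sketched as follows, under the assumption that LIAU's neighborhoods behave like those of neighborhood attention [13]: each token attends to a k × k square around it, and the square is shifted inward at the borders so it always contains k² tokens. Keys and values are the raw token vectors here (no learned projections), and `neighborhood` and `neighborhood_attention` are hypothetical names.

```python
import math


def neighborhood(i, j, height, width, k):
    """Coordinates of the k x k neighborhood around (i, j).

    Near the borders the square is shifted inward so it always holds k * k
    tokens; assumes k <= height and k <= width.
    """
    top = min(max(i - k // 2, 0), height - k)
    left = min(max(j - k // 2, 0), width - k)
    return [(r, c) for r in range(top, top + k) for c in range(left, left + k)]


def neighborhood_attention(grid, k):
    """Each token attends (scaled dot product) only to its k x k neighbors."""
    height, width, dim = len(grid), len(grid[0]), len(grid[0][0])
    out = [[None] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            q = grid[i][j]
            nbrs = [grid[r][c] for r, c in neighborhood(i, j, height, width, k)]
            scores = [sum(a * b for a, b in zip(q, n)) / math.sqrt(dim) for n in nbrs]
            m = max(scores)  # subtract the max for numerical stability
            exps = [math.exp(s - m) for s in scores]
            total = sum(exps)
            out[i][j] = [sum(e * n[d] for e, n in zip(exps, nbrs)) / total
                         for d in range(dim)]
    return out
```

Each token's cost is proportional to k², independent of the image size, so the total cost grows linearly with the number of tokens; moving from k = 5 to k = 9 roughly triples the score computations per token (81 vs. 25).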

Table 5.

Ablation on LIAU neighborhood size.

| Neighborhood size | mAP@0.5 (%) | mAP@0.75 (%) | FPS |
|---|---|---|---|
| 3 | 77.6 | 59.1 | **34.9** |
| 5 | **79.7** | **61.5** | 34.5 |
| 7 | 78.9 | 60.4 | 34.3 |
| 9 | 78.2 | 59.7 | 34.0 |

Significant values are in bold.

Analysis

With a neighborhood size of 3, each attention operation covers a small area, which gives the highest speed but limits the contextual information captured. This leads to a slight drop in accuracy compared with a size of 5. A neighborhood size of 5 achieves the best balance between accuracy and computation, with the highest mAP at a small speed cost (34.5 vs. 34.9 FPS). As the size increases to 7 and 9, the receptive field broadens, but both accuracy (78.9% and 78.2% mAP@0.5) and speed (34.3 and 34.0 FPS) decline slightly. These results show that a moderate neighborhood size of 5 strikes the best trade-off for local attention in FoT. Table 6 further compares LIAU with alternative attention mechanisms.

Table 6.

Complexity and performance comparison of different attention mechanisms on Soccer-Det.

| Attention type | Complexity | GFLOPs | Memory (GB) | mAP@0.5 (%) | mAP@0.75 (%) | FPS |
|---|---|---|---|---|---|---|
| Global attention | O(N²) | 45.2 | 8.7 | 76.8 | 58.2 | 12.5 |
| Fixed window |  | 12.8 | 3.5 | 74.2 | 55.6 | **38.2** |
| LIAU (w/o shift) | O(N) | **6.8** | **2.1** | 76.5 | 58.0 | 36.7 |
| LIAU (full) | O(N) | **6.8** | **2.1** | **79.7** | **61.5** | 34.5 |

Significant values are in bold.

Complexity-performance trade-off analysis

We compare different attention mechanisms on the Soccer-Det dataset. LIAU achieves the best accuracy while requiring only 15% of the GFLOPs of global attention (6.8 vs. 45.2) and about a quarter of its memory (2.1 vs. 8.7 GB). The shifted window mechanism (rows 3 and 4) adds 3.2% mAP@0.5 and 3.5% mAP@0.75 with no increase in GFLOPs or memory and a modest drop in speed (36.7 to 34.5 FPS), validating the cross-window interaction it provides. These findings confirm that local attention with proper cross-window interaction achieves a superior efficiency-performance trade-off for real-time football detection.
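The window offset can be sketched with the cyclic shift used by the Swin Transformer [10]: the grid is rolled by half a window before partitioning, so tokens that sat on opposite sides of a window boundary end up in the same window. Whether LIAU uses exactly this scheme is an assumption, the attention mask Swin applies to wrapped-around tokens is omitted, and `roll_grid` and `window_of` are hypothetical helpers.

```python
def roll_grid(grid, shift):
    """Cyclically shift a 2D grid up and left by `shift` (like torch.roll with negative shifts)."""
    h, w = len(grid), len(grid[0])
    return [[grid[(r + shift) % h][(c + shift) % w] for c in range(w)] for r in range(h)]


def window_of(grid, window):
    """Map each token label to the index of the non-overlapping window containing it."""
    h, w = len(grid), len(grid[0])
    per_row = w // window
    return {grid[r][c]: (r // window) * per_row + c // window
            for r in range(h) for c in range(w)}
```

On an 8 × 8 grid with tokens labeled `r * 8 + c` and windows of size 4, tokens 3 and 4 (horizontal neighbors) fall into different windows before the shift and into the same window after `roll_grid(grid, 2)`. Alternating regular and shifted partitions therefore lets information cross window boundaries without any extra attention FLOPs, in line with the identical GFLOPs of the two LIAU rows in Table 6.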

Hyperparameter sensitivity analysis

To further verify the robustness of our optimization objective, we conduct a sensitivity analysis on the three balancing weights in the total loss function (Eq. 16): the classification loss weight, the bounding box regression weight, and the Generalized IoU loss weight.

We evaluate three pairwise combinations of these weights while keeping the third fixed at its default value. Specifically, we sweep values in the range  for each pair and record the resulting mAP over the grid. As shown in Figure 4, all three surfaces are smooth and flat, indicating that performance is relatively insensitive to moderate changes in the loss weights. The best result is obtained with  , where mAP peaks at 0.7975. Other combinations, such as  and  , also yield competitive mAP scores of 0.7967 and 0.7951, respectively. These results indicate that FoT is robust to variation in the loss weights and does not require fine-grained tuning of the objective to achieve high performance.
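The pairwise sweep can be sketched as a grid search in which one weight stays at its default while the other two vary. The `evaluate` callable, the grid, and the default weights are hypothetical stand-ins; in practice `evaluate` would train or fine-tune FoT with the given weights and return validation mAP.

```python
from itertools import combinations, product

NAMES = ("cls", "l1", "giou")


def sweep_pairs(evaluate, defaults, grid):
    """For each pair of loss weights, evaluate every grid point with the third weight fixed.

    evaluate: callable mapping a {name: weight} dict to a score (e.g. validation mAP).
    Returns {pair: (best_score, best_weights)}.
    """
    results = {}
    for pair in combinations(NAMES, 2):
        best = (float("-inf"), None)
        for values in product(grid, repeat=2):
            weights = dict(defaults)          # start from the default configuration
            weights.update(zip(pair, values)) # overwrite the two swept weights
            score = evaluate(weights)
            if score > best[0]:
                best = (score, weights)
        results[pair] = best
    return results
```

A flat landscape shows up in such a sweep as small differences between the best and neighboring grid points within each pair.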

Fig. 4.

Sensitivity analysis of loss weight combinations. Each surface plot shows the impact of varying two of the three loss weights while the third is fixed; the left, middle, and right panels cover the three pairwise combinations. Red dots indicate the best-performing configurations.

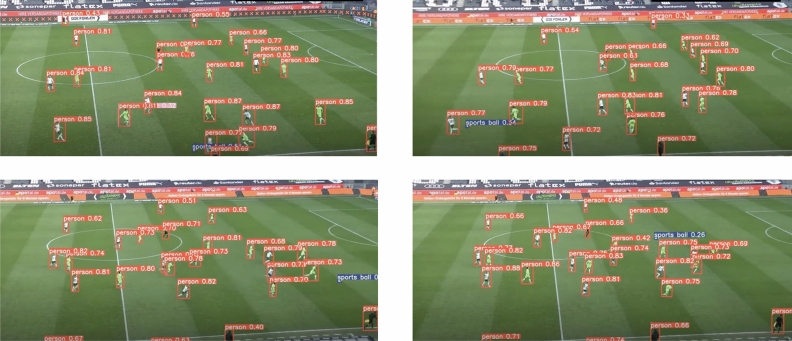

Qualitative visualization of detection results

To complement the quantitative results, we provide qualitative visualizations of detection outputs from FoT in Figure 5. Each frame displays detected objects with class labels and confidence scores. The model detects both players and footballs stably across different camera perspectives and scene layouts. Despite scale variation, dense player distributions, and partial occlusions, it consistently identifies relevant targets with high confidence. Notably, the football, despite being a small and fast-moving object, is successfully detected in multiple frames. This visual evidence is consistent with the quantitative gains in small object detection and further supports the effectiveness of LIAU and MFIM in capturing fine-grained features.

Fig. 5.

Qualitative detection results from the FoT model. The bounding boxes show detected players and footballs along with confidence scores.

Attention visualization for small object localization

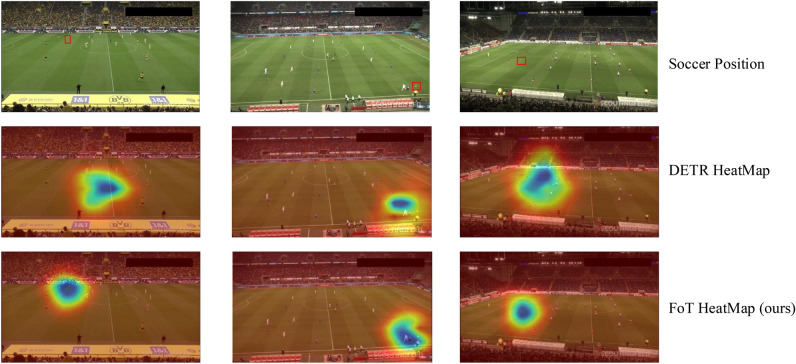

To better understand how our method localizes small objects, we visualize the attention heatmaps of DETR and FoT in Fig. 6. The first row displays the original frames with the ground-truth football locations highlighted by red boxes. The second row shows the attention response maps from DETR, and the third row shows the heatmaps produced by FoT. Whereas DETR exhibits scattered or even misaligned attention, our method shows noticeably better localization: FoT concentrates on the football in all shown examples, even under challenging conditions such as small object scale, motion blur, and occlusion. These results support the claim that the Local Interaction Aggregation Unit (LIAU) and Multi-Scale Feature Interaction Module (MFIM) enhance the model's ability to capture fine-grained cues and preserve important spatial details for small object detection.

Fig. 6.

Attention heatmap comparison between DETR and our FoT model. Top row: original frames with annotated football locations. Middle row: attention heatmaps from DETR showing dispersed or incorrect focus. Bottom row: attention heatmaps from FoT correctly attending to small football targets.

Discussion

Our experimental results show that FoT achieves the best accuracy among the compared methods on both Soccer-Det (79.7% mAP@0.5) and FIFA-Vid (77.2% mAP@0.5) while maintaining real-time inference (34.5 FPS on Soccer-Det). We attribute this to the complementary design of LIAU and MFIM: LIAU's local attention reduces computation by 85% relative to global attention (from 45.2 to 6.8 GFLOPs) while improving accuracy, and MFIM's progressive fusion preserves the multi-scale features that football detection depends on. The ablation studies show that combining the two modules improves mAP@0.5 by 6.3% and mAP@0.75 by 7.9% over the baseline; at mAP@0.75 the joint gain exceeds the sum of the individual gains, indicating a synergistic effect under stricter localization. The attention visualizations further confirm that FoT focuses on small objects such as footballs, a key challenge for DETR. These results establish FoT as an effective solution for real-time football video analysis, striking a favorable balance between detection accuracy and computational efficiency for practical deployment in sports broadcasting and analytics.

Limitations and future work

To further analyze the limitations of our model, we present several failure cases in Fig. 7. The first row shows the original input images, the second row presents the ground-truth annotations, and the third row visualizes detection results from FoT. For clarity, we display only predictions that fail to match a ground-truth object at the standard IoU threshold of 0.5. Specifically, a failure is a predicted bounding box whose IoU with every ground-truth box is below 0.5; such a prediction counts as a false positive rather than a true positive when computing mAP@0.5.
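The failure criterion can be expressed as a simple filter: a prediction is kept for display when its IoU with every ground-truth box falls below the threshold. Boxes are assumed to be (x1, y1, x2, y2), and `failed_predictions` is a hypothetical helper.

```python
def iou(a, b):
    """Intersection over union for boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def failed_predictions(pred_boxes, gt_boxes, threshold=0.5):
    """Predictions whose IoU with every ground-truth box is below the threshold."""
    return [p for p in pred_boxes
            if all(iou(p, g) < threshold for g in gt_boxes)]
```

A prediction that half-overlaps a player box (IoU about 0.14 for two equal squares offset by half their side) is flagged as a failure, as is a box on an advertising board with no ground truth nearby.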

Fig. 7.

Representative failure cases of the FoT model. Top row: input frames. Middle row: ground truth annotations. Bottom row: false predictions (IoU < 0.5). Columns 1–3 show confusion with advertising boards; column 4 highlights missed detections due to small objects and color similarity; column 5 reflects detection failures under strong illumination.

From the visualization, several representative failure modes can be observed. In the first three columns, false positive predictions are primarily caused by advertising boards and stadium decorations that visually resemble players due to their upright, rectangular appearance and placement near the field boundary. The fourth column demonstrates the difficulty in detecting far-distance players and small-scale footballs. In this case, the football occupies only a few pixels and appears visually ambiguous due to its color blending with players’ jerseys. The last column illustrates the negative impact of extreme lighting conditions, where strong sunlight leads to overexposed regions, reducing the model’s ability to distinguish object boundaries and fine textures. It is worth noting that many of these cases are intrinsically challenging even for human annotators. They are often induced by the dataset itself, including imperfect annotations, motion blur, harsh illumination, and low resolution. Although our method performs robustly across most scenarios, these failure cases highlight opportunities for further improvement.

In future work, we plan to incorporate scene-aware priors, such as pitch layout and contextual spatial constraints, to help disambiguate static background elements from active targets. Extending the model to exploit multi-frame temporal information may also improve its ability to track motion continuity, which is particularly beneficial for small or fast-moving objects. We also aim to improve illumination robustness through light-invariant feature learning or adaptive exposure normalization. Finally, improving the quality and diversity of training data, especially through targeted augmentation for edge cases, could further improve generalization under challenging conditions.

Conclusion

In this work, we propose Football Transformer (FoT), an end-to-end detection framework tailored for football video analysis that jointly addresses real-time inference, complex scene structure, and small object localization. Its key components are the Local Interaction Aggregation Unit (LIAU), which enables efficient, fine-grained attention modeling within local windows, and the Multi-Scale Feature Interaction Module (MFIM), which enhances semantic consistency across feature levels. Extensive experiments on Soccer-Det and FIFA-Vid show that our method outperforms both convolutional and Transformer-based baselines in mAP while running faster than all Transformer-based baselines. In particular, FoT shows a strong ability to detect small-scale, low-saliency targets such as footballs.

Acknowledgements

We sincerely thank all those who supported and assisted in this research. It is through your support and collaboration that this study was successfully completed. We are especially grateful to our supervisor for the dedicated guidance and strong support provided throughout the project. The supervisor contributed to the research design and offered crucial advice on technical approaches and research directions, greatly enhancing the scientific rigor and reliability of the study. We extend our heartfelt thanks once again to everyone who contributed to this research.

Author contributions

WT-Z was responsible for the overall design and planning of the study, conducted the literature search, data analysis, and drafted the initial manuscript. YC-Y participated in the study design and data processing, prepared figures, and contributed to the revision and editing of the manuscript. Both authors reviewed and approved the final version of the manuscript and take full responsibility for its content and accuracy.

Funding

This research received no external funding.

Data availability

All data generated or analysed during this study are available to readers upon request to the author WT-Z.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Liu, G., Luo, Y., Schulte, O. & Kharrat, T. Deep soccer analytics: Learning an action-value function for evaluating soccer players. Data Mining Knowl. Discov. 34, 1531–1559 (2020).

- 2. Akan, S. & Varlı, S. Use of deep learning in soccer videos analysis: Survey. Multimedia Syst. 29, 897–915 (2023).

- 3. Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39, 1137–1149 (2016).

- 4. Liu, W. et al. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14, 21–37 (Springer, 2016).

- 5. Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 779–788 (2016).

- 6. Lei, M. & Wang, X. EPPS: Advanced polyp segmentation via edge information injection and selective feature decoupling. arXiv:2405.11846 (2024).

- 7. Lei, M., Wu, H., Lv, X. & Wang, X. ConDSeg: A general medical image segmentation framework via contrast-driven feature enhancement. In Proceedings of the AAAI Conference on Artificial Intelligence 39, 4571–4579 (2025).

- 8. Carion, N. et al. End-to-end object detection with transformers. In European Conference on Computer Vision, 213–229 (Springer, 2020).

- 9. Zhu, X. et al. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv:2010.04159 (2020).

- 10. Liu, Z. et al. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 10012–10022 (2021).

- 11. Wang, W. et al. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 568–578 (2021).

- 12. Dong, X. et al. CSWin Transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 12124–12134 (2022).

- 13. Hassani, A., Walton, S., Li, J., Li, S. & Shi, H. Neighborhood attention transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6185–6194 (2023).

- 14. Liu, D., Cui, Y., Tan, W. & Chen, Y. SG-Net: Spatial granularity network for one-stage video instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9816–9825 (2021).

- 15. Zhao, Y. et al. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16965–16974 (2024).

- 16. Han, C. et al. Prototypical transformer as unified motion learners. arXiv:2406.01559 (2024).

- 17. Wang, W., Han, C., Zhou, T. & Liu, D. Visual recognition with deep nearest centroids. arXiv:2209.07383 (2022).

- 18. Chen, Q. et al. You only look one-level feature. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13039–13048 (2021).

- 19. Xu, C. et al. RFLA: Gaussian receptive field based label assignment for tiny object detection. In European Conference on Computer Vision, 526–543 (Springer, 2022).

- 20. Yuan, X., Cheng, G., Yan, K., Zeng, Q. & Han, J. Small object detection via coarse-to-fine proposal generation and imitation learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 6317–6327 (2023).

- 21. Zhang, G. et al. Towards efficient use of multi-scale features in transformer-based object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6206–6216 (2023).

- 22. Liang, J., Zhou, T., Liu, D. & Wang, W. ClustSeg: Clustering for universal segmentation. arXiv:2305.02187 (2023).

- 23. Huang, Y.-X., Liu, H.-I., Shuai, H.-H. & Cheng, W.-H. DQ-DETR: DETR with dynamic query for tiny object detection. In European Conference on Computer Vision, 290–305 (Springer, 2024).
