Abstract

Manifold learning builds on the “manifold hypothesis,” which posits that data in high-dimensional datasets are drawn from lower-dimensional manifolds. Current tools generate global embeddings of data, rather than the local maps used to define manifolds mathematically. These tools also cannot assess whether the manifold hypothesis holds true for a dataset. Here, we describe DeepAtlas, an algorithm that generates lower-dimensional representations of the data’s local neighborhoods, then trains deep neural networks that map between these local embeddings and the original data. Topological distortion is used to determine whether a dataset is drawn from a manifold and, if so, its dimensionality. Application to test datasets indicates that DeepAtlas can successfully learn manifold structures. Interestingly, many real datasets, including single-cell RNA-sequencing, do not conform to the manifold hypothesis. In cases where data is drawn from a manifold, DeepAtlas builds a model that can be used generatively and promises to allow the application of powerful tools from differential geometry to a variety of datasets.

Introduction

Over the past several decades, the collection and analysis of high-dimensional data has emerged as a major theme across widely disparate fields including agriculture (1), civil engineering (2), environmental studies (3), finance and economics (4), sales (5), and many, many more. High-dimensional data is particularly an issue for single-cell genomics, such as single-cell RNA-sequencing (scRNA-seq), where we simultaneously measure the expression levels of thousands of genes across thousands to millions of single cells (6, 7). The sheer size of these datasets poses significant challenges for their analysis (8, 9).

While high-dimensional, these data are often thought of as being sampled from a much lower-dimensional manifold (10–12). This idea is known as the “manifold hypothesis,” which holds that high-dimensional datasets can be productively analyzed and understood in terms of the lower-dimensional manifold structures they contain (13, 14). It has in turn given rise to “manifold learning,” which attempts to develop algorithms to find these manifold structures. Current manifold learning tools are focused on dimensionality reduction, and work by generating a lower-dimensional global representation of the data (15–18). Perhaps the most well-known example of such an algorithm is Principal Component Analysis (PCA), which identifies a set of orthogonal directions (the principal components), ordered so that the leading components capture as much of the variance in the data as possible (19). Non-linear tools like t-SNE and UMAP have also become extremely popular for dimensionality reduction and visualization of data, particularly in the context of single-cell genomics studies (20–23).

As an example, consider the S-curve, which is a classic example of a 2-dimensional manifold embedded in 3-dimensional space (Fig. 1A). This dataset can easily be globally embedded into 2 dimensions, simply by uncurling the curved sheet into a flat plane (see Methods). Application of UMAP to this dataset with the default parameters unfolds the data into 2D but introduces a set of holes or “tears” into the data. In contrast, a sphere (Fig. 1B) is also a 2-dimensional manifold, but it is mathematically impossible to embed the entire sphere in 2 dimensions. Application of tools like UMAP to the sphere generates an even more distorted representation, with very large tears in the dataset. These tears are problematic, in that they severely disrupt the structure of the data: points that are near neighbors of one another in the original dataset can end up on completely opposite sides of the lower-dimensional representation (Figs. 1A and 1B).

Fig. 1. Learning manifolds.

(A) The 3D S-curve dataset and true 2D embedding (left) and UMAP embedding (right). (B) A spherical dataset and its UMAP embedding. (C) Schematic of the steps of DeepAtlas applied to a 2D oval shape.

We recently developed a method to quantify this type of topological distortion and used it to show that a large set of “manifold learning” tools cannot generate effective lower-dimensional embeddings (24). We found this was true both for simple synthetic data, such as hyperspheres, and for real data from single-cell genomics (25, 26). This suggests that, while potentially useful for visualization, currently-available manifold learning tools are not able to effectively learn manifold structures from data. This is in part due to the focus that these tools have on generating global embeddings. As described below, the mathematical definition of a manifold is local in nature, but that fact is not directly leveraged by any currently-available manifold learning tool.

In a practical sense, when presented with data, we may have two questions. First, is the data actually sampled from a lower-dimensional manifold? And, if so, can we generate a mathematical model of that manifold structure? Here, we present DeepAtlas, a tool that effectively answers both of those questions. The first step in this analysis pipeline involves assessing whether or not the data is likely drawn from a manifold, since we can hardly learn a manifold structure if none is present in the data. This step also allows us to determine the dimensionality of the manifold, if one is present. The second step of the pipeline involves using a deep neural network to learn a model of the manifold itself. We do this by learning a set of “local charts” for the data, and the collection of these charts forms an atlas for the manifold.

Application of the first part of the pipeline to synthetic data demonstrates that DeepAtlas accurately estimates the dimensionality of manifolds, even when the dimensionality of the data is high relative to the number of sampled data points. Interestingly, we find that several real datasets actually show no evidence of being drawn from a manifold, including those classically used for testing manifold learning tools and scRNA-seq data. Other real-world datasets, however, are consistent with being drawn from a manifold, including the classic MNIST test dataset of images of hand-written digits. For both synthetic and real data, we demonstrate that DeepAtlas can effectively learn a differentiable model from this data. We show that this model can be used generatively to produce new data points from the original manifold by sampling from the local representations of the data. To our knowledge, this work represents the first attempt to actually directly apply the mathematical definition of a manifold to study data.

Results

Overview of DeepAtlas

As mentioned in the introduction, high-dimensional data is often analyzed based on the “manifold hypothesis,” which posits that high-dimensional data is drawn from a lower-dimensional manifold (see the examples of the S-curve and sphere in Figs. 1A and 1B). In discussing the manifold hypothesis further, it is helpful to revisit the mathematical definition of a manifold. Our discussion here is not meant to be completely formal, but is consistent with more rigorous treatments (11, 12, 27, 28). We say a set $M$ is an $n$-dimensional manifold if and only if there exists a covering of open subsets of $M$ such that, for each open subset $U_i$ in this covering, there exists a homeomorphism between $U_i$ and an open subset of Euclidean space $\mathbb{R}^n$. Recall that a set $A$ is homeomorphic to a set $B$ when there is a continuous, invertible function between them such that the inverse is also continuous; we call this function $\varphi_i$ for each member $U_i$ of this covering. Intuitively, this means that there is a way to “locally” represent regions of the manifold as an $n$-dimensional Euclidean space (Fig. 1C). A great example of this is the surface of the Earth. Globally, the Earth is obviously spherical, but it is possible to make a completely flat map of a city like Los Angeles. Every point in Los Angeles corresponds to a point on the map (e.g., think of finding a specific address on a map); that correspondence represents the action of the function $\varphi_i$. As a result of this obvious correspondence with maps of locations on the Earth, these functions are often referred to as “charts” or “coordinate charts”. The collection of the set of charts and their corresponding open subsets of $M$ forms an “atlas,” similar to how a collection of local maps can form an atlas of the Earth.

It is often helpful to consider a “differentiable manifold,” which imposes an additional requirement. Specifically, consider two sets $U_i$ and $U_j$ in the covering of open sets in $M$, and say they have a non-trivial intersection $U_i \cap U_j \neq \emptyset$. If the manifold is differentiable, then for any point in the image of this intersection, $\varphi_i(U_i \cap U_j)$, the transition function $\varphi_j \circ \varphi_i^{-1}$ must be differentiable; here, we will take these functions to be smooth, meaning infinitely differentiable. These overlapping regions are often referred to as “transition regions.” In the example of using a collection of local 2D maps to describe the 3D globe of the Earth, these transition regions are the areas shown at the edges of multiple maps so that you can cross from one local region to the next while navigating.

The collection of algorithms referred to as “manifold learning” universally seeks to obtain global embeddings of the data (11); for instance, UMAP attempts to “unfold” datasets like the S-curve globally into a 2-dimensional representation (Fig. 1A). However, many of these algorithms actually fail to generate reasonable embeddings. For instance, UMAP performs fairly well on the S-curve, but introduces tremendous distortion when applied to a non-trivial manifold like the sphere (Fig. 1B). Indeed, we found that manifold learning algorithms generally fail to generate effective embeddings, even when presented with relatively trivial problems like embedding a hypersphere (24). This suggests a need to develop algorithms that leverage the definition of a manifold as a local representation.

Here we propose a new algorithm, DeepAtlas, as a solution to this problem. The approach has three main steps: 1) generate local neighborhoods, 2) determine the embedding dimension and generate local embeddings, and 3) learn the functions to construct an atlas between the high and low dimensional representations of each local neighborhood (Fig. 1C). This process allows DeepAtlas to approach the data locally to minimize distortion, identify the dimension of a manifold if one exists, and learn the functions that map between local embeddings in that lower dimension and the original dataset.

Clustering

The first step in DeepAtlas is to determine the covering of subsets, which we do by generating “local neighborhoods” in the original data (Fig. 1C). Here we use the $k$-means clustering algorithm, which assigns each point to the cluster whose center (the mean of the points in that cluster) is nearest. We chose to focus on $k$-means because it generates local neighborhoods that correspond to our geometric intuition from common examples like the sphere or S-curve, and because we found it works well on the example cases described below. Importantly, DeepAtlas is designed to be modular and any other clustering algorithm can be substituted as needed.

Once the data has been partitioned into clusters, we determine the transition regions between the clusters. To do this, we first compute the nearest neighbors of all the points in the dataset. A transition point is defined as a point that has at least one of these nearest neighbors in a different cluster. We then expand the original clusters as follows: for every transition point, we add each of its neighbors from other clusters to the transition point’s own cluster. This means that our clusters have overlapping sets of points on their borders. As an example, we used $k$-means clustering to partition the S-curve into clusters (Fig. 2A). After adding the transition points (colored black in Fig. 2A) to the relevant clusters, it is clear how these local regions can be “stitched together” by the transition regions between them. Note that the number of clusters $k$ and the number of nearest neighbors are parameters that can be set by the user; some discussion of how to determine meaningful values of these parameters, particularly $k$, can be found below.
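The clustering and cluster-expansion steps described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the DeepAtlas implementation: the function names (`kmeans`, `knn_indices`, `expand_clusters`), the brute-force neighbor search, and the fixed iteration count are all hypothetical choices made for readability.

```python
import numpy as np

def kmeans(X, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm (illustrative); returns one cluster label per point."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each point to its nearest center.
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)
        # Move each center to the mean of its points (left unchanged if empty).
        for c in range(k):
            if np.any(labels == c):
                centers[c] = X[labels == c].mean(axis=0)
    return labels

def knn_indices(X, n_neighbors):
    """Brute-force nearest neighbors; row i holds the neighbors of point i."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)  # a point is not its own neighbor
    return np.argsort(d, axis=1)[:, :n_neighbors]

def expand_clusters(labels, nbrs, k):
    """Add to each cluster the out-of-cluster neighbors of its transition points."""
    members = [set(np.flatnonzero(labels == c)) for c in range(k)]
    for i, row in enumerate(nbrs):
        for j in row:
            if labels[j] != labels[i]:     # point i is a transition point
                members[labels[i]].add(j)  # pull neighbor j into i's cluster
    return [np.array(sorted(m)) for m in members]
```

After expansion, neighboring clusters share points along their borders, which is what allows the local regions to be stitched together.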

Fig. 2. DeepAtlas Leverages Local Neighborhoods.

(A) 3D S-curve example partitioned into clusters. Each cluster has transition points included in both neighboring clusters, highlighted in black. Clusters are reduced to 2D with PCA and aligned to show how they can be stitched together. (B) Visual explanation and mathematical definition of the Average Jaccard Distance (AJD), a metric used to quantify topological distortion introduced by dimensionality reduction. (C) Comparison of the AJDs of global PCA and average AJDs across local neighborhoods generated by DeepAtlas.

Generating local embeddings

The next step in the pipeline involves generating the lower-dimensional embeddings of these local regions. To do this, it is helpful to introduce our previous work, which focused on understanding to what extent an embedding introduces topological distortion into the data (24). To quantify this distortion, we developed a statistic based on the Jaccard distance, which measures dissimilarity between the nearest neighbors of a point in the original $m$-dimensional dataset and the nearest neighbors of that same point when it is compressed into $n$ dimensions, where $n < m$. Let $A_i$ be the set of nearest neighbors of a given point $x_i$ in the original data and $B_i$ be the set of nearest neighbors of $x_i$ in the lower-dimensional representation (Fig. 2B). The Jaccard distance for point $x_i$ is defined as:

$$J(x_i) = 1 - \frac{|A_i \cap B_i|}{|A_i \cup B_i|} \tag{1}$$

where $|A|$ represents the cardinality of the set $A$. The larger the Jaccard distance, the more dissimilar the neighborhoods are: $J(x_i) = 0$ when the neighborhoods are identical and there is no distortion, and $J(x_i) = 1$ when the neighborhoods of the point are completely different. Formally, a lower-dimensional representation that is actually an embedding should have no distortion, so we should have $J(x_i) = 0$ at each point. A simple way to get a sense for the overall distortion in a dataset is to average the Jaccard distance across all the points in the dataset. This produces a statistic we call the “Average Jaccard Distance” or AJD:

$$\mathrm{AJD} = \frac{1}{N} \sum_{i=1}^{N} J(x_i) \tag{2}$$

where $N$ is the total number of points in the dataset (Fig. 2B).
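As a concrete illustration of the AJD, the following minimal sketch computes the Jaccard distance between each point's neighbor sets in two representations and averages the result. The function names, the brute-force neighbor search, and the default of 20 neighbors are assumptions made for this example rather than details taken from the original work.

```python
import numpy as np

def knn_sets(X, n_neighbors):
    """Return, for every point, the set of indices of its nearest neighbors."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)  # exclude the point itself
    idx = np.argsort(d, axis=1)[:, :n_neighbors]
    return [set(row) for row in idx]

def average_jaccard_distance(X_high, X_low, n_neighbors=20):
    """Mean over points of 1 - |A & B| / |A | B| for the two neighbor sets."""
    A = knn_sets(X_high, n_neighbors)
    B = knn_sets(X_low, n_neighbors)
    jd = [1.0 - len(a & b) / len(a | b) for a, b in zip(A, B)]
    return float(np.mean(jd))
```

A representation that preserves every neighborhood yields an AJD of 0, while one that scrambles all neighborhoods yields an AJD of 1.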

We tested a wide variety of dimensionality reduction algorithms on simple manifolds like the hypersphere and found that nearly every algorithm we tested introduced high levels of distortion (24). For instance, application of UMAP to the S-curve in Figs. 1A and 2B results in an AJD of 0.35, indicating a high level of distortion even for this simple case. Indeed, the only approach that we found that could reliably reduce the dimensionality of data with close-to-0 distortion in the known minimal embedding dimension for hyperspheres was PCA (19, 24), which was effective regardless of the underlying dimensionality of the hypersphere from which the data was drawn. As such, in this work, we focus on using PCA to generate our local embeddings. As mentioned above, DeepAtlas is entirely modular, and can be used with any linear or non-linear dimensionality reduction tool.

We first tested this approach on the S-curve and sphere, which we know both have a local dimension of 2. We found that PCA generated very low-distortion embeddings for the local clusters in these data, even though the global structures could not be embedded in 2D without very high levels of distortion (Fig. 2C). We next considered the “Swiss Roll,” another locally 2D manifold frequently used for testing manifold learning algorithms (Fig. S1, 29). Interestingly, we found that our initial choice of $k$ resulted in high levels of distortion (Fig. 2C); visual inspection of the 2D embeddings showed that the problem in this case was that, at this value of $k$, the clusters were not confined to points on the manifold, but rather included points that were close in 3-dimensional space but not neighbors on the surface of the Swiss Roll (Fig. S1A and S1C). We progressively increased the value of $k$ until the local embeddings had low AJD (Fig. S1B and S1D). This demonstrates that the strategy of using PCA for local embeddings also works with the Swiss Roll, and highlights how the AJD can be used to guide the choice of critical parameters.

In general, however, we will not know a priori what the local dimension is for any given dataset we encounter. The approach we take thus involves applying PCA to each of the $k$-means clusters and varying the number of principal components (PCs) used to represent the data from 1 to $m$, the original dimensionality of the data. The resulting plot of the AJD vs. local embedding dimension provides a straightforward way to determine the value of the local embedding dimension $n$. To test this approach in higher dimensions, we considered the 9-dimensional hypersphere $S^9$, which can be globally embedded in 10 dimensions or more ($\mathbb{R}^{10}$ or higher). We used a simple computational approach we developed in our previous work to sample 5,000 data points with uniform probability from the surface of the 9-dimensional hypersphere; note that each point sampled in this way is just a vector in $\mathbb{R}^{10}$ (see Methods for further details, 24). We can trivially embed this manifold in $\mathbb{R}^{20}$ by appending 10 zero elements to each vector. While this is obviously an extremely simple example, we note that all available “manifold learning” tools we tested, including popular tools like t-SNE and UMAP, were unable to identify a low-distortion embedding for this and similar examples, even when attempting to embed from $\mathbb{R}^{20}$ into $\mathbb{R}^{10}$. Indeed, PCA was the only tool we found that could achieve success in this case (24).
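A test dataset of this kind can be generated with a standard technique: normalizing independent Gaussian vectors gives points distributed uniformly on the unit sphere. The sketch below uses that technique and then pads with zeros; it is an assumption that this matches the sampling procedure described in the Methods, and the function name and arguments are hypothetical.

```python
import numpy as np

def sample_hypersphere(n_points, sphere_dim, ambient_dim, seed=0):
    """Sample uniformly from the unit sphere S^sphere_dim, zero-padded to ambient_dim."""
    rng = np.random.default_rng(seed)
    # Normalized isotropic Gaussian vectors are uniform on the sphere.
    g = rng.normal(size=(n_points, sphere_dim + 1))
    pts = g / np.linalg.norm(g, axis=1, keepdims=True)
    # Append zero coordinates to place the sphere in a higher-dimensional space.
    pad = np.zeros((n_points, ambient_dim - (sphere_dim + 1)))
    return np.hstack([pts, pad])

# 5,000 points on the 9-dimensional hypersphere, placed in 20 dimensions.
X = sample_hypersphere(5000, sphere_dim=9, ambient_dim=20)
```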

To test our approach for identifying the correct local embedding dimension, we first applied $k$-means clustering to the data, choosing an initial value of $k$ as a starting point. This resulted in an approximately uniform set of cluster sizes (Fig. S2). We then calculated the AJD for the PCA embedding as a function of the embedding dimension for the individual clusters. As can be seen from Fig. 3A, there is a very distinct behavior here: the clusters’ AJD is close to 0 at a local dimension of 9, and the individual clusters generate almost exactly the same curve. We applied this approach to several other example hyperspheres, ranging in dimensionality from 5 to 1000, and found consistent performance: PCA was always able to identify the correct local embedding dimension (Fig. 3B, Fig. S3).
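The dimension sweep for a single cluster can be sketched as follows: project the cluster onto its first $d$ principal components for increasing $d$ and record the AJD at each step. This is a minimal NumPy sketch; the helper names and the choice of 10 neighbors are assumptions for illustration only.

```python
import numpy as np

def knn(X, n_neighbors):
    """Brute-force nearest-neighbor indices for every point."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    return np.argsort(d, axis=1)[:, :n_neighbors]

def ajd(X_high, X_low, n_neighbors=10):
    """Average Jaccard distance between neighbor sets in the two spaces."""
    A, B = knn(X_high, n_neighbors), knn(X_low, n_neighbors)
    return float(np.mean([1 - len(set(a) & set(b)) / len(set(a) | set(b))
                          for a, b in zip(A, B)]))

def pca_scores(X):
    """PCA via SVD; columns are ordered by decreasing variance."""
    Xc = X - X.mean(axis=0)
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    return U * S

def ajd_curve(X_cluster, n_neighbors=10):
    """AJD of the PCA embedding for d = 1, 2, ... components of one cluster."""
    scores = pca_scores(X_cluster)
    return [ajd(X_cluster, scores[:, :d], n_neighbors)
            for d in range(1, scores.shape[1] + 1)]
```

Plotting one such curve per cluster gives the kind of AJD vs. embedding dimension plot used to read off the local dimension: the curves drop to near 0 at the dimension where the local neighborhoods are faithfully represented. Note that the number of available components is at most the smaller of the number of points and the number of features in the cluster.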

Fig. 3. DeepAtlas Performs Well on Test Datasets.

AJD vs. PCA embedding dimension plots, where each gray or colored line is a cluster and the black line is the average. (A) 10D hypersphere in 20D space. (B) 20D hypersphere in 40D space. (C) The Pima diabetes dataset. (D) The Boston housing dataset. (E) The wine quality dataset. (F) The wheat seeds dataset. Note that we do not see indication of a consistent lower-dimensional manifold in C or D.

We then applied this approach to more realistic datasets that are often used as examples for testing unsupervised and manifold learning algorithms (30). This includes a dataset of biomarkers from the Pima Native American peoples, features of houses related to housing prices, and physical characteristics of wine and wheat seed samples (Figs. 3C–F), as well as several others (Fig. S4). In each case, we chose an initial value of $k$ such that the smallest clusters would never have fewer than 50 points. Since these datasets vary significantly in size (with the Wheat dataset the smallest and the Wine dataset the largest), the value of $k$ for our initial tests also varied (Figs. 3C–F). Interestingly, we saw two distinct classes of behaviors for these datasets. In the Pima and Housing datasets, we see that individual clusters vary wildly in terms of AJD vs. embedding dimension using PCA. For instance, in the 8-dimensional Pima dataset, some clusters achieve relatively low distortion (less than 0.2) at around 6 dimensions, while others require all 8 dimensions to achieve similarly low distortion (Fig. 3C). The case for the Housing dataset is similar (Fig. 3D). In the Wine and Wheat datasets, however, we see much more uniform behavior across clusters; the 12-dimensional Wine dataset is (locally) 11-dimensional (Fig. 3E), while the Wheat dataset is approximately 4-dimensional (Fig. 3F).

These findings indicate that the local neighborhoods of the Pima and Housing datasets do not have a consistent dimensionality. Formally, a dataset might be drawn from a union of manifolds, that is, disjoint “pieces” of different dimensionality: consider, for instance, a dataset consisting of the union of a circle and a sphere (Fig. S5). Each connected component of this dataset is its own manifold with a single local dimensionality; if the local dimensions are the same, we can technically consider the entire dataset as a manifold. This suggests that, in addition to exploring the dimensionality of these local neighborhoods, it is also important to consider whether the dataset itself might consist of the union of different manifolds.

DeepAtlas offers two complementary approaches to determining the underlying connectivity of the dataset. The first is generated by the “transition points” between the clusters we obtain. We can use transition points to represent any dataset as a graph, where the nodes are the clusters and we place an edge between two clusters if they have transition points shared between them (in other words, if one of the nearest neighbors of a point from one cluster is in the other). A picture of this graph is provided for each of the datasets in Fig. 3; note that here, the weight or “width” of each edge is proportional to the number of transition points between the two clusters. This approach is similar to the PAGA algorithm developed by Wolf, Theis, and coworkers for visualization and analysis of scRNA-seq data (31). DeepAtlas also provides a second method for finding distinct connected components in the data, which is based on a graph-based approach that we call $\epsilon$ networks (also known as random geometric graphs, Fig. S6, 32).
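The transition-point graph can be illustrated with a short sketch that counts transition points between every pair of clusters and then finds connected components by a depth-first search. The function names and the exact edge-weight convention are assumptions made for this example; `labels` is expected to be a NumPy integer array of cluster labels and `nbrs` an array whose row `i` lists the nearest neighbors of point `i`.

```python
import numpy as np

def cluster_graph(labels, nbrs, k):
    """Weighted adjacency matrix: entry (a, b) counts transition points linking a and b."""
    W = np.zeros((k, k), dtype=int)
    for i, row in enumerate(nbrs):
        # Each foreign cluster reached by point i contributes one transition point.
        for c in set(labels[row]) - {labels[i]}:
            W[labels[i], c] += 1
            W[c, labels[i]] += 1
    return W

def connected_components(W):
    """Label each cluster with the index of its connected component."""
    k = len(W)
    comp = -np.ones(k, dtype=int)
    n_comp = 0
    for start in range(k):
        if comp[start] >= 0:
            continue
        comp[start] = n_comp
        stack = [start]
        while stack:  # depth-first search over clusters joined by an edge
            u = stack.pop()
            for v in np.flatnonzero(W[u] > 0):
                if comp[v] < 0:
                    comp[v] = n_comp
                    stack.append(v)
        n_comp += 1
    return comp
```

Clusters that end up with different component labels share no transition points, which is the situation in which a dataset may be better treated as a union of separate pieces.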

The hypersphere datasets we generated clearly form a single connected component by construction, and that is reflected in the graph of connections between local neighborhoods for these datasets (Figs. 3A and 3B). The Pima dataset forms a single connected component regardless of whether we use the transition point graph (Fig. 3C) or the $\epsilon$ network approach (Fig. S6C). As mentioned above, however, the local neighborhoods of a connected component of a manifold must have the same dimensionality, and so this stage of the DeepAtlas pipeline strongly suggests that the Pima data cannot be modeled as a manifold. Interestingly, we find that the Housing dataset almost certainly comprises two separate components (Fig. 3D and Fig. S6D); in this case, however, neither of those components has a consistent local dimension (Fig. 3D). So, as with the Pima data, the Housing data does not represent a manifold structure, at least as revealed in the current DeepAtlas analysis.

Both the Wine and the Wheat datasets, however, do seem to have a consistent manifold structure (Figs. 3E and 3F). In the case of the Wine dataset, there is one local cluster that has no connection with the rest of the data. Regardless, the connected component of the data has a consistent dimension of 11 (Fig. 3E). Interestingly, the Wheat dataset forms a simple line-like structure where each local neighborhood is approximately 4-dimensional (Fig. 3F). Using the approach shown in Fig. 3, users can simultaneously evaluate whether their data is likely taken from a manifold, and, if so, determine the local dimensionality of that manifold.

Learning the Atlas

The final step of DeepAtlas is to learn the functions to construct an atlas. We first note that the individual functions that comprise a smooth atlas are often taken to be diffeomorphisms, meaning that they are invertible, continuous, and smooth in both directions. For the “forward” direction, i.e. mapping from the high-dimensional space to the low-dimensional space, we just use PCA itself. PCA is lossy, and so does not possess an exact inverse; we therefore use a deep neural network to learn the inverse for each chart (Fig. 4A). This function takes in the position of a point in the PCA embedding and attempts to predict the original position of that point in the starting dataset. To train this network, we use the standard Mean Squared Error (MSE) loss, which quantifies the error as the average squared distance between the output values of the network and the position of that point in the original high-dimensional space. Given this architecture, we can use standard tools for training neural networks, for instance Stochastic Gradient Descent (SGD) methods implemented in Python, to train the networks themselves (33).
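To make the chart and inverse-chart construction concrete, here is a deliberately simplified NumPy sketch for a single cluster. It is not the architecture used in this work: it assumes one hidden layer with a tanh activation and full-batch gradient descent on the MSE, whereas the text describes deeper networks trained with SGD. All function names and hyperparameters are hypothetical.

```python
import numpy as np

def fit_pca(X, n_components):
    """Return the data mean and the leading principal directions (as rows)."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def forward_chart(X, mean, components):
    """Forward chart: project the high-dimensional points onto the PCs."""
    return (X - mean) @ components.T

def train_inverse_chart(Z, X, hidden=32, lr=0.05, epochs=2000, seed=0):
    """Fit a small network Z -> X by gradient descent on the MSE (simplified)."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=1 / np.sqrt(Z.shape[1]), size=(Z.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=1 / np.sqrt(hidden), size=(hidden, X.shape[1]))
    b2 = np.zeros(X.shape[1])
    losses = []
    for _ in range(epochs):
        H = np.tanh(Z @ W1 + b1)           # hidden activations
        err = H @ W2 + b2 - X              # prediction error
        losses.append(float(np.mean(err ** 2)))
        g_out = 2 * err / err.size         # gradient of the MSE w.r.t. the output
        g_h = (g_out @ W2.T) * (1 - H ** 2)
        W2 -= lr * (H.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (Z.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)

    def predict(Z_new):
        return np.tanh(Z_new @ W1 + b1) @ W2 + b2

    return predict, losses
```

In this sketch, `forward_chart` plays the role of the chart for one local neighborhood and `predict` approximates its inverse; repeating the procedure for every cluster gives one such pair per chart.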

Fig. 4. Learning Inverse Charts.

(A) Schematic of the neural network structure used by DeepAtlas to approximate the inverse of each chart. Beneath we show an example of training the neural network to map back to the original high-dimensional data. (B) AJD comparison to evaluate the performance of the neural network model on the S-curve data. The distributions in the violin plot are taken across the 5 $k$-means clusters. (C) 10-fold cross-validation results to further evaluate the neural network performance, measured using Mean Squared Error (MSE).

Of course, the models’ usefulness hinges on the accuracy of their outputs. We tested multiple versions of this particular architecture with a varying number of layers on a variety of test datasets, all of which yielded similarly good results. For instance, applying our prototype algorithm to the S-curve data, the model achieved a low Mean Squared Error (MSE) with 10 hidden layers and 10,000 epochs of SGD (Fig. S7). To evaluate performance, we first considered the AJD between the original data (i.e. the points on the S-curve manifold, Fig. 4B) and the various low-dimensional representations. In particular, we see low AJD values between the neural network output and the original data, indicating that the training was very successful in this case. The model also consistently yields low MSE when tested with 10-fold cross-validation (Fig. 4C). We also trained similar models for the hypersphere data and the machine learning test datasets (namely the Wheat and Wine datasets, where we see evidence of a consistent manifold, Fig. 3E and 3F), obtaining similarly good performance (Fig. S8). We note that users can alter the network architecture and training protocol to suit any particular dataset. The combination of PCA and these learned inverses gives us an approximation of the atlas for the dataset.

scRNA-Seq Datasets Lack Manifold Structure

A major application area for manifold learning tools is scRNA-seq data. One of the main challenges of analyzing this data is its high dimensionality; raw datasets often report the gene expression of tens of thousands of genes in tens of thousands to even millions of cells. In these data, cells can be thought of as being points in a >20,000-dimensional vector space, and as a result manifold learning tools are essentially ubiquitous in this field. This includes the almost universal reliance on PCA upstream of analyses like cell type clustering, as well as a reliance on visual inspection of 2D visualizations of the data (particularly UMAP plots) to characterize and evaluate cell type clusters and patterns of gene expression (34–37). scRNA-seq data is widely thought to have a lower-dimensional manifold structure, and many pipelines (implicitly or explicitly) make an appeal to the manifold hypothesis to argue that downstream analyses can meaningfully be performed using lower-dimensional representations (36–38). Our previous work demonstrated that linear tools like PCA and non-linear tools like t-SNE and UMAP introduce tremendous topological distortion into scRNA-seq data, with AJD values typically above 0.9 (24). This and other studies (39–42) all suggest that current manifold learning techniques struggle to successfully embed or otherwise represent the actual structure of scRNA-seq data.

We applied DeepAtlas’ pipeline to explore whether scRNA-seq datasets likely conform to the manifold hypothesis. Very few (if any) analyses of scRNA-seq data are carried out on the raw count data itself. Rather, the data is subjected to a more-or-less standard pipeline of transformations before dimensionality reduction is performed; this includes normalizing the data by Counts Per Million (CPM), identifying and focusing on a subset of “Highly Variable Genes” (HVGs), and log transformation (6, 7, 36). We expect that the relevant lower-dimensional manifold should be evident from the raw data, but by applying DeepAtlas at each stage in this pipeline we were able to test how these transformations might influence the underlying structure of the data.
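The preprocessing stages named above can be sketched on a cells-by-genes count matrix as follows. This is a rough illustration only: ranking genes by plain variance is a stand-in for the HVG selection used in standard scRNA-seq pipelines, the use of `log1p` (a pseudocount of 1) is an assumption, and so is the order in which normalization and gene selection are combined. The function names are hypothetical.

```python
import numpy as np

def cpm_normalize(counts):
    """Scale each cell (row) so that its counts sum to one million."""
    totals = counts.sum(axis=1, keepdims=True)
    return counts / np.maximum(totals, 1) * 1e6  # guard against empty cells

def top_variable_genes(X, n_genes):
    """Indices of the n_genes columns with the largest variance (simplified HVGs)."""
    return np.argsort(X.var(axis=0))[::-1][:n_genes]

def log_transform(X):
    """Log transform with a pseudocount of 1."""
    return np.log1p(X)

def preprocessing_stages(counts, n_genes):
    """Return the four representations: raw, HVGs, CPM-normalized HVGs, fully transformed."""
    idx = top_variable_genes(counts, n_genes)
    hvg = counts[:, idx]
    cpm_hvg = cpm_normalize(counts)[:, idx]
    return counts, hvg, cpm_hvg, log_transform(cpm_hvg)
```

Running the local-dimension analysis on each returned matrix in turn is one way to examine how each transformation changes the apparent structure of the data.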

There are a large number of published scRNA-seq studies to which DeepAtlas could be applied. We first chose to focus on an important dataset in which immune cells were sorted into cell-type groups using fluorescence-activated cell sorting (FACS) before being sequenced (43). This allows us to apply our pipeline to groups of cells that all come from the same cell type, based on well-established cell-type markers. The data shown in Fig. 5 is scRNA-seq data for the Natural Killer (NK) cells. We also applied DeepAtlas to other scRNA-seq data including the full PBMC data from the same study that provided the NK data, data from cell lines that also has cells separated into natural groups (44), and simulated scRNA-seq data (Fig. S9, 45).

Fig. 5. We Do Not Observe Manifolds in scRNA-seq.

PC vs. AJD plots for the NK cell scRNA-seq data. All cases used $k = 40$ clusters. (A) Raw data. (B) Highly Variable Genes (HVGs). (C) Counts Per Million (CPM) Normalized HVGs. (D) Fully transformed data: CPM and log transformation applied to the HVGs. Note the lack of consistent manifolds for any of these cases, particularly D, which is the space most often used as the basis for scRNA-seq analysis.

The first step of DeepAtlas is to generate local neighborhoods; here we used $k$-means clustering with $k = 40$. For the 40 $k$-means clusters in the raw NK cell data, we found that the clusters range in size from as few as 5 cells to as many as 1679 (Fig. S10A). We tested varying values of $k$, but the results were similar (Figs. S11, S12). The trend of widely varying cluster sizes was consistent across all stages of data transformation/preprocessing and across all datasets we considered (Fig. S10), and the disparity in cluster sizes likewise persisted across the values of $k$ we tested (Fig. S11). These clustering results are very different from those for the other datasets we considered, where cluster size was very consistent across the various clusters (Figs. S2, S13), and may be a result of the highly uneven distribution of cells in these various transformed gene expression spaces (46).

From a practical perspective, the number of cells in a cluster caps the number of PCs available for that cluster; if we have only 5 cells in a cluster, then at the 5th PC there is essentially one component for each cell, and no further components can be added. This results in sudden, dramatic decreases in the AJD when the PC number gets close to the number of cells in the cluster (Fig. 5). We found that the curves for each cluster do not align closely with each other; some clusters require hundreds more PCs to reach a low AJD compared to others (Fig. 5). This indicates that, at least in the space of the raw counts, the cells are not sampled from a consistent manifold (Fig. 5A). Interestingly, we found lower AJD values overall for the data when just considering the top 2000 HVGs (6, 7, 36), but the individual clusters still do not align to show a consistent local dimension (Fig. 5B). Neither CPM normalization of the data nor log transformation resulted in consistent dimensionality across clusters (Fig. 5C); indeed, after the final step of log transformation, the AJD values increase and the cluster curves visually separate even more (Fig. 5D). This trend was also seen when DeepAtlas was run across other scRNA-seq experiments (44) and even simulated scRNA-seq data generated using the scDesign package (45) (Fig. S9). Note that, in all these cases, analysis of transition points and $\epsilon$ networks both indicate that the datasets comprise a single connected component (Fig. S14). Taken together, these findings suggest that the manifold hypothesis actually does not hold for scRNA-seq data, calling into question the widespread application of that hypothesis in the literature (47–49).

MNIST Digits Data

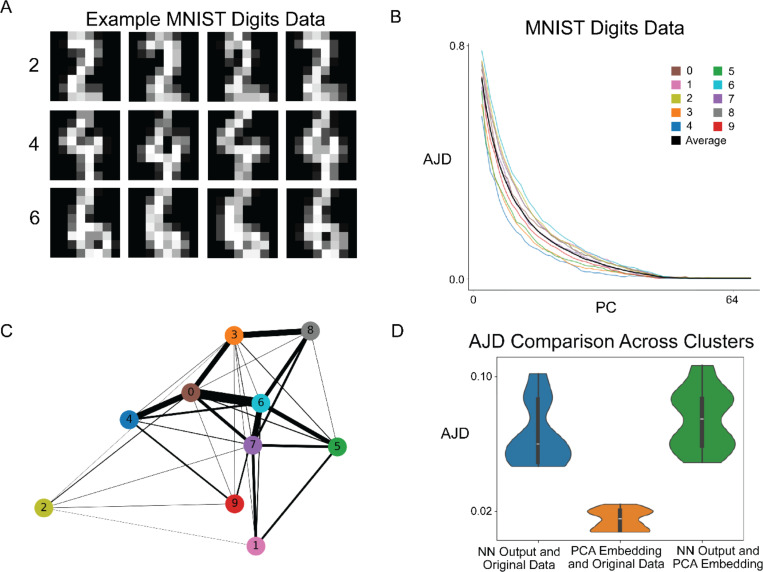

Since we did not find the expected manifold structure in our analyses of various scRNA-seq data, we sought to verify DeepAtlas on another higher-dimensional dataset where a lower-dimensional manifold is thought to exist: the popular dataset of handwritten digits from the Modified National Institute of Standards and Technology (MNIST) database (50). This is a set of 64-dimensional images of handwritten digits 0 through 9, where each value represents the intensity of a pixel of an 8×8 image (Fig. 6A).

Fig. 6. MNIST Digits Show Manifold Structure.

(A) Example images from the MNIST Digits dataset, which consists of 8×8 pixels representing handwritten images of digits 0–9. (B) PC vs. AJD plot of the MNIST data with clusters, indicating a lower dimensional manifold around dimension 40. (C) A graph representation of the MNIST dataset. Each node corresponds to one of the clusters and edges are placed between clusters that have shared nearest neighbors. The clusters are numbered according to which number in the MNIST set makes up the majority of that cluster. The colors here match panel B. The width of each edge is proportional to the number of transition points between the clusters. (D) AJD comparison after applying DeepAtlas and creating a neural network model.

We applied DeepAtlas to this data using 𝑘 = 10 for 𝑘-means clustering, as we expect each of the ten digits to cluster together. The plot of PC vs. AJD for each local neighborhood does indeed indicate the existence of a lower-dimensional manifold (Fig. 6B). The lines representing each cluster are close together and show similar behavior across PC dimensions. Further, all clusters show little to no distortion in PC dimensions above 40. This, combined with the fact that the data consists of a single connected component (Fig. 6C and Fig. S15), suggests that the MNIST data is drawn from a 40-dimensional manifold. We then learned the functions to map from these local 40D PCA embeddings to the 64D original data. Since we are training on image data, we found better performance when using convolutional layers in our neural network. The trained model results in low AJD values, which means there is little distortion between the model output and the true data (Fig. 6D). This demonstrates that DeepAtlas can be trained to construct a differentiable model of real datasets in relatively high dimensions.

Model Applications

The fact that DeepAtlas learns a model of the manifold enables a number of interesting applications. For one, unlike some manifold learning tools, it is very straightforward to apply DeepAtlas to new data that might be experimentally generated for the same system. To map any new data point(s) to their local lower-dimensional representations in the model, all one has to do is assign each point to the appropriate cluster and then apply the PCA transformation for that cluster to represent that point in the local embedding. In the current implementation, assigning a point to a cluster is straightforward: one can do so simply by calculating the distance between the point and each of the 𝑘 cluster means and choosing the cluster whose mean is closest. This makes DeepAtlas readily extensible to new data points.
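The mapping of new points described above can be sketched in a few lines. This is a minimal illustration rather than the DeepAtlas implementation: it assumes scikit-learn's `KMeans` and `PCA`, it omits transition points, and the helper names `fit_local_pcas` and `embed_new_points` are hypothetical.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA


def fit_local_pcas(X, n_clusters, n_components, seed=0):
    """Cluster X with k-means and fit one PCA per cluster (hypothetical helper)."""
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit(X)
    pcas = {c: PCA(n_components=n_components).fit(X[km.labels_ == c])
            for c in range(n_clusters)}
    return km, pcas


def embed_new_points(X_new, km, pcas):
    """Assign each new point to the nearest cluster mean, then apply that cluster's PCA."""
    labels = km.predict(X_new)  # nearest-centroid assignment
    n_components = next(iter(pcas.values())).n_components_
    Z = np.empty((len(X_new), n_components))
    for c in np.unique(labels):
        mask = labels == c
        Z[mask] = pcas[c].transform(X_new[mask])
    return labels, Z


# Toy data standing in for a real dataset (an assumption for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(600, 5))
X_new = rng.normal(size=(10, 5))

km, pcas = fit_local_pcas(X, n_clusters=4, n_components=2)
labels, Z = embed_new_points(X_new, km, pcas)
print(labels.shape, Z.shape)
```

Each row of `Z` is expressed in the coordinate system of its own cluster, so coordinates from different clusters are not directly comparable.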

Another example application of DeepAtlas is to sample points from the low-dimensional representation and then use the charts to map these new points back to the original manifold on which the model was trained. This effectively allows us to computationally sample new points on the same manifold without having to perform any further experiments. The idea here is that we first sample points in the lower-dimensional representation for each local cluster, and then use the neural networks to map these new points back into the high-dimensional space to generate new examples drawn from the same manifold. To sample a new point from the lower-dimensional representation, we first pick one of the original data points at random. We then sample “nearby” this original point by sampling from a ball of radius 𝑟 surrounding the point, where 𝑟 is the distance to one of the point’s nearest neighbors (Fig. 7A). We can then use the appropriate neural network in the atlas to map this new point back to the original manifold.

Fig. 7. Using DeepAtlas Generatively.

(A) Schematic of the process used to generate new data sampled from a manifold. (B) The model applied to the PCA embedding directly without sampling. (C) The model applied to the sampled points generated with the radius of the 1st nearest neighbor. (D) The model applied to the sampled points generated with the radius of the 5th nearest neighbor.

To demonstrate that DeepAtlas can be used generatively in this way, we revisited the S-curve dataset. We used the procedure above to sample exactly as many data points from each cluster as we had in the original dataset (Fig. 7A). As expected, when we apply DeepAtlas to the original points, we recover the S-curve (Fig. 7B). When applied to a new dataset of points sampled from the PCA embedding using a radius of the 1st and 5th nearest neighbor, the model was again able to generate the S-curve structure (Figs. 7C, 7D). This was also the case with larger radius values, though we do see more error as the radius increases (Fig. S16). This experiment demonstrates that DeepAtlas can be used to generate a new dataset that lies on the same lower dimensional manifold as the training data by sampling from the local embeddings.

Discussion

DeepAtlas takes a novel approach to manifold learning compared to previous tools. First, it provides a way for researchers to explore the assumption that their data is actually drawn from a lower-dimensional manifold. By examining the AJD at each PC dimension for different local neighborhoods in the data, one can determine not only whether a lower dimensional manifold exists, but also determine the dimensionality of that manifold. The analyses presented in Figs. 3, 5 and 6 thus provide a valuable empirical approach to exploring the manifold hypothesis for any given dataset. Second, DeepAtlas focuses on finding local embeddings of the data that minimize topological distortion. All other “manifold learning” algorithms of which we are aware attempt to generate a global embedding. Finally, DeepAtlas trains a neural network, yielding a set of differentiable functions that, in conjunction with PCA, approximates a set of invertible charts that can map back-and-forth between high dimensional data and the lower dimensional local representations. This model directly represents the standard mathematical definition of a (differentiable) manifold, and can be used generatively to create a new dataset with points that are sampled from the manifold itself. In these key ways, DeepAtlas addresses gaps in the existing standard practice, improves upon current methods, and moves the field of manifold learning in a new direction.

We have validated the DeepAtlas approach using a variety of datasets. Several of these are designed to be manifolds, such as the S-curve and hyperspheres. In all of these test cases, DeepAtlas succeeded in: 1) identifying that the data was actually drawn from a manifold; 2) finding low-distortion local embeddings at the correct local dimension; and 3) using simple deep neural networks to train a set of differentiable functions that can map from the low-dimensional local embeddings to the original data with very low topological distortion. This stands in stark contrast to the performance of existing manifold learning tools, which cannot recover low-distortion global embeddings even for hyperspheres (24).

Interestingly, although the real-world data we explored is commonly assumed to be drawn from a lower dimensional manifold (30), most of the real-world datasets we considered here were not consistent with the manifold hypothesis. In particular, many of the machine learning test datasets and all of the single cell data we considered did not have a consistent local embedding dimension across various local neighborhoods. In the datasets that did exhibit evidence of being drawn from a manifold, DeepAtlas’ local embedding approach resulted in an overall lower AJD than all the traditional manifold learning algorithms we tested. From there, DeepAtlas was able to train a neural network to approximate the inverse of the chart for each local cluster. This model can be used to determine the location of a new point or can even be used generatively, as seen on the S-curve dataset.

While DeepAtlas is promising, the approach presented here has clear limitations. The diagnostic process of determining whether the data is likely drawn from a low-dimensional manifold, and identifying the local dimension of that manifold, is currently done manually through visual inspection. Because of this, there may be some subjectivity in a researcher’s interpretation. Additionally, the neural network training process may require changes to the layer structure and other parameters to achieve an effective model. For instance, our neural network model of the MNIST data used a convolutional layer whereas the other models trained used simple fully connected layers. As such, researchers may see different results depending on their decisions regarding certain parameters. While the approach is thus an iterative one, requiring extensive guidance from the user, DeepAtlas does provide extensive diagnostics that allow researchers to evaluate how well their model represents the data at any given step. In future work, we hope to improve upon the robustness of DeepAtlas and reduce the need for manual inspection and input.

Another limitation of this approach is the reliance on specific algorithms, notably 𝑘-means clustering to identify local neighborhoods and PCA to generate local embeddings. In our experience, none of the non-linear dimensionality reduction tools broadly employed in the field generate lower distortion local embeddings than PCA, but improvements in such embedding tools could greatly enhance the performance of DeepAtlas. Similarly, 𝑘-means clustering was used here for convenience and for its utility in identifying clusters that correspond to local areas in the dataset (i.e. clusters of points that are localized in the same region of the original high-dimensional space). The design of DeepAtlas is highly modular, allowing for different clustering and dimensionality reduction tools to be easily substituted based on the users’ preference. Importantly, the AJD curve and the cluster size distribution can be used to evaluate how such choices ultimately perform. In future work, we aim to explore more thoroughly different clustering and dimensionality-reduction tools on a range of real and synthetic data in order to provide potential users with a more comprehensive picture of how various tools perform in this context. Finally, future work will be needed to further explore DeepAtlas’ generative capabilities, and to investigate new applications for the output model, including leveraging the model for direct application of ideas from differential geometry and topological data analysis (51, 52). While future development of DeepAtlas is clearly necessary, we hope that this tool motivates and enables deeper exploration of manifolds in big data across a variety of fields.

Materials and Methods

Clustering

DeepAtlas begins by first partitioning the data into local neighborhoods. These neighborhoods will then be embedded into the lower dimension and can then be stitched back together. Through this process, DeepAtlas takes a local approach which allows for less topological distortion. Here, we use 𝑘-means clustering to generate the local neighborhoods, which results in clusters of points grouped by distance to their centroid. This method aligns with our intuition that the clusters should be composed of points which are local neighbors. DeepAtlas is designed to be modular, so the user can choose to substitute a different clustering algorithm if desired.

The 𝑘-means clustering algorithm requires the user to choose the number of clusters, 𝑘, to generate. The choice of this parameter can affect DeepAtlas’ performance. One easily illustrated example of this is when 𝑘-means is applied to the Swiss roll dataset. When a smaller value of 𝑘 is chosen, the clusters may contain points from different sections of the unraveled plane. When a sufficiently large value of 𝑘 is chosen, each cluster contains only points which are next to each other when the manifold is unraveled. This allows for more accuracy when embedding each local neighborhood and then subsequently stitching them back together into the 2D manifold. With higher dimensional data, it is more difficult to determine the optimal value of 𝑘 to use. We tested varying this parameter in our experiments to ensure that the downstream results were not affected. Generally, we proceed with a value of 𝑘 such that each cluster contains at least 50 points.
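As a rough illustration of how the number of clusters interacts with cluster size, the sketch below runs scikit-learn's `KMeans` on a Swiss roll for several candidate values and reports the smallest and largest cluster. The candidate values, the sample size, and the helper name `cluster_sizes` are assumptions chosen for illustration; only the 50-point threshold comes from the text.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_swiss_roll


def cluster_sizes(X, k, seed=0):
    """Return the number of points in each of the k k-means clusters."""
    labels = KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(X)
    return np.bincount(labels, minlength=k)


X, _ = make_swiss_roll(n_samples=2000, random_state=0)

for k in (5, 10, 20, 40):
    sizes = cluster_sizes(X, k)
    # Flag whether every cluster meets the 50-point guideline.
    print(k, sizes.min(), sizes.max(), bool(sizes.min() >= 50))
```

A check like this only addresses cluster size; whether a cluster mixes points from different layers of the roll still has to be judged from the downstream distortion.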

Transition Points

Transition points are the sets of points that connect the clusters. To determine them, we first take the original dataset and determine the nearest neighbors of each point. A point is in the transition region if at least one of its nearest neighbors is in a different cluster from the one to which it is assigned. While the number of nearest neighbors is a free parameter of DeepAtlas, here we focus on a value of 10, which works well for the example datasets we considered. We then add the transition points to each of our clusters in a simple way: if a point that is not in a cluster is one of the nearest neighbors of a point in that cluster, we add that point to the cluster. This results in a set of points that are present in each neighboring cluster and that, when overlaid, connect the clusters together.
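One possible reading of this procedure is sketched below, assuming scikit-learn's `NearestNeighbors` and Euclidean distance. The function name `augment_clusters` and the toy data are hypothetical, and the code is not taken from the DeepAtlas repository.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.neighbors import NearestNeighbors


def augment_clusters(X, labels, n_neighbors=10):
    """Flag transition points and add out-of-cluster neighbors to each cluster."""
    # Calling kneighbors() without arguments excludes each point from its own neighbor list.
    idx = NearestNeighbors(n_neighbors=n_neighbors).fit(X).kneighbors(return_distance=False)
    neighbor_labels = labels[idx]  # shape (n_points, n_neighbors)
    # A point is a transition point if any neighbor carries a different cluster label.
    is_transition = (neighbor_labels != labels[:, None]).any(axis=1)

    clusters = {}
    for c in np.unique(labels):
        members = np.flatnonzero(labels == c)
        # Neighbors of cluster members that are not themselves members of cluster c.
        borrowed = np.setdiff1d(np.unique(idx[members]), members)
        clusters[int(c)] = np.concatenate([members, borrowed])
    return is_transition, clusters


# Toy 2D data standing in for a real dataset (an assumption for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
labels = KMeans(n_clusters=5, n_init=10, random_state=0).fit_predict(X)

is_transition, clusters = augment_clusters(X, labels, n_neighbors=10)
print(int(is_transition.sum()), {c: len(v) for c, v in clusters.items()})
```

The returned index arrays overlap wherever clusters are adjacent, and these shared points are what later allow neighboring local embeddings to be aligned.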

Quantifying Distortion

The metric we use to quantify distortion here is the Average Jaccard Distance (AJD). This measures the dissimilarity between a dataset and its embedding by comparing the nearest neighbors of each point. If all points have the same set of nearest neighbors in both the original data and the embedded data, the AJD will be 0. Conversely, if all points have a completely different set of neighbors in the embedded data than in the original data, the AJD will be 1. The Jaccard distance for each point can be calculated as:

$$J_d(A_i, B_i) = 1 - \frac{|A_i \cap B_i|}{|A_i \cup B_i|} \tag{1}$$

where $A_i$ is the set of nearest neighbors of a given point $x_i$ in the original data, $B_i$ is the set of nearest neighbors of $x_i$ in the lower-dimensional representation, and $|S|$ represents the cardinality of the set $S$. To get the AJD, we then take the average of the Jaccard distances of every point in the dataset:

$$\mathrm{AJD} = \frac{1}{N} \sum_{i=1}^{N} J_d(A_i, B_i) \tag{2}$$

where $N$ is the total number of points in the dataset.
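The AJD can be computed directly from nearest-neighbor index sets. The sketch below assumes Euclidean neighbors found with scikit-learn and a neighborhood size of 20; that value and the function names are assumptions for illustration, since the neighborhood size is not stated in this section.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors


def knn_indices(X, k):
    """Indices of the k nearest neighbors of every point, excluding the point itself."""
    return NearestNeighbors(n_neighbors=k).fit(X).kneighbors(return_distance=False)


def average_jaccard_distance(X_high, X_low, k=20):
    """Mean Jaccard distance between neighbor sets in the original and embedded data."""
    A = knn_indices(X_high, k)
    B = knn_indices(X_low, k)
    dists = np.empty(len(A))
    for i, (a, b) in enumerate(zip(A, B)):
        inter = np.intersect1d(a, b).size
        union = np.union1d(a, b).size
        dists[i] = 1.0 - inter / union
    return float(dists.mean())


# Toy data (an assumption for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))

print(average_jaccard_distance(X, 2.0 * X))                      # neighbor sets unchanged
print(average_jaccard_distance(X, rng.normal(size=(300, 2))))    # unrelated embedding
```

Uniform scaling leaves every neighbor set unchanged, so the first value is 0, while an unrelated embedding gives a value close to 1.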

PC vs. AJD Plots

DeepAtlas outputs a plot of the AJD between the original dataset and the embedding into every lower dimension using PCA. This PC vs. AJD plot helps the user determine whether a lower dimensional manifold exists, and if so, what the dimensionality of the manifold is. Each cluster is represented by a line on the plot. If the original data is drawn from a lower dimensional manifold, the clusters’ lines will all follow a similar curve, approaching 0 as the number of PCs approaches the dimensionality of the manifold. For example, in the simulated hypersphere data, the clusters’ lines are so similar that they appear on top of each other (Fig. 3A in the main text). Each cluster has a similar level of distortion at each PC, indicating that the data in each cluster is from the same manifold. The AJD is 0 at higher dimensions, and begins to rise at the minimal embedding dimension of the hypersphere. As the number of PCs decreases further, the AJD increases significantly.

However, if the clusters’ lines are not aligned, this suggests that there is not a common lower-dimensional manifold across clusters. For example, in the Boston Housing data, the clusters reach 0.1 AJD at varying PCs from 3 to 9. This wide range suggests that the clusters are not on the same lower-dimensional manifold. Visual inspection of these plots can be used to adjust hyperparameters of the analysis, such as the value of 𝑘 used for 𝑘-means clustering.
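The curves behind such a plot can be generated with a short loop over clusters and PC counts. The sketch below is an illustration under stated assumptions: a 3-dimensional linear subspace embedded in 10 dimensions as test data, a neighborhood size of 20, and hypothetical function names `ajd` and `pc_vs_ajd`. Plotting is left out, but each returned list can be drawn as one line per cluster.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.neighbors import NearestNeighbors


def ajd(X_high, X_low, k):
    """Average Jaccard distance between k-nearest-neighbor sets."""
    A = NearestNeighbors(n_neighbors=k).fit(X_high).kneighbors(return_distance=False)
    B = NearestNeighbors(n_neighbors=k).fit(X_low).kneighbors(return_distance=False)
    jac = [1.0 - np.intersect1d(a, b).size / np.union1d(a, b).size for a, b in zip(A, B)]
    return float(np.mean(jac))


def pc_vs_ajd(X, labels, k=20):
    """For each cluster, AJD of its PCA embedding at 1, 2, ..., max_pc components."""
    curves = {}
    for c in np.unique(labels):
        Xc = X[labels == c]
        if len(Xc) < 3:
            continue  # too few points to define neighborhoods
        k_eff = min(k, len(Xc) - 1)
        max_pc = min(Xc.shape)  # PCA cannot return more components than this
        scores = PCA(n_components=max_pc).fit_transform(Xc)
        # Leading columns of the full PCA give the lower-dimensional embeddings.
        curves[int(c)] = [ajd(Xc, scores[:, :d], k_eff) for d in range(1, max_pc + 1)]
    return curves


# Test data: a 3D linear subspace embedded in 10 dimensions (an assumption).
rng = np.random.default_rng(0)
X = rng.normal(size=(600, 3)) @ rng.normal(size=(3, 10))
labels = KMeans(n_clusters=4, n_init=10, random_state=0).fit_predict(X)

curves = pc_vs_ajd(X, labels)
for c, curve in curves.items():
    print(c, np.round(curve[:4], 3))
```

For data like this, every cluster's curve should drop to roughly zero at three components, which is the aligned behavior the text associates with a common manifold dimension.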

Local Embedding

Once it has been determined that a lower-dimensional manifold exists and what its dimension is, we can proceed with embedding the data into that dimension. Each cluster is embedded separately and, since we have included transition points in each, the embedded clusters can then be re-aligned or “stitched” back together in the lower-dimensional space. This local approach results in less distortion overall as measured by AJD. Here we use PCA to embed each cluster into the lower dimension, as our previous work has found it to be the only method that could reliably reduce high-dimensional data with low distortion (24). Again, the modularity of DeepAtlas allows for the user to instead apply any other dimensionality reduction method of their choice.

Generating a true embedding of the S-curve

We generated all of our S-curve datasets using the “make_s_curve” function in the scikit-learn library in Python (29). The 3-dimensional S-curve is generated by first drawing two random numbers. The first, $t$, is drawn from a uniform distribution of real numbers on the interval $[-3\pi/2, 3\pi/2]$; the second, $y$, is drawn from a uniform distribution on [0, 2]. For any given value of these two random numbers, which we represent as a 2-vector $\mathbf{u} = (t, y)$, we can generate a corresponding 3-dimensional point using the following function:

$$f(\mathbf{u}) = \begin{pmatrix} \sin t \\ y \\ \mathrm{sgn}(t)\,(\cos t - 1) \end{pmatrix} \tag{3}$$

where we have written out the three components of the output, and $\mathrm{sgn}(t)$ is the sign function, returning −1 if $t$ is negative, +1 if $t$ is positive, and 0 otherwise. Evidently, the function $f^{-1}$ is a global 2D embedding of the 3D S-curve manifold generated by this approach. In Fig. 1 we use $f^{-1}$ for the “true” embedding of the corresponding S-curve dataset.
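The construction can be checked against scikit-learn directly. In the sketch below, `make_s_curve` returns both the 3D points and the first random coordinate; the second coordinate is read off the middle column of the output. The names `t`, `y`, and `s_curve_point` are labels chosen here for illustration.

```python
import numpy as np
from sklearn.datasets import make_s_curve


def s_curve_point(t, y):
    """Map the two generating coordinates to 3D points on the S-curve."""
    return np.column_stack([np.sin(t), y, np.sign(t) * (np.cos(t) - 1.0)])


# With the default noise of 0, the points lie exactly on the curve.
X, t = make_s_curve(n_samples=1000, random_state=0)
y = X[:, 1]  # the second generating coordinate is stored unchanged

X_rebuilt = s_curve_point(t, y)
true_embedding = np.column_stack([t, y])  # 2D coordinates of every point

print(np.allclose(X, X_rebuilt), true_embedding.shape)
```

The two-column array `true_embedding` is the kind of reference embedding against which a learned 2D representation can be compared.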

Neural Network Charts as Diffeomorphisms

As mentioned in the main text, if we claim to generate a differentiable atlas of a manifold, we need to show that our approach approximates charts that are diffeomorphisms. To do so, here we set up a slightly more formal discussion of DeepAtlas’ framework and then show that the neural networks we train are guaranteed to satisfy the axioms needed to exhibit an atlas of a differentiable manifold.

In the main text, we stated briefly the requirements for a set to be considered a differentiable manifold. To restate this, a set $M$ is a differentiable manifold if and only if:

There exists a covering of open subsets of $M$, $\{U_i\}$, such that each $U_i$ is diffeomorphic to an open subset $V_i \subseteq \mathbb{R}^d$. For any member $U_i$ of this covering we refer to the corresponding diffeomorphism as $\varphi_i : U_i \to V_i$.

If two sets $U_i$ and $U_j$ in this covering have a non-trivial intersection, i.e. $U_i \cap U_j \neq \emptyset$, then $\varphi_j \circ \varphi_i^{-1}$ is (smoothly) differentiable on $\varphi_i(U_i \cap U_j)$.

The above is a standard definition of a differentiable manifold, and further (and more formal) treatments can be found in textbooks on the subject (27, 28).

First, we note that DeepAtlas as described in the main text is trained on finite sets of points; each point in the data set is a vector in a real-valued vector space $\mathbb{R}^m$. Call this set of points $X$, and say that $X$ is a finite sample of points from the manifold $M$. Since we don’t know this manifold a priori, $M$ is just taken to be an appropriate collection of neighborhoods around the points in $X$. Similarly, to construct a covering of $M$, we use the $k$-means clustering algorithm to first generate a partition of $X$ with $k$ subsets. We then add the transition points between neighboring clusters to those relevant clusters as described above, generating a set of subsets of $X$; call this set of subsets $\{X_i\}$. Note that each element of this set is finite and thus closed; for the formal purpose of developing the differentiable structure here, we associate with each $X_i$ a collection of open neighborhoods “around” the points in $X_i$ to form a corresponding open subset $U_i$ in such a way that the collection $\{U_i\}$ forms a covering of $M$. Note that we do not do this explicitly; this is just an implicit step in our algorithm to satisfy the mathematical definition above.

Now we have our covering of open subsets, and we have to generate a set of diffeomorphisms $\{\varphi_i\}$ that will represent our charts. In our case, we have $U_i \subset \mathbb{R}^m$, so every input to our function $\varphi_i$ will just be a point in an $m$-dimensional real vector space. DeepAtlas approximates each $\varphi_i$ using two separate pieces. To define the “forward” direction, from $U_i$ to $V_i$, we use PCA. Note that PCA is a linear function and is thus smooth. We then train a deep neural network to approximate the inverse of these functions, $\varphi_i^{-1}$. Certain aspects of the architecture of these networks vary depending on the application area, but all these networks have the same basic mathematical structure. For each node at any given layer in the network, we have a set of incoming “links” (see Fig. 4A). Denote the output of some layer $l$ as $a^{(l)}$; the first step is to compute an intermediate value $z^{(l+1)} = W^{(l+1)} a^{(l)}$, where $W^{(l+1)}$ is the weight matrix at that layer. Note that $z^{(l+1)}$ is a vector. The next step is to produce the output of the layer, $a^{(l+1)}$, by applying a non-linear activation function $\sigma$ to each element of the vector. We used the activation function $\sigma = \tanh$, the hyperbolic tangent, so $a^{(l+1)} = \tanh(z^{(l+1)})$. Note that each of these steps involves the use of a smooth function. Each layer is therefore a composition of smooth functions, and the entire function defined by the neural network is just a composition of these layers and thus is, itself, smooth.

As a result, we now have two smooth functions to approximate each chart: the linear PCA step to map from $U_i$ to $V_i$ (i.e. to reduce dimensionality) and the neural network to map back to $U_i$ from $V_i$. We use these two functions separately to approximate the chart, and since these two directions are both smooth, this allows us to approximate the diffeomorphisms in the atlas.
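The smoothness argument can be made concrete with a small numerical check. The sketch below builds a toy network in which every layer multiplies by a weight matrix and applies the hyperbolic tangent, computes its Jacobian by the chain rule, and compares it with finite differences. The layer sizes, the random weights, the absence of bias terms, and the use of tanh on the final layer are all assumptions made for illustration.

```python
import numpy as np


def forward(weights, x):
    """Apply each layer in turn: multiply by the weight matrix, then tanh."""
    a = x
    for W in weights:
        a = np.tanh(W @ a)
    return a


def jacobian(weights, x):
    """Jacobian of the network output with respect to the input, via the chain rule."""
    a = x
    J = np.eye(x.size)
    for W in weights:
        a = np.tanh(W @ a)
        # d tanh(z)/dz = 1 - tanh(z)^2, applied row-wise to W.
        J = ((1.0 - a ** 2)[:, None] * W) @ J
    return J


# Toy network mapping a 2D local embedding to 3D (sizes are assumptions).
rng = np.random.default_rng(0)
weights = [0.5 * rng.normal(size=s) for s in [(8, 2), (8, 8), (3, 8)]]
z = rng.normal(size=2)

J = jacobian(weights, z)

# Central finite differences, one input coordinate at a time.
eps = 1e-6
J_fd = np.column_stack([
    (forward(weights, z + eps * e) - forward(weights, z - eps * e)) / (2 * eps)
    for e in np.eye(2)
])
print(np.max(np.abs(J - J_fd)))
```

Agreement between the two Jacobians reflects the fact that the network is differentiable everywhere, which is the property the chart construction relies on.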

Network Training

The neural network used by DeepAtlas is modular, and the user should adjust its parameters to achieve a model that best fits their data. For our experiments here, we use a simple fully connected network implemented through dense layers using the TensorFlow and Keras libraries in Python 3. The networks have 10 layers and are trained for 10,000 epochs. We use the hyperbolic tangent as the activation function. The network is trained using stochastic gradient descent with Adam optimization. Mean squared error is used as the loss for validation during training. Once the training is complete, we further evaluate the model by calculating the AJD of the network’s output compared with both the original data and the PCA embedding. The only network with a different structure is the one used for the MNIST data, where we instead included some convolutional layers.

Cross Validation

To evaluate the performance of our neural network, we perform 10-fold cross validation. The data is split into 10 “folds” or partitions. The network is then trained with one fold left out to be used for validation each time. This demonstrates the model’s robustness, showing that the resulting low loss is repeatable and not due to overfitting.
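The fold mechanics can be sketched as follows, assuming scikit-learn's `KFold` with shuffling. To keep the example short and fast, a linear least-squares map stands in for the neural network; this substitution, the toy data, and the function names are assumptions for illustration only.

```python
import numpy as np
from sklearn.model_selection import KFold


def cross_validate(Z, X, fit, predict, n_splits=10, seed=0):
    """Train on all folds but one and report the mean squared error on the held-out fold."""
    losses = []
    splitter = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for train, val in splitter.split(Z):
        model = fit(Z[train], X[train])
        losses.append(float(np.mean((predict(model, Z[val]) - X[val]) ** 2)))
    return np.array(losses)


# Stand-in model (NOT the DeepAtlas network): an affine least-squares map.
def fit_linear(Z, X):
    Z1 = np.column_stack([Z, np.ones(len(Z))])
    coef, *_ = np.linalg.lstsq(Z1, X, rcond=None)
    return coef


def predict_linear(coef, Z):
    return np.column_stack([Z, np.ones(len(Z))]) @ coef


# Toy low-dimensional inputs and high-dimensional targets (assumptions).
rng = np.random.default_rng(0)
Z = rng.normal(size=(200, 2))
X = Z @ rng.normal(size=(2, 3)) + 0.01 * rng.normal(size=(200, 3))

losses = cross_validate(Z, X, fit_linear, predict_linear)
print(losses.round(6))
```

Comparable losses across the ten held-out folds are the kind of evidence the text refers to when arguing that a low loss is repeatable.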

Single Cell RNA-Seq Transformations

Raw single cell RNA-sequencing data refers to data which has not undergone any preprocessing. Following the typical standard practice, we apply 3 steps of preprocessing and test DeepAtlas at each stage. The first is to select the highly varying genes, proceeding with only this subset of the data. Next, the data is scaled using counts-per-million normalization. Last, the dataset undergoes log transformation. Typically, scRNA-seq data is analyzed in this last stage after all preprocessing steps are complete. However, we were interested in seeing whether a manifold existed in any stage and thus applied DeepAtlas after each preprocessing step.
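The three stages can be sketched with NumPy alone. The details below are simplifying assumptions rather than the pipeline used in the study: genes are ranked by raw variance, counts-per-million is computed over the retained genes, and the log transformation uses a pseudocount of 1. The function names and the simulated counts are likewise hypothetical.

```python
import numpy as np


def select_hvgs(counts, n_top=2000):
    """Keep the n_top genes (columns) with the highest variance across cells."""
    order = np.argsort(counts.var(axis=0))[::-1]
    return counts[:, np.sort(order[:n_top])]  # preserve original gene order


def cpm(counts):
    """Scale each cell (row) so that its counts sum to one million."""
    totals = counts.sum(axis=1, keepdims=True).astype(float)
    totals[totals == 0] = 1.0  # leave all-zero cells unchanged
    return counts / totals * 1e6


def log_transform(x):
    """Natural log with a pseudocount of 1 (an assumed convention)."""
    return np.log1p(x)


# Simulated count matrix: 100 cells by 200 genes (an assumption for illustration).
rng = np.random.default_rng(0)
rates = rng.gamma(shape=0.5, scale=4.0, size=200)
raw = rng.poisson(rates, size=(100, 200))

hvg = select_hvgs(raw, n_top=50)
normalized = cpm(hvg)
logged = log_transform(normalized)

# Each of these four matrices could be analyzed separately.
for name, stage in [("raw", raw), ("hvg", hvg), ("cpm", normalized), ("log", logged)]:
    print(name, stage.shape)
```

Keeping the intermediate matrices makes it possible to repeat the same distortion analysis after every preprocessing step.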

Simulated scRNA-Seq Data

We tested DeepAtlas on simulated scRNA-seq data that was made using a package called scDesign (42). This package uses real scRNA-seq data to generate a synthetic dataset. We applied it with default parameters to scRNA-seq cell line data in order to obtain a simulated scRNA-seq dataset to use.

Generative Applications

Because DeepAtlas’ neural network is designed to be bidirectional, we can also apply the learned functions to data drawn from the same lower-dimensional manifold to obtain its position in the original high-dimensional space. We demonstrate this on the S-curve data by first generating new data sampled from the local 2D representations of the subsections of the manifold. The new data is generated by selecting a point from the PCA embedding of the real data at random and sampling from a ball surrounding that point, using a radius based on nearest neighbors. Our example shows the results when the data is sampled with a radius of both the 1st nearest neighbor and the 5th nearest neighbor. This sampling process is repeated until the generated dataset is of the same size as the original dataset (although one can in principle sample any number of desired points). We can then pass the generated dataset into the functions learned by DeepAtlas’ neural network. This yields the high-dimensional space representation of the new dataset sampled from the same low-dimensional manifold. As shown in the main text, this successfully allowed us to generate S-curve shapes from data sampled from the lower-dimensional space.
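The sampling step for a single local embedding might look like the sketch below. It assumes points are drawn uniformly from the ball and that neighbors are found with scikit-learn; whether the original sampling is uniform is not stated, and the function name `sample_near_data` and the toy embedding are hypothetical. Passing the samples through a trained network is not shown.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors


def sample_near_data(Z, n_samples, neighbor_rank=1, seed=0):
    """Draw points uniformly from balls centered on randomly chosen embedded points."""
    rng = np.random.default_rng(seed)
    # Distance from every point to its neighbor_rank-th nearest neighbor.
    dist, _ = NearestNeighbors(n_neighbors=neighbor_rank).fit(Z).kneighbors()
    radii = dist[:, -1]

    centers = rng.integers(len(Z), size=n_samples)  # random original points
    d = Z.shape[1]
    directions = rng.normal(size=(n_samples, d))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    # The d-th root makes the samples uniform in volume rather than in radius.
    scale = radii[centers] * rng.random(n_samples) ** (1.0 / d)
    return centers, Z[centers] + directions * scale[:, None]


# Toy 2D local embedding (an assumption for illustration).
rng = np.random.default_rng(0)
Z = rng.normal(size=(300, 2))

centers, new_points = sample_near_data(Z, n_samples=300, neighbor_rank=5)
print(new_points.shape)
```

Using the 1st rather than the 5th nearest neighbor keeps the new points closer to the observed data, at the cost of exploring less of the space between them.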

Statistical Analysis

Cross-validation calculations were carried out using 10-fold cross validation throughout the study, as indicated. DeepAtlas does not entail the use of other statistical approaches at present.

Supplementary Material

Acknowledgments

Funding:

National Institutes of Health grant R01GM143378 (EJD)

National Heart Lung and Blood Institute grant T32HL139450 (SJH)

Footnotes

Competing interests: The authors declare that they have no competing interests.

Data and materials availability:

All code and example datasets can be found at https://github.com/DeedsLab/DeepAtlas.

References

- 1. Chergui N., Kechadi M. T., Data analytics for crop management: a big data view. Journal of Big Data 9, 123 (2022).

- 2. Salehi H., Gorodetsky A., Solhmirzaei R., Jiao P., High-dimensional data analytics in civil engineering: A review on matrix and tensor decomposition. Engineering Applications of Artificial Intelligence 125, 106659 (2023).

- 3. Liu J., Wan G., Liu W., Li C., Peng S., Xie Z., High-dimensional spatiotemporal visual analysis of the air quality in China. Sci Rep 13, 5462 (2023).

- 4. Jiang Y., Olmo J., Atwi M., High-dimensional multi-period portfolio allocation using deep reinforcement learning. International Review of Economics & Finance 98, 103996 (2025).

- 5. Jin J., Liu Y., Ji P., Liu H., Understanding big consumer opinion data for market-driven product design. International Journal of Production Research 54, 3019–3041 (2016).

- 6. Slovin S., Carissimo A., Panariello F., Grimaldi A., Bouché V., Gambardella G., Cacchiarelli D., “Single-Cell RNA Sequencing Analysis: A Step-by-Step Overview” in RNA Bioinformatics, Picardi E., Ed. (Springer US, New York, NY, 2021; 10.1007/978-1-0716-1307-8_19), pp. 343–365.

- 7. AlJanahi A. A., Danielsen M., Dunbar C. E., An Introduction to the Analysis of Single-Cell RNA-Sequencing Data. Mol Ther Methods Clin Dev 10, 189–196 (2018).

- 8. Ayesha S., Hanif M. K., Talib R., Overview and comparative study of dimensionality reduction techniques for high dimensional data. Information Fusion 59, 44–58 (2020).

- 9. Altman N., Krzywinski M., The curse(s) of dimensionality. Nature Methods 15, 399–400 (2018).

- 10. Bernstein A., Kuleshov A., “Low-Dimensional Data Representation in Data Analysis” in Artificial Neural Networks in Pattern Recognition, El Gayar N., Schwenker F., Suen C., Eds. (Springer International Publishing, Cham, 2014), pp. 47–58.

- 11. Huo X., Ni X., Smith A., A Survey of Manifold-Based Learning Methods. Recent Advances in Data Mining of Enterprise Data: Algorithms and Applications. 691–745 (2008).

- 12. Johnstone I. M., Titterington D. M., Statistical challenges of high-dimensional data. Phil. Trans. R. Soc. A. 367, 4237–4253 (2009).

- 13. Lee J. M., Introduction to Topological Manifolds (Springer New York, New York, NY, ed. 2, 2011; https://link.springer.com/10.1007/978-1-4419-7940-7) vol. 202 of Graduate Texts in Mathematics.

- 14. Izenman A. J., Introduction to manifold learning. WIREs Computational Stats 4, 439–446 (2012).

- 15. Tenenbaum J. B., Silva V. D., Langford J. C., A Global Geometric Framework for Nonlinear Dimensionality Reduction. Science 290, 2319–2323 (2000).

- 16. Donoho D. L., Grimes C., Hessian eigenmaps: Locally linear embedding techniques for high-dimensional data. Proc. Natl. Acad. Sci. U.S.A. 100, 5591–5596 (2003).

- 17. Zhang Z., Zha H., Principal Manifolds and Nonlinear Dimensionality Reduction via Tangent Space Alignment. SIAM J. Sci. Comput. 26, 313–338 (2004).

- 18. Lunga D., Prasad S., Crawford M. M., Ersoy O., Manifold-Learning-Based Feature Extraction for Classification of Hyperspectral Data: A Review of Advances in Manifold Learning. IEEE Signal Processing Magazine 31, 55–66 (2014).

- 19. Wold S., Esbensen K., Geladi P., Principal component analysis. Chemometrics and Intelligent Laboratory Systems 2, 37–52 (1987).

- 20. van der Maaten L., Hinton G., Visualizing Data using t-SNE. Journal of Machine Learning Research 9, 2579–2605 (2008).

- 21. McInnes L., Healy J., Melville J., UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. arXiv:1802.03426 [Preprint] (2020). 10.48550/arXiv.1802.03426.

- 22. Kobak D., Berens P., The art of using t-SNE for single-cell transcriptomics. Nat Commun 10, 5416 (2019).

- 23. Pal K., Sharma M., “Performance evaluation of non-linear techniques UMAP and t-SNE for data in higher dimensional topological space” in 2020 Fourth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC) (2020; https://ieeexplore.ieee.org/abstract/document/9243502), pp. 1106–1110.

- 24. Cooley S. M., Hamilton T., Aragones S. D., Ray J. C. J., Deeds E. J., A novel metric reveals previously unrecognized distortion in dimensionality reduction of scRNA-seq data. bioRxiv [Preprint] (2022). 10.1101/689851.

- 25. Siebert S., Farrell J. A., Cazet J. F., Abeykoon Y., Primack A. S., Schnitzler C. E., Juliano C. E., Stem cell differentiation trajectories in Hydra resolved at single-cell resolution. Science 365, eaav9314 (2019).

- 26. Cao J., Spielmann M., Qiu X., Huang X., Ibrahim D. M., Hill A. J., Zhang F., Mundlos S., Christiansen L., Steemers F. J., Trapnell C., Shendure J., The single-cell transcriptional landscape of mammalian organogenesis. Nature 566, 496–502 (2019).

- 27. do Carmo M., Riemannian Geometry (Birkhäuser, Boston, MA, ed. 1, 1992; https://link.springer.com/book/9780817634902).

- 28. Spivak M., A Comprehensive Introduction to Differential Geometry (Publish or Perish, Inc., Houston, Texas, ed. 3, 1999) vol. 1.

- 29. Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., Vanderplas J., Passos A., Cournapeau D., Brucher M., Perrot M., Duchesnay É., Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011).

- 30. Brownlee J., 10 Standard Datasets for Practicing Applied Machine Learning, Machine Learning Mastery (2021). https://machinelearningmastery.com/standard-machine-learning-datasets/.

- 31.Wolf F. A., Hamey F. K., Plass M., Solana J., Dahlin J. S., Göttgens B., Rajewsky N., Simon L., Theis F. J., PAGA: graph abstraction reconciles clustering with trajectory inference through a topology preserving map of single cells. Genome Biology 20, 59 (2019).

- 32.Sparta B., Hamilton T., Hughes S., Natesan G., Deeds E. J., A lack of distinct cell identities in single-cell measurements: revisiting Waddington’s landscape. [Preprint] (2022). 10.1101/2022.06.03.494765.

- 33.Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., Devin M., Ghemawat S., Irving G., Isard M., Kudlur M., Levenberg J., Monga R., Moore S., Murray D. G., Steiner B., Tucker P., Vasudevan V., Warden P., Wicke M., Yu Y., Zheng X., “TensorFlow: a system for large-scale machine learning” in Proceedings of the 12th USENIX Conference on Operating Systems Design and Implementation (USENIX Association, USA, 2016), OSDI’16, pp. 265–283.

- 34.Xiang R., Wang W., Yang L., Wang S., Xu C., Chen X., A Comparison for Dimensionality Reduction Methods of Single-Cell RNA-seq Data. Front. Genet. 12 (2021).

- 35.Becht E., McInnes L., Healy J., Dutertre C.-A., Kwok I. W. H., Ng L. G., Ginhoux F., Newell E. W., Dimensionality reduction for visualizing single-cell data using UMAP. Nat Biotechnol 37, 38–44 (2019).

- 36.Luecken M. D., Theis F. J., Current best practices in single‐cell RNA‐seq analysis: a tutorial. Mol Syst Biol 15, e8746 (2019).

- 37.Su M., Pan T., Chen Q.-Z., Zhou W.-W., Gong Y., Xu G., Yan H.-Y., Li S., Shi Q.-Z., Zhang Y., He X., Jiang C.-J., Fan S.-C., Li X., Cairns M. J., Wang X., Li Y.-S., Data analysis guidelines for single-cell RNA-seq in biomedical studies and clinical applications. Military Medical Research 9, 68 (2022).

- 38.Moon K. R., Stanley J. S., Burkhardt D., van Dijk D., Wolf G., Krishnaswamy S., Manifold learning-based methods for analyzing single-cell RNA-sequencing data. Current Opinion in Systems Biology 7, 36–46 (2018).

- 39.Sun S., Zhu J., Ma Y., Zhou X., Accuracy, robustness and scalability of dimensionality reduction methods for single-cell RNA-seq analysis. Genome Biol 20, 269 (2019).

- 40.Kiselev V. Y., Andrews T. S., Hemberg M., Challenges in unsupervised clustering of single-cell RNA-seq data. Nat Rev Genet 20, 273–282 (2019).

- 41.Chari T., Pachter L., The specious art of single-cell genomics. PLOS Computational Biology 19, e1011288 (2023).

- 42.Wang S., Sontag E. D., Lauffenburger D. A., What cannot be seen correctly in 2D visualizations of single-cell ‘omics data? Cell Syst 14, 723–731 (2023).

- 43.Zheng G. X. Y., Terry J. M., Belgrader P., Ryvkin P., Bent Z. W., Wilson R., Ziraldo S. B., Wheeler T. D., McDermott G. P., Zhu J., Gregory M. T., Shuga J., Montesclaros L., Underwood J. G., Masquelier D. A., Nishimura S. Y., Schnall-Levin M., Wyatt P. W., Hindson C. M., Bharadwaj R., Wong A., Ness K. D., Beppu L. W., Deeg H. J., McFarland C., Loeb K. R., Valente W. J., Ericson N. G., Stevens E. A., Radich J. P., Mikkelsen T. S., Hindson B. J., Bielas J. H., Massively parallel digital transcriptional profiling of single cells. Nat Commun 8, 14049 (2017).

- 44.40k Mixture of Mouse Cell Lines, Multiplexed Samples, 4 Probe Barcodes (Next GEM), 10x Genomics. https://www.10xgenomics.com/datasets/40k-mixture-of-mouse-cell-lines-multiplexed-samples-4-probe-barcodes-1-standard.

- 45.Li W. V., Li J. J., A statistical simulator scDesign for rational scRNA-seq experimental design. Bioinformatics 35, i41–i50 (2019).

- 46.Bouland G. A., Mahfouz A., Reinders M. J. T., Consequences and opportunities arising due to sparser single-cell RNA-seq datasets. Genome Biology 24, 86 (2023).

- 47.Feng H., Cottrell S., Hozumi Y., Wei G.-W., Multiscale differential geometry learning of networks with applications to single-cell RNA sequencing data. Computers in Biology and Medicine 171, 108211 (2024).

- 48.Palma A., Rybakov S., Hetzel L., Theis F. J., “Modelling single-cell RNA-seq trajectories on a flat statistical manifold” (2023; https://openreview.net/forum?id=sXRpvW3cRR).

- 49.Verma A., Engelhardt B. E., A robust nonlinear low-dimensional manifold for single cell RNA-seq data. BMC Bioinformatics 21, 324 (2020).

- 50.Lecun Y., Bottou L., Bengio Y., Haffner P., Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324 (1998).

- 51.Carlsson G., Topology and data. Bull. Amer. Math. Soc. 46, 255–308 (2009).

- 52.Singh G., Memoli F., Carlsson G., Topological Methods for the Analysis of High Dimensional Data Sets and 3D Object Recognition. The Eurographics Association [Preprint] (2007). 10.2312/SPBG/SPBG07/091-100.

Data Availability Statement

All code and example datasets are available at https://github.com/DeedsLab/DeepAtlas.