Abstract

Partial differential equations (PDEs), essential for modeling dynamic systems, face persistent computational complexity bottlenecks in high‐dimensional problems. DNA‐based parallel computing architectures, which leverage their strengths in discrete mathematics, offer transformative potential by harnessing inherent molecular parallelism. This research introduces an augmented matrix‐based DNA molecular neural network that solves the biological Brusselator PDE at the molecular level. Two key innovations address existing technological constraints: (i) an augmented matrix‐based error‐feedback DNA molecular neural network that integrates multidimensional parameters through DNA strand displacement cascades and iterative weight optimization; and (ii) partial differential calculation modules built by incorporating membrane diffusion theory and division operation principles into DNA circuits. Simulation results demonstrate that the augmented matrix‐based DNA neural network learns target functions efficiently and accurately; combined with the proposed partial derivative computation strategy, the architecture solves the biological Brusselator PDE numerically with errors below 0.02 within 12,500 s. This work establishes a novel intelligent non‐silicon computational framework, providing theoretical foundations and potential implementation paradigms for future bio‐inspired and unconventional computing devices in life science research.

Keywords: chemical reaction networks (CRNs), DNA computing, DNA strand displacement reactions, neural network circuits, partial differential equations


1. Introduction

Partial differential equations (PDEs) are core mathematical tools for characterizing the dynamic behavior of physical, biological, and engineering systems, and efficient PDE solvers are essential in critical areas such as climate modeling and drug diffusion simulation.[ 1 , 2 , 3 ] However, traditional numerical methods, such as the finite element method (FEM) and the finite difference method (FDM), face fundamental challenges in solving high‐dimensional nonlinear PDEs: computational complexity and cost grow exponentially with dimensionality, a phenomenon known as the curse of dimensionality. In recent years, biomolecular systems have attracted significant attention because of their natural parallelism and energy efficiency,[ 4 , 5 , 6 , 7 , 8 ] opening new avenues for nontraditional computational frameworks. The programmable, parallel computational capabilities that DNA molecules[ 9 ] gain through strand displacement reactions suggest a new approach to continuous‐space differential arithmetic: through concentration gradients and dynamic reaction networks, the differential operators of PDEs could be mapped directly onto molecular processes, avoiding the accuracy loss caused by traditional discretization. Such a bio‐physical fusion computing architecture could break through traditional computational complexity limits and enable a new generation of PDE solvers.

Solving high‐dimensional PDEs remains a formidable challenge in computational science, where conventional numerical methods suffer from the curse of dimensionality, compromising both accuracy and efficiency. Recent deep neural networks use nonlinear activation functions and continuous function approximation to adaptively capture high‐dimensional and nonlinear features, eliminating the need for precise analytical models or mesh generation. However, conventional silicon‐based neural architectures face inherent limitations in energy efficiency and physical interconnect density imposed by the von Neumann architecture, which hinders massively parallel computation of ultra‐large‐scale PDE systems.[ 10 , 11 ]

Non‐silicon‐based DNA neural networks offer a transformative paradigm for resolving this conflict. Molecular reactions in DNA computation exhibit extraordinary parallelism (e.g., "epi‐bit" parallel DNA molecular data storage[ 12 ]) and ultra‐low energy consumption (e.g., DNA‐based programmable gate arrays, DPGA[ 13 ]). While neural networks have superior capacity for approximating PDE solutions, their electronic implementations encounter fundamental bottlenecks in power dissipation and scalability. Implementing neural networks chemically through programmable DNA reactions offers a disruptive computational framework that inherently bypasses these limitations through massive molecular parallelism. Cherry and Qian[ 14 ] extended the molecular pattern recognition capabilities of DNA neural networks by constructing a winner‐take‐all neural network that recognizes nine molecular patterns. Zou et al.[ 15 , 16 ] designed a nonlinear neural network based on DNA strand displacement reactions and used it to learn a standard quadratic function. They also tested the robustness of this network to variations in DNA strand concentration, strand displacement reaction rate, and noise. The system exhibits adaptive behavior and supervised learning capability, illustrating the promise of DNA strand displacement circuits for artificial intelligence applications. Zou[ 17 ] also proposed a novel activation function, embedded in a DNA nonlinear neural network, to fit and predict specific nonlinear functions. This function can be realized by enzyme‐free DNA hybridization reactions and nests well, allowing complete circuits to be built by cascading it with other DNA reactions.
Xiong et al.[ 18 ] addressed key scientific challenges in developing ultra‐large‐scale DNA molecular reaction networks. Using a molecular switch gate architecture as the basic circuit component, they exploited the modularity of the weight‐regulating region and the recognition region to regulate the signal transmission function and the weight‐assignment function independently, thereby enabling weight sharing. By cascading several modular molecular computing units with synthetic DNA regulatory circuits, they constructed a large‐scale DNA convolutional neural network (ConvNet) capable of recognizing and classifying 32 classes of 144‐bit molecular information profiles. The resulting DNA neural network has robust molecular information processing capacity and is expected to find use in intelligent biosensing and other fields.

DNA's programmability, adaptability, and biocompatibility[ 19 ] make it an ideal candidate for integrating computation and control in synthetic biology. Architectures driven by DNA strand displacement (DSD) reactions allow biochemical circuits to be built from DNA strand sequences for both digital computing and analog simulation.[ 20 , 21 , 22 , 23 , 24 , 25 ] Previous research has demonstrated the promise of DNA circuits for pattern classification and optimization problems,[ 26 , 27 , 28 ] but implementing continuous mathematical operations, such as partial derivative computation, remains a challenge.

This research provides a methodology for solving partial differential equations using a DNA molecular neural network based on an augmented matrix. Its main contributions are: (i) constructing a DSD reaction‐driven, augmented matrix‐based DNA molecular neural network that maps the weights to DNA strand concentrations through an augmented matrix encoding strategy and exploits the parallelism of DNA toehold‐mediated strand displacement to produce multi‐parameter combination outputs; (ii) integrating membrane diffusion theory and division operation principles into DNA circuits, proposing and simulating a scheme for calculating partial derivatives with DSD reactions, which overcomes a theoretical limitation of molecular systems in continuous mathematical operations; and (iii) constructing the first DNA molecular neural network architecture that supports solving partial differential equations, with adaptive adjustment of the weight parameters through an error‐feedback mechanism. This research develops new partial differential computational strategies for biomolecular computers and provides a non‐silicon computational device, facilitating a significant transition from discrete logic to continuous mathematics in biocomputing.

2. Methodology

2.1. CRNs‐Based Multi‐Combinatorial Parameter Parallel Output Neural Networks

2.1.1. CRNs‐Based Error Back Propagation Neural Network

Assume that the neural network has N input parameters, that is, $X(k) = [x_1(k), x_2(k), \ldots, x_N(k)]^T$. Weights connect the i‐th unit of the input layer (i = 1, 2, 3, …, N) to the units of the hidden layer, which has (L + 1) units; weights likewise connect the n‐th unit of the hidden layer to the units of the output layer, which has (M + 1) units. The input and hidden layers also carry thresholds. The hidden‐layer outputs are indexed by n = 1, 2, 3, …, L, and the output‐layer outputs are denoted $y_j(k)$, j = 1, 2, 3, …, M. The entire procedure depicted in Figure 1 can be characterized as:

| (1) |

where ψ(*) represents the activation function.

Figure 1.

CRNs‐based error back propagation neural network.

CRNs‐based input layer weighted sum (including threshold) calculation:

| (2) |

CRNs‐based hidden layer activation function: Taking the activation function $\psi(\cdot) = (\cdot)^2$ as an example, the corresponding CRNs can be described as follows:

| (3) |

CRNs‐based output layer activation function: Taking the activation function $\psi(\cdot) = (\cdot)^2$ as an example, the corresponding CRNs are:

| (4) |

CRNs‐based output layer weighted sum (including threshold) calculation:

| (5) |

CRNs‐based weight update mechanism: The weight update of the back‐propagation neural network can be achieved utilizing the gradient descent algorithm:

| (6) |

where α (0 < α < 1) is the learning step size, and the remaining two terms in Equation (6) are the back‐propagated errors.

Remark 1

The relationship between the neural network weight update and the loss function Loss can be expressed as follows:

| (7) |

Substituting Equation (7) into Equation (6), the expression can be reformulated as:

| (8) |
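Equations (6)–(8) describe a standard gradient‐descent weight update. As a plain‐software illustration only (not the CRN implementation), the sketch below trains a single unit that uses the square activation ψ(z) = z², the example activation of Equations (3) and (4). It assumes a loss of 0.5(y − d)² averaged over samples; the function name, data, initial values, and learning rate are hypothetical.

```python
def train_square_neuron(data, w, b, alpha=0.2, epochs=2000):
    """Full-batch gradient descent for one unit y = psi(w . x + b) with
    psi(z) = z**2 and Loss = mean over samples of 0.5 * (y - d)**2.
    Returns the final weights, the final threshold, and the loss history."""
    w = list(w)
    n = len(data)
    history = []
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        loss = 0.0
        for x, d in data:
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = z * z - d              # output error y - d
            loss += 0.5 * err * err
            g = err * 2.0 * z            # dLoss/dz = (y - d) * psi'(z), psi'(z) = 2z
            for i, xi in enumerate(x):
                grad_w[i] += g * xi
            grad_b += g
        history.append(loss / n)         # mean loss before this update
        # Gradient-descent step: parameter <- parameter - alpha * dLoss/dparameter
        w = [wi - alpha * gi / n for wi, gi in zip(w, grad_w)]
        b -= alpha * grad_b / n
    return w, b, history


if __name__ == "__main__":
    grid = [i / 4 for i in range(5)]
    # Hypothetical target produced by the same model: d = (0.6*x1 + 0.3*x2 + 0.1)**2
    data = [((x1, x2), (0.6 * x1 + 0.3 * x2 + 0.1) ** 2) for x1 in grid for x2 in grid]
    w, b, history = train_square_neuron(data, w=[0.2, 0.2], b=0.2)
    print("weights:", w, "threshold:", b)
    print("initial loss:", history[0], "final loss:", history[-1])
```

In the CRN version the same update is carried by reaction kinetics rather than by an explicit loop, so this sketch only shows the arithmetic that the reactions are meant to reproduce.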

CRNs‐based weight update mechanism can be described as:

| (9) |

where $k_m$ (m = 5, 7, 9, 11, 13) are the forward reaction rates and $k_n$ (n = 6, 8, 10, 12, 14) are the reverse reaction rates. The initial concentrations of [$W_{in}$, $La_{in}$, $Lb_{in}$], [$V_{nj}$, $Lc_{in}$, $Ld_{in}$], [$S_n$, $Le_n$, $Lf_n$], [$y_n$, $Lg_n$, $Lh_n$], and [$Y_j$, $Lm_j$, $Ln_j$] satisfy the following conditions:

| (10) |

According to Reactions (2)–(5), the ordinary differential equations (ODEs) of species $I_n$ and the other layer species can be written as:

| (11) |

When the concentrations of $I_n$ and the other layer species reach steady‐state equilibrium, that is, when all of their time derivatives are zero, the following results are obtained:

| (12) |

2.1.2. Realizing Multi‐Combination Variable Output Using Augmented Matrix Neural Network

Figure 2 depicts the structure of a back propagation neural network based on the augmented matrix. The augmented matrix shown in the left sub‐figure indirectly controls the network's input parameter settings through multiplication, allowing the network to regulate its output with respect to multiple parameter combinations.

Figure 2.

Error back propagation neural network architecture diagram based on augmented matrix.

The functional relationship between the input and output layers of the right sub‐figure has been rigorously established in Equation (12). Applying the augmented matrix parameter to the first sub‐equation is equivalent to introducing an additional parameter ξ that multiplies each input, where ξ takes a value of either 0 or 1. Note that the augmented matrix in Figure 2 specifically refers to an augmented identity matrix, constructed by appending an additional row of ones to the conventional identity matrix. This matrix configuration is a critical implementation aspect of the proposed method.
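The augmented identity matrix and the 0/1 masking it applies to the inputs can be illustrated with a few lines of Python. This is a minimal sketch of the input‐selection step only, not of the DNA implementation; the function names are hypothetical.

```python
def augmented_identity(n):
    """Return the (n + 1) x n augmented identity matrix: the n x n identity
    with one extra row of ones appended (entries play the role of xi in {0, 1})."""
    rows = [[1 if r == c else 0 for c in range(n)] for r in range(n)]
    rows.append([1] * n)
    return rows


def masked_inputs(xi_matrix, x):
    """Multiply each input x_i by the matching xi entry, row by row.
    Row r is the input vector presented to the network for the r-th output."""
    return [[xi * xv for xi, xv in zip(row, x)] for row in xi_matrix]


if __name__ == "__main__":
    A = augmented_identity(3)
    for row in masked_inputs(A, [0.5, 2.0, 1.5]):
        print(row)
    # The first three rows keep one variable each; the last row keeps all of them.
```

Each row of the matrix therefore yields one network output: the identity rows give single‐variable outputs, and the appended row of ones gives the output for all variables together.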

Assuming that the augmented matrix is the augmented identity matrix, the following results can be obtained:

| (13) |

Equation (12) describes the relationship between each neural network output $d_j$ and the input parameters $x_i$. Combining Equations (12) and (13) gives the following neural network output matrix:

| (14) |

2.2. Principle of CRNs‐Based Partial Derivative Calculation

Further analysis of Equation (14) yields:

| (15) |

In Equation (15), $d_{combi}(x_1, x_2, x_3, \ldots, x_N)$ denotes the sum of the composite terms in the network output, that is, the terms that involve at least two of the variables $x_1, x_2, x_3, \ldots, x_N$. If $d_{combi}(x_1, x_2, x_3, \ldots, x_N) = 0$, the function learned by the neural network contains no composite terms; if $d_{combi}(x_1, x_2, x_3, \ldots, x_N) \neq 0$, it does. Whether composite terms are present must therefore be taken into account when computing the partial derivatives with respect to $x_1, x_2, x_3, \ldots, x_N$.
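For a polynomial output, the composite part can be isolated from evaluations in which the inputs are masked in the same way as the rows of the augmented identity matrix. The sketch below assumes, for illustration only (the surviving text does not show Equation (15) itself), that the identity rows give f with one variable kept and the others set to zero, and that the appended row gives f with all variables kept. Because every single‐variable evaluation repeats the constant term, that term is added back (N − 1) times. The function name and test polynomial are hypothetical.

```python
def composite_part(f, x):
    """Value at point x of the terms of polynomial f that involve at least two
    variables, computed only from masked evaluations of f."""
    n = len(x)
    full = f(list(x))                  # all inputs kept (appended row of ones)
    zero = f([0.0] * n)                # all inputs masked: constant term only
    singles = 0.0
    for i in range(n):                 # one input kept (identity rows)
        xi_only = [x[j] if j == i else 0.0 for j in range(n)]
        singles += f(xi_only)
    # Each single-variable evaluation includes the constant term once, so add it back (n - 1) times.
    return full - singles + (n - 1) * zero


if __name__ == "__main__":
    # Hypothetical output with composite part d_combi(x, t) = x*t + (x*t)**2
    f = lambda v: 2.0 + v[0] ** 2 + 3.0 * v[1] + v[0] * v[1] + (v[0] * v[1]) ** 2
    x, t = 1.5, 2.0
    print(composite_part(f, [x, t]), x * t + (x * t) ** 2)
```

A result of zero at generic points indicates that no composite terms are present, which is the case handled in Section 2.2.1.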

According to the partial derivative calculation principle, the partial derivatives of the neural network output are:

| (16) |

2.2.1. Partial Derivatives Calculation of Single Variable Terms

If a function learned by the neural network does not include any composite terms, that is d combi (x 1, x 2, x 3, …, x N ) = 0, the following considerations are necessary when calculating partial derivatives:

| (17) |

where the single‐variable terms are the first N outputs of the neural network in Equation (14).

The membrane diffusion‐based partial derivative process shown in Figure 3 consists of two stages: a first‐order partial derivative and a second‐order partial derivative. The second‐order derivative is obtained by applying the derivative calculation again to the result of the first‐order stage.

Figure 3.

Architecture diagram of the partial differential derivation solution based on membrane diffusion theory.

Consider the first‐order derivative CRN based on membrane diffusion, which consists of one input, one intermediate species, and two outputs. The external input $U_{a\text{-ext}}$ is present in large quantities outside the membrane, so its concentration is unaffected by the reaction dynamics. Once it passes through the membrane, it is labeled $U_{a\text{-in}}$. $U_{a\text{-ext}}$ catalyzes the production of one output species and $U_{a\text{-in}}$ catalyzes the production of the other, and a fast annihilation reaction removes the two output species in pairs.

The core first‐order derivative CRN consists of the following 12 reactions:

| (18) |

Applying mass action kinetics to the first seven reactions in Equation (18) gives:

| (19) |

Assuming , Equation (20) can be obtained from Equation (19):

| (20) |

As input, we use a sine wave offset to remain positive, $U_{a\text{-ext}}(t) = 1 + \sin(t)$, whose CRN representation is:

| (21) |

The parameters l and fast represent the catalytic and annihilation reaction rates, respectively, and are set to l = 1.0 and fast = $10^5$. Figure 4a depicts the offset sine wave generated by Equation (21), and Figure 4c shows the simulated first‐order derivative of the sine wave obtained with membrane diffusion theory.
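Remark 2 (below) shows that, for fast diffusion, the scaled concentration gap $k_{diff}(U_{a\text{-ext}} - U_{a\text{-in}})$ approximates $dU_{a\text{-ext}}/dt$. The sketch below checks this numerically for the offset sine input. It assumes first‐order membrane transport $dU_{a\text{-in}}/dt = k_{diff}(U_{a\text{-ext}} - U_{a\text{-in}})$, the form whose solution is the exponential kernel used in Remark 2, with a hypothetical k_diff = 50 and zero initial inner concentration; it deliberately omits the dual output species and annihilation reactions of Equation (18).

```python
import math


def simulate_membrane_derivative(u_ext, k_diff=50.0, dt=1e-4, t_end=10.0):
    """Forward-Euler integration of dU_in/dt = k_diff * (U_ext(t) - U_in).

    Returns a list of (t, estimate) pairs, where estimate = k_diff * (U_ext - U_in)
    approximates dU_ext/dt once the start-up transient (time scale 1/k_diff) has decayed.
    """
    u_in = 0.0  # assumed initial condition: nothing has crossed the membrane yet
    t = 0.0
    samples = []
    for _ in range(int(round(t_end / dt))):
        gap = u_ext(t) - u_in
        samples.append((t, k_diff * gap))
        u_in += dt * k_diff * gap  # diffusion through the membrane
        t += dt
    return samples


if __name__ == "__main__":
    u_ext = lambda t: 1.0 + math.sin(t)  # offset sine input of Equation (21)
    samples = simulate_membrane_derivative(u_ext)
    # Compare with the exact derivative cos(t) after the transient has died out
    err = max(abs(est - math.cos(t)) for t, est in samples if t >= 1.0)
    print(f"max |estimate - cos(t)| for t >= 1: {err:.4f}")
```

Raising k_diff shrinks the residual error roughly as 1/k_diff, in line with the second term of the expansion in Remark 2, at the cost of a stiffer system.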

Figure 4.

CRN simulation and derivative calculation for the offset sine and cosine inputs. a) CRN representation of the offset sine wave $1 + \sin(t)$, b) CRN representation of the offset cosine wave, c) simulated derivative of the offset sine wave, and d) simulated derivative of the offset cosine wave.

An offset cosine wave that remains positive is also used as input; its CRN representation is:

| (22) |

Two parameters in Equation (22) represent the catalytic and annihilation reaction rates, respectively. Figure 4b illustrates the cosine output represented by Equation (22), while Figure 4d displays the simulated first‐order derivative of the cosine function obtained with membrane diffusion theory.

Remark 2

Assuming , the first formula of Equation (19) can be symbolically integrated and transformed into

| (23) |

Let u = t − s; then s = t − u and ds = −du. When s = 0, u = t, and when s = t, u = 0. Equation (23) can then be rewritten as:

| (24) |

Since $\exp(-k_{diff} s) \to 0$ as $s \to \infty$, the upper limit of the integral can be extended to ∞; the neglected tail is exponentially small once $t \gg 1/k_{diff}$.

| (25) |

As $k_{diff} \to \infty$, the exponential kernel concentrates at s = 0. Assuming $U_{a\text{-ext}}$ is infinitely differentiable, a Taylor expansion of $U_{a\text{-ext}}(t - s)$ about s = 0 gives:

| (26) |

Substituting Equation (26) into Equation (25) yields Equation (27):

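| (27) |

The integral $\int_0^{\infty} (-s)^n \exp(-k_{diff} s)\,ds$ can be rewritten using integration by parts. Let $u = (-s)^n$ and $dv = \exp(-k_{diff} s)\,ds$; then $du = -n(-s)^{n-1}\,ds$ and $v = -\exp(-k_{diff} s)/k_{diff}$.

| (28) |

When $s \to \infty$, $uv \to 0$; when $s = 0$, $uv = 0$ for $n \geq 1$. Hence the boundary term vanishes, and $\int_0^{\infty} (-s)^n e^{-k_{diff} s}\,ds = -\frac{n}{k_{diff}} \int_0^{\infty} (-s)^{n-1} e^{-k_{diff} s}\,ds$. Equation (29) can be developed further: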

| (29) |

Equation (29) can be substituted into Equation (27), yielding the following result:

| (30) |
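Carrying the integration‑by‑parts recursion to closed form shows compactly what Equations (29) and (30) express. The worked derivation below assumes that Equation (25) has the form $U_{a\text{-in}}(t) \approx \int_0^{\infty} k_{diff}\, e^{-k_{diff} s}\, U_{a\text{-ext}}(t-s)\,ds$; the exact prefactors in the original equations may differ.

```latex
\begin{aligned}
I_n &:= \int_0^{\infty} (-s)^n e^{-k_{diff} s}\,ds
     = -\frac{n}{k_{diff}}\, I_{n-1}, \qquad I_0 = \frac{1}{k_{diff}}
\;\Longrightarrow\; I_n = \frac{(-1)^n\, n!}{k_{diff}^{\,n+1}},\\[4pt]
U_{a\text{-in}}(t) &\approx k_{diff} \sum_{n=0}^{\infty} \frac{U_{a\text{-ext}}^{(n)}(t)}{n!}\, I_n
 = \sum_{n=0}^{\infty} \frac{(-1)^n}{k_{diff}^{\,n}}\, U_{a\text{-ext}}^{(n)}(t)
 = U_{a\text{-ext}}(t) - \frac{1}{k_{diff}}\,\frac{dU_{a\text{-ext}}}{dt} + O\!\left(k_{diff}^{-2}\right),\\[4pt]
k_{diff}\bigl(U_{a\text{-ext}}(t) - U_{a\text{-in}}(t)\bigr) &\approx \frac{dU_{a\text{-ext}}}{dt} - \frac{1}{k_{diff}}\,\frac{d^2U_{a\text{-ext}}}{dt^2} + O\!\left(k_{diff}^{-2}\right).
\end{aligned}
```

The last line is the reason the scaled concentration gap across the membrane serves as a derivative estimate, with an error of order $1/k_{diff}$.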

Substituting Equation (30) into Equation (20), Equation (31) can be obtained:

| (31) |

Further simplification yields Equation (32).

| (32) |

Introducing a delay ε and setting , the following result can be achieved:

| (33) |

Here, $o(\tau)$ is the higher‐order infinitesimal in τ generated in the preceding simplification, and $o(\varepsilon^2)$ is the higher‐order infinitesimal generated by the ε‐related approximation.

As illustrated in Figure 5, the subtraction operation consists of the last five reactions in Equation (18), whose ordinary differential equations (ODEs) are:

| (34) |

Assuming that the reaction rates satisfy $\alpha_a = \beta_a = \delta_a$ and that $U_{nn} \to 0$, when the reaction system described by Equation (18) reaches steady‐state equilibrium, the time derivatives on the left‐hand side of Equation (34) can be set to zero, giving:

| (35) |

Combining Equation (20), Equation (36) can be obtained:

| (36) |

According to Equation (36), when reaction system (18) reaches a steady state, it produces the first‐order derivative result $U_{mm}$ of $U_{a\text{-ext}}$:

| (37) |
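The surviving text states that the subtraction operator uses five reactions with equal rates $\alpha_a = \beta_a = \delta_a$ and that $U_{nn} \to 0$ at steady state. A generic five‑reaction subtractor with those properties is sketched below: two catalytic productions, a fast pairwise annihilation, and two first‑order degradations. The reaction list, the species names A, B, M, N, and all numerical values are assumptions for illustration and may differ from the reactions in Equation (18).

```python
def simulate_subtractor(a, b, alpha=1.0, fast=100.0, dt=1e-3, t_end=20.0):
    """Forward-Euler integration of a generic subtraction CRN:
        A -> A + M (alpha),  B -> B + N (alpha),  M + N -> 0 (fast),
        M -> 0 (alpha),      N -> 0 (alpha),
    with A and B held constant. Returns the final (M, N)."""
    m, n = 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        annihilate = fast * m * n
        dm = alpha * a - annihilate - alpha * m
        dn = alpha * b - annihilate - alpha * n
        m += dt * dm
        n += dt * dn
    return m, n


if __name__ == "__main__":
    m, n = simulate_subtractor(a=3.0, b=1.0)
    # M - N relaxes to a - b; with fast annihilation, N ends up close to zero.
    print(m, n, m - n)
```

Because the annihilation term cancels in d(M − N)/dt, the difference M − N relaxes to a − b at rate α regardless of the annihilation rate; a large annihilation rate only makes the smaller species (here N) vanish, mirroring the assumption $U_{nn} \to 0$.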

Combining Equations (20), (30) and (37), the following result can be obtained:

| (38) |

Similarly, the second‐order derivative output can be derived as:

| (39) |

The parameter $k_{diff2}$ denotes the diffusion rate in the second‐order derivative stage. Equation (38) yields the first‐order derivative result $O_{mm}$ of $U_{a\text{-in}}$, as well as the second‐order derivative of $U_{a\text{-ext}}$:

| (40) |

The differential CRN aims to compute, for a given function f, the result of applying f to an unknown input signal $U_{a\text{-ext}}(t)$. However, the differential CRN provides only an approximation of the input signal's derivative. The first‐order calculation equation can be expressed as:

| (41) |

The second‐order calculation equation can be described by Equation (42).

| (42) |

Figure 5.

Block diagram of the subtraction operator.

Let us consider the cube function depicted in Figure 6:

| (43) |

The input is again the sine wave offset to remain positive, $U_{a\text{-ext}}(t) = 1 + \sin(t)$. The theoretical higher‐order derivatives of 1 + sin(t), used to verify the results obtained with membrane diffusion theory, are:

| (44) |

Then compute the output to the second order and first order:

| (45) |
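Assuming the cube function of Equation (43) is $f(U) = U^3$, the reference values that the higher‐order derivative CRN should approach follow from the chain rule: $df/dt = 3U^2U'$ and $d^2f/dt^2 = 6U(U')^2 + 3U^2U''$. The sketch below evaluates them for $U(t) = 1 + \sin(t)$ and cross‐checks them with central differences; it is a plain numerical check with hypothetical function names, not the CRN itself.

```python
import math


def cube_derivatives(t):
    """Analytic first and second time derivatives of f(U(t)) = U(t)**3
    with U(t) = 1 + sin(t), obtained with the chain rule."""
    u, du, d2u = 1.0 + math.sin(t), math.cos(t), -math.sin(t)
    first = 3.0 * u**2 * du
    second = 6.0 * u * du**2 + 3.0 * u**2 * d2u
    return first, second


def finite_difference(t, h=1e-4):
    """Central-difference estimates of the same derivatives, for cross-checking."""
    f = lambda s: (1.0 + math.sin(s)) ** 3
    first = (f(t + h) - f(t - h)) / (2 * h)
    second = (f(t + h) - 2 * f(t) + f(t - h)) / h**2
    return first, second


if __name__ == "__main__":
    for t in (0.0, 1.0, 2.5):
        print(t, cube_derivatives(t), finite_difference(t))
```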

Figure 6.

Calculation of higher‐order derivatives based on membrane diffusion theory.

2.2.2. Partial Derivatives Calculation of Compound Terms

If the function learned by the neural network contains composite terms, i.e., $d_{combi}(x_1, x_2, x_3, \ldots, x_N) \neq 0$, the mutual influence between distinct variables in those terms must be taken into account when calculating partial derivatives.

Case 1

If $d_{combi}(x, t) = xt + x^2t^2$, the corresponding CRNs can be described by:

| (46) |

Using mass action kinetics, the ODEs of Equation (46) can be derived:

| (47) |

When reaction system (46) reaches a steady state, the time derivatives on the left side of Equation (47) can be set to zero, resulting in Equation (48).

| (48) |

Applying the rules of partial differentiation yields the following results:

| (49) |

The first‐order partial derivative of a single variable x computed via CRNs is given as:

| (50) |

The ODEs of Equation (50) are indicated as:

| (51) |

When the reaction system (50) reaches a steady state, it can be obtained:

| (52) |

The outcome of Equation (52) is consistent with that of Equation (49). Furthermore, the CRNs that compute the first‐order partial derivatives with respect to both x and t can be represented as:

| (53) |

Remark 3

Assuming that the composite term is $d_{combi}(x, t) = a\,xt + b\,xt^2 + c\,x^2t^2 + d\,x^3t + e\,x^3t^2 + f\,x^3t^3$, the partial differential derivation module gives the following result:

| (54) |

From the expressions for the partial derivatives with respect to x and t in Equation (54), the calculation rule for the first‐order partial derivative with respect to x or t can be derived:

| (55) |

The CRNs calculated by the first‐order partial derivative of a single variable x can be expressed as:

| (56) |

where the multiplying factor in the reaction is the exponent of the variable x in $y_{combi\text{-current}}$.

The CRNs calculated by the first‐order partial derivative of a single variable t can be described as:

| (57) |

where the multiplying factor in the reaction is the exponent of the variable t in $y_{combi\text{-current}}$. From the mixed partial derivative expression in Equation (54), the calculation rule for the first‐order partial derivatives with respect to both x and t can be obtained:

| (58) |

The CRNs generated using the first‐order partial derivatives of variables x and t could be represented as:

| (59) |

where the multiplying factor is the product of the exponents of the variables x and t in $y_{combi\text{-current}}$.
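The rules above reduce partial differentiation of a composite term to multiplying each coefficient by the relevant exponent, or by the product of the two exponents for the mixed derivative, and lowering those exponents by one. The sketch below applies these rules symbolically. It assumes that a composite term is stored as a dictionary of monomials {(p, q): c} meaning c·x^p·t^q; this representation and the function names are hypothetical stand‐ins for the CRN implementation.

```python
from typing import Dict, Tuple

# A polynomial in x and t stored as {(p, q): c}, meaning c * x**p * t**q.
Poly = Dict[Tuple[int, int], float]


def d_dx(poly: Poly) -> Poly:
    """Partial derivative in x: multiply each coefficient by the x exponent p."""
    return {(p - 1, q): c * p for (p, q), c in poly.items() if p > 0}


def d_dt(poly: Poly) -> Poly:
    """Partial derivative in t: multiply each coefficient by the t exponent q."""
    return {(p, q - 1): c * q for (p, q), c in poly.items() if q > 0}


def d_dxdt(poly: Poly) -> Poly:
    """Mixed partial derivative: multiply each coefficient by p * q."""
    return {(p - 1, q - 1): c * p * q for (p, q), c in poly.items() if p > 0 and q > 0}


if __name__ == "__main__":
    case1 = {(1, 1): 1.0, (2, 2): 1.0}  # xt + x^2 t^2 (Case 1)
    print(d_dx(case1))    # {(0, 1): 1.0, (1, 2): 2.0}, i.e. t + 2 x t^2
    print(d_dt(case1))    # {(1, 0): 1.0, (2, 1): 2.0}, i.e. x + 2 x^2 t
    print(d_dxdt(case1))  # {(0, 0): 1.0, (1, 1): 4.0}, i.e. 1 + 4 x t
```

The same functions apply unchanged to the six‐term composite polynomial of Remark 3, since each monomial is handled independently.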

2.3. CRNs‐Based Objective Function Verification Module

Figure 7 depicts the architecture of the augmented matrix neural network for solving partial differential equations, built around the objective function verification module. Adding this module has two advantages: first, it characterizes the network's function‐learning process by dynamically computing the real‐time value of the objective function (signal P* in Figure 7); second, it computes the error between the network output and the expected value of the objective function (signal Error 1 in Figure 7).

Figure 7.

Augmented matrix neural network based on function verification module for solving partial differential equations.

Assume that the objective function to be learned by the neural network is a typical quadratic function:

| (60) |

The CRNs‐based target function module in the function verification module can be stated as follows:

| (61) |

The CRNs‐based error comparator module can be described as:

| (62) |

Figure 7 illustrates how the equation error Error 2 is derived while computing the partial differential equation. Combining it with the target error Error 1 specified by Equation (62) gives the computation error Loss of the entire system:

| (63) |

2.4. Augmented Matrix Neural Network for Solving Biological Brusselator Partial Differential Equation

2.4.1. Biological Brusselator Partial Differential Equation Based on Diffusion Term

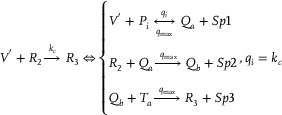

The Brusselator model is a classic theoretical model for studying the dissipative structure of nonlinear chemical systems and the formation of biological patterns. The Brusselator model (Figure 8) based on CRNs can be described as:

| (64) |

Figure 8.

Biological Brusselator reaction model.

Assuming constant concentrations of substances A and B, the concentrations of the two intermediate species vary in time and space; including diffusion terms on a one‐dimensional domain, their dynamics can be described as:

| (65) |

where the two diffusion coefficients belong to the two intermediate species, respectively, and $k_a$, $k_b$, $k_c$, and $k_d$ are positive reaction rate constants. Homogeneous Neumann boundary conditions are imposed:

| (66) |

Nonnegative initial conditions are imposed:

| (67) |

The Brusselator reaction‐diffusion system has no known analytical solution and must be solved numerically. In the absence of diffusion, if Equation (65) is to admit a steady‐state solution, the parameters (a, b) must satisfy:

| (68) |

With the parameters of the biological Brusselator model in Equation (64) established, the initial concentrations of the two input species are set to 3 nM, and the reaction rates are set to $k_a$ = 0.003, $k_b$ = 0.002, $k_c$ = 0.01, and $k_d$ = 0.001. The simulation results are depicted in Figure 9.

Figure 9.

Simulation results of the biological Brusselator model.
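To make the well‐mixed dynamics behind Figure 9 concrete, the sketch below integrates the Brusselator rate equations with the rate constants quoted above. It assumes that Equation (64) is the standard four‐reaction Brusselator scheme, that the two 3 nM input species are A and B (held constant), that both intermediates start at zero, and that time is in seconds; none of these details is confirmed by the surviving text, so the output should not be read as a reproduction of Figure 9.

```python
def simulate_brusselator(a=3.0, b=3.0, ka=0.003, kb=0.002, kc=0.01, kd=0.001,
                         dt=0.1, t_end=20000.0):
    """Forward-Euler integration of the well-mixed Brusselator
        A -> X (ka),  B + X -> Y + D (kb),  2X + Y -> 3X (kc),  X -> E (kd),
    with [A] = a and [B] = b held constant. Returns the final (X, Y)."""
    x, y = 0.0, 0.0  # assumed initial concentrations of the intermediates
    for _ in range(int(round(t_end / dt))):
        dx = ka * a - kb * b * x + kc * x * x * y - kd * x
        dy = kb * b * x - kc * x * x * y
        x += dt * dx
        y += dt * dy
    return x, y


if __name__ == "__main__":
    x, y = simulate_brusselator()
    # Fixed point of these ODEs: X* = ka*a/kd, Y* = kb*b/(kc*X*)
    x_star = 0.003 * 3.0 / 0.001
    y_star = 0.002 * 3.0 / (0.01 * x_star)
    print(x, y, x_star, y_star)
```

With these values the fixed point is a stable node, so the trajectory settles on it; the slowest relaxation rate is on the order of $k_d$, which is why a long simulation horizon is used.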

2.4.2. Solving the Biological Brusselator Partial Differential Equations

The Brusselator RD (reaction‐diffusion) system described by Equation (65) primarily involves differential calculations, second‐order partial derivative calculations, addition and subtraction operations, and so on. To simplify the partial differential equation solution process, the first equation in Equation (65) is chosen as the research target in the process. The major goal of this research is to solve partial differential equations using the proposed augmented matrix‐based back propagation neural network and the partial differential calculation theory.

| (69) |

Assume that the remaining parameters of Equation (69) are constants and that the unknown concentration is a function of x and t; this function also serves as the output of the augmented matrix‐based back propagation neural network. Equation (69) can then be rewritten as:

| (70) |

Representing the terms of Equation (70) by the corresponding species, the CRNs of Equation (70) can be expressed as:

| (71) |

It is worth noting that the actual output species of the chemical system given by Equation (71) is Error 2, whereas Equation (70) produces R 11. The two output species demonstrate the following relationship:

| (72) |

When the reaction system is stable, the following result can be obtained through Equation (72).

| (73) |

From the perspective of solving partial differential equations, the final concentration of substance Error 2 can be utilized to represent the equation loss error.

3. Implementations With DSD Reaction Networks

In Section 2.1, an augmented matrix neural network (AMN) is built from chemical reaction networks (CRNs) to produce multivariable combination terms. Section 2.2 applies the membrane diffusion and division calculation principles to compute, with CRNs, the partial derivatives of single‐variable and combination terms. Sections 2.3 and 2.4 design the objective function verification module, study the biological Brusselator model with diffusion terms, and solve the biological Brusselator partial differential equation with the augmented matrix neural network and the verification module.

To simplify the description, this section focuses on describing the DNA strand displacement reaction implementation pathways of typical catalytic, degradation, and annihilation reactions in different functional modules.

3.1. DNA Implementation of Back Propagation Neural Network Based on Augmented Matrix

Catalysis reaction model 1 (Figure 10)

| (74) |

Figure 10.

Schematic representation of DNA reactions of Equation (74).

Degradation reaction model (Figure 11)

| (75) |

Figure 11.

Schematic representation of DNA reactions of Equation (75).

Annihilation reaction module (Figure 12)

| (76) |

Figure 12.

Schematic representation of DNA reactions of Equation (76).

Catalysis reaction model 2 (Figure 13)

| (77) |

Figure 13.

Schematic representation of DNA reactions of Equation (77).

Adjustment reaction model (Figure 14)

| (78) |

Figure 14.

Schematic representation of DNA reactions of Equation (78).

3.2. DNA Implementation of Partial Derivative Calculation

3.2.1. Calculation of Partial Derivatives of Single Variable Terms

Catalysis reaction model (Figure 15)

| (79) |

Figure 15.

Schematic representation of DNA reactions of Equation (79).

Annihilation reaction module

| (80) |

Degradation reaction model (Figure 16)

| (81) |

Figure 16.

Schematic representation of DNA reactions of Equation (81).

3.2.2. Calculation of Partial Derivatives of Compound Terms

Catalysis reaction model

| (82) |

Degradation reaction model 1

| (83) |

Degradation reaction model 2

| (84) |

3.3. DNA Implementation of Partial Differential Equation Solving & Objective Function Verification

Catalysis reaction model 1

| (85) |

Degradation reaction model

| (86) |

Catalysis reaction model 2

| (87) |

Catalysis reaction model 3

| (88) |

Catalysis reaction model 4

| (89) |

Remark 4

For the DNA realization, Set AS listed in Table 1 represents the auxiliary substances involved in the reactions, whereas Set IS denotes intermediate substances. The subset Set IW comprises inert waste species that do not engage in subsequent interactions with other species. In addition, $C_{max}$ is the initial concentration of the auxiliary substances, and $q_{max}$ is the maximum strand‐displacement rate. The actual reaction rate $q_i$ is obtained from the corresponding DNA implementations. To mitigate the effect of auxiliary species consumption on the kinetics, the concentration of Set AS in Equations (74)–(89) is initialized at its maximum value.

Table 1.

| Classification | Species |
|---|---|
| Set AS (auxiliary substances) | |
| Set IS (intermediate substances) | |
| Set IW (inert wastes) | |

4. Results

Based on the design described above, the augmented matrix‐based neural network scheme for solving the biological Brusselator PDE can be implemented through the DSD mechanism, with the objective function verification module used for verification. The feasibility of the DSD implementation was confirmed with the Visual DSD software. Tables 2–4 list all reaction rates and total substrate values for three parts of the design: the CRN‐based neural network with augmented matrices, the partial derivative calculations (which integrate membrane diffusion and division operations), and the Brusselator PDE. The tables use the feasible values C_max = 1000 nM and q_max = 10⁷ M⁻¹ s⁻¹. The simulation results are then examined with respect to three quantities: the overall error Loss, the objective function verification module error Error 1, and the PDE computation error Error 2. Table 5 gives a quantitative comparison of the simulated and theoretical values in the biological PDE calculations.
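Remark 4 fixes C_max and q_max but leaves the mapping to each q_i to the DNA implementations. The sketch below illustrates one possible mapping. It assumes the standard pseudo‑first‑order scaling used when compiling CRNs to DSD (Soloveichik et al.), which is not confirmed as this paper's procedure. Because the auxiliary species stay near C_max, a strand‑displacement step with second‑order rate q_i acting on species X runs at about q_i·C_max·[X]. A formal first‑order rate k is therefore matched by q_i = k / C_max, which must not exceed q_max. The function name is hypothetical, and the rates are taken from Tables 2 and 3 only as examples.

```python
M_PER_NM = 1e-9  # 1 nM expressed in mol/L


def first_order_to_dsd_rate(k_per_s, c_max_nM=1000.0, q_max=1e7):
    """Map a formal first-order rate k (1/s) to a DSD rate q_i (1/M/s).

    Assumes the auxiliary gate stays close to C_max, so the bimolecular
    step q_i * [gate] * [X] behaves like k * [X] with k = q_i * C_max.
    """
    c_max_M = c_max_nM * M_PER_NM          # convert C_max from nM to M
    q_i = k_per_s / c_max_M
    if q_i > q_max:
        raise ValueError(f"q_i = {q_i:.3g} /M/s exceeds q_max = {q_max:.3g} /M/s")
    return q_i


if __name__ == "__main__":
    # Example formal rates from Tables 2 and 3.
    for name, k in [("k_1", 6.0e-5), ("k_diff", 4.0e-4), ("k", 3.0e-3)]:
        print(name, round(first_order_to_dsd_rate(k), 6), "/M/s")
```

With C_max = 1000 nM, these three rates call for q_i of about 60, 400 and 3000 /M/s. All three are far below q_max = 10⁷ /M/s.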

Table 2.

Parametric representation for augmented matrix‐based neural network response rates and weights.

| Parameters | Descriptions | Nominal values |
|---|---|---|
| k_1 | Input layer rate | 6.0 × 10⁻⁵ s⁻¹ |
| k_2 | Hidden layer rate | 6.0 × 10⁻⁵ s⁻¹ |
| k_3 | Output layer rate | 6.0 × 10⁻⁵ s⁻¹ |
| k_4 | Activation function rate | 6.0 × 10⁻⁵ s⁻¹ |
| k_5, k_7, k_9, k_11, k_13 | Forward reaction rate | 3.65 × 10⁻⁶ s⁻¹ |
| k_6, k_8, k_10, k_12, k_14 | Reverse reaction rate | 9.0 × 10⁻⁵ s⁻¹ |
| W_11 | Input layer weight | 0.63 |
| W_12 | Input layer weight | 0.24 |
| W_13 | Input layer weight | 0.06 |
| W_14 | Input layer weight | 0.42 |
| W_21 | Input layer weight | 0.39 |
| W_22 | Input layer weight | 0.36 |
| W_23 | Input layer weight | 0.06 |
| W_24 | Input layer weight | 0.26 |
| W_31 | Hidden layer weight | 0.11 |
| W_32 | Hidden layer weight | 0.42 |
| W_33 | Hidden layer weight | 0.09 |
| W_34 | Hidden layer weight | 0.01 |
| W_41 | Hidden layer weight | 0.16 |
| W_42 | Hidden layer weight | 0.06 |
| W_43 | Hidden layer weight | 0.35 |
| W_44 | Hidden layer weight | 0.38 |
| W_51 | Output layer weight | 0.19 |
| W_52 | Output layer weight | 0.21 |

Table 3.

Parametric representation for partial derivative calculations.

| Parameters | Descriptions | Nominal values |
|---|---|---|
| k_diff | Reaction rate | 4.0 × 10⁻⁴ s⁻¹ |
| k | Reaction rate | 3.0 × 10⁻³ s⁻¹ |
| k_fast | Degradation reaction rate | 10 |
| α_a | Catalytic reaction rate | 5.0 × 10⁻⁴ s⁻¹ |
| β_a | Catalytic reaction rate | 5.0 × 10⁻⁴ s⁻¹ |
| δ_a | Degradation reaction rate | 5.0 × 10⁻⁴ s⁻¹ |
| γ_a | Annihilation reaction rate | 1.0 |
| k_x | Reaction rate | 6.0 × 10⁻⁴ s⁻¹ |

Table 4.

Parametric representation for the Brusselator partial differential equation.

| Parameters | Descriptions | Nominal values |
|---|---|---|
| D_u | Constant | 0.5 |
| k_a | Constant | 1.0 |
| k_b | Constant | 0.3 |
| k_c | Constant | 0.2 |
| k_d | Constant | 0.1 |
| A′ | Constant | 2 |
| B′ | Constant | 2 |
| V′ | Constant | 1 |

Table 5.

Quantitative comparison of actual simulation results and theoretical values in biological PDE calculations.

| Parameters | Descriptions | Actual concentration (nM) | Theoretical concentration (nM) |
|---|---|---|---|
| Error 1 | Neural network output error | 0 | 0 |
| Error 2 | Partial differential equation (PDE) error | 0.02 | 0 |
| Loss | Overall error | 0.02 | 0 |
| P | Neural network output | 1.34 | 1.34 |
| P* | Target function output | 1.34 | 1.34 |
| | First order partial derivative of variable x | 1.86 | 1.85 |
| | Second order partial derivative of variable x | 2 | 2 |
| | First order partial derivative of variable t | 2.4 | 2.4 |
| U_x | Sum of terms of a single variable x | 0.64 | 0.64 |
| | First order partial derivative of variable x | 1.6 | 1.6 |
| U_combt | Sum of composite terms of variable x | 0.2 | 0.2 |
| | First order partial derivative of variable x | 0.25 | 0.25 |
| U_t | Sum of terms of a single variable t | 0.5 | 0.5 |
| | First order partial derivative of variable t | 2 | 2 |
| | First order partial derivative of variable t | 0.4 | 0.4 |
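The theoretical column of Table 5 is consistent with a single quadratic. Evaluated at x = 0.8, t = 0.5 (the steady‑state point used in the Experimental Section), that quadratic is U = x² + 2t² + 0.5xt. This form is inferred here from the table entries and is an assumption, not a transcription of Equation (95). The hypothetical function below splits U into the single‑variable terms U_x and U_t and the composite term. It writes the composite term as U_comb, standing for the table's U_combt. The script then compares each derivative with the table.

```python
def table5_quantities(x=0.8, t=0.5, a=1.0, b=2.0, c=0.5):
    """Theoretical Table 5 entries for the hypothetical U = a*x**2 + b*t**2 + c*x*t."""
    u_x = a * x ** 2        # single-variable term in x
    u_t = b * t ** 2        # single-variable term in t
    u_comb = c * x * t      # composite (mixed) term
    q = {
        "P": u_x + u_t + u_comb,
        "U_x": u_x,
        "dU_x/dx": 2 * a * x,
        "U_comb": u_comb,
        "dU_comb/dx": c * t,
        "dU_comb/dt": c * x,
        "U_t": u_t,
        "dU_t/dt": 2 * b * t,
    }
    # Totals are the sums of their parts, as described for Figure 20.
    q["dU/dx"] = q["dU_x/dx"] + q["dU_comb/dx"]
    q["dU/dt"] = q["dU_t/dt"] + q["dU_comb/dt"]
    q["d2U/dx2"] = 2 * a    # the mixed term is linear in x, so only a*x**2 contributes
    return q


# Theoretical column of Table 5.
TABLE5_THEORY = {"P": 1.34, "U_x": 0.64, "dU_x/dx": 1.6, "U_comb": 0.2,
                 "dU_comb/dx": 0.25, "dU_comb/dt": 0.4, "U_t": 0.5,
                 "dU_t/dt": 2.0, "dU/dx": 1.85, "dU/dt": 2.4, "d2U/dx2": 2.0}

if __name__ == "__main__":
    computed = table5_quantities()
    for key, expected in TABLE5_THEORY.items():
        print(f"{key:11s} computed={computed[key]:.4f} table={expected}")
```

Every theoretical entry, including P = 1.34, is reproduced. That result supports reading the unlabeled derivative rows in the order they appear in the table.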

4.1. Overall Error Loss

Following the architecture in Figure 8, the overall error Loss is the key metric for assessing how well the augmented matrix‐based neural network solves the biological Brusselator PDE. Loss consists of two parts. Error 1 is the error between the actual output of the DNA‐based neural network and the ideal result of the objective function. Error 2 is the error generated while the neural network solves the PDE. The simulation results are shown in Figure 17.

Figure 17.

Error analysis of the augmented matrix‐based neural network for solving partial differential equations in biological Brusselator.

All three error curves in Figure 17 decrease steadily over time. Error 1 (red curve) falls to zero by t = 20000 s, so the neural network output matches the target precisely. Error 2 (green curve) and Loss (orange curve) stabilize at 0.02 nM. Within this error tolerance, solving the biological Brusselator PDE with the augmented matrix‐based neural network is viable and effective.

4.2. Objective Function Verification Module Error Error 1

This section analyzes Error 1, the error between the augmented matrix‐based neural network output P and the objective function result P*. It also examines the partial derivatives with respect to each variable.

4.2.1. Error 1 Between the Augmented Matrix‐Based Neural Network Output P and the Objective Function Result P*

Figure 18 shows the output error analysis of the augmented matrix‐based neural network. The neural network output P (red curve) reaches the same target concentration level as the target function output P* (green curve), but with a faster response. The neural network output error also decreases toward zero over time, which indicates that the augmented matrix‐based neural network adjusts its weights effectively.

Figure 18.

Neural network output error analysis.

4.2.2. Partial Derivative Calculation of Various Variables

The partial derivatives with respect to each variable are an important measure of the performance of the augmented matrix‐based neural network. Figure 19 shows the first‐order and second‐order partial derivatives of the neural network output with respect to x and t, evaluated at the steady‐state output concentration level.

Figure 19.

Partial derivative calculation analysis of various variables.

4.3. Calculation Error Error 2 in Partial Differential Equations

Solving the biological Brusselator PDE depends on computing the partial derivatives of each variable accurately and on keeping the resulting PDE computation error small. This section examines four quantities:

- the first‐order partial derivative with respect to x;
- the second‐order partial derivative with respect to x;
- the first‐order partial derivative with respect to t;
- the PDE computation error Error 2.

4.3.1. Partial Derivative Calculation Analysis

Figure 20a shows the first‐order partial derivative with respect to x. The total first‐order partial derivative with respect to x (blue curve) is the sum of two parts. The first part is the first‐order partial derivative, with respect to x, of the single‐variable term accumulation U_x (green curve). The second part is the first‐order partial derivative, with respect to x, of the composite term accumulation U_combi (purple curve).
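The exact reactions of Section 3.2, which combine membrane diffusion with a division step, are not reproduced in this text. One generic reading of that idea is sketched below as an assumption, not as the paper's verified network. A copy y of a signal f diffuses across a membrane according to dy/dτ = k(f − y), so k(f − y) tracks df/dτ with a small lag. Dividing the estimate of dU/dτ by the estimate of dx/dτ then gives ∂U/∂x by the chain rule. The sketch applies this to U = x² with x ramping linearly in time τ. The function name, k, r and the step size are illustrative choices in arbitrary units.

```python
def lagged_derivative_ratio(k=1.0, r=0.01, t_end=80.0, dt=1e-3):
    """Estimate dU/dx for U = x**2 from two lagged ("diffused") copies.

    x ramps as x = r * tau. Each copy y obeys dy/dtau = k * (signal - y),
    so k * (signal - y) approximates the signal's time derivative.
    Dividing the two estimates gives dU/dx by the chain rule.
    """
    x, u = 0.0, 0.0
    y_x, y_u = 0.0, 0.0          # lagged copies (the "other side" of the membrane)
    for _ in range(int(round(t_end / dt))):
        y_x += dt * k * (x - y_x)
        y_u += dt * k * (u - y_u)
        x += dt * r              # input ramp
        u = x * x                # quantity whose x-derivative is wanted
    dx_dtau = k * (x - y_x)      # approximately r
    du_dtau = k * (u - y_u)      # approximately 2*x*r, minus a small lag bias
    return x, du_dtau / dx_dtau


if __name__ == "__main__":
    x_end, slope = lagged_derivative_ratio()
    print(f"x = {x_end:.3f}, estimated dU/dx = {slope:.4f}, exact 2x = {2 * x_end:.4f}")
```

For this ramp, the lag leaves a bias of about −2r/k, so the estimate near x = 0.8 is close to 1.58 rather than 1.6. The bias shrinks as diffusion becomes fast relative to the input's rate of change.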

Figure 20.

Partial derivative calculation analysis. a) First order partial derivative of variable x, b) First order partial derivative of variable t, and c) Second order partial derivative of variable x.

The first‐order partial derivative with respect to t likewise consists of two parts. The first is the first‐order partial derivative, with respect to t, of the single‐variable term accumulation U_t. The second is the corresponding derivative of the composite term accumulation U_combi. The resulting first‐order partial derivative with respect to t is shown in Figure 20b.
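Using the theoretical values in Table 5, the two decompositions add up as shown below. The notation is assumed here: U is the total network output, and U_comb is the composite‑term accumulation (written U combt and U combi in the text).

```latex
\frac{\partial U}{\partial x}
  = \frac{\partial U_x}{\partial x} + \frac{\partial U_{\mathrm{comb}}}{\partial x}
  = 1.6 + 0.25 = 1.85,
\qquad
\frac{\partial U}{\partial t}
  = \frac{\partial U_t}{\partial t} + \frac{\partial U_{\mathrm{comb}}}{\partial t}
  = 2 + 0.4 = 2.4
```

The simulated ∂U/∂x in Table 5 is 1.86, which is 0.01 above this sum. The simulated ∂U/∂t matches the sum exactly.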

The second‐order partial derivative with respect to x is obtained by differentiating the first‐order partial derivative with respect to x once more. The simulation results are shown in Figure 20c.

4.3.2. Partial Differential Equation (PDE) Error Error 2

Figure 21 shows that the augmented matrix‐based neural network scheme for solving the biological Brusselator PDE is feasible and effective. Error 2 (blue curve), the error generated by the PDE solving process, decreases over time and settles within the allowable error range of about 0.02 nM (Table 5).

Figure 21.

Partial differential equation (PDE) error analysis.

5. Conclusion

This paper advances the theory and technology of molecular computing through three innovations:

1. An augmented matrix encoding strategy. It maps abstract neural network parameters onto tunable DNA biochemical reaction rates and performs multi‐parameter parallel computation through strand displacement cascade reactions.
2. A partial derivative computation scheme based on a synergistic membrane diffusion and division mechanism. It emulates partial differential terms with precisely designed reaction networks, filling a theoretical gap in spatial differential computation in molecular systems.
3. An adaptive DNA neural network architecture for solving biological PDEs. It verifies the feasibility of continuous mathematical modeling in biochemical systems.

In particular, the error feedback‐driven weight adjustment mechanism mimics biological homeostatic regulation, which suggests a new route to autonomous molecular controllers. The framework extends DNA computation from discrete logic to continuous differential mathematics. Its molecular parallelism and continuous computing capability could also support real‐time dynamic analysis of complex biological networks, for example simulating metabolic flux redistribution. This work provides new ideas for synthetic biology and opens a path toward molecular‐scale artificial intelligence systems with embedded physical intelligence.

6. Experimental Section

Chemical reaction networks (CRNs): Chemical reaction networks provide a framework for describing the behavior of chemical systems, including purely theoretical ones. They are typically modeled by differential equations derived from mass‐action kinetics, as illustrated by system (90):

| (90) |

The parameters θ and γ indicate the binding and degradation rates, and k denotes the catalytic rate. The differential equations of Equation (90) can be written as:

| (91) |

The corresponding stoichiometry matrix M records the net change of each species in each reaction and follows directly from system (90):

| (92) |
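Equations (90)–(92) are not reproduced in this text. The sketch below illustrates how a stoichiometry matrix M and mass‐action kinetics combine into dC/dt = M·v(C). It uses a small hypothetical network with one binding (θ), one degradation (γ) and one catalytic (k) reaction. The species are named after S_a, S_b and S_c, but the network is not system (90) itself, and all names are illustrative.

```python
# Hypothetical CRN (NOT system (90), whose equations are not reproduced here):
#   Sa + Sb -> Sc        binding,     rate theta
#   Sc      -> (none)    degradation, rate gamma
#   Sa      -> Sa + Sc   catalysis,   rate k
SPECIES = ["Sa", "Sb", "Sc"]
REACTIONS = [  # (reactant orders, product counts, rate name)
    ({"Sa": 1, "Sb": 1}, {"Sc": 1}, "theta"),
    ({"Sc": 1}, {}, "gamma"),
    ({"Sa": 1}, {"Sa": 1, "Sc": 1}, "k"),
]


def stoichiometry_matrix(species, reactions):
    """M[i][j] = net change of species i when reaction j fires once."""
    return [[prod.get(s, 0) - reac.get(s, 0) for reac, prod, _ in reactions]
            for s in species]


def mass_action_rhs(conc, rates, species=SPECIES, reactions=REACTIONS):
    """dC/dt = M @ v(C), where v_j = rate_j * prod over reactants of C_s ** order_s."""
    M = stoichiometry_matrix(species, reactions)
    v = []
    for reac, _, name in reactions:
        flux = rates[name]
        for s, order in reac.items():
            flux *= conc[s] ** order
        v.append(flux)
    return {s: sum(M[i][j] * v[j] for j in range(len(v)))
            for i, s in enumerate(species)}


if __name__ == "__main__":
    print(stoichiometry_matrix(SPECIES, REACTIONS))
    print(mass_action_rhs({"Sa": 2.0, "Sb": 3.0, "Sc": 1.0},
                          {"theta": 0.5, "gamma": 0.1, "k": 0.2}))
```

Building M once and multiplying it by the flux vector keeps the network topology separate from the kinetics. This is the same split that the stoichiometry matrix makes explicit.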

Signals in biochemical circuits are encoded as concentrations, which cannot be negative, so negative values cannot be represented directly. Dual‐rail encoding resolves this limitation by representing each signal r as the difference between two non‐negative species concentrations, r = r⁺ − r⁻. The kinetics are written so that this difference follows the intended dynamics, which allows signed signals to be represented in full.
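The dual‐rail idea can be checked numerically. The sketch assumes a generic annihilation reaction r⁺ + r⁻ → ∅ with mass‐action rate γ. It is only a stand‐in, because the paper's annihilation modules (Equations (76) and (80)) are not reproduced here. Each firing removes one molecule from each rail, so r = r⁺ − r⁻ stays fixed while the smaller rail decays toward zero. Forward Euler integration and the parameter values are illustrative, in arbitrary units.

```python
def dual_rail_annihilate(r_plus, r_minus, gamma=1.0, dt=1e-3, steps=20000):
    """Forward-Euler integration of r+ + r- -> 0 with mass-action rate gamma."""
    for _ in range(steps):
        flux = gamma * r_plus * r_minus * dt  # amount annihilated this step
        r_plus -= flux                         # both rails lose the same amount,
        r_minus -= flux                        # so r+ - r- is conserved
    return r_plus, r_minus


if __name__ == "__main__":
    p, m = dual_rail_annihilate(1.2, 0.5)
    print(f"r+ = {p:.6f}, r- = {m:.2e}, r = {p - m:.6f}")
```

Starting from r⁺ = 1.2 and r⁻ = 0.5, the encoded value stays at 0.7 while r⁻ is driven close to zero. Annihilation therefore keeps the two rails from growing without changing the represented signal.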

DNA strand displacement reaction (DSD): Chemical reactions are compiled into DNA circuits that realize the desired behavior. To meet the functional requirements, each species is implemented as a DNA strand. Reactions proceed when a toehold binds its complementary toehold domain.

For example, the DSD implementation of the reactions in Equation (90) is given in Equation (93).

| (93) |

The chemical species R_a and R_b are substrates, S_a and S_b are reactants, and S_c is the target product. As illustrated in Figure 22, the reaction mechanism combines multiple reactions involving single‐stranded and double‐stranded DNA species. Methods for implementing DNA strand displacement (DSD) in broader chemical reaction networks (CRNs), including degradation pathways, are detailed in Section 4.

Figure 22.

Schematic representation of DSD reactions of Equation (93).

Simulation Methods

All DSD designs were verified with the Visual DSD software. The overall research framework follows the technical roadmap in Figure 23.

Figure 23.

Technical roadmap for the entire research framework.

Mathematical derivation and verification

The partial differential equation to be solved is:

| (94) |

Assume the solution is a standard quadratic function:

| (95) |

where a, b, c > 0.

Partial derivatives of the solution function:

| (96) |

Let D_u = m_1, k_c = m_2, V′ = v_1, k_a = m_3, A′ = v_2, k_b = m_4, B′ = v_3 and k_d = m_5. Substituting Equation (96) into Equation (94) gives:

| (97) |

Rearranging terms gives:

| (98) |

Steady‐state condition analysis:

For x = 0.8, t = 0.5 to be a steady‐state solution, the partial differential equation must hold at that point. Substituting a = b into Equation (95) yields Equation (99).

| (99) |

Compute partial derivatives and other terms:

| (100) |

Substituting Equations (99) and (100) into Equation (94), the following results are obtained:

| (101) |

Parameter determination:

Constraints

Parameters a, b, c, m_1, m_2, m_3, m_4, m_5, v_1, v_2, v_3 > 0.

The equation admits a steady‐state solution at x = 0.8, t = 0.5.

Parameter Design

| (102) |

Substituting these parameters into Equation (95) gives:

| (103) |

Evaluating each term at x = 0.8, t = 0.5:

| (104) |

Substituting Equation (104) into Equation (94) to verify:

| (105) |

Equation (103) is confirmed to hold; therefore,

| (106) |

is the desired steady‐state solution.
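The constants in Table 4 can be plugged into a residual check at x = 0.8, t = 0.5. Equation (94) is not reproduced in this text, so the sketch rests on two assumptions:

- The PDE takes the textbook rate‑constant Brusselator form for the activator, ∂u/∂t = D_u ∂²u/∂x² + k_a A′ − k_b B′u + k_c u²V′ − k_d u, with the inhibitor held at V′.
- u is the quadratic x² + 2t² + 0.5xt inferred from Table 5.

The function name is hypothetical.

```python
def assumed_brusselator_residual(x=0.8, t=0.5, D_u=0.5, k_a=1.0, k_b=0.3,
                                 k_c=0.2, k_d=0.1, A=2.0, B=2.0, V=1.0):
    """Residual u_t - RHS for an ASSUMED rate-constant Brusselator form.

    RHS = D_u*u_xx + k_a*A - k_b*B*u + k_c*u**2*V - k_d*u, with the inhibitor
    held at V and u = x**2 + 2*t**2 + 0.5*x*t (inferred from Table 5).
    Default constants are the Table 4 values (A = A', B = B', V = V').
    """
    u = x ** 2 + 2 * t ** 2 + 0.5 * x * t
    u_t = 4 * t + 0.5 * x          # du/dt of the inferred quadratic
    u_xx = 2.0                     # d2u/dx2 of the inferred quadratic
    rhs = D_u * u_xx + k_a * A - k_b * B * u + k_c * u ** 2 * V - k_d * u
    return u_t - rhs


if __name__ == "__main__":
    print(f"residual = {assumed_brusselator_residual():.5f}")
```

Under these assumptions the residual is about −0.021. The derivation above states that the designed solution satisfies Equation (94). A nonzero value here may therefore reflect only a mismatch between this assumed form and the actual Equation (94), and it should not be read as a check of that derivation.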

Conflict of Interest

The authors declare no conflict of interest.

Author contributions

Y.X. and T.S. conceived the study. Y.X. designed the methodology and prepared the original draft. T.M., A.R.‐P. and T.S. provided conceptual guidance and wrote the manuscript. J.W., P.Z. and T.S. reviewed and edited the manuscript. T.S. supervised the study and acquired funding.

Acknowledgements

The authors gratefully acknowledge postdoctoral researcher Mathieu Hemery for help in understanding differential calculations based on membrane diffusion theory. This work was supported by the National Natural Science Foundation of China (Grant Nos. 62272479, 62372469, 62202498) and the Taishan Scholarship (tstp20240506).

Xiao Y., Rodríguez‐Patón A., Wang J., Zheng P., Ma T., and Song T., “Programmable DNA‐Based Molecular Neural Network Biocomputing Circuits for Solving Partial Differential Equations.” Adv. Sci. 12, no. 33 (2025): 12, e07060. 10.1002/advs.202507060

Contributor Information

Tongmao Ma, Email: tongmao.ma@upc.edu.cn.

Tao Song, Email: tsong@upc.edu.cn.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

- 1. Brunton S. L., Kutz J. N., Nat. Comput. Sci. 2024, 4, 483.

- 2. McGreivy N., Hakim A., Nat. Mach. Intell. 2024, 6, 1256.

- 3. Simpson M. J., Murphy R. J., Maclaren O. J., J. Theor. Biol. 2024, 580, 111732.

- 4. Fan D., Wang J., Wang E., Dong S., Adv. Sci. 2020, 7, 2001766.

- 5. Zhao H., Yan Y., Zhang L., Li X., Jia L., Ma L., Su X., Adv. Sci. 2025, e2416490.

- 6. Wang L., Li N., Cao M., Zhu Y., Xiong X., Li L., Zhu T., Pei H., Adv. Sci. 2024, 11, 2409880.

- 7. Srinivas N., Parkin J., Seelig G., Winfree E., Soloveichik D., Science 2017, 358, eaal2052.

- 8. Hemery M., Fages F., International Conference on Computational Methods in Systems Biology, Springer 2023, pp. 78–96.

- 9. Li J., Green A. A., Yan H., Fan C., Nat. Chem. 2017, 9, 1056.

- 10. Li Z., Zheng H., Kovachki N., Jin D., Chen H., Liu B., Azizzadenesheli K., Anandkumar A., ACM/JMS Journal of Data Science 2024, 1, 1.

- 11. Raissi M., Perdikaris P., Karniadakis G. E., J. Comput. Phys. 2019, 378, 686.

- 12. Zhang C., Wu R., Sun F., Lin Y., Liang Y., Teng J., Liu N., Ouyang Q., Qian L., Yan H., Nature 2024, 634, 824.

- 13. Lv H., Xie N., Li M., Dong M., Sun C., Zhang Q., Zhao L., Li J., Zuo X., Chen H., Wang F., Nature 2023, 622, 292.

- 14. Cherry K. M., Qian L., Nature 2018, 559, 370.

- 15. Zou C., Zhang Q., Zhou C., Cao W., Nanoscale 2022, 14, 6585.

- 16. Zou C., Wei X., Zhang Q., Zhou C., IEEE‐ACM Trans. Comput. Biol. Bioinform. 2020, 19, 1424.

- 17. Zou C., Phys. Chem. Chem. Phys. 2024, 26, 11854.

- 18. Xiong X., Zhu T., Zhu Y., Cao M., Xiao J., Li L., Wang F., Fan C., Pei H., Nat. Mach. Intell. 2022, 4, 625.

- 19. Chen Y.‐J., Dalchau N., Srinivas N., Phillips A., Cardelli L., Soloveichik D., Seelig G., Nat. Nanotechnol. 2013, 8, 755.

- 20. Shah S., Wee J., Song T., Ceze L., Strauss K., Chen Y.‐J., Reif J., J. Am. Chem. Soc. 2020, 142, 9587.

- 21. Sun J., Mao T., Wang Y., IEEE Trans. Nanobiosci. 2021, 21, 511.

- 22. Shang Z., Zhou C., Zhang Q., Curr. Issues Mol. Biol. 2022, 44, 1725.

- 23. Wang F., Lv H., Li Q., Li J., Zhang X., Shi J., Wang L., Fan C., Nat. Commun. 2020, 11, 121.

- 24. Yang S., Bögels B. W., Wang F., Xu C., Dou H., Mann S., Fan C., de Greef T. F., Nat. Rev. Chem. 2024, 8, 179.

- 25. Xiao Y., Lv H., Wang X., IEEE Trans. Nanobiosci. 2023, 22, 967.

- 26. Kieffer C., Genot A. J., Rondelez Y., Gines G., Adv. Biol. 2023, 7, 2200203.

- 27. Ezziane Z., Nanotechnology 2005, 17, R27.

- 28. Yordanov B., Kim J., Petersen R. L., Shudy A., Kulkarni V. V., Phillips A., ACS Synth. Biol. 2014, 3, 600.
