Abstract

Background

Quantifying workload for clinical trial staff represents an ongoing challenge for healthcare facilities conducting cancer clinical trials. We developed and evaluated a staffing model designed to meet this need.

Methods

To address individual protocol acuity, the model's algorithms include metrics that account for visit frequency and for the quantity and types of research-related procedures. Since its implementation in 2012, the model has been used to justify clinical research team resource needs and to establish metrics for leadership to reference when reviewing replacement positions. It proved particularly useful for justifying resources at the institutional level during the COVID-19 pandemic.

In recent years, we identified a gap between predicted and actual staff workload. This prompted a comprehensive review in 2021 of all aspects of scoring within the model, including a comparison with modern protocols to ensure that all types of protocol-related procedures and tests were accounted for.

Results

Further investigation identified increasing complexity of trial screening, which the initial model had not accounted for. Specifically, screening-related activities accounted for up to 25% of coordinator effort. We incorporated this work into the model and demonstrated a statistically significant increase in average protocol acuity (P = 0.002) after refining the scoring to include study-specific screening complexity.

Conclusion

Over the past decade, cancer clinical trial screening has increased in complexity and duration. Planning a cancer center's clinical trial workforce requires consideration of screening-related staff effort. For any effort model to be successful, ongoing examination and malleability are critical in this evolving landscape of clinical trials.

Keywords: Staffing, Effort reporting, Clinical trial, Oncology, Staff Retention

1. Introduction

Activating and conducting clinical trials is a central tenet of National Cancer Institute (NCI)-designated and other regional and national cancer centers. However, successful trial implementation and enrollment have become more challenging in recent years [1,2]. More detailed molecular characterization has divided cancers into ever smaller subtypes. Increasingly numerous and stringent eligibility criteria have led to longer and more complex screening periods, with fewer patients qualifying for trials, while inefficient trial design places more pressure on staff and participants [3–5]. Furthermore, keeping up with the complexity of clinical trials has become increasingly expensive and resource-intensive for cancer centers [6].

Given these considerations, quantifying workload for clinical trial staff is an important challenge for healthcare institutions to address. Colleagues in the field have sought a quantifiable way to measure effort for years. Various systems have been developed and tested to measure trial complexity and estimate staffing needs [7–10]. ASCO and the NCI have both developed online calculators for this purpose, although neither is still publicly available [8,11]. Additionally, the Research Effort Tracking Application (RETA), from the University of Michigan Comprehensive Cancer Center, and the Ontario Protocol Assessment Level (OPAL), from the Ontario Institute for Cancer Research, have been developed [9,10]. However, widespread uptake has been limited because of perceived variability in the roles of clinical trial professionals across institutions, compounded by consistent differences in effort between industry-sponsored and NCI-sponsored studies [12].

Ideally, methods to determine staffing needs should be both detailed enough to provide an accurate and reproducible estimate, and straightforward enough to promote uptake and usability. We developed what we believe to be an adaptable clinical trial staffing model designed to meet the varied needs of a contemporary cancer center. We have used the model to justify additional coordinator and data management support as well as team supplemental pay according to protocol complexity. Here we report an analysis of model implementation and subsequent refinement.

2. Methods

2.1. Staffing model development

In 2012, UT Southwestern Harold C. Simmons Comprehensive Cancer Center Clinical Research Office leadership, with input and testing from research team managers and coordinators, created a system for scoring clinical research protocols based on acuity. For staff who work directly with patients (e.g., clinical research coordinators and research nurses), the algorithm included metrics for study visit frequency and the quantity and types of research-related procedures; for data specialists, it included metrics for the frequency and anticipated complexity of data management activities. Activities fell into four post-enrollment categories: active patient management (during the treatment phase of study participation), active follow-up, long-term follow-up, and data management [13]. Users entered data and generated regular reports in a Microsoft Access database.

As part of study start-up, each trial is entered into the database prior to activation. Then, each month, study staff enter the number of patients they are managing on each study. Each study's score is then multiplied by the number of screened or active patients to calculate a current effort score per team member.
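The monthly calculation described above can be sketched in Python. This is a minimal illustration, not the database's implementation: the `Assignment` structure, the `effort_score` function, and the study scores and patient counts are hypothetical, and the sketch assumes that a team member's effort score is the sum of study score × patient count across all studies that person manages.

```python
from dataclasses import dataclass


@dataclass
class Assignment:
    """One study on a team member's monthly report (hypothetical structure)."""

    study_id: str
    study_score: float  # acuity score entered for the study at start-up
    patients: int  # screened or active patients the team member is managing


def effort_score(assignments: list[Assignment]) -> float:
    """Monthly effort score for one team member.

    Assumes each study's score is multiplied by its patient count and the
    products are summed across all of the team member's studies.
    """
    return sum(a.study_score * a.patients for a in assignments)


# Hypothetical month for one coordinator managing two studies
coordinator = [
    Assignment("STUDY-A", study_score=20.0, patients=4),
    Assignment("STUDY-B", study_score=25.0, patients=3),
]
print(effort_score(coordinator))  # 20*4 + 25*3 = 155.0
```

With these hypothetical values, the coordinator's 155 points would fall within the ideal staffing range in Table 1.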

Monthly reports generated from the database were (and still are) provided to clinical research managers and research office leadership for review. Through these reports, managers evaluate capacity across and between teams of clinical research coordinators, research nurses, and data managers. At the initial implementation of the database, we studied effort and output over six months to establish what we felt were appropriate benchmarks. Since then, the “Ideal Staffing Level” has been set at 150–180 points per full-time employee (FTE) per month (Table 1). This range is considered ideal for a fully trained full-time coordinator, research nurse, or data manager working at full scope. New employees in training are expected to carry fewer points.

Table 1.

Indicators of staffing level by points per Full-Time Employee (FTE). The point ranges listed help quantify the workload for each team member.

| Points per Team Member | Staffing Level | Definition |
|---|---|---|
| <150 points | Under capacity | Under capacity (<80 %). Consider adding study or patient assignments; opportunity to cross-train with other teams; may also indicate a team member in training. |
| ≥150–180 points | Ideal staffing level | At capacity (80–90 %), managing an expected workload with capacity to increase intermittently to 100 % as required for deadlines, new enrollments, etc. |
| >180 points | Over capacity | Working beyond capacity (>100 %). Monitor for 2–3 reporting periods; consider redistributing workload or adding team members. |
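The Table 1 thresholds can be expressed as a simple classification rule. The sketch below is illustrative only: the function names are hypothetical, and reading "monitor for 2–3 reporting periods" as a run of consecutive over-capacity months (three by default) is an assumption, not a documented rule of the model.

```python
def staffing_level(points_per_fte: float) -> str:
    """Map a team member's monthly points to the Table 1 staffing levels."""
    if points_per_fte < 150:
        return "Under capacity"
    if points_per_fte <= 180:  # Table 1: 150-180 points is the ideal range
        return "Ideal staffing level"
    return "Over capacity"  # more than 180 points


def sustained_over_capacity(monthly_points: list[float], periods: int = 3) -> bool:
    """True if each of the last `periods` monthly reports is over capacity.

    Assumption: "monitor for 2-3 reporting periods" is read as a run of
    consecutive over-capacity months before redistributing work.
    """
    recent = monthly_points[-periods:]
    return len(recent) == periods and all(
        staffing_level(p) == "Over capacity" for p in recent
    )


print(staffing_level(155))  # Ideal staffing level
print(sustained_over_capacity([170, 185, 192, 188]))  # True
```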

The primary function of the model is to justify coordinator and data management support. Establishing this model has allowed us to provide quantitative rationale to institutional leadership at a time when all new position requests are heavily scrutinized. The model has also been used to support supplemental pay for teams where the average protocol complexity score is significantly higher than that of other teams.

Finally, the staffing model allowed us to set clear guidelines for position replacement during the budget constraints of the COVID-19 pandemic. At that time, the institution recommended broad budget cuts by attrition. Given low enrollment at the start of the pandemic, and using the staffing model as a guide, the Clinical Research Office (CRO) Director set a department-wide point threshold per FTE below which replacement would not be justified and cross-coverage was recommended instead. Once enrollment restarted and the average points per FTE rose above the threshold, positions could be re-evaluated for replacement on a case-by-case basis.
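The department-level replacement rule can be sketched the same way. This is a hypothetical illustration: the function name, the assumption that each remaining team member is one FTE, and the 140-point threshold in the example are not from the model, because the threshold actually used is not reported here.

```python
from statistics import mean


def replacement_decision(remaining_scores: list[float], threshold: float) -> str:
    """Department-level check when a position is vacated (hypothetical sketch).

    remaining_scores: monthly effort scores of the remaining team members,
        each assumed to be one FTE.
    threshold: department point threshold per FTE set by leadership; the
        value actually used is not reported, so any number here is illustrative.
    """
    average_per_fte = mean(remaining_scores)
    if average_per_fte < threshold:
        return "Do not replace; recommend cross-coverage"
    return "Re-evaluate for replacement case by case"


# Illustrative threshold of 140 points per FTE (hypothetical)
print(replacement_decision([110, 125, 130], threshold=140))
# Do not replace; recommend cross-coverage
```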

2.2. Staffing model evaluation and revision

In 2021, we began to look at contemporary studies and their perceived increasing complexity and discussed making changes to the staffing model. We established a working group comprising clinical research office leadership, managers, and coordinators to review model performance. To determine the extent to which the model accurately captured trial workload and whether any key activities were missing from the model, the group reviewed (1) point values assigned to research-related activities in the model, and (2) protocol documents.

The review identified potential discrepancies between the existing model and the actual complexity of the current portfolio of oncology clinical trials, as well as opportunities to enhance the model's generalizability so that it could be adapted across departments and institutions. The scoring system was reorganized from the four original categories into seven (Table 2), adding pre-screening, screening, and enrollment activities. We re-evaluated the points for each procedure or activity according to the approximate time required to complete it.

Table 2.

Protocol Activity Categories. The staffing model was designed to capture each element of a patient's clinical trial experience, and the effort contributed by clinical research staff to make it happen.

| Category | Description |
|---|---|
| Pre-Screening | Elements that occur prior to consent – medical record review, visit planning, etc. |
| Screening | Consent and all screening procedures required to ensure eligibility. |
| Enrolled | Eligibility completion, central confirmations as needed, randomization as needed, enrollment, scheduling for treatment. |
| Active/On Treatment | Treatment-related items, visit frequency, procedures, etc. |
| Active/Follow-Up | Visit frequency and procedures. Only used if follow-up activities are protocol driven (not standard of care). |
| Long-Term Follow-Up | Activity required for long-term follow-up. |
| Data Management | Timelines for entry, average time for case report form (CRF) completion, monitoring and audit frequency, and patient visit frequency. |

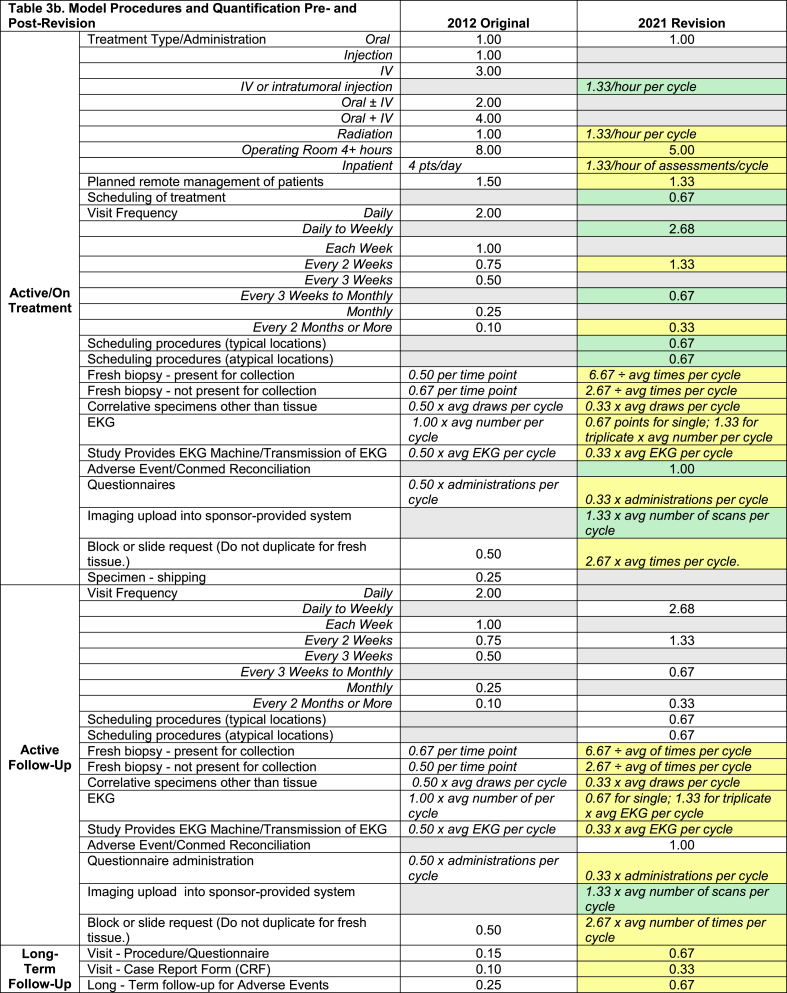

The working group reviewed all activities included in the previous version of the model to ensure a consistent methodology for those activities and for any new activities added on the basis of protocol reviews. This included, but was not limited to, assigning different point values to protocols requiring triplicate versus single electrocardiograms (EKGs) and to fresh biopsy requirements, depending on whether the research coordinator would need to be present for the procedure. For post-enrollment or on-treatment activities, scores for items such as correlative specimens and administration/collection of questionnaires were calculated per cycle, and the system included instructions on how to compute those numbers to ensure consistency (Table 3a–c). Our methodology was included with the informational documents provided to managers responsible for scoring all new protocols. We also included a summary FAQ document with tips on scoring studies with multiple arms. Once we were satisfied with our edits, we worked with our database analyst to implement these changes in a new, updated Access database.

Table 3.

(a–c). Staffing Model Quantification Pre- and Post-Revision. The staffing model scoring is presented in three parts: a. Screening, b. Coordinating Post-Enrollment, and c. Data Management. One point in the model is equivalent to 45 min of staff time. The gray cells in the points categories indicate “not present in 2012” or “deleted in 2021 version”. The yellow cells indicate revised scoring in 2021, and the green cells are complexity items added in the 2021 version.
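Because one point equals 45 minutes of staff time, per-cycle scores for on-treatment items can be derived from time estimates. The sketch below assumes that a cycle's score is the total staff time for its items converted to points; the item names and minute values in `ITEM_MINUTES` are hypothetical placeholders, not the values in Table 3.

```python
MINUTES_PER_POINT = 45  # Table 3 caption: one point = 45 min of staff time

# Hypothetical minutes per occurrence; the model's actual values are in Table 3.
ITEM_MINUTES = {
    "ekg_single": 15,
    "ekg_triplicate": 30,
    "correlative_specimen": 45,
    "questionnaire": 15,
}


def minutes_to_points(minutes: float) -> float:
    """Convert estimated staff time to model points."""
    return minutes / MINUTES_PER_POINT


def per_cycle_points(occurrences: dict[str, int]) -> float:
    """Points for one treatment cycle: total item time per cycle, converted to points."""
    total_minutes = sum(ITEM_MINUTES[item] * n for item, n in occurrences.items())
    return minutes_to_points(total_minutes)


cycle = {"ekg_triplicate": 1, "correlative_specimen": 2, "questionnaire": 1}
print(per_cycle_points(cycle))  # (30 + 2*45 + 15) / 45 = 3.0
```

Because points are proportional to time, summing minutes before converting gives the same result as summing per-item points, while avoiding floating-point rounding from repeated division.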

3. Results

After the update, we reviewed the average acuity scores of a sample of 78 active studies (spanning 2012–2021) that were transferred from the older version of the staffing model to the 2021 version. Under the old model, the average post-enrollment complexity score was 10.4 points. Screening was assigned a fixed 10 points regardless of trial complexity, making the working average complexity score on the old model 20.4 ± 9.4 points. When the same 78 studies were scored in the 2021 version, which incorporates study-specific screening scores, the overall average complexity score, including the new variable screening score, was 25.2 ± 10.3 points, a statistically significant difference by Student's t-test (P = 0.002) (Fig. 1).
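The old-model arithmetic and the comparison of means can be reproduced from the summary statistics reported above. This sketch assumes an independent two-sample Student's t-test with pooled variance; because the same 78 studies were scored under both versions, the original analysis may instead have been a paired test on per-study scores, which cannot be recomputed from means and standard deviations alone. The function name `pooled_t` is hypothetical.

```python
from math import sqrt

N = 78  # studies scored under both model versions

# Old model: mean post-enrollment score plus a fixed 10-point screening score
old_mean = 10.4 + 10.0
old_sd = 9.4
new_mean, new_sd = 25.2, 10.3  # 2021 model with study-specific screening


def pooled_t(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> tuple[float, int]:
    """Two-sample Student's t statistic (pooled variance) from summary statistics."""
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, n1 + n2 - 2


t, df = pooled_t(old_mean, old_sd, N, new_mean, new_sd, N)
print(f"t = {t:.2f}, df = {df}")  # t = 3.04, df = 154
```

Converting t to a two-sided P value requires the t distribution, for example `2 * scipy.stats.t.sf(t, df)` with SciPy, so the P value from this sketch need not match the reported one exactly.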

Fig. 1.

Comparison of average study complexity between old and new model (N = 78). Brackets indicate screening points for each model version.

The average score breakdown by category is also shown in Fig. 1, with screening in the 2021 version now divided into three sections: pre-screening, screening, and enrollment. The screening portion of the data (denoted by the brackets) shows that approximately 48.9 % of protocol acuity in the old model is attributable to screening activities, versus 55.3 % in the new model (P < 0.001).

4. Discussion

Given the growing complexity and costs of cancer clinical trials, we developed a staffing model to project needs for research coordinator and data management support. We designed the model to account for the variability of clinical trial complexity and to be adaptable across trial sponsors, phases, cancer types, and diverse clinical settings. Since that time, we have used the model to justify additional coordinator and data management support as well as team supplemental pay according to protocol complexity.

Despite perceived and recognized changes to trial procedures and overall complexity, we noted no significant change in protocol acuity as determined by the staffing model from 2014 to 2021. This was despite reports from study teams and published research and reviews showing that trials, particularly cancer trials, had increased in complexity over time [14]. In addition, we recognized that per-patient costs in our study budgets were increasing over time. We hypothesized that this disconnect may be due in part to the model's failure to account for the growing complexity of patient screening requirements for clinical trial enrollment, along with the increasing research staff time required for increasingly complex cancer agent delivery (e.g., cellular therapy trials).

The ability to measure the complexity of clinical trials provides an opportunity to staff appropriately and a way to assess the feasibility of conducting a trial given the capacity of a center's existing resources. As demand grows to address healthcare disparities, one approach is to expand access to clinical trials in geographic areas not previously served by them [15]. Such a tool could enable a community center to estimate the resources required to open clinical trials where they were previously unavailable or to expand its existing trial portfolio.

Our staffing database was designed to quantify the effort dedicated to direct patient care and data management activity per study. Other models include time spent on other tasks, such as staff meetings, sponsor communications, and protocol deviation reporting [9]. Our model is not intended to measure productivity; rather, it was developed as a predefined workload tool to help teams quantify the number of individuals needed to successfully conduct trials that vary in complexity both within and across teams. Additionally, our model captures the effort needed for patient coordination and data reporting but does not account for tasks performed before study activation, regulatory submissions (e.g., IND safety reports, processing of protocol amendments), or other quality assurance and administrative tasks associated with clinical trial management.

While we have confidence in this model for our operation, we recognize some limitations to its broad applicability. As a homegrown system, it is specific to how our team conducts and coordinates oncology clinical trials. That said, we hope that by establishing a mathematical equation driven by time spent per activity, other departments and perhaps other organizations may be able to replicate the model while making adjustments specific to their own workflows.

Any time multiple individuals enter information into a system, there will be some variability, especially when the scoring tool itself is designed with multiple adjustable variables. Accurate use of the model therefore depends on regular review of reports by managers, together with ongoing review and training to improve the consistency of data entry.

5. Conclusion

In an age when the accessibility of clinical trials has come under scrutiny, understanding staffing needs is critical to success. Investigators and research practices must have better ways to determine the level of staff involvement that new trials will require; our model not only provides this but can also help ensure that study budgets are both aligned and justifiable. Ongoing examination and malleability are critical to keeping pace with the evolving landscape of clinical trials and to capturing staff effort accurately. Study sponsors could also consider such models in trial design, particularly when broader representation in trial enrollment depends on a center's ability to conduct the trial successfully.

CRediT authorship contribution statement

Ellen Siglinsky: Writing – review & editing, Writing – original draft, Methodology, Investigation, Formal analysis. Hannah Phan: Writing – review & editing, Methodology, Data curation, Conceptualization. Silviya Meletath: Writing – review & editing, Conceptualization. Amber Neal: Writing – review & editing, Conceptualization. David E. Gerber: Writing – review & editing. Asal Rahimi: Writing – review & editing. Erin L. Williams: Writing – review & editing, Writing – original draft, Visualization, Supervision, Project administration, Conceptualization.

Funding

This work was supported by the Cancer Center Support Grant P30 CA142543.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Contributor Information

Ellen Siglinsky, Email: ellen.siglinsky@utsouthwestern.edu.

Hannah Phan, Email: hannah.phan@utsouthwestern.edu.

Silviya Meletath, Email: silviya.meletath@utsouthwestern.edu.

Amber Neal, Email: amber.neal@utsouthwestern.edu.

David E. Gerber, Email: david.gerber@utsouthwestern.edu.

Asal Rahimi, Email: asal.rahimi@utsouthwestern.edu.

Erin L. Williams, Email: erin.williams@utsouthwestern.edu.

References

- 1. Pennell N.A., Szczepanek C., Spigel D., Ramalingam S.S. Impact of workforce challenges and funds flow on cancer clinical trial development and conduct. Am. Soc. Clin. Oncol. Educ. Book. 2022;42:1–10. doi: 10.1200/EDBK_360253. PMID: 35439037.

- 2. Getz K.A., Wenger J., Campo R.A., Seguine E.S., Kaitin K.I. Assessing the impact of protocol design changes on clinical trial performance. Am. J. Therapeut. 2008;15(5):450–457. doi: 10.1097/MJT.0b013e31816b9027. PMID: 18806521.

- 3. Jin S., Pazdur R., Sridhara R. Re-evaluating eligibility criteria for oncology clinical trials: analysis of investigational new drug applications in 2015. J. Clin. Oncol. 2017;35(33):3745–3752. doi: 10.1200/JCO.2017.73.4186. PMID: 28968168; PMCID: PMC5692723.

- 4. Garcia S., Saltarski J.M., Yan J., Xie X., Gerber D.E. Time and effort required for tissue acquisition and submission in lung cancer clinical trials. Clin. Lung Cancer. 2017;18(6):626–630. doi: 10.1016/j.cllc.2017.04.012.

- 5. Morton C., Sullivan R., Sarker D., Posner J., Spicer J. Revitalising cancer trials post-pandemic: time for reform. Br. J. Cancer. 2023;128(8):1409–1414. doi: 10.1038/s41416-023-02224-y. PMID: 36959378; PMCID: PMC10035974.

- 6. Lee C., et al. Clinical trial metrics: the complexity of conducting clinical trials in north American cancer centers. JCO Oncol. Pract. 2021;17:e77–e93. doi: 10.1200/OP.20.00501.

- 7. Good M.J., Lubejko B., Humphries K., Medders A. Measuring clinical trial-associated workload in a community clinical oncology program. J. Oncol. Pract. 2013;9(4):211–215. doi: 10.1200/JOP.2012.000797. PMID: 23942924; PMCID: PMC3710172.

- 8. Good M.J., Hurley P., Woo K.M., Szczepanek C., Stewart T., Robert N., Lyss A., Gönen M., Lilenbaum R. Assessing clinical trial-associated workload in community-based research programs using the ASCO clinical trial workload assessment tool. J. Oncol. Pract. 2016;12(5):e536–e547. doi: 10.1200/JOP.2015.008920. PMID: 27006354; PMCID: PMC5702801.

- 9. James P., Bebee P., Beekman L., Browning D., Innes M., Kain J., Royce-Westcott T., Waldinger M. Creating an effort tracking tool to improve therapeutic cancer clinical trials workload management and budgeting. J. Natl. Compr. Cancer Netw. 2011;9(11):1228–1233. doi: 10.6004/jnccn.2011.0103. PMID: 22056655.

- 10. Smuck B., Bettello P., Berghout K., Hanna T., Kowaleski B., Phippard L., Au D., Friel K. Ontario protocol assessment level: clinical trial complexity rating tool for workload planning in oncology clinical trials. J. Oncol. Pract. 2011;7(2):80–84. doi: 10.1200/JOP.2010.000051. PMID: 21731513; PMCID: PMC3051866.

- 11. Roche K., Paul N., Smuck B., Whitehead M., Zee B., Pater J., Hiatt M.A., Walker H. Factors affecting workload of cancer clinical trials: results of a multicenter study of the National Cancer Institute of Canada Clinical Trials Group. J. Clin. Oncol. 2002;20(2):545–556. doi: 10.1200/JCO.2002.20.2.545. PMID: 11786585.

- 12. Fabbri F., Gentili G., Serra P., Vertogen B., Andreis D., Dall'Agata M., Fabbri G., Gallà V., Massa I., Montanari E., Monti M., Pagan F., Piancastelli A., Ragazzini A., Rudnas B., Testoni S., Valmorri L., Zingaretti C., Zumaglini F., Nanni O. How many cancer clinical trials can a clinical research coordinator manage? The clinical research coordinator workload assessment tool. JCO Oncol. Pract. 2021;17(1):e68–e76. doi: 10.1200/JOP.19.00386. PMID: 32936710.

- 13. Williams E.L., Schiller J.H., Bolluyt J.D., Gerber D.E. Development and use of a staffing model for clinical research teams at an NCI Comprehensive Cancer Center. J. Clin. Oncol. 2016;34(15 suppl). doi: 10.1200/JCO.2016.34.15_suppl.e18267.

- 14. Garcia S., Bisen A., Yan J., Xie X.J., Ramalingam S., Schiller J.H., Johnson D.H., Gerber D.E. Thoracic oncology clinical trial eligibility criteria and requirements continue to increase in number and complexity. J. Thorac. Oncol. 2017;12(10):1489–1495. doi: 10.1016/j.jtho.2017.07.020. PMID: 28802905; PMCID: PMC5610621.

- 15. Regnante J.M., Richie N.A., Fashoyin-Aje L., Vichnin M., Ford M., Roy U.B., Turner K., Hall L.L., Gonzalez E., Esnaola N., Clark L.T., Adams H.C., Alese O.B., Gogineni K., McNeill L., Petereit D., Sargeant I., Dang J., Obasaju C., Highsmith Q., Lee S.C., Hoover S.C., Williams E.L., Chen M.S. US cancer centers of excellence strategies for increased inclusion of racial and ethnic minorities in clinical trials. J. Oncol. Pract. 2019;15(4):e289–e299. doi: 10.1200/JOP.18.00638. PMID: 30830833.