Abstract

Objective

Diagnosis and treatment of progressive eye diseases require effective monitoring of visual structure and function. However, current methods for measuring visual function are limited in accuracy, reliability, and usability. We propose a novel approach that leverages virtual reality (VR) technology to overcome these challenges, enabling more comprehensive measurements of visual function endpoints.

Methods

We developed VisualR, a low-cost VR-based application that consists of a smartphone app and a simple VR headset. The virtual environment allows precise control of visual stimuli, including the field of view, separation of visual input to the left and right eyes, control of visual angles and blocking of background visual noise. We developed novel tests for metamorphopsia, contrast sensitivity and reading speed that can be performed by following simple instructions and providing verbal responses to visual cues, without expert supervision. The smartphone app operates offline and processes all data locally.

Results

We conducted empirical evaluations, including measurements of screen luminance, and performed simulations of psychometric functions to test the feasibility of building a visual function test device using widely available, inexpensive consumer-grade hardware. Our findings demonstrate the technical viability of this approach, and its potential to measure visual function endpoints during clinical development of new treatments or to support disease diagnosis and monitoring. We open-sourced the VisualR application code and provide guidelines for creating reliable and user-friendly VR-based tests.

Conclusion

Our results suggest that VR technology can open a new paradigm in visual function testing, and we invite the wider community to build upon our work.

Author summary

Visual function describes how well the eyes can see and process basic visual information. Testing visual function is important for diagnosing and treating eye diseases that can affect vision, but current methods for measuring it are often unreliable or too complex to be performed by users without professional assistance. We propose a novel approach that uses VR technology to enable affordable and user-friendly assessments of visual function. We developed VisualR, a VR-based application that runs on a smartphone together with a simple VR headset. VisualR enables users to perform various vision function tests by following simple instructions and providing verbal responses to the visual stimuli displayed on the screen. As VisualR is smartphone-based, it eliminates the need for costly or specialized equipment. Our work demonstrates the technical feasibility and potential of creating affordable, accessible VR-based visual test devices. We open-sourced the code of VisualR and invite the broader community to build upon this work.

Keywords: Virtual reality < general, self-monitoring < personalised medicine, outcomes < studies, decentralized clinical trials < general, apps < personalised medicine

Introduction

Age-related eye diseases are leading causes of blindness and visual impairment worldwide. Age-related macular degeneration (AMD) alone affects about 200 million people, 1 while tens of millions of people suffer from diabetic retinopathy, cataracts and glaucoma. Due to demographic trends, the number of cases is expected to increase substantially in the coming decades.2–4

The diagnosis and treatment of degenerative eye diseases depends on effective monitoring of eye structure and function. While novel methods to image eye structure have revolutionized ophthalmology in the last two decades, 5 methods for assessing visual function are still limited in their sensitivity, reliability, and usability. This poses significant limitations to clinical practice and research.6,7

The most commonly used measure of visual function is best corrected visual acuity (BCVA). 8 However, there is overwhelming evidence that other visual function markers, such as contrast sensitivity (CS), perimetry, low luminance visual acuity, dark adaptation or metamorphopsia, could provide a more accurate and comprehensive assessment of degenerative eye diseases.9–12 These markers are especially important at early stages when BCVA is not sensitive enough to detect any changes.6–8 A more widespread use of these visual function endpoints could significantly improve the timing of diagnosis and allow a better monitoring of disease evolution, ultimately improving treatment efficacy and decreasing the number of cases that progress to irreversible vision loss.8,13 In addition, reliable measurements of visual function endpoints could be also used during clinical development, to support and accelerate the testing of new treatments.

Measuring visual function poses multiple challenges. Visual function tests typically require specialized equipment and the assistance of trained personnel, are often lengthy, and require strict visual environmental conditions. Additionally, the subjectivity inherent to testing visual function makes these tests prone to bias and variability due to individual differences in perception, cognition, motivation, or mood. These challenges are compounded when assessing multidimensional functional vision endpoints such as reading speed. 14 Altogether, these limitations make it difficult to implement these tests in clinical settings or to use them as endpoints in clinical trials.6,8 In recent years, there have been many attempts to develop novel tests for visual function using computer displays, tablets or smartphones. 15 These offer some advantages in terms of usability and accessibility, and often allow users to perform self-testing at home. However, it is extremely challenging to have appropriate control over the test conditions (e.g. room lighting and testing distance), and these digital tests have so far failed to gain widespread acceptance.

Virtual reality (VR) technology has the potential to overcome many of the limitations of current visual function tests. VR creates an immersive and interactive environment that allows precise control of the visual stimuli presented to the user, while capturing their responses through intuitive input commands such as voice or physical movements. VR has been effectively utilised as a tool for clinical training in ophthalmology, particularly in surgical training.16,17 Additionally, there have been significant efforts to employ VR in vision therapy and rehabilitation,18,19 as well as to support the diagnosis of various diseases. This includes applications such as perimetry measurements for glaucoma, and the diagnosis of amblyopia and binocular vision disorders.17,20,21 However, several challenges remain. Measuring functional endpoints such as visual acuity has yielded less positive results with limitations in resolution and display quality of smartphone and VR displays,22,23 hindering the development of accurate and reliable tests. Moreover, the high capital costs associated with VR technology and the difficulties in long-term support have been significant barriers to its broader adoption in ophthalmology. 17

To overcome these difficulties, we propose a new approach for measuring visual function using smartphone-based VR. Smartphone-based VR leverages widely available consumer-grade hardware, making it a more scalable solution than high-end displays such as Meta Quest, HTC Vive or the Apple Vision Pro. While the high-resolution displays and advanced tracking capabilities of these headsets are beneficial for certain applications, they also come with several limitations when considering their use in real-world settings. Firstly, not only are initial acquisition costs high, but high-end VR headsets can also rapidly become obsolete or discontinued, resulting in frequent and complex upgrades. In contrast, the lower cost of smartphone-based VR makes it a more feasible option for long-term and widespread adoption. Additionally, smartphone-based VR systems are generally easy to set up and use, requiring minimal technical expertise. The simplicity of these systems makes them accessible to a broader range of users, including those with limited technical skills. Another advantage of smartphone-based VR is its scalability. With the proliferation of smartphones globally, the potential reach of a smartphone-based VR device is vast. This widespread availability means that visual function testing can be conducted in a variety of settings, without the need for specialized equipment.

In this article, we demonstrate the technical feasibility of conducting a range of visual function tests using a low-cost headset and consumer-grade hardware. We developed VisualR, a smartphone app that is compatible with affordable and easily available consumer headsets, and designed novel tests for metamorphopsia, CS, and reading speed. Our aim was to measure visual function endpoints in a robust, engaging and user-friendly manner, while ensuring scalability and independence from specific VR hardware.

Methods

This was a non-clinical, technical feasibility study focused on the development and evaluation of a VR-based application for visual function testing. The study involved software development, hardware testing, and simulation-based validation. No human participants, data, or tissue were involved. All work was conducted at BI X (Germany) and the Fraunhofer Institute for Reliability and Microintegration IZM (Germany), over the period 2022–2025.

VisualR consists of a smartphone app that is used together with a simple VR headset. It is currently optimized for iOS phones, particularly the iPhone 13. Navigation in the app before entering VR mode is based on standard touch interaction with user interface (UI) elements (Figure 1(a)). Before starting a test, users insert the smartphone into a compatible VR headset (Figure 1(b)) and wear the headset until the test ends. During the visual tests in VR mode (Figure 1(c)), instructions are shown to the user and a corresponding voice-over is played. Users interact with the app using speech commands that are transcribed with the offline speech recognition system Vosk. 24 All processing and storage are performed locally on the device; therefore, no internet access is required.

Figure 1.

VisualR concept. (a) The app navigation is based on standard touch interaction with UI elements. (b) The smartphone is inserted in a VR headset. (c) The user wears the VR headset with the inserted smartphone, and the content is displayed in VR mode.

UI: user interface; VR: virtual reality.

The app is developed using a combination of Flutter 25 and Unity. 26 Flutter is used for the rendering of the UI elements and the navigation through them, as well as for the logic of the visual tests and the storage of the tests’ results. Flutter was chosen for its ability to create cross-platform applications with a single codebase, efficiently handling UI rendering, navigation, logic, and storage.

The rendering of all the visual test elements in VR mode is based on Unity. Unity was selected for its advanced 3D graphics and VR support. Combining Flutter and Unity leverages their strengths in UI development and VR rendering.

For details on the architecture and implementation, see Supplemental Appendix A. We used a Destek V5 VR as the VR headset. The source code for VisualR is available at https://github.com/BIX-Digital/VisualR. An archived version of the source code referenced in the article is available at https://doi.org/10.5281/zenodo.10053869.

Results

VR offers many potential benefits for measuring visual function endpoints, but it also faces some challenges such as high cost, low familiarity, and rapid outdating of VR devices. We aimed to develop a scalable and device-independent solution using smartphone-based VR technology, which is widely accessible and compatible with various VR headsets. We designed novel tests for metamorphopsia, CS and reading speed that leveraged VR and smartphone features, are easy to use, and directly relate to standard visual function tests used in clinical development. We assessed the feasibility of running VisualR on a standard smartphone (iPhone 13), including measuring display luminance, ensuring correct visual angles and an adequate computational performance.

Interpupillary distance (IPD) adjustment

IPD, the distance between the centres of the pupils of the two eyes, is an important parameter that should be taken into consideration when using stereoscopic devices. 27 VR headsets often allow users to adjust the distance between lenses to optimize stereoscopic image quality. However, this setting is potentially challenging and error-prone for many users, could be inadvertently changed between sessions (thereby introducing a bias in longitudinal studies), and may not even be available in simpler headsets.

Therefore, we designed a simple IPD adjustment test that is entirely software-based. The key principle is to identify the distance between left-eye and right-eye content that produces the clearest and most comfortable image. A sequence of images is shown in Figure 2: each image is formed using two copies of the same picture, one shown to the left eye and the other shown to the right eye. The distance between the two copies is varied, and the resulting image is perceived differently by the user, depending on their IPD. Nine different distances corresponding to IPDs in the range 50–75 mm are tested using three sets of three images each: for each set, the user should choose the sharpest image. Finally, a fourth set of three images is shown using the distances corresponding to the answers from the previous sets; the estimated IPD is then calculated as the average of the answers to the four sets. The IPD adjustment is performed only once, when the user uses the app for the first time, and requires ∼1–2 minutes. This IPD estimate is stored and applied when rendering content in all visual tests.
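The selection logic described above can be sketched as follows. This is a minimal illustration, not the VisualR implementation: the even spacing of the nine candidate distances, their interleaved split into three sets, and the names `candidate_ipds` and `estimate_ipd` are all assumptions made for the example.

```python
from statistics import mean
from typing import Callable, List, Sequence


def candidate_ipds(lo: float = 50.0, hi: float = 75.0, n: int = 9) -> List[float]:
    """Return n evenly spaced IPD candidates in mm (even spacing is an assumption)."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def estimate_ipd(choose_sharpest: Callable[[Sequence[float]], float]) -> float:
    """Estimate the IPD from four user choices.

    choose_sharpest stands in for the user: given the IPD values behind the
    three images of one set, it returns the value of the sharpest image.
    """
    candidates = candidate_ipds()
    # Assumption: the nine candidates are interleaved so that each set spans the range.
    sets = [candidates[i::3] for i in range(3)]
    answers = [choose_sharpest(s) for s in sets]
    # Fourth set: the three images chosen in the previous sets.
    answers.append(choose_sharpest(sorted(answers)))
    # Final estimate: average of the answers to the four sets.
    return mean(answers)


# Simulated user whose true IPD is 63 mm and who always picks the closest candidate.
simulated_user = lambda options: min(options, key=lambda d: abs(d - 63.0))
estimated = estimate_ipd(simulated_user)
```

With this simulated user, the four answers are 59.375, 62.5, 65.625 and 62.5 mm, so the estimate is 62.5 mm.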

Figure 2.

Interpupillary distance (IPD) adjustment. Three sequential screens with a set of image pairs with varying distances along the horizontal axis. Depending on their specific IPD (depicted at right), users will perceive one of the three pairs as clearer than the others.

Metamorphopsia test

Metamorphopsia is a form of distorted visual perception in which linear objects are incorrectly perceived as curved or discontinuous, and is a common symptom associated with age-related macular degeneration and epiretinal membrane. 12 The simplest, and by far most common, test of metamorphopsia is the Amsler grid, 28 which qualitatively assesses the presence of metamorphopsia in a field of view (FOV) of ± 10°.

One method that allows a quantification of metamorphopsia is the M-CHARTS. 29 The method is based on showing a single, straight, horizontal or vertical line centred in the FOV. The user should say if they perceive the line as straight or distorted. In case the line is perceived as distorted, the same line is shown again, but rendered using dots with increasing spacing (19 types of lines, with dot spacings corresponding to visual angles of 0.2°–2°). Figure 3(a) shows how the perception of distortion should disappear with increasing dot spacing: low metamorphopsia levels cause the appearance of distortion in lines made up of dense dots, but not in lines made up of sparse dots, and strong metamorphopsia levels cause the appearance of distortion also in sparsely spaced dots. The level of metamorphopsia is calculated as the smallest dot spacing for which the user did not perceive a distortion and is measured in degrees, corresponding to the visual angle between the dots.
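The M-CHARTS scoring rule described above can be illustrated with a short sketch. The 0.1° step between the 19 dot spacings, the function name `mcharts_score`, and the `None` return value for a user who still sees distortion at the largest spacing are assumptions of this example.

```python
from typing import Callable, Optional

# 19 dot spacings from 0.2 to 2.0 degrees (uniform 0.1 degree steps are assumed).
DOT_SPACINGS_DEG = [round(0.2 + 0.1 * i, 1) for i in range(19)]


def mcharts_score(perceives_distortion: Callable[[float], bool]) -> Optional[float]:
    """Return the smallest dot spacing (degrees) at which the line looks straight.

    perceives_distortion(spacing) stands in for the user's answer; a spacing
    of 0.0 denotes the solid line shown first.
    """
    if not perceives_distortion(0.0):
        return 0.0  # solid line seen as straight: no metamorphopsia measured
    for spacing in DOT_SPACINGS_DEG:
        if not perceives_distortion(spacing):
            return spacing
    return None  # distortion persists beyond the tested range (assumed handling)


# Simulated user who sees distortion for any spacing below 0.75 degrees.
score = mcharts_score(lambda spacing: spacing < 0.75)
```

For this simulated user the score is 0.8°, the first tested spacing at or above 0.75°.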

Figure 3.

Metamorphopsia test. (a) The key principle behind the VisualR metamorphopsia test (based on the M-CHARTS test) is that metamorphopsia can be quantified using dotted lines with varying distances between the dots. The diagram simulates how three users with varying degrees of metamorphopsia perceive a straight line. Healthy users always correctly perceive the three lines (dense dots to sparse dots) as straight. For a user with weak metamorphopsia, the dense dotted lines are perceived as distorted, but that perception gradually disappears as the distance between the dots is increased. However, for users with strong metamorphopsia, even lines composed of sparse dots are perceived as distorted. The distance between the dots (expressed as a visual angle) can be used as a proxy for the level of metamorphopsia. (b) Overview of the field of view of ± 6° (full circle) and the coverage with lines (in blue). There are nine horizontal (left panel) and nine vertical (right panel) lines for each eye. The dashed green lines indicate bins, namely groups of three adjacent lines. (c) Temporal sequence of the app content for each line tested (zoomed in): after the initial target with a pulsing centre is shown to the user, a test line is shown for 250 ms, and then the user is asked if the line was straight. The target and the final text are shown to both eyes, but the test line is displayed only to one eye at the time.

The idea behind the VisualR metamorphopsia test is to combine the spatial aspects of the Amsler grid and the quantification capabilities of the M-CHARTS. It follows the fundamental logic of the M-CHARTS but extends the method to a larger FOV of ± 6°. To cover this FOV, lines in different regions have been defined as in Figure 3(b): there are nine horizontal and nine vertical lines of different lengths that cover the FOV of each eye, left and right (for a total of 36 lines). The number of lines chosen is a compromise between spanning the full FOV and avoiding too many lines to be tested, which would result in a longer test. Visible in Figure 3(b) are also different bins, namely groups of three adjacent lines.

During the test, the level of metamorphopsia is quantified for each line, yielding an independent score per line. To simplify the scoring system, four summary scores are also built, one for each combination of line orientation (horizontal or vertical) and eye. First, a score for each spatial bin is computed as the maximum score among the three lines in that bin. Then, each summary score is computed as the average of the three corresponding bin scores.
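As a worked example of this aggregation, the sketch below computes one summary score from nine per-line scores. The function name `summary_score` and the assumption that the nine scores are ordered by position, so that consecutive triplets form the bins, are illustrative.

```python
from statistics import mean
from typing import Sequence


def summary_score(line_scores_deg: Sequence[float]) -> float:
    """Summary score for one eye and one line orientation.

    line_scores_deg holds the nine per-line scores in degrees, ordered by
    position so that consecutive triplets form the three bins (assumed layout).
    """
    if len(line_scores_deg) != 9:
        raise ValueError("expected nine line scores")
    bins = [line_scores_deg[i:i + 3] for i in range(0, 9, 3)]
    bin_scores = [max(b) for b in bins]  # bin score: maximum of its three lines
    return mean(bin_scores)              # summary score: average of the bin scores


# Example: distortion in the central and one peripheral bin.
example = summary_score([0.0, 0.0, 0.0, 0.3, 0.5, 0.0, 0.0, 0.0, 0.4])
```

Here the bin scores are 0.0, 0.5 and 0.4, giving a summary score of 0.3°.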

The two eyes are tested during the same test: the lines are shown alternating randomly between the left and the right part of the phone display, so that the user does not know whether a line is shown to the left or to the right eye. Just before a test line is shown, a target with a pulsing centre is displayed to help the user focus on the desired location (Figure 3(c)). The test lines are shown only for a short interval of 250 ms to reduce the chance of saccades and to ensure that the lines are perceived at the correct location in the field of view. After the line is shown, the user is asked whether the line was straight or not.

The test consists of two parts, and the logic is shown in Figure 4. In the first part, each of the 36 lines is shown to the user in a random sequence, and the user should say whether the line is distorted or not. The user has 5 seconds to answer. If no answer is given, the line is treated as not distorted. In addition to the 36 lines, four artificially distorted lines are also shown during the sequence, to add variety to the test and keep the user attentive. The answers given for these lines are not considered in the test scoring.

Figure 4.

Metamorphopsia test flowchart. The metamorphopsia test has two parts: in the first part, all the available lines are tested. The second part of the test starts only in case the user perceived some lines as distorted in the first part, and ends when the level of distortion of each line is quantified. A filter mechanism to reduce the lines tested in the second part is used in case the user has extended metamorphopsia.

If the user does not perceive any straight line as distorted, the test finishes and the corresponding scores are set to zero. As shown in the test logic in Figure 4, if the user did perceive one or more straight lines as distorted, the test continues after a short break, with the purpose of quantifying the level of metamorphopsia corresponding to each line perceived as distorted in the first part. To keep the test short, a maximum of 12 lines is followed up in the second part of the test: the bins described in Figure 3(b) are used to select the relevant lines. Following the conceptual approach of the M-CHARTS test, different versions of the same line using different dot spacings are displayed to the user. However, unlike in the M-CHARTS (which uses a fixed set of dot spacings), an adaptive sequence based on two independent binary searches is created depending on the user input. The average of the results of the two binary searches is the final score for each line.
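The adaptive part of the test can be sketched as a binary search over dot spacings that is run twice and averaged. This is an illustration under stated assumptions: the grid of spacings (0.2°–2.0° in 0.1° steps), the fallback to the largest spacing when no line is reported as straight, and the function names are hypothetical, and how the two searches differ in the app is not specified here.

```python
from statistics import mean
from typing import Callable, List

# Assumed grid of dot spacings in degrees (0.2 to 2.0 in 0.1 steps).
SPACINGS_DEG: List[float] = [round(0.2 + 0.1 * i, 1) for i in range(19)]


def binary_search_spacing(is_straight: Callable[[float], bool]) -> float:
    """Find the smallest spacing reported as straight with a binary search.

    Assumes answers are roughly monotonic: lines look straighter as the dot
    spacing grows. Falls back to the largest spacing if none looks straight.
    """
    lo, hi = 0, len(SPACINGS_DEG) - 1
    result = SPACINGS_DEG[-1]
    while lo <= hi:
        mid = (lo + hi) // 2
        if is_straight(SPACINGS_DEG[mid]):
            result = SPACINGS_DEG[mid]
            hi = mid - 1  # try denser dots
        else:
            lo = mid + 1  # need sparser dots
    return result


def line_score(is_straight: Callable[[float], bool]) -> float:
    """Final score for one line: average of two independent searches."""
    return mean(binary_search_spacing(is_straight) for _ in range(2))


# Simulated user who reports lines as straight from a spacing of 0.75 degrees upwards.
score = line_score(lambda spacing: spacing >= 0.75)
```

Each search needs at most five presentations to cover the 19 spacings, compared with up to 19 for a fixed ascending sequence, which is one plausible reason for preferring an adaptive scheme.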

The length of this test varies considerably depending on the extent of the visual field in which the user observes distortions. All users go through the first part of the test, which lasts a few minutes (maximum 4 minutes, average of 2–3 minutes). The length of the second part of the test depends on the number of lines that were perceived as distorted: the worst-case scenario lasts 12 minutes (in this situation, there are two short pauses, during which the user keeps the headset on).

CS test

CS is a measure of the ability to discern between different luminance levels in a static image. As in the Pelli-Robson test, 30 the aim of the VisualR test is to measure the CS of the user's eyes at a specific spatial frequency. CS is expressed in log units (logCS). The VisualR app contains two independent CS test versions, using, respectively, light patterns on a dark grey background or dark patterns on a light grey background. The patterns chosen are rings based on the proportions of the Landolt optotypes, 31 defined to match a spatial frequency of 1.67 cycles/degree (Figure 5(a) and (b)).

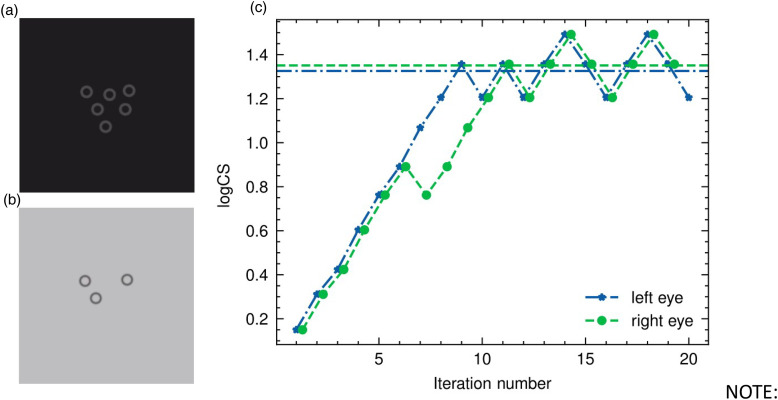

Figure 5.

Contrast sensitivity test. Example of rings displayed to a single eye during the contrast sensitivity test, respectively for the version with dark grey background (a) and light grey background (b). The pictures are a zoom of the full app screenshot. (c) Staircase from left (blue stars) and right eye (green full circles) from one typical test. The contrast sensitivity level (y-axis) is varied at each iteration (x-axis). The staircase for the right eye is slightly moved along the x-axis to facilitate the view. In each staircase, there are eight ‘ups’ and ‘downs’ (reversals). The last six reversals are averaged to evaluate the final score, indicated by the corresponding horizontal lines in the plot. The staircase for the right eye shows an example of a wrong answer in the sixth iteration, which in this case does not affect the result since the score is evaluated only on the last six reversals.

Both test versions follow the same logic. The two eyes are tested during the same test: the images are shown alternating randomly between the left and the right part of the phone display, so that the user does not know whether an image is shown to the left or to the right eye.

The colour of the background is fixed throughout the test, while that of the rings varies to produce the different contrast levels with respect to the background. Following the approach and definitions of Hwang and Peli, 32 we evaluate the contrast levels using the standard Weber definition in the case of dark rings on a light grey background, and the inverse Weber definition in the case of light grey rings on a dark grey background. We will refer to these two cases as negative polarity (NP) and positive polarity (PP), respectively. Both contrast definitions can be summarized by the formula $C = (L_{\max} - L_{\min}) / L_{\max}$, where $L$ is the luminance, and the subscripts max and min refer to the higher and lower luminance between background and rings (i.e. opposite in the two versions of the test). The main difference between the two cases is that for the light background the denominator of the formula stays constant, while for the dark background the denominator changes for each contrast level. Moreover, the values of the denominator are larger in absolute terms for the light background, implying that it is easier to achieve small values of contrast than in the case of the dark background.
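To make the two polarities concrete, the sketch below computes the contrast and the corresponding logCS from a pair of luminances. It reads the description above as contrast = (L_max − L_min) / L_max, which is an interpretation consistent with the stated behaviour of the denominator, and uses the conventional relation logCS = −log10(contrast). The function names and the luminance values are illustrative only.

```python
import math


def contrast(l_ring: float, l_background: float) -> float:
    """Contrast between rings and background, with luminances in cd/m^2.

    Covers both polarities: the denominator is the background luminance for
    dark rings on a light background (NP) and the ring luminance for light
    rings on a dark background (PP).
    """
    l_max, l_min = max(l_ring, l_background), min(l_ring, l_background)
    if l_max <= 0:
        raise ValueError("luminances must be positive")
    return (l_max - l_min) / l_max


def log_cs(l_ring: float, l_background: float) -> float:
    """Contrast sensitivity in log units for the given luminances."""
    c = contrast(l_ring, l_background)
    if c == 0:
        raise ValueError("zero contrast has no finite logCS")
    return -math.log10(c)


# Negative polarity: dark rings (90) on a light background (100), made-up values.
np_log_cs = log_cs(l_ring=90.0, l_background=100.0)
# Positive polarity: light rings (100) on a dark background (90), made-up values.
pp_log_cs = log_cs(l_ring=100.0, l_background=90.0)
```

Both examples give a contrast of 0.1 and therefore a logCS of 1.0; in the PP case the denominator is the ring luminance, which is why it changes from one contrast level to the next.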

All the grey values are defined in the app using RGB triplets. We decided to use the same contrast levels as in the Pelli-Robson test to allow an easier clinical comparison. To achieve the demanding lower levels of contrast, we used the bit-stealing technique 33 following the approach of Hwang and Peli. 32 We performed detailed measurements of the display luminance in order to obtain absolute values. The blue circles in Figure 6 indicate the measured logCS versus the nominal ones for the PP scenario. The last level of contrast could not be produced even with a very high-quality display, such as that of the iPhone 13. The uncertainties on the logCS increase with the logCS, meaning that in future versions of the app we could increase the number of levels in the region up to logCS < 1.7, but for higher values the bin width cannot decrease much. This would mainly affect the results of users who do not have a low CS, who are the ones expected to reach the levels with low contrast values during the test. Similar results have been obtained for the NP scenario. Supplemental Appendix B contains a detailed description of the procedure followed to measure the absolute luminance corresponding to an RGB triplet and to find the RGB triplets needed to produce the desired contrast levels, as well as the main learnings from the display characterization.

Figure 6.

Empirically measured contrast sensitivity values. Comparison of the measured contrast sensitivity values (y-axis, left; dark blue circles) versus the nominal one from the Pelli-Robson (x-axis). The grey diagonal line shows the ideal reference, namely where the two sets of values are the same. The differences between the two sets of values (residual) are indicated as light blue stars (y-axis, right). The lowest logCS values can be obtained with a small deviation from the nominal ones, well below the 0.05 logCS level. The last levels show a bigger deviation and also a larger error, showing how these levels cannot be measured with the same accuracy obtained for the lower ones.

In order to guarantee the reproducibility of the luminance, all the iPhone functions that have an influence on the display, such as auto-brightness and ‘True Tone’, should be turned off during the test. Since there is no way to do this via software, the user should properly set these settings before taking the test. The relative brightness level is set via software as soon as the test starts (respectively to 60% and 30% for PP and NP).

The test is based on an adaptive staircase procedure, where the luminance of the rings is varied according to the previous answer of the user. The number of rings shown at each step of the sequence varies randomly between three and six. At each step, the user is asked how many rings they saw. The sequence starts from a high contrast level, and the contrast is decreased at each step as long as the user can see more than 50% of the rings. When this is no longer the case, the contrast is increased again. This procedure of increasing and decreasing the contrast continues until the staircase contains eight reversals (also referred to as ‘ups’ and ‘downs’). The final score, namely the estimated logCS threshold, is the average of the last six reversals of the staircase. A representation of typical staircase sequences can be seen in Figure 5(c), where the contrast levels for each iteration are shown; the horizontal lines in the figure indicate the estimated logCS threshold. The staircase for the left eye is a good example, in which all the reversals are at similar contrast levels. In contrast, in the staircase of the right eye, the first pair of reversals (iterations 6 and 7) appears at a quite different contrast level with respect to the other reversals, suggesting that the answer given by the user at iteration 6 was a mistake. In this particular example, the final score is not affected since it does not use the first pair of reversals.
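The staircase logic described above can be sketched as follows. Several details are assumptions made for illustration: the 16 Pelli-Robson-style levels (0.00 to 2.25 logCS in 0.15 steps), a one-step change after every answer, recording the level at which the direction changes as the reversal, and the `max_trials` safety limit. The extra high-contrast steps are left out.

```python
import random
from statistics import mean
from typing import Callable, List, Optional

# Assumed contrast levels in logCS: 0.00, 0.15, ..., 2.25 (higher means lower contrast).
LEVELS: List[float] = [round(0.15 * i, 2) for i in range(16)]


def run_staircase(count_seen: Callable[[float, int], int],
                  rng: random.Random,
                  n_reversals: int = 8,
                  n_scored: int = 6,
                  max_trials: int = 200) -> float:
    """Return the estimated logCS threshold for one eye.

    count_seen(level, n_rings) stands in for the user: it returns how many of
    the n_rings shown at the given logCS level were reported.
    """
    idx = 0                            # start at the highest contrast
    direction: Optional[int] = None    # +1: lowering contrast, -1: raising it
    reversals: List[float] = []
    for _ in range(max_trials):
        n_rings = rng.randint(3, 6)
        passed = count_seen(LEVELS[idx], n_rings) > n_rings / 2
        new_direction = 1 if passed else -1
        if direction is not None and new_direction != direction:
            reversals.append(LEVELS[idx])
            if len(reversals) == n_reversals:
                break
        direction = new_direction
        idx = min(max(idx + new_direction, 0), len(LEVELS) - 1)
    if not reversals:
        return LEVELS[idx]             # no reversal reached (assumed handling)
    return mean(reversals[-n_scored:])


# Idealized user who sees every ring up to logCS 1.35 and none beyond.
ideal_user = lambda level, n_rings: n_rings if level <= 1.35 else 0
threshold = run_staircase(ideal_user, random.Random(1))
```

For this idealized user the reversals alternate between 1.50 and 1.35, so the average of the last six is 1.425 logCS.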

Extra steps with one, two, or seven rings at a high contrast level are randomly interspersed during the test with a frequency of 15%. The reason for introducing these steps is twofold. On the one hand, they add variety and thus maintain user engagement during the test. On the other hand, since they extend the range of the number of rings seen by the user (from 3–6 to 1–7), they should lower the ‘guessing rate’, namely the probability that a user can just guess the correct number of rings. The user has 10 seconds to answer the question. If no answer is given, this is interpreted as no rings having been seen.

The characteristics of the staircase procedure, the number of reversals, and the number of rings were chosen after Monte Carlo (MC) simulations following the approach by Pelli et al. 30 and Arditi. 34 This approach is based on modelling the psychometric function for CS as a Weibull function with four parameters (CS threshold, slope, guessing rate and misreporting rate, namely the probability of making a mistake) and systematically varying different characteristics of the test design. For each variation, 1000 test simulations were run, the corresponding CS threshold was extracted, and its difference from the theoretical threshold of the Weibull function was analysed. From the distribution of these differences, we extracted the offset and the standard deviation, examined their absolute values and their behaviour as a function of the different parameters, and thus chose the best test parameters. More details on the MC simulations are given in Supplemental Appendix C.
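A heavily simplified sketch of such a simulation is given below. It is not the procedure of Supplemental Appendix C: the Weibull parameterization, the parameter values, the 0.15 logCS grid, and the reduction of each step to a single pass/fail draw (rather than counting rings) are all assumptions chosen to keep the example short.

```python
import math
import random
from statistics import mean, stdev
from typing import List, Optional, Tuple

LEVELS: List[float] = [round(0.15 * i, 2) for i in range(16)]  # assumed logCS grid


def weibull_p(log_cs: float, threshold: float, slope: float = 3.5,
              guess: float = 0.05, lapse: float = 0.02) -> float:
    """Probability of a correct answer at a given logCS level.

    Uses one common Weibull form, guess + (1 - guess - lapse) * (1 - exp(-(c/a)^slope)),
    with contrast c = 10^-log_cs and a = 10^-threshold (assumed parameterization).
    """
    ratio = 10 ** (threshold - log_cs)
    return guess + (1 - guess - lapse) * (1 - math.exp(-(ratio ** slope)))


def simulate_test(threshold: float, rng: random.Random, n_reversals: int = 8,
                  n_scored: int = 6, max_trials: int = 500) -> float:
    """Run one simulated staircase and return the estimated threshold."""
    idx, direction, reversals = 0, None, []  # type: int, Optional[int], List[float]
    for _ in range(max_trials):
        passed = rng.random() < weibull_p(LEVELS[idx], threshold)
        new_direction = 1 if passed else -1
        if direction is not None and new_direction != direction:
            reversals.append(LEVELS[idx])
            if len(reversals) == n_reversals:
                break
        direction = new_direction
        idx = min(max(idx + new_direction, 0), len(LEVELS) - 1)
    return mean(reversals[-n_scored:]) if reversals else LEVELS[idx]


def monte_carlo(threshold: float, n_runs: int = 1000, seed: int = 0) -> Tuple[float, float]:
    """Return (offset, standard deviation) of estimate minus true threshold."""
    rng = random.Random(seed)
    diffs = [simulate_test(threshold, rng) - threshold for _ in range(n_runs)]
    return mean(diffs), stdev(diffs)


offset, spread = monte_carlo(threshold=1.2, n_runs=200)
```

Repeating `monte_carlo` while changing one design parameter at a time (for example `n_reversals` or `n_scored`) gives the kind of offset and spread comparison described above.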

The length of this test depends on the level of contrast that can be perceived by the user and on the time needed to give the answer. On average, the total length is around 3 minutes, but it can also reach 8 minutes. We opted for a simplified test using fixed ring sizes, but a possible extension of these tests would be to display rings with varying sizes, thereby estimating a CS function. 35 However, such a modified test would likely considerably extend the test duration, and be limited by the resolution of the phone display (see also the Discussion section).

Reading speed test

Reading difficulty is one of the most common complaints affecting people with eye conditions such as cataract and AMD. 36 There are several versions of reading speed tests used in a clinical context. Usually, the objective is to measure the reading speed and the number of spelling errors made by the user. For the VisualR implementation, we took as reference mainly the SKread37,38 and the Wilkins reading test. 39

The test simulates a 3D virtual screen displaying 100 random words (Figure 7). The task of the user is to read all the words aloud. To see all the words displayed, the user should slightly turn the head left and right. The two eyes are tested one after the other: first, the left eye is tested, then the right eye.

Figure 7.

Reading speed test. Screenshot of the three-dimensional (3D) virtual screen with the 100 words. In this screenshot, only the left eye is being tested.

The words are randomly sampled from the most common (roughly 1000) words in a given language (based on the B1 level of the Common European Framework of Reference for Languages). The words are not semantically connected, to reduce the influence of cognitive function, reading skills and education level in the test and focus on the visual function.

The height of each letter is equivalent to 2° of the visual field. This means that even people who have a logarithm of the minimum angle of resolution (logMAR) score of 1.3 (the World Health Organization threshold for legal blindness) should be able to read the letters clearly (the threshold on the optotype size being 1.67° of the visual field). As in the other tests, the speech of the user is processed in real time by the speech recognition system Vosk, in order to recognize the vocal commands and react accordingly. In this test, the session finishes automatically when the last three words of the sequence are recognized. If the recognition of those three words fails, the test can still be ended by saying a specific voice command. The audio transcription used for the scoring of the test is done with Whisper, an automatic speech recognition model that is run locally on the phone and provides accuracy near the state-of-the-art level and robustness to accents and background noise. 40 The audio is processed while the user is doing the test, the corresponding words are transcribed, and timestamps are added. The time for the test is evaluated as the difference between the end of the last word and the beginning of the first word, and it is used to compute the number of words per minute, that is, the score shown in the app. The score is computed for each eye independently. From the comparison between the words transcribed and those displayed, the word error rate and the character error rate are evaluated, but these values are not currently incorporated in the score. We envision using these rates to identify edge cases during post-collection data analyses. After the scores are computed, neither the audio nor the transcribed words are saved.
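The scoring described above can be illustrated with a small sketch that computes words per minute from timestamped words, and word and character error rates from an edit distance. The data layout (a list of `(word, start_s, end_s)` tuples), the use of the number of transcribed words in the numerator, and the function names are assumptions of this example and do not reflect the Whisper output format.

```python
from typing import List, Sequence, Tuple

TimedWord = Tuple[str, float, float]  # (word, start in seconds, end in seconds)


def words_per_minute(timed_words: List[TimedWord]) -> float:
    """Reading speed from the start of the first word to the end of the last word."""
    duration_s = timed_words[-1][2] - timed_words[0][1]
    return len(timed_words) / duration_s * 60.0


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Levenshtein distance between two sequences (lists of words or strings)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def word_error_rate(displayed: List[str], transcribed: List[str]) -> float:
    return edit_distance(displayed, transcribed) / len(displayed)


def char_error_rate(displayed: List[str], transcribed: List[str]) -> float:
    ref, hyp = " ".join(displayed), " ".join(transcribed)
    return edit_distance(ref, hyp) / len(ref)


# Made-up example: three words read in 1.5 s, one of them misrecognized.
timed = [("cat", 0.0, 0.4), ("fog", 0.5, 0.9), ("sun", 1.0, 1.5)]
shown = ["cat", "dog", "sun"]
heard = [w for w, _, _ in timed]
wpm = words_per_minute(timed)
wer = word_error_rate(shown, heard)
cer = char_error_rate(shown, heard)
```

In this example the reading speed is 120 words per minute, the word error rate is 1/3 and the character error rate is 1/11.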

The duration of this test depends entirely on the reading speed of the user. On average, for healthy users, it is 1–2 minutes per eye.

Validation of visual angle calibration

An accurate setting of visual angles is essential for the proper implementation of vision tests, as it determines the size and position of the test stimuli on the retina. In most conventional visual function tests, visual angles are controlled by using test objects with known dimensions (e.g. Sloan letters), and instructing users to position themselves at a specific distance from the test device. However, the viewing distance is difficult to control in self-administered tests, especially if they are performed on tablets, smartphones, or computer monitors. In contrast, VR offers a more convenient and accurate way to calibrate visual angles, as the optical distance and the display characteristics are fixed. In VisualR, all test content is defined in visual angles, and these are converted to pixel dimensions during display, according to the phone display size and resolution and the VR headset optics, as described in more detail in Supplemental Appendix A. To verify that the content is displayed at the correct visual angles, we developed a simple calibration test based on the detection of the blind spot. The blind spot is a receptor-free area of the retina where the optic nerve leaves the eye, centred 13°–16° temporally. 41 In the VisualR calibration test, a large red circle is displayed at the expected location of the blind spot when the user fixates a central cross (Figure 8). If the visual angles are correctly calibrated, then the red circle should not be visible to the user. This test can be used to confirm that VisualR is set up properly with any new phone model or VR headset.

Figure 8.

Validation of visual angles using the blind spot. The 3.3° red dot is set to be displayed 15° from the central cross fixation point. If the pixel/visual angle calibration is set correctly, then the red dot will not be visible to the user. The dots next to the central cross can be used as alternative fixation points to identify a possible misalignment. The screenshot in the figure is displayed to the left eye; a symmetric image is displayed to the fellow eye.
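To make the angle-to-pixel conversion concrete, the sketch below places the blind-spot dot under a deliberately simple optical model: an ideal thin lens with the screen in its focal plane and no distortion correction. The actual conversion used by VisualR is described in Supplemental Appendix A and may differ. The function names and the 40 mm focal length are hypothetical values chosen for illustration; only the 460 ppi pixel density, the 15° eccentricity and the 3.3° diameter come from the text.

```python
import math

MM_PER_INCH = 25.4


def angle_to_pixels(angle_deg: float, ppi: float, focal_length_mm: float) -> float:
    """Offset from the optical axis, in pixels, that is seen at `angle_deg`.

    Assumes an ideal thin lens with the screen in its focal plane
    (a simplification; real headset lenses introduce distortion).
    """
    offset_mm = focal_length_mm * math.tan(math.radians(angle_deg))
    return offset_mm * ppi / MM_PER_INCH


def blind_spot_dot(
    eccentricity_deg: float = 15.0,   # dot centre, from the Figure 8 caption
    diameter_deg: float = 3.3,        # dot diameter, from the Figure 8 caption
    ppi: float = 460.0,               # iPhone 13 pixel density
    focal_length_mm: float = 40.0,    # hypothetical lens focal length
) -> dict[str, float]:
    """Pixel offsets of the dot centre and edges from the fixation cross."""
    half = diameter_deg / 2.0
    return {
        "inner_edge_px": angle_to_pixels(eccentricity_deg - half, ppi, focal_length_mm),
        "centre_px": angle_to_pixels(eccentricity_deg, ppi, focal_length_mm),
        "outer_edge_px": angle_to_pixels(eccentricity_deg + half, ppi, focal_length_mm),
    }
```

Because the tangent is not linear, the edges are converted separately instead of scaling the diameter by a fixed pixels-per-degree factor; at 15° eccentricity the difference is small but not zero.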

Discussion

VisualR was conceived as a toolbox that runs multiple types of visual tests. Each visual test is programmed as an independent component, and it is intended that new independent tests or variations of the present ones will be developed. We are planning to conduct clinical studies using patient populations with varying degrees of metamorphopsia, CS and reading speed to assess the clinical performance of VisualR tests and their agreement with standard visual function tests.

When developing further tests, the balance between test session length and user burden should be taken into account. In the current implementation, the reading speed and CS tests last about 2–4 minutes, while the length of the metamorphopsia test is more variable (potentially requiring over 10 minutes for patients with advanced metamorphopsia). An interesting possibility created by VR is the exploration of gamification, which can substantially improve user experience and engagement during typically monotonous vision tests such as perimetry or dark adaptation. VisualR currently supports English and German, but can easily be extended to many more languages: speech recognition is supported by Vosk and Whisper, which currently support more than 20 and 100 languages, respectively.24,40 However, performance may vary across languages and should always be properly assessed. VisualR was written in Flutter (a cross-platform framework), and it is intended to be compatible with various phone models and operating systems, including iOS and Android. However, since different phone manufacturers and phone models often diverge in how they implement display features (including automated brightness, colour settings and various accessibility options), care should be taken to evaluate the app and potentially adapt the software to a specific phone model. More fundamentally, scalability to different phone models is dependent on central processing unit (CPU) and memory performance, and display characteristics. We have initially focused on correctly supporting iOS, and particularly the iPhone 13, 42 a widely available smartphone. The iPhone 13 was selected due to its large user base and, unlike many Android phones, Apple's consistent hardware and software updates, which ensure long-term interoperability and maintainability. The memory and CPU usage of the app on an iPhone 13 can be seen in Table 1. 
Most of the app can be used with a fraction of the available memory and at most one CPU core. Only during the transcription of audio clips via Whisper are four of the six available CPU cores active. This shows that it is feasible to run VisualR (including running Unity as a module for graphic rendering and using offline speech recognition) on widely available consumer hardware.

Table 1.

App performance on an iPhone 13 measured via memory usage and CPU consumption during various situations.

| Usage | Memory usage (MB) | CPU consumption (a) |
|---|---|---|
| Idle | 110 | 0% |
| Navigating the UI of the app | 130 | <50% |
| Running IPD adjustment / contrast sensitivity / metamorphopsia tests | 375 | 15%–25% |
| Running reading speed | 475 | 45% |
| Idle (after Unity ran once) | 300 | 10% |
| Navigating the UI of the app (after Unity ran once) | 385 | <70% |
| Transcribing an audio clip during reading speed test using Whisper | 800 | ∼400% |

CPU: central processing unit; UI: user interface; IPD: interpupillary distance.

(a) The maximum CPU consumption of an iPhone 13 is 600% as it has six CPU cores.

The quality of the phone display plays a central role in VisualR. As discussed above, the CS test is particularly dependent on a correct estimation of the luminance of the display. For this initial version, we extensively measured the display luminance on an iPhone 13; however, this approach is cumbersome and not easily sustainable if many phone models are to be closely supported. A psychophysical display calibration has been suggested 43 that would avoid the need for physical measurements when supporting a new phone model. However, it would require normally sighted persons to perform it, which can become unreliable and a source of bias. Another open question for the CS test is whether the logCS levels can be produced with sufficient accuracy on other phones, as they can on the iPhone 13, which has a high contrast ratio and broad luminance range. While it is expected that the quality of phone displays will continue to improve, it is not certain that luminance-related tests will be feasible on lower-quality phones.
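The dependence of the CS test on display luminance can be illustrated with a short sketch. It assumes Weber contrast for a darker optotype on a brighter background, which may not match the polarity or contrast definition used in VisualR. The luminance table passed to `closest_grey_level` is a hypothetical stand-in for per-device measurements, and all function names are invented for this example.

```python
import math


def weber_contrast(log_cs: float) -> float:
    """Weber contrast that corresponds to a given logCS level."""
    return 10.0 ** (-log_cs)


def letter_luminance(background_cd_m2: float, log_cs: float) -> float:
    """Luminance a darker optotype needs to reach the requested logCS."""
    return background_cd_m2 * (1.0 - weber_contrast(log_cs))


def achieved_log_cs(background_cd_m2: float, letter_cd_m2: float) -> float:
    """logCS actually displayed, given two measured luminance values."""
    contrast = (background_cd_m2 - letter_cd_m2) / background_cd_m2
    return -math.log10(contrast)


def closest_grey_level(measured_cd_m2: dict[int, float], target_cd_m2: float) -> int:
    """Grey level whose measured luminance is closest to the target.

    `measured_cd_m2` maps grey levels to measured luminance (hypothetical data).
    """
    return min(measured_cd_m2, key=lambda level: abs(measured_cd_m2[level] - target_cd_m2))
```

Comparing `achieved_log_cs` for the chosen grey level with the requested logCS gives a direct estimate of how accurately a given display can reproduce each contrast step, which is the quantity in question when a new phone model is considered.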

One of the limitations of VisualR is screen resolution: the iPhone 13 has a pixel density of 460 ppi, which, when viewed through a standard VR headset, creates an effective resolution of ∼5 arc minutes (arcmin) per pixel. While this resolution is sufficient for the visual tests presented here, it is clearly not sufficient to adequately test visual acuity (normal 20/20 vision, i.e. a logMAR score of 0.0, would require about 1 arcmin). A few smartphones on the market already have substantially higher resolutions, which may eventually allow the development of visual acuity, reading acuity and contrast acuity tests.
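The resolution argument can be checked with a few lines of arithmetic. The sketch below assumes an ideal thin lens with the screen in its focal plane and a hypothetical focal length of 40 mm; the real optics of a given headset may differ. It also uses the standard conventions that the minimum angle of resolution (MAR) in arcmin is 10 raised to the logMAR score and that an optotype is five times as tall as its critical detail.

```python
import math

MM_PER_INCH = 25.4


def arcmin_per_pixel(ppi: float, focal_length_mm: float) -> float:
    """Visual angle of one pixel at the optical axis, in arc minutes."""
    pixel_pitch_mm = MM_PER_INCH / ppi
    return math.degrees(math.atan(pixel_pitch_mm / focal_length_mm)) * 60.0


def mar_arcmin(logmar: float) -> float:
    """Minimum angle of resolution in arc minutes for a logMAR score."""
    return 10.0 ** logmar


def optotype_height_deg(logmar: float) -> float:
    """Height of a threshold optotype in degrees (five times the MAR)."""
    return 5.0 * mar_arcmin(logmar) / 60.0


if __name__ == "__main__":
    # 460 ppi is the iPhone 13 pixel density; 40 mm is an assumed focal length.
    print(f"pixel size: {arcmin_per_pixel(460.0, 40.0):.2f} arcmin")
    print(f"MAR at logMAR 0.0: {mar_arcmin(0.0):.2f} arcmin")
    print(f"threshold optotype at logMAR 1.3: {optotype_height_deg(1.3):.2f} deg")
```

Under these assumptions one pixel spans a little under 5 arcmin, several times the 1 arcmin detail needed for 20/20 acuity, while the threshold optotype at logMAR 1.3 remains below the 2° letter height used in the reading speed test.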

High scalability was one of the key motivations behind using smartphone-based VR. The only hardware requirements for VisualR are a high-quality smartphone and a Google Cardboard compatible headset. The headset can be customized and mass-produced (including using 3D printing technology) or bought individually from retail stores. We have tested VisualR with a Destek V5 VR headset, 44 which has a retail price of around 40 euros/United States dollars in 2025. However, there are some overhead costs involved in developing the app before distributing it. A suitable Unity developer licence is needed to use Unity for non-personal projects, and some paid assets are also necessary: in the current version, these are Recognissimo (a Unity asset for offline speech recognition with Vosk models) and Lean GUI Shapes (a Unity asset for vector graphics rendering); their prices are 60 and 5 euros, respectively, in 2023. Our goal was to enhance the scalability, flexibility and accessibility of VisualR by using smartphone-based VR. However, our proposed tests and interaction patterns for VisualR can in principle be adapted to other VR devices. There have been considerable efforts to develop and expand consumer-grade VR hardware, and there is a realistic expectation that high-quality devices will be widely available within the next few years, potentially changing the cost–benefit ratio of smartphones and dedicated VR hardware.

Conclusion

Visual function testing is critical for the monitoring of various eye diseases. In this article, we argued that VR can solve many challenges that have hampered visual function tests in the past, and demonstrated the technical feasibility of building a VR-based visual function testing device using consumer-grade hardware.

We presented VisualR, an extendable and user-friendly VR-based smartphone application built using primarily free and open-source components. One of the benefits of VisualR is the ability to conduct visual tests remotely, without the need for trained personnel or specialized equipment. This could save time and resources for both patients and healthcare providers, and encourage regular and reliable usage of visual function testing. In the future, we envision that VisualR could be used for longitudinal monitoring of visual function during clinical trials, for treatment evaluation or early detection of eye diseases. We open-sourced VisualR and provided guidelines and suggestions for developing visual function tests in VR. We hope that this work will inspire and facilitate further research and innovation in this field.

Supplemental Material

Supplemental material, sj-pdf-1-dhj-10.1177_20552076251365039 for VisualR: A novel and scalable solution for assessing visual function using virtual reality by Federica Sozzi, Henning Groß, Bratislav Ljubisic, Jascha Adams, Andras Gyacsok, Giuseppe Graziano, Steffen Weiß and Nuno D Pires in DIGITAL HEALTH

Video 1.

Acknowledgements

We are grateful to Oliver Kirsch of the Fraunhofer Institute for Reliability and Microintegration IZM (Berlin, Germany) for conducting the luminance measurements necessary for the display calibration.

Footnotes

ORCID iDs: Federica Sozzi https://orcid.org/0009-0002-4687-4849

Nuno D Pires https://orcid.org/0000-0002-7113-3519

Ethics approval: Not applicable: this research did not require Institutional Review Board approval because it did not involve human participants, human data, or human tissue.

Author contributions: All authors contributed to the design and implementation of VisualR. FS, HG, and NDP wrote the manuscript.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by Boehringer Ingelheim.

The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: FS, HG, BL, JA, AG, and NDP are employees of Boehringer Ingelheim, which funded this study and is developing new treatments for various retinal diseases. The remaining authors have no competing interests.

Availability of data and materials: The source code for VisualR is available at https://github.com/BIX-Digital/VisualR. An archived version of the source code referenced in the manuscript is available at https://doi.org/10.5281/zenodo.10053869.

Guarantor: Nuno D Pires

Supplemental material: Supplemental material for this article is available online.

References

- 1. Wong WL, Su X, Li X, et al. Global prevalence of age-related macular degeneration and disease burden projection for 2020 and 2040: a systematic review and meta-analysis. Lancet Glob Health 2014; 2: e106–e116.

- 2. Tham YC, Li X, Wong TY, et al. Global prevalence of glaucoma and projections of glaucoma burden through 2040: a systematic review and meta-analysis. Ophthalmology 2014; 121: 2081–2090.

- 3. Teo ZL, Tham YC, Yu M, et al. Global prevalence of diabetic retinopathy and projection of burden through 2045: systematic review and meta-analysis. Ophthalmology 2021; 128: 1580–1591.

- 4. Hashemi H, Pakzad R, Yekta A, et al. Global and regional prevalence of age-related cataract: a comprehensive systematic review and meta-analysis. Eye 2020; 34: 1357–1370.

- 5. Duker JS, Waheed NK, Goldman D. Handbook of retinal OCT: optical coherence tomography. Philadelphia, PA: Elsevier, 2021. Available from: https://books.google.de/books?id=iQU7EAAAQBAJ.

- 6. Csaky K, Ferris F, Chew EY, et al. Report from the NEI/FDA endpoints workshop on age-related macular degeneration and inherited retinal diseases. Invest Ophthalmol Visual Sci 2017; 58: 3456–3463.

- 7. Wickström K, Moseley J. Biomarkers and surrogate endpoints in drug development: a European regulatory view. Invest Ophthalmol Visual Sci 2017; 58: BIO27.

- 8. Schmetterer L, Scholl H, Garhöfer G, et al. Endpoints for clinical trials in ophthalmology. Prog Retinal Eye Res 2022; 97: 101160.

- 9. Pondorfer SG, Heinemann M, Wintergerst MWM, et al. Detecting vision loss in intermediate age-related macular degeneration: a comparison of visual function tests. PLoS ONE 2020; 15: e0231748.

- 10. Cocce KJ, Stinnett SS, Luhmann UFO, et al. Visual function metrics in early and intermediate dry age-related macular degeneration for use as clinical trial endpoints. Am J Ophthalmol 2018; 189: 127–138.

- 11. Künzel SH, Lindner M, Sassen J, et al. Association of reading performance in geographic atrophy secondary to age-related macular degeneration with visual function and structural biomarkers. JAMA Ophthalmol 2021; 139: 1191–1199.

- 12. Hanumunthadu D, Lescrauwaet B, Jaffe M, et al. Clinical update on metamorphopsia: epidemiology, diagnosis and imaging. Curr Eye Res 2021; 46: 1777–1791.

- 13. Finger RP, Schmitz-Valckenberg S, Schmid M, et al. MACUSTAR: development and clinical validation of functional, structural, and patient-reported endpoints in intermediate age-related macular degeneration. Ophthalmologica 2019; 241: 61–72.

- 14. Colenbrander A. Aspects of vision loss – visual functions and functional vision. Vis Impair Res 2003; 5: 115–136.

- 15. Balaskas K, Drawnel F, Khanani AM, et al. Home vision monitoring in patients with maculopathy: current and future options for digital technologies. Eye 2023; 37: 3108–3120.

- 16. Muñoz EG, Fabregat R, Bacca-Acosta J, et al. Augmented reality, virtual reality, and game technologies in ophthalmology training. Information 2022; 13: 22.

- 17. Ahuja AS, III AAP, Eisel MLS, et al. The utility of virtual reality in ophthalmology: a review. Clin Ophthalmol (Auckland, NZ) 2025; 19: 1683–1692.

- 18. Pur DR, Lee-Wing N, Bona MD. The use of augmented reality and virtual reality for visual field expansion and visual acuity improvement in low vision rehabilitation: a systematic review. Graefe’s Arch Clin Exp Ophthalmol 2023; 261: 1743–1755.

- 19. Leal-Vega L, Piñero DP, Molina-Martín A, et al. Pilot study assessing the safety and acceptance of a novel virtual reality system to improve visual function. Semin Ophthalmol 2024; 39: 394–399.

- 20. Ma MKI, Saha C, Poon SHL, et al. Virtual reality and augmented reality—emerging screening and diagnostic techniques in ophthalmology: a systematic review. Surv Ophthalmol 2022; 67: 1516–1530.

- 21. Griffin JM, Slagle GT, Vu TA, et al. Prospective comparison of VisuALL virtual reality perimetry and Humphrey automated perimetry in glaucoma. J Curr Glaucoma Pract 2024; 18: 4–9.

- 22. Lynn MH, Luo G, Tomasi M, et al. Measuring virtual reality headset resolution and field of view: implications for vision care applications. Optom Vis Sci 2020; 97: 573–582.

- 23. Rodríguez-Vallejo M, Monsoriu JA, Furlan WD. Inter-display reproducibility of contrast sensitivity measurement with iPad. Optom Vis Sci 2016; 93: 1532–1536.

- 24. Vosk. Available from: https://alphacephei.com/vosk/ [cited 2023-09-11].

- 25. Flutter. Available from: https://flutter.dev [cited 2023-09-21].

- 26. Unity. Available from: https://unity.com [cited 2023-09-21].

- 27. Dodgson NA. Variation and extrema of human interpupillary distance. In: Stereoscopic displays and virtual reality systems XI, San Jose, CA, 2004, pp. 36–46. SPIE.

- 28. Amsler M. L’Examen qualitatif de la fonction maculaire. Ophthalmologica 1947; 114: 248–261.

- 29. Matsumoto C, Arimura E, Okuyama S, et al. Quantification of metamorphopsia in patients with epiretinal membranes. Invest Ophthalmol Visual Sci 2003; 44: 4012–4016.

- 30. Pelli DG, Robson JG, Wilkins AJ. The design of a new letter chart for measuring contrast sensitivity. Clin Vis Sci 1988; 2: 187–199.

- 31. de Jong PTVM. A history of visual acuity testing and optotypes. Eye 2024; 38: 13–24.

- 32. Hwang AD, Peli E. Positive and negative polarity contrast sensitivity measuring app. Electron Imaging 2016; 2016: 1–6.

- 33. Tyler CW, Chan H, Liu L, et al. Bit stealing: how to get 1786 or more gray levels from an 8-bit color monitor. In: Human vision, visual processing, and digital display III, San Jose, CA, 2004, pp. 36–46. SPIE.

- 34. Arditi A. Improving the design of the letter contrast sensitivity test. Invest Ophthalmol Visual Sci 2005; 46: 2225–2229.

- 35. Pelli DG, Bex P. Measuring contrast sensitivity. Vision Res 2013; 90: 10–14.

- 36. Rubin GS. Measuring reading performance. Vision Res 2013; 90: 43–51.

- 37. MacKeben M, Nair UKW, Walker LL, et al. Random word recognition chart helps scotoma assessment in low vision. Optom Vis Sci 2015; 92: 421–428.

- 38. Eisenbarth W, Pado U, Schriever S, et al. Lokalisation von Skotomen mittels Lesetest bei AMD. Ophthalmologe 2016; 113: 754–762.

- 39. Gilchrist JM, Allen PM, Monger L, et al. Precision, reliability and application of the Wilkins rate of reading test. Ophthalmic Physiol Opt 2021; 41: 1198–1208.

- 40. Whisper. Available from: https://github.com/openai/whisper [cited 2023-09-21].

- 41. Wang M, Shen LQ, Boland MV, et al. Impact of natural blind spot location on perimetry. Sci Rep 2017; 7: 6143. doi: 10.1038/s41598-017-06580-7.

- 42. iPhone 13. Available from: https://www.apple.com/iphone-13/specs/ [cited 2023-08-23].

- 43. Mulligan J. Psychophysical calibration of mobile touch-screens for vision testing in the field. J Vis 2015; 15: 475.

- 44. Destek VR headset. Available from: https://destek.us/collections/vr-headset [cited 2023-09-26].
