Abstract

Contingencies of reinforcement specify how reinforcers are earned and how they are obtained. Ratio contingencies specify the number of responses that earn a reinforcer, and the response satisfying the ratio requirement obtains the earned reinforcer. Simple interval schedules specify that a certain time earns a reinforcer, which is obtained by the first response after the interval. The earning of reinforcers has been overlooked, perhaps because simple schedules confound the rates of earning reinforcers with the rates of obtaining reinforcers. In concurrent variable-interval schedules, however, spending time at one alternative earns reinforcers not only at that alternative, but at the other alternative as well. Reinforcers earned for delivery at the other alternative are obtained after changing over. Thus, the rates of earning reinforcers are not confounded with the rates of obtaining reinforcers, but they are the same at both alternatives, which masks their possibly differing effects on preference. Two experiments examined the separate effects of earning reinforcers and of obtaining reinforcers on preference by using concurrent interval schedules composed of two pairs of stay and switch schedules (MacDonall, 2000). In both experiments, the generalized matching law, which is based on rates of obtaining reinforcers, described responding only when rates of earning reinforcers were the same at each alternative. An equation that included both the ratio of the rates of obtaining reinforcers and the ratio of the rates of earning reinforcers described the results from all conditions from each experiment.

Keywords: preference, concurrent schedule, earning reinforcers, optimal foraging theory, generalized matching law, lever press, rats

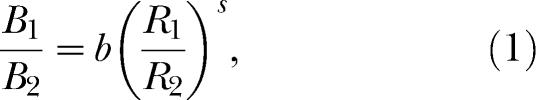

Empirical and theoretical investigations of preference using concurrent schedules continue more than 40 years after Herrnstein's (1961) first systematic investigation. He reported that exposing pigeons to concurrent variable-interval (VI) schedules resulted in the proportion of responses at one alternative approximately equaling the proportion of reinforcers obtained at that alternative. Subsequent investigations extended this result to proportions of time at one alternative equaling the proportion of reinforcers obtained at that alternative (e.g., Baum & Rachlin, 1969). Because of systematic deviations from this relation, Baum (1974) introduced the generalized matching law:

$$\frac{B_1}{B_2} = b\left(\frac{R_1}{R_2}\right)^{s} \tag{1}$$

where B1 and B2 are behaviors (i.e., responses or time) allocated to, and R1 and R2 are the rates of reinforcers obtained at, Alternatives 1 and 2, respectively. There are two fitted parameters: s, called sensitivity to reinforcement, which measures the degree to which log response ratios change with changes in reinforcer ratios; and b, which is interpreted as response bias unrelated to reinforcer allocation (Trevett, Davison, & Williams, 1972; see Baum, 1974 for a review).

A basic characteristic of concurrent VI VI schedules is that, as subjects earn reinforcers by spending time at one alternative, they also earn reinforcers at a second alternative because the VI timer for the second alternative continues operating (Houston & McNamara, 1981; MacDonall, 1998, 1999). A reinforcer arranged at the second alternative while the subject is at the first alternative is obtained following the first response at the second alternative, possibly after a changeover delay (COD). Thus there are two modes of obtaining reinforcers on concurrent VI VI schedules: stay reinforcers that are earned and obtained while working at the same alternative, and switch reinforcers that are earned by working at one alternative but obtained following a switch to the other alternative.

The distinction between earning and obtaining reinforcers is based on differences in contingencies (Rachlin, Green, & Tormey, 1988). Some contingencies specify how to earn reinforcers. For example, responding on a ratio contingency earns reinforcers. Once earned, however, reinforcers are held until a contingency for obtaining them is satisfied. Thus on standard fixed- or variable-ratio schedules, the same operant response earns and obtains reinforcers (Ferster & Skinner, 1957). The contingency can, however, involve different operant responses, as in counting (Mechner, 1958), in which one response earns reinforcers and another obtains them. The contingency for earning reinforcers on simple interval schedules is spending time on the schedule, but because the schedule operates throughout the session, reinforcers do not appear to be earned. Once a reinforcer has been earned, the next response obtains it. On concurrent interval schedules, the differing contingencies for earning and obtaining reinforcers are clearer: Spending time at an alternative earns reinforcers for staying at that alternative and earns reinforcers for switching to the other alternative. The contingency for obtaining reinforcers earned for staying at an alternative is a response at that alternative. The contingency for obtaining reinforcers earned for switching to the other alternative is a response at the other alternative.

Simple schedules of reinforcement (fixed ratio, fixed interval, variable ratio, and variable interval) confound the rates of earning reinforcers and the rates of obtaining reinforcers. The rate of obtaining reinforcers is the number of delivered reinforcers divided by the time at the schedule, which is the duration of the session. The rate of earning reinforcers is the number of reinforcers divided by the time spent earning those reinforcers, which is also the duration of the session. Thus, in simple schedules of reinforcement, the rate of earning reinforcers always equals the rate of obtaining reinforcers. The rates of earning and obtaining reinforcers can be unconfounded in complex schedules, such as concurrent VI VI schedules, because switch reinforcers are earned by spending time at one alternative but obtained by responding at the other alternative. The contingency for earning stay reinforcers makes immediate contact with the stay response because the response that earns the reinforcer also delivers the reinforcer. The contingency for earning switch reinforcers makes contact, sometimes delayed, with the switch response because the switch reinforcer is delivered when the animal commences responding on the other alternative (Dreyfus, Dorman, Fetterman, & Stubbs, 1982). When a COD is not used, there is immediate contact between earning and delivering the switch reinforcer, but when a COD is used the contact is delayed, which may alter the effect of earning reinforcers.

The generalized matching law is a special case of the concatenated generalized matching law (Baum & Rachlin, 1969; Davison & McCarthy, 1988, ch. 4) that was developed to include all obtained reinforcer parameters that affect behavior (i.e., rates, magnitudes, immediacies [reciprocal of delay], and qualities of reinforcers). The concatenated generalized matching law states that preference is a function of the ratios of the rates, magnitudes, immediacies, and qualities of reinforcement obtained at the alternatives. Note that the concatenated generalized matching law allows a different sensitivity value for each of these parameters of reinforcement. When the magnitudes, immediacies, and qualities of reinforcement are the same at each alternative, then the respective ratios equal 1.0 and drop from the equation. This leaves the ratio of the rates of obtaining reinforcers, that is, Equation 1.
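The reduction just described can be illustrated with a minimal Python sketch of the concatenated generalized matching law in logarithmic form. It assumes that each reinforcer dimension contributes an additive term equal to its sensitivity times the log of its ratio; the function name `concatenated_log_preference` and all sensitivity values are hypothetical, chosen only for illustration. Because log 1 = 0, any dimension whose ratio is 1.0 contributes nothing, leaving only the rate term.

```python
import math


def concatenated_log_preference(ratios, sensitivities, log_bias=0.0):
    """Predicted log10(B1/B2) under a concatenated generalized matching law.

    ratios: dict mapping a reinforcer dimension (e.g. "rate", "magnitude")
        to its Alternative 1 / Alternative 2 ratio.
    sensitivities: dict mapping the same dimensions to their sensitivities.
    log_bias: log10 of the bias parameter b.
    """
    total = log_bias
    for dimension, ratio in ratios.items():
        # Each dimension adds sensitivity * log(ratio); a ratio of 1.0 adds 0.
        total += sensitivities[dimension] * math.log10(ratio)
    return total


# Equal magnitudes, immediacies, and qualities (ratios of 1.0):
# only the rate term is left, which is the generalized matching law.
full = concatenated_log_preference(
    {"rate": 4.0, "magnitude": 1.0, "immediacy": 1.0, "quality": 1.0},
    {"rate": 0.8, "magnitude": 0.5, "immediacy": 0.7, "quality": 0.6},
)
rate_only = concatenated_log_preference({"rate": 4.0}, {"rate": 0.8})
print(full, rate_only)
```

The two calls return the same value, which is the sense in which the generalized matching law is a special case of the concatenated form.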

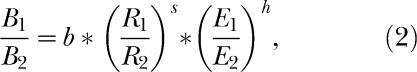

If the ratio of the rates of earning reinforcers influences preference, then that ratio could be included in the concatenated generalized matching law. An appropriate form of Equation 1 would be:

$$\frac{B_1}{B_2} = b\left(\frac{R_1}{R_2}\right)^{s}\left(\frac{E_1}{E_2}\right)^{h} \tag{2}$$

where En is the rate of earning reinforcers at Alternative n, and h is the sensitivity to the ratio of the rates of earning reinforcers. The other symbols are the same as in Equation 1.

The separate effects of the rates of earning and of obtaining reinforcers are seldom investigated. Earning reinforcers, however, influenced preference in a simplified analog of a concurrent VI variable-ratio (VR) schedule (Rachlin et al., 1988). Only time at Alternative 1 earned reinforcers, and these were obtained only by responses at Alternative 2. Time at Alternative 2 never earned reinforcers and responses at Alternative 1 never obtained reinforcers. Although responding at Alternative 1 obtained no reinforcers, from 10% to 40% of the time was spent at Alternative 1. This suggests that rates of earning reinforcers affect preference. The purpose of the following experiments was to examine further the effects of rates of earning reinforcers on preference.

EXPERIMENT 1

An operant and its associated contingencies correspond to each of the stay and switch reinforcers at an alternative. Thus there are four operants: two stay operants, one for staying at each alternative, and two switch operants, one for switching to each alternative. Each stay operant is any response at an alternative that is reinforced according to the associated stay schedule, and each switch operant is any response that is reinforced according to the associated switch schedule. A concurrent schedule can therefore be implemented with four separate VI timers, one for each operant, that operate in pairs as the stay and switch schedules when the animal is at each alternative (Houston & McNamara, 1981; MacDonall, 2000). Each pair consists of a stay schedule that arranges reinforcers for staying and responding at one alternative and a switch schedule that arranges reinforcers for switching to the other alternative. For example, in a concurrent VI 36 s VI 320 s schedule, the stay schedule at Alternative 1 is VI 36 s and the switch schedule is VI 320 s, both of which operate only while the subject is at Alternative 1. The VI 36 s arranges reinforcers for staying and responding at Alternative 1, whereas the VI 320 s arranges reinforcers for switching to Alternative 2. At Alternative 2, the stay schedule is VI 320 s and the switch schedule is VI 36 s, both of which operate only while the subject is at Alternative 2. The VI 320 s arranges reinforcers for staying and responding at Alternative 2, whereas the VI 36 s arranges reinforcers for switching to Alternative 1. Changing between alternatives exchanges the pair of schedules operating. When a COD is used, reinforcers arranged for staying and for switching would follow the first or a subsequent response after the COD had elapsed.

The previous example demonstrates that the pairs of schedules are symmetrical in the standard concurrent procedure, that is, the value of the stay schedule that operates at each alternative equals the value of the switch schedule that operates at the other alternative. When using four separate schedules, the values of the schedules may be arranged differently. For example, swapping the values of the switch schedules between alternatives produces an asymmetrical arrangement: The value of the stay schedule at each alternative equals the value of the switch schedule at the same alternative. Swapping the values of the switch schedules in the previous example produces an asymmetrical arrangement with the following pairs of schedules: A VI 36 s for staying at the first alternative and VI 36 s for switching to the second alternative, and a VI 320 s for staying at the second alternative and VI 320 s for switching back to the first alternative.

The symmetrical arrangement produces the same rates of earning reinforcers at the two alternatives. The rate of earning reinforcers at an alternative is the sum of the rates of earning stay and switch reinforcers there. The rate of earning stay reinforcers is the number of stay reinforcers delivered at an alternative divided by the time spent at that alternative, which is the time that earned those reinforcers. The rate of earning switch reinforcers is the number of reinforcers delivered for switching to an alternative divided by the time spent at the other alternative, which is the time that earned those switch reinforcers. Thus the rate of earning reinforcers at Alternative 1 is the number of reinforcers earned for staying at Alternative 1 plus the number earned for switching to Alternative 2, divided by the time spent at Alternative 1.
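These definitions can be made concrete with a small Python sketch. The class and function names are hypothetical; Alternative 1 is taken to be light and Alternative 2 noise, as in the analyses reported later. The usage plugs in the counts from the first condition listed for Rat 407 in Table 1 and assumes, for illustration only, that session time equals the total time at the two alternatives (session time cancels from the ratio of obtaining rates anyway).

```python
from dataclasses import dataclass
import math


@dataclass
class ConditionCounts:
    """Summed counts for one condition (Alternative 1 = light, 2 = noise)."""
    stay_1: int        # stay reinforcers obtained (and earned) at Alternative 1
    stay_2: int        # stay reinforcers obtained (and earned) at Alternative 2
    switch_to_1: int   # switch reinforcers obtained at 1, earned while at 2
    switch_to_2: int   # switch reinforcers obtained at 2, earned while at 1
    time_1: float      # seconds spent at Alternative 1
    time_2: float      # seconds spent at Alternative 2


def earning_rates(c: ConditionCounts) -> tuple:
    """Reinforcers earned per second spent at each alternative."""
    earned_1 = (c.stay_1 + c.switch_to_2) / c.time_1
    earned_2 = (c.stay_2 + c.switch_to_1) / c.time_2
    return earned_1, earned_2


def obtaining_rates(c: ConditionCounts, session_time: float) -> tuple:
    """Reinforcers obtained at each alternative per second of session time."""
    obtained_1 = (c.stay_1 + c.switch_to_1) / session_time
    obtained_2 = (c.stay_2 + c.switch_to_2) / session_time
    return obtained_1, obtained_2


# First condition for Rat 407 in Table 1
# (VI 320 s stay and switch in light, VI 36 s stay and switch in noise).
rat_407_first = ConditionCounts(stay_1=18, stay_2=264, switch_to_1=207,
                                switch_to_2=11, time_1=3788, time_2=10059)
# Assumption: session time approximated by total time at the alternatives.
session = rat_407_first.time_1 + rat_407_first.time_2
e1, e2 = earning_rates(rat_407_first)
o1, o2 = obtaining_rates(rat_407_first, session)
print("log earning ratio:", math.log10(e1 / e2))
print("log obtaining ratio:", math.log10(o1 / o2))
```

In this condition, 225 reinforcers were obtained in light and 275 in noise, whereas 29 were earned during 3,788 s in light and 471 during 10,059 s in noise, so the earning ratio departs much further from 1 than the obtaining ratio.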

The asymmetrical arrangement, however, produces different rates of earning reinforcers at the alternatives. For example, at the alternative with VI 36 s for staying and VI 36 s for switching, about 18 reinforcers, on average, are earned for every 320 s spent there: About nine are earned from the VI 36 s for staying, plus about nine from the VI 36 s for switching. At the alternative with VI 320 s for staying and VI 320 s for switching, two reinforcers, on average, are earned for every 320 s spent there: One is earned from the VI 320 s for staying and one from the VI 320 s for switching.

If the allocation of behavior between alternatives is sensitive to rates of earning reinforcers, then the difference in the rates of earning reinforcers under the asymmetrical arrangement will affect preference: There will be a preference for the alternative associated with the higher rate of earning reinforcers. This preference would occur even though, in the asymmetrical arrangement, the rates of obtaining reinforcers at the two alternatives are the same. Suppose, for example, that Alternative 1 has VI 36 s for staying and VI 36 s for switching, that Alternative 2 has VI 320 s for both, and that equal time (say, 320 s) is spent at each alternative. At Alternative 1, about nine reinforcers, on average, will be obtained from the VI 36 s for staying, and about nine reinforcers earned there from the VI 36 s for switching will be obtained at Alternative 2. At Alternative 2, one reinforcer will be obtained from the VI 320 s for staying, and one reinforcer earned there from the VI 320 s for switching will be obtained at Alternative 1. Thus, on average, a total of about 10 reinforcers will be obtained at each alternative.
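The arithmetic of the two arrangements can be checked with a short Python sketch. It assumes equal time at each alternative and that every arranged reinforcer is eventually collected, so the expected count from a VI schedule is simply time divided by the schedule's mean; the function names are hypothetical. The asymmetrical arrangement is built, as described above, by exchanging the values of the two switch schedules.

```python
def expected_counts(stay, switch, seconds_at_each=320.0):
    """Expected reinforcers earned at, and obtained at, each alternative.

    stay[a]: mean (s) of the VI for staying and responding at alternative a.
    switch[a]: mean (s) of the VI that runs while at a and arranges
        reinforcers for switching to the other alternative.
    Assumes equal time at each alternative and that every arranged
    reinforcer is collected, so the expected count is time / VI mean.
    """
    other = {1: 2, 2: 1}
    earned = {a: seconds_at_each / stay[a] + seconds_at_each / switch[a]
              for a in (1, 2)}
    # Switch reinforcers earned at the other alternative are obtained here.
    obtained = {a: seconds_at_each / stay[a] + seconds_at_each / switch[other[a]]
                for a in (1, 2)}
    return earned, obtained


def swap_switch_values(switch):
    """Exchange the switch-schedule values to make an asymmetrical arrangement."""
    return {1: switch[2], 2: switch[1]}


stay = {1: 36.0, 2: 320.0}
symmetrical_switch = {1: 320.0, 2: 36.0}   # concurrent VI 36 s VI 320 s
asymmetrical_switch = swap_switch_values(symmetrical_switch)

sym_earned, sym_obtained = expected_counts(stay, symmetrical_switch)
asym_earned, asym_obtained = expected_counts(stay, asymmetrical_switch)
print("symmetrical  earned:", sym_earned, "obtained:", sym_obtained)
print("asymmetrical earned:", asym_earned, "obtained:", asym_obtained)
```

With these values the symmetrical arrangement yields equal expected earned counts (about 9.9 at each alternative) but unequal obtained counts (about 17.8 vs. 2), whereas the asymmetrical arrangement reverses the pattern (about 17.8 vs. 2 earned, about 9.9 obtained at each). The text's figures of nine and 18 round 320/36 ≈ 8.9.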

The analysis of concurrent schedules into pairs of stay and switch schedules applies to the Findley (1958) procedure as well as to the usual two-manipulandum choice procedure. In the Findley procedure, responses at one (changeover) manipulandum switch alternatives, which are signaled by different stimuli (lights, tones, etc.), whereas responses at a second (main) manipulandum earn and obtain the stay and switch reinforcers. In the present experiment, preference was examined on concurrent VI VI schedules using a Findley procedure. In half of the conditions, symmetrical schedules were arranged and in half of the conditions asymmetrical schedules were arranged. In this way, the effects of rates of earning reinforcers at each alternative were investigated. A COD was not used because this would modify the contingency for obtaining switch reinforcers. The generalized matching law should describe the data from the symmetrical conditions, which would verify that the four-schedule procedure used here produces data consistent with the generalized matching law. If the rates of earning reinforcers do not affect preference, the generalized matching law also should describe data from the asymmetrical arrangement. If the generalized matching law describes data from the symmetrical conditions but not those from the asymmetrical conditions, then rates of earning reinforcers need to be included in the list of choice-affecting variables in the concatenated generalized matching law.

Method

Subjects

The subjects were 6 naive female Sprague-Dawley rats obtained from Hilltop Lab Animals (Scottdale, PA) and maintained at 85% of their free-feeding weights. They were approximately 100 days old when the experiment began and were housed individually in a temperature-controlled colony room on a 14:10 hr light/dark cycle with free access to water in their home cages.

Apparatus

Six operant conditioning chambers were used. Four chambers were approximately 225 mm wide and 195 mm high; three of these were 235 mm long, whereas the fourth was 350 mm long. Each chamber was located in a light- and sound-controlled box. The 50-mm square opening for the food cup was centered horizontally on one 225-mm by 195-mm wall, 20 mm above the floor. Two response levers (Model G6312, R. Gerbrands Co.), 45 mm long by 13 mm thick, protruded 15 mm into the chamber. The centers of the levers were 60 mm to the left or right of the center of the food cup and 50 mm above the floor. Each lever required a force of approximately 0.3 N to operate. A Gerbrands feeder (Model G5120), located behind the wall containing the food cup, dispensed 45-mg food pellets (Formula A/1, P. J. Noyes Co.), which were 85% Purina® Rodent Chow. The other two chambers were 305 mm wide, 270 mm high, and 250 mm long. On one 305-mm by 270-mm wall were three response levers (Model G6312), 95 mm above the floor. One lever was centered on the wall, and the centers of the other two were 90 mm to either side of the center of the middle lever. Only the two outside levers were used in this experiment. Centered horizontally on the opposite wall, 35 mm above the floor, was a 50-mm square opening to the food cup. The food cup was located on the opposite wall so that it was approximately equidistant from each of the three levers, which was important in experiments examining three-alternative choice. A Gerbrands feeder, located behind the food cup, dispensed 45-mg food pellets (Formula A/1). A 24-V DC stimulus light was centered approximately 75 mm above each lever. All chambers were illuminated during sessions by a pair of houselights mounted on the top center of the chamber. White noise was presented through a speaker centered between the houselights. An IBM®-compatible computer and MED-PC® software and hardware (MED Associates Inc.) controlled the experimental events and recorded responses.

Procedure

All conditions used a changeover-lever procedure (Findley, 1958) to expose rats to concurrent VI VI schedules. The alternative in effect at the beginning of a session, signaled by either a light or white noise, was randomly determined. One pair of stay and switch schedules arranged stay and switch reinforcers during light, and a different pair arranged reinforcers during noise (Table 1). Pressing the changeover (right) lever switched the stimuli and the associated pair of schedules in effect. During either light or noise, when a stay schedule arranged a reinforcer, that schedule stopped until a press of the main (left) lever delivered that reinforcer. If the rat switched alternatives before the reinforcer was delivered, that reinforcer was held until the rat switched back to that alternative, and the first press at the main lever then delivered it. Similarly, during either light or noise, when a switch schedule arranged a reinforcer, that schedule stopped; it resumed when the rat returned to that alternative, as in standard concurrent VI VI schedules. Switch reinforcers, when arranged, were delivered by the first response at the main lever after pressing the changeover lever. Thus, when a switch reinforcer was arranged, the rat was required to press the changeover lever to change alternatives and then to press the main lever to deliver the switch reinforcer. There was no COD. If both a stay and a switch reinforcer were arranged, the first response delivered the switch reinforcer and the next response delivered the stay reinforcer. For this to occur, the stay reinforcer had to be arranged during the brief time between the last press at the main lever and the changeover, and then a reinforcer for switching back to the first alternative also had to be arranged during the visit to the other alternative. It was rare for both reinforcers to be arranged at the same time.
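The bookkeeping of this procedure can be sketched as a small state machine in Python. This is a hypothetical illustration, not the MED-PC program used in the experiment. Time is advanced explicitly, and, as an assumption not specified in the text, a switch reinforcer carried to an alternative waits there (as a count) until a main-lever press collects it. The usage reproduces the rare case described above, in which a held stay reinforcer and a switch reinforcer are both waiting.

```python
from typing import Callable, Dict, Optional, Tuple

Key = Tuple[str, str]  # (alternative, "stay" or "switch")


def other(alternative: str) -> str:
    return "noise" if alternative == "light" else "light"


class ChangeoverLeverProcedure:
    """Hypothetical sketch of four VI timers operating as stay/switch pairs.

    next_interval maps each (alternative, kind) to a function returning the
    next interval in seconds. Timers run only while the rat is at their
    alternative, and an armed timer stops until its reinforcer is delivered.
    """

    def __init__(self, next_interval: Dict[Key, Callable[[], float]],
                 start: str = "light") -> None:
        self.next_interval = next_interval
        self.current = start
        self.remaining = {key: draw() for key, draw in next_interval.items()}
        self.armed = {key: False for key in next_interval}
        # Assumption: switch reinforcers waiting at each alternative are counted.
        self.switch_waiting = {"light": 0, "noise": 0}

    def advance(self, seconds: float) -> None:
        """Let time pass at the current alternative."""
        for kind in ("stay", "switch"):
            key = (self.current, kind)
            if not self.armed[key]:
                self.remaining[key] -= seconds
                if self.remaining[key] <= 0:
                    self.armed[key] = True

    def changeover(self) -> None:
        """Changeover-lever press: exchange the pair of schedules in effect."""
        key = (self.current, "switch")
        if self.armed[key]:
            # The switch reinforcer becomes collectable at the other
            # alternative; this timer, reset to a fresh interval, resumes
            # only when the rat returns here.
            self.switch_waiting[other(self.current)] += 1
            self.armed[key] = False
            self.remaining[key] = self.next_interval[key]()
        self.current = other(self.current)

    def press_main(self) -> Optional[str]:
        """Main-lever press: a waiting switch reinforcer is delivered first."""
        if self.switch_waiting[self.current] > 0:
            self.switch_waiting[self.current] -= 1
            return "switch"
        key = (self.current, "stay")
        if self.armed[key]:
            self.armed[key] = False
            self.remaining[key] = self.next_interval[key]()
            return "stay"
        return None


# Fixed (non-random) intervals, chosen only to make the sequence predictable.
fixed = {("light", "stay"): 100.0, ("light", "switch"): 5.0,
         ("noise", "stay"): 3.0, ("noise", "switch"): 100.0}
proc = ChangeoverLeverProcedure({k: (lambda v=v: v) for k, v in fixed.items()})
proc.changeover()   # light -> noise
proc.advance(3.0)   # stay reinforcer arranged in noise
proc.changeover()   # noise -> light; the noise stay reinforcer is held
proc.advance(5.0)   # switch-to-noise reinforcer arranged in light
proc.changeover()   # light -> noise
deliveries = [proc.press_main() for _ in range(3)]
print(deliveries)   # ['switch', 'stay', None]
```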

Table 1. For each rat in Experiment 1, the sequence of conditions, the number of sessions in each condition, and the values of the stay and switch schedules of reinforcement at each alternative for the symmetrical (S) and asymmetrical (A) conditions are shown. Also shown are the sums over the last five sessions in each condition of responses at each alternative, total time spent at each alternative, the total number of stay and switch reinforcers obtained at each alternative, and the total number of changeovers to the other alternative.

| Arrangement | Sessions | VI for stay in light (s) | VI for switch to noise (s) | VI for stay in noise (s) | VI for switch to light (s) | Responses in light | Responses in noise | Time in light (s) | Time in noise (s) | Reinforcers from stay in light | Reinforcers from switch to noise | Reinforcers from stay in noise | Reinforcers from switch to light | Changeovers to noise | Changeovers to light |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Rat 407 | |||||||||||||||
| A | 42 | 320 | 320 | 36 | 36 | 1,425 | 6,187 | 3,788 | 10,059 | 18 | 11 | 264 | 207 | 627 | 625 |
| A | 27 | 43 | 43 | 128 | 128 | 4,434 | 2,986 | 9,209 | 7,110 | 192 | 186 | 61 | 61 | 1,314 | 1,312 |
| S | 11 | 64 | 64 | 64 | 64 | 3,442 | 3,558 | 8,672 | 8,550 | 124 | 130 | 117 | 129 | 1,416 | 1,413 |
| A | 23 | 128 | 128 | 43 | 43 | 3,162 | 4,708 | 7,830 | 8,991 | 68 | 56 | 191 | 185 | 1,072 | 1,069 |
| A | 15 | 36 | 36 | 320 | 320 | 4,329 | 2,991 | 9,107 | 6,755 | 250 | 203 | 29 | 18 | 1,492 | 1,489 |
| S | 18 | 36 | 320 | 320 | 36 | 4,993 | 2,064 | 13,220 | 4,142 | 334 | 48 | 18 | 100 | 1,104 | 1,103 |
| S | 35 | 43 | 128 | 128 | 43 | 4,680 | 3,084 | 11,760 | 5,788 | 261 | 77 | 35 | 127 | 1,060 | 1,060 |
| S | 11 | 64 | 64 | 64 | 64 | 3,356 | 3,616 | 8,894 | 7,988 | 137 | 125 | 134 | 104 | 1,626 | 1,623 |
| S | 22 | 128 | 43 | 43 | 128 | 2,958 | 3,771 | 7,360 | 9,777 | 56 | 167 | 204 | 73 | 1,608 | 1,604 |
| S | 21 | 320 | 36 | 36 | 320 | 2,466 | 3,697 | 6,127 | 10,919 | 22 | 148 | 290 | 40 | 1,584 | 1,584 |
| Rat 408 | |||||||||||||||
| A | 22 | 43 | 43 | 128 | 128 | 4,573 | 2,695 | 9,530 | 6,221 | 213 | 208 | 34 | 45 | 1,205 | 1,208 |
| A | 20 | 320 | 320 | 36 | 36 | 1,782 | 6,117 | 3,717 | 9,733 | 16 | 6 | 289 | 189 | 689 | 690 |
| A | 32 | 36 | 36 | 320 | 320 | 6,565 | 1,947 | 9,594 | 4,054 | 268 | 208 | 10 | 14 | 861 | 864 |
| S | 22 | 64 | 64 | 64 | 64 | 5,472 | 3,278 | 10,792 | 6,939 | 164 | 138 | 102 | 96 | 1,033 | 1,036 |
| A | 24 | 128 | 128 | 43 | 43 | 3,389 | 5,900 | 7,044 | 9,255 | 52 | 44 | 221 | 183 | 1,350 | 1,354 |
| S | 25 | 43 | 128 | 128 | 43 | 4,273 | 3,135 | 10,432 | 6,807 | 228 | 68 | 56 | 148 | 1,196 | 1,201 |
| S | 26 | 320 | 36 | 36 | 320 | 3,563 | 8,195 | 5,434 | 11,523 | 20 | 126 | 321 | 33 | 1,240 | 1,240 |
| S | 26 | 36 | 320 | 320 | 36 | 9,294 | 3,055 | 12,810 | 4,372 | 331 | 30 | 24 | 115 | 700 | 705 |
| S | 19 | 128 | 43 | 43 | 128 | 3,868 | 7,521 | 6,770 | 10,305 | 42 | 150 | 230 | 78 | 1,243 | 1,245 |
| S | 19 | 64 | 64 | 64 | 64 | 4,666 | 6,115 | 8,114 | 9,234 | 128 | 86 | 155 | 131 | 1,073 | 1,076 |
| Rat 409 | |||||||||||||||
| S | 32 | 320 | 36 | 36 | 320 | 1,806 | 8,201 | 2,597 | 14,171 | 5 | 53 | 390 | 52 | 999 | 995 |
| S | 19 | 43 | 128 | 128 | 43 | 5,325 | 3,823 | 10,993 | 6,225 | 252 | 71 | 59 | 118 | 1,383 | 1,386 |
| S | 10 | 64 | 64 | 64 | 64 | 4,914 | 4,469 | 9,068 | 7,807 | 149 | 129 | 114 | 108 | 1,591 | 1,589 |
| A | 26 | 128 | 128 | 43 | 43 | 3,401 | 4,012 | 8,938 | 8,475 | 58 | 73 | 201 | 168 | 1,507 | 1,504 |
| A | 41 | 36 | 36 | 320 | 320 | 5,088 | 3,554 | 8,868 | 7,090 | 236 | 219 | 17 | 28 | 1,426 | 1,426 |
| S | 18 | 64 | 64 | 64 | 64 | 4,542 | 3,534 | 9,216 | 7,812 | 141 | 131 | 102 | 126 | 1,368 | 1,369 |
| A | 23 | 43 | 43 | 128 | 128 | 4,984 | 3,424 | 9,419 | 6,499 | 208 | 196 | 54 | 42 | 1,324 | 1,322 |
| A | 33 | 320 | 320 | 36 | 36 | 3,901 | 4,470 | 7,865 | 9,315 | 14 | 34 | 260 | 192 | 1,064 | 1,062 |
| S | 25 | 128 | 43 | 43 | 128 | 2,823 | 5,368 | 5,885 | 11,640 | 46 | 122 | 253 | 79 | 1,260 | 1,258 |
| Rat 410 | |||||||||||||||
| S | 21 | 43 | 128 | 128 | 43 | 4,121 | 1,947 | 12,051 | 4,934 | 261 | 96 | 33 | 110 | 1,301 | 1,300 |
| S | 29 | 320 | 36 | 36 | 320 | 2,719 | 5,843 | 4,271 | 12,667 | 22 | 104 | 337 | 37 | 1,184 | 1,180 |
| S | 19 | 36 | 320 | 320 | 36 | 5,257 | 2,508 | 12,320 | 5,001 | 314 | 45 | 13 | 128 | 1,158 | 1,158 |
| S | 13 | 64 | 64 | 64 | 64 | 3,074 | 2,542 | 9,240 | 8,049 | 135 | 142 | 107 | 116 | 1,126 | 1,123 |
| A | 15 | 36 | 36 | 320 | 320 | 2,227 | 1,114 | 10,427 | 4,056 | 270 | 215 | 9 | 6 | 615 | 611 |
| A | 41 | 128 | 128 | 43 | 43 | 1,554 | 2,239 | 5,101 | 10,927 | 45 | 31 | 243 | 181 | 591 | 590 |
| A | 28 | 43 | 43 | 128 | 128 | 2,081 | 1,610 | 9,908 | 7,221 | 200 | 195 | 48 | 57 | 608 | 606 |
| S | 20 | 64 | 64 | 64 | 64 | 2,078 | 2,115 | 8,554 | 9,477 | 114 | 110 | 158 | 118 | 574 | 572 |
| A | 29 | 320 | 320 | 36 | 36 | 1,357 | 1,754 | 5,051 | 10,498 | 7 | 20 | 247 | 226 | 641 | 637 |
| S | 19 | 128 | 43 | 43 | 128 | 1,313 | 2,311 | 4,874 | 13,621 | 33 | 103 | 274 | 90 | 567 | 566 |
| Rat 411 | |||||||||||||||
| A | 34 | 36 | 36 | 320 | 320 | 3,690 | 2,093 | 9,688 | 4,680 | 243 | 223 | 20 | 14 | 1,140 | 1,140 |
| A | 45 | 128 | 128 | 43 | 43 | 2,249 | 2,856 | 6,642 | 10,001 | 47 | 38 | 227 | 188 | 1,109 | 1,111 |
| A | 14 | 320 | 320 | 36 | 36 | 1,864 | 3,334 | 5,459 | 9,834 | 13 | 6 | 256 | 225 | 973 | 975 |
| S | 17 | 64 | 64 | 64 | 64 | 2,799 | 4,235 | 6,123 | 11,185 | 94 | 70 | 182 | 154 | 1,551 | 1,555 |
| A | 27 | 43 | 43 | 128 | 128 | 2,369 | 2,394 | 9,380 | 7,955 | 208 | 173 | 62 | 57 | 1,427 | 1,431 |
| S | 28 | 128 | 43 | 43 | 128 | 3,202 | 3,363 | 9,465 | 7,810 | 69 | 199 | 162 | 70 | 1,661 | 1,659 |
| S | 16 | 320 | 36 | 36 | 320 | 2,739 | 3,437 | 8,658 | 9,368 | 28 | 183 | 259 | 30 | 1,654 | 1,654 |
| S | 10 | 64 | 64 | 64 | 64 | 3,449 | 3,551 | 8,875 | 8,291 | 127 | 131 | 136 | 106 | 1,609 | 1,612 |
| S | 24 | 36 | 320 | 320 | 36 | 4,278 | 2,426 | 13,708 | 3,791 | 359 | 31 | 16 | 94 | 1,516 | 1,520 |
| Rat 412 | |||||||||||||||
| S | 32 | 36 | 320 | 320 | 36 | 4,008 | 1,502 | 13,174 | 4,534 | 320 | 51 | 13 | 116 | 1,004 | 1,005 |
| S | 24 | 128 | 43 | 43 | 128 | 2,216 | 3,142 | 6,221 | 11,074 | 67 | 128 | 229 | 76 | 1,305 | 1,301 |
| S | 15 | 320 | 36 | 36 | 320 | 2,053 | 4,102 | 3,871 | 13,843 | 19 | 98 | 346 | 37 | 1,363 | 1,358 |
| S | 15 | 64 | 64 | 64 | 64 | 3,184 | 3,179 | 8,579 | 8,578 | 145 | 125 | 109 | 121 | 1,504 | 1,503 |
| A | 26 | 320 | 320 | 36 | 36 | 2,964 | 3,481 | 7,551 | 8,983 | 33 | 29 | 219 | 219 | 1,580 | 1,577 |
| S | 19 | 64 | 64 | 64 | 64 | 2,563 | 3,485 | 6,807 | 10,530 | 106 | 91 | 165 | 138 | 1,371 | 1,371 |
| A | 44 | 36 | 36 | 128 | 128 | 3,088 | 2,161 | 8,894 | 5,994 | 221 | 185 | 53 | 41 | 853 | 852 |
| S | 15 | 43 | 128 | 128 | 43 | 4,789 | 2,601 | 12,984 | 4,278 | 284 | 91 | 33 | 92 | 1,681 | 1,680 |
| A | 27 | 43 | 43 | 128 | 128 | 4,178 | 2,219 | 10,426 | 4,778 | 225 | 179 | 51 | 45 | 1,126 | 1,123 |

Conditions differed according to the arrangement of stay and switch schedules at the alternatives. During the symmetrical conditions, the value of the stay schedule that operated at each alternative equaled the value of the switch schedule that operated at the other alternative. The values of the schedules were selected to provide a wide range of log ratios of rates of obtaining reinforcers. During the asymmetrical conditions, the value of the stay schedule that operated at each alternative equaled the value of the switch schedule that operated at the same alternative. The operation of the schedules and arranging of reinforcers was identical in the symmetrical and asymmetrical arrangements.

Each VI schedule consisted of 10 intervals derived by the method of Fleshler and Hoffman (1962), which gives an approximately exponential distribution of intervals, and the intervals were randomly selected without replacement. For the symmetrical arrangement, the stay and switch schedules in each condition were selected to maintain an approximately constant overall rate of reinforcement of one per 32 s. This was accomplished by selecting values of the stay and switch schedules whose reciprocals summed approximately to 1/32 (see Herrnstein, 1961). The values of the stay and switch schedules for the asymmetrical arrangement were obtained by exchanging the values of the switch schedules in the symmetrical arrangement.
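The interval construction can be sketched in Python. The formula below is the Fleshler and Hoffman (1962) progression as it is commonly stated, t_n = T[1 + ln N + (N - n) ln(N - n) - (N - n + 1) ln(N - n + 1)] for n = 1, ..., N, with 0 ln 0 taken as 0; the text does not give it, so treat it as an assumption. "Randomly selected without replacement" is interpreted here, also as an assumption, as reshuffling the 10 intervals after each complete pass. The function names are hypothetical.

```python
import math
import random


def fleshler_hoffman(mean: float, n: int = 10) -> list:
    """Fleshler & Hoffman (1962) progression of n intervals with the given mean."""
    def x_log_x(k: int) -> float:
        return k * math.log(k) if k > 0 else 0.0  # treat 0 * log(0) as 0

    return [mean * (1 + math.log(n) + x_log_x(n - i) - x_log_x(n - i + 1))
            for i in range(1, n + 1)]


def without_replacement(intervals, rng=random):
    """Yield the intervals in random order, using each once per pass."""
    while True:
        order = list(intervals)
        rng.shuffle(order)
        yield from order


vi_36 = fleshler_hoffman(36.0)
print([round(t, 1) for t in vi_36], sum(vi_36) / len(vi_36))

draws = without_replacement(vi_36, random.Random(1))
first_pass = [next(draws) for _ in range(10)]

# Overall programmed rate for each stay/switch pair: 1/stay + 1/switch.
for stay, switch in [(36, 320), (43, 128), (64, 64)]:
    print(stay, switch, "-> one reinforcer per",
          round(1 / (1 / stay + 1 / switch), 2), "s")
```

The last loop shows why the overall rate is only approximately one per 32 s: the pairs used give combined means of about 32.4 s, 32.2 s, and exactly 32 s.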

Because the rats were naive, they were first trained to approach the food cup at the sound of the feeder operating. Then their behavior was shaped to press the main lever. Pressing the changeover lever emerged when responses at the main lever were reinforced intermittently. The rats then were exposed to the first condition in Table 1, which shows, for each rat, the sequence of conditions, the values of the schedules in each condition, and the number of sessions that each condition was in effect.

To identify possible order effects, 3 rats first were exposed to the symmetrical conditions and the other 3 to the asymmetrical conditions. A condition remained in effect for at least 10 sessions and until visual inspection showed there were no apparent upward or downward trends in the logs of the ratios of the rates of obtaining reinforcers, of responses, and of times for five consecutive sessions. Sessions were typically conducted 7 days a week and ended after the first changeover response following the 100th reinforcer.

Results and Discussion

All the results reported here are based on the sums of the data from the last five sessions of each condition. Table 1 presents these sums of presses at the main lever in the light and noise alternatives, total time (in seconds) spent at the light and noise alternatives, reinforcers obtained for staying at and switching to the light and noise alternatives, and total changeovers to the noise and to the light alternatives. For completeness, Table 1 includes the time allocation data although only the results of analyses of response allocations are shown.

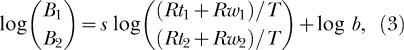

The generalized matching law described the data from the symmetrical conditions. The rate of obtaining reinforcers at an alternative is the sum of the reinforcers obtained for staying plus the reinforcers obtained for switching to that alternative divided by the session time. When rates of obtaining stay and switch reinforcers are explicitly noted, the generalized matching law may be expressed in logarithmic form as:

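$$\log\left(\frac{B_1}{B_2}\right) = s \log\left[\frac{(R_{t1} + R_{w1})/T}{(R_{t2} + R_{w2})/T}\right] + \log b \tag{3}$$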

where Rt1 and Rt2 represent the number of stay reinforcers obtained at Alternatives 1 and 2, respectively. Rw1 and Rw2 represent the number of switch reinforcers obtained at Alternatives 1 and 2, respectively, which were earned at Alternatives 2 (noise) and 1 (light), respectively. T is the session time, which of course divides out of Equation 3. It is important to note that in all equations the subscripts refer to the alternative at which reinforcers were obtained. The other symbols are the same as in Equation 1. This equation describes a straight line and can be fitted to the data using least-squares linear regression. The ratio of the rates of obtaining reinforcers was computed as follows. The numerator was the sum of the stay reinforcers obtained (and earned) by a response in light plus the switch reinforcers obtained by a response in light that were earned in noise, divided by session time. The denominator was the sum of the stay reinforcers obtained (and earned) by a response in noise plus the switch reinforcers obtained by a response in noise that were earned in light, divided by session time. This analysis is the standard application of the generalized matching law to concurrent choice, with the stay and switch reinforcers explicitly noted.
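The least-squares fit of Equation 3 can be sketched in plain Python without external libraries. The helper names `log_obtained_ratio` and `fit_line` are hypothetical, and base-10 logs are assumed because the text does not state the base. The usage applies the fit to Rat 407's symmetrical conditions, copied from Table 1; it is an illustration of the computation, not a reproduction of the reported analysis.

```python
import math


def log_obtained_ratio(stay_1, switch_to_1, stay_2, switch_to_2):
    """log10 of the ratio of obtaining rates; session time T cancels."""
    return math.log10((stay_1 + switch_to_1) / (stay_2 + switch_to_2))


def fit_line(x, y):
    """Ordinary least squares for y = s * x + log_b; returns (s, log_b, r_squared)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s = sxy / sxx
    log_b = mean_y - s * mean_x
    ss_res = sum((yi - (s * xi + log_b)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return s, log_b, 1 - ss_res / ss_tot


# Symmetrical conditions for Rat 407 (Table 1):
# (responses in light, responses in noise, stay in light, switch to noise,
#  stay in noise, switch to light)
rat_407_symmetrical = [
    (3442, 3558, 124, 130, 117, 129),
    (4993, 2064, 334, 48, 18, 100),
    (4680, 3084, 261, 77, 35, 127),
    (3356, 3616, 137, 125, 134, 104),
    (2958, 3771, 56, 167, 204, 73),
    (2466, 3697, 22, 148, 290, 40),
]
x = [log_obtained_ratio(sl, tl, sn, tn)
     for _, _, sl, tn, sn, tl in rat_407_symmetrical]
y = [math.log10(bl / bn) for bl, bn, *_ in rat_407_symmetrical]
s, log_b, r_squared = fit_line(x, y)
print(f"s = {s:.2f}, log b = {log_b:.2f}, r^2 = {r_squared:.2f}")
```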

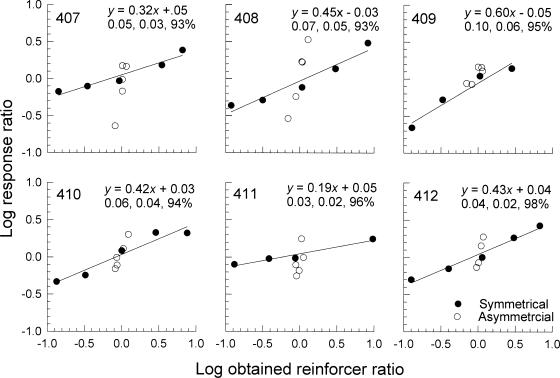

Figure 1 shows that for each condition, the logs of the response ratios increased with increases in the logs of the ratios of the rates of obtaining reinforcers. For the symmetrical conditions, the generalized matching law (Equation 3) described the logs of the response ratios (r² > .93). Undermatching, that is, behavior ratios changing less than the reinforcer ratios, was consistently found, probably because a COD was not used. Log b did not consistently differ from zero, so there was no consistent bias. For the asymmetrical conditions, the generalized matching law did not describe the results; inspection of Figure 1 shows that the data deviated systematically from the path of the data described by the symmetrical conditions. The data are aligned almost vertically and appear to be described by a sensitivity (s in Equation 3) considerably greater than 1 (i.e., overmatching).

Fig. 1. The logs of the response ratios plotted as a function of the logs of the ratios of the rates of obtaining reinforcers in Experiment 1. Also shown is the best-fitting line to data from the symmetrical conditions, using Equation 3 and least-squares linear regression, and the resulting equation for that line; the standard errors of sensitivity and bias and percentage of the variance accounted for by the equation are below it.

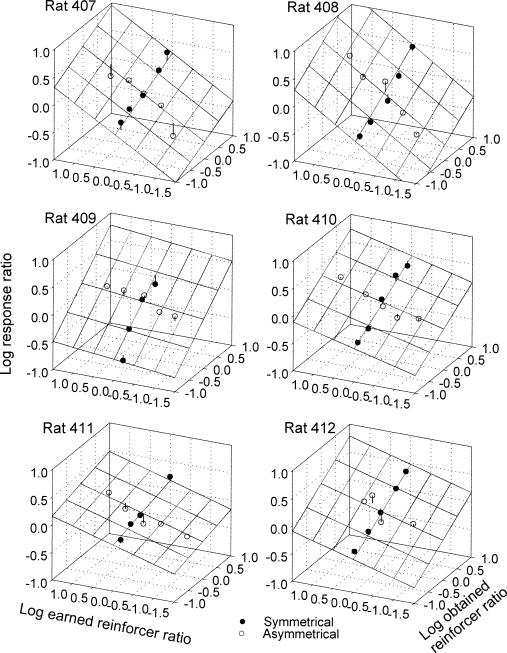

Figure 2 plots log response ratios as a joint function of the ratios of the rates of earning reinforcers and of obtaining reinforcers, and it shows that log response ratios increased as a joint function of these two variables. This figure also shows that the symmetrical conditions produced variations in the ratios of the rates of obtaining reinforcers but little change in the ratios of the rates of earning reinforcers. In contrast, the asymmetrical conditions produced different ratios of the rates of earning reinforcers but little change in the ratios of the rates of obtaining reinforcers.

Fig. 2. The logs of the response ratios plotted as a joint function of the logs of the ratios of the rates of obtaining reinforcers and the logs of the ratios of the rates of earning reinforcers in Experiment 1. The tilting plane shows the best-fitting plane using Equation 4 and the data from the symmetrical conditions combined with the data from the asymmetrical conditions. The vertical lines show the difference between the obtained and predicted data from each condition. When the residuals are not visible they are smaller than the data point.

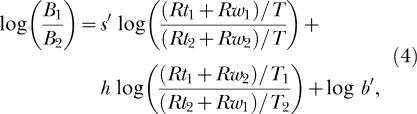

Explicitly noting the stay and switch reinforcers in Equation 2, and expressing the resulting equation in logarithmic form produces:

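$$\log\left(\frac{B_1}{B_2}\right) = s'\log\left(\frac{(R_{t1}+R_{w1})/T}{(R_{t2}+R_{w2})/T}\right) + h\log\left(\frac{(R_{t1}+R_{w2})/T_1}{(R_{t2}+R_{w1})/T_2}\right) + \log b' \tag{4}$$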

where Tn represents the time spent at Alternative n, h is the sensitivity to the ratio of the rates of earning reinforcers, and parameters s′ and b′ correspond to parameters s and b in Equation 1; the primes are used to distinguish them. The other symbols are the same as in the previous equations. Equation 4 plots as a flat plane and may be fitted to the data using least-squares multiple linear regression. The results of regressions using Equation 4 are shown in Table 2. The descriptions of the response ratios were good (r² ≥ .87) for Rats 408, 409, 410, and 412 but poorer for Rats 407 and 411 (r² = .79 and .75, respectively).
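Equation 4 is linear in its two log predictors, so ordinary least squares can fit it. Below is a minimal pure-Python sketch that assumes no statistics library. The helper names and the synthetic data are hypothetical. The sketch returns only point estimates and does not compute the standard errors reported in Table 2. Following the text, subscripts name the alternative where a reinforcer was obtained, so switch reinforcers earned at Alternative 1 appear in Rw2.

```python
import math


def equation4_predictors(stay_1, switch_1, stay_2, switch_2, time_1, time_2):
    """Return (x_obtain, x_earn), the two log10 predictors of Equation 4."""
    x_obtain = math.log10((stay_1 + switch_1) / (stay_2 + switch_2))
    earn_rate_1 = (stay_1 + switch_2) / time_1  # earned while at Alternative 1
    earn_rate_2 = (stay_2 + switch_1) / time_2  # earned while at Alternative 2
    return x_obtain, math.log10(earn_rate_1 / earn_rate_2)


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    v = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        v[r] = (m[r][3] - sum(m[r][c] * v[c] for c in range(r + 1, 3))) / m[r][r]
    return v


def fit_equation4(x_obtain, x_earn, y):
    """Least-squares fit of y = s' * x_obtain + h * x_earn + log b'."""
    cols = [x_obtain, x_earn, [1.0] * len(y)]
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(p * q for p, q in zip(ci, y)) for ci in cols]
    s_prime, h, log_b_prime = solve3(xtx, xty)  # normal equations
    return s_prime, h, log_b_prime


# Synthetic data generated from s' = 0.45, h = 0.20, log b' = 0.03 (hypothetical values).
x_obtain = [-1.0, -0.5, 0.0, 0.5, 1.0, 0.2]
x_earn = [0.3, -0.2, 0.5, -0.4, 0.1, 0.7]
y = [0.45 * a + 0.20 * b + 0.03 for a, b in zip(x_obtain, x_earn)]
print(fit_equation4(x_obtain, x_earn, y))  # approximately (0.45, 0.20, 0.03)
```

Writing the normal equations out keeps the sketch dependency-free. A library least-squares routine would do the same job.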

Table 2. Results of least-squares multiple linear regressions for response allocations using Equation 4 for data from the symmetrical conditions combined with data from the asymmetrical conditions in Experiment 1.

| Rat | s′ | SE | h | SE | log b′ | SE | r² | df |
|---|---|---|---|---|---|---|---|---|
| 407 | 0.32 | 0.11 | 0.44 | 0.11 | −0.03 | 0.05 | .79 | 8 |
| 408 | 0.45 | 0.07 | 0.49 | 0.07 | 0.02 | 0.03 | .93 | 8 |
| 409 | 0.64 | 0.10 | 0.08 | 0.07 | 0.02 | 0.04 | .90 | 7 |
| 410 | 0.43 | 0.05 | 0.19 | 0.05 | 0.03 | 0.02 | .93 | 8 |
| 411 | 0.16 | 0.07 | 0.21 | 0.06 | 0.00 | 0.03 | .75 | 7 |
| 412 | 0.44 | 0.07 | 0.21 | 0.11 | 0.04 | 0.03 | .87 | 7 |

The parameter estimates in Table 2 show that both s′ and h are needed to describe the data. Values of s′ were more than two standard errors greater than zero in all six comparisons, and values of h were more than two standard errors greater than zero in five of the six comparisons. Thus neither parameter can be set to zero. For Rats 409 and 410, the two parameters differed from each other by more than two standard errors. It is therefore unlikely that s′ and h could be taken as equal, and both must be retained in Equation 4.

EXPERIMENT 2

Experiment 2 replicated Experiment 1 using a different method of varying the ratio of the rates of earning reinforcers: a two-manipulandum concurrent procedure instead of a Findley procedure, and random-interval (RI) rather than VI schedules. In the weighted conditions of Experiment 2, the rate of earning reinforcers was always greater at one alternative. This was accomplished in three steps: a pair of stay and switch schedules was assigned to one alternative, the values of the schedules in the corresponding symmetrical pair were multiplied by a constant, and this multiplied pair was assigned to the other alternative. Consider the schedules in the first condition for Rat 476. The pair of schedules at the left alternative was RI 16.7 s for staying and RI 50 s for switching to the right alternative. The symmetrical pair would be RI 50 s for staying at the right alternative and RI 16.7 s for switching to the left alternative. The values of this pair were multiplied by 5, producing RI 250 s for staying at the right alternative and RI 83.5 s for switching to the left alternative. The pair of schedules at the left alternative was not changed. Across weighted conditions, the multiplier was constant for each rat, but the values in the initial pair of schedules varied. As in the previous experiment, several conditions used the symmetrical arrangement and several conditions used the weighted arrangement.
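The construction of a weighted pair can be expressed as a single function. This is an illustrative sketch only; the function name is hypothetical. The values are mean intervals in seconds. The usage line reproduces the first condition for Rat 476 described above.

```python
def weighted_pair(stay_here, switch_away, multiplier):
    """Return (stay, switch) mean intervals for the other alternative of a weighted condition.

    The symmetrical partner of (stay_here, switch_away) uses the switch value for
    staying and the stay value for switching; both values are then multiplied by
    the constant for that rat.
    """
    partner_stay, partner_switch = switch_away, stay_here
    return partner_stay * multiplier, partner_switch * multiplier


# Rat 476, first condition: left pair RI 16.7 s (stay), RI 50 s (switch to right); multiplier 5.
stay_right, switch_to_left = weighted_pair(16.7, 50, 5)
print(stay_right, round(switch_to_left, 1))  # 250 83.5
```

The same function covers the rats whose left-alternative schedules were multiplied. In that case it is called with the right pair as the starting pair.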

Method

Subjects

The subjects were 3 female Sprague-Dawley rats obtained from Hilltop Lab Animals (Scottdale, PA) and maintained at 85% of their just-determined free-feeding weights. They were approximately 170 days old when the experiment began and were housed individually in a temperature-controlled colony room on a 14:10 hr light/dark cycle with free access to water. The rats previously had been used in an introductory psychology laboratory class in which students trained them to go to the food cup when food pellets were delivered. The students then shaped lever pressing.

Apparatus

This experiment used the same two-lever operant conditioning chambers that were used in Experiment 1.

Procedure

All conditions used a two-lever procedure (Herrnstein, 1961) to expose rats to concurrent RI RI schedules. While at the left alternative, one pair of stay and switch RI schedules arranged stay and switch reinforcers; while at the right alternative, a different pair of stay and switch RI schedules arranged stay and switch reinforcers (Table 3). The first press at the left or right lever started the stay and switch schedules associated with that alternative and stopped the stay and switch schedules associated with the other alternative. When either stay schedule arranged a reinforcer, that stay schedule stopped until a press of the associated lever delivered that reinforcer. If the rat switched alternatives before the stay reinforcer was delivered, that reinforcer was held until the rat switched back to that alternative by responding at the associated lever, which obtained that stay reinforcer. When either switch schedule arranged a reinforcer, that switch schedule stopped: The first press at the other lever obtained that switch reinforcer. That switch schedule resumed operating when the rat switched back to the associated alternative. There was no COD.
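These contingencies can be made concrete with a small event-driven model. The following is a hypothetical sketch, not the software that controlled the chambers. The class name, the `draw` parameter, and the use of the 0.5-s sampling interval given in the Procedure are assumptions. The procedure description does not settle one case: whether a single changeover press can deliver both the departing alternative's switch reinforcer and a stay reinforcer held at the arriving alternative. The sketch assumes it can.

```python
import random


class StaySwitchChamber:
    """Hypothetical model of two pairs of stay/switch RI schedules (0 = left, 1 = right).

    Only the pair belonging to the alternative the rat is at runs; an arranged
    reinforcer stops its schedule and is held until it is collected.
    """

    def __init__(self, stay_means, switch_means, tick=0.5, draw=random.random):
        # Per-tick arming probability for an RI schedule with the given mean (s).
        self.p_stay = [tick / m for m in stay_means]
        self.p_switch = [tick / m for m in switch_means]
        self.draw = draw
        self.stay_armed = [False, False]
        self.switch_armed = [False, False]
        self.current = None  # no schedule runs before the first press

    def tick(self):
        """Advance one sampling interval; only the current alternative's pair can arm."""
        i = self.current
        if i is None:
            return
        if not self.stay_armed[i] and self.draw() < self.p_stay[i]:
            self.stay_armed[i] = True
        if not self.switch_armed[i] and self.draw() < self.p_switch[i]:
            self.switch_armed[i] = True

    def press(self, lever):
        """Register a press; return deliveries as (kind, earned_at, obtained_at)."""
        delivered = []
        if self.current is not None and lever != self.current:
            departing = self.current  # changeover: collect switch reinforcer earned there
            if self.switch_armed[departing]:
                self.switch_armed[departing] = False
                delivered.append(("switch", departing, lever))
        self.current = lever
        if self.stay_armed[lever]:  # stay reinforcer held at this alternative
            self.stay_armed[lever] = False
            delivered.append(("stay", lever, lever))
        return delivered


# Deterministic walk-through: draw() always returns 0.0, so every running schedule arms.
chamber = StaySwitchChamber([42, 42], [42, 42], draw=lambda: 0.0)
chamber.press(0)         # first press starts the left pair
chamber.tick()
print(chamber.press(0))  # [('stay', 0, 0)]
print(chamber.press(1))  # [('switch', 0, 1)]
```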

Table 3. For each rat in Experiment 2, the sequence of conditions, the values of the stay and switch RI schedules in the symmetrical (S) and weighted (W) conditions, and the number of sessions in each condition are shown. Also shown are the sums over the last five sessions of each condition of the total number of responses at the left and right levers, total time at each lever, number of stay and switch reinforcers obtained at each lever, and number of changeovers to each lever.

| Rat | Arrangement | Sessions | RI stay left (s) | RI switch to right (s) | RI stay right (s) | RI switch to left (s) | Responses left | Responses right | Time right (s) | Time left (s) | Obtained from stay left | Obtained from switch to right | Obtained from stay right | Obtained from switch to left | Changeovers to right | Changeovers to left |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 476 | W | 27 | 16.7 | 50 | 250 | 83.5 | 2,433 | 731 | 2,592 | 6,114 | 334 | 119 | 17 | 32 | 657 | 658 |
| 476 | W | 17 | 125 | 13.9 | 69.5 | 625 | 3,131 | 1,975 | 6,667 | 5,644 | 56 | 335 | 100 | 11 | 1,450 | 1,452 |
| 476 | W | 13 | 25 | 25 | 125 | 125 | 2,983 | 1,035 | 3,308 | 6,355 | 237 | 226 | 26 | 22 | 948 | 946 |
| 476 | S | 16 | 208 | 23 | 23 | 208 | 2,238 | 3,879 | 7,614 | 4,114 | 20 | 139 | 311 | 35 | 936 | 941 |
| 476 | S | 14 | 28 | 83 | 83 | 28 | 2,920 | 1,893 | 3,306 | 7,805 | 253 | 95 | 44 | 109 | 1,033 | 1,028 |
| 476 | S | 15 | 42 | 42 | 42 | 42 | 1,661 | 2,545 | 5,449 | 5,448 | 121 | 119 | 133 | 129 | 898 | 901 |
| 476 | W | 21 | 13.9 | 125 | 625 | 69.5 | 1,828 | 262 | 742 | 7,500 | 469 | 37 | 1 | 10 | 163 | 158 |
| 476 | W | 15 | 50 | 16.7 | 83.5 | 250 | 2,431 | 1,812 | 6,053 | 6,091 | 106 | 306 | 70 | 19 | 1,011 | 1,013 |
| 477 | W | 10 | 35 | 313 | 156.5 | 17.5 | 1,860 | 715 | 3,359 | 14,465 | 298 | 52 | 18 | 139 | 652 | 648 |
| 477 | W | 26 | 313 | 35 | 17.5 | 156.5 | 536 | 2,585 | 9,000 | 1,669 | 6 | 46 | 400 | 48 | 457 | 456 |
| 477 | W | 22 | 42 | 125 | 62.5 | 21 | 2,439 | 2,386 | 4,907 | 9,031 | 191 | 66 | 73 | 176 | 1,258 | 1,256 |
| 477 | S | 15 | 23 | 208 | 208 | 23 | 4,245 | 1,329 | 2,328 | 9,218 | 348 | 56 | 10 | 89 | 863 | 858 |
| 477 | S | 23 | 208 | 23 | 23 | 208 | 1,386 | 5,527 | 9,112 | 2,149 | 13 | 89 | 366 | 36 | 996 | 1,000 |
| 477 | S | 19 | 42 | 42 | 42 | 42 | 2,226 | 3,236 | 6,421 | 5,304 | 121 | 117 | 147 | 116 | 1,025 | 1,024 |
| 477 | W | 14 | 125 | 42 | 21 | 62.5 | 1,024 | 3,207 | 7,081 | 3,050 | 24 | 59 | 302 | 116 | 639 | 639 |
| 477 | W | 18 | 63 | 63 | 31.5 | 31.5 | 1,199 | 3,862 | 6,141 | 4,260 | 75 | 56 | 202 | 172 | 702 | 700 |
| 478 | S | 26 | 63 | 21 | 21 | 63 | 882 | 1,452 | 5,405 | 4,361 | 71 | 145 | 206 | 81 | 567 | 568 |
| 478 | S | 23 | 17 | 156 | 156 | 17 | 2,994 | 183 | 365 | 8,425 | 452 | 44 | 2 | 12 | 178 | 177 |
| 478 | S | 23 | 31 | 31 | 31 | 31 | 2,508 | 498 | 1,250 | 7,907 | 252 | 189 | 30 | 37 | 475 | 475 |
| 478 | W | 12 | 62.5 | 21 | 42 | 125 | 3,069 | 861 | 2,328 | 8,741 | 136 | 299 | 58 | 11 | 829 | 827 |
| 478 | W | 14 | 17.5 | 156.5 | 313 | 35 | 3,014 | 315 | 430 | 9,256 | 438 | 50 | 3 | 17 | 307 | 303 |
| 478 | W | 17 | 156.5 | 17.5 | 35 | 313 | 2,444 | 1,012 | 3,371 | 8,532 | 68 | 339 | 81 | 12 | 932 | 934 |
| 478 | W | 14 | 31.5 | 31.5 | 63 | 63 | 2,583 | 671 | 1,475 | 7,688 | 240 | 221 | 20 | 22 | 666 | 662 |
| 478 | W | 16 | 21 | 62.5 | 125 | 42 | 2,342 | 479 | 969 | 8,368 | 364 | 119 | 5 | 23 | 454 | 450 |

Conditions differed according to the arrangement of stay and switch schedules at the alternatives. In the symmetrical conditions, the arrangement of the stay and switch schedules was identical to the symmetrical conditions in Experiment 1: the value of the stay schedule that operated at each alternative equaled the value of the switch schedule that operated at the other alternative. In the weighted conditions, the rates of earning stay and switch reinforcers at one alternative were a multiple of the rates at the other alternative. This was accomplished by (a) starting with one pair of schedules and assigning them to one alternative, and (b) multiplying the corresponding symmetrical pair of stay and switch RI schedules by a constant and assigning these schedules to the other alternative. For Rat 476, the schedules associated with the right alternative were multiplied by 5; for Rat 477, the schedules associated with the left alternative were multiplied by 2; and for Rat 478, the schedules associated with the right alternative were multiplied by 2. Consequently, for Rat 477 the programmed rates of earning reinforcers at the right alternative were 2 times the programmed rates of earning reinforcers at the left alternative. For Rats 476 and 478, the programmed rates of earning reinforcers at the left alternative were 5 and 2 times, respectively, the programmed rates of earning reinforcers at the right alternative. The values of the initial pair of schedules were selected so that, given the multiplier for each rat, the overall rate of obtaining reinforcers per session was generally within the range of the overall rates of obtaining reinforcers in the symmetrical conditions. The operation of the schedules and the arrangement of reinforcers were identical in the symmetrical and weighted conditions.
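Why the programmed earning ratio equals the multiplier can be checked numerically. The sketch below makes one assumption. While the rat is at an alternative, both of that alternative's RI schedules run, so their programmed rates (1 / mean interval) add. It ignores the time a schedule stands stopped while an arranged reinforcer waits, so these are programmed rates only. The function name is hypothetical. The schedule values come from Table 3.

```python
def programmed_earning_rate(stay_mean, switch_mean):
    """Programmed reinforcers per second earned while at an alternative.

    The stay and switch RI schedules both run at the current alternative, so
    their programmed rates add. Time lost while a reinforcer is held is ignored.
    """
    return 1.0 / stay_mean + 1.0 / switch_mean


# Rat 476, first condition (Table 3): left RI 16.7 s stay / RI 50 s switch to right;
# right RI 250 s stay / RI 83.5 s switch to left (right pair multiplied by 5).
ratio = programmed_earning_rate(16.7, 50) / programmed_earning_rate(250, 83.5)
print(round(ratio, 3))  # 5.0
```

Algebraically, multiplying both members of the symmetrical pair by k divides the sum of their reciprocals by k. That is why the ratio comes out equal to the multiplier.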

For each RI schedule, a probability generator was sampled every 0.5 s. When a signal occurred, the next response associated with that schedule was reinforced. For each rat, Table 3 lists the sequence of conditions, the number of sessions that each condition was in effect, and the arrangement of schedules in each condition. Two rats were first exposed to the weighted conditions and the 3rd rat to the symmetrical conditions. A condition remained in effect for at least 10 sessions and until visual inspection showed there were no apparent upward or downward trends in the logs of the ratios of the rates of obtaining reinforcers, of responses, and of times for five consecutive sessions. Sessions typically were conducted 7 days a week and ended after the first changeover response following the 100th reinforcer.
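A probability generator sampled at a fixed interval can be sketched as follows. The text does not state the per-sample probability. This sketch assumes the common choice p = 0.5 / (mean interval), which makes the number of samples until a signal geometric with mean equal to the nominal RI value. The function name and seed are hypothetical.

```python
import random


def sample_ri_intervals(mean_s, n, tick=0.5, seed=0):
    """Draw n inter-reinforcer intervals (s) from a random-interval schedule.

    Every `tick` seconds the generator is sampled; with probability
    p = tick / mean_s it signals that a reinforcer is arranged. Ticks until a
    signal are geometric with mean 1 / p, so the mean interval is mean_s.
    """
    rng = random.Random(seed)
    p = tick / mean_s
    intervals = []
    for _ in range(n):
        ticks = 1
        while rng.random() >= p:  # no signal on this sample
            ticks += 1
        intervals.append(ticks * tick)
    return intervals


intervals = sample_ri_intervals(42, 5000)
print(sum(intervals) / len(intervals))  # close to 42 s for an RI 42-s schedule
```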

Because the rats had already pressed a lever in a different operant chamber, lever pressing was not shaped. Instead, each rat was placed in the chamber on a concurrent RI 4-s RI 4-s schedule for one session, followed by concurrent RI 10-s RI 10-s for 1 day and concurrent RI 20-s RI 20-s for two sessions. Each rat was then placed on the schedules of the first condition listed in Table 3.

Results and Discussion

All the results reported here are based on the sums of the data from the last five sessions of each condition. Table 3 presents these sums of presses at the left and right levers, total time (in seconds) at the left and right alternatives, reinforcers obtained from the stay and switch schedule at each alternative, and changeovers to the right and to the left alternative. Time at an alternative was measured from the first press at an alternative to the first press at the other alternative. For completeness, Table 3 includes the time allocation data although only the results of analyses of response allocations are presented.
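The time-at-an-alternative rule can be applied to a time-stamped press log as below. This is a minimal sketch under stated assumptions. The log format (a list of `(time_s, lever)` pairs with levers `"L"` and `"R"`) and the function name are hypothetical. The final visit is closed at a supplied session-end time, and time before the first press is not counted.

```python
def allocation_from_presses(presses, session_end):
    """Summarise responses, time, and changeovers from a time-ordered press log.

    Time at an alternative runs from the first press there to the first press at
    the other alternative; the last visit is closed at session_end.
    """
    responses = {"L": 0, "R": 0}
    time_at = {"L": 0.0, "R": 0.0}
    changeovers_to = {"L": 0, "R": 0}
    current, visit_start = None, None
    for t, lever in presses:
        responses[lever] += 1
        if lever != current:
            if current is not None:  # first press at the other lever ends the visit
                time_at[current] += t - visit_start
                changeovers_to[lever] += 1
            current, visit_start = lever, t
    if current is not None:
        time_at[current] += session_end - visit_start
    return responses, time_at, changeovers_to


log = [(0, "L"), (2, "L"), (5, "R"), (6, "R"), (9, "L"), (12, "L")]
print(allocation_from_presses(log, session_end=12))
```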

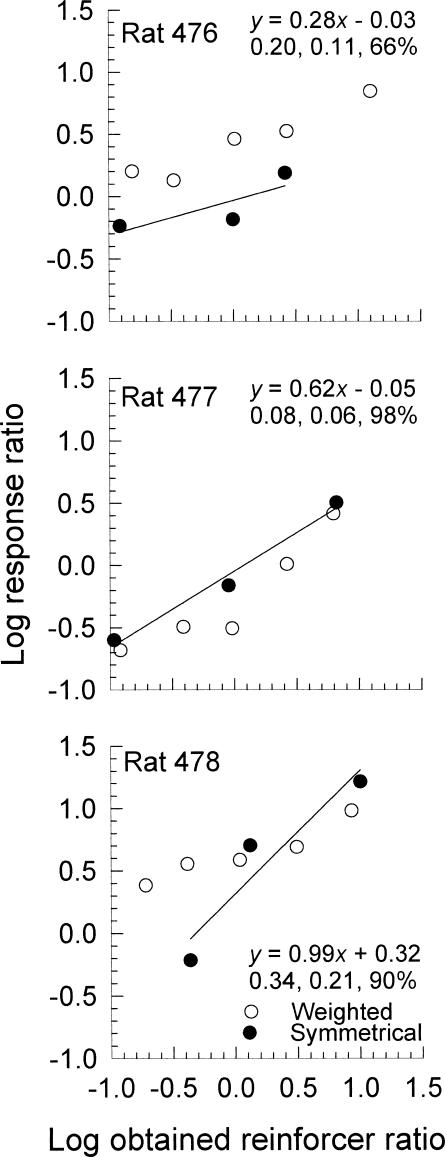

Figure 3 shows that, for each arrangement, the logs of the ratios of responses increased approximately linearly with increases in the logs of the ratios of the rates of obtaining reinforcers. For the symmetrical conditions, the generalized matching law (Equation 3) accurately described the data for 2 rats (r² > .85); the poor description of Rat 476's data probably results from the low slope. Sensitivity values ranged from 0.28 to 0.99, and log bias values from −0.05 to 0.32. The standard errors of estimate were large (> 0.20) for Rats 476 and 478. Even so, these results support the conclusion that the present procedures were sufficient to produce data consistent with the generalized matching law, as in Experiment 1. Figure 3 also shows, for each rat, that the data from the weighted conditions did not follow the path of the data from the symmetrical conditions. Rather, the data for Rats 476 and 477 were consistently displaced toward the alternative with the higher earning rate. The weighted-condition data for Rat 478 were displaced toward the higher earning rate only when the log ratio of the rates of obtaining reinforcers was less than zero.
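For illustration, Equation 3 can be fitted to the three symmetrical conditions for Rat 477 using the summed counts in Table 3, with left as Alternative 1. This sketch applies unweighted ordinary least squares to the summed data. It is not necessarily the exact procedure behind Figure 3. The function name is hypothetical.

```python
import math


def fit_line(x, y):
    """Ordinary least-squares fit of y = s * x + log_b; returns (s, log_b)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s = sxy / sxx
    return s, mean_y - s * mean_x


# Rat 477, symmetrical conditions (Table 3):
# (stay left, switch to left, stay right, switch to right, responses left, responses right)
rows = [
    (348, 89, 10, 56, 4245, 1329),
    (13, 36, 366, 89, 1386, 5527),
    (121, 116, 147, 117, 2226, 3236),
]
# Reinforcers obtained at an alternative = its stay reinforcers + switch reinforcers delivered there.
x = [math.log10((sl + wl) / (sr + wr)) for sl, wl, sr, wr, _, _ in rows]
y = [math.log10(bl / br) for _, _, _, _, bl, br in rows]
s, log_b = fit_line(x, y)
print(round(s, 2), round(log_b, 2))
```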

Fig. 3. The logs of the ratios of responses plotted as a function of the logs of the ratios of the rates of obtaining reinforcers in Experiment 2. Also shown is the best-fitting line to data from the symmetrical conditions, using Equation 3 and least-squares linear regression, and the resulting equation for that line; the standard errors of sensitivity and bias and percentage of the variance accounted for by the equation are below it. All ratios are data from the left alternative divided by data from the right alternative.

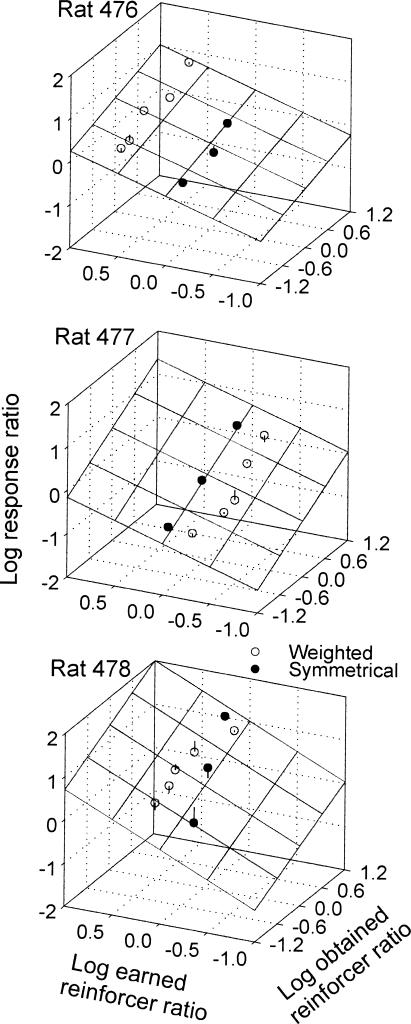

Clearly, the ratios of the rates of earning reinforcers affected preference. Figure 4 shows that logs of the ratios of responses increased as a joint function of increases in the logs of the ratios of the rates of obtaining reinforcers and the logs of the ratios of the rates of earning reinforcers. This figure also shows that, for the symmetrical and for the weighted conditions, the logs of the ratios of the rates of obtaining reinforcers varied while the logs of the ratios of the rates of earning reinforcers remained approximately constant. For each rat, the data from the symmetrical and from the weighted conditions each formed an approximately straight line, and these lines were roughly parallel to each other. The logs of the ratios of the rates of earning reinforcers from the weighted conditions were generally displaced toward the alternative that earned the higher rate of reinforcers. In addition, greater weightings produced greater displacements of the logs of the ratios of the rates of earning reinforcers. The displacement was larger for Rat 476, whose weighting was 5, than for Rats 477 and 478, whose weightings were 2. Equation 5 described the data for Rats 476 and 477 (r² = .93). The description was poorer for Rat 478 (r² = .73).

Fig. 4. The logs of the response ratios plotted as a joint function of the logs of the ratios of the rates of obtaining reinforcers and the logs of the ratios of the rates of earning reinforcers in Experiment 2. The plane shows the best-fitting plane using Equation 4 for the data from the symmetrical conditions combined with the data from the weighted conditions. The vertical lines show the difference between the obtained and predicted data. When the residuals are not visible they are smaller than the data point.

The estimates of the two sensitivity parameters, s′ and h, differed for Rats 476 and 478, suggesting that separate s′ and h values are needed to describe the results of individual rats; the difference was more than two standard errors for Rat 476. The fitted parameter s′, sensitivity to the ratio of the rates of obtaining reinforcers, was more than two standard errors from zero for Rat 476. The fitted parameter h, sensitivity to the ratio of the rates of earning reinforcers, was more than two standard errors from zero for all 3 rats. Values of log b′ were similar to those obtained from fits to the symmetrical conditions; in particular, the large positive bias for Rat 478 was maintained.
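The two-standard-error comparisons above can be rechecked from the Table 4 estimates. The text does not say which standard error was used when comparing s′ with h. This sketch assumes the larger of the two. The function names are hypothetical.

```python
# Table 4 (Experiment 2): rat -> (s', SE of s', h, SE of h)
TABLE4 = {
    476: (0.63, 0.13, 0.33, 0.07),
    477: (0.66, 0.35, 0.63, 0.08),
    478: (0.99, 0.74, 0.55, 0.16),
}


def beyond_two_se(estimate, se, reference=0.0):
    """True when an estimate lies more than two standard errors from a reference value."""
    return abs(estimate - reference) > 2 * se


def summarize(table):
    """Per rat: (s' differs from 0, h differs from 0, s' differs from h)."""
    result = {}
    for rat, (s, s_se, h, h_se) in table.items():
        # Assumption: s' and h are compared using the larger of their two SEs.
        result[rat] = (
            beyond_two_se(s, s_se),
            beyond_two_se(h, h_se),
            beyond_two_se(s, max(s_se, h_se), reference=h),
        )
    return result


for rat, flags in summarize(TABLE4).items():
    print(rat, flags)
```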

Overall maximizing is a theory of behavior that assumes animals behave optimally; they behave to obtain the most reinforcers for the least effort (Stephens & Krebs, 1986). The results from the weighted conditions are not predicted by overall maximizing. Under the weighted arrangement, the optimal strategy would be to stay at the richer alternative, the alternative associated with the greater rate of earning reinforcers, making brief visits to the leaner alternative to obtain switch reinforcers. Inspection of Figure 3 shows that the absolute value of the log behavior ratio ranged from 0.1 to 1.0. Converting log behavior ratios to percentage of responses at an alternative means that, for all rats and all conditions, between 50% and 91% of the responses were at the richer alternative. Across rats, in 11 of 15 weighted conditions, less than 80% of the responses were at the richer alternative, which is not consistent with overall maximizing.
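Two pieces of this argument can be recomputed. The first is the conversion from a log behavior ratio to a percentage. The second is the count of weighted conditions with fewer than 80% of responses at the richer alternative, using the Table 3 response totals. Following the text, this sketch defines the richer alternative as the one with the greater programmed rate of earning reinforcers (stay plus switch RI rates). The function names are hypothetical.

```python
def percent_from_log_ratio(log_ratio):
    """Percentage of responses at Alternative 1 implied by a log10 response ratio B1/B2."""
    ratio = 10 ** log_ratio
    return 100 * ratio / (1 + ratio)


def programmed_earning_rate(stay_mean, switch_mean):
    """Programmed reinforcers per second earned while at an alternative (stay + switch)."""
    return 1 / stay_mean + 1 / switch_mean


def percent_at_richer(stay_l, switch_r, stay_r, switch_l, resp_l, resp_r):
    """Percentage of responses at the alternative with the higher programmed earning rate."""
    left_richer = programmed_earning_rate(stay_l, switch_r) > programmed_earning_rate(stay_r, switch_l)
    richer = resp_l if left_richer else resp_r
    return 100 * richer / (resp_l + resp_r)


# Weighted conditions from Table 3, in table column order:
# (RI stay left, RI switch to right, RI stay right, RI switch to left, responses left, responses right)
WEIGHTED = {
    476: [(16.7, 50, 250, 83.5, 2433, 731), (125, 13.9, 69.5, 625, 3131, 1975),
          (25, 25, 125, 125, 2983, 1035), (13.9, 125, 625, 69.5, 1828, 262),
          (50, 16.7, 83.5, 250, 2431, 1812)],
    477: [(35, 313, 156.5, 17.5, 1860, 715), (313, 35, 17.5, 156.5, 536, 2585),
          (42, 125, 62.5, 21, 2439, 2386), (125, 42, 21, 62.5, 1024, 3207),
          (63, 63, 31.5, 31.5, 1199, 3862)],
    478: [(62.5, 21, 42, 125, 3069, 861), (17.5, 156.5, 313, 35, 3014, 315),
          (156.5, 17.5, 35, 313, 2444, 1012), (31.5, 31.5, 63, 63, 2583, 671),
          (21, 62.5, 125, 42, 2342, 479)],
}

below_80 = sum(
    percent_at_richer(*row) < 80 for rows in WEIGHTED.values() for row in rows
)
print(round(percent_from_log_ratio(1.0), 1), below_80)  # 90.9 11
```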

GENERAL DISCUSSION

The results of Experiments 1 and 2 show that the ratios of the rates of earning reinforcers influenced preference. Consequently, these rates need to be included in equations used to describe concurrent performances, at least when rates of obtaining reinforcers at an alternative are different from the rates of earning reinforcers at the other alternative. These experiments also demonstrated the importance of considering concurrent schedules as consisting of two functionally independent pairs of stay and switch schedules. It was only this view of concurrent schedules that showed the influence of the ratio of the rates of earning reinforcers.

A changeover delay changes the stay and switch contingencies. In particular, when a switch reinforcer follows a COD, that switch reinforcer also reinforces stay responses during that COD. The increased reinforcement of stay responses during the COD may reduce the effects of the asymmetrical and weighted arrangements compared to symmetrical arrangements. Responding during a COD occurs at a constant rate, regardless of schedule value (Silberberg & Fantino, 1970). This could further attenuate these effects by adding a constant to the numerator and denominator of the behavior ratio. However, another effect of a COD may offset this attenuation: A COD reduces the rate of switching between alternatives, which means that the number of responses during a visit to an alternative increases (Shahan & Lattal, 1998). The overall increase in visit length may mask the increased responding at the less preferred alternative. In an experiment that did not use a COD, run lengths (i.e., the mean number of responses during a visit to an alternative) were a function of the ratio of the rates of earning reinforcers for staying divided by switching (MacDonall, 2000). When this experiment was repeated with a COD, the functional relation was reproduced; the only difference was that the rate of switching between alternatives decreased (MacDonall, 2003). Whether a COD alters the effects produced by the asymmetrical and weighted arrangements remains to be determined.
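The attenuation produced by adding a constant to both terms of the behavior ratio is simple arithmetic. The counts below are hypothetical and only show the direction of the effect: the log ratio moves toward zero.

```python
import math


def log_ratio(b1, b2, added=0.0):
    """log10 of a behavior ratio after adding the same count to both alternatives."""
    return math.log10((b1 + added) / (b2 + added))


# Hypothetical counts: 300 vs. 100 responses, plus 50 COD responses at each alternative.
print(round(log_ratio(300, 100), 3), round(log_ratio(300, 100, added=50), 3))  # 0.477 0.368
```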

Quantitative Considerations

The generalized matching law accurately described the data from 8 of the 9 rats exposed to the symmetrical arrangements in Experiments 1 and 2. The data from both the asymmetrical (Figure 1) and the weighted (Figure 3) conditions were not on the path of the data from the symmetrical conditions because the ratios of the rates of earning reinforcers were ignored in these figures.

Equation 4 described the data from the symmetrical conditions combined with the data from the asymmetrical conditions in Experiment 1, and the data from the symmetrical conditions combined with the data from the weighted conditions in Experiment 2. The descriptions of the response ratios were good for 6 of the 9 rats (r² > .85); only one description was poor (r² < .75). Along with showing that the ratio of the rates of earning reinforcers directly affects preference, this equation provided simultaneous estimates of each rat's behavioral sensitivity to the ratio of the rates of obtaining reinforcers and to the ratio of the rates of earning reinforcers.

One aspect of Equation 4 is problematic: The time spent at each alternative, a dependent variable, appears on the right side of the equation. Although having a dependent variable on the right side of the equation is a concern, the good fits by the equation are not an artifact. This is most easily seen when fitting time ratios using the equivalent of Equation 4, in which the time ratio, T1/T2, is on the left side of the equation. The ratio of the rates of earning stay plus switch reinforcers can be expressed as the product of two ratios, ((Rt1 + Rw2)/(Rt2 + Rw1))^h and (T2/T1)^h; here, the ratio of times at the alternatives is T2/T1. The time ratio on the left side, T1/T2, is the reciprocal of the time ratio on the right side, T2/T1, so their logarithms are perfectly negatively correlated. Consequently, one might expect the slope, h, to be negative, reflecting this negative correlation. The slopes, however, are all positive (not shown). They are positive because the logs of the ratios of the sums of the stay and switch reinforcers earned at each alternative ranged from −1.3 to 1.3, whereas the logs of the ratios of times spent at the alternatives ranged only from −0.5 to 0.5. Because the range of the logs of the time ratios was less than half the range of the logs of the reinforcer ratios, the logs of the time ratios have much less influence in determining the value of h. The greater range of the ratio of reinforcers earned at each alternative overcomes the negative correlation of the time ratios. Because the logs of the response and time ratios are highly correlated, the same argument shows that the fits to the response ratios are not an artifact of having the reciprocal of the time ratio on the right side of the equation. Although the fits of Equation 4 are not an artifact with these data, a preferred equation would not have the time ratio on the right side.
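Written out with the symbols of Equations 3 and 4, the earning term separates into a reinforcer-count part and a time part. The time part carries the same coefficient h and enters as the reciprocal time ratio:

$$
\begin{aligned}
h\log\left(\frac{(R_{t1}+R_{w2})/T_1}{(R_{t2}+R_{w1})/T_2}\right)
&= h\log\left(\frac{R_{t1}+R_{w2}}{R_{t2}+R_{w1}}\right) + h\log\left(\frac{T_2}{T_1}\right)\\
&= h\log\left(\frac{R_{t1}+R_{w2}}{R_{t2}+R_{w1}}\right) - h\log\left(\frac{T_1}{T_2}\right)
\end{aligned}
$$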

Decomposing Concurrent Schedules

In previous research (MacDonall, 2000), rats were trained on one pair of stay and switch RI schedules. Across conditions, the values of the schedules varied so that by the end of the experiment rats were trained on 8 or 10 conditions that could be formed into four or five pairs of symmetrical schedules. The order of conditions varied so the conditions forming a symmetrical pair did not follow each other. The performance in each condition was functionally independent of the performances and contingencies in the other conditions. The generalized matching law described composite performances that were obtained from sets of conditions whose pairs of stay and switch schedules formed symmetrical pairs. This was accomplished by obtaining the responses per visit, duration of visits, stay reinforcers per visit, and switch reinforcers per visit for each condition. Conditions that formed symmetrical pairs were mated together and the per-visit performance of the mated pairs was used in the analysis. Because the generalized matching law described these composite performances, it appears that concurrent performances are composed of two functionally independent performances, each maintained by a pair of independent stay and switch schedules operating at the alternatives. In a standard concurrent schedule, the changeover response joins the otherwise independent performances by exchanging the stay and switch schedules in effect as the subject switches alternatives.

The symmetrical conditions of Experiments 1 and 2 used two pairs of functionally independent stay and switch interval schedules, rather than the usual procedure of two interval schedules that run continuously, and the generalized matching law described the results. These good descriptions are consistent with the view that concurrent performance consists of two functionally independent performances, each maintained by a pair of stay and switch schedules (MacDonall, 1999, 2000). At each alternative, the choice was to stay or to switch. In the present experiments, the schedules controlling behavior at one alternative were independent of the schedules controlling behavior at the other alternative because different pairs of stay and switch schedules were used. It remains to be shown experimentally that the performances at each alternative of a concurrent schedule are functionally independent of each other.

Earning and Obtaining Reinforcers

The results of Experiments 1 and 2 suggest that earning reinforcers is a critical variable in understanding performance in concurrent choice. Figures 2 and 4 show that the logs of the ratios of the rates of earning reinforcers and the logs of the ratios of the rates of obtaining reinforcers affected preference. The concatenated generalized matching law is based on obtained reinforcers—not only the rates of obtained reinforcers but also the magnitudes of obtained reinforcers, the immediacies of obtained reinforcers, and the qualities of obtained reinforcers. Given the influence of rates of earning reinforcers, it remains for further research to determine whether the magnitudes, immediacies, and qualities of earned reinforcers also influence preference, and if so, how such effects can be described quantitatively.

Table 4. Results of least-squares multiple linear regressions for response allocations using Equation 5 for the data from the symmetrical conditions combined with data from the weighted conditions in Experiment 2.

| Rat | s′ | SE | h | SE | log b′ | SE | df | r² |
|---|---|---|---|---|---|---|---|---|
| 476 | 0.63 | 0.13 | 0.33 | 0.07 | 0.01 | 0.06 | 5 | .93 |
| 477 | 0.66 | 0.35 | 0.63 | 0.08 | −0.06 | 0.08 | 5 | .93 |
| 478 | 0.99 | 0.74 | 0.55 | 0.16 | 0.37 | 0.15 | 5 | .73 |

Acknowledgments

I thank Lawrence DeCarlo for many informative discussions on stay and switch scheduling and for suggesting the asymmetrical arrangement used in the present research. I am grateful to Jonathan Galente for constructing and maintaining the equipment. Data collection was supported in part by a research grant from Fordham University. I thank AnnMarie Abernanty, Deidre Beneri, Lisa Musorrafiti, Meg Rugerrio, and Ben Ventura for assistance in data collection. Portions of the data were presented at the 1998 meeting of the Society for the Quantitative Analysis of Behavior.

REFERENCES

- Baum W.M. On two types of deviation from the matching law: Bias and undermatching. Journal of the Experimental Analysis of Behavior. 1974;22:231–242. doi: 10.1901/jeab.1974.22-231.

- Baum W.M, Rachlin H.C. Choice as time allocation. Journal of the Experimental Analysis of Behavior. 1969;12:861–874. doi: 10.1901/jeab.1969.12-861.

- Davison M, McCarthy D. The Matching Law: A research review. Hillsdale, NJ: Erlbaum; 1988.

- Dreyfus L.R, Dorman L.G, Fetterman J.G, Stubbs D.A. An invariant relation between changing over and reinforcement. Journal of the Experimental Analysis of Behavior. 1982;38:327–338. doi: 10.1901/jeab.1982.38-327.

- Ferster C.B, Skinner B.F. Schedules of reinforcement. New York: Appleton-Century-Crofts; 1957.

- Findley J.D. Preference and switching under concurrent scheduling. Journal of the Experimental Analysis of Behavior. 1958;1:123–144. doi: 10.1901/jeab.1958.1-123.

- Fleshler M, Hoffman H.S. A progression for generating variable interval schedules. Journal of the Experimental Analysis of Behavior. 1962;5:529–530. doi: 10.1901/jeab.1962.5-529.

- Herrnstein R.J. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Houston A.I, McNamara J. How to maximize reward rate on two variable interval paradigms. Journal of the Experimental Analysis of Behavior. 1981;35:367–396. doi: 10.1901/jeab.1981.35-367.

- MacDonall J.S. Run length, visit duration, and reinforcer per visit in concurrent performance. Journal of the Experimental Analysis of Behavior. 1998;69:275–293. doi: 10.1901/jeab.1998.69-275.

- MacDonall J.S. A local model of concurrent performance. Journal of the Experimental Analysis of Behavior. 1999;71:57–74. doi: 10.1901/jeab.1999.71-57.

- MacDonall J.S. Synthesizing concurrent interval performance. Journal of the Experimental Analysis of Behavior. 2000;74:189–206. doi: 10.1901/jeab.2000.74-189.

- MacDonall J.S. Reinforcing staying and switching while using a changeover delay. Journal of the Experimental Analysis of Behavior. 2003;79:219–232. doi: 10.1901/jeab.2003.79-219.

- Mechner F. Probabilistic relations within response sequences under ratio reinforcement. Journal of the Experimental Analysis of Behavior. 1958;1:109–121. doi: 10.1901/jeab.1958.1-109.

- Rachlin H, Green L, Tormey B. Is there a decisive test between matching and maximizing? Journal of the Experimental Analysis of Behavior. 1988;50:113–123. doi: 10.1901/jeab.1988.50-113.

- Shahan T.A, Lattal K.A. On the functions of the changeover delay. Journal of the Experimental Analysis of Behavior. 1998;69:141–160. doi: 10.1901/jeab.1998.69-141.

- Silberberg A, Fantino E. Choice, rate of reinforcement, and the changeover delay. Journal of the Experimental Analysis of Behavior. 1970;13:187–197. doi: 10.1901/jeab.1970.13-187.

- Stephens D.W, Krebs J.R. Foraging theory. Princeton, NJ: Princeton University Press; 1986.

- Trevett A.J, Davison M.C, Williams R.J. Preference on concurrent interval schedules. Journal of the Experimental Analysis of Behavior. 1972;17:369–374. doi: 10.1901/jeab.1972.17-369.