Abstract

A model of conditional discrimination performance (Davison & Nevin, 1999) is combined with the notion that unmeasured attending to the sample and comparison stimuli, in the steady state and during disruption, depends on reinforcement in the same way as predicted for overt free-operant responding by behavioral momentum theory (Nevin & Grace, 2000). The rate of observing behavior, a measurable accompaniment of attending, is well described by an equation for steady-state responding derived from momentum theory, and the resistance to change of observing conforms to predictions of momentum theory, supporting a key assumption of the model. When probabilities of attending are less than 1.0, the model accounts for some aspects of conditional-discrimination performance that posed problems for the Davison-Nevin model: (a) the effects of differential reinforcement on the allocation of responses to the comparison stimuli and on accuracy in several matching-to-sample and signal-detection tasks where the differences between the stimuli or responses were varied across conditions, (b) the effects of overall reinforcer rate on the asymptotic level and resistance to change of both response rate and accuracy of matching to sample in multiple schedules, and (c) the effects of fixed-ratio reinforcement on accuracy. Some tests and extensions of the model are suggested, and the role of unmeasured events in behavior theory is considered.

Keywords: attending, behavioral momentum, conditional discrimination, matching to sample, signal detection, observing behavior

In order for stimuli to control behavior, organisms must attend to them. Dinsmoor (1985) suggested that effective stimulus control depends on contact with the relevant stimuli via overt observing behavior, and that for a complete understanding “. . . we are obliged to consider analogous [to observing] processes . . . commonly known as attention. The processes involved in attention are not as readily accessible to observation as the more peripheral adjustments, but it is my hope and my working hypothesis that they obey similar principles.” (p. 365). Although attention is usually construed as a cognitive process, we view attending as unmeasured (possibly covert) operant behavior that accompanies measurable observing. Attending, we suggest, is selected and strengthened by the reinforcing consequences of overt discriminated operant behavior that would be less frequently reinforced in the absence of attending. Following Dinsmoor, we assume that the unmeasured behavior of attending to discriminative stimuli is related to the rates of reinforcement correlated with those stimuli in the same way as measured free-operant response rate.

In this paper, we develop a model of attending that parallels a version of behavioral momentum theory for free-operant responding and incorporate it into a general account of discriminated operant behavior (Davison & Nevin, 1999). We begin by reviewing behavioral momentum theory as it applies to resistance to change of overt responding, extend it to account for steady-state response rate, and propose a model of attending based on its principles. Next, we review the Davison-Nevin model and indicate some of its shortcomings. We then show that when the momentum-based model of attending is combined with the Davison-Nevin model, the combination can account for some data that posed problems for the original model: the effects of differential reinforcement for the two correct responses in conditional discriminations; the effects of overall reinforcer rate on conditional discrimination accuracy in the steady state and during disruption by briefly imposed experimental variables; and the effects of fixed-ratio reinforcement on conditional-discrimination accuracy.

The development of our model depends on three fundamental assumptions. First, measured operant behavior in the steady state as well as during disruption depends on the reinforcer rate correlated with a distinctive stimulus, relative to the overall reinforcer rate in the experimental context, according to a function derived from behavioral momentum theory (Nevin & Grace, 2000). Second, attending to discriminative stimuli depends on the reinforcer rate that accompanies the unmeasured behavior of attending in the same way as measured operant responding, both in the steady state and during disruption, with reinforcer rate expressed relative to the context in which those stimuli appear. And third, given that a subject attends to the relevant stimuli, its behavior is described by the Davison-Nevin (1999) model of conditional-discrimination performance.

Readers may question the utility of a model that invokes unmeasured, possibly covert, attending behavior (see, for example, the vigorous discussions of the varieties of theory in behavior analysis edited by Marr [2004]). There are several reasons for pursuing this approach. First, we show that the value of a single variable in the model—the probability of attending—determines the form of relations between measured discrimination performance and empirical variables. Thus the model provides a basis for organizing diverse results that have been reported in the literature. Second, the model identifies a behavioral process with properties like those of overt behavior that leads to testable predictions and challenges researchers to investigate directly measurable counterparts of the terms of the model in relation to reinforcement variables. Third, even if overt counterparts of its terms prove to be elusive, the model provides a way to infer the effects of reinforcement on covert activities that are involved in stimulus control, consistent with the radical behaviorist view that “. . . private events are natural and in all important respects like public events” (Baum, 1994, p. 41; see also Skinner, 1974).

BEHAVIORAL MOMENTUM THEORY

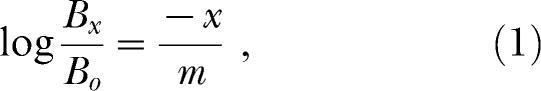

Behavioral momentum theory (Nevin & Grace, 2000) has been concerned with resistance to change during relatively short-term disruption. It is related metaphorically to Newton's second law in classical mechanics, which states that the change in the velocity of a body is directly proportional to an external force and inversely proportional to the body's inertial mass. Nevin, Mandell, and Atak (1983) modeled response rates during disruption as

$$\log\left(\frac{B_x}{B_o}\right) = \frac{-x}{m} \qquad (1)$$

where Bo is baseline response rate, Bx is response rate during disruption, x is the value of the disrupter with its decremental effects indicated by the minus sign, and m is behavioral mass. Virtually all of the relevant research has employed multiple variable-interval (VI) VI schedules to control obtained reinforcer rates and to permit within-session comparisons of resistance to change. In a review of all his data, Nevin (1992b) suggested that behavioral mass (m) in a schedule component was a power function of reinforcer rate in that component (rs), relative to the overall average reinforcer rate in a session (ra), which is based on time in both components and in intercomponent intervals. Thus m = (rs/ra)^b, where b measures the sensitivity of relative resistance to relative reinforcement. For example, if b = 1.0, the ratio of log proportions of baseline in two multiple-schedule components is equal to the inverse of the ratio of their reinforcer rates. The value of b has been found to be approximately 0.5 in a number of studies that arranged multiple VI VI schedules (Nevin, 2002) and will be used throughout this paper. Equation 2, with reinforcement terms inserted in lieu of behavioral mass m, describes the highly reliable finding that resistance to change relative to baseline is greater in a multiple-schedule component with more frequent reinforcement:¹

$$\log\left(\frac{B_x}{B_o}\right) = \frac{-x}{(r_s/r_a)^b} \qquad (2)$$
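To make the resistance prediction concrete, the sketch below evaluates Equation 2 for the two components of a hypothetical multiple VI VI schedule. The component reinforcer rates and the session average ra are illustrative assumptions, not data; b = 0.5 follows the text, and the helper name proportion_of_baseline is hypothetical.

```python
import math


def proportion_of_baseline(x, rs, ra, b=0.5):
    """Equation 2: Bx/Bo = 10 ** (-x / (rs/ra) ** b).

    x  -- disrupter value (arbitrary units)
    rs -- reinforcer rate in the component
    ra -- session-average reinforcer rate, in the same units as rs
    b  -- sensitivity of relative resistance to relative reinforcement
    """
    mass = (rs / ra) ** b  # behavioral mass m = (rs/ra)^b
    return 10 ** (-x / mass)


# Hypothetical multiple VI VI schedule (reinforcers per hour).
rich, lean = 60.0, 15.0
session_avg = 30.0  # assumed; includes intercomponent-interval time

for x in (0.25, 0.5, 1.0):
    p_rich = proportion_of_baseline(x, rich, session_avg)
    p_lean = proportion_of_baseline(x, lean, session_avg)
    print(f"x = {x:4.2f}: rich {p_rich:.3f}, lean {p_lean:.3f} of baseline")

# Ratio of log proportions (rich/lean) = (lean/rich) ** b, independent of x and ra.
ratio = (math.log10(proportion_of_baseline(1.0, rich, session_avg))
         / math.log10(proportion_of_baseline(1.0, lean, session_avg)))
print(f"ratio of log proportions (rich/lean): {ratio:.3f}")  # 0.500
```

Because ra cancels in the ratio of log proportions, relative resistance depends only on the component reinforcer ratio raised to the power b. With b = 0.5, a 4:1 reinforcer ratio makes the richer component's log proportion of baseline half that of the leaner one.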

By expressing responding during disruption relative to baseline, Equation 2 ignores the determiners of steady-state responding. But even in the steady state, responding is measured against a background that includes potential disrupters such as competition from unspecified activities that entail their own unmeasured reinforcers. Herrnstein (1970) formalized this idea in his well-known hyperbola relating steady-state response rate B and reinforcer rate r:

$$B = \frac{k\,r}{r + r_e} \qquad (3)$$

where re represents unmeasured “extraneous” reinforcers, and k is the asymptotic response rate as r goes to infinity.

Equation 2 can be rewritten to describe response rate in the steady state as well as during disruption, thus bringing behavioral momentum theory to bear on baseline response rates as well as their resistance to change (see Nevin & Grace, 2005). Adding a scale constant k′ to express predictions in responses per minute, converting to natural logarithms, and exponentiating, Equation 2 becomes:

$$B = k'\exp\left[\frac{-x}{(r_s/r_a)^b}\right] \qquad (4)$$

The disrupter x in Equation 4 plays the same role as extraneous reinforcers re in Equation 3 for steady-state response rate. Other parameters must be added to the numerator of the exponent to characterize the effects of short-term disrupters in tests of resistance to change. Like k in Equation 3, k′ is the asymptotic response rate as rs/ra goes to infinity.
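The conversion from Equation 2 to Equation 4 can be written out step by step. This is a sketch of the algebra, assuming that k′ takes the place of the baseline rate Bo in the steady state and that the factor ln 10 introduced by the change of base is absorbed into x.

$$
\begin{aligned}
\log_{10}\left(\frac{B}{k'}\right) &= \frac{-x}{(r_s/r_a)^b} \\
\ln\left(\frac{B}{k'}\right) &= \frac{-(\ln 10)\,x}{(r_s/r_a)^b} \\
B &= k'\exp\left[\frac{-x'}{(r_s/r_a)^b}\right], \qquad x' = (\ln 10)\,x
\end{aligned}
$$

Renaming x′ as x gives Equation 4. As rs/ra grows without bound, the exponent approaches 0, so B approaches k′, which is why k′ is the asymptotic response rate.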

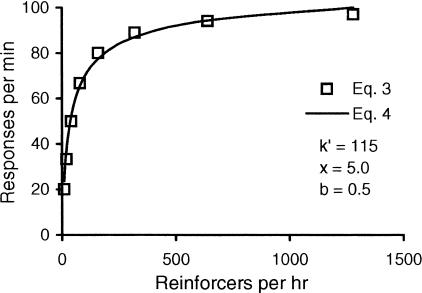

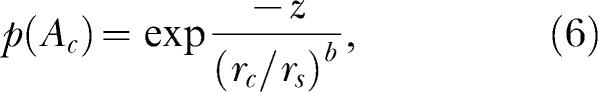

The steady-state predictions of Equations 3 and 4 for single VI schedules arranged over successive conditions are strikingly similar. To generate representative predictions from Equation 3, we set k = 100 and re = 40. We then estimated the parameters of Equation 4 that gave similar predictions, with ra set arbitrarily at 1.0 because when reinforcer rate is varied over successive conditions, the “component” becomes the experimental session, and the overall average reinforcer rate includes that during the subject's extraexperimental life, which presumably has a low and constant value. With b = 0.5, x = 5.0, and k′ = 115, the predictions of Equation 4 are virtually indistinguishable from those of Equation 3, as shown in Figure 1 (if another value were chosen for ra, the value of x would differ). More generally, the extensive data that are adequately fit by Equation 3 also will be adequately fit by Equation 4.

Fig. 1. The relations between steady-state response rate and reinforcer rate on VI schedules according to Herrnstein (1970; Equation 3 here) and according to a modified version of behavioral momentum theory (Equation 4, with parameter values in the legend).
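The near-equivalence of Equations 3 and 4 shown in Figure 1 can be checked numerically with the parameter values given in the text (k = 100, re = 40, k′ = 115, x = 5.0, b = 0.5, ra = 1.0). The grid of reinforcer rates is an illustrative choice, and its units are assumed to match the abscissa of Figure 1; the function names are hypothetical.

```python
import math


def herrnstein(r, k=100.0, re=40.0):
    """Equation 3: B = k * r / (r + re)."""
    return k * r / (r + re)


def momentum_steady_state(r, k_prime=115.0, x=5.0, b=0.5, ra=1.0):
    """Equation 4: B = k' * exp(-x / (r/ra) ** b)."""
    return k_prime * math.exp(-x / (r / ra) ** b)


rates = [5, 10, 20, 40, 60, 120, 240]
for r in rates:
    print(f"r = {r:4d}: Eq. 3 {herrnstein(r):6.1f}, Eq. 4 {momentum_steady_state(r):6.1f}")

max_gap = max(abs(herrnstein(r) - momentum_steady_state(r)) for r in rates)
print(f"largest difference: {max_gap:.1f} responses/min")
```

Over this grid the two functions differ by at most a few responses per minute, which is small relative to the variability of typical steady-state data.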

A reliable finding of research on resistance to change is that adding experimentally defined extraneous reinforcers to one component of a multiple schedule both decreases response rate and increases resistance to change in that component even if the added reinforcers are qualitatively different from those produced by responding (e.g., Grimes & Shull, 2001; Shahan & Burke, 2004). This general result is not well explained by Equation 3 (see Nevin, Tota, Torquato, & Shull, 1990), but follows from Equation 4. Moreover, Herrnstein's (1970) extension of Equation 3 to describe multiple-schedule performance made some predictions that have proven erroneous. Because Equation 4 characterizes resistance to change as well as steady-state performance, we will employ it throughout this paper.

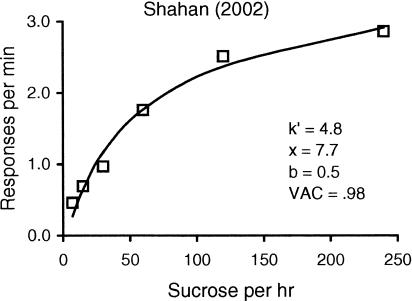

In addition to describing rates of food-reinforced operants, Equation 4 also describes rates of observing responses that produce contact with discriminative stimuli. Shahan (2002) examined the effects of variations in rate of primary reinforcement on observing-response rates of rats. Observing responses produced exposure to stimuli differentially correlated with otherwise unsignaled periods of response-independent sucrose deliveries or extinction. The rate of sucrose deliveries was varied across conditions. As shown in Figure 2, his average data conform closely to the predictions of Equation 4 with b equal to 0.5.

Fig. 2. The relation between the rate of observing by rats (from Shahan, 2002) and the predictions of Equation 4. Parameter values are given in the legend, and VAC indicates the proportion of variance explained.

Relatedly, Shahan, Magee, and Dobberstein (2003) examined the resistance to change of observing behavior in pigeons. In their experiments, unsignaled periods of food reinforcement on a random-interval (RI) schedule alternated with extinction in both components of a multiple schedule. Observing responses in both components produced stimuli correlated with the RI and extinction periods. The RI schedule in one component arranged food deliveries at four times the rate arranged in the other component. Observing occurred at a higher rate, and was more resistant to prefeeding and to intercomponent food deliveries, in the component in which it produced discriminative stimuli associated with the higher rate of primary reinforcement. A structural-relation analysis of their data found that the exponent b in Equation 4 was close to 0.5 for both observing and food-key responding. Thus observing, an overt analog of unmeasured attending to discriminative stimuli, behaves like food-maintained responding with respect to both steady-state rate and resistance to change in single and multiple schedules. For these reasons, we use Equation 4 to predict the probability of attending as a function of reinforcer rate in the model of conditional-discrimination performance developed below.
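As a worked illustration (not the analysis reported by Shahan, Magee, and Dobberstein), Equation 2 with b = 0.5 implies the following for a 4:1 ratio of component food rates, assuming that rs is the food rate in each component and that the same disrupter value x acts in both components, so that x and ra cancel:

$$
\frac{m_{\text{rich}}}{m_{\text{lean}}} = \left(\frac{r_{\text{rich}}}{r_{\text{lean}}}\right)^{b} = 4^{0.5} = 2,
\qquad
\frac{\log(B_x/B_o)_{\text{rich}}}{\log(B_x/B_o)_{\text{lean}}} = \frac{m_{\text{lean}}}{m_{\text{rich}}} = 0.5
$$

That is, under these assumptions the log proportion of baseline observing in the richer component should be about half that in the leaner component.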

THE DAVISON-NEVIN MODEL OF CONDITIONAL DISCRIMINATION PERFORMANCE

In a typical observing-response procedure, responses produce stimuli that signal the conditions of reinforcement for a single response. In a conditional discrimination procedure such as matching to sample (MTS), by contrast, reinforcers are given for one or the other of two responses depending on the value of a preceding stimulus. Specifically, one of two sample stimuli is presented at the start of each trial. After a fixed period of exposure, or after completion of a response requirement, two comparison stimuli are presented, one of which is the same as the sample. A response to the comparison that is the same as the sample is deemed correct and may be reinforced. In arbitrary or symbolic matching, the comparisons are physically different from the samples, and reinforcement availability is determined by a rule specifying the correct comparison for each sample. In experiments characterized as signal detection or recognition, responses are usually defined topographically (e.g., pecks at left or right key with pigeons; saying “Yes” or “No” with humans). The conditional-discrimination paradigm encompasses both matching-to-sample and signal detection, and our model applies to both tasks.

For simplicity and consistency with previous analyses, we will designate the samples as S1 and S2, with responses B1 and B2 defined by the comparisons C1 and C2. Thus, in a standard MTS procedure with pigeons, red or green illumination of a center key will be designated S1 or S2. Illumination of the side keys with red and green, alternating irregularly between left and right, will be designated C1 and C2, and pecks on the keys displaying C1 and C2 will be designated B1 and B2 regardless of their left or right position.

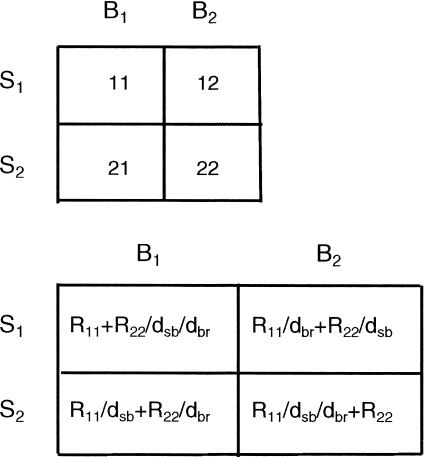

In discrete-trial conditional discriminations, it is convenient to array the stimuli (S1 and S2) and responses (B1 and B2) in a 2 × 2 matrix as shown in the upper panel of Figure 3. Cell entries are subscripted by row–column notation and represent numbers of events. Thus, for example, B11 and B22 are the numbers of correct responses, and B12 and B21 are the numbers of incorrect responses, each tallied over a specified period of experimentation. Likewise, R11 and R22 are the numbers of reinforcers for correct responses, and R12 and R21 are 0 (no experimentally arranged consequences) in all cases considered here. Following Alsop (1991) and Davison (1991), Davison and Nevin (1999) assumed that the effects of R11 and R22 generalized to the other cells as a result of confusion between the stimuli and contingencies within the matrix. They identified confusability as the inverse of distance in a two-dimensional psychometric space, with its axes defined by stimulus-behavior and behavior-reinforcer contingencies. The distance between two discriminated operants with different stimuli is given by dsb, which depends on the physical difference between S1 and S2 and the sensory capacities of the subject. The distance between operants with different response definitions or contingencies is given by dbr, which depends on the physical difference between the definitions of B1 and B2 and on variables such as unsignaled delays to reinforcement that would alter the discriminability of the behavior-reinforcer contingency. Confusabilities are expressed as the inverse of distances, 1/dsb and 1/dbr; if either parameter equals 1.0, discrimination performance is at chance. The resulting matrix of direct and generalized reinforcers is shown in the bottom panel of Figure 3.

Fig. 3. The basic conditional-discrimination matrix for two stimuli and two responses; cells are designated by row-column notation as shown at the top, and cell entries represent numbers of events. The lower panel presents the matrix of effective reinforcers with R11 contingent on B11 and with R22 contingent on B22, generalizing to the other cells according to the Davison-Nevin (1999) model.

Davison and Nevin (1999) assumed that responses were allocated to the cells of the matrix in the top panel of Figure 3 so as to match the ratios of the sums of direct and generalized reinforcers, as shown in the lower panel. The resulting expressions are cumbersome and can be found in Davison and Nevin (pp. 447–450), together with a more extensive rationale for their approach. From these expressions, Davison and Nevin calculated the expected numbers of responses in each cell of the matrix for various values of dsb, dbr, and R11/R22, and predicted the value of discrimination accuracy defined as log D = 0.5 log[(B11/B12)(B22/B21)]. Note that log D is the log of the geometric mean of the predicted ratios of correct to incorrect responses on S1 and S2 trials. It is defined identically to a frequently used empirical measure of discrimination accuracy, log d, which is calculated from experimental data (see Davison & Tustin, 1978).
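A minimal sketch of the Davison-Nevin response probabilities follows. It assumes the generalization structure described above: R11 generalizes to B12 with weight 1/dbr, to B21 with weight 1/dsb, and to B22 with weight 1/(dsb·dbr), and R22 generalizes symmetrically. The published expressions (pp. 447–450) were not reproduced here, so the formulas should be checked against Figure 3 before use; the function names are hypothetical.

```python
import math


def effective_reinforcers(r11, r22, dsb, dbr):
    """Direct plus generalized reinforcers in each cell (assumed structure).

    Keys are (stimulus, response); for example, (1, 2) is the B12 cell.
    """
    return {
        (1, 1): r11 + r22 / (dsb * dbr),
        (1, 2): r11 / dbr + r22 / dsb,
        (2, 1): r11 / dsb + r22 / dbr,
        (2, 2): r22 + r11 / (dsb * dbr),
    }


def p_b1(r11, r22, dsb, dbr):
    """Matching within each row of the matrix: returns p(B1|S1), p(B1|S2)."""
    e = effective_reinforcers(r11, r22, dsb, dbr)
    p1 = e[(1, 1)] / (e[(1, 1)] + e[(1, 2)])
    p2 = e[(2, 1)] / (e[(2, 1)] + e[(2, 2)])
    return p1, p2


def log_D(p1, p2):
    """log D = 0.5 * log10[(B11/B12) * (B22/B21)], from row probabilities."""
    return 0.5 * math.log10((p1 / (1 - p1)) * ((1 - p2) / p2))


for ratio in (0.01, 0.1, 1.0, 10.0, 100.0):
    p1, p2 = p_b1(ratio, 1.0, dsb=100, dbr=100)
    print(f"R11/R22 = {ratio:>6}: p(B1|S1) = {p1:.3f}, "
          f"p(B1|S2) = {p2:.3f}, log D = {log_D(p1, p2):.3f}")
```

Under this sketch, log D is largest at R11/R22 = 1 and declines toward the extremes, the inverted-U form discussed below, and setting either dsb or dbr to 1.0 drives p(B1|S1) and p(B1|S2) toward chance.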

Although the Davison-Nevin (1999) model was quite successful in accounting for a wide range of results for conditional discriminations in discrete trials, it had three shortcomings—all of which were acknowledged—that are addressed in this paper.

First, the model predicts that when the R11/R22 ratio is varied with dsb constant, the relations between log(B1/B2) and log(R11/R22), plotted separately for S1 and S2 trials, are curvilinear and converge as log(R11/R22) becomes extreme. As a result, the predicted relation between log D and log(R11/R22) is concave down with a maximum at log(R11/R22) = 0. This functional form has rarely been reported in any of the many relevant studies (e.g., McCarthy & Davison, 1980); indeed, a review by Johnstone and Alsop (1999) found that many reported functions were concave up, exactly the opposite of the Davison-Nevin predictions.

Second, the model predicts that log D is the same for all conditions with the same dsb, dbr, and R11/R22 ratio, regardless of the absolute rates or values of R11 and R22. Thus it could not explain the positive relation between signaled reinforcer probability and accuracy of signal detection reported by Nevin, Jenkins, Whittaker, and Yarensky (1982, Experiment 2) and systematically replicated with MTS in multiple schedules by Nevin, Milo, Odum, and Shahan (2003).

Third, although the Davison-Nevin (1999) model could be extended to free-operant multiple-schedule performance, in which S1 and S2 durations are lengthened so that B1 and B2 (here identical) can occur repeatedly, the extension was cumbersome and laden with free parameters (pp. 467–469). Moreover, Davison and Nevin did not attempt to model the well-established finding that response rates in multiple schedules are more resistant to change in the richer component.

The mispredictions of steady-state discrimination performance result from an implicit assumption in the Davison-Nevin (1999) model, namely, that the subject always attends to the stimuli, no matter how infrequent the reinforcers. To address these shortcomings, we will use Equation 4, derived from behavioral momentum theory, to predict the probability of attending to the stimuli in discrete-trial conditional discriminations, as well as response rates and their resistance to change.

A MODEL OF ATTENDING

In this section, we outline a model of attending to the sample and comparison stimuli in a conditional discrimination. The model has two components: a structure that is independent of reinforcement effects, and a momentum-based model of attending in relation to reinforcement.

Model Structure

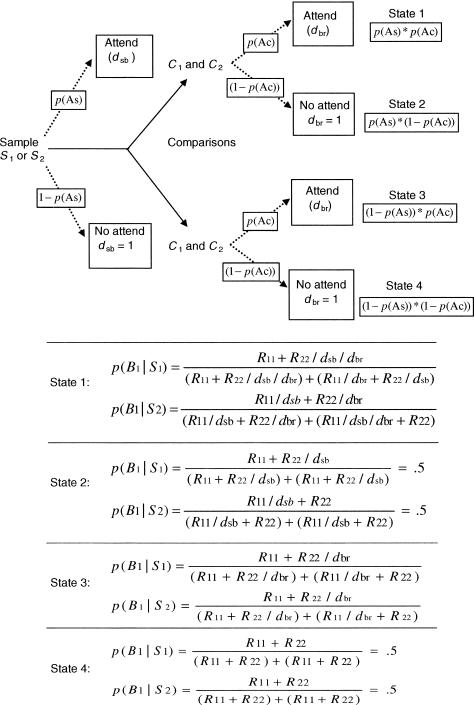

In a standard MTS trial with pigeons as subjects, the sample S1 or S2 is presented on a center key followed by comparisons C1 and C2, which define choice responses B1 or B2, on the side keys. We assume that on each trial the subject attends to the sample with probability p(As), and then attends to the comparisons with probability p(Ac). We assume further that p(As) is the same for S1 and S2, whichever is presented on a given trial, and that p(Ac) is the same for C1 and C2. The process may be represented as a Markov chain as shown in Figure 4. If the subject attends to the sample and comparisons, it emits B1 or B2 as predicted by Davison and Nevin (1999; see bottom panel of Figure 3). The formulas for probabilities of B1|S1 and B1|S2 are given in Figure 4, State 1. If the subject does not attend to the samples, S1 and S2 are ignored (or completely confused), so that dsb in the model is 1.0. If it then attends to the comparisons, the probabilities of B1|S1 and B1|S2 are determined by R11/R22 as modulated by dbr only (State 3 in Figure 4). Note that if R11 = R22, the expressions for State 3 reduce to 0.5. If the subject does not attend to the comparisons, C1 and C2 are ignored, dbr is 1.0, and the expressions in Figure 4, States 2 and 4, reduce to 0.5. Consequently, responses are directed randomly to the left or right keys with probability 0.5 regardless of whether the subject attended to the sample or not (for present purposes, we will neglect inherent side-key biases).

Fig. 4. Schematic model of attending in a conditional discrimination. The subject attends to the sample with p(As). If it attends, the discriminability of stimulus–behavior relations is dsb in the Davison-Nevin (1999) model; if it does not attend, dsb = 1. It then attends to the comparisons with p(Ac). If it attends, the discriminability of behavior–reinforcer relations is dbr in the Davison-Nevin model; if it does not attend, dbr = 1. Combining these possibilities leads to four states; for each state, the Davison-Nevin expressions for the probabilities of B1|S1 and B1|S2 (see Figure 3) are given in the lower portion of the figure.

The overall performance resulting from a mixture of trials with and without attending to sample and comparison stimuli is predicted by pooling the response probabilities for the four states summarized in Figure 4, each weighted by its probability: p(As)p(Ac) for State 1, p(As)[1 − p(Ac)] for State 2, [1 − p(As)]p(Ac) for State 3, and [1 − p(As)][1 − p(Ac)] for State 4. The basic idea is the same as Heinemann and Avin's (1973) quantification of attending during the acquisition of a conditional discrimination (see also Blough, 1996). Our analysis of attending in relation to reinforcer probabilities in multiple schedules (see below) implies that attending, like overt operant behavior, can be controlled by environmental stimuli such as the key colors signaling schedule components. As such, our account is consistent with the work of Heinemann, Chase, and Mandell (1968), who demonstrated control of attending to one or the other of two stimulus dimensions by differential reinforcement with respect to those dimensions.
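The pooling rule can be sketched as a weighted sum over the four states of Figure 4. The state probabilities use an assumed form of the Davison-Nevin expressions (R11 generalizing to B12 with weight 1/dbr, to B21 with 1/dsb, and to B22 with 1/(dsb·dbr), and R22 symmetrically), written with numerator and denominator multiplied by dsb·dbr. Function names are hypothetical, and the expressions should be checked against Figure 4.

```python
def dn_p_b1(r11, r22, dsb, dbr):
    """Assumed Davison-Nevin p(B1|S1) and p(B1|S2) for one attending state."""
    p1 = (dsb * dbr * r11 + r22) / (dsb * dbr * r11 + r22 + dsb * r11 + dbr * r22)
    p2 = (dbr * r11 + dsb * r22) / (dbr * r11 + dsb * r22 + dsb * dbr * r22 + r11)
    return p1, p2


def pooled_p_b1(r11, r22, dsb, dbr, p_as, p_ac):
    """Weight the four attending states of the Markov chain by their probabilities."""
    states = [
        (p_as * p_ac, dsb, dbr),              # State 1: sample and comparisons attended
        (p_as * (1 - p_ac), dsb, 1.0),        # State 2: sample only; dbr = 1
        ((1 - p_as) * p_ac, 1.0, dbr),        # State 3: comparisons only; dsb = 1
        ((1 - p_as) * (1 - p_ac), 1.0, 1.0),  # State 4: neither; chance
    ]
    p1 = p2 = 0.0
    for weight, d_sb, d_br in states:
        s1, s2 = dn_p_b1(r11, r22, d_sb, d_br)
        p1 += weight * s1
        p2 += weight * s2
    return p1, p2


print(pooled_p_b1(1.0, 1.0, dsb=100, dbr=100, p_as=0.7, p_ac=1.0))
```

With p(As) = p(Ac) = 1.0 the pooled values reduce to the Davison-Nevin predictions, and with both set to 0 every response probability is 0.5.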

The discriminability parameters dsb and dbr and the attending probabilities p(As) and p(Ac) have somewhat similar functions in the proposed model. For example, setting dsb = 1.0 has the same effect as setting p(As) = 0. Likewise, setting dbr = 1.0 has the same effect as setting p(Ac) = 0. However, there are some important differences between them. As noted above, Davison and Nevin (1999) conceptualized dsb and dbr as distances in a psychometric space within which various discriminated operants could be arrayed. Thus their values reflect long-term structural features of the experiment such as the sensory capacities of the subject, the physical differences between S1 and S2 and between C1 and C2, and the distinctiveness of the contingency between B1 and B2 (which are defined by C1 and C2) and the reinforcers R11 and R22. By contrast, attending occurs probabilistically from trial to trial, where p(As) and p(Ac) depend on reinforcer rates in the same way as free-operant response rates. The independence of attending and discriminability as model parameters suggests that a subject could attend with high probability (because of frequent reinforcement) to stimuli that were difficult to distinguish (low dsb or dbr), or conversely, attend with low probability (because of infrequent reinforcement) to stimuli that were highly discriminable.

Effects of Overall Reinforcer Rate

We assume that unmeasured attending is related to the rate of reinforcement correlated with a stimulus relative to its context in the same way as food-reinforced free-operant responding or overt observing behavior that produces discriminative stimuli. Thus attending to the samples and comparisons would be more probable and more resistant to change in the presence of stimuli correlated with higher rates of reinforcement.

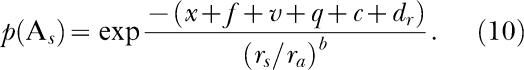

We propose that attending to S1 and S2 in a conditional discrimination is given by the following version of Equation 4:

$$p(A_s) = \exp\left[\frac{-x}{(r_s/r_a)^b}\right] \qquad (5)$$

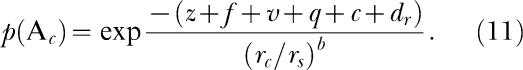

where x is background disruption or distraction that interferes with attending to the sample. The reinforcer rate in a schedule component rs is here identified with the reinforcer rate for observing or attending behavior preceding and during sample presentation. Thus rs is given by reinforcers per session divided by total time from onset of intertrial intervals or multiple-schedule components to offset of the samples. The session average reinforcer rate ra is defined as above for Equation 4. No scalar analogous to k′ in Equation 4 is needed because the asymptote of p(As) is 1.0. Equation 5 states that attending in a schedule component is positively related to component reinforcer rate rs relative to the overall average session reinforcer rate ra. Therefore, p(As) is predicted to be higher and more resistant to change in the richer of two multiple-schedule components with a given increase in disruption, just like response rate. We also assume that attending to C1 and C2 is similarly dependent on reinforcer rate relative to its context. However, for p(Ac), the relevant reinforcer rate is that obtained within the MTS trial after sample offset, designated rc, and the context is the schedule component within which the trial occurs. Thus
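Equation 5 can be sketched directly. The session reinforcer counts, time totals, and session-average rate ra below are hypothetical values chosen only to show how p(As) in a rich and a lean component changes as background disruption x increases; the function names are also hypothetical.

```python
import math


def p_attend_sample(x, rs, ra, b=0.5):
    """Equation 5: p(As) = exp(-x / (rs/ra) ** b), which approaches 1.0 as rs/ra grows."""
    return math.exp(-x / (rs / ra) ** b)


def sample_context_rate(reinforcers_per_session, time_to_sample_offset_s):
    """rs: reinforcers per session divided by total time from intertrial-interval
    (or component) onset to sample offset, summed over the session."""
    return reinforcers_per_session / time_to_sample_offset_s


# Hypothetical two-component multiple schedule; times in seconds.
rs_rich = sample_context_rate(60, 1200.0)  # 0.05 reinforcers/s
rs_lean = sample_context_rate(15, 1200.0)  # 0.0125 reinforcers/s
ra = 0.02                                  # assumed session-average rate

for x in (0.1, 0.3, 0.6):                  # increasing background disruption
    print(f"x = {x}: p(As) rich {p_attend_sample(x, rs_rich, ra):.3f}, "
          f"lean {p_attend_sample(x, rs_lean, ra):.3f}")
```

In this sketch the log of p(As) in the rich component is half that in the lean one at every value of x, so attending declines more slowly in the richer component as disruption grows, paralleling the prediction for response rate.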

$$p(A_c) = \exp\left[\frac{-z}{(r_c/r_s)^b}\right] \qquad (6)$$

where z represents background disruption of attending to C1 and C2, which may or may not be the same as x, the background disruption of attending to the samples. In standard MTS trials, the reinforcer rate rc is given by the reciprocal of the mean latencies of B1 and B2. When a retention interval intervenes between samples and comparisons, rc is given by the reciprocal of the sum of the retention interval and the mean latencies of B1 and B2. Because latencies of responding to the comparison stimuli are rarely reported, we assume 1-s latencies throughout. The model parameters and related terms are summarized in Appendix A.
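The within-trial rate rc and Equation 6 can be sketched as follows, using the 1-s choice latency assumed in the text. The component rate rs (one reinforcer per 60 s) and the disruption value z are hypothetical, and rc and rs must be expressed in the same units (here, reinforcers per second).

```python
import math


def within_trial_rate(retention_interval_s, latency_s=1.0):
    """rc = 1 / (retention interval + mean choice latency), with 1-s latency as in the text."""
    return 1.0 / (retention_interval_s + latency_s)


def p_attend_comparisons(z, rc, rs, b=0.5):
    """Equation 6: p(Ac) = exp(-z / (rc/rs) ** b)."""
    return math.exp(-z / (rc / rs) ** b)


rs = 1 / 60.0  # hypothetical component reinforcer rate (per second)
z = 1.0        # hypothetical background disruption of attending to C1 and C2

for delay in (0, 2, 4, 8, 16):
    rc = within_trial_rate(delay)
    print(f"retention interval {delay:2d} s: rc = {rc:.3f}/s, "
          f"p(Ac) = {p_attend_comparisons(z, rc, rs):.3f}")
```

Because rc falls as the retention interval lengthens, p(Ac) in this sketch declines with delay while any structural parameter such as dbr is left untouched.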

Predicted Effects of Differential Reinforcement

The predictions that follow from variations in p(As) within the Markov structure of Figure 4 are illustrated in the following section. These illustrative predictions assume that the ratio of reinforcers for the two sorts of correct responses, R11/R22, is varied systematically with total reinforcement, R11 + R22, held constant. The constancy of R11 + R22 implies constancy of p(As) and p(Ac) while the ratio of reinforcers, R11/R22, is varied. Therefore, Equations 5 and 6 are irrelevant and we can ignore the parameters b, x, and z. Because p(Ac) is likely to be close to 1.0 in detection or MTS experiments with frequent reinforcement and no retention interval separating samples and comparisons, we concentrate on the predicted effects of p(As) < 1.0, corresponding to values of x > 0 in Equation 5, with p(Ac) = 1.0.

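Davison and Tustin (1978) suggested that when the difference between S1 and S2 is constant, with the reinforcer ratio R11/R22 varied, the ratios of B1 to B2 on S1 and S2 trials could be described by the generalized matching law. In logarithmic form, neglecting inherent bias:

$$\log\left(\frac{B_{11}}{B_{12}}\right) = a\log\left(\frac{R_{11}}{R_{22}}\right) + \log d \qquad (7a)$$

$$\log\left(\frac{B_{21}}{B_{22}}\right) = a\log\left(\frac{R_{11}}{R_{22}}\right) - \log d \qquad (7b)$$
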
where a represents sensitivity to reinforcement ratios and log d provides an empirical measure of discrimination between S1 and S2 (see above). Many data sets conform reasonably well to the predicted linear relation between log response and reinforcer ratios. However, the equations of Davison and Nevin (1999) predict functions for S1 and S2 that are curved, becoming horizontal at high and low reinforcer ratios, respectively.

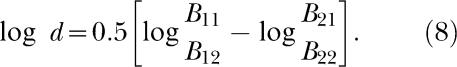

The empirical measure of discrimination accuracy, log d, is obtained by subtracting Equation 7b from Equation 7a (we use logarithms to the base 10 here and for the applications below):

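$$\log d = 0.5\left[\log\left(\frac{B_{11}}{B_{12}}\right) - \log\left(\frac{B_{21}}{B_{22}}\right)\right] \qquad (8)$$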

Equation 8 implies that measured discrimination, log d, is independent of the reinforcer ratio, and a number of studies have reported rough constancy of log d when the reinforcer ratio is varied. By contrast, the Davison-Nevin equations predict that log D is an inverted-U function of the log reinforcer ratio. As noted above, such functions are rarely reported.
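Equations 7 and 8 suggest a simple way to estimate both parameters from response counts. The sketch below computes log d by Equation 8 and estimates a as the least-squares slope through the origin of the mean of Equations 7a and 7b, in which log d cancels. Fitting through the origin reflects the "neglecting inherent bias" assumption; the function names and counts are hypothetical.

```python
import math


def log_d(b11, b12, b21, b22):
    """Equation 8: log d = 0.5 * [log(B11/B12) - log(B21/B22)], base-10 logs."""
    return 0.5 * (math.log10(b11 / b12) - math.log10(b21 / b22))


def sensitivity_a(conditions):
    """Slope through the origin of 0.5 * [log(B11/B12) + log(B21/B22)] on log(R11/R22).

    Averaging Equations 7a and 7b cancels log d, leaving a * log(R11/R22).
    conditions: iterable of (r11, r22, b11, b12, b21, b22) tuples.
    """
    num = den = 0.0
    for r11, r22, b11, b12, b21, b22 in conditions:
        xr = math.log10(r11 / r22)
        y = 0.5 * (math.log10(b11 / b12) + math.log10(b21 / b22))
        num += xr * y
        den += xr * xr
    return num / den


print(log_d(90, 10, 20, 80))  # hypothetical counts from a single condition
```

Because log d drops out of the averaged equation, the two estimates do not depend on each other, which is why Equation 8 yields a measure of discrimination that is unaffected by the reinforcer ratio when Equation 7 holds.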

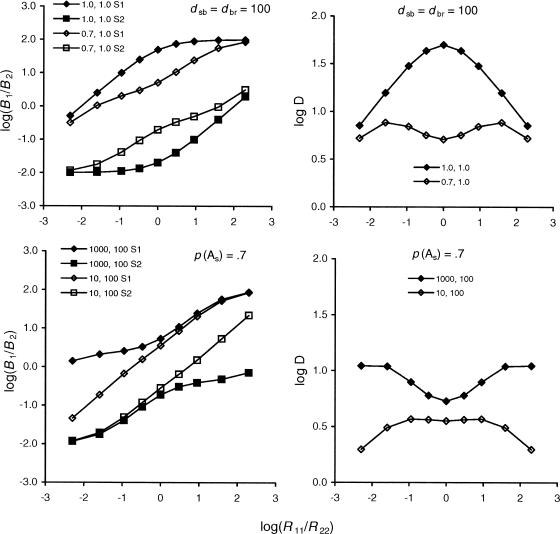

Interestingly, when the ratio of reinforcers for the two correct responses, R11/R22, is varied with total reinforcement, R11 + R22, constant, the model summarized in Figure 4 can generate a range of function forms for response ratios and log D, as shown in Figure 5. The filled symbols in the top left panel show the Davison-Nevin (1999) predictions with both p(As) and p(Ac) set at 1.0, with R11/R22 varied over a wide range. If p(As) is reduced to .7 (unfilled symbols), the functions become more nearly linear, with some wiggles that would be difficult to detect in real data. In the top right panel, the corresponding functions for log D show the strong inverted-U form predicted by Davison and Nevin when p(As) = 1.0 (filled symbols), and a more nearly horizontal gull-wing form when p(As) = .7 (unfilled symbols). The bottom left panel shows the effects of different values of dsb with dbr fixed at 100 and p(As) = .7. If dsb = 1000 (an extremely easy discrimination, filled symbols), the functions are nonlinear with two clear inflections. If dsb = 10 (a moderately difficult discrimination, unfilled symbols) the functions are essentially linear over the range from −1.5 to +1.5 log units on R11/R22. (The function for dsb = 100, p(As) = .7 in the top panel is an intermediate version.) The corresponding functions for log D in the bottom right panel are U-shaped for dsb = 1000 (filled symbols) and roughly horizontal for dsb = 10 (unfilled symbols) over the same range. Thus the relation between log D and log R11/R22 can take on a variety of forms, depending on the values of dsb and p(As). Because most signal-detection research has arranged moderately difficult discriminations and a restricted range of R11/R22, the absence of clear curvilinearity in the response-ratio functions and the apparent independence of measured discrimination, log d, from the reinforcer ratio are not surprising.

Fig. 5. The left column displays predicted functions relating log ratios of responses, B1/B2, to the log ratio of reinforcers for correct responses, R11/R22, separately for S1 and S2 trials. The upper panel shows the effects of two values of p(As) with dsb = dbr = 100. The lower panel shows the effects of two values of dsb with dbr and p(As) constant. The right column displays predicted relations between log D, which is given by the difference between the corresponding functions in the left column, and the log reinforcer ratio. p(Ac) is set at 1.0 for all predictions. See text for further description.

Although variations in dsb and p(As) lead to clearly distinguishable predictions, variations in dbr and p(Ac) do not. For example, with p(As) = 1.0, predictions for dbr = 100, p(Ac) = .8, are indistinguishable from those for dbr = 8, p(Ac) = 1.0. However, their effects can be distinguished empirically. Because we have identified dbr with the discriminability of the response-reinforcer contingency, the model predicts that the estimated value of dbr should be positively related to the physical difference between C1 and C2. By contrast, p(Ac) depends on the within-trial reinforcer rate, so its estimated value should be constant with respect to the difference between C1 and C2. We show below that when Jones (2003) varied the difference between C1 and C2 in MTS, with R11/R22 varied over a wide range and R11 + R22 constant, his data accord with these expectations. Conversely, in delayed matching to sample, dbr should be constant and p(Ac) should decrease as rc decreases with the length of the retention interval. A model and analysis of delayed discrimination by Nevin, Davison, Odum, and Shahan (in preparation) will address these expectations.²

APPLICATIONS: DIFFERENTIAL REINFORCEMENT

We now apply the model of attending summarized above to three studies that arranged differential reinforcement for the two correct responses, B11 and B22, in conditional discriminations while holding reinforcer totals constant. First, Jones (2003) varied the reinforcer ratio over a wide range with two levels of discriminability between the comparisons C1 and C2. Second, Alsop (1988) varied the reinforcer ratio over three values with five levels of discriminability between samples S1 and S2. Third, Nevin, Cate, and Alsop (1993) varied the reinforcer ratio over five values with two levels of discriminability between samples S1 and S2 and between responses, B11 and B22. Taken together, these studies test the ability of the present model to account for data that are not entirely consistent with Davison and Nevin's (1999) model, which in effect assumed that p(As) and p(Ac) were always 1.0.

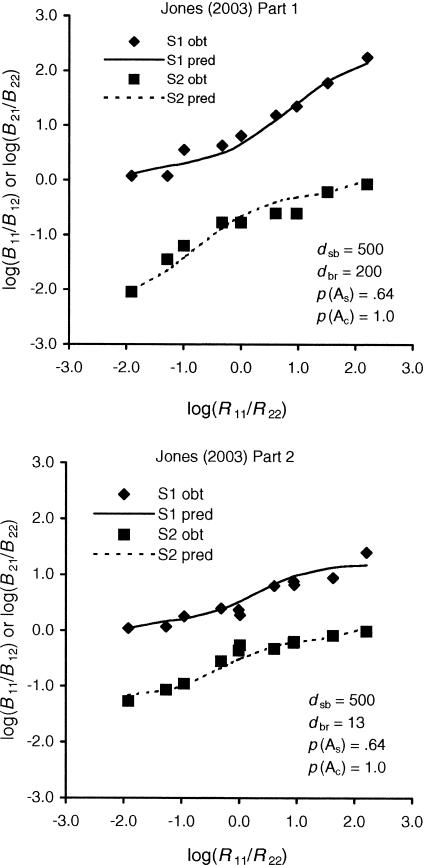

Jones (2003) reported a comprehensive set of MTS data with R11 + R22 constant, R11/R22 varied over an unusually wide range, and unusually extensive exposure to each condition. The sample and comparison stimuli differed in brightness. In Part 1 of his experiment, the differences between S1 and S2 and between C1 and C2 were both large; in Part 2, the difference between C1 and C2 was reduced. The functions relating log(B1/B2) to log(R11/R22) for S1 and S2 trials were curvilinear and were not well described by the basic Davison-Nevin (1999) model (see Jones, 2003, Figure 5). However, predictions of the present model fitted the data quite well, as shown in Figure 6. Because the overall reinforcer rate was the same in all conditions of both parts, Equations 5 and 6 would yield the same p(As) and p(Ac) in every condition, so we fitted p(As) and p(Ac) directly for the entire data set. We estimated dbr separately for Parts 1 and 2 (designated dbr1 and dbr2) because the comparison stimuli differed between parts, and we estimated a single dsb for both parts because the S1-S2 difference was the same. There were 40 independent data points fitted by five parameters: dsb, dbr1, dbr2, p(As), and p(Ac); their values are given in the panels of Figure 6. The overall proportion of variance explained by the model (VAC) is .98. The best-fitting value of dsb was 500, but varying dsb over the range from 100 to 1000 decreased VAC by less than .02. The estimate of dsb is relatively poor because high values correspond to very low error rates. For example, dsb = 100 corresponds to one error in 100 trials, whereas dsb = 1000 corresponds to one error in 1000 trials. For this reason, the value of dsb accounts for rather little of the data variance in easy discriminations.
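Each fit in this section is summarized by VAC. A minimal sketch of the statistic, assuming the conventional definition VAC = 1 − SSE/SST, where SSE sums squared deviations of obtained from predicted values and SST sums squared deviations of obtained values from their mean; the function name `vac` and the numbers are hypothetical, not data from Jones (2003).

```python
def vac(obtained, predicted):
    """Proportion of variance accounted for, assuming VAC = 1 - SSE/SST."""
    if len(obtained) != len(predicted) or len(obtained) < 2:
        raise ValueError("need two equal-length sequences of at least two values")
    mean_obs = sum(obtained) / len(obtained)
    sse = sum((o - p) ** 2 for o, p in zip(obtained, predicted))  # residual variation
    sst = sum((o - mean_obs) ** 2 for o in obtained)             # total variation
    return 1.0 - sse / sst

# Hypothetical obtained and predicted values, for illustration only.
obtained = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
print(round(vac(obtained, predicted), 3))  # 0.98
```

In an easy discrimination errors are rare, so changing a parameter such as dsb alters only a few small predicted values; SSE, and hence VAC, barely moves.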

Fig. 6. Fits of predictions by the model to the data of Jones (2003), Part 1 (upper panel) and Part 2 (lower panel). Parameter values are shown in the legend for each panel; overall VAC = .98. See text for explanation.

The best-fitting values of dbr1 and dbr2 were 200 and 13. The lower value of dbr for the data of Part 2 reflects the reduced discriminability of the comparisons when the difference between C1 and C2 was decreased. When dbr1 and dbr2 were varied independently, dbr1 could vary from 50 to 1000, and dbr2 could vary from 5 to 20, with no more than .02 loss in VAC. Thus, although dbr1 and dbr2 were not tightly estimated, the data were best fitted with dbr1 greater than dbr2, whereas p(Ac) was constrained to take the same value for both parts because the conditions of reinforcement were the same. These results are consistent with the separate determination of dbr and p(Ac) by the response-defining stimuli and by reinforcement variables.

In one condition of Part 2, Jones (2003) arranged extinction for B1 on S1 trials and continuous reinforcement for B2 on S2 trials. Thus the ratio of R11 to R22 was zero, its logarithm is undefined, and the data cannot be plotted in Figure 6. Nevertheless, the data are interesting and challenging as an extreme case. According to the model of attending shown in Figure 4, p(B1|S1) and p(B1|S2), and therefore log(B1|S1/B2|S1) and log(B1|S2/B2|S2), must be the same whenever R11 or R22 is zero. That is, discrimination between S1 and S2 is predicted to be zero. Contrary to this prediction, Jones obtained average values of −0.04 and −0.80 for log(B11/B21) and log(B12/B22), respectively, implying fairly good discrimination (log d = 0.38, half the difference between these two log response ratios).

To interpret his results, Jones (2003) construed trials with C1 on the left and C2 on the right, and trials with C1 on the right and C2 on the left, as different configurations, thus defining eight discriminated operants maintained by different relative frequencies of reinforcement. His data for the condition with extinction versus continuous reinforcement, as well as his other data, were explained by this approach. As shown in Figure 6, our attention-based model accounts for all his other data quite well without invoking different configurations of C1 and C2. Furthermore, our model can accommodate the effects of extinction on S1 trials by adding a small value to all cells of the reinforcement matrix of Figure 1, based on the fact that all responses advance the trial sequence and thereby lead to delayed reinforcement. Doing so would introduce another free parameter into the model, and we forgo this added complexity for the present.
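The log d value quoted for Jones's extinction condition can be checked with the usual definition of log d as half the difference between the log response ratios on S1 and S2 trials, equivalently half the log of (B11·B22)/(B21·B12), where Bij denotes response i on Sj trials. A minimal sketch under that assumption (it reproduces the quoted 0.38); the function names and the count example are hypothetical.

```python
import math

def log_d_from_ratios(log_ratio_s1, log_ratio_s2):
    """log d as half the separation of the S1-trial and S2-trial log response ratios."""
    return 0.5 * (log_ratio_s1 - log_ratio_s2)

def log_d_from_counts(b11, b21, b12, b22):
    """The same measure from raw response counts (Bij = response i on Sj trials)."""
    return 0.5 * math.log10((b11 * b22) / (b21 * b12))

# Averages quoted in the text for Jones's extinction condition.
print(round(log_d_from_ratios(-0.04, -0.80), 2))  # 0.38

# Hypothetical counts: 90 B1 and 10 B2 on S1 trials, 20 B1 and 80 B2 on S2 trials.
print(round(log_d_from_counts(90, 10, 20, 80), 3))  # 0.778
```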

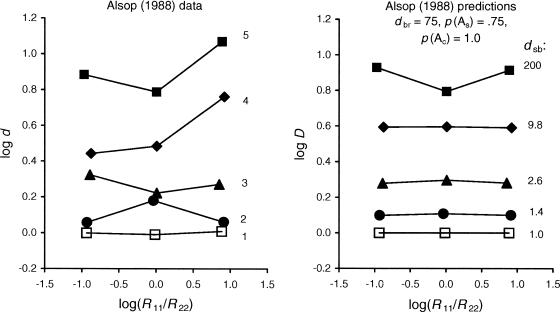

A second data set was provided by Alsop (1988), who varied the physical difference between S1 and S2 (rather than between C1 and C2, as in Jones, 2003) over five conditions in a signal-detection procedure where B1 and B2 were defined by their location. Differences between S1 and S2 were defined ordinally, including a condition with no difference, with reinforcer ratios R11/R22 arranged at 9:1, 1:1, and 1:9 in each condition. The left panel of Figure 7 presents Alsop's (1988) mean data, showing that the shape of the function relating log d to R11/R22 depended on the level of discrimination between S1 and S2. The right panel of Figure 7 shows our model's predictions with different values of dsb for each function, but with dbr, p(As), and p(Ac) the same for all five functions. Thus predicted log D values were derived from eight free parameters estimated from 30 response ratios, with overall VAC = .96. The predicted functions are concave up at high levels of discrimination and nearly horizontal at intermediate and low levels, corresponding to the trends in Alsop's data. With p(As) < 1.0, our model captures the main effects in Alsop's data with the smallest possible number of free parameters. However, the asymmetries in the data cannot be explained by our model without additional (and ad hoc) parameters.

Fig. 7. The left panel displays the relation between log d and log(R11/R22) with the difference between S1 and S2 varied over five conditions, including zero difference, from Alsop (1988). The functions are identified by the ordinal difference between S1 and S2. The right panel displays predicted relations between log D and log(R11/R22) for different values of dsb, shown with each function; other parameter values are given in the legend. Overall VAC = .96. See text for explanation.

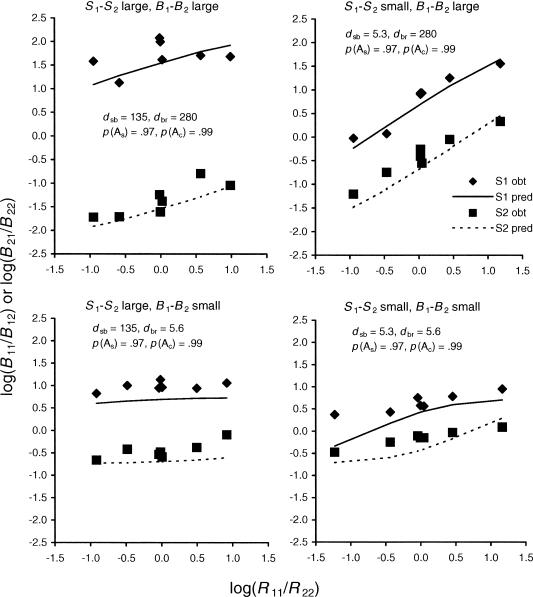

A third data set was provided by Nevin et al. (1993), who varied the differences between samples S1 and S2 and between responses B1 and B2 in a factorial design. S1 and S2 were defined as brighter or dimmer lights on one key, and B1 and B2 were defined as shorter or longer response latencies on a second key. Over four sets of conditions, the difference between S1 and S2 luminances was either large (0.066 log units) or small (0.032 log units), and the difference between B1 and B2 latencies was either large (0 to 1 s vs. > 2 s) or small (1 to 2 s vs. 2 to 3 s). Within each set of conditions, the reinforcer ratio R11/R22 was varied systematically over five values with two replications for R11 = R22, giving 56 data points in all. Total reinforcement, R11 + R22, was constant across all conditions. The data are shown in Figure 8 together with model predictions and parameter values. Measured discrimination (log d; the separation between response-ratio functions for S1 and S2) was directly related to the differences between S1 and S2 and between B1 and B2. The sensitivity of response ratios to reinforcer ratios (the slope of the response-ratio functions; a in Equations 7a and 7b) was directly related to the difference between B1 and B2, but inversely related to the difference between S1 and S2.

Fig. 8. Fits of predictions of the model to the data of Nevin et al. (1993). The panels display data and predictions for sets of conditions with large or small differences between S1 and S2 and between B1 and B2. Parameter values are given in each panel; overall VAC = .92. See text for explanation.

This complex pattern of results is predicted by our model with two values of dsb, two values of dbr, p(As) = .97, and p(Ac) = .99. The overall VAC is .92; allowing dsb and dbr to take different values for each set of conditions improves VAC by less than .01. The data generally fall above the predictions, suggesting a bias toward the shorter-latency response that was most pronounced when the difference between B1 and B2 was small. The model cannot account for inherent biases of this sort without introducing an additional parameter that would take different values across sets of conditions. Also, the predicted functions for small differences between S1 and S2 and between B1 and B2 (lower right panel) are curved, whereas the data are linear. The major result, though, is that the main effects of the differences between S1 and S2 and between B1 and B2 were well predicted with dsb independent of the difference between B1 and B2 and with dbr independent of the difference between S1 and S2. When Nevin et al. (1993) fitted their data with an earlier version of the Davison-Nevin (1999) model proposed by Alsop (1991) and Davison (1991), they found that dbr depended on the difference between S1 and S2, and that the differences between S1 and S2 and between B1 and B2 interacted in determining dsb. The present model, with probabilities of attending slightly less than 1.0, remedies these difficulties.
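The sensitivity a discussed for these data is the slope of a response-ratio function. A minimal sketch of estimating it, assuming the response-ratio functions are approximately linear in log-log coordinates so that a can be obtained by ordinary least squares of log(B1/B2) on log(R11/R22); this is a generic regression, not the authors' fitting procedure, and the data are hypothetical values generated from a known line (slope 0.6, intercept 0.3).

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept of ys on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical S1-trial data: five log reinforcer ratios and the log response
# ratios a line with slope 0.6 and intercept 0.3 would produce.
log_reinf = [-1.0, -0.5, 0.0, 0.5, 1.0]
log_resp = [0.3 + 0.6 * x for x in log_reinf]
a, intercept = fit_line(log_reinf, log_resp)
print(round(a, 3), round(intercept, 3))  # 0.6 0.3
```

Fitting the S1-trial and S2-trial functions separately in this way gives one slope per function; the separation between the two lines corresponds to measured discrimination.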

In summary, when reinforcer totals are constant and reinforcer ratios are varied, the model correctly predicts the forms of functions relating log response ratios or log d to log reinforcer ratios when the differences between the sample stimuli and the comparison stimuli or responses are varied separately in conditional discriminations. Moreover, it does so with a minimum of parameters, and the values of discriminability parameters dsb and dbr correspond at least ordinally with empirical variables. More important, dsb remains constant when the comparison stimuli or responses are varied, and dbr remains constant when the samples are varied. We turn now to the effects of varying total reinforcement on the probabilities of attending to the samples and comparisons, which were constant within the experiments analyzed above.

APPLICATION: MULTIPLE SCHEDULES OF REINFORCEMENT FOR CORRECT RESPONSES

As noted above, the original Davison-Nevin (1999) model predicted that variations in total reinforcement, R11 + R22, would have no effect on accuracy of conditional discrimination performance, and did not consider the effects of total reinforcement on its resistance to change. However, Nevin et al. (2003) found that both steady-state accuracy and its resistance to change depended on total reinforcement in much the same way as response rate in multiple schedules where R11 + R22 varied between components.

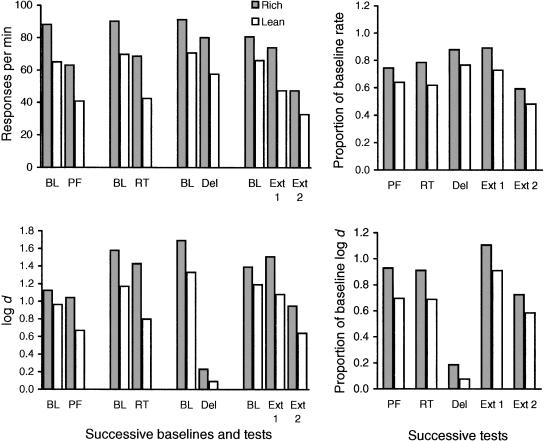

In a variation of a paradigm introduced by Schaal, Odum, and Shahan (2000), Nevin et al. (2003) arranged equal VI 30-s schedules in two multiple-schedule components, where responding produced MTS trials with vertical and slanted lines as the samples (S1, S2) and comparisons (C1, C2). Reinforcer probabilities for correct matches were .8 in one component (designated rich) and .2 in the other (designated lean). After stable response rates and accuracies were established, performance was disrupted by prefeeding, by presenting food during intercomponent intervals (ICIs), or by inserting a 3-s retention interval before onset of C1 and C2, each for five sessions. Baseline performances were reestablished after each disruption. Finally, food reinforcement was discontinued for 10 sessions of extinction. Nevin et al. found that response rates and discrimination accuracies (measured as log d) usually were higher in baseline and were more resistant to all four disrupters in the richer component. They concluded that the strength of discriminating, like the strength of free-operant responding, was positively related to reinforcer rate. Here, we show that these reinforcement effects on discrimination accuracy follow directly from our model.

A summary of the average data from Nevin et al. (2003) is shown in Figure 9. The response-rate data are consistent with standard multiple-schedule results in that baseline response rates were higher and decreased less under disruption, relative to baseline, in the rich component. The discrimination data suggest that accuracy was similarly related to component reinforcer rates. Specifically, log d was higher and decreased less, relative to baseline, in the rich component, and the decreases were similar to those observed for response rate during prefeeding, intercomponent food, and extinction. However, when performance was disrupted by inserting a 3-s retention interval, response rates were relatively unaffected whereas log d decreased to near-chance levels.

Fig. 9. A summary of the average data of Nevin et al. (2003). The upper left panel shows response rates and the lower left panel shows discrimination accuracies, measured as log d, in baseline and during disruption by prefeeding (PF), ICI food presentations at random times (RT), the abrupt introduction of a 3-s retention interval between sample and comparison stimuli (Del), and the termination of reinforcement (Ext, in two 5-session blocks), separately for rich (shaded bars) and lean (open bars) components. The corresponding right panels show average levels of performance during disruption expressed as proportions of the immediately preceding baseline.

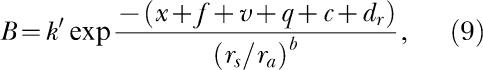

We begin by showing that Equation 4, with the numerator of the exponent modified by adding terms corresponding to the addition of the various disrupters, describes the average multiple-schedule VI response rates of Nevin et al. (2003). Equation 4 is repeated here as Equation 9 with added terms representing the four disruptive operations:

[Equation 9]

where k′, b, x, rs, and ra are defined as above; f represents the additional disruptive effect of prefeeding; v represents the additional effect of ICI food; q represents the additional effect of the retention interval; and c and d represent the additional effects of discontinuing the contingency and changing the reinforcer rate from rs to zero during extinction (see Nevin, McLean, & Grace, 2001; Nevin & Grace, 2005). We used a nonlinear curve-fitting program (Microsoft Excel Solver) to estimate values of the parameters, with rs and ra based on programmed reinforcer rates. The results are shown in Figure 10, left panel, and the fitted parameter values are given in Table 1, with the exponent b fixed at 0.50. Because there were seven free parameters and 18 data points, an excellent fit is hardly surprising.
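The baseline part of such a fit has a convenient structure. A minimal sketch, assuming that the baseline form of Equation 9 (that is, Equation 4) is B = k′ exp[−x/(rs/ra)^b]: taking natural logs gives ln B = ln k′ − x·u with u = (rs/ra)^−b, so with b fixed the fit reduces to a straight-line regression. This log-linear regression is a swapped-in technique, not the Solver procedure described above, which minimized squared deviations on the raw scale; with noisy data the two criteria give somewhat different estimates. The function name and the noiseless data (generated with k′ = 100, x = 0.3) are hypothetical.

```python
import math

def fit_equation4_baseline(rel_rates, responses, b=0.5):
    """Estimate (k', x), assuming B = k' * exp(-x / (rs/ra)**b) with b fixed."""
    us = [r ** (-b) for r in rel_rates]          # u = (rs/ra)^(-b)
    ys = [math.log(bv) for bv in responses]      # ln B
    n = len(us)
    mu, my = sum(us) / n, sum(ys) / n
    slope = (sum((u - mu) * (y - my) for u, y in zip(us, ys))
             / sum((u - mu) ** 2 for u in us))
    intercept = my - slope * mu
    return math.exp(intercept), -slope           # ln k' is the intercept; x is -slope

# Hypothetical noiseless baseline rates at four relative reinforcer rates.
rel = [0.4, 1.0, 1.6, 2.5]
rates = [100 * math.exp(-0.3 / r ** 0.5) for r in rel]
k_prime, x = fit_equation4_baseline(rel, rates)
print(round(k_prime, 2), round(x, 3))  # 100.0 0.3
```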

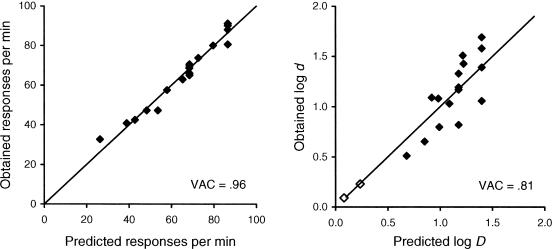

Fig. 10. The left panel shows the agreement between obtained response rates displayed in Figure 9 and those predicted by Equation 9 with parameter values given in Table 1. The right panel shows the agreement between log d values displayed in Figure 9 and those predicted by Equations 10 and 11 with parameter values given in Table 1 (filled diamonds). The unfilled diamonds represent log d during introduction of a 3-s retention interval.

Table 1. Parameter values for model fits to the data of Nevin et al. (2003). Response rates were fitted by Equation 9, and log d was fitted by Equations 10 and 11 with dsb = dbr = 150; b = 0.5 throughout.

| Fitted measure | k′ | x | z | f | v | q | c | d | VAC |
|---|---|---|---|---|---|---|---|---|---|
| Responses per minute | 109 | 0.33 | — | 0.40 | 0.34 | 0.12 | 0.15 | 0.001 | .96 |
| Log d | — | 0.08 | 0.00 | 0.18 | 0.05 | 1.41 | 0.05 | 0.000 | .81 |

As described above, we assume that the effects of reinforcement on attending to S1 and S2 are quantitatively similar to its effects on response rate in the data of Nevin et al. (2003), and rewrite Equation 5 for p(As) with terms added as in Equation 9:

[Equation 10]

Likewise, for attending to C1 and C2, we rewrite Equation 6 with the same added terms:

[Equation 11]

In the procedure of Nevin et al. (2003), reinforcers in the rich component occurred four times as frequently as in the lean component both within MTS trials and within the components as a whole, so rc/rs, and therefore p(Ac), must be the same in both components. Moreover, because within-trial latencies are short relative to the average time between trial presentations, rc/rs is high and p(Ac) should approximate 1.0. Therefore, States 2 and 4 of Figure 4 will rarely if ever be entered, and variations in the data will depend primarily on variations in p(As) except when retention intervals are used as disrupters.
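These qualitative predictions can be illustrated numerically. A minimal sketch, assuming that Equation 10 has the momentum-type form p(As) = exp[−(x + added disrupters)/(rs/ra)^b], consistent with the text's statements that x = 0 implies p(As) = 1.0 and that disrupter values are nonnegative. All rates and disrupter values below are hypothetical; the rich and lean rates only preserve the 4:1 ratio implied by reinforcer probabilities of .8 and .2, and ra is taken as the mean of the two component rates, ignoring intercomponent time.

```python
import math

def p_attend(rel_rate, disruption, b=0.5):
    """Assumed momentum-type form: p = exp(-disruption / rel_rate**b)."""
    return math.exp(-disruption / rel_rate ** b)

# Hypothetical component reinforcer rates (arbitrary units) in a 4:1 ratio.
rs = {"rich": 0.8, "lean": 0.2}
ra = sum(rs.values()) / len(rs)  # session average, equal component durations assumed
x, f = 0.1, 0.2                  # hypothetical background and prefeeding disrupters

results = {}
for comp, r in rs.items():
    baseline = p_attend(r / ra, x)
    disrupted = p_attend(r / ra, x + f)
    results[comp] = (baseline, disrupted / baseline)  # (level, proportion of baseline)
    print(comp, round(baseline, 3), round(disrupted / baseline, 3))

# Hypothetical within-trial rates: if rc is the same multiple of rs in both
# components, rc/rs (and hence p(Ac)) is identical in rich and lean.
rc = {comp: 30 * r for comp, r in rs.items()}
print(math.isclose(rc["rich"] / rs["rich"], rc["lean"] / rs["lean"]))  # True
```

Under these assumptions the rich component shows both a higher baseline p(As) and a smaller proportional decrease under the added disrupter, which is the ordinal pattern reported for log d.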

We fitted Equations 10 and 11 to the accuracy data of Nevin et al. (2003) by determining the parameter values that minimized the sum of squared differences between predicted log D and obtained log d. The calculations for predicting log D are summarized in Appendix B; the full worksheet for estimating parameter values is available on the JEAB website. As noted above, it is necessary to assume or to fit dsb and dbr in order to calculate predicted log D. In a study of symbolic matching to sample, Godfrey and Davison (1998) found that varying the difference between S1 and S2 did not affect dbr, and that varying the difference between C1 and C2 did not affect dsb (see also the analysis of Nevin et al., 1993, above). Moreover, they found that when the difference between S1 and S2 was the same as the difference between C1 and C2, dsb was equal to dbr. Accordingly, we will assume that dsb = dbr here and in another MTS study below. With dsb = dbr = 150, predicted log D approximated the maximum average value of log d in the baseline data, so this value was used in fitting the full data set.

The results are shown in the right panel of Figure 10. Note that the unfilled diamonds, which represent the disruptive effects of inserting a 3-s retention interval between sample and comparison stimuli, are well explained by the decrease in rc that necessarily follows when a nonzero retention interval is introduced; a fully developed model of delayed discriminations is in preparation.

The best-fitting parameters are given in Table 1; the value of z was 0.0, so that p(Ac) in baseline was 1.0 as suggested above. The value of dsb = dbr has relatively little impact on the quality of the fit: Values ranging from 100 to 1000 altered VAC by less than .02 for reasons noted above. Clearly, the fit is less satisfactory than for response rate, but examination of Figures 9 and 10 suggests that much of the data variance arises from variations in log d between successive baseline determinations, displayed in the roughly vertical clusters of data points in Figure 10. Most importantly, our model of attending captures the major ordinal results of Nevin et al. that were problematic for the Davison-Nevin (1999) model: Accuracy of discrimination, like response rate, is higher and more resistant to change in the richer component.

APPLICATION: FIXED-RATIO REINFORCEMENT FOR CONDITIONAL DISCRIMINATIONS

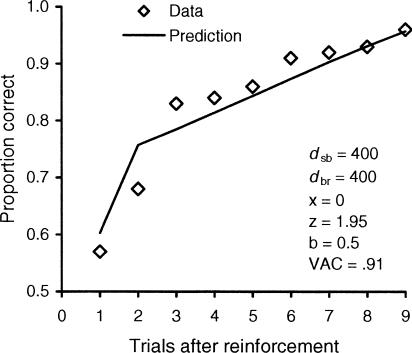

Another finding that raises problems for the Davison-Nevin (1999) model is the progressive increase in accuracy within a series of trials between reinforcers that are contingent on a fixed number of correct responses in successive (but not necessarily consecutive) trials. The result holds for MTS (Mintz, Mourer, & Weinberg, 1966; Nevin, Cumming, & Berryman, 1963) and for signal detection (Nevin & MacWilliams, 1983). It is problematic because, as noted above, the Davison-Nevin model predicts that if dsb and dbr are constant, then variations in overall reinforcer rate or probability between conditions, between components, or within trial sequences have no effect on accuracy. Although it might be argued that the delay to reinforcement for responses early in the fixed ratio would degrade dbr, the discriminability of response-reinforcer relations, it would then be necessary to assume that dbr itself comes under the control of the number of trials elapsing since reinforcement. It is at least equally reasonable to assume that attending, like overt responding, depends on delay to reinforcement. Here we show that if successive trials are treated analogously to multiple-schedule components in the paradigm of Nevin et al. (2003), with reinforcer rate in each successive trial given by the reciprocal of delay to reinforcement, the progressive increase in accuracy within a fixed ratio follows directly from our model. The assumption of correspondence between schedule components and successive trials is supported by the work of Mintz et al., who provided exteroceptive cues corresponding to postreinforcer trial number, demonstrated that the cues controlled accuracy, and obtained results similar to those of Nevin et al. (1963).

Nevin et al. (1963) required five key pecks at the center-key sample, following which the comparisons were presented on the side keys while the sample remained lighted. A 1-s intertrial interval separated successive trials. Reinforcement followed 10 (not necessarily consecutive) trials with correct responses. In a separate condition, all correct responses were reinforced (FR 1). To determine p(As) and p(Ac), we used Equations 5 and 6 with ra as the overall average reinforcer rate in a session and rsi as the reinforcer rate in the ith trial after a previous reinforcer, given by the reciprocal of the sum of intertrial time plus cumulative time to complete five sample-key pecks from the ith trial to reinforcement, as reported by the authors. The within-trial reinforcer rate rci was taken as the reciprocal of cumulative latencies from the ith trial to reinforcement, assuming 1-s latencies to the comparisons. As a result, both rs/ra and rc/rs increase systematically as the ratio advances. Then, with dsb = dbr = 400 (chosen to approximate the data with FR 1) and with b = 0.5, the model accounted for 91% of the data variance. Again, the values of dsb and dbr had little effect on the quality of the fit: With dsb = dbr ranging from 100 to 4000, VAC was altered by less than .01 for the reasons described above. As shown in Figure 11, which also gives the model parameters, the predicted function agrees reasonably well with the average data. According to the model, the fact that x = 0 implies that p(As) = 1.0 (i.e., the subjects attended to the samples on every trial), so the entire effect arises from variations in attending to the comparisons, p(Ac).

Fig. 11. Proportion of correct responses over nine consecutive unreinforced trials when reinforcement is available for the 10th trial with a correct response. Data are averages from Nevin et al. (1963); the predicted function is given by Equations 5 and 6 with parameter values shown in the figure. See text for explanation.

In summary, the Davison-Nevin (1999) model in conjunction with a model of attending to sample and comparison stimuli (Figure 4) explains the effects of differential reinforcement for the two correct responses on response-ratio functions and on measured discrimination when total reinforcement is constant across conditions. It also accounts for the effects of varying the discriminability of the samples or comparisons. When the probability of attending is assumed to depend on variations in reinforcement in accordance with Equations 5 and 6, derived from behavioral momentum theory, the model also explains why both baseline accuracy and its resistance to change are positively related to total reinforcement in multiple schedules. It also accounts for the progressive increase in accuracy under fixed-ratio reinforcement of conditional-discrimination performance.

GENERAL DISCUSSION

Our model has at least two levels: its core assumptions, and their instantiation in a model that generates predictions for comparison with empirical data sets. The core assumptions that were set forth in the Introduction are repeated here.

Core Assumptions

First, the measured rate of an overt free operant, both in the steady state and during disruption, depends on reinforcer rate relative to the context according to a function (Equation 4) derived from Nevin's (1992b) formulation of behavioral momentum. This extension of behavioral momentum theory to steady-state response rate is supported by the similarity of the predictions of Equation 4 to those of Herrnstein's (1970) widely accepted formulation of steady-state response rate (Equation 3). In addition, Equation 4 was derived from Equation 2, which describes a great deal of the data on resistance to change, and several studies of resistance to change support Equation 4 over Equation 3.

Second, in a conditional discrimination, unmeasured probabilities of attending to the sample and comparison stimuli depend on reinforcement correlated with those stimuli relative to the context within which they appear according to the same function as for overt responding, both in the steady state and during disruption, with independent parameters characterizing disrupters of attending to the samples and comparisons. This assumption cannot be supported directly because attending is measurable only by inference from a model. However, indirect support comes from research on observing behavior, which is widely construed as an overt, measurable expression of attending and which was related to reinforcement and disruption in the same way as suggested by Equation 4 (Shahan, 2002; Shahan et al., 2003).

Third, given that a subject attends to the relevant stimuli, its behavior is described by the Davison-Nevin (1999) model of conditional-discrimination performance. This assumption is supported by the various lines of evidence marshaled by Davison and Nevin (1999).

From Assumptions to Predictions

The probabilities of attending to the samples and comparisons, p(As) and p(Ac), are determined by Equations 5 and 6 with parameters x and z representing the disruptive effects of unspecified but constant background factors such as competition from extraneous activities and their reinforcers. Additional parameters are needed to represent the effects of added, experimentally defined disrupters. All disrupter values are free parameters with values constrained to be greater than or equal to 0.

The relevant reinforcer rate for attending to the samples, rs, is calculated over the time before sample presentation, when a subject may engage in observing behavior or unmeasured attending that is reinforced by sample onset, plus the time when the sample is present. The session average reinforcer rate, ra, is calculated over an entire session excluding reinforcer durations.

To estimate the probability of attending to the comparisons, we have used the within-trial reinforcer rate, rc, based on time from offset of the sample to reinforcement (or time out if the response is not reinforced), with the reinforcer rate for the samples, rs, as the context. In calculating within-trial reinforcer rates, we assumed 1-s latencies to the comparisons in order to avoid infinite reinforcer rates in trials with zero retention intervals. We also assumed 1-s latencies in application to the data of Nevin et al. (2003) when a retention interval was introduced as a disrupter.
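The role of the assumed 1-s latency can be seen in a small calculation. A minimal sketch, assuming that Equation 6 has the form p(Ac) = exp[−z/(rc/rs)^b] and that rc can be approximated as the reinforcer probability divided by the time from sample offset to reinforcement; both the form of rc and all numerical values are hypothetical. With a zero retention interval the 1-s latency keeps rc finite, and introducing a 3-s retention interval lowers rc/rs and therefore p(Ac).

```python
import math

LATENCY_S = 1.0  # 1-s latency to the comparisons, as assumed in the text

def within_trial_rate(p_reinf, retention_s):
    """Hypothetical rc: reinforcer probability per second from sample offset to reinforcement."""
    return p_reinf / (retention_s + LATENCY_S)

def p_attend_comparisons(rc, rs, z, b=0.5):
    """Assumed form of Equation 6: p(Ac) = exp(-z / (rc/rs)**b)."""
    if z < 0:
        raise ValueError("disrupter values are constrained to be >= 0")
    return math.exp(-z / (rc / rs) ** b)

rs = 0.8 / 30.0  # hypothetical sample-context rate: a .8 chance of reinforcement per 30 s
z = 0.5          # hypothetical background disrupter for the comparisons
p_by_delay = {}
for delay in (0.0, 3.0):  # zero retention interval vs. a 3-s retention interval
    rc = within_trial_rate(0.8, delay)
    p_by_delay[delay] = p_attend_comparisons(rc, rs, z)
    print(f"delay {delay:.0f} s: rc = {rc:.3f}/s, p(Ac) = {p_by_delay[delay]:.3f}")
```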

We have assumed that the parameters representing the effects of background disrupters can take different values for attending to the samples (x) and comparisons (z). When overall reinforcement was held constant and no explicit external disrupters were arranged, as in Jones (2003), Alsop (1988), and Nevin et al. (1993), p(Ac) was equal or close to 1.0. As a result, p(Ac) did not contribute to the data fits and could be omitted from the model for those studies. However, the effects of explicit disrupters in Nevin et al. (2003) and of fixed-ratio reinforcement in Nevin et al. (1963) appear in the model as values of p(Ac), as well as p(As), less than 1.0. Thus for generality of model application, and for conceptual symmetry, both p(As) and p(Ac) are needed.

In view of their close temporal proximity in zero-retention-interval procedures, it may seem unreasonable to use different reinforcer rates, contexts, and background disrupters for attending to the samples and comparisons as specified in Equations 5 and 6. For the present, this approach appears to be successful in fitting a number of findings in the literature, but future research may suggest the need for modifications.

The exponent b in Equations 2, 4, 5, 6, 9, 10, and 11 represents the extent to which reinforcement determines resistance to change. For a given rate of reinforcement relative to the context, larger values of b correspond to greater resistance to background or experimentally arranged disrupters. Experiments with many different reinforcer rates and two levels of disruption, or with two reinforcer rates and many disrupter values, are needed to estimate b reliably. The experiments considered here were not designed to give reliable estimates of b; accordingly, we set b = 0.5 because that value is approximated in a number of multiple VI VI schedule studies designed specifically to evaluate it (Nevin, 2002).

Overview of Model Parameters and Fits

Ideally, a model parameter would take the same value, within error, across different sorts of determinations. For example, timing balls rolling down inclined planes, or timing the swings of a pendulum, give the same value of acceleration due to gravity in classical mechanics. Such consistency is a rarity in the quantitative analysis of behavior. However, the present modeling efforts provide several examples of parametric consistency between sets of conditions within an experiment. For example, the data of Jones (2003) were well fitted by holding dsb, p(As), and p(Ac) constant across conditions with differences in C1 and C2, and the data of Alsop (1988) were adequately fitted by holding dbr, p(As), and p(Ac) constant across conditions with differences in S1 and S2. Moreover, these constancies were dictated by the definitions of the terms of the model: dsb and dbr should depend on the sample and comparison stimuli and be independent of reinforcer rates (Davison & Nevin, 1999), whereas p(As) and p(Ac) should depend on reinforcer rates, which were essentially constant across conditions in both studies. When reinforcer rates varied between components within an experiment, as in Nevin et al. (2003), the background disrupter values were held constant across components because the evaluation of differential resistance to change requires that the same disrupter be applied to both components (Nevin, 1992b), and dsb and dbr were held constant across components because the stimuli were unchanged. Overall, we obtained good to excellent fits by holding constant those parameters that were identified with consistent aspects of the experiments, and allowing variation in parameters that were identified with experimental variables.

Although the number of parameters in our model is large by comparison with, say, the generalized matching law, all of them are necessary to represent experimentally defined features of the studies examined here, and the number of data points is substantially greater than the number of parameters. The constancy of parameters across independent studies may be evaluated as systematic, parametric research on conditional discriminations continues.

Testing the Model

The model predicts several effects that have not, to our knowledge, been explored in the research literature. For example, because we assume, following Nevin (1992b), that attending is controlled by reinforcement relative to a context (i.e., rs/ra), variations in reinforcer rate in one multiple-schedule component should produce contrast effects in accuracy, as well as response rate, in a second, constant component, with no changes in model parameters. Also, the progressive increase in accuracy within fixed-ratio trial sequences was modeled as resulting from increases in p(Ac). Because p(Ac) determines the extent to which differential reinforcement affects responding to C1 or C2, sensitivity to differential reinforcement should increase as the ratio advances. Research along these lines could provide some data of interest in their own right as well as tests of the present model.
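The contrast prediction follows because changing the reinforcer rate in one component changes ra, and therefore rs/ra, in the unchanged component. A minimal Python sketch, assuming that Equation 5 has the form p(As) = exp[-x/(rs/ra)^b] (the form that reproduces the Appendix B values), borrowing x = 0.1, b = 0.5, and the State 1 probability of .987 from Appendix B, and assuming a hypothetical session in which ra is simply the mean of two equal-duration components with no intercomponent intervals:

```python
import math

B = 0.5          # sensitivity b, as set in the text
X = 0.1          # background disrupter x (Appendix B value; assumed here)
STATE1 = 0.987   # p(B1|S1) when attending to samples and comparisons (Appendix B)

def p_attend_sample(rs, ra, x=X, b=B):
    """Assumed form of Equation 5: p(As) = exp(-x / (rs/ra)**b)."""
    return math.exp(-x / (rs / ra) ** b)

def log_d(p_as, p_ac=1.0):
    """log D for symmetric reinforcement; non-attending states respond at .5."""
    both = p_as * p_ac
    p = both * STATE1 + (1 - both) * 0.5
    return math.log10(p / (1 - p))

def constant_component_log_d(rs_constant, rs_varied):
    # Hypothetical simplification: equal component durations and no
    # intercomponent intervals, so ra is the plain mean of the two rates.
    ra = (rs_constant + rs_varied) / 2
    # p(Ac) depends on rc/rs, which is unchanged in the constant component,
    # so it is left at 1.0 (z = 0).
    return log_d(p_attend_sample(rs_constant, ra))

for rs_varied in (15.0, 60.0, 240.0):
    print(rs_varied, round(constant_component_log_d(60.0, rs_varied), 3))
```

In this sketch, enriching the varied component raises ra, lowers p(As) in the constant component, and so lowers its predicted log D (negative contrast); making the varied component leaner has the opposite effect, with every model parameter held fixed.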

As the model is structured, there is no way to be sure, a priori, whether a given experimental disrupter affects p(As), p(Ac), or both. In our analysis of the resistance-to-change data of Nevin et al. (2003), we assumed that all disrupters operated identically on both p(As) and p(Ac) (see Equations 10 and 11). It would be useful to devise methods for disrupting p(As) or p(Ac) separately—for example, presenting distracters only during presentation of the samples or the comparisons—to evaluate the independence of these attentional terms. In particular, as shown by example in Appendix B, decreasing p(As) by increasing background disrupter x is predicted to reduce measured accuracy more in the lean component of a multiple schedule, whereas decreasing p(Ac) by increasing background disrupter z is predicted to reduce measured accuracy more in the rich component. The effects of targeted disrupters could provide strong tests of the model.

It would be of special interest to examine measurable behavior that might accompany or correspond to attending, for example by requiring the subject to respond differentially to the samples (e.g., Urcuioli, 1985) or to adopt different positions within the chamber to view the comparisons (Wright & Sands, 1981). Analyses of this sort could measure the effects of disrupters directly, for comparison with model estimates of p(As) and p(Ac).

FINAL REMARKS

In the larger scheme of behavior theory, there are at least three ways to conceptualize our model. First, attending may be construed as a mental way station between discriminative stimuli and behavior, and as such our model may be accused of explaining behavioral data by “appeals to events taking place at some other level of observation, described in different terms, and measured, if at all, in different dimensions” (Skinner, 1950, p. 193). Second, the probabilities of attending may be construed as intervening variables whose names and attributes are irrelevant because they have no significance beyond their role in organizing and summarizing data. Third, attending may be construed as a hypothetical construct that refers to physically real but unmeasured activities that have properties similar to measured overt responding, and that must be evaluated by inference via a mathematical model. It is this final perspective that informs our theoretical efforts and provides challenges for future research.

Acknowledgments

Preparation of this article was supported by NIMH Grant MH65949 to the University of New Hampshire. A preliminary version was presented at the meeting of the Society for the Quantitative Analyses of Behavior, May 2004. We thank Stephen Lea for his thoughtful comments and suggestions on an earlier version of the manuscript.

APPENDIX A

Model Parameters and Terms

| Components of Conditional Discriminations | |
|---|---|
| S1, S2 | Sample stimuli in matching to sample or signal detection |
| C1, C2 | Comparison stimuli in matching to sample |
| B1, B2 | Responses defined by comparison stimuli or topography, represented as counts in the conditional-discrimination matrix of Figure 1 |
| R11, R22 | Numbers of reinforcers for B11, B22 |

| Model Structure | |
|---|---|
| dsb | Discriminability of stimulus-behavior relation. Depends on S1-S2 difference, sensory capacity; does not depend on reinforcer rate or allocation |
| dbr | Discriminability of behavior-reinforcer contingency. Depends on B1-B2 or C1-C2 difference, sensory or motor capacity; does not depend on reinforcer rate or allocation |
| p(As) | Probability of attending to S1 and S2. Depends on reinforcer rate relative to session context; does not depend on reinforcer allocation, dsb, or dbr |
| p(Ac) | Probability of attending to C1 and C2. Depends on within-trial reinforcer rate relative to context; does not depend on reinforcer allocation, dsb, or dbr |

| Momentum Equations | |
|---|---|
| B | Measured response rate (B/min) |
| rs | Component reinforcer rate in multiple free-operant schedules or reinforcer rate for attending to S1 and S2 |
| rc | Within-trial reinforcer rate after offset of sample stimuli for attending to C1 and C2 |
| ra | Overall average session reinforcer rate; ra = 1 for single free-operant schedules |
| x | Background disruption or competition for responding and for attending to sample stimuli |
| z | Background disruption or competition for attending to comparison stimuli |
| f, v, q, c, d | Parameters representing the effects of experimentally arranged disrupters: prefeeding, ICI food, delay, contingency termination, and generalization decrement |
| b | Sensitivity of changes in responding or in attending to values of rs/ra or rc/rs |

APPENDIX B

Method for calculating predicted log D for the rich and lean components in the study by Nevin et al. (2003), assuming dsb = dbr = 150 (see Figure 4).

| | Rich R11 | Rich R12 | Rich R21 | Rich R22 | Lean R11 | Lean R12 | Lean R21 | Lean R22 |
|---|---|---|---|---|---|---|---|---|
| Scheduled reinforcers | 96 | 0 | 0 | 96 | 24 | 0 | 0 | 24 |
| Effective reinforcers | 96 | 1.28 | 1.28 | 96 | 24 | 0.32 | 0.32 | 24 |

| Response probabilities | Rich p(B1\|S1) | Rich p(B1\|S2) | Lean p(B1\|S1) | Lean p(B1\|S2) |
|---|---|---|---|---|
| State 1 (attend to S1/S2, attend to C1/C2) | .987 | .013 | .987 | .013 |
| State 2 (attend to S1/S2, do not attend to C1/C2) | .500 | .500 | .500 | .500 |
| State 3 (do not attend to S1/S2, attend to C1/C2) | .500 | .500 | .500 | .500 |
| State 4 (do not attend to S1/S2, do not attend to C1/C2) | .500 | .500 | .500 | .500 |

Reinforcement rates required to calculate p(As) and p(Ac) from Equations 5 and 6 for rich and lean components with VI 30-s schedules, 2-s samples, 1-s latencies to comparisons, and four trials per component, with components separated by 30-s intercomponent intervals:

| | Rich | Lean |
|---|---|---|
| Rft/hr for attending to S1/S2 (rs) | 90 | 22.5 |
| Rft/hr for attending to C1/C2 (rc) | 2,880 | 720 |
| Session average rft/hr (ra) | 44.4 | 44.4 |

Parameters: x = 0.1, z = 0.0, b = 0.5

| | Rich p(As) | Rich p(Ac) | Lean p(As) | Lean p(Ac) |
|---|---|---|---|---|
| Calculated probabilities of attending | .932 | 1.00 | .869 | 1.00 |

| | Rich p(B1\|S1) | Rich p(B1\|S2) | Lean p(B1\|S1) | Lean p(B1\|S2) |
|---|---|---|---|---|
| Weighted probabilities of responding | .954 | .046 | .923 | .077 |

| Predicted log D | Rich | Lean |
|---|---|---|
| x = 0.1, z = 0.0 | 1.315 | 1.079 |
| x = 0.3, z = 0.0 | .927 | .657 |
| x = 0.1, z = 1.0 | .866 | .769 |

Note that relative to log D with x = 0.1, z = 0, increasing x reduces log D rich less than log D lean, whereas increasing z reduces log D rich more than log D lean.
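The appendix arithmetic can be reproduced in a short Python sketch. It assumes that Equations 5 and 6 take the form p(As) = exp[-x/(rs/ra)^b] and p(Ac) = exp[-z/(rc/rs)^b], which reproduces the tabled p(As) values of .932 and .869, and it takes the State 1 probability (.987) from the table rather than deriving it from dsb and dbr. Because that tabled entry is rounded, the printed log D values may differ from the tabled predictions in the third decimal place. The function and variable names are illustrative only.

```python
import math

B = 0.5          # sensitivity parameter b
STATE1 = 0.987   # p(B1|S1) in State 1, as tabled (rounded)
CHANCE = 0.5     # p(B1|S1) in States 2-4

def p_attend(disrupter, r, r_context, b=B):
    """Assumed form of Equations 5 and 6: exp(-disrupter / (r/r_context)**b)."""
    return math.exp(-disrupter / (r / r_context) ** b)

def predicted_log_d(rs, rc, ra, x, z, b=B):
    p_as = p_attend(x, rs, ra, b)   # attending to samples: context is ra
    p_ac = p_attend(z, rc, rs, b)   # attending to comparisons: context is rs
    both = p_as * p_ac              # probability of State 1
    # Weighted p(B1|S1): State 1 accuracy plus chance responding in States 2-4
    p = both * STATE1 + (1 - both) * CHANCE
    # Errors are symmetric here, so 0.5*log[(B11/B12)(B22/B21)] = log[p/(1-p)]
    return math.log10(p / (1 - p))

COMPONENTS = {"rich": (90.0, 2880.0), "lean": (22.5, 720.0)}  # (rs, rc) in rft/hr
RA = 44.4

results = {}
for x, z in [(0.1, 0.0), (0.3, 0.0), (0.1, 1.0)]:
    results[(x, z)] = {name: predicted_log_d(rs, rc, RA, x, z)
                       for name, (rs, rc) in COMPONENTS.items()}
    print(f"x = {x}, z = {z}: rich {results[(x, z)]['rich']:.3f}, "
          f"lean {results[(x, z)]['lean']:.3f}")
```

Comparing rows of the output shows the same asymmetry as the note: raising x lowers p(As) more in the lean component, whereas raising z lowers p(Ac) equally in both components (rc/rs = 32 in each), so its effect on log D is larger where p(As) is higher, that is, in the rich component.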

Footnotes

The role of context in determining resistance to change is not entirely clear. Nevin (1992a) found a strong effect when context varied between conditions, and context played a major role in several other studies reviewed by Nevin (1992b). However, Nevin and Grace (1999) found no effect when context varied within sessions. A systematic replication of Nevin (1992a) by Grace, McLean, and Nevin (2003) obtained mixed results.

A preliminary version entitled “Reinforcement, attending, and remembering” was presented at the meeting of the California Association for Behavior Analysis, February 2005.

REFERENCES

- Alsop B. Detection and choice. Unpublished doctoral dissertation, University of Auckland, New Zealand; 1988.

- Alsop B. Behavioral models of signal detection and detection models of choice. In: Commons M.L, Nevin J.A, Davison M.C, editors. Signal detection: Mechanisms, models, and applications. Hillsdale, NJ: Erlbaum; 1991. pp. 39–55.

- Baum W.M. Understanding behaviorism: Science, behavior, and culture. New York: HarperCollins; 1994.

- Blough D.S. Error factors in pigeon discrimination and delayed matching. Journal of Experimental Psychology: Animal Behavior Processes. 1996;22:118–131.

- Davison M.C. Stimulus discriminability, contingency discriminability, and complex stimulus control. In: Commons M.L, Nevin J.A, Davison M.C, editors. Signal detection: Mechanisms, models, and applications. Hillsdale, NJ: Erlbaum; 1991. pp. 57–78.

- Davison M, Nevin J.A. Stimuli, reinforcers, and behavior: An integration. Journal of the Experimental Analysis of Behavior. 1999;71:439–482. doi: 10.1901/jeab.1999.71-439.

- Davison M.C, Tustin R.D. The relation between the generalized matching law and signal-detection theory. Journal of the Experimental Analysis of Behavior. 1978;29:331–336. doi: 10.1901/jeab.1978.29-331.

- Dinsmoor J.A. The role of observing and attention in establishing stimulus control. Journal of the Experimental Analysis of Behavior. 1985;43:365–381. doi: 10.1901/jeab.1985.43-365.

- Godfrey R, Davison M. Effects of varying sample- and choice-stimulus disparity on symbolic matching-to-sample performance. Journal of the Experimental Analysis of Behavior. 1998;69:311–326. doi: 10.1901/jeab.1998.69-311.

- Grace R.C, McLean A.P, Nevin J.A. Reinforcement context and resistance to change. Behavioural Processes. 2003;64:91–101. doi: 10.1016/s0376-6357(03)00126-8.

- Grimes J.A, Shull R.L. Response-independent milk delivery enhanced persistence of pellet-reinforced lever pressing by rats. Journal of the Experimental Analysis of Behavior. 2001;76:179–194. doi: 10.1901/jeab.2001.76-179.

- Heinemann E.G, Avin E. On the development of stimulus control. Journal of the Experimental Analysis of Behavior. 1973;20:183–195. doi: 10.1901/jeab.1973.20-183.

- Heinemann E.G, Chase S, Mandell C. Discriminative control of “attention.” Science. 1968 May 3;160:553–554. doi: 10.1126/science.160.3827.553.

- Herrnstein R.J. On the law of effect. Journal of the Experimental Analysis of Behavior. 1970;13:243–266. doi: 10.1901/jeab.1970.13-243.

- Johnstone V, Alsop B. Stimulus presentation ratios and the outcomes for correct responses in signal-detection procedures. Journal of the Experimental Analysis of Behavior. 1999;72:1–20. doi: 10.1901/jeab.1999.72-1.

- Jones B.M. Quantitative analyses of matching to sample performance. Journal of the Experimental Analysis of Behavior. 2003;79:323–350. doi: 10.1901/jeab.2003.79-323.

- Marr M.J. The interpretation of themes: Opening the Baum-Staddon inkbattle. Journal of the Experimental Analysis of Behavior. 2004;82:71. doi: 10.1901/jeab.2004.82-71.

- McCarthy D.C, Davison M. Independence of sensitivity to relative reinforcement and discriminability in signal detection. Journal of the Experimental Analysis of Behavior. 1980;34:273–284. doi: 10.1901/jeab.1980.34-273.

- Mintz D.E, Mourer D.J, Weinberg L.S. Stimulus control in fixed-ratio matching to sample. Journal of the Experimental Analysis of Behavior. 1966;9:627–630. doi: 10.1901/jeab.1966.9-627.

- Nevin J.A. Behavioral contrast and behavioral momentum. Journal of Experimental Psychology: Animal Behavior Processes. 1992a;18:126–133.

- Nevin J.A. An integrative model for the study of behavioral momentum. Journal of the Experimental Analysis of Behavior. 1992b;57:301–316. doi: 10.1901/jeab.1992.57-301.

- Nevin J.A. Measuring behavioral momentum. Behavioural Processes. 2002;57:187–198. doi: 10.1016/s0376-6357(02)00013-x.

- Nevin J.A, Cate H, Alsop B. Effects of differences between stimuli, responses, and reinforcer rates on conditional discrimination performance. Journal of the Experimental Analysis of Behavior. 1993;59:147–161. doi: 10.1901/jeab.1993.59-147.

- Nevin J.A, Cumming W.W, Berryman R. Ratio reinforcement of matching behavior. Journal of the Experimental Analysis of Behavior. 1963;6:149–154. doi: 10.1901/jeab.1963.6-149.

- Nevin J.A, Grace R.C. Does the context of reinforcement affect resistance to change? Journal of Experimental Psychology: Animal Behavior Processes. 1999;25:256–268. doi: 10.1037//0097-7403.25.2.256.