Abstract

Context

Clinicians' nonverbal behaviors contribute to patients' responses to prognosis communication, yet little empirical evidence supports precise nonverbal behaviors and the mechanisms by which they contribute to perceptions of high-quality communication. Virtual reality (VR) is a promising tool for researching communication, allowing researchers to manipulate nonverbal behaviors in controlled simulation and examine outcomes.

Objectives

The goal of this pilot study was to assess whether manipulated changes in avatar doctors' nonverbal behaviors could lead to measurable differences in participant feelings, reactions, or sense of immersion in a VR scenario of prognosis communication.

Methods

In this pilot experiment, university student participants were randomized to a short prognosis communication simulation in immersive VR representing one of five nonverbal conditions: No nonverbals, Smile only, Nod only, Lean only, or All nonverbals. Outcomes included cognitive measures (e.g., cognitive load, recall), socioemotional measures (e.g., emotional valence, satisfaction, anxiety), and measures of immersion and presence.

Results

Our sample comprised 229 participants. Pilot experimental findings suggest that some participant responses differed as a result of the manipulation of nonverbal behaviors. However, results did not point to the presence or absence of a particular nonverbal behavior as driving reactions to prognostic communication.

Conclusions

VR can allow for experimental manipulation of nonverbal behavior. There is a need for further development to optimally conduct sensitive and ecologically valid communication simulations for research, and for research into discrete nonverbal behaviors to improve serious illness communication training and practice.

Innovation

VR experimental simulations are a promising tool for building the evidence base of nonverbal behavior in serious illness communication.

Keywords: Prognosis, Communication, Nonverbal communication, Virtual reality, Patient outcome assessment

Highlights

- Virtual reality simulations can help us study communication behaviors.
- High-quality communication skills include nonverbal behaviors such as smiling, nodding, and leaning.
- We could measure differences in responses to simulated nonverbal behaviors.
- Future work: develop further to generate evidence for communication skills training.

1. Introduction

High-quality clinical communication, a necessary component of quality care in serious illness, relies on both verbal (i.e., speech) and nonverbal behaviors (i.e., posture, facial expressions, paraverbal expressions like tone, volume, etc.) [1,2]. Comparatively less studied than their verbal counterparts, clinicians' nonverbal behaviors contribute to patients' responses to the encounter (e.g., satisfaction [3]); yet, little empirical evidence supports precise behaviors [4] and the mechanisms by which they contribute to perceptions of high-quality communication [5].

Training in clinical communication often lacks specific evidence and remains dependent on expert opinion (e.g., prognosis communication skills training [6,7]). When nonverbal behavior is included in clinical communication training, it is often referenced via aggregate or subjective items (e.g., “show good nonverbal behavior” [8], “listen intently and completely” [9,10]). These descriptions are difficult to translate into specific action (e.g., informing changes to training or improving practice), and clinicians often require robust evidence of effectiveness before investing time and resources in changing or adapting established practices [11,12].

Virtual reality (VR) offers a promising research tool for communication, as it enables the creation of highly controlled clinical scenario simulations [13,14]. VR allows researchers to systematically manipulate the presence or absence of specific nonverbal behaviors while holding verbal communication constant across conditions. Even highly trained actors in a video vignette cannot achieve this degree of control, and observational studies in real settings cannot adequately control for the breadth of potential confounding variables affecting encounters [4,[15], [16], [17], [18]]. VR could therefore enable the exploration of causal relationships between communication behaviors and outcomes in a manner that would otherwise be infeasible [4,19]. We have developed a VR oncology clinic environment with a virtual physician avatar, and are able to manipulate communication content, delivery, and contextual cues (e.g., clinician race or gender, environment). With this environment, we seek to examine how common nonverbal communication behaviors affect simulation viewers' emotional arousal, cognitive load, and other foundational factors relevant to effective serious illness communication, in the context of a prognosis information delivery simulation. Working in an experimental virtual reality platform represents a first step toward building an evidence base for nonverbal behavior in prognosis, prior to work with patients or caregivers in the serious illness setting.

The goal of this pilot study was to assess whether manipulated changes in avatars' nonverbal behaviors in a virtual reality interaction could lead to measurable differences in participant feelings, reactions, or sense of immersion in the simulation. In this pilot experiment, we manipulated three nonverbal behaviors (smiling, nodding, and forward lean) commonly included in descriptions of high-quality, patient-centered, and empathetic care [[20], [21], [22]] and examined university students' reactions to the simulations.

2. Materials and methods

2.1. Participants

Participants were recruited by email from Bentley University, located in the northeastern United States. Recruiting materials indicated that participants were invited to participate in a VR-based study of communication between doctors and patients. All participants provided their informed consent according to the requirements of Bentley University's Institutional Review Board (inclusion and exclusion criteria are reported in Appendix B). The study session required 60 min to complete, and participants received a $10 Amazon eGift Card as an incentive after completing the study.

2.2. Experimental design

This study employed a 4 (physician avatar) × 5 (nonverbal behavior) experimental design, with physician avatar (Angelo, James, Keegan, Khalil) and nonverbal behavior (None, Smile Only, Nod Only, Lean Only, Smile + Nod + Lean) as between-subjects factors. Our goal was to test specific nonverbal behaviors. We chose these three behaviors because they are commonly included in literature and training about compassionate, patient-centered care [[20], [21], [22]]; because we were able to manipulate them in the VR platform used in the study; and because they did not require participant involvement. Other nonverbal behaviors that are important to patient-centered communication, such as eye contact or touch, were not chosen because they would have required measurement of participant gaze or were otherwise not feasible with our existing VR hardware or software. Because we wanted to pilot multiple nonverbal behaviors with a limited sample size, we chose not to use a fully crossed experimental design (e.g., one including conditions such as Smile + Nod without Lean). We prepared the same simulations with four different avatars, holding the simulated nonverbal behaviors constant, to mitigate differences in perception potentially based on perceived race. Participants were randomly assigned to the experimental conditions (Table 1) and experienced one of the physician avatars (Fig. 2) delivering one of the nonverbal behavior animations (Fig. 3).
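The reasoning behind the reduced design can be made concrete with a short Python sketch (Python is used because the analysis was run in Python; the variable names are illustrative and not taken from the study materials). It counts the behavior combinations a fully crossed design would require and the 4 × 5 between-subjects cells actually used.

```python
from itertools import product

BEHAVIORS = ("smile", "nod", "lean")
AVATARS = ("Angelo", "James", "Keegan", "Khalil")

# A fully crossed design switches each behavior on or off independently:
# 2**3 = 8 behavior combinations.
full_factorial = [
    frozenset(b for b, on in zip(BEHAVIORS, flags) if on)
    for flags in product((False, True), repeat=len(BEHAVIORS))
]

# The five conditions used in the study: none, each behavior alone, all three.
study_conditions = {
    "No Nonverbals": frozenset(),
    "Smile Only": frozenset({"smile"}),
    "Nod Only": frozenset({"nod"}),
    "Lean Only": frozenset({"lean"}),
    "Smile + Nod + Lean": frozenset(BEHAVIORS),
}

# Combinations left out of the study, e.g. Smile + Nod without Lean.
omitted = [c for c in full_factorial if c not in study_conditions.values()]

# Crossing conditions with avatars gives the between-subjects cells.
cells = list(product(AVATARS, study_conditions))

print(len(full_factorial), len(study_conditions), len(omitted), len(cells))
```

The three omitted pairwise combinations are exactly the conditions the text says were dropped to conserve sample size.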

Table 1.

Experimental Study Design.

| Nonverbal behavior / Physician avatar | Angelo | James | Keegan | Khalil |
|---|---|---|---|---|
| No Nonverbals | n = 12 | n = 11 | n = 11 | n = 11 |
| Smile Only | n = 12 | n = 11 | n = 13 | n = 13 |
| Nod Only | n = 12 | n = 13 | n = 13 | n = 10 |
| Lean Only | n = 10 | n = 11 | n = 11 | n = 11 |
| Smile + Nod + Lean | n = 9 | n = 12 | n = 14 | n = 9 |
| Subtotal (N = 229) | n = 55 | n = 58 | n = 62 | n = 54 |
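Because the analysis collapsed conditions across physician avatars, the group sizes entering each comparison are the row totals of Table 1. A minimal Python sketch of that arithmetic, using only the counts in the table:

```python
# Cell counts from Table 1: rows are nonverbal conditions, columns are avatars.
AVATARS = ["Angelo", "James", "Keegan", "Khalil"]
CELL_COUNTS = {
    "No Nonverbals":      [12, 11, 11, 11],
    "Smile Only":         [12, 11, 13, 13],
    "Nod Only":           [12, 13, 13, 10],
    "Lean Only":          [10, 11, 11, 11],
    "Smile + Nod + Lean": [9, 12, 14, 9],
}

# Collapsing across avatars (as in the analysis) sums each row.
per_condition = {cond: sum(ns) for cond, ns in CELL_COUNTS.items()}

# Column sums reproduce the per-avatar subtotals reported in Table 1.
per_avatar = {a: sum(ns[i] for ns in CELL_COUNTS.values()) for i, a in enumerate(AVATARS)}

total = sum(per_condition.values())
print(per_condition)
print(per_avatar, total)
```

The collapsed groups range from 43 (Lean Only) to 49 (Smile Only), which is the unequal-group-size situation the Analysis section refers to.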

Fig. 1.

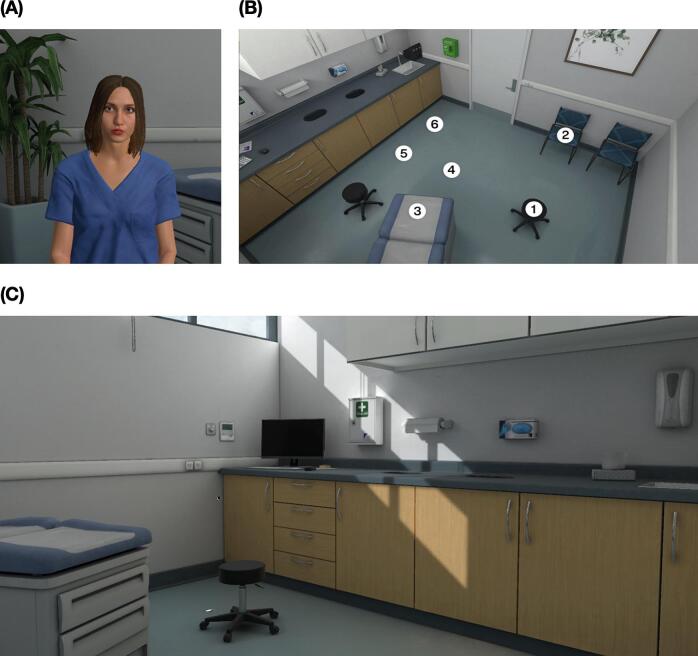

Nurse and views of clinical environment. (A) Nurse avatar (“Gabriella”). (B) Overhead view of the virtual clinic environment. The participant was seated in a chair aligned with the virtual chair at location #2 in the image. The nurse and doctor avatars appeared across from the participant, in the stool located at position #1 in the image. (C) Example view of the virtual clinic environment from the participants' perspective.

Fig. 2.

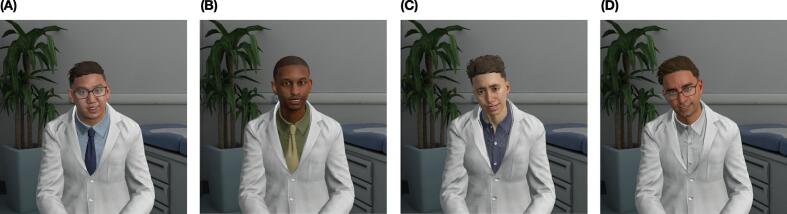

Physician avatars: (A) “Angelo”, (C) “James”, (D) “Keegan”, (E) “Khalil”. The physician avatar names are those provided within the Talespin platform (participants were not aware of these names, e.g., the nurse refers to the physician as the “doctor” during the simulation). The images of all four avatars are drawn from the Smile + Nod + Lean conditions.

Fig. 3.

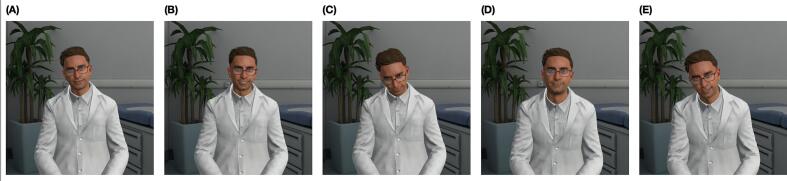

Nonverbal behavior conditions. (A) No Nonverbals, (B) Smile Only, (C) Nod Only, (D) Lean Only, (E) Smile + Nod + Lean.

A script for the clinician's communication can be found in Appendix A. The verbal communication provided to the patient was the same in all conditions, following a patient-centered script aligned with guidance from prior research and the National Cancer Institute [23,24]. The nonverbal behaviors manipulated in the present study were smiling, nodding, and leaning toward the patient [1,25]. Nonverbal behavior animations were added using the avatar animation features of Cornerstone Immerse (formerly Talespin CoPilot Designer). We selected these nonverbal behaviors because they are commonly included in definitions of high-quality communication, because they are discrete, modifiable, and trainable [26,27], and because they could be manipulated in the VR platform with which we built our simulations [28]. The exam room environment was selected from among the default environment options available in the VR platform.

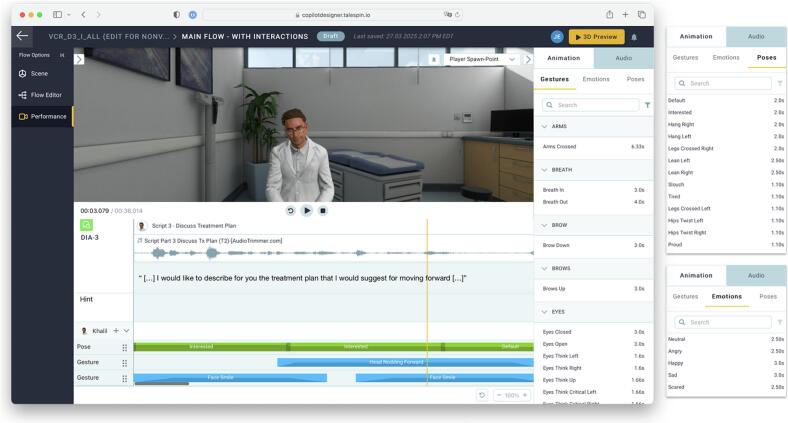

2.3. Platform

We created simulations using Cornerstone Immerse (formerly Talespin's CoPilot Designer) [28]. We selected an avatar and positioned it in the clinical environment, set the camera angle (point of view) at the location of the viewing participant in VR, inserted the script and the flow of selections, and added nonverbal behaviors to the avatar's presentation at indicated time points (Fig. 4). After iteratively testing and refining the flow of the simulation with the study team, we created versions of the same simulation with different clinician avatars, toggling the nonverbal behaviors on or off. This platform allowed us to keep the intensity, quantity, frequency, and duration of nonverbal behaviors identical across avatars.
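The toggling strategy can be illustrated with a hypothetical Python sketch: one master timeline of behavior cues is authored once, and each avatar/condition variant keeps only the cues for enabled behaviors, so timing, duration, and intensity cannot drift between versions. The `Cue` structure, the time points, and the intensity values are assumptions for illustration only; they do not describe the platform's actual data format.

```python
from dataclasses import dataclass


# Hypothetical cue representation; not the Cornerstone Immerse project format.
@dataclass(frozen=True)
class Cue:
    start_s: float     # time point in the script, in seconds (illustrative)
    duration_s: float  # how long the behavior lasts (illustrative)
    behavior: str      # "smile", "nod", or "lean"
    intensity: float   # illustrative 0-1 scale


# One shared master timeline, authored once (values are made up).
MASTER_TIMELINE = (
    Cue(2.0, 1.5, "smile", 0.6),
    Cue(5.0, 1.0, "nod", 0.5),
    Cue(5.0, 4.0, "lean", 0.7),
    Cue(12.0, 1.0, "nod", 0.5),
)


def build_variant(avatar: str, enabled: frozenset) -> dict:
    """Return one simulation variant: the shared cues, filtered by enabled behaviors."""
    cues = [c for c in MASTER_TIMELINE if c.behavior in enabled]
    return {"avatar": avatar, "cues": cues}


a = build_variant("Angelo", frozenset({"nod"}))
b = build_variant("Khalil", frozenset({"nod"}))
# Same condition on different avatars yields identical timing and intensity.
assert a["cues"] == b["cues"]
print(len(a["cues"]))
```

Filtering a single source timeline, rather than re-authoring each version, is one way to guarantee the equivalence across avatars that the paragraph above describes.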

Fig. 4.

Adding nonverbal behaviors. Nonverbal behaviors were added using the Cornerstone Immerse (formerly Talespin CoPilot Designer) avatar animation features. The interface enables poses and gestures to be combined and aligned to the conversation. The vertical yellow bar indicates the current frame in which the avatar is leaning forward (“Interested” pose) while nodding (“Head Nodding Forward” gesture) and smiling (“Face Smile” gesture). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

2.4. Measures

Outcome measures are described in Table 2. Measures were based on a model of virtual reality in serious illness communication [29] and included: cognitive outcomes (how much effort it took the participant to interact with the VR avatar, what they remembered); social and emotional outcomes (how pleasant/unpleasant, awake or active, and positive/negative the participant felt after, anxiety, satisfaction, and uncertainty); and immersion and presence outcomes (social presence, place presence, and general immersion and presence).

Table 2.

Dependent Measures.

| Measure | Description | Items and scoring |

|---|---|---|

| Cognitive load | Paas Cognitive Load Scale [46]. | Single item: level of effort invested while interacting with doctor (1–9 scale; 9 being very, very high mental effort). |

| Recall | Questions assessing (1) recall of clinical information delivered as part of the script and (2) recall of the doctor's appearance. | Eight multiple-choice items, each scored incorrect (0) or correct (1). |

| Emotional valence | Emotional valence sliding-scale item [31]. | Single item: how pleasant or unpleasant the participant feels at the moment (−50 to +50 scale; +50 being very pleasant). |

| Emotional arousal | Emotional arousal sliding-scale item [32,33]. | Single item: how awake and active the participant feels at the moment (−50 to +50 scale; +50 being very awake and active). |

| Positive Affect, Negative Affect | PANAS-SF [34]. | Twenty items (ten per emotion category; each category scored 10–50), yielding separate positive affect and negative affect scores. |

| State-Trait Anxiety Inventory | STAI [35]. | Six items (1–4 scale; higher scores indicate greater anxiety). |

| Quality of communication | Items adapted from a quality of end-of-life communication scale [36]. | Nine items (0–10 score; averaged; higher score indicates better quality of communication). |

| Satisfaction with clinician | Questions related to trust, recommendations, visiting again, and explicit satisfaction. | Four items using Likert scales (1–5 scales; averaged; higher score indicates greater satisfaction). |

| Prognostic uncertainty | Mishel's Uncertainty in Illness scale [37]. | Four items using Likert scales (1–5 scales, with one item reverse-scored; averaged; higher score indicates greater uncertainty). |

| Immersion and Presence | Questions based on social presence [[38], [39], [40]], place presence [41], and general immersion and presence [42,43]. | Social presence: six multiple-choice items using Likert scales (1–5 scales). Place presence: five multiple-choice items. General immersion and presence: eleven scale-based or multiple-choice items. |

Cognitive outcomes: We sought to assess whether different nonverbal behaviors would impact what people remembered from the simulation, how they felt after the simulation, and their reactions to the delivered communication and clinician avatar. We asked participants about the level of mental effort (cognitive load) they invested while interacting with the doctor avatar (single-item Paas Cognitive Load Scale [30]; very, very low – very, very high), and about information they remembered (recall) from the simulated conversation (eight multiple-choice items related to clinical information stated by the doctor and details of the doctor avatar's appearance).
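The recall score described above can be sketched in Python. The item IDs, the answer key, and the even 4/4 split between clinical-information and appearance items are hypothetical placeholders; the text specifies only eight multiple-choice items in total, each scored 0 or 1.

```python
# Hypothetical answer key: item IDs and correct options are placeholders,
# not the study's actual recall items.
ANSWER_KEY = {
    "info_1": "b", "info_2": "d", "info_3": "a", "info_4": "c",
    "appearance_1": "a", "appearance_2": "c", "appearance_3": "b", "appearance_4": "d",
}


def score_recall(responses: dict) -> dict:
    """Score each item 0 (incorrect or missing) or 1 (correct) and sum by subscale."""
    item_scores = {item: int(responses.get(item) == key) for item, key in ANSWER_KEY.items()}
    return {
        "clinical": sum(v for k, v in item_scores.items() if k.startswith("info_")),
        "appearance": sum(v for k, v in item_scores.items() if k.startswith("appearance_")),
        "total": sum(item_scores.values()),
    }


print(score_recall({"info_1": "b", "info_2": "a", "appearance_1": "a"}))
```

Missing answers are treated as incorrect here; that is an assumption, since the text does not say how skipped items were handled.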

Socioemotional outcomes: We asked how pleasant or unpleasant and how awake and active the simulation made participants feel (emotional valence [31], −50 to +50 scale; emotional arousal [32,33], −50 to +50 scale), and assessed positive and negative affect (twenty-item PANAS-SF questionnaire [34], 10–50 score per emotion category) and anxiety (six-item State-Trait Anxiety Inventory (STAI) [35], 1–4 score per item, higher scores indicating greater anxiety) following the simulation. We measured participants' ratings of the quality of communication [36] (nine items, 0–10 scores averaged, higher scores indicating higher quality) and their satisfaction with the clinician (four items related to trust, recommending the clinician, visiting again, and explicit satisfaction, 1–5 Likert scales). Lastly, we asked participants about their prognostic uncertainty [37] following the simulation (four items, 1–5 Likert scales; see Table 2 for details).
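The averaged Likert scores described above (and detailed in Table 2) can be computed with a small helper. This is a minimal sketch assuming responses arrive as lists of integers; the response values are invented, and the choice of which uncertainty item is reverse-scored (position 3 here) is an assumption, since the text states only that one item is.

```python
from statistics import mean


def reverse(score: int, low: int, high: int) -> int:
    """Reverse-code a Likert response so that low and high swap."""
    return low + high - score


def scale_mean(responses: list, low: int, high: int, reversed_idx: frozenset = frozenset()) -> float:
    """Average item responses after reverse-coding the listed item positions."""
    for r in responses:
        if not low <= r <= high:
            raise ValueError(f"response {r} outside {low}-{high}")
    coded = [reverse(r, low, high) if i in reversed_idx else r for i, r in enumerate(responses)]
    return mean(coded)


# Prognostic uncertainty: four 1-5 items, one reverse-scored (position assumed).
uncertainty = scale_mean([4, 3, 4, 2], low=1, high=5, reversed_idx=frozenset({3}))
# Satisfaction: four 1-5 items averaged; no reversal is described.
satisfaction = scale_mean([5, 4, 4, 5], low=1, high=5)
# Quality of communication: nine 0-10 items averaged.
quality = scale_mean([8, 9, 7, 10, 8, 9, 6, 8, 9], low=0, high=10)
print(uncertainty, satisfaction, quality)
```

Rejecting out-of-range values before averaging catches coding errors (e.g., a 0–10 item entered on a 1–5 scale) that would otherwise silently shift a mean.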

Immersion and presence outcomes: We were interested in seeing whether different nonverbal behaviors could impact how realistic or immersive the simulation felt to participants. We asked participants how socially realistic their interactions with the avatar were (social presence [[38], [39], [40]], six items, agree-disagree Likert scales, e.g., ‘I felt like the doctor could see me’, ‘I felt I was actually interacting with the doctor’), how realistic they felt the simulated setting to be (place presence [41], five items, agree-disagree Likert scales, e.g., ‘I felt I was sitting by the doctor in the office’), and general questions about their perceptions of immersion and presence [42,43] (eleven scale-based or multiple-choice items, e.g., ‘How involved were you in the experience?’, ‘Do you think of your experience more as images you saw, or more as somewhere that you visited?’).

2.5. Procedure

After providing informed consent, participants were fitted with a binocular VR headset (HTC Vive Pro Eye; 98° (H) × 98° (V) visible field of view; 1440 × 1600 pixels per eye; 90 Hz refresh rate; integrated stereo headphones). The distance between the headset lenses was adjusted to each participant's interpupillary distance to minimize the possibility of eye strain or motion sickness. The encounter was then presented: after a standard calibration step in a simulated waiting room, participants experienced one of the experimental conditions (Table 1). All participants received the same prognostic information (see Appendix A). After the participant finished interacting with the clinician, the experimenter helped them remove the VR headset. The participant then completed a Qualtrics survey of the outcome measures on a laptop computer. Participants were also asked whether they had experienced any dizziness or nausea while in VR.

Finally, participants were debriefed regarding the purpose of the study and were encouraged to ask the experimenters any questions they might have about the study.

2.6. Analysis

We collapsed conditions across physician avatars for analysis and used Levene's test to assess equality of variances across experimental conditions. Because of unequal variances and unequal group sizes, we used Welch's ANOVA to test for a statistically significant main effect of nonverbal behavior condition (No Nonverbals, Smile Only, Nod Only, Lean Only, Smile + Nod + Lean) on each outcome. Where differences were observed, Tukey post hoc tests were used to evaluate pairwise differences between conditions. Data were analyzed using Python; the significance criterion was set to α = 0.10, and partial eta-squared (η²) was used as a measure of effect size.
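The analysis steps above can be sketched in Python with NumPy and SciPy. This is an illustrative implementation under stated assumptions, not the authors' code: Welch's ANOVA is written out from Welch's (1951) formulas to avoid depending on a particular SciPy version, η² is computed as SS_between / SS_total (which equals partial η² when there is a single factor), Levene's test is run mean-centered (the classic form; SciPy's default centers on the median), and the scores are simulated using the collapsed group sizes from Table 1.

```python
import numpy as np
from scipy import stats


def welch_anova(*groups):
    """Welch's heteroscedastic one-way ANOVA; returns (F, df1, df2, p)."""
    k = len(groups)
    n = np.array([len(g) for g in groups], dtype=float)
    means = np.array([np.mean(g) for g in groups])
    variances = np.array([np.var(g, ddof=1) for g in groups])
    w = n / variances                      # precision weights n_i / s_i^2
    grand = np.sum(w * means) / np.sum(w)  # precision-weighted grand mean
    a = np.sum(w * (means - grand) ** 2) / (k - 1)
    tmp = np.sum((1 - w / np.sum(w)) ** 2 / (n - 1))
    b = 1 + 2 * (k - 2) / (k**2 - 1) * tmp
    f_stat = a / b
    df1, df2 = k - 1, (k**2 - 1) / (3 * tmp)
    return f_stat, df1, df2, stats.f.sf(f_stat, df1, df2)


def eta_squared(*groups):
    """SS_between / SS_total; equals partial eta squared for a one-factor design."""
    allv = np.concatenate(groups)
    grand = allv.mean()
    ss_between = sum(len(g) * (np.mean(g) - grand) ** 2 for g in groups)
    ss_total = np.sum((allv - grand) ** 2)
    return ss_between / ss_total


# Simulated scores for the five collapsed conditions: illustration only, not study data.
rng = np.random.default_rng(0)
sizes = [45, 49, 48, 43, 44]
groups = [rng.normal(loc=8.0, scale=1.5, size=n) for n in sizes]

lev = stats.levene(*groups, center="mean")  # equality-of-variances check
f_stat, df1, df2, p = welch_anova(*groups)
alpha = 0.10                                # significance criterion stated in the text
print(f"Levene p={lev.pvalue:.3f}; Welch F({df1}, {df2:.3f})={f_stat:.3f}, "
      f"p={p:.3f}; eta^2={eta_squared(*groups):.3f}; significant={p < alpha}")
```

With two groups this F statistic reduces to the square of Welch's t (and the denominator degrees of freedom to the Welch–Satterthwaite value), which is a convenient check on the implementation.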

3. Results

3.1. Sample

Our initial sample comprised 240 undergraduate and graduate student participants; eleven were excluded from analysis due to technical or procedural issues encountered during the experiment. There were 229 participants included in the final sample (mean age = 20.8 years, SD = 3.8 years), described in Table 3. Two (0.9 %) participants reported a previous personal cancer diagnosis; 154 participants (66.7 %) reported a family history of cancer. Twenty-six (11.3 %) participants reported experiencing some dizziness or nausea after the VR experience, and 165 participants (71.4 %) had previously experienced VR.

Table 3.

Demographic Information.

| Number of Participants | N = 229 |

|---|---|

| Age, mean (SD) [min–max] | 20.8 (3.8) [18–39] years |

| Sex, N | |

| Female | 105 |

| Male | 122 |

| Prefer not to answer | 1 |

| Prefer to self-describe | 1 |

| Race, N | |

| White | 116 |

| Asian Indian | 33 |

| Chinese | 24 |

| Other | 12 |

| Black or African American | 12 |

| Other Asian | 8 |

| Vietnamese | 7 |

| White, Other | 2 |

| White, Black or African American | 2 |

| White, Black or African American, Chinese | 1 |

| Chinese, Other Asian | 1 |

| White, Other Asian | 1 |

| White, Asian Indian | 1 |

| American Indian or Alaska Native, Chinese | 1 |

| Black or African American, Other Asian | 1 |

| White, American Indian or Alaska Native, Chinese, Other | 1 |

| White, Japanese | 1 |

| Chinese, Vietnamese | 1 |

| White, Native Hawaiian | 1 |

| Korean | 1 |

| White, Filipino | 1 |

| White, Chinese | 1 |

| Ethnicity, N | |

| No, not of Hispanic, Latino/a/x, or Spanish origin | 197 |

| Yes, another Hispanic, Latino/a/x, or Spanish origin | 25 |

| Yes, Mexican, Mexican American, Chicano/a/x | 3 |

| Yes, Puerto Rican | 2 |

| Yes, Puerto Rican, Yes, Cuban | 1 |

| Yes, Puerto Rican, Yes, another Hispanic, Latino/a/x, or Spanish origin | 1 |

| Education, N | |

| Some college | 136 |

| 12 years or completed high school | 50 |

| College graduate | 30 |

| Postgraduate | 11 |

| Post high school training other than college (vocational or technical) | 2 |

3.2. Results for outcome variables

3.2.1. Cognitive outcomes

There were no differences between experimental conditions on cognitive load, recall of communication, or recall of the doctor's appearance (p > 0.1 for all).

3.2.2. Socioemotional outcomes

Emotional valence, emotional arousal, positive affect, and anxiety did not differ by experimental condition (p > 0.1 for all). For negative affect, Welch's ANOVA revealed a significant main effect of nonverbal behavior (F(4, 110.225) = 2.567, p = 0.04, η² = 0.036 (low)); a Tukey post hoc test indicated that participants who observed the doctor exhibiting Smile only (M = 1.555) reported lower negative affect than those who saw the Lean only simulation (M = 1.953), though this effect was not statistically significant (mean difference = −0.398, 95 % CI [−0.767, −0.03], p = 0.06).

Average scores on the communication quality questionnaire did not differ by experimental condition (p > 0.1); however, responses to some individual items did. One item asked how good the doctor was at looking the participant in the eye; Welch's ANOVA revealed a significant main effect of nonverbal behavior (F(4, 110.101) = 3.195, p = 0.016, η² = 0.071 (medium)), with participants who received all nonverbal behaviors (Smile + Nod + Lean) (M = 8.409) feeling that the doctor looked them in the eye more than those seeing the Lean only simulation (M = 7.14) (mean difference = −1.27, 95 % CI [−2.37, −0.17], p = 0.037). Participants seeing the Smile only (M = 8.388) and No nonverbals (M = 8.844) simulations also felt the doctor looked at them more than those seeing the Lean only condition (mean difference between Smile only and Lean only = 1.248, 95 % CI [0.176, 2.32], p = 0.035; mean difference between No nonverbals and Lean only = 1.705, 95 % CI [0.611, 2.799], p = 0.001).

Another communication quality item asked whether the doctor was perceived as giving the participant their full attention; Welch's ANOVA showed a significant main effect of nonverbal behavior, F(4, 107.603) = 2.526, p = 0.045, η² = 0.043 (low). Participants reported that the doctor appeared more attentive in the Smile only condition (M = 8.939) than in the Lean only condition (M = 7.721) (mean difference = 1.218, 95 % CI [0.128, 2.308], p = 0.048).
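Pairwise comparisons of the kind reported above (mean difference, 95 % CI, p) can be sketched with `scipy.stats.tukey_hsd` (available in SciPy 1.8 and later). The ratings are simulated for illustration only, with group sizes taken from the collapsed Table 1 totals. Note that the Tukey–Kramer procedure assumes equal variances; Games–Howell is a common alternative when variances differ, but the sketch mirrors the Tukey tests described in the text.

```python
import numpy as np
from scipy import stats

CONDITIONS = ["No Nonverbals", "Smile Only", "Nod Only", "Lean Only", "Smile + Nod + Lean"]

# Simulated 0-10 item ratings: illustration only, not study data.
rng = np.random.default_rng(1)
sizes = [45, 49, 48, 43, 44]
groups = [np.clip(rng.normal(8.0, 1.8, n), 0, 10) for n in sizes]

res = stats.tukey_hsd(*groups)
ci = res.confidence_interval(confidence_level=0.95)

# res.statistic[i, j] is mean(group i) - mean(group j).
for i in range(len(CONDITIONS)):
    for j in range(i + 1, len(CONDITIONS)):
        print(f"{CONDITIONS[i]} vs {CONDITIONS[j]}: diff={res.statistic[i, j]:.3f}, "
              f"95% CI [{ci.low[i, j]:.3f}, {ci.high[i, j]:.3f}], p={res.pvalue[i, j]:.3f}")
```

Because the sign of each difference depends on the order of the pair, reporting the direction explicitly (e.g., "Smile only minus Lean only") avoids the ambiguity of bare negative and positive mean differences.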

There were no significant differences between conditions for satisfaction with the doctor (p-values >0.10), nor were any significant differences observed regarding prognostic uncertainty or intolerance of uncertainty.

3.2.3. Immersion and presence outcomes

There were no significant differences in social presence, place presence, or general immersion and presence (p > 0.1 for all). However, responses differed on one item of the Slater et al. [43] presence scale (‘Do you think of your experience more as images you saw, or more as somewhere that you visited?’; F(4, 111.387) = 3.637, p = 0.008, η² = 0.047 (low)). Participants seeing No nonverbals (M = 4.6) reported more presence than those seeing all nonverbals (Smile + Nod + Lean) (M = 3.545), with a mean difference of 1.054 (95 % CI [0.051, 2.058], p = 0.073); and those who saw Smile only simulations (M = 3.408) reported less presence than those who saw No nonverbals (M = 4.6), with a mean difference of −1.192 (95 % CI [−2.169, −0.215], p = 0.023).

4. Discussion and conclusion

4.1. Discussion

Testing the effects of specific nonverbal behavior in prognostic communication poses unique challenges for researchers. We conducted a pilot study to experimentally assess whether manipulating nonverbal behavior in a VR-based simulation of prognostic communication leads to differences in participant responses. Overall, results suggest that some participant responses differed as a result of the manipulation of nonverbal behaviors. However, results did not point to the presence or absence of a particular nonverbal behavior as driving reactions to prognostic communication. Taken together, our results suggest that there is potential to experimentally study nonverbal communication using this technology; however, much more work is needed to be able to use a VR platform like this to definitively assess the impact of nonverbal behavior on communication. There remain several considerations around studying nonverbals with VR, as highlighted by this study, including the balance between realistic nonverbal behaviors and the potential for the Uncanny Valley effect (i.e., the perception of avatar appearance or behavior as eerie, unsettling, or distracting as they approach but fail to achieve realism) [44,45].

Although some nonverbal behavior conditions led to different responses (e.g., participants saw the doctors as more attentive when smiling as opposed to leaning), these initial results should not be used in isolation to inform training or to suggest that doctors should smile more or avoid leaning during prognostic communication. Such broad conclusions would be premature given that this work represents an initial pilot. However, this study begins to build an evidence base for discrete, specific nonverbal behaviors and suggests that future work using virtual reality could continue to tease apart aspects of these behaviors that could inform training and practice. Given remaining questions about how best to present nonverbal behaviors, continued iterative development is needed to improve our simulations until simulated nonverbal behaviors are both noticeable and realistic without being distracting. Additionally, we have not answered some important methodological questions about researching communication in a vacuum. For example, although we seek to detect the specific effects of nonverbals on communication, does nonverbal communication function in a manner that is extricable from other elements of communication and presentation? Is nonverbal communication inherently responsive and interactive between conversation parties (i.e., given the limited interactivity in our simulation, do nonverbal behaviors fulfill their communicative function in the same way if they are not perceived as personalized in timing or quality to the other participant in the simulated conversation)? Future work and development are needed to explore these remaining methodological questions.

Limitations: The present feasibility study has several limitations. First, despite efforts to improve the natural flow of behaviors during simulation development, nonverbal behaviors were manually assigned to the avatar by the research team using a point-and-click interface, which does not fully capture the dynamic and context-dependent nature of real-world nonverbal communication; future work should explore creating avatar behaviors using a live actor with a motion-tracking suit to add poses and gestures. Second, the simulation permitted limited participant interaction, potentially reducing immersion and ecological validity. Third, in our experimental setup, we assessed the impact of perceived race, maintaining the same avatar stature and gender (Male) across all avatars; we did not explore gender or other avatar factors, which will be important to investigate in future tests. Additionally, though nonverbal movements were standardized across avatars, the potential remains for differential interpretation of perceived ‘naturalness’ of the same behaviors for avatars of different perceived race or gender, raising challenges to balancing realism and avoiding encoding stereotypes. Future explorations should incorporate more dynamic, interactive, and diverse simulations.

4.2. Innovation

The results of the present study indicate that virtual reality may allow for the manipulation of nonverbal behavior to experimentally assess effects on responses to clinical communication. VR is a tool which can be used when studying nonverbal behavior in real prognostic communication interactions would be unethical or not feasible.

5. Conclusion

Virtual reality can allow for experimental manipulation and readily adaptable simulations; virtual reality experimental simulations are a promising tool for building the evidence base of nonverbal behavior in serious illness communication. There is a need for further development and capacity-building of virtual reality to optimally conduct sensitive and ecologically valid communication simulations for research, as well as a need for more public research and evidence on discrete nonverbal behaviors to help improve serious illness communication training and practice in the future.

CRediT authorship contribution statement

Brigitte N. Durieux: Writing – review & editing, Writing – original draft, Funding acquisition, Formal analysis, Conceptualization. Jonathan Gordon: Writing – review & editing, Writing – original draft, Methodology, Formal analysis, Data curation, Conceptualization. Justin J. Sanders: Supervision, Resources, Methodology, Funding acquisition, Formal analysis, Conceptualization. Ja-Nae Duane: Supervision, Methodology, Funding acquisition, Formal analysis, Conceptualization. Danielle Blanch-Hartigan: Writing – review & editing, Writing – original draft, Resources, Methodology, Funding acquisition, Formal analysis, Data curation, Conceptualization. Jonathan Ericson: Writing – review & editing, Writing – original draft, Visualization, Supervision, Resources, Project administration, Funding acquisition, Formal analysis, Data curation, Conceptualization.

Funding sources

This work was funded in part by the Bentley University Center for Health and Business (CHB), the Bentley University Research Council (BRC), the Bentley University Faculty Affairs Committee (FAC), and the Oppenheimer Fund of the Dana-Farber Cancer Institute.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: Given her role as guest co-editor at PEC Innovation for this special issue, Danielle Blanch-Hartigan had no involvement in the peer review of this article and had no access to information regarding its peer review. Full responsibility for the editorial process for this article was delegated to another journal editor. If there are other authors, they declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

We would like to thank Shivam Ohri, Danielle Solar, Sanghmitra Choudhary, Chinmoyee Karmakar, Morgan Stosic, Erryca Robicheaux, Madelyn Sando, Kirpreet Dharni, Faria Noor, and Kajamathy Subramaniam.

Footnotes

This article is part of a Special issue entitled: ‘Extended reality (XR)’ published in PEC Innovation.

Supplementary data to this article can be found online at https://doi.org/10.1016/j.pecinn.2025.100424.

Appendix A. Supplementary data

Supplementary material

References

- 1.Mast M.S. On the importance of nonverbal communication in the physician-patient interaction. Patient Educ Couns. 2007;67:315–318. doi: 10.1016/j.pec.2007.03.005. [DOI] [PubMed] [Google Scholar]

2. Mast M.S., Cousin G. In: The Oxford handbook of health communication, behavior change, and treatment adherence. Martin L.R., DiMatteo M.R., editors. Oxford University Press; 2013. The role of nonverbal communication in medical interactions: Empirical results, theoretical bases, and methodological issues.

3. Henry S.G., Fuhrel-Forbis A., Rogers M.A.M., Eggly S. Association between nonverbal communication during clinical interactions and outcomes: a systematic review and meta-analysis. Patient Educ Couns. 2012;86:297–315. doi: 10.1016/j.pec.2011.07.006.

4. Sanders J.J., Blanch-Hartigan D., Ericson J., Tarbi E., Rizzo D., Gramling R., et al. Methodological innovations to strengthen evidence-based serious illness communication. Patient Educ Couns. 2023;114:107790. doi: 10.1016/j.pec.2023.107790.

5. Roter D.L., Frankel R.M., Hall J.A., Sluyter D. The expression of emotion through nonverbal behavior in medical visits. J Gen Intern Med. 2006;21:28–34. doi: 10.1111/j.1525-1497.2006.00306.x.

6. Campbell T.C., Carey E.C., Jackson V.A., Saraiya B., Yang H.B., Back A.L. Discussing prognosis: balancing hope and realism. Cancer J. 2010;16:461–466. doi: 10.1097/PPO.0b013e3181f30e07.

7. Baile W.F., Buckman R., Lenzi R., Glober G., Beale E.A., Kudelka A.P. SPIKES-A six-step protocol for delivering bad news: application to the patient with cancer. Oncologist. 2000;5:302–311. doi: 10.1634/theoncologist.5-4-302.

8. Krupat E., Frankel R., Stein T., Irish J. The Four Habits Coding Scheme: validation of an instrument to assess clinicians’ communication behavior. Patient Educ Couns. 2006;62:38–45. doi: 10.1016/j.pec.2005.04.015.

9. Tele-Presence 5. Presence. https://med.stanford.edu/presence/initiatives/stanford-presence-5/tele-presence-5.html (accessed April 25, 2025).

10. Pines R., Haverfield M.C., Wong Chen S., Lee E., Brown-Johnson C., Kline M., et al. Evaluating the implementation of a relationship-centered communication training for connecting with patients in virtual visits. J Patient Exp. 2024;11:23743735241241179. doi: 10.1177/23743735241241179.

11. Cabana M.D., Rand C.S., Powe N.R., Wu A.W., Wilson M.H., Abboud P.A., et al. Why don’t physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282:1458–1465. doi: 10.1001/jama.282.15.1458.

12. Grol R., Grimshaw J. From best evidence to best practice: effective implementation of change in patients’ care. Lancet. 2003;362:1225–1230. doi: 10.1016/S0140-6736(03)14546-1.

13. D’Agostino T.A., Bylund C.L. Nonverbal accommodation in health care communication. Health Commun. 2014;29:563–573. doi: 10.1080/10410236.2013.783773.

14. Hamel L.M., Moulder R., Harper F.W.K., Penner L.A., Albrecht T.L., Eggly S. Examining the dynamic nature of nonverbal communication between Black patients with cancer and their oncologists. Cancer. 2021;127:1080–1090. doi: 10.1002/cncr.33352.

15. Henselmans I., Smets E.M., Han P.K., Haes H.C., Laarhoven H.W. How long do I have? Observational study on communication about life expectancy with advanced cancer patients. Patient Educ Couns. 2017;100:1820–1827. doi: 10.1016/j.pec.2017.05.012.

16. Glare P., Virik K., Jones M., Hudson M., Eychmuller S., Simes J., et al. A systematic review of physicians’ survival predictions in terminally ill cancer patients. BMJ. 2003;327:195. doi: 10.1136/bmj.327.7408.195.

17. Craig K., Washington K.T. Family perspectives on prognostic communication in the palliative oncology clinic: “Someone was kind enough to just tell me”. J Clin Oncol. 2018;36:24.

18. Enzinger A.C., Zhang B., Schrag D., Prigerson H.G. Outcomes of prognostic disclosure: associations with prognostic understanding, distress, and relationship with physician among patients with advanced cancer. J Clin Oncol. 2015;33:3809–3816. doi: 10.1200/JCO.2015.61.9239.

19. Hillen M.A., van Vliet L.M., de Haes H.C.J.M., Smets E.M.A. Developing and administering scripted video vignettes for experimental research of patient-provider communication. Patient Educ Couns. 2013;91:295–309. doi: 10.1016/j.pec.2013.01.020.

20. Zulman D.M., Haverfield M.C., Shaw J.G., Brown-Johnson C.G., Schwartz R., Tierney A.A., et al. Practices to foster physician presence and connection with patients in the clinical encounter. JAMA. 2020;323:70–81. doi: 10.1001/jama.2019.19003.

21. Hashim M.J. Patient-centered communication: basic skills. Am Fam Physician. 2017;95:29–34.

22. Kurtz S.M., Silverman J.D. The Calgary–Cambridge Referenced Observation Guides: an aid to defining the curriculum and organizing the teaching in communication training programmes. Med Educ. 1996;30:83–89. doi: 10.1111/j.1365-2923.1996.tb00724.x.

23. Dean M., Street R.L., Jr. Textbook of palliative care communication. Oxford University Press; 2015. Patient-centered communication; p. 238.

24. Epstein R., Street R.L. US Department of Health and Human Services; 2007. Patient-centered communication in cancer care: Promoting healing and reducing suffering.

25. Ruben M.A., Blanch-Hartigan D., Hall J.A. The Wiley handbook of healthcare treatment engagement: Theory, research, and clinical practice. Wiley Blackwell; Hoboken, NJ, US: 2020. Communication skills to engage patients in treatment; pp. 274–296.

26. Riess H., Kraft-Todd G. E.M.P.A.T.H.Y.: a tool to enhance nonverbal communication between clinicians and their patients. Acad Med. 2014;89:1108–1112. doi: 10.1097/ACM.0000000000000287.

27. Liu C., Lim R.L., McCabe K.L., Taylor S., Calvo R.A. A web-based telehealth training platform incorporating automated nonverbal behavior feedback for teaching communication skills to medical students: a randomized crossover study. J Med Internet Res. 2016;18. doi: 10.2196/jmir.6299.

28. Talespin - Enterprise VR, AR & AI. https://www.talespin.com/ (accessed April 25, 2025).

29. Tarbi E.C., Blanch-Hartigan D., van Vliet L.M., Gramling R., Tulsky J.A., Sanders J.J. Toward a basic science of communication in serious illness. Patient Educ Couns. 2022;105:1963–1969. doi: 10.1016/j.pec.2022.03.019.

30. Paas F., Tuovinen J.E., Tabbers H., Van Gerven P.W.M. Cognitive load measurement as a means to advance cognitive load theory. Educ Psychol. 2003;38:63–71. doi: 10.1207/S15326985EP3801_8.

31. Wormwood J.B., Quigley K.S., Barrett L.F. Emotion and threat detection: the roles of affect and conceptual knowledge. Emotion. 2022;22:1929–1941. doi: 10.1037/emo0000884.

32. Barrett L.F., Russell J.A. The structure of current affect: controversies and emerging consensus. Curr Dir Psychol Sci. 1999;8:10–14. doi: 10.1111/1467-8721.00003.

33. Kuppens P., Tuerlinckx F., Russell J.A., Barrett L.F. The relation between valence and arousal in subjective experience. Psychol Bull. 2013;139:917–940. doi: 10.1037/a0030811.

34. Watson D., Clark L.A., Tellegen A. Development and validation of brief measures of positive and negative affect: the PANAS scales. J Pers Soc Psychol. 1988;54:1063–1070. doi: 10.1037/0022-3514.54.6.1063.

35. Marteau T.M., Bekker H. The development of a six-item short-form of the state scale of the Spielberger State–Trait Anxiety Inventory (STAI). Br J Clin Psychol. 1992;31:301–306. doi: 10.1111/j.2044-8260.1992.tb00997.x.

36. Engelberg R.A., Downey L., Wenrich M.D., Carline J.D., Silvestri G.A., Dotolo D., et al. Measuring the quality of end-of-life care. J Pain Symptom Manage. 2010;39:951–971. doi: 10.1016/j.jpainsymman.2009.11.313.

37. Mishel M.H. The measurement of uncertainty in illness. Nurs Res. 1981;30:258–263.

38. Heeter C. Being there: the subjective experience of presence. Presence Teleop Virt. 1992;1:262–271. doi: 10.1162/pres.1992.1.2.262.

39. IJsselsteijn W.A. Presence in depth [PhD thesis]. Technische Universiteit Eindhoven; 2004.

40. Lee K.M. Presence, explicated. Commun Theory. 2004;14:27–50.

41. Aitamurto T., Zhou S., Sakshuwong S., Saldivar J., Sadeghi Y., Tran A. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery; New York, NY, USA: 2018. Sense of presence, attitude change, perspective-taking and usability in first-person split-sphere 360° video; pp. 1–12.

42. Witmer B.G., Singer M.J. Measuring presence in virtual environments: a presence questionnaire. Presence Teleop Virt. 1998;7:225–240. doi: 10.1162/105474698565686.

43. Slater M., Steed A., Usoh M. In: Virtual environments ‘95. Göbel M., editor. Springer; Vienna: 1995. The virtual treadmill: A naturalistic metaphor for navigation in immersive virtual environments; pp. 135–148.

44. Mori M., MacDorman K.F., Kageki N. The uncanny valley [from the field]. IEEE Robot Automat Mag. 2012;19:98–100. doi: 10.1109/MRA.2012.2192811.

45. MacDorman K.F., Chattopadhyay D. Reducing consistency in human realism increases the uncanny valley effect; increasing category uncertainty does not. Cognition. 2016;146:190–205. doi: 10.1016/j.cognition.2015.09.019.

46. Paas F., Tuovinen J.E., Tabbers H., Gerven P.W.M.V. Cognitive load theory. Routledge; 2003. Cognitive load measurement as a means to advance cognitive load theory.
