Abstract

A fundamental issue in human development concerns how the young infant's ability to recognize emotional signals is acquired through both biological programming and learning factors. This issue is extremely difficult to investigate because of the variety of sensory experiences to which humans are exposed immediately after birth. We examined the effects of emotional experience on emotion recognition by studying abused children, whose experiences violated cultural standards of care. We found that the aberrant social experience of abuse was associated with a change in children's perceptual preferences and also altered the discriminative abilities that influence how children categorize angry facial expressions. This study suggests that affective experiences can influence perceptual representations of basic emotions.

Categorization is an adaptive feature of perception and cognition that allows us to respond quickly and appropriately to features of our environment. Social signals, such as those conveyed by the face, have long been regarded as important cues from the environment that require immediate and accurate recognition (1), yet we know little about the mechanisms through which humans form perceptual categories of emotion. The phenomenon of “categorical perception” may help us to understand more about the ontogenesis of emotional categories. Categorical perception effects occur when a perceptual mechanism enhances our perception of differences between categories at the expense of our perception of incremental changes in the stimulus within a category. Perceiving in terms of categories allows an observer to efficiently assess changes between categories (to see that a traffic light has changed from green to yellow) at the cost of noticing subtle changes in a stimulus (such as shades of green or yellow across individual traffic lights). Here we show that human perceptual categories for basic emotions appear to be different among a group of children who have had social experiences that violate cultural standards of care. Children who experienced extreme physical harm from their parents showed alterations in their perceptual categories for anger as compared with nonabused children. Yet abused children identified and discriminated other emotions in the same manner as nonabused children. This finding addresses central issues about the developmental processes through which humans learn to perceive emotion expressions and also sheds light on the factors that put maltreated children at risk for behavioral pathology.

The earliest demonstrations of categorical perception in the area of speech perception stressed the importance of specialized innate mechanisms (2, 3). However, later investigations have revealed that perceptual capacities for speech, as well as other perceptual domains, are not determined by maturational processes independent of experience (4–7). Rather, it appears that although human infants may enter the world with perceptual capacities that allow them to conduct a preliminary analysis of their environments, they need to adjust or tune these mechanisms to process specific aspects of their environment (8, 9).

Categorical perception of facial expressions of emotions has been demonstrated in adults (10–12). These studies have found that although participants are presented with instances of facial expressions distributed along a continuum between emotions (e.g., happiness to sadness), observers perceive these stimuli as belonging to discrete categories (happiness or sadness). Category boundaries for familiar and unfamiliar faces can be shifted in adults as a function of frequency of exposure to those faces (13), suggesting that experience may also play an important role in face perception. However, testing the effects of familiarity with classes of emotional expressions is considerably more difficult than assessing recognition of individual faces, because it is difficult to measure the frequency of a developing child's exposure to facial displays of emotion: human children are exposed to complicated affective experiences from birth. Not surprisingly, people are very skilled at understanding each other's facial expressions and appear to develop this expertise at an early age (14, 15). The present experiment sought to identify experiential effects in human recognition of facial displays of affect.

Because it is difficult to empirically measure the parameters of an individual's prior knowledge of, and experience with, basic emotion signals, we examined the perception of emotion in severely abused children. We reasoned that abused children would have had prior experiences with the communication of emotion that differed in important ways from those of nonabused children. The emotional environments of these children include perturbations in both the frequency and content of their emotional interactions with their caregivers (16, 17). Children who were physically abused by their parents and nonabused controls (matched on age and IQ to the maltreated children) performed a facial discrimination task that required them to distinguish faces that had been morphed to produce a continuum on which each face differed in signal intensity. To create continua of facial expressions of affect, we used a two-dimensional morphing system to generate stimuli that spanned four emotional categories: happiness, anger, fear, and sadness (Fig. 1). After the discrimination task, children completed an identification task with each facial image used in the previous discrimination task.

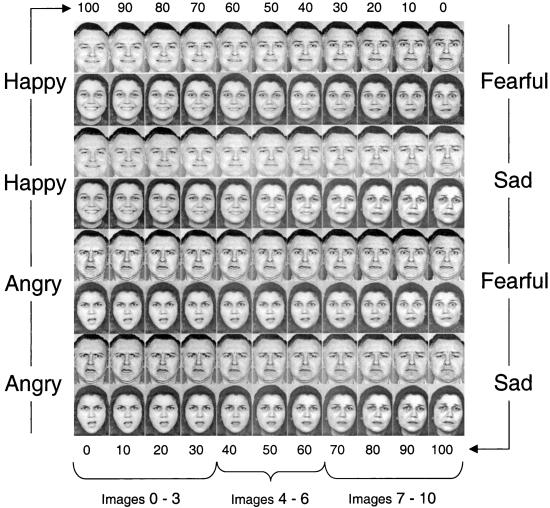

Figure 1.

The stimuli. Children categorized randomly generated “morphs” from blends of four prototypes. Morphed images were created to lie between prototypes of happiness and fearfulness, happiness and sadness, anger and fearfulness, and anger and sadness. These four continua each contained 11 male and 11 female images, of which the end positions were unmorphed prototypes of each emotion. The images each differed by 10% in pixel intensities. The middle face in each continuum was a 50% blend of each emotion pair. (Adapted from ref. 18.)

Methods

Subjects.

Forty children (mean age = 9 years, 3 months), 22 males and 18 females, participated in these studies. Seventeen nonabused children were recruited from a local after-school program and 23 physically abused children were recruited from a state psychiatric facility and a local social welfare agency serving maltreated children. Abuse histories (or lack thereof) were verified based on reviews of clinical and legal records. All participants had normal or corrected-to-normal vision. Children's parents or legal guardians gave informed consent for both release of information and children's participation.

Stimuli.

Two posers (a female and a male, shown in Fig. 1) were chosen as prototype images from a set of published photographs (18). The prototype images were scanned to 213 × 480 pixels and morphed to produce a linear continuum of images between the two endpoints. For each continuum, pertinent anatomical areas such as the mouth, eyes, nose, and hairline were used as control points. To create the morphed transformation, the pixels around key points were changed from their positions in the start image to their positions in the end image, and their colors were transformed from one image to the other by a Delaunay tessellation procedure based on linear triangulation of elements. An important feature of this procedure is that control points are shifted by an equal percentage of the total distance between their initial and final positions.
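The equal-percentage shift of control points can be illustrated with a short sketch. The coordinates and function names below are hypothetical and are not taken from the study; the sketch covers only the linear interpolation of control points and omits the triangulation-based warping and color blending that the actual morphing software performed.

```python
def interpolate_points(start, end, fraction):
    """Move every control point the same fraction of the way from start to end."""
    return [
        (x0 + fraction * (x1 - x0), y0 + fraction * (y1 - y0))
        for (x0, y0), (x1, y1) in zip(start, end)
    ]


def morph_continuum(start, end, steps=11):
    """Return control-point sets for a continuum of `steps` images (0% to 100%)."""
    return [interpolate_points(start, end, i / (steps - 1)) for i in range(steps)]


# Hypothetical control points (pixel coordinates) for two prototype faces.
happy_points = [(60.0, 200.0), (150.0, 200.0), (105.0, 330.0)]
fear_points = [(58.0, 190.0), (152.0, 190.0), (105.0, 350.0)]

continuum = morph_continuum(happy_points, fear_points)
midpoint = continuum[5]  # control points for the 50% blend
```

With 11 steps, adjacent images differ by 10% of the distance between the prototypes, and index 5 corresponds to the 50% blend.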

Procedure.

Each participant completed two separate experimental tasks, one involving discrimination and the other identification of the facial images. In both tasks, facial images were presented in randomized order so that the continuum being examined was not apparent to the subject (e.g., happy → fear → angry → sad → happy). In the ABX discrimination task, subjects viewed two pictures (A and B) that differed by two steps, or 20% increments (e.g., A = 0% image and B = 20% image). The third face (X) was identical to either A or B. In half of the trials face X matched face A, and in the other half it matched face B. This order was counterbalanced. Each of the nine possible pairs of images was presented in each of four orders (ABA, ABB, BAA, BAB), yielding 144 trials (9 pairs × 4 orders × 4 continua). At the beginning of each trial a central fixation cross was presented for 250 msec, followed by a blank interval of 250 msec, and then three successive faces from one continuum for 750 msec each. Each face subtended a horizontal visual angle of 16° and a vertical visual angle of 10° (approximately half the size of an adult human face as viewed from the same distance). Participants responded by using an ELO Graphics (Fremont, CA) touch-screen monitor.
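The structure of the ABX trial list can be sketched as follows. This is an illustration of the counterbalancing described above, not the original experiment software; the continuum labels, the dictionary fields, and the `seed` parameter are assumptions introduced for the example.

```python
import random
from itertools import product

CONTINUA = ["happy-fear", "happy-sad", "angry-fear", "angry-sad"]
ORDERS = ["ABA", "ABB", "BAA", "BAB"]
# Two-step pairs along an 11-image continuum (images 0..10): (0, 2), (1, 3), ..., (8, 10).
PAIRS = [(i, i + 2) for i in range(9)]


def build_abx_trials(seed=0):
    """Build every continuum x pair x order combination, then shuffle the list."""
    trials = []
    for continuum, (a, b), order in product(CONTINUA, PAIRS, ORDERS):
        faces = {"A": a, "B": b}
        trials.append({
            "continuum": continuum,
            "order": order,
            # Image indices in presentation order; the third one is face X.
            "images": [faces[label] for label in order],
            "x_matches": order[2],
        })
    random.Random(seed).shuffle(trials)  # hypothetical seed, for reproducibility only
    return trials


abx_trials = build_abx_trials()
print(len(abx_trials))  # 144
```

Because two of the four orders end in A and two end in B, face X matches A on exactly half of the trials.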

After completing the ABX task, the children completed an identification task in which they identified the expression of each facial image. Each trial began with a central fixation cross, presented for 250 msec, followed by a blank interval of 250 msec. In each trial, the facial image remained in view until a response was made. The participant's task was to decide which emotion the face most resembled by touching one of two labels that appeared beneath the image on the touch monitor. Each of the 44 morphed and prototype faces was presented one at a time in 10% increments, not grouped according to underlying continua, either four (images 0–3 and 7–10) or eight (images 4–6) times, yielding 224 total trials.
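The trial count for the identification task follows from the repetition scheme, which a brief sketch makes explicit. The labels, function names, and `seed` parameter are hypothetical and serve only to illustrate the arithmetic.

```python
import random

CONTINUA = ["happy-fear", "happy-sad", "angry-fear", "angry-sad"]


def repetitions(image):
    """Images 4-6 (near the continuum midpoint) appear eight times, the rest four."""
    return 8 if 4 <= image <= 6 else 4


def build_identification_trials(seed=0):
    trials = [
        (continuum, image)
        for continuum in CONTINUA
        for image in range(11)  # images 0..10 in 10% steps
        for _ in range(repetitions(image))
    ]
    random.Random(seed).shuffle(trials)  # trials are not grouped by continuum
    return trials


identification_trials = build_identification_trials()
print(len(identification_trials))  # 224
```

Each continuum contributes 8 × 4 + 3 × 8 = 56 trials, so four continua give 224.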

Data Analyses.

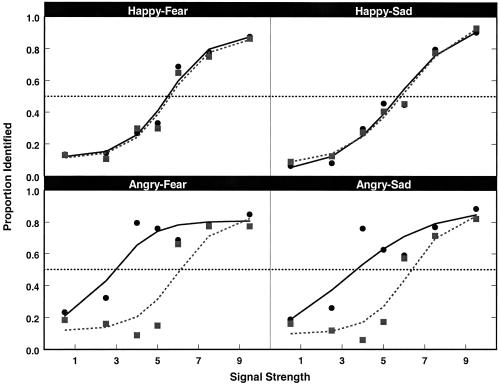

To measure children's identification of each facial image, we fitted logistic functions for each continuum for each individual by using the following formula:


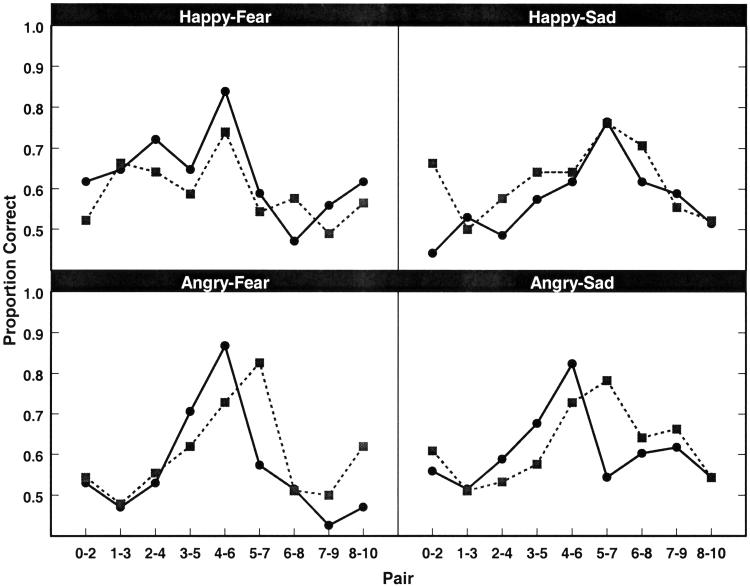

where x is signal strength and P is the probability of identification. Four parameters were estimated: a is the function midpoint, 1/b is the slope, and c and d represent the lower and the upper asymptotes, respectively. The category boundary is estimated by computing the signal value corresponding to P = 0.5. To obtain more reliable estimates, we grouped data from adjacent categories that had small numbers of trials (images 0 + 1, 2 + 3, 7 + 8, 9 + 10). Category boundary means and standard errors for each group are presented in Table 1. The observed data and fitted functions are plotted for each group in Fig. 2. Steep identification slopes provide evidence for categorical perception, whereas shallow slopes would suggest that an emotion was perceived more continuously (see Fig. 2). In Fig. 3, we plot each group's mean observed discrimination performance as proportion correct. Because ABX is a two-alternative forced-choice procedure, it provides an unbiased estimate of discrimination. Chance performance is 50%, so Fig. 3 shows clearly where discrimination peaks occurred on each continuum.
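The relationship between the fitted parameters and the category boundary can be sketched in code. The formula is not reproduced in the text here, so the sketch assumes a common four-parameter logistic form, `P = c + (d - c) / (1 + exp(-(x - a) / b))`, which is consistent with the parameter definitions above but may differ in detail from the function actually fitted. The parameter values are hypothetical.

```python
import math


def logistic4(x, a, b, c, d):
    """Assumed four-parameter logistic: midpoint a, slope 1/b, asymptotes c and d."""
    return c + (d - c) / (1.0 + math.exp(-(x - a) / b))


def category_boundary(a, b, c, d, p=0.5):
    """Signal value at which the assumed curve crosses probability p."""
    if not c < p < d:
        raise ValueError("p must lie strictly between the two asymptotes")
    # Solve p = c + (d - c) / (1 + exp(-(x - a) / b)) for x.
    return a - b * math.log((d - p) / (p - c))


# Hypothetical parameters, chosen only for illustration.
params = {"a": 5.0, "b": 1.0, "c": 0.0, "d": 0.9}
boundary = category_boundary(**params)
```

Under this form the boundary equals the midpoint `a` only when the asymptotes are symmetric about 0.5; otherwise it shifts toward the side of the asymptote that lies closer to 0.5, which is why the boundary is computed from P = 0.5 rather than read directly from `a`.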

Table 1.

Perceptual threshold estimates (standard error)

| Category | Control | Physically abused |
|---|---|---|
| Happy–fear | 5.49 (0.27) | 5.83 (0.28) |
| Happy–sad | 5.59 (0.23) | 5.56 (0.21) |
| Angry–fear | 2.90 (0.23) | 6.15 (0.16) |
| Angry–sad | 3.80 (0.31) | 6.42 (0.16) |

The data are modeled with four-parameter logistic functions that were fit to the identification data to estimate a signal threshold (i.e., category boundary), slope, and lower and upper asymptotes for each individual subject. The parameter of interest is the threshold estimate, for which the two groups did not differ on the happy–sad and the happy–fear continua, but did differ on the angry–fear and angry–sad continua.

Figure 2.

Results from the emotion identification task for each emotion pair. The ordinate refers to the proportion of trials in which responses matched the identity of the second emotion in the pair relative to the first emotion in the pair (e.g., for the happy–fear pair, signal strength 1 = 90% happy/10% fear whereas signal strength 9 = 10% happy/90% fear). Data for controls are plotted as solid lines and data for abused children as dashed lines. The horizontal dashed line at P = 0.50 is plotted to help identify the threshold for each group. Data for all four emotion continua show a clear change in identity judgments as a function of changes in stimulus intensity. However, abused children overidentified anger relative to fear and sadness.

Figure 3.

Results from the emotion discrimination task for each emotion pair. Discrimination performance for the four emotion continua is plotted as proportion correct for sequential pairs of faces along each continuum. Chance performance (0.5) indicates that children had difficulty discriminating between the two sample stimuli. As can be seen, both groups of children showed discrimination peaks for morph pairs in the center of the continua. In all four conditions, discrimination scores were higher for face pairs at the predicted peaks than for pairs near the prototypes. Average discrimination scores for the control children are plotted as solid lines and those for abused children as dashed lines. For controls, peak discrimination scores were near the midpoint of each continuum. Perceptual category boundaries for abused children differed from controls only in continua that involved angry facial expressions. The shift in the abused children's peak discrimination scores relative to controls reflects broader perceptual categorization of anger relative to fear and sadness, respectively.

Results

Both the abused and control groups of children showed a clear shift in identification of emotional expressions (Fig. 2). An ANOVA performed on the category boundary estimates revealed a significant group × emotion interaction [F(3,99) = 25.27, P < 0.0001]. For the happy–sad and happy–fear continua, category boundaries were near the midpoint of the continua. However, for the two continua involving anger, the estimated category boundaries for the control group were lower than predicted, whereas those of the abused group were higher (Table 1). The identification data suggest that controls tended to underidentify anger, whereas the abused children overidentified anger. However, this shift is not definitive because it may be an artifact of the forced-choice response. If stimuli along a continuum are categorized differently by the abused children, we should observe differential peaks in the discrimination task for the two-step image pair that straddles the perceptual boundary.
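The logic of this prediction can be made concrete with a small sketch: given a boundary estimate, list the two-step image pairs that straddle it and pick the pair centered closest to it. This illustrates the reasoning only and is not a reanalysis of the data; the function names are hypothetical, and the sketch assumes that boundary estimates are expressed on the 0 to 10 image scale.

```python
def straddling_pairs(boundary, n_images=11, step=2):
    """Two-step pairs (i, i + step) whose members fall on opposite sides of the boundary."""
    return [
        (i, i + step)
        for i in range(n_images - step)
        if i < boundary < i + step
    ]


def predicted_peak_pair(boundary, n_images=11, step=2):
    """Straddling pair whose center lies closest to the boundary."""
    pairs = straddling_pairs(boundary, n_images, step)
    if not pairs:
        raise ValueError("boundary lies outside the continuum")
    return min(pairs, key=lambda pair: abs((pair[0] + pair[1]) / 2 - boundary))


# Example with a hypothetical boundary estimate of 4.6 on the image scale.
print(straddling_pairs(4.6))     # [(3, 5), (4, 6)]
print(predicted_peak_pair(4.6))  # (4, 6)
```

Pairs that only partially straddle the boundary, such as (3, 5) in the example, would still be expected to show some gain in accuracy relative to fully within-category pairs.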

As predicted, the point at which the two groups of children placed their category boundaries for facial expressions differed, with abused children displaying a boundary shift for perceptual categories of anger relative to nonmaltreated children (Fig. 3). Additionally, face pairs to either side of the peaks may partially straddle the category boundaries. Because crossing the category boundary should cause an increase in accuracy, we would expect these pairs to also be more easily discriminable than are fully within-category pairs. This expectation seems to hold. In summary, abused children displayed equivalent category boundaries to controls when discriminating continua of happiness blended into fear and sadness. However, these same children evinced different category boundaries when discriminating angry faces blended into either fear or sadness.

Discussion

The present experiment provides a rigorous empirical test of the perception of emotion in children in that both identification and discrimination performance were measured in the same individuals. These data suggest effects of experience on the formation of perceptual representations of emotion. An alternative hypothesis is that some latent genetic variable in the child, rather than experience, underlies both emotion perception and risk for maltreatment. Although this experiment cannot rule out this possibility, the likelihood of genetic effects accounting for both being abused and perception of emotion seems small. First, the differential effect observed in the abused children appears to be specific to one facial expression. Second, recent behavioral genetics research (19) suggests that the negative outcomes associated with child abuse cannot be accounted for by genetic factors. Third, sociodemographic and family background variables except for maltreatment were extremely similar between the two groups of children studied.

In short, the specificity of these effects is critical: abused children did not differ from controls in their ability to identify single exemplars of emotion or in the discrimination of emotions that did not involve anger, an emotion that may be a key signal in these children's environments. As do most children, abused children receive a vast amount of information about their environments in the form of emotional signals. We speculated that the abused child's affective environment might involve emotional signals that differ in significant ways from those of nonabused children. Within abusive home environments, anger may become particularly salient to children if it is associated with high-intensity aggressive outbursts or injury. Anger may also be expressed with greater frequency if aggression and hostility are a chronic interpersonal pattern in the home. We view the effects observed in the present study as reflecting an adaptive process for maltreated children, allowing them to better track emotional cues of anger in the environment. The cost of such a process may be, unfortunately, to overinterpret signals as threatening and perhaps make incorrect judgments about other facial expressions. The decoding of affective signals within social contexts represents an enormously complex learning problem for all children. Learning to recognize and understand the meaning of facial displays of emotion may be a function of salience and/or relative frequency. Yet relative frequency or perceptual salience alone may not be the most efficient route to learning how to recognize emotions. Instead, competence may be more readily achieved through more complex associative learning, such as effectively pairing a signal (in this case, facial expressions) with an outcome.

It would be fruitful to explore the differences reported here in paradigms that might explicate the neural mechanisms underlying categorization of emotion. For example, the present results are consistent with studies suggesting experiential malleability in the neural circuitry underlying modification of prefrontal neurons (20–22). However, the prefrontal cortex is certainly not the only brain area involved in cognitive tasks as complex as categorization of emotion. Other structures are likely to be relevant to children's emotional functioning such as temporal lobe structures and inferior temporal cortex, both of which may underlie the storage of memories and associations relevant to emotion perception, as well as attentional effects on fusiform gyrus, implicated in development of face expertise networks (23–26).

Further understanding of the neural mechanisms involved in perception of emotional expressions may be able to explicate both when and what kinds of learning about emotion are occurring across development. Little is known about how emotional expressions come to be represented in the developing brain. Regardless of whether the mechanisms used to perceive and categorize emotion are biologically specified, acquired through experience, specialized for this purpose, or generalized to various kinds of learning, it is clear that some kind of learning, refinement, or elaboration based on experience must occur as a function of the salience afforded to various affective signals in the environment.

Acknowledgments

We thank Anna Bechner for assistance in data acquisition; Richard Aslin, James Dannemiller, and Frederic Wightman for advice and discussion; and Craig Rypstat for programming. Recruitment of children for this study would not have been possible without the assistance of Robert Lee, Dane County Wisconsin Department of Human Services, Drs. Edward Musholt, Valerie Ahl, and the staff of the Children's Treatment Unit, Mendota Mental Health Institute. Paul Ekman generously allowed us to alter his stimulus set of facial images. This work was supported by grants from the National Institute of Mental Health (MH-61285) and the National Institute of Child Health and Human Development (HD-03352).

Footnotes

This paper was submitted directly (Track II) to the PNAS office.

References

- 1. Darwin C. The Expression of Emotions in Man and Animals. Chicago: Univ. of Chicago Press; 1872/1965.

- 2. Eimas P D, Siqueland E R, Jusczyk P, Vigorito J. Science. 1971;171:303–306. doi: 10.1126/science.171.3968.303.

- 3. Liberman A M, Harris K S, Hoffman H S, Griffith B C. J Exp Psychol. 1957;54:358–368. doi: 10.1037/h0044417.

- 4. Bornstein M H. In: Categorical Perception: The Groundwork of Cognition. Harnad S, editor. New York: Cambridge Univ. Press; 1987. pp. 287–300.

- 5. Williams L. Percept Psychophys. 1977;21:289–297.

- 6. Werker J F, Tees R C. Annu Rev Neurosci. 1992;15:377–402. doi: 10.1146/annurev.ne.15.030192.002113.

- 7. Werker J F, Tees R C. Infant Behav Dev. 1984;7:49–63.

- 8. Aslin R N, Jusczyk P W, Pisoni D B. In: Handbook of Child Psychology: Cognition, Perception, and Language. Damon W, Kuhn D, Siegler R S, editors. Vol. 2. New York: Wiley; 1988. pp. 147–198.

- 9. Raskin L A, Maital S, Bornstein M H. Psychol Res. 1983;45:135–145. doi: 10.1007/BF00308665.

- 10. Calder A J, Young A W, Perrett D I, Etcoff N L, Rowland D. Visual Cognit. 1996;3:81–117.

- 11. Etcoff N L, Magee J J. Cognition. 1992;44:227–240. doi: 10.1016/0010-0277(92)90002-y.

- 12. Young A W, Rowland D, Calder A J, Etcoff N L, Seth A, Perrett D I. Cognition. 1997;63:271–313. doi: 10.1016/s0010-0277(97)00003-6.

- 13. Beale J M, Keil F C. Cognition. 1995;57:217–239. doi: 10.1016/0010-0277(95)00669-x.

- 14. Morton J, Johnson M H. Psychol Rev. 1991;98:164–181. doi: 10.1037/0033-295x.98.2.164.

- 15. Nelson C A. Child Dev. 1987;58:889–909.

- 16. U.S. Department of Health and Human Services. Child Maltreatment, 1994: Reports from the States to the National Center on Child Abuse and Neglect. Washington, DC: Government Printing Office; 1996.

- 17. Camras L, Ribordy S, Hill J, Martino S, Sachs V, Spacarelli S, Stefani R. Dev Psychol. 1990;26:304–312.

- 18. Ekman P, Friesen W. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologist's Press; 1976.

- 19. Kendler K S, Bulik C M, Silberg J, Hettema J M, Myers J, Prescott C A. Arch Gen Psychiatry. 2000;57:953–959. doi: 10.1001/archpsyc.57.10.953.

- 20. Freedman D J, Riesenhuber M, Poggio T, Miller E K. Science. 2001;291:312–316. doi: 10.1126/science.291.5502.312.

- 21. Bichot N P, Schall J D, Thompson K G. Nature (London) 1996;381:697–699. doi: 10.1038/381697a0.

- 22. Rainer G, Miller E K. Neuron. 2000;27:179. doi: 10.1016/s0896-6273(00)00019-2.

- 23. Webster M J, Bachevalier J, Ungerleider L G. Cereb Cortex. 1994;4:470–488. doi: 10.1093/cercor/4.5.470.

- 24. Narumoto J, Okada T, Sadato N, Fukui K, Yonekura Y. Cognit Brain Res. 2001;12:225–231. doi: 10.1016/s0926-6410(01)00053-2.

- 25. Tomita H, Ohbayashi M, Nakahara K, Hasegawa I, Miyashita Y. Nature (London) 1999;401:699–701. doi: 10.1038/44372.

- 26. Wallis J D, Anderson K C, Miller E K. Nature (London) 2001;411:953–956. doi: 10.1038/35082081.