Abstract

Background

Applying functional anatomy to clinical examination techniques in shoulder examination is challenging for physicians at all learning stages. Anatomy teaching has shifted toward a more function-oriented approach and has increasingly incorporated e-learning. There is limited evidence on whether the integrated teaching of professionalism, clinical examination technique, and functional anatomy via e-learning is effective.

Objective

This study aimed to investigate the impact of an integrated blended learning course on the ability of first-year medical students to perform a shoulder examination on healthy volunteers.

Methods

Based on Kolb’s experiential learning theory, we designed a course on shoulder anatomy and clinical examination techniques that integrates preclinical and clinical content across all 4 stages of Kolb’s learning cycle. The study is a randomized, observer-blinded controlled trial involving first-year medical students who are assigned to one of two groups. Both groups participated in blended learning courses; however, the intervention group’s course combined clinical examination, anatomy, and professional behavior and included a peer-assisted practice session as well as a flipped classroom seminar. The control group’s course combined an online lecture with self-study and self-examination. After completing the course, participants uploaded a video of their shoulder examination. The videos were scored by 2 blinded raters using a standardized examination checklist with a total score of 40.

Results

Of the 80 participants targeted by the power calculation, 38 medical students were included. Seventeen intervention and 14 control students completed the 3-week study. The intervention group scored a mean of 34.71 (SD 1.99), and the control group scored a mean of 29.43 (SD 5.13). The difference in means of 5.3 points was statistically significant (P<.001; 2-sided Mann-Whitney U test).

Conclusions

The study shows that anatomy, professional behavior, and clinical examination skills can also be taught in an integrated blended learning approach. For first-year medical students, this approach proved more effective than online lectures and self-study.

Introduction

Although clinical guidelines recommend that imaging diagnostics be based on a structured clinical examination, primary care physicians frequently rely on imaging modalities such as magnetic resonance imaging when managing shoulder complaints [1-4]. This raises the broader issue of how well clinical anatomy and musculoskeletal examination skills are integrated and emphasized during medical education and training. In fact, both residents and medical students report substantial learning needs in musculoskeletal examination techniques, except among those with a preexisting interest in musculoskeletal specialties [5-11].

Learning clinical competencies is a complex process that can be better understood through the application of a theoretical framework [12-14]. Kolb’s experiential learning theory provides such a framework. It conceptualizes learning as a continuous cycle involving concrete experience, reflective observation, abstract conceptualization, and active experimentation [15]. This model is particularly relevant in traditional Flexnerian curricula, where preclinical and clinical training are distinctly delineated, with the cultivation of clinical competencies unfolding over the course of several semesters. This process encompasses various dimensions (such as interpreting pathology, applying appropriate examination techniques, and demonstrating professional behavior) achieved through diverse instructional methods, all within a stressful learning environment [3,11,16-20].

Due to the fragmented way students encounter clinical skills and related knowledge, anatomy education has increasingly moved toward region- and function-based integration—though this shift remains incomplete [21-25].

The advent of the COVID-19 pandemic, along with its associated restrictions on in-person interaction, accelerated the adoption of digital teaching formats in medical education. This shift aligned with a general trend toward expanding e-learning in both clinical and anatomical instruction [26-36]. Specifically, shoulder examination techniques can be taught either face-to-face or digitally through synchronous and asynchronous methods, with face-to-face formats demonstrating superior outcomes and higher learner acceptance [9,13,14,27,37]. Blended learning approaches, such as the flipped classroom, which combine digital and in-person elements, have gained increased traction since the pandemic by effectively linking clinical and anatomical content [11,25-27,32,33,38-41]. In addition, peer teaching has been shown to enhance students’ confidence in clinical examination skills and facilitate the transfer of anatomical knowledge into clinical practice [42-44].

The optimal timing for introducing clinical skills within anatomy education and how best to align these skills with examination formats remain subjects of debate [11,45-47]. Clinical skills are predominantly assessed through objective structured clinical examinations (OSCEs), which can also be conducted using video recordings [14,16,48,49].

In summary, Flexnerian curricula typically teach and assess anatomical knowledge, clinical skills, and professional behavior separately, using fragmented instructional methods that include both digital and face-to-face formats. These components correspond to distinct stages of Kolb’s experiential learning cycle—for instance, peer teaching facilitates reflective observation and abstract conceptualization [42], while clinical training fosters concrete experience and active experimentation [9,37]. However, Kolb emphasizes that meaningful and deep learning requires progression through all stages of the cycle [15]. This suggests that educational approaches intentionally integrating these phases may lead to improved learning outcomes.

Although previous studies have demonstrated that anatomy and clinical skills can be taught through both face-to-face and digital modalities [36-38,50], there remains a lack of robust empirical evidence supporting integrated teaching approaches that unify preclinical and clinical domains. Our intervention was therefore designed not only to bridge preclinical and clinical content but also to combine multiple instructional methods, aligning with Kolb’s model to support an effective learning process.

This study aimed to assess the effect of this integrated blended-learning course on first-year medical students’ clinical performance, professionalism, and anatomical knowledge.

Methods

This paper follows the CONSORT (Consolidated Standards of Reporting Trials) guidelines [51].

Study Design

The study was conducted as a 2-arm, randomized, observer-blinded intervention. The study’s acronym, TraceX, is derived from “TRansfer of AnatomiCal knowledge in the EXamination situation for preclinical medical students.” It was conducted as part of a curricular development project with the same name. The project was funded by the Medical Faculty of Tübingen University Hospital (Universitätsklinikum Tübingen [UKT]). The study protocol underwent an external peer-review process by reviewers not involved in the project. The dean of the faculty and the faculty commission approved the project in 2020.

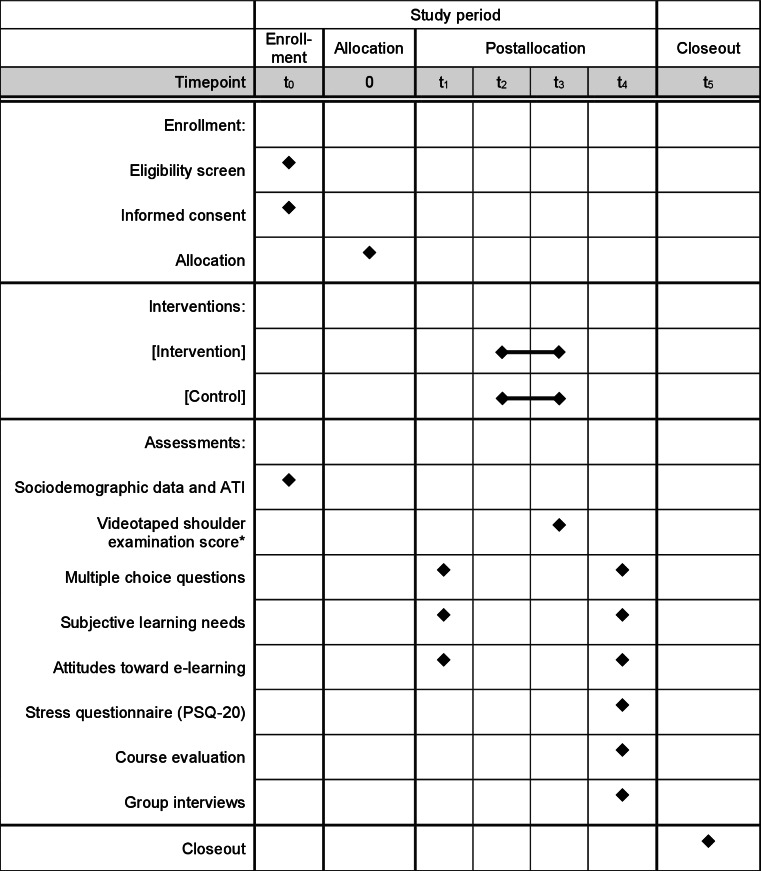

Its protocol is illustrated in Figure 1, based on the SPIRIT (Standard Protocol Items: Recommendations for Interventional Trials) figure template [52]. The study compared students’ performance in a videotaped shoulder examination after a 3-week blended-learning course. The intervention and control groups received 2 different blended-learning modules.

Figure 1. Transfer of anatomical knowledge in the examination situation for preclinical medical students (TraceX) study design protocol. The asterisk indicates the primary outcome. ATI: affinity for technology interaction; PSQ-20: Perceived Stress Questionnaire.

Study Population and Setting

The study took place at the Medical Faculty of Eberhard Karls University Tübingen in southern Germany. It was conducted between October 2021 and March 2022 by the Institute for General Practice and Interprofessional Health Care in cooperation with the Institute of Clinical Anatomy and Cell Analysis. In Tübingen, online courses are provided using the Integrated Learning, Information, and Work Cooperation System (ILIAS) online-learning platform, which is a commonly used learning management system among German universities (ILIAS open source e-Learning e.V). It allows log-in via authenticated student email accounts.

Pilot Study

Several instruments used in the study were piloted in 2020 with 18 first-year medical students. The primary purpose of the pilot study was to check interrater reliability for the primary outcome measurement instrument and to calculate the internal reliability of the self-developed questionnaire items. The results of these checks and their impact on the development of the items are listed below for each respective instrument.

In addition, participants of the pilot study were asked to assess the online learning modules. Based on their feedback, the course content was adapted. Two of the pilot study participants provided valuable comments on course benefits and weaknesses in an additional voluntary online interview. The interview served as an extended evaluation. Furthermore, LG (who later conducted the group interviews in the main study) piloted interview guidelines and received interviewer training based on the participants’ feedback.

Recruitment

Recruitment for the main study started in May 2021. First-year medical students were approached via email and in 2 online lectures. Interested students were invited to contact the Institute for General Practice’s office to receive additional information (eg, type of study, goal, significance, and participant rights). Study participation was voluntary and did not impact other courses in the curriculum. For students who did not wish to participate in the study, regular curriculum activities proceeded. The teaching coordinator (responsible for course and lecture coordination at the Institute) communicated with students but did not participate in the study design or execution. Eligibility criteria included being a first-year medical student, having provided informed consent to use ILIAS, and feeling healthy enough to participate in the study.

Intervention and Control

Intervention and control courses were developed collaboratively in interdisciplinary workshops involving general practitioners (GPs), a medical didactics expert, an orthopedic surgeon, a psychotherapist, medical students, a physiotherapist, and a medical psychologist.

Common learning objectives were developed first and then applied to both groups, which differed in instructional design and learning format. The intervention group received access to structured online modules, including physiotherapist-led examination videos, functional and topographic anatomy videos performed by an anatomy lecturer, and modules on daily life impact and professional conduct. Content was integrated across preclinical and clinical domains. After self-study, students joined a 90-minute flipped-classroom seminar (taught by the same anatomy lecturer and a GP), which included preparation for a peer-training session. Due to COVID-19 restrictions, this 90-minute peer-training session was held in decentralized student pairs or moderated small groups on Zoom (Zoom Communications Inc), with trained peer tutors facilitating feedback.

The control group followed a more traditional format with lectures and self-study. Students received literature for self-study and instructions for self-examination (eg, palpation in front of a mirror). In addition, they attended 2 distinct synchronous online lectures: one on anatomy (anatomy lecturer) and one on clinical examination (GP), based on the same content as the intervention videos but presented with still images, self-demonstration by the lecturers, and a greater separation of clinical and preclinical content.

In summary, the intervention emphasized integrated, interactive learning with structured feedback and more practice time, providing a learning process with all 4 steps of Kolb’s learning cycle [15], while the control group reflected conventional, lecture-based learning with limited interaction and a more fragmented learning process. Please refer to Figure 2 for an overview.

Figure 2. Intervention and control design. GP: general practitioner.

Randomization and Allocation

From those students who signed a written informed consent to participate in the study, basic sociodemographic data were obtained at t0 to enable stratified randomization. Randomization was undertaken in a 1:1 ratio using lists generated with the R programming package (The R Core Team, R Foundation, and R Consortium). Randomization was stratified for gender and occupational experience and used block sizes of 2 and 4. Individualized identification codes were generated by the participants in the surveys (t1 and t4) to allow for linking of presurvey and postsurvey data without compromising participant anonymity or disclosing group affiliation. All other data that could reveal group affiliation were concealed until grading of the final examinations and statistical analysis was completed. Participants were not blinded to their own group affiliation. Randomization and group allocation were performed by 2 researchers not involved in the assessment and development of course content.
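The allocation procedure described above (1:1 ratio, stratification, permuted blocks of size 2 and 4) can be illustrated with a minimal Python sketch. This is not the authors' R code: the function name, the stratum labels, and the seed are hypothetical and serve only to show the general technique.

```python
import random
from collections import defaultdict


def stratified_block_randomization(participants, block_sizes=(2, 4), seed=2021):
    """Assign participants 1:1 to two arms within strata using permuted blocks.

    participants: list of (participant_id, stratum) tuples; a stratum is any
    hashable label, e.g. (gender, has_occupational_experience).
    The seed is a hypothetical value that makes the list reproducible.
    """
    rng = random.Random(seed)

    # Group participant IDs by stratum, keeping their order of enrollment.
    by_stratum = defaultdict(list)
    for pid, stratum in participants:
        by_stratum[stratum].append(pid)

    allocation = {}
    for pids in by_stratum.values():
        block = []  # arm labels still to be handed out in the current block
        for pid in pids:
            if not block:
                # Start a new block of randomly chosen size, balanced 1:1.
                size = rng.choice(block_sizes)
                block = ["intervention", "control"] * (size // 2)
                rng.shuffle(block)
            allocation[pid] = block.pop()
    return allocation
```

Because every completed block is balanced, the arms within a stratum can differ by at most 2 participants at any point when the largest block size is 4.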

The groups received the same instructions for exam performance and grading criteria. Finally, a postcourse questionnaire was distributed to both groups, and voluntary postcourse online group interviews were conducted (t4). After study completion, members of the control group were provided full access to all e-learning resources to preclude disadvantage.

Primary Outcome

We hypothesized that an integrated course based on Kolb’s learning theory would improve first-year medical students’ performance in a standardized, complex OSCE shoulder station requiring structured clinical examination, anatomical knowledge, and professional conduct. Students in the intervention group attended the integrated course and were expected to outperform those in the control group. The primary outcome was OSCE performance at t3, assessed through a structured examination focusing on inspection, palpation, and functional movement assessment, with emphasis on identifying anatomical landmarks rather than detecting pathology.

Because contact restrictions prevented a face-to-face OSCE setting, participants recorded videos of their shoulder examinations and uploaded them to ILIAS. Video length was limited to 10 minutes and included a volunteer (peer, spouse, or family member) acting as a simulation patient. Students were instructed to ensure their volunteers had no preexisting shoulder pain or functional impairments and to obtain consent before recording. Simulation patients were asked to follow the students’ instructions and cooperate during the examination, creating a controlled environment intended to minimize bias introduced by the simulation patients.

The uploaded videos were assessed independently by a pair of blinded raters (a GP and a medical student) using an examination checklist. The checklist comprised a total of 40 items, including 12 items on anatomical knowledge, such as topography and functional anatomy, 17 items on structured clinical examination skills, and 9 items on medical professionalism. It was developed in multidisciplinary workshops based on existing literature [14] and items from the OSCE used at UKT [53]. In the pilot study, the most congruent pair of raters (a medical student and a GP) achieved an interrater reliability of κ=0.573 (Cohen κ). Because this agreement was only moderate, several items were operationalized more precisely to better standardize the examiners’ ratings [54]. The final version of the examination checklist used in this study is included as Multimedia Appendix 1.
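For readers unfamiliar with the Cohen κ statistic reported above, the following Python sketch shows how chance-corrected agreement between 2 raters is computed. The function name and the item-level ratings in the usage note are hypothetical; the unit at which the authors computed κ is not specified here, so this illustrates the statistic only.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen kappa for two raters who judged the same items.

    rater_a, rater_b: equally long sequences of categorical ratings,
    e.g. 1 = checklist item fulfilled, 0 = not fulfilled.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must rate the same, nonempty set of items")
    n = len(rater_a)

    # Observed proportion of items on which both raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    if expected == 1:
        raise ValueError("Kappa is undefined when both raters use one category only")
    return (observed - expected) / (1 - expected)
```

With the hypothetical ratings `[1, 1, 0, 0, 1, 0, 1, 1, 0, 1]` and `[1, 0, 0, 0, 1, 0, 1, 1, 1, 1]`, the raters agree on 8 of 10 items, chance agreement is 0.52, and κ is approximately 0.58.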

Secondary Outcomes

Participants’ subjective learning needs were assessed across three domains—anatomical knowledge, clinical examination skills, and professionalism—at time points t1 and t4 using a questionnaire. This questionnaire was developed during an interdisciplinary workshop and subsequently piloted in the initial study phase. The sample of 18 pilot study participants showed a Cronbach α of 0.92. The domain “anatomical knowledge” contained 5 items (ie, visible landmarks, anatomical structure of the shoulder, function, range of motion, and association of function with activities of daily living). The domain “clinical examination” also consisted of 5 items (ie, structured examination steps, history taking, shoulder inspection, shoulder palpation, and test of function). Finally, the domain “professionalism” was covered with 4 items (ie, use of patient-centered language, autonomous handling of the examination situation, perceptiveness toward patient feedback, and addressing patient feedback during the examination). All items were operationalized on a 4-point Likert scale. This yielded sum scores on a 5- to 20-point range and a 4- to 16-point range, respectively.

Theoretical anatomical knowledge was assessed before and after the course (at t1 and t4) using the same set of 10 randomized multiple-choice questions (MCQs). Each question had 5 answer options, with only 1 correct answer, resulting in a knowledge score ranging from 0 to 10. These MCQs reflect the current standard used in the written state medical examination in Germany.

Affinity for technology was assessed with 4 Likert-scale items of the standardized Affinity for Technology Interaction Short Scale (ATI-S), yielding a sum score on a 4- to 24-point scale. The ATI-S is a reliable (Cronbach α 0.88-0.92) and validated instrument [55].

The course evaluation included 5 items adapted from the standard evaluation used by the Medical Faculty of Tübingen, covering overall assessment, contribution to personal learning, curriculum alignment, course structure, and clarity of learning goals. Cronbach α for these items was 0.741 based on pilot study data. The evaluation was conducted online via ILIAS at time point t4. In addition, several items assessing blended learning formats were incorporated at t1 and t4, aiming to assess attitudes toward blended learning before and after the intervention. Students were asked to rate whether blended learning promotes self-directed learning, encourages lesson preparation and review, supports exam readiness, enhances curricular value, enables flexible learning, and increases study satisfaction.

Participants were additionally invited to online group interviews at t4. The interviews were analyzed with thematic analysis [56]. An in-depth mixed methods evaluation that includes the interviews will be published separately.

To evaluate the possible impact of the intervention on perceived stress, the validated and standardized 20-item Perceived Stress Questionnaire (PSQ-20) was applied at t4. It contains 20 items with 4 subscales: worries, tension, joy, and demands. Cronbach α is 0.80-0.86 [57].

Statistical Analysis

General

Data were analyzed using SPSS (version 27; IBM Corp). Summary statistics on participants’ demographic characteristics, baseline information, course evaluation, and primary outcome were computed using means, SDs, and proportions.

The following statistical tests were used to assess group differences in primary and secondary outcomes. For the primary outcome and exploratory analysis of subscores, 2-sided Mann-Whitney U tests were performed because the data were not assumed to be normally distributed. For between-group comparisons of nominal variables, chi-square (χ²) tests were performed or, if any cell count was less than 5, the Fisher exact test. For the secondary outcomes on within-group changes from preintervention to postintervention, Wilcoxon signed rank tests were performed.
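To make the between-group comparison concrete, the sketch below computes the Mann-Whitney U statistic and a 2-sided P value in plain Python. It is an illustration under stated assumptions: it uses the normal approximation without tie or continuity correction, whereas the analysis reported here was run in SPSS with exact significance. The function name and the scores in the usage note are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def mann_whitney_u(x, y):
    """Return (U statistic for x, approximate 2-sided P value).

    U counts, over all pairs (xi, yj), how often xi exceeds yj;
    ties contribute 0.5. The P value uses the normal approximation
    without tie or continuity correction (a simplifying assumption).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("Both samples must be nonempty")

    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5

    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return u, p_two_sided
```

For example, `mann_whitney_u([5, 6, 7], [1, 2, 3])` returns a U of 9, the maximum for 2 groups of 3, because every score in the first group exceeds every score in the second. For samples this small, an exact test is preferable to the approximation.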

Concerning the internal reliability of questionnaires and evaluation based on the pilot study data, Cronbach α was calculated. We also calculated κ statistics underlying the primary outcome for assessment of interrater reliability in examination grading.
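As an illustration of the internal consistency measure named above, the following Python sketch computes Cronbach α from a respondents-by-items matrix of ratings. The function name and the data in the usage note are hypothetical and do not reproduce the pilot study data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach alpha for a list of respondent rows.

    scores: list of rows, one per respondent, each row holding that
    respondent's ratings on the k questionnaire items.
    """
    n_respondents = len(scores)
    k = len(scores[0]) if scores else 0
    if n_respondents < 2 or k < 2:
        raise ValueError("Need at least 2 respondents and 2 items")

    # Sample variance of each item across respondents.
    item_variances = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of the respondents' sum scores.
    total_variance = variance([sum(row) for row in scores])

    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

With the hypothetical ratings `[[1, 2], [2, 1], [3, 3], [4, 4]]` (4 respondents, 2 items), α is approximately 0.89. Values closer to 1 indicate that the items vary together.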

The study’s intention-to-treat approach considers possible contamination effects by between-group communication or student account misuse between groups.

Power

Power analysis suggested a sample size of 62 participants to detect an intergroup difference in examination scores of 16% with an SD of 20% and an estimated effect size of 0.71 when using 2-sided t tests for independent samples at a 5% significance level [58]. With a 25% buffer for dropouts, the target total sample size was 80 participants.
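As a rough plausibility check of a sample size of this kind, the normal-approximation formula for a 2-sided comparison of 2 independent groups can be sketched in Python. This is not the authors' calculation: the power of 80% is an assumption (the power level is not stated here), and the function name is illustrative.

```python
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a 2-sided, 2-sample test.

    Uses the normal approximation n = 2 * ((z_alpha + z_beta) / d) ** 2.
    The default power of 0.80 is an assumption, not taken from the study.
    """
    if effect_size <= 0:
        raise ValueError("Effect size must be positive")
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # 2-sided significance level
    z_beta = standard_normal.inv_cdf(power)
    return 2 * ((z_alpha + z_beta) / effect_size) ** 2
```

Under these assumptions, an effect size of 0.71 yields roughly 31 participants per group, which is close to the reported total of 62. A calculation based on the t distribution would give a slightly larger number.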

Ethical Considerations

The study was assessed by the Ethics Committee of the Medical Faculty of Tübingen University on May 4, 2020 (231/2020BO1), and did not require ethical approval. Study participation was voluntary, and students provided informed consent to participate in the study and access the respective course material in ILIAS. No financial inducement was given for study participation. However, all participating students received a bookstore voucher to compensate for their time commitment. Furthermore, participants who completed the study requirements were eligible to enter a lottery drawing for one iPad. Data were stored on ISO 27001–certified servers at UKT. The trial protocol is available from the authors upon request.

Results

Study Flow

Of 39 enrolled students, 38 were allocated to the intervention and control groups. In each group, one participant did not sign in to ILIAS despite having signed informed consent. In the intervention group, one student dropped out before analysis due to an unresolvable video error. The control group had 4 dropouts who did not upload a video. In total, analyzable data from 31 study participants were obtained. Figure 3 shows the study flow (CONSORT chart).

Figure 3. CONSORT (Consolidated Standards of Reporting Trials) study flowchart.

Sociodemographic and Occupational Characteristics

The majority of participants had no current employment. The intervention group had 2 more participants currently employed in the medical sector. Statistically, neither current nor past employment differed significantly between groups. Participant characteristics at baseline (t1) are presented in Table 1.

Table 1. Participant characteristics (n=18 per group).

| Characteristic | Intervention | Control | P value |
|---|---|---|---|
| Age (years), mean (SD) | 21.78 (3.56) | 21.94 (5.68) | .92<sup>a</sup> |
| Female<sup>b</sup>, n (%) | 13 (72) | 14 (78) | ≥.99<sup>c</sup> |
| Previous occupational experience, n (%) | | | |
| Yes | 9 (50) | 8 (44) | .74<sup>d</sup> |
| Currently employed, n (%) | | | |
| Yes, medical sector | 4 (22) | 2 (11) | .73<sup>c</sup> |
| Yes, nonmedical sector | 1 (6) | 2 (11) | —<sup>e</sup> |
| No | 13 (72) | 13 (72) | — |
| Missing | 0 (0) | 1 (6) | — |

<sup>a</sup>Student t test.

<sup>b</sup>No participant identified as nonbinary.

<sup>c</sup>Fisher exact test.

<sup>d</sup>Chi-square test.

<sup>e</sup>Not applicable.

Primary Outcome

Overview

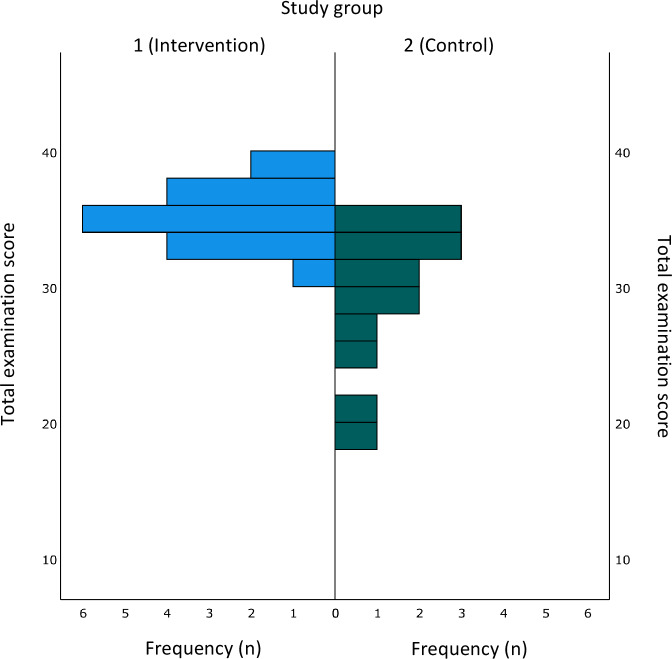

Intervention group participants reached higher mean performance scores compared to those in the control group (mean 34.71, SD 1.99 vs mean 29.43, SD 5.13), with differences being statistically significant at P<.001. Score distributions are shown in Figure 4. Interrater reliability for the shoulder examination checklist score between the two blinded raters was 0.763.

Figure 4. Distribution of sum scores in the graded examination per group.

Exploratory Analysis of Primary Outcome Subscores

We conducted a post hoc exploratory analysis of the primary outcome subscores: anatomical knowledge, clinical examination skills, and professionalism. In the anatomy subscore, the intervention group performed slightly better than the control group, with the difference reaching statistical significance (P=.02). For clinical examination skills, the difference between groups was less pronounced but remained statistically significant (P=.05). The professionalism subscore did not differ significantly between groups (P=.15). Table 2 summarizes the results of this analysis.

Table 2. Exploratory analysis of primary outcome subscores.

| Variable | Intervention, mean (SD) | Control, mean (SD) | P value<sup>a</sup> |
|---|---|---|---|
| Total performance score (40 items, primary outcome) | 34.71 (1.99) | 29.43 (5.13) | <.001 |
| Anatomical knowledge (12-item subscore) | 9.88 (1.73) | 8.21 (1.93) | .02 |
| Clinical examination (17-item subscore) | 17.88 (0.86) | 15.14 (3.92) | .05 |
| Medical professionalism (9-item subscore) | 6.94 (0.90) | 6.07 (1.44) | .15 |

<sup>a</sup>Mann-Whitney U test, exact significance.

Secondary Outcomes

Both groups showed a significant reduction in self-reported learning needs in anatomy and clinical examination. MCQ performance improved significantly in both groups after the course, with no significant differences between groups. Participants in the intervention group spent, on average, 2 hours more in the learning module than those in the control group, a statistically significant difference. No relevant group differences were found in attitudes toward blended learning. In the course evaluation, the intervention group rated the blended learning experience and overall course evaluation less favorably but gave higher ratings for the achievement of learning goals and curricular alignment compared to the control group.

Table 3 provides an overview of the secondary outcomes. Group differences at t1 were not significant and are not included.

Table 3. Secondary outcomes.

| Measurement | Dropout (n=5), t1, mean (SD) | Intervention (n=17), t1, mean (SD) | Intervention, t4, mean (SD) | Difference, t4-t1 | P value<sup>a</sup> (intervention) | Control (n=14), t1, mean (SD) | Control, t4, mean (SD) | Difference, t4-t1 | P value<sup>a</sup> (control) | Difference intervention-control (time); positive: favors intervention | P value<sup>b</sup> |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Affinity for technology interaction | 11.2 (4.55) | 12.65 (3.52) | —<sup>c</sup> | — | — | 14 (2.96) | — | — | — | −1.35 (t1) | .36 |
| Time spent (hours) | — | — | 6.06 (2.42) | — | — | — | 4.0 (1.76) | — | — | 2.06 (t4) | .01 |
| Perceived Stress Questionnaire score, index | — | — | 0.46 (0.09) | — | — | — | 0.47 (0.11) | — | — | −0.01 (t4) | .98 |
| Learning needs | | | | | | | | | | | |
| Subscore anatomy | 17.2 (2.17) | 15.76 (2.73) | 9.53 (2.27) | −6.24 | .00 | 15.57 (3.8) | 10.43 (2.06) | −5.14 | .00 | −0.90 (t4) | .22 |
| Subscore professional conduct | 11.6 (3.78) | 8.76 (2.75) | 6.82 (2.43) | −1.94 | .00 | 9.5 (4.07) | 8.07 (2.06) | −1.43 | .13 | −1.25 (t4) | .19 |
| Subscore examination skills | 19 (1.73) | 18.41 (1.77) | 9 (2.35) | −9.41 | .00 | 18.43 (3.52) | 11.5 (2.47) | −6.93 | .00 | −2.50 (t4) | .01 |
| MCQs<sup>d</sup> | 4.6 (2.07) | 5.53 (2) | 6.94 (1.43) | 1.41 | .01 | 4.71 (2.09) | 6.5 (1.83) | 1.79 | .00 | 0.82 (t4) | .63 |
| Attitudes toward blended learning (mean item score; 1=strongly disagree, 5=strongly agree) | | | | | | | | | | | |
| Facilitate continuous self-sufficient learning | 2.4 (1.14) | 2.76 (1.2) | 2.65 (1.32) | −0.12 | .56 | 2.93 (0.73) | 2.79 (0.89) | −0.14 | .63 | −0.14 (t4) | .68 |
| Motivate me to prepare for or follow up on classroom events | 2.8 (1.3) | 2.94 (0.93) | 2.94 (1.02) | 0.00 | 1 | 2.93 (0.83) | 2.57 (0.51) | −0.36 | .17 | 0.37 (t4) | .32 |
| Support me in preparing for exams | 2 (1.23) | 2.65 (0.93) | 2.59 (0.8) | −0.06 | .76 | 2.50 (0.86) | 2.29 (0.61) | −0.21 | .37 | 0.3 (t4) | .23 |
| Provide added value in learning | 4.2 (1.1) | 3.35 (1) | 3.47 (1.07) | 0.12 | .6 | 3.43 (0.76) | 3.36 (0.63) | −0.07 | .74 | 0.11 (t4) | .92 |
| Allow learning at any time and from any place | 1.2 (0.45) | 1.59 (0.87) | 1.53 (0.87) | −0.06 | .65 | 1.14 (0.36) | 1.86 (0.86) | 0.72 | .01 | −0.33 (t4) | .22 |
| Increase satisfaction with my studies | 2.2 (1.3) | 3.18 (1.07) | 2.71 (1.26) | −0.47 | .05 | 3.57 (0.85) | 3.14 (0.66) | −0.43 | .11 | −0.43 (t4) | .22 |
| Facilitate my learning | 2.2 (1.1) | 2.76 (1.25) | 2.47 (1.18) | −0.29 | .06 | 2.71 (0.83) | 3.07 (0.73) | 0.36 | .21 | −0.6 (t4) | .11 |
| Evaluation (mean item score; 1=strongly disagree, 5=strongly agree) | | | | | | | | | | | |
| The blended learning module was well executed | — | — | 1.94 (0.83) | — | — | — | 2.86 (0.66) | — | — | −0.92 (t4) | .01 |
| Learning goals were clearly defined | — | — | 4.24 (0.56) | — | — | — | 3.21 (0.8) | — | — | 1.03 (t4) | .00 |
| The course was clearly structured | — | — | 4.59 (0.5) | — | — | — | 3.57 (0.94) | — | — | 1.02 (t4) | .00 |
| The course aligned well with the curriculum | — | — | 4.35 (0.6) | — | — | — | 3.57 (1.16) | — | — | 0.78 (t4) | .03 |
| The course contributed to my learning success | — | — | 4.06 (0.75) | — | — | — | 3.57 (1.1) | — | — | 0.49 (t4) | .17 |
| Course assessment (grade; 1=very good, 6=insufficient) | — | — | 1.88 (0.6) | — | — | — | 2.57 (0.85) | — | — | −0.69 (t4) | .03 |

<sup>a</sup>Wilcoxon signed rank test.

<sup>b</sup>Mann-Whitney U test.

<sup>c</sup>Not applicable.

<sup>d</sup>MCQ: multiple-choice question.

Discussion

Principal Findings

Overview

This randomized, observer-blinded trial tested whether an integrated course based on Kolb’s learning theory would improve first-year medical students’ OSCE performance. Students in the intervention group outperformed the control group after a 3-week course. Both groups showed reduced learning needs, though intervention students spent approximately 2 hours more in the course. The study confirmed the hypothesis but had limitations to consider.

Clinical Examination Skills

The observed difference in clinical examination performance between the 2 groups corresponded to a significant reduction in learning needs related to examination skills. These findings align with previous studies. Brewer et al [37] found that second-year students who received face-to-face instruction in clinical examination skills performed better than those in asynchronous e-learning or self-study groups, with the self-study group achieving the lowest scores. Their study used simulated patients without pathology and included a broader range of clinical tests. Both Brewer et al [37] and Vivekananda-Schmidt et al [13] reported high effect sizes for OSCE shoulder performance when students supplemented regular studies with asynchronous computer-assisted learning. Vivekananda-Schmidt et al [13] also noted improved student confidence with computer-assisted learning; we did not assess confidence because of its limited relevance to performance [7,12].

Anatomical Knowledge

Participants in the intervention group demonstrated improved clinical examination performance without a negative impact on their theoretical anatomical knowledge, as measured by MCQs. We believe that the exclusion of advanced clinical examination tasks such as the Jobe test or Hawkins sign from the learning goals contributed to the increase in anatomical knowledge demonstrated in the exam and the more pronounced reduction of anatomical learning needs in the intervention group. According to the participating preclinical and clinical teachers in the multidisciplinary workshop, these tests require extensive knowledge of clinical pathology and might confuse first-year medical students. This notion is in line with existing research that advises careful didactic planning of anatomical content [2,3,11].

Although the online instruction in our study offered only basic anatomical content—focusing on muscles, bones, and function while underrepresenting coverage of nerves and vessels—both groups had recently attended curricular lectures designed to provide deeper anatomical knowledge. After the course, participants in both groups could identify anatomical structures and assess normal findings in a structured way. The key difference was that the intervention group had the chance to apply their theoretical knowledge in practice and receive structured feedback during the peer tutoring session [11,42]. Our findings suggest that early integration of practical experience does not hinder but may, in fact, support deeper anatomical and clinical learning if implemented appropriately [3,11,25].

Professional Conduct

Neither the professional subscore in the shoulder examination nor learning needs showed significant differences between groups. Professional conduct might represent a subjective feeling of confidence rather than a measurable outcome. It may not have been adequately operationalized in this study; for example, the relatively passive role of the standardized patients posed no real challenges for professionalism. In addition, too little time was allocated to student-teacher interaction and exchange about professional conduct, a common shortcoming of blended learning [32]. This suggests that some aspects of learning should be performed face-to-face and that time must be allocated for this interaction [26,30,32]. Also, professional conduct is difficult to teach explicitly and is often learned from various sources over a long period of time [13,18,19,27]. Social learning elements play a crucial role in fostering professionalism even in a predominantly remote learning environment [32]. Professionalism thrives through direct interaction between instructors and learners. This insight will inform the curricular integration of our learning module.

In summary, this study shows that clinical examination skills can successfully be taught early in the curriculum using a blended learning approach. The reduction in learning needs was not dependent on current or past employment or different levels of preexisting anatomical knowledge. We controlled for differences in gender and prior occupation through randomization. A baseline performance check using a structured examination before the study would perhaps have shown a clearer dependence on preexisting examination skills [59].

Dropout Analysis

The 5 dropouts had higher subjective learning needs at t1, scored lower in the MCQ knowledge test, and had a slightly lower technical affinity. Their attitudes toward blended learning differed from those of the other study participants. While they believed that blended learning provided added value to learning, they were less confident that it helped them prepare for exams or increase personal satisfaction with their studies. The dropout sample was too small for statistical analyses. However, there are 2 ways to interpret this: there might be a subgroup of students with such low expectations of blended learning that they might not use the method if given the choice [60], or the small study population could reflect a selection bias of motivated, stress-resilient, technology-affine, high-performing students who would do well in any learning environment [32].

Other Secondary Outcomes

The intervention group required, on average, 2 additional hours to complete the course compared to the control group. This was due to engagement with video lectures and demonstrations, as well as participation in the peer-assisted synchronous learning session, all of which required learners to allocate additional time, even though they had greater flexibility to choose when and where to engage with most of the content than the control group. Similar increases in workload have been observed in other studies on blended learning formats [36].

This interpretation is further supported by the intervention group’s high ratings of course structure, clarity of learning objectives, and perceived alignment with the curriculum.

Although overall stress levels were high in both groups at t4, they were comparable to those of other medical student cohorts [20]. We therefore infer that the increased workload did not result in a heightened perception of stress among intervention group participants.

Technical affinity was equally high across both groups, consistent with findings that current medical student cohorts are generally digitally literate [27]. For our sample, this suggests that time invested in studying, rather than digital affinity, was more strongly associated with learning success [46,47].

Implications for Curricular Development

When Should Clinical Aspects in Anatomy Learning Be Integrated?

Our study supports the integration of anatomy teaching and clinical examination early in the curriculum. Both study groups, exposed to clinical examination content during the first year, reported reduced learning needs and demonstrated measurable skill acquisition without compromising anatomical knowledge. These findings suggest that early competency development is feasible and particularly effective when teaching content and assessment formats are well aligned [10,14,21-25,45].

What is the Role of Blended Learning in Integrated Clinical and Anatomy Learning?

Our study demonstrated that an intervention using synchronous peer-assisted clinical training paired with asynchronous training videos in a flipped-classroom seminar improved student outcomes compared to self-study and synchronous online lectures, aligning with previous studies [30,37]. However, despite improved performance, students reported a less satisfactory blended learning experience, highlighting the challenge of effectively implementing such approaches [27,29]. Based on our findings, key factors for successful blended learning include clear learning goals, structured delivery, and theory-guided integration of content and teaching methods, which may be more influential than the delivery format itself on student evaluation [32,33]. In addition, effective implementation must account for contextual factors such as the COVID-19 pandemic, which may have influenced student perceptions in our study [26]. These findings highlight that while blended learning can enhance outcomes, its effectiveness depends on thoughtful design, clear objectives, and contextual factors—underscoring the need for nuanced, context-aware research in this field [41].

Limitations

This study is limited by its small sample size: although a power analysis indicated a need for 80 participants, only 38 were recruited and 31 completed the trial. While randomization controlled for gender and professional experience, the small sample size limits generalizability and prevents definitive conclusions about causality.
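To put the completed sample in perspective, the reported group means and SDs can be converted into a standardized effect size. The sketch below is illustrative only. It computes Cohen's d with a pooled SD, which is a parametric measure and not the analysis used in this study (the primary comparison was a 2-sided Mann-Whitney U test), and the function name `cohens_d` is hypothetical. Because the group SDs differ markedly, the result should be read as a rough orientation rather than a precise estimate.

```python
import math


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference based on the pooled standard deviation.

    Assumption: a parametric effect size is used here for illustration only;
    the study itself compared groups with a Mann-Whitney U test.
    """
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


# Summary statistics as reported in the Results (checklist score out of 40):
# intervention: mean 34.71, SD 1.99, n=17; control: mean 29.43, SD 5.13, n=14.
d = cohens_d(34.71, 1.99, 17, 29.43, 5.13, 14)
print(f"Cohen's d = {d:.2f}")
```

A value of this size would conventionally be called large, but with 31 completers its uncertainty is wide, which is consistent with the caution expressed above.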

Recruitment was difficult despite incentives, likely due to increased student workload during the COVID-19 pandemic [26,27]. Although results were promising, the recruitment shortfall and end of funding prevented a second cohort. In addition, curriculum changes during the later stages of the pandemic affected the study environment, leading us to conclude the trial and prioritize efforts to integrate the intervention into the curriculum.

Concerning the main outcome, the intervention course provided learners with tailored modular learning material and feedback opportunities, in contrast to the control group. Importantly, both groups received teaching on shoulder anatomy and clinical skills—a more integrated approach than the regular curriculum—and identical guidance on the performance assessment. This substantially reduces the study’s ability to isolate the effect of blended versus traditional learning. However, both groups showed knowledge gains, suggesting that early integration of clinical content is effective overall. The superior performance in the intervention group likely reflects not only the learning format but also the stronger alignment between the instructional design and the assessment tasks—consistent with our hypothesis that an integrated, theory-based course would improve clinical competency [47].

A recognition effect on anatomical knowledge cannot be excluded, as the same MCQs were repeated at t4. However, student performance and learning needs indicated stable or improved knowledge. Peer learning sessions and exams were intended to be conducted face-to-face but were instead held via videoconferencing due to COVID-19. Although this deviated from the protocol, comparable learning outcomes have been reported in other studies. Video-recorded OSCEs are an established method [16], and only one upload failure occurred in our study. Interobserver reliability was substantial and consistent with other studies [9,14,37].

Conclusions

First-year medical students’ clinical performance on shoulder examinations can objectively be improved by an integrated blended-learning course in anatomy, professional conduct, and clinical examination skills. The results encourage early integration of clinical examination skills in preclinical medical education. Didactically, early clinical examination courses should focus on functional anatomy, a structured examination approach, and healthy subjects. Methodologically, the content can be delivered using blended learning that considers social aspects of learning and aligns the content to a standardized shoulder examination simulating a single OSCE station. If a face-to-face exam is not possible, videotaped examinations are a viable alternative. This study does not provide information about the sustainability of the learning. A cohort study that follows the progression through surface and deep learning phases to the transfer phase in this integrative approach is warranted [11].

Supplementary material

Acknowledgments

We thank Anna-Jasmin Wetzel and Nadine Nicole Koch for their support in the randomization process. We also thank all participants of the pilot study and our medical student tutors who moderated the online training sessions. The generative artificial intelligence tool ChatGPT (OpenAI) was used to revise the language and style of the manuscript, which were further reviewed and revised by the study group. The authors acknowledge support from the Open Access Publication fund of the University of Tübingen. The project received intramural funds from UKT (Profil Plus funds for curricular development).

Abbreviations

- ATI-S: Affinity for Technology Interaction Short Scale

- CONSORT: Consolidated Standards of Reporting Trials

- GP: general practitioner

- ILIAS: Integrated Learning, Information, and Work Cooperation System

- MCQ: multiple-choice question

- OSCE: objective structured clinical examination

- PSQ-20: 20-item Perceived Stress Questionnaire

- SPIRIT: Standard Protocol Items: Recommendations for Interventional Trials

- TraceX: Transfer of anatomical knowledge in the examination situation for preclinical medical students

- UKT: Universitätsklinikum Tübingen

Footnotes

Data Availability: The datasets generated or analyzed during this study are not publicly available due to data protection regulations but are available from the corresponding author on reasonable request.

Authors’ Contributions: TS and RK contributed to the conceptualization of the study, with additional input from LG and TFW. Data curation and formal analysis were performed by NG, LG, and RK. TS and RK were responsible for funding acquisition. LG, NG, and RK conducted the investigation. Methodology was developed by NG, LG, RK, and TFW. Project administration was carried out by NG, LG, and RK. SJ, BH, and TS provided resources, while RK and NG developed the software. Supervision was provided by SJ, BH, and TS. Validation was carried out by SJ, BH, and TS. RK, NG, and LG were responsible for visualization. RK, LG, and NG prepared the original draft, and BH, TS, TFW, and SJ reviewed and edited the manuscript.

Conflicts of Interest: None declared.

References

- 1. Kjelle E, Andersen ER, Krokeide AM, et al. Characterizing and quantifying low-value diagnostic imaging internationally: a scoping review. BMC Med Imaging. 2022 Apr 21;22(1):73. doi: 10.1186/s12880-022-00798-2.

- 2. Mitchell C, Adebajo A, Hay E, Carr A. Shoulder pain: diagnosis and management in primary care. BMJ. 2005 Nov 12;331(7525):1124–1128. doi: 10.1136/bmj.331.7525.1124.

- 3. Meder A, Stefanescu MC, Ateschrang A, et al. Evidence-based examination techniques for the shoulder joint. Z Orthop Unfall. 2021 Jun;159(3):332–335. doi: 10.1055/a-1440-2242.

- 4. Cortes A, Quinlan NJ, Nazal MR, Upadhyaya S, Alpaugh K, Martin SD. A value-based care analysis of magnetic resonance imaging in patients with suspected rotator cuff tendinopathy and the implicated role of conservative management. J Shoulder Elbow Surg. 2019 Nov;28(11):2153–2160. doi: 10.1016/j.jse.2019.04.003.

- 5. Kroop SF, Chung CP, Davidson MA, Horn L, Damp JB, Dewey C. Rheumatologic skills development: what are the needs of internal medicine residents? Clin Rheumatol. 2016 Aug;35(8):2109–2115. doi: 10.1007/s10067-015-3150-4.

- 6. Denizard-Thompson N, Feiereisel KB, Pedley CF, Burns C, Campos C. Musculoskeletal basics: the shoulder and the knee workshop for primary care residents. MedEdPORTAL. 2018 Sep 15;14:10749. doi: 10.15766/mep_2374-8265.10749.

- 7. Day CS, Yeh AC. Evidence of educational inadequacies in region-specific musculoskeletal medicine. Clin Orthop Relat Res. 2008 Oct;466(10):2542–2547. doi: 10.1007/s11999-008-0379-0.

- 8. Day CS, Yeh AC, Franko O, Ramirez M, Krupat E. Musculoskeletal medicine: an assessment of the attitudes and knowledge of medical students at Harvard Medical School. Acad Med. 2007 May;82(5):452–457. doi: 10.1097/ACM.0b013e31803ea860.

- 9. Stansfield RB, Diponio L, Craig C, et al. Assessing musculoskeletal examination skills and diagnostic reasoning of 4th year medical students using a novel objective structured clinical exam. BMC Med Educ. 2016 Oct 14;16(1):268. doi: 10.1186/s12909-016-0780-4.

- 10. Skelley NW, Tanaka MJ, Skelley LM, LaPorte DM. Medical student musculoskeletal education: an institutional survey. J Bone Joint Surg Am. 2012 Oct 3;94(19):e146. doi: 10.2106/JBJS.K.01286.

- 11. Cheung CC, Bridges SM, Tipoe GL. Why is anatomy difficult to learn? The implications for undergraduate medical curricula. Anat Sci Educ. 2021 Nov;14(6):752–763. doi: 10.1002/ase.2071.

- 12. Vivekananda-Schmidt P, Lewis M, Hassell AB, et al. Validation of MSAT: an instrument to measure medical students’ self-assessed confidence in musculoskeletal examination skills. Med Educ. 2007 Apr;41(4):402–410. doi: 10.1111/j.1365-2929.2007.02712.x.

- 13. Vivekananda-Schmidt P, Lewis M, Hassell AB, ARC Virtual Rheumatology CAL Research Group. Cluster randomized controlled trial of the impact of a computer-assisted learning package on the learning of musculoskeletal examination skills by undergraduate medical students. Arthritis Rheum. 2005 Oct 15;53(5):764–771. doi: 10.1002/art.21438.

- 14. Battistone MJ, Barker AM, Beck JP, Tashjian RZ, Cannon GW. Validity evidence for two objective structured clinical examination stations to evaluate core skills of the shoulder and knee assessment. BMC Med Educ. 2017 Jan 13;17(1):13. doi: 10.1186/s12909-016-0850-7.

- 15. Kolb DA. Experiential Learning: Experience as the Source of Learning and Development. Financial Times/Prentice Hall; 2015. ISBN 0-13-389240-9.

- 16. Vivekananda-Schmidt P, Lewis M, Coady D, et al. Exploring the use of videotaped objective structured clinical examination in the assessment of joint examination skills of medical students. Arthritis Rheum. 2007 Jun 15;57(5):869–876. doi: 10.1002/art.22763.

- 17. Zabel J, Sterz J, Hoefer SH, et al. The use of teaching associates for knee and shoulder examination: a comparative effectiveness analysis. J Surg Educ. 2019;76(5):1440–1449. doi: 10.1016/j.jsurg.2019.03.006.

- 18. Cappi V, Artioli G, Ninfa E, et al. The use of blended learning to improve health professionals’ communication skills: a literature review. Acta Biomed. 2019 Mar 28;90(4-S):17–24. doi: 10.23750/abm.v90i4-S.8330.

- 19. Shiozawa T, Griewatz J, Hirt B, Zipfel S, Lammerding-Koeppel M, Herrmann-Werner A. Development of a seminar on medical professionalism accompanying the dissection course. Ann Anat. 2016 Nov;208:208–211. doi: 10.1016/j.aanat.2016.07.004.

- 20. Heinen I, Bullinger M, Kocalevent RD. Perceived stress in first year medical students - associations with personal resources and emotional distress. BMC Med Educ. 2017 Jan 6;17(1):4. doi: 10.1186/s12909-016-0841-8.

- 21. Reynolds A, Goodwin M, O’Loughlin VD. General trends in skeletal muscle coverage in undergraduate human anatomy and anatomy and physiology courses. Adv Physiol Educ. 2022 Jun 1;46(2):309–318. doi: 10.1152/advan.00084.2021.

- 22. Burger A, Huenges B, Köster U, et al. 15 years of the model study course in medicine at the Ruhr University Bochum. GMS J Med Educ. 2019;36(5):Doc59. doi: 10.3205/zma001267.

- 23. Simon M, Martens A, Finsterer S, Sudmann S, Arias J. The Aachen model study course in medicine - development and implementation. Fifteen years of a reformed medical curriculum at RWTH Aachen University. GMS J Med Educ. 2019;36(5):Doc60. doi: 10.3205/zma001268.

- 24. McBride JM, Drake RL. National survey on anatomical sciences in medical education. Anat Sci Educ. 2018 Jan;11(1):7–14. doi: 10.1002/ase.1760.

- 25. Khalil MK, Giannaris EL, Lee V, et al. Integration of clinical anatomical sciences in medical education: design, development and implementation strategies. Clin Anat. 2021 Jul;34(5):785–793. doi: 10.1002/ca.23736.

- 26. Shin M, Prasad A, Sabo G, et al. Anatomy education in US Medical Schools: before, during, and beyond COVID-19. BMC Med Educ. 2022 Feb 16;22(1):103. doi: 10.1186/s12909-022-03177-1.

- 27. Xiao J, Evans DJR. Anatomy education beyond the Covid-19 pandemic: a changing pedagogy. Anat Sci Educ. 2022 Nov;15(6):1138–1144. doi: 10.1002/ase.2222.

- 28. Park H, Shim S, Lee YM. A scoping review on adaptations of clinical education for medical students during COVID-19. Prim Care Diabetes. 2021 Dec;15(6):958–976. doi: 10.1016/j.pcd.2021.09.004.

- 29. Banovac I, Katavić V, Blažević A, et al. The anatomy lesson of the SARS-CoV-2 pandemic: irreplaceable tradition (cadaver work) and new didactics of digital technology. Croat Med J. 2021 Apr 30;62(2):173–186. doi: 10.3325/cmj.2021.62.173.

- 30. McWatt SC. Responding to Covid-19: a thematic analysis of students’ perspectives on modified learning activities during an emergency transition to remote human anatomy education. Anat Sci Educ. 2021 Nov;14(6):721–738. doi: 10.1002/ase.2136.

- 31. Wolniczak E, Roskoden T, Rothkötter HJ, Storsberg SD. Course of macroscopic anatomy in Magdeburg under pandemic conditions. GMS J Med Educ. 2020;37(7):Doc65. doi: 10.3205/zma001358.

- 32. Venkatesh S, Rao YK, Nagaraja H, Woolley T, Alele FO, Malau-Aduli BS. Factors influencing medical students’ experiences and satisfaction with blended integrated e-learning. Med Princ Pract. 2020;29(4):396–402. doi: 10.1159/000505210.

- 33. Kuhn S, Frankenhauser S, Tolks D. Digital learning and teaching in medical education: already there or still at the beginning? Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz. 2018 Feb;61(2):201–209. doi: 10.1007/s00103-017-2673-z.

- 34. Tarpada SP, Hsueh WD, Gibber MJ. Resident and student education in otolaryngology: a 10-year update on e-learning. Laryngoscope. 2017 Jul;127(7):E219–E224. doi: 10.1002/lary.26320.

- 35. Van Nuland SE, Rogers KA. The skeletons in our closet: e-learning tools and what happens when one side does not fit all. Anat Sci Educ. 2017 Nov;10(6):570–588. doi: 10.1002/ase.1708.

- 36. Green RA, Whitburn LY. Impact of introduction of blended learning in gross anatomy on student outcomes. Anat Sci Educ. 2016 Oct;9(5):422–430. doi: 10.1002/ase.1602.

- 37. Brewer PE, Racy M, Hampton M, Mushtaq F, Tomlinson JE, Ali FM. A three-arm single blind randomised control trial of naïve medical students performing a shoulder joint clinical examination. BMC Med Educ. 2021 Jul 21;21(1):390. doi: 10.1186/s12909-021-02822-5.

- 38. Kyaw BM, Posadzki P, Paddock S, Car J, Campbell J, Tudor Car L. Effectiveness of digital education on communication skills among medical students: systematic review and meta-analysis by the digital health education collaboration. J Med Internet Res. 2019 Aug 27;21(8):e12967. doi: 10.2196/12967.

- 39. Garrison DR, Kanuka H. Blended learning: uncovering its transformative potential in higher education. Internet High Educ. 2004 Apr;7(2):95–105. doi: 10.1016/j.iheduc.2004.02.001.

- 40. Lin DC, Bunch B, De Souza RZD, et al. Effectiveness of pedagogical tools for teaching medical gross anatomy during the COVID-19 pandemic. Med Sci Educ. 2022 Apr;32(2):411–422. doi: 10.1007/s40670-022-01524-x.

- 41. Chen F, Lui AM, Martinelli SM. A systematic review of the effectiveness of flipped classrooms in medical education. Med Educ. 2017 Jun;51(6):585–597. doi: 10.1111/medu.13272.

- 42. Baskaran R, Mukhopadhyay S, Ganesananthan S, et al. Enhancing medical students’ confidence and performance in integrated structured clinical examinations (ISCE) through a novel near-peer, mixed model approach during the COVID-19 pandemic. BMC Med Educ. 2023 Feb 23;23(1):128. doi: 10.1186/s12909-022-03970-y.

- 43. Ivarson J, Hermansson A, Meister B, Zeberg H, Bolander Laksov K, Ekström W. Transfer of anatomy during surgical clerkships: an exploratory study of a student-staff partnership. Int J Med Educ. 2022 Aug 31;13:221–229. doi: 10.5116/ijme.62eb.850a.

- 44. Avonts M, Michels NR, Bombeke K, et al. Does peer teaching improve academic results and competencies during medical school? A mixed methods study. BMC Med Educ. 2022 Jun 4;22(1):431. doi: 10.1186/s12909-022-03507-3.

- 45. Biggs J. Enhancing teaching through constructive alignment. High Educ. 1996 Oct;32(3):347–364. doi: 10.1007/BF00138871.

- 46. Backhaus J, Huth K, Entwistle A, Homayounfar K, Koenig S. Digital affinity in medical students influences learning outcome: a cluster analytical design comparing vodcast with traditional lecture. J Surg Educ. 2019;76(3):711–719. doi: 10.1016/j.jsurg.2018.12.001.

- 47. Farkas GJ, Mazurek E, Marone JR. Learning style versus time spent studying and career choice: which is associated with success in a combined undergraduate anatomy and physiology course? Anat Sci Educ. 2016;9(2):121–131. doi: 10.1002/ase.1563.

- 48. Brannick MT, Erol-Korkmaz HT, Prewett M. A systematic review of the reliability of objective structured clinical examination scores. Med Educ. 2011 Dec;45(12):1181–1189. doi: 10.1111/j.1365-2923.2011.04075.x.

- 49. Gormley GJ, Collins K, Boohan M, Bickle IC, Stevenson M. Is there a place for e-learning in clinical skills? A survey of undergraduate medical students’ experiences and attitudes. Med Teach. 2009 Jan;31(1):e6–12. doi: 10.1080/01421590802334317.

- 50. Pereira JA, Pleguezuelos E, Merí A, Molina-Ros A, Molina-Tomás MC, Masdeu C. Effectiveness of using blended learning strategies for teaching and learning human anatomy. Med Educ. 2007 Feb;41(2):189–195. doi: 10.1111/j.1365-2929.2006.02672.x.

- 51. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010 Mar 23;340:c332. doi: 10.1136/bmj.c332.

- 52. Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013 Feb 5;158(3):200–207. doi: 10.7326/0003-4819-158-3-201302050-00583.

- 53. Graf J, Smolka R, Simoes E, et al. Communication skills of medical students during the OSCE: gender-specific differences in a longitudinal trend study. BMC Med Educ. 2017 May 2;17(1):75. doi: 10.1186/s12909-017-0913-4.

- 54. Zimmermann P, Kadmon M. Standardized examinees: development of a new tool to evaluate factors influencing OSCE scores and to train examiners. GMS J Med Educ. 2020;37(4):Doc40. doi: 10.3205/zma001333.

- 55. Franke T, Attig C, Wessel D. A personal resource for technology interaction: development and validation of the Affinity for Technology Interaction (ATI) scale. Int J Hum Comput Interact. 2019 Apr 3;35(6):456–467. doi: 10.1080/10447318.2018.1456150.

- 56. Braun V, Clarke V, Hayfield N, Terry G. Thematic analysis. In: Liamputtong P, editor. Handbook of Research Methods in Health Social Sciences. Springer; 2019. pp. 978–981. ISBN 978-981-10-5251-4.

- 57. Fliege H, Rose M, Arck P, et al. The Perceived Stress Questionnaire (PSQ) reconsidered: validation and reference values from different clinical and healthy adult samples. Psychosom Med. 2005;67(1):78–88. doi: 10.1097/01.psy.0000151491.80178.78.

- 58. Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Routledge; 2013. ISBN 9780203771587.

- 59. Westphale S, Backhaus J, Koenig S. Quantifying teaching quality in medical education: the impact of learning gain calculation. Med Educ. 2022 Mar;56(3):312–320. doi: 10.1111/medu.14694.

- 60. Azizi SM, Roozbahani N, Khatony A. Factors affecting the acceptance of blended learning in medical education: application of UTAUT2 model. BMC Med Educ. 2020 Oct 16;20(1):367. doi: 10.1186/s12909-020-02302-2.
